Abstract

Older adults prefer to maintain their autonomy while remaining socially connected to and engaged with their family, peers, and community. Although older adults can encounter barriers to these goals, socially assistive robots (SARs) have the potential to promote aging in place and independence. For adoption to succeed at scale, such domestic robots must be trusted, easy to use, and capable of behaving within accepted social norms. We investigated perceived associations between robot sociability and trust in domestic robot support for instrumental activities of daily living (IADLs). In our multi-study approach, we collected responses from adults aged 65 years and older using two separate online surveys (Study 1, N = 51; Study 2, N = 43) and assessed the relationship between perceived robot sociability and robot trust. Both studies showed a strong positive relationship between perceived robot sociability and robot trust for IADL tasks. These data have design implications for promoting older adults’ trust in, and acceptance of, SARs for use in the home.

1. Introduction

Enabling “aging in place” has become a global imperative [1,2]. The process involves a person’s evolving circumstances throughout their life course and could include their financial situation, retirement status, family roles, and other aspects of their social, cognitive, and physical functioning. One of the primary goals is to support older adults in their preferred place of residence rather than transitioning to institutional care or, if they are in an institutional setting, to enable them to maintain functioning and independence [1].

Socially assistive robots (SARs) may support many aspects of daily living. SARs vary widely in their social abilities and physical form factors. Robot care providers can be purely mobile platforms, mobile manipulators, or completely stationary; they also vary in humanoid features and differ in autonomy and self-directedness. Mobile manipulators (e.g., Stretch, Tiago), which are robot caregiver systems with both mobility capabilities and a robotic arm for manipulation [3], can support instrumental activities of daily living (IADLs) such as cleaning and grabbing or reaching for objects. In contrast, exclusively socially assistive robots (e.g., NAO, Jibo) do not perform manipulation tasks but instead provide social support for IADLs (e.g., medication management, alarms, and reminders). Other contexts for social robots include education, medicine, and rehabilitation [4].

By assisting with activities, SARs have the potential to support older adults’ needs in their own home and community. For robots to be successfully implemented in this context, they will need to be trusted by older users [5,6]. Trust is an important factor to consider when designing for long-term interactions with SARs [7,8].

1.1. Trust in Robot Care Support

Within the human–robot interaction (HRI) literature, several factors consistently emerge as potential determinants of trust. Commonly cited factors include robot performance, preferences for robot appearance, physical touch, and form factor [9,10,11], as well as safety and predictability [12]. In addition, user characteristics such as age and technology experience play a role in shaping trust [13].

Although studies have found that age can play a role in shaping trust in robots, less research has focused on the factors that shape older adults’ trust in robots specifically. A mixed-methods interview study by Stuck and Rogers (2018) [8] explored dimensions of trust in a robot care provider among older adults and found that robot competency and professional skills were a prominent trust-determining theme. Their study also revealed a novel trust factor, sociability, which refers to perceptions of a robot as kind, friendly, polite, and benevolent.

The study by Stuck and Rogers [8] also illustrated the importance of robot communication in establishing trust, with subdimensions including task-specific communication, responsiveness, and interpersonal communication. These findings are consistent with a review by Saunderson and Nejat [14] on how nonverbal communication in HRI shapes users’ perceptions of robot politeness, friendliness, and trust. However, the relationship between robot sociability and trust among older adults has not yet been evaluated quantitatively.

1.2. Robot Sociability and Trust

Sociable robots have friendly and benevolent attributes, which may be associated with capabilities for understanding and appropriately responding to human social cues such as body language, tone of voice, and facial expression [15]. Robots that demonstrate empathy, emotional responsiveness, and adaptability, and that can learn from interactions to tailor their behaviors and responses to individual users, are likely to be perceived as sociable [13,14].

Theoretically, a positive relationship between robot sociability and trust can be explained in part by the social identity and similarity-attraction paradigms [16,17]. These perspectives posit that individuals tend to trust and feel closer to interaction partners who exhibit behaviors or traits they identify with. Robots demonstrating human-like social skills, politeness, and emotional acuity can foster a sense of familiarity and comfort, reducing perceived risk and nurturing trust [14]. Similarly, uncertainty reduction theory holds that predictability and politeness during social interactions can contribute to the establishment of trust [18].

Aspects of robot sociability could be especially critical when considering robots to support activities in the home for older adults. A sociable robot’s capability to engender trust and comfort could potentially mitigate apprehension that older adults might have towards robotic support in their home, thereby enhancing adoption and user experience [19].

1.3. Overview of Studies

To explore robot sociability and trust among older adults, we conducted two online survey studies. In both studies, participants watched a series of videos of robots performing common everyday tasks. In each study, six SAR videos were chosen to represent three levels of robot social capability (low, medium, high) across two IADL tasks (manipulation/delivery and medication management in Study 1; delivery and cleaning in Study 2). The video vignettes were selected from a larger sample of robot videos evaluated by subject matter experts (SMEs). In Study 1, the robots were shown to participants in order from low to high social capability, which could have produced order effects and influenced participants’ perceptions of robot sociability (friendliness) and trust.

We conducted Study 2 to counterbalance the order in which the videos were displayed to participants. In addition, we re-examined the form factors and capabilities of the robots shown, to rule out potential confounding factors that could have been present in Study 1, and we assessed whether the relationship between robot sociability and trust replicated with different participants.

2. Study 1

2.1. Method

- Recruitment and Sample Characteristics

We recruited participants using a snowball approach, reaching out to older adults through registries from other studies in our laboratory, personal contacts, and the Illinois Health and Engagement through the Lifespan Project Registry (I-HELP). The participants were 51 older adults aged 65–85 years (M = 71.34, SD = 5.75) who were intermediate (24%) or fluent (76%) English speakers able to fully understand and interpret the online survey questions. The typical participant was highly educated, married, and living in an apartment or condominium (see Table 1).

Table 1.

Demographic information of participants in Study 1 (N = 51).

We utilized the REDCap platform for online survey data collection [20]. The online survey included a consent form, an image and video for each robot, and questionnaires for perceived robot sociability and perceived robot trust. For compensation, participants were given the opportunity to provide their email to be entered into a raffle to win an Amazon gift card. When participants selected the web link to the survey from our email recruitment materials, a consent form was presented on the REDCap landing page. The participants were required to read the form and consent to participate prior to viewing the next page where the survey began. All procedures were approved by the Institutional Review Board of the Office for the Protection of Research Subjects at a large Midwestern university.

2.2. Study 1 Materials and Measures

- Video Stimuli Development

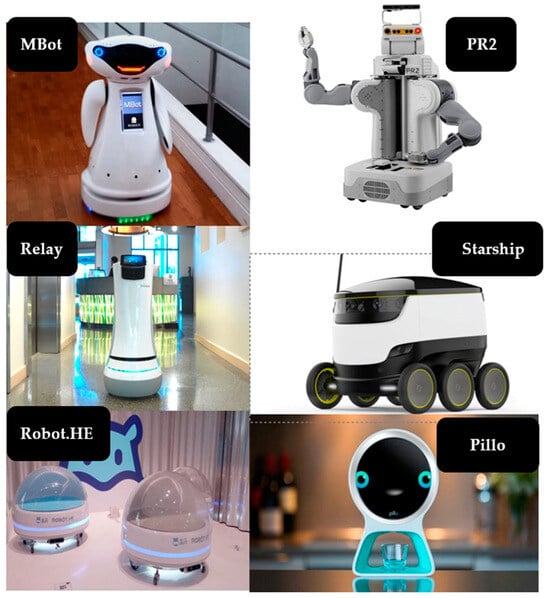

To select the robots to include in the survey, we evaluated the social characteristics and features of existing SARs with HRI SMEs. Our goal was to select video stimuli representing SARs with a variety of form factors and social capabilities. SMEs rated each robot on items such as “the robot expressed emotions”, “the robot communicated with users verbally or with sounds”, and “the robot had a distinct personality”, with response options ranging from 1 = strongly disagree to 5 = strongly agree. We then down-selected from twelve to six robots by choosing robots that scored low, medium, and high in social capabilities across two tasks (Figure 1).
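The down-selection step can be made concrete with a short sketch. It assumes, as a simplification not stated in the text, that each robot’s social-capability score is the mean of all SME ratings it received on the 5-point items, and that the lowest-, middle-, and highest-scoring robot is chosen within each task. The robot labels and ratings below are hypothetical placeholders, not the SME data.

```python
from statistics import mean

# Hypothetical SME ratings (1 = strongly disagree ... 5 = strongly agree).
# Key: (task, robot label); value: every rating that robot received across
# SMEs and social-capability items. Placeholder data only.
ratings = {
    ("delivery", "robot_A"): [1, 2, 1, 2],
    ("delivery", "robot_B"): [3, 3, 2, 3],
    ("delivery", "robot_C"): [4, 5, 4, 5],
    ("delivery", "robot_D"): [3, 4, 3, 4],
    ("medication", "robot_E"): [2, 1, 2, 2],
    ("medication", "robot_F"): [3, 3, 4, 3],
    ("medication", "robot_G"): [5, 4, 5, 5],
}

def select_low_medium_high(ratings):
    """For each task, pick the lowest-, middle-, and highest-scoring robot."""
    by_task = {}
    for (task, robot), values in ratings.items():
        by_task.setdefault(task, []).append((mean(values), robot))
    selection = {}
    for task, scored in by_task.items():
        scored.sort()  # ascending by mean SME score
        selection[task] = {
            "low": scored[0][1],
            "medium": scored[len(scored) // 2][1],  # upper middle for even counts
            "high": scored[-1][1],
        }
    return selection

print(select_low_medium_high(ratings))
```

Choosing by rank within each task keeps the two tasks balanced; a real selection would also weigh the form-factor variety the SMEs were asked to consider.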

Figure 1.

Robot images that were selected for this study.

Each video showed the robot completing an example IADL task (i.e., medication management or delivery) and interacting with collocated humans [21,22]. After viewing each robot video, participants responded to questions about the perceived sociability and perceived trust of the robot. All participants received the robot stimuli and survey questions in the same order.

- Survey Material

After watching each robot video, participants responded to a set of Likert-scale questions about their perceptions of robot sociability and robot trust. This cycle was repeated for each of the six robots selected for the study. The online questionnaire also included several sets of questions on demographics and initial perceptions of robot characteristics.

- Perception of Sociability

We measured perceived robot sociability using an 8-item questionnaire. Participants rated their level of agreement with the following statements for each of the six robots: I think the robot is a pleasant conversational partner; I think the robot is pleasant to interact with; I think the robot understands me; I think the robot is nice; I think the robot is relatable; I think the robot is friendly; I think the robot is polite; I think the robot is sociable. Response options ranged from 1 = strongly disagree to 7 = strongly agree. The items were adapted from previously validated measures [19] (Table 2).

Table 2.

Robot Sociability Example Items and Reference.

- Perceived Trust

We operationalized perceived robot trust using an 8-item questionnaire. Participants rated their level of agreement with the following items for each of the six robots: I think I would trust the robot if it gave me advice; I think if I would give the robot information, it would not abuse this; I think the robot is reliable; I think I have confidence that the robot guides the task; I think the robot is precise; I think the robot poses risk to the user; I think the robot has integrity; I think the robot is trustworthy. Response options ranged from 1 = strongly disagree to 7 = strongly agree. The items were adapted from previously validated measures [19] (Table 3). In addition, similar measures have been recently utilized and validated in a related context [23].
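Because both 8-item scales use the same 7-point response format, a participant’s rating of a robot on each scale can be summarized as the mean of its items. The sketch below illustrates this. It assumes, without confirmation from the text, that the negatively worded Study 1 item (“I think the robot poses risk to the user”) would be reverse-scored before averaging so that higher composite scores always mean more trust; the short item keys and the responses are hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 7  # 1 = strongly disagree, 7 = strongly agree

def reverse_score(response, lo=SCALE_MIN, hi=SCALE_MAX):
    """Mirror a Likert response around the scale midpoint (on 1-7: 7 -> 1, 4 -> 4)."""
    return lo + hi - response

def composite(responses, reverse_keyed=frozenset()):
    """Mean of the item responses, reverse-scoring any item listed in reverse_keyed."""
    scored = [
        reverse_score(value) if item in reverse_keyed else value
        for item, value in responses.items()
    ]
    return mean(scored)

# Hypothetical responses from one participant about one robot on the
# eight Study 1 trust items (made-up item keys).
trust_responses = {
    "advice": 6, "information": 5, "reliable": 6, "guides_task": 5,
    "precise": 6, "poses_risk": 2, "integrity": 5, "trustworthy": 6,
}

# Assumption: "poses risk" is negatively worded, so it is reversed (2 -> 6).
print(composite(trust_responses, reverse_keyed={"poses_risk"}))  # prints 5.625
```

The same `composite` function, with no reverse-keyed items, would apply to the sociability scale, whose eight items are all positively worded.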

Table 3.

Robot Trust Example Items and References.

- Demographics

Participants were asked demographic questions, including their age, gender, household income, race, and level of education.

- Study Procedure

A link to the survey was provided via emails and flyers during recruitment. Following the link took participants to the survey, whose initial page was the consent form. After consenting, participants were first asked their gender, their date of birth, and whether they could speak English fluently.

After these background questions, an image of the first SAR was displayed, followed by three questions about the participant’s initial perceptions of that SAR. Participants then watched a video of the same SAR performing either a delivery or a medication management task and completed the sociability and trust questionnaires for that robot.

After completing the questions for all six SARs, participants completed a questionnaire that included questions about primary language for communication, education, marital status, racial groups, housing information, household income, employment, and disability benefits. Participants were then able to choose whether they would be willing to provide identifiable information for raffle compensation. If the participant agreed, they provided their name, address, and phone number.

2.3. Study 1 Analytical Procedures

For the initial analysis, we performed descriptive statistical procedures to assess the sample characteristics and to evaluate measures of central tendency for our key variables of interest (sociability and trust). In addition, we performed a simple bivariate Pearson’s correlation analysis and scatter-plot data visualization to assess the primary relationship between sociability and trust.
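A minimal plain-Python sketch of these two analyses is shown below. It computes the mean and SD of a composite measure, Pearson’s r, the shared variance r², and the t statistic with n − 2 degrees of freedom from which the p-value is obtained (SciPy’s `scipy.stats.pearsonr`, for example, reports the p-value directly). The scores are hypothetical, and pairing one sociability score with one trust score per observation is our assumption about the data layout.

```python
import math
from statistics import mean, stdev

def describe(values):
    """Mean, sample SD, and n for one composite measure."""
    return {"mean": mean(values), "sd": stdev(values), "n": len(values)}

def pearson_r(x, y):
    """Pearson's product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def correlation_summary(x, y):
    """r, shared variance r**2, and the t statistic for H0: rho = 0 (df = n - 2).
    Undefined when |r| == 1 (division by zero)."""
    n = len(x)
    r = pearson_r(x, y)
    t = r * math.sqrt((n - 2) / (1 - r ** 2))
    return {"r": r, "r_squared": r ** 2, "t": t, "df": n - 2}

# Hypothetical composite scores on the 1-7 scale, one pair per observation.
sociability = [2.1, 3.4, 4.0, 4.8, 5.5, 6.2, 3.0, 5.1]
trust = [2.5, 3.1, 4.4, 4.6, 5.9, 6.0, 3.6, 4.8]

print(describe(sociability))
print(correlation_summary(sociability, trust))
```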

3. Study 1 Results

3.1. Study 1 Relationship between Sociability and Trust

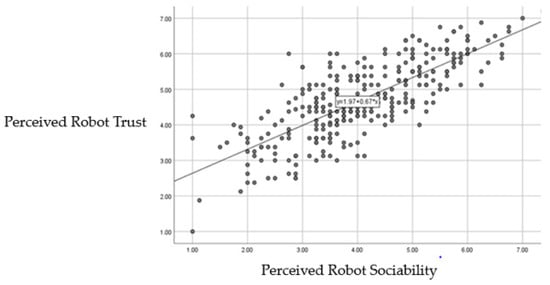

Our bivariate Pearson’s correlation results (Figure 2) indicated a strong positive linear association between robot sociability and trust (r = 0.77, p < 0.001). Robot sociability was associated with almost 60% of the variance in robot trust (R^2 = 0.59). These findings indicate that, on average, robots perceived as more sociable were also rated as more trustworthy.
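With a single predictor, the proportion of variance explained is the square of Pearson’s correlation coefficient, so the reported value can be checked directly:

$$
R^2 = r^2 = 0.77^2 = 0.5929 \approx 0.59
$$

The same identity applies to the Study 2 correlation of r = 0.82, which gives R^2 = 0.82^2 ≈ 0.67.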

Figure 2.

Bivariate correlation between sociability and trust (1 = strongly disagree; 7 = strongly agree).

4. Study 1 Discussion

The quantitative assessment strongly supported the notion that perceived sociability and robot trust are positively associated, consistent with previous qualitative research on older adults’ trust in robots [8]. These initial findings provide evidence for the potential influence of sociability on HRI trust. Further investigation into how to design robots to be appropriately sociable for a given use case or context is required to calibrate HRI trust for specific robot form factors. However, this study had limitations; specifically, all participants viewed the robot videos in a single fixed order (from low to high social capability), which could have positively biased the results. We therefore conducted a replication study to reduce this bias and to evaluate the sociability–trust relationship in an independent sample of older adults.

In Study 2, we used a counterbalanced design to minimize potential order effects: half of the participants were randomly assigned to view the robot demonstration videos in order from high to low social capability, and the other half viewed them in the reverse order. In addition, we revised our sampling procedures to broaden the participant characteristics.
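The counterbalancing logic can be sketched as a random split of participants into two order conditions. In practice the survey platform would handle this assignment; the function name, the six stimulus labels, and the seed below are hypothetical, and the 43 participant IDs simply match the Study 2 sample size.

```python
import random

def assign_orders(participant_ids, stimuli_low_to_high, seed=None):
    """Randomly assign half of the participants to a high-to-low order and the
    rest to low-to-high; with an odd count, the extra participant is low-to-high."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)  # random assignment to the two order conditions
    half = len(ids) // 2
    high_to_low = tuple(reversed(stimuli_low_to_high))
    low_to_high = tuple(stimuli_low_to_high)
    assignment = {}
    for pid in ids[:half]:
        assignment[pid] = high_to_low
    for pid in ids[half:]:
        assignment[pid] = low_to_high
    return assignment

# Hypothetical stimulus labels ordered from lowest to highest social capability.
stimuli = ["low_1", "low_2", "medium_1", "medium_2", "high_1", "high_2"]
orders = assign_orders(range(1, 44), stimuli, seed=2023)
n_high_first = sum(order[0] == "high_2" for order in orders.values())
print(n_high_first, len(orders) - n_high_first)
```

Shuffling before splitting keeps the two groups as close to equal size as possible while leaving group membership random.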

5. Study 2

5.1. Method

- Recruitment and Sample Characteristics

The Study 2 methodology was generally similar to that of Study 1 but addressed the limitations described above to improve rigor. To obtain a more consistent sample, we restricted participation to residents of Illinois and retained the age range of 65 to 85 years. To recruit participants, we engaged community groups across the state, university news outlets, and email listservs. Participants had a mean age of 72 years (SD = 5.4). See Table 4 for more details.

Table 4.

Demographic information of participants in Study 2 (N = 43).

As in Study 1, data were collected in REDCap. The survey began with the consent form, an age and zip code check, and general demographic questions, after which participants were introduced to the socially assistive robot videos. Participants then completed the perceived sociability and perceived trust questionnaires for each robot.

5.2. Study 2 Materials and Measures

- Video Stimuli Development

We revised the video stimuli to use less commercialized videos showing less human–robot interaction, to highlight the sociability of the robot itself. Our first enhancement was to ensure that the sampled robots had similar capabilities for manipulation and mobility. In addition, we changed the tasks the robots were completing to delivery and cleaning (instead of medication management) to better match the robots’ actual capabilities. We then conducted similar SME testing (N = 9 SMEs) with 13 robots to evaluate their social capabilities and confirm that the selected robots were stratified by social capability. The robots selected are shown in Figure 3.

Figure 3.

Robot images that were selected for this study.

- Survey Updates

The online questionnaire was slightly updated from Study 1; all other materials not listed below remained the same. To refine the survey, we counterbalanced the order of the robot stimuli and changed the presentation order so that each robot’s video was shown before its image. In addition, we added a question at the beginning asking whether participants felt robots could be used in the future to support older adults, and we included four more questions about perceptions of sociability for each robot.

- Study 2 Survey

In our second online survey, we again showed participants robot videos followed by a series of questions about the stimuli. Immediately following each video, participants responded to questionnaires to rate their perceptions of that robot’s sociability and trust.

We used the same eight trust items and eight sociability items as in Study 1, with minor adjustments: mainly, the items were rephrased from the present tense to the conditional (“would”) form, given that participants did not physically interact with each robot, and the Study 1 item about the robot posing risk to the user was reworded in terms of safety. Additional details about each measure are described below.

- Perceived Trust

We operationalized perceived robot trust with an 8-item questionnaire. Participants rated their level of agreement with the following statements for each of the six robots in Study 2: I think I would trust the robot if it gave me advice; I think the robot would be reliable; I think if I gave the robot personal information, it would not misuse it; I think the robot would be able to guide me through tasks; I think the robot would be precise; I think the robot would be safe for a person to use; I think the robot would have integrity; I think the robot would be trustworthy. Response options ranged from 1 = strongly disagree to 7 = strongly agree.

- Perception of Sociability

We measured perceived robot sociability using an 8-item questionnaire. Participants rated their level of agreement with the following statements for each of the six robots in Study 2: I think the robot would be a good conversational partner; I think the robot would be pleasant to interact with; I think the robot would understand me; I think the robot would be nice; I think the robot would be relatable; I think the robot would be friendly; I think the robot would be polite; I think the robot would be sociable. Response options ranged from 1 = strongly disagree to 7 = strongly agree.

5.3. Data Analysis Procedures

As in Study 1, we performed a simple bivariate Pearson’s correlation analysis and scatter-plot data visualization. Beyond replicating Study 1, we analyzed the new sample with multivariate linear regression and additional descriptive statistics. For the regression analysis, we computed averaged composite measures of sociability and trust to assess the overall association across the entire sample of robots.
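The regression step can be sketched with ordinary least squares in plain Python, which makes concrete what the unstandardized coefficients (B), their standard errors (SE), R^2, and adjusted R^2 in such a model are. The data below are simulated, not study data, and a single control (age) stands in for the demographic covariates; the study’s coding of variables such as gender, race, or marital status is not described in the text and is not reproduced here. A statistics package would normally be used instead.

```python
import math
import random

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def invert(m):
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    n = len(m)
    aug = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def ols(y, predictors):
    """OLS with an intercept; predictors maps name -> values.
    Returns coefficients (B), standard errors (SE), R^2, and adjusted R^2."""
    names = ["intercept"] + list(predictors)
    n, k = len(y), len(predictors)
    X = [[1.0] + [predictors[name][i] for name in predictors] for i in range(n)]
    Xt = transpose(X)
    xtx_inv = invert(matmul(Xt, X))
    beta = [row[0] for row in matmul(xtx_inv, matmul(Xt, [[v] for v in y]))]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    ss_res = sum((obs - fit) ** 2 for obs, fit in zip(y, fitted))
    y_bar = sum(y) / n
    ss_tot = sum((obs - y_bar) ** 2 for obs in y)
    sigma2 = ss_res / (n - k - 1)  # residual variance estimate
    se = [math.sqrt(sigma2 * xtx_inv[j][j]) for j in range(k + 1)]
    r2 = 1 - ss_res / ss_tot
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    return {"B": dict(zip(names, beta)), "SE": dict(zip(names, se)),
            "R2": r2, "adj_R2": adj_r2}

# Simulated data: trust rises with sociability (true slope 0.6) plus noise;
# age is a control with no true effect.
rng = random.Random(1)
n = 43
sociability = [rng.uniform(1, 7) for _ in range(n)]
age = [rng.randint(65, 85) for _ in range(n)]
trust = [1.5 + 0.6 * s + rng.gauss(0, 0.4) for s in sociability]

fit = ols(trust, {"sociability": sociability, "age": age})
print(fit["B"]["sociability"], fit["SE"]["sociability"], fit["adj_R2"])
```

Adjusted R^2 penalizes each added control, which is why it is the figure usually reported for a full model with several covariates.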

6. Study 2 Results

6.1. Study 2 Descriptive Statistics

Tables 5 and 6 display the descriptive statistics (means and SDs) for robot sociability and trust, respectively, for each robot. Relay and Hollie were rated highest for sociability and trust, Care-O-Bot and PR2 were in the middle, and Stretch and Tiago were consistently rated as the least sociable and least trustworthy robots.
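The per-robot summaries amount to grouping composite scores by robot, computing the mean and SD for each, and ordering robots by mean. A minimal sketch follows; the long-format layout (one row per participant and robot), the robot labels, and the scores are hypothetical.

```python
from statistics import mean, stdev

def describe_by_robot(rows):
    """rows: (participant_id, robot, composite score). Returns robot -> (mean, SD)."""
    scores = {}
    for _pid, robot, score in rows:
        scores.setdefault(robot, []).append(score)
    return {robot: (mean(vals), stdev(vals)) for robot, vals in scores.items()}

def rank_by_mean(summary):
    """Robots ordered from highest to lowest mean rating."""
    return sorted(summary, key=lambda robot: summary[robot][0], reverse=True)

# Hypothetical composite scores (1-7 scale) for three placeholder robots.
rows = [
    ("p1", "robot_A", 5.5), ("p2", "robot_A", 6.0), ("p3", "robot_A", 5.0),
    ("p1", "robot_B", 3.0), ("p2", "robot_B", 3.5), ("p3", "robot_B", 2.5),
    ("p1", "robot_C", 4.0), ("p2", "robot_C", 4.5), ("p3", "robot_C", 4.5),
]
summary = describe_by_robot(rows)
print(rank_by_mean(summary))
```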

Table 5.

Descriptive statistics for robot sociability.

Table 6.

Descriptive statistics for robot trust.

6.2. Study 2 Relationship between Sociability and Trust

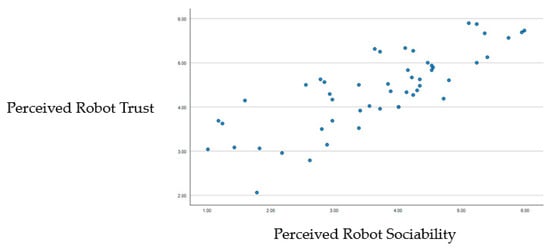

Consistent with Study 1, we observed a strong positive bivariate correlation between sociability and trust (r = 0.82, p < 0.001; Figure 4). Robot sociability was associated with approximately 67% of the variance in robot trust (R^2 = 0.67).

Figure 4.

Bivariate correlation between robot trust and robot sociability.

6.3. Study 2 Full Regression Model Results

We performed a multivariate regression analysis with overall perceived trust as the dependent variable and perceived robot sociability as the main independent variable, controlling for demographic factors. Robot sociability was positively associated with robot trust (B = 0.56, SE = 0.08, p < 0.001), net of all controls (Age: B = −0.01, SE = 0.02, p > 0.05; Gender: B = −0.29, SE = 0.22, p > 0.05; Education: B = −0.11, SE = 0.09, p > 0.05; SRHS: B = 0.12, SE = 0.10, p > 0.05; Race: p > 0.05; Marital Status: p > 0.05). The full regression model was associated with approximately 68% of the variance in robot trust (Adj. R^2 = 0.68, SE = 0.51, p < 0.001) (Table 7).

Table 7.

Multivariate regression results predicting perceived trust.

7. Study 2 Discussion

Study 2 replicated the positive association between sociability and trust found in Study 1 with a different sample of participants and robots, and the regression analysis showed that the association held after controlling for demographic factors. On average, robots perceived as more sociable were also rated as more trustworthy. This suggests that the relationship between perceived sociability and trust may be robust. Beyond the direction of the association, key test statistics (e.g., Pearson’s correlation coefficients and R^2 values) were similar across both studies. However, future work could benefit from assessing these relationships with a larger and more diverse sample of older adult participants.

8. General Discussion and Future Work

Overall, the results of our two studies have implications for the design and implementation of socially assistive robots to support daily activities for older adults. Trust is a crucial factor in determining the acceptance and use of potentially supportive SARs. By designing SARs that are perceived as sociable and friendly, developers may help establish trust and increase the likelihood of successful adoption and sustained use by older adults. Our findings contribute to this domain by providing novel quantitative evidence for the positive association between SAR sociability and trust among older adults. Although the two studies used different samples of participants and different SARs, robot capabilities, and form factors, older adults consistently reported higher trust for robots they perceived as more sociable. In practical terms, these findings suggest that, regardless of form factor, SARs should be designed to interact and communicate with older adults in a friendly manner to foster trust and potential long-term acceptance.

To ensure SAR designs meet older adults’ needs, future research could further explore the specific features and characteristics of SARs that contribute to perceived sociability during co-located interactions with SARs. In addition, longitudinal studies could examine the long-term effects of SAR use on trust, as well as the potential benefits and challenges of incorporating SARs into older adults’ daily lives. Effective communication of the robot’s intentions and behaviors is essential to ensure successful coordination and interaction with humans and other smart technologies in the home [25].

To do so, we recommend that future work incorporate theory-driven approaches, such as the principles of Uncertainty Reduction Theory [18], which may guide developers in creating human–robot interfaces that proactively address older adults’ uncertainties about SARs’ capabilities and behaviors. Likewise, related frameworks such as the Similarity-Attraction Paradigm [17] could help robot designers integrate familiar features or configurable, calibrated attributes that resonate more deeply with older adults, thereby fostering trust and acceptance. Addressing both cognitive uncertainties and emotional connections in this way could help unlock the potential of SARs in older adults’ daily routines. We also encourage researchers to consider other theories, because multiple frameworks could deepen understanding in HRI and aging, a domain that currently lacks theory-driven approaches.

SARs have tremendous potential to support older adults. Understanding the social characteristics that relate to trust can lead to the design of robots that are more likely to be accepted by older adults. Such acceptance could lead to an enhanced quality of life. However, it is important to consider the potential risks of over-trusting and over-relying on SARs once they are accepted. Over-reliance on the capabilities of a robotic system can lead to adverse and dangerous outcomes, especially in the context of aging in place, where older adults may have limited physical or cognitive abilities to intervene in case of malfunction or misuse. Therefore, user-centered and participatory design approaches are encouraged to calibrate robotic systems’ sociability and communication behaviors for given use cases and for specific user needs and capabilities [26].

Future research will need to identify the specific features and characteristics of highly sociable or friendly SARs that may contribute to over-trust and over-reliance, and the potential negative impacts associated with them, such as a lack of vigilance.

9. Conclusions

Our study lays a foundation for advancing the concept of robot sociability in the field of socially assistive robots (SARs) to enhance the lives of older adults. SARs that can build trust with their users have the potential to combat isolation and loneliness, enhancing wellbeing for older adults living independently. In addition, SARs may assist with physical tasks, supporting continued independence and reducing caregiver burden. However, our findings suggest that for SARs to be trusted and accepted, they will likely need to be perceived as benevolent and sociable by older adults.

A personalized, user-centered approach that optimizes for sociability could lead to better health outcomes by improving medication adherence, supporting consistent exercise, and enabling timely response to health emergencies. Nonetheless, it is crucial to avoid over-reliance and to ensure that the design incorporates fail-safe mechanisms and ethical considerations.

Building sociable and trustworthy SARs while respecting older adults’ autonomy and providing adequate safeguards can help balance the benefits of technological support against the potential risks of misuse or malfunction. Such research insights underscore the importance of continuous learning and adaptation in the design and deployment of SARs to deliver meaningful and safe interactions that respect older adults’ needs, capabilities, and preferences.

Author Contributions

Conceptualization, T.K., M.A.B. and W.A.R.; methodology, T.K., M.A.B. and W.A.R.; analysis, T.K., M.A.B. and W.A.R.; writing—original draft preparation, T.K., M.A.B. and W.A.R.; writing—review and editing, T.K., M.A.B. and W.A.R. All authors have read and agreed to the published version of this manuscript.

Funding

This research received no external funding.

Data Availability Statement

De-identified data and analytical syntax can be made available upon request.

Acknowledgments

We thank Jennifer Lee, Leo Galoso, and Yi Cao for their support on this project. Study 1 was based in part on Danniel Rhee’s master’s thesis [27] and we appreciate his contributions to this project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rogers, W.A.; Ramadhani, W.A.; Harris, M.T. Technologies for ageing in place in the real world. Gerontologist 2020, 60, 610–618.

- Wiles, J.L.; Leibing, A.; Guberman, N.; Reeve, J.; Allen, R.E. The meaning of “aging in place” to older people. Gerontologist 2012, 52, 357–366.

- Kadylak, T.; Bayles, M.A.; Galoso, L.; Chan, M.; Mahajan, H.; Kemp, C.; Edsinger, A.; Rogers, W.A. A human factors analysis of the Stretch mobile manipulator robot. In Proceedings of the Human Factors and Ergonomics Society 65th Annual Meeting, Baltimore, MD, USA, 3–8 October 2021; pp. 442–446.

- Sharkawy, A.N. A survey on applications of human-robot interaction. Sens. Transducers 2021, 251, 19–27.

- Broadbent, E.; Stafford, R.; MacDonald, B. Acceptance of healthcare robots for the older population: Review and future directions. Int. J. Soc. Robot. 2009, 1, 319–330.

- Breazeal, C.L.; Ostrowski, A.K.; Singh, N.; Park, H.W. Designing social robots for older adults. Natl. Acad. Eng. Bridge 2019, 49, 22–31.

- Langer, A.; Feingold-Polak, R.; Mueller, O.; Kellmeyer, P.; Levy-Tzedek, S. Trust in socially assistive robots: Considerations for use in rehabilitation. Neurosci. Biobehav. Rev. 2019, 104, 231–239.

- Stuck, R.E.; Rogers, W.A. Older adults’ perceptions of supporting factors of trust in a robot care provider. J. Robot. 2018, 2018, 6519713.

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.; De Visser, E.J.; Parasuraman, R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 2011, 53, 517–527.

- Haring, K.S.; Matsumoto, Y.; Watanabe, K. How do people perceive and trust a lifelike robot. In Proceedings of the World Congress on Engineering and Computer Science, Las Vegas, NV, USA, 22–25 July 2013; Volume 1, pp. 425–430.

- Mazursky, A.; DeVoe, M.; Sebo, S. Physical Touch from a Robot Caregiver: Examining Factors that Shape Patient Experience. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022; pp. 1578–1585.

- Townsend, D.; MajidiRad, A. Trust in Human-Robot Interaction Within Healthcare Services: A Review Study. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, St. Louis, MO, USA, 14–17 August 2022; Volume 86281, p. V007T07A030.

- May, D.C.; Holler, K.J.; Bethel, C.L.; Strawderman, L.; Carruth, D.W.; Usher, J.M. Survey of factors for the prediction of human comfort with a non-anthropomorphic robot in public spaces. Int. J. Soc. Robot. 2017, 9, 165–180.

- Saunderson, S.; Nejat, G. How robots influence humans: A survey of nonverbal communication in social human–robot interaction. Int. J. Soc. Robot. 2019, 11, 575–608.

- Breazeal, C. Toward sociable robots. Robot. Auton. Syst. 2003, 42, 167–175.

- Tajfel, H.; Turner, J.C. An integrative theory of intergroup conflict. Organ. Identity A Read. 1979, 56, 9780203505984-16.

- Byrne, D. The Attraction Paradigm; Academic Press: New York, NY, USA, 1971.

- Berger, C.R.; Calabrese, R.J. Some explorations in initial interaction and beyond: Toward a developmental theory of interpersonal communication. Hum. Commun. Res. 1974, 1, 99–112.

- Heerink, M.; Krose, B.; Evers, V.; Wielinga, B. Assessing acceptance of assistive social agent technology by older adults: The Almere model. Int. J. Soc. Robot. 2010, 2, 361–375.

- Harris, P.A.; Taylor, R.; Thielke, R.; Payne, J.; Gonzalez, N.; Conde, J.G. Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inform. 2009, 42, 377–381.

- Sabanović, S.; Chang, W.L.; Bennett, C.C.; Piatt, J.A.; Hakken, D. A robot of my own: Participatory design of socially assistive robots for independently living older adults diagnosed with depression. In International Conference on Human Aspects of IT for the Aged Population; Springer: Berlin/Heidelberg, Germany, 2015; pp. 104–114.

- Louie, W.Y.G.; McColl, D.; Nejat, G. Acceptance and attitudes toward a human-like socially assistive robot by older adults. Assist. Technol. 2014, 26, 140–150.

- Tamantini, C.; di Luzio, F.S.; Hromei, C.D.; Cristofori, L.; Croce, D.; Cammisa, M.; Cristofaro, A.; Marabello, M.V.; Basili, R.; Zollo, L. Integrating Physical and Cognitive Interaction Capabilities in a Robot-Aided Rehabilitation Platform. IEEE Syst. J. 2023, 1–12. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=10269076 (accessed on 4 September 2023).

- Casas, J.A.; Céspedes, N.; Cifuentes, C.A.; Gutierrez, L.F.; Rincón-Roncancio, M.; Múnera, M. Expectation vs. reality: Attitudes towards a socially assistive robot in cardiac rehabilitation. Appl. Sci. 2019, 9, 4651.

- Bradshaw, J.M.; Hoffman, R.R.; Woods, D.D.; Johnson, M. The seven deadly myths of “autonomous systems”. IEEE Intell. Syst. 2013, 28, 54–61.

- Rogers, W.A.; Kadylak, T.; Bayles, M.A. Maximizing the benefits of participatory design for human–robot interaction research with older adults. Hum. Factors 2022, 64, 441–450.

- Rhee, D. Older Adults’ Perceptions towards Socially Assistive Robots: Connecting Trust and Perceived Sociability. Master’s Thesis, University of Illinois Urbana-Champaign, Champaign, IL, USA, 2020, unpublished.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).