Telepresence in the Recent Literature with a Focus on Robotic Platforms, Applications and Challenges

Abstract

1. Introduction

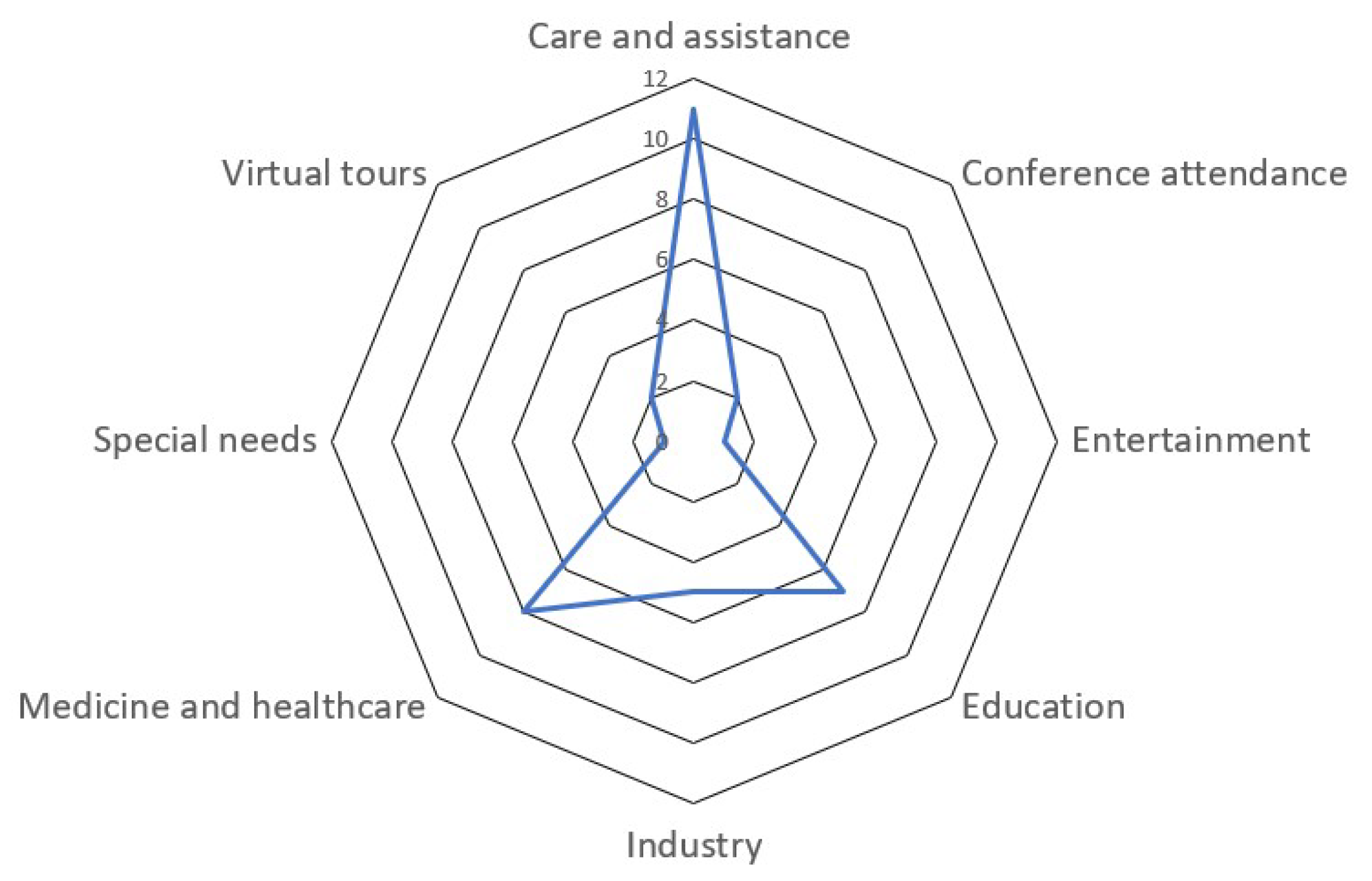

2. Applications of Telepresence Systems

2.1. Care and Assistance

2.2. Medicine and Health Care

2.3. Education

2.4. Industry

2.5. Other Applications

- Attendance at academic conferences: The use of telepresence robots at conferences was studied in [57,58]. Robots were used in different configurations, for example, dedicated configurations, in which each remote conference attendee had their own robot, and shared configurations, in which robots were shared between multiple people at the same time;

- Work: In [59], the usage of mobile remote presence systems (MRPs) that remote workers use to drive and communicate in a workplace was surveyed. It was reported that MRPs can support informal communications in distributed teams. However, other questions about dealing with MRPs were raised;

- Entertainment: A telepresence system for entertainment and meetings was presented in [60]. It used a microphone array with 3D sound localization, a depth camera and a webcam with a computer and Internet connection. It was presented as a teleimmersive entertaining video-chat application;

- People with special needs: A review was conducted on the usage of telepresence robots for people with special needs in [61]. The review considered age-related special needs and disability and showed several applications and robots but concluded that barriers remain for people with auditory or verbal disabilities. The review also pointed to a lack of clarity about the impact of telepresence robots on quality of life;

- Virtual tours: Another application for telepresence systems is allowing users to take tours in remote environments. For example, a system called “Virtual Tour” was presented in [62], which consists of a 360° camera and an audio system capturing a remote environment. Captured signals are streamed to the user side, where the user wears a VR head-mounted display (HMD). In a related application, human-robot interaction (HRI) for telepresence robots was addressed in [63], where a user interface for a telepresence robot was used to visit a remote art gallery. The study targeted residents of healthcare facilities and showed their ability to operate a telepresence robot.
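As an illustration of the 360° camera and HMD arrangement described in the last item, the following minimal sketch shows one common way a viewer's head orientation can select the point of a panoramic frame to display. It assumes an equirectangular frame layout and yaw/pitch angles in degrees; the function name, parameters and layout are illustrative assumptions and are not taken from [62].

```python
def view_center_pixel(yaw_deg: float, pitch_deg: float,
                      width: int, height: int) -> tuple[int, int]:
    """Return the (x, y) pixel of an equirectangular frame that lies
    at the center of the viewer's gaze.

    Assumptions (hypothetical, for illustration only):
    - yaw 0 / pitch 0 looks at the center of the frame,
    - positive yaw turns the head to the right,
    - positive pitch tilts the head upward.
    """
    # Wrap yaw into [-180, 180) so that a full turn returns to the start.
    yaw = ((yaw_deg + 180.0) % 360.0) - 180.0
    # Clamp pitch to the valid vertical range of the panorama.
    pitch = max(-90.0, min(90.0, pitch_deg))

    # Longitude maps linearly to columns, latitude to rows.
    x = (yaw + 180.0) / 360.0 * width
    y = (90.0 - pitch) / 180.0 * height

    # Keep the indices inside the image bounds.
    return int(x) % width, min(int(y), height - 1)
```

For a 3840 × 1920 frame, looking straight ahead selects the frame center, and turning the head 90° to the right moves the selected column a quarter of the frame width to the right. A real system would render a full perspective viewport around this point rather than a single pixel.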

3. Telepresence Platforms

3.1. Double

3.2. Immersive Telepresence System [70]

3.3. 3DMVIS

3.4. RDW Telepresence Systems

3.5. Highly Immersive Telepresence [18]

3.6. Immersive Telepresence with Mobile and Wearable Devices [69]

3.7. Collaborative Control in a Telepresence System [67]

3.8. Improving the Comfort of Telepresence Communication [79]

3.9. Beaming System [73]

3.10. Multi-Destination Beaming [16]

3.11. Geocaching with a Beam [75]

3.12. Bidirectional Telepresence Robots [76]

3.13. Telesuit

4. Components of a Telepresence System

4.1. Signal Acquisition

4.2. Signal Transmission

4.3. Signal Output

4.4. Mobility

4.5. Motion Control

5. Discussion and Novelties

5.1. Discussion

- Equipping a mobile robot with a camera, microphone and speaker on its head and equipping the user with a VR headset with earphones, a microphone and a remote controller for speed and direction;

- Equipping a robot with an arm and the user with a remote controller so that the user can grasp physical objects;

- Using other devices like a glove with finger sensors and a body suit with joint sensors to improve the body control of a robot.
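The first configuration above relies on a remote controller for speed and direction. As a minimal sketch of how such input could drive a mobile base, the code below mixes a normalized speed command and a normalized turn command into left and right wheel speeds. It assumes a differential-drive platform, which is only one possible mobility design; the names `WheelSpeeds` and `mix_drive_command`, the sign convention and the default `max_speed` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WheelSpeeds:
    left: float   # left wheel speed in m/s
    right: float  # right wheel speed in m/s


def mix_drive_command(speed: float, turn: float,
                      max_speed: float = 1.0) -> WheelSpeeds:
    """Map controller input to wheel speeds for a differential-drive base.

    speed: forward/backward command in [-1, 1]
    turn:  direction command in [-1, 1]; positive is assumed to turn right
    max_speed: assumed top wheel speed of the platform in m/s
    """
    # Clamp both inputs to the expected normalized range.
    speed = max(-1.0, min(1.0, speed))
    turn = max(-1.0, min(1.0, turn))

    # Turning right means the left wheel runs faster than the right one.
    left = speed + turn
    right = speed - turn

    # Rescale so neither wheel exceeds the normalized limit of 1.
    scale = max(1.0, abs(left), abs(right))
    return WheelSpeeds(left / scale * max_speed, right / scale * max_speed)
```

With this convention, a full forward command with no turn drives both wheels at `max_speed`, while a pure turn command spins the wheels in opposite directions so the robot rotates in place.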

5.2. Novelties

6. Conclusion and Future Work

- A separate focus on the different applications mentioned in this paper;

- User acceptability of telepresence systems and ways to evaluate users' perceptions of the systems they use. This can be studied separately for each application, for example, with questionnaires. A review of user acceptability of VR and AR systems can also be of importance in this field;

- The relation between telepresence and user experiences that can negatively affect users, such as VR sickness and oscillopsia, deserves deeper study. This is an important question to address when designing telepresence systems, and a clear understanding of this topic must be obtained.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hartmann, T.; Klimmt, C.; Vorderer, P. Telepresence and entertainment. In Immersed in Media. Telepresence in Everyday Life; Bracken, C., Skalski, P., Eds.; Routledge: Milton Park, UK, 2010; pp. 137–157. [Google Scholar]

- Draper, J.V.; Kaber, D.B.; Usher, J.M. Telepresence. Hum. Factors 1998, 40, 354–375. [Google Scholar] [CrossRef] [PubMed]

- Steuer, J. Defining Virtual Reality: Dimensions Determining Telepresence. J. Commun. 1992, 42, 73–93. [Google Scholar] [CrossRef]

- Minsky, M. Telepresence. OMNI, June 1980; pp. 44–52. [Google Scholar]

- Furht, B. (Ed.) Virtual Presence. In Encyclopedia of Multimedia; Springer: Boston, MA, USA, 2008; pp. 967–968. [Google Scholar] [CrossRef]

- Kristoffersson, A.; Coradeschi, S.; Loutfi, A. A Review of Mobile Robotic Telepresence. Adv. Hum-Comput. Interact. 2013, 2013, 902316. [Google Scholar] [CrossRef]

- Niemelä, M.; Van Aerschot, L.; Tammela, A.; Aaltonen, I.; Lammi, H. Towards Ethical Guidelines of Using Telepresence Robots in Residential Care. Int. J. Soc. Robot. 2021, 13, 431–439. [Google Scholar] [CrossRef]

- Cesta, A.; Cortellessa, G.; Orlandini, A.; Tiberio, L. Long-Term Evaluation of a Telepresence Robot for the Elderly: Methodology and Ecological Case Study. Int. J. Soc. Robot. 2016, 8, 421–441. [Google Scholar] [CrossRef]

- Darvish, K.; Penco, L.; Ramos, J.; Cisneros, R.; Pratt, J.; Yoshida, E.; Ivaldi, S.; Pucci, D. Teleoperation of Humanoid Robots: A Survey. IEEE Trans. Robot. 2023, 39, 1706–1727. [Google Scholar] [CrossRef]

- Beck, S. Immersive Telepresence Systems and Technologies. Ph.D. Thesis, Bauhaus-Universität Weimar, Weimar, Germany, 2019. [Google Scholar] [CrossRef]

- Stotko, P.; Krumpen, S.; Weinmann, M.; Klein, R. Efficient 3D Reconstruction and Streaming for Group-Scale Multi-Client Live Telepresence. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, 17–21 October 2019; pp. 19–25. [Google Scholar] [CrossRef]

- Wissmath, B.; Weibel, D.; Schmutz, J.; Mast, F.W. Being Present in More Than One Place at a Time? Patterns of Mental Self-Localization. Conscious. Cogn. 2011, 20, 1808–1815. [Google Scholar] [CrossRef]

- Gooskens, G. Where Am I? The Problem of Bilocation in Virtual Environments. Postgrad. J. Aesthet. 2010, 7, 13–24. [Google Scholar]

- Furlanetto, T.; Bertone, C.; Becchio, C. The bilocated mind: New perspectives on self-localization and self-identification. Front. Hum. Neurosci. 2013, 7, 71. [Google Scholar] [CrossRef]

- Lenggenhager, B.; Mouthon, M.; Blanke, O. Spatial aspects of bodily self-consciousness. Conscious. Cogn. 2009, 18, 110–117. [Google Scholar] [CrossRef]

- Kishore, S.; Xavier, M.; Bourdin Kreitz, P.; Berkers, K.; Friedman, D.; Slater, M. Multi-Destination Beaming: Apparently Being in Three Places at Once Through Robotic and Virtual Embodiment. Front. Robot. AI 2016, 3, 65. [Google Scholar] [CrossRef]

- Petkova, V.; Ehrsson, H. If I Were You: Perceptual Illusion of Body Swapping. PLoS ONE 2008, 3, e3832. [Google Scholar] [CrossRef] [PubMed]

- Kim, Y.; Joo, Y.; Cho, H.; Park, I. Highly Immersive Telepresence with Computation Offloading to Multi-Access Edge Computing. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 21–23 October 2020; pp. 860–862. [Google Scholar] [CrossRef]

- Păvăloiu, I.B.; Vasilățeanu, A.; Popa, R.; Scurtu, D.; Hang, A.; Goga, N. Healthcare Robotic Telepresence. In Proceedings of the 2021 13th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Pitesti, Romania, 1–3 July 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Youssef, K.; Said, S.; Alkork, S.; Beyrouthy, T. Social Robotics in Education: A Survey on Recent Studies and Applications. Int. J. Emerg. Technol. Learn. (IJET) 2023, 18, 67–82. [Google Scholar] [CrossRef]

- Youssef, K.; Said, S.; Alkork, S.; Beyrouthy, T. A Survey on Recent Advances in Social Robotics. Robotics 2022, 11, 75. [Google Scholar] [CrossRef]

- Belay Tuli, T.; Olana Terefe, T.; Ur Rashid, M.M. Telepresence Mobile Robots Design and Control for Social Interaction. Int. J. Soc. Robot. 2020, 13, 877–886. [Google Scholar] [CrossRef] [PubMed]

- Van Erp, J.B.; Sallaberry, C.; Brekelmans, C.; Dresscher, D.; Ter Haar, F.; Englebienne, G.; Van Bruggen, J.; De Greeff, J.; Pereira, L.F.S.; Toet, A.; et al. What Comes After Telepresence? Embodiment, Social Presence and Transporting One’s Functional and Social Self. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 2067–2072. [Google Scholar] [CrossRef]

- Marques, B.; Ferreira, C.; Silva, S.; Dias, P.; Santos, B.S. Is social presence (alone) a general predictor for good remote collaboration? comparing video and augmented reality guidance in maintenance procedures. Virtual Real. 2023, 24, 1–4. [Google Scholar] [CrossRef]

- Altalbe, A.A.; Khan, M.N.; Tahir, M. Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems. Sustainability 2023, 15, 5692. [Google Scholar] [CrossRef]

- Velinov, A.; Koceski, S.; Koceska, N. Review of the Usage of Telepresence Robots in Education. Balk. J. Appl. Math. Informat. 2021, 4, 27–40. [Google Scholar] [CrossRef]

- Telepresence Robots: Worldwide Markets to 2026 by Type, Component and Application-ResearchAndMarkets.com. Available online: https://apnews.com/article/technology-robotics-4d39044f033e48419591712c12f7ce08 (accessed on 12 June 2023).

- Telepresence Robots Market Worth $8 Billion by 2023 Says a New Research at ReportsnReports. Available online: https://www.prnewswire.com/news-releases/telepresence-robots-market-worth-8-billion-by-2023-says-a-new-research-at-reportsnreports-629894233.html (accessed on 12 June 2023).

- Smith, C.; Gregorio, M.; Hung, L. Facilitators and barriers to using telepresence robots in aged care settings: A scoping review protocol. BMJ Open 2021, 11, e051769. [Google Scholar] [CrossRef]

- Laniel, S.; Létourneau, D.; Grondin, F.; Labbé, M.; Ferland, F.; Michaud, F. Toward enhancing the autonomy of a telepresence mobile robot for remote home care assistance. Paladyn J. Behav. Robot. 2021, 12, 214–237. [Google Scholar] [CrossRef]

- Introduction|Telepresence in the Healthcare Setting. Available online: https://mypages.unh.edu/telepresence/introduction (accessed on 23 February 2023).

- Koceska, N.; Koceski, S.; Beomonte Zobel, P.; Trajkovik, V.; Garcia, N. A Telemedicine Robot System for Assisted and Independent Living. Sensors 2019, 19, 834. [Google Scholar] [CrossRef] [PubMed]

- Ha, V.K.L.; Chai, R.; Nguyen, H.T. A Telepresence Wheelchair with 360-Degree Vision Using WebRTC. Appl. Sci. 2020, 10, 369. [Google Scholar] [CrossRef]

- Elgibreen, H.; Ali, G.; AlMegren, R.; AlEid, R.; AlQahtani, S. Telepresence Robot System for People with Speech or Mobility Disabilities. Sensors 2022, 22, 8746. [Google Scholar] [CrossRef] [PubMed]

- Isabet, B.; Pino, M.; Lewis, M.; Benveniste, S.; Rigaud, A.S. Social Telepresence Robots: A Narrative Review of Experiments Involving Older Adults before and during the COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2021, 18, 3597. [Google Scholar] [CrossRef] [PubMed]

- Fiorini, L.; Rovini, E.; Russo, S.; Toccafondi, L.; D’Onofrio, G.; Cornacchia Loizzo, F.; Bonaccorsi, M.; Giuliani, F.; Vignani, G.; Sancarlo, D.; et al. On the Use of Assistive Technology during the COVID-19 Outbreak: Results and Lessons Learned from Pilot Studies. Sensors 2022, 22, 6631. [Google Scholar] [CrossRef] [PubMed]

- Ha, V.K.L.; Nguyen, T.N.; Nguyen, H.T. Real-time transmission of panoramic images for a telepresence wheelchair. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3565–3568. [Google Scholar] [CrossRef]

- Telehealth: Technology Meets Healthcare-Mayo Clinic. Available online: https://www.mayoclinic.org/healthy-lifestyle/consumer-health/in-depth/telehealth/art-20044878 (accessed on 23 February 2023).

- Catania, L.J. 6-Current AI applications in medical therapies and services. In Foundations of Artificial Intelligence in Healthcare and Bioscience; Catania, L.J., Ed.; Academic Press: Cambridge, MA, USA, 2021; pp. 199–291. [Google Scholar] [CrossRef]

- Carranza, K.; Day, N.; Lin, L.; Ponce, A.; Reyes, W.; Abad, A.; Baldovino, R. Akibot: A Telepresence Robot for Medical Teleconsultation. In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Leite, I.; Castellano, G.; Pereira, A.; Martinho, C.; Paiva, A. Long-Term Interactions with Empathic Robots: Evaluating Perceived Support in Children. In Social Robotics; Ge, S.S., Khatib, O., Cabibihan, J.J., Simmons, R., Williams, M.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 298–307. [Google Scholar]

- Anvari, M. Robot-Assisted Remote Telepresence Surgery. Semin. Laparosc. Surg. 2004, 11, 123–128. [Google Scholar] [CrossRef] [PubMed]

- Croghan, S.; Carroll, P.; Reade, S.; Gillis, A.; Ridgway, P. Robot Assisted Surgical Ward Rounds: Virtually Always There. J. Innov. Health Informat. 2018, 25, 041. [Google Scholar] [CrossRef]

- Sucher, J.; Todd, S.; Jones, S.; Throckmorton, T.; Turner, K.; Moore, F. Robotic telepresence: A helpful adjunct that is viewed favorably by critically ill surgical patients. Am. J. Surg. 2011, 202, 843–847. [Google Scholar] [CrossRef]

- Fowler, C.; Mayes, J. Applying Telepresence to Education. BT Technol. J. 1997, 15, 188–195. [Google Scholar] [CrossRef]

- Page, A.; Charteris, J.; Berman, J. Telepresence Robot Use for Children with Chronic Illness in Australian Schools: A Scoping Review and Thematic Analysis. Int. J. Soc. Robot. 2021, 13, 1–13. [Google Scholar] [CrossRef]

- Yovera Chavez, D.; Villena Romero, G.; Barrientos Villalta, A.; Cuadros Gálvez, M. Telepresence Technological Model Applied to Primary Education. In Proceedings of the 2020 IEEE XXVII International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 3–5 September 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Lei, M.; Clemente, I.; Liu, H.; Bell, J. The Acceptance of Telepresence Robots in Higher Education. Int. J. Soc. Robot. 2022, 14, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Leoste, J.; Virkus, S.; Talisainen, A.; Tammemäe, K.; Kangur, K.; Petriashvili, I. Higher education personnel’s perceptions about telepresence robots. Front. Robot. AI 2022, 9, 976836. [Google Scholar] [CrossRef] [PubMed]

- Liao, J.; Lu, X. Exploring the affordances of telepresence robots in foreign language learning. Lang. Learn. Technol. 2018, 22, 20–32. [Google Scholar]

- Lipton, J.; Fay, A.; Rus, D. Baxter’s Homunculus: Virtual Reality Spaces for Teleoperation in Manufacturing. IEEE Robot. Autom. Lett. 2017, 3, 179–186. [Google Scholar] [CrossRef]

- Kuo, C.Y.; Huang, C.C.; Tsai, C.H.; Shi, Y.S.; Smith, S. Development of an immersive SLAM-based VR system for teleoperation of a mobile manipulator in an unknown environment. Comput. Ind. 2021, 132, 103502. [Google Scholar] [CrossRef]

- Schmidt, L.; Hegenberg, J.; Cramar, L. User studies on teleoperation of robots for plant inspection. Ind. Robot. Int. J. 2014, 41, 6–14. [Google Scholar] [CrossRef]

- Luo, L.; Weng, D.; Hao, J.; Tu, Z.; Jiang, H. Viewpoint-Controllable Telepresence: A Robotic-Arm-Based Mixed-Reality Telecollaboration System. Sensors 2023, 23, 4113. [Google Scholar] [CrossRef]

- Schouten, A.P.; Portegies, T.C.; Withuis, I.; Willemsen, L.M.; Mazerant-Dubois, K. Robomorphism: Examining the effects of telepresence robots on between-student cooperation. Comput. Hum. Behav. 2022, 126, 106980. [Google Scholar] [CrossRef]

- Keller, L.; Pfeffel, K.; Huffstadt, K.; Müller, N.H. Telepresence Robots and Their Impact on Human-Human Interaction. In Proceedings of the Learning and Collaboration Technologies. Human and Technology Ecosystems: 7th International Conference, LCT 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 448–463. [Google Scholar]

- Rae, I.; Neustaedter, C. Robotic Telepresence at Scale. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 313–324. [Google Scholar] [CrossRef]

- Neustaedter, C.; Singhal, S.; Pan, R.; Heshmat, Y.; Forghani, A.; Tang, J. From Being There to Watching: Shared and Dedicated Telepresence Robot Usage at Academic Conferences. ACM Trans. Comput-Hum. Interact. 2018, 25, 1–39. [Google Scholar] [CrossRef]

- Lee, M.K.; Takayama, L. “Now, I have a body”: Uses and social norms for mobile remote presence in the workplace. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 33–42. [Google Scholar] [CrossRef]

- Nguyen, V.; Luo, Z.; Zhao, S.; Vu, T.; Yang, H.; Douglas, J.; Do, M. ITEM: Immersive Telepresence for Entertainment and Meetings-A Practical Approach. IEEE J. Sel. Top. Signal Process. 2014, 9, 546–561. [Google Scholar] [CrossRef]

- Zhang, G.; Hansen, J. Telepresence Robots for People with Special Needs: A Systematic Review. Int. J. Hum-Comput. Interact. 2022, 38, 1651–1667. [Google Scholar] [CrossRef]

- Kachach, R.; Perez, P.; Villegas, A.; Gonzalez-Sosa, E. Virtual Tour: An Immersive Low Cost Telepresence System. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 504–506. [Google Scholar] [CrossRef]

- Tsui, K.; Dalphond, J.; Brooks, D.; Medvedev, M.; McCann, E.; Allspaw, J.; Kontak, D.; Yanco, H. Accessible Human-Robot Interaction for Telepresence Robots: A Case Study. Paladyn, J. Behav. Robot. 2015, 6, 000010151520150001. [Google Scholar] [CrossRef]

- Youssef, K.; Said, S.; Beyrouthy, T.; Alkork, S. A Social Robot with Conversational Capabilities for Visitor Reception: Design and Framework. In Proceedings of the 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 8–10 December 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Avalos, J.; Cortez, S.; Vasquez, K.; Murray, V.; Ramos, O.E. Telepresence using the kinect sensor and the NAO robot. In Proceedings of the 2016 IEEE 7th Latin American Symposium on Circuits & Systems (LASCAS), Florianopolis, Brazil, 28 February–2 March 2016; pp. 303–306. [Google Scholar] [CrossRef]

- Facilitate a Smooth Connection between People with Pepper’s Telepresence Capabilities! Available online: https://www.aldebaran.com/en/pepper-telepresence (accessed on 18 June 2023).

- Du, J.; Do, H.; Sheng, W. Human–Robot Collaborative Control in a Virtual-Reality-Based Telepresence System. Int. J. Soc. Robot. 2021, 13, 1–12. [Google Scholar] [CrossRef]

- Zhang, J. Extended Abstract: Natural Human-Robot Interaction in Virtual Reality Telepresence Systems. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Reutlingen, Germany, 18–22 March 2018; pp. 812–813. [Google Scholar] [CrossRef]

- Young, J.; Langlotz, T.; Cook, M.; Mills, S.; Regenbrecht, H. Immersive Telepresence and Remote Collaboration using Mobile and Wearable Devices. IEEE Trans. Vis. Comput. Graph. 2019, 5, 1908–1918. [Google Scholar] [CrossRef]

- Gaemperle, L.; Seyid, K.; Popovic, V.; Leblebici, Y. An Immersive Telepresence System Using a Real-Time Omnidirectional Camera and a Virtual Reality Head-Mounted Display. In Proceedings of the IEEE International Symposium on Multimedia, Taichung, Taiwan, 10–12 December 2015; pp. 175–178. [Google Scholar] [CrossRef]

- Osawa, M.; Imai, M. A Robot for Test Bed Aimed at Improving Telepresence System and Evasion from Discomfort Stimuli by Online Learning. Int. J. Soc. Robot. 2020, 12, 187–199. [Google Scholar] [CrossRef]

- Matsumura, R.; Shiomi, M.; Nakagawa, K.; Shinozawa, K.; Miyashita, T. A Desktop-Sized Communication Robot: “robovie-mR2”. J. Robot. Mechatronics 2016, 28, 107–108. [Google Scholar] [CrossRef]

- Steed, A.; Steptoe, W.; Oyekoya, W.; Pece, F.; Weyrich, T.; Kautz, J.; Friedman, D.; Peer, A.; Solazzi, M.; Tecchia, F.; et al. Beaming: An Asymmetric Telepresence System. IEEE Comput. Graph. Appl. 2012, 32, 10–17. [Google Scholar] [CrossRef] [PubMed]

- Jung, M.; Kim, J.; Han, K.; Kim, K. Social Telecommunication Experience with Full-Body Ownership Humanoid Robot. Int. J. Soc. Robot. 2022, 14, 1–14. [Google Scholar] [CrossRef]

- Heshmat, Y.; Jones, B.; Xiong, X.; Neustaedter, C.; Tang, A.; Riecke, B.; Yang, L. Geocaching with a Beam: Shared Outdoor Activities through a Telepresence Robot with 360 Degree Viewing. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–13. [Google Scholar] [CrossRef]

- Katayama, N.; Inoue, T.; Shigeno, H. Sharing the Positional Relationship with the Bidirectional Telepresence Robots. In Proceedings of the 2018 IEEE 22nd International Conference on Computer Supported Cooperative Work in Design (CSCWD), Nanjing, China, 9–11 May 2018; pp. 325–329. [Google Scholar] [CrossRef]

- Cardenas, I.S.; Vitullo, K.A.; Park, M.; Kim, J.H.; Benitez, M.; Chen, C.; Ohrn-McDaniels, L. Telesuit: An Immersive User-Centric Telepresence Control Suit. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 654–655. [Google Scholar] [CrossRef]

- Luo, H.; Pan, T.S.; Pan, J.S.; Chu, S.C.; Yang, B. Development of a Three-Dimensional Multimode Visual Immersive System With Applications in Telepresence. IEEE Syst. J. 2015, 4, 2818–2828. [Google Scholar] [CrossRef]

- Osawa, M.; Okuoka, K.; Takimoto, Y.; Imai, M. Is Automation Appropriate? Semi-autonomous Telepresence Architecture Focusing on Voluntary and Involuntary Movements. Int. J. Soc. Robot. 2020, 12, 1119–1134. [Google Scholar] [CrossRef]

- Hinterseer, P.; Steinbach, E.; Buss, M. A novel, psychophysically motivated transmission approach for haptic data streams in telepresence and teleaction systems. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Singapore, 22–27 May 2005; Volume 2, pp. ii/1097–ii/1100. [Google Scholar] [CrossRef]

- Hinterseer, P.; Hirche, S.; Chaudhuri, S.; Steinbach, E.; Buss, M. Perception-Based Data Reduction and Transmission of Haptic Data in Telepresence and Teleaction Systems. IEEE Trans. Signal Process. 2008, 56, 588–597. [Google Scholar] [CrossRef]

- Syawaludin, M.F.; Kim, C.; Hwang, J.I. Hybrid Camera System for Telepresence with Foveated Imaging. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1173–1174. [Google Scholar] [CrossRef]

- Kiselev, A.; Kristoffersson, A.; Loutfi, A. The effect of field of view on social interaction in mobile Robotic telepresence systems. In Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3 March 2014; pp. 214–215. [Google Scholar] [CrossRef]

- Pandav, K.; Te, A.G.; Tomer, N.; Nair, S.S.; Tewari, A.K. Leveraging 5G technology for robotic surgery and cancer care. Cancer Rep. 2022, 5, e1595. [Google Scholar] [CrossRef]

- Qureshi, H.N.; Manalastas, M.; Ijaz, A.; Imran, A.; Liu, Y.; Al Kalaa, M. Communication Requirements in 5G-Enabled Healthcare Applications: Review and Considerations. Healthcare 2022, 10, 293. [Google Scholar] [CrossRef] [PubMed]

- Tota, P.; Vaida, M.F. Light Fidelity (Li-Fi) Communications Applied to Telepresence Robotics. In Proceedings of the 2020 21th International Carpathian Control Conference (ICCC), High Tatras, Slovakia, 27–29 October 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Ţoţa, P.; Vaida, M.F. Solutions for the design and control of telepresence robots that climb obstacles. In Proceedings of the 2020 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj Napoca, Romania, 19–21 May 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Soomro, S.R.; Eldes, O.; Urey, H. Towards Mobile 3D Telepresence Using Head-Worn Devices and Dual-Purpose Screens. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Reutlingen, Germany, 18–22 March 2018; pp. 1–2. [Google Scholar] [CrossRef]

- Hepperle, D.; Ödell, H.; Wölfel, M. Differences in the Uncanny Valley between Head-Mounted Displays and Monitors. In Proceedings of the 2020 International Conference on Cyberworlds (CW), Caen, France, 29 September–22 October 2020; pp. 41–48. [Google Scholar] [CrossRef]

- Mbanisi, K.; Gennert, M.; Li, Z. SocNavAssist: A Haptic Shared Autonomy Framework for Social Navigation Assistance of Mobile Telepresence Robots. In Proceedings of the 2021 IEEE 2nd International Conference on Human-Machine Systems (ICHMS), Magdeburg, Germany, 8–10 September 2021; pp. 1–3. [Google Scholar] [CrossRef]

- Zhang, G.; Hansen, J.P.; Minakata, K.; Alapetite, A.; Wang, Z. Eye-Gaze-Controlled Telepresence Robots for People with Motor Disabilities. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 574–575. [Google Scholar] [CrossRef]

- Hung, L.; Wong, J.; Smith, C.; Berndt, A.; Gregorio, M.; Horne, N.; Jackson, L.; Mann, J.; Wada, M.; Young, E. Facilitators and barriers to using telepresence robots in aged care settings: A scoping review. J. Rehabil. Assist. Technol. Eng. 2022, 9, 20556683211072385. [Google Scholar] [CrossRef]

- Perifanou, M.; Economides, A.A.; Häfner, P.; Wernbacher, T. Mobile Telepresence Robots in Education: Strengths, Opportunities, Weaknesses, and Challenges. In Proceedings of the Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption, Toulouse, France, 12–16 September 2022; Springer: Cham, Switzerland, 2022; pp. 573–579. [Google Scholar]

- Teng, R.; Ding, Y.; See, K.C. Use of Robots in Critical Care: Systematic Review. J. Med. Internet Res. 2022, 24, e33380. [Google Scholar] [CrossRef]

- Chu, H.; Ma, S.; De la Torre, F.; Fidler, S.; Sheikh, Y. Expressive Telepresence via Modular Codec Avatars. In Proceedings of the Computer Vision—ECCV 2020, Milan, Italy, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 330–345. [Google Scholar]

- Yoon, L.; Yang, D.; Chung, C.; Lee, S.H. A Full Body Avatar-Based Telepresence System for Dissimilar Spaces. arXiv 2021, arXiv:abs/2103.04380. [Google Scholar]

- Cymbalak, D.; Jakab, F.; Szalay, Z.; Turňa, J.; Bilský, E. Extending Telepresence Technology as a Middle Stage between Humans to AI Robots Transition in the Workplace of the Future. In Proceedings of the 2019 17th International Conference on Emerging eLearning Technologies and Applications (ICETA), Virtual, 27–28 September 2019; pp. 133–138. [Google Scholar] [CrossRef]

- Said, S.; AlAsfour, G.; Alghannam, F.; Khalaf, S.; Susilo, T.; Prasad, B.; Youssef, K.; Alkork, S.; Beyrouthy, T. Experimental Investigation of an Interactive Animatronic Robotic Head Connected to ChatGPT. In Proceedings of the 2023 5th International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, France, 7–9 June 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Bhattacharyya, A.; Sau, A.; Roychoudhury, R.D.; Banerjee, S.; Sarkar, C.; Pramanick, P.; Ganguly, M.; Bhowmick, B.; Purushothaman, B. Teledrive: An Intelligent Telepresence Solution for “Collaborative Multi-presence” through a Telerobot. In Proceedings of the 2022 14th International Conference on COMmunication Systems & NETworkS (COMSNETS), Bengaluru, India, 3–8 January 2022; pp. 433–435. [Google Scholar] [CrossRef]

- Marques, B.; Silva, S.; Alves, J.; Araújo, T.; Dias, P.; Santos, B.S. A Conceptual Model and Taxonomy for Collaborative Augmented Reality. IEEE Trans. Vis. Comput. Graph. 2022, 28, 5113–5133. [Google Scholar] [CrossRef]

- Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Zhang, X.; Wang, S.; He, W.; Yan, Y.; Ji, H. AR/MR Remote Collaboration on Physical Tasks: A Review. Robot. Comput-Integr. Manuf. 2021, 72, 102071. [Google Scholar] [CrossRef]

- Sereno, M.; Wang, X.; Besançon, L.; McGuffin, M.J.; Isenberg, T. Collaborative Work in Augmented Reality: A Survey. IEEE Trans. Vis. Comput. Graph. 2022, 28, 2530–2549. [Google Scholar] [CrossRef] [PubMed]

- De Belen, R.A.J.; Nguyen, H.; Filonik, D.; Del Favero, D.; Bednarz, T. A systematic review of the current state of collaborative mixed reality technologies: 2013–2018. AIMS Electron. Electr. Eng. 2019, 3, 181–223. [Google Scholar] [CrossRef]

- Wang, P.; Zhang, S.; Billinghurst, M.; Bai, X.; He, W.; Wang, S.; Sun, M.; Zhang, X. A comprehensive survey of AR/MR-based co-design in manufacturing. Eng. Comput. 2020, 36, 1715–1738. [Google Scholar] [CrossRef]

- Kim, S.; Billinghurst, M.; Kim, K. Multimodal interfaces and communication cues for remote collaboration. J. Multimodal User Interfaces 2020, 14, 313–319. [Google Scholar] [CrossRef]

- Kim, K.; Schubert, R.; Hochreiter, J.; Bruder, G.; Welch, G. Blowing in the wind: Increasing social presence with a virtual human via environmental airflow interaction in mixed reality. Comput. Graph. 2019, 83, 23–32. [Google Scholar] [CrossRef]

- Kristoffersson, A.; Eklundh, K.; Loutfi, A. Measuring the Quality of Interaction in Mobile Robotic Telepresence: A Pilot’s Perspective. Int. J. Soc. Robot. 2013, 5, 89–101. [Google Scholar] [CrossRef]

- Chang, E.; Kim, H.T.; Yoo, B. Virtual Reality Sickness: A Review of Causes and Measurements. Int. J. Hum-Comput. Interact. 2020, 36, 1658–1682. [Google Scholar] [CrossRef]

- Allison, R.; Harris, L.; Jenkin, M.; Jasiobedzka, U.; Zacher, J. Tolerance of temporal delay in virtual environments. In Proceedings of the IEEE Virtual Reality 2001, Yokohama, Japan, 13–17 March 2001; pp. 247–254. [Google Scholar] [CrossRef]

- Tilikete, C.; Pisella, L.; Pélisson, D.; Vighetto, A. Oscillopsies: Approches physiopathologique et thérapeutique. Rev. Neurol. 2007, 163, 421–439. [Google Scholar] [CrossRef]

- Tilikete, C.; Vighetto, A. Oscillopsia: Causes and management. Curr. Opin. Neurol. 2011, 24, 38–43. [Google Scholar] [CrossRef]

- Buhler, H.; Misztal, S.; Schild, J. Reducing VR Sickness Through Peripheral Visual Effects. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Reutlingen, Germany, 18–22 March 2018; pp. 517–519. [Google Scholar] [CrossRef]

- Suomalainen, M.; Sakcak, B.; Widagdo, A.; Kalliokoski, J.; Mimnaugh, K.J.; Chambers, A.P.; Ojala, T.; LaValle, S.M. Unwinding Rotations Improves User Comfort with Immersive Telepresence Robots. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Online, 7–10 March 2022; pp. 511–520. [Google Scholar] [CrossRef]

| Journal/Conference | Cited Papers |
|---|---|
| International Journal of Social Robotics | 10 |
| Sensors | 4 |
| IEEE Conference on Virtual Reality and 3D User Interfaces | 4 |
| IEEE Transactions on Visualization and Computer Graphics | 3 |
| ACM/IEEE International Conference on Human-Robot Interaction (HRI) | 3 |
| Paladyn, Journal of Behavioral Robotics | 2 |
| Consciousness and Cognition | 2 |
| Frontiers in Robotics and AI | 2 |
| International Journal of Human-Computer Interaction | 2 |
| International Conference on Bio-Engineering for Smart Technologies | 2 |

| System | Remote Side | User Side | Other Features |
|---|---|---|---|
| Virtual-reality-based telepresence system [67] | Mobile platform, computer, microphone array, speakers and RGB-D camera | Head-mounted display | 3D visual data transmission to the user; the user's intended head movements are used to control the motion of the mobile platform |
| RDW telepresence systems [68] | Mobile platform and 360-degree camera | Head-mounted display | Motion control of the mobile platform using the user’s walking |
| Framework for immersive telepresence [69] | Handheld mobile device and camera or panoramic camera | Head-mounted display | The user and remote user communicate through voice, and hand gestures of the user can be transmitted to the camera side |
| Immersive telepresence system [70] | Fixed panoptic camera | Head-mounted display | The user can naturally look around due to the omnidirectionality of the panoptic camera |
| Akibot [40] | Mobile platform with a screen and devices like an otoscope and a stethoscope | A computer | Designed to be maneuverable and used in medical consultation between doctors and patients |
| Semiautonomous telepresence [71] | Robovie-mR2 robot [72] | A computer | The robot is semiautonomous, automating movements with and without the intention of the user |
| Beaming system used in [73] | VR system with surround visuals and audio, plus tactile, haptic and biosensing systems | Head-mounted display and motion-tracking suit | Recreates a real environment in a virtual model using portable or mobile technical interventions |
| Beaming system used in [74] | NAO V6 robot with two webcams | Head-mounted display and motion-capture system | The system makes the robot mimic the human user’s movement |
| Geocaching activity shown in [75] | Beam+ robot with a 360-degree camera | Smartphone in a plastic case worn by the user and an iMac computer | The robot is driven by the user using a PlayStation 3 controller and a desktop application |
| Bidirectional telepresence in [76] | Beam+ robot with a 360-degree camera | Smartphone in a plastic case worn by the user and an iMac computer | The robot is driven by the user using a PlayStation 3 controller and a desktop application |
| Telesuit in [77] | A humanoid robot | A suit with sensors and a head-mounted display | The suit is equipped with inertial measurement units and other sensors to capture the operator's movements and monitor the operator's health |
| Mobile Robotic Presence system in [34] | Loomo mobile robot | A mobile system | The system allows for text-to-speech and emoji communication, with audio and video streaming and navigation for mobility |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Youssef, K.; Said, S.; Al Kork, S.; Beyrouthy, T. Telepresence in the Recent Literature with a Focus on Robotic Platforms, Applications and Challenges. Robotics 2023, 12, 111. https://doi.org/10.3390/robotics12040111
