The Influence of Visible Cables and Story Content on Perceived Autonomy in Social Human–Robot Interaction

Abstract

1. Introduction

2. Related Work

2.1. Robot Autonomy

2.2. Robot-Task Fit

3. Methods

3.1. Participants

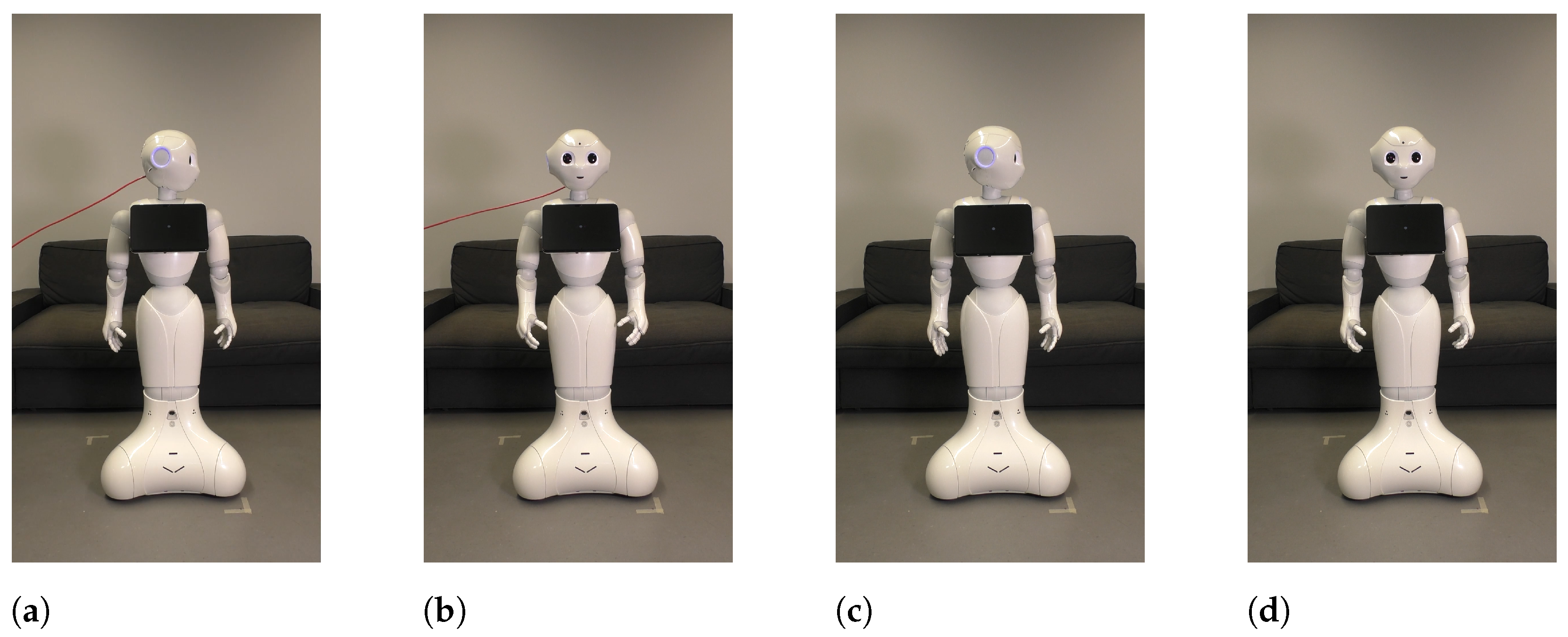

3.2. Task and Apparatus

3.3. Design

3.4. Dependent Measures

3.5. Procedure

4. Results

4.1. Autonomy and Role Perception

4.2. General Perception

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roesler, E.; Steinhaeusser, S.C.; Lugrin, B.; Onnasch, L. The Influence of Visible Cables and Story Content on Perceived Autonomy in Social Human–Robot Interaction. Robotics 2023, 12, 3. https://doi.org/10.3390/robotics12010003
