Abstract

Holistic local visual homing based on warping of panoramic images relies on some simplifying assumptions about the images and the environment to make the problem more tractable. One of these assumptions is that images are captured on flat ground without tilt. While this might be true in some environments, it poses a problem for a wider real-world application of warping. An extension of the warping framework is proposed where tilt-corrected images are used as inputs. The method combines the tilt correction of panoramic images with a systematic search through hypothetical tilt parameters, using an image distance measure produced by warping as the optimization criterion. This method not only improves the homing performance of warping on tilted images, but also allows for a good estimation of the tilt without requiring additional sensors or external image alignment. Experiments on two newly collected tilted panoramic image databases confirm the improved homing performance and the viability of the proposed tilt-estimation scheme. Approximations of the tilt-correction image transformations and multiple direct search strategies for the tilt estimation are evaluated with respect to their runtime vs. estimation quality trade-offs to find a variant of the proposed methods which best fulfills the requirements of practical applications.

1. Introduction

A fundamental building block of visual robot navigation methods is the estimation of relative camera poses from images taken at nearby locations, in the following referred to as local visual homing. In this publication, we focus on methods using pairs of panoramic images, e.g., obtained from an upward facing camera equipped with a fisheye lens.

Local visual homing can be coarsely divided into two main branches: feature-based (e.g., [,]) and holistic methods (e.g., [,]). Feature-based methods detect interest points in images, describe features in the vicinity of the interest points and build a pose estimation pipeline on matched feature descriptors. Holistic methods use the images as a whole at the pixel level without any previous feature extraction. One holistic local visual homing method is MinWarping, first proposed in [] as a reformulation and extension of 2D-warping [] which in turn derives from 1D-warping []. Given two input images, one taken at the current position (current view) and one at a previously visited position (snapshot), MinWarping computes a compass angle and the angle of a home vector pointing from the current position to the snapshot position. MinWarping includes a systematic search through these two angles and optimizes a pixelwise image distance measure. The computations are based on geometric relationships between hypothetical camera poses and on pixelwise matches between the images under simplifying assumptions. A direct comparison of feature-based methods and MinWarping regarding quality, speed, and robustness can be found in []. In this comparison, no method was clearly predominant considering all evaluation criteria. MinWarping produced competitive results while being very fast.

Multiple improvements have been proposed for MinWarping to handle illumination changes. MinWarping in combination with edge-filtered input images and a suitable distance function reaches good homing performance under varying illumination conditions in indoor environments [,]. Additional preprocessing is able to improve the performance in challenging outdoor environments by suppressing misleading cloud edges [].

Another important issue for visual homing is robustness against the tilt of the camera. MinWarping is built on the assumption of an underlying planar movement. In outdoor environments, this is violated by a slanted or uneven ground; in indoor environments, especially carpet borders or doorsteps lead to camera tilt. These violations of the planar-motion assumption lead to errors in the home-direction estimates (see Figure 1), which increase with the amount of tilt (see [] Figure 6.4). Tilted images can be corrected by an additional sensor, such as an inertial measurement unit, but this involves higher costs and a further calibration effort and is not pursued here. For indoor environments, an approach for visual tilt estimation was proposed in [] using vertical image edges and the vanishing point theory. In [], a visual 3D compass was presented to compute the rotation between two hemispherical images. This compass was based on the concept of real spherical harmonics and an exhaustive search with a coarse-to-fine approach. The main problem of the 3D compass was that the results were only reliable if no or only small translations occurred between the camera positions. However, in many applications, the translational offset between camera poses cannot be neglected. A generalization of 2D-warping to handle nonplanar movement was proposed in [] and was called 3D-warping. The approach of 3D-warping was related to the 3D compass and was also based on real spherical harmonics. Nonplanar movements were divided into a translational and a rotational part, and warped versions of the current view were computed for a set of movement hypotheses. The best match between the snapshot and all warped current views gave the estimated 3D movement. For nonplanar movement, 3D-warping increased the homing accuracy compared to 2D-warping and MinWarping, especially when computed on skyline-segmented images. However, for planar movements, 2D-warping and MinWarping outperformed 3D-warping.

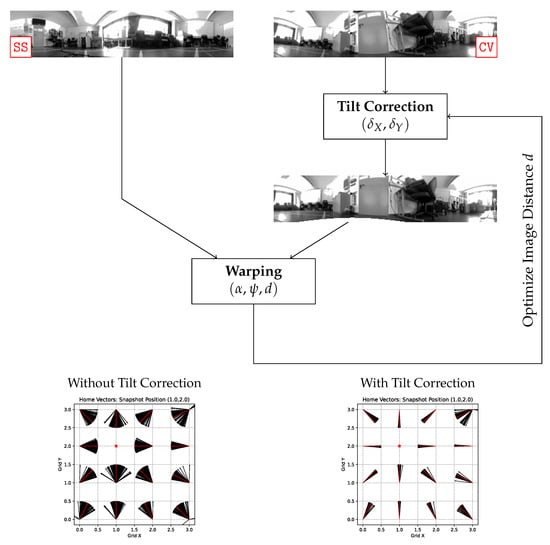

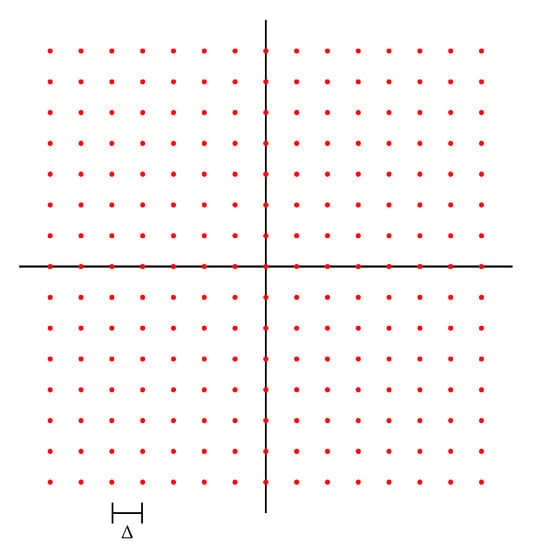

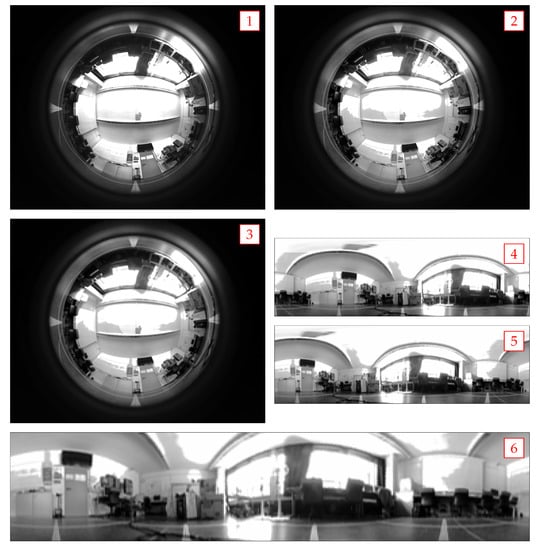

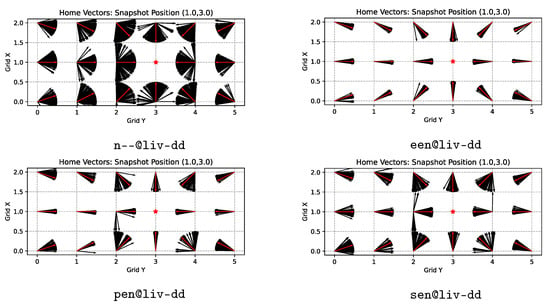

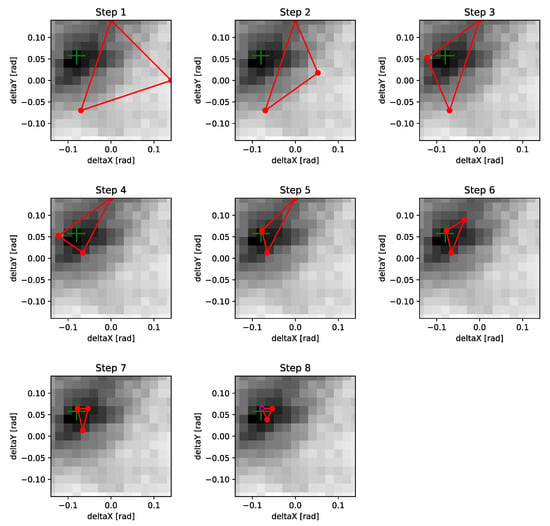

Figure 1.

Overview and exemplary results of the proposed tilt-correction warping methods; see the text for a detailed explanation of the algorithm. The vector-field plots below visualize the home-direction estimates by warping at each position over the different tilts in the database, for the original warping algorithm without tilt correction (left) and for the proposed extension incorporating a search for the tilt correction of the current view image (right). Warping with tilt-corrected images shows a markedly reduced spread of the direction estimates. The sample without tilt correction is produced from the n--@lab-dd experiment. The sample with tilt correction is produced from the pen@lab-dd experiment, showing the results of one of the heuristic search strategies, pattern search.

In this paper, we propose to include tilt estimation in the MinWarping framework. We present a solution to camera-pose estimation between one upright and one tilted view; the more general problem of two tilted views will be explored in future work. Figure 2 visualizes both this simplified case and the more general one. The main approach is to perform a search over two tilt parameters within MinWarping. The potentially tilted view is transformed according to a set of hypothetical tilt parameters and matched to the upright view by using MinWarping as the objective function. A search for the best match is performed. Different search strategies are implemented and evaluated with respect to speed and homing performance. At the moment, the tilt angles are restricted to small values, as are typical in indoor settings. The proposed extension of MinWarping improves the camera-pose estimation, but is also relevant in other applications, e.g., when MinWarping is used for loop-closure detection [], where invariance to tilt is also likely to be beneficial.

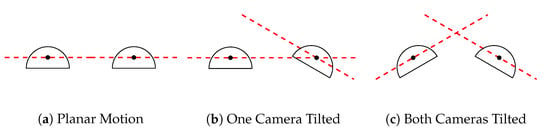

Figure 2.

Progressive violations of the planar-motion assumption. The panoramic camera is visualized by the outermost lens as it appears in an ultrawide-angle fisheye lens system. (a) depicts the planar-motion assumption underlying the original warping method. None of the cameras is tilted and the horizontal plane (red, dashed line) of each intersects with the optical center (black dots) of the other, i.e., both horizontal planes are identical. (b) depicts a first violation of this planar-motion assumption by tilting one of the cameras out of this shared horizontal plane. The optical center of this tilted camera still lies within the horizontal plane of the not-tilted camera, but not vice versa. This intermediate case is the one handled by our newly proposed method, when tilt correction is applied to the view of the camera depicted on the right side. Finally, (c) depicts the more general case of both cameras tilted out of a shared plane connecting the optical centers. The horizontal planes now intersect outside of both of the cameras. This more general case is left for future work and might also extend to or even require out-of-plane translations (see [] for the case of the generalized 3D-warping).

The paper is structured as follows: In Section 2, the coordinate systems and parametrization for a small out-of-plane tilt are introduced. Using these, solutions for transforming panoramic images for tilt correction are derived based on previous work []. These are combined with warping as an objective function via one of three proposed direct search methods to optimize for the ideal tilt estimation. To test the proposed methods, two newly collected indoor panoramic image databases are presented in Section 3, comprising images with a systematic tilt applied to the camera. In Section 4 and Section 5, the homing, tilt-correction, and runtime performance of the proposed methods are evaluated and discussed in the context of practical applicability with suggestions for further improvements or open research questions. Finally, a conclusion is drawn in Section 6.

2. Methods

Figure 1 provides an overview of the proposed extension of the warping algorithm by tilt-correction methods and gives an impression of the improvement of the home-direction estimates achieved by including a tilt correction. The inputs are two panoramic images: the snapshot (ss) and the potentially tilted current view (cv). The current view undergoes a tilt-correcting transformation as derived in Section 2.3. This explicit transformation of the image according to hypothetical movement parameters follows the same general idea as the original warping method. The transformations account for a small tilt of the camera, which leads to deviations from the planar-motion assumption underlying the warping algorithm. The movement parameters of this transformation are the two tilt parameter angles, which are introduced in Section 2.1 together with their corresponding coordinate systems.

From the snapshot image and the tilt-corrected current view, the warping algorithm estimates the two relative movement parameters between the image locations (the angle of the home vector and the compass angle) together with an image distance measure d. This image distance d is in turn used as an optimization criterion (as motivated in Section 2.2) to search for the best-matching tilt correction using one of the direct, gradient-free search strategies proposed in Section 2.4. This optimization scheme requires multiple evaluations of the original warping method (one for each transformed current view) to estimate the tilt correction; this is indicated in Figure 1 by the loop from the warping block back to the tilt-correction block. This loop is where the proposed search strategies are implemented, operating on a search space spanned by the two tilt parameter angles.

While the primary intention of this extension is to improve the homing performance of the warping method in case of small out-of-plane tilts of one of the cameras (as visualized in Figure 1 in the vector-field plots), the estimation of the tilt parameter angles is a first step towards a full three-dimensional, relative pose estimation between two panoramic images.

2.1. Tilt-Angle Representations

For describing the tilt of a robot with respect to the horizontal reference plane, i.e., flat ground, two representations are proposed: axis-angle and roll-pitch representations. In both cases, the tilt is assumed to be a small rotation out of the horizontal plane without affecting the view direction of the robot. The initial (not-tilted) coordinate system is mounted on the robot such that the X- and Y-axis span the horizontal plane, where the X-axis is pointing in the forward direction of the robot and the Y-axis is pointing to the left. The Z-axis is pointing upwards. A second coordinate system is then obtained by tilting the first one out of the horizontal plane. To be fully specified, the tilting out of a 2D plane requires two parameters as this is a 2-degree-of-freedom (DoF) subset of the general 3-DoF problem of describing arbitrary 3D orientations, which would also include changes in the view direction of the robot. In case of the roll-pitch representation, these two parameters are given by consecutive rotations about the two coordinate axes X and Y spanning the reference plane. This can be expressed by multiplying the corresponding 3D basic rotation matrices:

Here and in the following, is short for , and is short for . The two parameters are the tilt angles about their corresponding axes, together controlling the direction and total amount of tilt. An axis-angle representation can be constructed by specifying only a single rotation by an angle about a tilt axis that remains fixed. Here, this tilt axis can be specified by only one parameter, which places it within the reference plane relative to the X-axis:

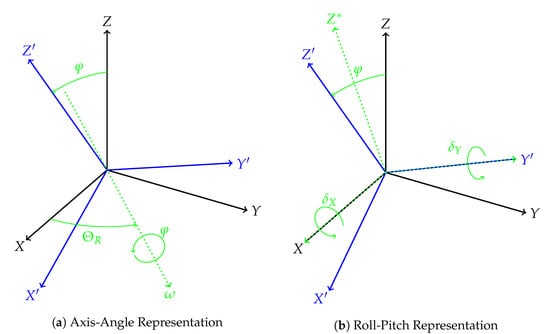

Thus, the two parameters of the axis-angle representation independently control the direction of the tilt axis and the total amount of tilt. The layout of the coordinate systems and the effect of the tilt according to both representations are depicted in Figure 3.
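The two representations can be made concrete with basic rotation matrices. The following Python sketch is only an illustration under stated assumptions: the names `roll`, `pitch`, `phi` (tilt direction), and `theta` (total tilt) are hypothetical, the roll-pitch matrix is composed as an X rotation followed by a Y rotation about the already tilted axis (the order given in the caption of Figure 3), and the axis-angle matrix is built with Rodrigues' formula for an in-plane axis at angle `phi` from the X-axis. In both cases, the total tilt is read off as the angle between the original and the tilted Z-axis.

```python
from __future__ import annotations

import math


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_x(angle: float) -> list[list[float]]:
    """Basic rotation about the X-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(angle: float) -> list[list[float]]:
    """Basic rotation about the Y-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def roll_pitch_matrix(roll: float, pitch: float) -> list[list[float]]:
    """Roll about X first, then pitch about the already tilted Y-axis.

    A rotation about a body-fixed (already rotated) axis composes by
    right-multiplication, hence R = R_X(roll) @ R_Y(pitch).
    """
    return matmul(rot_x(roll), rot_y(pitch))


def axis_angle_matrix(phi: float, theta: float) -> list[list[float]]:
    """Rotation by theta about the in-plane unit axis (cos phi, sin phi, 0)."""
    kx, ky = math.cos(phi), math.sin(phi)
    k = [[0.0, 0.0, ky], [0.0, 0.0, -kx], [-ky, kx, 0.0]]  # cross-product matrix (kz = 0)
    k2 = matmul(k, k)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    # Rodrigues' formula: R = I + sin(theta) K + (1 - cos(theta)) K^2
    return [[(1.0 if i == j else 0.0) + s * k[i][j] + c * k2[i][j] for j in range(3)]
            for i in range(3)]


def total_tilt(r: list[list[float]]) -> float:
    """Angle between the original Z-axis and the tilted Z-axis r @ e_z."""
    return math.acos(max(-1.0, min(1.0, r[2][2])))
```

For the axis-angle matrix, `total_tilt` returns `theta` itself; for the roll-pitch matrix it returns arccos(cos(roll)·cos(pitch)), which for small angles is close to sqrt(roll² + pitch²). For small tilts, the two matrices with matching parameters agree to first order.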

Figure 3.

Depiction of a coordinate system tilted according to the two proposed representations. The axis-angle representation (a) describes the tilt as a single rotation by a small angle about the tilt axis, which lies within the reference plane of the nontilted coordinate system and whose direction is described by an angle relative to the X-axis. This direction angle does not correspond to a real rotation of the robot, as it only specifies the axis of rotation. Furthermore, it typically is not small, i.e., it can range from 0° to 360°, as the robot may tilt in any direction. The roll-pitch representation (b) describes the tilt as two consecutive small rotations about the X- and Y-axes, which span the reference plane of the nontilted coordinate system. As two rotations generally do not commute, their order matters: the X-axis rotation is always carried out first, i.e., the Y-axis rotation is about the already tilted Y-axis. The green, dotted coordinate system indicates this intermediate step in which only the X-axis rotation has been performed. In both representations, the total amount of tilt is given by the angle between the nontilted and the tilted Z-axis, both pointing upwards.

As both representations are useful for different parts of the tilt-correction process described in the following, conversions between the two parameter sets are derived under the assumption of a small tilt, i.e., the roll and pitch angles and the total amount of tilt are all close to 0. This approximation is required since, according to Euler’s rotation theorem [], the equivalence of roll-pitch and axis-angle representations can only be established exactly if three angles are used for both representations.

The first set of conversions determines the tilt direction and the amount of tilt of the axis-angle representation from the rotations about the X- and Y-axis of the roll-pitch representation:

Equation (4) can also be interpreted as measuring the magnitude of the tilt of the roll-pitch representation, which is useful, for example, when analyzing results with respect to the amount of tilt irrespective of the direction in which the robot is tilted.

The other set of conversions computes the opposite direction, i.e., it determines the tilt angles about the X- and Y-axes from the tilt direction and the total amount of tilt given by the axis-angle representation:

This can be thought of as projecting the total amount of tilt onto its X- and Y-axis components. Both conversion directions are derived in Appendix A.1.
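A minimal sketch of both conversion directions, assuming the tilt direction is measured from the X-axis towards the Y-axis (mathematically positive) and using the first-order small-tilt relation in which roll and pitch act as the X and Y components of a rotation vector lying in the reference plane. The function names are hypothetical, and the paper's conversion equations may differ from this sketch in sign convention.

```python
from __future__ import annotations

import math


def roll_pitch_to_axis_angle(roll: float, pitch: float) -> tuple[float, float]:
    """Small-tilt conversion to (tilt direction phi, total tilt theta)."""
    theta = math.hypot(roll, pitch)                   # magnitude of the tilt
    phi = math.atan2(pitch, roll) % (2.0 * math.pi)   # direction in [0, 2*pi)
    return phi, theta


def axis_angle_to_roll_pitch(phi: float, theta: float) -> tuple[float, float]:
    """Project the total tilt onto its X- and Y-axis components."""
    return theta * math.cos(phi), theta * math.sin(phi)


phi, theta = roll_pitch_to_axis_angle(0.02, -0.03)
roll, pitch = axis_angle_to_roll_pitch(phi, theta)  # recovers (0.02, -0.03)
```

For roll = pitch = 0.05 rad, the small-tilt magnitude sqrt(roll² + pitch²) and the exact angle between the Z-axes, arccos(cos² 0.05), differ by less than 1e-4 rad, which illustrates why the approximation is acceptable for small tilts.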

2.2. Outline of the Tilt-Correction Warping Algorithm

To give an outline of the tilt-correction extension of warping (here meaning MinWarping according to []), we ignore the inner details and interpret warping as a function mapping two images, the snapshot (goal location) and the current view, to the two warp parameters and the corresponding image distance d:

The image distance d measures how well the two images match after applying the transformation given by the two warp parameters. It is assumed that the smaller the distance d that warping yields, the better the ss-cv image pair matches, i.e., it is easier to warp one image into the other for a smaller image distance d. Furthermore, it is assumed that a smaller d coincides with more accurate estimates of the movement parameters.

This interpretation of the image distance d allows warping itself to be used as a distance measure/criterion for other optimization algorithms: given a transformation for the tilt correction of an image with some parametrization of the tilt, warping can serve as a quality measure for image matching to optimize this parametrization with respect to the distance measure d. This is done by running warping multiple times while applying a systematic tilt distortion to one image and selecting the result with the minimal distance measure d:

The best set of warp parameters is then obtained by warping using the tilt transformation with the best set of tilt parameters found by the optimization procedure:

The complexity of this sketched algorithm scales linearly with n, the number of warping invocations necessary to find the optimum. This number depends on the strategy used to realize the minimization as well as on the resolution of the tilt-parameter space.
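The outline above can be sketched in Python as a higher-order function. Everything here is hypothetical scaffolding: `warp(ss, cv)` stands in for MinWarping and is assumed to return a tuple `(home_angle, compass_angle, d)`, `tilt_correct(cv, tilt)` stands in for one of the tilt-correction transformations, and `search(objective)` is any of the direct search strategies, returning the tilt with the smallest objective value. The wrapper counts objective evaluations, which corresponds to the number n of warping invocations.

```python
from __future__ import annotations

from typing import Any, Callable, Tuple

Tilt = Tuple[float, float]
WarpResult = Tuple[float, float, float]  # (home_angle, compass_angle, distance d)


def tilt_corrected_warping(
    ss: Any,
    cv: Any,
    warp: Callable[[Any, Any], WarpResult],
    tilt_correct: Callable[[Any, Tilt], Any],
    search: Callable[[Callable[[Tilt], float]], Tilt],
) -> tuple[Tilt, WarpResult, int]:
    """Search for the tilt correction minimizing warping's image distance d."""
    calls = 0

    def objective(tilt: Tilt) -> float:
        nonlocal calls
        calls += 1
        return warp(ss, tilt_correct(cv, tilt))[2]  # only d is used as the criterion

    best_tilt = search(objective)
    # Final warping run with the best tilt yields the warp parameters.
    return best_tilt, warp(ss, tilt_correct(cv, best_tilt)), calls


# Toy stand-ins (not real images): an "image" is a 2-tuple, the tilt correction
# subtracts the tilt, and d is the squared difference to the snapshot.
def toy_warp(ss: Tilt, cv: Tilt) -> WarpResult:
    return 0.0, 0.0, (cv[0] - ss[0]) ** 2 + (cv[1] - ss[1]) ** 2


def toy_correct(cv: Tilt, tilt: Tilt) -> Tilt:
    return cv[0] - tilt[0], cv[1] - tilt[1]


grid = [(i * 0.01, j * 0.01) for i in range(-3, 4) for j in range(-3, 4)]
tilt, result, n = tilt_corrected_warping((0.0, 0.0), (0.02, -0.01), toy_warp,
                                         toy_correct, lambda f: min(grid, key=f))
```

With a brute-force `search` over a 7 × 7 grid, the objective (and thus warping) is invoked exactly 49 times before the final run, in line with the linear dependence on n.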

2.3. Tilt Correction in Panoramic Images

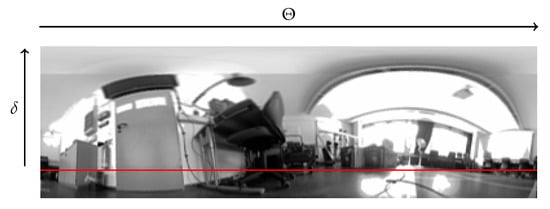

In the proposed extension of the warping method, a panoramic image obtained from a potentially tilted camera is tilted back according to hypothetical tilt-correction angles. For this, it is necessary to describe how an image is affected by tilt. Following [], a relation between the angular pixel coordinates and the 3D coordinate system is established by projecting the panoramic image onto (parts of) the unit sphere. This relation is illustrated in Figure 4.

Figure 4.

Angular coordinates of pixels in the panoramic image: modified spherical coordinates where the vertical angle is measured from the horizon (red line) which lies somewhere inside the vertical range of the image. The horizontal angle runs from left to right in the reading direction.

The conversion between Cartesian and these modified spherical coordinates for the original and tilted coordinate system is then expressed in the following way:

Tilt correction is applied to the image by copying, for each destination pixel of the corrected image, the corresponding source pixel of the potentially tilted image. A relation between angular coordinates and pixel indices is established via the horizontal resolution and vertical resolution of the panoramic image. These parameters (in ) are stored as image support data.

The pixel coordinates i and j obtained in this way are generally real-valued and require rounding or interpolation to be mapped to integer-valued pixel indices.
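A minimal sketch of these coordinate relations, under assumptions that are not taken from the paper: the horizontal angle `x` runs in reading direction (so the mathematically positive azimuth is `-x`), the vertical angle `y` is positive above the horizon, image rows grow downwards, and a hypothetical `horizon_row` parameter marks the (possibly fractional) row of the horizon. `dx` and `dy` denote the horizontal and vertical angular resolutions in radians per pixel.

```python
from __future__ import annotations

import math


def angles_to_cartesian(x: float, y: float) -> tuple[float, float, float]:
    """Point on the unit sphere for horizontal angle x and vertical angle y."""
    cy = math.cos(y)
    # Reading direction is mathematically negative, hence the minus sign on Y.
    return cy * math.cos(x), -cy * math.sin(x), math.sin(y)


def cartesian_to_angles(p: tuple[float, float, float]) -> tuple[float, float]:
    """Inverse of angles_to_cartesian; x is wrapped to [0, 2*pi)."""
    px, py, pz = p
    x = math.atan2(-py, px) % (2.0 * math.pi)
    y = math.atan2(pz, math.hypot(px, py))
    return x, y


def angles_to_pixel(x: float, y: float, dx: float, dy: float,
                    horizon_row: float) -> tuple[float, float]:
    """Real-valued column i and row j (rows grow downwards)."""
    return x / dx, horizon_row - y / dy


def pixel_to_angles(i: float, j: float, dx: float, dy: float,
                    horizon_row: float) -> tuple[float, float]:
    """Inverse of angles_to_pixel."""
    return i * dx, (horizon_row - j) * dy
```

The real-valued `(i, j)` returned by `angles_to_pixel` are exactly the coordinates that still need rounding or interpolation.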

The original and the tilted Cartesian coordinates are related, according to the tilt described in the axis-angle representation, by a rotation by the tilt angle about the X-axis:

This assumes the coordinate system to be already aligned with the tilt axis, which can be realized most conveniently by introducing an offset in the horizontal angle corresponding to the tilt direction. Before computing the source coordinates, the destination horizontal angle is shifted by the tilt direction to align the subsequent computations with the X-axis. Note the backward direction of this shift: the tilt direction is measured in the mathematically positive direction, whereas the horizontal angle runs in reading direction, i.e., in the mathematically negative direction:

Afterwards, to avoid introducing an actual image rotation, the source horizontal angle is shifted back by the tilt direction in the opposite direction:

The tilt direction can therefore be neglected in the following, and only the special case of a tilt about the X-axis is derived; the general case is obtained by the offsetting just described. By inserting Equation (12) into (10), the following exact solution in terms of angular pixel coordinates can be obtained []:

To accelerate computations, an approximate solution is derived by eliminating multiplications and trigonometric functions from Equations (15) and (16). Again, this is done by following [], assuming a small tilt angle and a small vertical angle (note, however, that the horizontal angle is not small, as it covers the whole 0° to 360° range):

In addition to the exact and approximate solutions, the so-called vertical solution is derived by further approximating the horizontal source coordinate from Equation (17), assuming the effect of the tilt on the horizontal coordinate to be negligible. Thus, this method for tilt correction operates only within vertical image columns, which are just shifted vertically:

This simplification reduces the computational effort from two computations per image pixel, i.e., both source coordinates for each combination of horizontal and vertical angle, to only one computation per image column, i.e., only the vertical shift for each horizontal angle.
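The three solutions can be sketched as functions mapping a destination angle pair `(x, y)` of the corrected image to the source angle pair in the tilted image, including the offset by the tilt direction `phi`. This is an illustration, not a transcription of the paper's Equations (15) to (19): the sign of the rotation and the angle conventions (horizontal angle in reading direction, vertical angle positive above the horizon) are assumptions, and the approximations below are our own first-order expansions (sin θ ≈ θ, cos θ ≈ 1, small vertical angle) of the exact mapping under these conventions.

```python
from __future__ import annotations

import math

TWO_PI = 2.0 * math.pi


def source_exact(x: float, y: float, theta: float, phi: float) -> tuple[float, float]:
    """Exact source angles for destination (x, y); tilt theta about axis phi."""
    xa = x + phi  # align the tilt axis with the X-axis (reading direction is negative)
    cy = math.cos(y)
    px, py, pz = cy * math.cos(xa), -cy * math.sin(xa), math.sin(y)
    # Rotation by theta about the X-axis (sign convention assumed); px is unchanged.
    ry = math.cos(theta) * py - math.sin(theta) * pz
    rz = math.sin(theta) * py + math.cos(theta) * pz
    xs = math.atan2(-ry, px) - phi  # shift back so that no image rotation is introduced
    ys = math.asin(max(-1.0, min(1.0, rz)))
    return xs % TWO_PI, ys


def source_approximate(x: float, y: float, theta: float, phi: float) -> tuple[float, float]:
    """First-order approximation for small theta and small y (x is not small)."""
    xa = x + phi
    return (x + theta * y * math.cos(xa)) % TWO_PI, y - theta * math.sin(xa)


def vertical_shift(x: float, theta: float, phi: float) -> float:
    """Vertical solution: one shift per image column, independent of y."""
    return -theta * math.sin(x + phi)


def source_vertical(x: float, y: float, theta: float, phi: float) -> tuple[float, float]:
    """Keep the column, shift the row by the per-column vertical shift."""
    return x, y + vertical_shift(x, theta, phi)
```

Because `vertical_shift` depends only on `x`, it can be computed once per column and reused for all pixels in that column, which is the saving described above.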

All three tilt-correction solutions (exact, approximate, and vertical) can finally be combined with either nearest-neighbor or bilinear interpolation (involving the four nearest neighbors) when mapping from real-valued angular or pixel coordinates (see Equation (11)) to integer-valued pixel indices. In total, this gives six methods for tilt correction in panoramic images.

When transferring a pixel from source to destination, it can happen that the source pixel (for nearest-neighbor interpolation) or any of the source pixels (for bilinear interpolation) is located outside of the original image. If the source is only located horizontally outside of the image (i.e., to the left or right), the cyclic property of 360° panoramic images is used to access the corresponding pixel from the other side by taking horizontal pixel indices modulo the width of the image. If the source pixel is (additionally) located vertically outside of the image (i.e., to the top or bottom), there is no way to use or substitute a meaningful value from the original image, and accessing such a pixel would result in an out-of-bounds access. Therefore, this is handled as a special case by inserting a predefined, unique, and reserved value indicating invalid regions of the image, which is handled appropriately by all subsequent image operations []. This value is called the mask value. It is typically set to the maximum of the range of possible pixel values, i.e., 255 (white) for 8-bit-per-pixel images. These regions of invalid pixels can appear at the upper (only for the approximate and vertical solutions) or lower border of the image. An example of these invalid regions is shown in Figure 5.
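The pixel transfer with horizontal wrap-around and a reserved mask value can be sketched as follows. This is a minimal illustration on a grayscale image stored as a list of rows; the function names are hypothetical, `source_of(i, j)` is assumed to return the real-valued source column and row for destination pixel `(i, j)` (e.g., derived from one of the three solutions), and valid pixels are assumed to be below the mask value 255. Treating any masked bilinear neighbor as invalid and skipping zero-weight neighbors are design choices of this sketch.

```python
from __future__ import annotations

import math
from typing import Callable

MASK = 255  # reserved value marking invalid pixels (valid pixels assumed < 255)


def pixel(img: list[list[int]], i: int, j: int) -> int:
    """Pixel at column i, row j; columns wrap around, rows outside are invalid."""
    if not 0 <= j < len(img):
        return MASK  # vertically outside: no meaningful value available
    return img[j][i % len(img[0])]  # cyclic property of 360-degree panoramas


def sample_nearest(img: list[list[int]], si: float, sj: float) -> int:
    return pixel(img, math.floor(si + 0.5), math.floor(sj + 0.5))


def sample_bilinear(img: list[list[int]], si: float, sj: float) -> int:
    i0, j0 = math.floor(si), math.floor(sj)
    fi, fj = si - i0, sj - j0
    # Neighbors with zero weight are not fetched, so exact positions on the
    # last row do not become invalid.
    i1 = i0 + 1 if fi > 0 else i0
    j1 = j0 + 1 if fj > 0 else j0
    a, b = pixel(img, i0, j0), pixel(img, i1, j0)
    c, d = pixel(img, i0, j1), pixel(img, i1, j1)
    if MASK in (a, b, c, d):
        return MASK  # any invalid neighbor invalidates the interpolated value
    top = a * (1 - fi) + b * fi
    bottom = c * (1 - fi) + d * fi
    return round(top * (1 - fj) + bottom * fj)


def remap(img: list[list[int]], source_of: Callable[[int, int], tuple[float, float]],
          bilinear: bool = False) -> list[list[int]]:
    """Build the corrected image by pulling each destination pixel from its source."""
    sample = sample_bilinear if bilinear else sample_nearest
    height, width = len(img), len(img[0])
    return [[sample(img, *source_of(i, j)) for i in range(width)] for j in range(height)]
```

Shifting every row down by one (`source_of = lambda i, j: (i, j + 1)`) leaves the bottom row fully masked, which mirrors the white invalid region in Figure 5.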

Figure 5.

Example of a tilt-corrected panoramic image showing white invalid regions introduced at the bottom to prevent out-of-bounds accesses. This sample image was produced using the exact tilt-correction solution with nearest-neighbor interpolation.

2.4. Search Strategies

Using the roll-pitch representation of the tilt, a uniform search space with equal range and scaling for both parameter axes (in contrast to the axis-angle representation) can be constructed:

The ranges of the two parameters were chosen to be small and symmetric (in their absolute values) about the zero-tilt configuration in order to model the tilt as a small perturbation out of the plane, as described before. In the most general case, the search range can be limited by forcing all candidate solutions outside of the range to evaluate to a value larger than that of any solution inside the range. This is done by adding a penalty or constraint term to the objective function value:
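One simple way to realize such a limiting term is to return infinity outside of the search range, so that any in-range candidate compares as better. The sketch below assumes a square range of half-width `r` for both roll and pitch; the wrapper name is hypothetical, and the paper's penalty term may be defined differently (any value larger than all in-range objective values works). A side effect worth noting is that the wrapped warping objective is never evaluated outside of the range.

```python
import math
from typing import Callable, Tuple

Tilt = Tuple[float, float]


def limit_search_range(objective: Callable[[Tilt], float], r: float,
                       tol: float = 1e-12) -> Callable[[Tilt], float]:
    """Wrap an objective so that tilts outside [-r, r]^2 evaluate to +inf."""
    def limited(tilt: Tilt) -> float:
        if max(abs(tilt[0]), abs(tilt[1])) > r + tol:  # tol absorbs rounding at the border
            return math.inf  # worse than any in-range value; no warping run needed
        return objective(tilt)
    return limited
```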

To solve the optimization scheme sketched in Equation (8) using the uniform and limited search space, three direct search methods, i.e., methods only comparing function values of the objective without requiring it to be differentiable, are proposed in the following.

2.4.1. Exhaustive Search

The exhaustive search strategy constructs a discretized search grid from the limited but continuous search range specified in Equation (20) by sampling points with a fixed step size along both axes:

This does not require the introduction of the limiting penalty term (21), as the search grid is already a limited search space by construction. As this gives a finite and enumerable set of candidate solutions, an approximation of the optimal tilt correction can then be found among these grid points by systematically running an exhaustive search of warping over all tilt configurations in the search grid:

An example of such a uniform search grid is depicted in Figure 6.

Figure 6.

Exhaustive search: depiction of the uniform search grid (red dots) with fixed spacing. The dots mark the tilt configurations that are systematically probed to find the one minimizing the warping objective. The depicted grid has a total of 225 points, i.e., 15 tilt configurations per axis. The black lines mark the center axes of the search space, crossing at the zero-tilt configuration.

It is straightforward to improve the quality of this approximation by increasing the resolution of the search grid. However, this comes at a runtime cost: the number of candidate solutions that need to be probed exhaustively, i.e., the size of the search grid, is quadratic in the number of points along each axis and is thus inversely proportional to the square of the step size, which determines the resolution of the search grid:

Thus, halving the step size (doubling the resolution) increases the number of candidate solutions (warping runs to be done, and thereby the runtime) by a factor of four. Therefore, the exhaustive search provides a single parameter for controlling the trade-off between estimation quality and runtime.
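A sketch of the exhaustive search over a square grid, assuming the search range is symmetric with half-width `r` and that `r` is (close to) an integer multiple of the step size. The helper names are hypothetical. With `n = r / step` steps per half-axis, the grid has (2n + 1)² points, so halving the step size roughly quadruples the number of warping runs for fine grids.

```python
from typing import Callable, List, Tuple

Tilt = Tuple[float, float]


def search_grid(r: float, step: float) -> List[Tilt]:
    """All tilt configurations k*step, k = -n..n, on both axes."""
    n = int(round(r / step))  # steps per half-axis
    ticks = [k * step for k in range(-n, n + 1)]
    return [(roll, pitch) for roll in ticks for pitch in ticks]


def exhaustive_search(objective: Callable[[Tilt], float], r: float, step: float) -> Tilt:
    """Probe every grid point and return the one with the smallest objective value."""
    return min(search_grid(r, step), key=objective)
```

For instance, `r = 0.07` with `step = 0.01` gives 15 points per axis, i.e., the 15 × 15 = 225 points of the grid in Figure 6 (these particular values are illustrative); halving the step to 0.005 gives 29² = 841 points.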

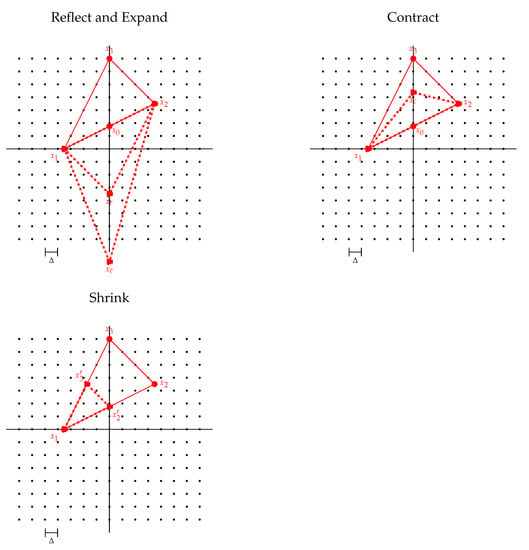

2.4.2. Pattern Search

The heuristic pattern search strategy [,] tries to reduce the number of function evaluations by considering only a small subset P of points of the search space. This is achieved by surrounding an initial guess of the optimum at the center with a cross-shaped pattern of candidate solutions. This pattern has a total of five points—two along each axis of the search space plus the center point—and is used to iteratively construct improved guesses by finding the optimum among these candidate solutions. At each step of the iterative procedure (for a depiction of the control flow see Figure 7), the pattern is characterized by two parameters: the center point c tracking the current optimum and the width w describing the distance between the center and the outer points. These are then adapted according to one of two update rules depending on the location of the new optimum among the five pattern points: the pattern can either move to the new optimum if it is one of the outer points or it can shrink in place if the new optimum is the old center.

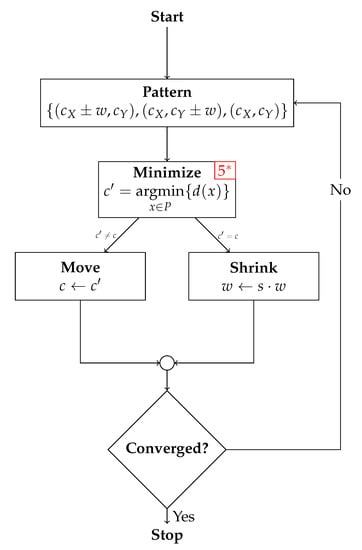

Figure 7.

Flowchart of the pattern search algorithm. A red number at the corner of a box indicates the number of evaluations of the warping objective necessary at that step. However, by remembering already evaluated points (not all change at each update step), this number can in practice be reduced to 3 or 4 evaluations per iteration (to indicate this, the number is marked by a red star). Arrows annotated with inequalities indicate mutually exclusive branches and should be read like decision boxes, which are not drawn to keep the figure more compact.

The shrinking of the pattern is controlled by a shrink coefficient s, which determines the rate at which the width of the pattern decreases. As this coefficient is smaller than 1, the pattern can only ever get smaller. Typically, it is set to 0.5, meaning that the width of the pattern halves at each shrinking step. An example pattern and the effect of the two update rules are depicted in Figure 8.

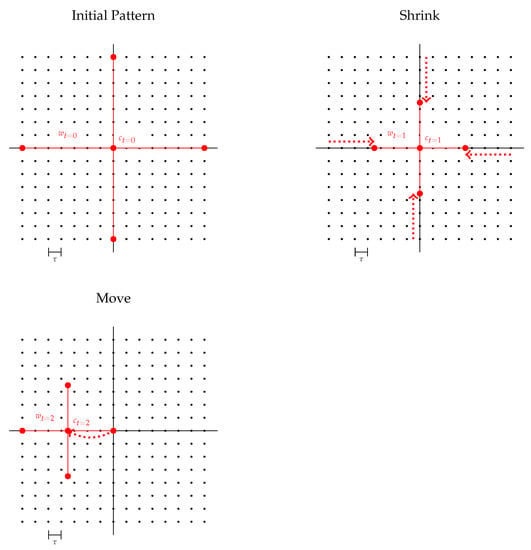

Figure 8.

Pattern search: depiction of a sequence of shrink and move operations starting from an initial pattern covering the whole search space (black dots). The spacing between the dots indicates the threshold resolution of the search pattern used to test for convergence. The black lines mark the center axes of the search space, crossing at the zero-tilt configuration.

While no assumptions about continuity or differentiability of the objective are made, the pattern search (as well as the Nelder–Mead search introduced below) can only converge quickly to the correct solution if the objective provides at least a favorable landscape with a unique global minimum surrounded by a valley of decreasing function values. Furthermore, the landscape should not be too “bumpy”, i.e., it should contain no or only few local minima in which the search pattern may get trapped. To prevent the pattern from diverging, i.e., running away out of a reasonable search range, it is necessary here to introduce the limiting penalty term (21). This forces a shrink step (or a move to some suboptimal but within-range solution) once the new optimum would lie outside of the range of valid tilt configurations.

Finally, as already hinted at in Figure 7, a convergence criterion must be given to stop the iterative procedure once a sufficiently good solution is found; this can be stated most naturally by comparing the width w of the pattern to some predefined threshold value below which the pattern is considered small enough to stop:

To make the performance of the pattern search comparable to that of the exhaustive search, this threshold was chosen to be equal to the step size of the grid, meaning that the pattern search stopped at solutions of similar resolution. The initial center and initial width of the pattern were given by the underlying search space as shown in Figure 8, the convergence threshold was chosen as just described to match the desired resolution of the search space, and the shrink coefficient was set, without further exploration, to the reasonable default of s = 0.5.
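A sketch of the pattern search as described: the initial pattern is centered at zero tilt and spans the whole search range (width `r`), the shrink coefficient is `s = 0.5`, and the grid step size serves as the convergence threshold. The range check plays the role of the limiting penalty term, and a cache of visited points realizes the reduced number of evaluations per iteration mentioned in the caption of Figure 7. The names are hypothetical, and the tie-breaking rule (staying at the center when an outer point is only equally good) is an assumption of this sketch.

```python
import math
from typing import Callable, Dict, Tuple

Tilt = Tuple[float, float]


def pattern_search(objective: Callable[[Tilt], float], r: float, threshold: float,
                   s: float = 0.5) -> Tuple[Tilt, int]:
    """Cross-shaped pattern search in [-r, r]^2; returns (best tilt, #evaluations)."""
    cache: Dict[Tuple[float, float], float] = {}

    def f(p: Tilt) -> float:
        if max(abs(p[0]), abs(p[1])) > r + 1e-12:
            return math.inf  # limiting penalty: outside the valid tilt range
        key = (round(p[0], 12), round(p[1], 12))  # tolerate rounding when revisiting
        if key not in cache:
            cache[key] = objective(p)  # one warping run per new point
        return cache[key]

    center, width = (0.0, 0.0), r
    while width >= threshold:  # stop once the pattern is smaller than the threshold
        cx, cy = center
        pattern = [center, (cx + width, cy), (cx - width, cy),
                   (cx, cy + width), (cx, cy - width)]
        best = min(pattern, key=f)  # center is listed first, so ties keep the center
        if best == center:
            width *= s  # shrink in place
        else:
            center = best  # move to the new optimum
    return center, len(cache)
```

On a smooth bowl-shaped toy objective with its minimum at (0.03, -0.02), `pattern_search(obj, 0.07, 0.01)` ends within one step size of the minimum after far fewer evaluations than the 225 points of the corresponding exhaustive grid.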

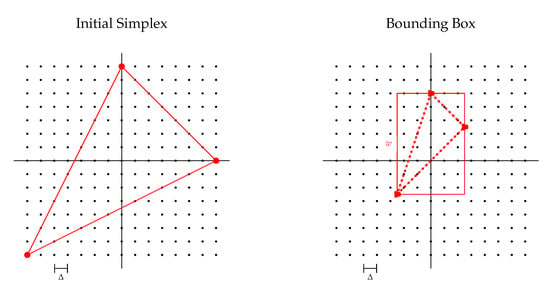

2.4.3. Nelder–Mead Search

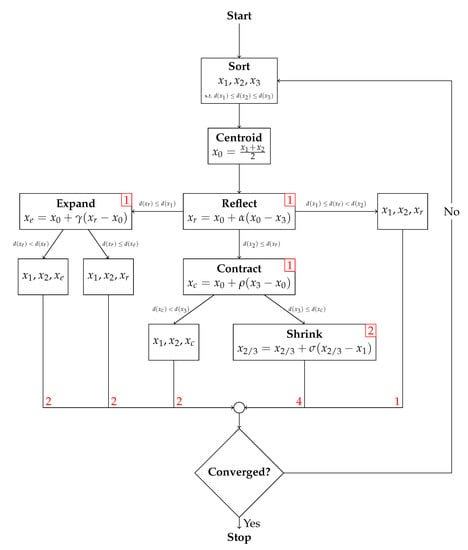

Similar to the pattern search, the Nelder–Mead search strategy according to [] is a heuristic and iterative procedure that tries to reduce the number of function evaluations needed to minimize the objective. This method is also known as the simplex method, as it uses a simplex S, a polytope of n + 1 points in n dimensions (i.e., a triangle in the two dimensions used here), as the search pattern. At each step of the iterative procedure (for a depiction of the control flow see Figure 9), a replacement for the worst candidate among the vertices of the simplex is sought by constructing new candidates relative to these three points. The simplex can either reflect, expand, or contract along the line connecting the worst candidate to the so-called centroid of the two best candidates, or, if this yields no improvement, shrink towards the best candidate. These four operations are controlled by corresponding coefficients: the reflection, expansion, contraction, and shrink coefficients. These parameters were all set, without further exploration, to the best choice of parameters according to []. An example simplex and the four update rules are shown in Figure 10. As for the pattern search, the procedure must be prevented from diverging by introducing the limiting penalty term (21). Here, this effectively restricts the applicability of the reflection and expansion steps, i.e., it forces a contraction when these would result in a vertex outside of the range of valid tilt configurations.
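A compact sketch of a Nelder–Mead search on the square tilt range. It follows a common textbook formulation with reflection, expansion, outside and inside contraction, and shrink steps; the coefficient values (1, 2, 0.5, 0.5) are widely used defaults and are an assumption here, since the values are not restated in this section. The initial simplex places one vertex at a corner and the other two at the centers of the opposing edges (as described for Figure 11), the range check acts as the limiting penalty, and the loop stops once the longer side of the simplex's axis-aligned bounding box falls below the threshold, i.e., the size-based criterion described at the end of this subsection. Function and parameter names are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Tilt = Tuple[float, float]


def nelder_mead(objective: Callable[[Tilt], float], r: float, threshold: float,
                rho: float = 1.0, chi: float = 2.0, gamma: float = 0.5,
                sigma: float = 0.5, max_iter: int = 200) -> Tuple[Tilt, float]:
    """Nelder-Mead search in [-r, r]^2; returns (best tilt, objective value)."""
    def f(p: Tilt) -> float:
        if max(abs(p[0]), abs(p[1])) > r + 1e-12:
            return math.inf  # limiting penalty: forces a contraction instead
        return objective(p)

    # One vertex at a corner, the other two at the centers of the opposing edges.
    simplex: List[Tilt] = [(-r, -r), (r, 0.0), (0.0, r)]
    values = [f(p) for p in simplex]
    for _ in range(max_iter):
        order = sorted(range(3), key=lambda k: values[k])
        simplex = [simplex[k] for k in order]
        values = [values[k] for k in order]
        xs, ys = [p[0] for p in simplex], [p[1] for p in simplex]
        if max(max(xs) - min(xs), max(ys) - min(ys)) < threshold:
            break  # size w of the bounding box is below the threshold
        best, good, worst = simplex
        cx, cy = (best[0] + good[0]) / 2, (best[1] + good[1]) / 2  # centroid

        def along(t: float) -> Tilt:
            """Point on the line from the worst vertex through the centroid."""
            return cx + t * (cx - worst[0]), cy + t * (cy - worst[1])

        xr = along(rho)
        fr = f(xr)
        if values[0] <= fr < values[1]:
            simplex[2], values[2] = xr, fr  # accept the reflection
        elif fr < values[0]:
            xe = along(rho * chi)
            fe = f(xe)
            simplex[2], values[2] = (xe, fe) if fe < fr else (xr, fr)
        else:
            if fr < values[2]:
                xc = along(rho * gamma)  # outside contraction
                fc = f(xc)
                accept = fc <= fr
            else:
                xc = along(-gamma)  # inside contraction
                fc = f(xc)
                accept = fc < values[2]
            if accept:
                simplex[2], values[2] = xc, fc
            else:  # shrink towards the best vertex
                simplex = [best] + [(best[0] + sigma * (p[0] - best[0]),
                                     best[1] + sigma * (p[1] - best[1]))
                                    for p in simplex[1:]]
                values = [values[0]] + [f(p) for p in simplex[1:]]
    k = min(range(3), key=lambda i: values[i])
    return simplex[k], values[k]
```

Because every accepted vertex stays within the range and the best value never increases, the search cannot diverge, although (as noted for Figure 11) the triangular initial simplex does not cover the whole square range.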

Figure 9.

Flowchart of the Nelder–Mead search algorithm. A red number at the corner of a box indicates the number of evaluations of the warping objective necessary at that step. Right before merging all paths, red numbers indicate the total number of evaluations along each path. Arrows annotated with inequalities indicate mutually exclusive branches and should be read like decision boxes, which are not drawn to keep the figure more compact.

Figure 10.

Nelder–Mead (simplex) search: depiction of the four update operations on the search simplex, superimposed on the uniform search grid (black dots). Starting from the ordered set of vertices, an improvement is sought by reflecting, expanding, or contracting along the line connecting the worst candidate to the centroid of the two best candidates. If no such improvement is found, the simplex shrinks towards the best candidate. The black lines mark the center axes of the search space, crossing at the zero-tilt configuration.

The initial simplex is constructed as shown in Figure 11 by placing one vertex at a corner of the search space and the other two at the centers of the opposing edges. However, in contrast to the pattern search, a simplex of three points lying inside a square search space cannot cover that space completely. While this naive initialization is sufficient for a first comparison of the search strategies, it may warrant further investigation.
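A minimal sketch of this initialization, assuming a square search range [lo, hi]² and placing the corner vertex at (lo, lo); the choice of corner and the function name `initial_simplex` are hypothetical:

```python
import numpy as np


def initial_simplex(lo, hi):
    """Initial 2D simplex for the square search range [lo, hi]^2.

    One vertex sits at the (lo, lo) corner (an arbitrary choice here); the
    other two sit at the midpoints of the two edges opposite that corner.
    """
    mid = 0.5 * (lo + hi)
    return np.array([[lo, lo], [hi, mid], [mid, hi]], dtype=float)


S0 = initial_simplex(-5.0, 5.0)  # placeholder range, not the paper's values
```

Under this construction, the axis-aligned bounding box of the initial simplex spans the whole search range, so the simplex size starts at the full width hi − lo.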

Figure 11.

Nelder–Mead (simplex) search. The left figure shows the initialization of the simplex covering most parts of the search space by placing the vertices at two edges and a corner of the search space. The right figure shows the axis-aligned bounding box used to estimate the size of the simplex by the length w of the longer side of the box.

Finally, to stop the iterative procedure once a sufficiently good solution is found, a convergence criterion is required. Originally, ref. [] proposed comparing the sample standard deviation of the objective function values at the vertices against a predefined threshold, which in the case of the three-vertex simplex looks as follows:
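Written out explicitly, and assuming the sample standard deviation is normalized by the number of vertices minus one (i.e., by 2 for three vertices), the criterion takes the following form, where d_1, d_2, d_3 denote the warping distances at the three vertices and ε_d is a hypothetical name for the predefined threshold:

```latex
\sqrt{\frac{1}{2}\sum_{i=1}^{3}\left(d_i - \bar{d}\right)^2} < \varepsilon_d,
\qquad
\bar{d} = \frac{1}{3}\sum_{i=1}^{3} d_i
```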

However, as there is no prior knowledge of the range and distribution of the function values d produced by warping, apart from the assumption that they decrease for better-matching image pairs, this criterion has no meaningful interpretation and is hard to adjust: the absolute value and variation of the distance estimate depend on properties of the image pair and on the configuration of the warping algorithm, and they do not allow the resolution in tilt-parameter space to be controlled. Thus, a simpler and more interpretable criterion based on the size w of the simplex in search space is proposed, resembling that of the pattern search:

This size of the simplex is estimated by the length of the longer side of an axis-aligned rectangular bounding box, which can be quickly computed by (elementwise) minimum and maximum operations on the set of vertices:

The elementwise minimum operation (and the maximum analogously) over the three two-dimensional vertex coordinates is defined in the following way:

The procedure of constructing this bounding box is depicted in Figure 11.

While other notions of the size of the simplex are conceivable, e.g., the area of the search space covered by the simplex, this bounding-box approach is the easiest to interpret (the bounding-box area also bounds the simplex area from above), as the width of the bounding box can be directly related to the width of the search pattern or the step size of the search grid. This allows the threshold parameter to be set to a resolution comparable to that of the pattern search, namely twice the pattern resolution, as the pattern size measures a center-to-edge distance while the bounding box measures an edge-to-edge distance (twice the center-to-edge distance).
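A minimal NumPy sketch of the bounding-box size and the resulting stopping rule is given below. The function names are hypothetical, the strict `<` comparison is an assumption, and the factor 2 encodes the edge-to-edge versus center-to-edge relation explained above.

```python
import numpy as np


def simplex_size(simplex):
    """Longer side w of the axis-aligned bounding box of a 3 x 2 simplex."""
    lower = simplex.min(axis=0)  # elementwise minimum over the three vertices
    upper = simplex.max(axis=0)  # elementwise maximum over the three vertices
    return float(np.max(upper - lower))


def has_converged(simplex, pattern_resolution):
    """Stop once w falls below twice the pattern-search resolution.

    The factor 2 converts the pattern's center-to-edge size into the
    edge-to-edge size measured by the bounding box.
    """
    return simplex_size(simplex) < 2.0 * pattern_resolution


S = np.array([[0.0, 0.0], [0.8, 0.3], [0.2, 0.5]])
w = simplex_size(S)  # max(0.8 - 0.0, 0.5 - 0.0) = 0.8
```

Only two reductions over three vertices are needed, so evaluating this criterion is negligible compared with a single warping call.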

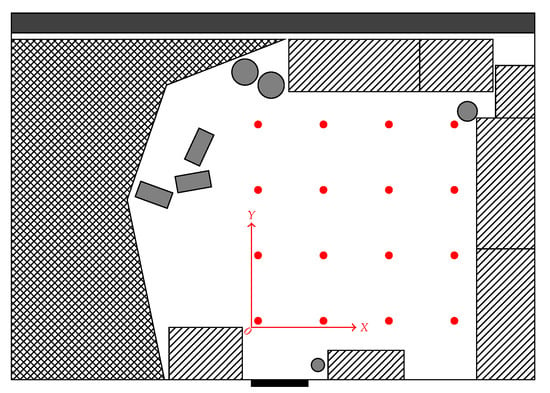

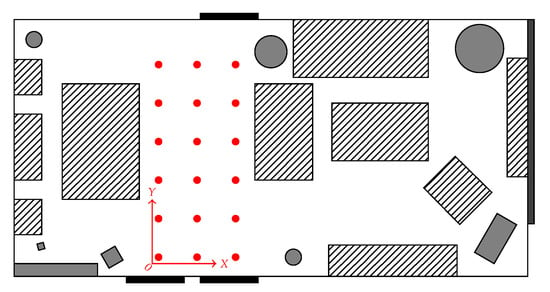

3. Image Databases

The proposed methods were evaluated by conducting experiments on two newly collected real-world tilted panoramic image databases. These databases were collected by placing the tiltable panoramic camera (iDS UI-3241LE-M-GL with a 220° Lensagon BF16M220DC fisheye lens by Lensation) on all intersection points of a regular Cartesian grid on the horizontal ground plane. The LAB database was collected in a laboratory room of the Computer Engineering Group at Bielefeld University. The layout of this room and the location of the grid, with a total of 16 positions at a spacing of , are shown in Figure 12. The laboratory environment comprised a tidy workspace of desks and office chairs directly adjacent to the database grid and a more cluttered area filled with various items of lab equipment on the opposite side of the room. The LIV database was collected in a living room with a high ceiling on a more elongated grid of 18 positions at a spacing of . This environment comprised mostly large pieces of furniture such as tables, cupboards, and sofas, as shown in Figure 13.

Figure 12.

Layout of the laboratory room where the LAB database was collected. The database covered an area of in steps of . The grid of image locations is marked in red. The whole room had a size of . The areas comprising furniture such as cupboards, desks, or chairs are marked by hatched regions, and gray boxes and circles mark the position and rough shape of small items such as card boxes and fans. The door of the room is marked by the black bar at the bottom wall. The cross-hatched area on the left half of the room comprised a variety of laboratory equipment, including robots, cables, and tools. The dark gray area along the top wall of the room marks the window facade, which was partially covered by curtains.

Figure 13.

Layout of the living room where the LIV database was collected. The database covers an area of in steps of . The grid of image locations is marked in red. The whole room had a size of and each of the furniture items was at least partially visible at one of the locations. The areas comprising furniture such as cupboards, tables, chairs, or sofas are marked by hatched regions, and gray boxes and circles mark the position and rough shape of decor items. The three doors of the room are marked by the black bars. The dark gray area along the right wall of the room marks the window facade, which was partially covered by shutters and curtains.

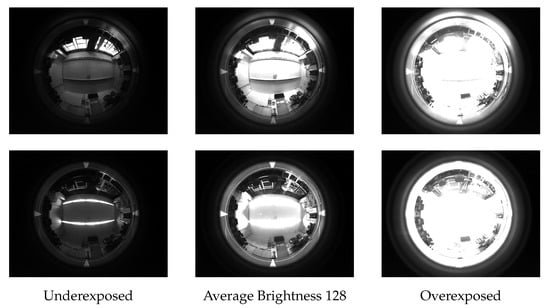

Variants under varying conditions of illumination—one during the day and one during the night—were collected for both databases. During these two data collection sessions, the illumination was kept as constant as possible, and an exposure control mechanism was used to keep the average brightness of the images constant as well. Additionally, to make the databases reusable for future high-dynamic-range (HDR) experiments, under- and overexposed variants of each image were collected using an exposure time which was derived from the controlled average brightness by a constant factor: for the underexposed and for the overexposed image. Thus, there were in total three differently illuminated images at each position in the night variant. In the daylight variant, three differently illuminated images were saved for each combination of position and tilt. A set of sample images of these variants from the LAB and LIV database is shown in Figure 14 and Figure 15, respectively.

Figure 14.

Sample images from the LAB database. All images were collected at position of the database grid in the , i.e., no-tilt, configuration. The images of the top row were collected during the day with bright light shining through the windows and artificial lighting turned off. The images of the bottom row were collected during the night at the same pose. At night there was no light shining through the windows and the artificial lighting was turned on. There were no changes to the environment between the two variants besides the illumination conditions.

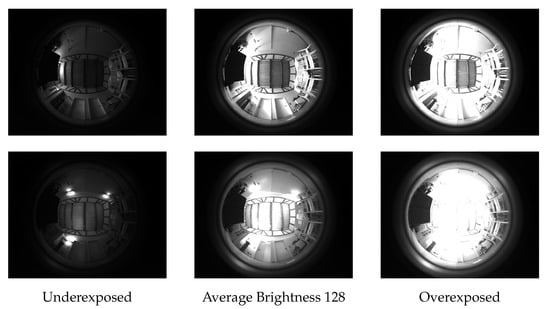

Figure 15.

Sample images from the LIV database. All images were collected at position of the database grid in the , i.e., no-tilt, configuration. The images of the top row were collected during the day with bright light shining through the windows and artificial lighting turned off. The images of the bottom row were collected during the night at the same pose. At night there was no light shining through the windows and the artificial lighting was turned on. There were no changes to the environment between the two variants besides the illumination conditions.

3.1. Pan–Tilt Unit

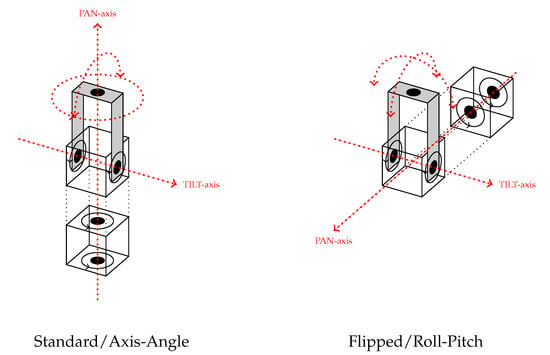

The day variant of both databases comprised images captured with a systematic tilt applied to the camera at each grid location. This was done by mounting the camera on top of a pan–tilt unit (PTU) (PTU-C46 by Directed Perception, Inc., Burlingame, California/ FLIR Systems) allowing for rotations about two orthogonal axes, i.e., the PAN- and the TILT-axis. In its standard configuration, the PTU was set up to correspond to the axis-angle representation of the tilt: it was rotated about the PAN-axis to select the direction of the tilt axis and then rotated by around the TILT-axis. Using the conversion derived earlier in Equations (3) and (4), it was also possible to control the PTU with angles given in the roll-pitch representation. However, in contrast to the roll-pitch and axis-angle representations, which were formulated to avoid any relation between the tilt direction and the view direction of the robot, the PTU actually changed the view direction when applying tilt. This was because the PAN-axis not only selected the direction of the TILT-axis but also rotated the camera about its optical axis. As a result, the view direction of the robot was always coupled to the tilt when the PTU was used in its standard configuration.

To avoid this problem, the PTU was mounted in a flipped configuration, flipping the PAN-axis horizontally by rotating it 90° about the TILT-axis. In this configuration, the PAN- and TILT-axis spanned the horizontal plane and could be directly associated with the roll-pitch representation of the tilt. As the camera was still mounted upright, with its optical axis aligned to a third axis orthogonal to the two rotation axes, this configuration decoupled the view direction from the tilt. Thus, the view direction was fully determined by the static orientation of the camera mount. This view direction had to be taken into account during the image preprocessing as well as during the evaluation of the results, as it corresponded to one of the homing parameters estimated by warping. A schematic of both PTU configurations is shown in Figure 16 and a photo of the modified PTU is shown in Figure 17. The operating range of the PTU was on the PAN- and on the TILT-axis, which was more than sufficient for the small amounts of tilt considered in this work. In both configurations, tilting the camera led to a small change in the camera position, i.e., a small offset in all three spatial dimensions. However, considering the small amounts of tilt, this change in position was small enough with respect to the database grid resolution to be neglected in the experiments: in the worst case, it amounted to a displacement of only about 3 compared to the 100 or 60 resolution of the grid. The change in position depended on the tilt and on the offset of the camera mount above the plane spanned by the moving PAN- and TILT-axis: in the standard configuration, the camera was mounted about and in the flipped configuration about 17 above the intersection of the PAN- and TILT-axis. Including the immovable parts of the setup, these offsets corresponded to images collected about or 36 , respectively, above the ground.
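The dependence of the positional offset on the tilt and the mount height can be illustrated with a small rigid-rotation sketch. It assumes that the camera sits at height h on the vertical axis through the intersection of the PAN- and TILT-axis and that the roll-pitch tilt is the composition R_x(roll)·R_y(pitch); this composition order and the sign conventions are assumptions and need not match Equations (3) and (4). The 10° tilt in the usage line is hypothetical, and h = 17 is used in whatever unit the mount offset above is given in.

```python
import numpy as np


def camera_offset(roll_deg, pitch_deg, h):
    """Displacement of a camera mounted at height h above the rotation center.

    Computes R_x(roll) @ R_y(pitch) @ (0, 0, h) - (0, 0, h); the composition
    order is an assumption made for illustration.
    """
    a, b = np.radians(roll_deg), np.radians(pitch_deg)
    tilted = h * np.array([np.sin(b), -np.sin(a) * np.cos(b), np.cos(a) * np.cos(b)])
    return tilted - np.array([0.0, 0.0, h])


d = camera_offset(0.0, 10.0, 17.0)  # hypothetical 10 degree pitch
```

The length of the offset equals the chord 2·h·sin(θ/2), where θ is the total tilt angle, so it grows roughly linearly with the tilt for small angles.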

Figure 16.

Two configurations of the pan–tilt unit (PTU). The standard configuration (left) is the one giving the PTU its name with the two servo modules mounted on top of each other. This configuration matched the axis-angle representation of tilt where the PAN-axis rotation selected the tilt axis and the TILT-axis rotation the amount of tilt. However, with the camera mounted rigidly on top of the setup, this caused a change of view direction due to the panning as well and thus coupled the view direction to the tilt. To get a configuration which decoupled the view direction from the tilt, the PTU was flipped on its side (right) by mounting it at a 90° angle about the TILT-axis while keeping the camera mount upright. This way, the PAN- and TILT-axis spanned the horizontal plane and could be associated with the X- and Y-axis of the roll-pitch representation. See Figure 3 for a depiction of the two tilt representations.

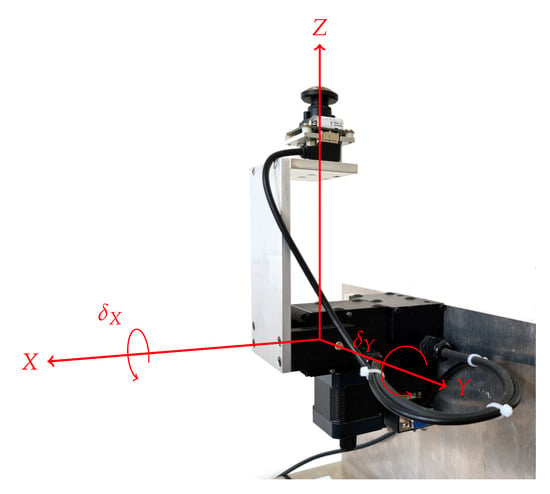

Figure 17.

PTU in flipped/roll-pitch configuration. Photo of the modified PTU and camera mount for decoupling the view direction from the tilt. See Figure 16 for a schematic view of this configuration. The coordinate system associating the X- and Y-axis of the roll-pitch representation with the PAN- and TILT-axis, respectively, is drawn in red. The origin is placed at the intersection of the PAN- and TILT-axis. The camera mount is centered above (along the Z-axis) the origin.

- LAB Tilt Range: For collecting the LAB database, the PTU was used in its standard configuration, with the tilt parameters converted to the axis-angle representation from a uniform grid in the roll-pitch representation. This grid of tilt parameters covered a range of of tilt per X- and Y-axis in steps of . In total, there were 121 different tilt configurations per position in the day variant of the LAB database. In terms of magnitude (see Equation (4)), this comprised up to of tilt. Because the PTU was used in its standard configuration, with the camera mounted facing backwards relative to the forward-facing X-axis (i.e., at 180°), and a 180° PAN range was required to select all tilt directions, the view direction varied systematically between 180° and 360°, depending on the PAN-axis direction, which itself depended on the two tilt parameters.

- LIV Tilt Range: For collecting the LIV database, the PTU was used in the flipped configuration, with the tilt parameters in the roll-pitch representation taken from the uniform grid without conversion. The LIV database comprised a slightly larger range of tilt of per X- and Y-axis in steps of about . In total, there were 225 different tilt configurations per position in the day variant of the LIV database. In terms of magnitude, this comprised up to of tilt. With the PTU used in the flipped configuration, the view direction was constant and fully determined by the camera mount, which was oriented at relative to the forward-facing X-axis.
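The configuration counts follow directly from a square roll-pitch grid: 121 = 11 × 11 and 225 = 15 × 15 values per position. The sketch below generates such grids and a tilt magnitude. The tilt ranges passed in are placeholders, not the databases' actual ranges, and the magnitude is assumed to be the angle between the tilted camera axis and the vertical (arccos(cos roll · cos pitch)), which may differ from the definition in Equation (4).

```python
import numpy as np


def tilt_grid(max_tilt_deg, n_per_axis):
    """Uniform roll-pitch grid with n_per_axis values per axis in [-max, +max]."""
    axis = np.linspace(-max_tilt_deg, max_tilt_deg, n_per_axis)
    roll, pitch = np.meshgrid(axis, axis, indexing="ij")
    return roll.ravel(), pitch.ravel()


def tilt_magnitude_deg(roll_deg, pitch_deg):
    """Angle between the tilted camera axis and the vertical (assumed definition)."""
    c = np.cos(np.radians(roll_deg)) * np.cos(np.radians(pitch_deg))
    return np.degrees(np.arccos(np.clip(c, -1.0, 1.0)))


lab_roll, lab_pitch = tilt_grid(5.0, 11)  # hypothetical range, 11 x 11 = 121
liv_roll, liv_pitch = tilt_grid(6.0, 15)  # hypothetical range, 15 x 15 = 225
lab_max = tilt_magnitude_deg(lab_roll, lab_pitch).max()
```

The largest magnitude occurs at the grid corners and is slightly smaller than the Euclidean norm of the two per-axis angles.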

3.2. Image Processing

The image processing generally followed the scheme described by [,]. The path of a sample image from the LAB database through the preprocessing stages is shown in Figure 18. Starting from the original image (step 1) with a size of , histogram equalization was applied to increase the contrast (step 2). The equalization was implemented such that only the ring-shaped region of valid pixels indicated by the mask shown in Figure 19 was considered. The equalized image was then low-pass-filtered to avoid aliasing artifacts due to the subsampling of the images while applying transformations to panoramic images (step 3). For all experiments, a third-order Butterworth low-pass filter with relative cutoff frequency of 0.2 was used.
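Steps 2 and 3 can be sketched in NumPy as follows. The masked equalization uses the standard CDF-based mapping computed only from the valid ring-shaped pixels and leaves pixels outside the mask unchanged, which is an assumption. The Butterworth filter is applied radially in the frequency domain with the cutoff taken relative to the Nyquist frequency; both the radial form and this normalization are assumptions about what "relative cutoff frequency" means here, and the FFT implicitly treats the image as periodic.

```python
import numpy as np


def masked_equalize(image, mask, levels=256):
    """Histogram-equalize an 8-bit image using only pixels where mask is True."""
    hist = np.bincount(image[mask].ravel(), minlength=levels)
    cdf = np.cumsum(hist).astype(float)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]  # count in the first non-empty bin
    denom = max(cdf[-1] - cdf_min, 1.0)
    lut = np.clip(np.round((cdf - cdf_min) / denom * (levels - 1)), 0, levels - 1)
    out = image.copy()
    out[mask] = lut.astype(image.dtype)[image[mask]]  # remap valid pixels only
    return out


def butterworth_lowpass(image, cutoff=0.2, order=3):
    """Radial Butterworth low-pass filter applied in the frequency domain."""
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows)[:, None] / 0.5  # 1.0 corresponds to Nyquist
    fx = np.fft.fftfreq(cols)[None, :] / 0.5
    radius = np.hypot(fx, fy)
    gain = 1.0 / (1.0 + (radius / cutoff) ** (2 * order))
    return np.real(np.fft.ifft2(np.fft.fft2(image.astype(float)) * gain))
```

Because the filter gain is 1 at zero frequency, the mean brightness is preserved while high frequencies that would alias during subsampling are attenuated.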

Figure 18.

Path of a sample image from the day variant of the LAB database at position without tilt through the image processing pipeline: (1) original image from the database; (2) improved contrast after histogram equalization; (3) low-pass-filtered using a 3rd-order Butterworth filter with relative cutoff frequency of 0.2; (4) unrolled panoramic representation; (5) simulated rotation of by horizontal left shift with wrap-around; (6) cropping of upper to remove the distorted ceiling and potentially invalid regions at the top.

From this preprocessed image, the panoramic representation (step 4) was unrolled such that the horizontal dimension corresponded to the azimuthal angle of a pixel and the vertical dimension to its elevation angle. This unrolling was done by a mapping that projected the image onto the unit sphere, which was sampled with equidistant spacing. To construct the projection onto the unit sphere, a camera model was required, which was obtained from a set of images showing a checkerboard calibration pattern as shown in Figure 19. These calibration images were processed into a set of camera parameters using the OCamCalib Toolbox []. During the unrolling process, all images were brought into the same orientation (facing forward, aligned with the X-axis of the database grid) by accounting for the view direction due to the camera mount and PTU configuration mentioned earlier. The panoramic representation comprised all of the available field of view of the camera, meaning it ranged from below the horizon to above the horizon. The resulting image had a size of pixels at a vertical resolution within an image column of approximately and the horizon level at pixels counting from the top. The sampling of the unit sphere used bilinear interpolation.
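The unrolling can be sketched as an inverse mapping: for every panorama pixel (azimuth column, elevation row), compute the corresponding fisheye image position and sample it bilinearly. In place of the calibrated OCamCalib model, this sketch assumes an ideal equidistant fisheye projection r = f·θ (θ measured from the upward optical axis) centered at a given image point; the model, the azimuth direction, and all parameter values in the test are hypothetical.

```python
import numpy as np


def bilinear_sample(image, x, y):
    """Sample image at float coordinates (x = column, y = row), clamped at borders."""
    h, w = image.shape
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * image[y0, x0] + ax * image[y0, x1]
    bottom = (1 - ax) * image[y1, x0] + ax * image[y1, x1]
    return (1 - ay) * top + ay * bottom


def unroll(image, center, f, width, height, elev_max_deg, elev_min_deg):
    """Unroll an upward-facing fisheye image into an azimuth x elevation panorama.

    Uses an ideal equidistant model r = f * theta as a stand-in for a
    calibrated camera model; rows run from elev_max_deg (top) to elev_min_deg.
    """
    azimuth = 2 * np.pi * np.arange(width) / width  # equidistant columns
    elevation = np.radians(np.linspace(elev_max_deg, elev_min_deg, height))
    az, el = np.meshgrid(azimuth, elevation)  # shape (height, width)
    theta = np.pi / 2 - el  # angle from the optical axis (zenith)
    r = f * theta
    x = center[0] + r * np.cos(az)
    y = center[1] + r * np.sin(az)
    return bilinear_sample(image.astype(float), x, y)
```

Replacing the equidistant model with a calibrated projection only changes how `r` (and possibly the image center) is computed from θ; the sampling step stays the same.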

Figure 19.

Camera calibration and mask image. The calibration image is one example of several images collected showing a checkerboard pattern []. These calibration images were used to obtain the camera parameters necessary for “unrolling” the image into a panoramic representation [,]. The mask image was used for exposure control and preprocessing to ignore those regions of the image which were not exposed at all or contained no meaningful information, such as the typically overly bright ceiling. The ring-shaped region extended from about 20° on the inner side to about 110° on the outer side, or, in terms of elevation and depression, up to 70° above and 20° below the horizon.

All preprocessing steps described so far (steps 1–4 in Figure 18) were applied only once per image and thus were not included in the time measurements of the experiments. The final two preprocessing steps (described below) were applied once per image pair (step 5, rotation) or even multiple times per image during the tilt-correction search, every time the image was warped (step 6, cropping).

As all panoramic images had the same orientation after the preprocessing, warping would always find an estimate of the view direction at , which was exactly contained in the discrete search space over and as described in more detail by []. To avoid this and get a more realistic homing performance, both the snapshot and current view were rotated by random azimuth angles and similar to []. This rotation corresponded to a horizontal shift with a wrap-around in the panoramic representation; while the images were unrolled in the reading direction, a positive rotation corresponded to a left shift against the reading direction. This randomization of the view directions was done for each snapshot–current view pair and kept the same for all methods evaluated on the image pair. As the tilt corresponding to each image was described in roll-pitch representation relative to the fixed coordinate system aligned with the database grid, the rotation of the image required the tilt parameters to be adapted into this rotated coordinate system to remain valid for the tilt correction or as ground truth for matching the tilt estimates: under the assumption of a small tilt, i.e., still keeping the tilted plane close to being parallel to the ground plane, the introduction of an additional rotation of about the Z-axis approximately corresponded to a rotation of the two roll-pitch parameters within the X–Y plane:

A detailed derivation of the exact adaptation of the tilt parameters under rotation and of these approximations is given in Appendix A.2.
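The random azimuth rotation and the small-tilt adaptation of the roll-pitch parameters can be sketched as follows. Rounding the angle to whole pixel columns is an assumption, and so is the sign convention of the tilt adaptation (the robot frame rotated by +φ about the Z-axis, so the roll-pitch vector is rotated by −φ within the X–Y plane); the exact adaptation derived in Appendix A.2 is not reproduced here.

```python
import numpy as np


def rotate_panorama(pano, angle_deg):
    """Simulate a rotation about the vertical axis by a horizontal shift.

    A positive rotation is a left shift (against the reading direction)
    with wrap-around; the angle is rounded to whole pixel columns.
    """
    width = pano.shape[1]
    shift = int(round(angle_deg / 360.0 * width))
    return np.roll(pano, -shift, axis=1)


def rotate_tilt(roll, pitch, angle_deg):
    """Small-tilt approximation: rotate the roll-pitch vector in the X-Y plane.

    Assumed convention: frame rotated by +angle, components rotated by -angle.
    """
    phi = np.radians(angle_deg)
    c, s = np.cos(phi), np.sin(phi)
    return c * roll + s * pitch, -s * roll + c * pitch
```

Since the approximation is a pure planar rotation, it preserves the norm of the roll-pitch vector and only redistributes the tilt between the two axes.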

Some image transformations, in particular the tilt correction, yielded invalid regions at the top and bottom border of panoramic images. As the scale-plane stack computations of the given warping implementation only handled these regions at the bottom border, the upper part needed to be removed. To have comparable images throughout all experiments, a constant cropping of from the top was applied to all images, i.e., tilt-corrected and not tilt-corrected, just before being passed to warping. This chosen amount of cropping was more than sufficient to remove the biggest invalid regions to be expected at the most extreme tilt correction of . Furthermore, this cropping may be beneficial to the homing performance of warping as most images showed only distorted ceiling without much detail in the cropped region. At the vertical resolution of the panoramic representation of approximately , this cropping corresponded to approximately pixels, resulting in a final size of the panoramic image of pixels and a horizon level at pixels counting from the top. By using the fact that the images were stored in row-major order, the image cropping was implemented efficiently by making a view into the memory of the original image at the cropping offset. Thus, even though the cropping needed to be applied multiple times throughout the search for an optimal tilt correction, this added no significant runtime overhead.
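The zero-copy cropping can be illustrated in NumPy terms (assumed here; the implementation language of the original is not stated). With row-major storage, dropping the leading rows amounts to a pointer offset, and basic slicing returns such a view rather than a copy; the array sizes below are placeholders.

```python
import numpy as np


def crop_top(pano, rows):
    """Drop the top `rows` rows; basic slicing returns a view, not a copy."""
    return pano[rows:, :]


pano = np.zeros((100, 360), dtype=np.float32)  # placeholder panorama size
cropped = crop_top(pano, 20)
```

The cropped view shares memory with the original and remains contiguous, so it can be handed to downstream code without any copying.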

4. Results

From each of the two databases LAB and LIV, three datasets of snapshot/current view image pairs were generated. These datasets represented situations of varying difficulty for the tested methods. The DxD datasets took both snapshot and current view from the day variant of the database, meaning the illumination conditions did not vary much. As the proposed tilt-correction scheme handled only one image being tilted, the snapshot images were constrained to those (one per position) with no tilt applied to the camera. The NxD datasets yielded so-called cross-database tests with snapshots taken from the night variant and current views from the day variant, resulting in substantial illumination differences between snapshot and current view images. These datasets allowed us to test the methods for tolerance against changes in illumination, which are known to affect the performance of visual navigation methods in general [,]. Finally, the NxN dataset was generated from only the night variant of the databases, representing the simplest case with no illumination changes and no tilt applied to any of the images. This last dataset served mainly as a reference for testing tilt-correction methods when no tilt was actually present. Experiments were conducted over all image pairs from these datasets, testing all method combinations of components proposed in Section 2, i.e., all combinations of search strategy, tilt correction, and interpolation methods.

4.1. Nomenclature and Parameters

From the three tilt-correction solutions and the two interpolation schemes proposed in Section 2.3, six tilt correction methods were implemented. The identifiers used to distinguish the tested methods were composed from the initial letters of the methods and are listed in Table 1.

Table 1.

Identifiers of the six tilt-correction methods. The identifiers are composed of the initial letters of the methods, naming the tilt correction solution first, followed by the interpolation scheme.

These tilt-correction methods were then combined with warping and one of the three search strategies proposed in Section 2.4. The resulting methods are referred to by prepending the identifier of the search strategy to that of the tilt correction. In addition to the three search strategies, two baseline strategies were added to the method pool. The true tilt strategy used the known ground-truth tilt parameters as recorded in the database. This corresponded to cases where an additional sensor, such as an inertial measurement unit, was combined with warping to provide the tilt parameter estimates. Furthermore, this allowed us to investigate how the different tilt-correction methods affected the homing performance without interference from a particular search strategy. Finally, the none tilt strategy used the warping algorithm as it was, without any form of tilt correction. All 25 method combinations are listed in Table 2. When referring to the results on a particular dataset later on, an identifier of the dataset (comprising the database and the variants used) is appended to the method identifier: e.g., ten@lab-nd refers to the true tilt strategy using an exact tilt correction with a nearest-neighbor interpolation evaluated on the NxD cross-database test of the LAB database. All methods combined with warping [,] used the same configuration and parameters as listed in Table 3, Table 4 and Table 5. As these parameters never changed, they are not indicated by the method identifiers. For the first phase of warping, the computation of the distance images (the scale-plane stack), a modified version of the NSAD distance measure [,] was used, adapted to properly handle the invalid regions introduced by the tilt-correction methods []. Finally, Table 6 states the range and resolution parameters used to constrain the tilt search strategies.
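The total of 25 combinations can be checked by enumerating the identifiers: four strategies with tilt correction (three searches plus true tilt) times six tilt-correction methods, plus the single none baseline. Only the letters t, e, n, s, a, and v are confirmed by the text; the letters g (grid search), p (pattern search), and b (bilinear), as well as the string "none" for the baseline, are hypothetical placeholders.

```python
from itertools import product

corrections = ["e", "a", "v"]      # exact, approximate, vertical
interpolations = ["n", "b"]        # nearest neighbor, bilinear ('b' assumed)
strategies = ["g", "p", "s", "t"]  # grid ('g' assumed), pattern ('p' assumed), simplex, true tilt

methods = [s + c + i for s, c, i in product(strategies, corrections, interpolations)]
methods.append("none")  # baseline without tilt correction (identifier assumed)

example = "ten" + "@" + "lab-nd"  # method identifier plus dataset identifier
```

The none baseline appears only once because, without any tilt correction, there is nothing to interpolate.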

Table 2.

Identifiers of the tilt-correction warping schemes. The identifier of the tilt (search) strategy is prepended to that of the tilt-correction method (see Table 1). The Nelder–Mead search uses s for the simplex method. The true tilt strategy applied a single ground-truth tilt correction as recorded in the databases, before running warping once. The none tilt strategy was a baseline test running warping without any tilt correction. This baseline was the same for both interpolation schemes as there was nothing to interpolate. In total, there were 25 combinations.

Table 3.

Configuration of the image distance and scale-plane stack computation. A scale-plane stack of 9 distance images was computed with a scale factor up to 2.0 using a modified NSAD column distance measure, adapted to handle the invalid regions due to the tilt-correcting image transformations.

Table 4.

Number of steps, search range, and resolution of the warp parameter search space used by MinWarping.

Table 5.

Configuration of the MinWarping searcher. MinWarping was configured to perform a double search, i.e., a second run with exchanged snapshot/current view, with compass acceleration.

Table 6.

Range and resolution parameters used by the tilt search strategies. All three strategies were set up to cover the same symmetric search range of up to of tilt per axis in the roll-pitch representation. This range comprised the maximum tilt of both databases (i.e., the range of the LIV database) and was used for experiments on both. The grid and pattern search resolution also matched the step size between tilt configurations of the LIV database, and the simplex threshold was derived from these as twice the pattern resolution, as described in Section 2.4.3.

4.2. Image Differences

For comparing the effect of the tilt-correction solutions on panoramic images, a pairwise difference measure was used to compare images transformed by two different solutions on the same input image. This was done by computing a normalized sum of absolute pixelwise differences:

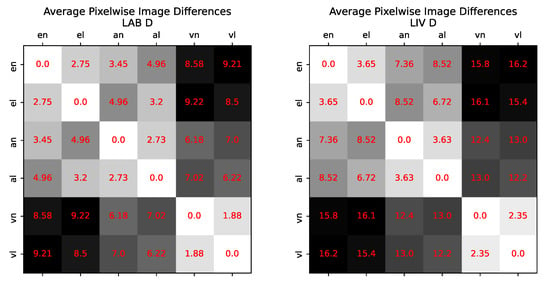

where and are transformed from the same input image by different tilt-correction solutions or using different interpolation methods. W and H are the width and height of the panoramic image. Using this difference measure, a distance matrix of the pairwise comparisons of all tilt-correction solutions combined with the two interpolation methods (nearest neighbor and bilinear) was plotted. These comparison matrices were produced for each database by averaging over all images and are shown in Figure 20. Note that this image difference experiment omitted the last two preprocessing steps of rotating and cropping the image, as these were only required for the homing experiments. These average image differences clearly set the strongest, vertical approximation apart from the other two, which were more similar to each other than to the vertical. This was not surprising as the vertical approximation removed one degree of freedom, the horizontal direction.
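A sketch of the pairwise difference measure and the resulting comparison matrix is given below. It assumes the normalization is simply by the number of pixels W·H (the original equation may normalize differently, e.g., by the intensity range) and does not treat invalid regions specially; the method keys in the test are placeholders.

```python
import numpy as np


def image_difference(a, b):
    """Normalized sum of absolute pixelwise differences: sum |a - b| / (W * H)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.abs(a - b).sum() / a.size)


def difference_matrix(images_by_method):
    """Pairwise differences between images transformed by different methods."""
    names = list(images_by_method)
    m = np.zeros((len(names), len(names)))
    for i, p in enumerate(names):
        for j, q in enumerate(names):
            m[i, j] = image_difference(images_by_method[p], images_by_method[q])
    return names, m
```

Averaging such matrices elementwise over all images of a database yields a plot like Figure 20; the matrices are symmetric with a zero diagonal because the measure is symmetric.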

Figure 20.

Plot of the average pixelwise image differences between all tilt-correction solutions. The LAB and LIV database are reported separately as the ranges of tilt and thus the expected deviations were different (LIV comprised more tilt). The results were averaged by taking the mean per method over all images contained in the database. For the tilt correction, the ground-truth tilt parameters as recorded in the database were used. The image difference measure is a symmetric distance measure, therefore the plotted matrices are symmetric about the main diagonal as well. With a stronger approximation, the effect of the different interpolation methods becomes smaller. The exact e* and approximate solutions a* are more similar to each other than to the vertical approximation v*.

The choice of interpolation method mattered less than the choice of approximation: each solution was more similar to its counterpart with the other interpolation method than to the next level of approximation. This was also expected, as the interpolation methods did not change how the tilt correction operated but only improved the image quality by smoothing out the lines and edges in the image. Figure 21 shows an example of an image tilt-corrected from one of the most extreme tilt configurations of the LAB database; the exact and approximate solutions were capable of straightening out most of the distortions due to tilt. With approximations, the deviations from straight lines became more noticeable farther away from the horizon, in particular at the top, where the small opening angle assumption was clearly violated. Without the horizontal component, the vertical approximation was not capable of straightening out curved vertical lines, and thus noticeable distortions remained even close to the horizon, making this strongest approximation clearly inferior to the other two. This suggested that a single transforming degree of freedom, i.e., a vertical shift, was not sufficient for proper tilt correction.

Figure 21.

Sample image from the LAB database at position in the most extreme tilt configuration. The original tilted image is shown at the top. Below is the image after tilt correction based on the ground truth using the three approximation levels with a nearest-neighbor interpolation. Invalid regions appear at the bottom of the exact solution and at the top and bottom of the approximate solutions. The jagged edges are an artifact of the nearest-neighbor interpolation. While the exact and approximate solutions clearly straighten out most of the edges, the vertical approximation still seems to be more similar to the original, tilted image than to the other tilt-corrected images, in particular in regions farther away from the horizon. The effects become most obvious when looking at the door, which should be perfectly straight and upright after tilt correction. Note that this image difference experiment omits the last two preprocessing steps of rotating and cropping the image, and thus invalid regions can be seen at the top of the approximate and vertical solution.

4.3. Homing Performance

The homing performance was evaluated by comparing the angular error between the true home vector and the home vector estimated by warping:

These home vectors were unit-length vectors with direction and , respectively, relative to the world coordinate system, i.e., the database grid. The estimated home direction was computed from the parameters and estimated by warping []:

The view direction angle was subtracted to convert from robot coordinates back to world coordinates to make the estimate comparable to the true home direction. This accounted for the randomized view direction which was introduced during preprocessing. The true home direction was derived as the angle between the positive X-axis and the vector pointing from the current view to the snapshot location on the database grid:

Plotting all home directions per snapshot as arrows placed at the corresponding current view positions yielded a qualitative visualization of the homing performance. Each home vector was computed as the two-dimensional unit vector with polar angles or , respectively:

These vector field plots allowed us to observe the spread of the home direction estimates around the true home direction.
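The evaluation quantities can be sketched as follows: the true home direction as the polar angle of the vector from the current view to the snapshot position, the unit home vector, and the unsigned angle between two home vectors. The estimated direction itself, which depends on the warping parameters and the view direction, is taken as given. Computing the error with `arctan2` of the cross and dot products instead of an arccosine of the dot product is a design choice made here for numerical robustness near 0 and π; both give the same angle.

```python
import numpy as np


def true_home_direction(current_xy, snapshot_xy):
    """Angle between the positive X-axis and the current-to-snapshot vector."""
    dx, dy = np.subtract(snapshot_xy, current_xy)
    return float(np.arctan2(dy, dx))


def unit_vector(angle):
    """Two-dimensional unit home vector with the given polar angle."""
    return np.array([np.cos(angle), np.sin(angle)])


def angular_error(true_angle, est_angle):
    """Unsigned angle in [0, pi] between the true and estimated home vectors."""
    a, b = unit_vector(true_angle), unit_vector(est_angle)
    cross = a[0] * b[1] - a[1] * b[0]
    return float(np.arctan2(abs(cross), np.dot(a, b)))
```

Because both angles enter only through unit vectors, estimates that differ by multiples of 2π yield an error of zero, as required for angular quantities.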

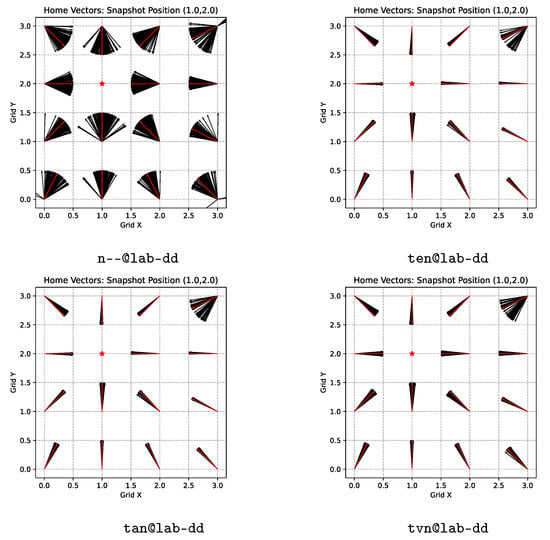

Figure 22 visualizes the effect of the different tilt-correction solutions on the home-direction estimates under the true-tilt strategy. Furthermore, plotting the results of the no-tilt strategy clearly demonstrated how severely the tilt affected the homing performance of warping. While the differences between no tilt correction and any tilt correction were vast, the three tilt-correction solutions differed only slightly from each other. Only the vertical approximation showed a slightly increased spread of the home vectors about the true home direction, and even this difference was almost negligible compared to the no-tilt-correction baseline.

Figure 22.

Home vector field plots comparing the tilt-correction solutions with nearest-neighbor interpolation on the true tilt strategy. One sample snapshot location from the LAB DxD dataset is shown. The red arrows show the true home direction per position, and the black arrows show the estimates obtained by the applied method. As there were 121 images per position in the dataset, there are also 121 home-direction estimates per position, although they may overlap in these plots. For this test, a snapshot position near the middle of the grid was chosen, so the arrows should ideally point there. The location in the top right corner is a known “bad spot” of the LAB environment, close to several pieces of furniture.

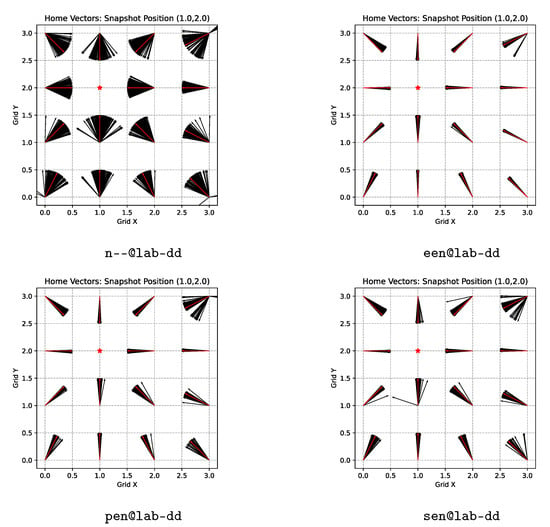

Figure 23 and Figure 24 visualize the effect of the different search strategies on the home-direction estimates when paired with the exact tilt correction and nearest-neighbor interpolation. Almost all estimated home vectors aligned with the ground truth, and the exhaustive search strategy was even able to fix the “bad spot” in the top right corner of the LAB database. As this was not the case for the true-tilt strategy, the exhaustive search presumably found some image distortion that is unrelated to the ground-truth tilt but nevertheless improves the homing performance of warping. Alternatively, the ground-truth data recorded for this position might be wrong, although this is rather unlikely, as the heuristic search strategies behaved similarly to the true-tilt strategy. The heuristic search strategies produced a markedly larger spread of the home-direction estimates and, in particular on the LIV database, more complete failures, i.e., home-direction estimates not even roughly pointing in the right direction. The increased spread, which was still smaller than without tilt correction, can probably be attributed to slight deviations of the tilt-correction estimate from the true tilt configuration. The complete failures were probably caused by the heuristic strategies converging to an entirely wrong minimum, which could distort the image even more than the original tilt did.

Figure 23.

Home vector field plots comparing the tilt search strategies with a nearest-neighbor interpolation on the exact tilt-correction solution; the same sample snapshot location from the LAB DxD dataset as in Figure 22 is shown.

Figure 24.

Home vector field plots comparing the tilt search strategies with a nearest-neighbor interpolation on the exact tilt-correction solution; one sample snapshot location from the LIV DxD dataset is shown. As the LIV database had 225 tilt configurations (images) per position, there are 225 potentially overlapping black arrows per position showing the estimated home directions. For this test, the snapshot position near the middle of the grid was chosen and hence the arrows should ideally point there.

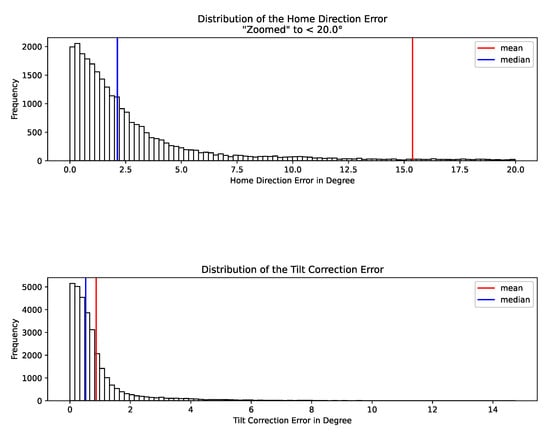

Finally, Table 7 summarizes the quantitative results of all methods on all datasets in terms of the angular error of the home direction. The results were summarized by the median, which is a better measure than the mean for the skewed error distribution plotted as an example in Figure 25.
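A toy example with invented error values (not taken from Table 7 or Figure 25) shows why the median was preferred: a single rare failure dominates the mean but leaves the median almost untouched.

```python
import statistics

# Invented home-direction errors in degrees: mostly small, one rare failure.
errors_deg = [2.0, 3.0, 3.5, 4.0, 5.0, 6.0, 170.0]

mean_error = statistics.mean(errors_deg)      # pulled up by the single outlier
median_error = statistics.median(errors_deg)  # middle value of the sorted list

print(f"mean = {mean_error:.1f} deg, median = {median_error:.1f} deg")
# mean = 27.6 deg, median = 4.0 deg
```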

Table 7.

Median of the home-direction error in degrees. The methods are grouped by tilt (search) strategy first and then arranged by tilt correction and interpolation method. In each group, the best-performing methods per dataset, i.e., those with the smallest home-direction error, are highlighted in red.

Figure 25.

Distribution of the home-direction error and the tilt-correction error, shown as an example for the exhaustive tilt search using an exact tilt correction with nearest-neighbor interpolation on the LAB DxD dataset (een@lab-dd). The median summarizes the homing performance better than the mean, as the home-direction error tends to have a skewed distribution with a long tail of rare large errors. Though less pronounced, the tilt-correction error behaves similarly.

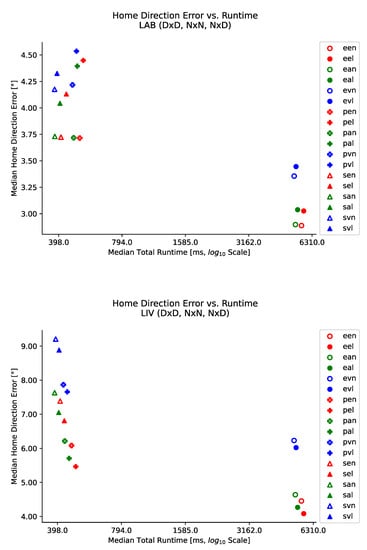

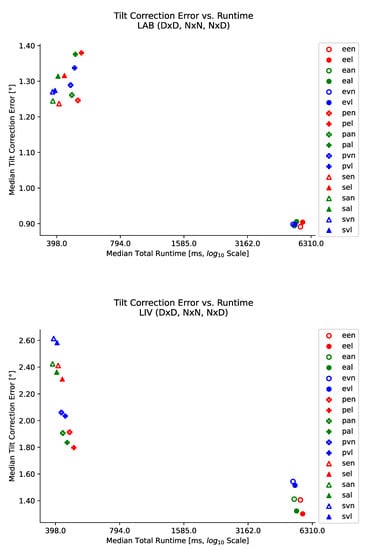

As already seen in the home-vector-field plots, the approximations of the tilt correction differed little within each search-strategy group. The choice of interpolation method had an almost negligible effect. Thus, the biggest influence stemmed from the search strategies, although on the LAB database (with smaller amounts of tilt) even these differences were rather small. Two observations are noteworthy when comparing the search strategies to the true-tilt strategy. First, on the same-database experiments DxD and NxN, the exhaustive search strategy was able to outperform the true-tilt baseline. This might be explained by the earlier observation on the home-vector fields: the exhaustive search strategy may be able to improve the homing performance of warping on “bad spots” in general, probably at the cost of tilt-correction performance. However, this effect was not observed in any of the cross-database experiments. Second, the heuristic search strategies performed worse on the no-tilt experiments NxN than the true-tilt and exhaustive search strategies. This indicates that they tried to tilt-correct images that were not tilted at all and thus introduced misleading distortions.

In general, as expected, the tilt search performed worse than the ground-truth tilt correction, and the heuristic search strategies performed worst. However, the differences between the pattern and the Nelder–Mead search strategies were small and inconclusive, only slightly favoring the pattern search (in particular on the LIV database, which covers the larger range of tilt). While all search strategies performed similarly on the same-database experiments (all within 2°), the differences were distinctly larger on the cross-database experiments: in the worst case (LIV NxD), the pattern and Nelder–Mead search strategies were worse than the true-tilt strategy by almost a factor of two, with the exhaustive search roughly in between, depending on the tilt-correction solution. However, even this worst case was still better than the baseline without tilt correction by slightly more than a factor of two.

4.4. Tilt-Correction Performance

Similar to the homing performance, the tilt-correction performance was evaluated by the angular error between the true tilt vector and the tilt vector estimated by the tilt-correction search:

These tilt vectors were computed as three-dimensional unit vectors from spherical coordinates given by the true or the estimated tilt parameters, respectively, in the roll-pitch representation:

The ground-truth tilt parameters of a snapshot/current-view pair were those of the current view, as the snapshots were always selected without tilt. Table 8 summarizes the quantitative results of all methods on all datasets in terms of the angular error of the tilt correction. The results were again summarized by the median; Figure 25 shows that the tilt-correction error has a skewed distribution, as discussed previously for the home-direction error. In the case of no tilt correction (n--), there is strictly no tilt-correction error according to Equation (37). However, inserting a zero tilt estimate into this equation reduces it to the magnitude of tilt described in Equation (4), which was consequently reported for all n-- experiments. The median of this magnitude of tilt can be interpreted as the typical amount of tilt that needed to be corrected in the dataset: half of the experiment samples showed images tilted less than this value, the other half images tilted more. The maximum amount of tilt was about 7° for the LAB and about 11° for the LIV database, so the reported median lay slightly above the middle of this range.
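The tilt-error computation and the interpretation of the median can be illustrated with a small sketch. Two assumptions are made that are not taken from the paper: the roll-pitch parameters are mapped to a unit vector by rotating the up-axis first about X (roll) and then about Y (pitch), and the 11 × 11 grid of tilt configurations in 1° steps is hypothetical, chosen only so that its maximum tilt (about 7°) resembles the LAB database. Under this convention, a zero estimate reduces the error to arccos(cos roll · cos pitch), which plays the role of the magnitude of tilt.

```python
import math
import statistics


def tilt_vector(roll, pitch):
    """Unit vector of the tilted up-axis for roll/pitch in radians.

    Assumed convention (hypothetical): rotate e_z = (0, 0, 1) about X by
    `roll`, then about Y by `pitch`.
    """
    return (
        math.sin(pitch) * math.cos(roll),
        -math.sin(roll),
        math.cos(pitch) * math.cos(roll),
    )


def tilt_error(true_rp, est_rp):
    """Angle (radians) between the true and estimated tilt vectors."""
    a = tilt_vector(*true_rp)
    b = tilt_vector(*est_rp)
    dot = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))


def tilt_magnitude(roll, pitch):
    """Tilt error against a zero estimate, i.e., the angle to e_z."""
    return math.acos(max(-1.0, min(1.0, math.cos(roll) * math.cos(pitch))))


# Hypothetical 11 x 11 grid of (roll, pitch) configurations, 1 degree apart.
grid = [(math.radians(r), math.radians(p)) for r in range(-5, 6) for p in range(-5, 6)]
magnitudes = [math.degrees(tilt_magnitude(r, p)) for r, p in grid]

median_tilt = statistics.median(magnitudes)
max_tilt = max(magnitudes)
print(f"median {median_tilt:.2f} deg, max {max_tilt:.2f} deg")
```

On this hypothetical grid the median magnitude is about 4.2° while the maximum is about 7.1°, so the median lies slightly above the middle of the range: the few configurations near the corners of a square grid raise the maximum without moving the median much.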

Table 8.

Median of the tilt-correction error in degrees. The methods are grouped by tilt (search) strategy first and then arranged by tilt correction and interpolation method. In each group, the best-performing methods per dataset, i.e., those with the smallest tilt-correction error, are highlighted in red.

Overall, all proposed methods were capable of actually estimating the tilt of the images. Compared to the baseline (the average amount of tilt in the dataset), the error was reduced by a factor between two and five. The median tilt-correction error can then be interpreted as the amount of tilt remaining in the dataset after correction. The choice of the tilt-correction solution had an almost negligible impact on the tilt-correction performance; in particular, the exact and approximate solutions were nearly identical. Although the vertical solution was noticeably worse, it was still within of the other solutions.

One noteworthy observation concerned the variants with linear interpolation: in many cases, these yielded a slightly worse (or, at best, equivalent) tilt correction than their nearest-neighbor counterparts, although not by much. This was somewhat unexpected, as linear interpolation was expected to produce images of overall better quality, which should benefit the underlying warping method and in turn make the tilt correction easier. The effect seemed to be more pronounced on the LAB database than on the LIV database, suggesting that it might be related to the content or overall structure of the images (the environment) or to the different amounts of tilt in the databases, i.e., that linear interpolation becomes beneficial only for larger amounts of tilt.

Though all search strategies were capable of finding a good tilt correction, they differed more from each other than the tilt-correction solutions did. Still, they were all within about 1° of each other. The pattern search strategy is noteworthy, as it rather consistently estimated the no-tilt condition of the NxN datasets correctly. This was probably due to its initial center test point being placed exactly in the middle of the search space, i.e., at the no-tilt configuration, which makes convergence to the correct tilt correction easier. The differences between the search strategies were rather independent of the chosen tilt correction, and in many cases, the interpolation was even the second most important influence, making the choice of approximation almost insignificant for the tilt-correction performance.
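The role of the initial center point can be seen in a generic compass-style pattern search. The sketch below is hypothetical and not the authors' exact update rule: it tests a cross of four points around the current center, moves to a better neighbor if one exists and otherwise halves the step, skips points outside an assumed ±7° search range, caches evaluations, and uses a quadratic bowl as a stand-in for the warping image distance. When the optimum is the no-tilt configuration at the center, the search never moves and only shrinks the pattern.

```python
def pattern_search(objective, center=(0.0, 0.0), step=4.0, min_step=1.0, bound=7.0):
    """Compass-style pattern search over (roll, pitch) in degrees.

    Hypothetical sketch: test the four axis neighbors of the current center;
    move to the best one if it improves on the center, otherwise halve the
    step. Points outside [-bound, bound]^2 are skipped without evaluation.
    Returns (best point, number of distinct objective evaluations).
    """
    cache = {}

    def evaluate(point):
        # Each distinct point costs one (stand-in) warping run.
        if point not in cache:
            cache[point] = objective(point)
        return cache[point]

    best = center
    best_value = evaluate(best)
    while step >= min_step:
        neighbors = [
            (best[0] + dx, best[1] + dy)
            for dx, dy in ((step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step))
        ]
        inside = [p for p in neighbors if all(abs(c) <= bound for c in p)]
        candidate = min(inside, key=evaluate, default=None)
        if candidate is not None and evaluate(candidate) < best_value:
            best, best_value = candidate, evaluate(candidate)  # move the pattern
        else:
            step /= 2.0  # center is best: refine the pattern
    return best, len(cache)


def bowl(x0, y0):
    """Quadratic stand-in for the warping distance with its minimum at (x0, y0)."""
    return lambda p: (p[0] - x0) ** 2 + (p[1] - y0) ** 2


print(pattern_search(bowl(0.0, 0.0)))   # no tilt: stays at the center
print(pattern_search(bowl(-3.0, 5.0)))  # tilted: walks towards (-3, 5)
```

With the optimum at the center, the search returns (0.0, 0.0) after 13 evaluations (the center plus four neighbors at each of the step sizes 4, 2, and 1); with the optimum at (-3, 5), it reaches that point in a few moves.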

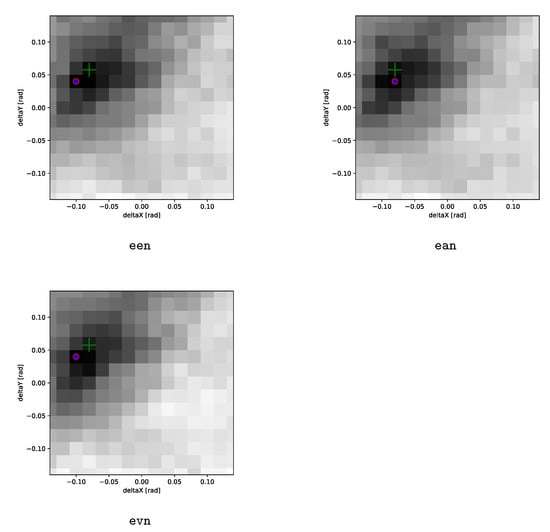

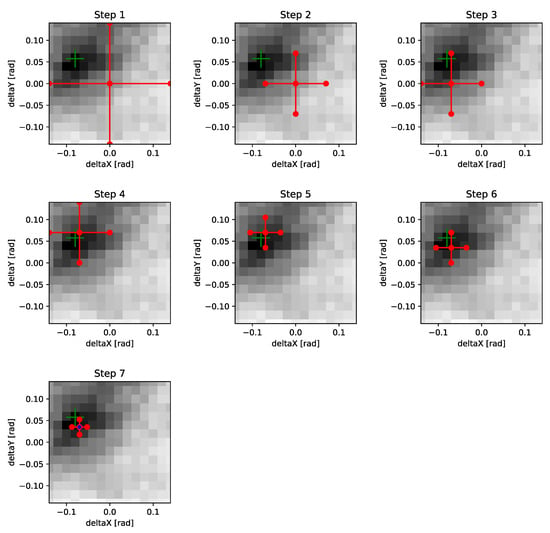

Figure 26, Figure 27 and Figure 28 visualize the search process of the different search strategies and the underlying objective function by plotting a heatmap of the function values.

Figure 26.

Visualization of the exhaustive search with the different approximations of the tilt correction and nearest-neighbor interpolation. For each tilt configuration of the exhaustive-search parameter grid, the d value of the corresponding warping run, i.e., the objective function value, is plotted. The darker the pixel, the lower d, and hence the better the tilt correction matches. The green cross marks the location of the ground-truth tilt parameters, i.e., the expected minimum, and the red-blue circle marks the minimum found by the search procedure. For all tilt-correction solutions, good matches clearly concentrate around the true minimum, with the objective function values decreasing towards it. A pronounced valley is visible and the overall terrain is not “too bumpy”, as required for the heuristic search methods to be applicable. The two approximations show a slightly more elongated valley of potential or near matches. In this sample, the estimated minimum of every solution is between 1° and 2° away from the true tilt configuration, i.e., at one of the adjacent grid points. This particular sample was produced from the LAB DxD dataset, taking the snapshot image at position with no tilt and with an added rotation of and the current view image at position in the tilt configuration with an added rotation of . The rotation added to the current view image caused the tilt configuration to be rotated as well, to the location marked by the green cross (see Equation (31) for more details).
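The exhaustive strategy itself is simple to sketch: evaluate every grid point and keep the one with the smallest objective value. The example below is illustrative only: the 15 × 15 grid from -7° to 7° in 1° steps is hypothetical (it has 225 points, matching the evaluation count of the exhaustive search grid mentioned in the captions, but is not necessarily the authors' grid), and a quadratic bowl with an off-grid minimum stands in for d. The estimate snaps to the nearest grid point, which is why an exhaustive estimate can lie one grid step away from the true tilt.

```python
import itertools


def exhaustive_search(objective, values):
    """Evaluate `objective` at every (roll, pitch) pair of a square grid.

    Returns the best grid point and the number of evaluations (len(values) ** 2).
    """
    grid = list(itertools.product(values, values))
    best = min(grid, key=objective)
    return best, len(grid)


# Hypothetical grid: -7 to 7 degrees in 1-degree steps, i.e., 15 x 15 = 225 points.
grid_values = [float(v) for v in range(-7, 8)]


def stand_in(p):
    """Quadratic stand-in for the warping distance d, minimum between grid points."""
    return (p[0] + 2.6) ** 2 + (p[1] - 4.3) ** 2


best, n_evals = exhaustive_search(stand_in, grid_values)
print(best, n_evals)  # (-3.0, 4.0) 225
```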

Figure 27.

Visualization of the pattern search strategy pen. As a reference, the exhaustive search heatmap of the een strategy (see Figure 26 for information on the selected image pair) is plotted as the background. The green cross marks the location of the ground-truth tilt parameters, i.e., the expected minimum. At each step, the state of the search pattern is visualized as a red cross connecting the test points. At the final step, the red-blue filled center circle marks the minimum found by the search procedure. After only four update steps, the pattern is already centered on the top left quadrant containing the minimum. In total, only seven update steps, corresponding to 22 warping evaluations, are necessary to find a good approximation of the tilt correction, in contrast to the 225 evaluations of the exhaustive search grid. According to Table 11, this is actually a worse-than-average performance: with tilt present, both the mean and the median number of evaluations are about 18.

Figure 28.

Visualization of the Nelder–Mead search strategy sen. As a reference, the exhaustive search heatmap of the een strategy (see Figure 26 for information on the selected image pair) is plotted as the background. The green cross marks the location of the ground-truth tilt parameters, i.e., the expected minimum. At each step, the state of the search simplex is visualized as a red triangle connecting the test points. At the final step, the red-blue filled circle marks the minimum found by the search procedure. After only three update steps, the minimum already lies inside the simplex. In total, only eight update steps, corresponding to 13 warping evaluations, are necessary to find a good approximation of the tilt correction, in contrast to the 225 evaluations of the exhaustive search grid. Note that most of the update steps were contractions, each requiring two evaluations of the warping objective (the reflected point and the contracted point). However, in steps 2, 4, and 6, the reflected point, which is computed before the contraction, lies outside the search range, so its warping evaluation is skipped and the step costs only one evaluation. According to Table 11, this is a slightly better-than-average performance with respect to the median number of evaluations of 15 (mean 30) when tilt is present.
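A generic Nelder–Mead sketch illustrates how skipping out-of-range candidates saves warping evaluations. It is hypothetical in its details: the standard coefficients (reflection 1, expansion 2, contraction 0.5, shrink 0.5), the initial simplex, the ±7° bound, the 0.5° tolerance, and the quadratic stand-in objective are all assumptions rather than the authors' settings. A candidate outside the search range returns infinity without calling the objective, so a step whose reflected point falls outside costs only the contraction evaluation.

```python
import math


def nelder_mead(objective, start, scale=3.0, bound=7.0, tol=0.5, max_iter=100):
    """Bounded two-dimensional Nelder-Mead sketch over (roll, pitch) in degrees.

    Candidates outside [-bound, bound]^2 are not evaluated (no warping run);
    they count as infinitely bad, which forces a contraction instead.
    Returns the best vertex and the number of objective evaluations.
    """
    evaluations = 0

    def f(p):
        nonlocal evaluations
        if any(abs(c) > bound for c in p):
            return math.inf  # skipped: outside the search range
        evaluations += 1
        return objective(p)

    def lerp(a, b, t):
        """Point a + t * (b - a); t < 0 extrapolates away from b."""
        return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))

    simplex = [start, (start[0] + scale, start[1]), (start[0], start[1] + scale)]
    values = [f(p) for p in simplex]
    for _ in range(max_iter):
        order = sorted(range(3), key=lambda i: values[i])
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        best, worst = simplex[0], simplex[2]
        if max(math.dist(best, p) for p in simplex[1:]) < tol:
            break
        centroid = lerp(simplex[0], simplex[1], 0.5)
        reflected = lerp(centroid, worst, -1.0)  # c + (c - worst)
        fr = f(reflected)
        if fr < values[0]:
            expanded = lerp(centroid, worst, -2.0)  # c + 2 (c - worst)
            fe = f(expanded)
            simplex[2], values[2] = (expanded, fe) if fe < fr else (reflected, fr)
        elif fr < values[1]:
            simplex[2], values[2] = reflected, fr
        else:
            if fr < values[2]:
                contracted = lerp(centroid, reflected, 0.5)  # outside contraction
            else:
                contracted = lerp(centroid, worst, 0.5)  # inside contraction
            fc = f(contracted)
            if fc < min(fr, values[2]):
                simplex[2], values[2] = contracted, fc
            else:  # shrink all vertices towards the best one
                simplex = [best] + [lerp(best, p, 0.5) for p in simplex[1:]]
                values = [values[0]] + [f(p) for p in simplex[1:]]
    i_best = min(range(3), key=lambda i: values[i])
    return simplex[i_best], evaluations


def stand_in(p):
    """Quadratic stand-in for the warping distance, minimum at (-3, 5)."""
    return (p[0] + 3.0) ** 2 + (p[1] - 5.0) ** 2


best, n_evals = nelder_mead(stand_in, (0.0, 0.0))
print(best, n_evals)
```

The returned count includes only points inside the search range, which is the quantity to compare against the 225 evaluations of an exhaustive grid.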