Digital Twin as Industrial Robots Manipulation Validation Tool

Abstract

1. Introduction

1.1. Related Studies

1.1.1. Input Modality Evaluation

1.1.2. Human Robot Collaboration and Work Cell Optimization

1.1.3. Ergonomics and Safety Evaluation

1.1.4. Robot Programming

2. Methods

2.1. Human-Robot Interaction Metrology

- the average time to complete the task when working with the physical robot versus working in the virtual environment;

- the average total duration of the VR sessions, including both the time to complete the task and the time to adjust to the virtual environment;

- the average time spent using or focusing on each of the virtual interface commands (e.g., adjusting joint speeds or positions, or changing operational modes);

- the average total time spent looking at the elements of the VR interface, the time spent looking at the virtual robot, and the time spent doing something else (e.g., work not directly related to the robot);

- the demographics of the human operators, including age, gender, and nationality;

- the operators’ previous experience in working with both robots;

- the NASA-TLX [5] captures the operator’s perceived workload in completing a task, rated on six subscales: mental, physical, and temporal demand, performance, effort, and frustration;

- the Godspeed questionnaire [38] records the operators’ perceptions of the anthropomorphism, animacy (i.e., how lifelike something appears), likeability, perceived intelligence, and perceived safety of the real and virtual robot systems.
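
The time-based metrics listed above reduce to simple averages over per-session logs. The Python sketch below shows one way to compute them. The `SessionLog` record, its field names, the use of seconds, and the gaze category names are hypothetical and are not taken from the study's software.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class SessionLog:
    """One participant's run in one condition (hypothetical record layout)."""
    condition: str                 # "physical" or "virtual"
    task_time_s: float             # time to complete the task
    adjust_time_s: float = 0.0     # VR only: time spent adjusting to the environment
    dwell_s: dict[str, float] = field(default_factory=dict)  # VR only: seconds per gaze category


def avg_task_time(logs: list[SessionLog], condition: str) -> float:
    """Average task completion time for one condition."""
    return mean(log.task_time_s for log in logs if log.condition == condition)


def avg_total_vr_duration(logs: list[SessionLog]) -> float:
    """Average VR session length: task time plus adjustment time."""
    return mean(log.task_time_s + log.adjust_time_s
                for log in logs if log.condition == "virtual")


def avg_dwell(logs: list[SessionLog], category: str) -> float:
    """Average time spent looking at one category (e.g. "ui", "robot", "other"),
    counting 0 s for VR sessions in which that category never occurred."""
    return mean(log.dwell_s.get(category, 0.0)
                for log in logs if log.condition == "virtual")
```

Counting a missing category as 0 s keeps every VR session in the denominator, so the per-category averages describe the whole VR group rather than only the operators who happened to look at that element.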

2.2. Data Collection: Integrating Metrics

2.3. Technical Implementation

2.3.1. The Digital Twin System

2.3.2. Attention Tracking System

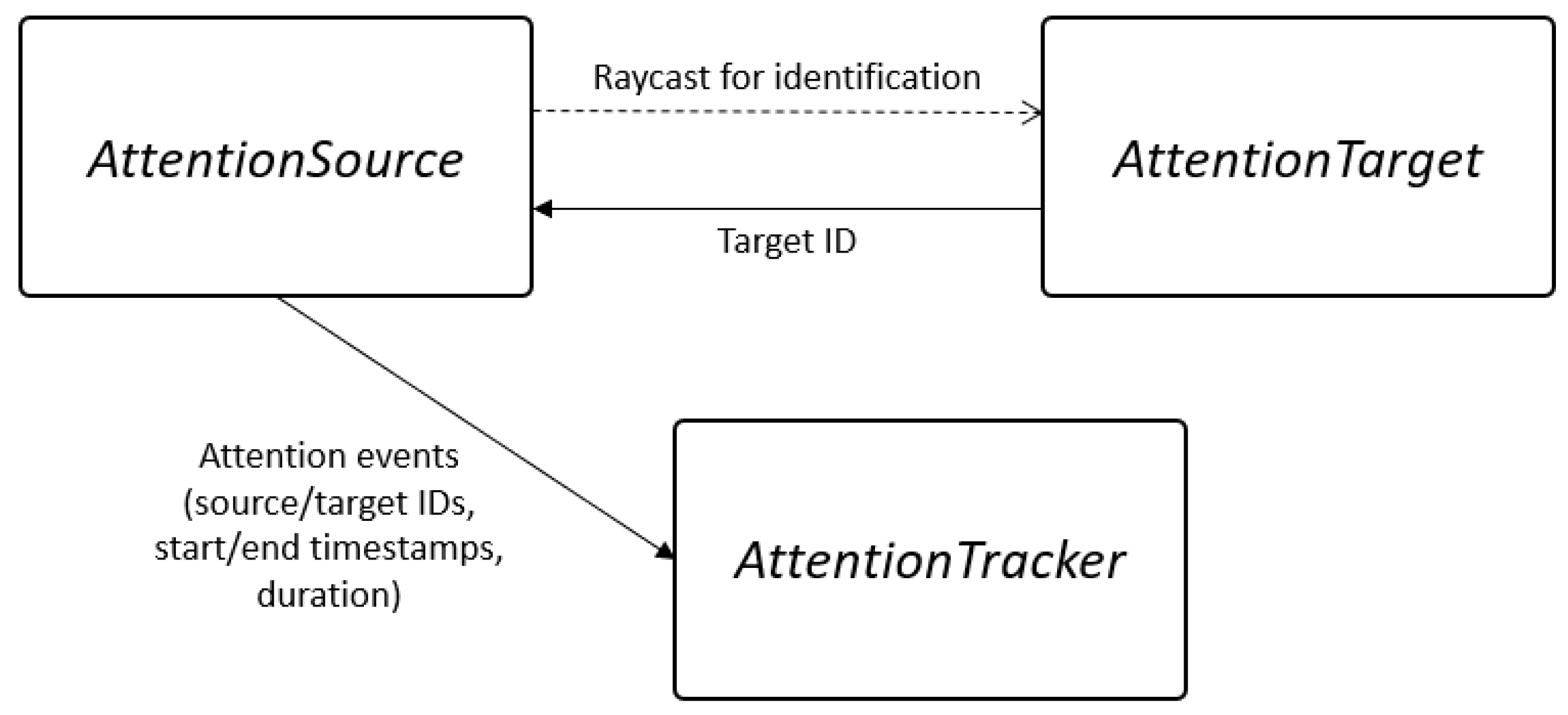

- AttentionTarget: a script that marks an object as a target for the attention tracking system. It is a Unity component script, so it can be attached to any 3D object in the virtual environment. An AttentionTarget must have a trigger volume attached to it to be detected by AttentionSources.

- AttentionSource: a script responsible for detecting AttentionTargets. AttentionSource uses raycasting to detect trigger volumes with associated AttentionTargets in the virtual environment. If the line-of-sight check, a ray cast along the normal of the HMD's front surface, encounters an AttentionTarget, a new attention event is triggered for that object. Once the object has been out of the line of sight for n consecutive computational cycles, the attention event is considered finished, and its duration and its start and end timestamps (in milliseconds) are written to the AttentionTracker's session file. The precision of event durations equals the length of one simulation clock cycle: the environment used in the experiment runs at 120 Hz, which gives a resolution of approximately 8.3 ms (1/120 s). AttentionSource thus uses head tracking as an approximation of eye tracking and attention monitoring.

- AttentionTracker: a core script that provides methods to start and stop recording an attention tracking session, register attention events, and export the recorded data in JSON format for later retrieval and analysis.
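
The interplay of the three scripts can be sketched outside Unity. The Python sketch below is not the authors' Unity implementation. It assumes that the raycast result for each 120 Hz tick is already known (the name of the hit AttentionTarget, or `None`). The class and method names, the default `n_miss_cycles=3`, and the choice to stamp an event's end at the close of the last cycle in which the target was hit are all illustrative assumptions.

```python
import json
from dataclasses import dataclass, asdict
from typing import Optional

TICK_HZ = 120                 # simulation rate reported for the experiment
TICK_MS = 1000.0 / TICK_HZ    # ~8.33 ms: the resolution of every timestamp below


@dataclass
class AttentionEvent:
    target: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


class AttentionTracker:
    """Records finished attention events for one session and exports them as JSON."""

    def __init__(self) -> None:
        self.events: list[AttentionEvent] = []
        self.recording = False

    def start(self) -> None:
        self.events.clear()
        self.recording = True

    def stop(self) -> None:
        self.recording = False

    def register(self, event: AttentionEvent) -> None:
        if self.recording:
            self.events.append(event)

    def export_json(self) -> str:
        return json.dumps([{**asdict(e), "duration_ms": e.duration_ms} for e in self.events])


class AttentionSource:
    """Turns per-tick head-ray hits into attention events (head tracking as a gaze proxy)."""

    def __init__(self, tracker: AttentionTracker, n_miss_cycles: int = 3) -> None:
        self.tracker = tracker
        self.n = n_miss_cycles
        self.open: dict[str, list[int]] = {}  # target -> [first_hit_tick, last_hit_tick]

    def on_tick(self, tick: int, hit_target: Optional[str]) -> None:
        """hit_target: the AttentionTarget hit by the HMD ray on this tick, or None."""
        if hit_target is not None:
            if hit_target in self.open:
                self.open[hit_target][1] = tick      # still in sight: extend the event
            else:
                self.open[hit_target] = [tick, tick]  # newly in sight: open an event
        # Close events whose target has been out of sight for n consecutive cycles.
        for target, (first, last) in list(self.open.items()):
            if tick - last >= self.n:
                self._close(target, first, last)

    def flush(self) -> None:
        """Close all events still open, e.g. when the session ends."""
        for target, (first, last) in list(self.open.items()):
            self._close(target, first, last)

    def _close(self, target: str, first: int, last: int) -> None:
        del self.open[target]
        # Each tick in which the target was hit counts as one full clock cycle.
        self.tracker.register(AttentionEvent(target, first * TICK_MS, (last + 1) * TICK_MS))
```

For example, four consecutive ticks on `"Robot"` followed by three empty ticks produce one `"Robot"` event of about 33.3 ms (4 × 8.33 ms). The miss counter (`n_miss_cycles`) prevents a brief head jitter from splitting one glance into several events.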

2.3.3. Stress Level Approximation

3. Experimental Protocol

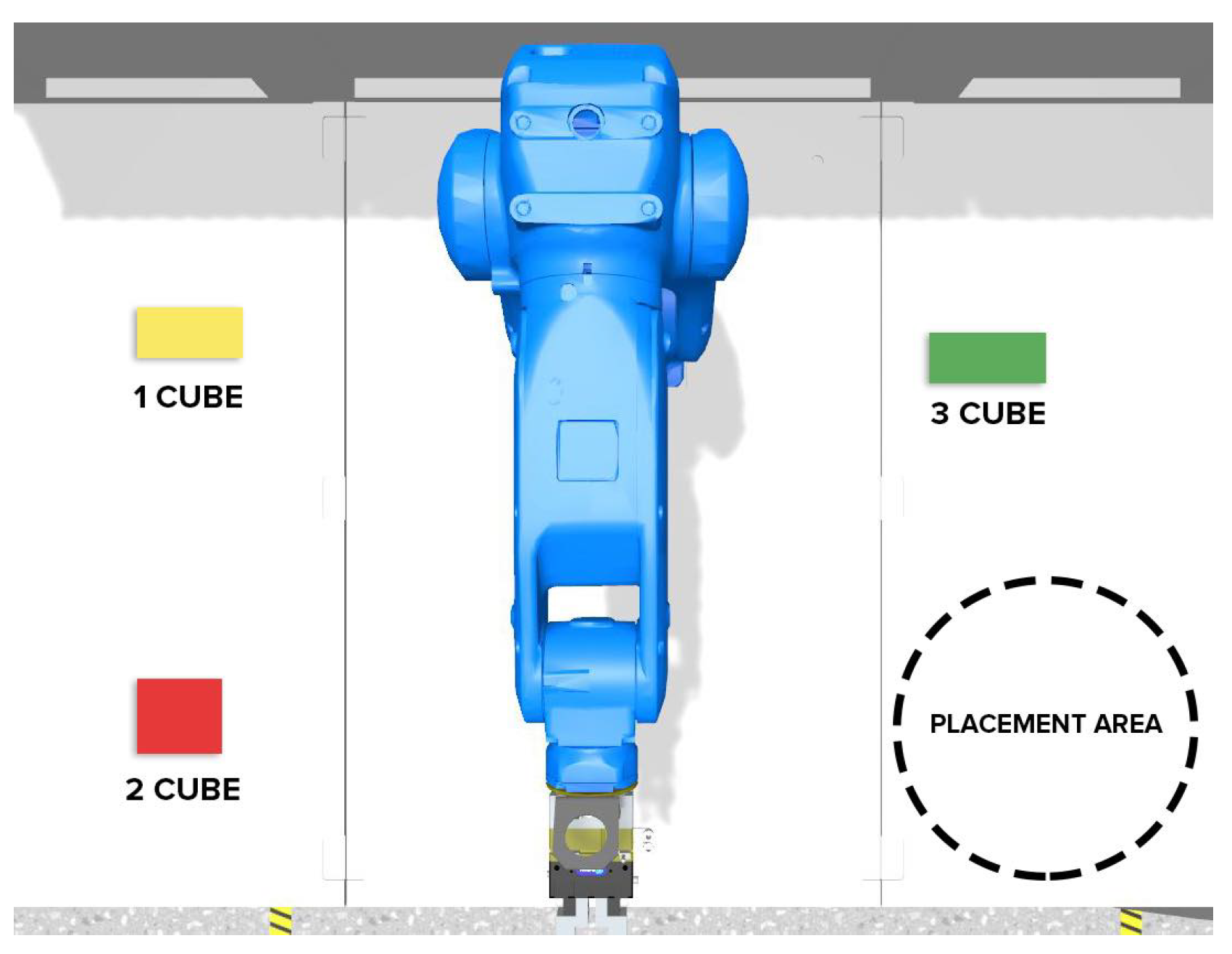

3.1. Robot Control Task

3.2. Participants

4. Results

4.1. Task Timing

4.2. Subjective Survey Responses

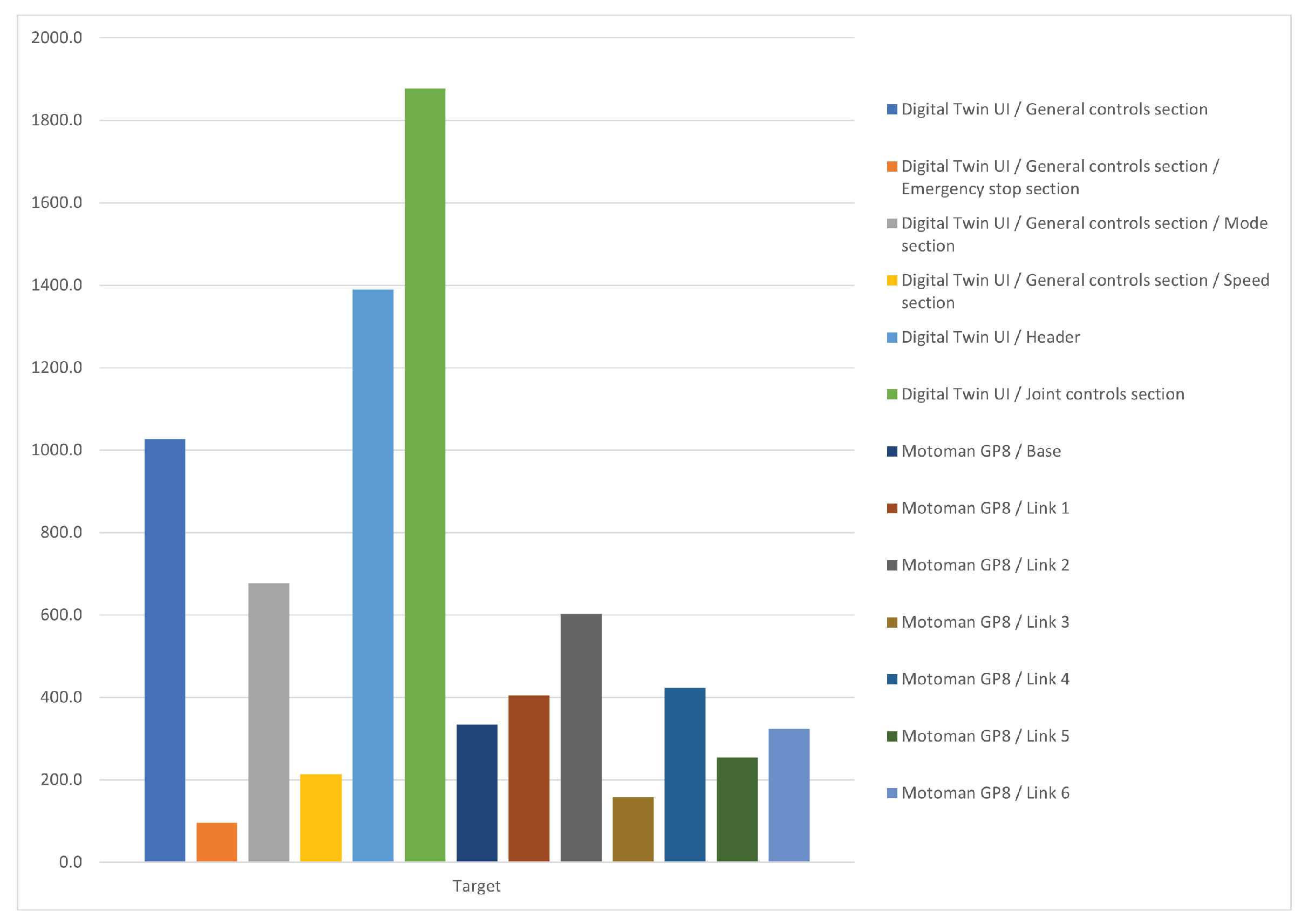

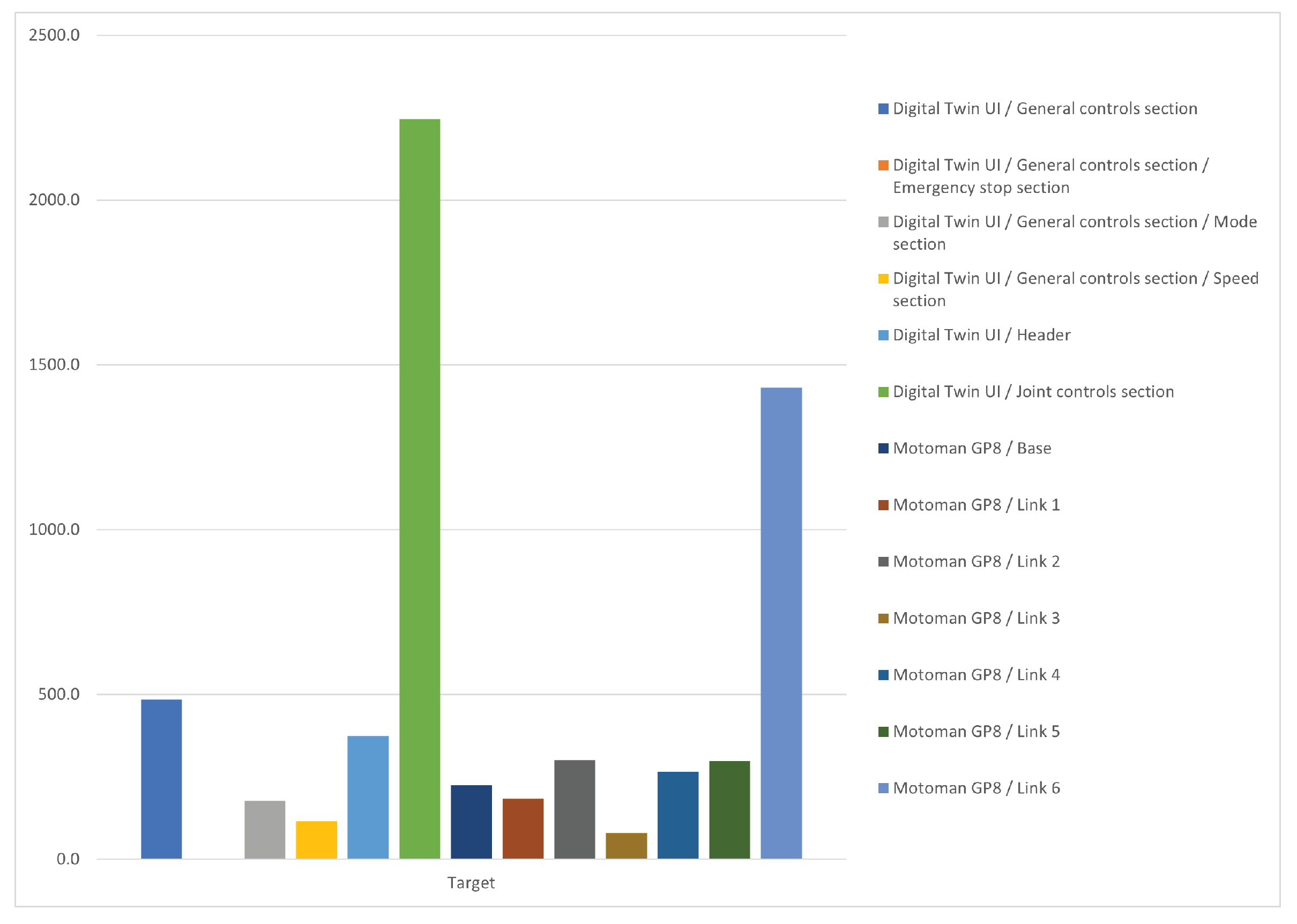

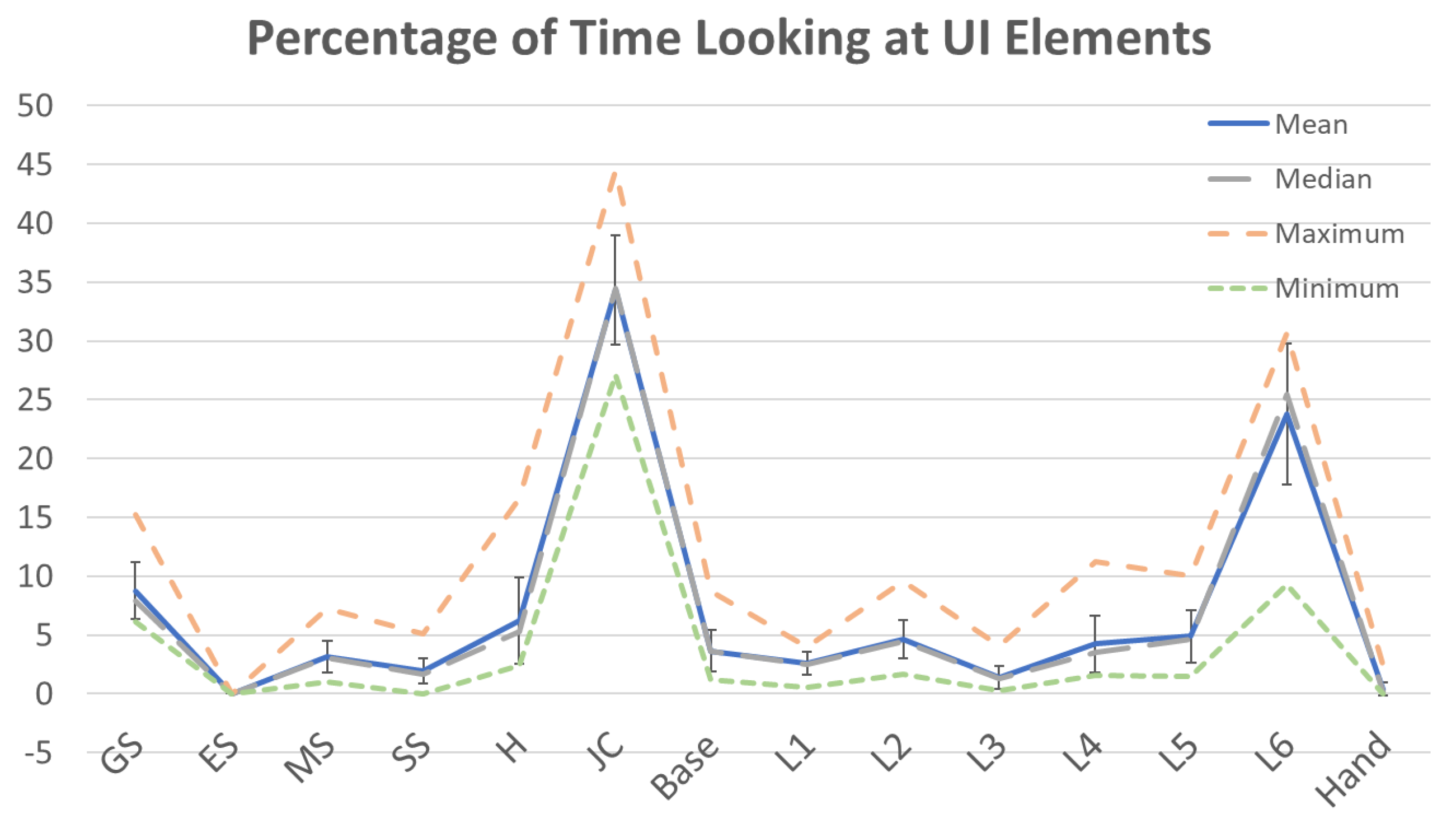

4.3. Eye Tracking

4.4. Physiological Stress Monitoring

5. Discussion

5.1. Advantages and Limitations of the DT System

5.2. Potential Future Developments (Based on the Findings)

6. Conclusions

7. Disclaimer

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, M.; Fang, S.; Dong, H.; Xu, C. Review of digital twin about concepts, technologies, and industrial applications. J. Manuf. Syst. 2020, 58, 346–361.

2. Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

3. Berg, J.; Lu, S. Review of Interfaces for Industrial Human-Robot Interaction. Curr. Robot. Rep. 2020, 1, 27–34.

4. Krupke, D.; Steinicke, F.; Lubos, P.; Jonetzko, Y.; Görner, M.; Zhang, J. Comparison of multimodal heading and pointing gestures for co-located mixed reality human–robot interaction. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–9.

5. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv. Psychol. 1988, 52, 139–183.

6. Brooke, J. SUS: A “quick and dirty” usability scale. Usability Eval. Ind. 1996, 189, 4–7.

7. Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. In Mensch & Computer 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 187–196.

8. Whitney, D.; Rosen, E.; Phillips, E.; Konidaris, G.; Tellex, S. Comparing robot grasping teleoperation across desktop and virtual reality with ROS reality. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2020; pp. 335–350.

9. Tadeja, S.K.; Lu, Y.; Seshadri, P.; Kristensson, P.O. Digital Twin Assessments in Virtual Reality: An Explorational Study with Aeroengines. In Proceedings of the 2020 IEEE Aerospace Conference, Missoula, MT, USA, 7–14 March 2020; pp. 1–13.

10. Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

11. Rheinberg, F.; Engeser, S.; Vollmeyer, R. Measuring components of flow: The Flow-Short-Scale. In Proceedings of the 1st International Positive Psychology Summit, Washington, DC, USA, 3–6 October 2002.

12. Schubert, T.; Friedmann, F.; Regenbrecht, H. Igroup presence questionnaire. Teleoperators Virtual Environ. 2001, 41, 115–124.

13. Laaki, H.; Miche, Y.; Tammi, K. Prototyping a Digital Twin for Real Time Remote Control Over Mobile Networks: Application of Remote Surgery. IEEE Access 2019, 7, 20325–20336.

14. Concannon, D.; Flynn, R.; Murray, N. A Quality of Experience Evaluation System and Research Challenges for Networked Virtual Reality-Based Teleoperation Applications. In Proceedings of the 11th ACM Workshop on Immersive Mixed and Virtual Environment Systems; Association for Computing Machinery, MMVE’19, New York, NY, USA, 18 June 2019; pp. 10–12.

15. Matsas, E.; Vosniakos, G.C.; Batras, D. Effectiveness and acceptability of a virtual environment for assessing human–robot collaboration in manufacturing. Int. J. Adv. Manuf. Technol. 2017, 92, 3903–3917.

16. Oyekan, J.O.; Hutabarat, W.; Tiwari, A.; Grech, R.; Aung, M.H.; Mariani, M.P.; López-Dávalos, L.; Ricaud, T.; Singh, S.; Dupuis, C. The effectiveness of virtual environments in developing collaborative strategies between industrial robots and humans. Robot. Comput.-Integr. Manuf. 2019, 55, 41–54.

17. Sievers, T.S.; Schmitt, B.; Rückert, P.; Petersen, M.; Tracht, K. Concept of a Mixed-Reality Learning Environment for Collaborative Robotics. Procedia Manuf. 2020, 45, 19–24.

18. Yap, H.J.; Taha, Z.; Dawal, S.Z.M.; Chang, S.W. Virtual reality based support system for layout planning and programming of an industrial robotic work cell. PLoS ONE 2014, 9, e109692.

19. Malik, A.A.; Masood, T.; Bilberg, A. Virtual reality in manufacturing: Immersive and collaborative artificial-reality in design of human–robot workspace. Int. J. Comput. Integr. Manuf. 2020, 33, 22–37.

20. Pérez, L.; Rodríguez-Jiménez, S.; Rodríguez, N.; Usamentiaga, R.; García, D.F. Digital Twin and Virtual Reality Based Methodology for Multi-Robot Manufacturing Cell Commissioning. Appl. Sci. 2020, 10, 3633.

21. Peruzzini, M.; Grandi, F.; Pellicciari, M. Exploring the potential of Operator 4.0 interface and monitoring. Comput. Ind. Eng. 2020, 139, 105600.

22. Karwowski, W. The OCRA Method: Assessment of Exposure to Occupational Repetitive Actions of the Upper Limbs. In International Encyclopedia of Ergonomics and Human Factors-3 Volume Set; CRC Press: Boca Raton, FL, USA, 2006; pp. 3337–3345.

23. Havard, V.; Jeanne, B.; Lacomblez, M.; Baudry, D. Digital twin and virtual reality: A co-simulation environment for design and assessment of industrial workstations. Prod. Manuf. Res. 2019, 7, 472–489.

24. McAtamney, L.; Corlett, N. Rapid upper limb assessment (RULA). In Handbook of Human Factors and Ergonomics Methods; CRC Press: Boca Raton, FL, USA, 2004; pp. 86–96.

25. Rosen, E.; Whitney, D.; Phillips, E.; Chien, G.; Tompkin, J.; Konidaris, G.; Tellex, S. Communicating robot arm motion intent through mixed reality head-mounted displays. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2020; pp. 301–316.

26. Moniri, M.M.; Valcarcel, F.A.E.; Merkel, D.; Sonntag, D. Human gaze and focus-of-attention in dual reality human–robot collaboration. In Proceedings of the 2016 12th International Conference on Intelligent Environments (IE), London, UK, 14–16 September 2016; pp. 238–241.

27. Wassermann, J.; Vick, A.; Krüger, J. Intuitive robot programming through environment perception, augmented reality simulation and automated program verification. Procedia CIRP 2018, 76, 161–166.

28. Vosniakos, G.C.; Ouillon, L.; Matsas, E. Exploration of two safety strategies in human–robot collaborative manufacturing using Virtual Reality. Procedia Manuf. 2019, 38, 524–531.

29. Maragkos, C.; Vosniakos, G.C.; Matsas, E. Virtual reality assisted robot programming for human collaboration. Procedia Manuf. 2019, 38, 1697–1704.

30. Manou, E.; Vosniakos, G.C.; Matsas, E. Off-line programming of an industrial robot in a virtual reality environment. Int. J. Interact. Des. Manuf. 2019, 13, 507–519.

31. Pérez, L.; Diez, E.; Usamentiaga, R.; García, D.F. Industrial robot control and operator training using virtual reality interfaces. Comput. Ind. 2019, 109, 114–120.

32. Ostanin, M.; Klimchik, A. Interactive Robot Programming Using Mixed Reality. IFAC-PapersOnLine 2018, 51, 50–55.

33. Nathanael, D.; Mosialos, S.; Vosniakos, G.C. Development and evaluation of a virtual training environment for on-line robot programming. Int. J. Ind. Ergon. 2016, 53, 274–283.

34. Ong, S.; Yew, A.; Thanigaivel, N.; Nee, A. Augmented reality-assisted robot programming system for industrial applications. Robot. Comput.-Integr. Manuf. 2020, 61, 101820.

35. Burghardt, A.; Szybicki, D.; Gierlak, P.; Kurc, K.; Pietruś, P.; Cygan, R. Programming of Industrial Robots Using Virtual Reality and Digital Twins. Appl. Sci. 2020, 10, 486.

36. Kuts, V.; Otto, T.; Tähemaa, T.; Bondarenko, Y. Digital twin based synchronised control and simulation of the industrial robotic cell using virtual reality. J. Mach. Eng. 2019, 19, 128–144.

37. Marvel, J.A.; Bagchi, S.; Zimmerman, M.; Antonishek, B. Towards Effective Interface Designs for Collaborative HRI in Manufacturing: Metrics and Measures. ACM Trans. Hum.-Robot. Interact. 2020, 9, 1–55.

38. Bartneck, C.; Kulic, D.; Croft, E.A. Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots. In Proceedings of the Metrics for Human-Robot Interaction, ACM/IEEE International Conference on Human-Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; ACM: Amsterdam, The Netherlands, 2008.

39. ISO 25010; Systems and Software Engineering-Systems and Software Quality Requirements and Evaluation (SQuaRE)-System and Software Quality Models. International Organization for Standardization: Geneva, Switzerland, 2011.

40. Kuts, V.; Modoni, G.E.; Otto, T.; Sacco, M.; Tähemaa, T.; Bondarenko, Y.; Wang, R. Synchronizing physical factory and its digital twin through an IIoT middleware: A case study. Proc. Est. Acad. Sci. 2019, 68, 364–370.

41. Kuts, V.; Cherezova, N.; Sarkans, M.; Otto, T. Digital Twin: Industrial robot kinematic model integration to the virtual reality environment. J. Mach. Eng. 2020, 20, 53–64.

| Cube Re-Positioning Task | Average Physical Machine Duration | Average VR Process Duration |
|---|---|---|
| Cube #1 | 225 | 144 |
| Cube #2 | 210 | 115 |
| Cube #3 | 184 | 105 |

| Cube Re-Positioning Task | Average VR Process Duration | Average Physical Machine Duration |
|---|---|---|
| Cube #1 | 114 | 178 |
| Cube #2 | 92 | 130 |
| Cube #3 | 61 | 159 |
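
The two task-timing tables can be compared through the relative reduction in average duration, (physical − VR)/physical. The sketch below computes this value for each cube from the values as printed. The tables do not state units or which session each one belongs to, so the labels `table_a` and `table_b` are hypothetical. For Cube #1 in the first table, (225 − 144)/225 = 0.36, meaning the VR process was 36% shorter on average.

```python
def relative_reduction(physical: float, vr: float) -> float:
    """Fraction by which the VR duration is shorter than the physical one."""
    return (physical - vr) / physical


# (physical, VR) average durations per cube, copied from the two tables.
# The second table lists the VR column first; the tuples are reordered here.
table_a = {"Cube #1": (225, 144), "Cube #2": (210, 115), "Cube #3": (184, 105)}
table_b = {"Cube #1": (178, 114), "Cube #2": (130, 92), "Cube #3": (159, 61)}

reductions = {
    label: {cube: relative_reduction(p, v) for cube, (p, v) in table.items()}
    for label, table in (("table_a", table_a), ("table_b", table_b))
}

if __name__ == "__main__":
    for label, per_cube in reductions.items():
        for cube, r in per_cube.items():
            print(f"{label} {cube}: VR {r:.1%} shorter")
```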

| Trial 1 | | | | |
|---|---|---|---|---|
| Expertise in robotics (1–10) | 2 | 9 | 1 | 2 |
| 1 cube end | 360 | 120 | 180 | 120 |
| 2 cube end | 240 | 120 | 120 | 90 |
| 3 cube end | 60 | 180 | 120 | 90 |
| **Trial 2** | | | | |
| 1 cube end | 300 | 120 | 60 | 60 |
| 2 cube end | 240 | 60 | 60 | 45 |
| 3 cube end | 60 | 45 | 60 | 45 |

| Anthropomorphism (scale 1–5) | Physical | Virtual |
|---|---|---|
| Fake-Natural | 4 | 2.65 |
| Machine-like-Human-like | 2.6 | 2.5 |
| Unconscious-Conscious | 2.45 | 2.3 |
| Artificial-Lifelike | 2.55 | 2.15 |
| Moving Rigidly-Moving Elegantly | 3.25 | 2.75 |
| **Animacy (scale 1–5)** | **Physical** | **Virtual** |
| Dead-Alive | 2.5 | 2.4 |
| Stagnant-Lively | 2.85 | 2.85 |
| Mechanical-Organic | 2.3 | 2.25 |
| Artificial-Lifelike | 2.3 | 1.9 |
| Inert-Interactive | 2.75 | 3.35 |
| Apathetic-Responsive | 3.4 | 3.45 |
| **Likeability (scale 1–5)** | **Physical** | **Virtual** |
| Dislike-Like | 4 | 3.5 |
| Unfriendly-Friendly | 3.55 | 3.3 |
| Unkind-Kind | 3.4 | 3.35 |
| Unpleasant-Pleasant | 3.6 | 3.55 |
| Awful-Nice | 3.9 | 3.55 |
| **Perceived Intelligence (scale 1–5)** | **Physical** | **Virtual** |
| Incompetent-Competent | 3.2 | 3.3 |
| Ignorant-Knowledgeable | 2.95 | 3.45 |
| Irresponsible-Responsible | 3.45 | 3.5 |
| Unintelligent-Intelligent | 2.9 | 3.2 |
| Foolish-Sensible | 3.1 | 3.25 |
| **Perceived Safety (scale 1–5)** | **Physical** | **Virtual** |
| Anxious-Relaxed | 4.1 | 3.55 |
| Agitated-Calm | 3.85 | 3.9 |
| Quiescent-Surprised | 2.95 | 3.05 |

| Anthropomorphism (scale 1–5) | Virtual | Physical |
|---|---|---|
| Fake-Natural | 3.55 | 3.45 |
| Machine-like-Human-like | 1.95 | 1.9 |
| Unconscious-Conscious | 2.35 | 2.3 |
| Artificial-Lifelike | 2.45 | 2.2 |
| Moving Rigidly-Moving Elegantly | 3.05 | 3.45 |
| **Animacy (scale 1–5)** | **Virtual** | **Physical** |
| Dead-Alive | 2.9 | 2.45 |
| Stagnant-Lively | 3.2 | 3 |
| Mechanical-Organic | 2.25 | 1.85 |
| Artificial-Lifelike | 2.4 | 2.05 |
| Inert-Interactive | 4.1 | 3.35 |
| Apathetic-Responsive | 4.25 | 3.7 |
| **Likeability (scale 1–5)** | **Virtual** | **Physical** |
| Dislike-Like | 4.45 | 3.8 |
| Unfriendly-Friendly | 3.95 | 3.1 |
| Unkind-Kind | 3.7 | 3.1 |
| Unpleasant-Pleasant | 3.95 | 3.35 |
| Awful-Nice | 4.3 | 3.65 |
| **Perceived Intelligence (scale 1–5)** | **Virtual** | **Physical** |
| Incompetent-Competent | 3.65 | 3.2 |
| Ignorant-Knowledgeable | 3.45 | 3.05 |
| Irresponsible-Responsible | 3.3 | 3.15 |
| Unintelligent-Intelligent | 3.2 | 2.8 |
| Foolish-Sensible | 3.35 | 3.15 |
| **Perceived Safety (scale 1–5)** | **Virtual** | **Physical** |
| Anxious-Relaxed | 3.7 | 2.6 |
| Agitated-Calm | 3.8 | 2.95 |
| Quiescent-Surprised | 3.7 | 3 |
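
Godspeed responses are commonly summarized per category by averaging the item scores within that category. The sketch below applies this convention to two categories from the first Godspeed table. Using the tabulated item means as inputs and ignoring any reverse-coding are assumptions of this sketch, not the paper's stated procedure.

```python
from statistics import mean

# Item means (scale 1-5) copied from the first Godspeed table: (physical, virtual).
GODSPEED_ITEMS = {
    "Likeability": {
        "Dislike-Like": (4.0, 3.5),
        "Unfriendly-Friendly": (3.55, 3.3),
        "Unkind-Kind": (3.4, 3.35),
        "Unpleasant-Pleasant": (3.6, 3.55),
        "Awful-Nice": (3.9, 3.55),
    },
    "Perceived Intelligence": {
        "Incompetent-Competent": (3.2, 3.3),
        "Ignorant-Knowledgeable": (2.95, 3.45),
        "Irresponsible-Responsible": (3.45, 3.5),
        "Unintelligent-Intelligent": (2.9, 3.2),
        "Foolish-Sensible": (3.1, 3.25),
    },
}


def category_scores(items: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Average the item scores of one category, separately for each condition."""
    physical = mean(p for p, _ in items.values())
    virtual = mean(v for _, v in items.values())
    return physical, virtual


scores = {cat: category_scores(items) for cat, items in GODSPEED_ITEMS.items()}
```

Under these assumptions, Likeability averages 3.69 (physical) and 3.45 (virtual). Perceived Intelligence averages 3.12 and 3.34.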

| Criteria | Scale | Physical | Virtual |
|---|---|---|---|
| Mental Demand | Low-High | 4.55 | 5.5 |
| Physical Demand | Low-High | 2.925 | 2.575 |
| Temporal Demand | Low-High | 4.35 | 3.75 |
| Performance | Good-Poor | 3.125 | 3.5 |
| Effort | Low-High | 3.425 | 4.325 |
| Frustration | Low-High | 3.175 | 4.125 |

| Criteria | Scale | Virtual | Physical |
|---|---|---|---|
| Mental Demand | Low-High | 4.35 | 6 |
| Physical Demand | Low-High | 2.25 | 4.65 |
| Temporal Demand | Low-High | 4.07 | 5.55 |
| Performance | Good-Poor | 3.85 | 4.55 |
| Effort | Low-High | 4.07 | 6.25 |
| Frustration | Low-High | 2.1 | 4.75 |
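
A single overall workload figure is often derived from NASA-TLX subscale ratings by averaging them without pairwise weights (the "raw TLX"). This is a simplification of the weighted procedure of Hart and Staveland. The sketch below applies it to the subscale means of the first NASA-TLX table. The text does not say whether the study computed an overall score this way, so treat the result as illustrative.

```python
from statistics import mean

SUBSCALES = ("Mental Demand", "Physical Demand", "Temporal Demand",
             "Performance", "Effort", "Frustration")

# Subscale means copied from the first NASA-TLX table. Performance runs Good-Poor,
# so for every subscale a higher value means a higher perceived workload.
PHYSICAL = dict(zip(SUBSCALES, (4.55, 2.925, 4.35, 3.125, 3.425, 3.175)))
VIRTUAL = dict(zip(SUBSCALES, (5.5, 2.575, 3.75, 3.5, 4.325, 4.125)))


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted mean of the six TLX subscales."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise KeyError(f"missing subscales: {missing}")
    return mean(ratings[s] for s in SUBSCALES)
```

Averaging group means gives the same value as averaging per-participant raw TLX scores only when every participant rated all six subscales.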

| Target | User Interface | | | | | Robot | | | | | | | | Self |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | General Controls | | | | | | | | | | | | | |
| Sub. # | ES | MS | SS | H | JC | Base | J1 | J2 | J3 | J4 | J5 | J6 | Hand | |
| nr.3 | 38.49 | 15.28 | 13.49 | 5.77 | 21.57 | 117.46 | 15.77 | 13.72 | 25.98 | 5.48 | 50.79 | 60.5 | 99.12 | 0.13 |
| nr.5 | 244.86 | 7.7 | 201.4 | 44.38 | 12.52 | 534.92 | 0.66 | 0.14 | 0.1 | 0 | 0 | 0 | 0 | 0 |
| nr.6 | 22.72 | 2.15 | 13.62 | 7.56 | 112.42 | 28.82 | 39.08 | 53.49 | 50.04 | 2.38 | 6.46 | 2.39 | 4.24 | 0 |
| nr.7 | 0.96 | 0 | 0.7 | 0 | 9.06 | 0.26 | 15.2 | 13.2 | 6.39 | 1.27 | 1.87 | 0.32 | 2.57 | 0 |
| nr.8 | 16.19 | 0 | 6.61 | 0 | 51.49 | 1.8 | 5.34 | 2.08 | 2.04 | 0 | 0 | 0 | 6.25 | 0 |
| nr.9 | 38.9 | 0 | 19.8 | 1.72 | 114 | 62.9 | 0.79 | 0.21 | 1.37 | 0.73 | 0.47 | 0.13 | 2.58 | 0 |
| nr.10 | 29.5 | 0 | 22.97 | 5.11 | 208.51 | 41.03 | 112 | 160.19 | 194.15 | 39.03 | 71.7 | 26.82 | 29.46 | 3.84 |
| nr.11 | 60.7 | 13.6 | 14 | 6.9 | 21.1 | 281 | 13.4 | 24.2 | 77.9 | 26 | 161 | 110 | 86.3 | 1.51 |
| nr.15 | 34.94 | 0.48 | 27.36 | 7.89 | 71.44 | 90.32 | 2.37 | 2.23 | 4.1 | 0 | 0 | 0 | 0 | 1.84 |
| nr.16 | 131 | 16.1 | 71.6 | 31.7 | 120 | 173 | 13.9 | 4.01 | 2.69 | 0.04 | 0.1 | 0.37 | 8.5 | 0.12 |
| nr.17 | 22.02 | 0 | 13.88 | 0 | 177.68 | 22.6 | 2.36 | 0.69 | 3.83 | 1.26 | 1.79 | 1.29 | 2.6 | 0 |
| nr.19 | 16.9 | 3.14 | 8.76 | 6.17 | 52.8 | 31.5 | 57.5 | 52.4 | 51.7 | 0 | 0.48 | 0.71 | 2.58 | 0.17 |
| nr.20 | 15.72 | 0 | 13.35 | 2.07 | 51.94 | 26.27 | 4.72 | 7.17 | 14.05 | 3.56 | 16.57 | 7.74 | 8.08 | 0 |
| nr.21 | 55.1 | 9.35 | 34.5 | 16.3 | 17.9 | 115 | 0.18 | 0.15 | 0.12 | 0 | 0 | 0 | 0 | 1.86 |
| nr.22 | 1.92 | 0 | 0.11 | 0 | 9.16 | 0 | 0.92 | 9.57 | 34.42 | 31.08 | 11.64 | 2.6 | 2.57 | 0 |
| nr.23 | 44.78 | 6.86 | 29.87 | 8.76 | 2.14 | 141.4 | 0.35 | 0.02 | 0 | 0 | 0 | 0 | 0 | 0 |
| nr.24 | 35.12 | 0 | 12.34 | 1.27 | 199.63 | 26.83 | 21.24 | 4.36 | 4.24 | 0.06 | 0.64 | 0.36 | 1.1 | 0 |
| nr.25 | 58.4 | 3.91 | 40.74 | 3.1 | 79.99 | 43.5 | 23.65 | 55.07 | 127.48 | 46.86 | 99.97 | 40.28 | 66.29 | 6.25 |
| nr.26 | 26.73 | 9.09 | 12.81 | 9.97 | 49.97 | 60.19 | 4.11 | 0.96 | 0.73 | 0 | 0 | 0 | 1.48 | 0 |
| nr.27 | 130.74 | 8.55 | 119.3 | 54.72 | 6.71 | 79 | 1.02 | 1.1 | 1.1 | 0 | 0 | 0 | 0 | 0 |

| Target | User Interface | | | | | Robot | | | | | | | | Self |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | General Controls | | | | | | | | | | | | | |
| Sub. # | ES | MS | SS | H | JC | Base | J1 | J2 | J3 | J4 | J5 | J6 | Hand | |
| nr.1 | 21.86 | 0 | 7.48 | 3.17 | 5.44 | 69.44 | 4.23 | 4.11 | 8.86 | 4.21 | 15.81 | 17.28 | 64.82 | 0 |
| nr.2 | 10.07 | 0 | 3.07 | 3.38 | 2.76 | 23.18 | 2.47 | 1.41 | 3.64 | 1.38 | 2.10 | 1.65 | 10.89 | 0 |
| nr.3 | 70.08 | 0 | 26.25 | 18.41 | 60.93 | 433.33 | 33.55 | 38.96 | 47.88 | 3.01 | 18.74 | 25.02 | 174.91 | 23.68 |
| nr.4 | 24.11 | 0 | 10.89 | 4.17 | 19.75 | 107.11 | 16.42 | 14.14 | 21.51 | 6.09 | 16.59 | 23.07 | 88.35 | 1.31 |
| nr.5 | 17.85 | 0 | 6.03 | 3.07 | 22.16 | 57.04 | 13.07 | 3.70 | 6.32 | 0.46 | 2.30 | 2.18 | 15.20 | 0 |
| nr.6 | 18.52 | 0 | 7.37 | 4.04 | 10.88 | 41.03 | 5.67 | 4.60 | 5.78 | 1.16 | 2.37 | 4.27 | 45.73 | 0 |
| nr.7 | 16.29 | 0 | 12.78 | 0 | 9.14 | 49.26 | 4.40 | 4.47 | 7.05 | 1.35 | 9.03 | 13.36 | 49.17 | 1.11 |
| nr.8 | 27.41 | 0 | 10.54 | 7.31 | 47.44 | 80.44 | 11.94 | 3.17 | 4.86 | 1.36 | 10.01 | 10.22 | 68.31 | 1.76 |
| nr.9 | 29.25 | 0 | 12.16 | 8.16 | 12.75 | 153.43 | 8.76 | 16.61 | 28.51 | 7.67 | 39.47 | 47.69 | 112.09 | 0.02 |
| nr.10 | 14.11 | 0 | 4.03 | 2.96 | 6.87 | 67.36 | 9.08 | 3.21 | 4.93 | 2.07 | 6.31 | 10.57 | 44.03 | 0.78 |
| nr.11 | 10.36 | 0 | 2.79 | 1.71 | 7.27 | 40.57 | 5.15 | 3.5 | 4.19 | 0.70 | 2.82 | 5.28 | 28.54 | 0.01 |
| nr.12 | 39.16 | 0 | 6.71 | 7.47 | 35.49 | 248.73 | 33.66 | 25.26 | 30.94 | 6.76 | 30.49 | 35.78 | 137.63 | 5.26 |
| nr.13 | 14.26 | 0 | 2.86 | 2.87 | 6.39 | 64.82 | 3.52 | 4.53 | 12.10 | 6.13 | 9.44 | 11.95 | 59.93 | 1.53 |
| nr.14 | 25.47 | 0 | 10.30 | 11.72 | 34.35 | 119.12 | 9.71 | 7.50 | 15.20 | 5.22 | 10.2 | 9.74 | 89.67 | 0.05 |
| nr.15 | 30.68 | 0 | 10.94 | 7.79 | 14.22 | 93.00 | 16.63 | 12.01 | 14.72 | 1.98 | 8.64 | 10.25 | 77.72 | 2.15 |
| nr.16 | 23.94 | 0 | 6.15 | 4.36 | 19.10 | 125.42 | 12.28 | 5.45 | 10.47 | 5.67 | 11.47 | 15.47 | 91.14 | 0.41 |
| nr.17 | 9.99 | 0 | 3.34 | 0.82 | 5.56 | 30.95 | 1.17 | 0.49 | 3.79 | 1.56 | 5.71 | 7.17 | 24.62 | 0 |
| nr.18 | 34.53 | 0 | 14.36 | 8.56 | 25.70 | 228.72 | 19.79 | 14.24 | 24.97 | 3.05 | 14.85 | 16.61 | 140.37 | 1.43 |
| nr.19 | 18.33 | 0 | 5.73 | 3.30 | 10.94 | 82.12 | 5.98 | 5.17 | 10.75 | 3.85 | 7.69 | 8.1 | 72.66 | 2.21 |
| nr.20 | 27.81 | 0 | 12.15 | 12.09 | 16.92 | 130.90 | 7.51 | 10.88 | 34.94 | 14.98 | 41.29 | 22.25 | 34.12 | 0.21 |

| Process | Maximum (BPM) | Minimum (BPM) | Average (BPM) | SD (BPM) |
|---|---|---|---|---|
| Physical robot programming | 117 | 75 | 90.05 | 11.99 |
| Virtual robot programming | 105 | 76 | 85.05 | 7.53 |

| Process | Maximum (BPM) | Minimum (BPM) | Average (BPM) | SD (BPM) |
|---|---|---|---|---|
| Physical robot programming | 99 | 83 | 90.55 | 4.27 |
| Virtual robot programming | 100 | 79 | 90.1 | 6.66 |
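
The heart-rate tables report the maximum, minimum, mean, and standard deviation of the measured BPM for each process. A minimal sketch of how such a summary row could be produced from a sampled BPM series follows. The sampling scheme and the use of the sample (n − 1) standard deviation are assumptions, because the text specifies neither.

```python
from statistics import mean, stdev


def bpm_summary(samples: list[float]) -> dict[str, float]:
    """Summarize a heart-rate series the way the tables do: max, min, mean, SD."""
    if len(samples) < 2:
        raise ValueError("need at least two samples for a standard deviation")
    return {
        "max": max(samples),
        "min": min(samples),
        "mean": mean(samples),
        "sd": stdev(samples),  # sample SD; use statistics.pstdev for the population SD
    }
```

Whether the sample or population SD is used matters little for long recordings, but it changes the reported value noticeably for short ones.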

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kuts, V.; Marvel, J.A.; Aksu, M.; Pizzagalli, S.L.; Sarkans, M.; Bondarenko, Y.; Otto, T. Digital Twin as Industrial Robots Manipulation Validation Tool. Robotics 2022, 11, 113. https://doi.org/10.3390/robotics11050113
