I Let Go Now! Towards a Voice-User Interface for Handovers between Robots and Users with Full and Impaired Sight

Abstract

1. Introduction

1.1. Research on Object Handover under Visual Restrictions

1.2. Voice-User Interfaces in Human–Robot Interaction for Sighted and Visually Impaired Users

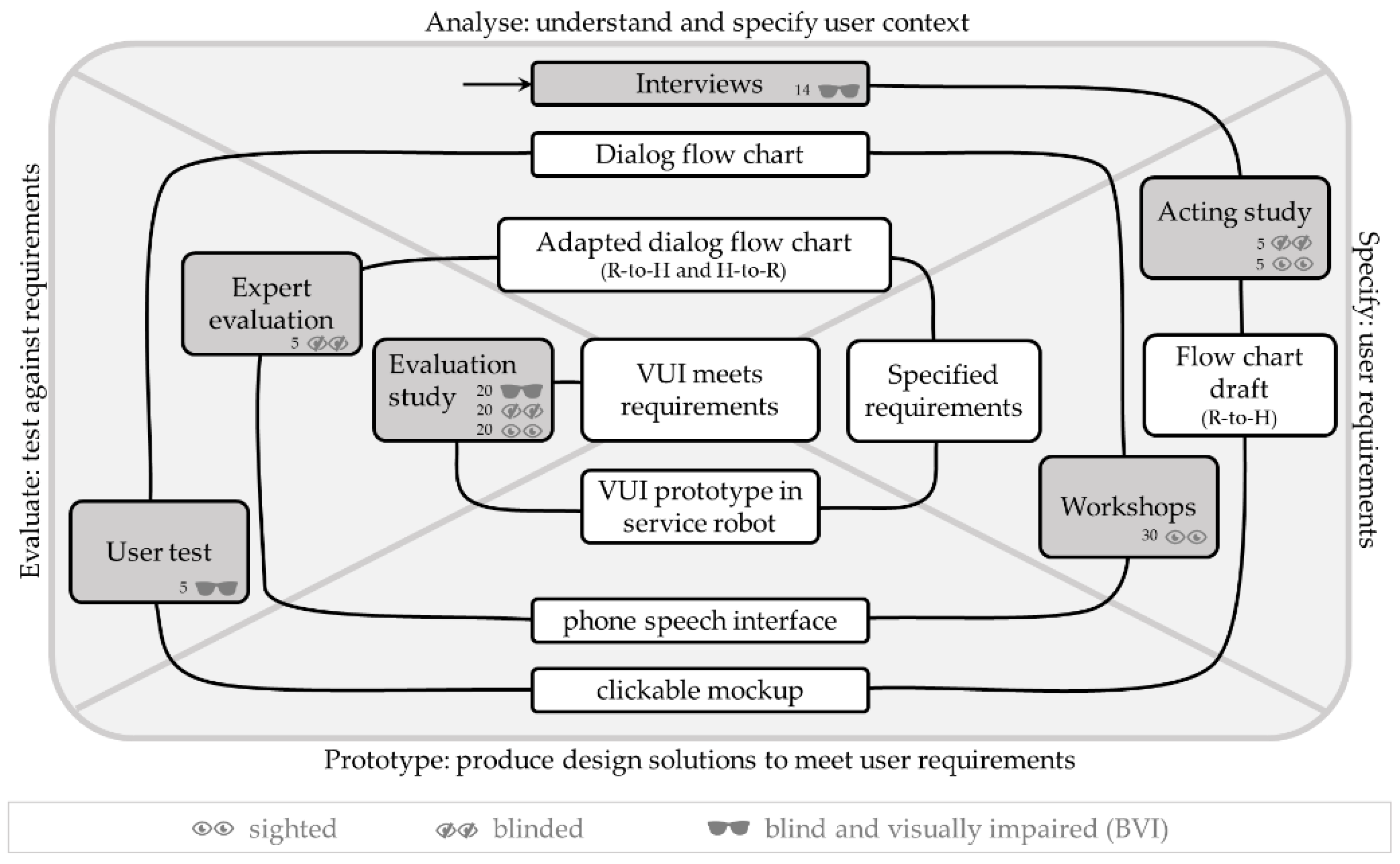

1.3. Voice-User Interfaces Design for Diverse Users with User-Centered Design (UCD)

- The design is based upon a precise understanding of users, their tasks, and their environments; designers dive deeply into users’ living environments instead of relying on vague ideas;

- Users are involved throughout the design and development process; their perspectives shape development instead of being requested only to evaluate the final product;

- User-centered evaluation drives and refines design; every prototype is evaluated by users, and their feedback is used for further product development;

- The process is iterative; stages of the development process are not linear and are done more than once, because user feedback makes it necessary to repeat stages multiple times;

- Design addresses not only ease of use but the whole user experience; products create emotions, provide real solutions, and encourage repeated use;

- The design team must include multidisciplinary skills and perspectives; designers, human factors experts, programmers, and engineers cooperate closely.

- Analyzing: Information about relevant user groups is collected and summarized to understand their tasks and goals, and why and in which context they will use the product;

- Specifying: From the collected information, user requirements are derived to set design guidelines and goals;

- Prototyping: Concepts and design solutions are created and implemented. Early prototypes are typically simple, with no or reduced functionality, becoming more and more realistic with every iteration cycle;

- Evaluating: Users try out prototypes and give feedback to test the design against the user requirements.

1.4. Research Objectives and Paper Structure

- (1) User requirements: What information conveyed by the VUI do users need to perform a handover interaction under visual restrictions? Which features of the speech interaction support the perception of the handover robot as a social partner?

- (2) Definition of VUI dialog: What dialog flow and commands are necessary to verbally coordinate robotic handover interactions for diverse user groups?

- (3) Evaluating the VUI prototype: Does the VUI prototype enable a successful handover? How do users rate the VUI regarding its support and their satisfaction with the social characteristics of the robot? Is the VUI inclusively applicable to BVI, blinded, and sighted users, even though not all user groups were involved in every stage of the UCD process?

2. Materials and Methods

2.1. Samples

2.2. Materials

2.3. Design, Procedures and Measures

- Beginner: wants a detailed dialog; each activity of the robot should be commented on in detail by voice outputs; robot as assistant worker;

- Intermediate: wants articulated status messages, but no detailed explanations; robot as an intelligent machine;

- Expert user: wants short commands and short messages; robot as a tool.
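The three personas can be read as verbosity levels of one and the same dialog. The following Python sketch is only an illustration under assumptions: each robot output is assumed to be authored in three variants, the `Persona` enum and `speech_for` helper are hypothetical names, and the texts are adapted from the example dialog in Appendix C rather than taken from the prototype.

```python
from enum import Enum


class Persona(Enum):
    BEGINNER = "beginner"          # detailed dialog; robot as assistant worker
    INTERMEDIATE = "intermediate"  # articulated status messages; robot as intelligent machine
    EXPERT = "expert"              # short commands and messages; robot as a tool


# Hypothetical variants of one robot output (the grasping step), one per persona.
GRASP_OUTPUT = {
    Persona.BEGINNER: ("I am opening my hand. I am moving my arm to the object. "
                       "I am closing my hand. I gripped the cup safely."),
    Persona.INTERMEDIATE: "I gripped the cup safely.",
    Persona.EXPERT: "Cup gripped.",
}


def speech_for(persona: Persona, variants: dict) -> str:
    """Select the output variant that matches the user's persona."""
    return variants[persona]


if __name__ == "__main__":
    for persona in Persona:
        print(f"{persona.value}: {speech_for(persona, GRASP_OUTPUT)}")
```

Keeping all variants of a step together in one mapping makes it easy to check that every step has an output for every persona.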

| Step | Research Aims | Method (M)/Design (D)/Material (MA) | Procedure |
|---|---|---|---|
| Interviews (BVI) | | M: structured interviews | |
| Acting Study (blinded, sighted) | | M: laboratory study; D: 2 × 2 within-subjects design: role of the giving partner (human assistant vs. assistant robot) × modality (midair handover vs. placing on table) | |
| User Test (BVI) | | M: Wizard-of-Oz (WOZ) user test (structured, unstandardized); D: 3 handover scenarios (within): reach (midair interaction) vs. take (midair interaction) vs. place (indirect interaction); MA: clickable mockup | |
| Workshops (sighted) | | M: remote workshop; D: 3 × 4 mixed design: persona (between: beginner vs. intermediate vs. expert user) × scenario (within: ideal scenario vs. speech recognition failure vs. problems finding object vs. path to user is blocked); MA: digital whiteboard (real-time, collaborative) | |
| Expert Evaluation (blinded) | | M: unstandardized expert evaluation; MA: phone-based speech interface | |
| Evaluation Study (BVI, blinded, sighted) | | M: standardized experiment; D: 3 × 3 × 2 mixed design: user group (between: BVI vs. blinded vs. sighted) × object (within: knife vs. cup vs. spanner) × modality (within: midair vs. placing); MA: VUI prototype in assistant robot | |

2.4. Data Analysis

3. Results

3.1. Requirements for VUI Design

3.2. VUI Prototype Dialog Content and Structure

3.3. Evaluation of VUI Prototype

4. Discussion

4.1. Recommendations for Inclusive VUI Design for Robot Handover under Visual Restriction

- Actively provide status and position information during passive user phases. This confirms the literature, as the ability to retrieve status information from an assistive system has been identified as key information and a goal of VUIs [17]. Depending on the complexity of the information, this can be done by continuous verbal communication about the current robotic action or by distinctive sounds in place of unnecessary voice outputs. If the robot’s mechanics are noiseless, generate artificial sounds to facilitate position estimation under visual restrictions.

- Before the handover, provide the user with precise information about the object, its orientation, and its position. Users required that the orientation of an object in the robot hand be communicated, as already noted by [4] along with the recommendation to orient objects according to human conventions. As [18] found before, unusual properties of the object should be announced; users mentioned properties such as being hazardous, sharp, sticky, or wet. Additionally, this research found that the position of the object should be communicated either as a clock-face direction (e.g., “The object is at the 9 o’clock position in front of your hand.”) or as a front/side direction relative to the receiver’s hand (e.g., “The object is in front, slightly left from your hand.”).

- Announce the handover and request active user confirmation of readiness for the handover, of a safe grasp, and of the release. Ref. [18] already postulated communicating the intention to hand over and reassuring the user of a safe grasp; this research confirms both and adds the requirement to request active confirmation before releasing.

- Use sounds to show readiness for voice input and to immediately confirm command or input processing. Users require immediate confirmation that their input was received and is being processed; otherwise, they are left uncertain whether they have been heard.

- Allow users to interrupt robot speech, but avoid interrupting user speech. Prioritized short commands for interrupting robot speech should be available at all times, e.g., for an emergency call or to correct an input. Shortening the latency between two consecutive speech outputs can prevent unintended interruptions by users.

- Enable good natural language recognition that understands simple but grammatically correct inputs. This further contributes to the anthropomorphism and personification of the social robot [22], as do the features of speech interaction described next.

- When designing voice output, pay attention to social manners and etiquette. Output should be kind and polite but stay task-oriented: no chatter, no colloquial language, and no excessive use of the word “please”. For voice input, allow a commanding style but also polite communication, such as the possibility to thank the robot, which then responds appropriately.

- Include anthropomorphic features in social robot communication. Users like the robot to introduce itself by name and expect it to react when this name is called. They require warm and natural communication, with only a slight preference for a female voice. Hence, where possible, offering both female and male voices is recommended so that users can choose according to individual preference.

- Allow individualization to address the differing needs of diverse user groups. Expert and novice modes, the latter with more extensive communication, ensure a continuous understanding of the situation for new or BVI users. In line with [13], which showed that BVI people often use screen readers with synthesized, robotic-sounding voices at higher-than-natural speech rates, the voice output tempo should be adjustable. An acoustic guidance system could emit sounds at the robot gripper to help BVI users localize the object.
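Voice choice and adjustable tempo map naturally onto speech-synthesis markup. The following Python sketch assumes the TTS engine accepts W3C SSML 1.1, where `<voice gender="…">` and `<prosody rate="…%">` are standard elements; the `VoiceProfile` class, its defaults, and `to_ssml` are hypothetical and not part of the described prototype.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass
class VoiceProfile:
    """Hypothetical per-user voice settings (names and defaults are illustrative)."""
    gender: str = "female"   # user-selectable: "female" or "male"
    rate_percent: int = 100  # 100 = engine default tempo; BVI users may choose more

    def __post_init__(self) -> None:
        if self.gender not in ("female", "male"):
            raise ValueError("gender must be 'female' or 'male'")
        if self.rate_percent <= 0:
            raise ValueError("rate_percent must be positive")


def to_ssml(text: str, profile: VoiceProfile) -> str:
    """Wrap one robot output in SSML 1.1 using the user's voice and tempo."""
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
        f'<voice gender="{profile.gender}">'
        f'<prosody rate="{profile.rate_percent}%">{escape(text)}</prosody>'
        "</voice></speak>"
    )


if __name__ == "__main__":
    fast_reader = VoiceProfile(gender="male", rate_percent=150)
    print(to_ssml("I'm letting go now.", fast_reader))
```

Escaping the text keeps characters such as `&` from breaking the markup. Engine support for the `voice` and `prosody` attributes varies, so a real deployment would need to check which values the chosen synthesizer honours.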

4.2. Recommendations on Dialog Flow and Commands
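The recommendation that prioritized short commands may interrupt robot speech at any time, while the robot never talks over the user, amounts to a small arbitration policy. The Python sketch below illustrates one way to encode it, assuming a priority queue of pending commands; the command names, their priorities, and the `SpeechArbiter` class are hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import List

# Hypothetical short commands and priorities (lower number = more urgent).
PRIORITY = {"emergency": 0, "stop": 1, "correction": 2, "status": 3}


@dataclass(order=True)
class Interrupt:
    priority: int
    seq: int                              # preserves arrival order within a priority
    command: str = field(compare=False)   # not used for ordering


class SpeechArbiter:
    """Lets short commands cut off robot speech, but never the reverse."""

    def __init__(self) -> None:
        self.robot_speaking = False
        self.user_speaking = False
        self._queue: List[Interrupt] = []
        self._seq = 0

    def on_command(self, command: str) -> None:
        """Queue a recognized short command and stop the current robot output."""
        heapq.heappush(self._queue, Interrupt(PRIORITY[command], self._seq, command))
        self._seq += 1
        self.robot_speaking = False  # user input always cuts off the robot

    def may_robot_speak(self) -> bool:
        """The robot only starts an output while the user is silent."""
        return not self.user_speaking

    def next_command(self) -> str:
        """Return the most urgent pending command."""
        return heapq.heappop(self._queue).command
```

For example, if a status query and an emergency call arrive while the robot is speaking, the robot falls silent and handles the emergency call first.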

4.3. Success of VUI Prototype

4.4. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

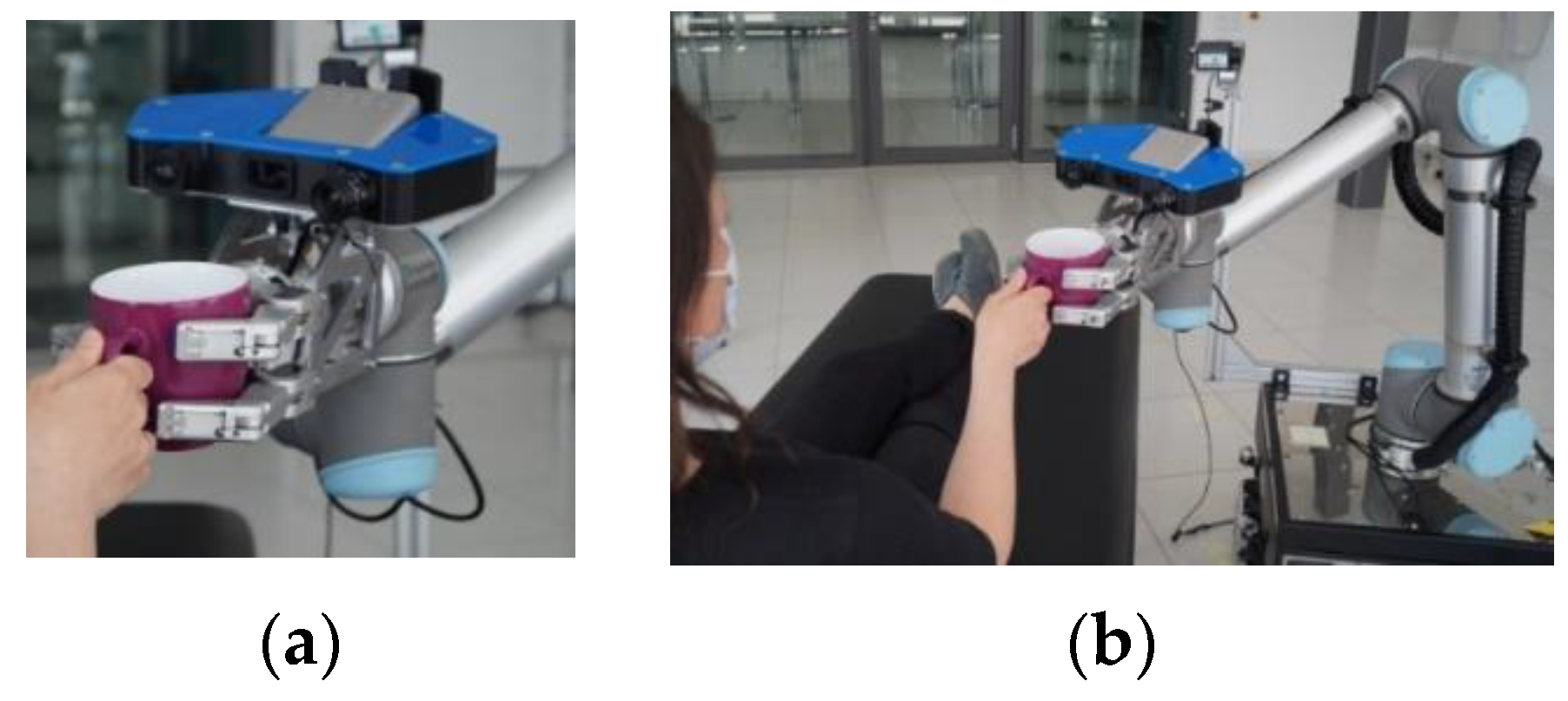

Appendix A. Description of the Robot for Handover under Visual Restrictions

Appendix B. Flowchart Draft

Appendix C. Example of the Final VUI Dialog

| Both modalities | Midair handover | Placing on table |
|---|---|---|
| 1. [User wakes the robot by phone call.] | | |
| 2. Hello, my name is [name]. I can bring and hand over objects to you or pick them up from you. I support two types of handover: either directly into your hand or indirectly, by placing the object on a table. You can also interrupt me while I am speaking. 3. [On demand, when the user says ‘Manual’: explanation that the robot asks for three pieces of information (object, handover direction, and handover modality) and introduction of the available short commands.] 4. How can I help you? [e]² 5. Hello, [name]. Please bring me a cup of coffee. [e] [2 of 3 necessary pieces of information given] 6. I’m supposed to bring you a cup. Shall I hand you the cup directly or put it on the table? [e] | | |
| | 7. Directly into my hand. [e] | 7. On the table, please. [e] |
| | 8. I’m supposed to bring you a cup of coffee. The transfer should take place directly from hand to hand. Did I understand the task correctly? [e] | 8. I’m supposed to bring you a cup of coffee. The transfer should be done indirectly by placing it on the table. Did I understand the task correctly? [e] |
| 9. Yes [Yes, you did]. [e] 10. I am looking for the cup. | | |
| 11. I have localized the cup. 12. I am moving to the cup and getting it for you. 13. I am opening my hand. 14. I am moving my arm to the object. 15. I am closing my hand. 16. I gripped the cup safely. 17. I am moving back a little. | | |
| | 18. I am approaching you for the handover, but still keeping a little distance. [e] | 18. I am going to you now to put the item on the table. |
| | 19. Confirmation required. I would now like to hand the cup directly into your hand. Say ‘Go’ as soon as you’re ready to go. [e] 20. Go. [e] | [only robotic sounds perceptible] 19. Current status? |
| | 21. Okay. Please hold out your hand to me so that I can navigate towards you. [e] 22. I hold the cup directly in front of your hand with the handle first. Follow the light air flow¹ with your hand and reach for it. [e] | 20. I am going to you now to put the item on the table. |
| | 23. Confirmation required. Say ‘Go’ if I may now open my hand and let go of the cup. [e] 24. Okay, go. [e] | |
| | 25. I’m letting go now. [hand opens after the sentence is finished] [e] | 21. I put the cup down on the table and let go now. [e] |
| 26. Thanks. 27. You are very welcome. 28. I am moving back a little. 29. Is there anything else I can do for you? 30. No, not at the moment. 31. I am driving back to my base station. 32. I have reached my base station. [e] | | |
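Steps 3 to 8 of this dialog follow a slot-filling pattern: the robot needs three pieces of information (object, handover direction, handover modality), asks for whichever is missing, and finally reads the complete order back for confirmation. The Python sketch below reproduces that pattern under assumptions: slot names, question wording for the object and direction slots, and the `next_prompt` helper are hypothetical, and extracting slots from the user's utterance is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HandoverOrder:
    """The three pieces of information the robot asks for (slot names are hypothetical)."""
    obj: Optional[str] = None        # e.g. "cup"
    direction: Optional[str] = None  # "bring" (robot to user) or "take" (user to robot)
    modality: Optional[str] = None   # "midair" (hand to hand) or "table" (placing)


QUESTIONS = {
    "obj": "Which object should I bring you or take from you?",
    "direction": "Shall I bring you the {obj} or take it from you?",
    "modality": "Shall I hand you the {obj} directly or put it on the table?",
}


def next_prompt(order: HandoverOrder) -> str:
    """Ask for the first missing slot, or read the full order back for confirmation."""
    for slot in ("obj", "direction", "modality"):
        if getattr(order, slot) is None:
            return QUESTIONS[slot].format(obj=order.obj)
    task = (f"bring you a {order.obj}" if order.direction == "bring"
            else f"take the {order.obj} from you")
    how = ("take place directly from hand to hand" if order.modality == "midair"
           else "be done indirectly by placing it on the table")
    return f"I'm supposed to {task}. The transfer should {how}. Did I understand the task correctly?"


if __name__ == "__main__":
    order = HandoverOrder(obj="cup", direction="bring")  # "Please bring me a cup of coffee."
    print(next_prompt(order))                            # asks for the modality
    order.modality = "midair"                            # "Directly into my hand."
    print(next_prompt(order))                            # read-back for confirmation
```

Asking for one slot at a time keeps each question short, and the final read-back gives the user a single point at which to correct a misunderstanding.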

References

1. Richert, A.; Shehadeh, M.; Müller, S.; Schröder, S.; Jeschke, S. Robotic Workmates: Hybrid Human-Robot-Teams in the Industry 4.0. In Proceedings of the International Conference on E-Learning, Kuala Lumpur, Malaysia, 2–3 June 2016.

2. Müller, S.L.; Schröder, S.; Jeschke, S.; Richert, A. Design of a Robotic Workmate. In Digital Human Modeling. Applications in Health, Safety, Ergonomics, and Risk Management: Ergonomics and Design, 1st ed.; Duffy, V., Ed.; Springer: Cham, Switzerland, 2017; Volume 1, pp. 447–456.

3. Breazeal, C.; Dautenhahn, K.; Kanda, T. Social Robotics. In Springer Handbook of Robotics, 2nd ed.; Siciliano, B., Khatib, O., Eds.; Springer: Cham, Switzerland, 2016; Volume 1, pp. 1935–1972.

4. Cakmak, M.; Srinivasa, S.S.; Lee, M.K.; Kiesler, S.; Forlizzi, J. Using spatial and temporal contrast for fluent robot-human hand-overs. In Proceedings of the 6th International Conference on Human-Robot Interaction, Lausanne, Switzerland, 6–9 March 2011.

5. Aleotti, J.; Micelli, V.; Caselli, S. An Affordance Sensitive System for Robot to Human Object Handover. Int. J. Soc. Robot. 2014, 6, 653–666.

6. Koene, A.; Remazeilles, A.; Prada, M.; Garzo, A.; Puerto, M.; Endo, S.; Wing, A. Relative importance of spatial and temporal precision for user satisfaction in Human-Robot object handover Interactions. In Proceedings of the 50th Annual Convention of the AISB, London, UK, 18–21 April 2014.

7. Langton, S.; Watt, R.; Bruce, V. Do the eyes have it? Cues to the direction of social attention. TiCS 2000, 4, 50–59.

8. Käppler, M.; Deml, B.; Stein, T.; Nagl, J.; Steingrebe, H. The Importance of Feedback for Object Hand-Overs Between Human and Robot. In Human Interaction, Emerging Technologies and Future Applications III. IHIET 2020. Advances in Intelligent Systems and Computing; Ahram, T., Taiar, R., Langlois, K., Choplin, A., Eds.; Springer: Cham, Switzerland, 2021; Volume 1253, pp. 29–35.

9. Cochet, H.; Guidetti, M. Contribution of Developmental Psychology to the Study of Social Interactions: Some Factors in Play, Joint Attention and Joint Action and Implications for Robotics. Front. Psychol. 2018, 9, 1992.

10. Strabala, K.; Lee, M.K.; Dragan, A.; Forlizzi, J.; Srinivasa, S.S.; Cakmak, M.; Micelli, V. Toward seamless human-robot handovers. JHRI 2013, 2, 112–132.

11. Bdiwi, M. Integrated sensors system for human safety during cooperating with industrial robots for handing-over and assembling tasks. Procedia CIRP 2014, 23, 65–70.

12. Costa, D.; Duarte, C. Alternative modalities for visually impaired users to control smart TVs. Multimed. Tools Appl. 2020, 79, 31931–31955.

13. Branham, S.M.; Roy, A.R.M. Reading Between the Guidelines: How Commercial Voice Assistant Guidelines Hinder Accessibility for Blind Users. In Proceedings of the 21st International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’19), Pittsburgh, PA, USA, 29 October–1 November 2019.

14. Bonani, M.; Oliveira, R.; Correia, F.; Rodrigues, A.; Guerreiro, T.; Paiva, A. What My Eyes Can’t See, A Robot Can Show Me: Exploring the Collaboration Between Blind People and Robots. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’18), Galway, Ireland, 22–24 October 2018.

15. Angleraud, A.; Sefat, A.M.; Netzev, M.; Pieters, R. Coordinating Shared Tasks in Human-Robot Collaboration by Commands. Front. Robot. AI 2021, 8, 734548.

16. Choi, Y.S.; Chen, T.; Jain, A.; Anderson, C.; Glass, J.D.; Kemp, C.C. Hand it over or set it down: A user study of object delivery with an assistive mobile manipulator. In Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009.

17. Leporini, B.; Buzzi, M. Home automation for an independent living: Investigating the needs of visually impaired people. In Proceedings of the 15th International Web for All Conference (W4A ’18), New York, NY, USA, 23–25 April 2018.

18. Walde, P.; Langer, D.; Legler, F.; Goy, A.; Dittrich, F.; Bullinger, A.C. Interaction Strategies for Handing Over Objects to Blind People. In Proceedings of the Human Factors and Ergonomics Society (HFES) Europe Chapter Annual Meeting, Nantes, France, 2–4 October 2019.

19. Oumard, C.; Kreimeier, J.; Götzelmann, T. Pardon? An Overview of the Current State and Requirements of Voice User Interfaces for Blind and Visually Impaired Users. In Proceedings of the Computers Helping People with Special Needs: 18th International Conference (ICCHP), Lecco, Italy, 11–15 July 2022.

20. Oliveira, O.F.; Freire, A.P.; Winckler De Bettio, R. Interactive smart home technologies for users with visual disabilities: A systematic mapping of the literature. Int. J. Comput. Appl. 2021, 67, 324–339.

21. Azenkot, S.; Lee, N.B. Exploring the use of speech input by blind people on mobile devices. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, New York, NY, USA, 21–23 October 2013.

22. Onnasch, L.; Roesler, E. A Taxonomy to Structure and Analyze Human–Robot Interaction. Int. J. Soc. Robot. 2021, 13, 833–849.

23. Jee, E.S.; Jeong, Y.J.; Kim, C.H.; Kobayashi, H. Sound design for emotion and intention expression of socially interactive robots. Intell. Serv. Robot. 2010, 3, 199–206.

24. Majumdar, I.; Banerjee, B.; Preeth, M.T.; Hota, M.K. Design of weather monitoring system and smart home automation. In Proceedings of the IEEE International Conference on System, Computation, Automation, and Networking (ICSCA), Pondicherry, India, 6–7 July 2018.

25. Ramlee, R.A.; Aziz, K.A.A.; Leong, M.H.; Othman, M.A.; Sulaiman, H.A. Wireless controlled methods via voice and internet (e-mail) for home automation system. IJET 2013, 5, 3580–3587.

26. Raz, N.; Striem, E.; Pundak, G.; Orlov, T.; Zohary, E. Superior serial memory in the blind: A case of cognitive compensatory adjustment. Curr. Biol. 2007, 17, 1129–1133.

27. Newell, A.F.; Gregor, P.; Morgan, M.; Pullin, G.; Macaulay, C. User-sensitive inclusive design. Univ. Access Inf. Soc. 2011, 10, 235–243.

28. Deutsches Institut für Normung. DIN EN ISO 9241-210 Ergonomie der Mensch-System-Interaktion—Teil 210: Menschzentrierte Gestaltung Interaktiver Systeme; Beuth Verlag GmbH: Berlin, Germany, 2019.

29. Harris, M.A.; Weistroffer, H.R. A new look at the relationship between user involvement in systems development and system success. CAIS 2009, 24, 739–756.

30. Kujala, S. User involvement: A review of the benefits and challenges. Behav. Inf. Technol. 2003, 22, 1–16.

31. Ladner, R.E. Design for user empowerment. Interactions 2015, 22, 24–29.

32. Philips, G.R.; Clark, C.; Wallace, J.; Coopmans, C.; Pantic, Z.; Bodine, C. User-centred design, evaluation, and refinement of a wireless power wheelchair charging system. Disabil. Rehabil. Assist. Technol. 2020, 15, 1–13.

33. Vacher, M.; Lecouteux, B.; Chahuara, P.; Portet, F.; Meillon, B.; Bonnefond, N. The Sweet-Home speech and multimodal corpus for home automation interaction. In Proceedings of the 9th Edition of the Language Resources and Evaluation Conference (LREC), Reykjavik, Iceland, 26–31 May 2014; European Language Resources Association: Paris, France; pp. 4499–4506.

34. Miao, M.; Pham, H.A.; Friebe, J.; Weber, G. Contrasting usability evaluation methods with blind users. UAIS 2016, 15, 63–76.

35. Universal Robots. Die Zukunft ist Kollaborierend; 2016. Available online: https://www.universal-robots.com/de/download-center/#/cb-series/ur10 (accessed on 8 December 2021).

36. Hone, K.S.; Graham, R. Towards a tool for the Subjective Assessment of Speech System Interfaces (SASSI). Nat. Lang. Eng. 2000, 6, 287–303.

37. Kocaballi, A.B.; Laranjo, L.; Coiera, E. Understanding and Measuring User Experience in Conversational Interfaces. Interact. Comput. 2019, 31, 192–207.

38. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2019. Available online: https://www.R-project.org/ (accessed on 16 December 2019).

| Step | Involved Groups and Sample Size | Gender Ratio (Female:Male) | Mean Age (in Years) | Group Comparison Regarding Age |
|---|---|---|---|---|
| Interviews | BVI (14) | 6:8 | ~45 | - |
| Acting Study | blinded (5), sighted (5) | 5:5 | ~35 | - |
| User Test | BVI (5) | 2:3 | ~58 | - |
| Workshops | sighted (30) | -¹ | -¹ | - |
| Expert Evaluation | blinded (5) | 1:4 | ~35 | - |
| Evaluation Study | BVI (20), blinded (20), sighted (20) | 37:23 | ~42 | BVI: M = 40.3 (SD = 15.3); blinded: M = 44.6 (SD = 19.9); sighted: M = 42.2 (SD = 19.8) |

| Design Requirement and General Insights | UCD Step |
|---|---|
| Dialog content | |
| Placing on table: feedback on orientation of objects on the table using clock directions or verbal front/side directions | U, (I) |
| Reassurance about safe and stable grasp before hand opening | I |
| Announcing if object is potentially hazardous | I |
| Communicating specific features of objects (e.g., sharp, sticky, wet) | I |
| Communicating the position of the object relative to the receiver’s hand | I |
| Standardization of robotic dialog to develop a routine | I |
| Introduction with guidance on desired speech input | EE |
| If identical robotic actions are performed during different phases of the handover (e.g., ‘opening hand’ to take up the object and to release it), a one-to-one mapping of speech outputs to the specific phase through literal variations is necessary (e.g., “I am opening my hand” during object grasping vs. “I am letting go now” during handover) | EE |
| Continuous verbal communication about current robotic action | A |
| Selectable option: placing on the table instead of midair handover | I |
| Use of communication to support both passive and active phases during robotic handovers (for details see Section 3.2) | I, A, U, W, EE, EV |
| Acoustic confirmation of command processing | U |
| Information regarding system failures always speech-based | U |
| Information on orientation of objects in robot hand | I, (A), (U) |
| Confirmation loop for releasing the object during handover | I, (EE) |
| Use of a tone to signal input confirmation | U |
| Information on robot intent to hand over | I |
| Social manners and etiquette | |
| Task-oriented speech outputs, no chattering | U |
| Short speech outputs | U |
| No overuse of “please” in robotic outputs | U |
| Kind and nice | U |
| Commanding tone for input confirmation | U |
| Formal speech (age-independent), no colloquial utterances | U |
| Possibility to thank the robot and robotic reaction to gratitude by users | U |
| Aesthetics/anthropomorphism | |
| Slight preference for female voice | U |
| Introduction of the robot by name | U |
| Reaction to calling the name of the robot | EE |
| Desire to use simple but complete and grammatically correct speech inputs at first contact | U |
| Natural communication that feels ‘warm’ | I |
| Supporting passive user phases | |
| Possibility to actively retrieve status information during passive times | I, (U) |
| If applicable: use of artificial sounds generated by the VUI to compensate for quiet robot mechanics | EV |
| Use of sounds instead of voice outputs to reduce output length | U |
| Short commands | |
| Possibility to interrupt robot speech at all times (interrupt events with prioritization) | U |
| Emergency call | I |
| Individualization | |
| Speaker voice | U |
| Amount of speech outputs (novice/expert) | U, (W), (EE) |
| Replacing unneeded voice outputs during all phases with sounds for experienced users | W |
| Speed of speaker voice (BVI prefer faster output) | U |
| Individualizing name of robot | U |
| Blinded users: more communication to ensure situation awareness | I |
| Acoustic guidance (by sounds) for user hand positioning during midair handovers | EV |
| Speech recognition and speech output | |
| Short time latencies between two speech outputs to prevent unintended interruptions by users | EE |
| Acoustic signal of the VUI to show readiness for voice input | EV |
| Good natural language recognition | EV |

Abbreviations: I = interviews, A = acting study, U = user test, W = workshops, EE = expert evaluation, EV = evaluation study.
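Several requirements above (clock-face directions for objects and communicating the object position relative to the receiver’s hand) presuppose converting a measured object offset into a spoken direction. The following Python sketch shows one way to do this, assuming a hand-centred frame with x pointing to the user’s right and y pointing straight ahead, and 12 o’clock meaning straight ahead. The frame convention and the function names are hypothetical; how the prototype derived positions is not described here.

```python
import math


def clock_hour(dx_right: float, dy_forward: float) -> int:
    """Clock-face hour (1-12) of an object relative to the receiver's hand.

    Assumed frame: origin at the hand, x to the user's right, y straight ahead.
    12 o'clock is straight ahead and hours run clockwise as seen from above.
    Ties between two hours follow Python's round-half-to-even rule.
    """
    if dx_right == 0 and dy_forward == 0:
        raise ValueError("object is at the hand position")
    # atan2(x, y) measures the angle clockwise from "straight ahead".
    angle = math.degrees(math.atan2(dx_right, dy_forward)) % 360
    hour = round(angle / 30) % 12  # 30 degrees per clock hour
    return 12 if hour == 0 else hour


def position_phrase(dx_right: float, dy_forward: float) -> str:
    """Speech output naming the clock-face direction of the object."""
    return f"The object is at the {clock_hour(dx_right, dy_forward)} o'clock position."


if __name__ == "__main__":
    print(position_phrase(-0.1, 0.0))  # object 10 cm to the left of the hand
```

A front/side phrasing (“in front, slightly left”) could be derived from the same two offsets. The clock face gives finer resolution, which matters most when the user cannot see the object.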

| Part | Communication |
|---|---|
| 1 | Welcoming/start of interaction, including introduction by name |
| 2 | Obtaining order information |
| 3 | Passive status information while searching for and grasping the object |
| 4 | Approaching the human while keeping a distance |
| 5 | Confirmation loop for intention to hand over |
| 6 | Actual approach towards the user’s hand, including an expression of required user actions |
| 7 | Information regarding orientation and features of the handover object and an expression of required user actions |
| 8 | Confirmation loop for safe grasp during handover |
| 9 | Final announcement of forthcoming hand opening |
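Parts 5 to 9 act as a gate: the robot neither approaches the hand nor opens it without an explicit user confirmation, and it announces the release before opening. The Python sketch below illustrates this for a midair handover of a cup, with prompts taken from the example dialog in Appendix C. It assumes hypothetical `speak`/`listen` callbacks standing in for speech output and speech recognition, an assumed set of accepted answers, and an assumed limit of three attempts.

```python
from typing import Callable

# Answers accepted as explicit confirmation (assumed; the prototype's grammar may differ).
CONFIRM_WORDS = {"go", "okay, go", "okay go", "yes"}


def await_confirmation(prompt: str, speak: Callable[[str], None],
                       listen: Callable[[], str], max_attempts: int = 3) -> bool:
    """Ask for explicit confirmation; return True only on an accepted answer."""
    speak(f"Confirmation required. {prompt}")
    for _ in range(max_attempts):
        answer = listen().strip().lower().rstrip(".!")
        if answer in CONFIRM_WORDS:
            return True
        speak("Say 'Go' when you are ready.")
    return False


def midair_handover(speak: Callable[[str], None], listen: Callable[[], str]) -> str:
    """Parts 5-9 of the dialog structure for a midair handover of a cup."""
    # Part 5: confirmation loop for the intention to hand over.
    if not await_confirmation("Say 'Go' as soon as you're ready to go.", speak, listen):
        return "waiting at a distance"
    # Parts 6 and 7: approach the hand, describe the orientation, state user actions.
    speak("Please hold out your hand to me so that I can navigate towards you.")
    speak("I hold the cup directly in front of your hand with the handle first.")
    # Part 8: confirmation loop for a safe grasp; never release without it.
    if not await_confirmation("Say 'Go' if I may now open my hand and let go of the cup.",
                              speak, listen):
        return "holding object"
    # Part 9: announce the release; the hand opens only after this sentence.
    speak("I'm letting go now.")
    return "released"


if __name__ == "__main__":
    answers = iter(["Go.", "Okay, go."])
    print(midair_handover(print, lambda: next(answers)))
```

Returning a state instead of raising on a missing confirmation keeps the safe default explicit: without a “Go”, the robot keeps holding the object.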

| SASSI Subscale | F | p-Value | ηp² |
|---|---|---|---|
| System Response Accuracy | 2.14 | 0.149 | 0.036 |
| Likeability | 0.84 | 0.362 | 0.014 |
| Annoyance | 5.35 | 0.024 | 0.084 |
| Habitability | 2.34 | 0.132 | 0.039 |
| Speed | 3.86 | 0.054 | 0.062 |
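Partial eta squared can be recovered from an F statistic and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error), which offers a quick consistency check of the table. The degrees of freedom are not reported in this table. The sketch assumes df = (1, 58), which is an inference and not a reported value; it is, however, a pair that reproduces all five effect sizes to three decimals.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and reported partial eta squared from the SASSI table above.
REPORTED = {
    "System Response Accuracy": (2.14, 0.036),
    "Likeability": (0.84, 0.014),
    "Annoyance": (5.35, 0.084),
    "Habitability": (2.34, 0.039),
    "Speed": (3.86, 0.062),
}

if __name__ == "__main__":
    for scale, (f, eta) in REPORTED.items():
        est = partial_eta_squared(f, df_effect=1, df_error=58)  # assumed df = (1, 58)
        print(f"{scale}: recomputed {est:.3f}, reported {eta:.3f}")
```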


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Langer, D.; Legler, F.; Kotsch, P.; Dettmann, A.; Bullinger, A.C. I Let Go Now! Towards a Voice-User Interface for Handovers between Robots and Users with Full and Impaired Sight. Robotics 2022, 11, 112. https://doi.org/10.3390/robotics11050112
