Sim-to-Real Deep Reinforcement Learning for Safe End-to-End Planning of Aerial Robots

Abstract

1. Introduction

Contributions

- A novel DRL simulation framework is proposed for training an end-to-end planner for a quadrotor flight, including a faster training strategy using non-dynamic state updates and highly randomized simulation environments.

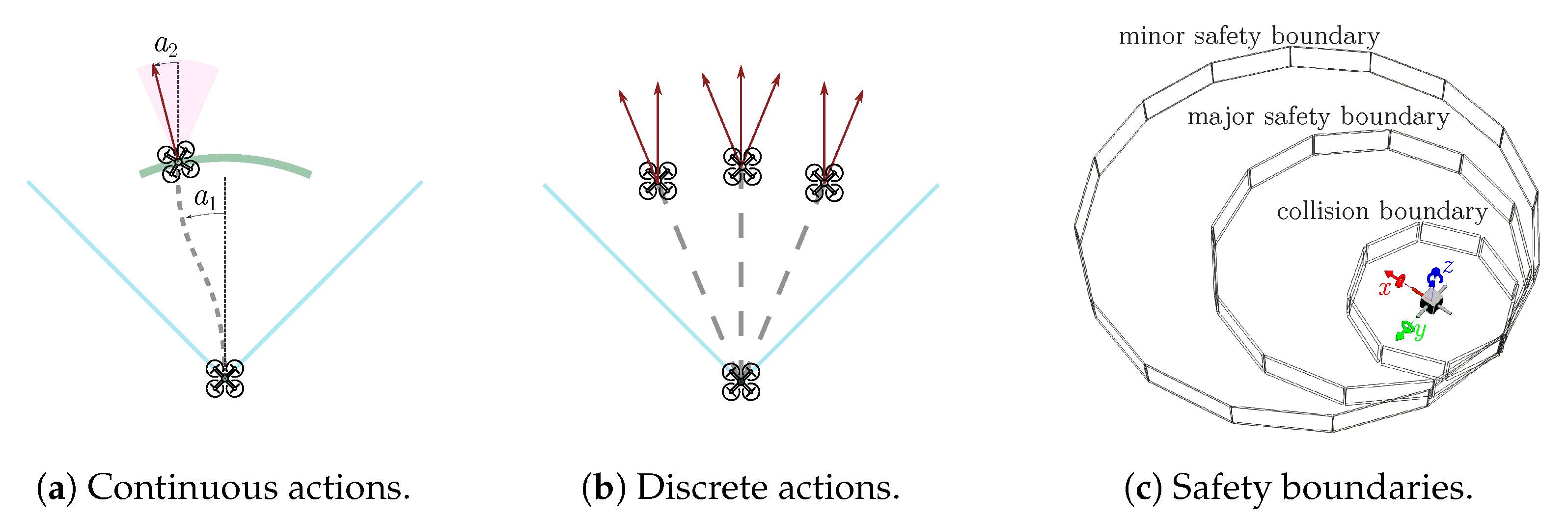

- The impact of continuous/discrete actions and proposed safety boundaries in RL training are investigated.

- We open-source the Webots-based DRL framework, including all training and evaluation scripts (the code, trained models, and simulation environment will be available at https://github.com/open-airlab/gym-depth-planning, accessed on 1 July 2022).

- The method is evaluated with extensive experiments in Webots-based simulation environments and multiple real-world scenarios, transferring the network from simulation to real without further training.

2. Related Work

3. End-to-End Motion Planning of UAV

3.1. Reinforcement Learning Formalization of the Environment

3.2. Randomization of the Environment

| Algorithm 1 Randomized obstacle environment. |
|---|
| if then |
| end if |
| for each obstacle do |
| if is cylinder then |
| end if |
| if is box then |
| end if |
| if is sphere then |
| end if |
| end for |
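The per-obstacle loop of Algorithm 1 can be illustrated with a minimal sketch. Only the control flow (a loop over obstacles with a branch per shape: cylinder, box, sphere) is taken from the listing above; the initial conditional is omitted because its content is not recoverable here. Every name (`Obstacle`, `sample_obstacle`, `randomize_environment`) and every numeric range below is a hypothetical placeholder, not a value from the paper.

```python
import random
from dataclasses import dataclass

SHAPES = ("cylinder", "box", "sphere")


@dataclass
class Obstacle:
    shape: str
    position: tuple  # (x, y, z), hypothetical units: meters
    size: dict       # shape-specific dimensions


def sample_obstacle(rng, x_range=(0.0, 30.0), y_range=(-5.0, 5.0)):
    """Draw one obstacle with a random shape, pose, and size.

    All ranges are illustrative assumptions.
    """
    shape = rng.choice(SHAPES)
    position = (rng.uniform(*x_range), rng.uniform(*y_range), rng.uniform(0.5, 3.0))
    if shape == "cylinder":
        size = {"radius": rng.uniform(0.2, 1.0), "height": rng.uniform(2.0, 4.0)}
    elif shape == "box":
        size = {
            "width": rng.uniform(0.3, 2.0),
            "depth": rng.uniform(0.3, 2.0),
            "height": rng.uniform(0.3, 2.0),
        }
    else:  # sphere
        size = {"radius": rng.uniform(0.3, 1.0)}
    return Obstacle(shape, position, size)


def randomize_environment(seed, n_obstacles=10):
    """Return a reproducible list of randomized obstacles for one episode."""
    rng = random.Random(seed)
    return [sample_obstacle(rng) for _ in range(n_obstacles)]


if __name__ == "__main__":
    for obstacle in randomize_environment(seed=0, n_obstacles=3):
        print(obstacle)
```

Seeding a dedicated `random.Random` instance per episode keeps each generated layout reproducible, which helps when a failed episode needs to be replayed.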

3.3. Deep Reinforcement Learning: Actor and Critic Network Architecture

4. Experiments and Results

4.1. Simulation Setup

4.2. Training in Simulation

4.3. Simulation Results

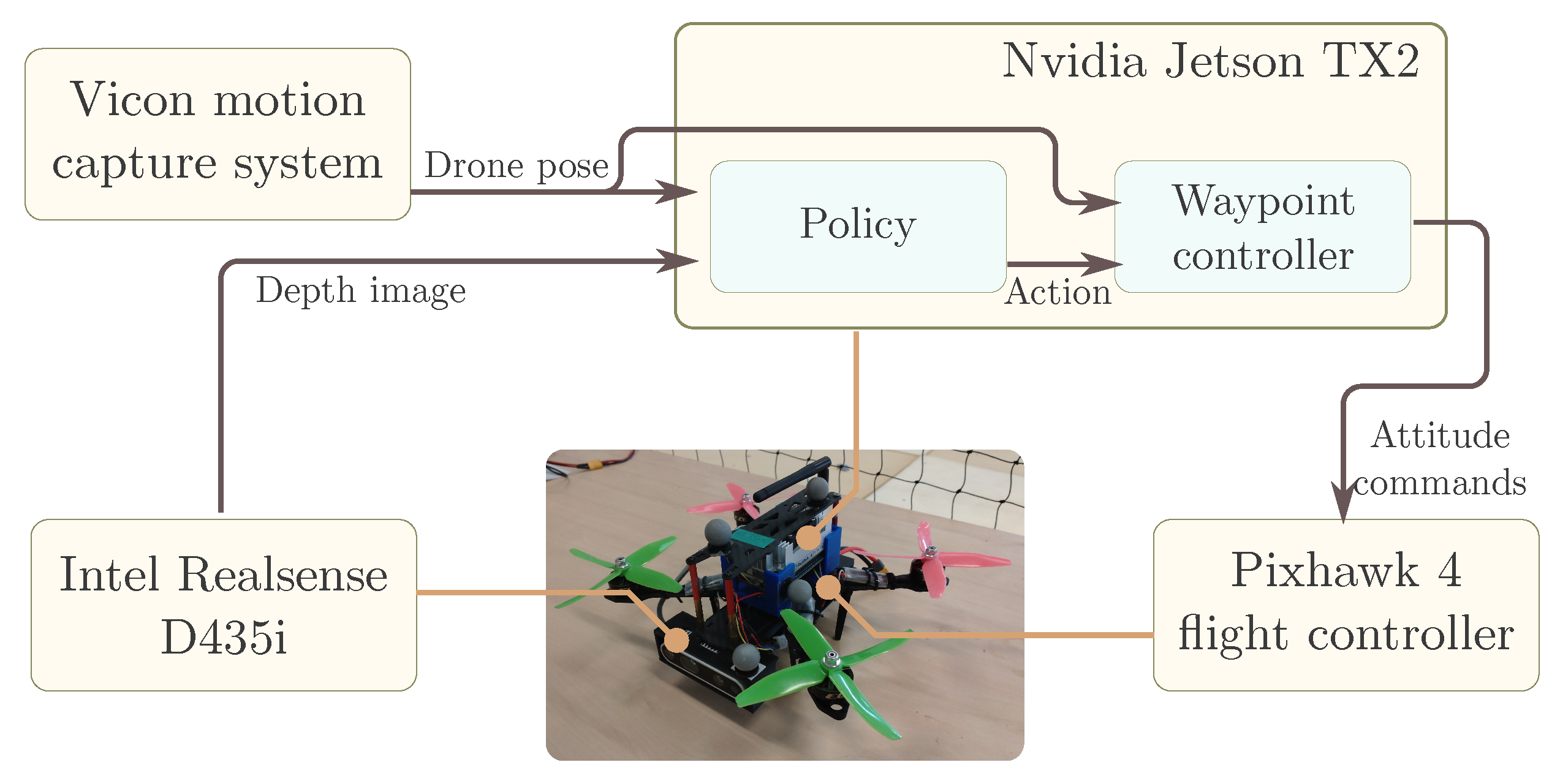

4.4. Real-Time Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DRL | deep reinforcement learning |
| UAV | unmanned aerial vehicle |
| PPO | proximal policy optimization |
| FOV | field of view |
| PID | proportional-integral-derivative |
| MDP | Markov decision process |
| SITL | software-in-the-loop |
| ROS | robot operating system |
| SCDP | safe continuous depth planner |
| CDP | continuous depth planner |
| DDP | discrete depth planner |
| APF | artificial potential field |

Appendix A

| Symbol | Description |
|---|---|
| t | discrete timestep |
| | state at timestep t |
| | action at timestep t |
| | reward at timestep t |
| | matrix representing the depth image |
| L | length of the global trajectory |
| | uniform distribution |
| | normal distribution |


| Choice | Corresponding Continuous Action |
|---|---|
| Action 1 | |
| Action 2 | |
| Action 3 | |
| Action 4 | |
| Action 5 | |
| Action 6 | |
| Action 7 | |
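The idea behind this table, a fixed lookup from each of the seven discrete choices to one continuous action, can be sketched as follows. The continuous values did not survive in the table above, so the pairs in `ACTION_TABLE` are hypothetical placeholders, and the interpretation of each pair as (forward step, heading change) is likewise an assumption made only for illustration.

```python
from typing import Tuple

# Hypothetical lookup: entry i is the continuous action for "Action i+1".
# Each pair is an assumed (forward step, heading change); the real values
# are not available in this text.
ACTION_TABLE: Tuple[Tuple[float, float], ...] = (
    (1.0, -0.6),
    (1.0, -0.3),
    (1.0, -0.1),
    (1.0, 0.0),
    (1.0, 0.1),
    (1.0, 0.3),
    (1.0, 0.6),
)


def to_continuous(choice: int) -> Tuple[float, float]:
    """Map a 1-based discrete choice (1..7) to its continuous action."""
    if not 1 <= choice <= len(ACTION_TABLE):
        raise ValueError(f"choice must be in 1..{len(ACTION_TABLE)}, got {choice}")
    return ACTION_TABLE[choice - 1]


if __name__ == "__main__":
    print(to_continuous(4))
```

With a mapping of this kind, a discrete policy and a continuous policy can share the same downstream interface, since both ultimately emit a continuous action.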

| Method | Metric | Route 1 | Route 2 | Route 3 | Route 4 | Route 5 | Route 6 | Overall | Safety Cost |
|---|---|---|---|---|---|---|---|---|---|
| SCDP | distance (m) | 30 | 30 | 30 | 25.8 | 30 | 30 | 29.7 | 0.51 |
| | success rate (%) | 100 | 100 | 100 | 70 | 100 | 90 | 93 | |
| CDP | distance (m) | 30 | 30 | 30 | 19.4 | 30 | 27.4 | 28.0 | 0.52 |
| | success rate (%) | 100 | 100 | 100 | 0 | 100 | 80 | 80 | |
| DDP | distance (m) | 30 | 28.8 | 8.93 | 16.6 | 28.0 | 25.8 | 23.1 | 0.57 |
| | success rate (%) | 100 | 90 | 0 | 0 | 80 | 60 | 55 | |
| APF | distance (m) | 27.5 | 24.7 | 29.0 | 10.7 | 9.7 | 20.7 | 20.4 | 0.87 |
| | success rate (%) | 90 | 10 | 90 | 10 | 0 | 60 | 43 | |
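As a quick arithmetic check on the table above, the overall success rates are consistent with an unweighted mean of the six per-route success rates, rounded to the nearest integer. That aggregation rule is an assumption (the text here does not state how the Overall column is computed); the per-route numbers are copied from the table.

```python
# Per-route success rates (%) copied from the simulation results table.
SUCCESS_RATES = {
    "SCDP": [100, 100, 100, 70, 100, 90],
    "CDP": [100, 100, 100, 0, 100, 80],
    "DDP": [100, 90, 0, 0, 80, 60],
    "APF": [90, 10, 90, 10, 0, 60],
}


def overall(rates):
    """Unweighted mean over routes, rounded to the nearest integer (assumed rule)."""
    return round(sum(rates) / len(rates))


overall_rates = {method: overall(rates) for method, rates in SUCCESS_RATES.items()}

if __name__ == "__main__":
    for method, rate in overall_rates.items():
        print(f"{method}: {rate}%")
```

Under this assumption the sketch yields 93, 80, 55, and 43 for SCDP, CDP, DDP, and APF, matching the Overall column for success rate.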

| Method | Metric | Moderate Scenario | Difficult Scenario |
|---|---|---|---|
| SCDP | success rate | 100% | 80% |
| | collision rate | 0% | 20% |
| | distance (meters) | 8 | 7.4 |
| DDP | success rate | 80% | 0% |
| | collision rate | 20% | 20% |
| | distance (meters) | 7.7 | 7.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ugurlu, H.I.; Pham, X.H.; Kayacan, E. Sim-to-Real Deep Reinforcement Learning for Safe End-to-End Planning of Aerial Robots. Robotics 2022, 11, 109. https://doi.org/10.3390/robotics11050109
