1. Introduction

Robots were originally designed to assist or replace humans by performing repetitive and/or dangerous tasks that humans usually prefer not to do, or are unable to do because of the physical limitations imposed by extreme environments. Examples include narrow, long underground pipes with limited accessibility, anatomical locations in specific minimally invasive surgery procedures, and objects at the bottom of the sea. With continuous developments in mechanics, sensing technology [1], intelligent control and other modern technologies, robots have gained autonomy and become more dexterous. Nowadays, commercial and industrial robots are in widespread use, with lower long-term cost and greater accuracy and reliability, in fields such as manufacturing, assembly, packing, transport, surgery, and earth and space exploration.

There are different types of robots, which can be grouped into several categories depending on their movement, Degrees of Freedom (DoF), and function. Articulated robots are among the most common robots used today. They resemble a human arm, which is why they are also called robotic arms or manipulator arms [2]. In some contexts, a robotic arm may also refer to a part of a more complex robot. A robotic arm can be described as a chain of links that are moved by joints, which are actuated by motors. We start with a brief explanation of these mechanical components of a typical robotic manipulator [3,4].

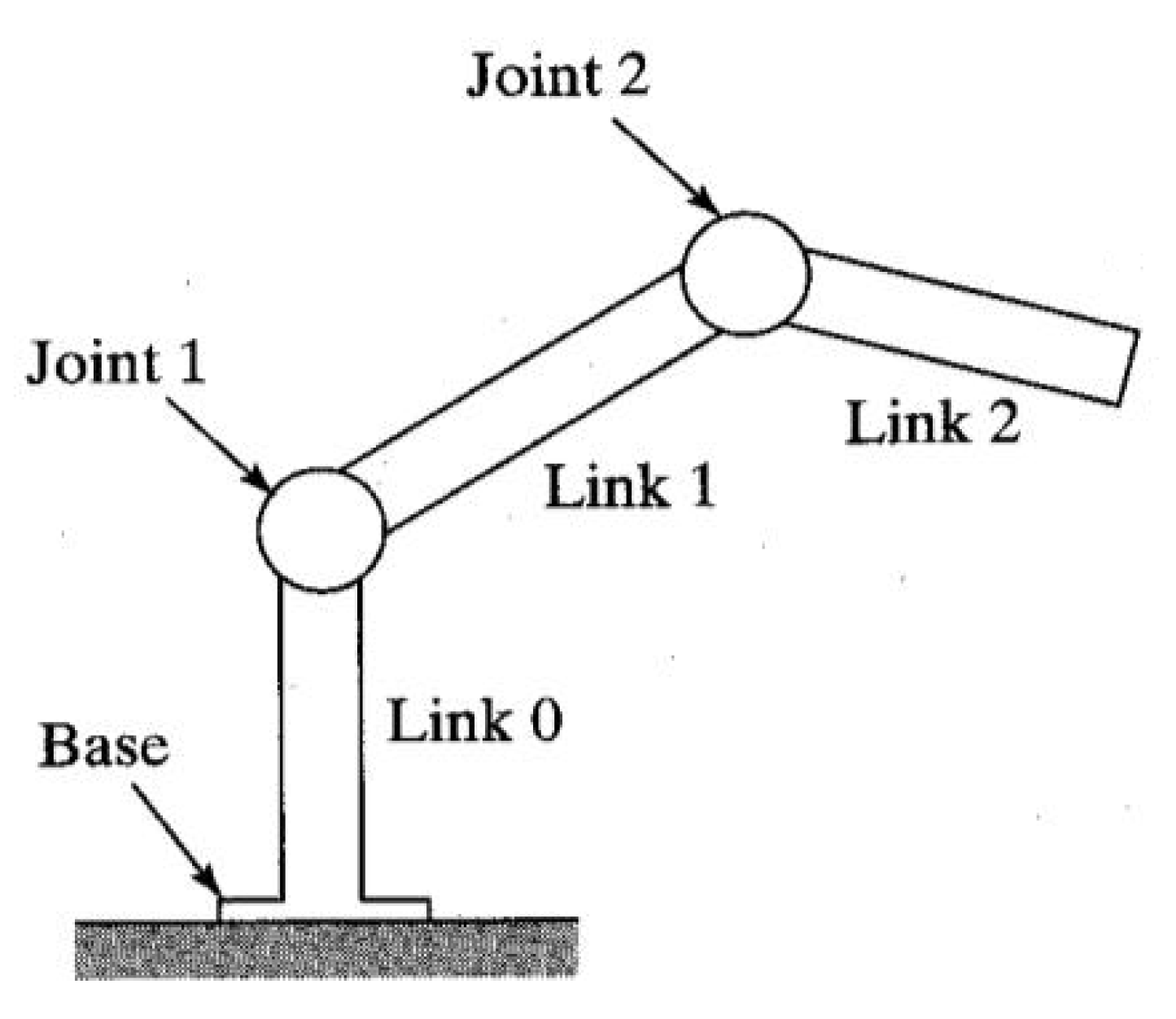

Figure 1 shows the schematic diagram of a simple two-joint robotic arm mounted on a stationary base on the floor.

Joints are similar to joints in the human body, which provide relative motion between two body parts. In robotics, each joint is a different axis and provides an additional DoF of controlled relative motion between adjacent links, as shown in Figure 1. In nearly all cases, the number of degrees of freedom is equal to the number of joints [5].
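To make the link between joint angles and end-effector position concrete, the following sketch computes the forward kinematics of a two-joint arm like the one in Figure 1. It assumes a planar arm with two revolute joints rotating about parallel axes; the link lengths `l1` and `l2` and their default values are illustrative assumptions, not dimensions taken from the figure.

```python
import math
from typing import Tuple


def forward_kinematics(theta1: float, theta2: float,
                       l1: float = 1.0, l2: float = 0.8) -> Tuple[float, float]:
    """Planar two-link arm: return the (x, y) position of the end-effector.

    theta1 is the base joint angle measured from the x-axis; theta2 is the
    elbow angle measured relative to the first link (both in radians).
    l1 and l2 are assumed link lengths.
    """
    # Position of the elbow, i.e. the far end of link 1
    x_elbow = l1 * math.cos(theta1)
    y_elbow = l1 * math.sin(theta1)
    # Link 2 points along the cumulative angle theta1 + theta2
    x = x_elbow + l2 * math.cos(theta1 + theta2)
    y = y_elbow + l2 * math.sin(theta1 + theta2)
    return x, y


# Two joints, hence two degrees of freedom: (theta1, theta2)
print(forward_kinematics(0.0, 0.0))  # arm stretched along x: (1.8, 0.0)
print(forward_kinematics(math.pi / 2, math.pi / 4))
```

Each joint contributes exactly one angle to the input, which mirrors the observation that the number of DoF usually equals the number of joints.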

An end-effector, as the name suggests, is an accessory device or tool that is attached at the end of the chain and actually accomplishes the intended task. The simplest end-effector is the gripper, which can open and close to grip objects, but depending on the application an end-effector can also be designed as a screwdriver, a brush, a water jet, a thruster, or any other mechanical device. An end-effector can also be called a robotic hand.

Links are the rigid or nearly rigid components that connect the base, joints, and end-effector, and bear the load of the chain.

An actuator is a device that converts electrical, hydraulic, or pneumatic energy into robot motion.

Currently, the control sequence of a robotic manipulator is mainly obtained by solving inverse kinematic equations to move or position the end-effector with respect to the fixed frame of reference [6,7]. For fixed robotic tasks, this information is stored in memory by a programmable logic controller [8]. Robots can be controlled in open loop or with exteroceptive feedback. Open-loop control has no external sensors or environment-sensing capability and therefore relies heavily on highly structured, precisely calibrated environments: if a component is slightly shifted, the control system may have to stop and be recalibrated. Under this strategy, the robot arm follows a series of positions stored in memory and moves to them at set times in its programming sequence.

Some more advanced robotic systems employ exteroceptive feedback control, using monitoring sensors, force sensors, or even vision or depth sensors that continually monitor the position and velocity of the robot's axes, end-effector, and associated components. The feedback is then compared with the stored information to update the actuator commands so as to achieve the desired robot behavior. Either auxiliary computers or embedded microprocessors are needed to interface with these sensors and to perform the required computations. Both of these traditional control scenarios depend heavily on hardware-based solutions; for example, conveyor belts and shaker tables are commonly used to physically constrain the situation.

With advances in artificial intelligence, such as deep learning, and recent developments in robotics and mechanics, both the research and industrial communities have been seeking more software-based control solutions that use low-cost sensors and place fewer requirements on the operating environment and calibration. The key is to make minimal but effective hardware choices and to focus on robust algorithms and software. Instead of hard-coding directions to coordinate all the joints, the control policy could be learned and then updated accordingly. Deep Reinforcement Learning (DRL) is among the most promising algorithms for this purpose because it requires no predefined training dataset, which ideally suits robotic manipulation and control tasks, as illustrated in Table 1. A reinforcement learning approach might use input from robotic arm experiments with different sequences of movements, or input from simulation models. Either type of dynamically generated experiential data can be collected and used to train a Deep Neural Network (DNN) by iteratively updating the parameters of a control policy network.

This paper provides a brief, self-contained review of DRL in research on robotic manipulation control. We start with a brief introduction to deep learning, reinforcement learning, and DRL in Section 2. Recent progress in robotic manipulation control with DRL-based methods is then discussed in Section 3. Note that we cannot cover all the relevant algorithms in detail in a short paper; for the algorithms mentioned here, readers should refer to the original papers for detailed information. Finally, we continue the discussion and present other real-world challenges of using DRL in robotic manipulation control in Section 4, and conclude in Section 5.

2. Deep Reinforcement Learning

In this section, we first introduce deep learning and reinforcement learning separately, to better illustrate their combination, DRL.

2.1. Deep Learning

Deep learning is a very popular branch of machine learning, owing to its outstanding performance in a variety of domains: not only classical computer vision tasks, but also many other practical applications, such as natural language processing, social network filtering, machine translation, bioinformatics, material inspection and board games, where deep-learning-based methods have produced results comparable to, and in some cases surpassing, human expert performance. Deep learning has changed the way we process, analyze and manipulate data.

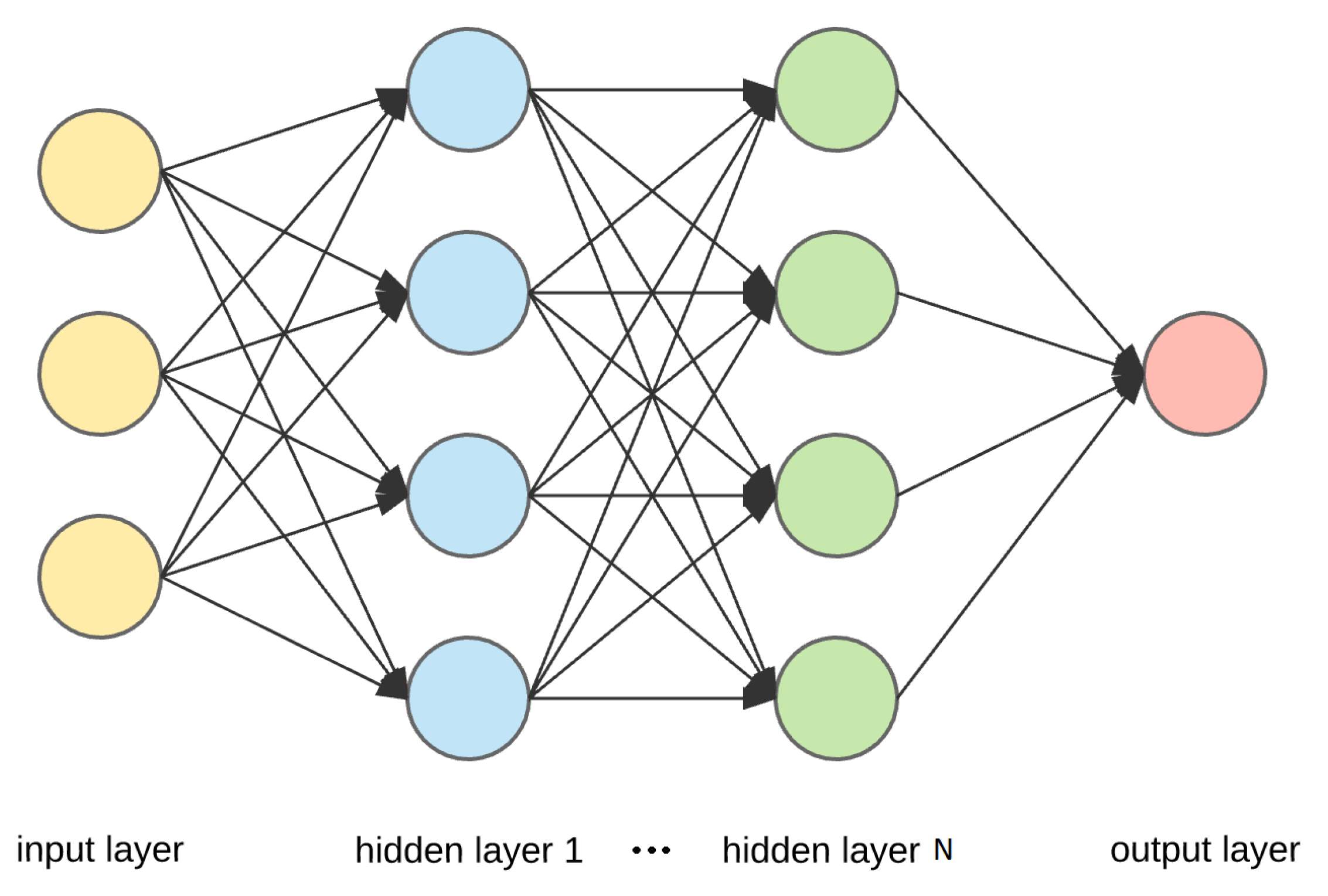

The adjective “deep” in deep learning comes from the use of multiple layers in the network.

Figure 2 shows a simple deep learning architecture with fully connected layers. Table 2 gives a general comparison between deep learning and traditional machine learning. With deep learning, raw data such as images are fed directly into a deep neural network whose multiple layers progressively extract higher-level features, whereas with traditional machine learning, the relevant features of the input data are extracted manually by experts. In addition, deep learning often requires a large amount of data to reach optimal results, and it is therefore also computationally intensive.
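The layered structure of Figure 2 can be sketched in plain Python: each fully connected layer computes weighted sums of all its inputs, and a nonlinearity (here ReLU, one common choice) is applied between layers. The layer sizes and the uniform initialization are arbitrary assumptions for illustration; practical deep learning relies on frameworks with automatic differentiation and GPU support, which this sketch deliberately avoids.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Tuple[Matrix, Vector]:
    """Small uniform random weights scaled by 1/sqrt(n_in); zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Apply the layers in sequence, with ReLU after every layer except the last."""
    for i, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x


rng = random.Random(0)
sizes = [4, 16, 16, 2]  # input, two hidden layers, output (illustrative sizes)
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
output = forward([0.1, -0.2, 0.3, 0.4], layers)
print(output)
```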

Deep models can be interpreted as artificial neural networks with deep structures. The idea of artificial neural networks is not new; it dates back to the 1940s [9]. In the following decades, the research community witnessed many important milestones, including perceptrons [10], the backpropagation algorithm [11,12], the Rectified Linear Unit (ReLU) [13], max-pooling [14], dropout [15], and batch normalization [16]. These continuous algorithmic improvements, together with the emergence of large-scale training data and the rapid development of high-performance parallel computing systems such as Graphics Processing Units (GPUs), have allowed deep learning to prosper [17].

The first great success of deep learning was a convolutional neural network for image classification in 2012 [18]. It iterated over more than a million labeled images to train its parameters through loss computation and backpropagation. Although this technique has improved rapidly since then and is now one of the most popular deep learning structures, it is not well suited to robotic manipulation control, because it is too time-consuming to obtain a large number of labeled images of joint angles to train the model. Some studies have nonetheless used convolutional neural networks to learn the motor torques needed to control a robot from raw RGB video images [19]. However, a more promising and interesting idea is to use DRL, as we discuss hereafter.

2.2. Reinforcement Learning

Reinforcement learning [20] is a subfield of machine learning concerned with how an agent can find, dynamically and autonomously through trial and error, an optimal behavior strategy that maximizes its outcome. This is similar to the intelligence of humans and animals, since intelligence is generally defined as the ability to perceive or infer information and to retain it as knowledge to be applied towards adaptive behaviors in the environment. This autonomous self-teaching methodology is actively studied in many domains, such as game theory, control theory, operations research, information theory, system optimization, recommender systems and statistics [21].

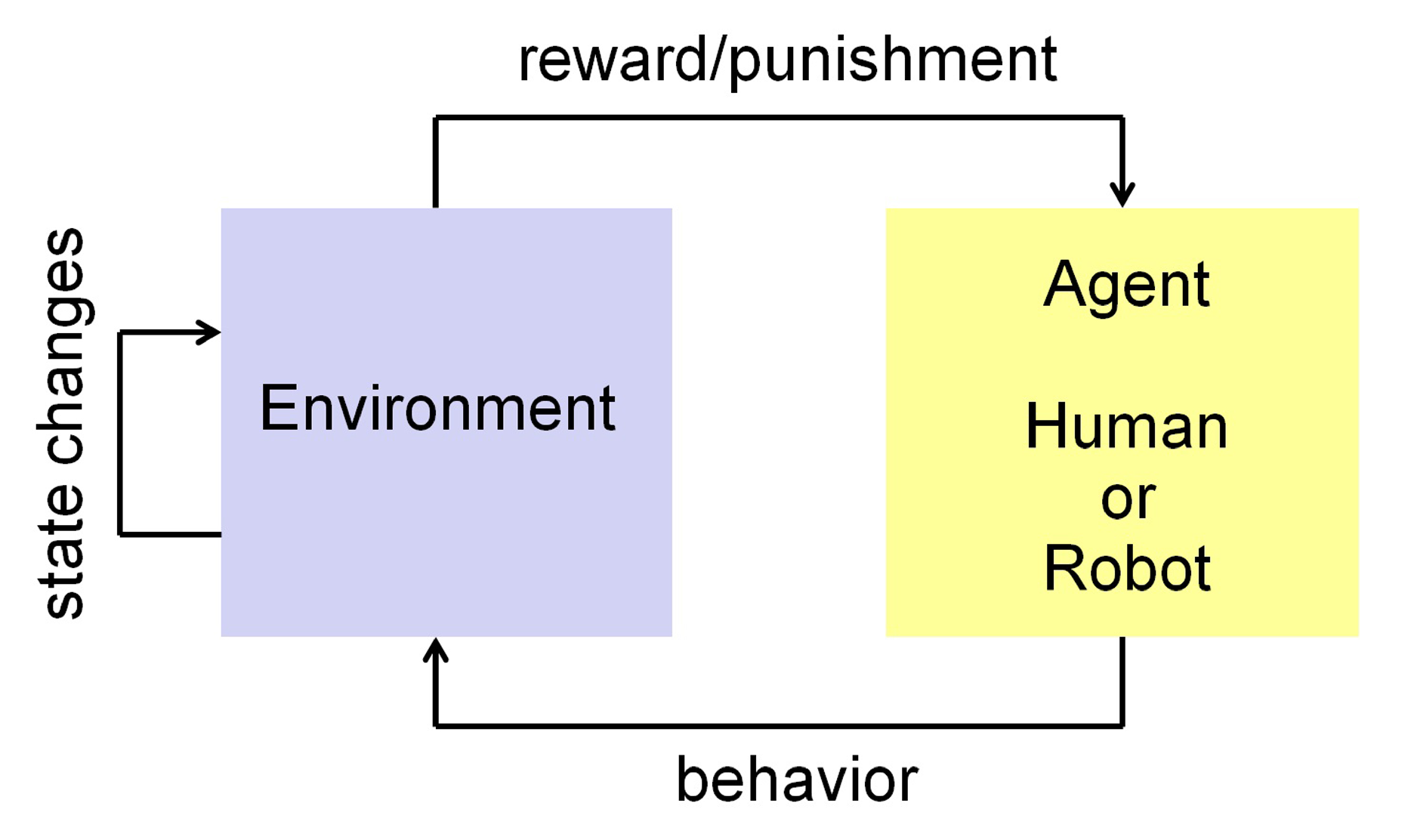

Figure 3 illustrates the universal model of reinforcement learning. The model is biologically plausible, as it is inspired by learning through punishment or reward due to state changes in the environment that are either favorable (reinforcing) or unfavorable (suppressing) to certain behaviors or actions. Natural reinforcement learning is driven by the evolutionary pressure of optimal behavioral adaptation to environmental constraints.

When an agent is in a state, it chooses an action according to its current policy and then receives a reward from the environment for executing that action. The environment transitions to a new state, the agent learns from the reward, chooses a new action, and iterates through this process. To make this easier to understand, one can compare reinforcement learning to playing a video game, in which the character, namely the agent, engages in a series of trials, or actions, to obtain the highest score, which is the reward.
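The interaction cycle described above can be sketched as a simple loop. The `Corridor` environment is a hypothetical toy (the agent must walk right to the end of a short corridor and is rewarded only on arrival), and the policy is purely random; the `reset`/`step` method names loosely follow a convention popularized by OpenAI Gym but are not that library's API.

```python
import random
from typing import Callable, List, Tuple


class Corridor:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end."""

    def __init__(self, length: int = 5):
        self.length = length
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> Tuple[int, float, bool]:
        # action is -1 (left) or +1 (right); the walls clip the motion
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only when the task is accomplished
        return self.state, reward, done


def random_policy(state: int, rng: random.Random) -> int:
    return rng.choice([-1, 1])


def run_episode(env: Corridor, policy: Callable[[int, random.Random], int],
                rng: random.Random, max_steps: int = 100):
    """One pass through the state -> action -> reward -> new state cycle."""
    state = env.reset()
    total_reward = 0.0
    trajectory: List[Tuple[int, int, float, int]] = []
    for _ in range(max_steps):
        action = policy(state, rng)                  # agent acts according to its policy
        next_state, reward, done = env.step(action)  # environment returns reward and new state
        trajectory.append((state, action, reward, next_state))
        total_reward += reward
        state = next_state
        if done:
            break
    return total_reward, trajectory


total, trajectory = run_episode(Corridor(), random_policy, random.Random(1))
print(total, len(trajectory))
```

The recorded `trajectory` of (state, action, reward, next state) tuples is the kind of experiential data a learning algorithm would use to improve the policy.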

Reinforcement learning differs from supervised learning, where a training set of labeled examples is available. In interactive problems such as robot control, it is often impractical to obtain examples of desired behavior that are both correct and representative of all the situations in which the agent has to act. Instead of labels, we get rewards, which are generally weaker signals. Nor is reinforcement learning a kind of unsupervised learning, which is typically about finding structure hidden in collections of unlabeled data. In reinforcement learning, the agent has to learn to behave in the environment based only on sparse and time-delayed rewards, instead of trying to find hidden structure. Therefore, reinforcement learning can be considered a third machine learning paradigm, alongside supervised learning, unsupervised learning and perhaps other future paradigms [22].

2.3. Deep Reinforcement Learning

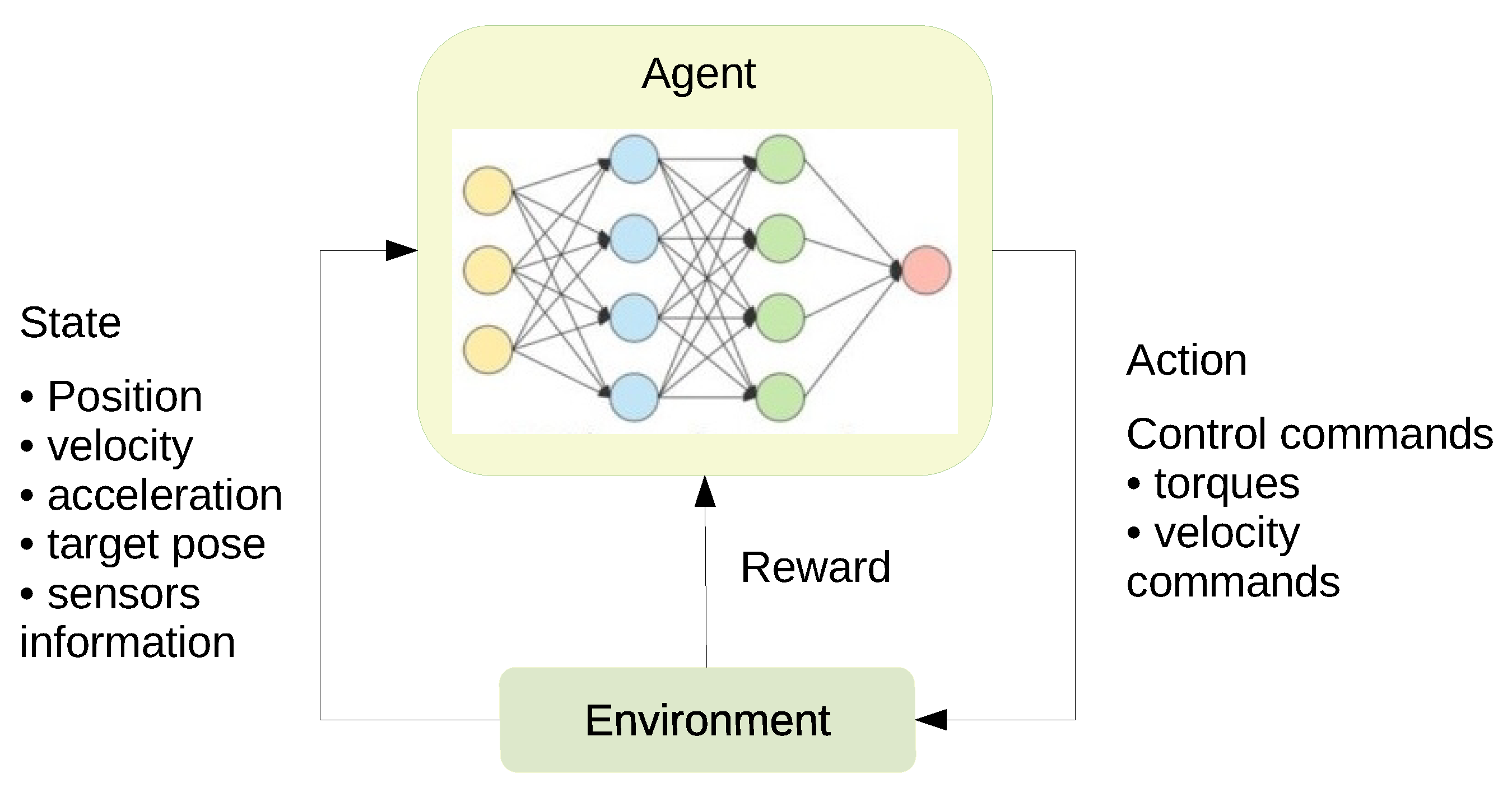

As the name suggests, DRL combines reinforcement learning and deep learning, and can be regarded as a bridge between conventional machine learning and true artificial intelligence, as illustrated in Figure 4. It combines the reinforcement learning technique of giving rewards based on actions with the deep learning idea of using a neural network to learn feature representations. Traditional reinforcement learning is limited to domains with simple state representations, whereas DRL, by using neural networks to represent policies, makes it possible for agents to make decisions from high-dimensional and unstructured input data [23]. In the past few years, research in DRL has been highly active and has made significant progress, along with the rising interest in deep learning.

DRL has attracted a lot of attention, especially due to its well-known achievements in games. Beginning around 2013, DeepMind showed impressive learning results in Atari video games, reaching human-level proficiency without hand-coded features and using unprocessed pixels as input [24,25]; this work can be regarded as the creation of the subfield. Another milestone came in 2016, when AlphaGo [26] became the first program to beat a human professional player at Go, a game from ancient China. This program was improved upon by AlphaZero [27] in 2017, which also proved effective in chess and shogi. In 2019, Pluribus [28] defeated top human professionals in multiplayer poker, and OpenAI Five [29] beat the previous world champions in a Dota 2 demonstration match.

Beyond games, DRL has large potential in other domains, including but not limited to robotics [30], natural language processing [31], computer vision [32], transportation [33], finance [34] and healthcare [35]. Many exciting breakthroughs in this research have been published both by large companies, such as Google Brain, DeepMind and Facebook, and by top academic labs, such as those at Berkeley, Stanford and Carnegie Mellon University, together with independent non-profit research organizations such as OpenAI and other industrially focused companies.

The most commonly used DRL algorithms can be categorized into value-based methods, policy gradient methods and model-based methods. Value-based methods construct a value function from which a policy is defined; they build on the Q-learning algorithm [36], which uses the Bellman equation [37], and on its variant, fitted Q-learning [38,39]. The Deep Q-Network (DQN) algorithm, used with great success in [25], is the representative of this class, followed by various extensions such as double DQN [40] and distributional DQN [41,42]. A combination of these improvements was studied in [43], achieving state-of-the-art performance on the Atari 2600 benchmark in terms of both data efficiency and final performance.
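The Bellman-equation-based update at the core of Q-learning can be sketched in its tabular form, `Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))`. DQN replaces the table with a deep neural network (and adds mechanisms such as experience replay and a target network), which this sketch does not attempt. The toy corridor environment and all hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration.

```python
import random
from collections import defaultdict

ACTIONS = (-1, +1)  # move left / move right


def corridor_step(state, action, length=6):
    """Hypothetical deterministic toy environment: reward 1 on reaching the right end."""
    next_state = min(max(state + action, 0), length - 1)
    done = next_state == length - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, length=6, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q[(state, action)], initialized to 0
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 200:
            # epsilon-greedy action selection, breaking ties at random
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = corridor_step(state, action, length)
            # Bellman target r + gamma * max_a' Q(s', a'); no bootstrapping from a terminal state
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state, steps = next_state, steps + 1
    return q


q = q_learning()
greedy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(5)]
print(greedy)
```

With enough episodes, the greedy policy read off the table should choose +1 (move right) in every non-terminal state.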

However, DQN-based approaches are limited to problems with discrete, low-dimensional action spaces and deterministic policies, whereas policy gradient methods can work with continuous action spaces and can also represent stochastic policies. Policy gradient methods use variants of stochastic gradient ascent with respect to the policy parameters to find a policy, parameterized by a neural network, that maximizes the expected cumulative reward [44]. Like other policy-based methods, policy gradient methods typically require an estimate of the value function of the current policy, and a sample-efficient approach is to use an actor-critic architecture that can work with off-policy data. The Deep Deterministic Policy Gradient (DDPG) algorithm [45,46] is a representative of this type of method. Some researchers have also worked on combining policy gradient methods with Q-learning [47].
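To illustrate how a stochastic policy over a continuous action can be improved by gradient ascent, the sketch below implements REINFORCE, the simplest policy gradient estimator, rather than DDPG. It assumes a one-dimensional Gaussian policy with learnable mean `mu` and fixed standard deviation `sigma`, and a made-up reward that is highest when the action equals `target` (think of a single joint command). The running-average baseline is a standard variance-reduction trick, not part of the methods cited above.

```python
import random


def reinforce_gaussian(target=0.7, sigma=0.2, lr=0.01, iterations=5000, seed=0):
    """REINFORCE with a 1-D Gaussian policy N(mu, sigma^2); returns the learned mean."""
    rng = random.Random(seed)
    mu = 0.0        # policy parameter: mean of the Gaussian over the continuous action
    baseline = 0.0  # running average of rewards, used to reduce gradient variance
    for _ in range(iterations):
        action = rng.gauss(mu, sigma)               # sample from the stochastic policy
        reward = -(action - target) ** 2            # one-step episode: closer is better
        grad_log_pi = (action - mu) / sigma ** 2    # d/d(mu) of log N(action; mu, sigma^2)
        mu += lr * (reward - baseline) * grad_log_pi  # stochastic gradient ascent
        baseline += 0.05 * (reward - baseline)
    return mu


print(reinforce_gaussian())
```

For this reward, the expected update is proportional to `target - mu`, so the returned mean should end up close to 0.7, up to sampling noise.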

Neither value-based nor policy-based methods make use of a model of the environment; they are therefore also called model-free methods, which limits their sample efficiency. In contrast, in model-based methods, a model of the environment is either explicitly given or learned from experience by function approximators [48,49], and is used in conjunction with a planning algorithm. To obtain the advantages of both approaches, many studies integrate model-free and model-based elements [50,51,52], which is among the key areas for the future development of DRL algorithms [53].
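One classic way of combining both elements is the Dyna-Q architecture described in [22], sketched below as an illustration; it is not one of the methods of [50,51,52]. Each real transition is used once for a model-free Q-learning update and is also stored in a learned model, here simply a lookup table suited to a deterministic toy environment; additional planning updates are then drawn from that model, so fewer real interactions are needed. The corridor environment and all hyperparameters are illustrative assumptions.

```python
import random

ACTIONS = (-1, +1)


def corridor_step(state, action, length=6):
    """Hypothetical deterministic toy environment: reward 1 on reaching the right end."""
    next_state = min(max(state + action, 0), length - 1)
    done = next_state == length - 1
    return next_state, (1.0 if done else 0.0), done


def dyna_q(episodes=30, planning_steps=20, alpha=0.5, gamma=0.9,
           epsilon=0.1, length=6, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(length) for a in ACTIONS}
    model = {}  # learned model: (state, action) -> (reward, next_state, done)

    def update(s, a, r, s2, done):
        # One-step Q-learning update, used for both real and simulated experience
        target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 200:
            best = max(q[(state, a)] for a in ACTIONS)
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = corridor_step(state, action, length)  # real experience
            update(state, action, reward, next_state, done)                   # model-free part
            model[(state, action)] = (reward, next_state, done)               # model learning
            for _ in range(planning_steps):                                   # planning part
                (s, a), (r, s2, d) = rng.choice(list(model.items()))
                update(s, a, r, s2, d)
            state, steps = next_state, steps + 1
    return q


q = dyna_q()
print([max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(5)])
```

Compared with plain Q-learning on the same task, the planning loop lets value information propagate along the corridor after far fewer real episodes.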

3. Deep Reinforcement Learning in Robotic Manipulation Control

In this section, recent progress of DRL in robotic manipulation control is discussed. Two of the most important challenges here concern sample efficiency and generalization. The goal of DRL in the context of robotic manipulation control is to train a deep policy neural network, like the one in Figure 2, to find the optimal sequence of commands for accomplishing the task. As illustrated in Figure 5, the input is the current state, which can include the joint angles of the manipulator, the position of the end-effector, and their derivatives, such as velocity and acceleration. The current pose of target objects can also be included in the current state, together with the state of any sensors installed in the environment. The output of the policy network is an action indicating the control commands, such as torques or velocity commands, to be sent to each actuator. When the robotic manipulator accomplishes the task, a positive reward is generated. From these delayed and weak signals, the algorithm is expected to find the most successful control strategy for the robotic manipulation.
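The inputs and outputs described above can be sketched as plain data structures. Every name here is hypothetical: the state fields, the 2 cm success tolerance, and the symmetric torque limit are illustrative assumptions, not values from any particular robot or from the works reviewed here. The reward is sparse: it is 1.0 only when the end-effector is within tolerance of the target.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class ArmState:
    joint_angles: List[float]      # rad, one entry per joint
    joint_velocities: List[float]  # rad/s, derivative information
    end_effector: List[float]      # (x, y, z) position in metres
    target: List[float]            # (x, y, z) position of the target object


def observation(s: ArmState) -> List[float]:
    """Flatten the state into the input vector of the policy network."""
    return s.joint_angles + s.joint_velocities + s.end_effector + s.target


def sparse_reward(s: ArmState, tolerance: float = 0.02) -> float:
    """1.0 only when the task is accomplished, 0.0 otherwise (a delayed, weak signal)."""
    return 1.0 if math.dist(s.end_effector, s.target) < tolerance else 0.0


def clip_torques(raw: List[float], limit: float = 2.0) -> List[float]:
    """Clip the network's output to hypothetical actuator limits before execution."""
    return [max(-limit, min(limit, t)) for t in raw]


state = ArmState([0.1, 0.2], [0.0, 0.0], [0.5, 0.0, 0.3], [0.5, 0.0, 0.31])
print(observation(state), sparse_reward(state))
```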

3.1. Sample Efficiency

In supervised deep learning, a training set of input-output pairs is fed to neural networks to construct an approximation that maps an input to an output [54,55]. This learned target function can then be used to label new examples when a test set is given. The study of sample efficiency for supervised deep learning tries to answer the question of how large a training set is required to learn a good approximation of the target concept [56]. Accordingly, for DRL in robotic manipulation control, the study of sample efficiency asks how much data needs to be collected to build an optimal policy that accomplishes the designed task.

Sample efficiency is considerably more challenging for DRL than for supervised learning, for several reasons [57]. First, the agent does not receive a fixed training set from the environment; instead, the data it observes are determined both by the actions it takes and by the dynamics of the environment. Second, although the agent aims to maximize the long-term reward, it can only observe the immediate reward. Third, there is no clear boundary between the training and test phases: the time the agent spends trying to improve its policy often comes at the expense of exploiting that policy, which is known as the exploration–exploitation trade-off [53].
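A simple and widely used way to handle the exploration–exploitation trade-off is ε-greedy action selection: with probability ε the agent explores a random action, otherwise it exploits the action with the best current estimate. The sketch below applies it to a toy multi-armed bandit with made-up Bernoulli reward probabilities, which stands in for the far more complex robotic setting; `epsilon` and `steps` are illustrative choices.

```python
import random
from typing import List, Tuple


def epsilon_greedy_bandit(probs: List[float], epsilon: float = 0.1, steps: int = 5000,
                          seed: int = 0) -> Tuple[List[int], List[float], float]:
    """Multi-armed bandit with Bernoulli rewards; probs[i] is arm i's success rate."""
    rng = random.Random(seed)
    counts = [0] * len(probs)    # how often each arm was pulled
    values = [0.0] * len(probs)  # running mean reward of each arm
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(probs))                        # explore: random arm
        else:
            arm = max(range(len(probs)), key=lambda i: values[i])  # exploit: best estimate
        reward = 1.0 if rng.random() < probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]        # incremental mean
        total_reward += reward
    return counts, values, total_reward


counts, values, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts, [round(v, 2) for v in values], total)
```

Larger values of `epsilon` make the estimates of all arms more accurate but spend more pulls on inferior arms; smaller values exploit sooner but risk settling on a suboptimal arm.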

Since gathering experience by interacting with the environment is relatively expensive for robots, a number of approaches have been proposed in the literature to achieve sample-efficient learning. For example, in [58], the first demonstration of using DRL on a robot, trajectory optimization techniques and policy search methods with neural networks were applied to achieve reasonably sample-efficient learning. A range of dynamic manipulation behaviors were learned, such as stacking large Lego blocks at a range of locations, threading wooden rings onto a tight-fitting peg, screwing caps onto different bottles, and assembling a toy airplane by inserting the wheels into a slot. Videos and other supplementary materials are available online [59]. This algorithm can achieve millimeter-level precision with dozens of examples, but it requires explicit knowledge of the state of the world at training time to be sample efficient.

In [60], a novel technique called Hindsight Experience Replay (HER) was proposed: each episode is replayed with a different goal from the one the agent was trying to achieve. With this clever strategy for augmenting samples, the policy for the pick-and-place task, learned using only sparse binary rewards, performed well on the physical robot without any fine-tuning. A video presenting the experiments is available online [61]. However, this algorithm relies on special value function approximators, which may not be trivially applicable to every problem. Moreover, the technique does not combine well with reward shaping.
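The goal-relabeling idea behind HER can be sketched as follows. Transitions are goal-conditioned, and each one is stored again with its goal replaced by a position that was actually reached later in the same episode, so that even a failed episode yields transitions with a successful reward. The point-based states, the 0/−1 sparse reward, the tolerance and the `"final"`/`"future"` strategy names are assumptions for this sketch; see [60] for the actual algorithm and its replay strategies.

```python
import math
import random
from typing import List, NamedTuple, Optional, Tuple

Point = Tuple[float, float]


class Transition(NamedTuple):
    state: Point       # the state doubles as the "achieved goal" in this sketch
    action: Point
    next_state: Point
    goal: Point
    reward: float


def reward_fn(achieved: Point, goal: Point, tol: float = 0.05) -> float:
    """Sparse binary reward: 0 if the goal is reached, -1 otherwise."""
    return 0.0 if math.dist(achieved, goal) < tol else -1.0


def her_relabel(episode: List[Transition], strategy: str = "final", k: int = 4,
                rng: Optional[random.Random] = None) -> List[Transition]:
    """Return the original transitions plus copies whose goal is replaced by a
    goal that was actually achieved later in the same episode."""
    rng = rng or random.Random(0)
    out = list(episode)
    for t, tr in enumerate(episode):
        if strategy == "final":
            new_goals = [episode[-1].next_state]  # goal reached at the end of the episode
        else:  # "future": k goals sampled from states reached from step t onwards
            new_goals = [rng.choice(episode[t:]).next_state for _ in range(k)]
        for g in new_goals:
            out.append(tr._replace(goal=g, reward=reward_fn(tr.next_state, g)))
    return out
```

Every relabeled transition can then be added to the replay buffer of an off-policy learner exactly like an original one.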

Other researchers have tried to achieve sample-efficient learning through demonstrations in imitation learning [62,63], where a mentor provides demonstrations that replace the random initialization of the neural network. The method in [64] extends the DDPG algorithm [46] to tasks with sparse rewards, using both demonstrations and actual interactions to fill a replay buffer. Experiments were conducted on four simulated tasks and on a real robot clip insertion problem. A video demonstrating the performance can be viewed online [65]. However, the object location and the explicit states of the joints, such as position and velocity, must be provided to move from simulation to the real world, which limits the method's application to high-dimensional data.
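The idea of filling one replay buffer with both demonstrations and the agent's own interactions can be sketched as below. In this illustrative version, which is not the implementation of [64], demonstration transitions are kept permanently, agent transitions are stored in a bounded first-in-first-out queue, and minibatches are sampled uniformly from both; the class name, `capacity`, and the uniform sampling are assumptions.

```python
import random
from collections import deque
from typing import Any, Iterable, List


class DemoReplayBuffer:
    """Hypothetical replay buffer holding expert demonstrations plus agent experience.

    Demonstrations are never evicted; agent transitions live in a bounded FIFO
    queue, so the oldest ones are discarded first.
    """

    def __init__(self, demos: Iterable[Any], capacity: int = 10000, seed: int = 0):
        self.demos = list(demos)
        self.agent = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, transition: Any) -> None:
        self.agent.append(transition)

    def __len__(self) -> int:
        return len(self.demos) + len(self.agent)

    def sample(self, batch_size: int) -> List[Any]:
        """Uniformly sample (with replacement) from the union of both sources."""
        pool = self.demos + list(self.agent)
        return [self.rng.choice(pool) for _ in range(batch_size)]
```

Rebuilding the pool on every call keeps the sketch short; a practical buffer would index both sources directly, and [64] should be consulted for how demonstrations are actually weighted during sampling.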

Building on the generative adversarial imitation learning of [66], the authors of [67] used Generative Adversarial Networks (GANs) [68] to generate additional training data to address the sample complexity problem, proposing a multi-modal imitation learning framework that can handle unstructured demonstrations of different skills. The framework was evaluated in simulation on several tasks, such as reacher and gripper-pusher. A video of the simulated experiments is available online [69]. Like most GAN-based techniques, it is difficult to train and requires many samples.

3.2. Generalization

Generalization refers to the capacity to use previous knowledge from a source environment to achieve good performance in a target environment. It is widely seen as a necessary step toward artificial intelligence that behaves similarly to humans. Generalization may improve learning of the target task by increasing the initial reward in the target domain, the speed of learning on the target task, and the maximum achievable reward [70].

In [71], Google proposed a method to learn hand–eye coordination for robotic grasping. In the experimental phase, they collected about 800,000 grasp attempts over two months from multiple robots operating simultaneously, and then used these data to train a controller that works across robots. These identical but uncalibrated robots differed in camera placement and in gripper wear and tear. In addition, a second robotic platform with eight robots collected a dataset of over 900,000 grasp attempts, which was used to test transfer between robots. The transfer experiments showed that data from different robots can be combined to learn more reliable and effective grasping. Supplementary results can be found in the video online [72]. In contrast to many prior methods, this approach uses neither simulation data nor an explicit representation; it is trained end to end, directly from image pixels to gripper motion in task space, learning only from this high-dimensional representation. Despite its attractive success, this method still cannot achieve satisfactory accuracy for real applications, and it is very hardware- and data-intensive.

To develop generalization capacities, some researchers have turned to meta learning [73], also known as learning to learn. The goal of meta learning is to train a model on a variety of learning tasks, such that it can solve new learning tasks using only a small number of training samples [74]. In [75], meta learning was combined with the aforementioned imitation learning so as to learn to perform tasks quickly and efficiently in complex unstructured environments. The approach was evaluated on planar reaching and pushing tasks in simulation, and on visual placing tasks on a real robot, where the goal is to learn to place a new object into a new container from a single demonstration. Video results are available online [76]. The proposed meta-imitation learning method allows a robot to acquire new skills from just a single visual demonstration, but its accuracy needs further improvement.
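To illustrate the learning-to-learn idea, the sketch below uses Reptile, a simple first-order meta-learning algorithm swapped in here for brevity; it is not the meta-imitation method of [75]. Each task is a hypothetical one-dimensional regression problem `y = a * x` with its own slope `a` and only five samples. The inner loop adapts to one task with a few gradient steps; the outer loop moves a shared initialization `w0` toward each adapted solution, so that new tasks can later be fitted from fewer steps.

```python
import random
from typing import List, Tuple

Data = List[Tuple[float, float]]


def sample_task(rng: random.Random, n_points: int = 5) -> Data:
    """Hypothetical task family: fit y = a * x, with a task-specific slope a in [2, 4]."""
    a = rng.uniform(2.0, 4.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]  # few samples per task
    return [(x, a * x) for x in xs]


def adapt(w: float, data: Data, lr: float = 0.3, steps: int = 10) -> float:
    """Inner loop: plain gradient descent on the task's mean squared error."""
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def reptile(meta_iterations: int = 2000, meta_lr: float = 0.05, seed: int = 0) -> float:
    """Outer loop (Reptile): nudge the shared initialization toward each adapted solution."""
    rng = random.Random(seed)
    w0 = 0.0
    for _ in range(meta_iterations):
        task = sample_task(rng)
        w_task = adapt(w0, task)
        w0 += meta_lr * (w_task - w0)
    return w0


print(reptile())
```

Because the slopes are drawn uniformly from [2, 4], the learned initialization should settle near 3, from which a single gradient step on a new task already gives a lower error than starting from 0.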

Many other studies tackle further challenges in this domain. For example, whether a control policy is learned directly for a specific task or transferred from previous tasks, another important but understudied question is how well the policy will perform, namely the policy evaluation problem. A behavior policy search algorithm was proposed in [77] to estimate the performance of learned policies more efficiently.
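Estimating a policy's performance from data gathered with a different behavior policy is commonly done with importance sampling, which reweights each observed return by the likelihood ratio of its actions under the two policies. The sketch below shows only this basic ordinary importance sampling estimator, not the behavior policy search of [77]; the tabular policy representation and the one-state toy data generator are assumptions.

```python
import random
from typing import Dict, List, Tuple

Episode = List[Tuple[int, int, float]]  # sequence of (state, action, reward)
Policy = Dict[int, List[float]]         # policy[state][action] = probability


def importance_sampling_estimate(episodes: List[Episode], target: Policy,
                                 behavior: Policy, gamma: float = 1.0) -> float:
    """Ordinary importance sampling estimate of the target policy's expected return,
    computed from episodes that were collected with the behavior policy."""
    total = 0.0
    for episode in episodes:
        weight, ret, discount = 1.0, 0.0, 1.0
        for state, action, reward in episode:
            # Likelihood ratio of this action under the target vs. the behavior policy
            weight *= target[state][action] / behavior[state][action]
            ret += discount * reward
            discount *= gamma
        total += weight * ret
    return total / len(episodes)


def collect_toy_episodes(n: int, behavior: Policy, rewards: List[float],
                         rng: random.Random) -> List[Episode]:
    """Hypothetical toy data: one state, one step per episode, deterministic rewards."""
    episodes = []
    for _ in range(n):
        action = 0 if rng.random() < behavior[0][0] else 1
        episodes.append([(0, action, rewards[action])])
    return episodes


data = collect_toy_episodes(20000, {0: [0.5, 0.5]}, [1.0, 0.0], random.Random(0))
print(importance_sampling_estimate(data, {0: [0.9, 0.1]}, {0: [0.5, 0.5]}))
```

The estimate should be close to 0.9, the true value of the target policy in this toy problem. Its variance depends strongly on the behavior policy that generated the data, which is what makes the choice of behavior policy worth optimizing.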

4. Discussion

Although algorithms for robotic manipulation control using DRL have emerged in large numbers in the past few years, some even accompanied by demonstration videos of an experimental robotic manipulator accomplishing a task with the learned policy, as illustrated above, the challenge of learning robust and versatile manipulation skills for robots with DRL is still far from being resolved satisfactorily for real-world applications.

Currently, robotic manipulation control with DRL may be suited to fault-tolerant tasks, such as picking up and placing objects, where occasional failures will not cause a disaster. It is particularly attractive in situations with enough variation that explicit modeling algorithms do not work. Potential applications include warehouse automation, replacing human pickers for objects of different sizes and shapes; clothes and textile manufacturing, where cloth is inherently difficult to manipulate; and the food preparation industry, where, for example, every chicken nugget looks different, and it will not matter terribly if a single chicken nugget is destroyed.

However, even in these kinds of applications, DRL-based methods are not widely used in real-world robotic manipulation. The reasons are multiple. They include the two concerns discussed in the previous section, sample efficiency and generalization, where more progress is still required: both gathering experience by interacting with the environment and collecting expert demonstrations for imitation learning are expensive procedures, especially when robots are heavy, rigid and brittle, and damaging the robot during exploration would be very costly. Another very important issue is safety guarantees. Unlike in simulation tasks, we need to be very careful that learning algorithms are safe, reliable and predictable in real scenarios, especially if we move to applications that require safe and correct behavior with high confidence, such as surgery or household robots taking care of the elderly or the disabled. There are also other challenges, including but not limited to algorithm explainability, learning speed, and the need for high-performance computing equipment.

5. Conclusions

The scalability of DRL, discussed and illustrated here, makes it well suited to high-dimensional data problems in a variety of domains. In this paper, we have presented a brief review of the potential of DRL for policy learning in robotic manipulation control and discussed the current research and development status of real-world applications. Through the joint development of deep learning and reinforcement learning, with inspiration from other machine learning methods such as imitation learning, GANs, or meta learning, new algorithmic solutions can emerge, and are still needed, to meet the challenges of robotic manipulation control in practical applications.

Author Contributions

Conceptualization, R.L. and B.D.-L.; methodology, R.L. and B.D.-L.; investigation, R.L., F.N., P.Z. and B.D.-L.; resources, R.L., F.N., P.Z. and B.D.-L.; writing—original draft preparation, R.L.; writing—review and editing, F.N., P.Z. and B.D.-L.; supervision, B.D.-L.; project administration, B.D.-L. and M.d.M.; funding acquisition, B.D.-L. and M.d.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research work is part of a project funded by the University of Strasbourg’s Initiative D’EXellence (IDEX).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The support of the CNRS is gratefully acknowledged.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| DoF | Degrees of Freedom |
| DRL | Deep Reinforcement Learning |
| DNN | Deep Neural Network |
| DQN | Deep Q-Network |
| DDPG | Deep Deterministic Policy Gradient |
| HER | Hindsight Experience Replay |
| GANs | Generative Adversarial Networks |

References

- Dresp-Langley, B.; Nageotte, F.; Zanne, P.; Mathelin, M.D. Correlating grip force signals from multiple sensors highlights prehensile control strategies in a complex task-user system. Bioengineering 2020, 7, 143. [Google Scholar] [CrossRef] [PubMed]

- Eranki, V.K.P.; Reddy Gurudu, R. Design and Structural Analysis of a Robotic Arm. Master’s Thesis, Blekinge Institute of Technology, Karlskrona, Sweden, 2016. [Google Scholar]

- Christ, R.D.; Wernli, R.L. The ROV Manual: A User Guide for Remotely Operated Vehicles, 2nd ed.; Butterworth-Heinemann: Oxford, UK, 2013; pp. 503–534. [Google Scholar]

- Marghitu, D.B. Mechanical Engineer’s Handbook; Academic Press: San Diego, CA, USA, 2001; pp. 611–714. [Google Scholar]

- Savatekar, R.D.; Dum, A.A. Design of control system for articulated robot using leap motion sensor. Int. Res. J. Eng. Technol. 2016, 3, 1407–1417. [Google Scholar]

- Wei, H.; Bu, Y.; Zhu, Z. Robotic arm controlling based on a spiking neural circuit and synaptic plasticity. Biomed. Signal Process. Control 2020, 55, 101640. [Google Scholar] [CrossRef]

- Božek, P.; Al Akkad, M.A.; Blištan, P.; Ibrahim, N.I. Navigation control and stability investigation of a mobile robot based on a hexacopter equipped with an integrated manipulator. Int. J. Adv. Robot. Syst. 2017, 14, 1729881417738103. [Google Scholar]

- Safdar, B. Theory of Robotics Arm Control with PLC; Saimaa University of Applied Sciences: South Karelia, Finland, 2015. [Google Scholar]

- Pitts, W.; McCulloch, W.S. How we know universals the perception of auditory and visual forms. Bull. Math. Biophys. 1947, 9, 127–147. [Google Scholar] [CrossRef] [PubMed]

- Rosenblatt, F. Perceptron simulation experiments. Proc. IRE 1960, 48, 301–309. [Google Scholar] [CrossRef]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation; California Univ San Diego La Jolla Inst for Cognitive Science: San Diego, CA, USA, 1985. [Google Scholar]

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551. [Google Scholar] [CrossRef]

- Jarrett, K.; Kavukcuoglu, K.; Ranzato, M.A.; LeCun, Y. What is the best multi-stage architecture for object recognition. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2146–2153. [Google Scholar]

- Ciresan, D.C.; Meier, U.; Masci, J.; Gambardella, L.M.; Schmidhuber, J. Flexible, high performance convolutional neural networks for image classification. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 6–12 July 2011. [Google Scholar]

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580. [Google Scholar]

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167. [Google Scholar]

- Liu, R. Multispectral Images-Based Background Subtraction Using Codebook and Deep Learning Approaches. Ph.D. Thesis, Université Bourgogne Franche-Comté, Belfort, France, 2020. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Levine, S.; Finn, C.; Darrell, T.; Abbeel, P. End-to-end training of deep visuomotor policies. J. Mach. Learn. Res. 2016, 17, 1334–1373. [Google Scholar]

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement learning: A survey. J. Artif. Intell. Res. 1996, 4, 237–285. [Google Scholar] [CrossRef]

- Kober, J.; Bagnell, J.A.; Peters, J. Reinforcement learning in robotics: A survey. Int. J. Robot. Res. 2013, 32, 1238–1274. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Dresp-Langley, B.; Ekseth, O.K.; Fesl, J.; Gohshi, S.; Kurz, M.; Sehring, H.W. Occam’s Razor for Big Data? On detecting quality in large unstructured datasets. Appl. Sci. 2019, 9, 3065. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef] [PubMed]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef]

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. Mastering chess and shogi by self-play with a general reinforcement learning algorithm. arXiv 2017, arXiv:1712.01815. [Google Scholar]

- Brown, N.; Sandholm, T. Superhuman AI for multiplayer poker. Science 2019, 365, 885–890. [Google Scholar] [CrossRef]

- Berner, C.; Brockman, G.; Chan, B.; Cheung, V.; Dębiak, P.; Dennison, C.; Farhi, D.; Fischer, Q.; Hashme, S.; Hesse, C.; et al. Dota 2 with large scale deep reinforcement learning. arXiv 2019, arXiv:1912.06680. [Google Scholar]

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396. [Google Scholar]

- Sharma, A.R.; Kaushik, P. Literature survey of statistical, deep and reinforcement learning in natural language processing. In Proceedings of the International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 5–6 May 2017; pp. 350–354. [Google Scholar]

- Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Young Choi, J. Action-decision networks for visual tracking with deep reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2711–2720. [Google Scholar]

- Farazi, N.P.; Ahamed, T.; Barua, L.; Zou, B. Deep Reinforcement Learning and Transportation Research: A Comprehensive Review. arXiv 2020, arXiv:2010.06187. [Google Scholar]

- Mosavi, A.; Ghamisi, P.; Faghan, Y.; Duan, P. Comprehensive Review of Deep Reinforcement Learning Methods and Applications in Economics. arXiv 2020, arXiv:2004.01509. [Google Scholar]

- Liu, Y.; Logan, B.; Liu, N.; Xu, Z.; Tang, J.; Wang, Y. Deep reinforcement learning for dynamic treatment regimes on medical registry data. In Proceedings of the IEEE International Conference on Healthcare Informatics (ICHI), Park City, UT, USA, 23–26 August 2017; pp. 380–385. [Google Scholar]

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292. [Google Scholar] [CrossRef]

- Bellman, R.E.; Dreyfus, S.E. Applied Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 2015. [Google Scholar]

- Gordon, G.J. Stable fitted reinforcement learning. Adv. Neural Inf. Process. Syst. 1995, 8, 1052–1058. [Google Scholar]

- Riedmiller, M. Neural fitted Q iteration—First experiences with a data efficient neural reinforcement learning method. In Proceedings of the European Conference on Machine Learning, Porto, Portugal, 3–7 October 2005; pp. 317–328. [Google Scholar]

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double Q-learning. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2094–2100. [Google Scholar]

- Bellemare, M.G.; Dabney, W.; Munos, R. A distributional perspective on reinforcement learning. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 449–458. [Google Scholar]

- Dabney, W.; Rowland, M.; Bellemare, M.G.; Munos, R. Distributional reinforcement learning with quantile regression. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Hessel, M.; Modayil, J.; Van Hasselt, H.; Schaul, T.; Ostrovski, G.; Dabney, W.; Horgan, D.; Piot, B.; Azar, M.; Silver, D. Rainbow: Combining improvements in deep reinforcement learning. arXiv 2017, arXiv:1710.02298. [Google Scholar]

- Salimans, T.; Ho, J.; Chen, X.; Sidor, S.; Sutskever, I. Evolution strategies as a scalable alternative to reinforcement learning. arXiv 2017, arXiv:1703.03864. [Google Scholar]

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the 31st International Conference on International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 387–395. [Google Scholar]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971. [Google Scholar]

- O’Donoghue, B.; Munos, R.; Kavukcuoglu, K.; Mnih, V. Combining policy gradient and Q-learning. arXiv 2016, arXiv:1611.01626. [Google Scholar]

- Oh, J.; Guo, X.; Lee, H.; Lewis, R.L.; Singh, S. Action-conditional video prediction using deep networks in Atari games. Adv. Neural Inf. Process. Syst. 2015, 28, 2863–2871. [Google Scholar]

- Nagabandi, A.; Kahn, G.; Fearing, R.S.; Levine, S. Neural network dynamics for model-based deep reinforcement learning with model-free fine-tuning. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7559–7566. [Google Scholar]

- Silver, D.; Van Hasselt, H.; Hessel, M.; Schaul, T.; Guez, A.; Harley, T.; Dulac-Arnold, G.; Reichert, D.; Rabinowitz, N.; Barreto, A.; et al. The predictron: End-to-end learning and planning. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3191–3199. [Google Scholar]

- Tamar, A.; Wu, Y.; Thomas, G.; Levine, S.; Abbeel, P. Value iteration networks. Adv. Neural Inf. Process. Syst. 2016, 29, 2154–2162. [Google Scholar]

- François-Lavet, V.; Bengio, Y.; Precup, D.; Pineau, J. Combined reinforcement learning via abstract representations. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3582–3589. [Google Scholar]

- François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An introduction to deep reinforcement learning. arXiv 2018, arXiv:1811.12560. [Google Scholar]

- Wandeto, J.M.; Dresp-Langley, B. The quantization error in a Self-Organizing Map as a contrast and colour specific indicator of single-pixel change in large random patterns. Neural Netw. 2019, 119, 273–285. [Google Scholar] [CrossRef] [PubMed]

- Dresp-Langley, B.; Wandeto, J.M. Pixel precise unsupervised detection of viral particle proliferation in cellular imaging data. Inform. Med. Unlocked 2020, 20, 100433. [Google Scholar] [CrossRef] [PubMed]

- Anthony, M.; Bartlett, P.L. Neural Network Learning: Theoretical Foundations; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Kakade, S.M. On the Sample Complexity of Reinforcement Learning. Ph.D. Thesis, University of London, London, UK, 2003. [Google Scholar]

- Levine, S.; Wagener, N.; Abbeel, P. Learning contact-rich manipulation skills with guided policy search. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015. [Google Scholar]

- Learning Contact-Rich Manipulation Skills with Guided Policy Search. Available online: http://rll.berkeley.edu/icra2015gps/ (accessed on 22 January 2021).

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. Adv. Neural Inf. Process. Syst. 2017, 30, 5048–5058. [Google Scholar]

- Hindsight Experience Replay. Available online: https://goo.gl/SMrQnI (accessed on 22 January 2021).

- Tai, L.; Zhang, J.; Liu, M.; Burgard, W. A survey of deep network solutions for learning control in robotics: From reinforcement to imitation. arXiv 2016, arXiv:1612.07139. [Google Scholar]

- Bagnell, J.A. An Invitation to Imitation; Technical Report; Robotics Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2015. [Google Scholar]

- Vecerik, M.; Hester, T.; Scholz, J.; Wang, F.; Pietquin, O.; Piot, B.; Heess, N.; Rothörl, T.; Lampe, T.; Riedmiller, M. Leveraging demonstrations for deep reinforcement learning on robotics problems with sparse rewards. arXiv 2017, arXiv:1707.08817. [Google Scholar]

- Leveraging Demonstrations for Deep Reinforcement Learning on Robotics Problems with Sparse Rewards. Available online: https://www.youtube.com/watch?v=TyOooJC_bLY (accessed on 22 January 2021).

- Ho, J.; Ermon, S. Generative adversarial imitation learning. arXiv 2016, arXiv:1606.03476. [Google Scholar]

- Hausman, K.; Chebotar, Y.; Schaal, S.; Sukhatme, G.; Lim, J.J. Multi-modal imitation learning from unstructured demonstrations using generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 1235–1245. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680. [Google Scholar]

- Multi-modal Imitation Learning from Unstructured Demonstrations using Generative Adversarial Nets. Available online: https://sites.google.com/view/nips17intentiongan (accessed on 22 January 2021).

- Spector, B.; Belongie, S. Sample-efficient reinforcement learning through transfer and architectural priors. arXiv 2018, arXiv:1801.02268. [Google Scholar]

- Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436. [Google Scholar] [CrossRef]

- Learning Hand-Eye Coordination for Robotic Grasping. Available online: https://youtu.be/cXaic_k80uM (accessed on 22 January 2021).

- Thrun, S.; Pratt, L. Learning to Learn; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. arXiv 2017, arXiv:1703.03400. [Google Scholar]

- Finn, C.; Yu, T.; Zhang, T.; Abbeel, P.; Levine, S. One-shot visual imitation learning via meta-learning. arXiv 2017, arXiv:1709.04905. [Google Scholar]

- One-Shot Visual Imitation Learning via Meta-Learning. Available online: https://sites.google.com/view/one-shot-imitation (accessed on 22 January 2021).

- Hanna, J.P.; Thomas, P.S.; Stone, P.; Niekum, S. Data-efficient policy evaluation through behavior policy search. arXiv 2017, arXiv:1706.03469. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).