An Architecture for Safe Child–Robot Interactions in Autism Interventions

Abstract

1. Introduction

- The robot can identify possible risks in its interactions with the child during autism interventions that take place in clinical or home settings.

- The robot performs an interaction assessment in real time and before the actual intervention, so that no risks are omitted.

- The use of robots in autism interventions is strengthened by showing whether an interaction between the child and the robot is safe.

- The robot can demonstrate that it is capable of helping children, gaining the trust of specialists and parents so that it is accepted as an assistive tool.

- Better relationships between the child and the robot are established, so that the child benefits as much as possible from the interaction.

- Therapists are supported in creating more personalized interventions tailored to the needs of each child.

2. Background

2.1. Socially Assistive Robots in Autism Interventions

2.2. Safety in Human–Robot Interaction

3. Materials and Methods

3.1. Child–Robot Interaction Taxonomy in Autism Interventions

- Task: the deficits of the child that the interaction aims to address;

- Environment of interaction: the setting in which the interaction could take place;

- Type of interaction: the relationship among the child, robot, and therapist, and its duration;

- Robot appearance: the different types of robot used in an interaction;

- Roles of robot: the potential roles that a robot can take during the interaction;

- Interaction modalities: the means a robot uses to interact with the child;

- Roles of therapist: the actions a therapist may perform during the interaction;

- Interaction stage: the phases that an interaction contains;

- Risks: potential risks that may arise during the interaction with the robot.
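The nine dimensions above map naturally onto a single record type, which is one way a risk-assessment component could receive the description of an interaction. The Python sketch below is illustrative only: the class name `ChildRobotInteraction`, its field names, and every example value are hypothetical placeholders chosen for this example, not the category lists defined by the taxonomy itself.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ChildRobotInteraction:
    """One interaction described along the nine taxonomy dimensions (hypothetical encoding)."""
    task: List[str]                    # deficits of the child the interaction addresses
    environment: str                   # setting, e.g. "clinic" or "home"
    interaction_type: str              # child/robot/therapist relationship and its duration
    robot_appearance: str              # type of robot used
    robot_roles: List[str]             # roles the robot takes during the interaction
    interaction_modalities: List[str]  # means the robot uses to interact with the child
    therapist_roles: List[str]         # actions the therapist may perform
    interaction_stage: str             # current phase of the interaction
    risks: List[str] = field(default_factory=list)  # potential risks identified so far


# All values below are placeholders for illustration.
session = ChildRobotInteraction(
    task=["joint attention"],
    environment="home",
    interaction_type="child-robot, therapist supervising, 15 min",
    robot_appearance="humanoid",
    robot_roles=["playmate"],
    interaction_modalities=["speech", "gestures"],
    therapist_roles=["supervisor"],
    interaction_stage="introduction",
)
session.risks.append("child may collide with the robot")

print(len(fields(ChildRobotInteraction)))  # 9
```

Keeping `risks` as a separately filled list reflects that risks are an outcome of assessing the other eight dimensions rather than an input chosen by the therapist.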

3.1.1. Task

3.1.2. Environment of Interaction

3.1.3. Type of Interaction

3.1.4. Robot Appearance

3.1.5. Roles of Robot

3.1.6. Interaction Modalities

3.1.7. Roles of the Therapist

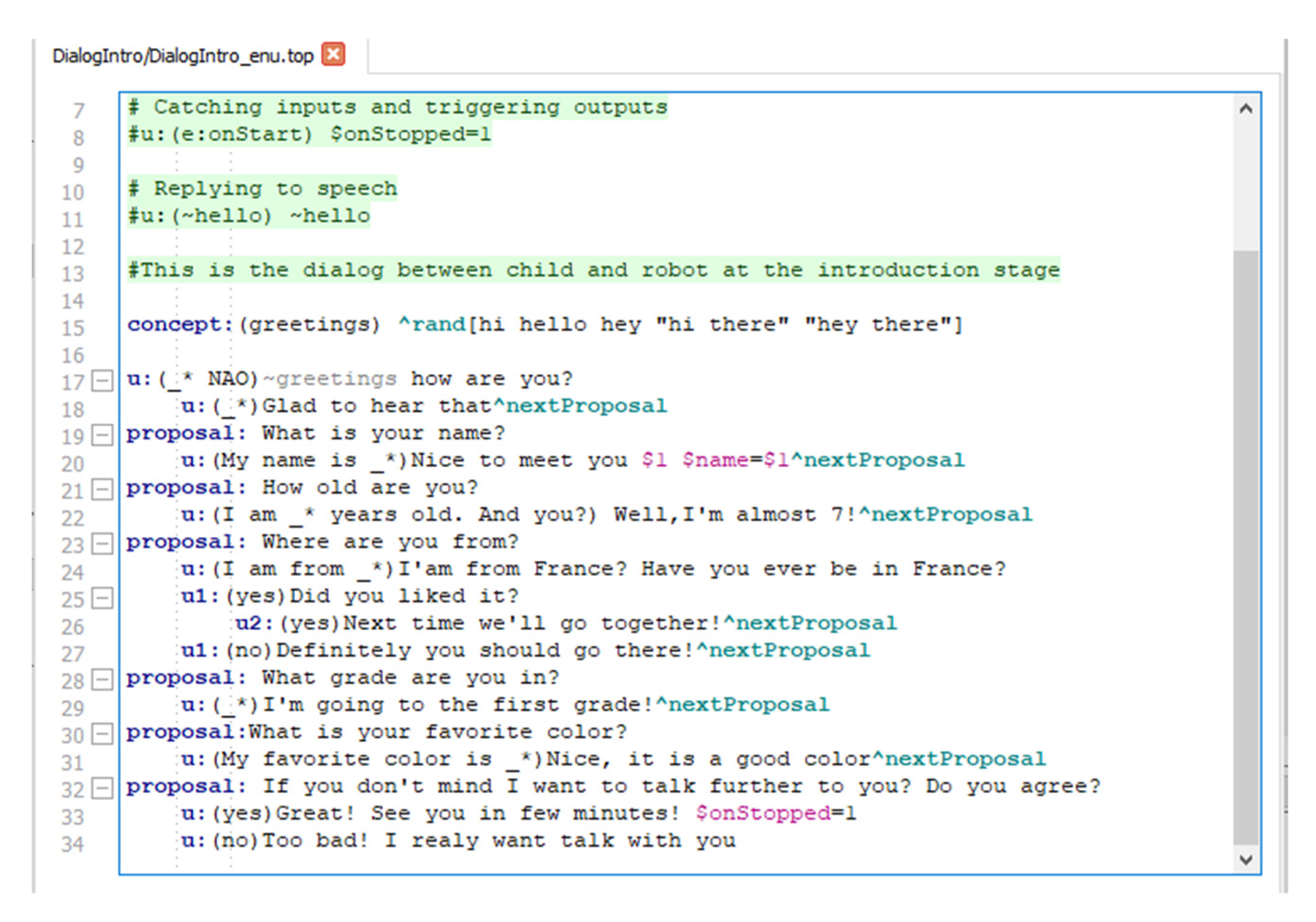

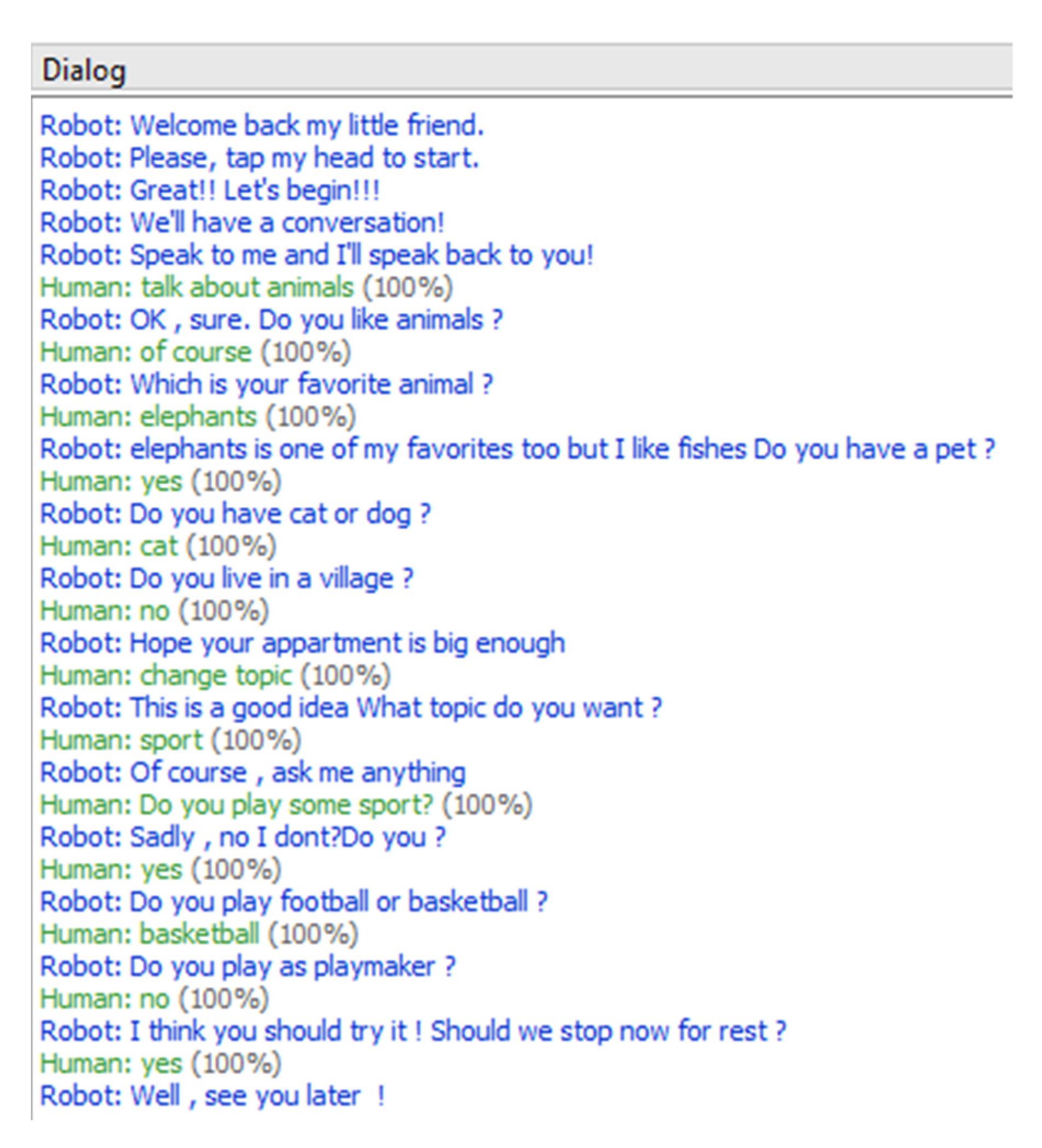

3.1.8. Interaction Stage

3.1.9. Risks

3.2. Modelling the Interaction

3.3. Designing the Safety Architecture

3.3.1. Risk Assessment Process

3.3.2. Safety Architecture for Autism Interventions

<Rule1> ::= <emotion_recognition>: IF child_detected | object_distance ≤ 1000 mm THEN “put_object_aside”
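The rule above reads as a condition-action pair attached to a module. The Python sketch below is a minimal, hypothetical encoding of Rule1; the class `SafetyRule`, its fields, and the sensor keys are assumptions made for illustration, and `object_distance` is assumed to be reported in millimetres. Because `|` in the rule could denote either a conjunction or an alternative, the sketch makes the combination mode an explicit parameter and, as an assumption, defaults to requiring both conditions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Union

# Hypothetical sensor snapshot keyed by the names used in Rule1.
# object_distance is assumed to be in millimetres.
Readings = Dict[str, Union[bool, float]]
Condition = Callable[[Readings], bool]

OBJECT_DISTANCE_LIMIT_MM = 1000.0  # threshold taken from Rule1


@dataclass
class SafetyRule:
    name: str
    module: str                  # module the rule is attached to
    conditions: List[Condition]  # predicates over the current readings
    action: str                  # countermeasure proposed when the rule fires
    require_all: bool = True     # ASSUMPTION: '|' read as "and"; False means "or"

    def evaluate(self, readings: Readings) -> Optional[str]:
        """Return the rule's action if it fires for these readings, else None."""
        results = [condition(readings) for condition in self.conditions]
        fired = all(results) if self.require_all else any(results)
        return self.action if fired else None


rule1 = SafetyRule(
    name="Rule1",
    module="emotion_recognition",
    conditions=[
        # A child has been detected in the scene.
        lambda r: bool(r.get("child_detected", False)),
        # An object lies within the distance limit; a missing reading never triggers.
        lambda r: float(r.get("object_distance", float("inf"))) <= OBJECT_DISTANCE_LIMIT_MM,
    ],
    action="put_object_aside",
)

print(rule1.evaluate({"child_detected": True, "object_distance": 850.0}))   # put_object_aside
print(rule1.evaluate({"child_detected": True, "object_distance": 1500.0}))  # None
```

Treating a missing distance reading as "far away" is a design choice of this sketch; a safety-critical deployment might prefer the conservative opposite and fire the rule when the reading is unavailable.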

Algorithm 1. RiskAssessmentProcess.

Algorithm 2. ProposeCountermeasure.

Algorithm 3. TaskDetermination.

3.3.3. Simulation Testing

4. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Katsanis, I.A.; Moulianitis, V.C. An Architecture for Safe Child–Robot Interactions in Autism Interventions. Robotics 2021, 10, 20. https://doi.org/10.3390/robotics10010020
