MetaScore: A Novel Machine-Learning-Based Approach to Improve Traditional Scoring Functions for Scoring Protein–Protein Docking Conformations

Abstract

1. Introduction

2. Materials and Methods

2.1. Training Data Set and Preprocessing

2.2. Test Data Set and Preprocessing

2.3. Comparison with State-of-the-Art Scoring Methods

2.4. Evaluation Metrics

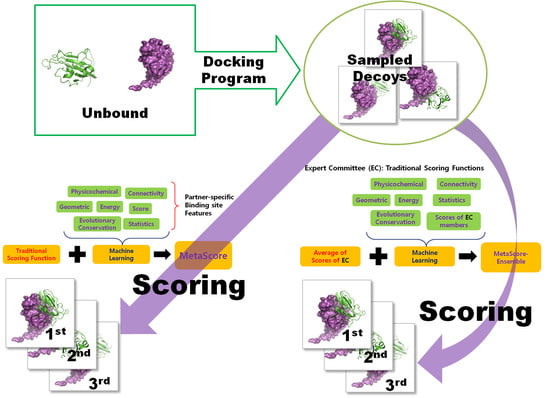
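The tables below report ASR and AHR, which we read as the average success rate and average hit rate over the top N ranked models. One common formulation: a case counts as a success if at least one hit (acceptable or better model) is among its top N, and a case's hit rate is the number of hits in its top N divided by all hits available for that case. The sketch below implements that formulation. The function names are hypothetical, and skipping cases without any hit in the hit-rate average is an assumption, not a detail taken from the paper.

```python
from typing import List, Sequence


def rank_labels(scores: Sequence[float], is_hit: Sequence[bool],
                higher_is_better: bool = True) -> List[bool]:
    """Return one case's hit labels ordered from best to worst score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=higher_is_better)
    return [is_hit[i] for i in order]


def success_rate(ranked_cases: Sequence[Sequence[bool]], top_n: int) -> float:
    """Fraction of cases with at least one hit among their top_n ranked models."""
    successes = sum(1 for labels in ranked_cases if any(labels[:top_n]))
    return successes / len(ranked_cases)


def hit_rate(ranked_cases: Sequence[Sequence[bool]], top_n: int) -> float:
    """Mean over cases of (hits in top_n) / (all hits of that case).

    Cases with no hits at all are skipped here (an assumption of this sketch).
    """
    rates = [sum(labels[:top_n]) / sum(labels) for labels in ranked_cases if sum(labels) > 0]
    return sum(rates) / len(rates) if rates else 0.0


if __name__ == "__main__":
    cases = [
        [False, True, False],  # first hit at rank 2
        [False, False, True],  # first hit at rank 3
        [True, True, False],   # hits at ranks 1 and 2
    ]
    print(success_rate(cases, 1), success_rate(cases, 2))  # 1/3 and 2/3
    print(hit_rate(cases, 2))                              # (1 + 0 + 1) / 3
```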

2.5. MetaScore, a Novel Approach Combining Scores from a Machine-Learning-Classifier-Based Scoring Function with Scores from a Traditional Scoring Function

2.5.1. The RF Classifier

2.5.2. The Min-Max Normalization within Each Case
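Raw scores of different docking cases can live on very different scales, which is presumably why normalization is done within each case. A minimal sketch, assuming each case (target) is scaled independently and that, as for HADDOCK-style energies, a lower raw score means a better model, so the output is flipped to put the best model of each case at 1.0. The function name and the 0.5 fallback for a case whose models all share one score are choices made for this illustration.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def minmax_within_case(case_ids: Sequence[str], scores: Sequence[float],
                       lower_is_better: bool = True) -> List[float]:
    """Min-max scale scores separately within each case.

    1.0 marks the best model of its case and 0.0 the worst. If all models
    of a case have the same score, 0.5 is used (arbitrary choice).
    """
    members: Dict[str, List[int]] = defaultdict(list)
    for i, case in enumerate(case_ids):
        members[case].append(i)

    normalized = [0.0] * len(scores)
    for indices in members.values():
        lo = min(scores[i] for i in indices)
        hi = max(scores[i] for i in indices)
        for i in indices:
            x = 0.5 if hi == lo else (scores[i] - lo) / (hi - lo)
            normalized[i] = 1.0 - x if lower_is_better else x
    return normalized
```

For example, `minmax_within_case(["A", "A", "A", "B", "B"], [-120.0, -80.0, -100.0, 10.0, 30.0])` scales case A and case B separately and yields `[1.0, 0.0, 0.5, 1.0, 0.0]`.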

2.5.3. The Final Score of MetaScore
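The final MetaScore combines the RF classifier score of each model with the normalized score of the traditional scoring function. The sketch assumes a weighted mean whose default is the plain average; `rf_weight` is a hypothetical parameter, and both inputs are assumed to be in [0, 1] with higher meaning better (for instance, an RF probability and a case-normalized, orientation-flipped HADDOCK score).

```python
from typing import List, Sequence


def metascore(rf_scores: Sequence[float], normalized_scores: Sequence[float],
              rf_weight: float = 0.5) -> List[float]:
    """Blend RF scores with normalized traditional scores (0.5 = plain mean)."""
    if len(rf_scores) != len(normalized_scores):
        raise ValueError("score lists must have the same length")
    return [rf_weight * r + (1.0 - rf_weight) * t
            for r, t in zip(rf_scores, normalized_scores)]


def rank_models(final_scores: Sequence[float]) -> List[int]:
    """Model indices ordered from best (highest final score) to worst."""
    return sorted(range(len(final_scores)), key=lambda i: final_scores[i], reverse=True)
```

With RF scores `[0.9, 0.2, 0.6]` and normalized scores `[1.0, 0.0, 0.5]`, the blended scores are about `[0.95, 0.1, 0.55]`, so the models are ranked 0, 2, 1.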

2.6. Features of MetaScore

2.6.1. Raw and Normalized Scores from Each Scoring Function (Score Features)

2.6.2. Evolutionary Features
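Evolutionary features are typically derived from sequence profiles such as those built with PSI-BLAST. One widely used conservation measure is the information content of an alignment column: log2(20) minus the column's Shannon entropy over the 20 amino acids. The sketch assumes that measure as a representative example; it is not necessarily MetaScore's exact evolutionary feature set, and the `pseudocount` parameter is a hypothetical addition. Gaps and non-standard letters are ignored.

```python
import math
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def information_content(column: str, pseudocount: float = 0.0) -> float:
    """Information content (bits) of one alignment column: log2(20) - entropy."""
    counts = Counter(ch for ch in column.upper() if ch in AMINO_ACIDS)
    total = sum(counts.values()) + pseudocount * len(AMINO_ACIDS)
    if total == 0:
        return 0.0
    entropy = 0.0
    for aa in AMINO_ACIDS:
        p = (counts[aa] + pseudocount) / total
        if p > 0:
            entropy -= p * math.log2(p)
    return math.log2(len(AMINO_ACIDS)) - entropy
```

A fully conserved column such as `"AAAA"` reaches the maximum of log2(20), about 4.32 bits, while a column in which all 20 residues appear equally often scores 0.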

2.6.3. Interaction-Propensity-Based Features (Statistical Features)
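Interaction-propensity (statistical) features measure how often residue types meet across interfaces compared with chance. A minimal sketch of one generic formulation, a log-odds pair propensity estimated from a training set of interface contacts and summed over a model's contacts; the exact statistics used for MetaScore are not reproduced here, and the function names and toy contacts are hypothetical.

```python
import math
from collections import Counter
from itertools import chain
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def pair_propensities(contact_pairs: List[Pair]) -> Dict[Pair, float]:
    """Log-odds of observed vs. expected frequency for unordered residue-type pairs."""
    pair_counts = Counter(tuple(sorted(p)) for p in contact_pairs)
    res_counts = Counter(chain.from_iterable(contact_pairs))
    n_pairs = sum(pair_counts.values())
    n_res = sum(res_counts.values())
    table = {}
    for (a, b), n in pair_counts.items():
        p_a, p_b = res_counts[a] / n_res, res_counts[b] / n_res
        # Unordered pairs of two different types can arise in two orders.
        p_expected = p_a * p_b if a == b else 2.0 * p_a * p_b
        table[(a, b)] = math.log((n / n_pairs) / p_expected)
    return table


def interface_propensity_score(contacts: List[Pair], table: Dict[Pair, float]) -> float:
    """Sum of pair propensities over a model's interface contacts (unseen pairs count 0)."""
    return sum(table.get(tuple(sorted(c)), 0.0) for c in contacts)
```

From the toy training contacts `[("LEU", "ILE"), ("LEU", "ILE"), ("ASP", "LYS")]`, the LEU/ILE pair is three times more frequent than chance (log-odds ln 3) and the ASP/LYS pair six times (ln 6).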

2.6.4. Hydrophobicity (Physicochemical Feature)
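The physicochemical feature describes how hydrophobic the interface is. The sketch averages the Kyte-Doolittle hydropathy values of the interface residues (one-letter codes). The AAindex database offers many alternative scales; the choice of Kyte-Doolittle and of a plain mean is an assumption made for illustration only.

```python
from typing import Dict

# Kyte-Doolittle hydropathy scale (positive = hydrophobic).
KYTE_DOOLITTLE: Dict[str, float] = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def mean_interface_hydrophobicity(interface_residues: str) -> float:
    """Mean hydropathy of interface residues given as one-letter codes."""
    values = [KYTE_DOOLITTLE[aa] for aa in interface_residues.upper() if aa in KYTE_DOOLITTLE]
    if not values:
        raise ValueError("no standard amino acids in input")
    return sum(values) / len(values)
```

An interface made of `"ILV"` averages about 4.17, whereas `"DKE"` averages about -3.63.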

2.6.5. Energy-Based Features

2.6.6. Geometric Features

Shortest Distances of Interfacial Residue Pairs
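A minimal sketch of how shortest distances between interfacial residue pairs can be computed: for every residue pair across the two chains, take the smallest atom-atom distance and keep the pair if it falls within a contact cutoff. The input layout (residue identifier mapped to a list of atom coordinates in Å) and the 5.0 Å default cutoff are hypothetical choices, not values taken from the paper.

```python
import math
from itertools import product
from typing import Dict, List, Tuple

Coord = Tuple[float, float, float]


def shortest_interfacial_distances(
    residues_a: Dict[str, List[Coord]],
    residues_b: Dict[str, List[Coord]],
    cutoff: float = 5.0,
) -> Dict[Tuple[str, str], float]:
    """Shortest atom-atom distance for each cross-chain residue pair within cutoff (Angstrom)."""
    pairs = {}
    for (id_a, atoms_a), (id_b, atoms_b) in product(residues_a.items(), residues_b.items()):
        d = min(math.dist(p, q) for p, q in product(atoms_a, atoms_b))
        if d <= cutoff:
            pairs[(id_a, id_b)] = d
    return pairs
```

This brute-force double loop is fine for a sketch; for full complexes a spatial index (for example a k-d tree) would avoid comparing every atom pair.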

Convexity-to-Concavity Ratio
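Convexity is often quantified with the CX measure of Pintar et al.: for each atom, the ratio of the volume outside the protein to the volume occupied by protein within a sphere centered on that atom, where the occupied volume is approximated as the number of atoms in the sphere times a mean atomic volume. The constants below (10 Å radius, 20.1 Å³ per atom) reflect our understanding of that method and should be checked against it. How MetaScore turns CX values into a convexity-to-concavity ratio is not given here; counting atoms with CX above 1 against those at or below 1 is a hypothetical choice for this sketch.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]
SPHERE_RADIUS = 10.0     # Angstrom (assumed default)
MEAN_ATOM_VOLUME = 20.1  # Angstrom^3 (assumed default)


def cx_values(coords: Sequence[Coord], radius: float = SPHERE_RADIUS,
              atom_volume: float = MEAN_ATOM_VOLUME) -> List[float]:
    """CX = external volume / internal volume of a sphere around each atom."""
    sphere = 4.0 / 3.0 * math.pi * radius ** 3
    values = []
    for p in coords:
        n = sum(1 for q in coords if math.dist(p, q) <= radius)  # includes p itself
        v_int = n * atom_volume
        v_ext = max(sphere - v_int, 0.0)  # clamped at 0, a choice made here
        values.append(v_ext / v_int)
    return values


def convexity_to_concavity_ratio(cx: Sequence[float], threshold: float = 1.0) -> float:
    """Hypothetical ratio: atoms with CX > threshold over atoms with CX <= threshold."""
    convex = sum(1 for v in cx if v > threshold)
    concave = len(cx) - convex
    return math.inf if concave == 0 else convex / concave
```

Isolated, protruding atoms get large CX values, whereas atoms in densely packed regions get values below 1.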

Buried Surface Area
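Buried surface area is conventionally obtained from the solvent-accessible surface areas (SASA) of the two partners computed separately and in the complex: BSA = SASA(A) + SASA(B) - SASA(AB). A minimal sketch of that bookkeeping, assuming the SASA values (in Å²) come from an external tool; some papers report half of this value as the interface area.

```python
def buried_surface_area(sasa_a: float, sasa_b: float, sasa_complex: float) -> float:
    """BSA (Angstrom^2) = SASA(A) + SASA(B) - SASA(A:B)."""
    bsa = sasa_a + sasa_b - sasa_complex
    if bsa < 0:
        raise ValueError("complex SASA exceeds the sum of the unbound SASAs")
    return bsa
```

For example, partners with 10,500 Å² and 8,200 Å² of accessible surface that expose 16,900 Å² together bury 1,800 Å².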

Relative Accessible Surface Area
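Relative accessible surface area (RSA) divides a residue's SASA by a reference maximum SASA for its residue type, which makes exposure comparable across residue types. The sketch leaves the reference maxima to the caller because several published tables exist; the 0.25 exposure threshold and the maxima in the usage note are hypothetical.

```python
from typing import Dict, List, Tuple


def relative_asa(residues: List[Tuple[str, float]], max_asa: Dict[str, float],
                 exposed_threshold: float = 0.25) -> List[Tuple[str, float, bool]]:
    """Return (residue name, RSA, is_exposed) for each (residue name, SASA) pair."""
    out = []
    for name, sasa in residues:
        rsa = sasa / max_asa[name]
        out.append((name, rsa, rsa >= exposed_threshold))
    return out
```

With hypothetical maxima `{"LEU": 200.0, "ASP": 160.0}`, a leucine with 40 Å² of SASA has RSA 0.2 (buried under this threshold) and an aspartate with 120 Å² has RSA 0.75 (exposed).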

Secondary Structure
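Secondary structure can be assigned from the atomic coordinates with STRIDE, and one simple interface feature is the fraction of interface residues in each state. The sketch assumes STRIDE's one-letter codes are collapsed into three states (H, G, I as helix; E, B, b as strand; T, C and anything else as coil); that grouping and the function name are choices made here, not taken from the paper.

```python
from collections import Counter
from typing import Dict

# Assumed grouping of STRIDE one-letter codes into three states.
STRIDE_TO_STATE = {"H": "helix", "G": "helix", "I": "helix",
                   "E": "strand", "B": "strand", "b": "strand",
                   "T": "coil", "C": "coil"}


def ss_composition(interface_codes: str) -> Dict[str, float]:
    """Fraction of interface residues in helix, strand and coil states."""
    states = [STRIDE_TO_STATE.get(code, "coil") for code in interface_codes]
    counts = Counter(states)
    total = len(states)
    return {s: counts[s] / total if total else 0.0 for s in ("helix", "strand", "coil")}
```

For interface codes `"HHHEECT"`, the composition is 3/7 helix, 2/7 strand and 2/7 coil.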

2.6.7. Connectivity Features

3. Results

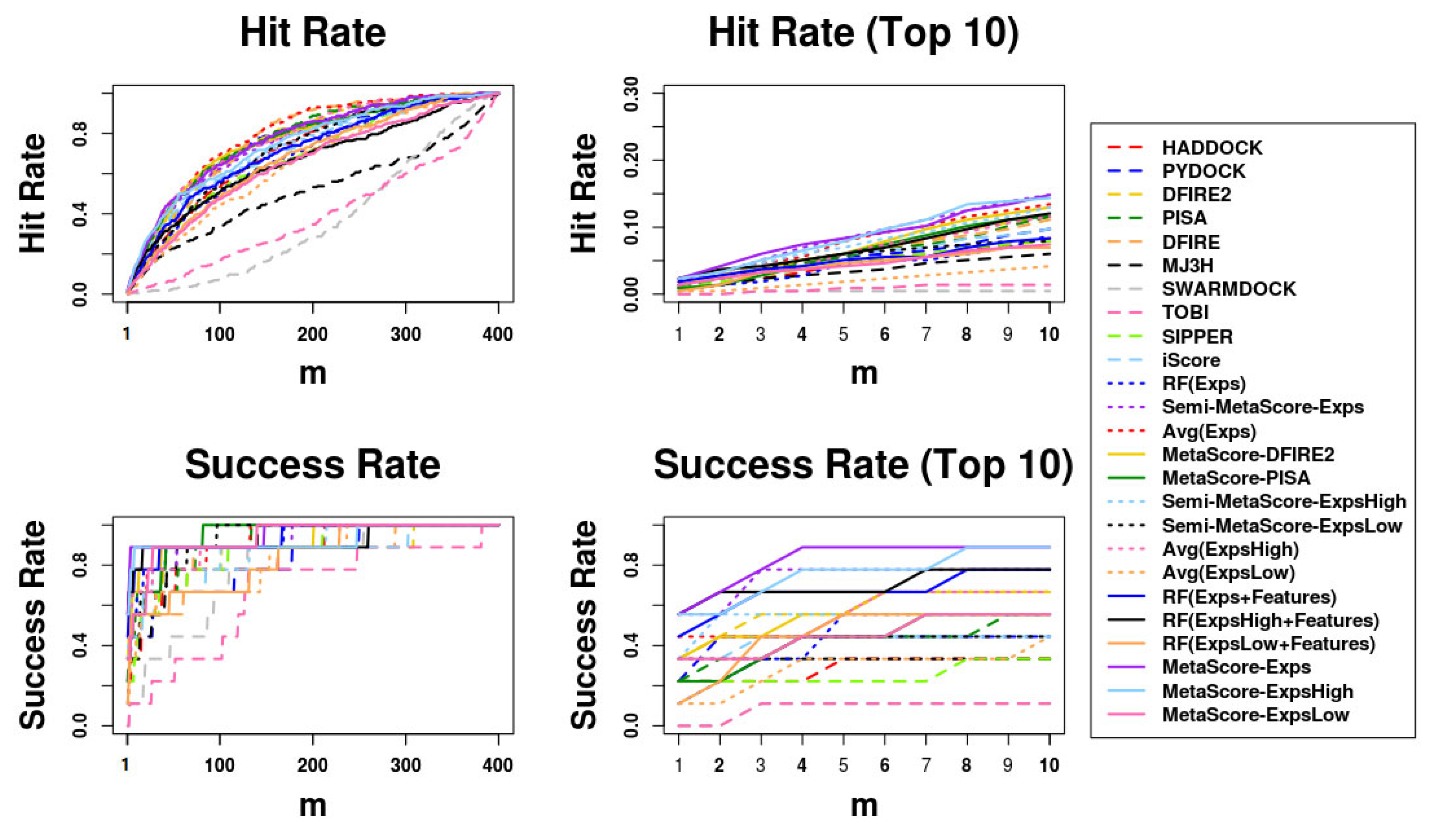

3.1. Combination of Scores from the RF Classifier and Scores from HADDOCK Can Improve the Performance of HADDOCK Scoring

3.2. Evaluation of Feature Importance

3.3. Combination of RF Classifier Scores and Scores from Other Scoring Methods Can Improve the Performance of Each Method

3.4. MetaScore-Ensemble Variants Combining MetaScore with Collections of Traditional Scoring Methods Improve on Both

- (1) RF(Group), the RF classifier trained using only the raw scores and the normalized scores of the members of a group of scoring methods (Group);

- (2) RF(Group + Features), the RF classifier trained using the protein–protein interface features together with the raw and normalized scores of the members of the group;

- (3) Avg(Group), the average of the normalized scores of the members of the group;

- (4) Semi-MetaScore-Group, which combines the RF(Group) score with the Avg(Group) score;

- (5) MetaScore-Group, which combines the RF(Group + Features) score with the Avg(Group) score.
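The group-level approaches listed above can be sketched as follows. The sketch assumes every member's scores have already been min-max normalized within each case and oriented so that higher is better, and that the RF score and Avg(Group) are combined by an unweighted mean; the text only states that they are combined, so that rule is an assumption. Which RF model supplies `rf_scores` determines whether the result corresponds to Semi-MetaScore-Group (RF(Group)) or MetaScore-Group (RF(Group + Features)).

```python
from typing import Dict, List, Sequence


def avg_group(normalized: Dict[str, Sequence[float]]) -> List[float]:
    """Avg(Group): per-model mean of the group members' normalized scores."""
    columns = list(normalized.values())
    n_models = len(columns[0])
    if any(len(c) != n_models for c in columns):
        raise ValueError("all methods must score the same models")
    return [sum(c[i] for c in columns) / len(columns) for i in range(n_models)]


def combine_with_rf(rf_scores: Sequence[float], group_avg: Sequence[float]) -> List[float]:
    """Unweighted mean of RF scores and Avg(Group) scores (assumed combination rule)."""
    if len(rf_scores) != len(group_avg):
        raise ValueError("score lists must have the same length")
    return [(r + a) / 2.0 for r, a in zip(rf_scores, group_avg)]
```

In a toy group of DFIRE2 and pyDOCK with normalized scores `[1.0, 0.0, 0.4]` and `[0.8, 0.2, 0.6]` (hypothetical numbers), Avg(Group) is about `[0.9, 0.1, 0.5]`; combined with RF scores `[0.5, 0.3, 0.9]` this gives about `[0.7, 0.2, 0.7]`.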

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Data Set | Method | ASR for Top 10 | AHR for Top 10 | ASR for Top 400 | AHR for Top 400 |
|---|---|---|---|---|---|
| BM4 decoy set | HADDOCK | 0.29 | 0.036 | 0.85 | 0.64 |
| | RF classifier | 0.36 | 0.040 | 0.89 | 0.65 |
| | MetaScore-HADDOCK | 0.33 | 0.044 | 0.87 | 0.66 |
| BM5 decoy set | HADDOCK | 0.29 | 0.048 | 0.86 | 0.72 |
| | RF classifier | 0.38 | 0.032 | 0.89 | 0.65 |
| | MetaScore-HADDOCK | 0.44 | 0.056 | 0.90 | 0.72 |
| CAPRI score set | HADDOCK | 0.80 | 0.044 | 0.97 | 0.68 |
| | RF classifier | 0.72 | 0.032 | 0.97 | 0.65 |
| | MetaScore-HADDOCK | 0.80 | 0.044 | 0.97 | 0.68 |

| Method | Excluded Feature Type | ASR for Top 10 | AHR for Top 10 | ASR for Top 400 | AHR for Top 400 |
|---|---|---|---|---|---|
| RF classifier | Connectivity features | 0.28 | 0.044 | 0.87 | 0.65 |
| | Statistical features | 0.29 | 0.044 | 0.87 | 0.66 |
| | Geometric features | 0.30 | 0.044 | 0.87 | 0.66 |
| | Score features | 0.31 | 0.044 | 0.85 | 0.65 |
| | Energy-based features | 0.32 | 0.036 | 0.88 | 0.62 |
| | Physicochemical feature | 0.32 | 0.044 | 0.88 | 0.64 |
| | Evolutionary features | 0.34 | 0.048 | 0.87 | 0.65 |
| | None | 0.36 | 0.040 | 0.89 | 0.65 |
| MetaScore-HADDOCK | Connectivity features | 0.32 | 0.048 | 0.86 | 0.68 |
| | Statistical features | 0.34 | 0.044 | 0.86 | 0.67 |
| | Geometric features | 0.31 | 0.048 | 0.85 | 0.67 |
| | Score features | 0.32 | 0.048 | 0.86 | 0.67 |
| | Energy-based features | 0.34 | 0.048 | 0.86 | 0.66 |
| | Physicochemical feature | 0.31 | 0.048 | 0.85 | 0.65 |
| | Evolutionary features | 0.34 | 0.052 | 0.86 | 0.68 |
| | None | 0.33 | 0.044 | 0.87 | 0.66 |

| Data Set | Method | MetaScore: ASR Top 10 | MetaScore: AHR Top 10 | MetaScore: ASR Top 400 | MetaScore: AHR Top 400 | Original: ASR Top 10 | Original: AHR Top 10 | Original: ASR Top 400 | Original: AHR Top 400 |
|---|---|---|---|---|---|---|---|---|---|
| BM4 | HADDOCK | 0.33 (15.28%) | 0.044 (22.22%) | 0.87 (2.35%) | 0.66 (3.13%) | 0.29 | 0.036 | 0.85 | 0.64 |
| | iScore | 0.48 (4.34%) | 0.074 (−10.84%) | 0.89 (0.00%) | 0.71 (−2.74%) | 0.46 | 0.083 | 0.89 | 0.73 |
| | DFIRE | 0.32 (29.03%) | 0.052 (44.44%) | 0.84 (6.33%) | 0.67 (3.08%) | 0.25 | 0.036 | 0.79 | 0.65 |
| | DFIRE2 | 0.27 (51.11%) | 0.044 (57.14%) | 0.85 (4.94%) | 0.67 (6.35%) | 0.18 | 0.028 | 0.81 | 0.63 |
| | MJ3H | 0.38 (9.20%) | 0.056 (7.69%) | 0.87 (2.35%) | 0.68 (−1.45%) | 0.35 | 0.052 | 0.85 | 0.69 |
| | PISA | 0.42 (6.12%) | 0.064 (14.29%) | 0.89 (0.00%) | 0.71 (1.43%) | 0.39 | 0.056 | 0.89 | 0.70 |
| | pyDOCK | 0.23 (42.50%) | 0.028 (75.00%) | 0.81 (8.00%) | 0.63 (8.62%) | 0.16 | 0.016 | 0.75 | 0.58 |
| | SIPPER | 0.26 (128.57%) | 0.024 (100.00%) | 0.88 (7.32%) | 0.62 (10.71%) | 0.11 | 0.012 | 0.82 | 0.56 |
| | SWARMDOCK | 0.27 (103.03%) | 0.028 (133.33%) | 0.86 (3.61%) | 0.61 (8.93%) | 0.13 | 0.012 | 0.83 | 0.56 |
| | TOBI | 0.14 (133.33%) | 0.012 (200.00%) | 0.84 (12.00%) | 0.54 (22.73%) | 0.06 | 0.004 | 0.75 | 0.44 |
| BM5 | HADDOCK | 0.44 (52.79%) | 0.056 (16.67%) | 0.90 (4.65%) | 0.72 (0.00%) | 0.29 | 0.048 | 0.86 | 0.72 |
| | iScore | 0.42 (27.27%) | 0.059 (47.50%) | 0.86 (13.16%) | 0.72 (1.41%) | 0.33 | 0.040 | 0.76 | 0.71 |
| | DFIRE | 0.49 (2.52%) | 0.064 (0.00%) | 0.92 (6.98%) | 0.77 (−1.28%) | 0.48 | 0.064 | 0.86 | 0.78 |
| | DFIRE2 | 0.57 (8.40%) | 0.072 (12.50%) | 0.93 (6.90%) | 0.76 (0.00%) | 0.52 | 0.064 | 0.87 | 0.76 |
| | MJ3H | 0.53 (70.51%) | 0.056 (55.56%) | 0.91 (0.00%) | 0.57 (9.62%) | 0.31 | 0.036 | 0.91 | 0.52 |
| | PISA | 0.43 (1.89%) | 0.064 (6.67%) | 0.95 (3.26%) | 0.76 (−2.56%) | 0.42 | 0.060 | 0.92 | 0.78 |
| | pyDOCK | 0.56 (31.13%) | 0.068 (21.43%) | 0.92 (10.84%) | 0.77 (2.67%) | 0.42 | 0.056 | 0.83 | 0.75 |
| | SIPPER | 0.43 (68.75%) | 0.060 (25.00%) | 0.90 (4.65%) | 0.72 (4.35%) | 0.26 | 0.048 | 0.86 | 0.69 |
| | SWARMDOCK | 0.22 (154.55%) | 0.016 (300.00%) | 0.86 (8.86%) | 0.50 (35.14%) | 0.09 | 0.004 | 0.79 | 0.37 |
| | TOBI | 0.23 (163.64%) | 0.028 (250.00%) | 0.78 (16.42%) | 0.47 (20.51%) | 0.09 | 0.008 | 0.67 | 0.39 |
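In the MetaScore columns of the preceding table, each value in parentheses appears to be the relative change with respect to the original method, in percent. For example, iScore on BM4 (AHR for Top 10) gives (0.074 - 0.083) / 0.083, about -10.84%, and TOBI on BM4 (ASR for Top 10) gives (0.14 - 0.06) / 0.06, about 133.33%, both matching the table. Some entries differ slightly when recomputed from the displayed two- or three-decimal values (HADDOCK on BM4 gives about 13.79% instead of 15.28%), most likely because the percentages were computed before rounding. The helper name below is hypothetical.

```python
def relative_change_percent(metascore_value: float, original_value: float) -> float:
    """Relative change of a MetaScore variant over the original method, in percent."""
    if original_value == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (metascore_value - original_value) / original_value * 100.0
```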

| Groups of Scoring Functions (Group) | Combination Approaches (Approach) |
|---|---|
| ExpertsHigh | RF(Group) |
| ExpertsLow | RF(Group + Features) |
| Experts | Avg(Group) |
| | Semi-MetaScore-Group |
| | MetaScore-Group |

| Methods | ASR for Top 10 | AHR for Top 10 | ASR for Top 400 | AHR for Top 400 |
|---|---|---|---|---|
| MetaScore-Experts | 0.82 | 0.088 | 0.96 | 0.77 |
| MetaScore-ExpertsHigh | 0.76 | 0.088 | 0.93 | 0.75 |
| Semi-MetaScore-Experts | 0.73 | 0.088 | 0.94 | 0.76 |
| RF(ExpertsHigh + Features) | 0.70 | 0.068 | 0.92 | 0.66 |
| RF(Experts + Features) | 0.67 | 0.052 | 0.94 | 0.71 |
| MetaScore-DFIRE2 | 0.57 | 0.072 | 0.93 | 0.76 |
| Avg(Experts) | 0.57 | 0.080 | 0.93 | 0.80 |
| MetaScore-pyDOCK | 0.56 | 0.068 | 0.92 | 0.77 |
| RF(ExpertsHigh) | 0.54 | 0.060 | 0.89 | 0.72 |
| MetaScore-MJ3H | 0.53 | 0.056 | 0.91 | 0.57 |
| Semi-MetaScore-ExpertsHigh | 0.53 | 0.076 | 0.90 | 0.69 |
| Avg(ExpertsHigh) | 0.53 | 0.064 | 0.90 | 0.78 |
| DFIRE2 | 0.52 | 0.064 | 0.87 | 0.76 |
| MetaScore-DFIRE | 0.49 | 0.064 | 0.92 | 0.77 |
| DFIRE | 0.48 | 0.064 | 0.86 | 0.78 |
| MetaScore-ExpertsLow | 0.46 | 0.044 | 0.94 | 0.65 |
| RF(ExpertsLow + Features) | 0.46 | 0.044 | 0.84 | 0.68 |
| RF(Experts) | 0.45 | 0.044 | 0.93 | 0.67 |
| MetaScore-HADDOCK | 0.44 | 0.056 | 0.90 | 0.72 |
| MetaScore-PISA | 0.43 | 0.064 | 0.95 | 0.76 |
| MetaScore-SIPPER | 0.43 | 0.060 | 0.90 | 0.72 |
| pyDOCK | 0.42 | 0.056 | 0.83 | 0.75 |
| PISA | 0.42 | 0.060 | 0.92 | 0.78 |
| MetaScore-iScore | 0.42 | 0.059 | 0.86 | 0.72 |
| Semi-MetaScore-ExpertsLow | 0.41 | 0.056 | 0.94 | 0.72 |
| RF(Features) | 0.38 | 0.032 | 0.89 | 0.65 |
| iScore | 0.33 | 0.040 | 0.76 | 0.71 |
| MJ3H | 0.31 | 0.036 | 0.91 | 0.52 |
| RF(ExpertsLow) | 0.31 | 0.024 | 0.85 | 0.64 |
| HADDOCK | 0.29 | 0.048 | 0.86 | 0.72 |
| Avg(ExpertsLow) | 0.29 | 0.020 | 0.84 | 0.65 |
| SIPPER | 0.26 | 0.048 | 0.86 | 0.69 |
| MetaScore-TOBI | 0.23 | 0.028 | 0.78 | 0.47 |
| MetaScore-SWARMDOCK | 0.22 | 0.016 | 0.86 | 0.50 |
| TOBI | 0.09 | 0.008 | 0.67 | 0.39 |
| SWARMDOCK | 0.09 | 0.004 | 0.79 | 0.37 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jung, Y.; Geng, C.; Bonvin, A.M.J.J.; Xue, L.C.; Honavar, V.G. MetaScore: A Novel Machine-Learning-Based Approach to Improve Traditional Scoring Functions for Scoring Protein–Protein Docking Conformations. Biomolecules 2023, 13, 121. https://doi.org/10.3390/biom13010121
