Abstract

We present an explicit numerical method to solve Milne’s phase–amplitude equations. Existing methods directly solve Milne’s nonlinear equation for the amplitude. As a result, they are highly sensitive to errors and prone to instability through the growth of a spurious, rapidly varying component of the amplitude, which makes their systematic use difficult. By contrast, the present method is based on solving a linear third-order equation that is equivalent to the nonlinear amplitude equation. This linear equation was derived by Kiyokawa, who used it to obtain analytical results on Coulomb wavefunctions. The present method uses this linear equation for numerical computation, thus resolving the problem of the growth of a rapidly varying component.

1. Introduction

Accurate modeling of atomic processes in plasmas, such as collisional excitation, photoionization, and bremsstrahlung, requires precise computation of the radial wavefunctions of continuum electrons. In dense plasmas, continuum electrons should in principle be accounted for self-consistently in the calculation of the atomic structure (see, e.g., [1,2,3] for discussions on this subject). A rigorous approach requires sampling these oscillatory radial wavefunctions in both radial and momentum space (e.g., [4,5,6,7]), with the minimum sampling density governed by the Nyquist–Shannon theorem [8]. This constraint leads to a significant computational burden, in both memory and floating-point operations, which often hinders the feasibility of self-consistent, quantum-mechanical modeling of plasmas.

Alternative representations of the one-electron basis can offer more efficient numerical solutions. Recently, Starrett et al. [9] proposed an average-atom algorithm based on Siegert states, building on the method of [10]. While retaining a more conventional one-electron basis, Milne’s phase–amplitude representation [11] provides another pathway to an efficient representation of the continuum. Because it is based on slowly varying, non-periodic amplitude and phase functions, it circumvents the stringent sampling requirements of the Nyquist–Shannon theorem, and its numerical advantages have long been recognized. It has been successfully applied to calculations of Bessel functions [12] and to atomic physics problems [5,13,14]. Various solution methods have been proposed, including direct iterative schemes [15,16], spectral methods using Chebyshev expansions [17], explicit predictor–corrector methods [13] implemented in codes such as SCROLL [18] and HULLAC [19], and adaptive-mesh-refinement Rosenbrock methods [14] used in PURGATORIO [5].

Despite these advances, existing methods usually cannot be used simply and systematically on a predefined grid with a straightforward relationship between grid coarseness and precision. In particular, they are highly sensitive to numerical errors. In this article, we first review the fundamentals of Milne’s phase–amplitude representation. We then discuss the limitations of some previously published methods [5,13,14]. Finally, we present a fully explicit, fixed-grid method based on solving a linear third-order equation, initially derived by Kiyokawa [20], which is equivalent to the nonlinear amplitude equation. This numerical method addresses the above limitations, and we demonstrate it on the illustrative example of phase-shift calculations.

2. Phase–Amplitude Representations

In the following, we will limit the discussion to the description of continuum radial wavefunctions , solutions of the radial Schrödinger equation:

where and . Most phase–amplitude (PA) representations may also be extended to bound states by considering an imaginary k. Moreover, PA representations can also be applied to the radial Dirac equations without particular difficulty (see, for instance, [21], Section 2.3).

Phase–amplitude representation refers to a whole category of representations rather than to a particular one. Such representations recast the oscillatory wavefunction R, regular at zero, as follows:

where S is a given oscillatory function regular at zero. is called the amplitude function, and is the phase function.

A generalized PA representation may use any oscillatory function S that is the regular solution of the Schrödinger equation for some reference system. The generalized Wentzel–Kramers–Brillouin (WKB) approach [22,23,24,25] may be considered from this standpoint.

In the present article, we focus on Milne’s PA representation, which uses a sine function for S.

The choice of a particular function S is not sufficient to determine the representation completely. For instance, the sine function is used for S in both Calogero’s and Milne’s PA representations.

To highlight the differences, let us briefly recall the basics of Calogero’s PA representation [26]. To obtain this PA representation, one requires, in addition to Equation (4), the following:

Therefore,

Since is regular at zero, Calogero’s amplitude function is regular at zero. Rewriting Equation (1) and using Equations (4) and (5) yields Calogero’s equations for phase and amplitude (see [26], Equations (6.16) and (4.7)):

These equations clearly show that the amplitude and phase functions of Calogero’s PA representation vary rapidly, i.e., on the scale of r∼1/(2k). This representation was used to derive many useful analytical results in scattering theory [27], such as an analytic approximation to phase shifts in screened potentials [28]. Calogero’s PA representation was also used to build efficient numerical methods for finding the bound-state eigenvalues of an attractive potential [29,30]. However, when applied to continuum orbitals, this representation requires the same typical sampling of and as the radial wavefunction itself.

Milne’s PA representation is most often derived by inserting Equation (4) into Equation (1), yielding

One then requires the coefficients of the sine and cosine of the phase to vanish independently. This leads to Milne’s equations for the phase and amplitude, respectively,

with appearing as an arbitrary integration constant.

A somewhat more explicit definition of Milne’s phase and amplitude may be stated as follows. Let us consider , two linearly independent solutions of Equation (1), with being regular at , and define and as follows:

One has

Differentiating Equation (16), one obtains

where denotes the Wronskian. Differentiating Equation (15) twice, one obtains

Using Equation (1) for and , and the definition of Equation (15), one obtains

Defining , Equations (18) and (21) become Equations (11) and (12), respectively. This derivation has the advantage of showing explicitly how is related to the normalizations of the R and Q functions.

Two particular cases are those of free particles () and particles in a Coulomb potential (). For these, one has the following analytical solutions:

where and are the regular and irregular spherical Bessel functions, respectively, and and are the regular and irregular Coulomb wavefunctions. We use the conventions of [31]. These analytical solutions can be used together with Equation (15) to evaluate the outer boundary condition for fully screened and Coulomb-tail atomic potentials, respectively.
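As an illustration of this boundary condition for a zero potential, the following Python sketch evaluates the amplitude and its radial derivative from Riccati–Bessel functions. It assumes the normalization R = kr j_l(kr) and Q = kr y_l(kr), for which the Wronskian equals k and the amplitude tends to one at large r; Equation (15) of this article may use a different normalization, and the function names are illustrative only.

```python
import math


def riccati_bessel(l, x):
    """Return (S, C, dS/dx, dC/dx) with S = x j_l(x) and C = x y_l(x).

    Upward recurrence F_{n+1} = (2n + 1)/x F_n - F_{n-1}, seeded with
    S_0 = sin x, S_{-1} = cos x, C_0 = -cos x, C_{-1} = sin x.
    Accurate for x well above l (the outer-boundary regime), not for x < l.
    """
    s_prev, s = math.cos(x), math.sin(x)
    c_prev, c = math.sin(x), -math.cos(x)
    for n in range(l):
        s_prev, s = s, (2 * n + 1) / x * s - s_prev
        c_prev, c = c, (2 * n + 1) / x * c - c_prev
    # Derivative identity for Riccati-Bessel functions: F_l' = F_{l-1} - (l/x) F_l
    return s, c, s_prev - l / x * s, c_prev - l / x * c


def free_amplitude(l, k, r):
    """Milne amplitude and its r-derivative for V = 0, with R = S(kr), Q = C(kr).

    With this normalization R Q' - R' Q = k, so the amplitude tends to 1.
    """
    s, c, ds, dc = riccati_bessel(l, k * r)
    alpha = math.hypot(s, c)
    return alpha, k * (s * ds + c * dc) / alpha
```

For l = 1, the squared amplitude reduces to 1 + 1/(kr)², which is a convenient sanity check. Upward recurrence is adequate at the outer boundary, where kr is much larger than l.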

Equation (12) is a second-order nonlinear differential equation whose solutions are not trivial to obtain. However, in the asymptotic limit , Equation (12) simply becomes

One readily sees that a constant can be a solution of this equation, with the value

This directly yields using Equation (11).

This asymptotic limit may also be recovered from the usual WKB approximation, which simply consists of neglecting the second derivative in Equation (12), yielding:
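For reference, both limits can be written explicitly in an assumed notation: α for the amplitude, ϕ for the phase, λ for the constant of Equation (11), and q(r) for the coefficient of the radial equation, so that Milne’s equations read α'' + qα = λ²/α³ and ϕ' = λ/α². The article’s own symbols may differ.

```latex
\begin{aligned}
&\text{Asymptotic limit } (q \to k^2,\ \alpha'' = 0):\quad
  k^2\alpha_\infty^4 = \lambda^2
  \;\Rightarrow\; \alpha_\infty = \sqrt{\lambda/k},
  \qquad \phi' \to \frac{\lambda}{\alpha_\infty^2} = k,\\[4pt]
&\text{WKB } (\alpha'' \text{ neglected}):\quad
  q\,\alpha^4 = \lambda^2
  \;\Rightarrow\; \alpha_{\mathrm{WKB}}(r) = \lambda^{1/2}\,q(r)^{-1/4},
  \qquad \phi'_{\mathrm{WKB}} = \sqrt{q(r)} .
\end{aligned}
```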

Let us stress that the parameter is related through Equation (25) to the asymptotic value of the amplitude function, which determines the normalization of . Therefore, must be consistent with the boundary condition applied when solving Equation (12) for the amplitude. Such a parameter, which must be consistent with the boundary condition, is typical of nonlinear equations. By contrast, for linear equations the boundary condition can be set up to an arbitrary multiplicative constant.

The solution of Equation (12) fulfilling the condition corresponds to a slowly varying amplitude function, asymptotically tending to the value . This is the amplitude function we are interested in, as it allows us to circumvent the Nyquist–Shannon sampling limit related to the oscillatory wavefunction .

However, Equation (12) may have solutions other than the slowly varying one. Returning to its asymptotic limit, Equation (24), let us look for another solution by considering a first-order perturbation around the constant solution of Equation (25). The equation for the perturbation is

From this equation, we get , showing that other solutions exist and that they vary rapidly, i.e., on the r∼1/(2k) scale.
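The linearization can be made explicit in an assumed notation (α for the amplitude, λ for the constant of Equation (11), so that the asymptotic amplitude equation reads α'' + k²α = λ²/α³ with constant solution α∞ satisfying λ²/α∞⁴ = k²):

```latex
\begin{aligned}
\alpha(r) &= \alpha_\infty + \delta(r), \qquad |\delta| \ll \alpha_\infty,\\
\delta'' + k^2\,\delta &= -\frac{3\lambda^2}{\alpha_\infty^4}\,\delta = -3k^2\,\delta,\\
\delta'' + 4k^2\,\delta &= 0
  \quad\Longrightarrow\quad \delta(r) = A\cos(2kr) + B\sin(2kr).
\end{aligned}
```

The perturbation therefore oscillates with wavenumber 2k, i.e., with half the wavelength of the wavefunction itself.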

Equation (12) is thus a stiff differential equation, in the sense that its solutions vary on very different scales. However, for the purpose of reducing the sampling, we are only interested in the slowly varying one. Moreover, because it is nonlinear, this equation allows coupling among its various solutions, which may be problematic, as we will see in the next section.
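The coexistence of the two scales can be checked numerically on a toy problem. The sketch below assumes the zero-potential, l = 0 form of the amplitude equation, α'' = λ²/α³ − k²α, with λ = k so that the slowly varying solution is α = 1, and integrates it with a fixed-step classical RK4 integrator. Starting exactly at α = 1 keeps the solution constant, whereas a small initial offset produces an oscillation of period π/k, i.e., wavenumber 2k. This is only a diagnostic, not one of the schemes discussed in this article.

```python
import math


def milne_rhs(alpha, dalpha, k, lam):
    """Right-hand side of alpha'' = lam^2/alpha^3 - k^2 alpha (V = 0, l = 0)."""
    return dalpha, lam**2 / alpha**3 - k**2 * alpha


def rk4_free_amplitude(alpha0, dalpha0, k, lam, r_end, n_steps):
    """Fixed-step classical RK4 from r = 0 to r_end; returns (h, list of alpha)."""
    h = r_end / n_steps
    a, da = alpha0, dalpha0
    alphas = [a]
    for _ in range(n_steps):
        k1 = milne_rhs(a, da, k, lam)
        k2 = milne_rhs(a + 0.5 * h * k1[0], da + 0.5 * h * k1[1], k, lam)
        k3 = milne_rhs(a + 0.5 * h * k2[0], da + 0.5 * h * k2[1], k, lam)
        k4 = milne_rhs(a + h * k3[0], da + h * k3[1], k, lam)
        a += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        da += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
        alphas.append(a)
    return h, alphas


def first_return_period(h, alphas):
    """Distance from r = 0 (a maximum of alpha) to the next local maximum."""
    for i in range(1, len(alphas) - 1):
        if alphas[i] > alphas[i - 1] and alphas[i] >= alphas[i + 1]:
            return i * h
    return None


k = 2.0
h, perturbed = rk4_free_amplitude(1.001, 0.0, k, lam=k, r_end=5.0, n_steps=5000)
period = first_return_period(h, perturbed)  # close to pi / k, not 2 pi / k
```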

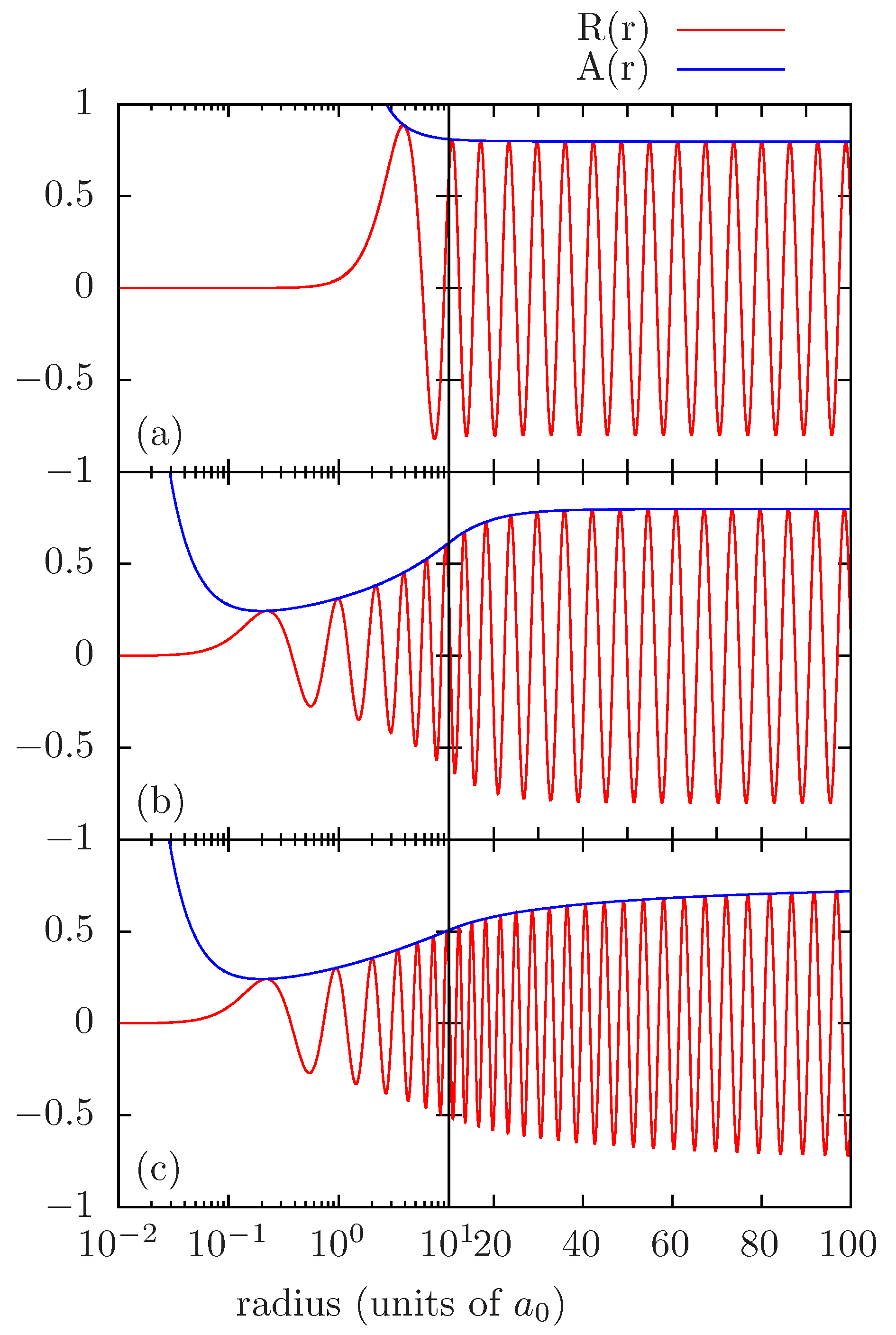

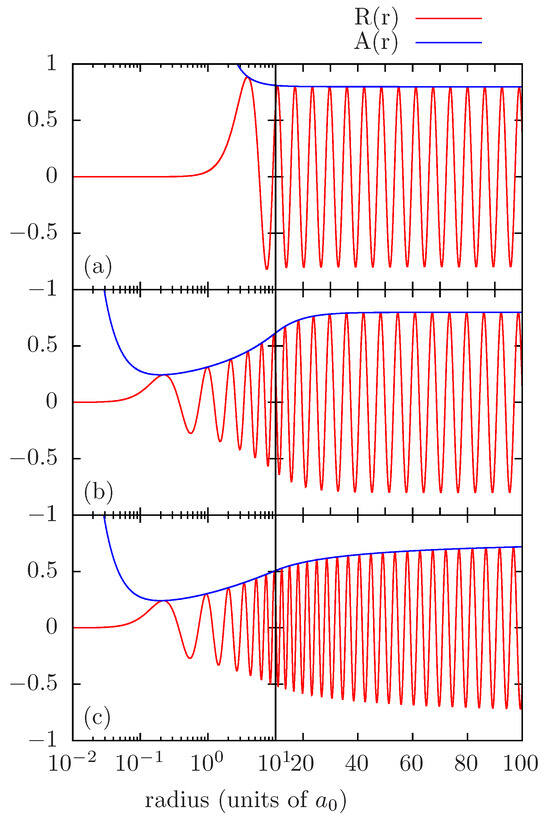

To illustrate the slowly varying amplitude function of Milne’s PA representation, Figure 1 presents the radial wavefunctions and the corresponding amplitude functions , for , , in the case of a zero potential (a), a screened Coulomb potential (b), and a pure Coulomb potential (c). The slowly varying amplitude functions correspond to the envelopes of the related wavefunctions.

Figure 1.

Radial wavefunction and amplitude function for values and in three distinct potentials: zero potential (a), Yukawa potential with , (b), and pure Coulomb potential with (c).

Of course, the amplitude function can be obtained from a numerical solution of Equation (1), by finding the two linearly independent solutions R and Q and then combining them as in Equation (15). However, such a method would require sampling oscillatory functions, thus defeating the purpose of using the PA representation.

Thus, a number of authors have studied numerical methods for solving Equation (12) directly. Seaton and Peach, as well as Trafton, proposed iterative schemes, starting from an initial guess of the amplitude [15,16]. Bar Shalom et al. [13] proposed to use a predictor–corrector scheme due to Hamming (see [32] Sec. 24.3) when it is stable, and they proposed a modified predictor–corrector scheme for the region in which Hamming’s method is unstable. Wilson et al. [5,14] proposed to use a Kaps–Rentrop method [33,34]. Rawitscher [17] proposed a spectral iterative method based on a Chebyshev expansion.

In atomic physics applications, describing the continuum usually requires computing a large number of wavefunctions in order to sample momentum space. Fast, fully explicit numerical methods, such as the one proposed in [13], are therefore of special interest. Before presenting the method that we propose, let us first motivate the present work by discussing the limitations of some existing methods.

3. Limitations of Some Existing Methods

Bar Shalom et al. [13] propose an explicit method to numerically solve Equation (12) on a fixed grid of exponentially spaced points. The method in fact resorts to three distinct numerical schemes. We briefly recall its general principle below, as it guided many aspects of our method’s development.

In the innermost region, a standard Numerov scheme is used to solve Equation (1) for , up to a matching point , chosen close to the turning point , such that .

Beyond the matching point, Bar Shalom et al. [13] use a predictor–corrector method due to Hamming (see [32] Sec. 24.3) to solve Equation (12) for . Let us call this scheme Hamming’s predictor–corrector (HPC). However, they find that as soon as is greater than one, the corrector step amplifies the prediction error. Far from the origin, the slowly varying amplitude solution tends to a constant. Thus, takes small values, which are obtained through Equation (12) as differences between two comparatively large values. This leads to large numerical errors, and this issue is common to all approaches that use Equation (12) to obtain from .

As a solution to this problem, Bar Shalom et al. [13] propose a modified predictor–corrector scheme for the outermost region. Its basic principle is to exploit the slow variation of to build a predictor for and then to use Equation (12) as a corrector, deducing a new value of from . This is done by performing one step of Newton’s method. Using the amplitude equation in this reverse direction inverts the error amplification factor, leading to a stable scheme. Let us call this scheme Bar Shalom’s modified predictor–corrector (BSMPC).
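The reversal of error amplification can be illustrated on a case known analytically. Assuming the notation α'' = λ²/α³ − qα for the amplitude equation, a zero potential with l = 1 and k = λ = 1 gives q = 1 − 2/r² and α = (1 + 1/r²)^{1/2}. The sketch below uses the equation in both directions: computing α'' from α turns a relative error of 10⁻⁶ on α into a relative error above 10⁻² on α'', while a single Newton step solving the same equation for α, given α'', removes most of the error of the initial guess. It shows only the principle behind BSMPC; the predictor of [13] is not reproduced here, and the function names are hypothetical.

```python
import math


def second_derivative_from_amplitude(alpha, q, lam):
    """Amplitude equation solved for alpha'': lam^2/alpha^3 - q*alpha."""
    return lam**2 / alpha**3 - q * alpha


def amplitude_from_second_derivative(alpha_guess, d2_alpha, q, lam):
    """One Newton step on F(alpha) = lam^2/alpha^3 - q*alpha - d2_alpha = 0."""
    f = lam**2 / alpha_guess**3 - q * alpha_guess - d2_alpha
    df = -3.0 * lam**2 / alpha_guess**4 - q
    return alpha_guess - f / df


# Zero potential, l = 1, k = lam = 1, at r = 10 (exact values for comparison)
r = 10.0
u = 1.0 / r**2
q = 1.0 - 2.0 / r**2
alpha = math.sqrt(1.0 + u)
d2_exact = (3 * u**2 + 2 * u**3) * (1.0 + u) ** -1.5
```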

In addition to the BSMPC scheme, the authors suggest further improving the solution in the outermost region by re-evaluating from a high-order (five-point) finite-difference scheme and correcting again using Equation (12). Adding such a step makes the method partly iterative, and we did not find this improvement useful in the present study.

The switch from the HPC to the BSMPC scheme occurs at the radius where , and both schemes are used to propagate the solution inwards, starting from the outer boundary condition at . Finally, the solutions for and are matched at the radius , which allows one to normalize and to determine the phase at the matching point. A summary of this method is given in Table 1.

Table 1.

Summary of the numerical method proposed in [13].

We found that the numerical method proposed in [13] performs very well on coarse grids. As described in their article, one must apply both the HPC and BSMPC schemes, paying attention to the switching radius . We verified that error amplification does occur when either scheme is used on the wrong side of , and found that the overall method is very sensitive to such error amplification.

In his book, Hamming derives a predictor–corrector numerical scheme of order 6. He also describes corrections to the predictor and corrector (see [32], end of Sec. 24.3), which are presented as optional and which raise the order of the scheme to 8. We found that, in the present case, these corrections are required for the global stability of the numerical method, and we assume that Bar Shalom and his coauthors did use them.

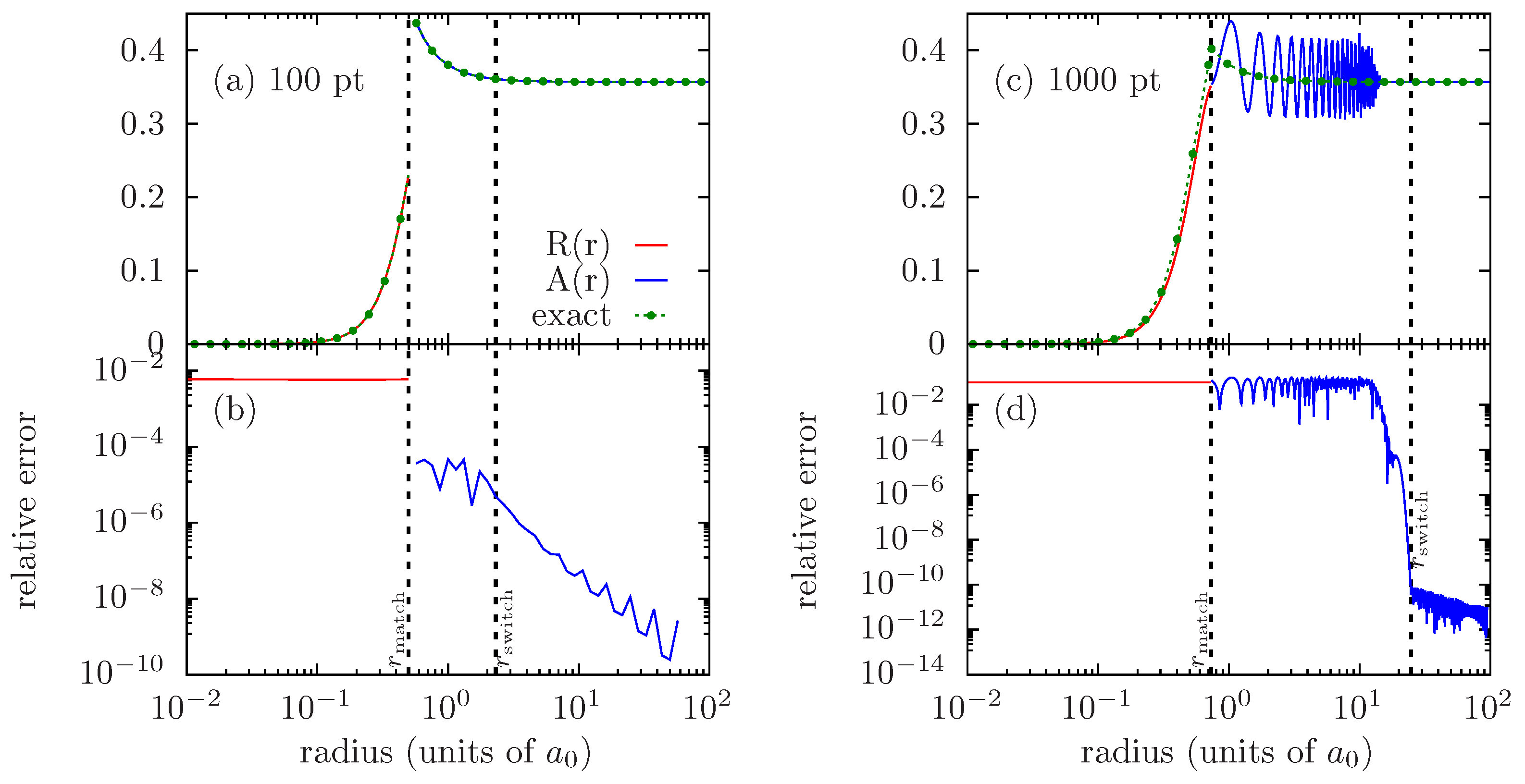

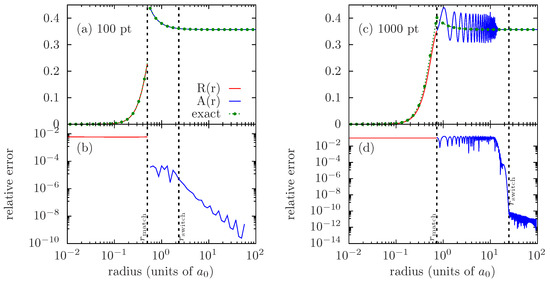

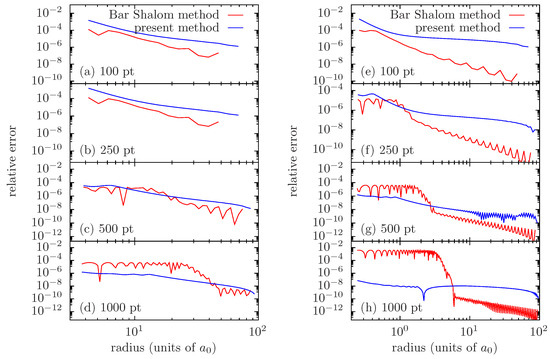

In principle, refining the grid should be the way to increase the precision of the solution. However, beyond a certain level of refinement, a numerical instability occurs and ruins the numerical solution. This issue is illustrated in Figure 2, which displays the results obtained for a zero potential (amplitude of spherical Bessel functions) when the number of grid points is increased from 100 to 1000.

Figure 2.

Radial wavefunction and amplitude function (a,c), with the corresponding relative errors (b,d) obtained with the method of [13] for the case of zero potential, , , using an exponentially spaced grid spanning from to . The exact solutions are shown with green dots and a dashed line. Results are compared for grids of 100 (a,b) and 1000 (c,d) grid points in total. The 100-point grid has 38 steps between and , whereas the 1000-point grid has 272 steps in this region.

The triggering of an instability when considering finer grids constitutes a serious limitation to the systematic use of this numerical scheme, as it impairs the control of the numerical precision.

In Figure 2d, one can clearly identify a region where the errors increase strongly. This region always seems to lie within the intermediate region, where the HPC scheme is used. By applying the predictor–corrector scheme step by step to a test case for which the exact solution is known, one can precisely analyze the source of errors. Restarting each step from the “exact” amplitude shows that the error growth is driven by the predictor, whereas the corrector systematically improves the solution, as expected.

A possible explanation for this large increase in error is the nonlinear character of Equation (12). Nonlinearity allows coupling among the various solutions and enables the growth of a spurious, rapidly varying component in the solution from an initial seed.

In principle, the desired, slowly varying solution is selected through the boundary condition. However, any error, whether the truncation error on the boundary condition or the numerical errors accumulated while propagating the solution, can be seen as a mixture of the desired solution and the spurious ones, seeding the growth of the rapidly varying component.

Sampling itself limits the growth of a rapidly varying component. A coarse grid acts as a low-pass filter and may temper the growth of a rapidly varying component. It turns out that the criterion for switching from the HPC to the BSMPC scheme is close to the Nyquist–Shannon sampling criterion for the rapidly varying solution. This probably explains why the region where the spurious component builds up is located inside the intermediate region, near the switching radius.

Regardless of the method used, any attempt to directly solve the nonlinear Equation (12) is likely to yield a result which is strongly sensitive to errors and prone to the growth of a rapidly varying component in the amplitude. This notably explains the crucial need for a high-order scheme in the method of [13] and why the corrections which allow one to reach order 8 matter so much.

In [5,14], the authors used a Rosenbrock method proposed by Kaps and Rentrop [33], which is frequently used for stiff equations [34]. Numerical methods for stiff equations are typically based on adaptive grid refinement, aiming to sample all solutions correctly, with all their various scales of variation.

Equation (12) is certainly stiff, but our purpose is only to calculate its slowly varying solution, not to sample all of its solutions correctly. From this standpoint, such methods are likely of limited help for the present problem. However, with a very stringent precision target, adaptive grid refinement may also limit the accumulation of errors, thereby limiting the seed for a spurious component. One should keep in mind, though, that in many applications the potential associated with the wavefunctions is sampled on a predefined grid. Adaptive refinement methods then require interpolating the potential at intermediate grid points, resulting in an additional loss of precision.

A simple, explicit method with adaptive grid refinement is the Runge–Kutta–Merson (RKM) method [35]. We use this method as a test bed to illustrate a typical issue that arises when an adaptive grid refinement method is applied to Equation (12).

The RKM method is based on a fourth-order Runge–Kutta (RK4) scheme, with a supplementary evaluation that allows one to estimate the truncation error. Let us first put Equation (12) in canonical form:

with being and the bars denoting vector functions having two components labeled 0 and 1, respectively.

Solving for over one step h with a classical RK4 scheme requires the evaluation of the function at four different points:

One then expresses the fourth-order approximate solution as a weighted sum of the ’s.

Then, using only one more evaluation of the function ,

one may calculate a fifth-order approximation :

The truncation error can then be estimated, allowing one to choose the integration step according to a fixed precision target, or tolerance . The algorithm that we use is as follows. If , divide the integration step h by 2. If , multiply it by 2. If , accept the step and proceed to the next grid point .
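A compact Python sketch of such an integrator is given below, under stated assumptions. It uses the classic Merson tableau (five stages, error estimate (2k₁ − 9k₃ + 8k₄ − k₅)/30), which may be organized differently from the description above. The step-control thresholds are not reproduced in this text, so we assume: halve h if the estimate exceeds the tolerance, and double it after an accepted step whose estimate is below 1/32 of the tolerance (doubling h multiplies a fifth-order local error by about 32). Integration with t1 < t0 supports inward propagation. The function free_milne_rhs is a hypothetical example of the canonical form, for V = 0, l = 0 and k = λ = 2.

```python
import math


def merson_step(f, t, y, h):
    """One Runge-Kutta-Merson step; returns (y_new, error_estimate)."""
    def combo(*terms):
        # y + sum(c * k) over (c, k) pairs, componentwise
        out = list(y)
        for c, kv in terms:
            for i, v in enumerate(kv):
                out[i] += c * v
        return out

    k1 = [h * v for v in f(t, y)]
    k2 = [h * v for v in f(t + h / 3, combo((1 / 3, k1)))]
    k3 = [h * v for v in f(t + h / 3, combo((1 / 6, k1), (1 / 6, k2)))]
    k4 = [h * v for v in f(t + h / 2, combo((1 / 8, k1), (3 / 8, k3)))]
    k5 = [h * v for v in f(t + h, combo((1 / 2, k1), (-3 / 2, k3), (2.0, k4)))]
    y_new = combo((1 / 6, k1), (2 / 3, k4), (1 / 6, k5))
    err = max(abs(2 * a - 9 * c + 8 * d - e) / 30
              for a, c, d, e in zip(k1, k3, k4, k5))
    return y_new, err


def rkm_integrate(f, t0, y0, t1, tol, h0=None, max_iter=100000):
    """Adaptive RKM integration of y' = f(t, y) from t0 to t1 (t1 < t0 allowed).

    Returns (y(t1), number_of_accepted_steps).
    """
    t, y = t0, list(y0)
    h = (t1 - t0) if h0 is None else math.copysign(abs(h0), t1 - t0)
    accepted = 0
    for _ in range(max_iter):
        remaining = t1 - t
        last = abs(h) >= abs(remaining)
        if last:
            h = remaining  # land exactly on t1
        y_try, err = merson_step(f, t, y, h)
        if err > tol:
            h /= 2.0  # reject the step and retry with half the step size
            continue
        y = y_try
        accepted += 1
        if last:
            return y, accepted
        t += h
        if err < tol / 32.0:
            h *= 2.0  # error well below tolerance: try a larger step
    raise RuntimeError("maximum number of iterations reached")


def free_milne_rhs(r, y):
    """Canonical form y = (alpha, alpha') for V = 0, l = 0, k = lam = 2."""
    k = lam = 2.0
    return [y[1], lam**2 / y[0] ** 3 - k**2 * y[0]]
```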

As with the method of Bar Shalom et al. [13], we use the RKM scheme to propagate the solution inwards, starting from the outer boundary condition at . We apply the RKM method successively to each interval between two points of the base grid.

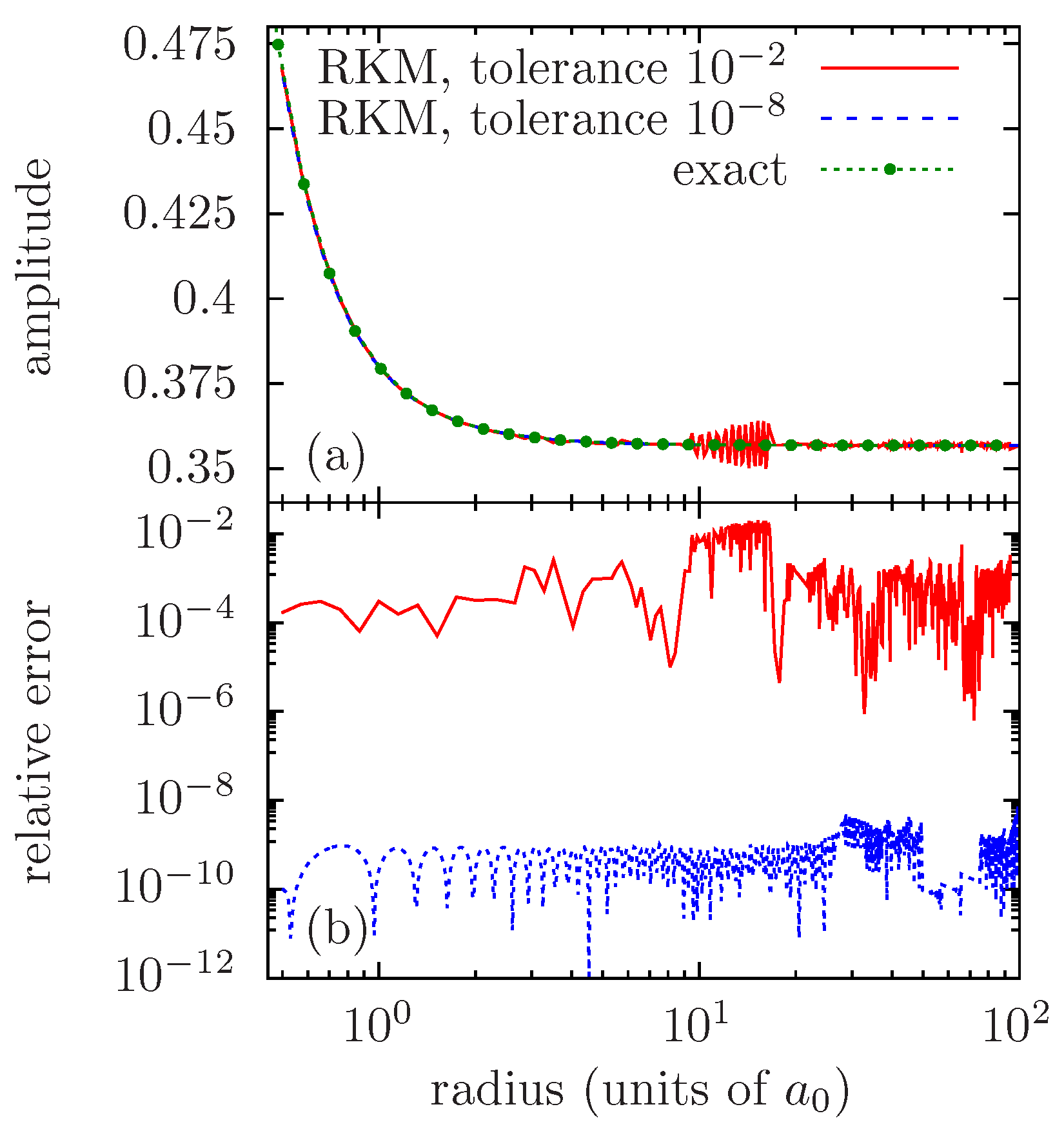

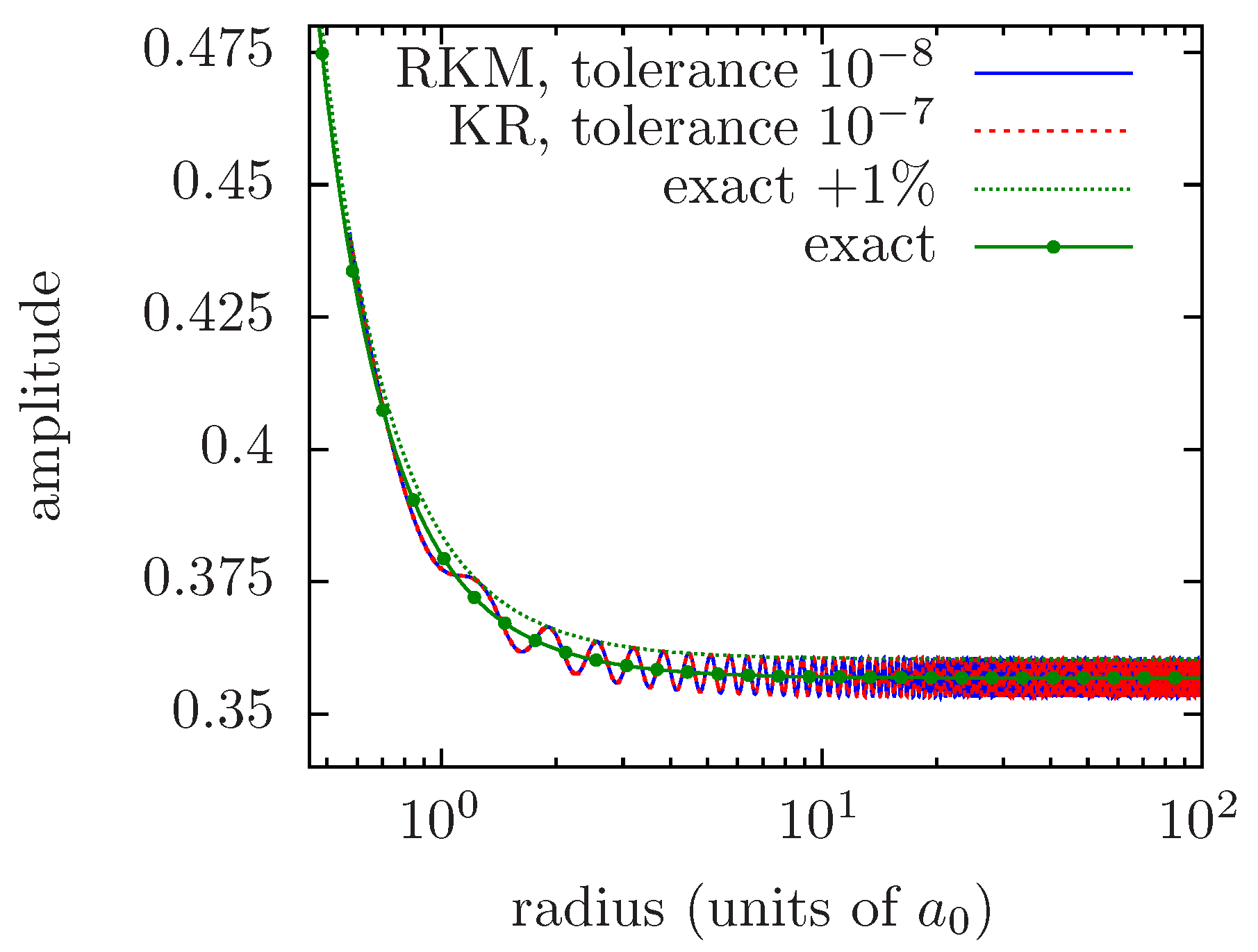

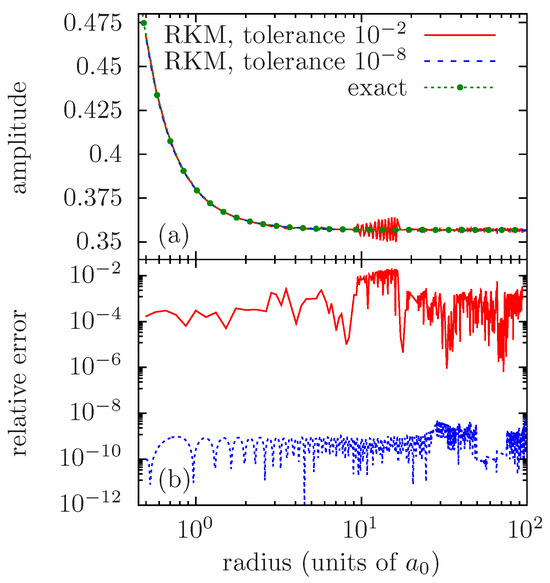

Figure 3 presents the results obtained with the RKM method for the same case as in Figure 2, with the base grid of Figure 2a,b (100 points, 38 of which lie in the interval).

Figure 3.

Amplitude function (a), with the corresponding relative error (b) obtained with the Runge–Kutta–Merson adaptive refinement method, for the case of zero potential, , , using an initial grid of 100 exponentially spaced points, spanning from to . The presented results correspond to precision targets (or tolerances) of and . In the present case, the corresponding refined grids have 436 and 848 grid points between and , respectively. The initial grid has 38 steps in this region.

Setting the tolerance to , one can see how the spurious solution can grow, requiring the method to refine the grid in order to correctly sample its rapid variations. In the present case, the refined grid actually has 436 points, which should be compared with the 38 points between and of the initial grid.

By decreasing the tolerance of the integration steps to , one can efficiently prevent the growth of numerical errors, but at the price of refining the grid even further. In the present case, the refined grid for tolerance has 848 grid points.

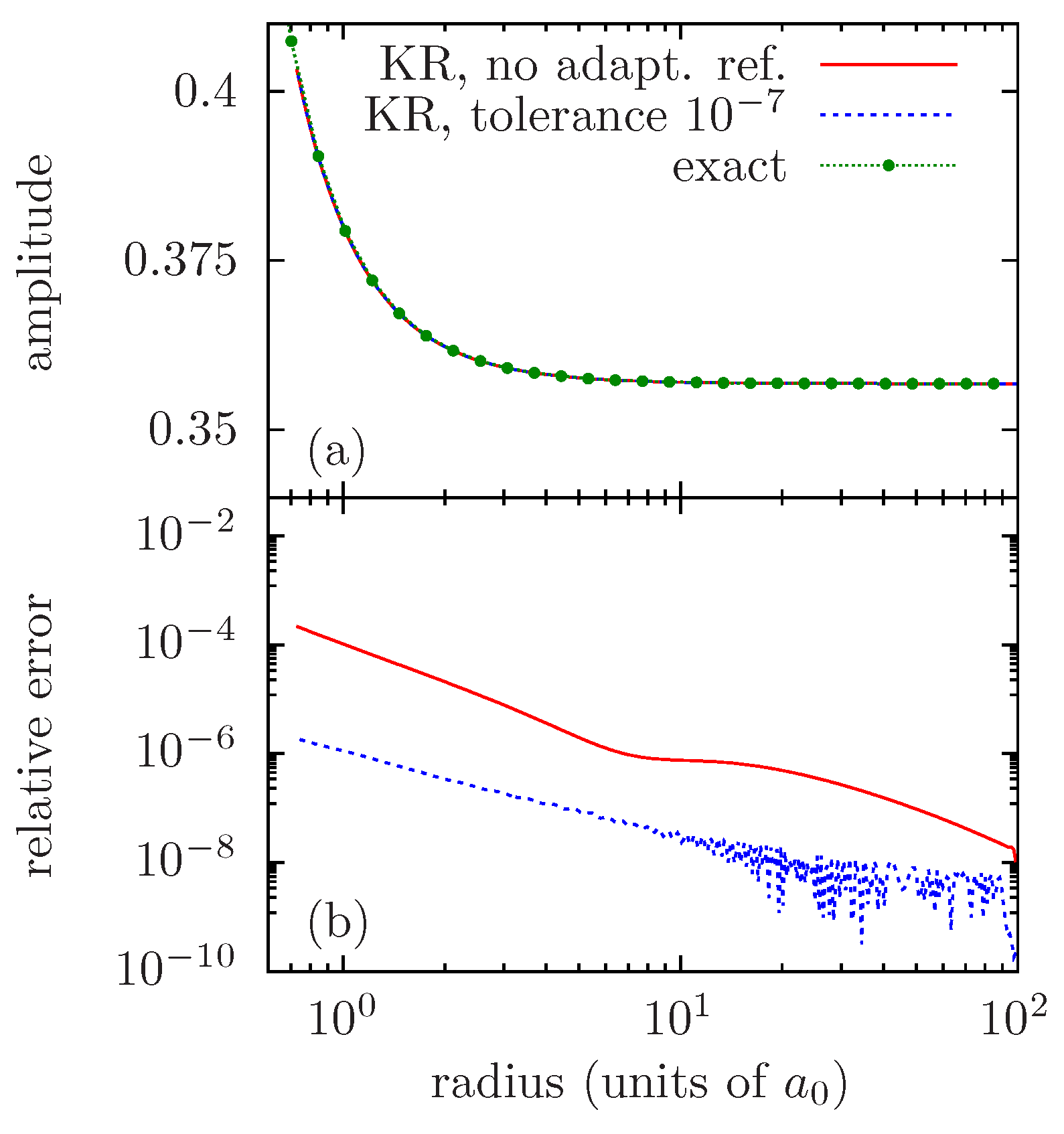

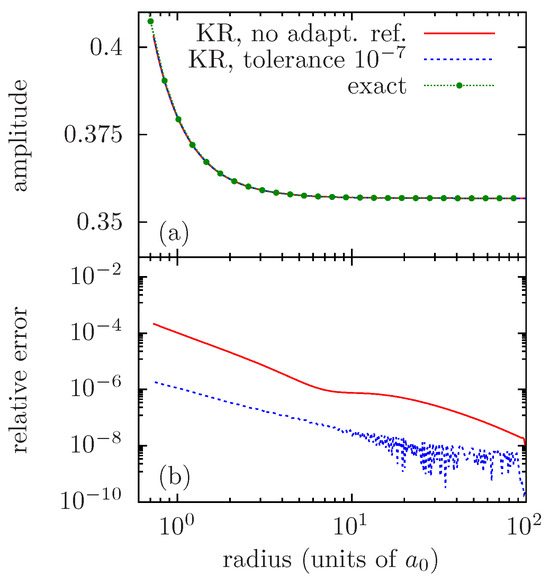

The Kaps–Rentrop (KR) method [33,34] was used by Wilson et al. [5,14] to solve the amplitude equation related to the Dirac equation, which is an analogue of Equation (12). It is an adaptive mesh refinement method based on an implicit scheme.

Figure 4 presents the results obtained with the KR method for the same case as in Figure 2, with the base grid of Figure 2c,d (1000 points, 272 of which lie in the interval). We tried the KR numerical scheme both with and without adaptive grid refinement.

Figure 4.

Amplitude function (a), with the corresponding relative error (b) obtained with the Kaps–Rentrop method, for the case of zero potential, , , using an exponentially spaced base grid of 1000 points, spanning from to . We present results obtained with and without adaptive grid refinement. In the present case, the refined grid corresponding to the precision target of has grid points between and . The initial grid has 272 steps in this region.

Figure 4a shows that the implicit scheme of the KR method appears stable over the whole region between and . When the base grid is used directly, i.e., without adaptive refinement, the KR numerical scheme yields a result of limited precision, with a maximum relative error of about in this case. Improving the precision requires grid refinement. Achieving a maximum relative error of about requires setting the precision target to , which results in a refined grid of points, to be compared with the 272 points of the base grid.

The mechanism may be interpreted as follows. When the numerical solution is propagated, errors accumulate and deviate the obtained solution from the slowly varying amplitude. These errors may be seen as a linear perturbation such as that of Equation (29). As soon as their magnitude reaches the precision target of the method, the grid has to be refined in order to describe the behavior of the perturbation, which varies rapidly.

A closely related issue is the sensitivity to errors on the boundary condition. Any inconsistency between the boundary condition and the value of can be viewed as a contribution from a rapidly varying component, which results in heavy grid refinement (see, for instance, [21], Figure 6.9, p. 115).

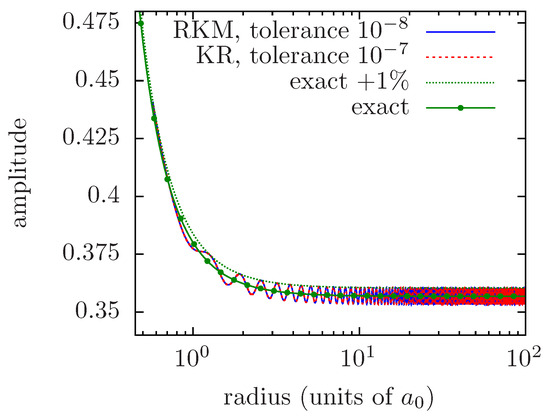

In order to illustrate the latter points, Figure 5 shows the results of the RKM and KR methods when a perturbation larger than the precision target is artificially introduced in the boundary condition. One clearly sees how these methods result in refining the grid so as to correctly sample the rapid variations of the perturbation. The same phenomenon occurs, with the much smaller perturbation induced by accumulated numerical errors, as soon as they reach the precision target.

Figure 5.

Amplitude functions obtained with the Runge–Kutta–Merson and Kaps–Rentrop methods, respectively, for the case of zero potential, , , with a 1% error artificially introduced in the boundary condition. The results show rapid oscillations due to the spurious component of the solution.

4. Proposed Numerical Method

4.1. General Principle

In [20], Kiyokawa uses the following transformation, initially pointed out by Trafton (see [16], Equations (8) and (9)). Multiplying Equation (12) by and rearranging the terms, one can write

and perform the change of function . It was noted by Kiyokawa that, if one expresses and uses Equation (12), one obtains the following relation:

When inserted into Equation (36), the latter relation yields a linear third-order differential equation for Y:

where denotes the third derivative of Y. Kiyokawa used this linear equation in order to derive analytic results on Coulomb wavefunctions [20].
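The derivation can be written out explicitly in an assumed notation: α for the amplitude, λ for the constant of Equation (11), q(r) for the coefficient of the radial equation R'' + qR = 0 (so that Equation (12) reads α'' + qα = λ²/α³), and Y = α². The article’s Equations (36) to (38) may use other symbols.

```latex
\begin{aligned}
Y &= \alpha^2, \qquad Y' = 2\alpha\alpha',\\
\tfrac{1}{2}\,Y'' &= \alpha'^2 + \alpha\alpha''
  = \alpha'^2 + \frac{\lambda^2}{Y} - q\,Y,\\
\tfrac{1}{2}\,Y''' &= 2\alpha'\alpha'' - \frac{\lambda^2\,Y'}{Y^2} - q'\,Y - q\,Y'
  = \Big(\frac{\lambda^2\,Y'}{Y^2} - q\,Y'\Big) - \frac{\lambda^2\,Y'}{Y^2} - q'\,Y - q\,Y',\\
0 &= Y''' + 4q\,Y' + 2q'\,Y .
\end{aligned}
```

The constant λ cancels in the last line. This is the classical equation satisfied by any product of two solutions of R'' + qR = 0.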

In this article, we propose to use Equation (38) instead of Equation (12) for numerical calculations. In principle, using a linear equation should exclude any coupling to spurious, rapidly varying solutions and thus completely solve the issue of sensitivity to errors. We should then obtain a numerical method that is much more robust and can be applied more systematically. In particular, it should be tolerant of any truncation error on the boundary condition. In this respect, it is worth highlighting that the parameter disappears from Equation (38), and the boundary condition may be defined up to any multiplicative constant, as is expected and necessary for a linear equation.
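The scale invariance can be checked on the zero-potential case, where Y = α² is known in closed form. Assuming R = kr j_l(kr) and Q = kr y_l(kr), Y = R² + Q² is a finite polynomial in 1/(kr) given by the classical closed form of j_l² + y_l². The hypothetical helpers below evaluate Y and its derivatives term by term, with q = k² − l(l+1)/r² (assumed notation), and return the relative residual of Y''' + 4qY' + 2q'Y for an arbitrary multiplicative constant.

```python
import math


def free_Y_terms(l, k):
    """(coefficient, power) pairs with Y(r) = sum coef * r**power for V = 0.

    Y = sum_m c_m (2 k r)^(-2(l - m)), normalized so that Y -> 1.
    """
    terms = []
    for m in range(l + 1):
        p = -2 * (l - m)
        c = (math.factorial(2 * l - m) * math.factorial(2 * l - 2 * m)
             / (math.factorial(m) * math.factorial(l - m) ** 2))
        terms.append((c * (2.0 * k) ** p, p))
    return terms


def derivative(terms, order):
    """Differentiate sum coef * r**power term by term, `order` times."""
    for _ in range(order):
        terms = [(c * p, p - 1) for c, p in terms if p != 0]
    return terms


def evaluate(terms, r):
    return sum(c * r**p for c, p in terms)


def third_order_residual(l, k, r, scale=1.0):
    """Relative residual of Y''' + 4 q Y' + 2 q' Y with q = k^2 - l(l+1)/r^2."""
    y = [(scale * c, p) for c, p in free_Y_terms(l, k)]
    q = k**2 - l * (l + 1) / r**2
    dq = 2.0 * l * (l + 1) / r**3
    parts = (evaluate(derivative(y, 3), r),
             4.0 * q * evaluate(derivative(y, 1), r),
             2.0 * dq * evaluate(y, r))
    size = max(abs(v) for v in parts)
    return 0.0 if size == 0.0 else abs(sum(parts)) / size
```

For l = 0 the amplitude is constant and the residual vanishes trivially; for l ≥ 1 all three terms are nonzero and cancel for any value of `scale`.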

Equation (38) involves the first derivative of the potential. In many cases, the Schrödinger equation is solved within an effective-potential approach. Such approaches are most often based either on an analytical ansatz for the potential, like the parametric potential approach of Klapisch [36], or on a self-consistent field model relying on a numerical solution of the Poisson equation. In both cases, the first derivative of the potential can easily be obtained at the grid nodes, at virtually no additional cost.
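For an analytical potential, the derivative is immediate. As a hypothetical example, consider a Yukawa potential V(r) = −Z e^{−r/D}/r, the form used for Figure 1b (parameter names here are illustrative). Assuming Hartree atomic units, in which the coefficient of the radial equation is q = k² − l(l+1)/r² − 2V(r), both q and q' can be evaluated at each node as follows.

```python
import math


def yukawa_q_and_dq(r, k, l, Z, D):
    """q(r) = k^2 - l(l+1)/r^2 - 2 V(r) and q'(r), with V(r) = -Z exp(-r/D)/r.

    Assumes Hartree atomic units with k^2 = 2E, so the potential enters as 2V.
    """
    e = math.exp(-r / D)
    v = -Z * e / r
    dv = Z * e * (1.0 / r**2 + 1.0 / (r * D))  # analytic derivative of V
    q = k**2 - l * (l + 1) / r**2 - 2.0 * v
    dq = 2.0 * l * (l + 1) / r**3 - 2.0 * dv
    return q, dq
```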

In practice, the numerical scheme we propose is mostly an extension of the well-known Numerov scheme, combined with an adaptation of the modified predictor–corrector scheme of Bar Shalom et al. [13].

4.2. Innermost Region

Milne’s amplitude function is singular at zero. For that reason, as in [13], we do not solve for the amplitude function close to zero. We instead solve for the wavefunction using a standard Numerov scheme, propagating the solution outwards. We usually set the matching point at the radius where some chosen fraction of the sampling limit is reached. To minimize the matching error, we also preferably place it near a maximum of the wavefunction.
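The matching-point rule can be sketched with a hypothetical helper. We assume that the "sampling limit" refers to the local Nyquist criterion h·√q(r) = π for a wavefunction of local wavenumber √q, where q is the coefficient of the radial equation R'' + qR = 0, and that the fraction is a user-chosen parameter. The article’s exact criterion is not reproduced here.

```python
import math


def choose_matching_index(r, q, R, fraction=0.5):
    """Hypothetical helper: pick the matching node on a (possibly nonuniform) grid.

    1. Find the first node where the local step r[i+1] - r[i] exceeds
       `fraction` of the Nyquist limit pi / sqrt(q[i]).
    2. Step back to the nearest local maximum of |R| at or below that node.
    """
    n = len(r)
    limit = n - 2
    for i in range(1, n - 1):
        if q[i] > 0.0 and (r[i + 1] - r[i]) * math.sqrt(q[i]) > fraction * math.pi:
            limit = i
            break
    for i in range(limit, 0, -1):
        if abs(R[i]) >= abs(R[i - 1]) and abs(R[i]) >= abs(R[i + 1]):
            return i
    return limit
```

On an exponentially spaced grid, the local step grows with r, so the criterion is met at some finite radius, and the backward search then moves the matching point by at most about half a local wavelength.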

4.3. Intermediate Region

We recast the linear third-order Equation (38) in the following partial canonical form:

where . We treat the first of these two equations using a standard Numerov scheme and the second one using a corollary of the Numerov method for the derivative.
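As an illustration only, here is one way to split the third-order equation Y''' + 4qY' + 2q'Y = 0 (assumed notation, with q the coefficient of the radial equation R'' + qR = 0 and Y = α²) into a second-order equation without a first-derivative term plus a first-order equation. The auxiliary function Z is our choice and need not coincide with the one used in the article’s Equations (39) and (40).

```latex
\begin{aligned}
Z &\equiv Y'' + 2q\,Y,\\
Z' &= Y''' + 2q'\,Y + 2q\,Y' = -2q\,Y'
  \qquad (\text{using } Y''' = -4q\,Y' - 2q'\,Y),\\
Z'' &= -2q'\,Y' - 2q\,Y'' .
\end{aligned}
```

With this choice, Y'' + 2qY = Z has the Numerov form, and differentiating the second equation involves only q and q'. For Y = α², one also finds Z = 2(α'² + λ²/α²), a positive function.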

Let us consider the Taylor expansion for the q-th derivative of , :

Let us assume that r is uniformly sampled: . Using the Taylor expansion at and , we may write

where the n indices denote values of the functions taken at .

First, we use Equation (42) with , and with , , and we eliminate .

Using Equation (39), we obtain

which corresponds to the usual Numerov scheme for a second-order inhomogeneous equation with no first-order derivative.
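For reference, the textbook Numerov recurrence for y'' + g(x)y = s(x) on a uniform grid is sketched below in Python. The names g and s are generic placeholders; the specific coefficients of Equation (39) and the derivative scheme of Equations (45) to (50) are not reproduced here.

```python
import math


def numerov_inhomogeneous(g, s, y0, y1, h):
    """Propagate y'' + g(x) y = s(x) on a uniform grid with the Numerov scheme.

    g and s are lists of node values; y0 and y1 seed the first two nodes.
    The local truncation error is O(h^6).
    """
    c = h * h / 12.0
    y = [y0, y1]
    for n in range(1, len(g) - 1):
        rhs = (2.0 * y[n] * (1.0 - 5.0 * c * g[n])
               - y[n - 1] * (1.0 + c * g[n - 1])
               + c * (s[n + 1] + 10.0 * s[n] + s[n - 1]))
        y.append(rhs / (1.0 + c * g[n + 1]))
    return y
```

For example, with g = s = 1 and exact seeds y(0) = 0, y(h) = 1 − cos h, the recurrence reproduces y = 1 − cos x.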

Then, in order to express , we use Equation (43) with , , and with , , and we eliminate .

Using Equation (40) and its derivative, we obtain

Let us now use Equation (43) with , , reuse Equation (42) with , and eliminate :

Using Equation (48) in Equation (47), and using again Equation (39) to express , we obtain

Finally, we use the latter equation to eliminate in Equation (45):

Thus, we obtain a fully explicit expression for , relying solely on values of and at the points n and . At each step, we use Equation (50) to compute and then Equation (49) to compute . In practice, we use this numerical scheme in the inward direction. This scheme is of order 6 for (regular Numerov scheme) and of order 5 for , which is the main quantity of interest. By comparison, the HPC scheme used by Bar Shalom and coauthors is of order 8.

Finally, looking at Equation (50), we can see that error amplification is to be expected whenever or , in the same way as pointed out in [13]. Although our numerical scheme is much less sensitive to the accumulation of numerical errors, we still need a complementary scheme for regions in which the grid is too coarse. A simple solution is to adapt the modified predictor–corrector scheme proposed in [13].

4.4. Outermost Region

For coarse grids, or in the outermost region of a grid of increasingly spaced points, where , we propose an adaptation of the modified predictor–corrector of Bar Shalom et al. [13]. The key steps are (1) using the slow variation of the amplitude to build a predictor for (, ) and (2) using Equation (39) to obtain from , , thus avoiding error amplification.

Using Equation (43) with , , we have

Let us assume that varies slowly and is thus roughly equal to its average value over three successive grid points:

Using this approximation in Equation (51), one obtains

where we define as follows:

Equation (53) may be used as a predictor for :

Let us now use Equation (43) with , :

Using Equation (40) and the definition of , we obtain

We finally use Equation (39) in order to obtain from :

Because Equation (39) is linear, we obtain a linear algebraic equation for , which we readily solve analytically:

In the method of [13], the equation was nonlinear, and one step of a Newton method was used. When we use Equation (60), the value of is estimated using the predictor of Equation (55). Looking at Equation (60), we clearly see that an amplification of the error on will occur when .

4.5. Method Summary

To summarize, our numerical method is as follows (see also Table 2). From zero to a matching point, we use the usual Numerov scheme outwards, in order to solve Equation (1) for . The inner boundary condition that we use corresponds to a radial wavefunction regular at zero. The chosen matching point is near the point at which some fraction of the sampling limit is reached, preferably close to a maximum of .

Table 2.

Summary of the numerical method proposed in the present work.

We then use Equations (55), (57) and (60) to propagate the solution inwards, starting from the outer boundary condition, until reaching a point where . The outer boundary condition is given by Equation (15), with R, Q given by Equations (22) and (23).

In the region where , we use Equations (49) and (50) to propagate the solution further inwards, up to the matching point.

Matching is performed in order to normalize the inner part of according to the outer boundary condition, as well as to determine the phase at the matching radius. The phase function is subsequently obtained by integrating the function outwards using, for instance, Simpson’s rule.
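A possible implementation of this last step is the cumulative Simpson rule sketched below. It assumes a uniform grid in the integration variable and takes the integrand to be the right-hand side of Milne’s phase equation, ϕ' = λ/α² (assumed notation: α for the amplitude, λ for the constant of Equation (11)). Odd-numbered nodes are started with the standard three-point formula for the first interval. As a check, for a zero potential with l = 1 and k = λ = 1, α² = 1 + 1/r², and the exact phase increment is (r − arctan r) − (r₀ − arctan r₀).

```python
import math


def cumulative_simpson(f, h, phi0=0.0):
    """Cumulative integral of samples f on a uniform grid of step h.

    Even nodes use composite Simpson panels from node 0; node 1 uses the
    three-point rule h/12 (5 f0 + 8 f1 - f2), and odd nodes then continue
    with Simpson panels from node 1. Requires len(f) >= 3.
    """
    phi = [0.0] * len(f)
    phi[0] = phi0
    phi[1] = phi0 + h / 12.0 * (5.0 * f[0] + 8.0 * f[1] - f[2])
    for i in range(2, len(f)):
        phi[i] = phi[i - 2] + h / 3.0 * (f[i - 2] + 4.0 * f[i - 1] + f[i])
    return phi
```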

5. Test of the Method

We tested the present method using linear, quadratic, exponentially spaced, and mixed linear–exponential grids. For each of these grids, the relation between the radius and the uniformly sampled parameter r is recalled in Table 3. Together with the change of variable from to r, we perform the following change of function , which leaves Equations (1), (12) and (38) invariant:

The relevant functions for each grid are also given in Table 3.
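Table 3 itself is not reproduced here. As an assumption, a common choice consistent with the stated invariance is the Liouville-type transformation: with x = x(t) and t uniformly sampled, setting y(x) = (dx/dt)^{1/2} f(t) turns y'' + Q(x)y = 0 into f'' + Q̃(t)f = 0, with Q̃ = (dx/dt)² Q(x) + ½{x; t} and {x; t} = x'''/x' − (3/2)(x''/x')² the Schwarzian derivative. The same factor maps A'' + QA = 1/A³ onto a'' + Q̃a = 1/a³. The sketch below implements this for linear, quadratic and exponential maps (the mixed linear–exponential map is omitted because x(t) is not explicit there); the grid constants and function names are illustrative.

```python
import math
from typing import Callable, Tuple

# Each map returns (x, dx/dt, d2x/dt2, d3x/dt3) at the uniform parameter t.
# The scale constants are illustrative placeholders, not values from the paper.
Derivs = Tuple[float, float, float, float]


def linear_map(t: float, a: float = 0.01) -> Derivs:
    return a * t, a, 0.0, 0.0


def quadratic_map(t: float, a: float = 0.01) -> Derivs:
    """x = a t**2 (requires t > 0 so that dx/dt != 0)."""
    return a * t * t, 2.0 * a * t, 2.0 * a, 0.0


def exponential_map(t: float, a: float = 1.0) -> Derivs:
    x = a * math.exp(t)
    return x, x, x, x


def transformed_q(q: Callable[[float], float],
                  grid: Callable[[float], Derivs], t: float) -> float:
    """Q~(t) = (dx/dt)**2 Q(x) + (1/2) {x; t}, {x; t} being the Schwarzian derivative."""
    x, d1, d2, d3 = grid(t)
    schwarzian = d3 / d1 - 1.5 * (d2 / d1) ** 2
    return d1 * d1 * q(x) + 0.5 * schwarzian


def to_uniform(y: float, grid: Callable[[float], Derivs], t: float) -> float:
    """f(t) = y(x(t)) / sqrt(dx/dt): the function propagated on the uniform grid."""
    return y / math.sqrt(grid(t)[1])
```

For the exponential grid the Schwarzian term is the constant −1/4, which recovers the familiar Q̃ = x²Q(x) − 1/4 used with logarithmic meshes.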

Table 3.

Change of the variables and functions (f) corresponding to various grids.

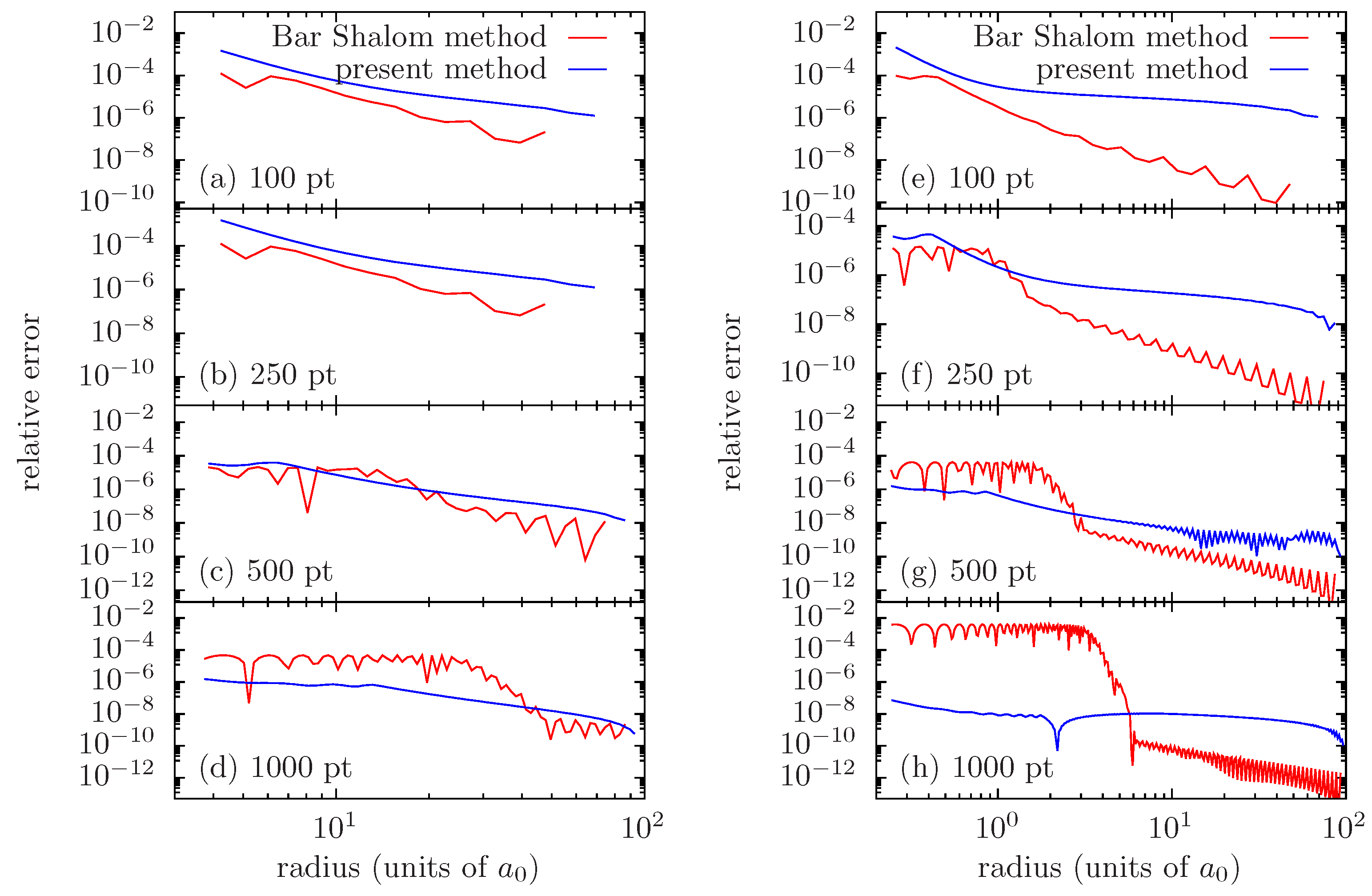

Figure 6 presents the relative error on the amplitude function obtained with our method, compared with that obtained with the method of [13], in the case of zero potential, for , and . To remain as close as possible to the setting of [13], we perform this comparison on an exponentially spaced grid with a varying number of points. We also place the matching point no farther out than the first maximum of .

Figure 6.

Relative error on the amplitude function obtained with the method of Bar-Shalom et al. [13] and with the present method. Case of zero potential, for , (a–d) and (e–h), using an exponentially spaced grid spanning from to with 100 (a,e), 250 (b,f), 500 (c,g), and 1000 (d,h) points, respectively. The error is shown only from the matching radius to the outer boundary of the grid.
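For the zero-potential case of Figure 6, an exact reference amplitude is available. Assuming the standard Milne normalization (phase derivative 1/A²), the free solutions are the Riccati–Bessel functions ĵ_ℓ(kr) = kr j_ℓ(kr) and n̂_ℓ(kr) = kr y_ℓ(kr), whose Wronskian with respect to r equals k, so that A²(r) = [ĵ_ℓ(kr)² + n̂_ℓ(kr)²]/k. The sketch evaluates both functions by upward recurrence; although this recurrence loses accuracy for ĵ_ℓ when kr < ℓ, the error it introduces remains of rounding order relative to n̂_ℓ, so A² itself stays accurate. Function names are hypothetical, and the exact normalization used in Equations (22) and (23) may differ.

```python
import math
from typing import List, Tuple


def riccati_bessel(ell: int, x: float) -> Tuple[float, float]:
    """Return (x j_ell(x), x y_ell(x)) for x > 0 by upward recurrence."""
    j_prev, j_cur = math.sin(x), math.sin(x) / x - math.cos(x)
    y_prev, y_cur = -math.cos(x), -math.cos(x) / x - math.sin(x)
    if ell == 0:
        return j_prev, y_prev
    for m in range(1, ell):
        # f_{m+1} = (2m+1)/x f_m - f_{m-1}, shared by both Riccati-Bessel kinds
        j_prev, j_cur = j_cur, (2 * m + 1) / x * j_cur - j_prev
        y_prev, y_cur = y_cur, (2 * m + 1) / x * y_cur - y_prev
    return j_cur, y_cur


def free_amplitude(ell: int, k: float, r: float) -> float:
    """Milne amplitude for V = 0, assuming phi' = 1/A**2: A**2 = (jhat**2 + yhat**2) / k."""
    jh, yh = riccati_bessel(ell, k * r)
    return math.sqrt((jh * jh + yh * yh) / k)


def relative_error(numerical: List[float], radii: List[float],
                   ell: int, k: float) -> List[float]:
    """|A_num - A_exact| / A_exact at each radius."""
    errors = []
    for a_num, r in zip(numerical, radii):
        a_ref = free_amplitude(ell, k, r)
        errors.append(abs(a_num - a_ref) / a_ref)
    return errors
```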

Errors in the outermost region appear systematically larger with the present method than with the method of [13]. This may indicate that the approximation of Equation (52) is less justified for than it is for . However, the largest contribution to the numerical errors stems from error accumulation in the intermediate region, where the amplitude varies more significantly. Consequently, we do not consider the numerical scheme used in the outermost region to be a limiting factor for our method.
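To make the "slowly varying" condition behind Equation (52) more concrete, suppose (as an assumption, since Equation (52) is not reproduced here) that the approximation replaces a grid function g_n by the mean of g_{n−1}, g_n and g_{n+1} on a uniform grid of step h. A Taylor expansion about the central point gives

$$
\frac{g_{n-1}+g_n+g_{n+1}}{3}-g_n=\frac{g_{n+1}-2g_n+g_{n-1}}{3}=\frac{h^2}{3}\,g''(r_n)+\frac{h^4}{36}\,g^{(4)}(r_n)+O(h^6)
$$

The relative error is thus about h²|g''|/(3|g|), so the approximation is better justified for whichever quantity has the smaller relative curvature on the grid.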

On a coarse grid (see Figure 6a,e), where the method of [13] performs well, the error grows faster in the intermediate region with our fifth-order scheme than with the eighth-order HPC scheme. This is fully expected given the difference in order.

With finer grids (see Figure 6b–d,f–h), the effect of the spurious component that appears with the method of [13] is clearly visible: it puts a lower bound on the achievable precision and ultimately ruins the solution. In contrast, the precision of the present method improves continuously as the grid is refined. Beyond a certain level of refinement, it may become worthwhile to improve the solution in the outermost region. This can be done as suggested in [13], by performing a few iterations after applying the modified predictor–corrector scheme.
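The contrast between a scheme whose error keeps decreasing under refinement and one whose error saturates can be quantified by the observed order of convergence. For errors e₁ and e₂ measured with N₁ and N₂ points over the same radial range, and assuming e ∝ N^{−p}, one has p ≈ ln(e₁/e₂)/ln(N₂/N₁). The helper below is hypothetical, and the values in its checks are synthetic, not data from Figure 6.

```python
import math
from typing import List, Sequence


def observed_orders(n_points: Sequence[int], errors: Sequence[float]) -> List[float]:
    """Observed convergence order between successive refinements.

    Assumes error ~ C * N**(-p). A value near the nominal order (e.g. 5)
    indicates clean convergence; a value near 0 indicates saturation, as
    caused by a spurious component that does not shrink with the grid step.
    """
    orders = []
    for i in range(len(n_points) - 1):
        ratio_err = errors[i] / errors[i + 1]
        ratio_n = n_points[i + 1] / n_points[i]
        orders.append(math.log(ratio_err) / math.log(ratio_n))
    return orders
```

For synthetic errors proportional to N⁻⁵ on the grid sizes of Figure 6 (100, 250, 500, 1000 points), every returned order equals 5 up to rounding, while constant errors give orders of 0.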

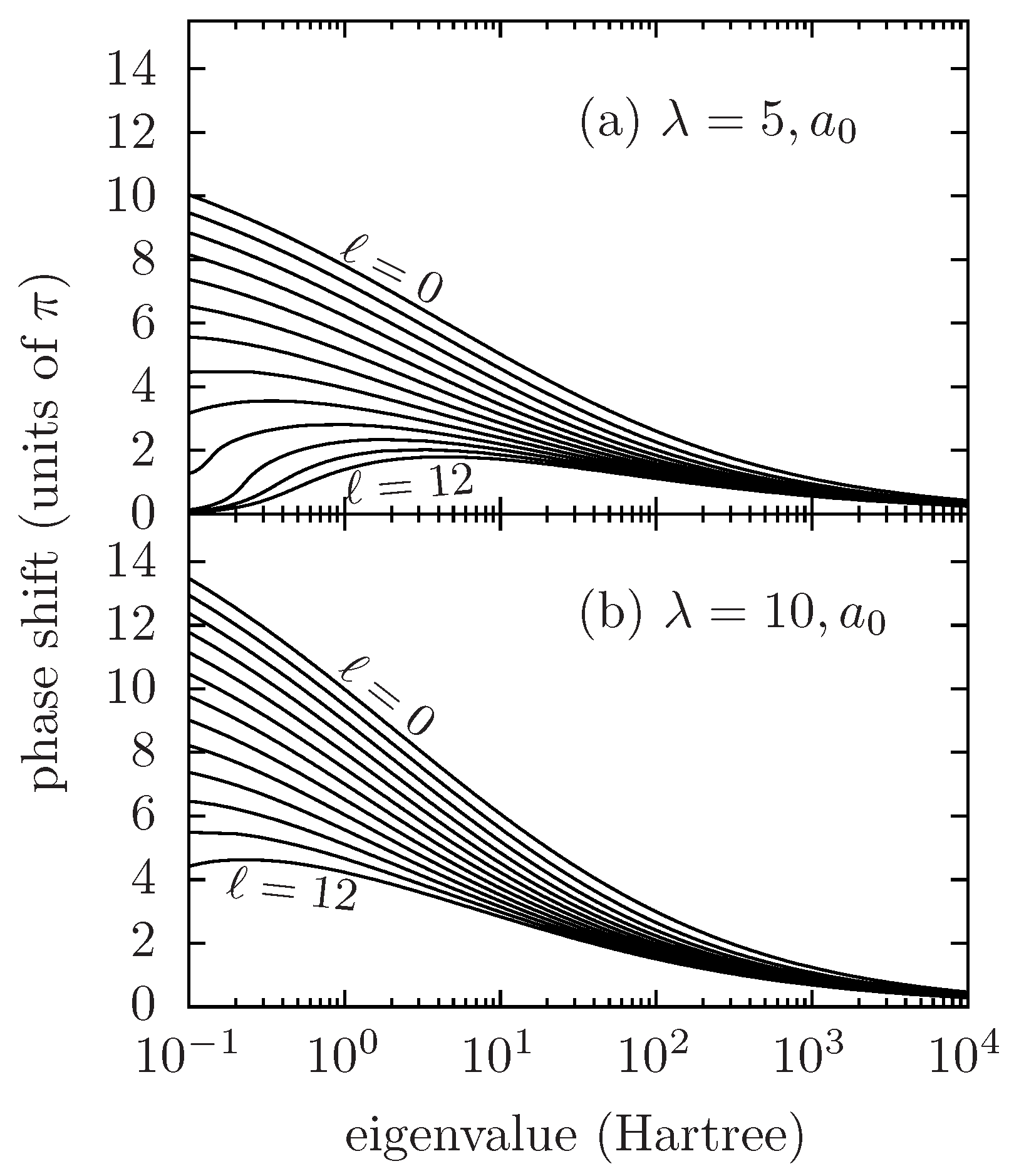

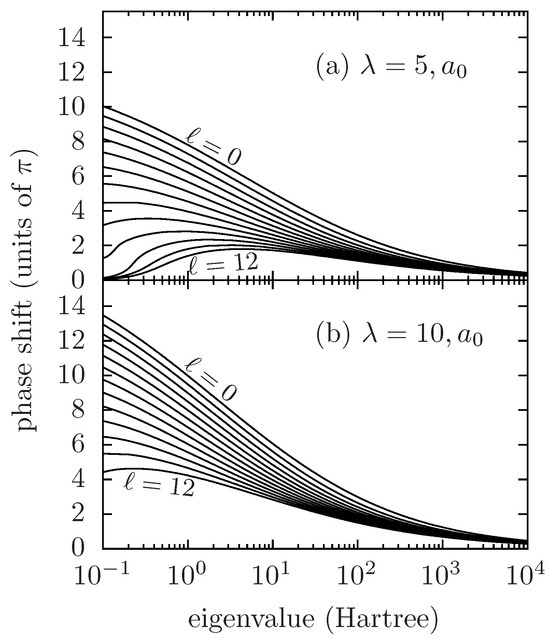

As an example of the systematic application of the present numerical method, Figure 7 shows the phase shifts as functions of the eigenvalue (i.e., the orbital energy) for two fully screened Coulomb potentials with , decay lengths and , and orbital quantum numbers ℓ ranging from 0 to 12. These curves were obtained with the present method on an exponentially spaced grid of 1000 points, spanning from to . Combining the Milne PA representation with the present numerical method makes it possible to compute phase shifts for arbitrarily high eigenvalues while keeping this relatively coarse radial grid. Recovering comparable results from a computation of the oscillatory wavefunction over the same range of radii and energies typically requires a radial grid with a few hundred thousand points.
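For comparison with the conventional route, a phase shift can be read off any regular solution u(r) sampled at two radii r₁ and r₂ beyond the range of a fully screened potential, where u ∝ ĵ_ℓ(kr) cos δ_ℓ − n̂_ℓ(kr) sin δ_ℓ with ĵ_ℓ = kr j_ℓ(kr) and n̂_ℓ = kr y_ℓ(kr). Eliminating the normalization through K = u(r₁)/u(r₂) gives tan δ_ℓ = [ĵ_ℓ(kr₁) − Kĵ_ℓ(kr₂)]/[n̂_ℓ(kr₁) − Kn̂_ℓ(kr₂)]. This textbook two-point matching is shown as a sketch, not as the procedure used for Figure 7; u may come from a dense Numerov integration or, in the phase–amplitude representation, from A sin φ. Function names are hypothetical, and δ_ℓ is returned only modulo π.

```python
import math
from typing import Tuple


def riccati_bessel(ell: int, x: float) -> Tuple[float, float]:
    """(x j_ell(x), x y_ell(x)) by upward recurrence; well suited to x >~ ell."""
    j_prev, j_cur = math.sin(x), math.sin(x) / x - math.cos(x)
    y_prev, y_cur = -math.cos(x), -math.cos(x) / x - math.sin(x)
    if ell == 0:
        return j_prev, y_prev
    for m in range(1, ell):
        j_prev, j_cur = j_cur, (2 * m + 1) / x * j_cur - j_prev
        y_prev, y_cur = y_cur, (2 * m + 1) / x * y_cur - y_prev
    return j_cur, y_cur


def phase_shift(ell: int, k: float, r1: float, u1: float,
                r2: float, u2: float) -> float:
    """delta_ell in (-pi/2, pi/2] from u(r1), u(r2) taken outside the potential range."""
    j1, n1 = riccati_bessel(ell, k * r1)
    j2, n2 = riccati_bessel(ell, k * r2)
    ratio = u1 / u2
    delta = math.atan2(j1 - ratio * j2, n1 - ratio * n2)
    # tan(delta) fixes delta only modulo pi: fold the result into (-pi/2, pi/2]
    if delta > math.pi / 2:
        delta -= math.pi
    elif delta <= -math.pi / 2:
        delta += math.pi
    return delta
```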

Figure 7.

Scattering phase shifts calculated using the present method for two cases of fully screened Coulomb potential with , (a) and (b) for eigenvalues ranging from to Hartree and an orbital quantum number ℓ ranging from 0 to 12.

The computation of wavefunctions for a partially screened potential, i.e., a potential having a Coulomb tail , can be carried out with the same method, using Coulomb wavefunctions instead of Bessel functions in the boundary condition (see Equations (22), (23) and (15)). The relevant phase shifts are then those defined with respect to the Coulomb wavefunctions associated with the asymptotic charge .

6. Conclusions

In the present article, we addressed the challenges inherent in solving Milne’s amplitude equation, a critical step in accurately modeling atomic processes in plasmas. We identified limitations in existing numerical methods, such as the predictor–corrector scheme of Bar-Shalom et al. [13] and adaptive grid refinement techniques, which stem from their tendency to let spurious, rapidly varying solutions emerge. To overcome these limitations, we developed a fully explicit numerical method based on the linear equation derived by Kiyokawa [20]. This approach effectively eliminates the coupling to spurious solutions, resulting in a more robust scheme whose accuracy improves systematically as the grid resolution is increased. To give an indication of the potential of this method, we illustrated its application to the calculation of phase shifts. Applications of this method to the calculation of atomic cross-sections will be pursued in our future work, building notably on previous work on collisional cross-sections [13] and free–free photoabsorption cross-sections [14].

Author Contributions

The authors’ respective contributions are as follows: R.P. studied the limitations of the method of Bar-Shalom et al., conceptualized the present study, proposed the explicit numerical method, and performed the comparisons with that of Bar-Shalom et al.; M.T. explored the use of the Runge–Kutta–Merson method and performed the comparisons with the Kaps–Rentrop method. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data is available upon reasonable request to the authors.

Acknowledgments

R.P. would like to acknowledge the discussions with B. G. Wilson and M. Klapisch on this subject at the 2008 and 2010 editions of the Radiative Properties of Hot Dense Matter conference. The authors would also like to thank the Academic Editor and the three anonymous referees for their relevant suggestions and for giving them the opportunity to enrich this paper with an additional figure.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Blenski, T.; Piron, R.; Caizergues, C.; Cichocki, B. Models of atoms in plasmas based on common formalism for bound and free electrons. High Energy Density Phys. 2013, 9, 687–695.

2. Piron, R. Atomic Models of Dense Plasmas, Applications, and Current Challenges. Atoms 2024, 12, 26.

3. Piron, R. Atoms in Dense Plasmas: Models, Applications, and Current Challenges. Habilitation Thesis, Sorbonne Université, Paris, France, 2024.

4. Liberman, D.A. Self-consistent field model for condensed matter. Phys. Rev. B 1979, 20, 4981–4989.

5. Wilson, B.; Sonnad, V.; Sterne, P.; Isaacs, W. Purgatorio—A new implementation of the Inferno algorithm. J. Quant. Spectrosc. Radiat. Transf. 2006, 99, 658–679.

6. Piron, R.; Blenski, T. Variational-average-atom-in-quantum-plasmas (VAAQP) code and virial theorem: Equation-of-state and shock-Hugoniot calculations for warm dense Al, Fe, Cu, and Pb. Phys. Rev. E 2011, 83, 026403.

7. Piron, R.; Blenski, T. Average-atom model calculations of dense-plasma opacities: Review and potential applications to white-dwarf stars. Contrib. Plasma Phys. 2018, 58, 30–41.

8. Shannon, C. Communication in the Presence of Noise. Proc. IRE 1949, 37, 10–21.

9. Starrett, C.E.; Shaffer, N.R. Average-atom model with Siegert states. Phys. Rev. E 2023, 107, 025204.

10. Batishchev, P.A.; Tolstikhin, O.I. Siegert pseudostate formulation of scattering theory: Nonzero angular momenta in the one-channel case. Phys. Rev. A 2007, 75, 062704.

11. Milne, W.E. The numerical determination of characteristic numbers. Phys. Rev. 1930, 35, 863–867.

12. Goldstein, M.; Thaler, R.M. Bessel Functions for Large Arguments. Math. Tables Other Aids Comput. 1958, 12, 18–26.

13. Bar-Shalom, A.; Klapisch, M.; Oreg, J. Phase-amplitude algorithms for atomic continuum orbitals and radial integrals. Comput. Phys. Commun. 1996, 93, 21–32.

14. Wilson, B.; Sonnad, V.; Sterne, P.; Isaacs, W. Improvements in the phase-amplitude method for calculating free-free gaunt factors and spherical bessel function of high angular momentum. J. Quant. Spectrosc. Radiat. Transf. 2003, 81, 499–512.

15. Seaton, M.J.; Peach, G. The Determination of Phases of Wave Functions. Proc. Phys. Soc. 1962, 79, 1296.

16. Trafton, L. A rapid numerical solution to the radial Schroedinger equation in the oscillatory region. J. Comput. Phys. 1971, 8, 64–72.

17. Rawitscher, G. A spectral Phase–Amplitude method for propagating a wave function to large distances. Comput. Phys. Commun. 2015, 191, 33–42.

18. Bar-Shalom, A.; Oreg, J.; Klapisch, M. Non-LTE Superconfiguration Collisional Radiative Model. J. Quant. Spectrosc. Radiat. Transf. 1997, 58, 427–439.

19. Bar-Shalom, A.; Klapisch, M.; Oreg, J. HULLAC, an integrated computer package for atomic processes in plasmas. J. Quant. Spectrosc. Radiat. Transf. 2001, 71, 169–188.

20. Kiyokawa, S. Exact solution to the Coulomb wave using the linearized phase-amplitude method. AIP Adv. 2015, 5, 087150.

21. Piron, R. Variational Average-Atom in Quantum Plasmas (VAAQP). Ph.D. Thesis, École Polytechnique, Palaiseau, France, 2009.

22. Good, R.H. The Generalization of the WKB Method to Radial Wave Equations. Phys. Rev. 1953, 90, 131–137.

23. Rosen, M.; Yennie, D.R. A Modified WKB Approximation for Phase Shifts. J. Math. Phys. 1964, 5, 1505–1515.

24. Wald, S.S.; Lu, P. Modified WKB approximation for phase shifts of an attractive singular potential. Phys. Rev. D 1974, 10, 3434–3440.

25. Stein, J.; Ron, A.; Goldberg, I.B.; Pratt, R.H. Generalized WKB approximation to nonrelativistic normalizations and phase shifts in a screened Coulomb potential. Phys. Rev. A 1987, 36, 5523–5529.

26. Calogero, F. A novel approach to elementary scattering theory. Il Nuovo Cimento 1963, 27, 261–302.

27. Calogero, F. Variable Phase Approach to Potential Scattering. In Mathematics in Science and Engineering; Elsevier: Amsterdam, The Netherlands, 1967; Volume 35, ISBN 978-0121555504.

28. Green, A.E.S.; Rio, D.E.; Schippnick, P.F.; Schwartz, J.M.; Ganas, P.S. Analytic Phase Shifts for Yukawa Potentials. Int. J. Quantum Chem. 1982, 16, 331–343.

29. Blenski, T.; Ligou, J. An improved shooting method for one-dimensional Schrödinger equation. Comput. Phys. Commun. 1988, 50, 303–311.

30. Nikiforov, A.F.; Novikov, V.G.; Uvarov, V.B. Quantum-Statistical Models of Hot Dense Matter; Birkhäuser: Basel, Switzerland, 2005; ISBN 978-3764321833.

31. Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables; Dover Publications, Inc.: New York, NY, USA, 1965; ISBN 978-0486612720.

32. Hamming, R.W. Numerical Methods for Scientists and Engineers; Dover Publications, Inc.: Garden City, NY, USA, 1986; ISBN 978-0486652412.

33. Kaps, P.; Rentrop, P. Generalized Runge-Kutta Methods of Order Four with Stepsize Control for Stiff Ordinary Differential Equations. Numer. Math. 1979, 33, 55–68.

34. Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical Recipes: The Art of Scientific Computing; Cambridge University Press: Cambridge, UK, 2007; ISBN 978-0521880688.

35. Merson, R.H. An operational method for the study of integration processes. In Proceedings of the Conference Data Processing and Automatic Computing Machines, Weapons Research Establishment Salisbury, Salisbury, Australia, 3–8 June 1957; pp. 110-1–110-26.

36. Klapisch, M. A program for atomic wavefunction computations by the parametric potential method. Comput. Phys. Commun. 1971, 2, 239–260.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).