Abstract

The three-flavor neutrino oscillation paradigm is well established in particle physics thanks to the crucial contribution of accelerator neutrino beam experiments. In this paper, we review the most important contributions of these experiments to the physics of massive neutrinos after the discovery of $\theta_{13}$, together with the future perspectives in such a lively field of research. Special emphasis is given to the technical challenges of high power beams and the oscillation results of T2K, OPERA, ICARUS, and NO$\nu$A. We discuss in detail the role of accelerator neutrino experiments in the precision era of neutrino physics in view of DUNE and Hyper-Kamiokande, the program of systematic uncertainty reduction, and the development of new beam facilities.

1. Introduction

High intensity neutrino beams at energies above 100 MeV have been produced at proton accelerators since 1962 and have played a key role in the foundation of the electroweak theory and the study of nuclear structure. Unlike other neutrino sources, they provide an unprecedented level of control of the flavor at source, the flux, and the energy, which has been instrumental in confirming the very existence of neutrino oscillations and in measuring the oscillation parameters with high precision. Accelerator neutrino beams [1] are mostly sources of $\nu_\mu$ because the vast majority of neutrinos produced at accelerators originate from the decay in flight of multi-GeV pions. As a consequence, they play a role in neutrino oscillation physics only if the oscillation length is within the earth diameter for the neutrino energy range E accessible to accelerators (0.1–100 GeV). The existence of at least one oscillation length smaller than the earth diameter and involving $\nu_\mu$ in the GeV energy range was established by Super-Kamiokande in 1998 [2,3]. In the modern formalism that describes neutrino oscillation (see Section 2), this corresponds to saying that both the mixing angle between the second and third neutrino mass eigenstates ($\theta_{23}$) and their squared mass difference ($\Delta m^2_{32} \equiv m_3^2 - m_2^2$) are different from zero. This finding opened up the modern field of neutrino oscillations at accelerators. Since $|\Delta m^2_{32}| \simeq 2.5 \times 10^{-3}$ eV$^2$ and the oscillation length is:

$$L_{\mathrm{osc}} = \frac{4\pi E}{|\Delta m^2_{32}|} \simeq 2.48\ \mathrm{km}\ \frac{E\,[\mathrm{GeV}]}{|\Delta m^2_{32}|\,[\mathrm{eV}^2]},$$

oscillations are visible for a GeV neutrino if the source-to-detector distance (“baseline”, L) is of the order of several hundred km. It is fortunate that oscillations are visible at propagation lengths smaller than the earth diameter because both the source and the detector can be artificial, i.e., designed by the experimentalist to maximise sensitivity and precision. On the other hand, it is unfortunate that the baselines of interest are hundreds of km long (“long-baseline experiments”): since neutrinos cannot be directly focused, the divergence of the beam is large and the transverse size of the flux at the detector is much larger than the size of the detector itself. As a consequence, the number of neutrinos crossing the detector decreases as $1/L^2$ and such a drop must be compensated by brute force, increasing the number of neutrinos at source. All beams discussed in this paper are high power beams driven by proton accelerators operating at an average power of several hundred kW.
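The scales discussed above can be checked with a few lines of Python. This is only a sketch: it assumes the practical-units form of the oscillation length (2.48 km × E[GeV] / Δm²[eV²]) and an illustrative atmospheric splitting of 2.5 × 10⁻³ eV²; the function names are ours and do not come from any experiment's software.

```python
DM2_ATM = 2.5e-3  # eV^2, assumed atmospheric mass splitting (illustrative value)


def oscillation_length_km(energy_gev, dm2_ev2=DM2_ATM):
    """Full oscillation length 4*pi*E/dm2 in km (E in GeV, dm2 in eV^2)."""
    return 2.48 * energy_gev / dm2_ev2


def first_maximum_km(energy_gev, dm2_ev2=DM2_ATM):
    """Baseline of the first oscillation maximum: half an oscillation length."""
    return 0.5 * oscillation_length_km(energy_gev, dm2_ev2)


def relative_flux(baseline_km, reference_km=1.0):
    """Flux at baseline_km relative to reference_km for a divergent beam (1/L^2)."""
    return (reference_km / baseline_km) ** 2


if __name__ == "__main__":
    for energy in (0.6, 1.0, 2.0):
        print(f"E = {energy} GeV: first maximum near {first_maximum_km(energy):.0f} km")
    print(f"flux at 295 km relative to 1 km: {relative_flux(295.0):.2e}")
```

With these assumptions, a 0.6 GeV beam reaches its first oscillation maximum close to 300 km, while the flux is diluted by roughly five orders of magnitude with respect to a detector at 1 km, which is why high power beams are needed.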

Even if the inception of the study of massive neutrinos at accelerators can be dated back to 1998, the golden age of accelerator neutrino physics has just started, triggered by the discovery of $\theta_{13}$. In 2012, reactor (Daya Bay [4], RENO [5]) and accelerator (T2K [6]) experiments demonstrated that even the mixing angle between the first and third neutrino mass eigenstates is non-zero. Indeed, $\theta_{13}$ is quite large ($\theta_{13} \simeq 8.5^\circ$) so that the entire neutrino mixing matrix (PMNS, see Section 2) has a very different structure compared with the Cabibbo–Kobayashi–Maskawa matrix. This result corroborates once more the fact that the Yukawa sector of the Standard Model is far from being understood both in the quark and lepton sector. From the experimental point of view, a large $\theta_{13}$ opens up the possibility of observing simultaneously $\nu_\mu \to \nu_\tau$ and $\nu_\mu \to \nu_e$ oscillations in long baseline experiments and, in general, three family interference effects. This is the reason why neutrino physicists refer to the current and future generation of beam experiments as the experiments for the “precision era” of neutrino physics. These modern experiments must disentangle the missing parameters of the Standard Model (SM) Lagrangian in the neutrino sector (the CP violating phase, the ordering of the mass eigenstates) from small perturbations in the oscillation probability at the GeV scale. Since the baselines of these experiments range from 295 km (T2K) to 1300 km (DUNE), beam intensity and statistics still play an important role. However, systematics will soon become the limiting factor for precision neutrino physics, especially in the DUNE and Hyper-Kamiokande era.

In this paper, we will review the most important results that appeared after the discovery of $\theta_{13}$ in long baseline beams of increasing power. We will start in Section 3 from the CNGS, a 500 kW power beam operated up to 2012 to study $\nu_\mu \to \nu_\tau$ oscillations. Subleading $\nu_\mu \to \nu_e$ oscillations are currently investigated by two very high power beams at J-PARC and Fermilab serving T2K (Section 4) and NO$\nu$A (Section 5), respectively. These acceleration complexes will be upgraded to the status of “Super-beams” (>1 MW power) to carry on the ambitious physics program of DUNE and Hyper-Kamiokande (Section 6). The ancillary systematic reduction program using current and novel acceleration techniques will be discussed in Section 7.

2. Three Family Neutrino Oscillations

The Standard Model (SM) of the electroweak interactions was developed when no evidence of massive neutrinos was available. In its original formulation, therefore, the SM neutrinos were neutral massless fermions with left-handed (LH) chirality or, equivalently, LH helicity. The antineutrinos were their right-handed (RH) partners. The discovery of neutrino oscillations falsified the SM in its original formulation, although the theory can be minimally extended to account for massive neutral leptons. In the minimally extended SM [7], massive neutral leptons are treated as (massive) quarks: their flavor eigenstates are linear combinations of mass eigenstates and the linear operator that mixes the flavor and mass eigenfunctions is a complex matrix. In the quark sector, this matrix is called the Cabibbo–Kobayashi–Maskawa (CKM) matrix. The corresponding matrix in the lepton sector is the Pontecorvo–Maki–Nakagawa–Sakata (PMNS) matrix $U$. The index $\alpha$ runs over the flavor eigenstates ($e, \mu, \tau$) and the i index runs over the mass eigenstates ($1, 2, 3$). The flavor fields are thus:

$$\nu_\alpha = \sum_{i=1}^{3} U_{\alpha i}\, \nu_i$$

Here, we dropped the dependence of the field on space-time and the subscript L. Note that, in the (minimally extended) SM, only fields with LH chirality appear in the charged currents (CC) that describe the coupling of fermions with the $W$ bosons. In the original SM, the fields $\nu_\alpha$ describe neutrinos with LH chirality that can be observed as particles with LH helicity. In the minimally extended SM, neutrinos are massive and therefore neutrinos with LH chirality may be observed with spin either antiparallel (LH helicity) or parallel (RH helicity) to the direction of motion. Direct mass measurements and oscillation data, however, suggest that neutrino masses are smaller than the energies of detectable neutrinos by at least five orders of magnitude. As a consequence, for any practical purpose, the helicity of a LH chirality field can be considered LH as well. The neutrino fields in the SM are Dirac fields, as for the case of quarks. The anti-neutrino fields describe particles that are different from the neutrinos (“anti-neutrinos”) and only RH anti-neutrinos couple with the W bosons in CC interactions. Unlike quarks, which are electrically charged and must therefore differ from their antiparticles of opposite electric charge, neutrinos are neutral particles and the anti-particle fields may be the same as the particle fields. In this case, the neutrino fields are Majorana fields and not Dirac fields. This subtlety is immaterial for massless neutrinos because the RH neutrino field can be identified with the RH anti-neutrino field without changing the value of any observable. In the extended SM, there are observables that are sensitive to whether the neutrinos are standard Dirac particles or “Majorana particles” (i.e., elementary fermions that are described by Majorana fields), although the corresponding measurements are extremely challenging due to the smallness of the neutrino masses. The minimally extended SM Lagrangian is built applying the quark formalism to neutrinos and, hence, in this model neutrinos are Dirac particles.
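In this case, as for the CKM, the PMNS matrix is unitary ($U^\dagger U = 1$) and can be parameterized [3] by three angles and one complex phase. The parameterization that has been adopted for the PMNS puts the complex phase in the 1–3 sector, i.e., in the rotation matrix between the first and third mass eigenstates:

$$U = \begin{pmatrix} 1 & 0 & 0 \\ 0 & c_{23} & s_{23} \\ 0 & -s_{23} & c_{23} \end{pmatrix} \begin{pmatrix} c_{13} & 0 & s_{13}\, e^{-i\delta} \\ 0 & 1 & 0 \\ -s_{13}\, e^{i\delta} & 0 & c_{13} \end{pmatrix} \begin{pmatrix} c_{12} & s_{12} & 0 \\ -s_{12} & c_{12} & 0 \\ 0 & 0 & 1 \end{pmatrix} \tag{2}$$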

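In Equation (2), the three rotation angles are labeled $\theta_{12}$, $\theta_{13}$, $\theta_{23}$ and $c_{ij} \equiv \cos\theta_{ij}$, $s_{ij} \equiv \sin\theta_{ij}$. In this parameterization, which has been in use for more than 20 years, $\theta_{ij} \in [0, \pi/2]$ and $\delta \in [0, 2\pi)$. Clearly, physical observables are parameterization-independent and therefore the range of the angles and the choice of the complex phase in the 1–3 sector are made without loss of generality. All parameters of the minimally extended SM can be completely determined by neutrino oscillation experiments except for an overall neutrino mass scale (see Table 1). The neutrino oscillation probability between two flavor eigenstates, $P(\nu_\alpha \to \nu_\beta)$, is the probability to observe a flavor $\beta$ in a neutrino detector located at a distance L from the source. The source produces neutrinos with flavor $\alpha$ and energy E. The oscillation probability is given by:

$$P(\nu_\alpha \to \nu_\beta) = \delta_{\alpha\beta} - 4 \sum_{i>j} \mathrm{Re}\left( U^*_{\alpha i} U_{\beta i} U_{\alpha j} U^*_{\beta j} \right) \sin^2\frac{\Delta m^2_{ij} L}{4E} + 2 \sum_{i>j} \mathrm{Im}\left( U^*_{\alpha i} U_{\beta i} U_{\alpha j} U^*_{\beta j} \right) \sin\frac{\Delta m^2_{ij} L}{2E} \tag{3}$$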

where $\Delta m^2_{ij} \equiv m_i^2 - m_j^2$ and L is the distance travelled by the neutrino. For antineutrino oscillations, U must be replaced by $U^*$ in Equation (3), which corresponds to changing the sign of the third term. As a consequence, oscillation experiments can reconstruct all rotation angles and the CP phase. They can also measure the squared mass differences among eigenstates, although the absolute mass scale must be measured in an independent manner. Note in particular that CP violation in the leptonic sector can be established just by measuring the difference between $P(\nu_\alpha \to \nu_\beta)$ and $P(\bar\nu_\alpha \to \bar\nu_\beta)$ if $\alpha \neq \beta$. Since the leading oscillation terms, i.e., the second term of Equation (3), depend on a squared sine, determining the signs of $\Delta m^2_{ij}$ is also very challenging. In fact, the sign of $\Delta m^2_{21}$ was determined at the beginning of the century by solar neutrino experiments. The oscillation probabilities here are strongly perturbed by matter effects in the sun, which are sensitive to the sign of $\Delta m^2_{21}$. As discussed in Section 5, matter effects in the earth are employed by long-baseline experiments to determine the sign of $\Delta m^2_{32}$, which is not fully established yet.
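A compact way to explore the three-flavor formalism numerically is to build the mixing matrix in the standard parameterization and square the oscillation amplitude, which is algebraically equivalent to expanding the probability in real and imaginary parts. The Python sketch below does this in vacuum only (no matter effects). The mixing angles, the phase, and the mass splittings used in the example are illustrative assumptions, not the values of Table 1, and the function names are ours.

```python
import cmath
import math


def pmns(theta12, theta13, theta23, delta):
    """Standard parameterization with the CP phase in the 1-3 sector (radians)."""
    c12, s12 = math.cos(theta12), math.sin(theta12)
    c13, s13 = math.cos(theta13), math.sin(theta13)
    c23, s23 = math.cos(theta23), math.sin(theta23)
    ep, em = cmath.exp(1j * delta), cmath.exp(-1j * delta)
    return [
        [c12 * c13, s12 * c13, s13 * em],
        [-s12 * c23 - c12 * s23 * s13 * ep, c12 * c23 - s12 * s23 * s13 * ep, s23 * c13],
        [s12 * s23 - c12 * c23 * s13 * ep, -c12 * s23 - s12 * c23 * s13 * ep, c23 * c13],
    ]


def probability(alpha, beta, baseline_km, energy_gev, u, dm21, dm31, antineutrino=False):
    """Vacuum probability for flavor index alpha -> beta (0 = e, 1 = mu, 2 = tau)."""
    if antineutrino:
        # Antineutrinos: replace U by its complex conjugate.
        u = [[x.conjugate() for x in row] for row in u]
    m2 = (0.0, dm21, dm31)  # squared masses relative to m1^2, in eV^2
    amplitude = 0j
    for i in range(3):
        # m_i^2 L / (2E) in practical units (eV^2, km, GeV)
        phase = 2.0 * 1.267 * m2[i] * baseline_km / energy_gev
        amplitude += u[alpha][i].conjugate() * u[beta][i] * cmath.exp(-1j * phase)
    return abs(amplitude) ** 2


if __name__ == "__main__":
    # Illustrative inputs only: angles in degrees, delta = -pi/2, T2K-like L and E.
    u = pmns(math.radians(33.4), math.radians(8.6), math.radians(45.0), -math.pi / 2)
    for anti in (False, True):
        p = probability(1, 0, 295.0, 0.6, u, 7.5e-5, 2.5e-3, antineutrino=anti)
        print("antineutrino" if anti else "neutrino", f"P(mu -> e) = {p:.4f}")
```

For a non-trivial phase, the neutrino and antineutrino appearance probabilities differ, while they coincide when the phase is set to zero; unitarity guarantees that the probabilities summed over the final flavor add up to one.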

Table 1.

Current value of the mass and mixing parameters as extracted from neutrino oscillation data under the hypothesis of normal hierarchy (current best fit) [14].

The choice of Dirac fields for the description of neutrinos in the minimally extended SM is the simplest from the technical point of view, but it is very disputable in the light of the gauge principle [8]. The most general Lagrangian compatible with the gauge symmetry and containing all SM particles (including massive neutrinos) allows for “Majorana mass terms”, i.e., bilinear terms built from a single neutrino field and the charge conjugation matrix C. It is possible to show that these terms imply that neutrinos are Majorana particles, so they must be removed by hand from the minimally extended SM based on Dirac fields. A vast class of SM extensions is motivated by the existence of Majorana particles. Several of these models are also able to explain the smallness of neutrino masses through the “see-saw mechanism” [9,10,11,12,13]. Neutrino oscillations, however, cannot address this kind of issue. If neutrinos are Majorana particles, the PMNS matrix is only approximately unitary and can be parameterized with three rotation angles and three phases as:

$$U = U_D\, D_M$$

where:

$$D_M = \mathrm{diag}\left(1,\ e^{i\alpha_{21}/2},\ e^{i\alpha_{31}/2}\right)$$

and $U_D$ is the PMNS in the minimally extended SM (Equation (2)). On the other hand, the oscillation formula of Equation (3) does not depend on these additional phases. The oscillation measurements are therefore robust against the Dirac vs. Majorana nature of neutrinos but, for the same reason, do not provide information to determine such nature.

3. The CNGS Neutrino Beam

In 1998, disappearance measurements of atmospheric muon neutrinos, i.e., measurements of $P(\nu_\mu \to \nu_\mu)$ with neutrinos produced by interactions of cosmic rays with the earth atmosphere, provided evidence for non-zero values of both $\theta_{23}$ and $\Delta m^2_{32}$. At that time, $\theta_{13}$ was not known and only upper limits from the Chooz reactor experiment [15] were available. In those years, there was no evidence of oscillations in appearance mode, i.e., no measurements demonstrating non-zero values of $P(\nu_\alpha \to \nu_\beta)$ for any $\alpha \neq \beta$. Such evidence was still lacking in 2002, when solar and reactor experiments employing $\nu_e$ and $\bar\nu_e$, whose energy was well below the kinematic threshold for the production of muons, demonstrated that $\theta_{12} \neq 0$ and $\Delta m^2_{21} \simeq 7.5 \times 10^{-5}$ eV$^2$. In order to observe in a neutrino detector the appearance of new flavors ($\nu_\alpha \to \nu_\beta$ with $\beta \neq \alpha$), the energy of the neutrino must be well above the kinematic threshold for the production of the lepton of flavor $\beta$. For solar and reactor neutrinos, this is not possible since the energies of their $\nu_e$ and $\bar\nu_e$ are much smaller than the muon mass $m_\mu$. Atmospheric neutrinos, on the other hand, may be the right natural source since their energy spectrum extends well above the $\mu$ and even the $\tau$ kinematic threshold. At that time, there was no evidence of $\nu_\mu \to \nu_e$ oscillations at the atmospheric energy scale and, therefore, the disappearance of $\nu_\mu$ was ascribed to $\nu_\mu \to \nu_\tau$ oscillations. The CERN-to-Gran Sasso (CNGS) [16,17] neutrino beam was designed to provide evidence of $\nu_\mu \to \nu_\tau$ oscillations in appearance mode, i.e., observing explicitly the appearance of $\nu_\tau$ by detecting $\tau$ leptons produced by $\nu_\tau$ CC events in the detector. The CNGS beam exploited the Gran Sasso laboratories (LNGS), an existing underground laboratory located 730 km from CERN. This constraint fixed the baseline of the CNGS experiments: OPERA and ICARUS. The CNGS has been the only long-baseline beam whose energy ($\langle E \rangle \simeq 17$ GeV) was well above the kinematic threshold for the production of the tau lepton (3.5 GeV for $\nu_\tau$ CC scattering in nuclei). The energy was tuned by maximizing the number of observable $\nu_\tau$ CC events:

$$N_\tau \propto \int \phi_{\nu_\mu}(E)\, \sin^2 2\theta_{23}\, \sin^2\!\left( 1.27\, \frac{\Delta m^2_{32} L}{E} \right) \sigma^{CC}_{\nu_\tau}(E)\, \epsilon(E)\, dE \tag{6}$$

where $\phi_{\nu_\mu}$ is the CNGS flux, $\epsilon$ is the detector efficiency, and $\sigma^{CC}_{\nu_\tau}$ is the $\nu_\tau$ CC cross-section. In this formula, we expressed the oscillation phase in practical units: eV$^2$ for $\Delta m^2_{32}$, km for L, and GeV for E. In particular, a high energy beam is beneficial to produce tau leptons with a large cross-section ($\sigma^{CC}_{\nu_\tau}$ grows approximately linearly with E for E well above 3.5 GeV) and boosted in the laboratory frame. Such a boost is needed to observe the decay in flight of the taus in the OPERA nuclear emulsion sheets (see below) and substantially increases $\epsilon$. On the other hand, an energy much larger than the energy of the first oscillation maximum reduces the oscillation probability as ∼$1/E^2$ due to the fixed baseline of LNGS. The actual CNGS beam energy has thus been optimized accounting for these requirements, and 17 GeV is the value that maximizes Equation (6). As a matter of fact, the CNGS observes oscillations off the peak of the oscillation maximum since:

$$1.27\, \frac{\Delta m^2_{32} L}{E} \simeq 1.27 \times \frac{2.5 \times 10^{-3} \times 730}{17} \simeq 0.14 \ll \frac{\pi}{2}$$

In the framework of the minimally extended SM, the CNGS thus provides a direct test of the oscillation phenomenon in appearance mode ($\nu_\mu \to \nu_\tau$) and a test of the oscillation pattern in a region of the parameter space far from the oscillation maximum.
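To make the off-peak regime of the CNGS concrete, the following Python sketch evaluates the oscillation phase and the leading two-flavor appearance probability for the baseline and mean energy quoted in the text (730 km, 17 GeV). The maximal mixing and the value of the atmospheric mass splitting are illustrative assumptions, and the two-flavor formula is only the leading-order approximation of the full three-flavor expression.

```python
import math

DM2_ATM = 2.5e-3     # eV^2, assumed atmospheric mass splitting (illustrative)
SIN2_2THETA23 = 1.0  # assumed maximal mixing (illustrative)


def oscillation_phase(baseline_km, energy_gev, dm2_ev2=DM2_ATM):
    """Oscillation phase 1.27 * dm2 * L / E in practical units."""
    return 1.27 * dm2_ev2 * baseline_km / energy_gev


def appearance_probability(baseline_km, energy_gev, dm2_ev2=DM2_ATM):
    """Leading two-flavor nu_mu -> nu_tau probability."""
    return SIN2_2THETA23 * math.sin(oscillation_phase(baseline_km, energy_gev, dm2_ev2)) ** 2


def first_maximum_energy_gev(baseline_km, dm2_ev2=DM2_ATM):
    """Energy at which the phase equals pi/2 for a given baseline."""
    return 1.27 * dm2_ev2 * baseline_km / (math.pi / 2.0)


if __name__ == "__main__":
    phase = oscillation_phase(730.0, 17.0)
    print(f"CNGS phase: {phase:.3f} rad (first maximum at {math.pi / 2:.3f} rad)")
    print(f"P(nu_mu -> nu_tau) at 17 GeV: {appearance_probability(730.0, 17.0):.4f}")
    print(f"energy of first maximum at 730 km: {first_maximum_energy_gev(730.0):.2f} GeV")
```

Under these assumptions, the appearance probability at the mean CNGS energy is at the percent level, and the first oscillation maximum at 730 km sits below the tau production threshold, which illustrates why the beam energy results from a compromise rather than from matching the oscillation peak.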

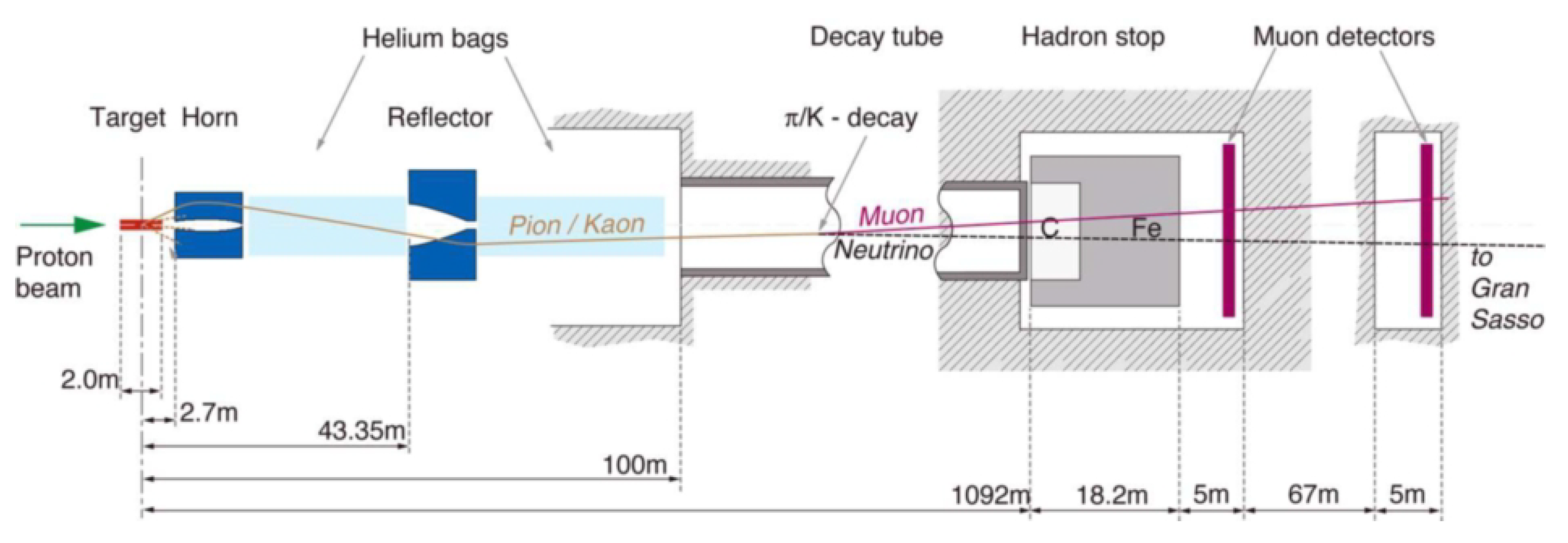

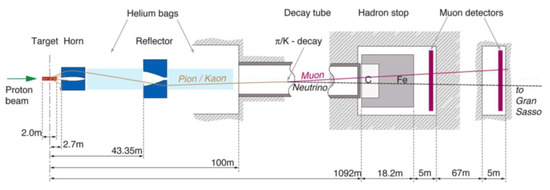

The CNGS neutrino beam (see Figure 1) was produced by 400 GeV/c protons extracted from the SPS accelerator at CERN and transported along an 840 m long beam-line to a carbon target producing kaons and pions [18]. The only potential source of $\nu_\tau$ was the decay of $D_s$ mesons in the proximity of the target, which contributed in a negligible manner to the neutrino flux. The positively charged $\pi$/K were energy-selected and guided with two focusing lenses (“horn” and “reflector”) in the direction of the Gran Sasso Laboratories in Italy. These particles decayed into $\mu^+$ and $\nu_\mu$ in a 1 km long evacuated decay pipe. All the hadrons, i.e., protons that had not interacted in the target and pions and kaons that had not decayed in flight, were absorbed in a hadron stopper. Only neutrinos and muons crossed this 18 m long block of graphite and iron. The muons, which were ultimately absorbed downstream in around 500 m of rock, were monitored by muon detector stations.

Figure 1.

Layout of the CNGS neutrino beam.

Unlike all other long baseline facilities, the CNGS was not equipped with a near detector due to the cost and complexity of building a large underground experimental area located after the CNGS decay pipe. In addition, since the CNGS was designed to study the appearance of tau neutrinos in a beam with a negligible contamination of $\nu_\tau$, the need for a near detector was less compelling than in other long-baseline beams. The CNGS beam ran from 2008 to 2012, providing OPERA with an integrated exposure of about $1.8 \times 10^{20}$ protons on target (pot). OPERA exploited this large flux using real-time (“electronic”) detectors interleaved with emulsion cloud chambers (ECC).

The OPERA [18] target had a total mass of about 1.25 kt and was composed of two identical sections. Each section consisted of 31 walls of Emulsion Cloud Chamber (ECC) bricks, interleaved with planes of horizontal and vertical scintillator bars (Target Tracker) used to select the ECC bricks in which a neutrino interaction had occurred. Each ECC brick consisted of 57 emulsion films interleaved with lead plates (1 mm thickness), with a ($12.8 \times 10.2$) cm$^2$ cross-section and a total thickness corresponding to about 10 radiation lengths. Magnetic muon spectrometers were located downstream of each target section and were instrumented with resistive plate chambers and drift tubes.

Over the years, a total of 19,505 neutrino interaction events in the target were recorded by the electronic detectors, of which 5603 were fully reconstructed in the OPERA emulsion films [19].

The construction and data analysis of OPERA were a tremendous challenge due to the need to identify tau leptons on an event-by-event basis. Even with current technologies, the only means to achieve this goal by detecting the decay kink with very high purity is the use of nuclear emulsions. This technique was exploited in the 1990s by the CHORUS experiment to search for short baseline oscillations [20] and extended to a much larger scale by OPERA. Nuclear emulsions consist of AgBr crystals scattered in a gelatine binder. After the passage of a charged particle, the crystal produces electron–hole pairs in proportion to the deposited energy. During a chemical-physical process known as development, the reducer in the developer gives electrons to the crystal through the latent image center and creates silver metal filaments, scavenging silver atoms from the crystal. This process increases the number of metal silver atoms by many orders of magnitude. The grains of silver atoms reach a diameter of about 0.6 μm and, therefore, become visible with an optical microscope.

The development procedure is irreversible, but it must be performed within a finite time (a few years) to prevent aging of the emulsions. As a consequence, the electronic detectors must locate the ECC brick in real time, and a semi-automatic system must remove the brick while OPERA is still taking data and bring it to the development facility. The scanning of the emulsions can be done at any time since the particle tracks are permanently fixed in the bulk of the emulsions, but a fast scanning time is a major asset to provide feedback on the detector performance and optimize the analysis chain.

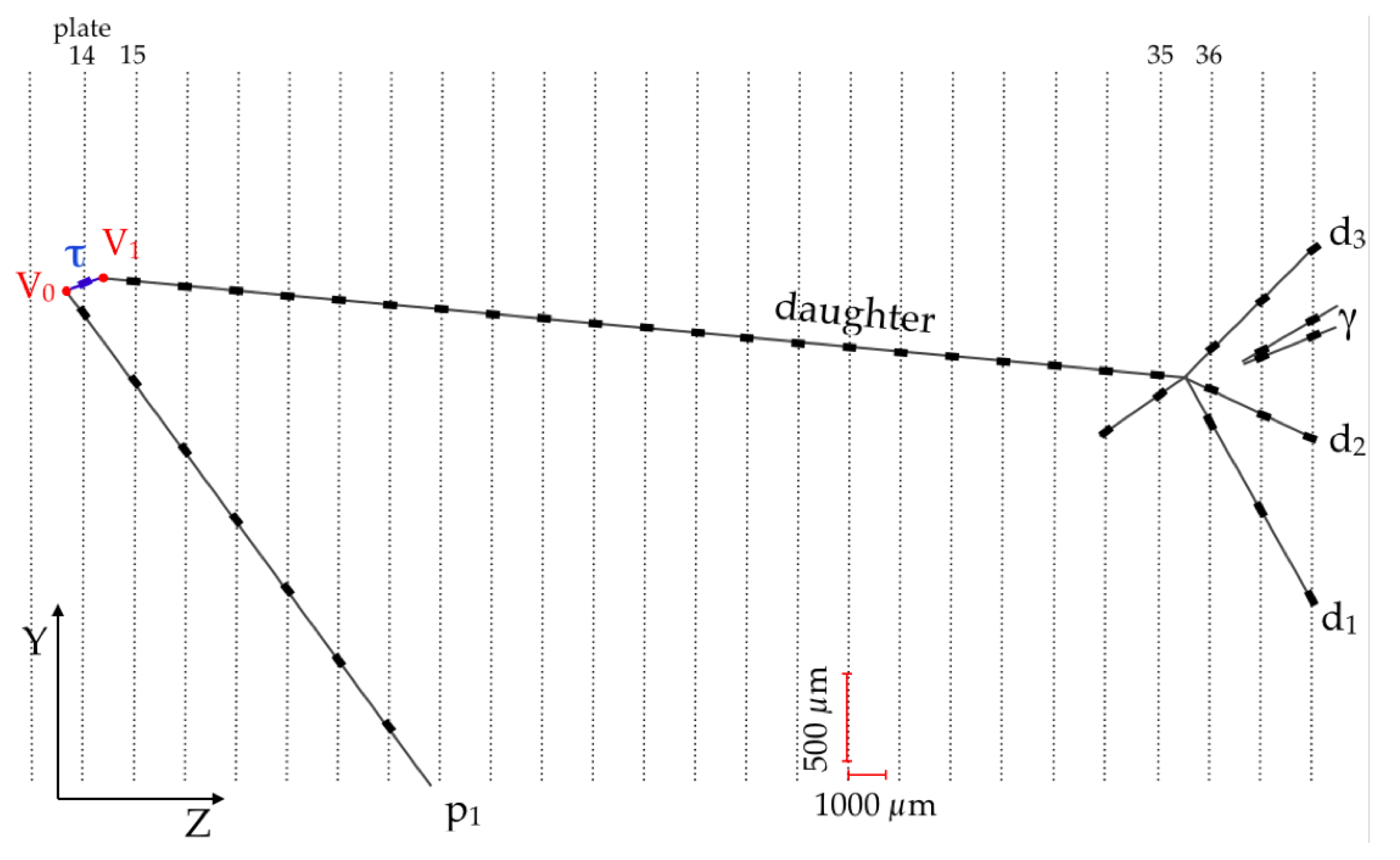

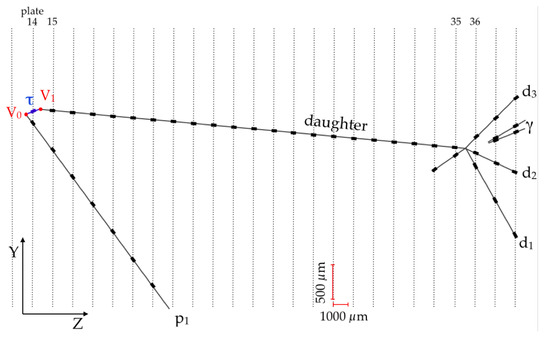

From the analysis point of view, OPERA is a rare event search experiment since just a handful of fully reconstructed tau events are expected from Equation (6). The tau events in OPERA are reconstructed as events with a primary track that shows a kink with respect to the initial direction. The kink is the signature of the decay in flight of the tau lepton in the lead of the ECC. In particular, OPERA searched for tau leptons in most of the decay modes with a high branching ratio: $\tau \to \mu$ (i.e., with a visible muon from the primary vertex), $\tau \to e$, $\tau \to h$ (“one prong”, i.e., hadronic decay with only one visible charged hadron) and $\tau \to 3h$ (“three prongs”, i.e., hadronic decay with three visible charged hadrons). Rare event searches are plagued by potential biases due to the tuning of the selection procedure in the course of data taking. In order to minimize bias, the OPERA Collaboration followed two approaches. The most conservative one was implemented during data taking and the initial stage of data analysis, leading to the discovery of tau appearance in 2015. Here, tau leptons were selected using topological and kinematic cuts defined a priori in the design phase of the experiment. The efficiency of these cuts was evaluated by a full simulation of OPERA performed with GENIE [21] (neutrino interactions), NEGN [22] (kinematic properties of final states) and GEANT4 [23] (detector response). The simulation was validated with a control sample based on $\nu_\mu$ CC events producing charmed particles in the final state. The 2015 analysis [24] is based on 5 $\nu_\tau$ CC candidates whose background is summarized in Table 2.

Table 2.

Summary of expected events and background contributions in the 2015 OPERA analysis.

The events observed in OPERA for the standard 2015 analysis are consistent with the “three flavor neutrino oscillation paradigm”, i.e., the minimally extended SM accounting for neutrino oscillations. In particular, given the superior background rejection capability of the ECC based analysis, $\nu_\tau$ appearance was established with a statistical significance of 5.1$\sigma$ with just five candidates (see Figure 2). For that particular analysis, the probability of detecting five events or more is 6.4%.

Figure 2.

The 5th $\nu_\tau$ candidate observed in one of the OPERA ECC in 2015.

In 2018, OPERA developed a more sophisticated analysis, building on the improved knowledge of the detector response and of the background subtraction techniques accumulated during data taking. The final OPERA analysis is based on looser kinematic cuts applied to four variables: the distance between the decay vertex and the downstream face of the lead plate containing the primary vertex ($z_{dec}$), the momentum (p) and transverse momentum ($p_T$) of the secondary particle, and the 3D angle between the parent particle and its daughters ($\theta_{kink}$). The cuts on $z_{dec}$, $\theta_{kink}$, and p are independent of the tau decay mode, whereas the cut on $p_T$ depends on the decay channel. In addition, an upper limit on the muon momentum is requested for the tau decay into muon to suppress the high energy tail of $\nu_\mu$ CC events of the CNGS. After these cuts, a multivariate statistical approach is employed to separate signal from background and to produce a single-variable discriminant for the oscillation hypothesis test and parameter estimation. In this analysis, 10 observed events were selected. The expectation in the minimally extended SM was about 6.8 signal events plus a background of about 2.0 events. This analysis, which represents the final result of OPERA on the search for $\nu_\tau$ appearance, establishes $\nu_\mu \to \nu_\tau$ oscillations with a significance of 6.1$\sigma$ [25].

Beyond tau appearance, OPERA performed analyses in $\nu_\mu$ disappearance mode and in $\nu_e$ appearance mode. Even if these searches are not competitive with dedicated experiments equipped with a near detector and electron-sensitive far detectors with much larger mass than OPERA (see Section 4 and Section 5), the three neutrino analysis of OPERA [19] provides a consistency test of the minimally extended SM in an energy range between the atmospheric oscillation scale (few GeV neutrinos) and the energy scale of neutrino telescopes like IceCube/DeepCore. The OPERA and ICARUS (see below) experiments, in addition, provided significant constraints on the existence of light sterile neutrinos and, in general, on extensions of the three family oscillation mechanism.

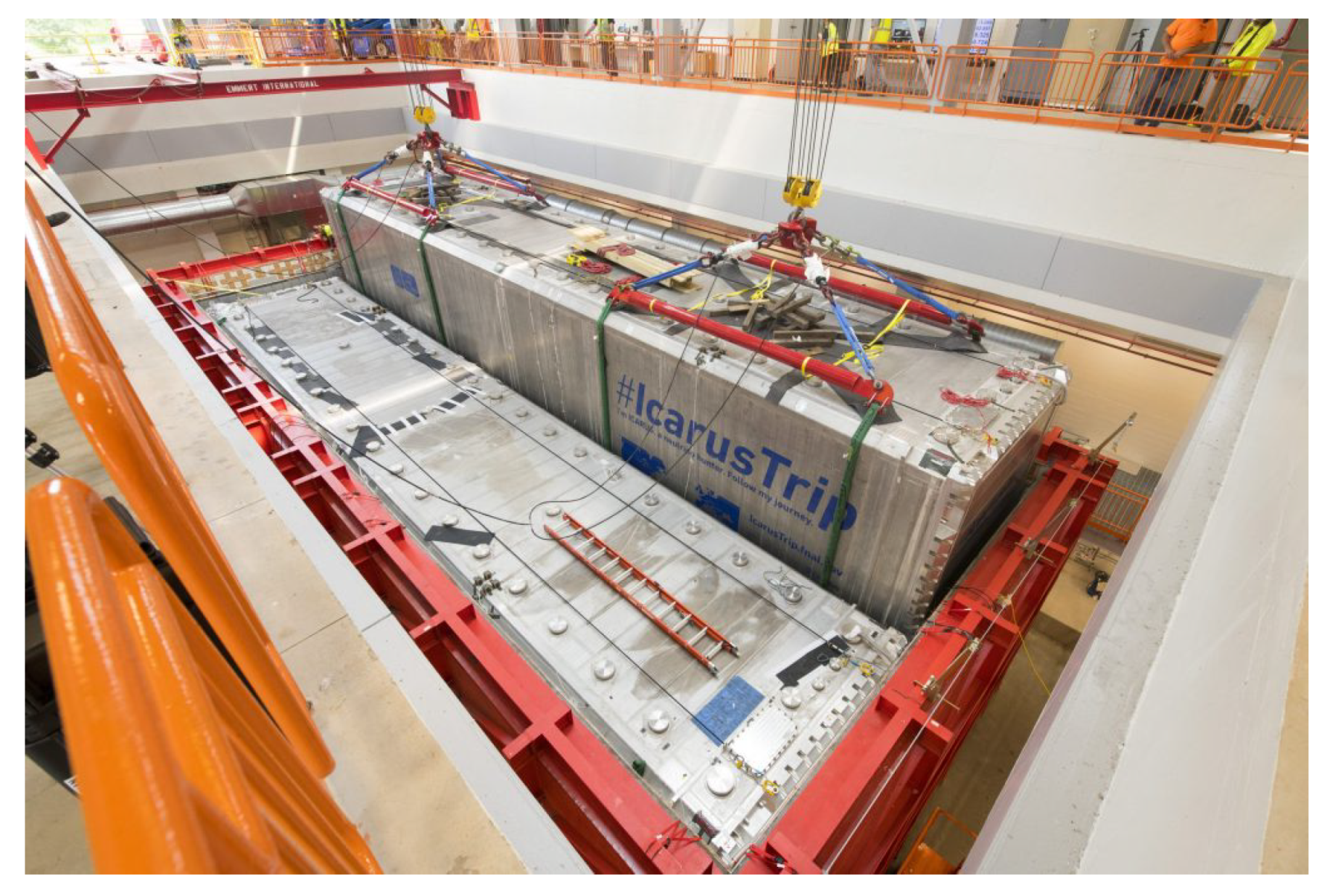

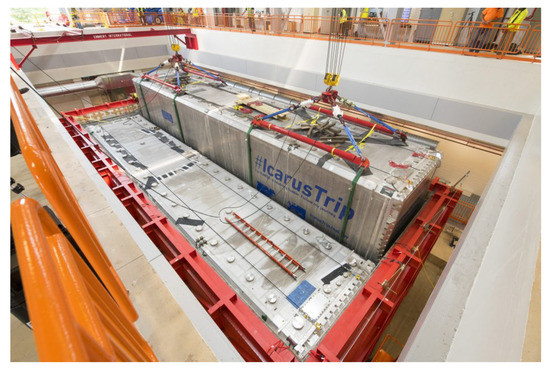

The CNGS also served for several years the ICARUS experiment [26], which took data at LNGS from 2010 to 2012. ICARUS was a 600 ton liquid Argon TPC (see Figure 3) composed of a large cryostat divided into two identical, adjacent half-modules, each one with internal dimensions of 3.6 m (width) × 3.9 m (height) × 19.6 m (length). The inner detector structure of each half-module (300 ton) consisted of two TPCs separated by a common cathode. Each TPC is made of three parallel wire planes, 3 mm apart, with the wires of adjacent planes oriented at a relative angle of 60°. The distance between the wires is 3 mm (wire pitch). These wire planes constitute the anode of the TPC. The TPC volume is filled with high purity liquid Argon and the electrons produced by the charged particles drift toward the anode wires. A uniform electric field perpendicular to the wires, generated by means of a HV system, drives the electrons in the LAr volume of each half-module. The cathode plane, parallel to the wire planes, is placed in the center of the LAr volume of each half-module at a distance of about 1.5 m from the wires of each side. This distance defines the maximum drift path. Between the cathode and the anode, field-shaping electrodes are installed to guarantee the uniformity of the field along the drift direction. At the nominal voltage of 75 kV, corresponding to an electric field of 500 V/cm, the maximum drift time in LAr is about 1 ms. As a consequence, the purity of the liquid Argon must be such that the electron lifetime is at least comparable with the drift time, i.e., that electrons can drift through the active volume before being captured by electronegative impurities. Such a long drift length requires an Argon purity below the part-per-billion (ppb) level. The space resolution that can be achieved in ICARUS is much worse than in the ECC (1 mm versus a few microns), but this detector technology can be read out as a standard TPC and the observation and selection of CC events can be performed in real time. In addition, this technology is scalable to masses that are impractical for the ECC (≫1 kton).

Figure 3.

The ICARUS detector, operated underground at LNGS during the run of CNGS, was moved to Fermilab in 2018 and installed at a shallow depth to search for sterile neutrinos in the framework of the Fermilab Short Baseline Programme (SBN) [27].

In fact, the mass of the TPC operated at LNGS, the largest LArTPC ever built, was still smaller than what was needed for the observation of $\nu_\tau$ CC events on a kinematical basis. Still, ICARUS achieved important results in the study of oscillations beyond the minimally extended SM (limits on sterile neutrino oscillations) [28] and in the clarification of the neutrino velocity anomaly reported in 2011 by OPERA [29]. Moreover, the successful operation of ICARUS in a deep underground laboratory at the 600 t scale opened up the possibility of building multi-kton detectors based on the LArTPC technology, paving the way to the design and construction of ProtoDUNE-SP and DUNE (see Section 6). At the end of the ICARUS data taking at LNGS, the Argon recirculation and purification systems reached a free electron lifetime exceeding 15 ms, corresponding to about 20 parts per trillion of O$_2$-equivalent contamination [30].
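The interplay between drift time and electron lifetime can be illustrated with the numbers quoted above for ICARUS (75 kV over a 1.5 m drift, about 1 ms maximum drift time, 15 ms lifetime). The Python sketch below assumes a simple exponential attenuation of the drifting charge, which is a common first-order model and not a description of the actual ICARUS reconstruction; the function names are ours.

```python
import math


def drift_field_v_per_cm(voltage_kv, drift_length_m):
    """Uniform drift field for a given cathode voltage and drift length."""
    return voltage_kv * 1.0e3 / (drift_length_m * 1.0e2)


def surviving_fraction(drift_time_ms, lifetime_ms):
    """Fraction of drifting electrons left after drift_time_ms.

    Assumes exponential attenuation exp(-t / tau) with electron lifetime tau.
    """
    return math.exp(-drift_time_ms / lifetime_ms)


if __name__ == "__main__":
    print(f"drift field: {drift_field_v_per_cm(75.0, 1.5):.0f} V/cm")
    for lifetime in (1.0, 15.0):
        fraction = surviving_fraction(1.0, lifetime)
        print(f"lifetime {lifetime:4.1f} ms: {fraction:.1%} of the charge survives a 1 ms drift")
```

In this simple model, a lifetime equal to the maximum drift time would leave only about a third of the charge produced near the cathode, whereas a 15 ms lifetime makes the attenuation over the full drift a correction of a few percent.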

4. T2K and Its Upgrades

4.1. T2K

The T2K experiment is the successor of the K2K experiment [31], the first long-baseline neutrino experiment, which confirmed the Super-Kamiokande discovery of neutrino oscillations [2] by detecting a reduction of the $\nu_\mu$ flux together with a distortion of the energy spectrum in an accelerator neutrino beam generated at KEK [32].

With respect to K2K, T2K had a number of substantial improvements, the most important being (a) a new neutrino beam created by the Japan Proton Accelerator Research Complex (J-PARC), designed to be about 50 times more intense than the K2K neutrino beam; (b) a beam line configuration where the far detector (again SK) was at an off-axis angle of $2.5^\circ$ and a baseline of 295 km; (c) a new near detector system, allowing a much more effective measurement of neutrino beam components, backgrounds, and neutrino cross-sections.

The T2K neutrino beam is generated at J-PARC, where a 30 GeV proton beam impinges onto a graphite target [33]. The primary proton beam recently achieved an average power of 492 kW. The secondary hadrons, mostly pions, are charge-selected and focused by a system of three magnetic horns [34] and decay in a 96 m long decay volume. The axis of the beam is displaced by $2.5^\circ$ from the direction of the SK detector.

Thanks to the kinematics of the pion two-body decay, the resulting neutrino beam has a narrower energy spectrum than an on-axis beam [35], is better matched to the expected neutrino oscillations (as already measured by SK and K2K), and has a smaller contamination of intrinsic $\nu_e$ (mostly generated by kaon decays, whose kinematics is different from pion decays, producing fewer neutrinos at the off-axis angle of $2.5^\circ$).
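The narrowing of the spectrum follows directly from two-body kinematics: for $\pi \to \mu \nu$, the neutrino energy at an angle $\theta$ from the pion direction is $E_\nu = (m_\pi^2 - m_\mu^2) / [2 (E_\pi - p_\pi \cos\theta)]$. The Python sketch below evaluates this expression on-axis and at an off-axis angle of 2.5 degrees. It assumes that all pions travel exactly along the beam axis, which ignores the angular spread of the focused beam; the function names and the chosen pion energies are ours.

```python
import math

M_PION = 0.13957  # GeV, charged pion mass
M_MUON = 0.10566  # GeV, muon mass


def neutrino_energy_gev(pion_energy_gev, theta_rad):
    """Neutrino energy from pi -> mu nu at angle theta to the pion direction."""
    pion_momentum = math.sqrt(pion_energy_gev ** 2 - M_PION ** 2)
    denominator = 2.0 * (pion_energy_gev - pion_momentum * math.cos(theta_rad))
    return (M_PION ** 2 - M_MUON ** 2) / denominator


if __name__ == "__main__":
    off_axis = math.radians(2.5)
    for e_pi in (2.0, 4.0, 6.0, 8.0):
        on = neutrino_energy_gev(e_pi, 0.0)
        off = neutrino_energy_gev(e_pi, off_axis)
        print(f"E_pi = {e_pi:.0f} GeV: on-axis {on:.2f} GeV, off-axis {off:.2f} GeV")
```

On-axis, the neutrino energy grows in proportion to the pion energy, while at 2.5 degrees pions over a wide range of energies all produce neutrinos of a few hundred MeV, with a maximum just below 0.7 GeV in this idealized picture.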

Two detectors are located 280 m downstream of the beam target, to measure neutrino interactions before they start oscillating. The INGRID detector [36] is located on-axis, and its main purpose is to monitor the direction and the flux stability of the neutrino beam.

The ND280 detector system is located at the same off-axis angle as SK and its main purpose is to measure all the neutrino beam components. It consists of three time projection chambers (TPCs) [37] interleaved with two fine-grained tracking detectors [38], a $\pi^0$-optimized detector (P0D) [39], and an electromagnetic calorimeter [40]. ND280 is mounted inside the magnet of the former UA1 and NOMAD experiments, donated by CERN to the T2K Collaboration; the magnetic field allows neutrino and antineutrino interaction rates to be measured independently. A side muon range detector instruments the magnet yoke [41].

An important set of external data for T2K comes from the NA61 experiment that measured hadroproduction by 30 GeV protons on a graphite target with a thickness of 4% of a nuclear interaction length [42]. These measurements drastically reduce systematic errors on the evaluation of the neutrino beam flux, resulting in a better determination of neutrino interaction rates by ND280 [43].

The neutrino beam is peaked around 0.6 GeV, the energy where the oscillation probability is maximal. At those energies, the dominant processes are Charged Current Quasi-Elastic (CCQE) scatterings, where a charged lepton of the same flavor as the incoming neutrino is produced. In this class of interactions, the measurement of the momentum and direction of the outgoing lepton is sufficient to reconstruct the energy of the incoming neutrino. It has to be noted that the 295 km baseline is too short to allow good sensitivity to the neutrino mass ordering (see Section 5) and that the neutrino energy is well below the $\tau$ production threshold.
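The statement that the lepton kinematics suffices to reconstruct the neutrino energy can be made explicit with the standard two-body CCQE formula for scattering on a neutron at rest. The Python sketch below implements it; the default binding energy of 27 MeV is an assumption commonly used for oxygen, Fermi motion is neglected, and the function name is ours rather than taken from any T2K software.

```python
import math

M_NEUTRON = 0.939565  # GeV
M_PROTON = 0.938272   # GeV
M_MUON = 0.105658     # GeV


def ccqe_neutrino_energy(lepton_momentum, cos_theta, lepton_mass=M_MUON, binding_energy=0.027):
    """Reconstructed neutrino energy (GeV) for nu + n -> lepton + p.

    Assumes a target neutron at rest with an effective mass reduced by the
    binding energy (0.027 GeV is an assumed value, typical for oxygen).
    cos_theta is the cosine of the lepton angle with respect to the beam.
    """
    lepton_energy = math.sqrt(lepton_momentum ** 2 + lepton_mass ** 2)
    m_eff = M_NEUTRON - binding_energy
    numerator = M_PROTON ** 2 - m_eff ** 2 - lepton_mass ** 2 + 2.0 * m_eff * lepton_energy
    denominator = 2.0 * (m_eff - lepton_energy + lepton_momentum * cos_theta)
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical muon: 0.5 GeV/c momentum at cos(theta) = 0.9
    print(f"E_rec = {ccqe_neutrino_energy(0.5, 0.9):.3f} GeV")
```

The formula follows from requiring the outgoing proton to be on its mass shell. For events that are not truly quasi-elastic (for instance, with an undetected pion), the same expression underestimates the neutrino energy, which is one reason why the modeling of neutrino interactions enters the systematic budget.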

The far detector is the Super-Kamiokande (SK) detector [44], a deep underground 50 kton water Cherenkov detector, 39 m in diameter and 42 m tall, equipped with 11,129 inward-facing 20-inch photomultiplier tubes (PMTs) and 1885 outward-facing 8-inch PMTs mainly used as a veto. The Cherenkov light emitted in water by charged particles above a momentum threshold allows the momentum, the direction, and the vertex of the leptons produced by neutrino interactions to be measured with great precision. The different topologies of the muon and electron tracks produce different patterns of hit phototubes; in this way, electrons and muons are identified with great precision by SK. The quasi-elastic topology, where a single lepton is produced, is particularly favorable for the characteristics of the SK detector.

The first important result by T2K was published in 2011 [45]: an indication of $\nu_e$ appearance in data accumulated with $1.43 \times 10^{20}$ protons on target collected from January 2010 to March 2011. Six events passed all the selection criteria in SK, with an expected background of $1.5 \pm 0.3$ events, corresponding to a significance of about $2.5\sigma$. It represented the first indication of a non-zero value of $\theta_{13}$: $0.03 < \sin^2 2\theta_{13} < 0.28$ at 90% CL for $\delta_{CP} = 0$ and normal neutrino mass hierarchy, a fundamental milestone for any subsequent development in neutrino oscillation physics.
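The order of magnitude of such a significance can be cross-checked with a simple counting experiment. The Python sketch below computes the Poisson probability of observing at least six events and converts it into a one-sided Gaussian significance. The background expectation of 1.5 events is an input assumed for this illustration, and the systematic uncertainty on the background is neglected, so the result is not expected to reproduce the published number exactly; the function names are ours.

```python
import math
from statistics import NormalDist


def poisson_tail(n_observed, mean):
    """Probability of observing n_observed or more events for a Poisson mean."""
    below = sum(math.exp(-mean) * mean ** k / math.factorial(k) for k in range(n_observed))
    return 1.0 - below


def one_sided_significance(p_value):
    """Convert a p-value into a one-sided Gaussian significance (in sigma)."""
    return NormalDist().inv_cdf(1.0 - p_value)


if __name__ == "__main__":
    # Assumed inputs: 6 observed events, 1.5 expected background events.
    p = poisson_tail(6, 1.5)
    print(f"p-value = {p:.2e}, significance = {one_sided_significance(p):.2f} sigma")
```

Including the uncertainty on the background expectation, for example by averaging the tail probability over a distribution of background means, would increase the p-value and lower the significance accordingly.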

T2K had a long stop of more than one year soon after this result due to the catastrophic earthquake–tsunami that occurred in Japan in March 2011.

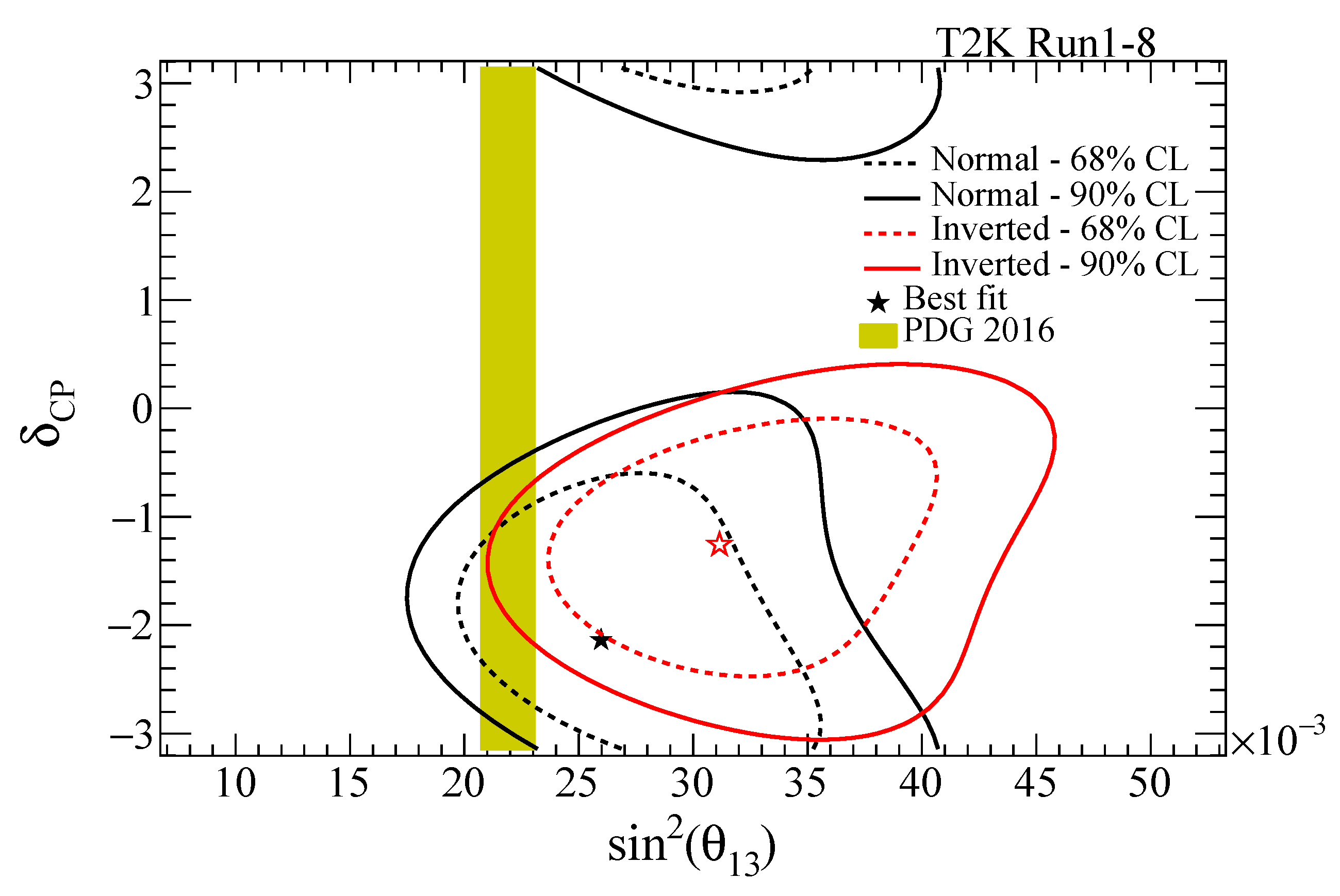

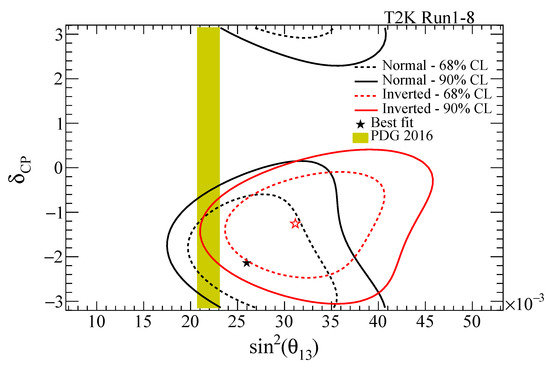

In this period, three reactor experiments published evidence of non-zero values of $\theta_{13}$ by measuring the flux of reactor antineutrinos with a clear indication of $\bar{\nu}_e$ disappearance. Eventually, the $\theta_{13}$ determination by reactor experiments achieved a much better precision than T2K and today the global best-fit to is [3] with a allowed range of 0.0190–0.0240. The precise, unambiguous determination of $\theta_{13}$ by reactors greatly improved the sensitivity of long baseline experiments to $\delta_{CP}$ and the neutrino mass ordering. This is illustrated in Figure 4, where the confidence regions measured by T2K without any input from reactor experiments are compared with the confidence region from reactors [46], showing how much better $\delta_{CP}$ is constrained after the inclusion of reactor data.

Figure 4.

Confidence regions (68% and 90%) in the $\sin^2\theta_{13}$–$\delta_{CP}$ plane as measured by T2K without any prior information on $\theta_{13}$, compared with the 68% confidence regions from reactor experiments, shown by a yellow vertical band.

The most recent T2K published results are based on SK data collected between 2009 and 2017 in (anti)neutrino mode with a neutrino beam exposure of () protons on target [46].

Neutrino fluxes, interaction cross sections and detector responses are simulated, and a fit to the near detector data constrains them. The results of this fit are propagated to the far detector as a Gaussian covariance on the systematic parameters. The fit of the far detector data then constrains the oscillation parameters.

Primary proton interactions on the T2K target system are simulated with FLUKA2011 [47] and GEANT3 [48], together with the decay of the hadrons and muons that produce neutrinos. The modeling is constrained by thin-target hadron production data, including recent charged pion and kaon measurements from the NA61/SHINE experiment at CERN [42,49,50]. The systematic errors on the beam prediction at this stage are 8% and 7.3% for the neutrino and antineutrino beams, respectively. A significant reduction of these errors is expected with the inclusion of recent NA61/SHINE hadroproduction measurements on the T2K replica target [51]. Keeping the flux systematics as low as possible at this stage is extremely important for any physics analysis at the near detector and for attenuating the strong correlation between neutrino fluxes and neutrino cross-sections at the near detector.

The neutrino interaction model is based on NEUT [52]. Simulated data are also generated with alternative models and fitted with NEUT in order to evaluate the systematic effect of mismodelling on the oscillation results.

Events selected as charged current (CC) interactions in ND280 are used to constrain the flux and neutrino interaction uncertainties.

A total of 14 event samples are defined. Six neutrino-mode samples are defined according to the number of hadrons (0, 1, or more) and to whether the interaction happens in FGD1 or FGD2, the latter being 40% enriched in water. Eight antineutrino-mode samples are defined according to the number of tracks (0 or 1), the charge of the outgoing muon and, again, the position of the interaction vertex.
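The counting of the 14 samples can be made explicit by enumerating the categories listed above. This is only a bookkeeping sketch; the string labels are placeholders chosen here and are not the names used by the T2K analysis.

```python
from itertools import product

# Neutrino mode: hadron multiplicity x detector containing the vertex.
neutrino_samples = list(product(
    ("0 hadrons", "1 hadron", "more hadrons"),
    ("FGD1", "FGD2"),
))

# Antineutrino mode: track multiplicity x muon charge x detector.
antineutrino_samples = list(product(
    ("0 tracks", "1 track"),
    ("mu+", "mu-"),
    ("FGD1", "FGD2"),
))

all_samples = neutrino_samples + antineutrino_samples

if __name__ == "__main__":
    print(len(neutrino_samples), len(antineutrino_samples), len(all_samples))
```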

It is very important to have the possibility of defining such a large number of event samples; they are sensitive to different combinations of beam fluxes and neutrino interactions, helping to cover all the analysis parameters and to break parameter degeneracies and ambiguities.

A global likelihood is computed for the fit of all the event samples. The global fit greatly reduces the systematic errors and increases the interaction rate predictions at the far detector by 10%–15%.

SK data are subdivided into five independent samples: events with a single muon-like ring or a single electron-like ring in neutrino and antineutrino beam modes, plus a sample with an electron-like ring together with a pion track candidate in neutrino mode. Events are reconstructed with a new algorithm, first introduced in [53] to suppress neutral current backgrounds, based on a maximum likelihood fit that exploits the timing and charge information of all the photosensors simultaneously. This new algorithm improves the efficiency and purity of the selected samples and allows a fiducial volume expansion of 25% (14%) for () events.

The observed numbers of CCQE events with an electron candidate at SK are 74 in the neutrino run and 7 in the antineutrino run, to be compared with the expectations for , normal hierarchy, of 61.4 (73.5) and 9.0 (7.9), respectively. The systematic errors of the two samples are 8.8% and 7.1%, respectively. The selected candidates in the neutrino and antineutrino modes are 240 and 68, respectively, with 5.1% and 4.3% systematic errors. Finally, 15 events are selected in the single-electron single-pion sample, with a prediction of 6.9 events and a systematic error of 18.4%. It should be noted that the systematic errors are already sizable at this stage (29% of the design statistics) and are approaching the statistical errors.
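The comparison between statistical and systematic errors can be checked with simple Poisson arithmetic on the event counts quoted above. In this sketch, the relative statistical error is taken as 1/sqrt(N). The sample labels are assumptions: in particular, reading the 240 and 68 events as the muon-like samples is an inference from the list of five samples, not an explicit statement in the text.

```python
import math

# label: (observed events, quoted systematic error in percent)
SAMPLES = {
    "electron-like, neutrino mode": (74, 8.8),
    "electron-like, antineutrino mode": (7, 7.1),
    "muon-like (inferred), neutrino mode": (240, 5.1),
    "muon-like (inferred), antineutrino mode": (68, 4.3),
    "electron-like + pion, neutrino mode": (15, 18.4),
}


def stat_error_percent(n_events):
    """Relative Poisson error on a count, in percent."""
    return 100.0 / math.sqrt(n_events)


def syst_to_stat_ratio(n_events, syst_percent):
    """Ratio of the quoted systematic error to the Poisson error."""
    return syst_percent / stat_error_percent(n_events)


if __name__ == "__main__":
    for label, (n, syst) in SAMPLES.items():
        print(label, stat_error_percent(n), syst, syst_to_stat_ratio(n, syst))
```

Under this simple estimate the systematic error stays below the statistical one in every sample, but for the highest-statistics samples the ratio is already close to one.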

Oscillation fits to the five samples of data show a preference for the normal mass ordering with a posterior probability of 87%. The oscillation angle is measured as for normal ordering, central value for inverted ordering is . Assuming the normal (inverted) mass ordering the best fit to the atmospheric mass splitting is . For , the best-fit value assuming the normal (inverted) mass ordering is , the confidence regions are for normal ordering and for inverted ordering. Both intervals rule out the CP conserving points, and .

A recent preprint of the T2K collaboration, which includes 2018 data and increases the protons on target (pot) collected in antineutrino mode by a factor of 2.2, reports for the first time a closed 99.73% ($3\sigma$) interval on the CP-violating phase [54].

Other notable results of the T2K Collaboration are several measurements of neutrino cross-sections [55], searches for light sterile neutrinos [56], and searches for heavy neutrinos [57].

4.1.1. T2K II

The exciting results achieved by the T2K experiment so far convinced the collaboration to propose an upgrade of the experimental setup, T2K II [58], in order to reach a sensitivity for a significant fraction of the possible values by the year 2026, when the next generation Hyper-Kamiokande experiment is expected to start.

The proposed upgrades, all re-usable by the Hyper-Kamiokande experiment, are:

- Upgrade the J-PARC main ring to a power of 1.3 MW. This would allow for collecting pot by 2026, three times more than the original beam request of pot and more than six times the statistics collected so far in neutrino+antineutrino modes.

- Push the magnetic horn system to its ultimate performance, raising its operating current from 250 to 320 kA. This upgrade would provide a 10% increase in the neutrino flux while reducing the wrong-sign contamination by 5%–10%.

- Upgrade the ND280 near detector as proposed in [59]. A new tracker, consisting of a 2-ton horizontal plastic scintillator target sandwiched between two new horizontal TPCs, will replace the present P∅D. The plastic scintillator target, called Super-FGD [60], consists of a matrix of cm cubes made of extruded plastic scintillator, where each cube is crossed by three wavelength-shifting fibers along the three directions, allowing a 3D reconstruction of the events.

The TPCs will be similar in design to the existing ones, with two major improvements: the Micromegas detector will be constructed with the "resistive bulk" technique, thereby allowing a lower density of readout pads and eliminating the discharges (sparks). The field cage will be realized with a layer of solid insulator laminated on a composite material. This will minimize the dead space and maximize the tracking volume.

This tracker would be surrounded by Time-of-Flight counters (made of plastic scintillator) to measure the direction of the tracks and reject out of fiducial volume events. The total mass of active target for neutrino interactions increases from 2.2 to 4.3 tons, allowing the doubling of the expected statistics for a given exposure. Furthermore, the new configuration will allow a better coverage of the neutrino quasi-elastic interactions, improving the acceptance for high angle tracks.

5. NOvA in the NuMI Beam

The Neutrinos at the Main Injector (NuMI) neutrino beam [61] is currently the most powerful neutrino beam in the world and is running with a peak hourly-averaged power of 742 kW. It has been the workhorse of accelerator neutrino physics in the US since 2005 and has served several experiments both at Fermilab (MINERvA, ArgoNeuT) and at far locations (MINOS and MINOS+ in the Soudan mine, and NOvA on the surface at Ash River, MN). In this review, we will mostly focus on its performance and results as the neutrino beam for NOvA, which commenced data taking in February 2014 [62].

The NuMI beam is produced by the 120 GeV protons extracted from the Fermilab Main Injector. Like the CNGS, it thus leverages the acceleration chain of proton colliders, steering the protons toward a solid target.

The protons from the Main Injector hit the graphite target and the produced hadrons are focused by two magnetic horns and then enter a 675 m long decay volume. The maximum proton energy that can be achieved at the Main Injector also offers greater flexibility in the choice of the mean neutrino energy. This feature was successfully exploited during the MINOS data taking [63,64], when the uncertainty on $\Delta m^2_{32}$ was still quite large and the location of the first oscillation maximum was unclear. After 2014, however, NuMI was operated mostly in “medium energy” mode, producing neutrinos with a mean energy of about 2 GeV. This operation mode maximizes the number of oscillated neutrinos in NOvA, which is located off-axis (14 mrad), 810 km from Fermilab.
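The link between the 14 mrad off-axis angle and a neutrino energy of about 2 GeV can be illustrated with the standard two-body kinematics of pion decay in flight. The sketch below uses the small-angle, relativistic approximation $E_\nu \simeq (1 - m_\mu^2/m_\pi^2)\,E_\pi / (1 + \gamma^2\theta^2)$; it ignores kaon parents and the horn focusing, and the function name is introduced here for illustration only.

```python
M_PION = 0.13957  # charged pion mass, GeV
M_MUON = 0.10566  # muon mass, GeV


def off_axis_neutrino_energy(e_pion_gev, theta_rad):
    """Neutrino energy (GeV) from pi -> mu nu at a small angle theta.

    Valid for relativistic pions and small angles between the pion
    direction and the direction toward the detector.
    """
    gamma = e_pion_gev / M_PION
    max_fraction = 1.0 - (M_MUON / M_PION) ** 2   # about 0.43
    return max_fraction * e_pion_gev / (1.0 + (gamma * theta_rad) ** 2)


if __name__ == "__main__":
    for e_pi in (5.0, 7.0, 10.0, 15.0, 20.0):
        on_axis = off_axis_neutrino_energy(e_pi, 0.0)
        off_axis = off_axis_neutrino_energy(e_pi, 0.014)
        print(e_pi, on_axis, off_axis)
```

On axis the neutrino energy grows linearly with the pion energy, whereas at 14 mrad it stays within a narrow band around 2 GeV for a wide range of parent energies, which is what makes the off-axis spectrum narrow.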

Compared with the NuMI run at the time of MINOS, the current facility exploits some modifications of the acceleration complex that originate from the shutdown of the Tevatron. The Fermilab Recycler is now used extensively for neutrino physics and stores the Booster cycles during acceleration in the Main Injector. In this way, the two most time-consuming operations of NuMI (the transfer from the Booster to the next accelerator, and the acceleration in the Main Injector) can be carried out in parallel rather than in series. As a consequence, the overall cycle time is reduced from 2 to 1.3 s. From February 2014 to June 2019, NOvA accumulated pot in neutrino mode and pot in antineutrino mode, the latter obtained by reversing the currents in the focusing horns. The purity of the $\nu_\mu$ ($\bar{\nu}_\mu$) beam is 96% (83%).

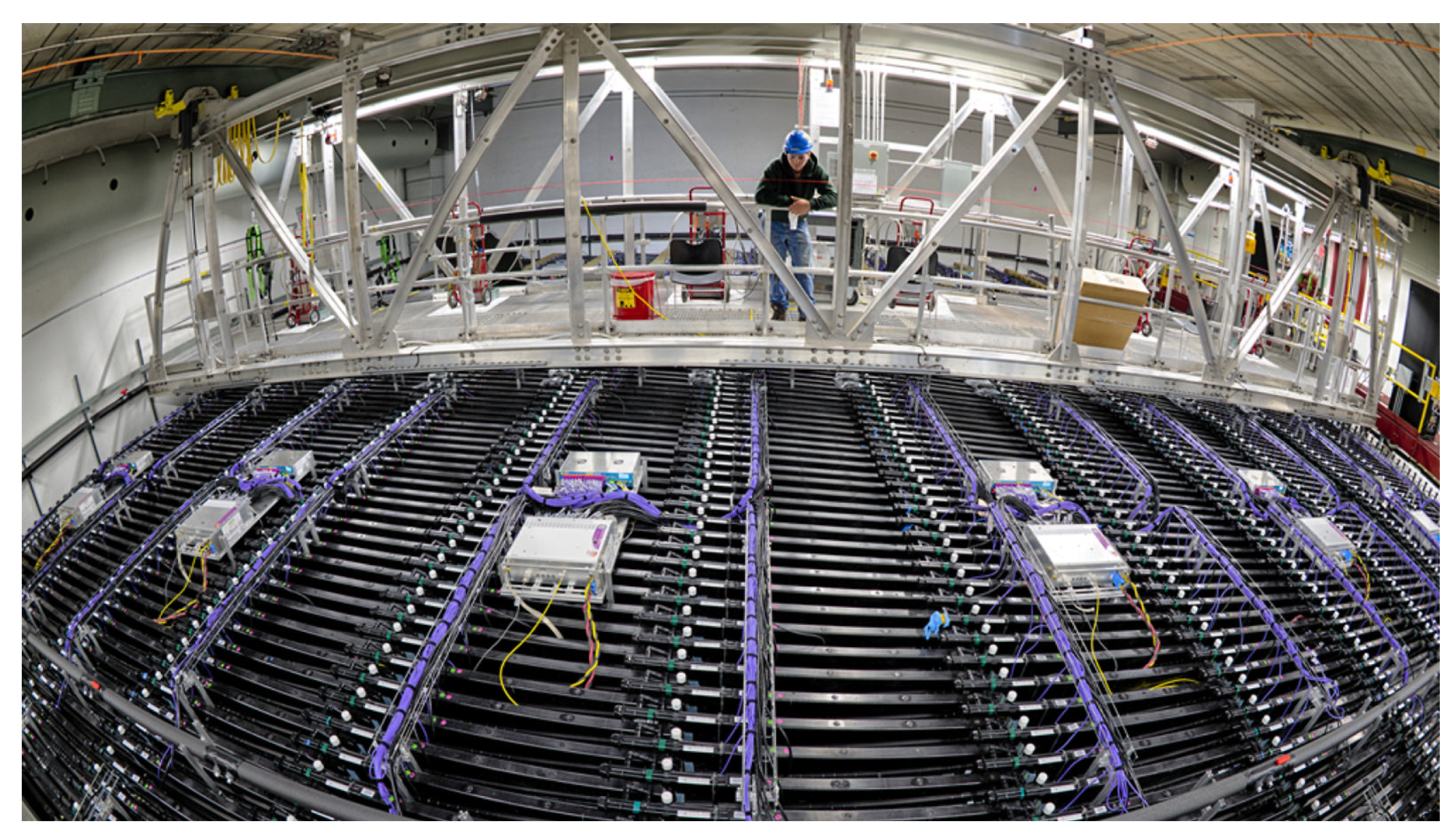

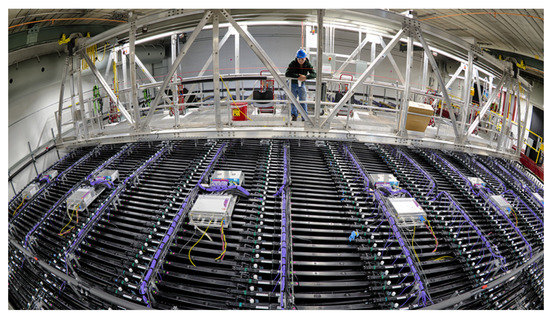

The NOvA experiment measures the energy spectra of neutrino interactions in two detectors. The Near Detector is placed in the proximity of NuMI at a distance of 1 km from the production target and is located 100 m underground. It is a 290 t liquid scintillator detector that measures 3.8 m × 3.8 m × 12.8 m and is followed by a muon range stack. The 14 kton far detector (see Figure 5) is the largest neutrino detector ever operated on the surface and measures 15 m × 15 m × 60 m. Both detectors use liquid scintillator contained in PVC cells that are 6.6 cm × 3.9 cm in cross-section (0.15 radiation lengths per cell) and span the height and width of the detectors in planes of alternating vertical and horizontal orientation. The light is read out by wavelength-shifting fibers connected to avalanche photodiodes (APDs). Operation at the surface, with practically no overburden (three meters of water equivalent, 130 kHz of cosmic-ray activity), is possible thanks to the short duration of the proton burst, which allows for exploiting the time correlation between the events observed in the detector and the proton extraction to the NuMI target. The analysis [65] is built upon the set of all APD signals above threshold around the 10 μs beam spill. The cosmic ray background is directly measured by a dedicated dataset recorded in the 420 μs surrounding the beam spill. This control sample is employed for calibration, for studying the stability of the response, and for training the event reconstruction algorithms.
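The benefit of the short beam spill can be estimated by multiplying the quoted cosmic-ray rate by the length of the time window. The sketch below assumes that the spill and sideband durations are 10 and 420 microseconds, respectively (the unit is an assumption about the original text), and that the 130 kHz rate refers to the whole far detector.

```python
COSMIC_RATE_HZ = 130e3      # cosmic-ray activity quoted in the text
SPILL_WINDOW_S = 10e-6      # assumed beam spill duration (10 microseconds)
SIDEBAND_WINDOW_S = 420e-6  # assumed sideband duration (420 microseconds)


def expected_cosmics(rate_hz, window_s):
    """Mean number of cosmic-ray events in a time window of given length."""
    return rate_hz * window_s


if __name__ == "__main__":
    in_spill = expected_cosmics(COSMIC_RATE_HZ, SPILL_WINDOW_S)
    in_sideband = expected_cosmics(COSMIC_RATE_HZ, SIDEBAND_WINDOW_S)
    print(in_spill, in_sideband, in_sideband / in_spill)
```

Under these assumptions, about one cosmic ray overlaps each spill before any selection, while the sideband provides a control sample roughly forty times larger per spill.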

Figure 5.

The NOvA detector at Ash River.

NOvA was designed to search for non-null values of $\theta_{13}$ and measure the leading oscillation parameters at the atmospheric scale ($\Delta m^2_{32}$, $\theta_{23}$). Even if the discovery of a non-zero $\theta_{13}$ was achieved by Daya Bay, Double-Chooz, RENO and T2K well before the start of the NOvA data taking, the physics reach of this experiment has been significantly boosted by the large value of this angle. NOvA is the only long baseline experiment currently exploiting matter effects to determine the neutrino mass pattern. As mentioned in Section 2, the presence of a dense medium can perturb the oscillation probability of neutrinos because matter is rich in electrons and devoid of muons and tau leptons. As a consequence, the evolution of the flavor wavefunction is perturbed by the fact that $\nu_\mu$ and $\nu_\tau$ can interact only by neutral currents (NC) with the electrons of matter while both NC and CC elastic scattering can occur for $\nu_e$. Elastic weak scattering can also occur on nuclei but, in this case, the $\nu_e$ have the same interaction probabilities as $\nu_\mu$ or $\nu_\tau$. The matter perturbations are thus only due to the density of the electrons in the medium and are parameterized by an effective potential $V = \sqrt{2}\, G_F\, n_e(x)$. Here, $G_F$ is the Fermi constant and $n_e(x)$ is the electron density of matter at the point x. Unlike propagation in the sun, where $n_e$ is strongly varying and can cause highly nonlinear phenomena (MSW resonant flavor conversions [66]), the electron density in the earth crust and mantle is nearly constant. The oscillation formula corrected by matter effects in conditions interesting for long baseline experiments can be written as [67,68]:

where , and the electron density is embedded in . The probability for antineutrino oscillations is the same as for neutrinos except for a change of sign of the CP-violating phase and of the matter parameter A. Equation (8) is an approximate formula that results from expanding to second order in the small quantities and under the hypothesis of a constant electron density . Exact formulas can be obtained by integrating the propagation in steps of x and are commonly embedded in software such as GLoBES [69]. Still, Equation (8) is an excellent approximation for all current long-baseline experiments. The change of sign of A is hence a fake CP violation effect due to the presence of electrons in matter and should be decoupled from genuine CP effects. On the other hand, fake CP effects depend on the sign of and, therefore, provide an elegant tool to determine whether (“normal ordering”, NO) or (“inverse ordering”, IO). All other ordering options are already excluded by solar neutrino data that imply . Distinguishing (IO) from (NO) is extremely interesting, and not only for building theory models of neutrino masses and mixing. IO would imply that the eigenstate that is maximally mixed with is indeed the largest one. IO thus remarkably eases the measurement of the absolute neutrino masses and of neutrinoless double beta decay, which are entirely based on . NOvA is the only running experiment sensitive to matter effects because in T2K and in NOvA. Similarly, the CNGS is not sensitive to matter effects because it is off the peak of the oscillation maximum () [70] and hence:

In the design phase of NOvA, it was not clear whether it would have been possible (even in combination with T2K) to disentangle mass ordering effects from genuine CP effects because accidental cancellations [71,72,73] arise for some values of $\delta_{CP}$ and sign() (“ ambiguity”). Matter effects enhance the electron neutrino appearance probability in the case of normal mass hierarchy (NO) and suppress it for inverted mass hierarchy (IO). CP-conserving oscillations occur if $\delta_{CP} = 0$ or $\pi$, while the rate of $\nu_e$ CC is enhanced around $\delta_{CP} = 3\pi/2$ and suppressed around $\delta_{CP} = \pi/2$. At NOvA, the impacts of these factors on the appearance probability are of similar magnitude, which can lead to degeneracies between them, especially in neutrino runs alone. For antineutrinos, the mass hierarchy and CP phase have the opposite effect on the oscillation probability. Increasing values of $\sin^2\theta_{23}$ increase the appearance probabilities for $\nu_e$ and $\bar{\nu}_e$ alike. Still, the current best-fit values of are in the most favorable region of the parameter space and, if the central values are confirmed, NOvA will be able to obtain strong evidence of NO in the next few years by combining neutrino and antineutrino runs [74].
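The interplay between matter effects and the CP phase can be explored numerically with a commonly quoted second-order expansion of the appearance probability in constant-density matter. The sketch below is not necessarily identical, term by term or in notation, to Equation (8) of this review. The oscillation parameters, the crust density of 2.8 g/cm³, the electron fraction of 0.5 and all function names are assumptions introduced for illustration; the numerical factors 1.267 and 1.52e-4 are the usual unit conversions for L in km, E in GeV and mass splittings in eV².

```python
import math

# Illustrative oscillation parameters (assumed, close to recent global fits).
SIN2_TH12, SIN2_TH13, SIN2_TH23 = 0.31, 0.022, 0.5
DM21 = 7.4e-5      # eV^2
ABS_DM31 = 2.5e-3  # eV^2


def _sin_over(a, x):
    """Return sin(a * x) / a, using the limit x when a is numerically zero."""
    return x if abs(a) < 1e-12 else math.sin(a * x) / a


def p_mu_to_e(l_km, e_gev, delta_cp, normal=True, anti=False, rho=2.8, y_e=0.5):
    """Approximate nu_mu -> nu_e probability in matter of constant density."""
    dm31 = ABS_DM31 if normal else -ABS_DM31
    alpha = DM21 / dm31
    delta = 1.267 * dm31 * l_km / e_gev       # Delta = dm31 * L / (4 E)
    a = 1.52e-4 * y_e * rho * e_gev / dm31    # A = 2 sqrt(2) G_F n_e E / dm31
    if anti:                                  # antineutrinos flip A and delta_CP
        a, delta_cp = -a, -delta_cp

    sin_2th13 = 2.0 * math.sqrt(SIN2_TH13 * (1.0 - SIN2_TH13))
    sin_2th12 = 2.0 * math.sqrt(SIN2_TH12 * (1.0 - SIN2_TH12))
    sin_2th23 = 2.0 * math.sqrt(SIN2_TH23 * (1.0 - SIN2_TH23))
    cos_th13 = math.sqrt(1.0 - SIN2_TH13)

    f_atm = _sin_over(1.0 - a, delta)         # sin((1 - A) Delta) / (1 - A)
    f_sol = _sin_over(a, delta)               # sin(A Delta) / A

    leading = SIN2_TH23 * sin_2th13 ** 2 * f_atm ** 2
    interference = (alpha * sin_2th13 * sin_2th12 * sin_2th23 * cos_th13
                    * math.cos(delta + delta_cp) * f_sol * f_atm)
    solar = alpha ** 2 * (1.0 - SIN2_TH23) * sin_2th12 ** 2 * f_sol ** 2
    return leading + interference + solar


def ordering_asymmetry(l_km, e_gev, delta_cp=0.0):
    """(P_NO - P_IO) / (P_NO + P_IO) for neutrinos at fixed delta_CP."""
    p_no = p_mu_to_e(l_km, e_gev, delta_cp, normal=True)
    p_io = p_mu_to_e(l_km, e_gev, delta_cp, normal=False)
    return (p_no - p_io) / (p_no + p_io)


if __name__ == "__main__":
    print("NOvA-like (810 km, 2 GeV):", ordering_asymmetry(810.0, 2.0))
    print("T2K-like (295 km, 0.6 GeV):", ordering_asymmetry(295.0, 0.6))
```

With these inputs the ordering asymmetry is markedly larger for the NOvA-like configuration than for the T2K-like one, and it changes sign when switching from neutrinos to antineutrinos, in line with the qualitative discussion above.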

NOvA is a detector with a very low density, so that electromagnetic showers develop over several PVC cells, easing the identification of $\nu_e$ CC events. In addition, NOvA is equipped with a near detector, which opens up the possibility of measuring neutrinos at the far detector also in disappearance mode ($\nu_\mu \to \nu_\mu$). The energy of the beam is well below the kinematic threshold for $\tau$ production and therefore the contamination from $\nu_\tau$ CC events is negligible.

As for T2K, the simulation of NOvA employs a detailed description of the beamline based on GEANT4. Again, the computed neutrino flux is corrected according to constraints on the hadron spectrum from thin-target hadroproduction data using the PPFX tools developed for the NuMI beam by the MINERvA collaboration [75]. Neutrino interactions in the detector are simulated with GENIE [21] while the detector response is simulated with GEANT4. Unlike T2K, NOvA systematically employs analysis techniques inherited from the field of computer vision. The detector hits are formed into clusters by grouping hits in time and space to isolate individual interactions. A Convolutional Visual Network classifier takes the hits from these clusters as input, without any further reconstruction, and applies a series of trained operations to extract the classifying features from the image [76]. The classifier feeds an artificial neural network, i.e., an input layer of perceptrons linked to additional hidden layers and an output node. The network is then trained to recognize different neutrino topologies: $\nu_\mu$ CC, $\nu_e$ CC, and NC. The $\nu_\mu$ CC and $\nu_e$ CC samples correspond to events whose scores exceed a given threshold. Muon identification in $\nu_\mu$ CC events is performed employing the dE/dx and multiple scattering of the track, the length of the track and the fraction of planes where the track is mip-like [65]. The NOvA analysis selects $\nu_\mu$ CC events with an efficiency of 32.2%. In terms of purity, the final selected sample is 92.7% $\nu_\mu$ CC. The energy of the muon is estimated from the range and the hadronic energy from the number of hits. The energy resolution for the whole sample of $\nu_\mu$ CC is 9.1%. The quality of the simulation and of the event selection procedures is validated using the $\nu_\mu$ CC reconstructed spectrum at the near detector, which is not distorted by oscillations. Possible discrepancies (in the 4%–13% range for CC) are corrected to reach complete agreement with the near detector (ND) data.
The and parameters are extracted from the spectrum of the $\nu_\mu$ CC events at the far detector compared with the corresponding spectrum at the near detector. The selection of the $\nu_e$ CC sample is particularly difficult due to the presence of electromagnetic components in NC events (e.g., $\pi^0$ production) or short muons in $\nu_\mu$ CC events. The near detector CC selected sample consists indeed of 42% CC, 30% NC and 28% CC. The selection efficiency, i.e., the probability to select a true CC event, amounts to 67.4%. The estimator of the energy of the electron candidate has a resolution of 11%. Since the NC and cosmogenic background is mostly in the low energy range of the electron spectrum and the CC from the contamination of the beam dominates at large energies, the analysis is performed only for events whose energy is between 1 and 4 GeV. Again, the quality of the simulation is validated by ND data. In particular, selected $\nu_\mu$ candidates are used to cross-check the selection efficiency and correct the expected signal at the far detector (FD). Electron neutrino events at the ND are artificially created in the simulation by replacing the muon with an electron of the same energy and direction. The CC selection algorithm is applied to this sample and the results match expectations within 2%. The intrinsic $\nu_e$ component of the beam (0.7% of all neutrinos) represents a nearly irreducible background. ND data are used to constrain this component beyond the estimate performed with GEANT4. ND data are also used to constrain the observed CC and NC events (see Figure 5 of Reference [65]). For the 2018 NOvA analysis, corresponding to pot, the overall error budget for , and are , eV and 0.67, respectively. At present, the systematic budget is smaller than the statistical uncertainty and amounts to , eV and 0.12 for , and , respectively. However, it is likely that NOvA will be systematics limited in the forthcoming years, well before the start of DUNE.

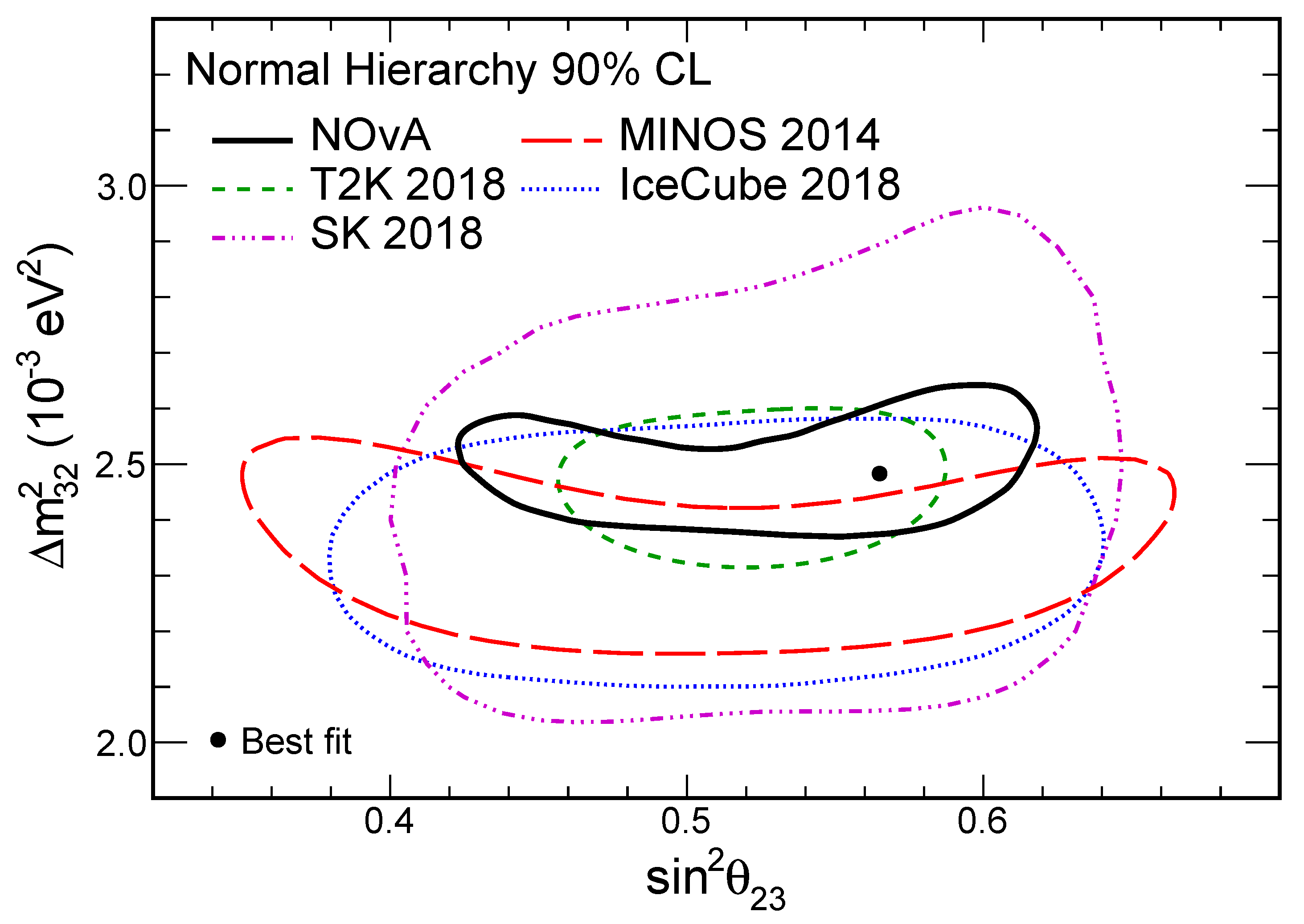

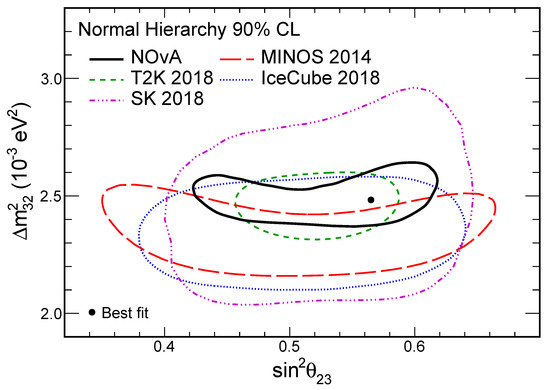

At the time of writing [77], the T2K and NOvA results [65,78,79,80] are compatible for all oscillation parameters (see Figure 6). NOvA data indicate a slight preference (1.8$\sigma$) for normal hierarchy (), which corroborates the hint from atmospheric neutrino data [14].

Figure 6.

versus (90% CL) measured by NOvA, T2K, MINOS+, and atmospheric neutrino experiments.

6. DUNE and Hyper-Kamiokande

The optimal strategy to perform precision measurements and study three-family interference effects in the neutrino sector has been debated for decades. Accelerator neutrino beams became the technology of choice already in the late 1990s. At that time, experimental data started pointing toward two different mass scales (the atmospheric mass scale and the solar mass scale ), but the ratio of the scales ( in Equation (8)) was not so small as to prevent the observation of perturbations driven by in the leading transitions at the atmospheric scale. As a consequence, an intense and controlled source of artificial neutrinos at the O(GeV) energy scale would have been the optimal facility to search, for instance, for CP violation in the leptonic sector. Unfortunately, at that time, $\theta_{13}$ was not known (the Chooz limits indicated ), and the overall size of the leading oscillation could have been arbitrarily small. For , the intensity of conventional beams would have been prohibitive at baselines of O(1000 km) and the intrinsic contamination would have represented a major limitation in searching for oscillations at the per-mill level or below [81,82]. This consideration triggered intense R&D toward new acceleration concepts, namely the Neutrino Factory (see Section 8) and the Beta Beam [83,84], which aimed at providing pure and intense sources of and . The discovery of at set the scale of the leading oscillation at the level of 7% (), which can be studied by further increasing the power of current accelerator beams. Since 2012, therefore, R&D efforts have focused on the design of “Superbeams”: conventional neutrino beams with beam power exceeding 1 MW.

Two Superbeams will drive the field of accelerator neutrino physics in the next twenty years: the Long Baseline Neutrino Facility (LBNF) at Fermilab, serving the DUNE experiment in South Dakota, and the J-PARC Neutrino Beamline upgrade, serving Hyper-Kamiokande in Japan. Both facilities are able to address CP violation in a significant region of the parameter space, perform precision measurements of oscillation parameters (e.g., the octant of $\theta_{23}$, which is still unknown), determine the mass hierarchy using matter effects in beam (DUNE) or atmospheric (Hyper-Kamiokande) neutrinos and, in general, establish stringent tests for the minimally extended SM and its competitors. Furthermore, both DUNE and Hyper-Kamiokande are sensitive to natural neutrino sources and can perform a rich astroparticle physics program.

6.1. DUNE

LBNF [85] is a new neutrino facility built upon the Fermilab acceleration complex, as NuMI was in the past. In the next few years, this complex will undergo a significant upgrade (Proton Improvement Plan II, PIP-II) that includes the construction and operation of a new 800 MeV superconducting linear accelerator. As for NuMI, the primary proton beam will be extracted from the Main Injector in the energy range of 60–120 GeV. With the Main Injector upgrades already implemented for NOvA as well as with the expected implementation of PIP-II, each extraction will deliver protons in one machine cycle (0.7 s/60 GeV–1.2 s/120 GeV) to the LBNF target in 10 μs. The complex delivers to DUNE an average power of 1.2 MW for 120 GeV protons. Neutrinos are produced after the protons hit a solid target and produce mesons, which are subsequently focused by three magnetic horns into a 194 m long helium-filled decay pipe where they decay into muons and neutrinos. The facility is equipped with a near detector located 300 m after the absorber. Unlike its predecessor, LBNF has been designed to provide neutrinos over a wide energy range in order to cover both the first and the second oscillation maximum in DUNE. The beamline is designed to cope with a 2.4 MW beam without retrofitting, since an additional upgrade of the proton acceleration chain (PIP-III) is planned to be operational by 2030.
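The relation between average beam power, proton energy, cycle time and protons per extraction is simple arithmetic: the power is the energy delivered per cycle divided by the cycle time. The sketch below applies it to the figures quoted in the text (1.2 MW at 120 GeV with a 1.2 s cycle for LBNF, and 742 kW at 120 GeV with a 1.3 s cycle for NuMI); the function names are introduced here for illustration.

```python
E_CHARGE = 1.602176634e-19  # joules per electronvolt


def beam_power_watts(protons_per_cycle, energy_gev, cycle_s):
    """Average beam power for a given number of protons per machine cycle."""
    return protons_per_cycle * energy_gev * 1e9 * E_CHARGE / cycle_s


def protons_per_cycle(power_watts, energy_gev, cycle_s):
    """Protons per machine cycle needed to sustain a given average power."""
    return power_watts * cycle_s / (energy_gev * 1e9 * E_CHARGE)


if __name__ == "__main__":
    print("LBNF, 1.2 MW:", protons_per_cycle(1.2e6, 120.0, 1.2))
    print("NuMI, 742 kW:", protons_per_cycle(742e3, 120.0, 1.3))
```

The same function shows why shortening the cycle time at fixed protons per extraction raises the average power in direct proportion.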

The 40 kt DUNE Far Detector [86] consists of four LArTPC detector modules, each with a fiducial mass of about 10 kt, installed approximately 1.5 km underground and on-axis with respect to the LBNF beam. Each of the LArTPCs fits inside a cryostat of internal dimensions 18.9 m (width) × 17.8 m (height) × 65.8 m (length) that contains a total Argon mass of about 17.5 kt. The four identically sized modules provide flexibility for staging construction and for evolution of LArTPC technology. The baseline design has been recently validated at CERN by means of a 770 ton prototype (ProtoDUNE-SP [87], with a fiducial mass of about 400 ton) using charged particle beams and cosmic rays. This “single phase” (SP) design inherits the most important techniques developed by ICARUS (see Section 3) for the charge readout, HV, and the Argon purification system. Unlike ICARUS, ProtoDUNE-SP (see Figure 7) is engineered to be scalable up to a DUNE module: the components of the TPC are modular and can be assembled on-site in the underground laboratory, and the cryogenic system has been greatly simplified. ProtoDUNE-SP demonstrated that it is possible to achieve an electron lifetime comparable with ICARUS using a cryostat based on a very cheap technology developed for industrial applications. A “membrane cryostat” is made of a corrugated membrane that contains the liquid and gaseous Argon, a passive insulation that reduces the heat leak, and a reinforced concrete structure to which the pressure is transferred. A secondary barrier system embedded in the insulation protects it from potential spills of liquid Argon, and a vapor barrier over the concrete protects the insulation from the moisture of the concrete. This system has long been used for the transportation of liquefied natural gas and has been tailored for use with high purity Argon during the R&D for DUNE.
Unlike ICARUS, the charge readout electronics are located inside the cold volume to reduce noise, and the light detection system is based on Silicon Photomultipliers (SiPMs) embedded in the anode wire planes instead of large area PMTs.

Figure 7.

The ProtoDUNE-SP and ProtoDUNE-DP detectors at CERN.

For the second module, DUNE is considering an innovative Double Phase LArTPC where the electrons are multiplied in the gas phase. The Double Phase (DP) readout allows for a lower energy threshold and a reduction of complexity and cost due to the longer drift length: in DP, the distance between the cathode and the anode is 6 m and the drift takes place in the vertical direction while the distance of the horizontal planes in a SP module is 3.5 m. This technique is currently under validation at CERN (ProtoDUNE-DP [88]).

Both DUNE and Hyper-Kamiokande have been designed primarily to provide a superior sensitivity to CP violation and mass hierarchy compared with the previous generation of long-baseline experiments. DUNE, in particular, is able to establish the mass ordering at in 2.5 years of data taking whatever the value of $\delta_{CP}$ [89]. Similarly, it can establish CP violation in the leptonic sector at the 5$\sigma$ (3$\sigma$) level in 10 (13) years for 50% (75%) of all possible values of $\delta_{CP}$. If the value of is large, as suggested by current hints, CP violation can be established in about six years of data taking: statistics and control of the systematics will thus be crucial to improve the precision in the measurement of (about for ). Table 3 summarizes the sensitivity for a selection of physics observables as a function of time. The number of years is computed assuming the standard DUNE staging scenario: data taking will commence after the commissioning of two DUNE modules (20 kton) with an average beam power of 1.2 MW (T0). In the following year (T0 + 1y), the third module will start data taking (30 kton). DUNE will reach its full mass (40 kton) at T0 + 3y. The beam will be upgraded to 2.4 MW three years later, i.e., at T0 + 6y.
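The staging scenario described above translates directly into an accumulated exposure, conventionally expressed in kt·MW·yr. The sketch below integrates mass times beam power over time, assuming instantaneous step changes at T0 + 1y, T0 + 3y and T0 + 6y and continuous running; these simplifications and the function name are assumptions made for illustration.

```python
# (start year relative to T0, detector mass in kt, beam power in MW)
STAGES = [
    (0.0, 20.0, 1.2),
    (1.0, 30.0, 1.2),
    (3.0, 40.0, 1.2),
    (6.0, 40.0, 2.4),
]


def exposure_kt_mw_yr(t_years):
    """Accumulated exposure (kt * MW * yr) after t_years from T0."""
    total = 0.0
    for i, (start, mass_kt, power_mw) in enumerate(STAGES):
        # Each stage lasts until the next one begins; the last is open-ended.
        end = STAGES[i + 1][0] if i + 1 < len(STAGES) else float("inf")
        duration = max(0.0, min(t_years, end) - start)
        total += mass_kt * power_mw * duration
    return total


if __name__ == "__main__":
    for years in (1, 3, 6, 10, 13):
        print(years, exposure_kt_mw_yr(years))
```

In this simplified picture, more than half of the exposure accumulated in the first ten years comes from the four years after the beam upgrade.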

Table 3.

Time to achieve the most relevant physics milestones in DUNE for the current detector staging scenario (see text).

As noted above, this class of experiments is able to test in a single setup the consistency of the minimally extended SM employing the rate of and in neutrino and antineutrino runs. It thus has a rich search program of physics beyond the Standard Model. Natural sources are exploited in DUNE for the study of Supernovae Neutrino Bursts (SNB), atmospheric neutrinos, and the search for proton decay. In particular, DUNE is the only large mass experiment based on Argon. It has a particular sensitivity to the component of the SNB through the reaction and to proton decay in the channel where the kaon is below the kinematic threshold in Cherenkov detectors.

6.2. Hyper-Kamiokande

The possibility of a 5$\sigma$ sensitivity on the measurement of leptonic CP violation, the very rich program of astrophysical measurements, low energy neutrino multimessenger physics and proton decay, and the legacy of the (Super-)Kamiokande experiments, twice awarded the Nobel Prize, motivated a large international collaboration to propose the Hyper-Kamiokande (Hyper-K) project [90].

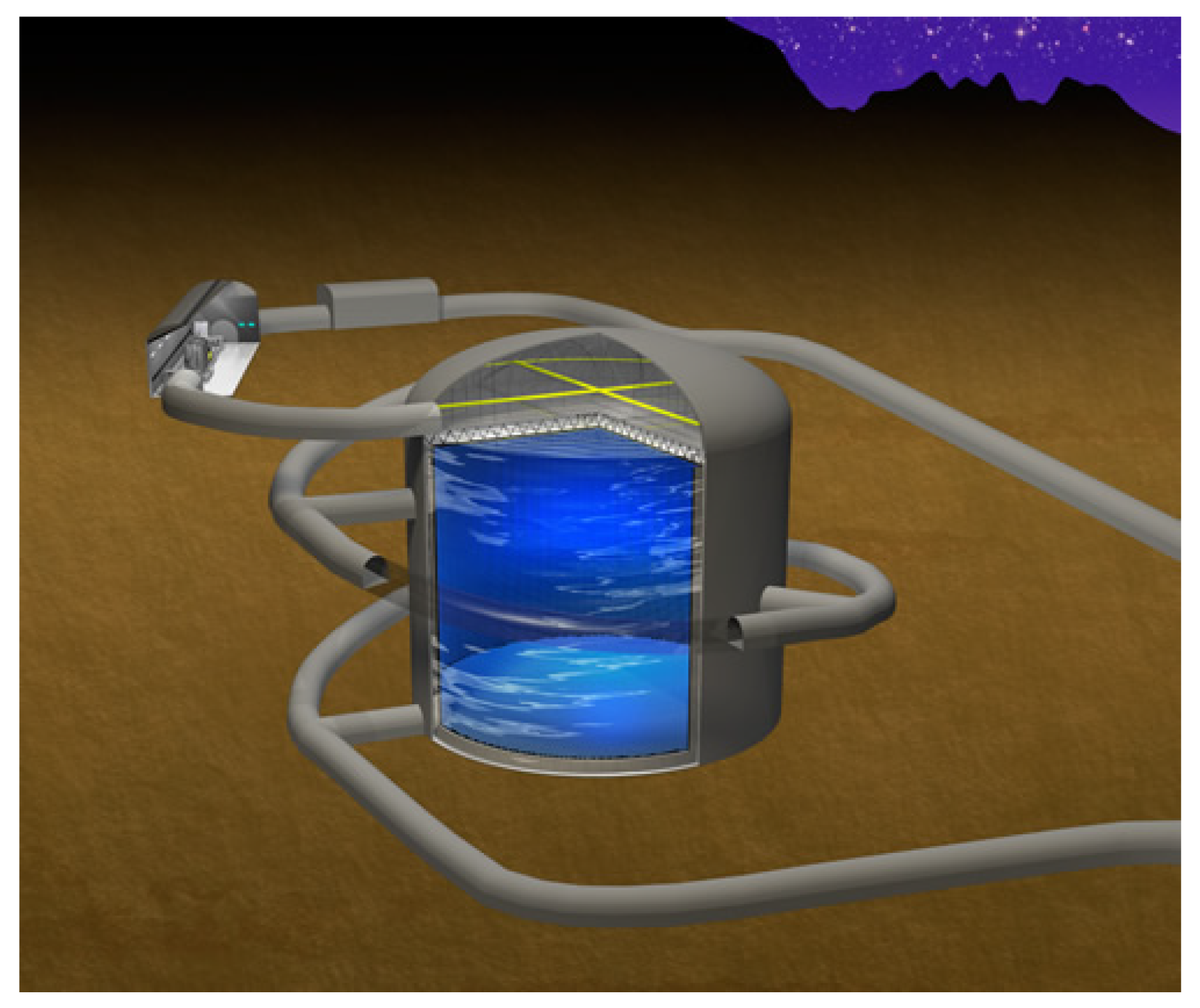

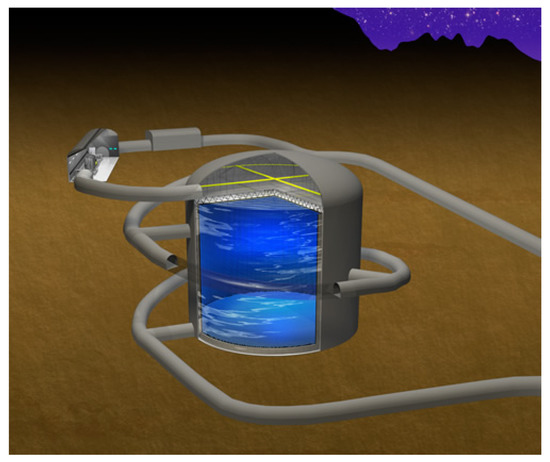

The design of Hyper-Kamiokande is a cylindrical tank with a diameter of 74 m and a height of 60 m. The total (fiducial) mass of the detector is 258 (187) kt, i.e., a fiducial mass eight times larger than that of Super-Kamiokande. A schematic view of Hyper-Kamiokande is shown in Figure 8.
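The quoted total mass follows from the tank geometry. The sketch below computes the mass of water in a cylinder with the dimensions given in the text, for Hyper-Kamiokande and, as a cross-check, for Super-Kamiokande. The water density of 1 t/m³ and the Super-Kamiokande fiducial mass of 22.5 kt used for the ratio are assumptions not stated in this section.

```python
import math

WATER_DENSITY_T_PER_M3 = 1.0  # assumed density of water
SK_FIDUCIAL_KT = 22.5         # assumed Super-Kamiokande fiducial mass
HK_FIDUCIAL_KT = 187.0        # Hyper-Kamiokande fiducial mass from the text


def cylinder_water_mass_kt(diameter_m, height_m):
    """Mass of water (kt) filling a cylinder of given diameter and height."""
    volume_m3 = math.pi * (diameter_m / 2.0) ** 2 * height_m
    return volume_m3 * WATER_DENSITY_T_PER_M3 / 1000.0


if __name__ == "__main__":
    print("Hyper-K total mass (kt):", cylinder_water_mass_kt(74.0, 60.0))
    print("Super-K total mass (kt):", cylinder_water_mass_kt(39.0, 42.0))
    print("fiducial mass ratio:", HK_FIDUCIAL_KT / SK_FIDUCIAL_KT)
```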

Figure 8.

Layout of the Hyper-Kamiokande detector.

The Hyper-Kamiokande detector will be located in the Tochibora mine, 8 km south of Super-Kamiokande, but at the same distance from J-PARC (295 km) and at the same off-axis angle (). With an overburden of 1750 meters water equivalent, the muon flux is about five times higher than in Super-Kamiokande. Hyper-Kamiokande will be instrumented with 40,000 inward-facing PMTs, the 50 cm diameter Hamamatsu R12860, which have about twice the photon detection efficiency of those of Super-Kamiokande, for a photocoverage fraction of 40%.

Another possibility is to have a photocoverage fraction of 20% complemented by 5000 multi-PMT modules derived from the design of KM3NeT [91]. Within a 50 cm diameter, each module will contain nineteen 7.7 cm PMTs together with integrated readout and calibration [92]. They allow an increased granularity, enhancing event reconstruction in particular for multi-ring events, and better timing, which could further reduce the dark hit background and improve event reconstruction. The improved granularity should also guarantee an increase of the fiducial volume. Simulations show that this second configuration provides a performance very similar to that of the original 40% coverage as far as beam oscillation studies are concerned.

The outer detector veto will be similar to that of SK, with 20 cm PMTs resulting in a 1% photocathode coverage of the inner wall.

The neutrino beam configuration of Hyper-K would be the same as T2K, with an upgraded power of the main 30 GeV proton ring at 1.3 MW.

The near detector system is again ND280, in the upgraded version described in Section 4.1.1.

A water Cherenkov (WC) near detector can be used to measure the cross-section on water directly. It could be placed at an intermediate distance, ∼2 km, from the interaction target, in order to have a neutrino flux more similar to the flux at SK and an interaction rate not critical for the performance of a WC detector. These additional WC measurements are important to achieve the low systematic errors required by Hyper-K, and complement those of the ND280 magnetized tracking detector.

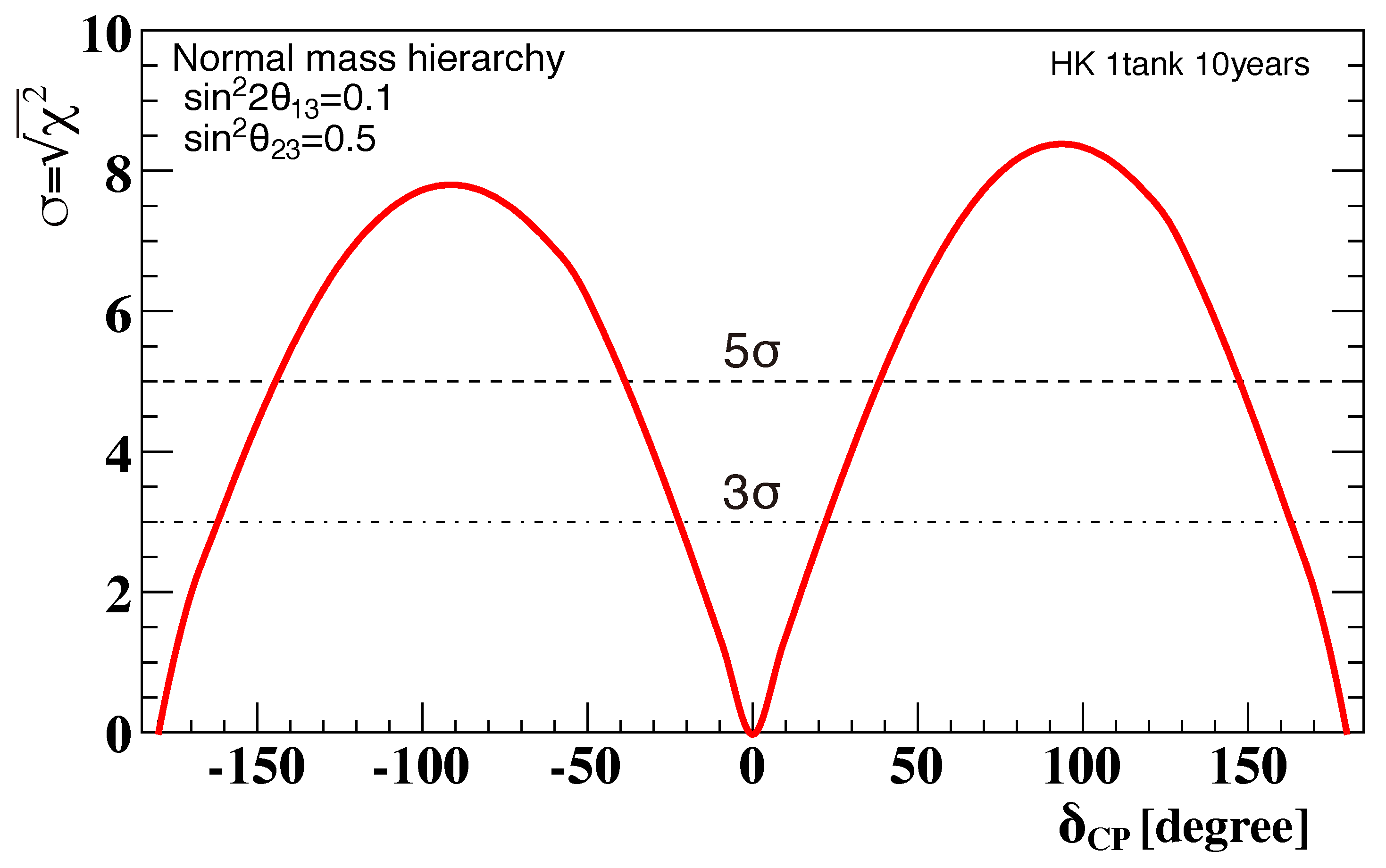

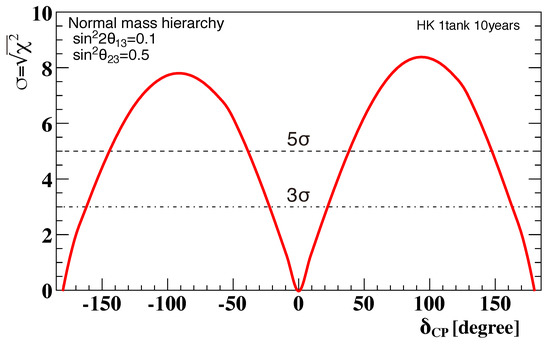

The studies of the sensitivity to CP violation assume an integrated beam power s corresponding to pot with the 30 GeV J-PARC proton beam. The selection criteria of $\nu_\mu$ and $\nu_e$ candidate events are based on those established in the T2K experiment. The total systematic uncertainties of the number of expected events are assumed to be 3.2% for the $\nu_e$ appearance and 3.9% for the $\bar{\nu}_e$ appearance, assuming a 1:3 ratio of beam power for the $\nu$:$\bar{\nu}$ modes. These systematic error values roughly correspond to the statistical errors at full statistics. Figure 9 shows the expected significance to exclude the CP conserving cases at full statistics.

Figure 9.

Sensitivity of Hyper-K in measuring $\delta_{CP}$ [90].

CP violation in neutrino oscillations can be observed with $>5\sigma$ ($3\sigma$) significance for 57% (80%) of the possible values of $\delta_{CP}$. Exclusion of $\sin\delta_{CP} = 0$ can be obtained with a significance of $8\sigma$ in the case of maximal CP violation with $\delta_{CP} = -\pi/2$, the favored value of T2K. By combining the analysis of beam neutrinos with the analysis of atmospheric neutrinos, Hyper-K would have a sensitivity in rejecting the false value of neutrino mass ordering.

A powerful possible upgrade of Hyper-K would consist of building a second identical detector in Korea, at a distance of ∼1100 km from J-PARC and with a larger overburden (∼2700 mwe) [93]. This second far detector would be exposed to a 1–3 degree off-axis neutrino beam from J-PARC and would then be mostly sensitive to neutrino oscillations at the second oscillation maximum.

This two-baseline setup could break the degeneracy of oscillation parameters and achieve a better precision in measuring $\delta_{CP}$.
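To see why a detector at ∼1100 km probes the second oscillation maximum with a beam of similar energy, one can compare the energies at which the oscillation phase reaches successive maxima for the two baselines. The sketch below uses the standard two-flavor vacuum phase $1.267\,\Delta m^2 L/E$ (with $\Delta m^2$ in eV², $L$ in km, $E$ in GeV) and neglects matter effects. The value of $\Delta m^2$ is an illustrative assumption, and the function name is hypothetical.

```python
import math

# Assumption: illustrative atmospheric mass splitting in eV^2.
DM2_EV2 = 2.5e-3


def energy_at_maximum_gev(baseline_km, n, dm2_ev2=DM2_EV2):
    """Neutrino energy (GeV) of the n-th oscillation maximum.

    The maximum is where the two-flavor vacuum phase
    1.267 * dm2 * L / E equals (2n - 1) * pi / 2.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    return 1.267 * dm2_ev2 * baseline_km / ((2 * n - 1) * math.pi / 2.0)


for baseline_km in (295.0, 1100.0):
    for n in (1, 2):
        energy = energy_at_maximum_gev(baseline_km, n)
        print(f"L = {baseline_km:6.0f} km, maximum {n}: E = {energy:.2f} GeV")
```

With this input, the first maximum at 295 km and the second maximum at 1100 km both fall below 1 GeV, whereas the first maximum at 1100 km sits above 2 GeV.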

Among the non-oscillation physics goals of Hyper-K, it is worth noting the 90% sensitivity to proton decays of years for the decay channel (existing limit is years) and years for the channel (existing limit: years). Hyper-K would collect events for a 10 kpc supernova explosion and events for a supernova at the Large Magellanic Cloud where SN1987A was located. It could achieve a statistical sensitivity in detecting supernova relic neutrinos (SRN), integrating 10 years of data taking. These numbers are computed without assuming gadolinium doping of the detector, which would be highly beneficial in all these channels [94]. In particular, with 0.1% by mass of gadolinium dissolved in water, the threshold for SRN detection would decrease from 16 to 10 MeV, allowing for exploring the history of supernova bursts back to the epoch of red shift . The number of detected SRN events with gadolinium loading would become about 280, compared with 70 events without loading.

7. The Systematic Reduction Program

Neutrino oscillation experiments mostly rely on the near-far detector cancellation to mitigate the effects of systematic errors. This technique is the cornerstone of long-baseline experiments, and it consists of building an additional detector at a distance from the source where oscillations have not yet developed. The near detector thus measures the $\nu_\mu$ and $\nu_e$ interaction rates at source, which are compared with the rates at the far detector. If the near detector is close to the source (a few hundred meters) and identical to the far detector, it provides a normalization for the $\nu_\mu$ and $\nu_e$ rates that can be used to extract the $\nu_\mu \to \nu_\mu$ and $\nu_\mu \to \nu_e$ probabilities accounting for the finite efficiency of the detector for $\nu_\mu$ and $\nu_e$ CC events, provided that the corresponding cross-sections are properly known. If the detectors can measure both $\nu_\mu$ and $\nu_e$ and the beam is monochromatic, the remaining systematics after the near-far cancellation is .

Unfortunately, other effects make the near-far detector comparison more challenging if systematics must be kept well below 10%. Firstly, the beam spectrum observed at the far location is different from the beam at source as observed in the near detector. This is due to the large difference between the angular acceptances of the two detectors. This effect is exacerbated if the far detector is located off-axis with respect to the beam. In addition, high power beams have such large rates at close sites that pile-up effects may jeopardize the efficiency of the detector, increase the background of interactions in the surrounding material, and change the performance of PID algorithms. This is particularly disturbing for technologies that are intrinsically slow (liquid Argon TPC) or difficult to operate at high pile-up rates (Cherenkov detectors). Pile-up effects can be mitigated by using fast neutrino detectors, at the price of having a near detector different from the far detector. This is the method of choice of T2K for the ND280 and its upgrades (see Section 4).
Finally, since no beam is monochromatic, the incoming neutrino energy must be reconstructed from the kinematics of final state particles, which in turn depend on final state interactions and nuclear effects. The simulation of these effects from first principles is beyond the current state of electroweak nuclear physics, and the models implemented in the neutrino generators must be tuned and validated by experimental data from either the near detector or dedicated cross-section experiments.
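As an illustration of energy reconstruction from final state kinematics, the sketch below implements the textbook two-body formula for charged-current quasi-elastic scattering, $\nu_\mu n \to \mu^- p$, on a neutron at rest. It is only a sketch of the simplest case and not the reconstruction of any specific experiment: the function name is hypothetical, the default binding energy of 27 MeV is an assumed illustrative value, and Fermi motion, multi-nucleon effects, and final state interactions are ignored, which is precisely where the nuclear model dependence enters.

```python
import math

# Particle masses in GeV (rounded values).
M_P = 0.938272
M_N = 0.939565
M_MU = 0.105658


def ccqe_neutrino_energy(e_mu, cos_theta, e_bind=0.027):
    """Reconstructed neutrino energy (GeV) for nu_mu n -> mu- p.

    e_mu      : total muon energy in GeV
    cos_theta : cosine of the muon angle with respect to the beam
    e_bind    : assumed nucleon binding energy in GeV (illustrative)
    The target neutron is taken at rest with effective mass M_N - e_bind.
    """
    if e_mu < M_MU:
        raise ValueError("muon energy below the muon mass")
    p_mu = math.sqrt(e_mu ** 2 - M_MU ** 2)
    m_n_eff = M_N - e_bind
    numerator = M_P ** 2 - m_n_eff ** 2 - M_MU ** 2 + 2.0 * m_n_eff * e_mu
    denominator = 2.0 * (m_n_eff - e_mu + p_mu * cos_theta)
    return numerator / denominator


# Example: a 600 MeV muon emitted at cos(theta) = 0.9.
print(f"E_nu = {ccqe_neutrino_energy(0.6, 0.9):.3f} GeV")
```

Any event that is not a true quasi-elastic interaction on a nucleon at rest is misreconstructed by this formula, which is why the generator models must be tuned to data.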

Over the years, the most rewarding strategy has been to constrain the near detector observables by embedding all available information on flux, cross-section, energy reconstruction, and detector effects, combining on-site measurements (near detector) with data recorded in dedicated facilities (hadroproduction experiments, cross-section experiments, detector test-beams). In spite of the complexity of this approach, current long baseline experiments can claim an overall systematic budget at the ∼5% level for $\nu_\mu$ events and 8%–9% for $\nu_e$ events (see Section 4).

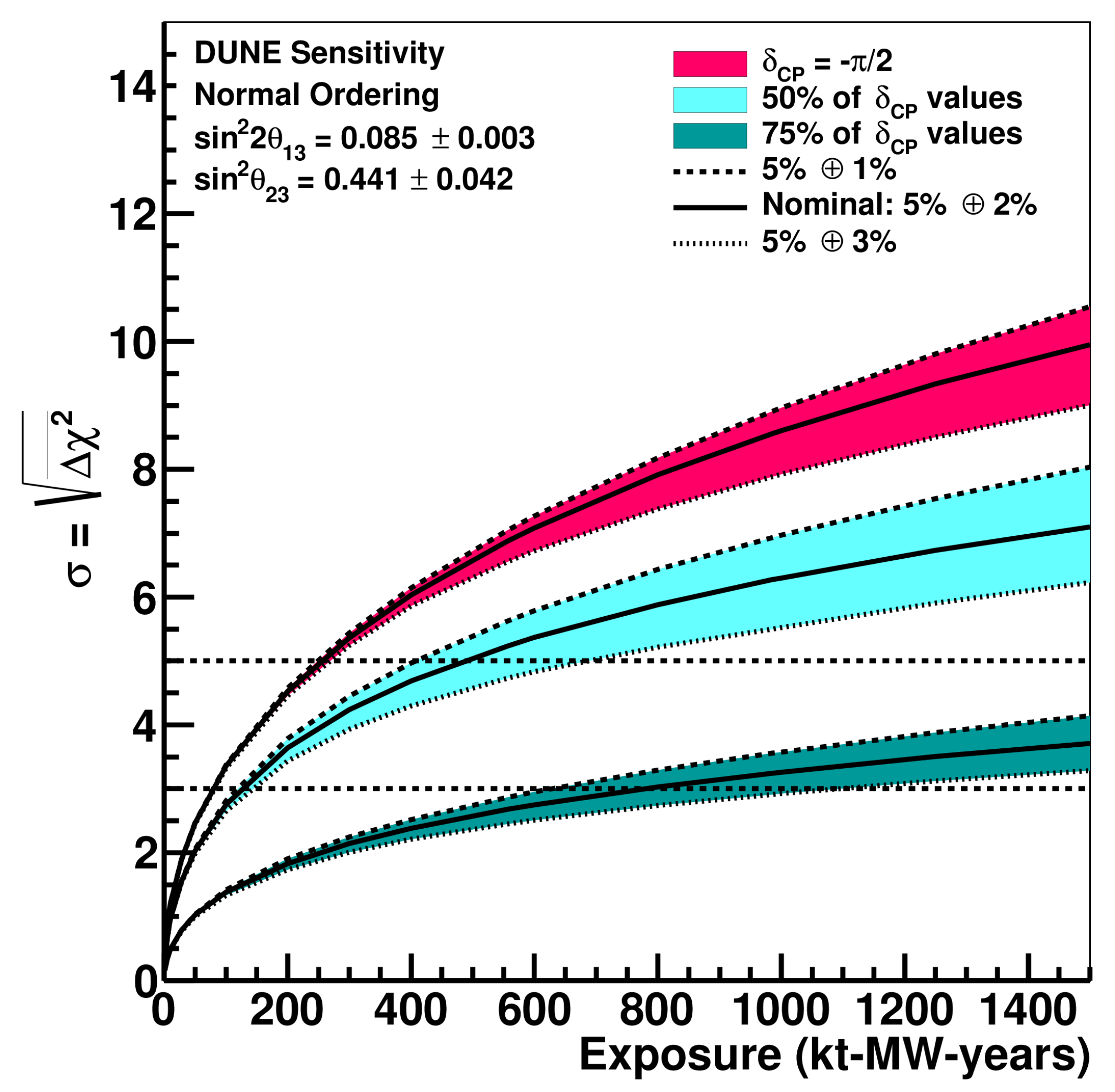

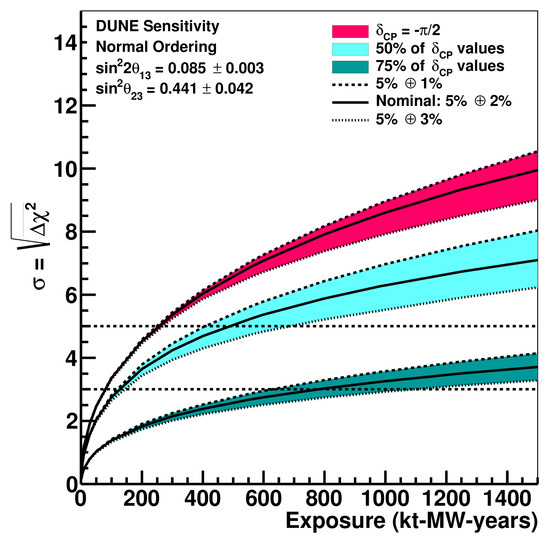

The next generation of Super-beams (DUNE and Hyper-Kamiokande) has a physics reach that will be mostly dominated by the residual systematic errors if they remain at the same values as in T2K and NOνA (statistical errors at full statistics will be below 3%). Systematic errors have to be roughly halved if the investments for the huge exposures of next generation experiments are to be fully exploited. For the sake of illustration, Figure 10 shows the impact of the normalization uncertainty on the CP violation sensitivity of DUNE as a function of the exposure expressed in (mass) × (beam power) × (time), i.e., kton × MW × years [86].
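The interplay between statistical and systematic errors can be illustrated with a simple quadrature sum. This is only a sketch under stated assumptions: the two error sources are taken as uncorrelated, the statistical error is set to the 3% upper bound quoted above, and the systematic error is set either to the present ∼5% level or to half of it. Real sensitivity studies use full likelihood fits rather than a single number.

```python
import math


def total_uncertainty(stat, syst):
    """Quadrature sum of uncorrelated fractional uncertainties."""
    return math.hypot(stat, syst)


STAT = 0.03  # upper bound on the statistical error quoted in the text

for syst in (0.05, 0.025):  # present level and the same value halved
    total = total_uncertainty(STAT, syst)
    print(f"syst = {syst:.1%}: total = {total:.1%}")
```

With a 5% systematic error the total is dominated by systematics, and further exposure brings little gain. Halving the systematic error makes the two contributions comparable.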

Figure 10.

CP violation sensitivity for DUNE as a function of the exposure (see text). The width of the band corresponds to the difference in sensitivity between signal normalization uncertainty of 1% and 3%. The nominal uncertainty of 5% on the disappearance mode and 2% on the appearance mode is shown as the solid line. The three curves show the significance when $\delta_{CP} = -\pi/2$ and the minimum significance for 50% and 75% of true $\delta_{CP}$ values (CP coverage).

A vigorous systematic reduction program is therefore extremely cost effective and, in addition, provides robustness against hidden systematic biases in Super-beam experiments. This program is under development and follows three lines of research.

7.1. Near Detectors

For both DUNE and Hyper-Kamiokande, the near detector will comprise a high granularity setup similar to ND280. Even if the technologies envisaged in the upgrade of ND280 and in the DUNE high-pressure TPC are different from those of the far detectors, they allow for a superior reconstruction of final state particles in CC events and can be operated at high rate. In DUNE, the high pressure (gas Argon) TPC is complemented by a modular liquid Argon TPC that is read out by pixels after a short (50 cm) drift length [95]. Unlike the on-axis detector (3DST embedded in the former KLOE magnet and calorimeter), this setup is movable horizontally in the plane perpendicular to the beam axis. It thus samples different beam angles to retrieve the relation between the incident and reconstructed neutrino energies in the detector (“PRISM concept” [96]). The same concept is under consideration for the Hyper-Kamiokande intermediate detector. This detector is located at a larger distance (∼2 km) than ND280 to reduce the pile-up nuisance. In its nominal position, it samples a beam whose spectrum is the same as for the far detector. The intermediate detector, however, is movable along the vertical axis and thus implements the PRISM concept in the energy range of interest for T2K and Hyper-K [90].
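The reason why moving a detector off-axis changes the sampled spectrum follows from the two-body kinematics of pion decay. The sketch below uses the standard small-angle approximation for $\pi \to \mu \nu_\mu$ in flight, $E_\nu \simeq (1 - m_\mu^2/m_\pi^2)\,E_\pi / (1 + \gamma^2\theta^2)$, valid for relativistic pions. The function name and the chosen angles and pion energies are illustrative assumptions, not parameters of the DUNE or Hyper-K near detectors.

```python
import math

# Particle masses in GeV (rounded values).
M_PION = 0.139570
M_MU = 0.105658


def off_axis_neutrino_energy(e_pi, theta_rad):
    """Neutrino energy (GeV) from pi -> mu nu at a small angle theta.

    e_pi      : pion energy in GeV (relativistic pion assumed)
    theta_rad : angle between pion and neutrino directions, in radians
    """
    gamma = e_pi / M_PION
    return (1.0 - (M_MU / M_PION) ** 2) * e_pi / (1.0 + (gamma * theta_rad) ** 2)


# Illustrative scan: three angles and a few pion energies.
for angle_deg in (0.0, 1.0, 2.5):
    theta = math.radians(angle_deg)
    energies = [off_axis_neutrino_energy(e_pi, theta) for e_pi in (2.0, 4.0, 8.0)]
    summary = ", ".join(f"{e:.2f}" for e in energies)
    print(f"theta = {angle_deg:.1f} deg: E_nu = {summary} GeV")
```

On-axis, the neutrino energy grows linearly with the pion energy. At a fixed off-axis angle it saturates, so each detector position sees a narrower spectrum peaked at a different energy. The PRISM approach exploits this by combining measurements taken at several angles.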

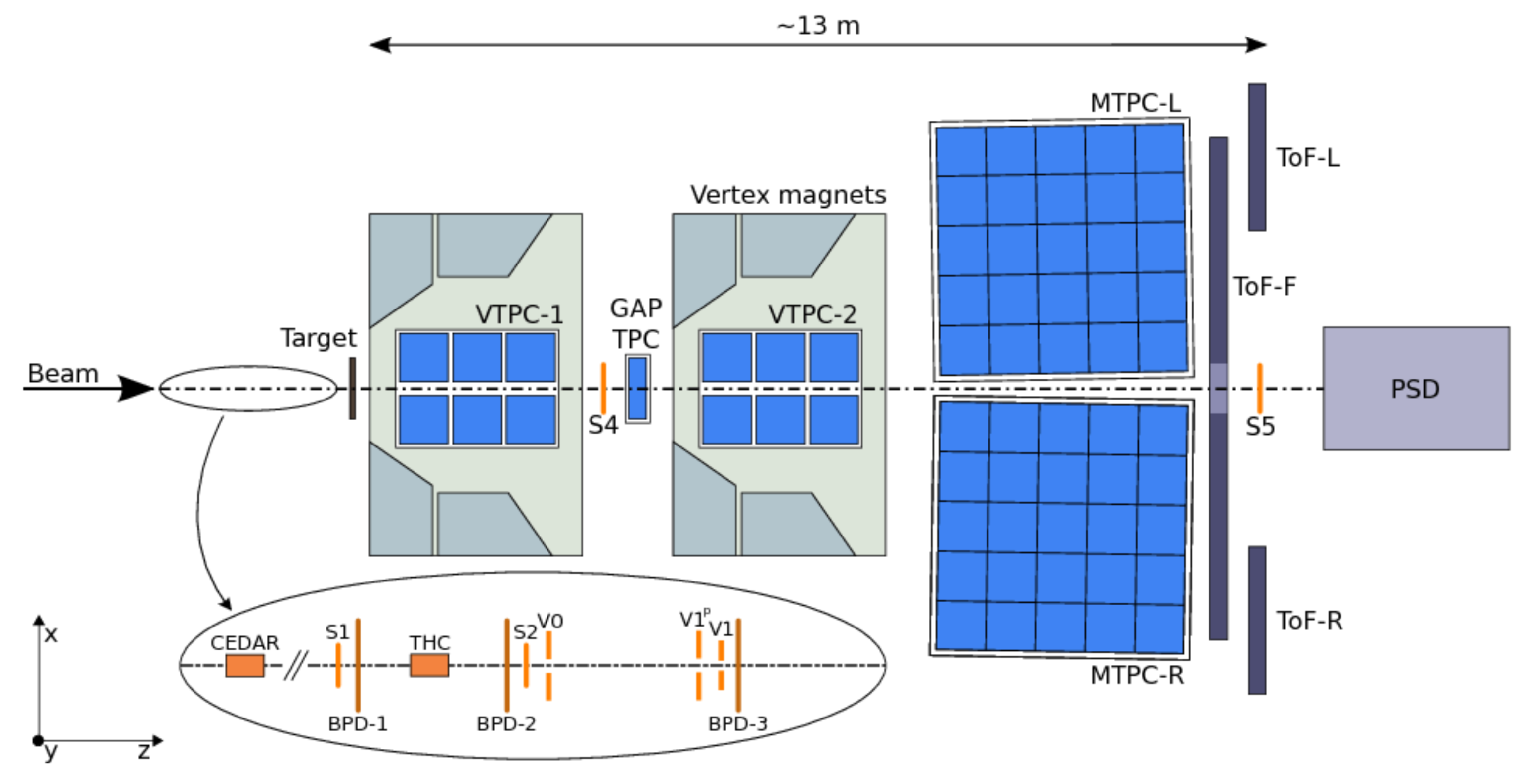

7.2. Hadroproduction Experiments

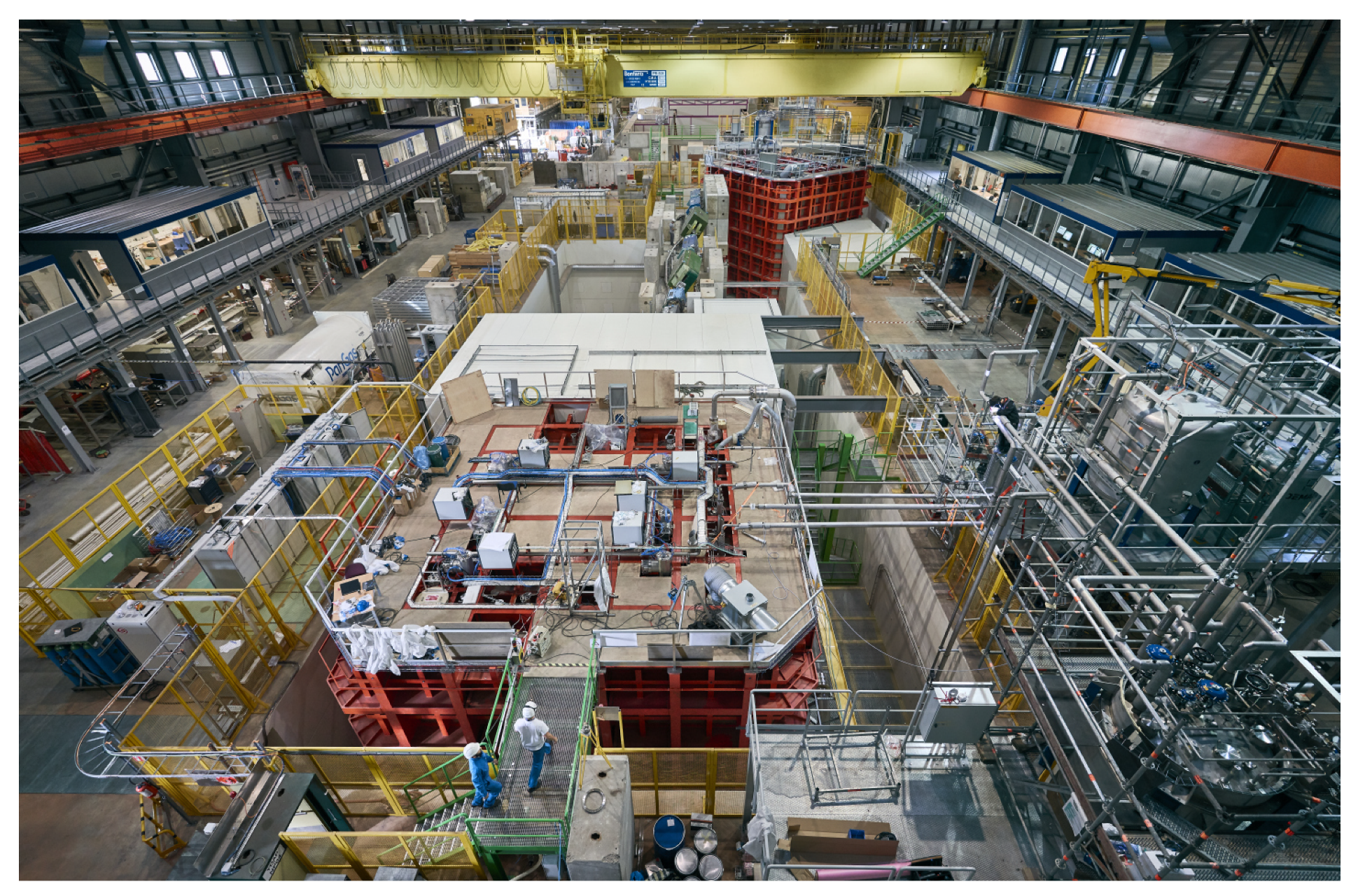

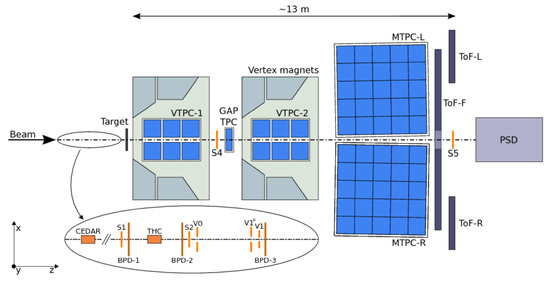

Hadroproduction experiments measure the total and differential yields of secondary particles (mostly pions, protons, and kaons) produced by high energy protons impinging on a target. They play a key role in the determination of the initial flux of neutrino beams because the secondary production is dominated by non-perturbative QCD effects, and it is thus the part of the beamline simulation most prone to systematic errors. Dedicated hadroproduction experiments have been routinely performed since the 1990s as ancillary experiments for long and short baseline facilities and are essential for the DUNE/Hyper-K program. Following the experience of the HARP experiment that measured hadroproduction in the K2K [97] and MiniBooNE [98] targets, the NA61 experiment [51] at CERN (see Figure 11) has been extended beyond the CERN Long Shutdown 2 [99] to perform measurement campaigns with thin and replica targets of T2K, NOνA, Hyper-K, and DUNE.

Figure 11.

Layout of the NA61/SHINE experiment [99].

7.3. Cross-Section Experiments

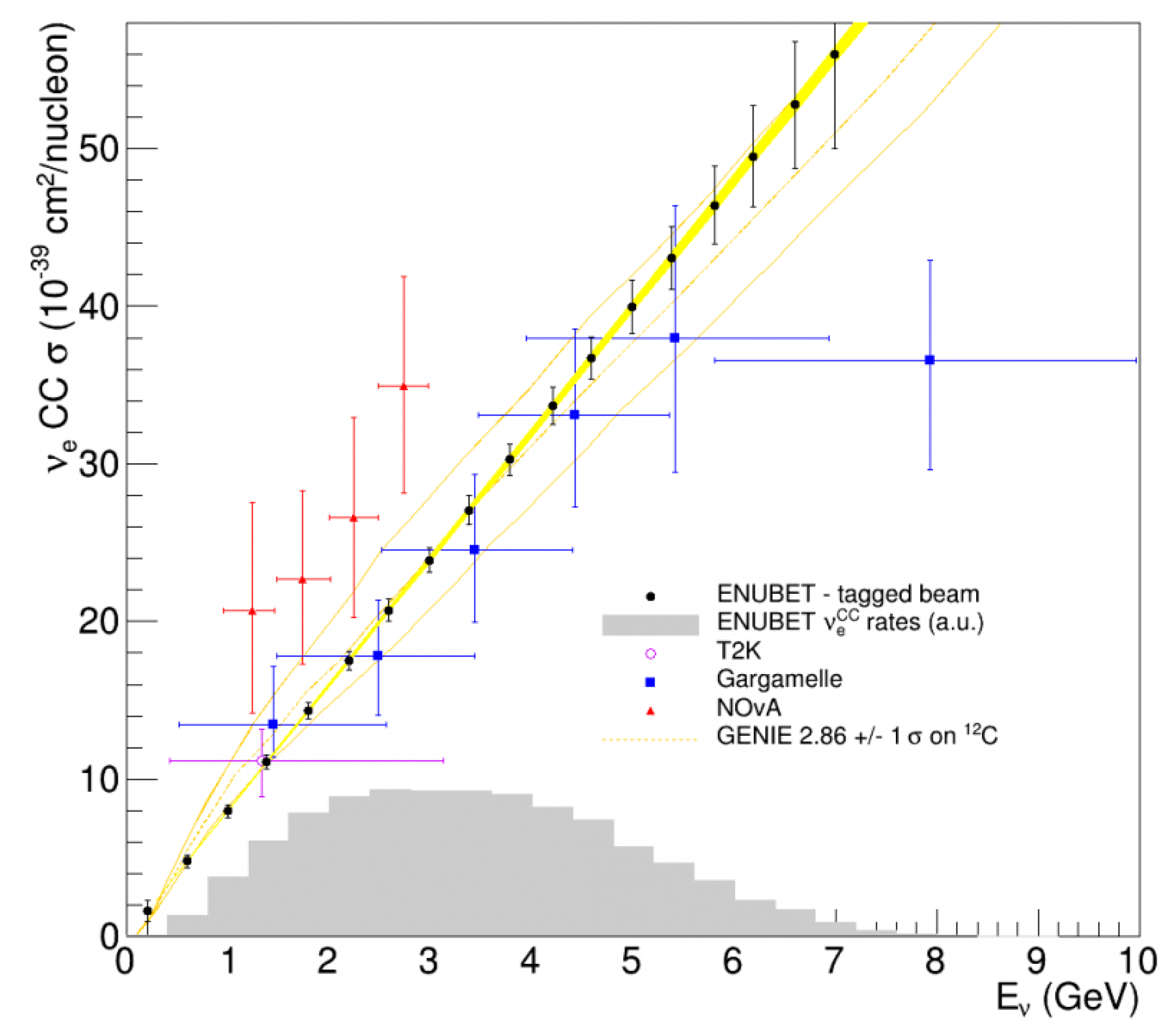

In the last decade, our knowledge of total and differential cross-sections at the GeV scale has grown significantly through dedicated experiments (SciBooNE, MINERvA, ArgoNeuT), experiments operated in short baseline beams to search for sterile neutrinos (MiniBooNE, MicroBooNE), and the near detectors of long baseline experiments [100,101]. The results of these experiments showed significant disagreements with theory predictions and with the neutrino interaction generators used by long-baseline experiments. They hence played a prominent role in the systematic reduction program of T2K and NOνA. In the DUNE/Hyper-K era, their role will be even more important [102,103]. In order to reduce the systematic budget to the percent level, we cannot rely only on cancellation effects in the ratio:

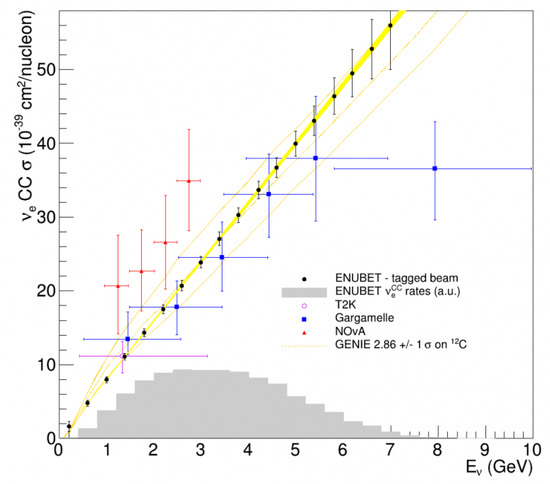

but we have to master each term of the integrals and, in particular, the $\nu_e$ and $\nu_\mu$ cross-sections (currently known at the 25% and 10% level, respectively), and the corresponding differential cross-sections. Even if the $\nu_e$ cross-section is still statistics dominated and can be improved by employing the near detectors of DUNE and T2K-II, the dominant systematic contribution to the muon and electron neutrino cross-sections will soon become the uncertainty on the flux of the short baseline beam used for the cross-section measurement. This scenario calls for a new generation of cross-section experiments with a highly controlled beam. The most promising candidates are the “monitored neutrino beams” [104], where the flux is measured directly by monitoring the rate of leptons in the decay tunnel. In particular, the CERN NP06/ENUBET [105] experiment (ERC Consolidator Grant, PI A. Longhin) is aimed at designing a beamline where the positrons produced at large angles by $K^+ \to e^+ \pi^0 \nu_e$ decays are monitored at single particle level to measure the flux of $\nu_e$. Monitored neutrino beams may achieve a precision of <1% in the flux determination and deliver () () CC events per year in a 500 ton detector such as ProtoDUNE-SP (CERN) or ICARUS (Fermilab). In addition, the ENUBET beam is a narrow band beam where the position of the neutrino interaction vertex in the neutrino detector is strongly correlated to the energy of the neutrino. It can therefore provide an 8% (22%) precision measurement of the energy of the incoming neutrino at 3 (1) GeV without relying on the kinematics of the final state particles. Energy reconstruction in total and differential cross-sections is thus not biased by nuclear effects. The ENUBET beamline can be exploited not only by LArTPCs but also by dedicated high-granularity detectors specifically designed for cross-section measurements at low and high Z. The impact of the ENUBET beamline on the measurement of the $\nu_e$ cross-section is depicted in Figure 12.

Figure 12.

Impact of ENUBET on the knowledge of the $\nu_e$ cross-section. The ENUBET measurement and the statistical errors are shown with black dots. The orange bands represent the current systematic uncertainty from GENIE. The yellow band is the ENUBET systematic uncertainty on the flux. Past measurements from Gargamelle, T2K and NOνA are also shown. The gray area shows the $\nu_e$ CC energy spectrum of ENUBET.

A much larger statistics of $\nu_e$ can be achieved by changing the core technology of accelerator neutrino beams. R&D on muon colliders and Neutrino Factories has been ongoing for more than 30 years. In a Neutrino Factory [106,107], it is possible to produce $\nu_e$ ($\bar{\nu}_e$) at the same pace as $\bar{\nu}_\mu$ ($\nu_\mu$) from the three-body decay of muons stored in the accumulator ring. In its simplest design (NUSTORM [108,109]), a low energy Neutrino Factory can deliver up to CC events per year to the same detectors employed for ENUBET. As a consequence, NUSTORM would represent both a powerful source for cross-section measurements and a major step forward in the design of facilities for the post-DUNE era (see Section 8).

8. A Glimpse to the Long-Term Future