Multimodel Deep Learning for Person Detection in Aerial Images

Abstract

1. Introduction

- (i) We propose a novel multimodel approach for person detection in aerial images to support search and rescue (SAR) operations. The proposed model combines two different convolutional neural network architectures in both the region proposal stage and the classification stage;

- (ii) We use contextual information from the area surrounding each proposed region to improve the results of the classification stage;

- (iii) Our proposed approach achieves better results than state-of-the-art methods on the HERIDAL dataset.
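As a minimal sketch of the idea in contribution (ii), the snippet below enlarges a proposed region around its centre so that the crop passed to a classifier also includes the surrounding area. The function name `expand_box`, the `scale` factor, and the image size in the usage note are illustrative assumptions, not the values or code used by the authors.

```python
from typing import Tuple

# (x_min, y_min, x_max, y_max) in pixel coordinates
Box = Tuple[int, int, int, int]


def expand_box(box: Box, scale: float, img_w: int, img_h: int) -> Box:
    """Grow a box around its centre by `scale` and clip it to the image.

    `scale` is a hypothetical parameter: 1.0 keeps the original region,
    2.0 doubles its width and height to include surrounding context.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    half_w = (x_max - x_min) * scale / 2.0
    half_h = (y_max - y_min) * scale / 2.0
    # Clip so that the context crop never leaves the image bounds.
    return (
        max(0, int(round(cx - half_w))),
        max(0, int(round(cy - half_h))),
        min(img_w, int(round(cx + half_w))),
        min(img_h, int(round(cy + half_h))),
    )
```

For example, with an assumed image of 4000 × 3000 pixels, `expand_box((100, 100, 180, 180), 2.0, 4000, 3000)` yields a 160 × 160 pixel crop centred on the original 80 × 80 pixel proposal, while a proposal touching the image border is simply clipped.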

2. Related Work

2.1. Small Object Detection

2.2. Search and Rescue Operations

2.3. Using Contextual Information

3. Proposed Methods

3.1. Dataset Description

3.2. Classification Stage

3.3. Region Proposal Stage

3.4. Multimodel Approach

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Waharte, S.; Trigoni, N. Supporting Search and Rescue Operations with UAVs. In Proceedings of the International Conference on Emerging Security Technologies, Canterbury, UK, 6–7 September 2010; pp. 142–147.

2. Goodrich, M.A.; Morse, B.S.; Gerhardt, D.; Cooper, J.L.; Quigley, M.; Adams, J.A.; Humphrey, C. Supporting wilderness search and rescue using a camera-equipped mini UAV. J. Field Robot. 2008, 25, 89–110.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

4. Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.W.; Chen, J.; Liu, X.; Pietikainen, M. Deep Learning for Generic Object Detection: A Survey. Int. J. Comput. Vis. 2018, 128, 261–318.

5. Bejiga, M.; Zeggada, A.; Melgani, F. Convolutional neural networks for near real-time object detection from UAV imagery in avalanche search and rescue operations. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 693–696.

6. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Volume 9905, pp. 21–37.

7. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

8. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Las Condes, Chile, 11–18 December 2015; pp. 1440–1448.

9. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

10. Valenti, C.F.; Nguyen, N.D.; Do, T.; Ngo, T.D.; Le, D.D. An Evaluation of Deep Learning Methods for Small Object Detection. J. Electr. Comput. Eng. 2020, 2020.

11. Zhang, S.; Wu, R.; Xu, K.; Wang, J.; Sun, W. R-CNN-Based Ship Detection from High Resolution Remote Sensing Imagery. Remote Sens. 2019, 11, 631.

12. Zhang, L.; Zhang, Y.; Zhang, Z.; Shen, J.; Wang, H. Real-Time Water Surface Object Detection Based on Improved Faster R-CNN. Sensors 2019, 19, 3523.

13. Lechgar, H.; Bekkar, H.; Rhinane, H. Detection of cities vehicle fleet using YOLO V2 and aerial images. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W12, 121–126.

14. Zhang, H.; Wu, J.; Liu, Y.; Yu, J. VaryBlock: A Novel Approach for Object Detection in Remote Sensed Images. Sensors 2019, 19, 5284.

15. Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238.

16. Yun, K.; Nguyen, L.; Nguyen, T.; Kim, D.; Eldin, S.; Huyen, A.; Lu, T.; Chow, E. Small target detection for search and rescue operations using distributed deep learning and synthetic data generation. In Proceedings of the Pattern Recognition and Tracking XXX, Baltimore, MD, USA, 14–18 April 2019; pp. 38–43.

17. Liang, X.; Zhang, J.; Zhuo, L.; Li, Y.; Tian, Q. Small Object Detection in Unmanned Aerial Vehicle Images Using Feature Fusion and Scaling-Based Single Shot Detector With Spatial Context Analysis. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1758–1770.

18. Zhang, Z.; Guo, W.; Zhu, S.; Yu, W. Toward Arbitrary-Oriented Ship Detection With Rotated Region Proposal and Discrimination Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1745–1749.

19. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

20. Xiao, Z.; Qian, L.; Shao, W.; Tan, X.; Wang, K. Axis Learning for Orientated Objects Detection in Aerial Images. Remote Sens. 2020, 12, 908.

21. Koester, R.J. A Search and Rescue Guide on where to Look for Land, Air, and Water; dbS Productions: Charlottesville, VA, USA, 2008.

22. Music, J.; Orovic, I.; Marasovic, T.; Papić, V.; Stankovic, S. Gradient Compressive Sensing for Image Data Reduction in UAV Based Search and Rescue in the Wild. Math. Probl. Eng. 2016, 2016.

23. Burke, C.; McWhirter, P.R.; Veitch-Michaelis, J.; McAree, O.; Pointon, H.A.; Wich, S.; Longmore, S. Requirements and Limitations of Thermal Drones for Effective Search and Rescue in Marine and Coastal Areas. Drones 2019, 3, 78.

24. Leira, F.; Johansen, T.; Fossen, T. Automatic detection, classification and tracking of objects in the ocean surface from UAVs using a thermal camera. In Proceedings of the 2015 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2015.

25. Marušić, Z.; Božić-Štulić, D.; Gotovac, S.; Marušić, T. Region Proposal Approach for Human Detection on Aerial Imagery. In Proceedings of the 2018 3rd International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 26–29 June 2018; pp. 1–6.

26. Božić-Štulić, D.; Marušić, Z.; Gotovac, S. Deep Learning Approach in Aerial Imagery for Supporting Land Search and Rescue Missions. Int. J. Comput. Vis. 2019, 127, 1256–1278.

27. Divvala, S.; Hoiem, D.; Hays, J.; Efros, A.; Hebert, M. An empirical study of context in object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1271–1278.

28. Mottaghi, R.; Chen, X.; Liu, X.; Cho, N.G.; Lee, S.W.; Fidler, S.; Urtasun, R.; Yuille, A. The Role of Context for Object Detection and Semantic Segmentation in the Wild. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 891–898.

29. Torralba, A.; Sinha, P. Contextual Priming for Object Detection. Int. J. Comput. Vis. 2003, 53, 169–191.

30. Sun, J.; Jacobs, D. Seeing What Is Not There: Learning Context to Determine Where Objects Are Missing. In Proceedings of the 2017 Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5716–5724.

31. Bell, S.; Zitnick, C.L.; Bala, K.; Girshick, R. Inside-Outside Net: Detecting Objects in Context with Skip Pooling and Recurrent Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2874–2883.

32. Han, S.; Yoo, J.; Kwon, S. Real-Time Vehicle-Detection Method in Bird-View Unmanned-Aerial-Vehicle Imagery. Sensors 2019, 19, 3958.

33. Ma, D.; Wu, X.; Yang, H. Efficient Small Object Detection with an Improved Region Proposal Networks. IOP Conf. Ser. Mater. Sci. Eng. 2019, 533, 012062.

34. Xia, G.S.; Bai, X.; Zhang, L.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

35. Marasović, T.; Papić, V. Person classification from aerial imagery using local convolutional neural network features. Int. J. Remote Sens. 2019, 40, 1–19.

36. Zitnick, C.; Dollar, P. Edge Boxes: Locating Object Proposals from Edges. In Proceedings of the European Conference on Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Volume 8693, pp. 391–405.

37. Turić, H.; Dujmić, H.; Papić, V. Two stage Segmentation of Aerial Images for Search and Rescue. Inf. Technol. Control. 2010, 39, 138–145.

38. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

39. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015.

40. Lin, T.Y.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

41. Liang, Z.; Shao, J.; Zhang, D.; Gao, L. Small Object Detection Using Deep Feature Pyramid Networks. In Proceedings of the Advances in Multimedia Information Processing—PCM 2018, Hefei, China, 21–22 September 2018; pp. 554–564.

42. Fang, P.; Shi, Y. Small Object Detection Using Context Information Fusion in Faster R-CNN. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications, Chengdu, China, 7–10 December 2018; pp. 1537–1540.

| TP | FP | FN | Precision | Recall | |

|---|---|---|---|---|---|

| Marušić et al. [25] | 301 | 146 | 40 | 67.30% | 88.30% |

| Božić-Štulić et al. [26] | 303 | 568 | 38 | 34.80% | 88.90% |

| Edge Boxes + classification | 214 | 581 | 123 | 26.91% | 63.50% |

| Mean Shift + classification | 172 | 154 | 165 | 52.76% | 51.03% |

| SSD | 318 | 7014 | 19 | 4.33% | 94.36% |

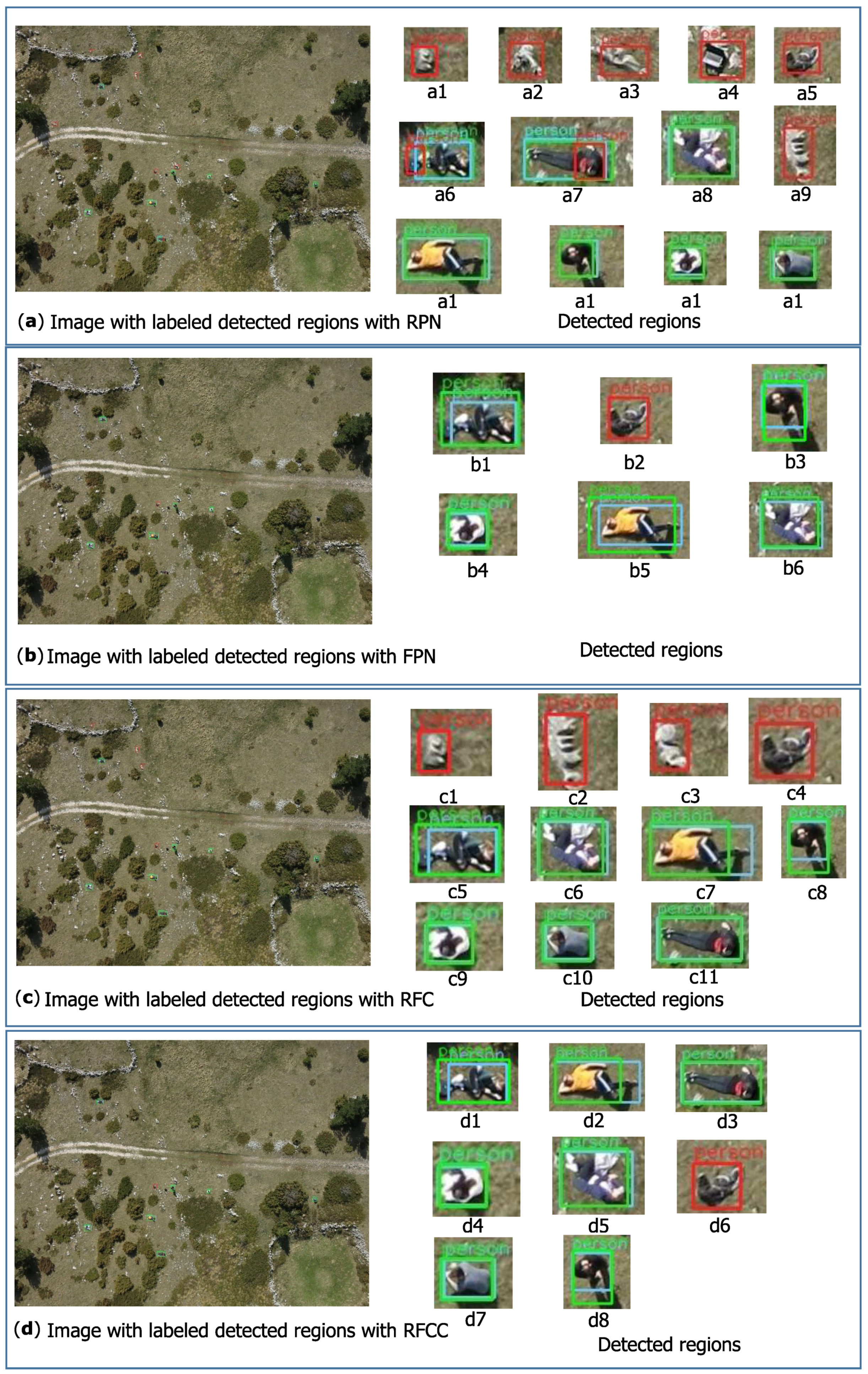

| RPN + classification | 322 | 453 | 15 | 41.54% | 95.54% |

| FPN + classification | 292 | 88 | 45 | 76.84% | 86.64% |

| RFC | 322 | 259 | 15 | 55.42% | 95.54% |

| RFCC | 320 | 163 | 17 | 66.25% | 94.95% |

| RFCCD | 319 | 144 | 18 | 68.89% | 94.65% |
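The Precision and Recall columns follow from the TP, FP, and FN counts through the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The short sketch below recomputes them for two rows of the table; the helper name `precision_recall` is illustrative. Recomputed values may differ from the reported ones in the last digit, presumably because of rounding versus truncation.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall) in percent from detection counts."""
    precision = 100.0 * tp / (tp + fp)  # share of detections that are correct
    recall = 100.0 * tp / (tp + fn)     # share of persons that were detected
    return precision, recall


# (TP, FP, FN) copied from the table above
rows = {
    "FPN + classification": (292, 88, 45),
    "RFCCD": (319, 144, 18),
}

for name, (tp, fp, fn) in rows.items():
    p, r = precision_recall(tp, fp, fn)
    print(f"{name}: precision {p:.2f}%, recall {r:.2f}%")
```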

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kundid Vasić, M.; Papić, V. Multimodel Deep Learning for Person Detection in Aerial Images. Electronics 2020, 9, 1459. https://doi.org/10.3390/electronics9091459