Dehazing with Offset Correction and a Weighted Residual Map

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Degradation Model and Dark Prior

2.2. Transmission Estimation Using Offset Correction

2.3. A Weighted Residual Map

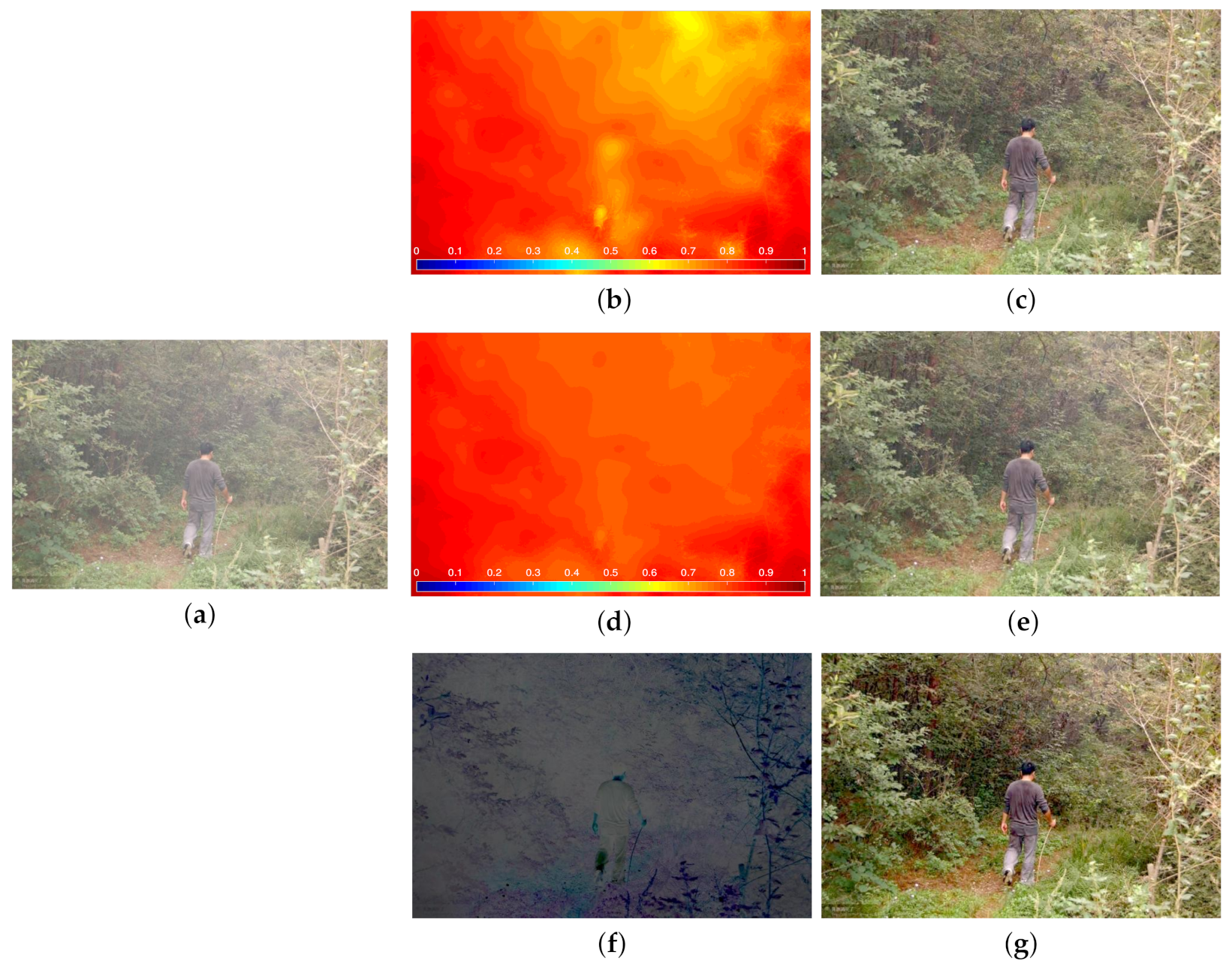

3. Results and Discussion

3.1. Ablation Study

3.2. Performance on Real-World Hazy Images

3.3. Performance on Synthetic Dataset

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lin, C.J.; Lin, C.H.; Wang, S.H. Using a Hybrid of Interval Type-2 RFCMAC and Bilateral Filter for Satellite Image Dehazing. Electronics 2020, 9, 710.

- Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. CVPR 2000 (Cat. No. PR00662), Hilton Head Island, SC, USA, 15 June 2000; Volume 1, pp. 598–605.

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Instant dehazing of images using polarization. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1, pp. 325–332.

- Kopf, J.; Neubert, B.; Chen, B.; Cohen, M.; Cohen-Or, D.; Deussen, O.; Uyttendaele, M.; Lischinski, D. Deep photo: Model-based photograph enhancement and viewing. ACM Trans. Graph. (TOG) 2008, 27, 1–10.

- Fattal, R. Single image dehazing. ACM Trans. Graph. (TOG) 2008, 27, 1–9.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Kim, J.H.; Jang, W.D.; Sim, J.Y.; Kim, C.S. Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 2013, 24, 410–425.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 617–624.

- Fattal, R. Dehazing using color-lines. ACM Trans. Graph. (TOG) 2014, 34, 1–14.

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

- Berman, D.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

- Pei, T.; Ma, Q.; Xue, P.; Ding, Y.; Hao, L.; Yu, T. Nighttime Haze Removal Using Bilateral Filtering and Adaptive Dark Channel Prior. In Proceedings of the 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC), Xiamen, China, 5–7 July 2019; pp. 218–222.

- Wang, Z.; Hou, G.; Pan, Z.; Wang, G. Single image dehazing and denoising combining dark channel prior and variational models. IET Comput. Vis. 2018, 12, 393–402.

- Zhu, M.; He, B.; Wu, Q. Single Image Dehazing Based on Dark Channel Prior and Energy Minimization. IEEE Signal Process. Lett. 2018, 25, 174–178.

- Bui, T.M.; Kim, W. Single Image Dehazing Using Color Ellipsoid Prior. IEEE Trans. Image Process. 2018, 27, 999–1009.

- Golts, A.; Freedman, D.; Elad, M. Unsupervised Single Image Dehazing Using Dark Channel Prior Loss. IEEE Trans. Image Process. 2020, 29, 2692–2701.

- Mohamed, A.; Qian, K.; Elhoseiny, M.; Claudel, C. Social-STGCNN: A Social Spatio-Temporal Graph Convolutional Neural Network for Human Trajectory Prediction. arXiv 2020, arXiv:2002.11927.

- Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

- Wang, S.Y.; Wang, O.; Zhang, R.; Owens, A.; Efros, A.A. CNN-generated images are surprisingly easy to spot... for now. arXiv 2019, arXiv:1912.11035.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. Aod-net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

- Qiao, T.; Zhang, J.; Xu, D.; Tao, D. Mirrorgan: Learning text-to-image generation by redescription. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1505–1514.

- He, Z.; Zuo, W.; Kan, M.; Shan, S.; Chen, X. Attgan: Facial attribute editing by only changing what you want. IEEE Trans. Image Process. 2019, 28, 5464–5478.

- Guo, T.; Xu, C.; Huang, J.; Wang, Y.; Shi, B.; Xu, C.; Tao, D. On Positive-Unlabeled Classification in GAN. arXiv 2020, arXiv:2002.01136.

- Dong, Y.; Liu, Y.; Zhang, H.; Chen, S.; Qiao, Y. FD-GAN: Generative Adversarial Networks with Fusion-discriminator for Single Image Dehazing. arXiv 2020, arXiv:2001.06968.

- Li, R.; Pan, J.; Li, Z.; Tang, J. Single image dehazing via conditional generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8202–8211.

- Zhang, H.; Patel, V.M. Densely Connected Pyramid Dehazing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Suárez, P.L.; Sappa, A.D.; Vintimilla, B.X.; Hammoud, R.I. Deep learning based single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1169–1176.

- Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated fusion network for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3253–3261.

- Qu, Y.; Chen, Y.; Huang, J.; Xie, Y. Enhanced Pix2pix Dehazing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Middleton, W.E.K. Vision through the Atmosphere; University of Toronto Press: Toronto, ON, Canada, 1952.

- El Khoury, J.; Thomas, J.B.; Mansouri, A. Does dehazing model preserve color information? In Proceedings of the 2014 Tenth International Conference on Signal-Image Technology and Internet-Based Systems, Marrakech, Morocco, 23–27 November 2014; pp. 606–613.

- Limare, N.; Lisani, J.L.; Morel, J.M.; Petro, A.B.; Sbert, C. Simplest color balance. Image Process. Line 2011, 1, 297–315.

| Parameters: |
|---|
| Hazy image, atmospheric light A, number of pixels MN |
| Procedures: |
| • Estimation of transmission |
| - Calculate the dark channel |
| - Calculate the full scene prior |
| - Calculate the offset-corrected transmission |
| • Estimation of dehazed image |
| - Estimate the dehazed image by the offset-correcting approach |
| - Calculate the residual map |
| - Calculate the final dehazed image |
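The first step of the procedure above, the dark channel, can be illustrated with the standard definition from He et al. (minimum over the colour channels, then minimum over a local patch). The following is a minimal pure-Python sketch of that baseline only; it does not implement the full scene prior, the offset correction, or the residual map of this paper. The function names, the `radius` parameter, and the default `omega = 0.95` (the value commonly used with the dark channel prior) are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


def dark_channel(image: Image, radius: int = 1) -> List[List[float]]:
    """Minimum over colour channels, then minimum over a square patch."""
    rows, cols = len(image), len(image[0])
    # Per-pixel minimum across the three colour channels.
    channel_min = [[min(px) for px in row] for row in image]
    dark = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Clip the (2 * radius + 1)-wide patch at the image border.
            y0, y1 = max(0, y - radius), min(rows, y + radius + 1)
            x0, x1 = max(0, x - radius), min(cols, x + radius + 1)
            dark[y][x] = min(
                channel_min[j][i] for j in range(y0, y1) for i in range(x0, x1)
            )
    return dark


def dcp_transmission(
    image: Image,
    airlight: Sequence[float],
    radius: int = 1,
    omega: float = 0.95,
) -> List[List[float]]:
    """Baseline estimate t = 1 - omega * dark(I / A).

    Assumption: this is the plain dark channel prior estimate, not the
    offset-corrected transmission described in this paper.
    """
    # Normalise each colour channel by the corresponding airlight component.
    normalised = [
        [tuple(c / a for c, a in zip(px, airlight)) for px in row]
        for row in image
    ]
    return [[1.0 - omega * d for d in row] for row in dark_channel(normalised, radius)]
```

Pixel values are assumed to lie in [0, 1]. The nested loops keep the sketch dependency-free; a practical implementation would normally use an array library and a minimum filter instead.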

| Method | Desk | Drawing Room | Sunlight | Bank | Buildings | Bird’s Nest Stadium |
|---|---|---|---|---|---|---|
| DCP | 16.3196 | 17.6363 | 16.7733 | 17.5155 | 16.9791 | 16.1003 |
| Berman et al. | 14.4116 | 15.7852 | 20.6931 | 13.8929 | 21.2273 | 14.9177 |
| DCPDN | 18.4555 | 14.2824 | 19.5346 | 18.5636 | 10.8738 | 14.5544 |
| Zhu et al. | 16.2172 | 20.0551 | 17.7547 | 15.9751 | 17.2879 | 19.1754 |
| Golts et al. | 14.0569 | 14.7816 | 18.1528 | 19.5449 | 23.4645 | 17.6003 |
| Our study | 18.2516 | 20.5433 | 20.7599 | 22.0458 | 21.1791 | 22.0905 |

| Method | Desk | Drawing Room | Sunlight | Bank | Buildings | Bird’s Nest Stadium |
|---|---|---|---|---|---|---|
| DCP | 4.1469 | 3.9854 | 4.2557 | 3.6922 | 3.6805 | 3.6869 |
| Berman et al. | 3.7576 | 3.9218 | 3.6357 | 3.4712 | 3.8265 | 3.9898 |
| DCPDN | 4.5575 | 4.2922 | 4.4491 | 4.1431 | 3.3413 | 3.9456 |
| Zhu et al. | 4.5649 | 4.4157 | 4.5340 | 3.9555 | 3.7579 | 4.1463 |
| Golts et al. | 4.5572 | 3.6419 | 4.2787 | 4.1060 | 4.2186 | 4.2094 |
| Our study | 4.5706 | 4.3003 | 4.5595 | 4.1577 | 4.4403 | 4.2952 |

| Method | PSNR (dB) |
|---|---|
| DCP | 18.7392 |
| Berman et al. | 17.3103 |
| DCPDN | 16.7077 |
| Zhu et al. | 19.4270 |
| Golts et al. | 19.3258 |
| Our study | 20.5538 |
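For readers who want to reproduce this kind of comparison, PSNR between a ground-truth image and a dehazed result can be computed as below. This is a minimal sketch of the usual definition, 10·log10(peak² / MSE); the function name `psnr`, the flat single-channel image layout, and the default `peak = 255.0` (8-bit images) are assumptions, and the exact evaluation protocol behind the values in the table is not specified here.

```python
import math
from typing import Sequence


def psnr(
    reference: Sequence[Sequence[float]],
    restored: Sequence[Sequence[float]],
    peak: float = 255.0,
) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    # Flatten both images so the mean squared error is a single pass.
    ref = [value for row in reference for value in row]
    out = [value for row in restored for value in row]
    if not ref or len(ref) != len(out):
        raise ValueError("images must be non-empty and have the same size")
    mse = sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)
```

For colour images, one common choice is to pass all channel values of each row as a single sequence, which averages the squared error over every channel; other conventions (for example, luminance only) give different numbers, so the convention should be stated alongside any reported PSNR.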

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Su, C.; Wang, W.; Zhang, X.; Jin, L. Dehazing with Offset Correction and a Weighted Residual Map. Electronics 2020, 9, 1419. https://doi.org/10.3390/electronics9091419
