Gaze-Based Vehicle Driving Evaluation of System with an Actual Vehicle at an Intersection with a Traffic Light

Abstract

1. Introduction

2. Previous Studies about Gaze Estimation Method for Driving the Car

3. Results

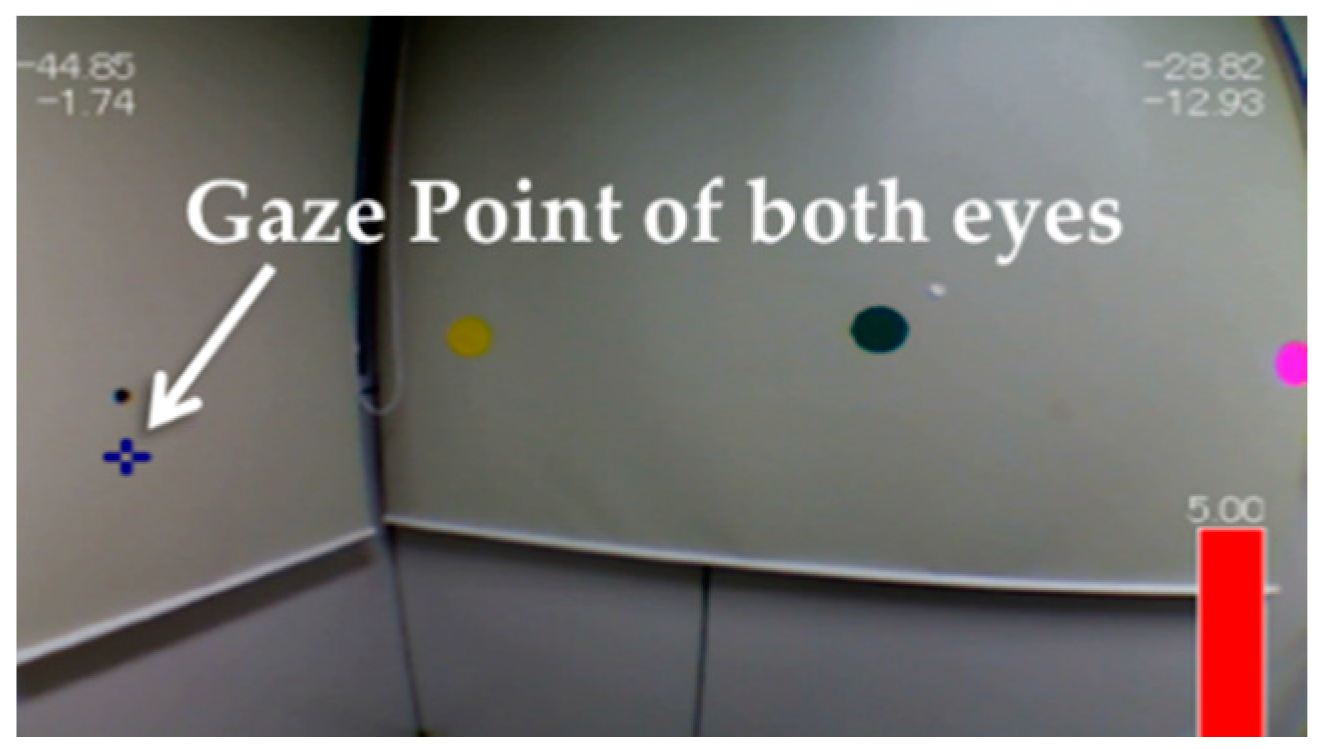

3.1. Measurement System Using TalkEye Lite

3.2. The Head Angle Estimation Method Using Template Matching

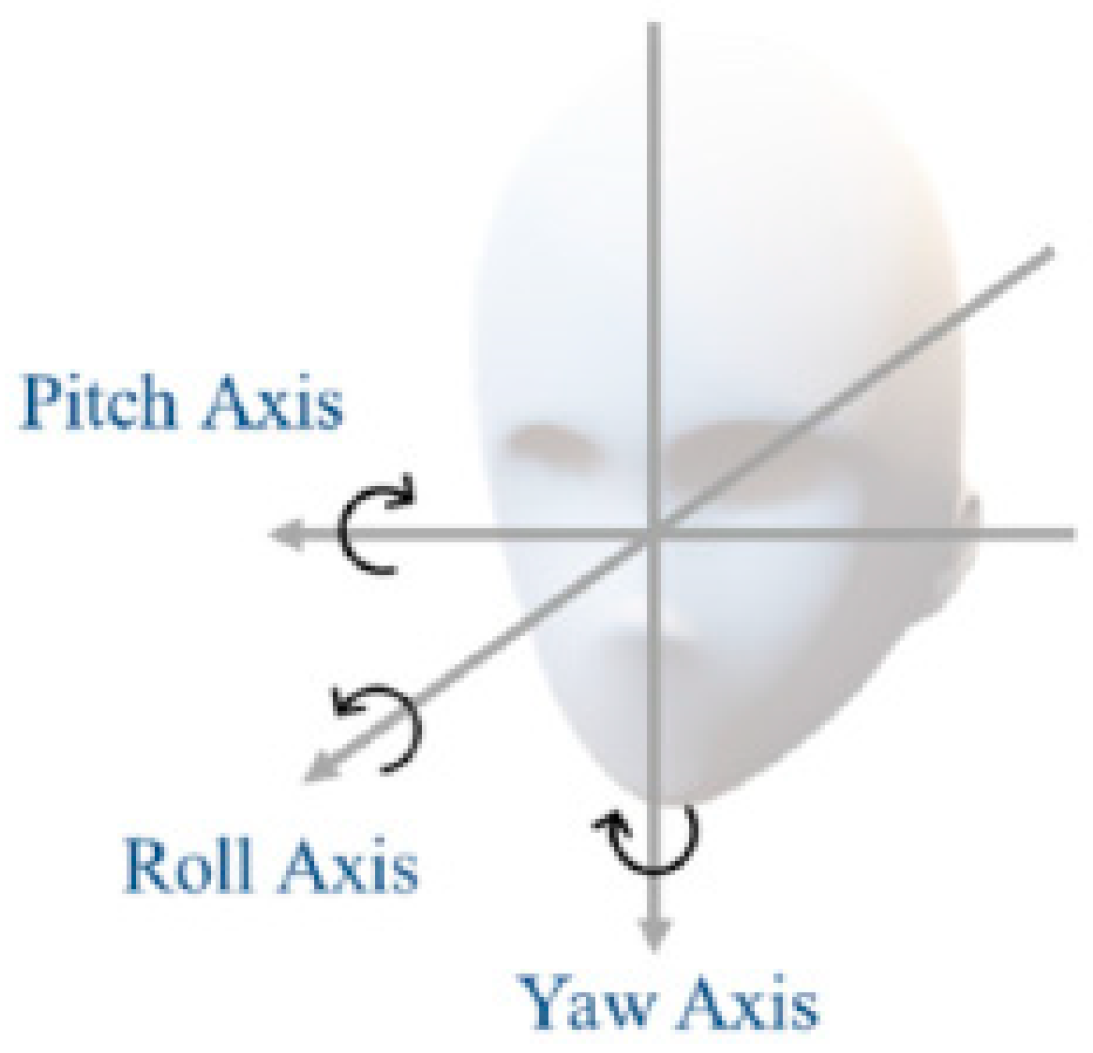

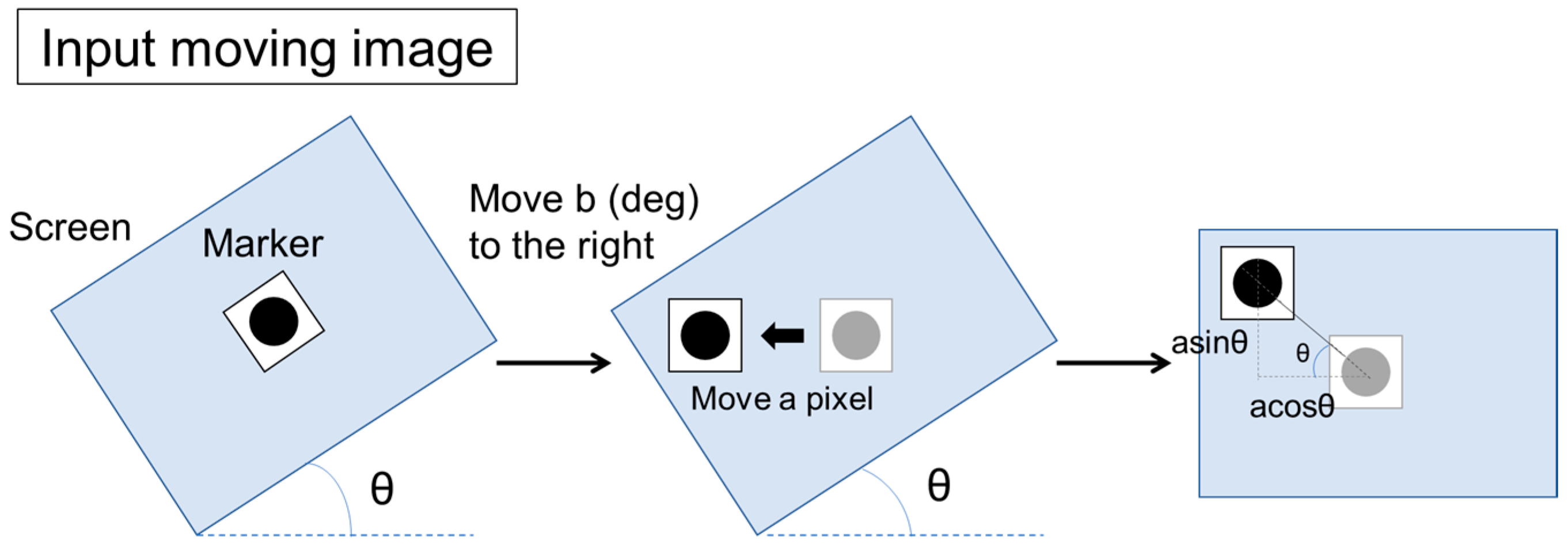

3.2.1. Estimating Inclination of Head

3.2.2. Calculation of the Roll Angle

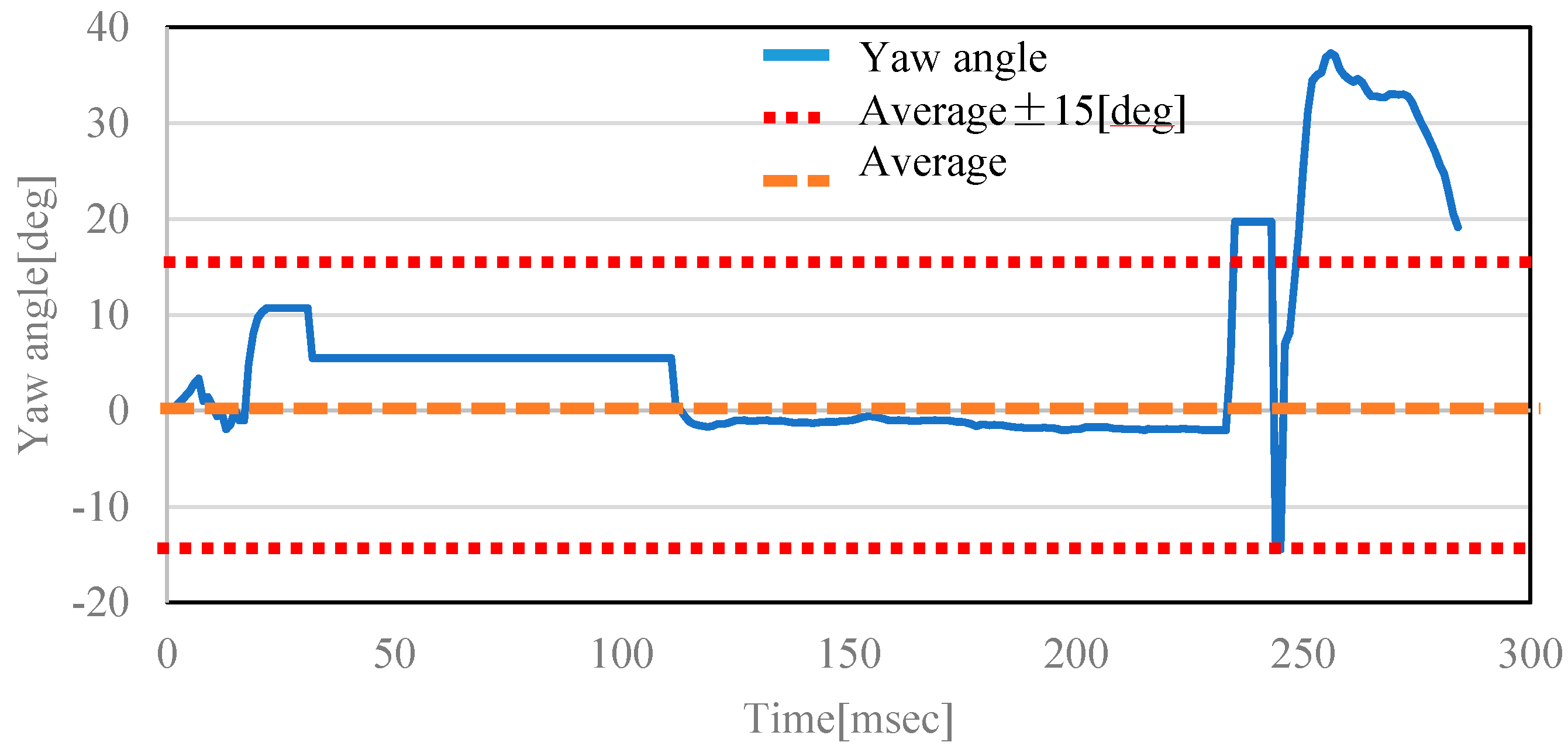

3.2.3. Calculation of Yaw Angle and Pitch Angle

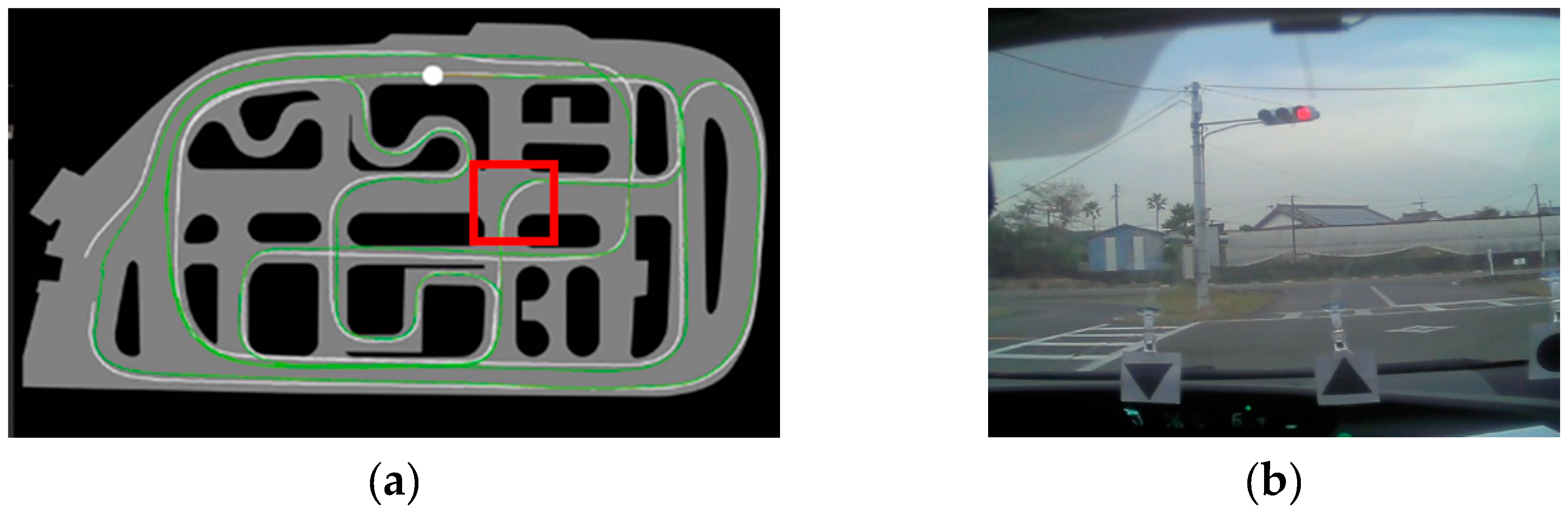

3.3. GPS Information

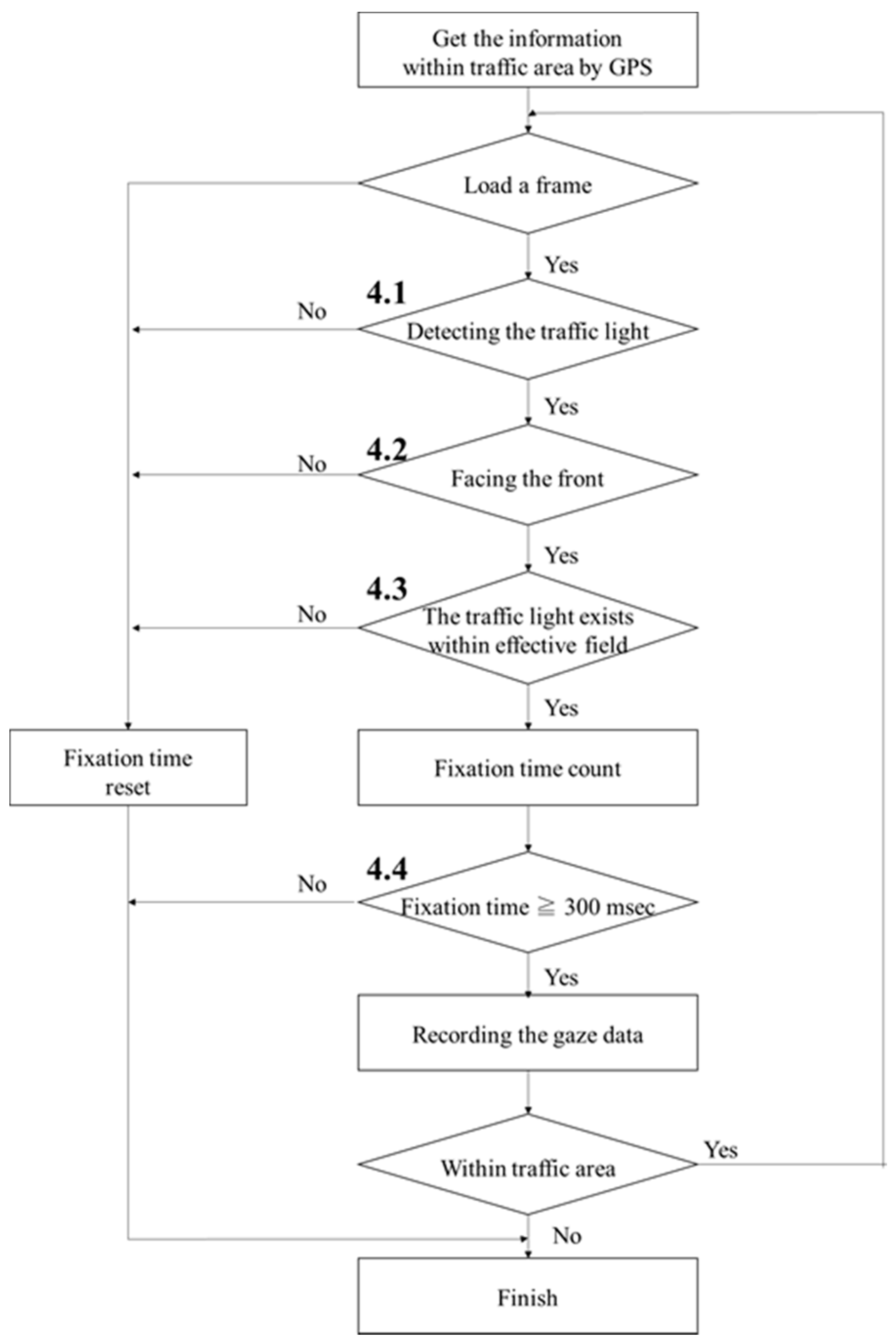

4. Proposed Method

4.1. The Traffic Light in the Video is Recognized by Image Processing

4.2. The Driver Faces the Front

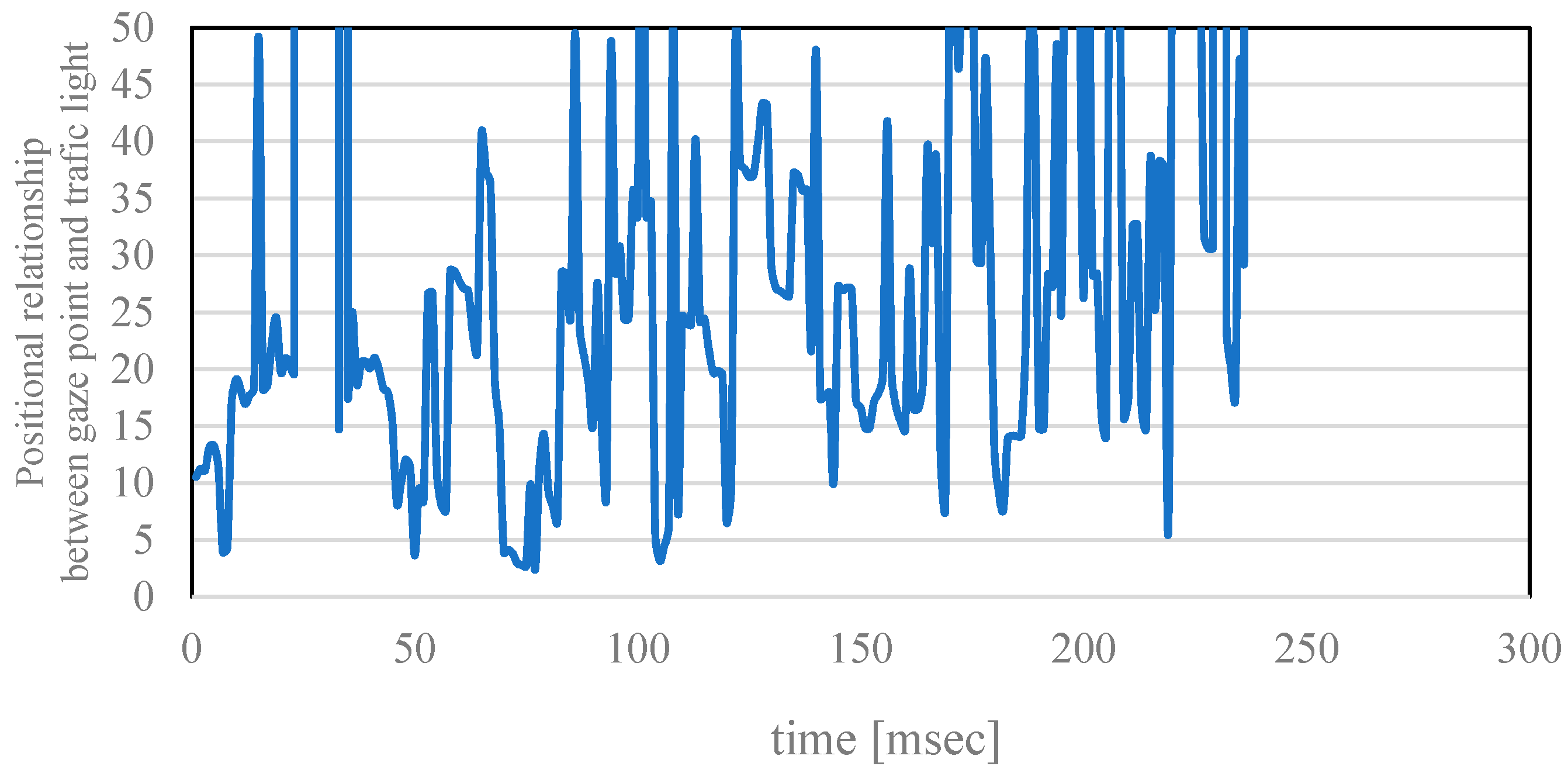

4.3. The Traffic Light Exists Within the Visual Effective Field

4.4. The Conditions from 4.1 to 4.3 are Satisfied for 300 Milliseconds or More
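The four conditions in Sections 4.1 to 4.4 can be read as a run-length test over the video frames. The following is a minimal sketch of that reading, not the authors' implementation. It assumes that each frame already carries three boolean flags produced by earlier processing steps, and that the duration of a fixation is measured from the first to the last frame of a run. The names `Frame`, `find_fixations`, and the field names are hypothetical; only the 300 ms threshold comes from the heading above.

```python
from dataclasses import dataclass
from typing import List, Tuple

MIN_FIXATION_MS = 300  # threshold stated in the heading of Section 4.4


@dataclass
class Frame:
    """Per-frame flags (hypothetical names, assumed to be computed upstream)."""
    t_ms: float           # timestamp of the video frame in milliseconds
    light_detected: bool  # condition 4.1: traffic light recognized in the image
    facing_front: bool    # condition 4.2: driver faces the front
    light_in_field: bool  # condition 4.3: light lies within the effective visual field


def find_fixations(frames: List[Frame],
                   min_ms: float = MIN_FIXATION_MS) -> List[Tuple[float, float]]:
    """Return (start_ms, end_ms) for every run of consecutive frames in which
    all three conditions hold and whose span is at least min_ms."""
    fixations = []
    start = None  # timestamp of the first frame of the current run
    last = None   # timestamp of the most recent frame of the current run
    for f in frames:
        if f.light_detected and f.facing_front and f.light_in_field:
            if start is None:
                start = f.t_ms
            last = f.t_ms
        else:
            # The run ended; keep it only if it lasted long enough.
            if start is not None and last - start >= min_ms:
                fixations.append((start, last))
            start = None
    # Close a run that reaches the end of the recording.
    if start is not None and last - start >= min_ms:
        fixations.append((start, last))
    return fixations


# Illustrative data only: one frame every 100 ms, light outside the field at 400 ms.
frames = [Frame(t, True, True, t != 400) for t in range(0, 1000, 100)]
fixations = find_fixations(frames)
number_of_fixations = len(fixations)
total_fixation_ms = sum(end - start for start, end in fixations)
```

Under these assumptions, the number of fixations and the total fixation time follow directly from the returned intervals. Whether one frame period should be added to each span is a design choice the sketch leaves open.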

5. Experimental Methods and Results

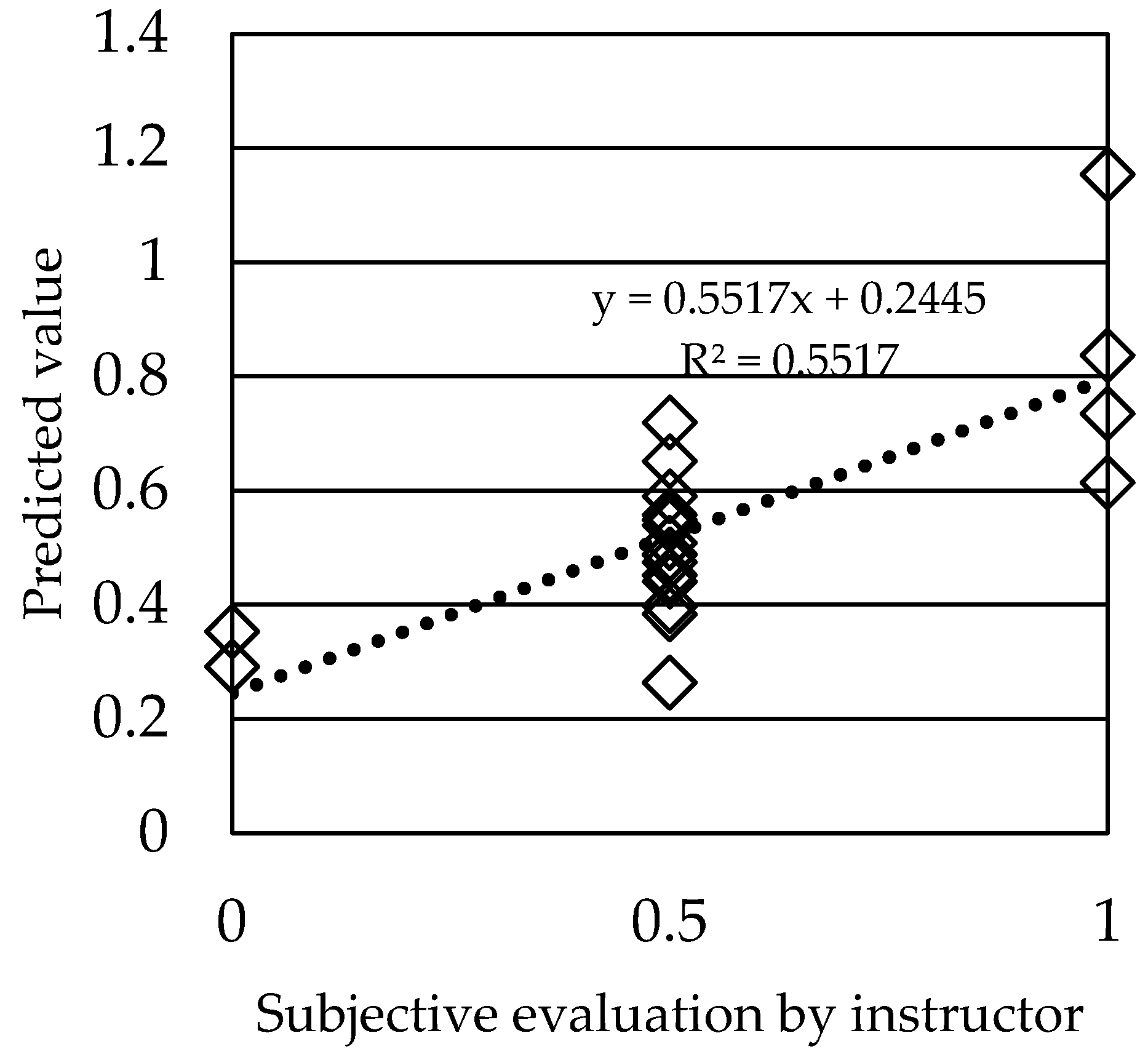

5.1. Result Using Various Variables as Inputs

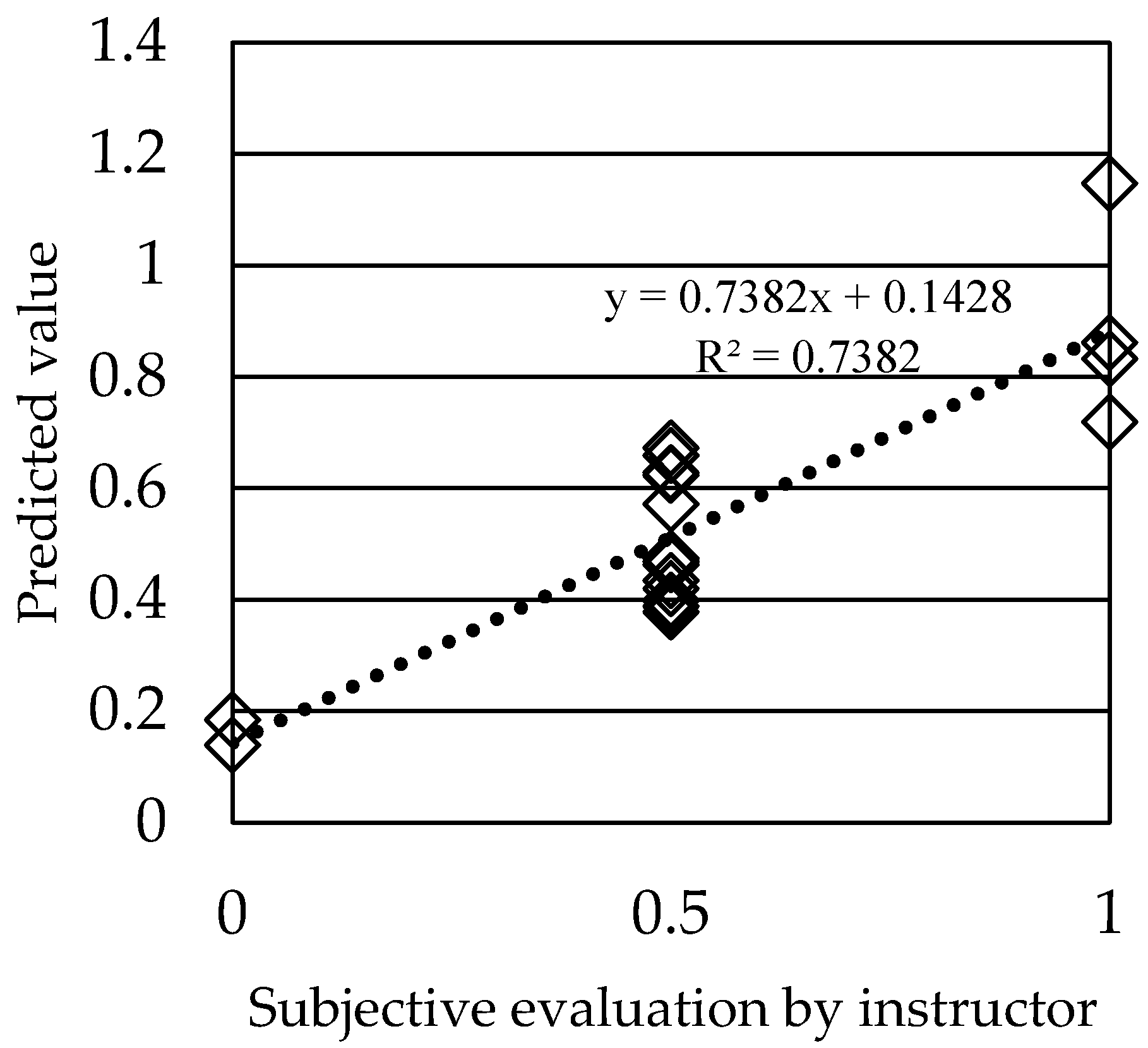

5.2. Result Using Subjects’ Age and the Number of Fixations as Inputs

5.3. Discussion

6. Conclusions

- (1) We defined the condition for eye fixation, one type of eye movement, using the coordinates of the traffic light in the video obtained by image processing and the coordinates of the gaze obtained from the eye tracking device.

- (2) We constructed a system to extract gaze information about eye fixation.

- (3) We investigated the ability of gaze information and drivers’ ages to predict the three-level subjective evaluation given by a professional driving instructor.

Author Contributions

Funding

Conflicts of Interest

References

- Cabinet Office in Japan. Preventing Traffic Accidents Involving the Elderly. The Current Situation Surrounding the Elderly. Available online: https://www8.cao.go.jp/koutu/taisaku/h29kou_haku/zenbun/genkyo/feature/feature_01.html (accessed on 16 March 2020). (In Japanese).

- Negative Effects of Operation Suspension. Available online: https://www.ncgg.go.jp/cgss/department/cre/gold/about/page2.html (accessed on 16 March 2020). (In Japanese).

- Shimada, H.; Makizako, H.; Tsutsumimoto, K.; Hotta, R.; Nakakubo, S.; Doi, T. Driving and Incidence of Functional Limitation in Older People: A Prospective Population-Based Study. Gerontology 2016, 62, 636–643.

- Shimada, H.; Makizako, H.; Doi, T.; Lee, S. Lifestyle activities and the risk of dementia in older Japanese adults. Geriatr. Gerontol. Int. 2018, 18, 1491–1496.

- Schultheis, M.T.; Deluca, J.; Chute, D.L. Handbook for the Assessment of Driving Capacity; Academic Press: San Diego, CA, USA, 2009.

- Hartman, E. Driver vision requirements. Soc. Automot. Eng. 1970, 629–630.

- Owsley, C.; Ball, K.; Sloane, M.E.; Roenker, D.L.; Bruni, J.R. Visual/cognitive correlates of vehicle accidents in older drivers. Psychol. Aging 1991, 6, 403–415.

- Takei Scientific Instruments Cooperation. Available online: https://www.takei-si.co.jp/en/productinfo/detail/65.html (accessed on 16 March 2020).

- Krajewski, J.; Trutschel, U.; Golz, M.; Sommer, D.; Edwards, D. Estimating fatigue from predetermined speech samples transmitted by operator communication systems. In Proceedings of the 5th International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Big Sky, MT, USA, 22–25 June 2009.

- Daza, I.G.; Hernandez, N.; Bergasa, L.M.; Parra, I.; Yebes, J.J.; Gavilan, M.; Quintero, R.; Llorca, D.F.; Sotelo, M.A. Drowsiness monitoring based on driver and driving data fusion. In Proceedings of the 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1199–1204.

- Han, C.C.; Pai, Y.J.; Lee, C.H. A Fast Implementation Framework for Drowsy Driving Detection on Embedded Systems. In Proceedings of the 2019 International Conference on Machine Learning and Cybernetics (ICMLC), Kobe, Japan, 7–10 July 2019; pp. 854–860.

- Gao, F.; He, B.; He, Y. Detection of Driving Capability Degradation for Human-machine Cooperative Driving. Sensors 2020, 20, 1968.

- Kunishige, M.; Fukuda, H.; Iida, T.; Kawabata, N.; Ishizuki, C.; Miyaguchi, H. Spatial navigation ability and gaze switching in older drivers: A driving simulator study. Hong Kong J. Occup. Ther. 2019, 32, 22–31.

- Van Leeuwen, P.M.; Happee, R.; de Winter, J.C.F. Changes of driving performance and gaze behavior of novice drivers during a 30-min simulator-based training. Procedia Manuf. 2015, 3, 3325–3332.

- Reimer, B. Impact of Cognitive Task Complexity on Drivers’ Visual Tunneling. Transp. Res. Rec. J. Transp. Res. Board 2009, 2138, 13–19.

- Sakurai, K.; Tamura, H. A Study on Gaze Range Calculation Method during an Actual Car Driving Using Eyeball Angle and Head Angle Information. Sensors 2019, 19, 4774.

- Koga, K. The Eye Movement Research Handbook; Japan Institute for Science of Labor: Tokyo, Japan, 1998. (In Japanese)

- Ohsuga, T.; Tanaka, M.; Niiyama, Y.; Inoue, H. Experimental Study on Eye Fixation Time in Opinion Test with Food Pictures. Trans. Soc. Instrum. Control. Eng. 2013, 49, 880–886.

- Matsuo, H.; Kimura, K. Traffic Lights Recognition Using Learning and Detecting Shape and Color, IPSJ SIG Technical Report. IPSJ SIG Notes CVIM 2014, 2014, 1–7. (In Japanese)

- Research on Traffic Light Recognition Method for Tsukuba Challenge. Available online: http://www.ail.cs.gunma-u.ac.jp/ailwiki/index.php (accessed on 16 March 2020). (In Japanese).

- Omachi, M.; Omachi, S. Fast Detection of Traffic Light with Color and Edge Information; The Institute of Image Electronics Engineers of Japan: Tokyo, Japan, 2009; Volume 38, pp. 673–679. (In Japanese)

- Moizumi, H.; Sugaya, Y.; Omachi, M.; Omachi, S. Traffic Light Detection Considering Color Saturation Using In-Vehicle Stereo Camera. J. Inf. Process. 2016, 24, 349–357.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Yamasaki, A.; Raksincharoensak, P.; Shino, M. Extraction of Driver’s Gaze Region by Face Direction Estimation Using On-board Cameras. In Transactions of the Society of Automotive Engineers of Japan; Society of Automotive Engineers of Japan: Tokyo, Japan, 2017; Volume 48, pp. 1113–1119. (In Japanese)

- Salvi, S.M.; Akhtar, S.; Currie, Z. Ageing changes in the eye. Postgrad. Med. J. 2006, 82, 581–587.

- Hoffman, J.E. Stages of processing in Visual Search and Attention. In Stratification in Cognition and Consciousness; Challis, B.H., Velichkovsky, B.M., Eds.; John Benjamins: Amsterdam, The Netherlands; Philadelphia, PA, USA, 1999; pp. 43–71.

- Trevarthen, C. Two visual systems in primates. Psychologische Forschung 1969, 31, 321–337.

- Velichkovsky, B.M. Visual Cognition and its Spatial-Temporal Context. In Cognitive Research in Psychology; Klix, F., Hoffmann, J., van der Meer, E., Eds.; North Holland: Amsterdam, The Netherlands, 1982; pp. 30–48.

- Pomplun, M. Analysis and Models of Eye Movements in Comparative Visual Search; Cuvillier: Göttingen, Germany, 1998.

- Velichkovsky, B.M.; Rothert, A.; Miniotas, D.; Dornhoefer, S.M.; Joos, M.; Pannasch, S. Visual Fixations as a Rapid Indicator of Hazard Perception. In Operator Functional State and Impaired Performance in Complex Work Environments Amsterdam/Washington; Hockey, G.H.R., Gaillard, A.W.K., Burov, O., Eds.; IOS Press: Amsterdam, The Netherlands, 2003; pp. 313–321.

- Sun, Q.; Xia, J.C.; He, J.; Foster, J.K.; Falkmer, T.; Lee, H. Towards unpacking older drivers’ visual-motor coordination: A gaze-based integrated driving assessment. Accid. Anal. Prev. 2018, 113, 85–96.

- OFA Support. S.D.A.P. Available online: http://minamikyusyu-car.main.jp/sdap/ (accessed on 17 March 2020). (In Japanese).

| Method | Recognition Rate |
|---|---|
| Haar-like feature + Adaboost [19] | 80.0% |
| RGB → HSV + Extraction of specific color + Noise removal [20] | 84.0% |
| RGB → Normalized RGB + Extraction of candidate region + Extraction of edge + Apply to the circle equation [21] | 86.6% |
| Histogram + Kalman filter [22] | 86.0% |
| YOLOv2 [23] | 87.4% |
| YOLOv2-tiny [23] | 93.0% |

| Learning Environment | | Execution Environment | |
|---|---|---|---|
| Memory | 16 GB | Memory | 8 GB |
| CPU | Core i7 8700 (3.2 GHz) | CPU | Core i7 8700 (3.2 GHz) |
| GPU | GeForce RTX 2080 (VRAM: 8 GB) | OpenCV | 3.4.0 |
| CUDA | 10 | | |

| Subject | Age | Weather | Time of Day | Subjective Evaluation | S.D.A.P. Score [32] | Number of Fixations 10 | Total of Fixation Time 10 | Minimum Distance 10 | Average Distance 10 | Number of Fixations 15 | Total of Fixation Time 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 82 | Rain | 2 p.m. | 0.5 | −766 | 10 | 7.8 | 0.82 | 5.08 | 12 | 12.6 |
| B | 66 | Rain | 3 p.m. | 0 | −1650 | 4 | 1 | 2.51 | 6.19 | 12 | 2.5 |
| C | 77 | Fine | 4 p.m. | 0.5 | −910 | 4 | 1.6 | 4.14 | 7.50 | 10 | 3.8 |
| D | 30 | Fine | 4 p.m. | 1 | −49 | 10 | 1.8 | 5.20 | 7.96 | 22 | 7.5 |
| E | 79 | Cloudy | 2 p.m. | 1 | −390 | 18 | 6.8 | 0.36 | 5.37 | 26 | 10.8 |
| F | 40 | Fine | 4 p.m. | 0.5 | −235 | 10 | 3.3 | 1.60 | 7.31 | 10 | 5.8 |
| G | 38 | Fine | 4 p.m. | 1 | −605 | 4 | 0.8 | 3.88 | 6.85 | 6 | 1.1 |
| H | 77 | Fine | 3 p.m. | 0.5 | −717 | 0 | 0 | – | – | 4 | 0.6 |
| I | 53 | Cloudy | 3 p.m. | 1 | −440 | 10 | 2.6 | 1.55 | 6.30 | 22 | 5.7 |
| J | 67 | Cloudy | 3 p.m. | 0.5 | −195 | 10 | 3.2 | 4.49 | 6.93 | 10 | 6.2 |
| K | 59 | Fine | 4 p.m. | 0.5 | −355 | 10 | 2.7 | 2.11 | 4.39 | 12 | 2.7 |
| L | 57 | Fine | 1 p.m. | 0.5 | −193 | 12 | 12.5 | 0.88 | 6.19 | 8 | 15 |
| M | 44 | Fine | 1 p.m. | 0.5 | −738 | 8 | 1.3 | 2.48 | 7.96 | 12 | 5.1 |
| N | 84 | Cloudy | 1 p.m. | 0 | −957 | 4 | 0.6 | 2.55 | 5.85 | 12 | 2.1 |
| O | 67 | Cloudy | 2 p.m. | 0.5 | −450 | 12 | 9.4 | 1.44 | 6.76 | 10 | 14.5 |
| P | 49 | Cloudy | 11 a.m. | 0.5 | −217 | 16 | 3.9 | 3.10 | 7.19 | 14 | 5.6 |
| Q | 69 | Cloudy | 11 a.m. | 0.5 | −1312 | 6 | 1.7 | 3.43 | 5.37 | 14 | 4.4 |
| R | 74 | Fine | 10 a.m. | 0.5 | −785 | 16 | 6.1 | 1.72 | 5.76 | 8 | 7.5 |
| S | 40 | Fine | 2 p.m. | 0.5 | −733 | 10 | 2.8 | 0.87 | 5.77 | 20 | 6.3 |
| T | 67 | Cloudy | 1 p.m. | 0.5 | −822 | 6 | 4.8 | 3.75 | 7.06 | 4 | 5 |
| U | 67 | Cloudy | 1 p.m. | 0.5 | −645 | 4 | 1.7 | 1.99 | 6.90 | 2 | 1.5 |
| V | 46 | Cloudy | 4 p.m. | 0.5 | −360 | 0 | 0 | – | – | 2 | 0.4 |

| Subject | Minimum Distance 15 | Average Distance 15 | Number of Fixations 20 | Total of Fixation Time 20 | Minimum Distance 20 | Average Distance 20 | Number of Fixations 25 | Total of Fixation Time 25 | Minimum Distance 25 | Average Distance 25 |
|---|---|---|---|---|---|---|---|---|---|---|
| A | 0.82 | 7.67 | 10 | 15.1 | 0.70 | 8.35 | 6 | 15.6 | 0.82 | 9.57 |
| B | 2.51 | 9.31 | 4 | 5.1 | 2.15 | 11.37 | 20 | 6 | 2.51 | 14.99 |
| C | 4.14 | 10.23 | 12 | 5.8 | 3.55 | 11.02 | 12 | 6.8 | 4.14 | 13.29 |
| D | 5.20 | 11.17 | 29 | 15.3 | 4.46 | 12.48 | 18 | 16.5 | 5.20 | 15.20 |
| E | 0.36 | 7.35 | 28 | 13.8 | 0.31 | 8.00 | 34 | 15.2 | 0.36 | 9.72 |
| F | 1.22 | 8.17 | 13 | 9.8 | 1.05 | 8.71 | 14 | 8.7 | 1.22 | 11.10 |
| G | 3.88 | 8.03 | 8 | 2.5 | 3.33 | 11.78 | 10 | 2.9 | 3.88 | 14.59 |
| H | 12.41 | 14.22 | 3 | 1.8 | 9.01 | 14.71 | 8 | 2.9 | 9.01 | 16.81 |
| I | 1.55 | 8.85 | 17 | 10.3 | 1.55 | 11.98 | 40 | 15.5 | 1.55 | 14.50 |
| J | 4.49 | 9.90 | 10 | 10.6 | 4.49 | 12.62 | 16 | 13.5 | 4.49 | 14.67 |
| K | 2.11 | 4.39 | 8 | 3.8 | 2.11 | 6.47 | 20 | 6.9 | 0.60 | 14.00 |
| L | 0.88 | 7.09 | 3 | 15.6 | 1.17 | 7.46 | 4 | 15.8 | 0.88 | 7.65 |
| M | 2.48 | 10.92 | 5 | 7.9 | 2.48 | 12.96 | 10 | 8.4 | 2.48 | 13.39 |
| N | 2.55 | 9.42 | 6 | 3.1 | 2.94 | 11.55 | 10 | 3.8 | 2.55 | 13.50 |
| O | 1.44 | 8.48 | 17 | 16.9 | 1.24 | 8.49 | 12 | 18 | 1.44 | 10.48 |
| P | 3.10 | 8.82 | 8 | 7.2 | 3.10 | 10.74 | 14 | 7.7 | 3.10 | 11.51 |
| Q | 3.43 | 9.02 | 9 | 7.5 | 2.94 | 10.34 | 16 | 9.2 | 3.43 | 13.26 |
| R | 1.72 | 6.80 | 14 | 7.5 | 1.47 | 7.46 | 12 | 10 | 0.07 | 9.53 |
| S | 0.87 | 9.06 | 4 | 6.1 | 0.74 | 10.10 | 14 | 9.5 | 0.87 | 12.06 |
| T | 3.75 | 7.61 | 8 | 6.1 | 3.22 | 10.78 | 20 | 9.6 | 3.75 | 13.56 |
| U | 1.99 | 7.92 | 13 | 5.8 | 1.71 | 12.82 | 12 | 6.5 | 1.99 | 16.14 |
| V | 9.94 | 11.90 | 9 | 1.2 | 11.63 | 15.90 | 4 | 2.1 | 9.94 | 18.42 |

| Variable | Coefficient | p-Value |
|---|---|---|
| Intercept | 1.444 | 0.003 |
| Age | −0.007 | 0.008 |
| Number of fixations 20 | 0.027 | 0.000 |
| Minimum distance 15 | 0.161 | 0.012 |
| Average distance 15 | −0.133 | 0.020 |
| Minimum distance 20 | −0.120 | 0.041 |
| Average distance 20 | 0.122 | 0.041 |

| Variable | Coefficient | p-Value |
|---|---|---|
| Intercept | 1.444 | 0.003 |
| Age | −0.007 | 0.008 |
| Number of fixations 20 | 0.027 | 0.000 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shimauchi, T.; Sakurai, K.; Tate, L.; Tamura, H. Gaze-Based Vehicle Driving Evaluation of System with an Actual Vehicle at an Intersection with a Traffic Light. Electronics 2020, 9, 1408. https://doi.org/10.3390/electronics9091408
