Smart Image Enhancement Using CLAHE Based on an F-Shift Transformation during Decompression

Abstract

1. Introduction

2. Related Work

2.1. F-Shift Transformation

2.2. Two Dimensional F-Shift Transformation (TDFS)

2.3. Contrast-Limited Adaptive Histogram Equalization (CLAHE)

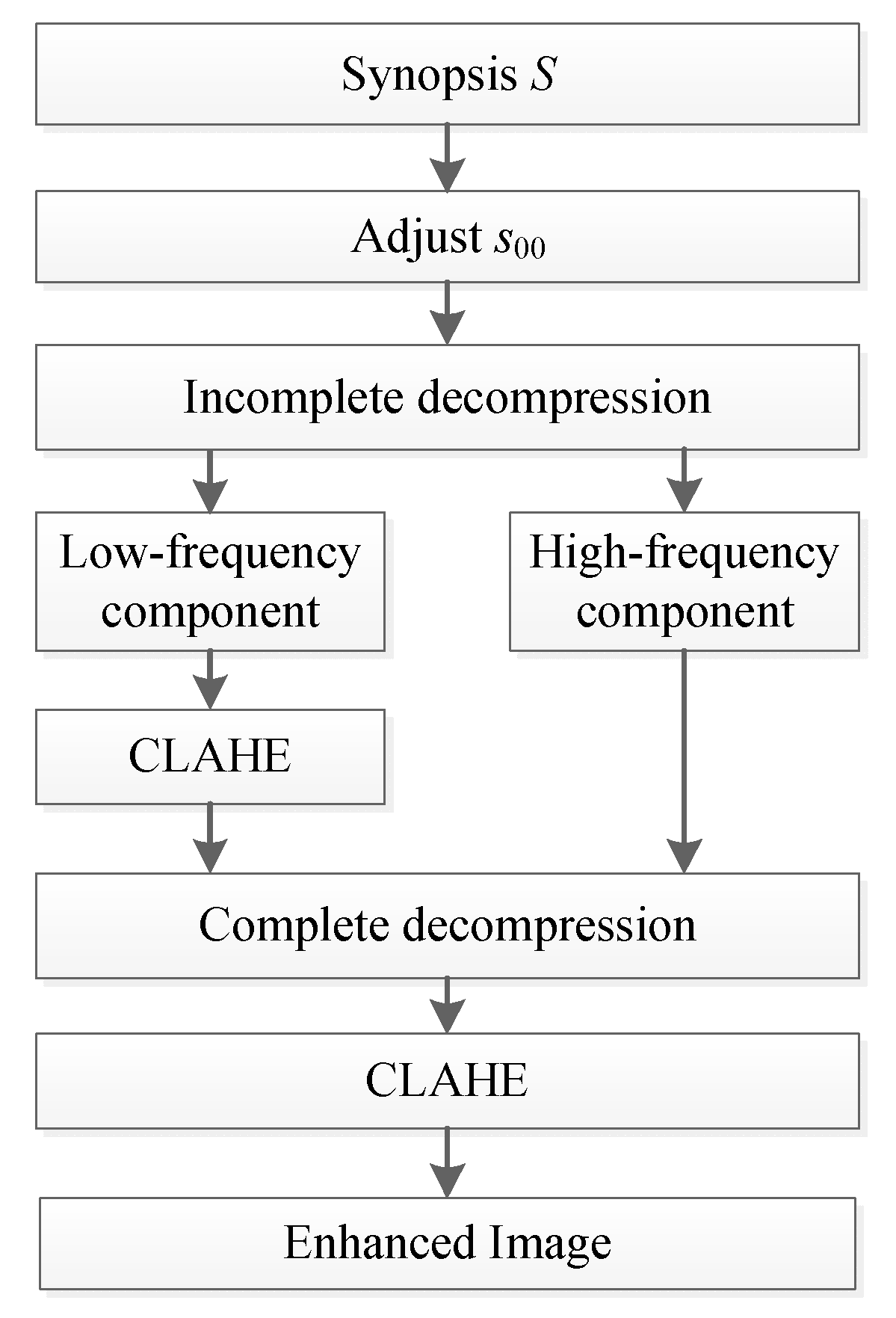

3. Proposed Method

3.1. Adaptive Coefficient Adjustment

3.2. Incomplete Decompression and Enhancing the Low-Frequency Component

3.3. Complete Decompression and Further Enhancement

4. Experimental Results

4.1. Impact of the Error Bound on the Enhancement and Compression Results

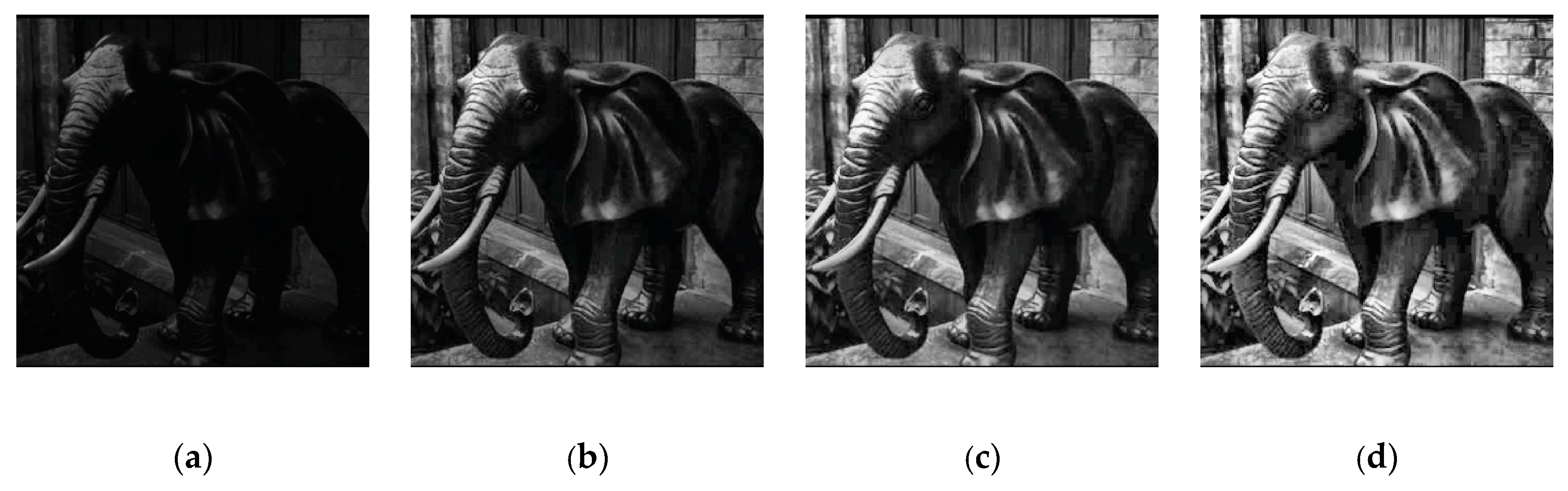

4.2. Comparison of the Enhancement Effect of Different Methods

4.3. Method Validation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Hsia, C.H.; Yang, J.H.; Chiang, J.S. Complexity reduction method for ultrasound imaging enhancement in tetrolet transform domain. J. Supercomput. 2020, 76, 1438–1449.

2. Xia, K.J.; Wang, J.Q.; Cai, J. A novel medical image enhancement algorithm based on improvement correction strategy in wavelet transform domain. Cluster Comput. 2019, 22, 10969–10977.

3. Pang, C.; Zhang, Q.; Zhou, X.; Hansen, D.; Wang, S.; Maeder, A. Computing unrestricted synopses under maximum error bound. Algorithmica 2013, 65, 1–42.

4. Zhang, Q.; Pang, C.; Hansen, D. On multidimensional wavelet synopses for maximum error bounds. In International Conference on Database Systems for Advanced Applications; Springer: Berlin/Heidelberg, Germany, 2009; pp. 646–661.

5. Rahman, S.; Rahman, M.M.; Abdullah-Al-Wadud, M.; Al-Quaderi, G.D.; Shoyaib, M. An adaptive gamma correction for image enhancement. EURASIP J. Image Video Process. 2016, 1, 1–13.

6. Lim, S.H.; Isa, N.A.M.; Ooi, C.H.; Vin Toh, K.K. A new histogram equalization method for digital image enhancement and brightness preservation. Signal Image Video Process. 2015, 9, 675–689.

7. Zhuang, L.; Guan, Y. Image enhancement via subimage histogram equalization based on mean and variance. Comput. Intell. Neurosci. 2017, 1–12.

8. Arora, S.; Agarwal, M.; Kumar, V.; Gupta, D. Comparative study of image enhancement techniques using histogram equalization on degraded images. Int. J. Eng. Technol. 2018, 7, 468–471.

9. Rahman, Z.U.; Jobson, D.J.; Woodell, G.A. Retinex processing for automatic image enhancement. J. Electron. Imaging 2004, 13, 100–110.

10. Lee, S. An efficient content-based image enhancement in the compressed domain using retinex theory. IEEE Trans. Circuits Syst. Video Technol. 2007, 17, 199–213.

11. Anand, S.; Gayathri, S. Mammogram image enhancement by two-stage adaptive histogram equalization. Optik 2015, 126, 3150–3152.

12. Sargun, S.; Rana, S.B. Performance evaluation of HE, AHE and fuzzy image enhancement. Int. J. Comput. Appl. 2015, 122, 14–19.

13. Zuiderveld, K. Contrast limited adaptive histogram equalization. Graph. Gems 1994, 474–485.

14. Singh, P.; Mukundan, R.; Ryke, R.D. Feature enhancement in medical ultrasound videos using contrast-limited adaptive histogram equalization. J. Digital Imaging 2019, 1–13.

15. Wang, J.W.; Le, N.T.; Lee, J.S.; Wang, C.C. Color face image enhancement using adaptive singular value decomposition in fourier domain for face recognition. Pattern Recognit. 2016, 57, 31–49.

16. Makandar, A.; Halalli, B. Image enhancement techniques using highpass and lowpass filters. Int. J. Comput. Appl. 2015, 109, 21–27.

17. Kuo, C.M.; Yang, N.C.; Liu, C.S.; Tseng, P.Y.; Chang, C.K. An effective and flexible image enhancement algorithm in compressed domain. Multimed. Tools Appl. 2016, 75, 1177–1200.

18. Sharma, A.; Khunteta, A. Satellite image enhancement using discrete wavelet transform, singular value decomposition and its noise performance analysis. In Proceedings of the 2016 International Conference on Micro-Electronics and Telecommunication Engineering (ICMETE), Ghaziabad, India, 22–23 September 2016; pp. 594–599.

19. Hsieh, C.T.; Lai, E.; Wang, Y.C. An effective algorithm for fingerprint image enhancement based on wavelet transform. Pattern Recognit. 2003, 36, 303–312.

20. Kim, S.; Kang, W.; Lee, E.; Paik, J. Wavelet-domain color image enhancement using filtered directional bases and frequency-adaptive shrinkage. IEEE Trans. Consum. Electron. 2010, 56, 1063–1070.

21. Uhring, W.; Jung, M.; Summ, P. Image processing provides low-frequency jitter correction for synchroscan streak camera temporal resolution enhancement. Opt. Metrol. Prod. Eng. 2004, 5457, 245–252.

22. Yang, J.; Wang, Y.; Xu, W.; Dai, Q. Image and video denoising using adaptive dual-tree discrete wavelet packets. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 642–655.

23. Shahane, P.R.; Mule, S.B.; Ganorkar, S.R. Color image enhancement using discrete wavelet transform. Digital Image Process. 2012, 4, 1–5.

24. Zhang, C.; Ma, L.N.; Jing, L.N. Mixed frequency domain and spatial of enhancement algorithm for infrared image. In Proceedings of the 2012 9th International Conference on Fuzzy Systems and Knowledge Discovery, Sichuan, China, 29–31 May 2012; pp. 2706–2710.

25. Huang, L.; Zhao, W.; Wang, J.; Sun, Z. Combination of contrast limited adaptive histogram equalisation and discrete wavelet transform for image enhancement. IET Image Process. 2015, 9, 908–915.

26. Fan, R.; Li, X.; Zhao, H.; Zhang, H.; Pang, C.; Wang, J. Image enhancement method in decompression based on F-shift transformation. In Communications in Computer and Information Science, Proceedings of the 6th International Conference, ICDS 2019, Ningbo, China, 15–20 May 2019; Springer: Berlin/Heidelberg, Germany, 2020; Volume 1179, pp. 232–241.

27. Li, X.; Fan, R.; Zhang, H.; Li, T.; Pang, C. Two-dimensional wavelet synopses with maximum error bound and its application in parallel compression. J. Intell. Fuzzy Syst. 2019, 37, 3499–3511.

28. Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 593–600.

29. Li, X.; Li, T.; Zhao, H.; Dou, Y.; Pang, C. Medical image enhancement in F-shift transformation domain. Health Inf. Sci. Syst. 2019, 7, 1–8.

30. Lee, H.S.; Moon, S.W.; Eom, I.K. Underwater image enhancement using successive color correction and superpixel dark channel prior. Symmetry 2020, 12, 1220.

31. Li, B.; Xie, W. Image denoising and enhancement based on adaptive fractional calculus of small probability strategy. Neurocomputing 2016, 175, 704–714.

32. Qu, Z.; Xing, Y.; Song, Y. Image enhancement based on pulse coupled neural network in the nonsubsample shearlet transform domain. Math. Prob. Eng. 2019, 2019, 1–11.

33. Chang, D.C.; Wu, W.R. Image contrast enhancement based on a Histogram transformation of local standard deviation. IEEE Trans. Med. Imaging 1998, 17, 518–531.

34. Zhuang, L.; Guan, Y. Adaptive Image enhancement using entropy-based subhistogram equalization. Comput. Intell. Neurosci. 2018.

| Resolution | Low-Frequency Component | High-Frequency Component |
|---|---|---|
| 8 | {[5,9], [4,8], [−1,3], [6,10], [3,7], [2,6], [0,4], [8,12]} | none |
| 4 | {[5,8], [2.5,6.5], [3,6], [4,8]} | {0, 3.5, 0, −4} |
| 2 | {[5,6.5], [4,6]} | {0, 0} |
| 1 | {[5,6]} | {0} |
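The low-frequency column of the table above can be reproduced with a short sketch. The merge rule used here is inferred from the table values and is an assumption, not the paper's formal definition: two adjacent ranges that overlap are replaced by their intersection with a zero coefficient, and two disjoint ranges are first shifted toward each other by half the distance between their centres and then intersected. The function names `f_shift_pair` and `decompose` are hypothetical. Because the sign convention of the high-frequency coefficients cannot be recovered from the table alone, the sketch reports only the magnitude of each shift.

```python
def f_shift_pair(left, right):
    """Merge two adjacent error ranges (assumed rule, inferred from the table).

    Returns (merged_range, shift_magnitude).
    """
    (a1, b1), (a2, b2) = left, right
    lo, hi = max(a1, a2), min(b1, b2)
    if lo <= hi:
        # Overlapping ranges: keep the intersection, no shift is needed.
        return (lo, hi), 0.0
    # Disjoint ranges: move both toward their common midpoint so that
    # their centres coincide, then intersect the shifted ranges.
    c1, c2 = (a1 + b1) / 2, (a2 + b2) / 2
    shift = (c2 - c1) / 2
    lo = max(a1 + shift, a2 - shift)
    hi = min(b1 + shift, b2 - shift)
    return (lo, hi), abs(shift)


def decompose(ranges):
    """Halve the resolution repeatedly until a single range remains.

    Returns one (low_frequency_ranges, shift_magnitudes) tuple per level.
    Assumes the number of input ranges is a power of two.
    """
    levels = []
    while len(ranges) > 1:
        merged, shifts = [], []
        for i in range(0, len(ranges), 2):
            m, s = f_shift_pair(ranges[i], ranges[i + 1])
            merged.append(m)
            shifts.append(s)
        levels.append((merged, shifts))
        ranges = merged
    return levels


# Resolution-8 row of the table: each range is a data value +/- the error bound.
table_input = [(5, 9), (4, 8), (-1, 3), (6, 10), (3, 7), (2, 6), (0, 4), (8, 12)]
for low, shifts in decompose(table_input):
    print(len(low), low, shifts)
```

Each printed line corresponds to one row of the table (resolutions 4, 2 and 1); the ranges match the low-frequency column, and the shift magnitudes match the absolute values of the high-frequency column.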

| Images | Methods | Mean | SD | Entropy | AG |
|---|---|---|---|---|---|
| Figure 10 | Original | 175.33 | 22.12 | 4.00 | 2.32 |
| | CLAHE [13] | 177.31 | 24.00 | 5.73 | 3.66 |
| | CLAHE_DWT [25] | 174.04 | 25.08 | 5.74 | 3.36 |
| | Our method | 145.53 | 38.75 | 7.23 | 10.42 |
| Figure 11 | Original | 172.16 | 55.83 | 6.98 | 15.25 |
| | CLAHE [13] | 156.15 | 67.02 | 7.71 | 21.90 |
| | CLAHE_DWT [25] | 161.73 | 69.02 | 7.32 | 18.99 |
| | Our method | 134.30 | 71.25 | 7.95 | 22.90 |
| Figure 12 | Original | 132.38 | 27.06 | 5.50 | 5.00 |
| | CLAHE [13] | 145.48 | 39.08 | 7.16 | 10.15 |
| | CLAHE_DWT [25] | 165.83 | 49.75 | 7.53 | 12.25 |
| | Our method | 131.32 | 70.14 | 7.98 | 20.10 |
| Figure 13 | Original | 95.39 | 53.78 | 6.12 | 7.01 |
| | CLAHE [13] | 107.27 | 59.31 | 7.76 | 9.79 |
| | CLAHE_DWT [25] | 127.63 | 67.71 | 7.95 | 12.38 |
| | Our method | 125.44 | 71.51 | 7.99 | 14.92 |
| Figure 14 | Original | 32.52 | 25.11 | 5.43 | 2.55 |
| | CLAHE [13] | 55.16 | 39.74 | 6.52 | 7.12 |
| | CLAHE_DWT [25] | 94.57 | 53.48 | 7.02 | 8.68 |
| | Our method | 93.92 | 48.86 | 7.48 | 12.95 |
| Figure 15 | Original | 20.12 | 19.68 | 5.63 | 1.67 |
| | CLAHE [13] | 51.67 | 42.00 | 6.95 | 4.40 |
| | CLAHE_DWT [25] | 75.26 | 55.24 | 7.39 | 5.61 |
| | Our method | 92.60 | 61.72 | 7.72 | 7.90 |

| Images | Methods | Mean | SD | Entropy | AG |
|---|---|---|---|---|---|
| Figure 16 | Original | 175.33 | 22.12 | 4.00 | 2.32 |
| | CLAHE [13] | 177.31 | 24.00 | 5.73 | 3.66 |
| | Scheme 1 | 146.86 | 25.65 | 6.18 | 4.44 |
| | Scheme 2 | 147.15 | 22.85 | 5.81 | 3.68 |
| | Scheme 3 | 167.66 | 32.51 | 6.72 | 8.64 |
| | Scheme 4 | 145.00 | 40.17 | 7.29 | 10.84 |
| | Scheme 5 | 145.73 | 31.82 | 6.79 | 8.92 |
| | Our method | 141.50 | 40.67 | 7.34 | 11.60 |
| Figure 17 | Original | 132.38 | 27.06 | 5.50 | 5.00 |
| | CLAHE [13] | 145.48 | 39.08 | 7.16 | 10.15 |
| | Scheme 1 | 143.29 | 45.90 | 7.48 | 11.05 |
| | Scheme 2 | 140.98 | 46.34 | 7.51 | 13.70 |
| | Scheme 3 | 136.79 | 63.18 | 7.91 | 20.96 |
| | Scheme 4 | 132.65 | 69.80 | 7.97 | 19.77 |
| | Scheme 5 | 130.49 | 70.87 | 7.98 | 24.66 |
| | Our method | 130.35 | 70.88 | 7.99 | 20.52 |
| Figure 18 | Original | 20.12 | 19.68 | 5.63 | 1.65 |
| | CLAHE [13] | 51.67 | 42.00 | 6.95 | 4.39 |
| | Scheme 1 | 57.31 | 40.57 | 6.91 | 4.09 |
| | Scheme 2 | 57.70 | 40.72 | 6.92 | 4.24 |
| | Scheme 3 | 91.16 | 62.22 | 7.74 | 7.98 |
| | Scheme 4 | 94.04 | 61.13 | 7.73 | 7.94 |
| | Scheme 5 | 94.10 | 61.05 | 7.73 | 8.17 |
| | Our method | 93.67 | 60.88 | 7.74 | 8.32 |
| Figure 19 | Original | 14.07 | 39.75 | 4.13 | 2.28 |
| | CLAHE [13] | 29.25 | 47.36 | 5.20 | 4.43 |
| | Scheme 1 | 33.52 | 47.21 | 4.91 | 3.90 |
| | Scheme 2 | 33.85 | 46.75 | 5.13 | 4.15 |
| | Scheme 3 | 45.20 | 55.36 | 6.24 | 5.83 |
| | Scheme 4 | 47.63 | 56.94 | 6.12 | 6.00 |
| | Scheme 5 | 48.04 | 55.58 | 6.29 | 6.30 |
| | Our method | 48.24 | 57.09 | 6.35 | 6.10 |
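The four metric columns in the comparison tables above are standard image-quality measures, and the following sketch shows one common way to compute them for an 8-bit grayscale image. Several points are assumptions rather than details confirmed by the source: SD is taken as the population standard deviation, Entropy as the Shannon entropy (in bits) of the grey-level histogram, and AG as the average gradient in its frequently used form, the mean of sqrt((dx² + dy²) / 2) over forward differences. The function name `image_metrics` and the list-of-rows image representation are hypothetical choices made to keep the example dependency-free.

```python
import math
from collections import Counter


def image_metrics(img):
    """Return (mean, sd, entropy, ag) for a grayscale image.

    img is a list of equally long rows of integer grey levels (0..255)
    with at least two rows and two columns.
    """
    pixels = [p for row in img for p in row]
    n = len(pixels)

    # Mean brightness and population standard deviation (global contrast).
    mean = sum(pixels) / n
    sd = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)

    # Shannon entropy of the grey-level histogram, in bits (at most 8 for 8-bit data).
    counts = Counter(pixels)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())

    # Average gradient from horizontal and vertical forward differences.
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2)
    ag = total / ((rows - 1) * (cols - 1))

    return mean, sd, entropy, ag


# A 2x2 checkerboard: two equally likely grey levels, maximal local change.
mean, sd, entropy, ag = image_metrics([[0, 255], [255, 0]])
print(mean, sd, entropy, ag)
```

Under these definitions, higher SD, Entropy and AG indicate stronger contrast, richer grey-level content and sharper detail respectively, which is how the rows of the tables are usually compared.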

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fan, R.; Li, X.; Lee, S.; Li, T.; Zhang, H.L. Smart Image Enhancement Using CLAHE Based on an F-Shift Transformation during Decompression. Electronics 2020, 9, 1374. https://doi.org/10.3390/electronics9091374
