A Survey of Multi-Task Deep Reinforcement Learning

Abstract

1. Introduction

Contributions

- Examines the state of the art in deep reinforcement learning with respect to multi-tasking.

- Serves as a single-source reference for implementation details and a comparative study of three of the major solutions developed to incorporate multi-tasking into deep reinforcement learning.

- Gathers, in a single survey, details on most of the methodologies attempted to bring multi-tasking into deep reinforcement learning.

- Surveys the majority of the research challenges encountered in adapting multi-tasking to deep reinforcement learning.

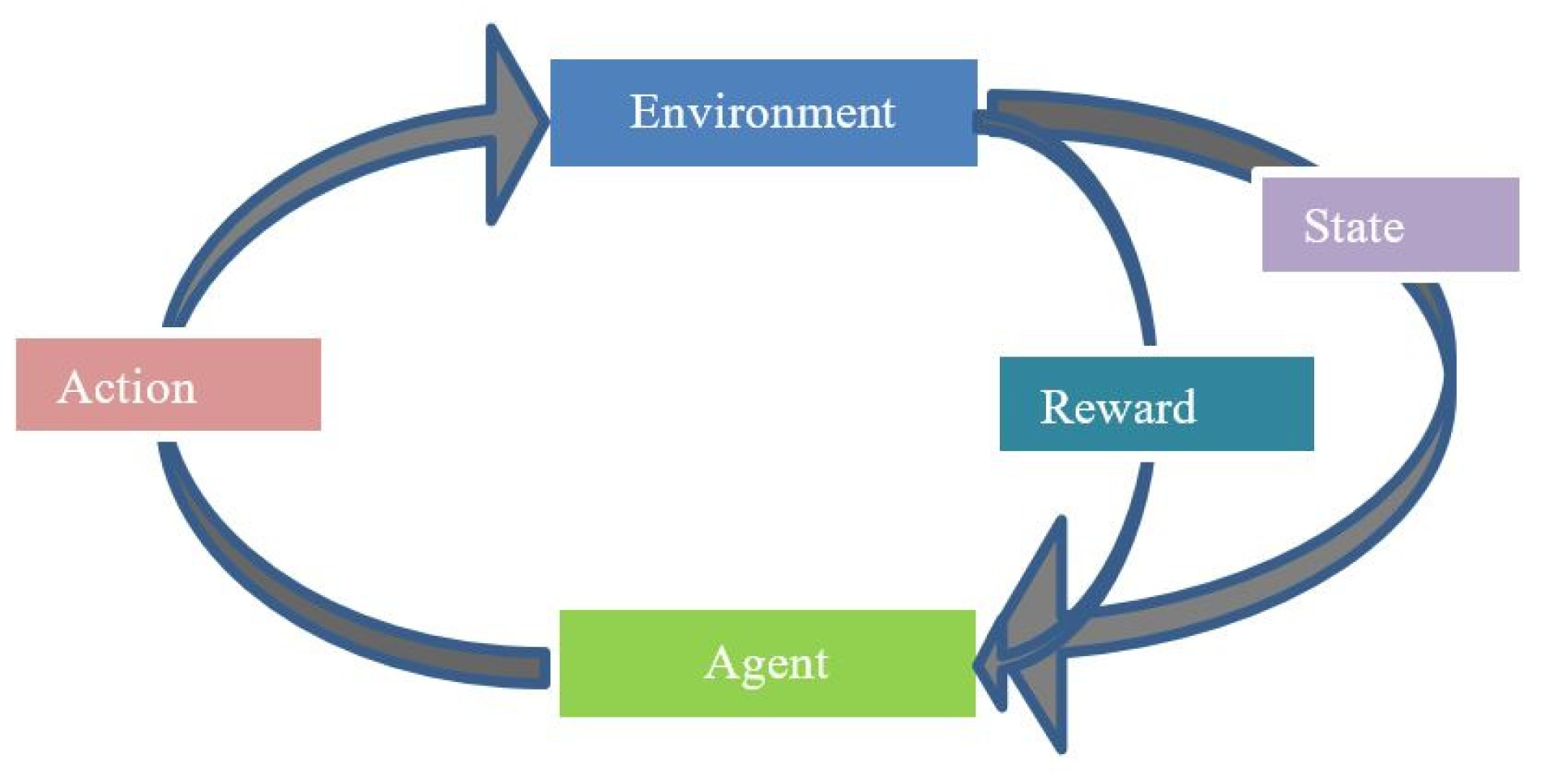

2. Overview of Reinforcement Learning

2.1. Reinforcement Learning Setup

2.2. The Markov Property

- The set of all possible states that an agent can occupy in the environment, denoted by $S$.

- The set of all possible actions that an agent can take while in a state $s \in S$, denoted by $A$.

- A transition dynamics function $T$, defined as $T(s, a, s') = \Pr(S_{t+1} = s' \mid S_t = s, A_t = a)$. Because transitions are stochastic, $T$ gives a probability distribution over the possible next states that result from taking a specific action in a given state $s$.

- A reward function $R$, which assigns a reward $R(s_t, a_t, s_{t+1})$ to the state transition caused by taking a specific action.

- A discount factor $\gamma \in [0, 1]$, used to compute the discounted future rewards associated with each state transition. A reward received $k$ steps in the future is weighted by $\gamma^k$, so rewards from transitions to nearby states count for more than rewards from states that are far from the current state. A low $\gamma$ makes the agent short-sighted, whereas a $\gamma$ close to 1 gives distant rewards nearly full weight [24].
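The five components listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not an implementation taken from the survey: the two-state environment, the action names, the probabilities, and the helper names `step` and `discounted_return` are all hypothetical.

```python
import random

# Hypothetical two-state MDP, invented purely for illustration.
STATES = ["s0", "s1"]          # S
ACTIONS = ["stay", "move"]     # A

# T[(s, a)] maps each next state s' to Pr(S_{t+1} = s' | S_t = s, A_t = a).
T = {
    ("s0", "stay"): {"s0": 0.9, "s1": 0.1},
    ("s0", "move"): {"s0": 0.2, "s1": 0.8},
    ("s1", "stay"): {"s0": 0.0, "s1": 1.0},
    ("s1", "move"): {"s0": 0.7, "s1": 0.3},
}


def reward(s, a, s_next):
    """R(s, a, s'): 1 for arriving in s1, 0 otherwise (an arbitrary choice)."""
    return 1.0 if s_next == "s1" else 0.0


def step(s, a, rng):
    """Sample s' from the distribution T(s, a, .) and return (s', reward)."""
    next_states = list(T[(s, a)])
    weights = [T[(s, a)][n] for n in next_states]
    s_next = rng.choices(next_states, weights=weights, k=1)[0]
    return s_next, reward(s, a, s_next)


def discounted_return(rewards, gamma):
    """Return sum_k gamma**k * rewards[k], accumulated from the last reward back."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Short rollout: always choose "move" and discount the collected rewards.
rng = random.Random(0)
state, rewards = "s0", []
for _ in range(5):
    state, r = step(state, "move", rng)
    rewards.append(r)
print(discounted_return(rewards, gamma=0.9))
```

With `gamma=0.5`, the reward sequence `[1, 1, 1]` yields 1 + 0.5 + 0.25 = 1.75, which shows how each additional step into the future contributes less to the return.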

2.3. Key Challenges of Reinforcement Learning

- Because it relies heavily on reward values alone, an agent must follow a brute-force strategy to derive an optimal policy.

- For every action taken in a particular state, the RL agent must deal with the complexity of estimating the maximum expected discounted future reward for that action. This is often called the (temporal) credit assignment problem [26].

- In environments of a 3D nature, the space of continuous state-action pairs can be very large.

- An agent's observations in a complex environment depend heavily on its own actions, so they can contain strong temporal correlations.
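The "maximum expected discounted future reward" mentioned in the credit assignment item can be written, under the standard MDP formulation, as the Bellman optimality equation for the action-value function. The symbols $T$, $R$, and $\gamma$ are those of Section 2.2; the notation $Q^{*}$ is introduced here for illustration and is an assumption about how the quantity would be formalized.

```latex
Q^{*}(s, a) = \sum_{s' \in S} T(s, a, s')
  \left[ R(s, a, s') + \gamma \max_{a' \in A} Q^{*}(s', a') \right]
```

The recursion makes the difficulty visible: the value of an action in state $s$ depends on rewards that may arrive only many transitions later, each passed back through the $\max$ term.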

2.4. Multi-Task Learning

3. Deep Reinforcement Learning with Multi-Tasking

3.1. Transfer Learning Oriented Approach

3.2. Learning Shared Representations for Value Functions

3.3. Progressive Neural Networks

- Build a system that can incorporate prior knowledge during the learning process at each layer of the feature hierarchy;

- Develop a system that is immune to catastrophic forgetting.

3.4. PathNet

3.5. Policy Distillation and Actor-Mimic

3.5.1. Policy Distillation

3.5.2. Actor-Mimic

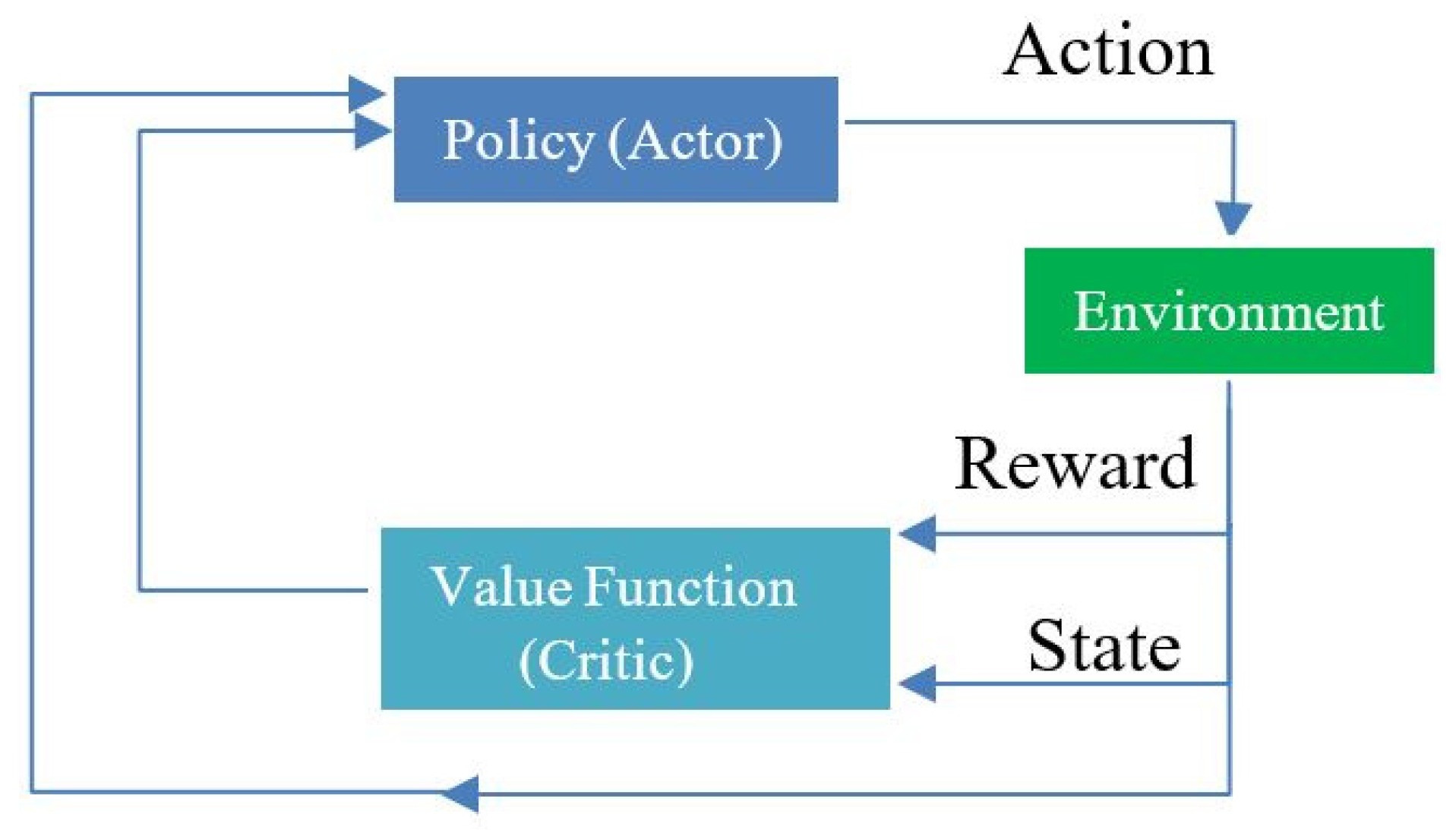

3.6. Asynchronous Advantage Actor-Critic (A3C)

3.7. Others

4. Research Challenges

4.1. Scalability

4.2. Distraction Dilemma

4.3. Partial Observability

4.4. Effective Exploration

4.5. Catastrophic Forgetting

4.6. Negative Knowledge Transfer

5. Review of Existing Solutions

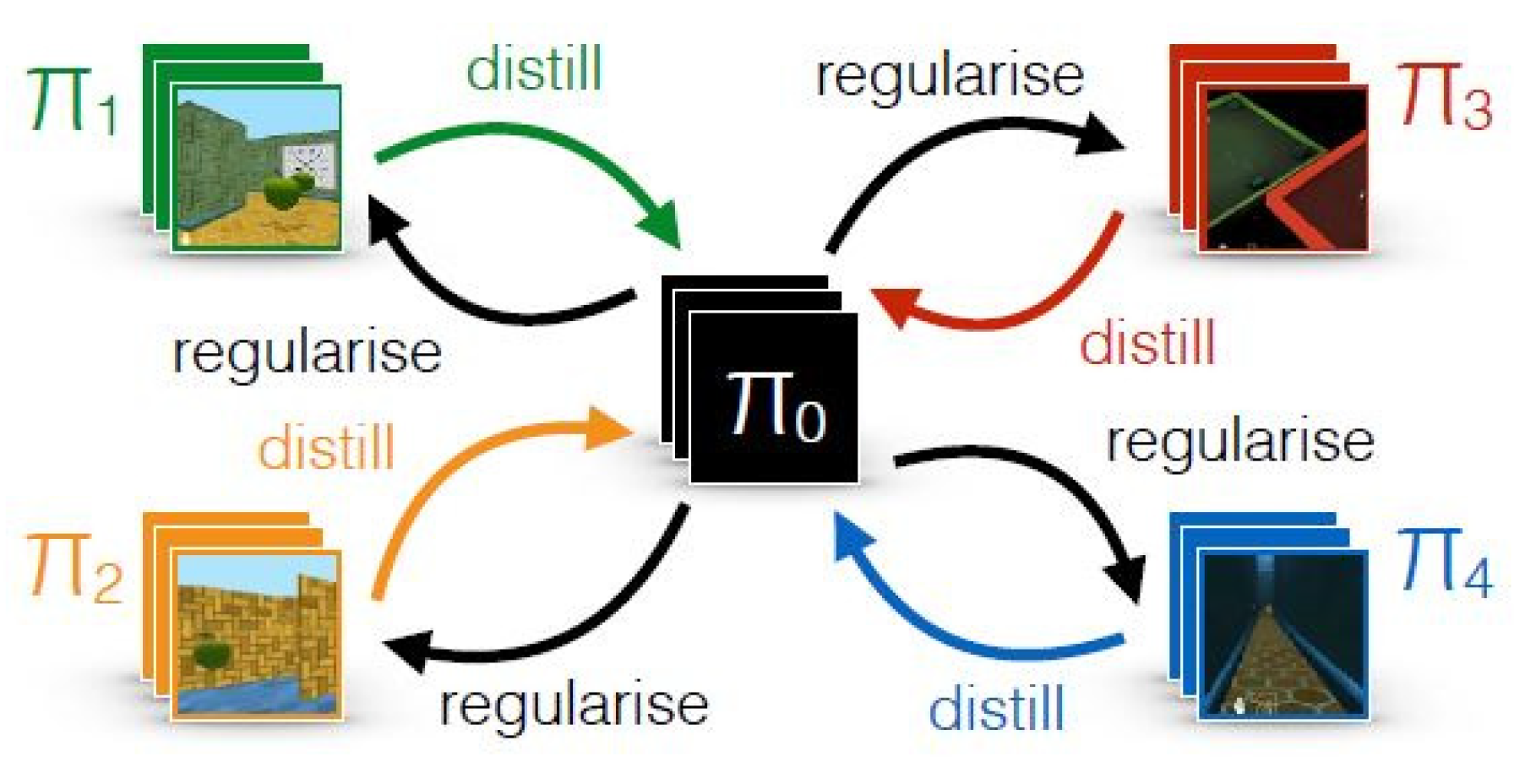

5.1. DISTRAL (DIStill & TRAnsfer Learning)

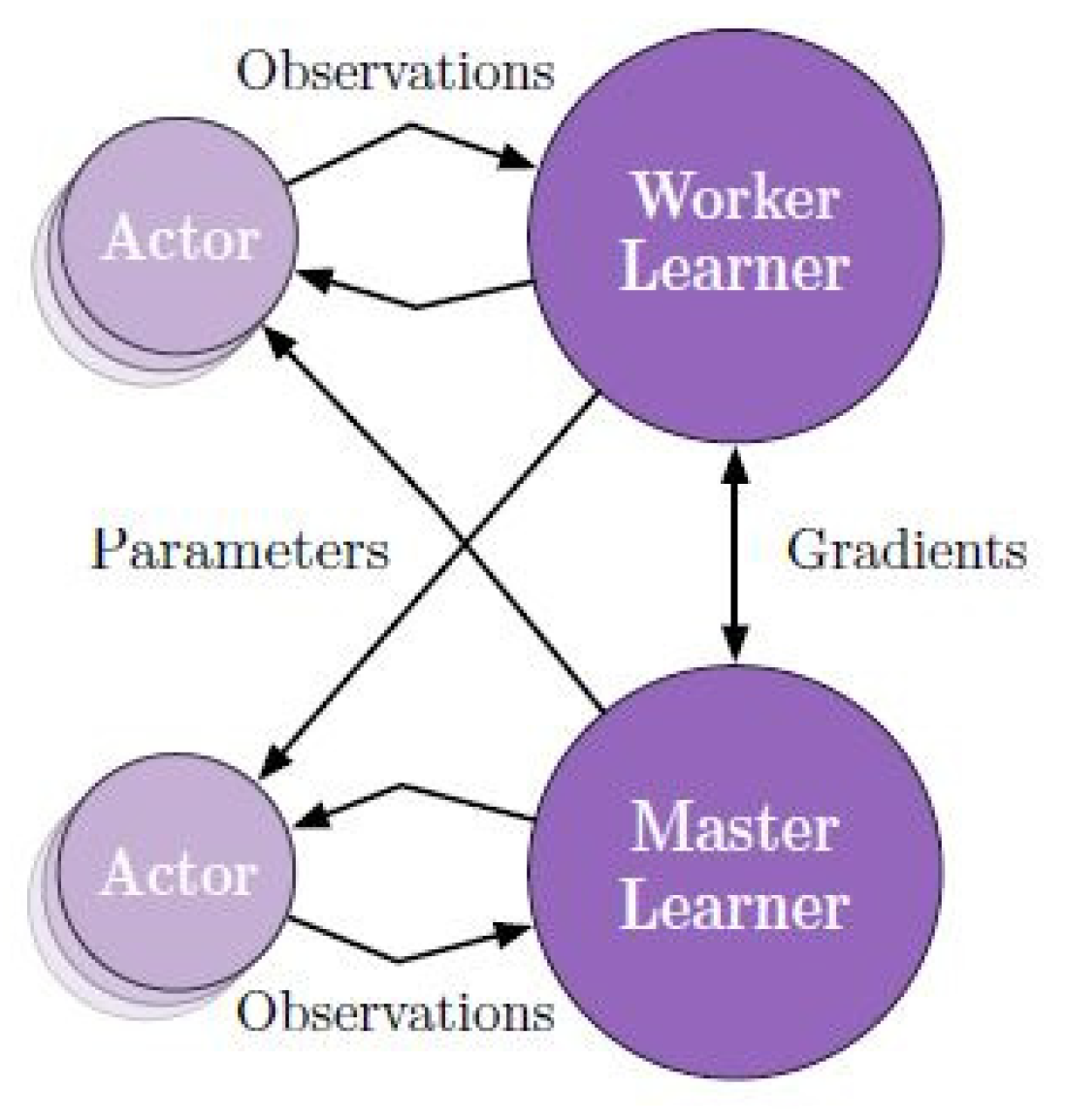

5.2. IMPALA (Importance Weighted Actor-Learner Architecture)

5.3. PopArt

5.4. Comparison of Existing Solutions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Sutton, R.S. Generalization in reinforcement learning: Successful examples using sparse coarse coding. In Advances in Neural Information Processing Systems 8; MIT Press: Cambridge, MA, USA, 1996.

2. Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

3. Konidaris, G.; Osentoski, S.; Thomas, P. Value function approximation in reinforcement learning using the Fourier basis. In Proceedings of the Twenty-fifth AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 7–11 August 2011.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Twenty-sixth Annual Conference on Neural Information Processing Systems 2012, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

5. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

6. Holcomb, S.D.; Porter, W.K.; Ault, S.V.; Mao, G.; Wang, J. Overview on DeepMind and its AlphaGo Zero AI. In Proceedings of the 2018 International Conference on Big Data and Education, Honolulu, HI, USA, 9–11 March 2018; pp. 67–71.

7. Caruana, R. Multitask learning. Mach. Learn. 1997, 28, 41–75.

8. Ding, Z.; Dong, H. Challenges of Reinforcement Learning. In Deep Reinforcement Learning; Springer: Berlin/Heidelberg, Germany, 2020.

9. Glatt, R.; Costa, A.H.R. Improving deep reinforcement learning with knowledge transfer. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

10. Hessel, M.; Soyer, H.; Espeholt, L.; Czarnecki, W.; Schmitt, S.; van Hasselt, H. Multi-task deep reinforcement learning with PopArt. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

11. Li, Y. Deep reinforcement learning: An overview. arXiv 2017, arXiv:1701.07274.

12. Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. arXiv 2017, arXiv:1708.05866.

13. Sallab, A.E.; Abdou, M.; Perot, E.; Yogamani, S. Deep reinforcement learning framework for autonomous driving. Electron. Imaging 2017, 2017, 70–76.

14. Zhu, Y.; Mottaghi, R.; Kolve, E.; Lim, J.J.; Gupta, A.; Fei-Fei, L.; Farhadi, A. Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3357–3364.

15. Calandriello, D.; Lazaric, A.; Restelli, M. Sparse multi-task reinforcement learning. In Proceedings of the 28th Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 819–827.

16. Song, M.-P.; Gu, G.-C.; Zhang, G.-Y. Survey of multi-agent reinforcement learning in Markov games. Control Decis. 2005, 20, 1081.

17. Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Trans. Cybern. 2020, 50, 3826–3839.

18. Hernandez-Leal, P.; Kartal, B.; Taylor, M.E. A survey and critique of multiagent deep reinforcement learning. Auton. Agents Multi-Agent Syst. 2019, 33, 750–797.

19. Denis, N.; Fraser, M. Options in Multi-task Reinforcement Learning-Transfer via Reflection. In Proceedings of the Canadian Conference on Artificial Intelligence, Kingston, ON, Canada, 28–31 May 2019; pp. 225–237.

20. da Silva, F.L.; Costa, A.H.R. A survey on transfer learning for multiagent reinforcement learning systems. J. Artif. Intell. Res. 2019, 64, 645–703.

21. Palmer, G.; Tuyls, K.; Bloembergen, D.; Savani, R. Lenient multi-agent deep reinforcement learning. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, International Foundation for Autonomous Agents and Multiagent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 443–451.

22. Teh, Y.; Bapst, V.; Czarnecki, W.M.; Quan, J.; Kirkpatrick, J.; Hadsell, R.; Heess, N.; Pascanu, R. Distral: Robust multitask reinforcement learning. In Proceedings of the Thirty-first Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4496–4506.

23. Espeholt, L.; Soyer, H.; Munos, R.; Simonyan, K.; Mnih, V.; Ward, T.; Doron, Y.; Firoiu, V.; Harley, T.; Dunning, I. IMPALA: Scalable distributed deep-RL with importance weighted actor-learner architectures. arXiv 2018, arXiv:1802.01561.

24. Puterman, M.L. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley & Sons: Hoboken, NJ, USA, 2014.

25. Kaelbling, L.P.; Littman, M.L.; Cassandra, A.R. Planning and acting in partially observable stochastic domains. Artif. Intell. 1998, 101, 99–134.

26. Sutton, R.S.; Barto, A.G. Introduction to Reinforcement Learning; MIT Press: Cambridge, MA, USA, 1998; Volume 2.

27. Waibel, A. Modular construction of time-delay neural networks for speech recognition. Neural Comput. 1989, 1, 39–46.

28. Pontil, M.; Maurer, A.; Romera-Paredes, B. The benefit of multitask representation learning. J. Mach. Learn. Res. 2016, 17, 2853–2884.

29. Boutsioukis, G.; Partalas, I.; Vlahavas, I. Transfer learning in multi-agent reinforcement learning domains. In European Workshop on Reinforcement Learning; Springer: Berlin/Heidelberg, Germany, 2011; pp. 249–260.

30. Weiss, G. Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1999.

31. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529.

32. Bengio, Y. Learning deep architectures for AI. Found. Trends Mach. Learn. 2009, 2, 1–127.

33. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484.

34. Borsa, D.; Graepel, T.; Shawe-Taylor, J. Learning shared representations in multi-task reinforcement learning. arXiv 2016, arXiv:1603.02041.

35. Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2015, arXiv:1511.05952.

36. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2011.

37. Rusu, A.A.; Rabinowitz, N.C.; Desjardins, G.; Soyer, H.; Kirkpatrick, J.; Kavukcuoglu, K.; Pascanu, R.; Hadsell, R. Progressive neural networks. arXiv 2016, arXiv:1606.04671.

38. Taylor, M.E.; Stone, P. An introduction to intertask transfer for reinforcement learning. AI Mag. 2011, 32, 15.

39. Fernando, C.; Banarse, D.; Blundell, C.; Zwols, Y.; Ha, D.; Rusu, A.A.; Pritzel, A.; Wierstra, D. PathNet: Evolution channels gradient descent in super neural networks. arXiv 2017, arXiv:1701.08734.

40. Rusu, A.A.; Colmenarejo, S.G.; Gulcehre, C.; Desjardins, G.; Kirkpatrick, J.; Pascanu, R.; Mnih, V.; Kavukcuoglu, K.; Hadsell, R. Policy distillation. arXiv 2015, arXiv:1511.06295.

41. Buciluǎ, C.; Caruana, R.; Niculescu-Mizil, A. Model compression. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 535–541.

42. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

43. Parisotto, E.; Ba, J.L.; Salakhutdinov, R. Actor-mimic: Deep multitask and transfer reinforcement learning. arXiv 2015, arXiv:1511.06342.

44. Akhtar, M.S.; Chauhan, D.S.; Ekbal, A. A Deep Multi-task Contextual Attention Framework for Multi-modal Affect Analysis. ACM Trans. Knowl. Discov. Data (TKDD) 2020, 14, 1–27.

45. Bellemare, M.G.; Naddaf, Y.; Veness, J.; Bowling, M. The arcade learning environment: An evaluation platform for general agents. J. Artif. Intell. Res. 2013, 47, 253–279.

46. Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

47. Wang, Y.; Stokes, J.; Marinescu, M. Actor Critic Deep Reinforcement Learning for Neural Malware Control. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 1005–1012.

48. Vuong, T.-L.; Nguyen, D.-V.; Nguyen, T.-L.; Bui, C.-M.; Kieu, H.-D.; Ta, V.-C.; Tran, Q.-L.; Le, T.-H. Sharing experience in multitask reinforcement learning. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 3642–3648.

49. Li, L.; Gong, B. End-to-end video captioning with multitask reinforcement learning. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Hilton Waikoloa Village, HI, USA, 7–11 January 2019; pp. 339–348.

50. Chaplot, D.S.; Lee, L.; Salakhutdinov, R.; Parikh, D.; Batra, D. Embodied Multimodal Multitask Learning. arXiv 2019, arXiv:1902.01385.

51. Yang, M.; Tu, W.; Qu, Q.; Lei, K.; Chen, X.; Zhu, J.; Shen, Y. MARES: Multitask learning algorithm for Web-scale real-time event summarization. World Wide Web 2019, 22, 499–515.

52. Liang, Y.; Li, B. Parallel Knowledge Transfer in Multi-Agent Reinforcement Learning. arXiv 2020, arXiv:2003.13085.

53. Liu, X.; Li, L.; Hsieh, P.-C.; Xie, M.; Ge, Y. Developing Multi-Task Recommendations with Long-Term Rewards via Policy Distilled Reinforcement Learning. arXiv 2020, arXiv:2001.09595.

54. Osband, I.; Blundell, C.; Pritzel, A.; van Roy, B. Deep exploration via bootstrapped DQN. In Proceedings of the Thirtieth Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4026–4034.

55. Mankowitz, D.J.; Žídek, A.; Barreto, A.; Horgan, D.; Hessel, M.; Quan, J.; Oh, J.; van Hasselt, H.; Silver, D.; Schaul, T. Unicorn: Continual learning with a universal, off-policy agent. arXiv 2018, arXiv:1802.0829.

| Characteristic | PopArt | IMPALA | DISTRAL |
|---|---|---|---|
| Distraction Dilemma | No | Yes | No |
| Master Policy | Yes | Yes | Yes |
| Operation Model | A3C Model | A3C Model | Centroid Policy |
| Multitask Learning | Yes | Yes | Yes |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vithayathil Varghese, N.; Mahmoud, Q.H. A Survey of Multi-Task Deep Reinforcement Learning. Electronics 2020, 9, 1363. https://doi.org/10.3390/electronics9091363
