1. Introduction

In the last few years, deep learning has significantly advanced visual perception research. This progress has encouraged recent vision research to take on more challenging problems, such as video understanding. The main goal of video understanding is to automatically describe the contents of a video in natural language. Compared to image captioning, which describes a still image, video understanding is more challenging because the information in a video is far more complex. For an adequate video description, we need to capture not only the spatial contents of a video (objects, scenes) but also the temporal dynamics (actions, context, flow) within the video sequence. Recent advances in 3D convolutional neural networks (CNNs) [1] provide a way to obtain a semantic representation of each short video segment that also embeds its temporal dynamics. However, for longer and more complicated video sequences (such as the 120 s long videos in the ActivityNet dataset [2]), understanding the context of a video becomes more important for generating natural descriptions. Since long videos involve multiple events spanning multiple time scales, capturing the diverse temporal context between events is key to natural video description with context understanding. In the following, we introduce traditional video captioning models and their limitations for video context understanding. We then propose our approach to this problem.

Conventional deep learning-based video captioning models adopt gated recurrent neural networks (RNNs), such as long short-term memory (LSTM) or the gated recurrent unit (GRU), in an encoder-decoder architecture [3,4,5]. These models encode each input video sequence into semantic representations and send them to a decoder to generate video captions. However, these approaches have the following limitations:

The conventional RNN is not sufficient to encode information with long-term dependencies in long video sequences. Although LSTM and GRU partially address this issue, long-term dependency remains an unsolved problem for long sequential data. In addition, these conventional captioning models can hardly maintain the contextual information contained in a video sequence. Owing to the memory limitations of RNN models, conventional video captioning models work only for short video clips with simple scenes and are not applicable to long videos consisting of multiple complicated events.

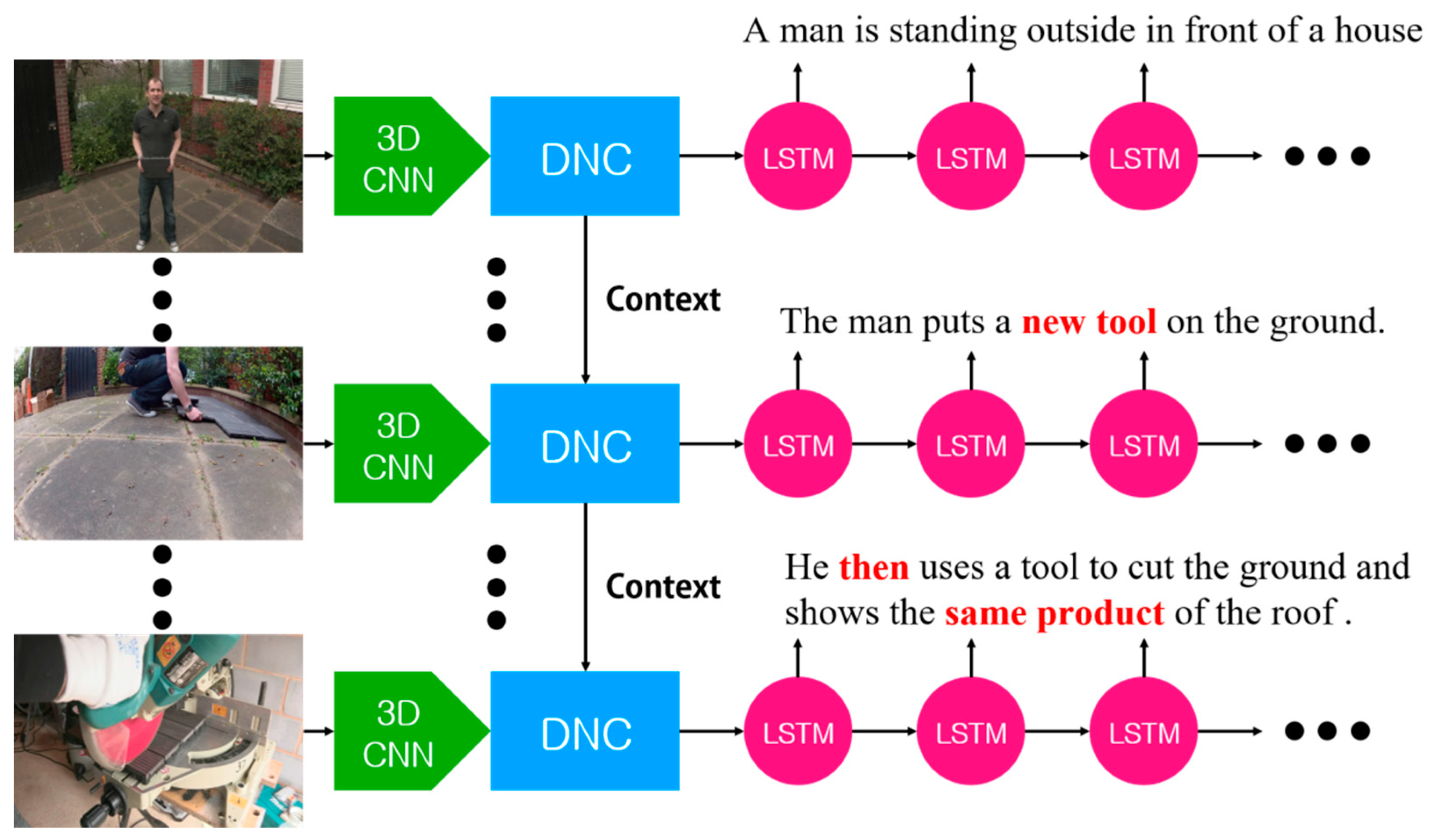

To overcome these limitations, in this paper, we propose a novel context-aware video captioning model that generates captions based on the temporal contextual information in a long video, as shown in Figure 1. To focus on temporal context alone, we divide a long input video into event-wise sub-video clips and leverage external memory to understand the temporal contextual information in the video. To reliably store and retrieve temporal contextual information, we adopt a differentiable neural computer (DNC) [6]. A DNC learns, through supervised training, to use its memory for context understanding, and it also provides the contents of its memory as an output. In our proposed model, we consecutively connect DNC-based captioning models (DNC-augmented LSTMs) through this memory information, which reflects the context, and sequentially train each language model to generate a caption for each sub-scene. In our experiments, we show that the proposed model generates temporally coherent sentences by using previous contextual information, and we compare its captioning performance with that of other state-of-the-art video captioning models. Additionally, we show the superior performance of our model based on quantitative measures, such as Bilingual Evaluation Understudy (BLEU) [7], Metric for Evaluation of Translation with Explicit Ordering (METEOR) [8], and Consensus-based Image Description Evaluation (CIDEr-D) [9].

Context Aware Video Caption Generation

Context-aware video caption generation concerns how relevant the generated output is to its context. In Figure 1, one generated sentence includes ‘new tool’, which indicates that our proposed model understands that the ‘tool’ in the current input scene is a new one. Another generated sentence includes ‘then’, which indicates that the proposed model understands the causality of events. Such abilities can only be achieved by context-aware caption generation.

From the current scene, a 3D CNN extracts a feature map. Based on the extracted feature map, the DNC memorizes the current scene information and passes its current state to the next DNC. The second DNC not only uses the current input information but is also initialized with the state of the previous DNC. The second DNC thereby comprehends the contextual relationships between previous states and the current input by interpreting the current input in light of those states. Because this process accumulates consecutively, the final DNC can accommodate the context of all preceding events.

2. Related Works

The early video captioning studies focused on extracting semantic content, such as subjects, verbs, or objects, and associating them with visual elements in the scenes [10,11,12]. For instance, one study [11] constructs a factor graph model to obtain the confidence of semantic contents and finds their optimal combination for sentence template matching. However, such early models work only for specific videos, and the number of possible expressions is limited. In contrast, recent research [13,14] shows that deep learning-based approaches are effective for video-based language modeling tasks when trained on large datasets containing vast amounts of linguistic information.

The earlier studies on deep learning-based video captioning apply mean pooling to the feature maps of the input video frames, extracted by a pre-trained convolutional neural network (CNN), to obtain a video representation, and then apply an RNN for language modeling [14]. However, this method is limited to short video clips with static backgrounds. With the success of neural machine translation (NMT), the LSTM-based encoder-decoder structure, known as the sequence-to-sequence model, has also been applied to video captioning [5]. That work obtains semantic representations of video frames from a pre-trained CNN and feeds them to an LSTM encoder to obtain the final hidden states. The LSTM decoder is then optimized for one-step-ahead prediction to generate subsequent words. However, since the sentence generation of the decoder depends only on the output of the encoder, such models cannot achieve good performance for long videos. To address this problem, the attention mechanism [15] was introduced to video captioning [16]. Through the attention mechanism, an RNN can generate each word based on soft attention over the temporal segments of a video. One study adopts such soft attention for image captioning and visualizes the attended regions as each word is generated [17]. It applies the CNN feature map vectors $\mathbf{a}_i$ and the LSTM hidden state $\mathbf{h}_{t-1}$ to the attention model [15] and obtains the attention weights $\alpha_{t,i}$, which indicate the relation between them. The LSTM decoder is then trained with the weighted sum of the CNN feature map as an initial state to implement soft attention.
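The soft attention step described above can be illustrated with a minimal sketch. It is not the implementation of [17]: it assumes a plain dot-product score between each feature vector $\mathbf{a}_i$ and the hidden state $\mathbf{h}_{t-1}$, whereas attention models usually learn the scoring function, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_attention(features: List[List[float]],
                   hidden: List[float]) -> Tuple[List[float], List[float]]:
    """Return attention weights alpha_i and the weighted sum of features.

    Assumption: score_i = a_i . h (dot product) instead of a learned scorer.
    """
    scores = [sum(a * h for a, h in zip(feat, hidden)) for feat in features]
    alpha = softmax(scores)
    dim = len(features[0])
    context = [sum(w * feat[d] for w, feat in zip(alpha, features))
               for d in range(dim)]
    return alpha, context

# Two 2-D feature vectors; the hidden state is aligned with the first one.
alpha, context = soft_attention([[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0])
print([round(a, 3) for a in alpha])  # [0.881, 0.119]
```

The weights always sum to one, so the weighted sum stays on the scale of the individual feature vectors.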

For longer and more coherent captioning, researchers have also tried to consider context information [13,18]. One approach introduces a hierarchical RNN to encode both the local and global contexts of a video: the first level of the hierarchy learns the local temporal structure of each subsequence, and the second level learns the global temporal structure between subsequences [18]. It also applies attention to each layer of the hierarchy to obtain a richer representation for video captioning, which improved the METEOR and BLEU scores. Another type of hierarchical structure stacks an RNN that models contextual information on top of the RNN for sentence generation [13]. In that model, the higher-level RNN combines its contextual hidden states with the embedded sentence generated by the lower-level RNN to determine the initial state for generating the next sentence.
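The two-level idea of [18] can be illustrated with a toy example. This sketch is not the model of [18]: both levels are hypothetical scalar tanh RNNs with fixed, made-up weights. The first level summarizes each fixed-length subsequence, and the second level runs over those summaries.

```python
import math
from typing import List, Tuple

def scalar_rnn(inputs: List[float], w_in: float, w_rec: float) -> float:
    """Elman RNN h_t = tanh(w_in * x_t + w_rec * h_{t-1}); returns the last state."""
    h = 0.0
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
    return h

def hierarchical_encode(sequence: List[float],
                        chunk_size: int) -> Tuple[float, List[float]]:
    """Level 1 encodes each subsequence; level 2 encodes the subsequence summaries."""
    chunks = [sequence[i:i + chunk_size]
              for i in range(0, len(sequence), chunk_size)]
    local = [scalar_rnn(c, w_in=0.5, w_rec=0.9) for c in chunks]  # local structure
    global_state = scalar_rnn(local, w_in=1.0, w_rec=0.5)          # global structure
    return global_state, local

global_state, local = hierarchical_encode([0.1, 0.4, 0.3, 0.9, 0.8, 0.2, 0.5],
                                          chunk_size=3)
print(len(local))  # 3 subsequences of sizes 3, 3 and 1
```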

The work closest to ours is dense video captioning, which generates captions for every event in a video [19]. It adopts Deep Action Proposals (DAPs) [20] to estimate the start and end times of each event in the video and trains the DAPs together with the video captioning model. To reflect past and future event contexts in the current caption generation, it applies the attention mechanism to the hidden states of the LSTM that encodes each sub-video clip, improving overall context understanding. A recent study introduces descriptiveness-driven temporal attention, an improved version of temporal attention [21]. It applies a holistic attention score, which represents the descriptiveness of each clip composing a video, to increase the attention weights of descriptive clips.

Previous works applied attention mechanisms and hierarchical structures to include contextual information in video captioning. However, context awareness requires memorizing both the sequence of important events and the relationships between them.

In this paper, we propose a new method that can overcome such limitations of previous video captioning models by leveraging external memory (DNC) for context understanding [6]. Our model not only can generate natural captions for each event in a video but also reflects context information between related events for coherent captioning.

3. Video Captioning with DNC

3.1. Differentiable Neural Computer (DNC)

The differentiable neural computer (DNC) originates from the concept of the Turing machine. A Turing machine is an abstract model of modern computers which shows that any computation is possible given appropriate external memory and algorithms [4]. Google DeepMind proposed the Neural Turing Machine (NTM), a system that combines a neural network with external memory to implement a differentiable Turing machine, and in 2016, a Nature paper proposed the DNC as an improved version of the NTM. That paper demonstrated that a DNC can effectively learn how to use memory to deal with complex, structured data, such as question answering (bAbI), family trees, and the London Underground map [6]. A DNC consists of a controller and a memory, and the controller transmits control signals to the memory unit through an interface vector. The interface vector contains several parameters related to memory operations, and these parameters determine the weightings, that is, the degree to which each memory location is read or written. While learning to find the correct answer, the controller is trained to output an interface vector that yields the optimal weightings. In other words, the controller learns to determine the weightings that specify where, in what order, and how much information is read from or written to memory, all of which are encoded in the interface vector. The components of the interface vector are shown in Table 1.
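For reference, the interface vector layout of the original DNC paper [6] can be sketched as below. The component order and sizes follow that paper, with $W$ the memory word size and $R$ the number of read heads. The raw slices would still need the squashing functions described in [6] (for example, a sigmoid for gates and a softmax for read modes), which this sketch omits; the function names are hypothetical.

```python
from typing import Dict, List

def interface_layout(word_size: int, num_reads: int) -> Dict[str, int]:
    """Sizes of the DNC interface-vector components, in order (after [6])."""
    W, R = word_size, num_reads
    return {
        "read_keys": R * W,       # one key per read head
        "read_strengths": R,
        "write_key": W,
        "write_strength": 1,
        "erase_vector": W,
        "write_vector": W,
        "free_gates": R,
        "allocation_gate": 1,
        "write_gate": 1,
        "read_modes": 3 * R,      # backward / content / forward per head
    }

def split_interface(xi: List[float], word_size: int,
                    num_reads: int) -> Dict[str, List[float]]:
    """Slice a flat interface vector into named raw components."""
    parts, offset = {}, 0
    for name, size in interface_layout(word_size, num_reads).items():
        parts[name] = xi[offset:offset + size]
        offset += size
    if offset != len(xi):
        raise ValueError(f"expected {offset} values, got {len(xi)}")
    return parts

# With the settings of Section 4.1 (W = 64, R = 4): R*W + 3W + 5R + 3 = 471.
size = sum(interface_layout(64, 4).values())
parts = split_interface([0.0] * size, 64, 4)
print(size, len(parts["read_keys"]))  # 471 256
```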

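A DNC can perform content-based addressing, which finds useful information by calculating the similarity between the contents of memory and a key vector, and location-based addressing, which searches for information in the order in which it was written to memory, or in reverse order, regardless of similarity. This is why a DNC can respond flexibly to the complex nature of data:

$$\mathcal{C}(M, \mathbf{k}, \beta)[i] = \frac{\exp\left(\beta\,\mathcal{D}(\mathbf{k}, M[i,\cdot])\right)}{\sum_{j=1}^{N} \exp\left(\beta\,\mathcal{D}(\mathbf{k}, M[j,\cdot])\right)}, \qquad \mathcal{D}(\mathbf{u}, \mathbf{v}) = \frac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert\,\lVert \mathbf{v} \rVert} \tag{1}$$

where $\mathcal{C}$ denotes the content-based weighting over the $N$ memory locations, which is determined by the cosine similarity $\mathcal{D}$ between a key vector $\mathbf{k}$ from the interface vector and each information vector $M[i,\cdot]$ in memory, sharpened by the key strength $\beta$. Content-based weighting lets the DNC determine from the data how much to read from or write to each memory location, for the write head and for each read head $i$:

$$\mathbf{c}_t^{w} = \mathcal{C}\left(M_{t-1}, \mathbf{k}_t^{w}, \beta_t^{w}\right), \qquad \mathbf{c}_t^{r,i} = \mathcal{C}\left(M_t, \mathbf{k}_t^{r,i}, \beta_t^{r,i}\right) \tag{2}$$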
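Equations (1) and (2) can be sketched in plain Python as follows. Memory rows stand for the information vectors $M[i,\cdot]$; the small `eps` guarding against zero-norm vectors is an implementation assumption rather than part of the equations.

```python
import math
from typing import List

def cosine(u: List[float], v: List[float], eps: float = 1e-8) -> float:
    """D(u, v): cosine similarity, with eps to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)

def content_weighting(memory: List[List[float]], key: List[float],
                      strength: float) -> List[float]:
    """C(M, k, beta)[i]: softmax over rows of beta * D(k, M[i])."""
    scores = [strength * cosine(key, row) for row in memory]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
soft = content_weighting(memory, key=[1.0, 0.0], strength=1.0)
sharp = content_weighting(memory, key=[1.0, 0.0], strength=50.0)
print([round(w, 2) for w in soft])  # [0.47, 0.17, 0.35]
```

A larger key strength concentrates the weighting on the most similar row, which is how the controller can switch between a diffuse and a near one-hot lookup.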

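Equation (3) calculates the read weighting $\mathbf{w}_t^{r,i}$, which determines how much information is read from each memory location, and Equation (4) calculates the read vector $\mathbf{r}_t^i$, which is the information read from memory with that weighting. $\mathbf{b}_t^i$, $\mathbf{c}_t^{r,i}$, and $\mathbf{f}_t^i$ correspond to the three read modes, backward, content-based, and forward, and a mixture of read modes is assigned to each read head $i$ by the read mode vector $\boldsymbol{\pi}_t^i$. The read weighting $\mathbf{w}_t^{r,i}$ is defined as a weighted sum of the backward weighting $\mathbf{b}_t^i$, the content-based weighting $\mathbf{c}_t^{r,i}$, and the forward weighting $\mathbf{f}_t^i$. Finally, the $R$ read vectors $\mathbf{r}_t^i$ (one per read head) are obtained through the matrix product of the memory matrix $M_t$ and the read weighting $\mathbf{w}_t^{r,i}$:

$$\mathbf{w}_t^{r,i} = \boldsymbol{\pi}_t^i[1]\,\mathbf{b}_t^i + \boldsymbol{\pi}_t^i[2]\,\mathbf{c}_t^{r,i} + \boldsymbol{\pi}_t^i[3]\,\mathbf{f}_t^i \tag{3}$$

$$\mathbf{r}_t^i = M_t^{\top}\,\mathbf{w}_t^{r,i}, \qquad i = 1, \ldots, R \tag{4}$$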
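A sketch of Equations (3) and (4) follows. Following [6], it assumes that the forward and backward weightings are obtained from the temporal link matrix $L_t$ and the previous read weighting as $\mathbf{f}_t^i = L_t\,\mathbf{w}_{t-1}^{r,i}$ and $\mathbf{b}_t^i = L_t^{\top}\mathbf{w}_{t-1}^{r,i}$; the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]

def forward_backward(link: Matrix, prev_read: Vector) -> Tuple[Vector, Vector]:
    """f = L w_{t-1} and b = L^T w_{t-1}; link[i][j] ~ 'i written after j'."""
    n = len(link)
    f = [sum(link[i][j] * prev_read[j] for j in range(n)) for i in range(n)]
    b = [sum(link[j][i] * prev_read[j] for j in range(n)) for i in range(n)]
    return f, b

def read_weighting(b: Vector, c: Vector, f: Vector,
                   modes: Sequence[float]) -> Vector:
    """Eq. (3): w^r = pi[1] b + pi[2] c + pi[3] f (modes sum to 1)."""
    pb, pc, pf = modes
    return [pb * bi + pc * ci + pf * fi for bi, ci, fi in zip(b, c, f)]

def read_vector(memory: Matrix, weighting: Vector) -> Vector:
    """Eq. (4): r = M^T w, a weighted sum of memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weighting, memory))
            for j in range(width)]

memory = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
link = [[0.0, 0.0, 0.0],
        [1.0, 0.0, 0.0],   # slot 1 was written right after slot 0
        [0.0, 1.0, 0.0]]   # slot 2 was written right after slot 1
f, b = forward_backward(link, prev_read=[0.0, 1.0, 0.0])  # last read: slot 1
w_r = read_weighting(b, [0.0, 0.0, 0.0], f, modes=(0.0, 0.0, 1.0))  # pure forward
print(f, b, read_vector(memory, w_r))  # [0.0, 0.0, 1.0] [1.0, 0.0, 0.0] [5.0, 6.0]
```

In the forward mode the head moves from slot 1 to slot 2, the slot written next, which is how a DNC can replay stored events in their original order.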

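Equation (5) gives the write weighting $\mathbf{w}_t^{w}$, which determines where and how much information is written to memory, and Equation (6) gives the update of the memory matrix $M_t$:

$$\mathbf{w}_t^{w} = g_t^{w}\left[g_t^{a}\,\mathbf{a}_t + \left(1 - g_t^{a}\right)\mathbf{c}_t^{w}\right] \tag{5}$$

$$M_t = M_{t-1} \circ \left(E - \mathbf{w}_t^{w}\,\mathbf{e}_t^{\top}\right) + \mathbf{w}_t^{w}\,\mathbf{v}_t^{\top} \tag{6}$$

Here, $\mathbf{a}_t$ is the allocation weighting, which relies on a usage vector $\mathbf{u}_t$ reflecting how frequently each memory location has been used, so that the allocation weighting takes a large value at addresses with small usage, that is, addresses that have not been used. The allocation gate $g_t^a$ in Equation (5) learns to take a large value when new memory allocation should occur; by interpolating between allocation-based (location-based) addressing and content-based addressing, it enables flexible memory allocation, while the write gate $g_t^w$ scales the overall strength of the write. Finally, as shown in Equation (6), the memory erases information from the previous memory matrix $M_{t-1}$ according to the outer product of the write weighting $\mathbf{w}_t^w$ and the erase vector $\mathbf{e}_t$, and writes information by adding the outer product of the write weighting and the write vector $\mathbf{v}_t$, where $E$ is a matrix of ones and $\circ$ denotes element-wise multiplication.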
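Equations (5) and (6), together with the allocation weighting, can be sketched as follows. The allocation rule (sort locations by usage and give the least-used location the largest weight) follows [6]; the usage update and free gates are omitted for brevity, and all names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def allocation_weighting(usage: Vector) -> Vector:
    """a[phi_j] = (1 - u[phi_j]) * prod_{i<j} u[phi_i], phi sorted by ascending usage."""
    order = sorted(range(len(usage)), key=lambda i: usage[i])
    alloc = [0.0] * len(usage)
    prod = 1.0
    for idx in order:
        alloc[idx] = (1.0 - usage[idx]) * prod
        prod *= usage[idx]
    return alloc

def write_weighting(alloc: Vector, content: Vector,
                    alloc_gate: float, write_gate: float) -> Vector:
    """Eq. (5): w^w = g^w [g^a a + (1 - g^a) c^w]."""
    return [write_gate * (alloc_gate * a + (1.0 - alloc_gate) * c)
            for a, c in zip(alloc, content)]

def update_memory(memory: Matrix, w: Vector, erase: Vector, write: Vector) -> Matrix:
    """Eq. (6): M_t = M_{t-1} o (E - w e^T) + w v^T, computed element-wise."""
    return [[m * (1.0 - wi * e) + wi * v for m, e, v in zip(row, erase, write)]
            for row, wi in zip(memory, w)]

usage = [0.9, 0.0, 0.5]            # slot 1 is unused
alloc = allocation_weighting(usage)
w_w = write_weighting(alloc, content=[1.0, 0.0, 0.0], alloc_gate=1.0, write_gate=1.0)
memory = update_memory([[1.0, 1.0]] * 3, w_w, erase=[1.0, 1.0], write=[5.0, 7.0])
print(alloc, memory[1])  # [0.0, 1.0, 0.0] [5.0, 7.0]
```

With the allocation gate fully open, the write goes to the unused slot even though the content key points at slot 0.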

3.2. A Single DNC-LSTM-Based Video Caption Model

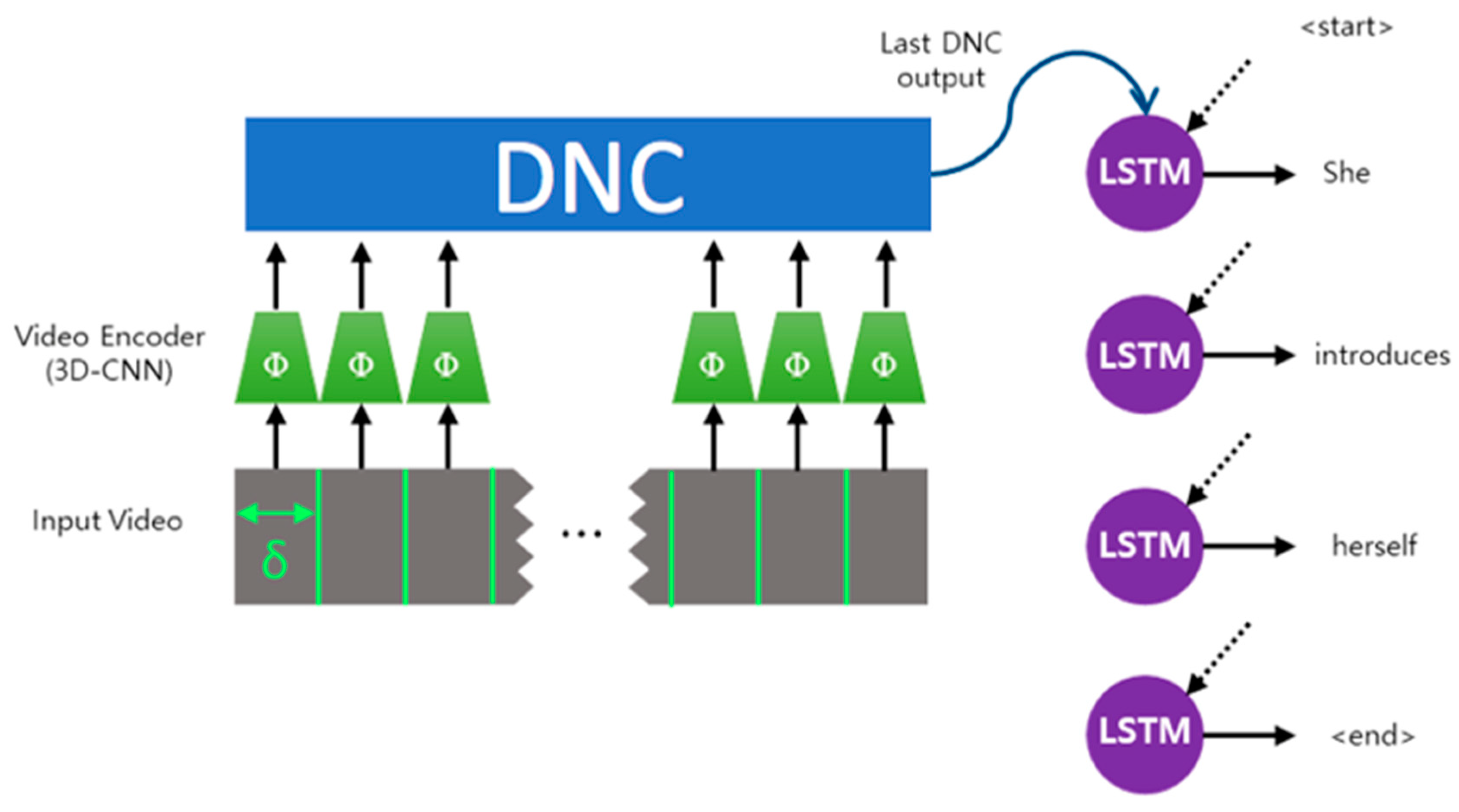

Our proposed model sequentially connects and trains single video caption generation models with DNC for temporal context awareness. As shown in Figure 2, a single video caption generation model is an encoder-decoder network integrated with DNC memory. It encodes a video clip with a pre-trained 3D CNN and generates sentences from the information stored in the DNC memory using an LSTM decoder.

We use the 4096-dimensional feature map obtained from a pre-trained 3D CNN [1] to extract the spatio-temporal features of a video. For this process, we divide each video sequence into a number of short video clips of 16 frames each and extract a feature vector for each clip. For more temporally coherent feature extraction, consecutive clips overlap by eight frames:

$$\mathbf{x}_t = \mathrm{C3D}\left(\mathbf{v}_{8(t-1)+1}, \ldots, \mathbf{v}_{8(t-1)+16}\right) \tag{7}$$

where $\mathbf{x}_t \in \mathbb{R}^{4096}$ is the feature vector extracted at time step $t$, $\mathbf{v}_{8(t-1)+1}, \ldots, \mathbf{v}_{8(t-1)+16}$ are the frames selected for the current video clip, and $\mathrm{C3D}(\cdot)$ is the 3D CNN, which generates a 4096-dimensional feature map from its last fully connected layer.
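The clip segmentation of Equation (7) amounts to a sliding window of 16 frames with a stride of 8. A minimal sketch of the window indices (0-based, end exclusive) is shown below; it assumes that leftover frames at the end of a video that do not fill a whole clip are dropped, and the 3D CNN call itself is not shown.

```python
from typing import List, Tuple

def clip_windows(num_frames: int, clip_len: int = 16,
                 stride: int = 8) -> List[Tuple[int, int]]:
    """(start, end) frame indices of overlapping clips.

    With stride 8, consecutive 16-frame clips share eight frames.
    """
    if num_frames < clip_len:
        return []
    return [(s, s + clip_len)
            for s in range(0, num_frames - clip_len + 1, stride)]

print(clip_windows(40))  # [(0, 16), (8, 24), (16, 32), (24, 40)]
```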

After this process, to reflect the previous information in the DNC memory in the current computation, we concatenate the read vectors, which reflect the contents of the past DNC memory, with the current input feature vector and provide them as input to the DNC controller. Since the input to the DNC controller includes the sequence of 3D CNN features, the controller input $\boldsymbol{\chi}_t$ at time step $t$ is

$$\boldsymbol{\chi}_t = \left[\mathbf{x}_t; \mathbf{r}_{t-1}^1; \ldots; \mathbf{r}_{t-1}^R\right] \tag{8}$$

where $\mathbf{r}_{t-1}^i$ is the $i$-th read vector at time step $t-1$. The DNC output at the last time step $T$, which is the concatenation of the read vectors $\mathbf{r}_T$ and the controller output vector $\mathbf{h}_T^{c}$, is projected onto the output space. This value is used as the initial state $\mathbf{s}_0$ of the LSTM decoder, as in Equation (9), and the decoder is trained to generate sentences based on $\mathbf{s}_0$:

$$\mathbf{s}_0 = W_o\left[\mathbf{r}_T; \mathbf{h}_T^{c}\right] \tag{9}$$

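where $W_o \in \mathbb{R}^{H \times (N_r + N_c)}$, $H$ is the number of LSTM decoder hidden units, $N_r$ is the size of the concatenated read vector, and $N_c$ is the size of the LSTM controller output vector. The decoder has an LSTM structure that takes embedded words as input, as shown in Equation (10). In Equation (11), the output of the LSTM at each time step $t$ predicts the one-hot vector of the next word by applying the softmax function to the output of a fully connected layer with as many nodes as the vocabulary size:

$$\mathbf{s}_t, \mathbf{m}_t = \mathrm{LSTM}\left(W_e\,\mathbf{y}_{t-1}, \mathbf{s}_{t-1}, \mathbf{m}_{t-1}\right) \tag{10}$$

$$\hat{\mathbf{y}}_t = \mathrm{softmax}\left(W_p\,\mathbf{s}_t\right) \tag{11}$$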

where $W_e \in \mathbb{R}^{E \times V}$, $W_p \in \mathbb{R}^{V \times H}$, and $\mathbf{m}_t$ is the LSTM decoder cell state; $V$ is the size of the vocabulary and $E$ is the size of the decoder word embedding vector. $\mathbf{y}_{t-1}$ is a one-hot vector of vocabulary size that is fed as input to each decoder step; we obtain it by shifting the target sentence by one step for one-step-ahead prediction. $W_e$ is the word embedding matrix, and $W_p$ is the matrix that projects the LSTM output to a vocabulary-size vector.
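The data flow of Equations (8) and (9) can be sketched with plain lists. The toy weights below are made up, and the size check at the end uses the settings of Section 4.1 (4096-dimensional features and four read heads of width 64); the function names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def controller_input(x_t: Vector, prev_reads: List[Vector]) -> Vector:
    """Eq. (8): chi_t = [x_t; r^1_{t-1}; ...; r^R_{t-1}]."""
    chi = list(x_t)
    for r in prev_reads:
        chi.extend(r)
    return chi

def decoder_initial_state(W_o: Matrix, reads_T: List[Vector],
                          ctrl_out_T: Vector) -> Vector:
    """Eq. (9): s_0 = W_o [r_T; h_T], with W_o of shape H x (N_r + N_c)."""
    z = [v for r in reads_T for v in r] + list(ctrl_out_T)
    if any(len(row) != len(z) for row in W_o):
        raise ValueError("W_o must have N_r + N_c columns")
    return [sum(w * v for w, v in zip(row, z)) for row in W_o]

# Toy sizes: one read head of width 2, controller output of size 1, H = 2.
s0 = decoder_initial_state([[1.0, 0.0, 1.0], [0.0, 2.0, 0.0]],
                           reads_T=[[0.5, 0.25]], ctrl_out_T=[1.0])
print(s0)  # [1.5, 0.5]

# Section 4.1 sizes: 4096 + 4 * 64 = 4352 controller inputs.
chi = controller_input([0.0] * 4096, [[0.0] * 64 for _ in range(4)])
print(len(chi))  # 4352
```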

The loss function is defined as the cross-entropy between the LSTM output vector and the one-hot vector of the target word, and it is optimized with the back-propagation through time (BPTT) algorithm:

$$\mathcal{L} = -\frac{1}{B}\sum_{b=1}^{B}\sum_{t=1}^{T_s} \mathbf{y}_t^{(b)\top} \log \hat{\mathbf{y}}_t^{(b)} \tag{12}$$

where $\hat{\mathbf{y}}_t^{(b)}$ is the output of Equation (11) for the $b$-th sample, $T_s$ is the length of the target sentence, and $B$ is the size of the mini-batch. Since composing long video samples into mini-batches can cause excessive zero-padding, we set $B = 1$ in our proposed model. $\mathbf{y}_t^{(b)}$ is the $t$-th word of the target sentence, converted to a one-hot vector of vocabulary size. The DNC learns the optimal memory operations to generate a target sentence, and the LSTM decoder learns to generate sentences given the video scene representation.
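The one-step shift of the target sentence and the per-sentence loss of Equation (12) with $B = 1$ can be sketched as follows. Because the targets are one-hot, the inner product $\mathbf{y}_t^{\top}\log\hat{\mathbf{y}}_t$ reduces to the log-probability of the target word. The `<bos>` and `<eos>` markers are hypothetical placeholder tokens.

```python
import math
from typing import Dict, List, Tuple

BOS, EOS = "<bos>", "<eos>"  # hypothetical start/end tokens

def shift_for_teacher_forcing(tokens: List[str]) -> Tuple[List[str], List[str]]:
    """Decoder inputs are the targets shifted right by one step."""
    return [BOS] + tokens, tokens + [EOS]

def sentence_cross_entropy(probs: List[List[float]], targets: List[str],
                           vocab: Dict[str, int]) -> float:
    """-sum_t log p_t[y_t]: one-hot cross-entropy for a single sentence (B = 1)."""
    return -sum(math.log(p[vocab[y]]) for p, y in zip(probs, targets))

vocab = {BOS: 0, EOS: 1, "a": 2, "man": 3}
inputs, targets = shift_for_teacher_forcing(["a", "man"])
print(inputs, targets)  # ['<bos>', 'a', 'man'] ['a', 'man', '<eos>']

uniform = [[0.25] * 4 for _ in targets]  # outputs of an untrained decoder
loss = sentence_cross_entropy(uniform, targets, vocab)
print(round(loss, 4))  # 4.1589, i.e. 3 * ln 4
```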

Through association based on the similarity between the given data and the information stored in memory, the DNC can retrieve stored information. Our proposed model takes advantage of this characteristic of the DNC to understand the context of complex, long videos.

3.3. Consecutive DNC-Based Video Caption Model

In this paper, we have two hypotheses:

Based on the above hypotheses, the main idea of our model is to provide temporal context to video caption generation by passing the various components involved in the DNC memory operations to the next DNC stage.

In other words, we can use the abstracted information of the input data accumulated in the DNC memory, together with the values involved in the memory read/write operations, as a medium for context awareness. Under this assumption, we define the context vector $\mathbf{C}_k$ of the $k$-th sub-scene with input sequence length $T_k$ as follows:

$$\mathbf{C}_k = \left(M_{T_k}, \mathbf{u}_{T_k}, \mathbf{p}_{T_k}, L_{T_k}, \mathbf{w}_{T_k}^{w}, \mathbf{w}_{T_k}^{r}, \mathbf{r}_{T_k}\right) \tag{13}$$

where $k = 1, \ldots, K$ and $K$ is the number of sub-scenes in a video. The components of $\mathbf{C}_k$ have the following meanings:

Memory matrix $M$: Abstract representation of the input data, updated through content-based and location-based addressing.

Usage vector $\mathbf{u}$: Frequency of usage. The more frequently a memory block is used, the larger the value.

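Precedence vector $\mathbf{p}$: The degree to which each memory block was the most recent one written to. When data are written to some memory blocks, the precedence of the other blocks decreases; the vector is used to update the link matrix so that the order of writes between memory blocks can be tracked.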

Link matrix $L$: The order in which memory blocks are used. $L[i,j]$ becomes larger if memory block $i$ is more frequently written right after memory block $j$.

Write weight $\mathbf{w}^{w}$: The degree to which the given input data are reflected in memory. The larger the value, the more information is stored in memory.

Read weight $\mathbf{w}^{r}$: The degree to which information is read from memory. The larger the value, the more information is read from memory and reflected in the output.

Read vector $\mathbf{r}$: Information read from memory through the three read modes: content-based, forward, and backward.
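The context tuple of Equation (13) can be represented as a simple record that is carried from one sub-scene to the next. The sketch below assumes, for illustration, that the default initial state is all zeros; the class and function names are hypothetical, and the sizes in the example come from Section 4.1.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]
Matrix = List[List[float]]

@dataclass
class ContextTuple:
    """C_k from Eq. (13): DNC state at the last step T_k of sub-scene k."""
    memory: Matrix        # M, N x W
    usage: Vector         # u, N
    precedence: Vector    # p, N
    link: Matrix          # L, N x N
    write_weight: Vector  # w^w, N
    read_weights: Matrix  # w^r, R x N (one row per read head)
    read_vectors: Matrix  # r, R x W

def default_context(n: int, w: int, r: int) -> ContextTuple:
    """Assumed all-zero initial state for the first sub-scene."""
    return ContextTuple(
        memory=[[0.0] * w for _ in range(n)],
        usage=[0.0] * n,
        precedence=[0.0] * n,
        link=[[0.0] * n for _ in range(n)],
        write_weight=[0.0] * n,
        read_weights=[[0.0] * n for _ in range(r)],
        read_vectors=[[0.0] * w for _ in range(r)],
    )

c0 = default_context(n=256, w=64, r=4)
print(len(c0.memory), len(c0.memory[0]), len(c0.read_vectors))  # 256 64 4
```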

As shown in Figure 3, the DNC memory for each sub-video clip is initialized with the context vector $\mathbf{C}_{k-1}$ generated by the previous DNC for context awareness. Based on this structure, we sequentially train each LSTM decoder for coherent caption generation. If we denote the model in Section 3.2 as $\mathcal{F}$, the $k$-th context vector as $\mathbf{C}_k$, the feature sequence obtained from the 3D CNN for the $k$-th sub-scene as $X_k$, and the generated sentence as $\hat{S}_k$, then our entire video captioning model can be described as Equation (14):

$$\left(\hat{S}_k, \mathbf{C}_k\right) = \mathcal{F}\left(X_k, \mathbf{C}_{k-1}\right), \qquad k = 1, \ldots, K \tag{14}$$

where $\mathbf{C}_0$ is the default initial DNC state.

Based on the BPTT algorithm, our model is trained by sequentially optimizing the cross-entropy loss between the generated sentence $\hat{S}_k$ of each sub-scene and the target sentence $S_k$ over all sub-scenes, as shown in Equation (15). The DNC and the LSTM share their model parameters across every stage $k$ for context understanding and sentence generation:

$$\mathcal{L} = -\sum_{k=1}^{K}\sum_{t=1}^{T_{s,k}} \mathbf{y}_{k,t}^{\top} \log \hat{\mathbf{y}}_{k,t} \tag{15}$$

where $\hat{\mathbf{y}}_{k,t}$ is the decoder output for the $t$-th word of $\hat{S}_k$ and $\mathbf{y}_{k,t}$ is the one-hot vector of the $t$-th word of $S_k$. The proposed model learns how to read and write the various connection patterns between sub-scenes to the external memory and to flexibly use this context information for video captioning. In addition, because the context information accumulated in memory is read through the DNC read vectors and provided as the initial state of the LSTM decoder during training, the model can generate sentences with context understanding.
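Equations (14) and (15) describe a loop in which each sub-scene is captioned starting from the previous context tuple and the per-scene losses are summed. The sketch below shows only that control flow: `step` stands in for the shared DNC-LSTM model and `toy_step` is a hypothetical toy function, while `with_context=False` mimics the single-DNC setting, which always starts from the default state.

```python
from typing import Any, Callable, List, Tuple

Step = Callable[[List[float], Any], Tuple[str, float, Any]]

def run_consecutive(sub_scenes: List[List[float]], step: Step,
                    init_state: Any, with_context: bool = True
                    ) -> Tuple[List[str], float]:
    """(S_k, C_k) = F(X_k, C_{k-1}) over all sub-scenes; returns captions and summed loss."""
    state = init_state
    captions, total_loss = [], 0.0
    for features in sub_scenes:
        start = state if with_context else init_state  # C_{k-1} or default C_0
        caption, loss, state = step(features, start)
        captions.append(caption)
        total_loss += loss
    return captions, total_loss

def toy_step(features: List[float],
             memory: Tuple[int, ...]) -> Tuple[str, float, Tuple[int, ...]]:
    """Hypothetical stand-in that only 'remembers' how many scenes it has seen."""
    memory = memory + (len(features),)
    return f"scenes in memory: {len(memory)}", float(len(features)), memory

scenes = [[0.1], [0.2, 0.3], [0.4]]
print(run_consecutive(scenes, toy_step, ()))
# (['scenes in memory: 1', 'scenes in memory: 2', 'scenes in memory: 3'], 4.0)
print(run_consecutive(scenes, toy_step, (), with_context=False)[0])
# ['scenes in memory: 1', 'scenes in memory: 1', 'scenes in memory: 1']
```

Because the same `step` is reused at every stage, the sketch also reflects the parameter sharing across stages; only the carried state differs.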

4. Experimental Results

For the performance evaluation, we compare our model with other state-of-the-art video captioning approaches [

14,

19,

21,

22,

23] with respect to the context awareness. We have performed four experiments with the ActivityNet Caption dataset [

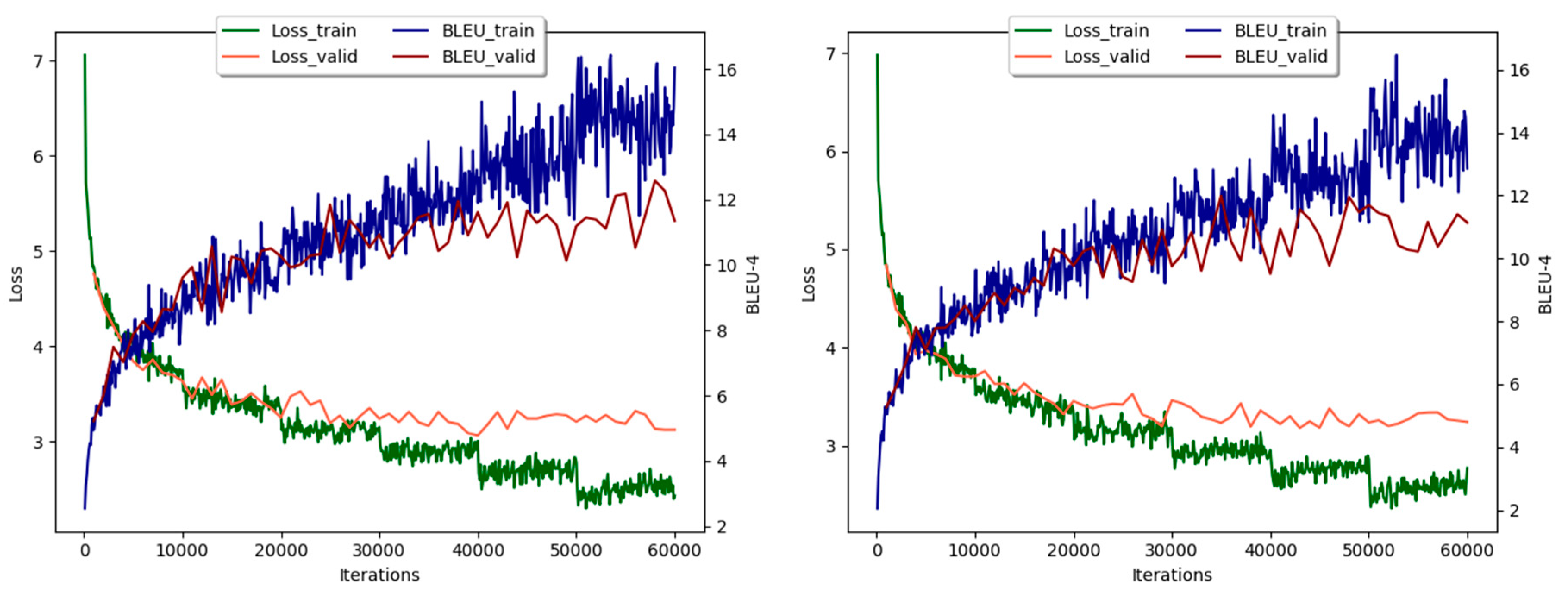

19]. First is learning the curve comparison, which indicates that the proposed model is computationally efficient, as shown in

Figure 4. Second is the ‘without context’ experiment, which indicates the efficiency of DNC itself. Third is the ‘with context’ experiment, which indicates how our proposed consecutive DNC outperforms other approaches. Finally, the last experiment is a qualitative evaluation for generated caption examples, which indicates that our results include more relevant contextual information. Since the goal of our approach is understanding the context of the video without explicitly searching for event-wise correlation, dense video captioning models are not appropriate for comparison. Therefore, we select only video captioning models which considers context from video for our experiment. We also show the effect of the DNC memory connections over time in our model. In our experiments, we focus on the context awareness of generated captions. To evaluate the model performance independent of sub-scene localization, we assume that event localization is already performed. The 3D CNN feature sequences are extracted based on the specified start and end time of each sub-scene in the ground truth dataset, and used for training and testing of our model.

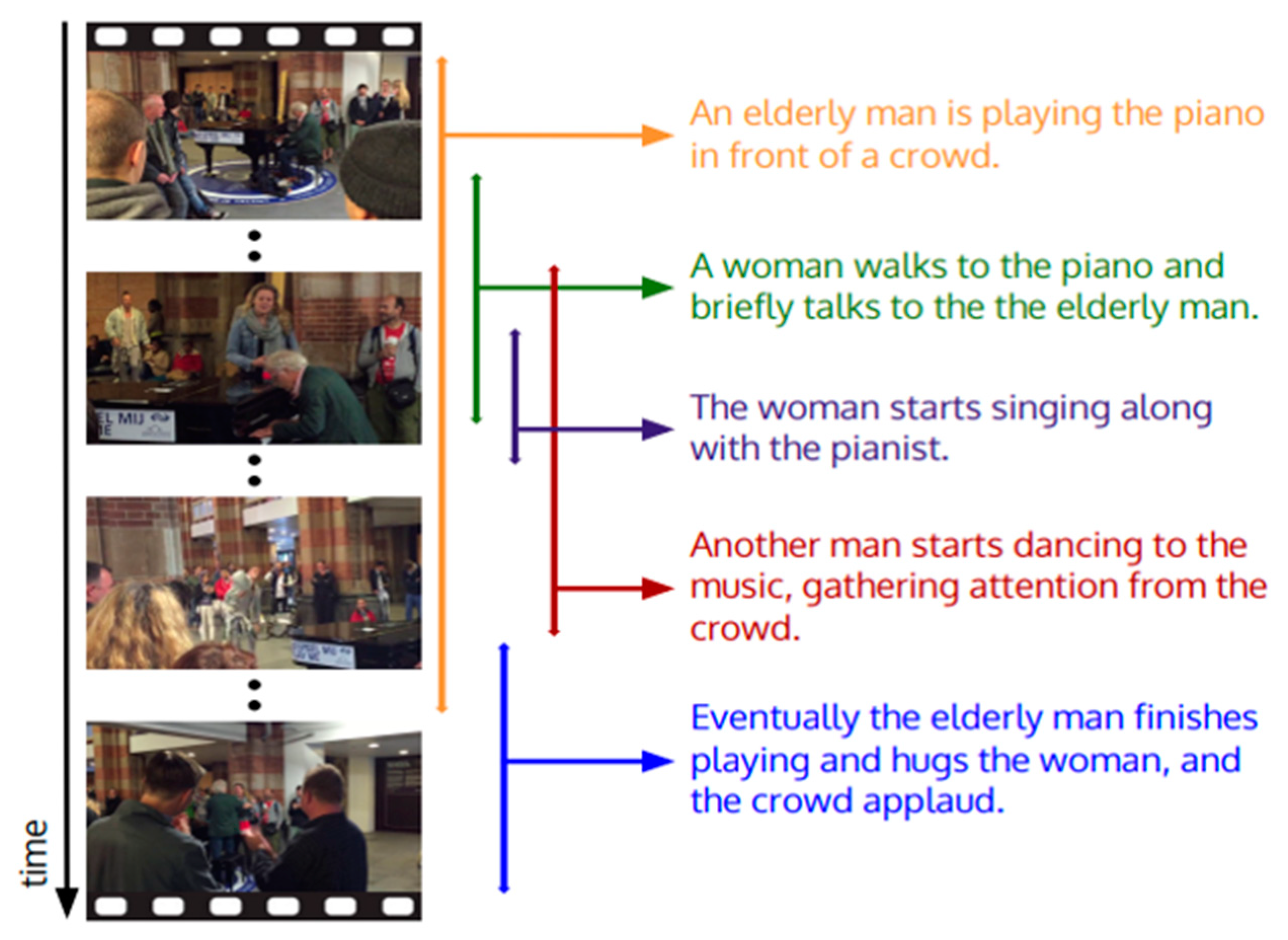

The dataset used in our experiments is the ActivityNet Caption dataset [19], which is based on ActivityNet version 1.3 [24] and consists of about 20,000 YouTube videos. Each video has an average length of 180 s, and each sample includes captions composed of the start/end times and descriptions of its sub-scenes. Each sample includes three sub-scenes on average, and each caption is a sentence of 13.5 words on average. The captions were written with the causal relationships between events in mind. The numbers of training/validation/test videos are 10,024/4926/5044, respectively, and the total number of sentences is 100,000.

4.1. Model Training

To extract spatio-temporal features from a video, we apply a 3D CNN pre-trained on the Sports-1M dataset [1]. For this process, we define 16 video frames as one clip and input them to the 3D CNN to extract a 4096-dimensional feature vector from the last fully connected layer. To extract detailed features, a sliding window is applied to the video samples with an overlap of eight frames. For sentence preprocessing, the PTB tokenizer included in the Stanford CoreNLP toolkit [25] is used. The word dictionary for converting words to integers is constructed from the sentences in the training and validation datasets. Each word is converted to a one-hot vector, then converted to a dense vector by multiplying it by the word embedding matrix, and used as input to the decoder.

The DNC controller is an LSTM with 256 hidden units. The DNC memory has 256 memory blocks, each storing a 64-dimensional vector, and four read heads are used in total. The read vector of the DNC is projected to a vector of size 1024 to obtain the final output, and this vector is used as the initial state of the LSTM decoder. The LSTM decoder has 1024 hidden units, and each word is converted into a 300-dimensional embedding vector. To avoid over-fitting, we apply dropout [26] with a rate of 0.3 to every input and output layer of the LSTM layers.
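The model sizes listed above can be collected in a small configuration object, which also makes the derived dimensions explicit. This is a sketch: the class name is hypothetical, the projection input follows Equation (9), the interface-vector size uses the formula $RW + 3W + 5R + 3$ from the DNC paper [6], and optimizer settings are not included.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CaptionConfig:
    feature_dim: int = 4096        # C3D fully connected feature
    controller_hidden: int = 256   # DNC controller LSTM units
    memory_slots: int = 256        # N memory blocks
    word_size: int = 64            # W, width of each memory block
    read_heads: int = 4            # R
    decoder_hidden: int = 1024     # LSTM decoder units (= projection size)
    embedding_dim: int = 300
    dropout: float = 0.3
    batch_size: int = 1

    @property
    def controller_input_dim(self) -> int:
        """Eq. (8): features plus R previous read vectors."""
        return self.feature_dim + self.read_heads * self.word_size

    @property
    def projection_input_dim(self) -> int:
        """Eq. (9): concatenated read vectors plus controller output."""
        return self.read_heads * self.word_size + self.controller_hidden

    @property
    def interface_dim(self) -> int:
        """Interface-vector length R*W + 3W + 5R + 3, as in [6]."""
        W, R = self.word_size, self.read_heads
        return R * W + 3 * W + 5 * R + 3

cfg = CaptionConfig()
print(cfg.controller_input_dim, cfg.projection_input_dim, cfg.interface_dim)  # 4352 512 471
```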

For training, the ADAM optimization algorithm is used, and the learning rate is set to

and the momentum decay parameters

and

are used according to the method proposed in [27]. As shown in Equation (15), the cross-entropy between the output of the LSTM decoder and the one-hot vector of a target word is used as the loss function. Since organizing a video consisting of multiple sub-scenes into multiple mini-batches can result in excessive zero-padding, we set the mini-batch size to 1.

After training on the $k$-th scene, to provide the context information accumulated in the DNC to the $(k+1)$-th scene, we construct the tuple described in Equation (13) and set it as the initial value of the DNC memory for training on the $(k+1)$-th scene. The same procedure is performed sequentially for all following sub-scenes. We measure the loss and the 4-gram BLEU score (B@4) on the training data every 100 steps and check the progress by measuring the loss and BLEU score on the validation data every 1000 steps. After six epochs of training, when the loss and BLEU scores have converged on the validation data, we finish the training process. In our experiment, the entire training takes 12 h on an NVIDIA Quadro GV100 with 5120 CUDA cores, 640 tensor cores, and 32 GB of GPU memory.

For the evaluation of the single DNC-based captioning model, the model is not connected to any other DNC memory over time, and the $\mathbf{C}_k$ tuple is not used. The initial value of the single DNC model is always set to the default, so that each caption is generated only from the current input video sequence without any consideration of the context between events. All other conditions are the same, and training is likewise stopped after six epochs.

Figure 5 shows the learning curves of our model under the ‘with context’ and ‘without context’ conditions. The two learning curves are almost identical, which indicates that adding the context connection of our proposed model does not increase the problem complexity. The blue and brown lines represent the BLEU scores for training and validation, respectively, and the green and orange lines correspond to the training and validation losses, respectively.

4.2. Performance Comparison with Other Approaches

Table 2 and Table 3 show the results of the quantitative performance comparison between the proposed model and other video captioning models on the ActivityNet Caption dataset. For the performance measurements, BLEU [7], METEOR [8], and CIDEr-D [9] are used.

In the ‘without context’ comparison, [14,22] trained each sub-scene and its caption as a single sample, so the context between scenes is disconnected, as in the ‘without context’ condition of our model. In LSTM-YT [14], feature maps from a pre-trained VGG network are extracted, and the result of mean pooling over the time axis is used as the initial state of the LSTM decoder. S2VT [22] has an encoder-decoder structure in which mean pooling is replaced with an LSTM encoder. These two models are well-known approaches that use CNN-extracted features for caption generation without considering contextual information; they only consider temporal sequential information in their structures. Therefore, they are suitable for the ‘without context’ comparison to show the caption generation performance of a single DNC model. H-RNN [23] uses two RNNs, one as a sentence generator and the other for determining the initial state of the generator for the next sub-scene description. TempoAttn [19] introduced the dataset used in this experiment, and we adopt its model as the baseline for our study. To reflect past and future contexts in the current sentence generation, [19] applied an attention mechanism to the hidden states of the LSTM that encodes each scene. DVC [21] is the most recent of these studies and applies a holistic attention score to an attention mechanism to distill descriptive video clips. These three selected models are known as context-aware approaches, as they use specific structures to address context information. Comparing against them therefore shows how much more contextually relevant the results of our proposed model are, in both quantitative and qualitative evaluation. The evaluation is performed for [14,22] under the ‘without context’ condition and for [19,21,23] under the ‘with context’ condition.

As shown in Table 2, compared to the LSTM-based models [14,22], the proposed DNC-based ‘without context’ model produces higher BLEU scores overall and comparable METEOR and CIDEr-D scores. This result shows that using a DNC instead of an LSTM as the video encoder can improve video captioning performance in terms of BLEU.

The comparison results in

Table 3 show that our proposed model outperforms all other models in terms of BLEU and CIDEr-D score considerably. Additionally, our model’s METEOR score is superior to the baseline Temporal Attention model [

19] and the H-RNN model [

23], but slightly lower than DVC [

21]. Moreover, compared to the proposed ‘without context’ model result in

Table 2, the proposed ‘with context’ model shows significant improvement in performance. The quantitative analysis results indicate the excellence of the proposed consecutive DNC structure for context awareness. Since the goal of our model is not dense captioning, but context-aware captioning, quantitative analysis is not sufficient to measure how well the context is reflected in the generated descriptions. Therefore, we will show a more detailed result of our proposed model through qualitative analysis. In

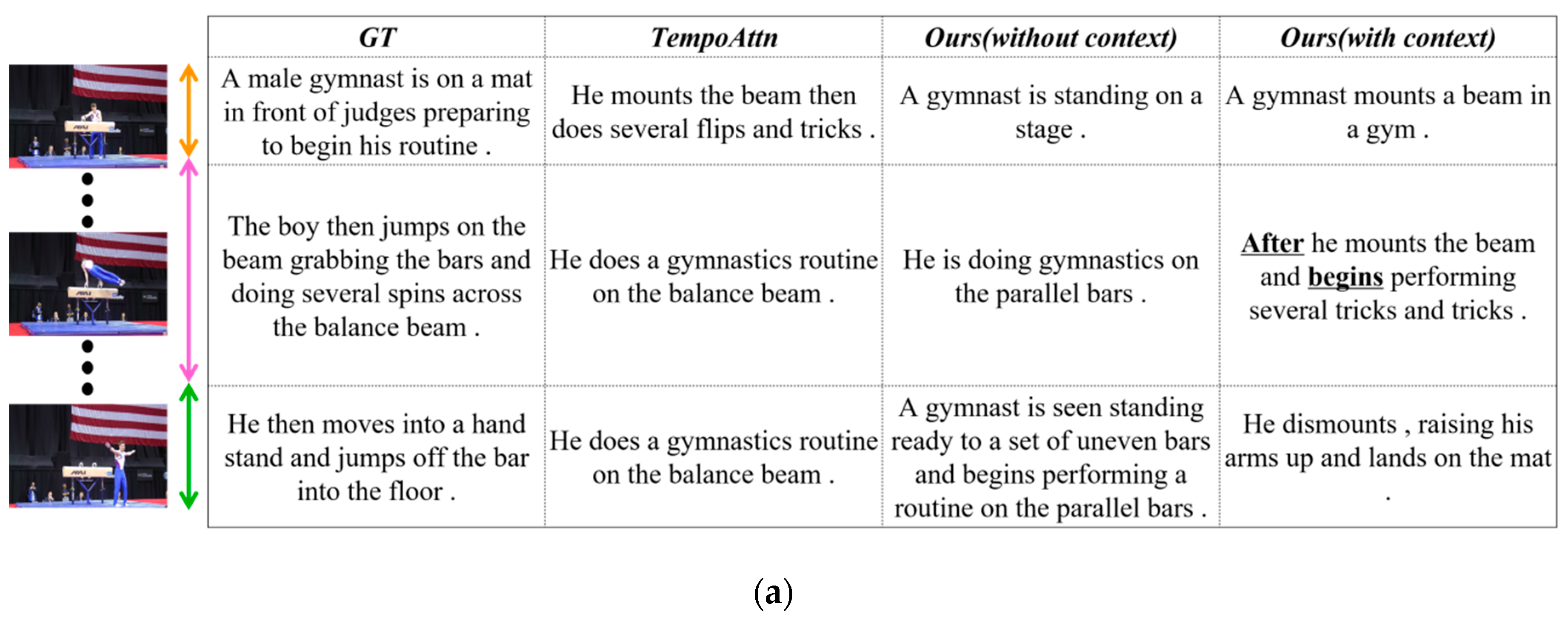

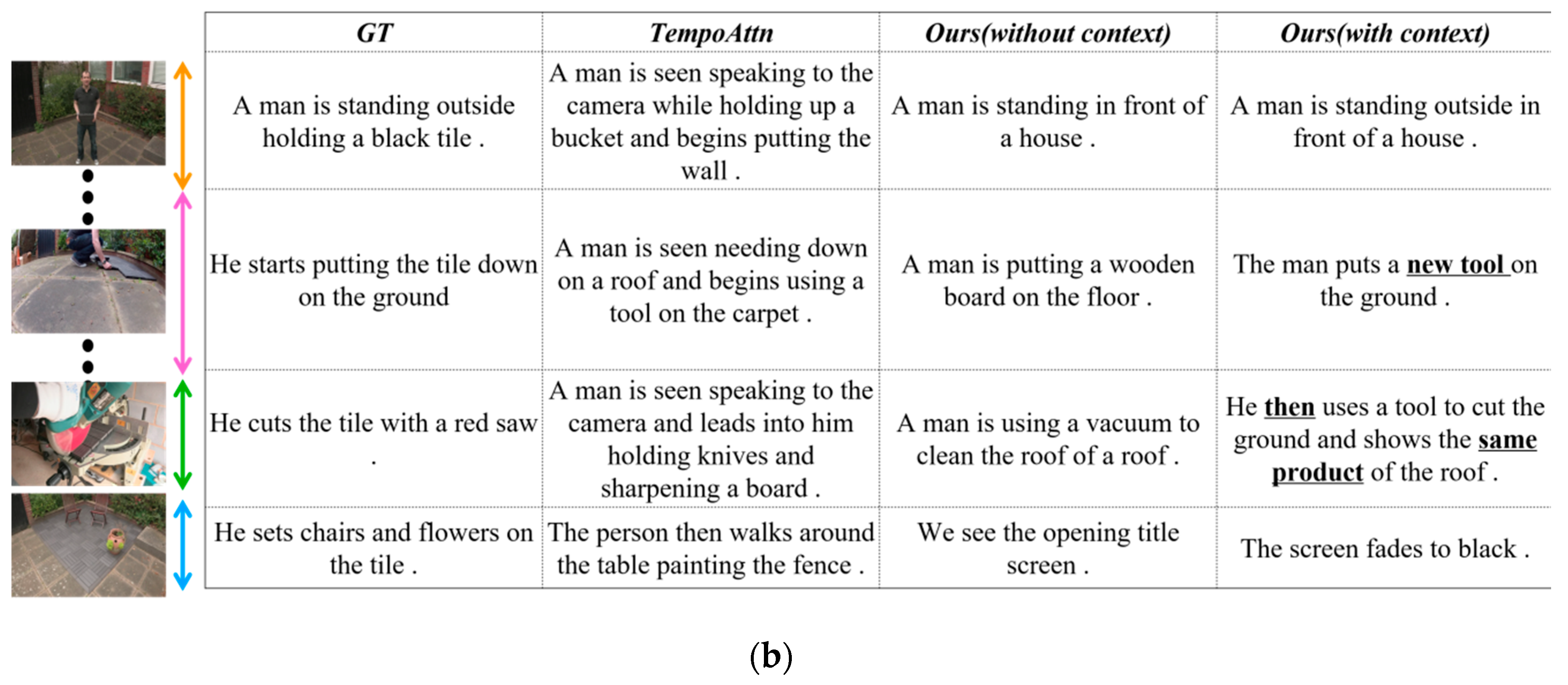

Figure 6, we compare the generated captions of our ‘with context’ model, ‘without context’ model and TempoAttn [

19].

Figure 6a shows that TempoAttn repeats the same description for the second scene even though the scene has already changed. In the same case, our model generates sentences with words of contextual meaning, such as endings and beginnings (marked in bold), which implies that our descriptions contain more context-related words than those of other models. In the case of the ‘without context’ model, even though the gymnast’s performance has already finished in the last scene, the model cannot recognize the situation and generates an incorrect description, “beginning performing”. In contrast, the ‘with context’ model generates more natural and coherent sentences than all other models by using words with contextual meaning, such as “then”, “the new tool” (different from a previous tool), and “the same product” (the same as a previous one).

The qualitative analysis shows that our model uses pronouns and conjunctions for contextual expressions more frequently than other approaches. For such connective expressions to read naturally, they must be consistent with the context, which suggests that our model takes the context into account well, even though this can be disadvantageous for some numerical evaluation metrics. Furthermore, the consecutive DNC captioning model with contextual connections performs better than the single DNC captioning model without context consideration. This result demonstrates the effectiveness of the contextual connection of the DNC-based caption generation model in learning temporal context.