Woven Fabric Pattern Recognition and Classification Based on Deep Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

2.1. Convolutional Neural Networks

2.2. VGGNet

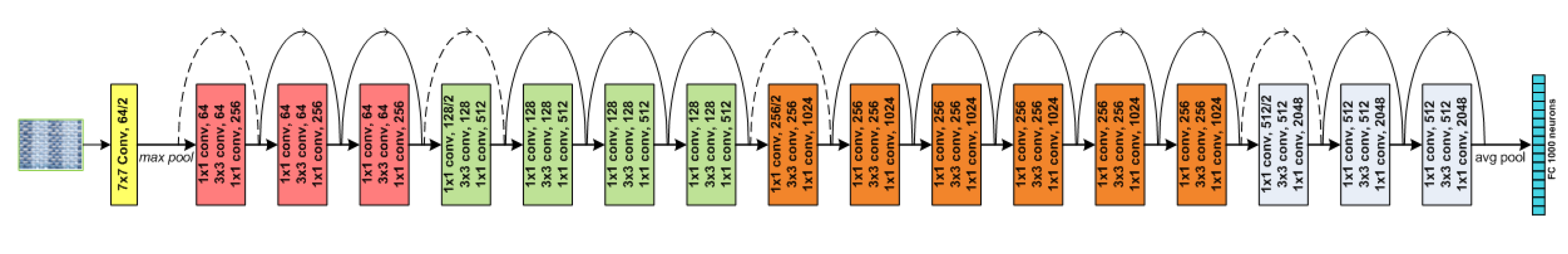

2.3. ResNet

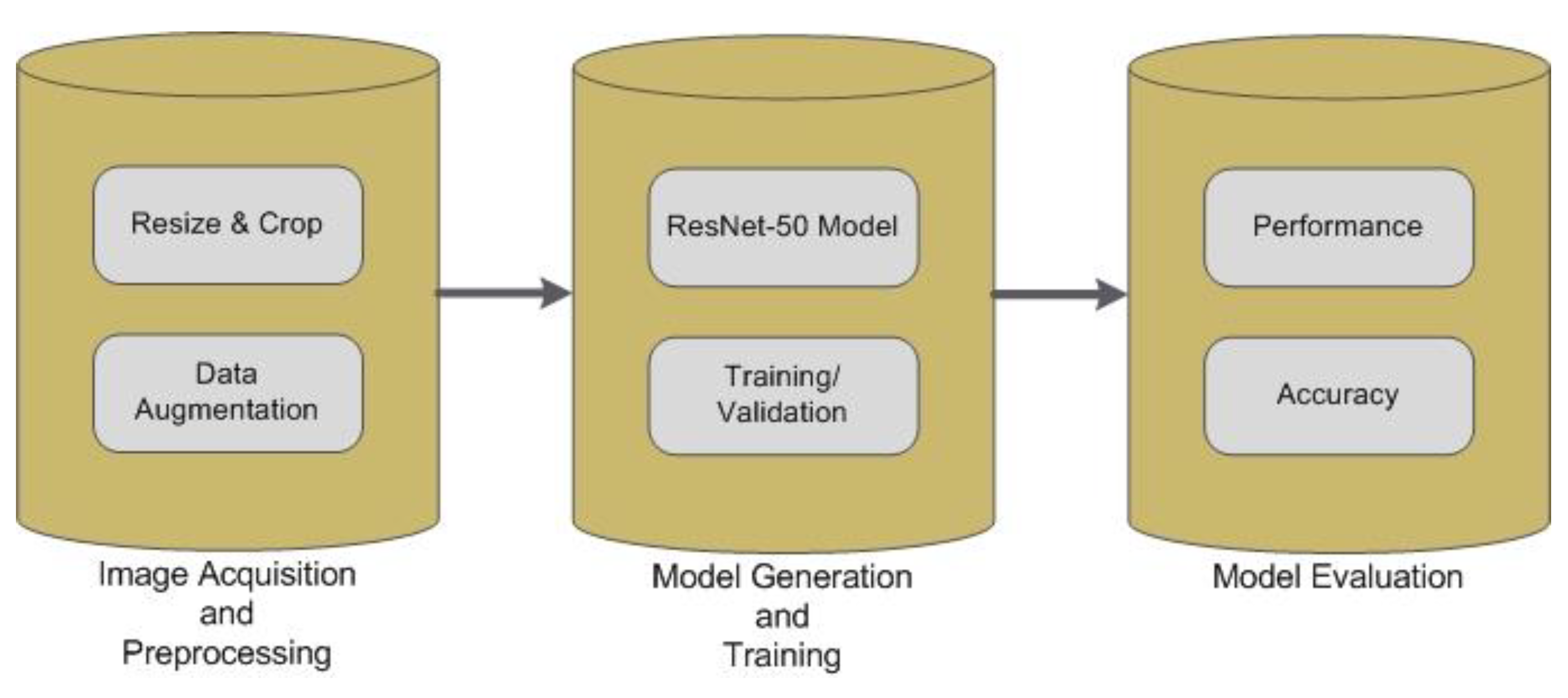

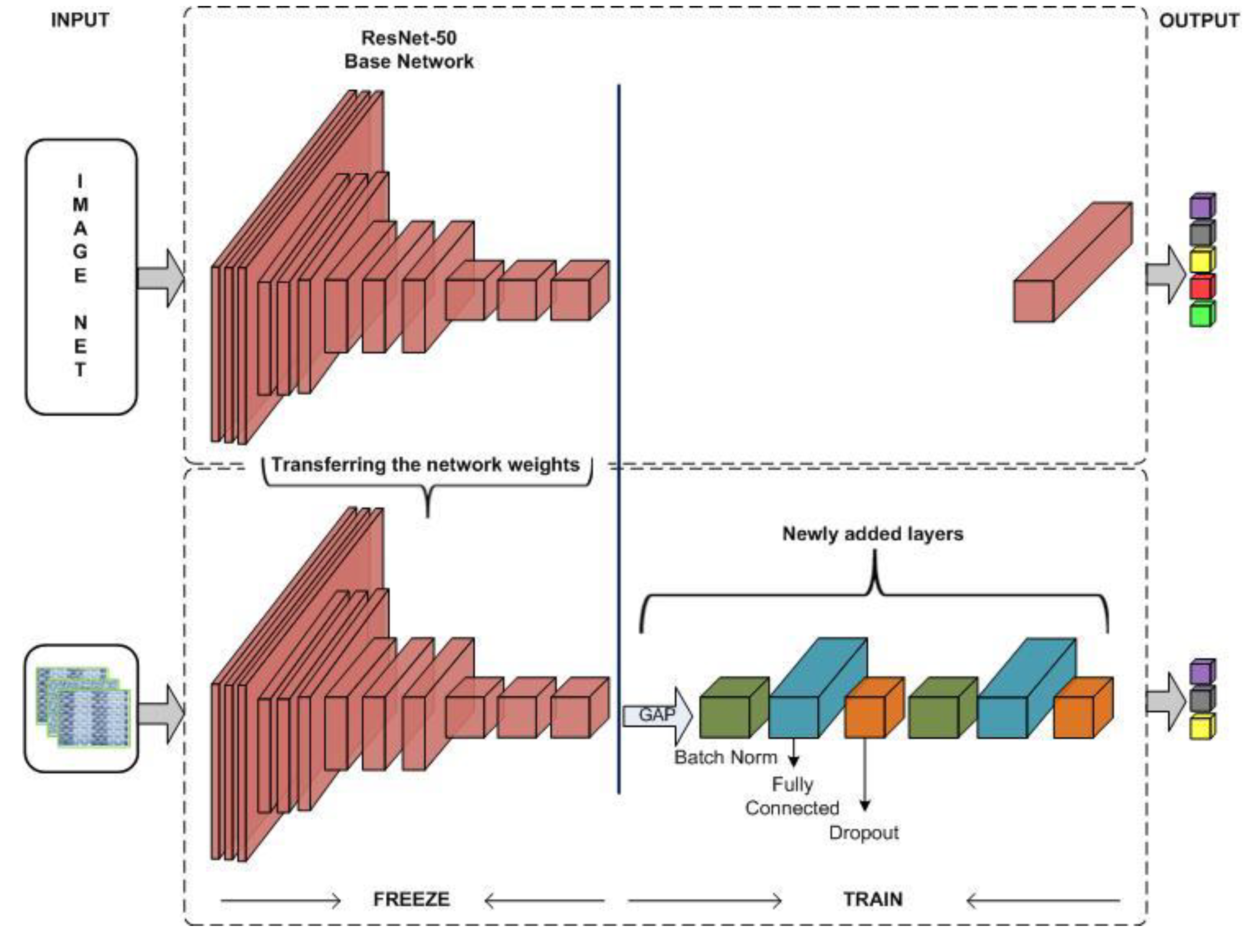

2.4. Proposed Model

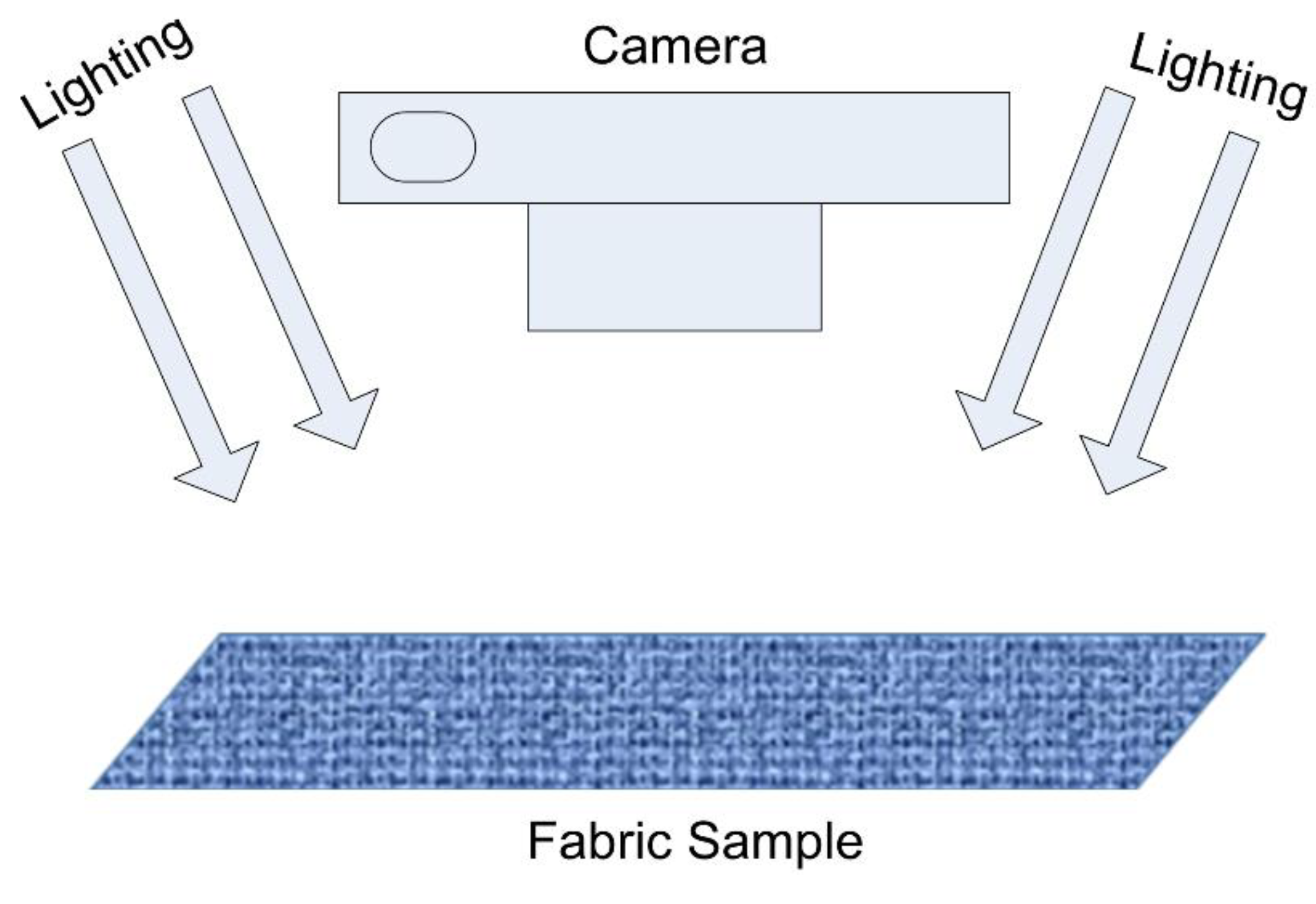

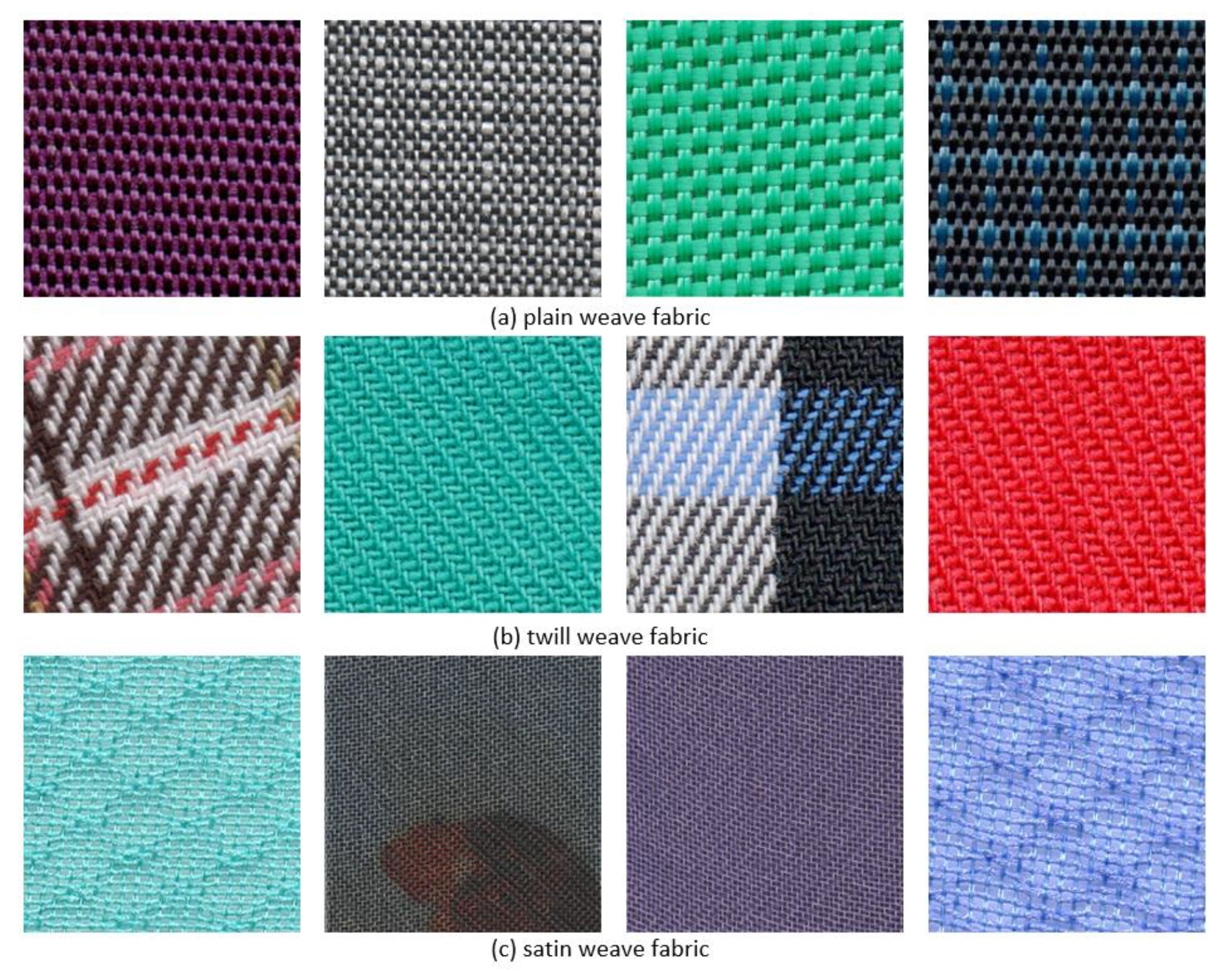

2.5. Dataset

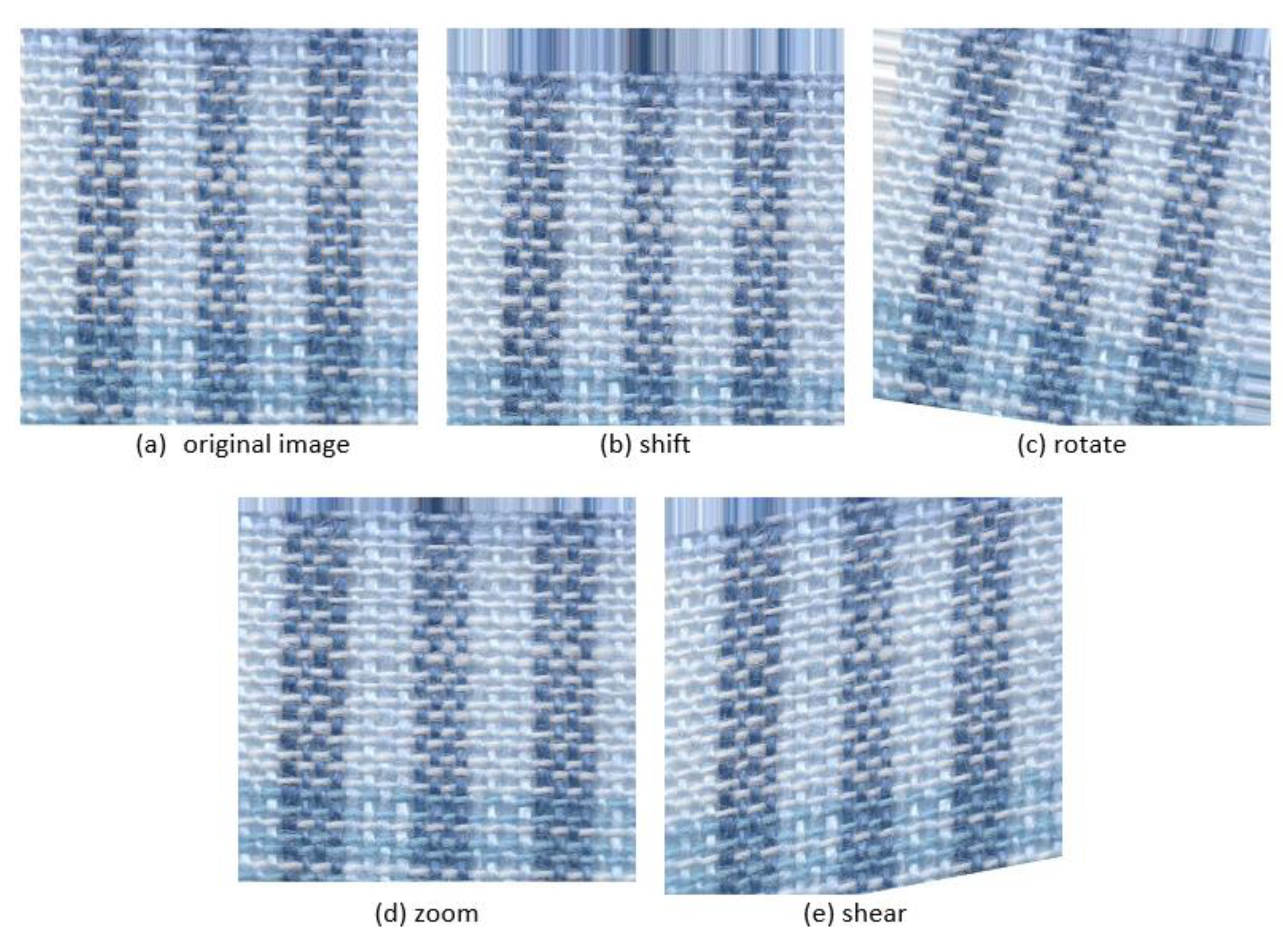

2.6. Data Augmentation

3. Experimental Results

3.1. Experimental Framework

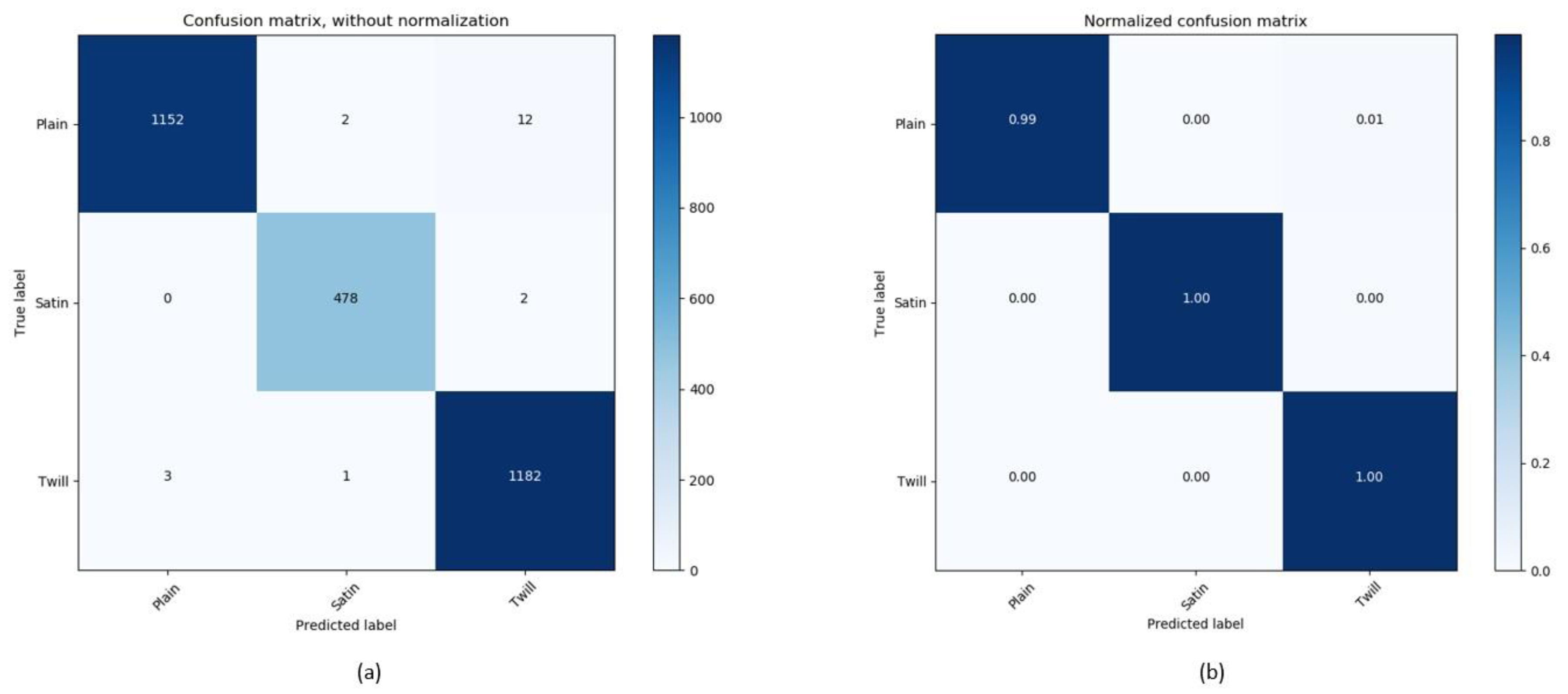

3.2. Results

Evaluation Metrics

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Riello, G.; Roy, T. How India Clothed the World: The World of South Asian Textiles, 1500–1850; Brill: Leiden, The Netherlands, 2009.

2. Osborne, E.F. Weaving Process for Production of a Full Fashioned Woven Stretch Garment with Load Carriage Capability. Patent No. 7,841,369, 30 November 2010.

3. Guarnera, G.C.; Hall, P.; Chesnais, A.; Glencross, M. Woven fabric model creation from a single image. ACM Trans. Graph. (TOG) 2017, 36, 1–13.

4. Shih, C.-Y.; Kuo, C.-F.J.; Cheng, J.-H. A study of automated color, shape and texture analysis of Tatami embroidery fabrics. Text. Res. J. 2016, 86, 1791–1802.

5. Jing, J.; Xu, M.; Li, P.; Li, Q.; Liu, S. Automatic classification of woven fabric structure based on texture feature and PNN. Fibers Polym. 2014, 15, 1092–1098.

6. Halepoto, H.; Gong, T.; Kaleem, K. Real-Time Quality Assessment of Neppy Mélange Yarn Manufacturing Using Macropixel Analysis. Tekstilec 2019, 62, 242–247.

7. Xiao, Z.; Nie, X.; Zhang, F.; Geng, L.; Wu, J.; Li, Y. Automatic recognition for striped woven fabric pattern. J. Text. Inst. 2015, 106, 409–416.

8. Kumar, K.S.; Bai, M.R. Deploying multi layer extraction and complex pattern in fabric pattern identification. Multimed. Tools Appl. 2020, 79, 10427–10443.

9. Li, J.; Wang, W.; Deng, N.; Xin, B. A novel digital method for weave pattern recognition based on photometric differential analysis. Measurement 2020, 152, 107336.

10. Kuo, C.-F.J.; Shih, C.-Y.; Ho, C.-E.; Peng, K.-C. Application of computer vision in the automatic identification and classification of woven fabric weave patterns. Text. Res. J. 2010, 80, 2144–2157.

11. Pan, R.; Gao, W.; Liu, J.; Wang, H. Automatic recognition of woven fabric patterns based on pattern database. Fibers Polym. 2010, 11, 303–308.

12. Pan, R.; Gao, W.; Liu, J.; Wang, H.; Zhang, X. Automatic detection of structure parameters of yarn-dyed fabric. Text. Res. J. 2010, 80, 1819–1832.

13. Pan, R.; Gao, W.; Liu, J.; Wang, H. Automatic recognition of woven fabric pattern based on image processing and BP neural network. J. Text. Inst. 2011, 102, 19–30.

14. Kuo, C.-F.J.; Kao, C.-Y. Self-organizing map network for automatically recognizing color texture fabric nature. Fibers Polym. 2007, 8, 174–180.

15. Fan, Z.; Zhang, S.; Mei, J.; Liu, M. Recognition of Woven Fabric based on Image Processing and Gabor Filters. In Proceedings of the 2017 IEEE 7th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Honolulu, HI, USA, 31 July–4 August 2017; pp. 996–1000.

16. Schröder, K.; Zinke, A.; Klein, R. Image-based reverse engineering and visual prototyping of woven cloth. IEEE Trans. Vis. Comput. Graph. 2014, 21, 188–200.

17. Trunz, E.; Merzbach, S.; Klein, J.; Schulze, T.; Weinmann, M.; Klein, R. Inverse Procedural Modeling of Knitwear. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8630–8639.

18. Yildiz, K. Dimensionality reduction-based feature extraction and classification on fleece fabric images. Signal Image Video Process. 2017, 11, 317–323.

19. Li, P.; Wang, J.; Zhang, H.; Jing, J. Automatic woven fabric classification based on support vector machine. In Proceedings of the International Conference on Automatic Control and Artificial Intelligence (ACAI 2012), Xiamen, China, 3–5 March 2012.

20. Guo, Y.; Ge, X.; Yu, M.; Yan, G.; Liu, Y. Automatic recognition method for the repeat size of a weave pattern on a woven fabric image. Text. Res. J. 2019, 89, 2754–2775.

21. Xiao, Z.; Guo, Y.; Geng, L.; Wu, J.; Zhang, F.; Wang, W.; Liu, Y. Automatic Recognition of Woven Fabric Pattern Based on TILT. Math. Probl. Eng. 2018, 11, 1–12.

22. Khan, B.; Han, F.; Wang, Z.; Masood, R.J. Bio-inspired approach to invariant recognition and classification of fabric weave patterns and yarn color. Assem. Autom. 2016, 36, 152–158.

23. Liu, Z.; Zhang, C.; Li, C.; Ding, S.; Dong, Y.; Huang, Y. Fabric defect recognition using optimized neural networks. J. Eng. Fibers Fabr. 2019, 14.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

25. Agrawal, P.; Girshick, R.; Malik, J. Analyzing the performance of multilayer neural networks for object recognition. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 329–344.

26. Yanai, K.; Kawano, Y. Food image recognition using deep convolutional network with pre-training and fine-tuning. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June–3 July 2015; pp. 1–6.

27. Wang, N.; Li, S.; Gupta, A.; Yeung, D.-Y. Transferring rich feature hierarchies for robust visual tracking. arXiv 2015, arXiv:1501.04587.

28. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

29. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

30. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

31. Mikołajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 international interdisciplinary PhD workshop (IIPhDW), Swinoujście, Poland, 9–12 May 2018; pp. 117–122.

| Fabric Type | Precision | Recall | F1-Score | Number of Test Samples |
|---|---|---|---|---|
| Plain | 1.00 | 0.99 | 0.99 | 1166 |
| Twill | 0.99 | 1.00 | 0.99 | 480 |
| Satin | 0.99 | 1.00 | 0.99 | 1186 |
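The F1-scores in the per-class table can be cross-checked against its precision and recall columns. The following is a minimal sketch that assumes the standard harmonic-mean definition of F1; the helper name `f1_score` and the macro and support-weighted averages are illustrative additions, not values reported in the source.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition, assumed here)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Per-class values copied from the table: (precision, recall, number of test samples)
per_class = {
    "Plain": (1.00, 0.99, 1166),
    "Twill": (0.99, 1.00, 480),
    "Satin": (0.99, 1.00, 1186),
}

# Recompute F1 for each fabric type from the rounded table entries
f1_by_class = {name: f1_score(p, r) for name, (p, r, _) in per_class.items()}

# Illustrative aggregates (not reported in the table)
total = sum(n for _, _, n in per_class.values())
macro_f1 = sum(f1_by_class.values()) / len(f1_by_class)
weighted_f1 = sum(f1_by_class[name] * n for name, (_, _, n) in per_class.items()) / total

for name, f1 in f1_by_class.items():
    print(f"{name}: F1 = {f1:.2f}")
print(f"macro F1 = {macro_f1:.3f}, support-weighted F1 = {weighted_f1:.3f}, n = {total}")
```

Because the inputs are already rounded to two decimals, the recomputed values are only approximate; they round to 0.99 for each fabric type, which agrees with the F1-Score column.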

| Model | Precision | Recall | F1-Score | Accuracy | Balanced Accuracy |
|---|---|---|---|---|---|
| VGG-16 | 0.918 ± 0.032 | 0.923 ± 0.016 | 0.920 ± 0.010 | 0.924 ± 0.009 | 0.921 ± 0.013 |
| ResNet-50 | 0.983 ± 0.015 | 0.991 ± 0.006 | 0.986 ± 0.013 | 0.993 ± 0.003 | 0.991 ± 0.002 |
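The Accuracy and Balanced Accuracy columns can be read against the usual multi-class definitions, sketched here as an assumption because this extract does not spell them out. For $K$ classes, with $TP_k$ and $FN_k$ the true positives and false negatives of class $k$ and $N$ the total number of test samples:

$$
\text{Accuracy} = \frac{1}{N}\sum_{k=1}^{K} TP_k,
\qquad
\text{Balanced Accuracy} = \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FN_k}
$$

Under these definitions, balanced accuracy is the unweighted mean of per-class recall, so a smaller class such as Twill (480 test samples) counts as much as Plain or Satin.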

| Authors | Feature Extraction | Classification | Accuracy (%) |
|---|---|---|---|
| Li et al. [19] | LBP + GLCM | SVM | 87.77 |
| Kuo et al. [14] | CIE + Co-occurrence matrix | SOM | 92.63 |
| Xiao et al. [21] | TILT + HOG | FCM | 94.57 |
| This work | ResNet-50 | ResNet-50 | 99.30 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Iqbal Hussain, M.A.; Khan, B.; Wang, Z.; Ding, S. Woven Fabric Pattern Recognition and Classification Based on Deep Convolutional Neural Networks. Electronics 2020, 9, 1048. https://doi.org/10.3390/electronics9061048
