Abstract

Epilepsy is a neurological disorder that affects 50 million people worldwide. It is characterised by seizures that can vary in presentation, from short absences to protracted convulsions. Wearable electronic devices that detect seizures have the potential to hail timely assistance for individuals, inform their treatment, and assist care and self-management. This systematic review encompasses the literature relevant to the evaluation of wearable electronics for epilepsy. Devices and performance metrics are identified, and the evaluations, both quantitative and qualitative, are presented. Twelve primary studies comprising quantitative evaluations from 510 patients and participants were collated according to preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines. Two studies (with 104 patients/participants) comprised both qualitative and quantitative evaluation components. Despite many works in the literature proposing and evaluating novel and incremental approaches to seizure detection, there is a lack of studies evaluating the devices available to consumers and researchers, and there is much scope for more complete evaluation data in quantitative studies. There is also scope for further qualitative evaluations amongst individuals, carers, and healthcare professionals regarding their use, experiences, and opinions of these devices.

1. Introduction

Epilepsy is a neurological disorder affecting 50 million people worldwide [1]. While seizures can be controlled with antiepileptic drugs, more than 30% of people with epilepsy have drug-resistant seizures [2]. The timely detection of seizures is important in hailing assistance that can reduce the potential for injuries and sudden unexpected death in epilepsy (SUDEP) events [3,4]. This paper reviews the literature relevant to qualitative and quantitative assessments of the wearable electronics available to individuals and researchers for the detection of epilepsy seizures.

The onset of epileptic seizures is associated with autonomic changes including flushing, sweating, and heart rate changes [5,6] that have the potential to be detected by wearable temperature, electrodermal activity (EDA), and optical pulse “photoplethysmography” (PPG) sensors, respectively. The seizures themselves can be convulsive or nonconvulsive. Convulsive seizures involve repeated involuntary contractions and relaxations of muscles that appear as repetitive, rhythmic, shaking motions. The pronounced motor activity of convulsive seizures makes them potentially recognisable with accelerometry. In contrast, nonconvulsive seizures can be difficult to detect; they can appear as simple absences or losses in muscle strength. Seizure types are described according to their presentation as tonic, clonic, tonic-clonic, myoclonic, atonic, and absence, as summarised below:

- Tonic seizures (TS) associated with contractions of the muscles;

- Clonic seizures (CS) associated with repeated contractions and relaxation of muscles;

- Tonic-clonic seizures (TCS) associated with stiffening followed by shaking;

- Myoclonic seizures (MS) associated with twitching regions of muscles;

- Atonic seizures associated with loss of muscle strength;

- Absence seizures associated with individuals appearing detached or inattentive.

The management and treatment of epilepsy relies on the assessment of seizure presentation and frequency, but patient self-reports and carer recall can be unreliable [7] and patient seizure diaries can underestimate seizure frequency [8,9]. In a review of electroencephalography (EEG) and other seizure reporting technologies for epilepsy treatment, Bidwell et al. [10] highlighted “a strong need for better distinguishing between patients exhibiting generalized and partial seizure types as well as achieving more accurate seizure counts” but concluded that high false positive seizure detection rates meant that most technologies failed to surpass patient self-reporting performance.

Whilst EEG is used in clinical laboratory settings for seizure assessment and diagnosis, new research toward wearable ambulatory EEG sensing [11] offers future opportunities for assessment and monitoring beyond the clinical environment. However, currently, despite “great interest in the use of wearable technology across epilepsy service users, carers, and healthcare professionals” [7], the monitoring of seizures outside the clinic, in real-world settings and during the activities of everyday living, is limited to the sensing afforded by a small set of available wearable epilepsy seizure-sensing devices. Additionally, some nonwearable devices are available, for example, sensors designed to attach to a bed or mattress to detect night-time seizures; however, the focus of this review is on wearable devices.

1.1. Wearable Electronics for Epilepsy Seizure Detection

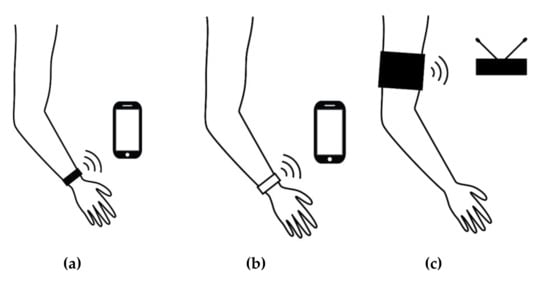

There has been strong interest and market growth in wrist-worn wearable health and well-being devices [12] incorporating digital thermometers for temperature, conductivity sensors for EDA, micro-electromechanical systems (MEMS) for accelerometry, and light-emitting diodes (LEDs) and photodiodes for PPG pulse wave detection [13], as well as new advances toward flexible skin-inspired sensors [14]. Despite reliability concerns related to ambulatory sensing [15], wearable devices are increasingly used in clinical and healthcare applications. Low- and mid-range wearable devices typically comprise optical PPG pulse, EDA, temperature, and three-axis accelerometer sensors [16,17,18,19]. As illustrated in Figure 1, wearable electronics for epilepsy seizure detection, based on wrist- and arm-worn sensor configurations, are now available to individuals and researchers for the purpose of detecting and reporting seizures and alerting carers for timely assistance. Additional sensors such as gyroscopes and GPS (global positioning system) receivers can detect rotational movements and location, respectively. Signals from these sensors can be used to detect “preictal” periods before a seizure from changes in electrodermal activity and heart rate, to detect shaking motor movements (or the lack of movement during absences) during the seizure itself, and to locate, report, and log seizure events. It is, however, difficult to reliably detect seizures in everyday life [20], and the challenge of disambiguating seizures from normal everyday (seizure-like) movements such as teeth brushing may result in false alarms that require repeated cancellations and which may disincentivise uptake among patients.

Figure 1.

Wearables and apps for epilepsy seizure detection: (a) dedicated wrist-worn sensing device and companion app (e.g., Embrace and Epi-Care free); (b) app using sensed data from a compatible consumer wrist-worn tracker (e.g., the SmartWatch Inspyre app with an Apple or Samsung device); (c) a non-wrist wearable with a base station (NightWatch).

Table 1 summarises the currently available wearable consumer seizure-detecting devices that have been evaluated in the literature. These include the Embrace seizure-detecting wrist-worn sensor, developed by Empatica [21]. Embrace is a maturing product that is available to consumers via device purchase and monthly subscription (subscriptions, at the time of writing, are £9.90–£44.90 per month). Empatica also market an “E4” (previously “E3”) research version of their Embrace device that provides researchers with access to the raw sensor data that can be used to test seizure-detecting algorithms. Also, as shown in Table 1, other devices reported in the literature include the Epi-Care free [22], NightWatch [23], and SmartWatch [24]. Epi-Care free is a wrist-worn (or ankle-worn) sensor incorporating an accelerometer, gyroscope, and GPS to detect seizure motor activity and send alerts to family members or telecare services (subscriptions, at the time of writing, are £995 and £1115 per year). The NightWatch sensor is an armband wearable that senses pulse and activity to detect and report nocturnal seizures. The Smart Monitor SmartWatch is a seizure detector that makes use of wearable heart rate and activity data (originally from prototype wearable devices; the app, now named “Inspyre”, can access data from compatible Apple and Samsung Galaxy and Gear watches) and summons help to the GPS location of the wearer (subscriptions, at the time of writing, are from £9.99 to £24.99 per month). Other wearable consumer products for epilepsy seizure detection include Brio, Epilert (no longer available), Pulse Companion, and Open Seizure Detector (App). However, these devices have not been assessed in the literature.

Table 1.

Wearable electronics for epilepsy seizure detection.

1.2. Detection Performance

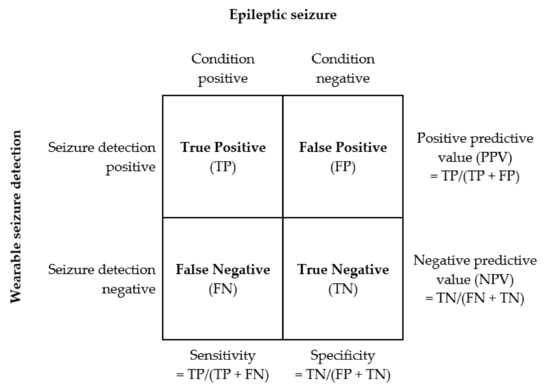

Figure 2 summarises seizure detections in terms of true/false and positive/negative outcomes and the related sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). The associated formulae, including the false alarm rate (FAR), are given in Equations (1)–(6).

Sensitivity = TP/(FN + TP)

Specificity = TN/(TN + FP)

Positive Predictive Value (PPV)/Precision = TP/(TP + FP)

Negative Predictive Value (NPV) = TN/(FN + TN)

Accuracy = (TP + TN)/(TP + TN + FP + FN)

False Alarm Rate (FAR) = FP/day

Figure 2.

Seizure detection performance metrics.

Ideally, assessments of wearable devices would present results for these metrics for significant numbers of subjects over sufficient duration for testing detection of a substantial number of seizures of different types. In addition, assessments would also ideally support repeatability by clearly specifying test conditions, device models, and, also, version information [25]. Given the importance of timely alerts for seizure detection and the need to reduce the anxiety and alarm fatigue associated with high false alarm rates (FARs), detection latency and FARs should also be reported.
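As an illustration only, the metrics in Equations (1)–(6) can be computed from confusion-matrix counts along the following lines. This is a minimal sketch, not a method used by any of the reviewed studies: the `DetectionCounts` container, the `summarise` function, and the example counts are hypothetical, and the sketch assumes that true negatives have been counted over some agreed unit (for example, fixed-length non-seizure epochs), which the studies do not define uniformly. Metrics whose denominator is zero, and the false alarm rate when the monitored duration is unknown, are returned as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DetectionCounts:
    """Confusion-matrix counts for one evaluation (hypothetical container)."""
    tp: int  # seizures correctly detected
    fp: int  # false alarms
    tn: int  # non-seizure periods correctly ignored (unit is an assumption)
    fn: int  # missed seizures


def _ratio(num: float, den: float) -> Optional[float]:
    """Return num/den, or None when the denominator is zero (metric undefined)."""
    return num / den if den else None


def summarise(c: DetectionCounts, monitored_days: Optional[float] = None) -> dict:
    """Compute the six metrics; the false alarm rate needs the monitored days."""
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn),
        "specificity": _ratio(c.tn, c.tn + c.fp),
        "ppv": _ratio(c.tp, c.tp + c.fp),
        "npv": _ratio(c.tn, c.tn + c.fn),
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "far_per_day": _ratio(c.fp, monitored_days) if monitored_days else None,
    }


# Illustrative counts only; these are not taken from any reviewed study.
example = summarise(DetectionCounts(tp=9, fp=2, tn=88, fn=1), monitored_days=10)
print(example["sensitivity"], example["far_per_day"])  # 0.9 0.2
```

Returning `None` for an undefined metric mirrors the reporting problem discussed in this review: a count of false alarms without a monitored duration does not yield a false alarm rate.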

2. Method

A systematic review of primary studies evaluating wearable seizure-detecting devices spanning almost fifteen years (from 1 January 2005 to 31 October 2019, when the review was initiated) was conducted with an evidence-based methodology [26,27] and in accordance with PRISMA guidelines [28]. A requirement of the review was that devices were identified and available to individuals or researchers (i.e., not unavailable, proof-of-concept, laboratory prototypes).

2.1. Search Strategy

Both technology and medical digital libraries were used to identify primary studies. These were Association for Computing Machinery (ACM), Institute of Electrical and Electronics Engineers (IEEE) Xplore Digital Library, Medline, ScienceDirect, and Wiley Online Library.

The keyword search string below was evolved to identify primary studies relevant to wearable epilepsy sensing devices:

(“wearable” OR “smart watch” OR “smart watch” OR “wrist-worn” OR “wrist worn” OR “wrist worn” OR “wristband” OR “armband”) AND (“epileptic” OR “epilepsy”).
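The Boolean structure of the search string (any device term AND any condition term) can be sketched as a simple text filter. This is a hedged illustration only: each digital library applies its own query syntax, stemming, and field restrictions, so the hypothetical `matches_query` function below is an assumption about how the logic could be reproduced on locally exported titles or abstracts, not a description of how the libraries evaluated the query.

```python
import re
from typing import Iterable

# Term groups taken from the search string above (duplicates omitted).
DEVICE_TERMS = ["wearable", "smart watch", "wrist-worn", "wrist worn",
                "wristband", "armband"]
CONDITION_TERMS = ["epileptic", "epilepsy"]


def _contains_any(text: str, terms: Iterable[str]) -> bool:
    """True if any term starts at a word boundary in text (case-insensitive)."""
    return any(
        re.search(r"\b" + re.escape(term), text, re.IGNORECASE)
        for term in terms
    )


def matches_query(text: str) -> bool:
    """Apply (device term) AND (condition term) to a title or abstract."""
    return _contains_any(text, DEVICE_TERMS) and _contains_any(text, CONDITION_TERMS)
```

Matching only on a leading word boundary is a design choice that lets “wearable” also match “wearables”; a stricter or looser rule may be needed to mirror a particular library's behaviour.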

2.2. Eligibility Criteria and Selection

Studies were eligible for selection if they met all three of the following inclusion criteria:

- Primary studies in peer-reviewed literature;

- Studies where the main theme is consumer wearable electronics for epilepsy seizure detection;

- Studies reporting quantitative and/or qualitative assessment data.

The relevant papers were assessed for quality according to screening criteria including rigour, credibility, and relevance [29].

3. Results

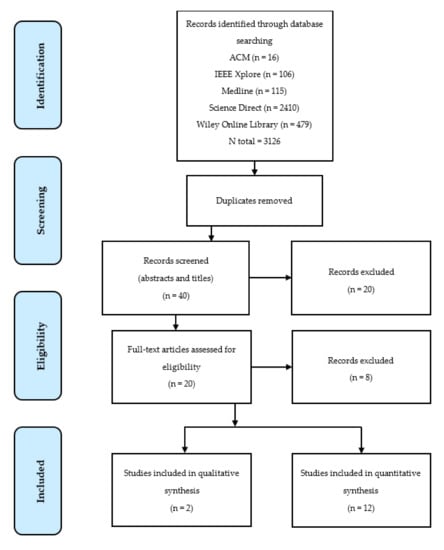

Following the PRISMA systematic review guidance outlined in Figure 3, a total of 12 papers satisfied the eligibility criteria. A second researcher checked the screening and eligibility of papers and a third researcher moderated the results.

Figure 3.

Flow diagram of the systematic review according to preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines.

As summarised in Table 2, all 12 studies reported quantitative assessments (8 conducted in clinical settings and 4 in free-living conditions). Two of the 12 studies also reported qualitative assessments. While the search process did initially identify qualitative papers on wearable devices for epilepsy, some of these studies [30,31] were assessments of perceptions about the potential of such devices rather than assessments of actual use. No studies reported solely qualitative assessment data for the real use of available wearable devices for seizure detection.

Table 2.

Overview of studies and participant numbers.

3.1. Quantitative Studies

3.1.1. Clinical Setting

Eight of the 12 quantitative studies were conducted in clinical settings. All eight were evaluation studies [32,33,34,35,36,37,38,39] with data gathered from epileptic inpatients and outpatients; none were two-arm or controlled studies with healthy participants. Most studies compared recorded device data with other clinical reference recordings, including EEG, video EEG (vEEG), electromyography (EMG), and electrocardiogram (ECG). The studies are summarised in Table 3 in terms of the devices used, the numbers of participants, the numbers of seizures detected, and, where specified, the study duration. As shown in the summary in Table 3, four of the studies used Empatica E3, E4, and Embrace devices, three used Smart Monitor’s evolving SmartWatch devices, and one used the Epi-Care free. The numbers of patient participants varied from 3 to 135. A study [33] with three participants selected 1 h recorded segments rather than continuous recordings. Otherwise, observation durations varied within studies [33,38,39] as well as between studies from 17 h to 487 days, and two studies [35,37] did not report durations. The total number of seizures detected in studies varied from 7 to 55 and, across all studies, a total of 226 seizures were reported as detected. Only one study [33] did not report the number of detected seizures.

Table 3.

Clinical setting studies with number of seizures and duration.

Table 4 summarises the performance assessments of the studies. The reporting of performance metrics was variable and sparse across most of the studies. For example, false alarm rates for only three studies could be identified. The studies using the Empatica E3 and E4 implemented machine learning detection methods (kNN: k-nearest neighbour; RF: random forest; NB: naïve Bayes; SVM: support vector machine). Regalia et al., 2019 [35] made brief reference to previously unpublished assessments with 135 patients and 22 seizures with 100% sensitivity and an FAR of 0.42 per day for a “fixed and frozen” algorithm. No methodology, sensitivity, or other assessment information was provided, and the paper largely focused on compiling and comparing other Empatica wristband performance indicators. Heldberg et al., 2015 [32] reported the sensitivity and specificity for two different classifiers. Vandecasteele et al. [34] compared the performance of SVM classifiers on hospital ECG with wearable ECG and E4 PPG recordings. PPG motion artefacts (which would have been largely induced by the seizures themselves) made more than half of the seizures undetectable via this approach and resulted in a poor sensitivity of 32%. The studies encompassed different seizure types but with TCS and “motor” seizures often included. Dramatically different performance results were observed. For example, sensitivities of 100% and 16% were reported by the authors of [35] and [37], respectively. Notably, the latter paper [37] comprised a large number of (undetected) nonmotor seizures. The levels of patient activity and any movement constraints were not generally explicitly reported and, in any case, are difficult to convey. However, in the clinical setting, worn sensors usually benefit from reduced interference from activities of daily living. For example, the good wearable performance for the small study in [33] was achieved from recordings taken simultaneously with EEGs, i.e., when one would expect patients to be inactive.

Table 4.

Performance assessments in clinical settings.

Smart Monitor’s SmartWatch was used in three of the eight clinical assessments. Patterson et al. [37] reported the lowest sensitivity (16% overall: 31% for general tonic-clonic (GTC) and 0% for MS) in a study of 41 patients aged 5–41 years. Citing Lockman et al. [36], the authors did not record false positives “because these are well known”. Lockman et al. [36] did report 204 false alarm occurrences in their SmartWatch study with 40 patients between “March 2009 and June 2010” but did not specify an FAR or confirm the duration of actual usage within the study period. Velez et al. [38] referred to 81 false alarms but also did not specify an FAR (and one cannot be estimated because of the varying durations of 1–9 days). Beniczky et al. [39] reported a sensitivity of 90% and an FAR of 0.2 per day in a study with 73 participants with GTC seizures who were monitored for 17–171 hours. An average detection latency of 55 s was reported.

3.1.2. Free-Living Environment

Four of the 12 quantitative studies reported free-living environment evaluations. These studies are summarised in Table 5 and Table 6 and comprise 169 participants and 850 seizures.

Table 5.

Free-living studies with number of seizures and duration.

Table 6.

Performance metrics in a free-living setting.

Onorati et al. [40] reported a range of classifier performances for the E3 and E4 with sensitivities from 83.64% to 94.55% and FARs of between 0.2 and 0.29 per day. Van de Vel et al. [3] and Meritam et al. [8] both reported Epi-Care free evaluations, with 1 and 71 participants, respectively. For the 71 patients [8], a sensitivity of 90% and an FAR of 0.1 per day were reported. Arends et al. [41] reported a sensitivity of 86% for the NightWatch arm-worn nocturnal seizure monitor, an FAR of 0.25 per night, and a PPV of 49%.
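To make the reported PPV more tangible, it can be re-expressed as the number of false alarms per correctly detected seizure. The short derivation below is a worked illustration that uses only the definition of PPV and the 49% figure reported for NightWatch [41]; the resulting ratio is an arithmetic consequence of that figure, not a value stated by the study authors.

```latex
\mathrm{PPV} = \frac{TP}{TP + FP}
\quad\Longrightarrow\quad
\frac{FP}{TP} = \frac{1 - \mathrm{PPV}}{\mathrm{PPV}}
             = \frac{1 - 0.49}{0.49} \approx 1.04
```

In other words, a PPV of 49% corresponds to roughly one false alarm for every correctly detected seizure.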

3.1.3. Data Failures—Missing and Unusable Data

In addition to missed seizures caused by algorithms failing to detect seizures in acquired data, seizures can also be missed when data are not recorded, not received, or not usable (for example, if they are so corrupted as to be unusable). There were limited discussions of data failures or the “missingness” of data in the studies. Examples are summarised in Table 7.

Table 7.

Missing data.
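One way in which the “missingness” of data could be quantified and reported is as the fraction of the planned monitoring period for which no usable recording exists. The sketch below is an assumption about how such a figure might be derived, not a method taken from the reviewed studies; the function names and the representation of recordings as (start, end) intervals in hours are hypothetical.

```python
from typing import List, Tuple

# (start_h, end_h): hours from the start of the planned monitoring period.
Interval = Tuple[float, float]


def recorded_hours(intervals: List[Interval]) -> float:
    """Total time covered by usable recordings, counting overlaps only once."""
    total, current_end = 0.0, float("-inf")
    for start, end in sorted(intervals):
        if end <= current_end:
            continue  # fully inside an interval that has already been counted
        total += end - max(start, current_end)
        current_end = end
    return total


def missingness(intervals: List[Interval], planned_hours: float) -> float:
    """Fraction of the planned monitoring period with no usable data."""
    if planned_hours <= 0:
        raise ValueError("planned_hours must be positive")
    covered = min(recorded_hours(intervals), planned_hours)
    return 1.0 - covered / planned_hours
```

For example, usable recordings covering hours 0–8, 6–12, and 20–24 of a planned 24 h period cover 16 h in total, so one third of the period would be reported as missing.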

3.2. Qualitative Studies

Only two studies provided qualitative assessment data for device evaluations. Both of these studies also reported quantitative evaluations that were included in the earlier sections. Summaries of patient and stakeholder views and observations are listed in Table 8.

Table 8.

Qualitative studies.

Arends et al., 2018 [41] evaluated the NightWatch night-time upper arm seizure monitor using a multifactor questionnaire with 33 carer stakeholder respondents comprising 30 nurses, 2 parent carers, and 1 “not specified”. Meritam et al., 2018 [8] performed a qualitative evaluation of the Epi-Care free monitor with 71 patient participants aged 7–72 years using a post-study systems usability questionnaire (PSSUQ) comprising 13 questions and requiring a 1–7 Likert-scale response from participants on aspects of monitor usability.

Both studies identified concerns in terms of (a) physical intrusion, e.g., discomfort or irritation, and (b) performance concerns, e.g., signal reception or detection failures. Participants in both studies agreed with the benefits of the monitors in terms of the potential for improved responses to seizure events and the potential for improved care outcomes.

4. Discussion

The aim of this review was to collate and analyse qualitative and quantitative assessments of wearable electronics for epilepsy seizure monitoring that are available to individuals and researchers. Although there are over 3000 works in the literature discussing, proposing, and evaluating novel and incremental approaches to epilepsy seizure detection, there are very few that report evaluation data and, as observed previously [42], none that report comparative results of large-scale studies. In terms of the Oxford Centre for Evidence-Based Medicine 2011 Levels of Evidence 1–5 scale [43], none of the reviewed studies would qualify as the highest level of evidence (Level 1), and most would rank as Level 3 or below.

The diversity of the reviewed studies in terms of motor and nonmotor seizure types and levels of patient activity/freedom of movement is matched by the diversity of results including, for example, very high and very low sensitivities.

Across the reviewed works there was a lack of full detail, including details required to establish important metrics such as false alarm rates (FARs) and details important to reproducibility such as device, firmware, and app version numbers [44]. Ideally, the frequency, duration, impact, and cause of all data recording failures (resulting in the “missingness” of data) would also be provided in all performance assessment studies. There was also a lack of detail regarding the performance of the devices themselves in terms of seizure detection and estimation of key parameters such as heart rate. In a recent study [45], researchers compared consumer-grade and research-grade heart rate (HR) and heart rate variability (HRV) estimating wearables (including the Empatica E4 and two other HR sensing devices) and observed that “while the research-grade wearables are the only wearables that provide users with raw data that can be used to visualize PPG waveforms and calculate HRV, the HR measurements tended to be less accurate than consumer-grade wearables. This is especially important for researchers and clinicians to be aware of when choosing devices for clinical research and clinical decision support.” [45] This very difficult problem of achieving accurate and reliable continuous sensor data in nonsedentary scenarios is highly significant and worthy of more attention if researchers are to develop robust methods and make valid conclusions from acquired data.

Wearable electronic devices for epilepsy seizure detection have the potential to improve patient outcomes and to afford carers more freedom. However, the technology is still evolving. There are opportunities for improvements in system reliability and algorithm detection performance and, ideally, monitors would be sensitive across the range of seizure types whilst maintaining acceptably low false alarm rates. Future seizure sensing systems and algorithms would benefit from detailed qualitative and quantitative assessments of their performance. However, we should appreciate that assessing technology in critical health scenarios is not easy. Clinical assessments are onerous and resource-expensive undertakings, and their timescales are at odds with the iterative updating of digital technologies. Free-living assessments in particular require investments in time and resources, and they present additional difficulties in terms of obtaining ground-truth data.

5. Conclusions

There is much scope for further research and improved performance reporting of wearable devices for epilepsy seizure detection and monitoring. There is a lack of qualitative studies eliciting feedback and stakeholder recommendations from real-world experiences of device usage. Ideally, future studies will report on the data quality and reliability of the sensing devices and provide much more detailed information regarding assessments, including device model and version numbers as well as detailed contextual information about the wearers and their activity.

Author Contributions

Conceptualisation, T.K., T.R.; methodology, T.K., T.R., S.I.W.; formal analysis, T.R.; investigation, T.R., T.C. and S.I.W.; writing—original draft preparation, T.R., S.I.W.; writing—review and editing, T.R., S.I.W., T.C.; visualisation, T.C. and S.I.W.; project administration, T.K. and S.I.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

Tendai Rukasha thanks Evaresto Rukasha and Betty Rukasha for PhD funding and thanks Pearl Brereton for her comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BTCS | Bilateral tonic-clonic seizures |
| CPS | Complex partial seizures |
| CS | Clonic seizures |
| ECG | Electrocardiogram |
| EDA | Electrodermal activity |
| EMG | Electromyography |
| FN | False negative |
| FNV/R | False negative value/rate |
| FP | False positive |
| FPV/R | False positive value/rate |
| FS | Focal seizures |
| FTC | Focal tonic–clonic |
| GTC | General tonic-clonic |
| HRV | Heart rate variability |
| kNN | k-nearest neighbour |
| MS | Myoclonic seizures |
| MTS | Myoclonic-tonic seizures |
| NB | Naïve Bayes classifier |
| NPV/R | Negative predictive value/rate |
| PMS | Predominantly motor seizures |
| PNMS | Predominantly non-motor seizures |
| PPG | Photoplethysmography |
| PPV/R | Positive predictive value/rate |
| PRV | Pulse rate variability |
| PS | Partial onset seizures |
| RF | Random forest |
| SUDEP | Sudden unexpected death in epilepsy |
| SVM | Support vector machine |
| TCS | Tonic-clonic seizures |
| TLS | Temporal lobe seizures |
| TN | True negative |
| TP | True positive |
| TPV/R | True positive value/rate |
| TS | Tonic seizures |
| vEEG | Video electroencephalogram |

References

1. WHO. Epilepsy: A Public Health Imperative; World Health Organization: Geneva, Switzerland, 2019.

2. Sheng, J.; Liu, S.; Qin, H.; Li, B.; Zhang, X. Drug-resistant epilepsy and surgery. Curr. Neuropharmacol. 2018, 16, 17–28.

3. Van de Vel, A.; Verhaert, K.; Ceulemans, B. Critical evaluation of four different seizure detection systems tested on one patient with focal and generalized tonic and clonic seizures. Epilepsy Behav. 2014, 37, 91–94.

4. Van Andel, J.; Thijs, R.; de Weerd, A.; Arends, J.; Leijten, F. Non-EEG based ambulatory seizure detection designed for home use: What is available and how will it influence epilepsy care? Epilepsy Behav. 2016, 57, 82–89.

5. Wannamaker, B.B. Autonomic Nervous System and Epilepsy. Epilepsia 1985, 26, S31–S39.

6. Baumgartner, C.; Lurger, S.; Leutmezer, F. Autonomic symptoms during epileptic seizures. Epileptic Disord. 2001, 3, 103–116.

7. Bruno, E.; Simblett, S.; Lang, A.; Biondi, A.; Odoi, C.; Schulze-Bonhage, A.; Wykes, T.; Richardson, M. Wearable technology in epilepsy: The views of patients, caregivers, and healthcare professionals. Epilepsy Behav. 2018, 85, 141–149.

8. Meritam, P.; Ryvlin, P.; Beniczky, S. User-based evaluation of applicability and usability of a wearable accelerometer device for detecting bilateral tonic-clonic seizures: A field study. Epilepsia 2018, 59, 48–52.

9. Fisher, R.S.; Blum, D.E.; DiVentura, B.; Vannest, J.; Hixson, J.D.; Moss, R.; Herman, S.T.; Fureman, B.E.; French, J.A. Seizure diaries for clinical research and practice: Limitations and future prospects. Epilepsy Behav. 2012, 24, 304–310.

10. Bidwell, J.; Khuwatsamrit, T.; Askew, B.; Ehrenberg, J.; Helmers, S. Seizure reporting technologies for epilepsy treatment: A review of clinical information needs and supporting technologies. Seizure 2015, 32, 109–117.

11. Casson, A.J.; Yates, D.C.; Smith, S.J.; Duncan, J.S.; Rodriguez-Villegas, E. Wearable electroencephalography. IEEE Eng. Med. Biol. Mag. 2010, 29, 44–56.

12. Mukhopadhyay, S.C. Wearable sensors for human activity monitoring: A review. IEEE Sens. J. 2014, 15, 1321–1330.

13. Collins, T.; Pires, I.; Oniani, S.; Woolley, S. How Reliable is Your Wearable Heart Rate Monitor? The Conversation, Health+ Medicine. Available online: https://theconversation.com/how-reliable-is-your-wearable-heart-rate-monitor-98095 (accessed on 7 June 2020).

14. Xu, K.; Lu, Y.; Takei, K. Multifunctional Skin-Inspired Flexible Sensor Systems for Wearable Electronics. Adv. Mater. Technol. 2019, 4, 1800628.

15. Oniani, S.; Woolley, S.I.; Pires, I.M.; Garcia, N.M.; Collins, T.; Ledger, S.; Pandyan, A. Reliability assessment of new and updated consumer-grade activity and heart rate monitors. In Proceedings of the IARIA Conference on Sensor Device Technologies and Applications SENSORDEVICES, Venice, Italy, 16–20 September 2018.

16. Kamišalić, A.; Fister, I.; Turkanović, M.; Karakatič, S. Sensors and functionalities of non-invasive wrist-wearable devices: A review. Sensors 2018, 18, 1714.

17. Tamura, T.; Maeda, Y.; Sekine, M.; Yoshida, M. Wearable photoplethysmographic sensors—past and present. Electronics 2014, 3, 282–302.

18. Sarcevic, P.; Kincses, Z.; Pletl, S. Online human movement classification using wrist-worn wireless sensors. J. Ambient Intell. Humaniz. Comput. 2019, 10, 89–106.

19. Sharma, N.; Gedeon, T. Objective measures, sensors and computational techniques for stress recognition and classification: A survey. Comput. Methods Programs Biomed. 2012, 108, 1287–1301.

20. Johansson, D.; Malmgren, K.; Alt Murphy, M. Wearable sensors for clinical applications in epilepsy, Parkinson’s disease, and stroke: A mixed-methods systematic review. J. Neurol. 2018, 265, 1740–1752.

21. Empatica Inc. Boston, USA and Empatica Srl, Milano, Italy (E3, E4 and Embrace). Available online: https://www.empatica.com (accessed on 12 May 2020).

22. Danish Care Technology (Epi-Care Free), Sorø, Denmark, UK. Available online: https://danishcare.co.uk/epicare-free/ (accessed on 12 May 2020).

23. LivAssured, B.V. (NightWatch), Leiden, The Netherlands. Available online: https://www.nightwatch.nl/ (accessed on 12 May 2020).

24. Smart Monitor (SmartWatch Inspyre), San Jose, USA. Available online: https://smart-monitor.com/about-smartwatch-inspyre-by-smart-monitor/ (accessed on 12 May 2020).

25. Collins, T.; Woolley, S.I.; Oniani, S.; Pires, I.M.; Garcia, N.M.; Ledger, S.J.; Pandyan, A. Version Reporting and Assessment Approaches for New and Updated Activity and Heart Rate Monitors. Sensors 2019, 19, 1705.

26. Kitchenham, B.A.; Dyba, T.; Jorgensen, M. Evidence-based software engineering. In Proceedings of the 26th International Conference on Software Engineering, Edinburgh, UK, 28 May 2004.

27. Kitchenham, B.A.; Budgen, D.; Brereton, P. Evidence-based Software Engineering and Systematic Reviews; Taylor and Francis Group: Boca Raton, FL, USA, 2015.

28. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

29. Dybå, T.; Dingsøyr, T. Empirical studies of agile software development: A systematic review. Inf. Softw. Technol. 2008, 50, 833–859.

30. Kramer, U.; Kipervasser, S.; Shlitner, A.; Kuzniecky, R. A Novel Portable Seizure Detection Alarm System: Preliminary Results. J. Clin. Neurophysiol. 2011, 28, 36–38.

31. Ozanne, A.; Johansson, D.; Hällgren Graneheim, U.; Malmgren, K.; Bergquist, F.; Alt Murphy, M. Wearables in epilepsy and Parkinson’s disease-A focus group study. Acta Neurol. Scand. 2017, 137, 188–194.

32. Heldberg, B.; Kautz, T.; Leutheuser, H.; Hopfengartner, R.; Kasper, B.; Eskofier, B. Using wearable sensors for semiology-independent seizure detection—Towards ambulatory monitoring of epilepsy. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5593–5596.

33. Al-Bakri, A.; Villamar, M.; Haddix, C.; Bensalem-Owen, M.; Sunderam, S. Noninvasive seizure prediction using autonomic measurements in patients with refractory epilepsy. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 2422–2425.

34. Vandecasteele, K.; De Cooman, T.; Gu, Y.; Cleeren, E.; Claes, K.; Paesschen, W.; Huffel, S.; Hunyadi, B. Automated Epileptic Seizure Detection Based on Wearable ECG and PPG in a Hospital Environment. Sensors 2017, 17, 2338.

35. Regalia, G.; Onorati, F.; Lai, M.; Caborni, C.; Picard, R. Multimodal wrist-worn devices for seizure detection and advancing research: Focus on the Empatica wristbands. Epilepsy Res. 2019, 153, 79–82.

36. Lockman, J.; Fisher, R.; Olson, D. Detection of seizure-like movements using a wrist accelerometer. Epilepsy Behav. 2011, 20, 638–641.

37. Patterson, A.; Mudigoudar, B.; Fulton, S.; McGregor, A.; Poppel, K.; Wheless, M.; Brooks, L.; Wheless, J. SmartWatch by SmartMonitor: Assessment of Seizure Detection Efficacy for Various Seizure Types in Children, a Large Prospective Single-Center Study. Pediatric Neurol. 2015, 53, 309–311.

38. Velez, M.; Fisher, R.; Bartlett, V.; Le, S. Tracking generalized tonic-clonic seizures with a wrist accelerometer linked to an online database. Seizure 2016, 39, 13–18.

39. Beniczky, S.; Polster, T.; Kjaer, T.; Hjalgrim, H. Detection of generalized tonic-clonic seizures by a wireless wrist accelerometer: A prospective, multicenter study. Epilepsia 2013, 54, 58–61.

40. Onorati, F.; Regalia, G.; Caborni, C.; Migliorini, M.; Bender, D.; Poh, M.; Frazier, C.; Kovitch Thropp, E.; Mynatt, E.; Bidwell, J.; et al. Multicenter clinical assessment of improved wearable multimodal convulsive seizure detectors. Epilepsia 2017, 58, 1870–1879.

41. Arends, J.; Thijs, R.; Gutter, T.; Ungureanu, C.; Cluitmans, P.; Van Dijk, J.; van Andel, J.; Tan, F.; de Weerd, A.; Vledder, B.; et al. Multimodal nocturnal seizure detection in a residential care setting. J. Neurol. 2018, 91, 2010–2019.

42. Jory, C.; Shankar, R.; Coker, D.; McLean, B.; Hanna, J.; Newman, C. Safe and sound? A systematic literature review of seizure detection methods for personal use. Seizure 2016, 36, 4–15.

43. OCEBM Levels of Evidence Working Group. The Oxford Levels of Evidence 2. Oxford Centre for Evidence-Based Medicine. Available online: https://www.cebm.net/index.aspx?o=5653 (accessed on 7 June 2020).

44. Woolley, S.; Collins, T.; Mitchell, J.; Fredericks, D. Investigation of wearable health tracker version updates. BMJ Health Care Inform. 2019, 26, e100083.

45. Bent, B.; Goldstein, B.A.; Kibbe, W.A.; Dunn, J.P. Investigating sources of inaccuracy in wearable optical heart rate sensors. NPJ Digit. Med. 2020, 3, 1–9.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).