Utilisation of Embodied Agents in the Design of Smart Human–Computer Interfaces—A Case Study in Cyberspace Event Visualisation Control

Abstract

1. Introduction

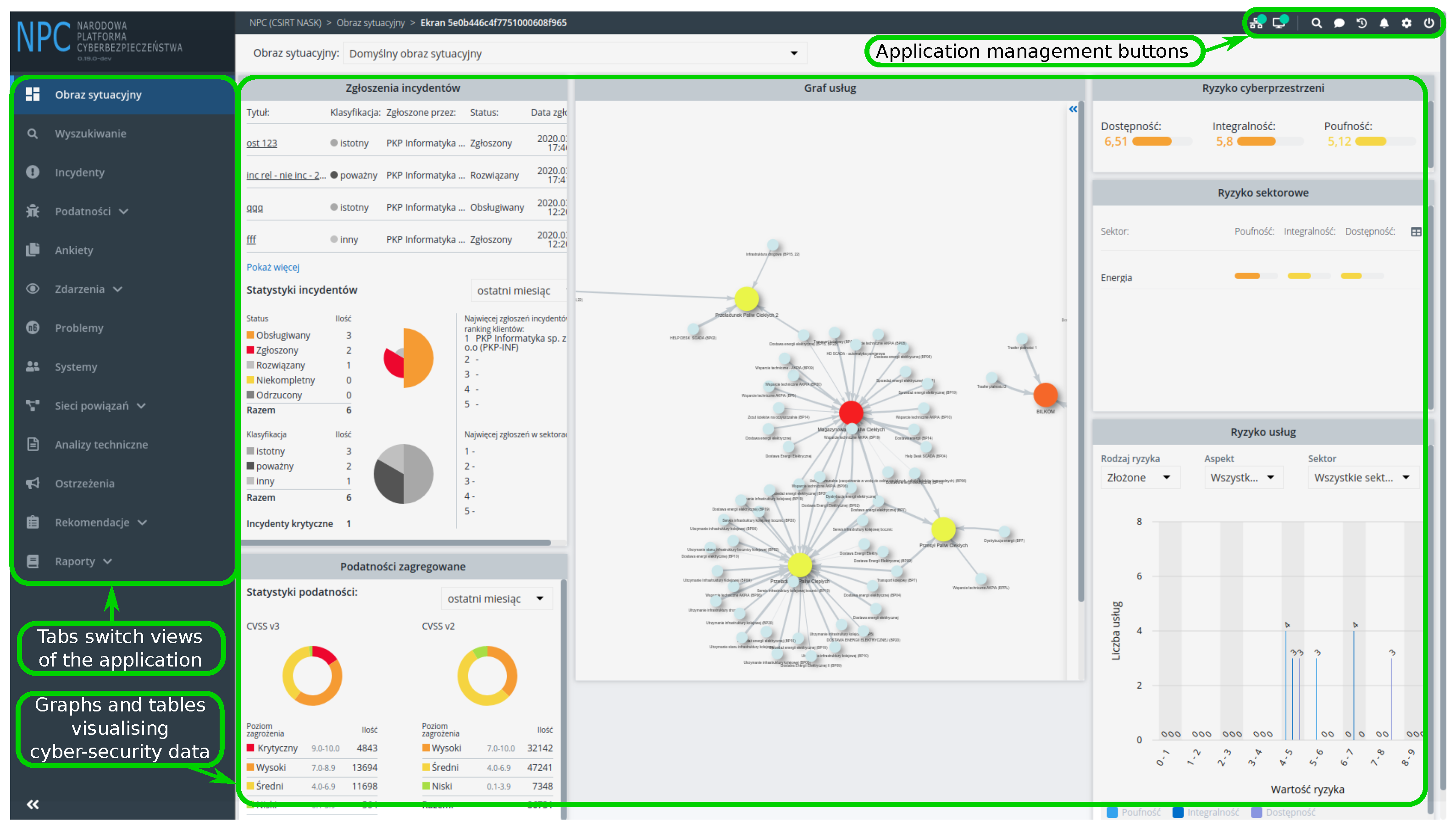

1.1. Cybersecurity Data Visualisation

1.2. Human–Computer Interface to Cybersecurity Data Visualisation

1.3. Agents

1.4. Goals and Structure of the Paper

2. Specification Method

2.1. Requirements

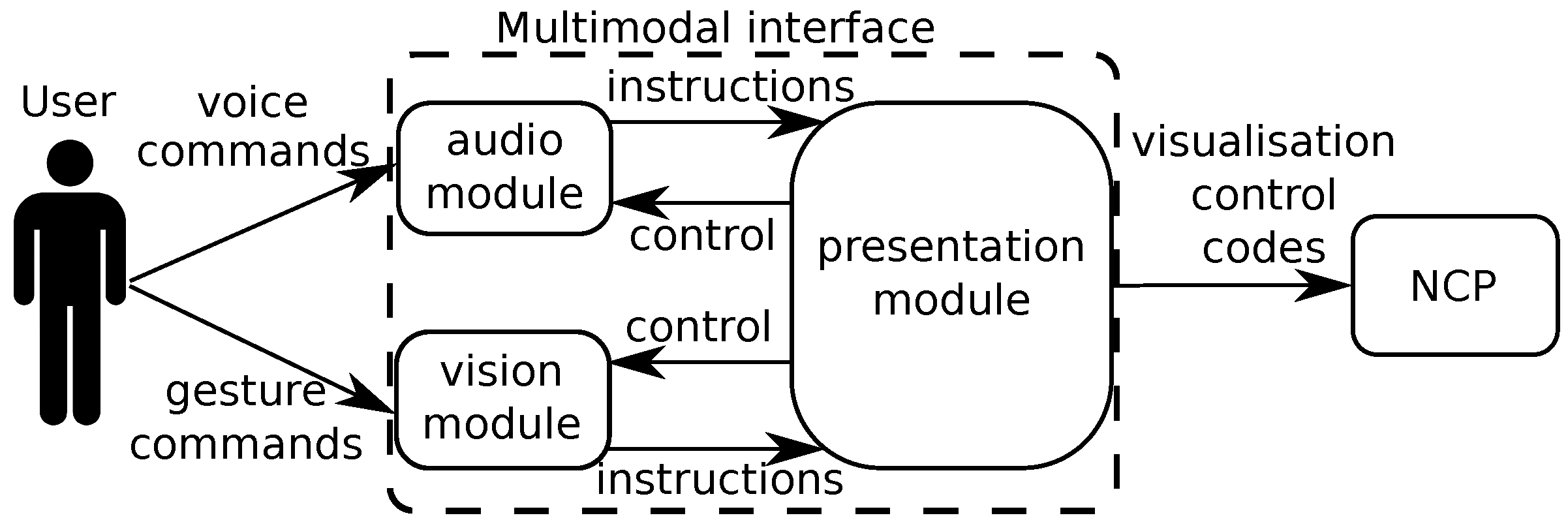

2.2. General Structure of the Interface

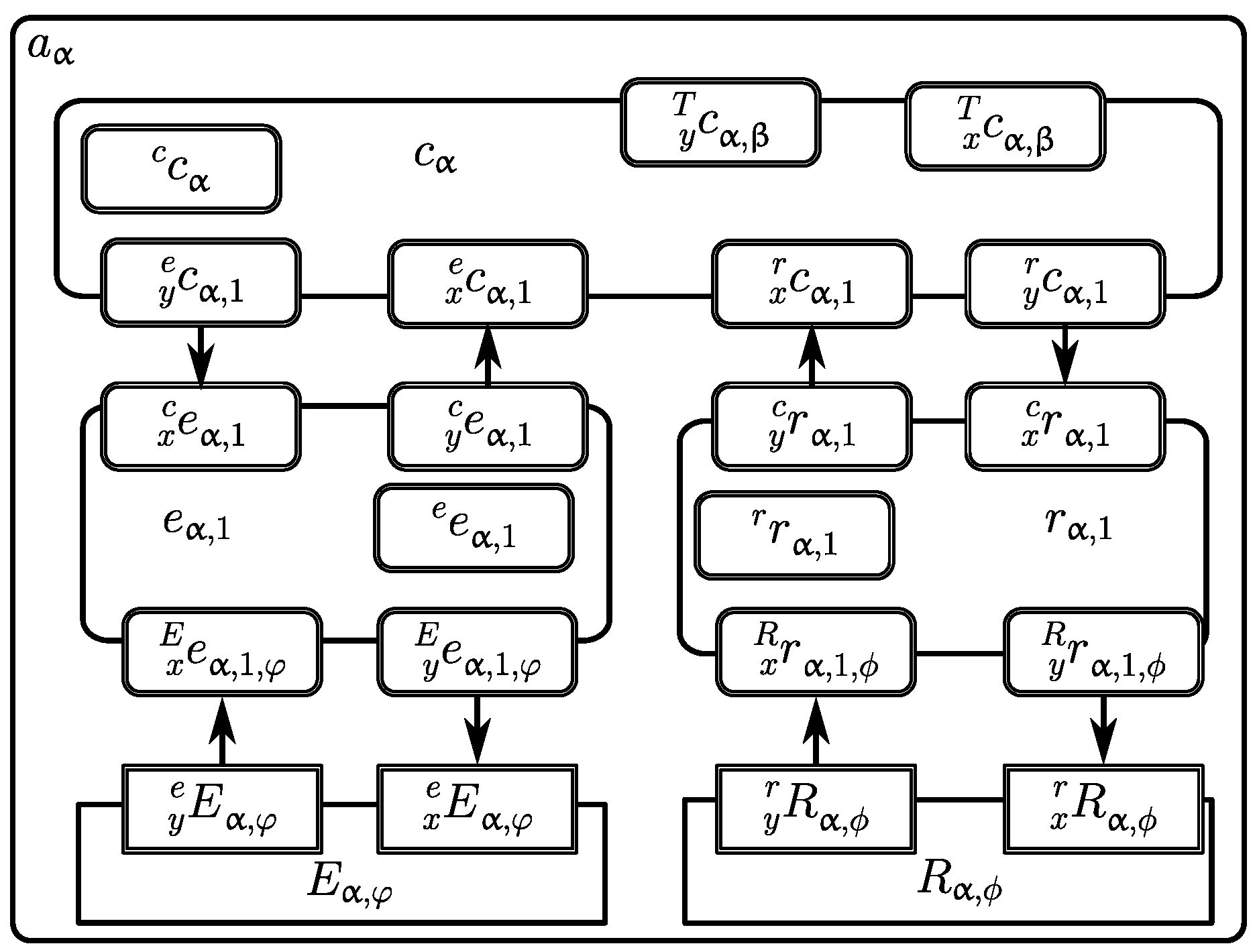

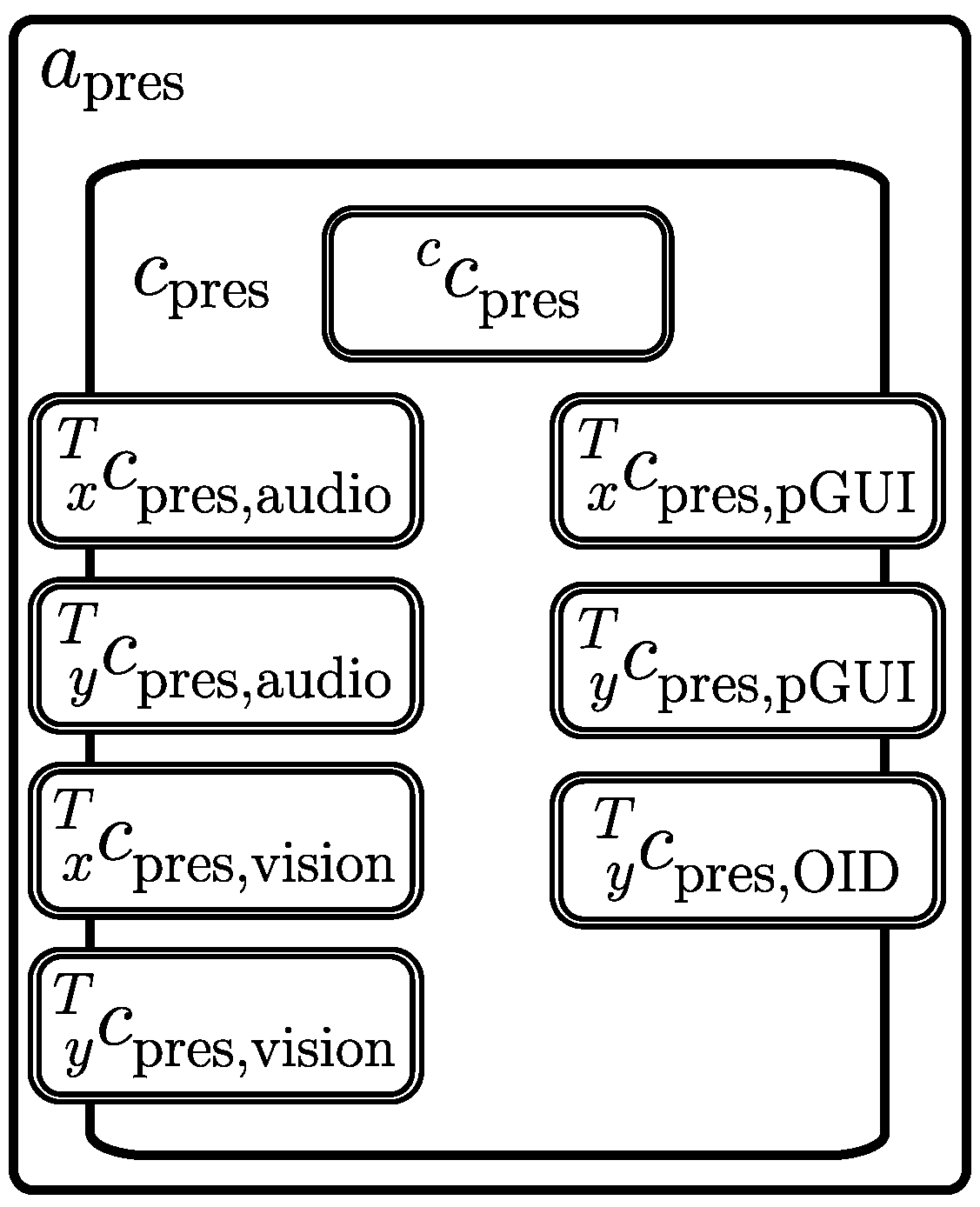

2.3. Building Blocks: Embodied Agents

- a single control subsystem

- zero or more real effectors

- zero or more virtual effectors

- zero or more real receptors

- zero or more virtual receptors

- x stands for input buffer,

- y for output buffer,

- no left subscript denotes internal memory.

- is the input buffer (thus x) from the control subsystem of the virtual effector named ls of the agent ;

- is the output buffer (thus y) from the control subsystem of the agent to the agent (more concretely, its control subsystem );

- is the internal memory of the virtual receptor named ls of the agent .
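The agent composition and the buffer naming listed above can be sketched as a small data structure. This is only an illustration, assuming Python dataclasses; the class, field and function names (`Subsystem`, `EmbodiedAgent`, `x`, `y`, `memory`, `send`) and the example agent are hypothetical and are not taken from the authors' implementation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Subsystem:
    """One subsystem of an agent with its three kinds of storage."""
    name: str
    x: Dict[str, Any] = field(default_factory=dict)       # input buffers, keyed by source
    y: Dict[str, Any] = field(default_factory=dict)       # output buffers, keyed by destination
    memory: Dict[str, Any] = field(default_factory=dict)  # internal memory


@dataclass
class EmbodiedAgent:
    """Exactly one control subsystem; zero or more of every other subsystem."""
    name: str
    control: Subsystem
    real_effectors: Dict[str, Subsystem] = field(default_factory=dict)
    virtual_effectors: Dict[str, Subsystem] = field(default_factory=dict)
    real_receptors: Dict[str, Subsystem] = field(default_factory=dict)
    virtual_receptors: Dict[str, Subsystem] = field(default_factory=dict)


def send(src: Subsystem, dst: Subsystem, value: Any) -> None:
    """Write a value to src's output buffer and to the matching input buffer of dst."""
    src.y[dst.name] = value
    dst.x[src.name] = value


# Hypothetical agent with one virtual receptor named "ls".
agent = EmbodiedAgent(name="audio", control=Subsystem("c"))
agent.virtual_receptors["ls"] = Subsystem("r_ls")
agent.virtual_receptors["ls"].memory["last_frame"] = [0.1, 0.2]
send(agent.virtual_receptors["ls"], agent.control, "command: lock")
```

Keeping input buffers, output buffers and internal memory as separate fields mirrors the distinction made by the x, y and "no left subscript" markers.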

2.4. Design Methodology

- determine the real effectors and receptors necessary to perform the task being the imperative of the system to act,

- decompose the system into agents,

- assign to the agents the real effectors and receptors (taking into account the transmission delays and the necessary computational power),

- assign specific tasks to each of the agents,

- define virtual receptors and effectors for each agent, hence determine the concepts that the control subsystem will use to express the task of the agent,

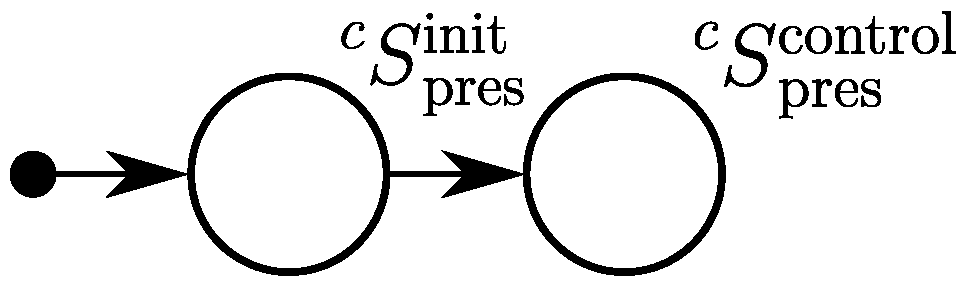

- specify the FSMs switching the behaviours for each subsystem within each agent,

- assign an adequate behaviour to each FSM state,

- define the parameters of the behaviours, i.e., transition functions, terminal and error conditions.
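The last three steps above (an FSM per subsystem, one behaviour per state, and behaviours parametrised by a transition function, a terminal condition and an error condition) can be sketched as follows. This is a minimal illustration under stated assumptions: the names `Behaviour` and `run_fsm`, the states `collect`, `process` and `halt`, and the counters kept in memory are all hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple

Memory = Dict[str, int]


class Behaviour:
    """Repeats a transition function until a terminal or an error condition holds."""

    def __init__(self,
                 transition: Callable[[Memory], None],
                 terminal: Callable[[Memory], bool],
                 error: Callable[[Memory], bool]) -> None:
        self.transition = transition
        self.terminal = terminal
        self.error = error

    def run(self, memory: Memory) -> str:
        while True:
            if self.error(memory):
                return "error"
            if self.terminal(memory):
                return "done"
            self.transition(memory)


def run_fsm(behaviours: Dict[str, Behaviour],
            switch: Dict[Tuple[str, str], str],
            start: str,
            final: Set[str],
            memory: Memory) -> List[str]:
    """Execute the behaviour of each state and switch on its outcome."""
    state = start
    visited = [state]
    while state not in final:
        outcome = behaviours[state].run(memory)
        state = switch[(state, outcome)]
        visited.append(state)
    return visited


# Hypothetical subsystem: collect three samples, then process each of them.
memory = {"samples": 0, "processed": 0}
collect = Behaviour(
    transition=lambda m: m.update(samples=m["samples"] + 1),
    terminal=lambda m: m["samples"] >= 3,
    error=lambda m: m["samples"] < 0,
)
process = Behaviour(
    transition=lambda m: m.update(processed=m["processed"] + 1),
    terminal=lambda m: m["processed"] >= m["samples"],
    error=lambda m: False,
)
switch = {
    ("collect", "done"): "process",
    ("collect", "error"): "halt",
    ("process", "done"): "halt",
    ("process", "error"): "halt",
}
visited = run_fsm({"collect": collect, "process": process},
                  switch, "collect", {"halt"}, memory)
```

Separating the behaviour (what is repeated within a state) from the switching table (which state follows which outcome) keeps each FSM small enough to specify per subsystem.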

3. Specification of Modules and Structure of the Interface

3.1. Window Agents

3.2. Agent Governing the Standard Operator Interface Devices

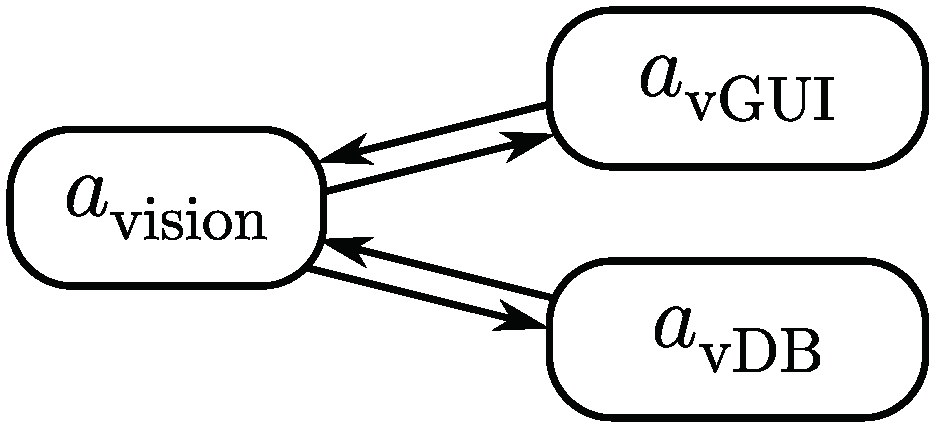

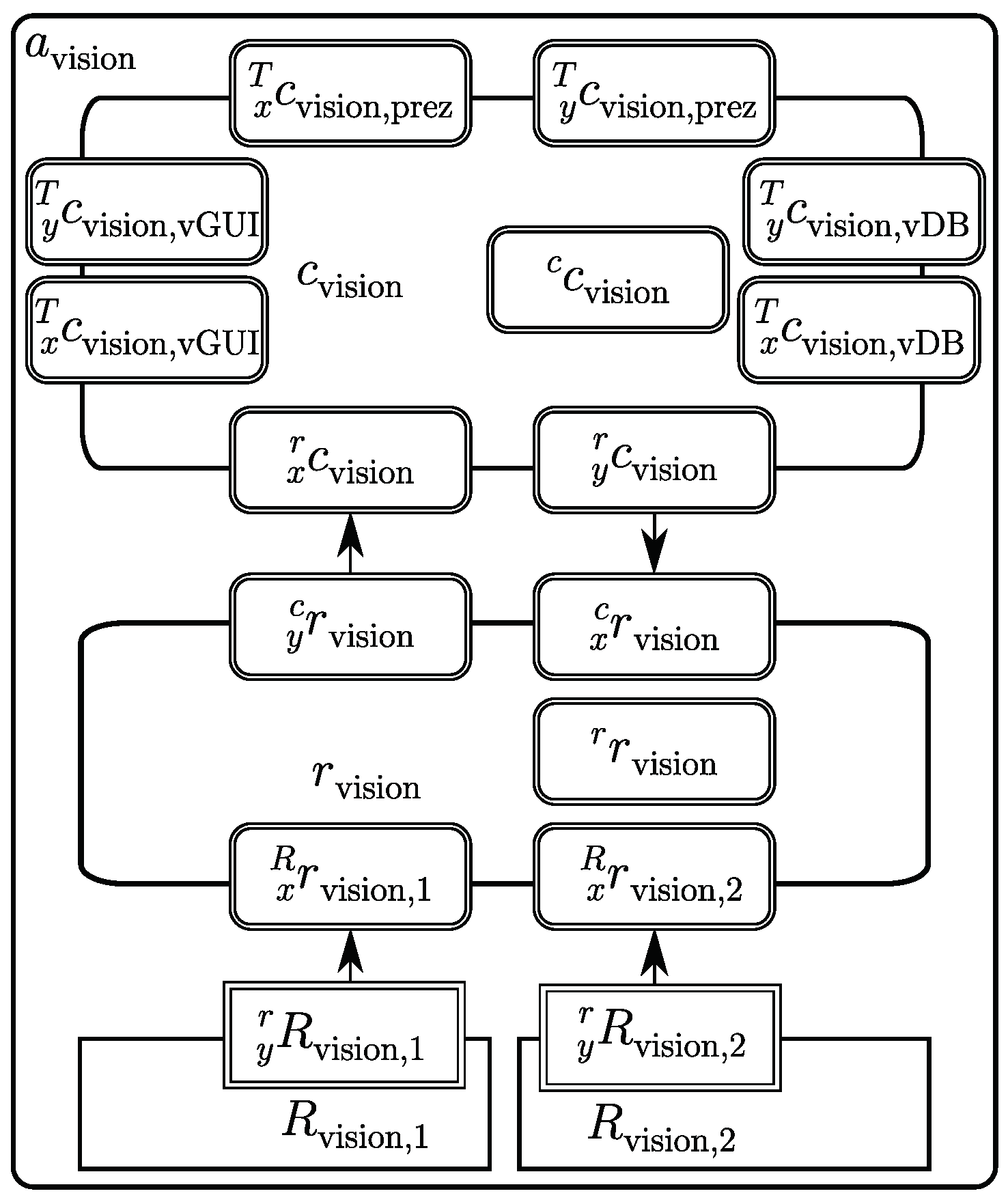

3.3. Vision Module

3.3.1. Vision Agent

3.3.2. Database Agent

3.3.3. Window Agent

3.4. Audio Module

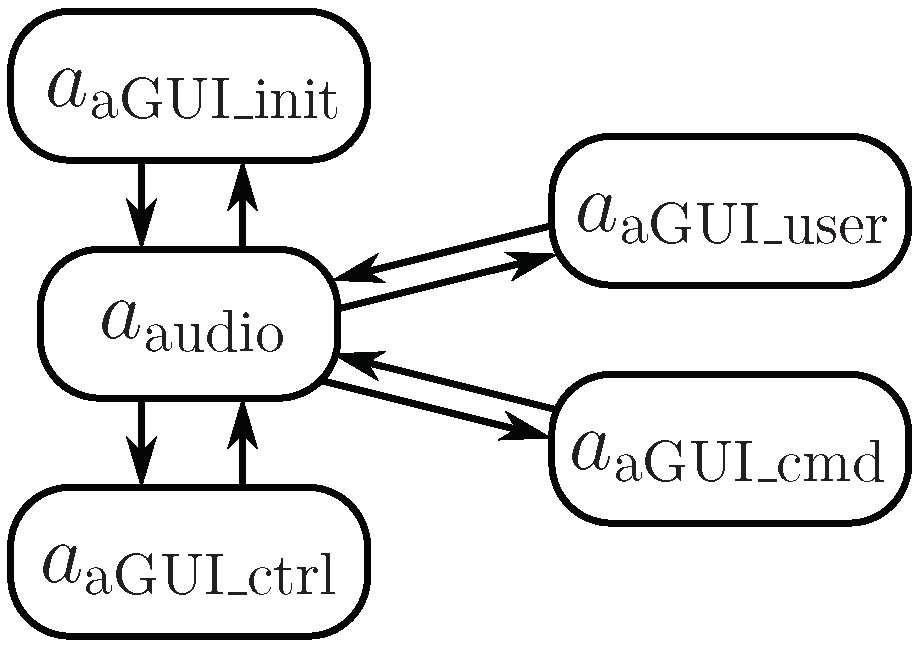

3.4.1. Structure

3.4.2. Window Agents

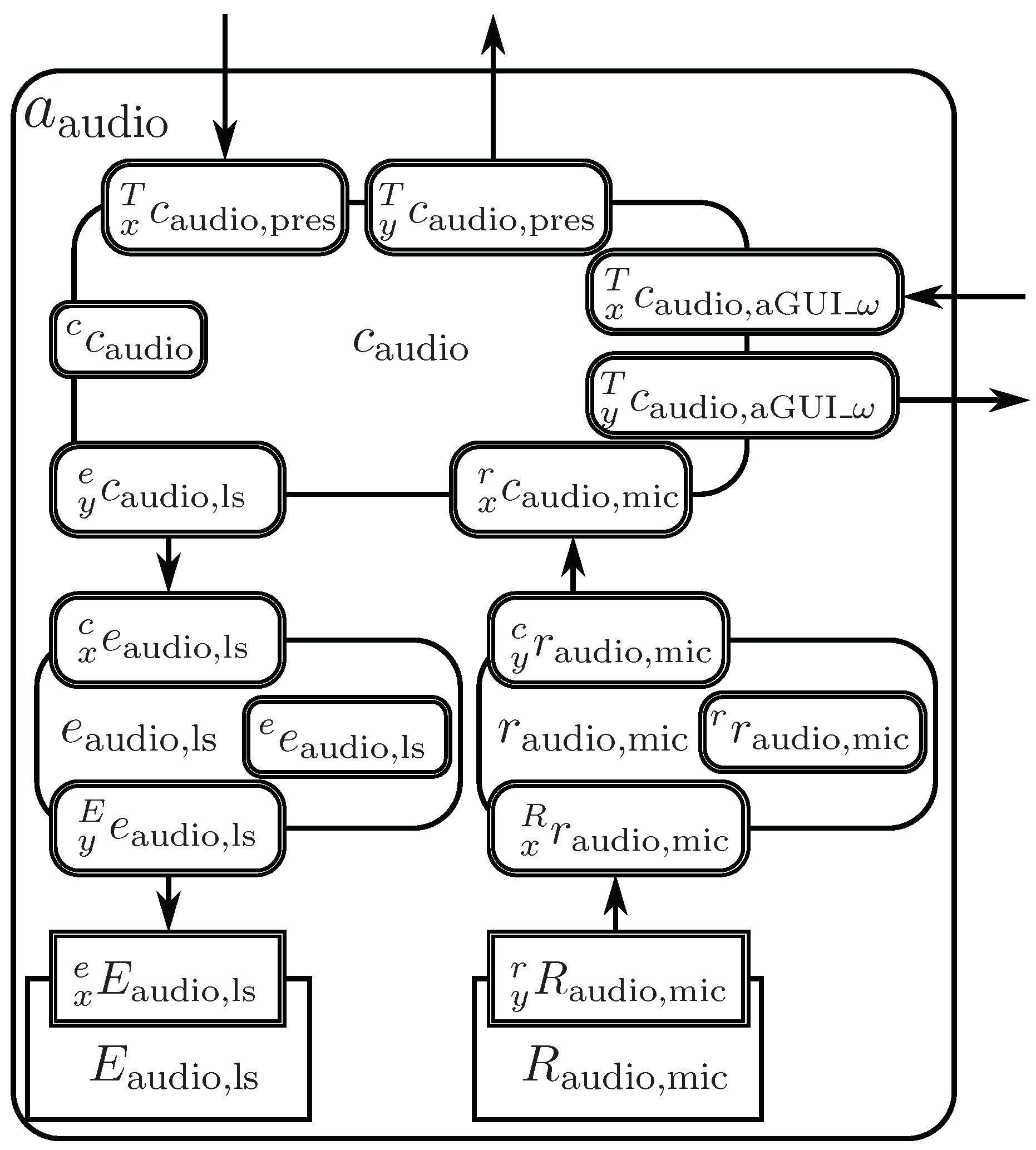

3.4.3. Audio Agent

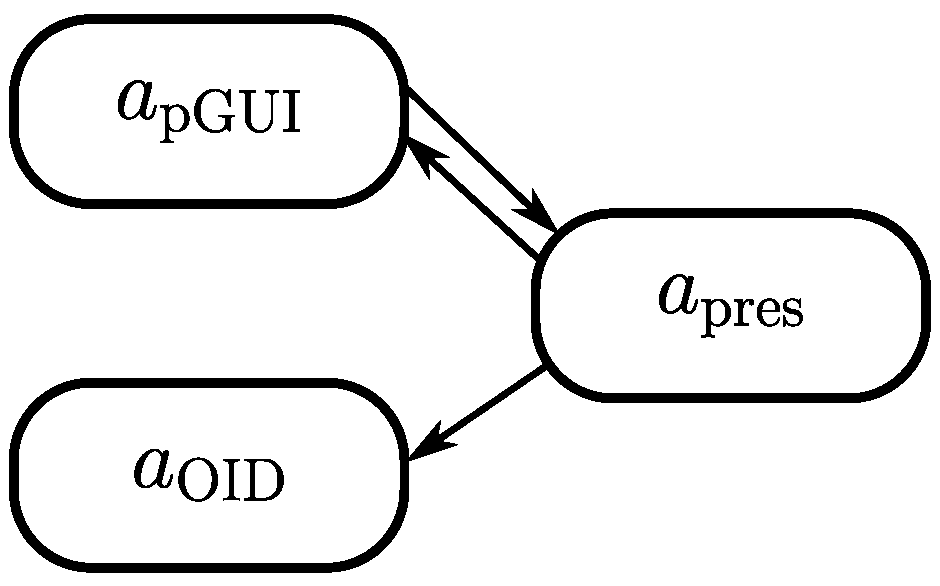

3.5. Presentation Module

3.5.1. Presentation Agent

3.5.2. Window Agent

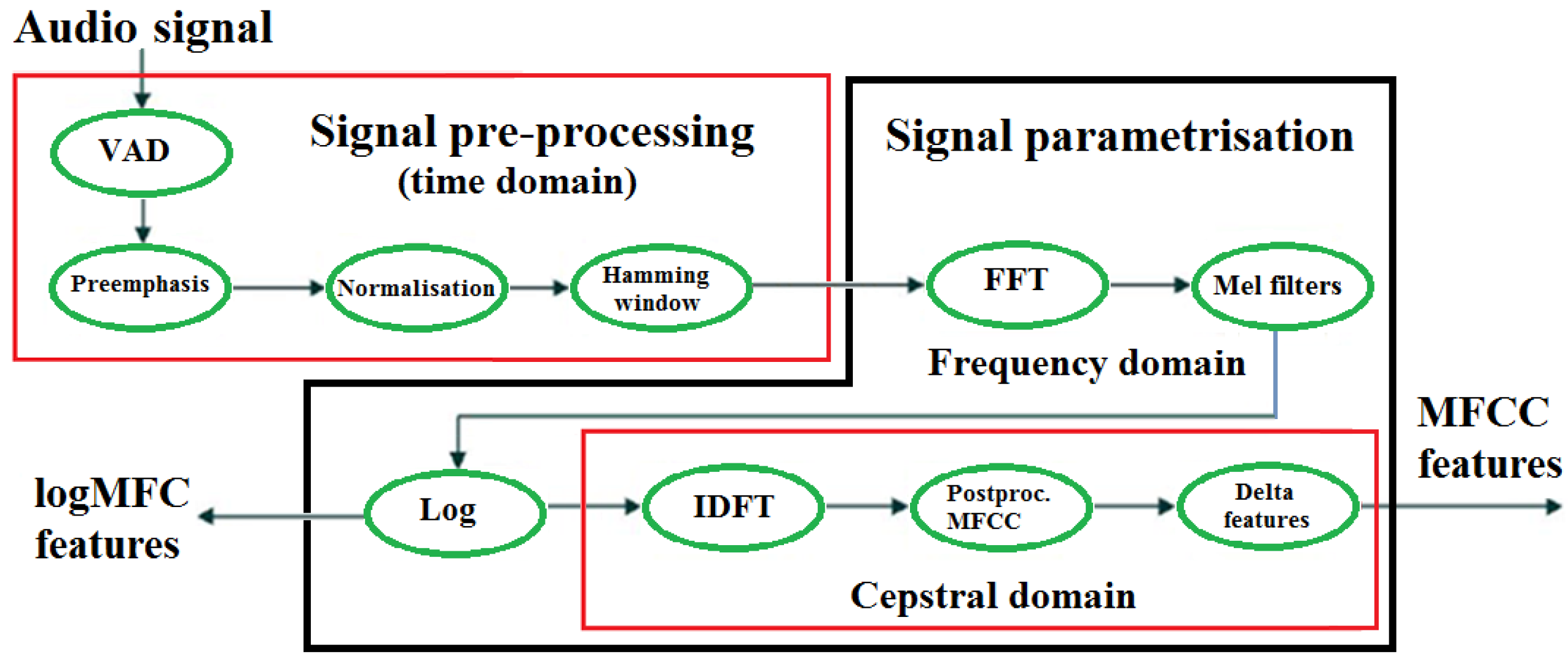

4. Implementation of Audio Processing

4.1. Real Audio Receptor

4.2. Virtual Audio Receptor

4.3. Selected Audio Agent Behaviours

4.3.1. Audio Signal Parametrisation

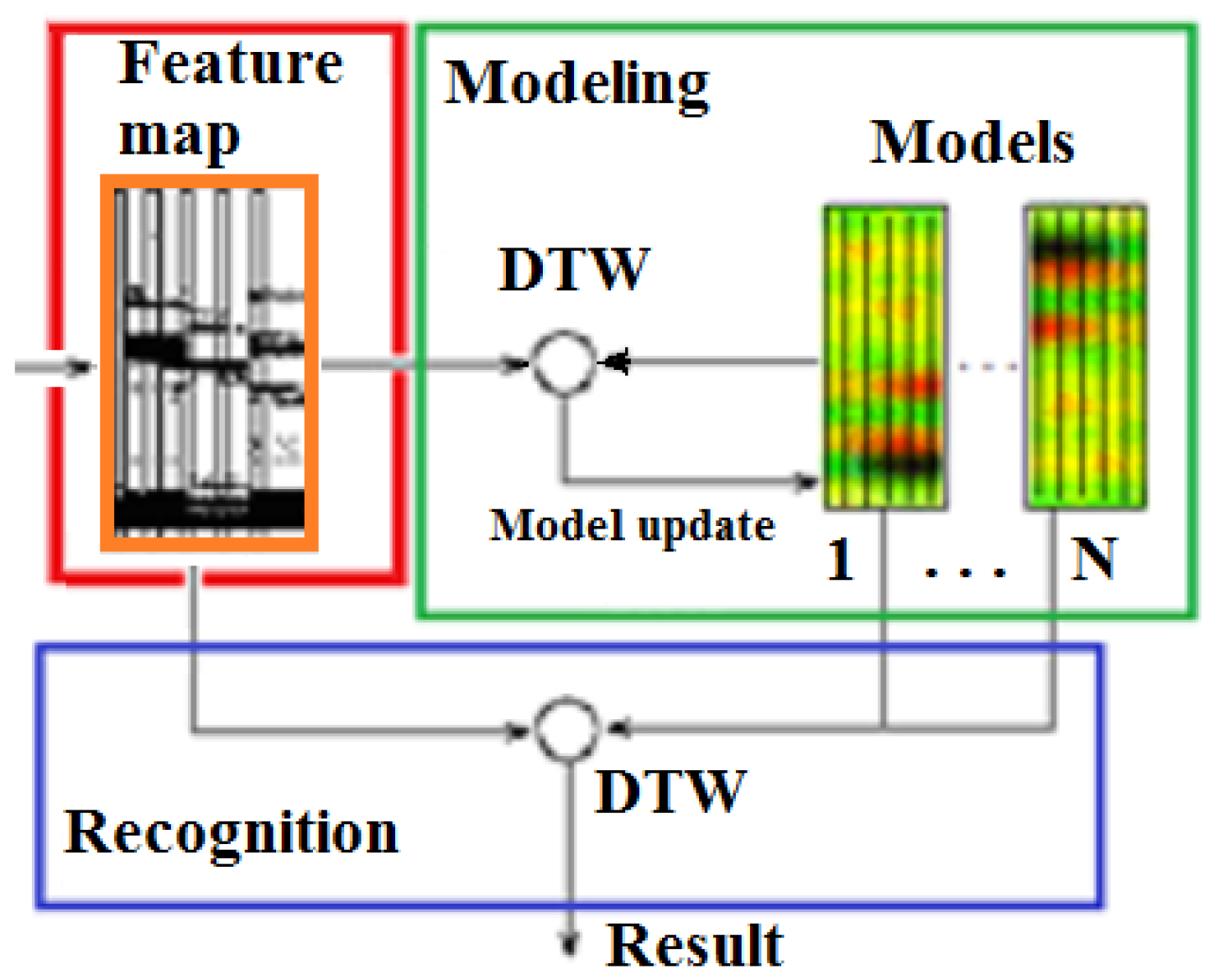

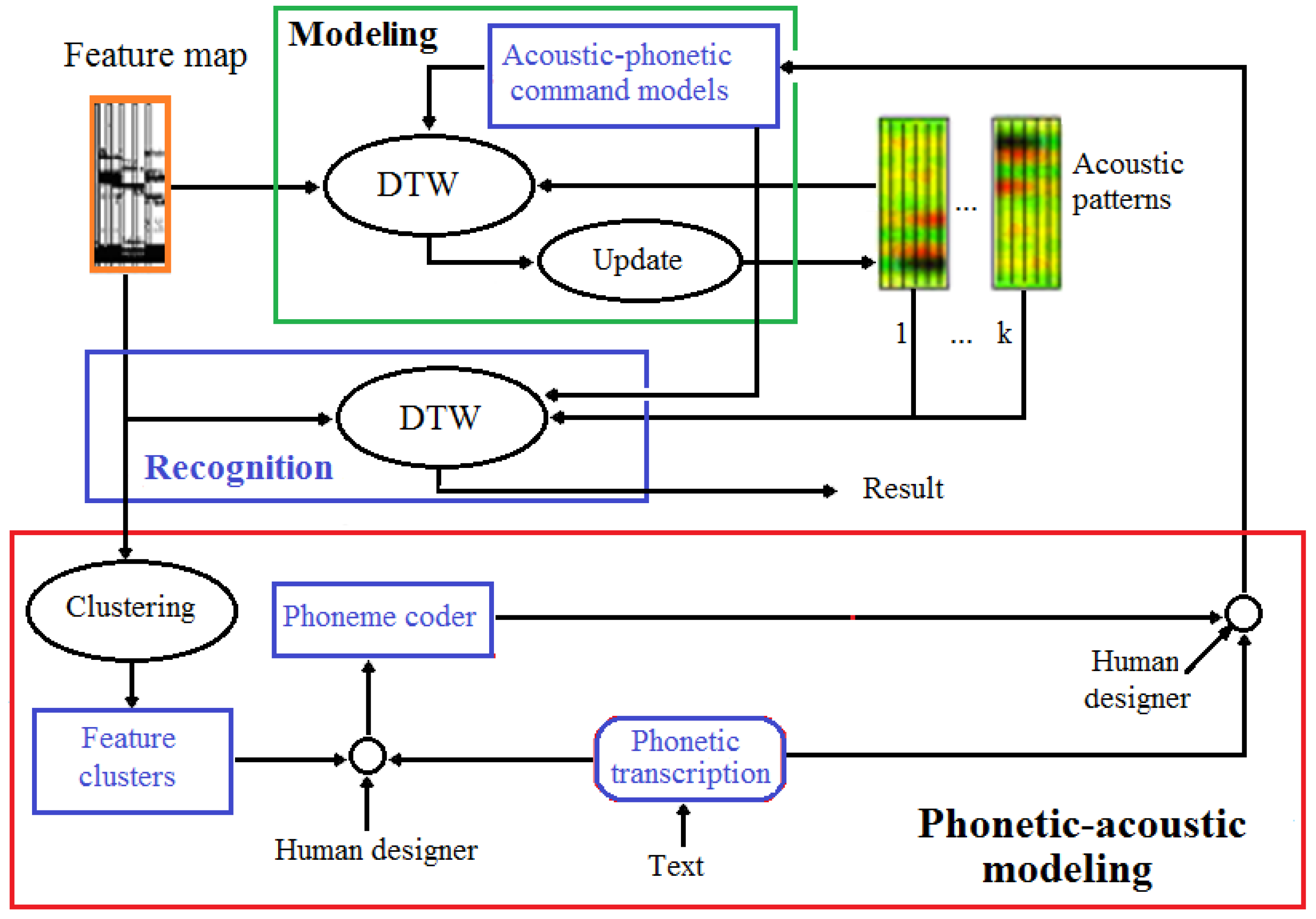

4.3.2. Command Modelling and Recognition

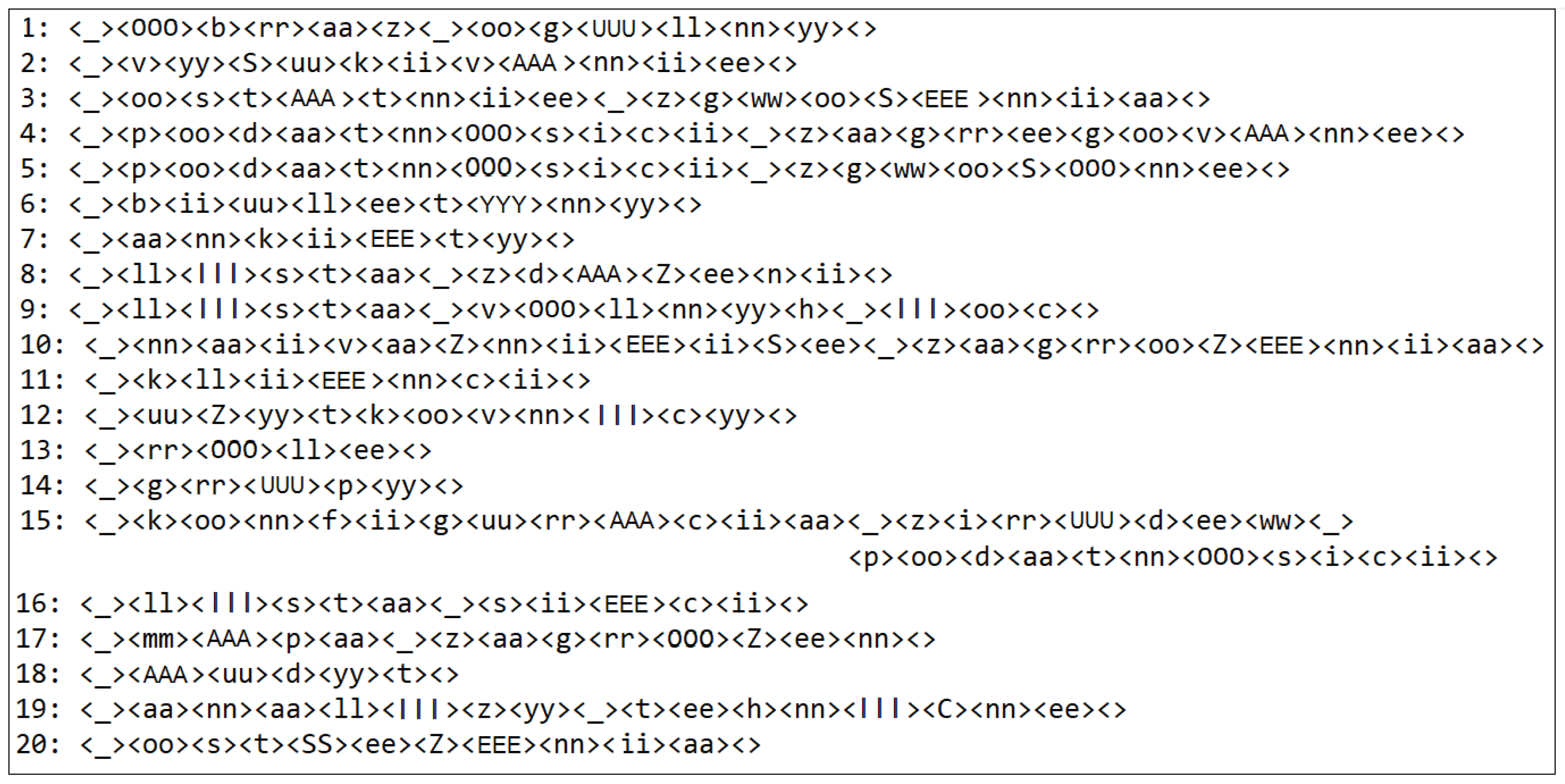

Phonetic-Acoustic Transcription

The Phonetic-Acoustic Coder

4.3.3. Speaker Modelling and Recognition

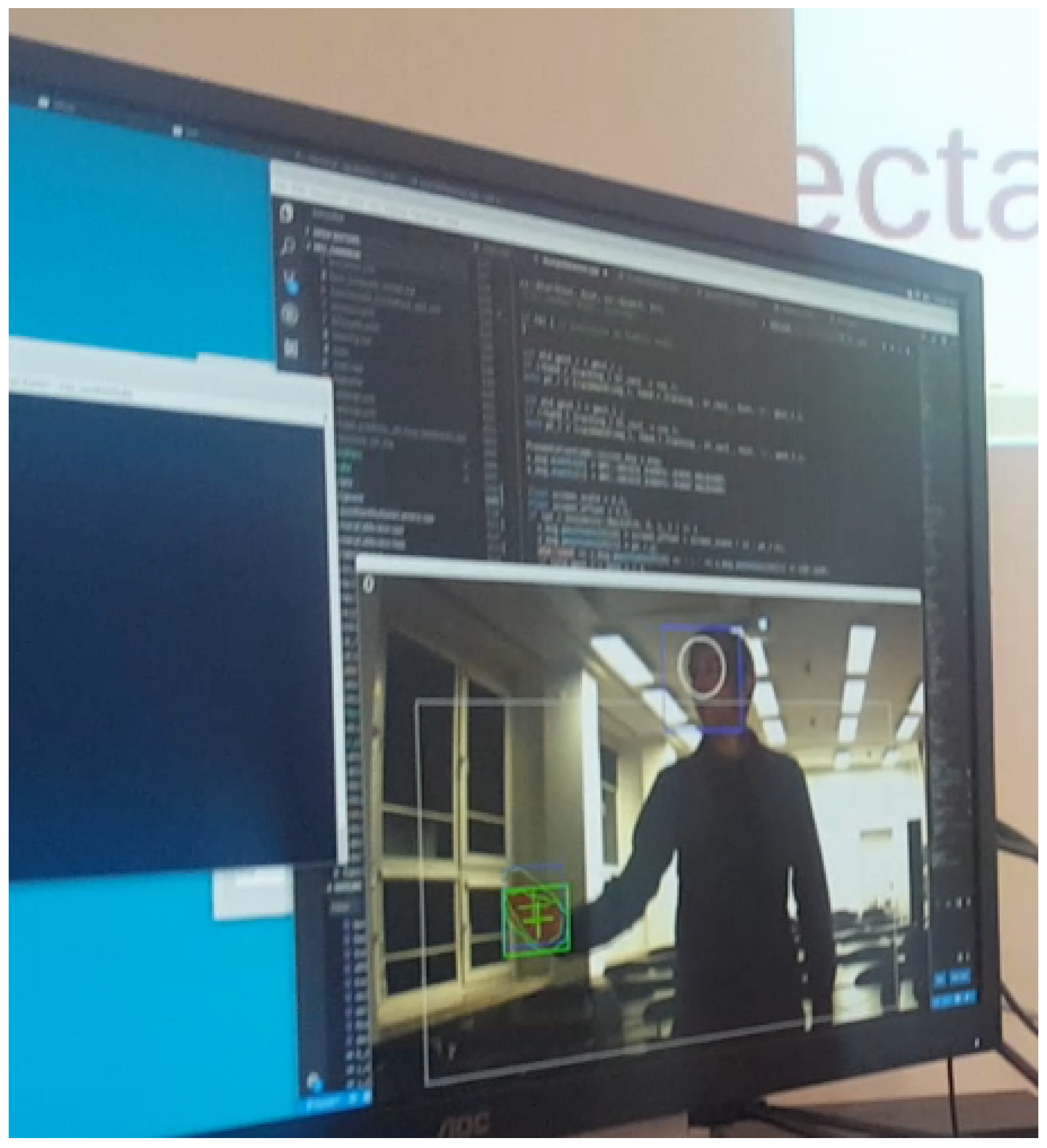

5. Implementation of Vision Processing

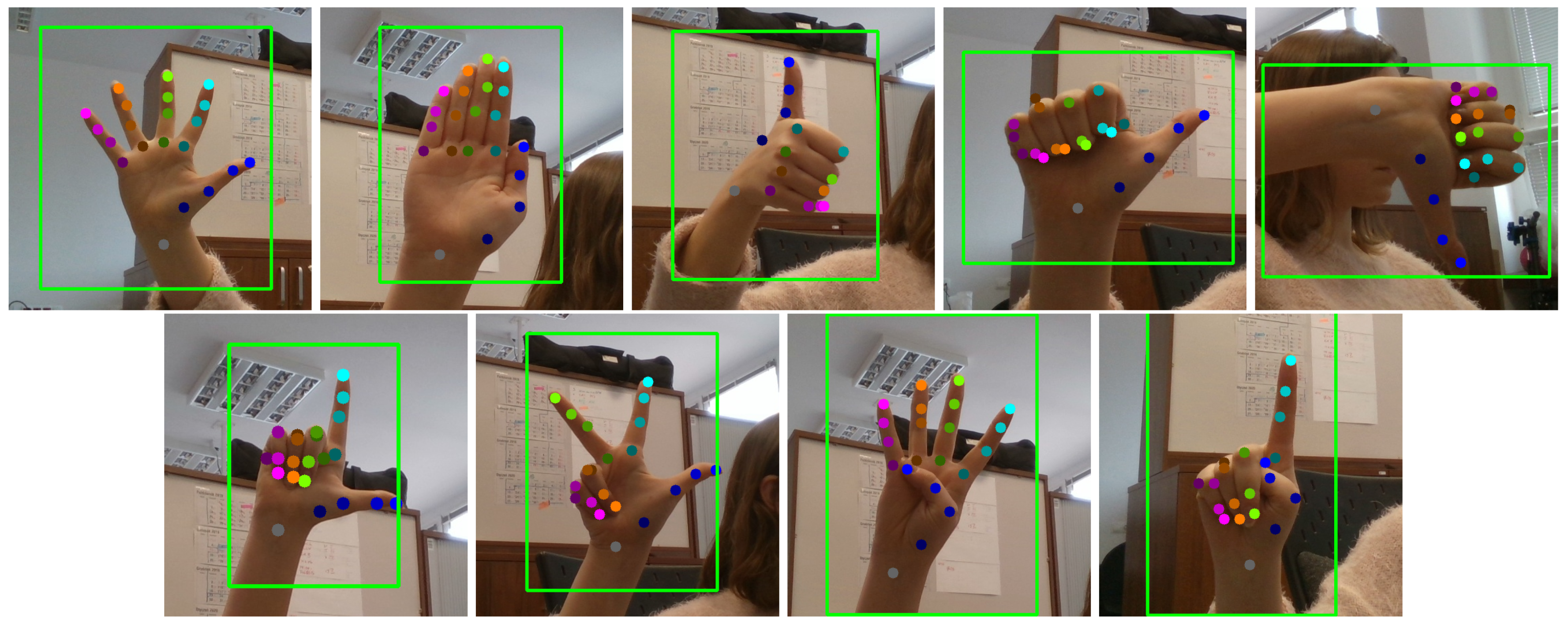

5.1. Real Receptor: Data Acquisition

5.2. Virtual Receptor: Image Preprocessing and Segmentation

5.3. Control Subsystem: Gesture Recognition

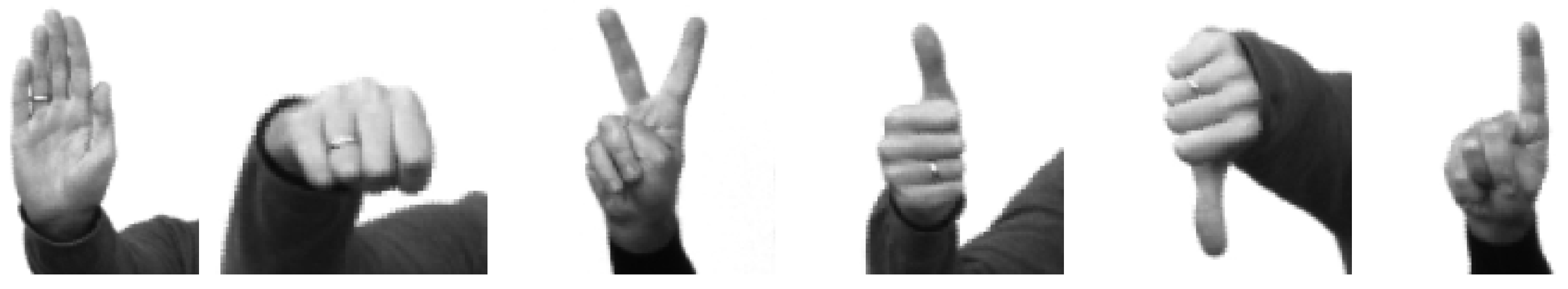

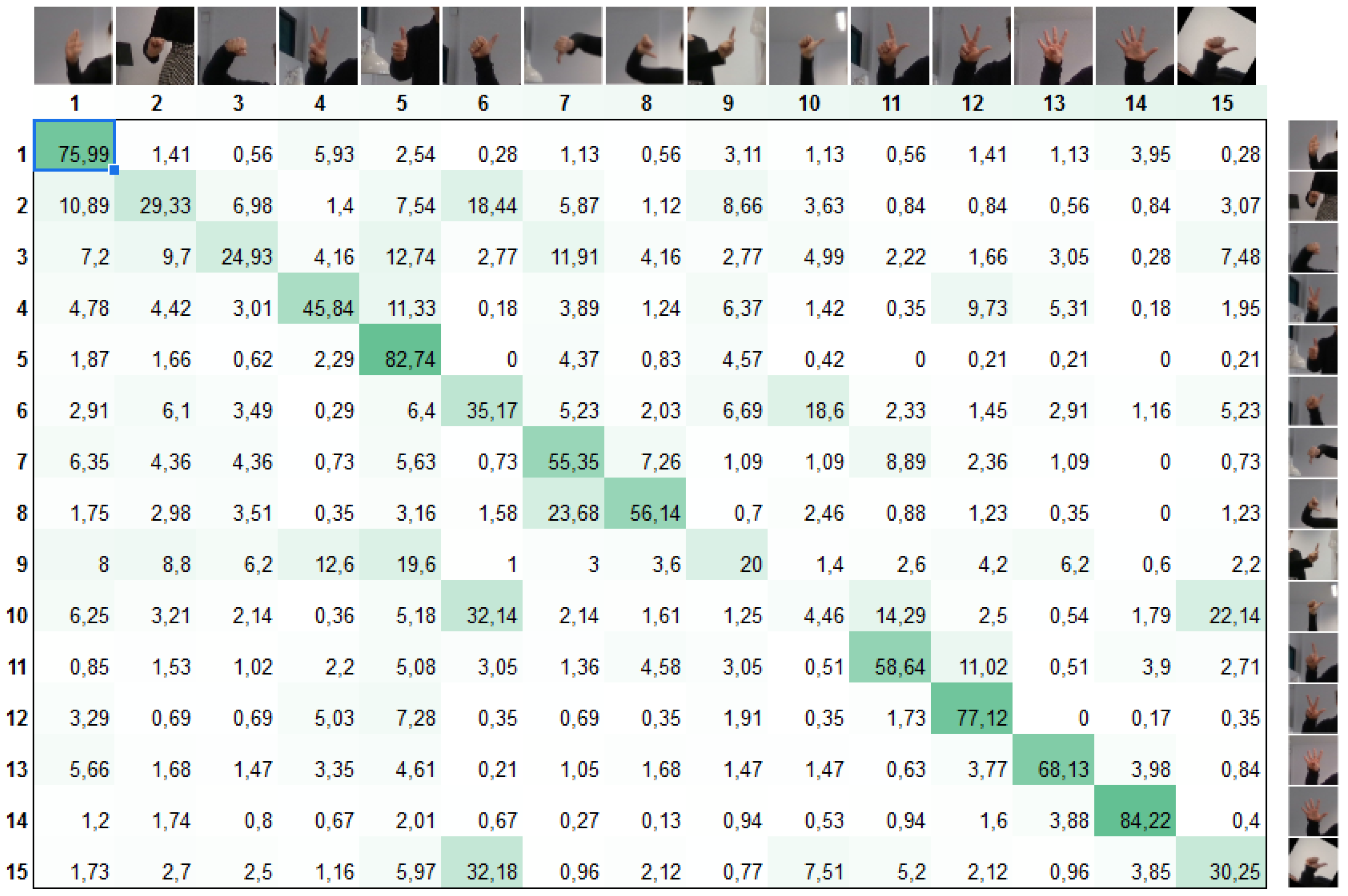

5.3.1. Hand Poses

5.3.2. Dynamic Gestures

6. Implementation of the Presentation Interface

6.1. Interaction with the NCP Visualisation Component

- events caused by the movement of the cursors and the motion of the user’s hands,

- events of the computer mouse buttons and static gestures shown by the user.

6.2. Multi-Modal Fusion

7. Tests

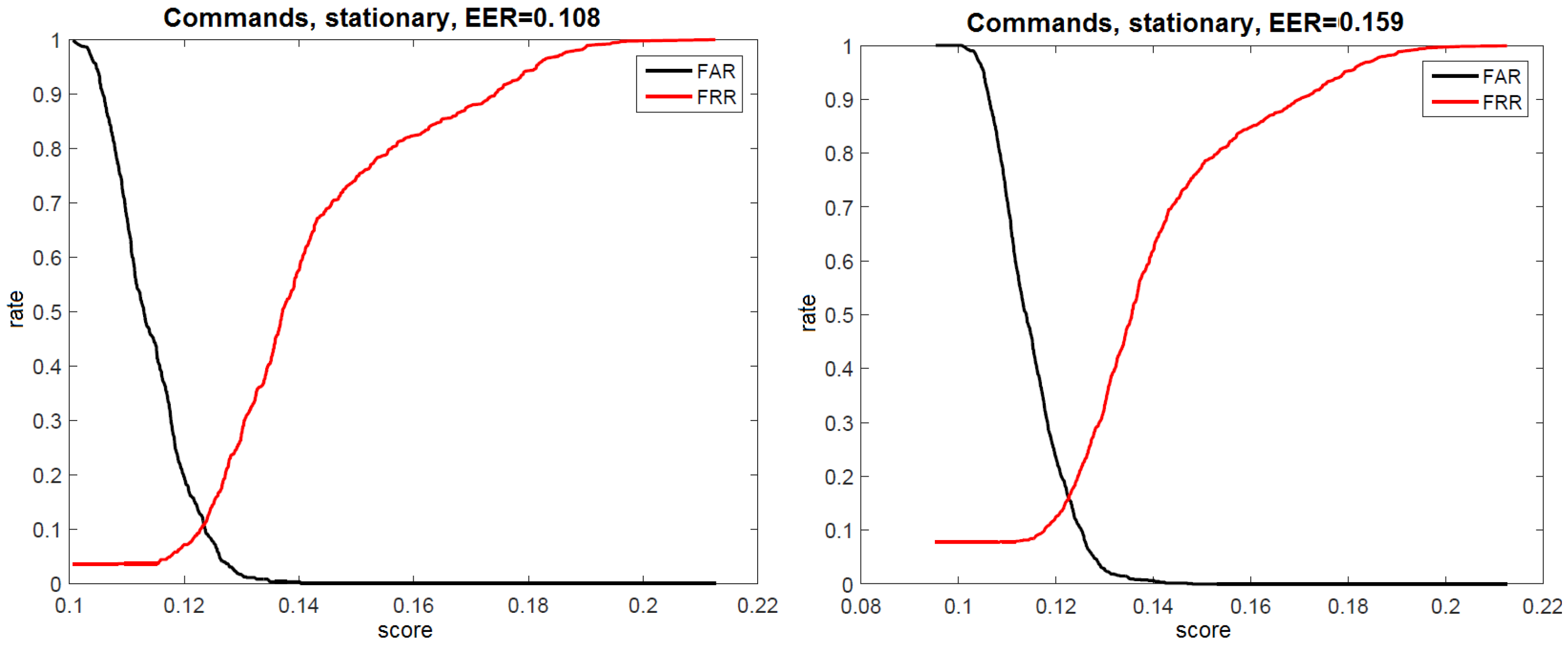

7.1. Audio Tests

7.1.1. Command Dictionaries

7.1.2. Command Recognition Results

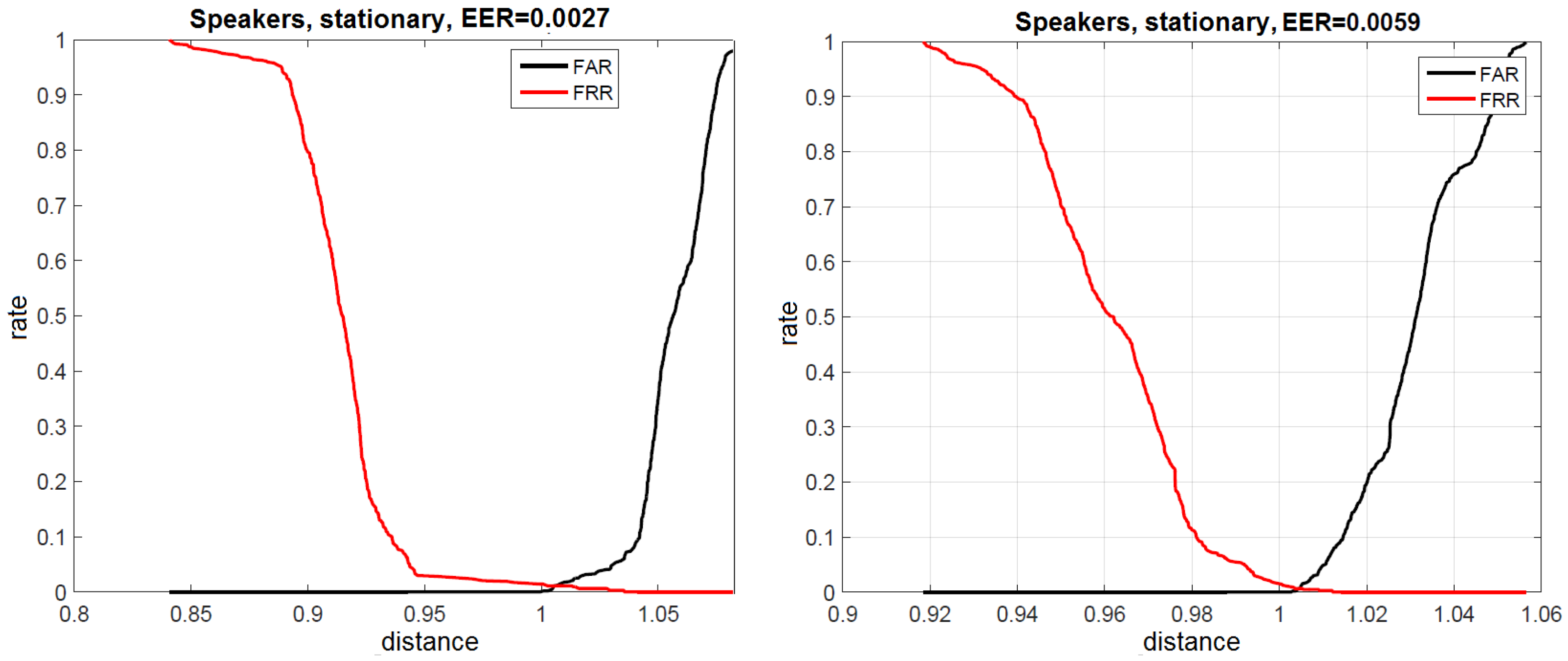

7.1.3. Speaker Recognition Results

7.2. Related Audio Tests

7.2.1. Speech Recognition

- mono0: the initial monophone model (WER = 29.9%)

- tri1: the initial triphone model (WER = 15.88%)

- tri3b: triphones + context (±3 frames) + LDA + SAT (fMLLR) with lexicon rescoring and silence probabilities (WER = 11.82%)

- nnet3: a regular time-delay DNN (WER = 7.37%)

- chain: a DNN-HMM model implemented with nnet3 (WER = 5.73%)
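The models above are compared by word error rate (WER), which is conventionally defined as the number of word substitutions, deletions and insertions needed to turn the recognised text into the reference, divided by the number of reference words. The sketch below computes it with a standard edit-distance recursion; the function name `word_error_rate` and the two example phrases are hypothetical and are not taken from the test corpus.

```python
from typing import List


def word_error_rate(reference: List[str], hypothesis: List[str]) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    if not reference:
        raise ValueError("reference must not be empty")
    # prev[j] is the edit distance between the reference prefix processed
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hypothesis, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(reference)


# Hypothetical example: one deleted word and one substituted word.
ref = "scroll down the screen".split()
hyp = "scroll the green".split()
wer = word_error_rate(ref, hyp)
```

Here two errors against four reference words give a WER of 0.5, the same scale on which the percentages above are reported.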

7.2.2. Speaker Recognition

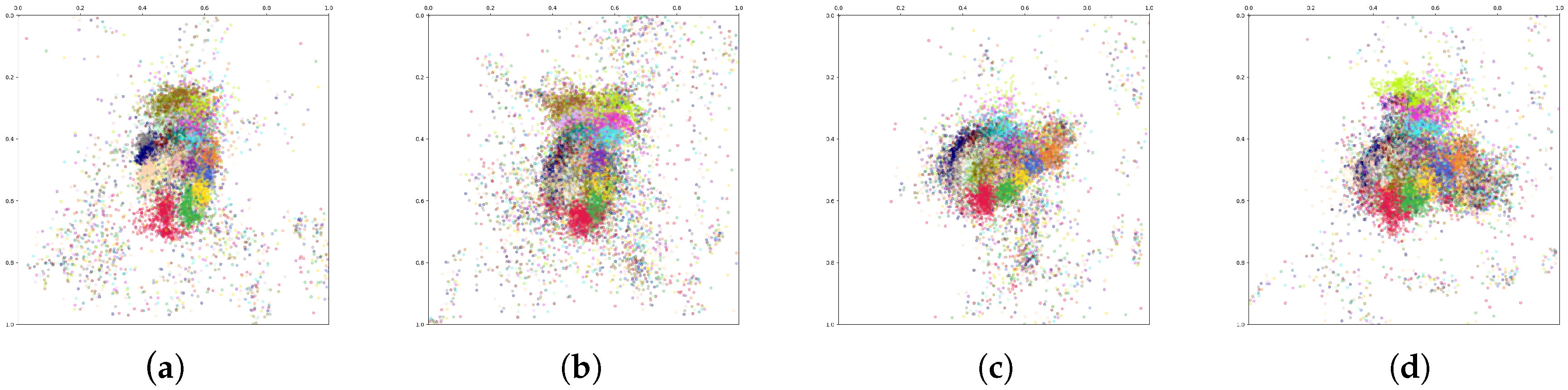

7.3. Vision Tests

7.3.1. Classifier Accuracy

7.3.2. Usability Test

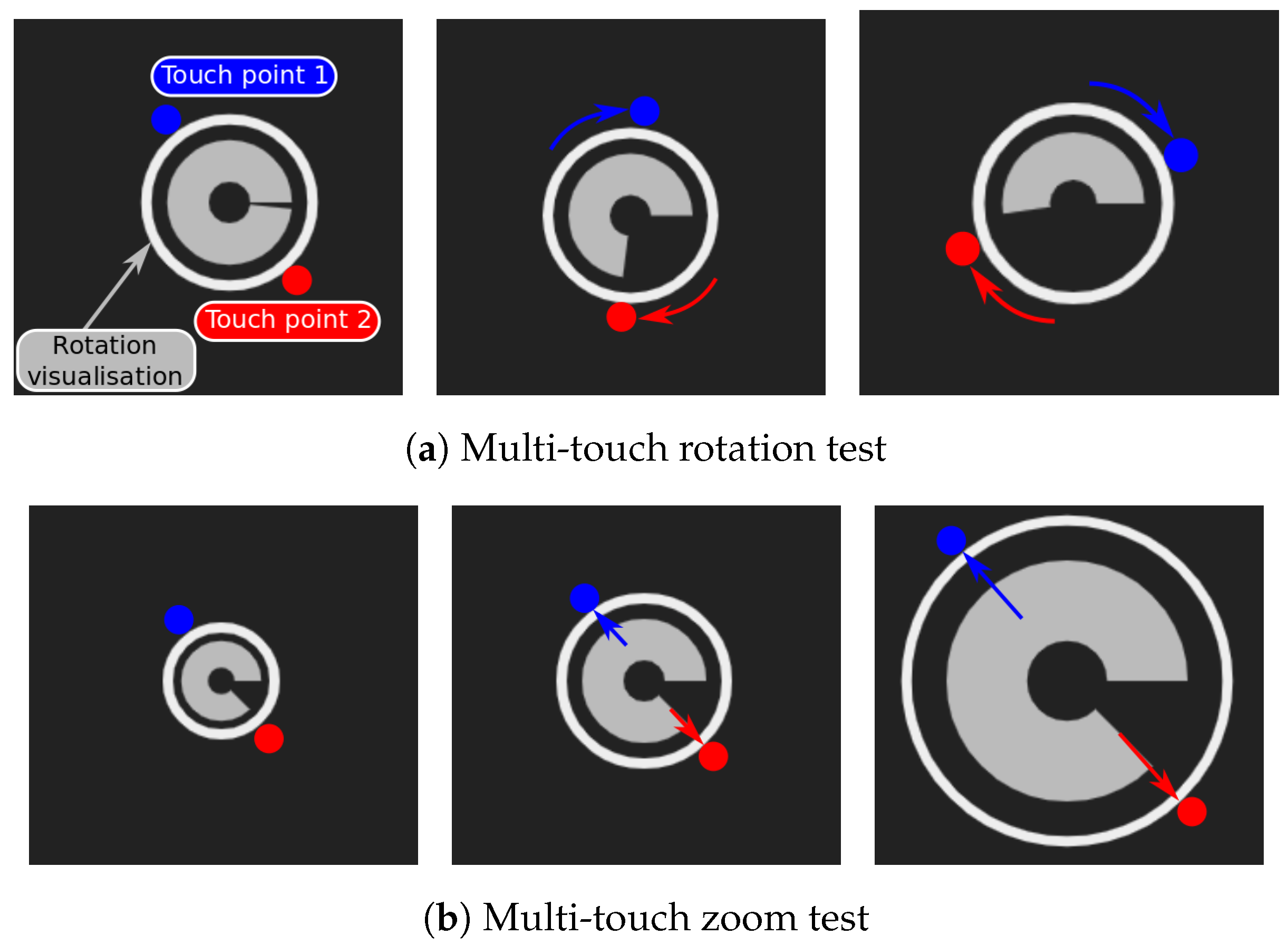

7.4. Tests of the Presentation Interface

- the events addressed to the operating system itself were interpreted as intended (screen lock/unlock),

- the visualisation application received events generated by the multi-modal interface,

- reaction of the application corresponded to the intention of the user,

- failures of the multi-modal interface and mistakes of the user were reported to the user.

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Keyboard Shortcut | Visualisation Component |
|---|---|
| Page_Down | scroll down the screen by its height |
| Page_Up | scroll up the screen by its height |
| alt + x | switch to bookmark |
| Super_L + l | lock screen |
| ctrl + alt + l | lock screen in the Xfce window manager |
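One way to use the table above in software is to keep it as a lookup structure and split each shortcut string into modifiers and a key before an event is synthesised. The sketch below assumes this approach; the command labels in `COMMAND_TO_SHORTCUT` and the function names are hypothetical, and only the shortcut strings and their effects come from the table.

```python
from typing import Dict, Tuple

# Shortcut strings and effects copied from the table above.
SHORTCUT_EFFECTS: Dict[str, str] = {
    "Page_Down": "scroll down the screen by its height",
    "Page_Up": "scroll up the screen by its height",
    "alt + x": "switch to bookmark",
    "Super_L + l": "lock screen",
    "ctrl + alt + l": "lock screen in the Xfce window manager",
}

# Hypothetical command labels; the table does not name the commands.
COMMAND_TO_SHORTCUT: Dict[str, str] = {
    "next_page": "Page_Down",
    "previous_page": "Page_Up",
    "lock": "Super_L + l",
}


def parse_shortcut(text: str) -> Tuple[Tuple[str, ...], str]:
    """Split a shortcut such as 'ctrl + alt + l' into (modifiers, key)."""
    parts = [part.strip() for part in text.split("+")]
    return tuple(parts[:-1]), parts[-1]


def shortcut_for(command: str) -> Tuple[Tuple[str, ...], str]:
    """Return (modifiers, key) for a recognised command label."""
    return parse_shortcut(COMMAND_TO_SHORTCUT[command])
```

For example, `shortcut_for("lock")` yields the modifier `Super_L` and the key `l`, which a key-event injector could then emit.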

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Szynkiewicz, W.; Kasprzak, W.; Zieliński, C.; Dudek, W.; Stefańczyk, M.; Wilkowski, A.; Figat, M. Utilisation of Embodied Agents in the Design of Smart Human–Computer Interfaces—A Case Study in Cyberspace Event Visualisation Control. Electronics 2020, 9, 976. https://doi.org/10.3390/electronics9060976
