A Reconfigurable Convolutional Neural Network-Accelerated Coprocessor Based on RISC-V Instruction Set

Abstract

1. Introduction

2. Background

2.1. RISC-V Instruction Set

2.2. E203 CPU

3. Hardware Design of CNN-Accelerated Coprocessor

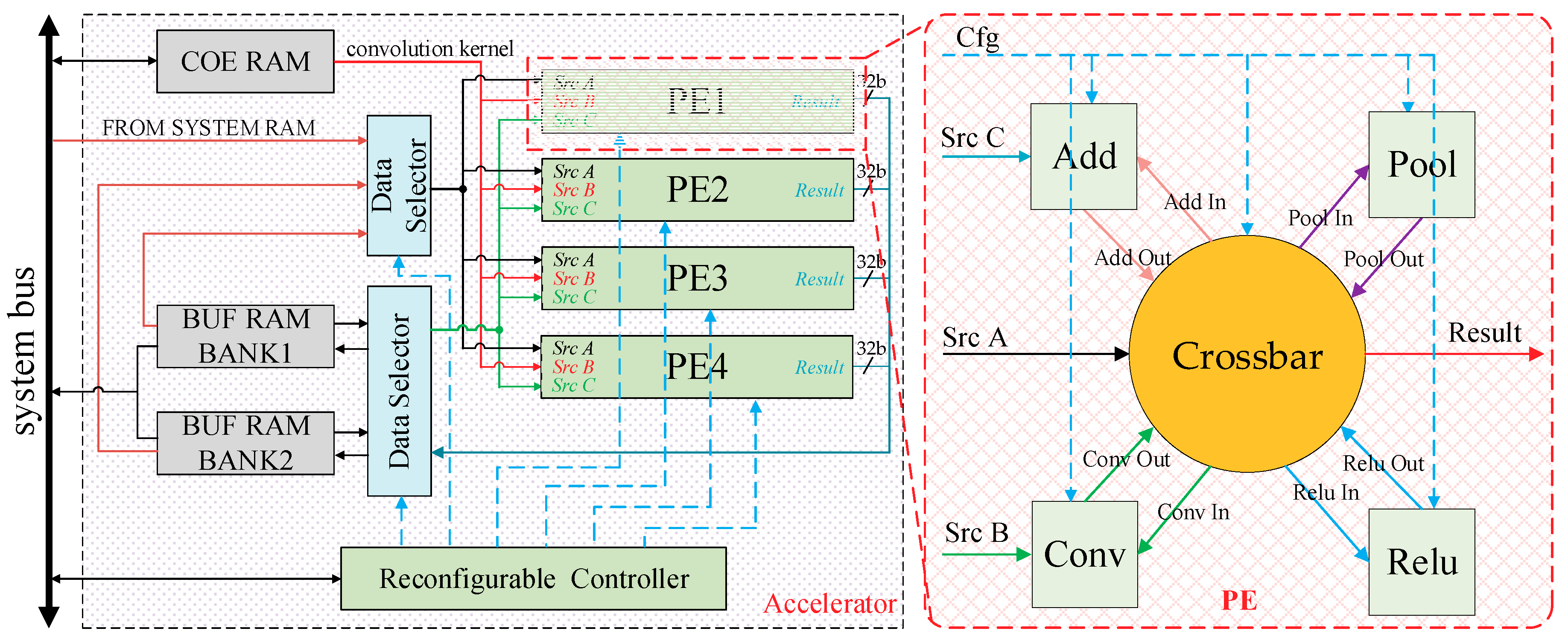

3.1. Accelerator Structure Optimization

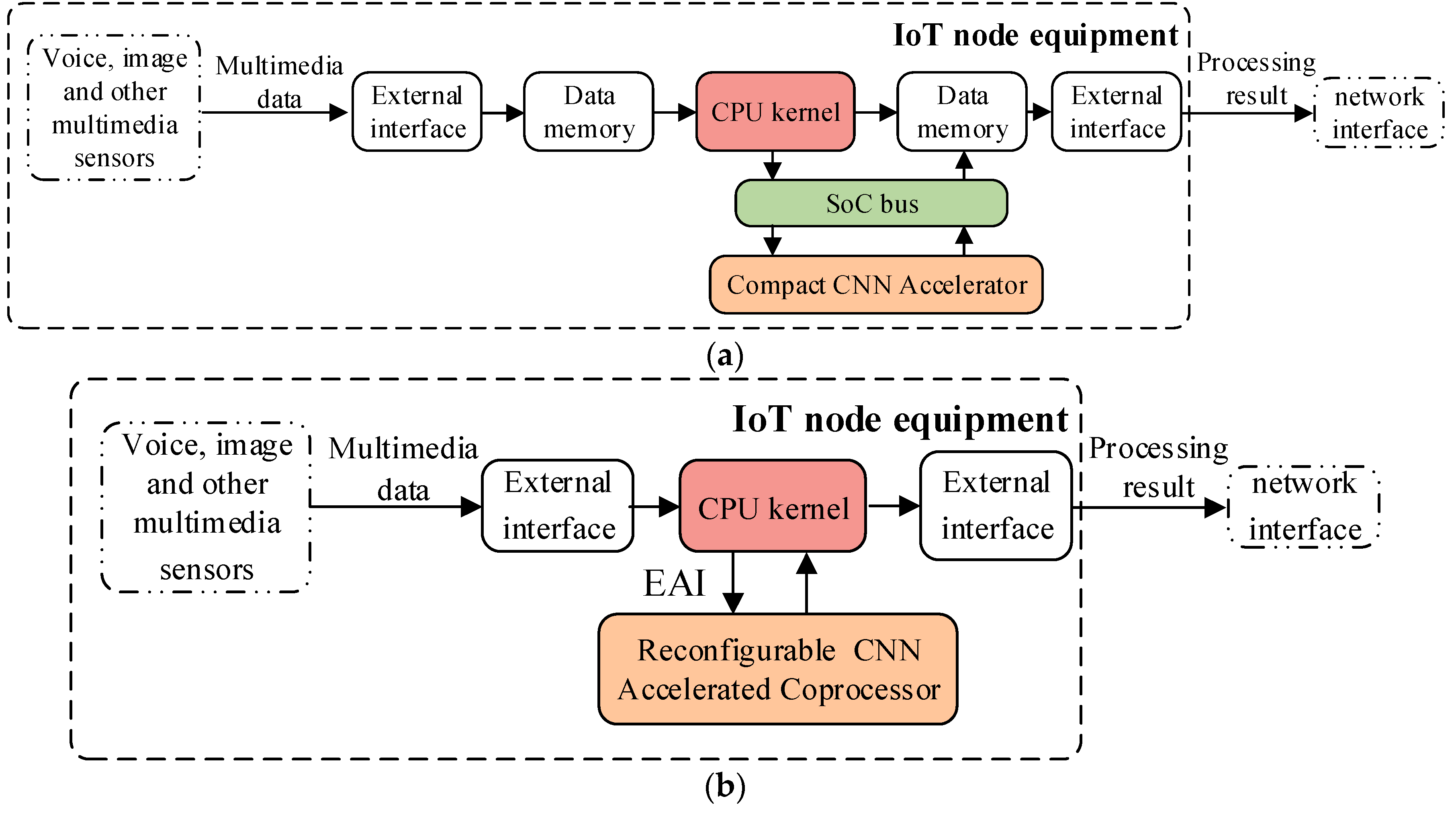

3.2. Coprocessor Design

4. Software Design of CNN-Accelerated Coprocessor

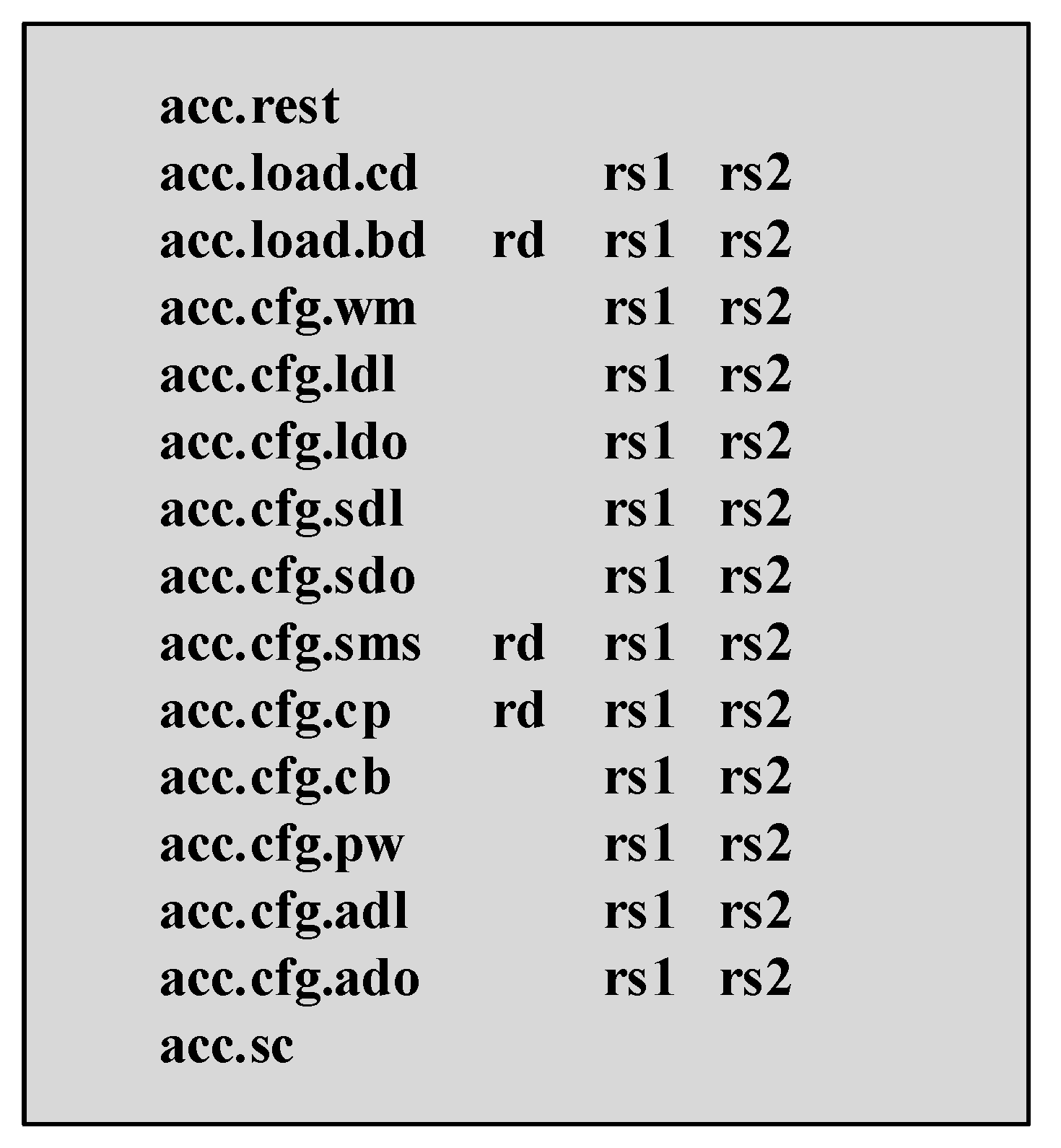

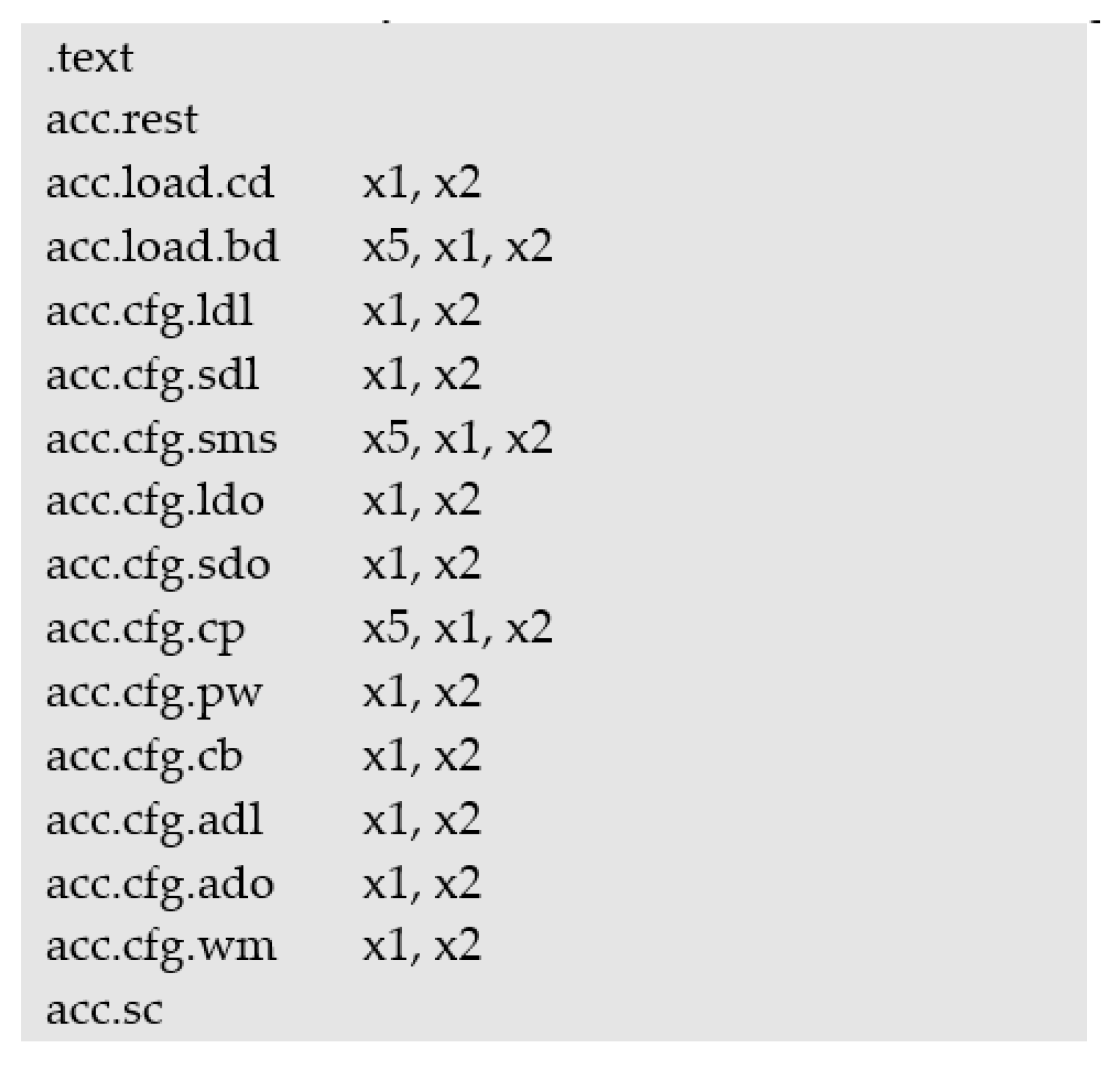

4.1. Instruction Design of Coprocessor

- Reset the coprocessor. Before using the coprocessor, reset it with the acc.rest instruction.

- Load the convolution coefficients. To use the convolution module in the coprocessor, first load the convolution coefficients into the COE CACHE with the acc.load.cd instruction.

- Load the source matrix. The coprocessor can read the source matrix directly from memory for calculation, or the source matrix can first be moved to a CACHE BANK and read from there. The instruction that moves the source matrix into a CACHE BANK is acc.load.bd.

- Configure coprocessor parameters

- (1)

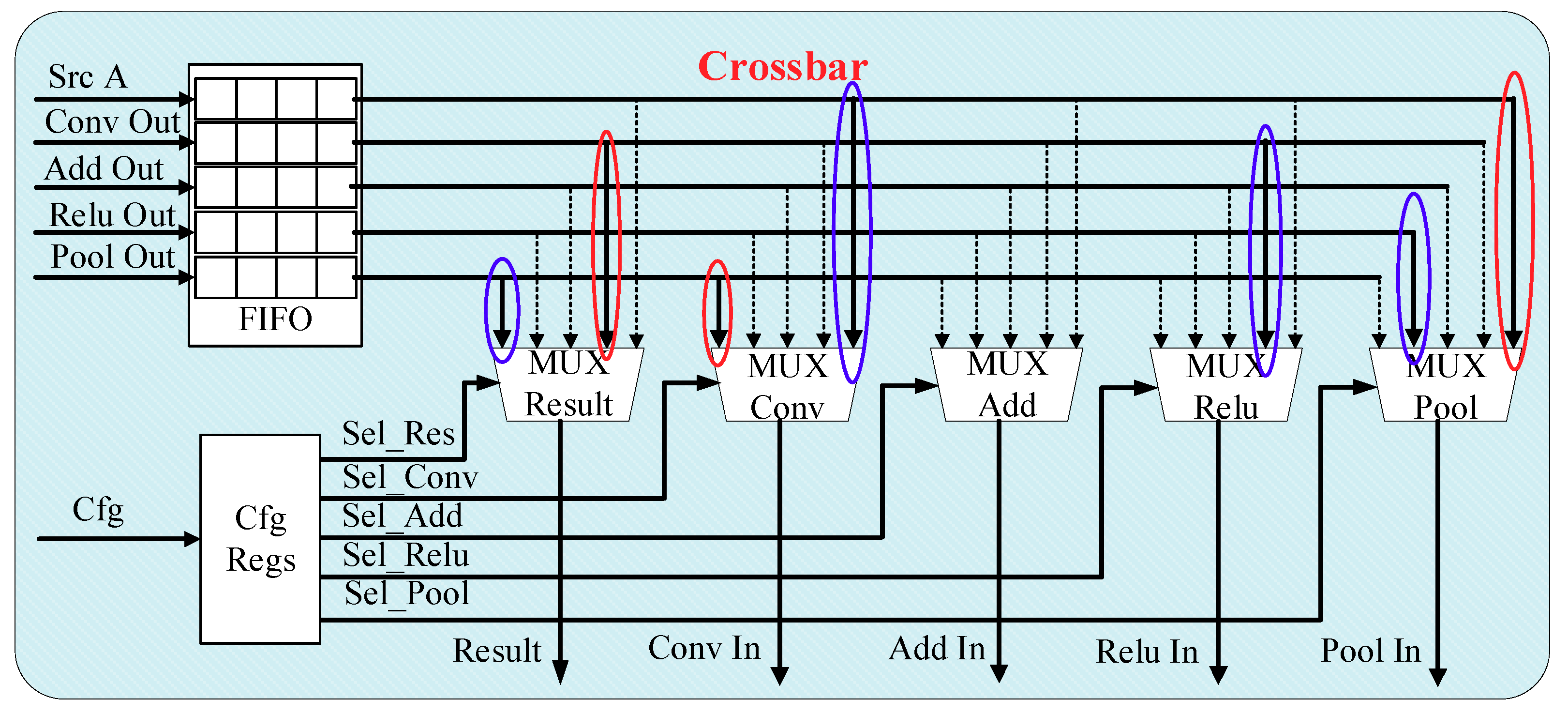

- Working modeConfigure the working mode of each PE of the coprocessor, that is, configure the crossbar in each PE, select the acceleration unit that participates in the calculation, and configure the direction of the data flow according to the algorithm. The instruction to achieve this function is acc.cfg.wm.

- (2) Location of the calculation data source. The coprocessor can load calculation data either directly from memory or from a CACHE BANK, so before starting the coprocessor, configure the data source to match where the source matrix is stored. The instruction for this is acc.cfg.ldl. Then configure the offset, relative to the base address, of the calculation data required by each PE. The data required by the four PEs is stored contiguously in the data source, so the relative offset of each PE's data must be specified. The instruction for this is acc.cfg.ldo.

- (3) Storage location of calculation results. The storage location of the calculation results is configured in the same way as the calculation data source: results can be saved to memory or to a CACHE BANK, and the configuration instruction is acc.cfg.sdl. The relative offset of each PE's calculation result within the storage location is configured with acc.cfg.sdo.

- (4) Width and height of the source matrix. Configure the width and height of the source matrix in each PE. The instruction for this is acc.cfg.sms.

- (5) Convolution kernel parameters. Configure the offset address of the convolution kernel in the COE CACHE and the size of the convolution kernel with acc.cfg.cp. Configure the bias of each convolution kernel with acc.cfg.cb.

- (6) Pooling parameters. Configure the height and width of the max-pooling window. The configuration instruction is acc.cfg.pw.

- (7) Matrix addition parameters. Configure the loading location of the second input matrix of the matrix addition. This matrix can be loaded from memory or from a CACHE BANK, and the configuration instruction is acc.cfg.adl. Configure the relative offset of the second input matrix for each PE with acc.cfg.ado.

- Start calculation. The acc.sc instruction starts the coprocessor, which then runs the calculation according to the configured parameters.

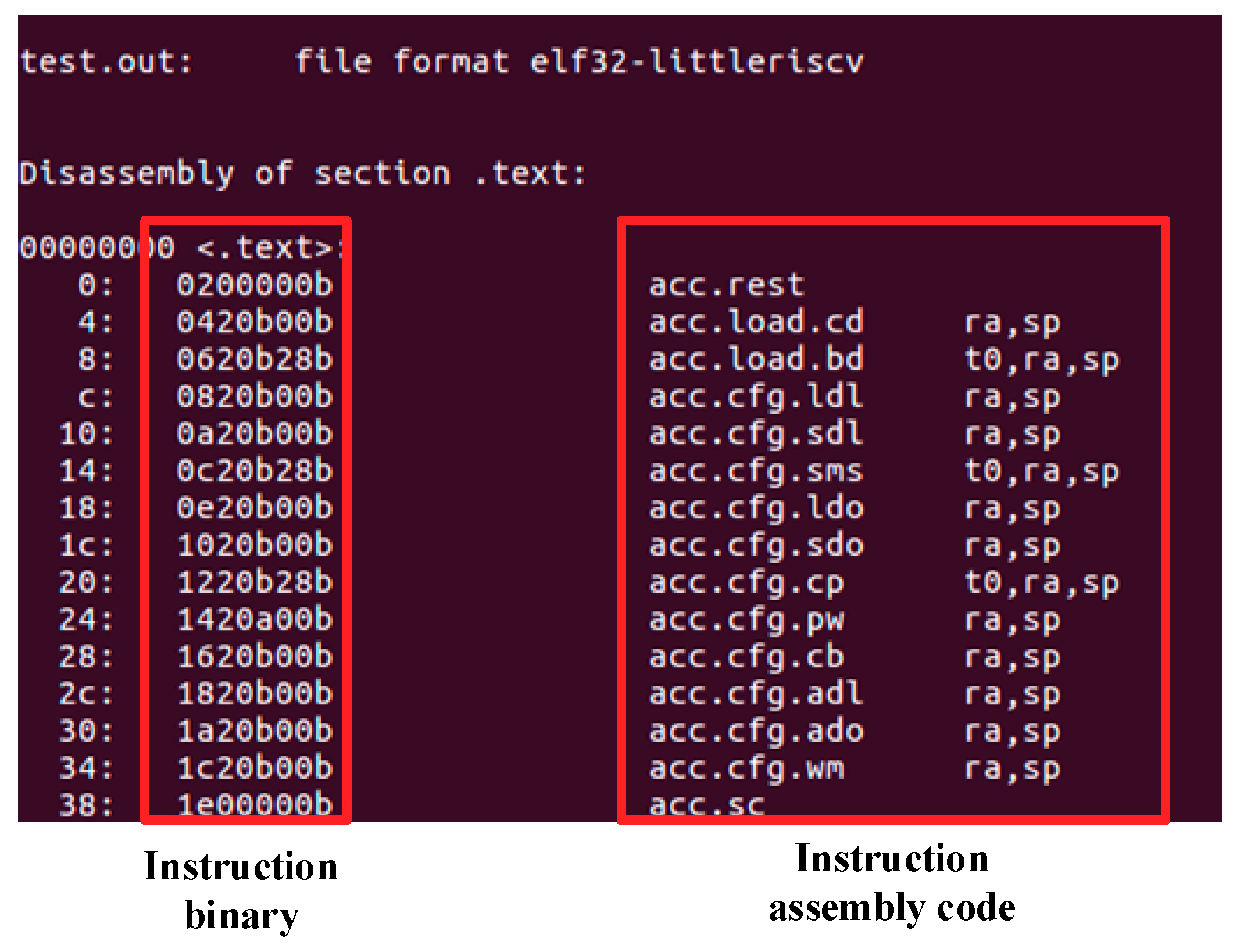

4.2. Establishment of Instruction Compiling Environment for Coprocessor

4.3. Coprocessor-Accelerated Library Function Design

5. Implementation of Common Algorithms on Coprocessor

5.1. LeNet-5 Network Implementation

- (1) Convolution layer C1. Six 5 × 5 convolution kernels are convolved with the 32 × 32 original image to generate six 28 × 28 feature maps, which are then activated with the ReLU function.

- (2) Pooling layer S2. The S2 layer applies a 2 × 2 max-pooling filter to the output of C1 to obtain six 14 × 14 feature maps.

- (3)

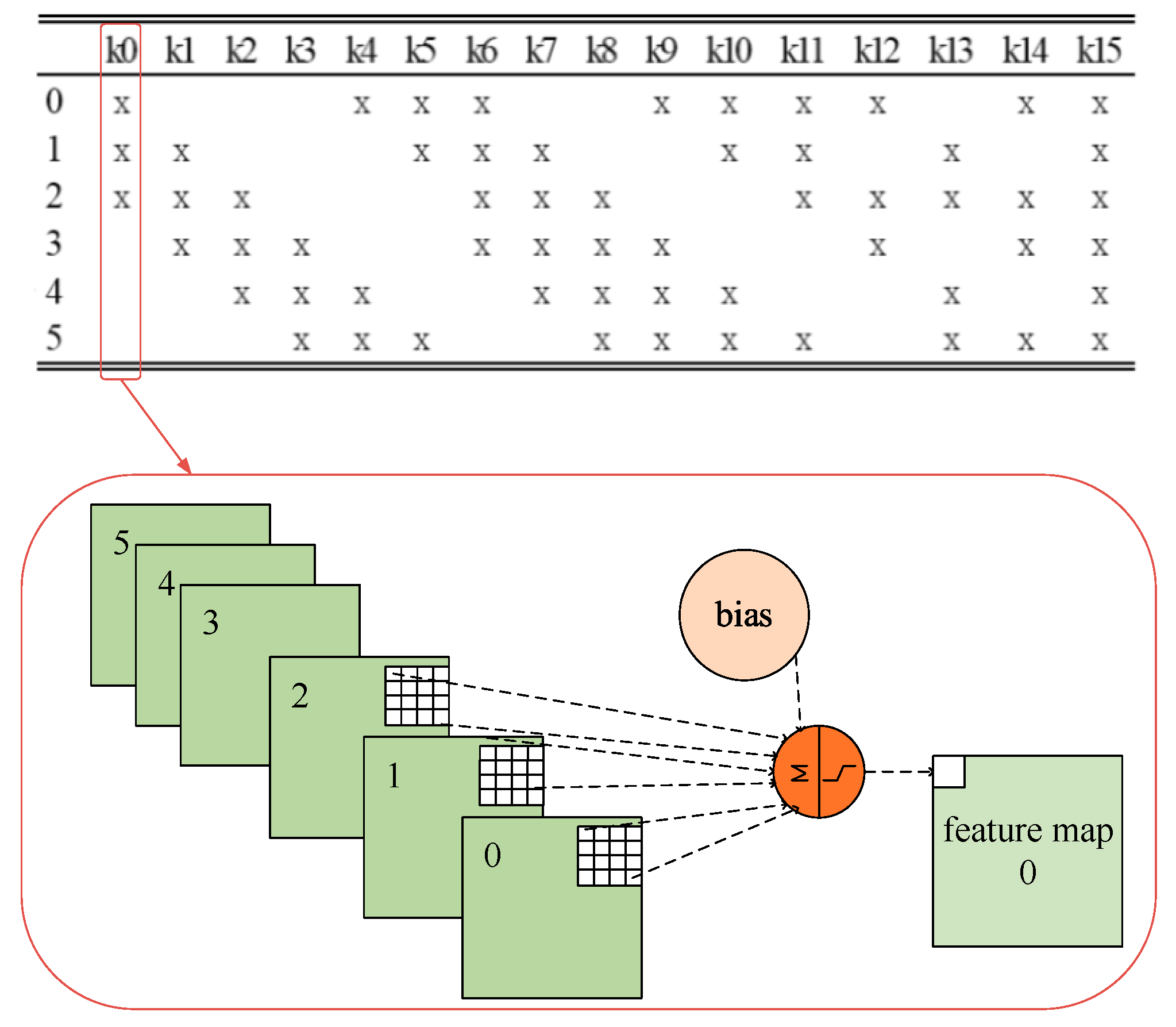

- Partially connected layer C3. The C3 layer uses 16 5 × 5 convolution kernels to partially connect with the six feature maps output by S2 and calculates 16 10 × 10 feature maps. The partial connection relationship and calculation process are shown in Figure 13. Take the calculation process of the output 0th feature map as an example: First, use the 0th convolution kernel to convolve with the 0, 1, 2 feature maps output by the S2 layer, and then add the results of the three convolutions, Plus a bias, and finally activate it to obtain the 0th feature map of the C3 layer.

- (4) Pooling layer S4. This layer applies a 2 × 2 pooling filter to the output of C3, producing sixteen 5 × 5 feature maps.

- (5) Expand layer S5. This layer flattens the sixteen 5 × 5 feature maps output by S4 into a one-dimensional matrix of size 400.

- (6) Fully connected layer S6. The S6 layer fully connects the one-dimensional matrix output by the S5 layer with 10 convolution operators and obtains 10 classification results, which serve as the recognition result for the input image.
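The feature-map sizes quoted in the layer descriptions above follow from two simple rules. The sketch below checks them, assuming stride-1 convolution without padding and non-overlapping pooling windows (the text does not state stride or padding explicitly, but the quoted sizes are consistent with these assumptions). The function names are illustrative and are not part of the coprocessor library.

```c
#include <assert.h>

/* Output side length of a stride-1 convolution without padding. */
int conv_out(int in, int k)
{
    assert(k >= 1 && k <= in);
    return in - k + 1;
}

/* Output side length of non-overlapping pooling with a p x p window. */
int pool_out(int in, int p)
{
    assert(p >= 1 && in % p == 0);
    return in / p;
}

/* Walks through the LeNet-5 layer sizes and returns the length of the
 * one-dimensional matrix produced by the expand layer S5. */
int lenet5_flat_length(void)
{
    int c1 = conv_out(32, 5);   /* C1: 32 -> 28 */
    int s2 = pool_out(c1, 2);   /* S2: 28 -> 14 */
    int c3 = conv_out(s2, 5);   /* C3: 14 -> 10 */
    int s4 = pool_out(c3, 2);   /* S4: 10 -> 5  */
    return 16 * s4 * s4;        /* S5: sixteen 5 x 5 maps */
}
```

Calling `lenet5_flat_length()` reproduces the size of 400 stated for the S5 layer.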

- (1)

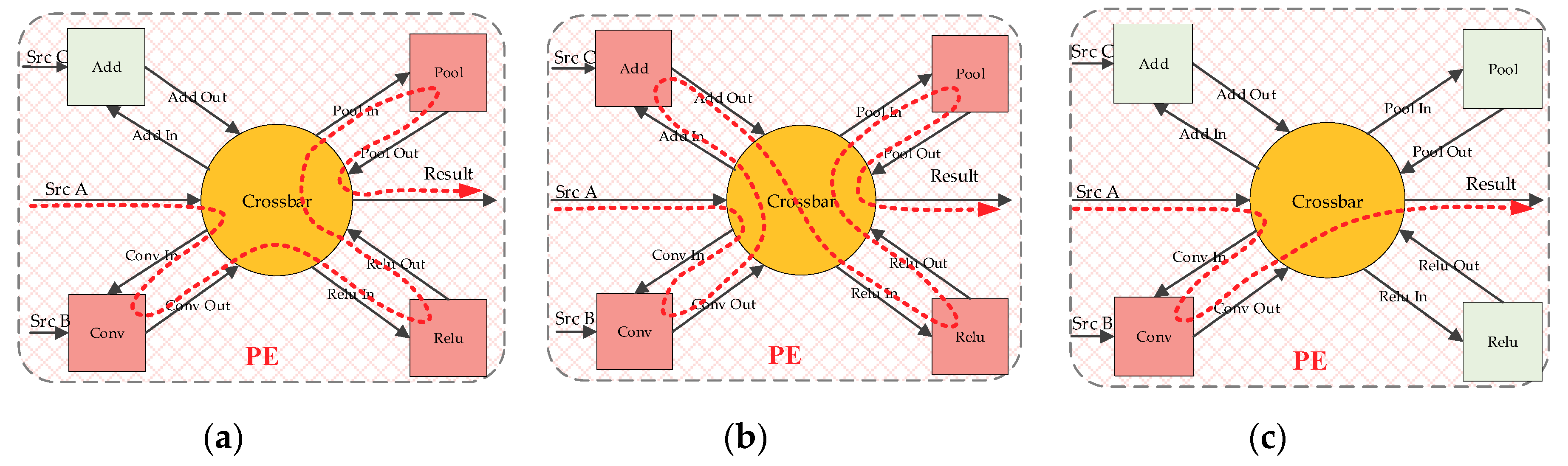

- First, map the C1 layer and the S2 layer. Configure the coprocessor to use the convolution, ReLU, and pooling modules of the PE unit, and configure the parameters of these three modules. Configure the crossbar to enable the data flow in the sequence shown in Figure 14a, start the coprocessor, and calculate the C1 and S2 layers.

- (2) Map the C3 and S4 layers. Configure the coprocessor to use the convolution, matrix addition, ReLU, and pooling modules of the PE unit, and configure the parameters of these four modules. Configure the crossbar so that the data flows in the order shown in Figure 14b, then start the coprocessor to calculate the C3 and S4 layers, and cache the calculation result in BUF RAM BANK1.

- (3) Calculate the S5 layer on the CPU. Use a software program to expand the calculation result of (2) into a 1 × 400 one-dimensional matrix, which is buffered in BUF RAM BANK2.

- (4) Map the S6 layer. Configure the coprocessor to use the convolution module of the PE unit so that the convolution module supports one-dimensional convolution. Configure the crossbar so that the data flow follows the sequence in Figure 14c and uses only the convolution module. Configure the data source as BUF RAM BANK2, start the coprocessor, and calculate the S6 layer.
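For checking results, it can help to have a plain software reference for the convolution, ReLU, and max-pooling data flow that step (1) maps onto a PE. The sketch below is such a reference in C. It is not the coprocessor implementation: the function names, the row-major float layout, and the stride-1, no-padding convolution are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Stride-1 convolution without padding, in the CNN sense (no kernel flip):
 * out[r][c] = bias + sum over i,j of in[r+i][c+j] * k[i][j].
 * All matrices are row-major; out holds (h-kh+1) * (w-kw+1) values. */
void conv2d_valid(const float *in, size_t h, size_t w,
                  const float *k, size_t kh, size_t kw,
                  float bias, float *out)
{
    assert(kh >= 1 && kw >= 1 && kh <= h && kw <= w);
    size_t oh = h - kh + 1, ow = w - kw + 1;
    for (size_t r = 0; r < oh; r++)
        for (size_t c = 0; c < ow; c++) {
            float acc = bias;
            for (size_t i = 0; i < kh; i++)
                for (size_t j = 0; j < kw; j++)
                    acc += in[(r + i) * w + (c + j)] * k[i * kw + j];
            out[r * ow + c] = acc;
        }
}

/* ReLU applied element-wise, in place, to n values. */
void relu_inplace(float *m, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (m[i] < 0.0f)
            m[i] = 0.0f;
}

/* Non-overlapping max pooling with a ph x pw window.
 * out holds (h/ph) * (w/pw) values. */
void maxpool2d(const float *in, size_t h, size_t w,
               size_t ph, size_t pw, float *out)
{
    assert(ph >= 1 && pw >= 1 && h % ph == 0 && w % pw == 0);
    size_t oh = h / ph, ow = w / pw;
    for (size_t r = 0; r < oh; r++)
        for (size_t c = 0; c < ow; c++) {
            float best = in[(r * ph) * w + (c * pw)];
            for (size_t i = 0; i < ph; i++)
                for (size_t j = 0; j < pw; j++) {
                    float v = in[(r * ph + i) * w + (c * pw + j)];
                    if (v > best)
                        best = v;
                }
            out[r * ow + c] = best;
        }
}
```

Chaining the three functions on a 32 × 32 input with a 5 × 5 kernel and a 2 × 2 pooling window yields one 14 × 14 map, matching the C1 and S2 sizes. Step (2) would additionally sum several convolution results before the ReLU.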

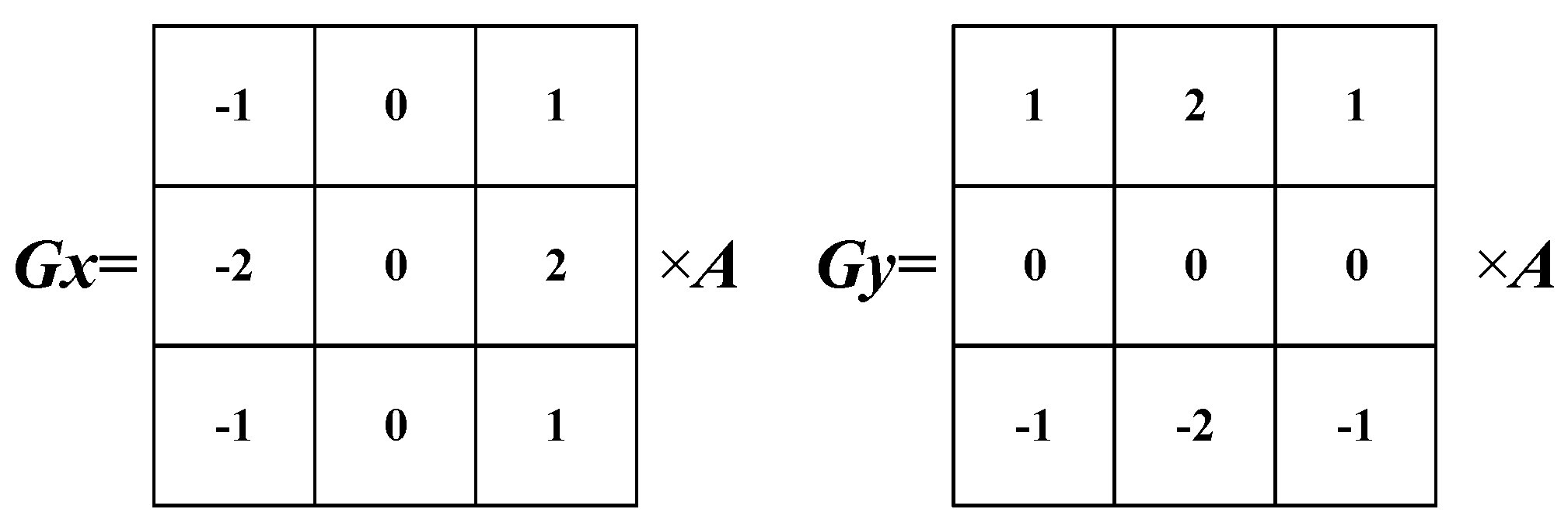

5.2. Sobel Edge Detection and FIR Algorithm Implementation

- (1) Calculate Gx and Gy. Configure the coprocessor to use two PE units. Each PE unit uses pooling and convolution modules, and the convolution kernels used by the convolution modules in the two PEs are the two convolution kernels of the Sobel operator. Configure the crossbar so that the data flow follows the sequence in Figure 16a. Start the coprocessor, calculate Gx and Gy, and cache the calculation result in BUF RAM BANK1.

- (2) Calculate |Gx| and |Gy| on the CPU. Use a software program to take the absolute value of each element in the cached result of (1) to obtain |Gx| and |Gy|, and cache the calculation result in BUF RAM BANK2.

- (3) Calculate |G|. Configure the coprocessor to use the matrix addition module of the PE unit and configure the crossbar so that the data flow follows the order in Figure 16b and only the matrix addition module is used. Configure the data source as BUF RAM BANK2, start the coprocessor, and calculate |G|.
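The three steps above compute the approximation |G| = |Gx| + |Gy|. A software-only reference for the same calculation might look like the sketch below. It assumes the commonly used 3 × 3 Sobel kernels (sign conventions differ between texts, which does not affect |G|), integer pixels in row-major order, and output for interior pixels only. The function name is illustrative.

```c
#include <assert.h>
#include <stdlib.h>

/* Commonly used Sobel kernels; an assumption, since the text does not
 * list the coefficients. */
static const int SOBEL_X[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
static const int SOBEL_Y[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};

/* Computes |G| = |Gx| + |Gy| for every 3 x 3 window of an h x w image.
 * out holds (h-2) * (w-2) values in row-major order. */
void sobel_magnitude(const int *img, int h, int w, int *out)
{
    assert(h >= 3 && w >= 3);
    for (int r = 0; r + 2 < h; r++)
        for (int c = 0; c + 2 < w; c++) {
            int gx = 0, gy = 0;
            /* Step (1): the two convolutions with the Sobel kernels. */
            for (int i = 0; i < 3; i++)
                for (int j = 0; j < 3; j++) {
                    int p = img[(r + i) * w + (c + j)];
                    gx += p * SOBEL_X[i][j];
                    gy += p * SOBEL_Y[i][j];
                }
            /* Step (2): absolute values. Step (3): matrix addition. */
            out[r * (w - 2) + c] = abs(gx) + abs(gy);
        }
}
```

On an image with a single vertical edge, Gy cancels and the whole response comes from Gx, which is a quick sanity check for results read back from the coprocessor.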

6. Experiment and Resource Analysis

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Instruction | Funct7 | Rd | Xd | Rs1 | Xs1 | Rs2 | Xs2 |
|---|---|---|---|---|---|---|---|
| acc.rest | 1 | - | 0 | - | - | - | 0 |
| acc.load.cd | 2 | - | 0 | CoeMemoryAddress | 1 | Length\|CacheBaseAddress | 1 |
| acc.load.bd | 3 | BANKID | 0 | BankMemoryAddress | 1 | Length\|BankBaseAddress | 1 |
| acc.cfg.ldl | 4 | - | 0 | SrcDataBaseAddress | 1 | Location | 1 |
| acc.cfg.sdl | 5 | - | 0 | StoreDataBaseAddress | 1 | Location | 1 |
| acc.cfg.sms | 6 | PEID | 0 | Width | 1 | Height | 1 |
| acc.cfg.ldo | 7 | - | 0 | Offset1\|Offset2 | 1 | Offset3\|Offset4 | 1 |
| acc.cfg.sdo | 8 | - | 0 | Offset1\|Offset2 | 1 | Offset3\|Offset4 | 1 |
| acc.cfg.cp | 9 | PEID | 0 | CoeCacheBaseAddress | 1 | CoeHeight\|CoeWidth | 1 |
| acc.cfg.cb | 10 | - | 0 | PEID | 1 | CoeBasis | 1 |
| acc.cfg.pw | 11 | - | 0 | Width | 1 | Height | 1 |
| acc.cfg.adl | 12 | - | 0 | SrcAddDataAddress | 1 | Location | 1 |
| acc.cfg.ado | 13 | - | 0 | Offset1\|Offset2 | 1 | Offset3\|Offset4 | 1 |
| acc.cfg.wm | 14 | - | 0 | PEID | 1 | Order | 1 |
| acc.sc | 15 | - | 0 | - | 0 | - | 0 |
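To make the fields in the table concrete, the sketch below packs them into a 32-bit instruction word. It rests on two assumptions that the table does not confirm: that the fields are laid out as in RoCC-style custom instructions (funct7 in bits 31:25, rs2 in 24:20, rs1 in 19:15, xd/xs1/xs2 in bits 14/13/12, rd in 11:7), and that the RISC-V custom-0 major opcode (0x0B) is used. The function and macro names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* ASSUMPTION: the custom-0 major opcode; the table does not state
 * which custom opcode the coprocessor instructions use. */
#define OPCODE_CUSTOM0 0x0Bu

/* Packs the table fields into one instruction word.
 * ASSUMPTION: RoCC-style layout
 *   funct7[31:25] rs2[24:20] rs1[19:15] xd[14] xs1[13] xs2[12]
 *   rd[11:7] opcode[6:0]
 * rd, rs1, and rs2 are 5-bit register numbers. Where the table marks a
 * register as unused (the X bit is 0), the 5-bit field may instead carry
 * an immediate such as PEID or BANKID. */
uint32_t encode_acc(uint32_t funct7, uint32_t rd, uint32_t xd,
                    uint32_t rs1, uint32_t xs1,
                    uint32_t rs2, uint32_t xs2)
{
    assert(funct7 < 128 && rd < 32 && rs1 < 32 && rs2 < 32);
    assert(xd < 2 && xs1 < 2 && xs2 < 2);
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) |
           (xd << 14) | (xs1 << 13) | (xs2 << 12) |
           (rd << 7) | OPCODE_CUSTOM0;
}
```

Under these assumptions, acc.sc (Funct7 = 15, no register operands) would encode as `encode_acc(15, 0, 0, 0, 0, 0, 0)`, which is 0x1E00000B.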

| Tool Name | Function |
|---|---|
| riscv32-unknown-elf-as | Assembler; converts assembly code into ELF object files |
| riscv32-unknown-elf-ld | Linker; links multiple object and library files into an executable |
| riscv32-unknown-elf-objcopy | Converts ELF format files to bin format files |
| riscv32-unknown-elf-objdump | Disassembler; converts binary files into assembly code |

| Function Interface | Function |
|---|---|
| void CoprocessorRest () | Reset the coprocessor |
| int LoadCoeData (unsigned int CoeMemoryAddress, unsigned int CacheBaseAddress, unsigned int Length) | Load convolution kernel coefficients into the COE CACHE |
| int LoadBankData (unsigned int BankMemoryAddress, unsigned int BankBaseAddress, unsigned int Length, int Bankid) | Load the source matrix into a CACHE BANK |
| int CfgLoadDataLoc (int Location, unsigned int SrcDataBaseAddress, unsigned int Offset1, unsigned int Offset2, unsigned int Offset3, unsigned int Offset4) | Configure the location of the calculation data source and the relative offset of each PE unit's calculation data in the data source |
| int CfgStoreDataLoc (int Location, unsigned int StoreDataBaseAddress, unsigned int Offset1, unsigned int Offset2, unsigned int Offset3, unsigned int Offset4) | Configure the storage location of the calculation results and the relative offset of each PE unit's calculation result in the storage location |
| int CfgAddDataLoc (int Location, unsigned int SrcAddDataAddress, unsigned int Offset1, unsigned int Offset2, unsigned int Offset3, unsigned int Offset4) | Configure the loading location of the second input matrix of the matrix addition and the relative offset of that matrix for each PE unit |
| int CfgSrcMatrixSize (int Width, int Height, int PEID) | Configure the width and height of the source matrix |
| int CfgCoeParm (unsigned int CoeCacheBaseAddress, int CoeHeight, int CoeWidth, int PEID) | Configure the convolution kernel size and its offset address in the COE CACHE |
| int CfgCoeBias (float CoeBasis1, float CoeBasis2, float CoeBasis3, float CoeBasis4) | Configure the convolution kernel bias for each PE unit |
| int CfgPoolWidth (int Width, int Height) | Configure the pooling width and height |
| int CfgWorkMode (unsigned int Order1, unsigned int Order2, unsigned int Order3, unsigned int Order4) | Configure the working mode of the 4 PE units, that is, select the acceleration units that participate in the calculation and the direction of the data flow |
| int CoprocessorStartCal () | Start the calculation |
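The library interfaces appear to bundle several coprocessor instructions: the arguments of CfgLoadDataLoc, for example, line up with the operands of acc.cfg.ldl (Funct7 = 4) followed by acc.cfg.ldo (Funct7 = 7). The sketch below shows one way such a wrapper could be organized. It is not the authors' implementation. The `acc_issue` recorder stands in for the real instruction issue, `pack16` assumes that "A|B" in the instruction table means A in the upper and B in the lower 16 bits of a register, and the return value 0 for success is also an assumption.

```c
#include <assert.h>
#include <stdint.h>

/* Funct7 values taken from the instruction table. */
enum { FUNCT7_CFG_LDL = 4, FUNCT7_CFG_LDO = 7 };

/* Hypothetical record of one issued coprocessor instruction. */
typedef struct {
    uint32_t funct7, rs1, rs2;
} AccIssue;

/* Hypothetical stand-in for the hardware: logs what would be issued. */
AccIssue issue_log[8];
int issue_count = 0;

void acc_issue(uint32_t funct7, uint32_t rs1, uint32_t rs2)
{
    assert(issue_count < 8);
    issue_log[issue_count].funct7 = funct7;
    issue_log[issue_count].rs1 = rs1;
    issue_log[issue_count].rs2 = rs2;
    issue_count++;
}

/* ASSUMPTION: "A|B" packs A into bits 31:16 and B into bits 15:0. */
uint32_t pack16(uint32_t hi, uint32_t lo)
{
    assert(hi <= 0xFFFFu && lo <= 0xFFFFu);
    return (hi << 16) | lo;
}

/* Sketch of the wrapper: one acc.cfg.ldl followed by one acc.cfg.ldo.
 * Returning 0 for success is an assumption. */
int CfgLoadDataLoc(int Location, unsigned int SrcDataBaseAddress,
                   unsigned int Offset1, unsigned int Offset2,
                   unsigned int Offset3, unsigned int Offset4)
{
    acc_issue(FUNCT7_CFG_LDL, SrcDataBaseAddress, (uint32_t)Location);
    acc_issue(FUNCT7_CFG_LDO, pack16(Offset1, Offset2),
              pack16(Offset3, Offset4));
    return 0;
}
```

CfgStoreDataLoc and CfgAddDataLoc take the same argument pattern, so they could plausibly follow the same two-instruction structure with acc.cfg.sdl/acc.cfg.sdo and acc.cfg.adl/acc.cfg.ado.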

| Layer | Calculation Formula | Explanation |
|---|---|---|
| C1 | Convolution and ReLU | |
| S2 | Pooling | |
| C3 | Convolution, matrix addition, and ReLU | |
| S4 | Pooling | |
| S5 | Expand | |
| S6 | Fully connected | |

| Module | Slice LUTs | Slice Registers | DSPs |
|---|---|---|---|
| E203 kernel | 4338 | 1936 | 0 |
| Coprocessor | 8534 | 7023 | 21 |
| CLINT | 33 | 132 | 0 |
| PLIC | 464 | 411 | 0 |
| DEBUG | 235 | 568 | 0 |
| Peripherals | 3199 | 2281 | 0 |
| Others | 1092 | 1325 | 0 |
| Total | 17,913 | 13,676 | 21 |

| Algorithm | RV32IM Instruction | Coprocessor Instruction |
|---|---|---|
| Convolution (4 × 4 matrix and 3 × 3 convolution kernel) | 12,982 | 2070 |
| Pooling (6 × 6 matrix and 2 × 2 filter) | 2452 | 1706 |
| ReLU (6 × 6 matrix) | 1249 | 1101 |
| Matrix Addition (6 × 6 matrix) | 2755 | 2005 |
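The relative gain for each algorithm can be read from the table as the ratio of the two counts. A minimal sketch of that arithmetic follows; the function name is illustrative.

```c
#include <assert.h>

/* Ratio of the RV32IM count to the coprocessor count for one row of
 * the table; values above 1 mean the coprocessor version needs less. */
double count_ratio(unsigned rv32im, unsigned coprocessor)
{
    assert(coprocessor > 0);
    return (double)rv32im / (double)coprocessor;
}
```

Applied to the four rows, the ratios come out at roughly 6.27 for convolution, 1.44 for pooling, 1.13 for ReLU, and 1.37 for matrix addition.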

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, N.; Jiang, T.; Zhang, L.; Zhou, F.; Ge, F. A Reconfigurable Convolutional Neural Network-Accelerated Coprocessor Based on RISC-V Instruction Set. Electronics 2020, 9, 1005. https://doi.org/10.3390/electronics9061005
