Resource Partitioning and Application Scheduling with Module Merging on Dynamically and Partially Reconfigurable FPGAs

Abstract

1. Introduction

- We jointly solve the problems of resource partitioning and application scheduling while taking many physical constraints into account;

- We introduce a module merging technique to improve execution efficiency;

- We propose two algorithms based on simulated annealing that obtain near-optimal solutions in a short time;

- The effectiveness of our proposed algorithms is evaluated on a number of benchmarks abstracted from practical applications and compared with three approaches.

2. Related Work

3. Problem Formulation

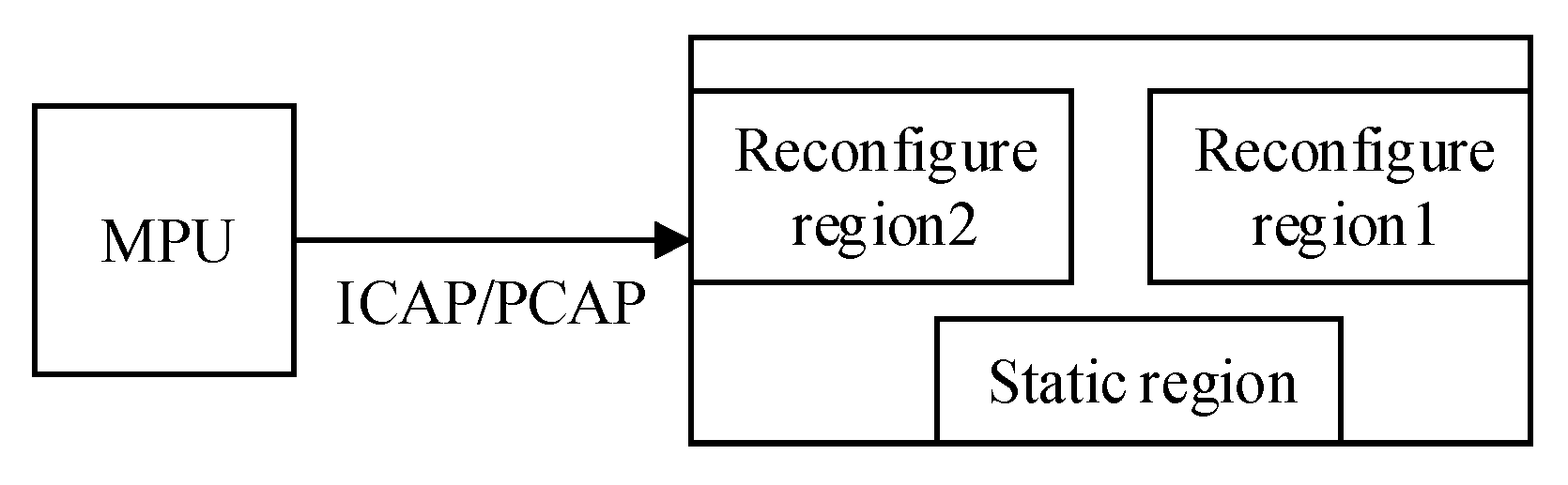

3.1. Platform Model

3.2. Application Model

3.3. The Partitioning and Scheduling Problem

3.4. Insights into the Studied Problem

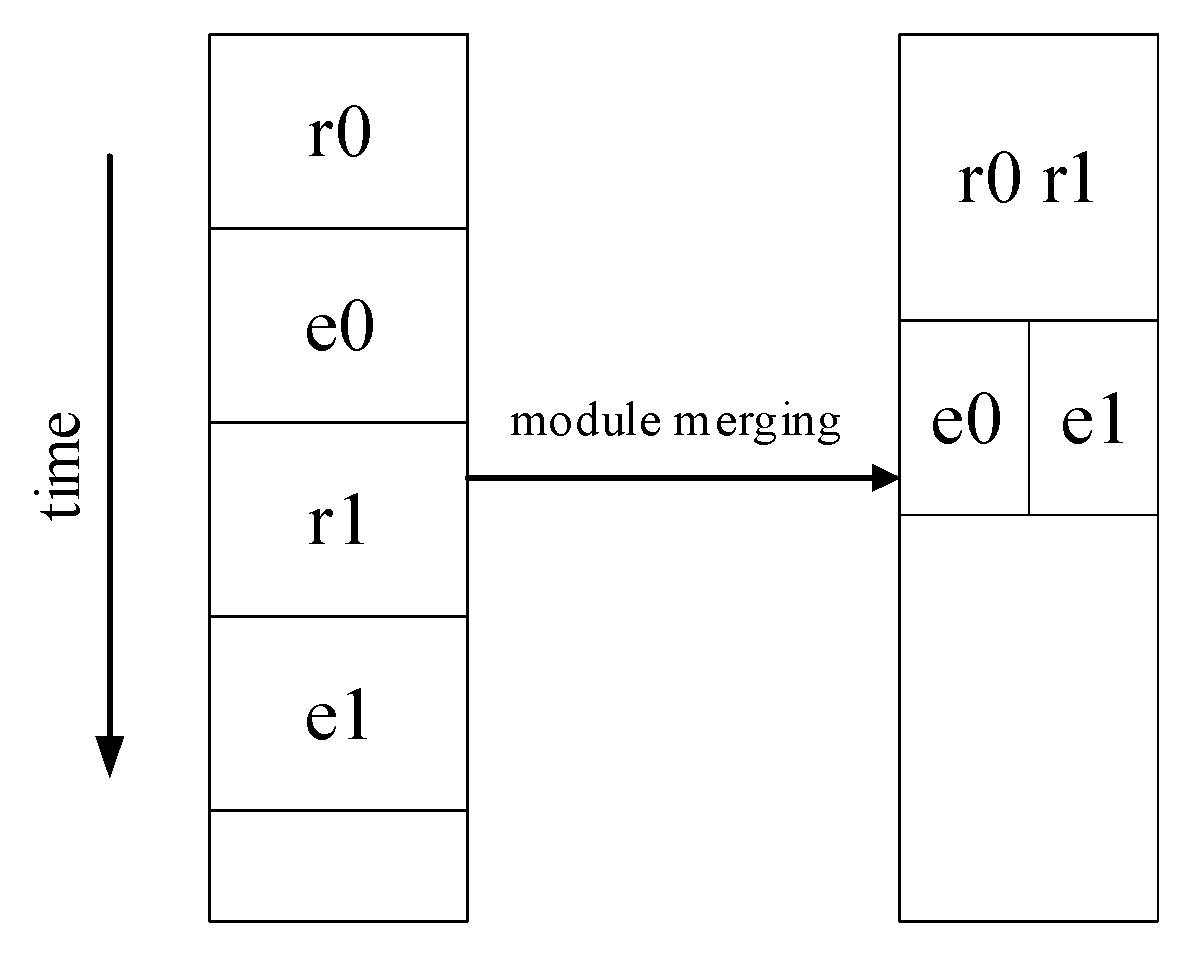

3.5. Module Merging

4. The Reconfiguration-Dependency Non-Consistent Algorithm Based on Simulated Annealing (RDNC-SA)

4.1. Structure of the Simulated Annealing Algorithm

- A feasible solution is generated randomly as the initial solution.

- The current solution is then disturbed to search for a new solution in the solution space. If the new solution is feasible, it yields both the reconfiguration order and the task-to-region mapping; the number of regions and their resource types are determined by this mapping.

- Once a feasible solution is obtained, its objective function value f is calculated.

- The difference between the objective values of the new solution and the current one is calculated.

- The Metropolis criterion is used to decide whether to accept the new solution.

- The algorithm then checks whether the prescribed number of inner-loop iterations has been reached.

- When the inner loop ends, the algorithm checks whether the termination condition is met. If not, it lowers the temperature and continues to generate new solutions; otherwise, it returns the best solution found.
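The steps above follow the standard simulated annealing loop. The Python sketch below shows that generic structure; it is not the authors' RDNC-SA. The geometric cooling factor `alpha`, the parameter defaults, and the convention that the hypothetical `perturb` callback returns `None` for an infeasible neighbor are illustrative assumptions. The Metropolis criterion is written in its usual minimization form: an improvement is always accepted, and a worse solution is accepted with probability exp(−Δ/T).

```python
import math
import random
from typing import Callable, Optional, Tuple, TypeVar

S = TypeVar("S")


def metropolis_accept(delta: float, temperature: float, rng: random.Random) -> bool:
    """Metropolis criterion for minimization: always accept an improvement
    (delta <= 0); otherwise accept with probability exp(-delta / T)."""
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)


def simulated_annealing(
    initial: S,
    objective: Callable[[S], float],
    perturb: Callable[[S, random.Random], Optional[S]],
    t_init: float = 100.0,
    t_min: float = 1e-3,
    alpha: float = 0.95,
    inner_iters: int = 50,
    seed: int = 0,
) -> Tuple[S, float]:
    """Generic SA loop (hypothetical parameters); perturb returns None when
    the disturbed solution is infeasible."""
    rng = random.Random(seed)
    current, f_cur = initial, objective(initial)
    best, f_best = current, f_cur
    t = t_init
    while t > t_min:                        # outer loop: termination condition
        for _ in range(inner_iters):        # inner loop: fixed iteration count
            candidate = perturb(current, rng)
            if candidate is None:           # infeasible neighbor: disturb again
                continue
            f_new = objective(candidate)
            delta = f_new - f_cur           # objective difference, new minus current
            if metropolis_accept(delta, t, rng):
                current, f_cur = candidate, f_new
                if f_cur < f_best:          # remember the best solution seen so far
                    best, f_best = current, f_cur
        t *= alpha                          # geometric cooling
    return best, f_best
```

As a toy usage example, `simulated_annealing(0, lambda x: (x - 3) ** 2, lambda x, rng: x + rng.choice((-1, 1)))` minimizes a one-dimensional quadratic over the integers with a ±1 step. In the scheduling setting, the solution type would instead be the (reconfiguration order, region mapping) encoding and the objective would be the scheduling length.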

4.2. Solution Structure

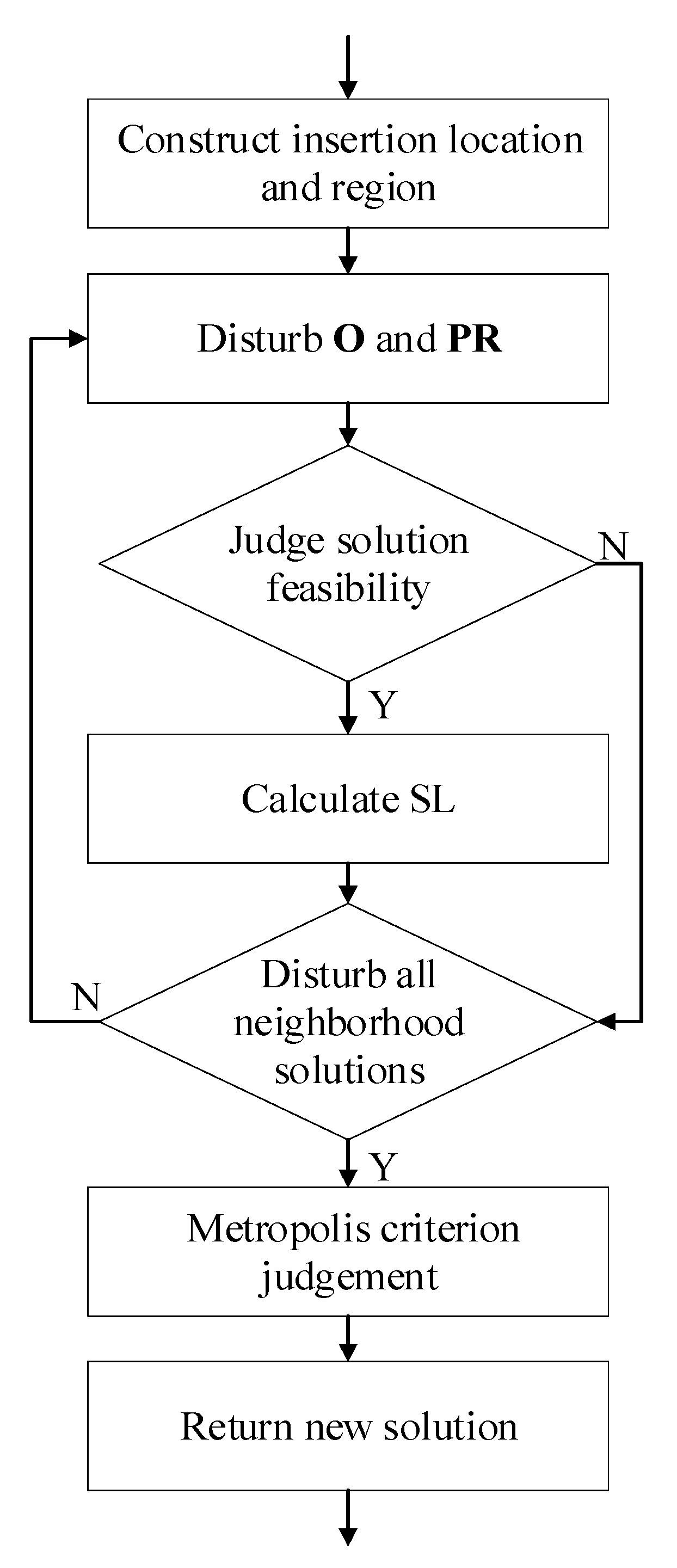

4.3. Disturbance Method with Module Merging

4.4. Solution Feasibility Evaluation

4.4.1. Resource Conflicting Condition

4.4.2. Execution Infeasible Condition

4.5. Scheduling Length Calculation

Algorithm 1: Calculate scheduling length
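The body of Algorithm 1 is not reproduced here. As a hedged illustration only, the Python sketch below computes a scheduling length from a reconfiguration order O and a task-to-region mapping PR under a simplified model that is our assumption, not the paper's: a single reconfiguration port serves reconfigurations strictly in the order O; every task needs its own reconfiguration (module merging and bitstream reuse are ignored); a region can be reconfigured only after the previous task placed in it has finished; and a task starts once its bitstream is loaded and all of its predecessors have finished. A cyclic wait, such as a successor loaded into a region before its predecessor so that the predecessor waits for the successor to free the region, is reported as infeasible. The function `schedule_length` and all parameter names are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


class _CyclicWait(Exception):
    """Raised when the (O, PR) pair makes two events wait on each other."""


def schedule_length(
    order: List[str],                 # stands in for O (reconfiguration order)
    region_of: Dict[str, int],        # stands in for PR (task -> region)
    exec_time: Dict[str, float],
    reconf_time: Dict[str, float],
    preds: Dict[str, List[str]],      # task -> list of predecessor tasks
) -> Optional[float]:
    """Hypothetical SL evaluator; returns None if the solution is infeasible."""
    if not order:
        return 0.0
    # Previous task on the shared reconfiguration port and in the same region, both taken from O.
    prev_port: Dict[str, Optional[str]] = {}
    prev_region: Dict[str, Optional[str]] = {}
    last_in_region: Dict[int, str] = {}
    for i, t in enumerate(order):
        prev_port[t] = order[i - 1] if i > 0 else None
        prev_region[t] = last_in_region.get(region_of[t])
        last_in_region[region_of[t]] = t

    memo: Dict[Tuple[str, str], float] = {}
    visiting: Set[Tuple[str, str]] = set()

    def time_of(kind: str, t: Optional[str]) -> float:
        """kind is 'reconf_end' or 'finish'; a missing task contributes time 0."""
        if t is None:
            return 0.0
        key = (kind, t)
        if key in memo:
            return memo[key]
        if key in visiting:               # the event depends on itself: deadlock
            raise _CyclicWait(key)
        visiting.add(key)
        if kind == "reconf_end":
            # The port must be free and the region's previous task must have finished.
            ready = max(time_of("reconf_end", prev_port[t]), time_of("finish", prev_region[t]))
            result = ready + reconf_time[t]
        else:
            # The bitstream must be loaded and all predecessors must have finished.
            ready = max([time_of("reconf_end", t)] + [time_of("finish", p) for p in preds.get(t, [])])
            result = ready + exec_time[t]
        visiting.discard(key)
        memo[key] = result
        return result

    try:
        return max(time_of("finish", t) for t in order)
    except _CyclicWait:
        return None
```

Because finish times are resolved recursively rather than in list order, this sketch also accepts reconfiguration orders that are not consistent with task dependencies, as long as they do not create a cyclic wait.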

4.6. New Solution Based on Neighborhood Solution Set

- Before the disturbance, a set of insertion locations in O is selected for the task being moved. The ROVs corresponding to these locations are distinct (non-repeating) values. Similarly, a set of distinct insertion regions is selected in PR.

- The selected locations and regions are combined into a set of unique (location, region) pairs, denoted Pair. For each element of Pair, the task is inserted into O and PR at the corresponding location and region, and each such disturbance forms one candidate solution. Every candidate is checked for feasibility, the scheduling length (SL) of each feasible candidate is calculated, and the feasible solutions are saved together with their SL.

- After all neighborhood solutions have been evaluated, the SL of each solution is compared with the minimum SL among them, and one solution is selected as the return value according to the Metropolis criterion. Note that this selection is distinct from the main SA process: it is only a strategy for choosing which neighborhood solution to return. After a solution is returned, the main SA process still applies the Metropolis criterion to decide whether to accept it as the current solution.
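One possible reading of this neighborhood procedure is sketched below in Python. The assumptions are ours: a solution is encoded as a list `order` (standing in for O) plus a dict `region_of` (standing in for PR); the SL evaluator `sl` is passed in and returns `None` for infeasible solutions; and the Metropolis-based selection is implemented by visiting the feasible candidates in random order and returning the first one accepted with probability exp(−(SL − SL_min)/T), so the minimum-SL candidate is always accepted if it is reached. The function `neighborhood_move` and its parameters are hypothetical names.

```python
import math
import random
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Order = List[str]              # stands in for O (reconfiguration order)
Regions = Dict[str, int]       # stands in for PR (task -> region)


def neighborhood_move(
    order: Order,
    region_of: Regions,
    task: str,
    n_positions: int,
    regions: List[int],
    n_regions: int,
    sl: Callable[[Order, Regions], Optional[float]],
    temperature: float,
    rng: random.Random,
) -> Optional[Tuple[Tuple[Order, Regions], float]]:
    """Re-insert `task` at distinct positions/regions and select one feasible candidate."""
    rest = [t for t in order if t != task]
    # Distinct insertion positions in O and distinct target regions.
    positions = rng.sample(range(len(rest) + 1), min(n_positions, len(rest) + 1))
    targets = rng.sample(regions, min(n_regions, len(regions)))
    feasible = []
    for pos, reg in product(positions, targets):      # every unique (position, region) pair
        new_order = rest[:pos] + [task] + rest[pos:]
        new_regions = dict(region_of)
        new_regions[task] = reg
        length = sl(new_order, new_regions)
        if length is not None:                        # save feasible solutions with their SL
            feasible.append(((new_order, new_regions), length))
    if not feasible:
        return None
    sl_min = min(length for _, length in feasible)
    rng.shuffle(feasible)
    for cand, length in feasible:
        # Metropolis-style selection against the best SL in the neighborhood;
        # the minimum-SL candidate has probability exp(0) = 1, so the loop always returns.
        if rng.random() < math.exp(-(length - sl_min) / temperature):
            return cand, length
    return None  # not reached: the minimum-SL candidate is always accepted
```

A higher `temperature` makes it more likely that a neighbor other than the minimum-SL one is returned, which keeps the search diversified before the main SA loop applies its own acceptance test.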

Algorithm 2: Reconfiguration-dependency non-consistent algorithm (RDNC-SA)

5. The Reconfiguration-Dependency Consistent Algorithm Based on Simulated Annealing (RDC-SA)

Algorithm 3: Reconfiguration-dependency consistent algorithm based on simulated annealing (RDC-SA)

6. Experimental Results

6.1. Target Platform Configuration

6.2. Parameter Setup

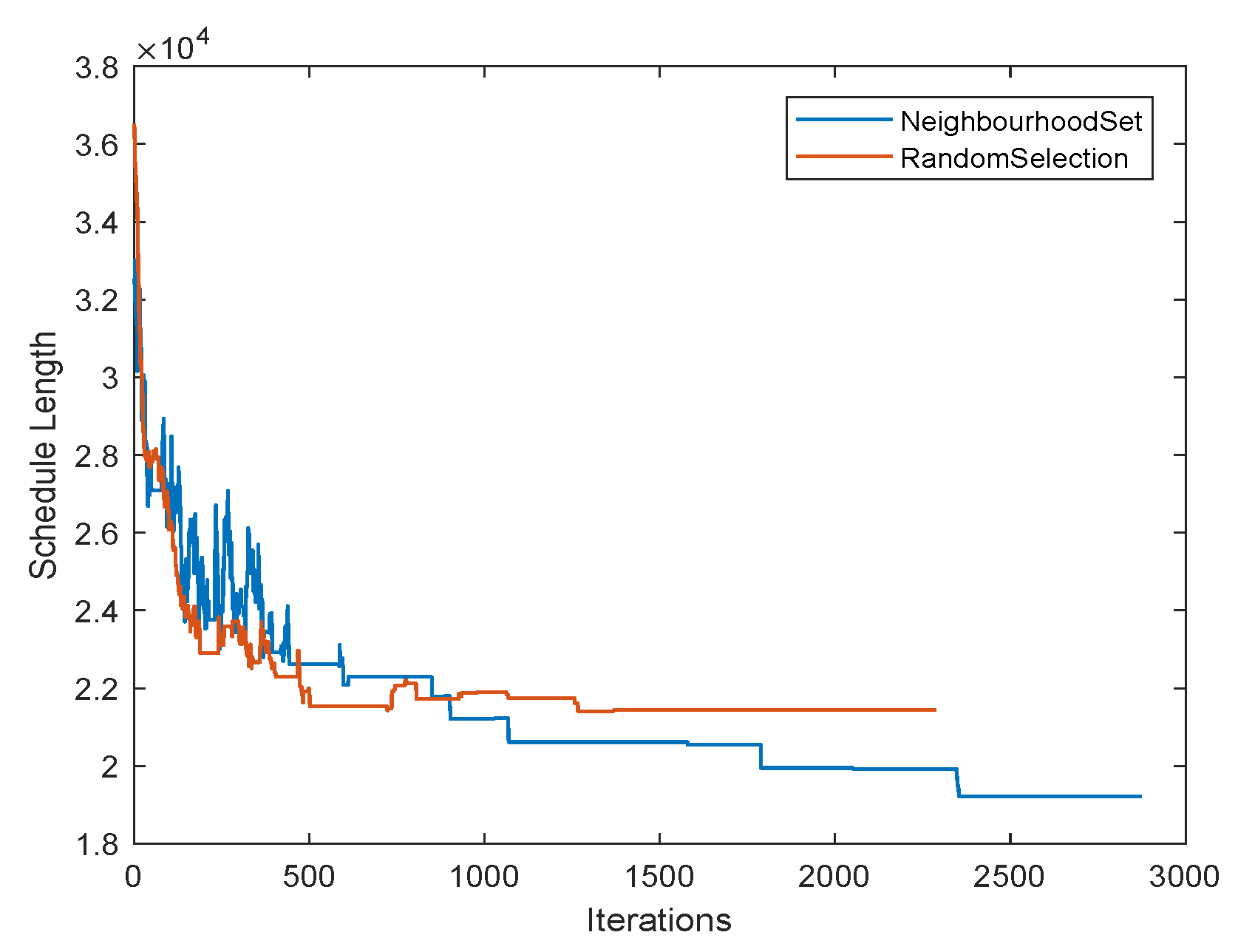

6.3. Performance Analysis of Neighborhood Solution Set

6.4. Performance Analysis of Different Algorithms

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Task Name | ET | CLB Num | PN | CN |
|---|---|---|---|---|
|  | 243 | 231 | - | {} |
|  | 175 | 532 | - | {,} |
|  | 154 | 287 | - | {} |
|  | 146 | 357 | {,} | {,} |
|  | 139 | 532 | {,} | {} |
|  | 199 | 91 | {} | {} |
|  | 256 | 406 | {,} | {} |
|  | 17 | 14 | {,} | {} |

| App Name | TaskNum | MILP Time | RDNC-SA PIR vs. MILP | RDC-SA PIR vs. MILP | IS-k Time | RDNC-SA PIR vs. IS-k | RDC-SA PIR vs. IS-k | ACO Time | RDNC-SA PIR vs. ACO | RDC-SA PIR vs. ACO | RDNC-SA Time | RDC-SA Time |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JPEG encoder | 8 | 0.39 | −3% | −3% | 0.36 | −3% | −3% | 4.01 | −29% | −29% | 31.96 | 0.85 |
| Parallel Gauss Elimination | 12 | 29.18 | −12% | −10% | 2.10 | −13% | −11% | 4.66 | −43% | −42% | 54.44 | 2.4 |
| LU decomposition | 14 |  | −8% | −8% | 5.76 | −8% | −8% | 5.30 | −34% | −34% | 46.2 | 2.29 |
| Parallel Tiled QR factorization | 14 | 1109.05 | −10% | −10% | 10.12 | −14% | −14% | 3.08 | −41% | −41% | 55.34 | 1.84 |
| Gauss Elimination | 14 |  | −6% | −9% | 7.42 | −6% | −9% | 4.38 | −33% | −36% | 75.39 | 2.88 |
| Channel Equalizer | 14 | 102.88 | −6% | −9% | 3.15 | −6% | −9% | 5.19 | −37% | −39% | 96.94 | 1.53 |
| Gauss Jordan | 15 |  | −8% | −6% | 40.43 | −17% | −15% | 4.42 | −35% | −34% | 63.03 | 2.69 |
| Quadratic Equation Solver | 15 |  | −10% | −15% | 4.49 | −11% | −16% | 3.91 | −41% | −44% | 67.73 | 0.7 |
| TD-SCDMA | 16 |  | −12% | −12% | 32.24 | −13% | −13% | 3.64 | −42% | −42% | 46.64 | 2.34 |
| FFT | 16 |  | −5% | −4% | 115.39 | −6% | −5% | 3.88 | −22% | −21% | 124.96 | 2.6 |
| Laplace Equation | 16 |  | −9% | −7% | 16.73 | −11% | −9% | 4.22 | −33% | −32% | 97.17 | 2.34 |
| Parallel MVA | 16 |  | −14% | −10% | 56.64 | −18% | −15% | 4.38 | −33% | −30% | 68.21 | 2.96 |
| Ferret | 20 |  | −12% | 0% | 50.60 | −14% | −3% | 4.02 | −45% | −38% | 124.08 | 1.23 |
| Cyber Shake | 20 |  | −13% | −12% | 1475.25 | −14% | −13% | 4.94 | −44% | −43% | 231.61 | 4.98 |
| Epigenomics | 20 |  | −10% | −10% | 117.74 | −15% | −15% | 4.16 | −41% | −41% | 160.32 | 2.43 |
| Montage | 20 |  | −10% | −10% | 43.83 | −11% | −11% | 4.26 | −43% | −43% | 88.79 | 2.27 |
| LIGO | 22 |  | −12% | −10% | 40.90 | −12% | −10% | 4.82 | −43% | −42% | 143.54 | 4 |
| WLAN 802.11a Receiver | 24 |  | −12% | −14% | 70.63 | −12% | −14% | 5.06 | −33% | −34% | 102.73 | 1.59 |
| MP3 Decoder Block Parallelism | 27 |  | −7% | −9% | 131.32 | −6% | −8% | 6.41 | −34% | −35% | 222.84 | 4.81 |
| SIPHT | 31 |  | −14% | −12% |  | −15% | −13% | 6.25 | −37% | −35% | 255.16 | 7.48 |
| Molecular Dynamics | 41 |  | −15% | −13% |  | −14% | −13% | 9.54 | −32% | −32% | 277.55 | 8.89 |
| Modem | 50 |  | −30% | −30% | 268.30 | −6% | −5% | 9.87 | −42% | −41% | 372.14 | 6.86 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Tang, Q.; Guo, B.; Wei, J.-B.; Wang, L. Resource Partitioning and Application Scheduling with Module Merging on Dynamically and Partially Reconfigurable FPGAs. Electronics 2020, 9, 1461. https://doi.org/10.3390/electronics9091461
