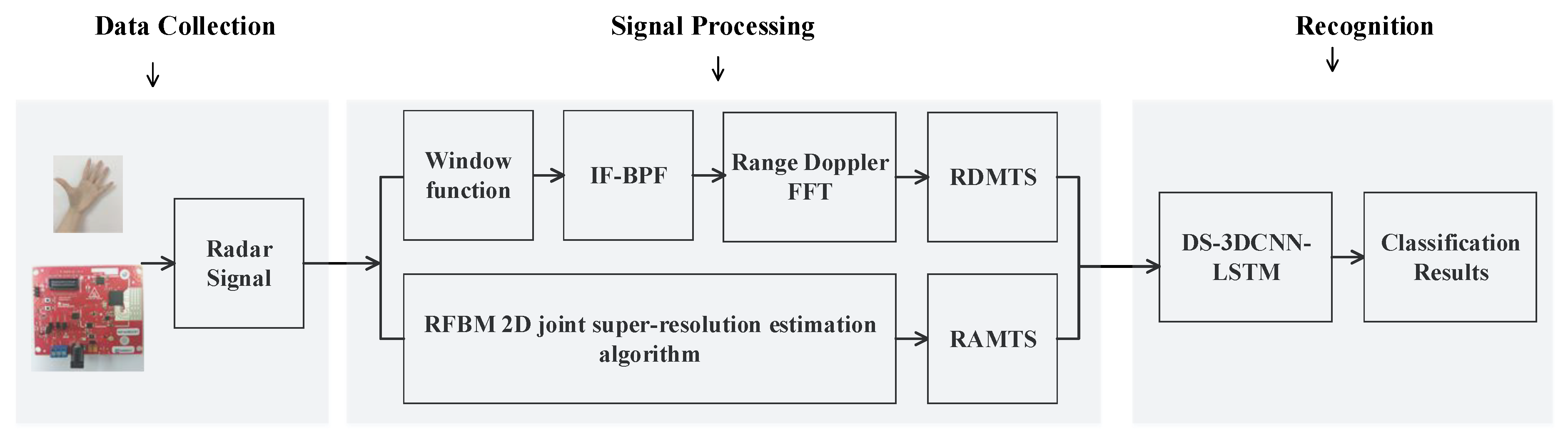

Continuous Gesture Recognition Based on Time Sequence Fusion Using MIMO Radar Sensor and Deep Learning

Abstract

1. Introduction

- (1)

- The development of a new system for hand-gesture recognition based on FMCW MIMO radar and deep learning.

- (2)

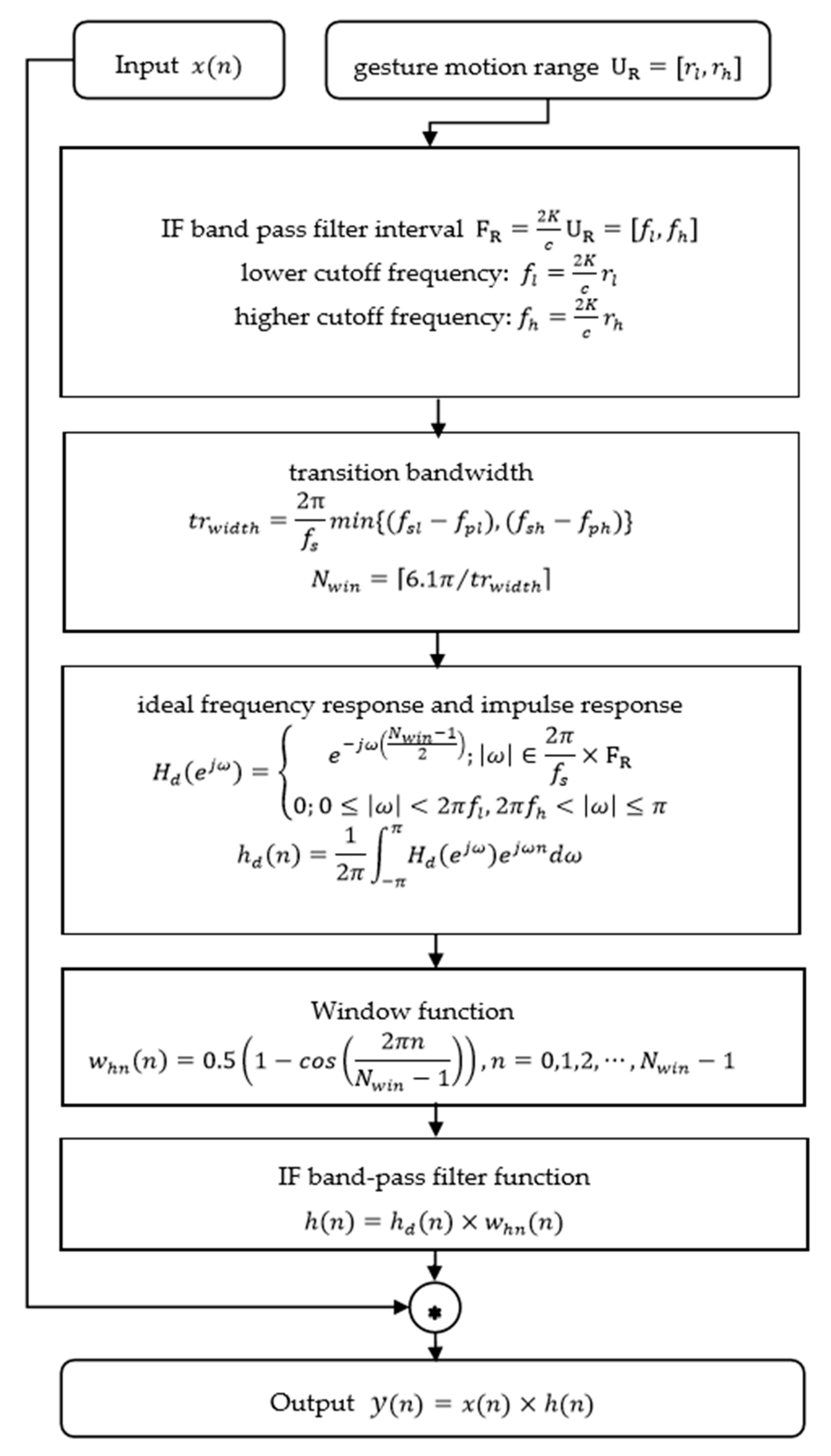

- Designing a pre-processing algorithm based on windowed Range–Doppler-FFT and intermediate-frequency signal band-pass-filter (IF-BPF) to alleviate spectrum leakage and suppress clutters in RDM.

- (3)

- Proposing a RFBM 2D joint super-resolution estimation algorithm to generate RAM for joint estimation of range and azimuth.

- (4)

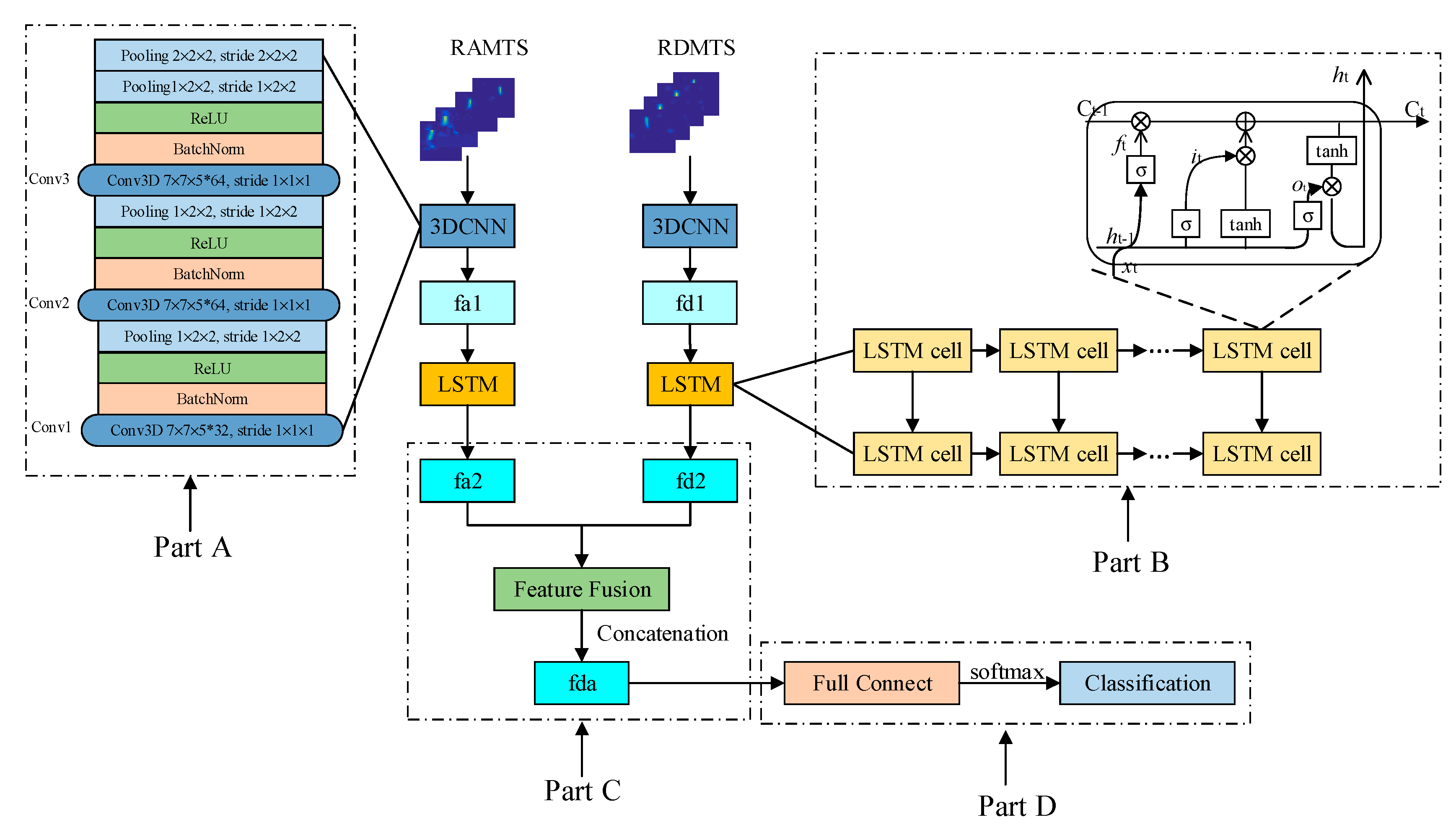

- Designing a DS-3DCNN-LSTM network to extract and fuse RDMTS and RAMTS to obtain high recognition accuracy of complex gestures.
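
The windowed Range–Doppler FFT named in contribution (2) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the Hann window, the function name `range_doppler_map`, and the synthetic single-target frame are all hypothetical, and the IF band-pass filter is not modeled. The frame size of 128 chirps by 512 samples follows the radar parameter table.

```python
import numpy as np

def range_doppler_map(frame, window=np.hanning):
    """Return a Range-Doppler map in dB for one antenna.

    frame: array of shape (n_chirps, n_samples), slow time x fast time.
    window: window generator; Hann is an assumption, not taken from the paper.
    """
    n_chirps, n_samples = frame.shape
    # Taper both dimensions before the FFTs to reduce spectrum leakage.
    w_fast = window(n_samples)[np.newaxis, :]
    w_slow = window(n_chirps)[:, np.newaxis]
    tapered = frame * w_fast * w_slow
    # FFT along fast time gives range bins.
    range_fft = np.fft.fft(tapered, axis=1)
    # FFT along slow time gives Doppler bins; shift zero Doppler to the centre.
    rd = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    return 20.0 * np.log10(np.abs(rd) + 1e-12)

# Synthetic frame with one target at range bin 40 and Doppler bin 10.
n_chirps, n_samples = 128, 512
range_bin, doppler_bin = 40, 10
n = np.arange(n_samples)[np.newaxis, :]
m = np.arange(n_chirps)[:, np.newaxis]
frame = np.exp(2j * np.pi * (range_bin * n / n_samples + doppler_bin * m / n_chirps))

rdm = range_doppler_map(frame)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(rdm), rdm.shape))
print(peak)  # (Doppler index after fftshift, range index)
```

Because of the `fftshift`, zero Doppler sits at row `n_chirps // 2`, so the synthetic target appears at Doppler row `64 + 10`.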

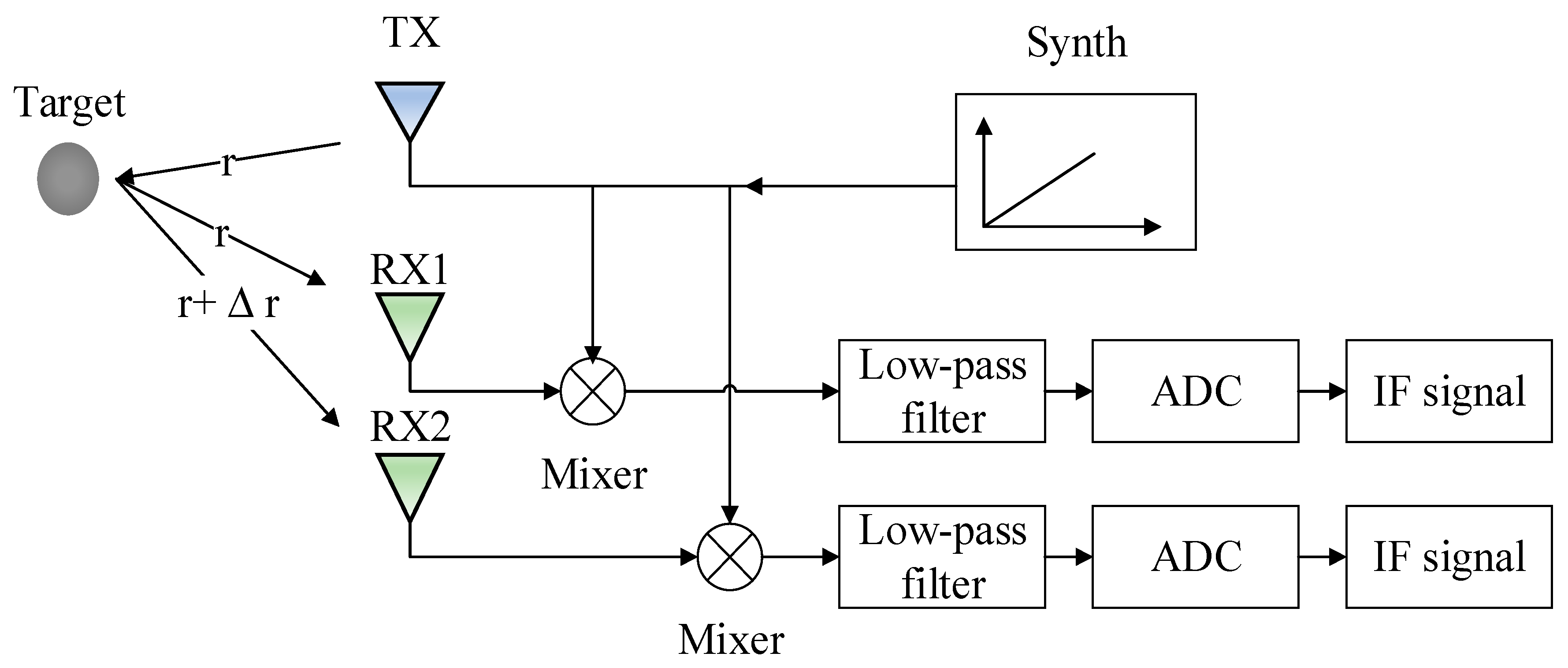

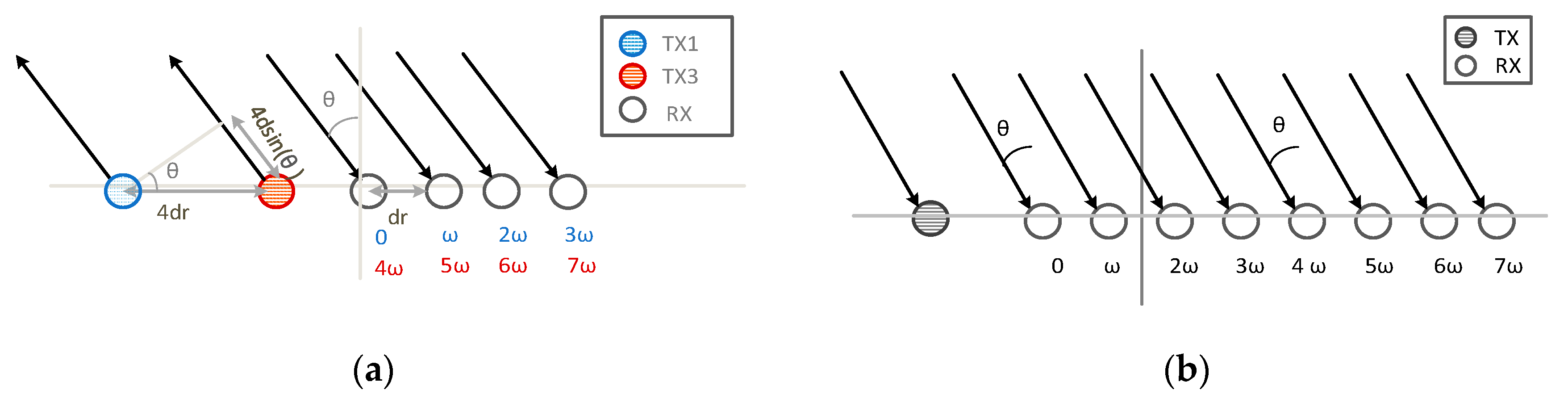

2. FMCW MIMO Radar

3. Signal Processing

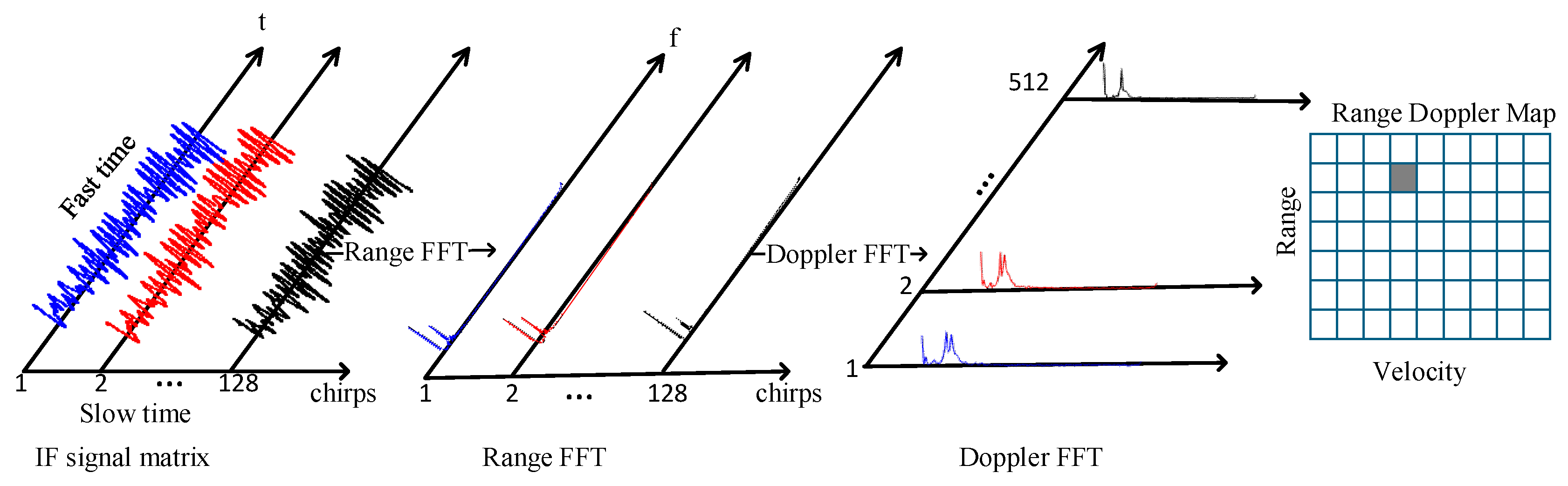

3.1. Generate RDM

3.1.1. Generate Traditional RDM

3.1.2. Window Functions for Spectrum Leakage Suppression

3.1.3. Designed IF Band-Pass-Filter (IF-BPF) for Clutter Suppression

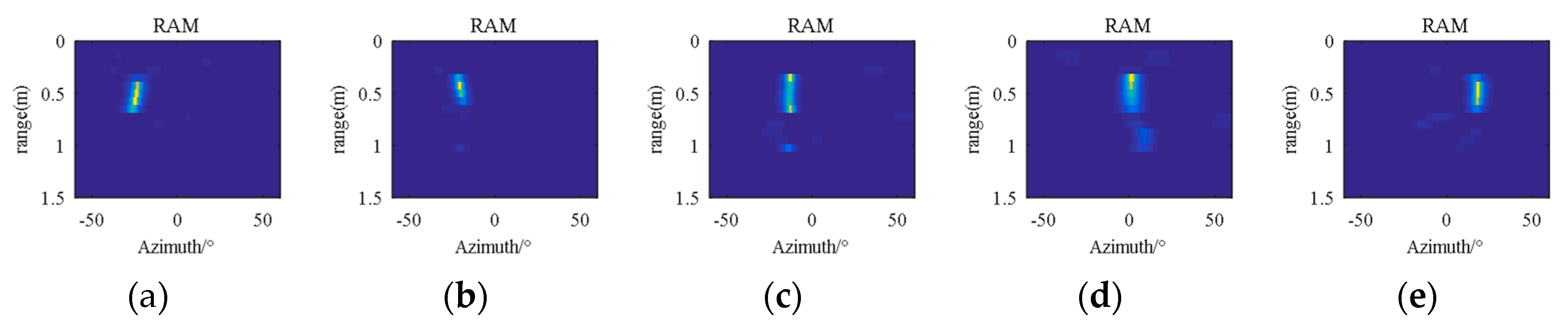

3.2. Generate RAM

| Algorithm 1 RFBM joint super-resolution estimation algorithm |
|---|
| Input: the 3-D frame signal matrix; the first chirp of the frame is used. |
| Initialization: set the iteration counter and the number of iterations. |
| (1) Matrix rearrangement. Rearrange the 3-D matrix into a 2-D matrix that holds the sampling points of one chirp for each virtual antenna. |
| (2) FFT. Conduct a range FFT along the fast-time dimension to obtain the range-domain matrix. |
| (3) Select the column of the range-domain matrix for the current iteration. |
| (4) Calculate the covariance matrix of the selected column. |
| (5) Obtain the noise subspace. Perform the singular value decomposition of the covariance matrix, which yields the signal subspace and the noise subspace. |
| (6) Determine the steering vectors and the angular search space, which is bounded by a lower and an upper search angle. |
| (7) Calculate the MUSIC spatial spectrum. |
| (8) Iteration. Repeat steps (3) to (7) until the specified number of iterations is reached. |
| Output: the spatial spectra of all iterations. Together they form a 2D range-azimuth space, called the Range Azimuth Map (RAM). |
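
The steps of Algorithm 1 can be illustrated with a short NumPy sketch. It is a hedged reading of the algorithm rather than the authors' code: the function name `range_azimuth_map`, the uniform linear array with half-wavelength spacing, the assumption of one source per range bin, the eight virtual antennas, and the synthetic test signal are all assumptions made for illustration.

```python
import numpy as np

def range_azimuth_map(chirp, angles_deg, n_sources=1, spacing_wl=0.5):
    """MUSIC pseudo-spectrum per range bin.

    chirp: (n_samples, n_antennas) samples of one chirp across the virtual array.
    angles_deg: azimuth search grid in degrees.
    n_sources, spacing_wl: assumed source count and element spacing in wavelengths.
    """
    n_samples, n_ant = chirp.shape
    range_fft = np.fft.fft(chirp, axis=0)               # step (2): range FFT
    theta = np.deg2rad(np.asarray(angles_deg, dtype=float))
    k = np.arange(n_ant)[:, np.newaxis]
    # Step (6): steering matrix, shape (n_antennas, n_angles).
    steering = np.exp(2j * np.pi * spacing_wl * k * np.sin(theta)[np.newaxis, :])
    ram = np.empty((n_samples, theta.size))
    for r in range(n_samples):                           # step (8): loop over range bins
        x = range_fft[r, :][:, np.newaxis]               # step (3): antenna snapshot
        cov = x @ x.conj().T                             # step (4): covariance matrix
        u, _, _ = np.linalg.svd(cov)                     # step (5): SVD
        noise = u[:, n_sources:]                         # noise subspace
        proj = noise.conj().T @ steering
        ram[r, :] = 1.0 / (np.sum(np.abs(proj) ** 2, axis=0) + 1e-12)  # step (7)
    return ram

# Synthetic target at range bin 30 and 20 degrees azimuth, with weak noise.
rng = np.random.default_rng(0)
n_samples, n_ant = 256, 8
true_bin, true_angle = 30, 20.0
n = np.arange(n_samples)[:, np.newaxis]
k = np.arange(n_ant)[np.newaxis, :]
chirp = (np.exp(2j * np.pi * true_bin * n / n_samples)
         * np.exp(2j * np.pi * 0.5 * k * np.sin(np.deg2rad(true_angle))))
chirp = chirp + 0.01 * (rng.standard_normal(chirp.shape)
                        + 1j * rng.standard_normal(chirp.shape))

angles = np.arange(-60, 61)
ram = range_azimuth_map(chirp, angles)
est_angle = int(angles[np.argmax(ram[true_bin])])
print(est_angle)
```

The MUSIC pseudo-spectrum does not encode signal power, so rows that contain only noise can still show sharp peaks. The sketch therefore reads the azimuth estimate from the known target range bin instead of taking a global maximum over the map.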

4. Dual Stream 3DCNN-LSTM Networks

4.1. 3DCNN

4.2. LSTM

5. Experiments and Result Analysis

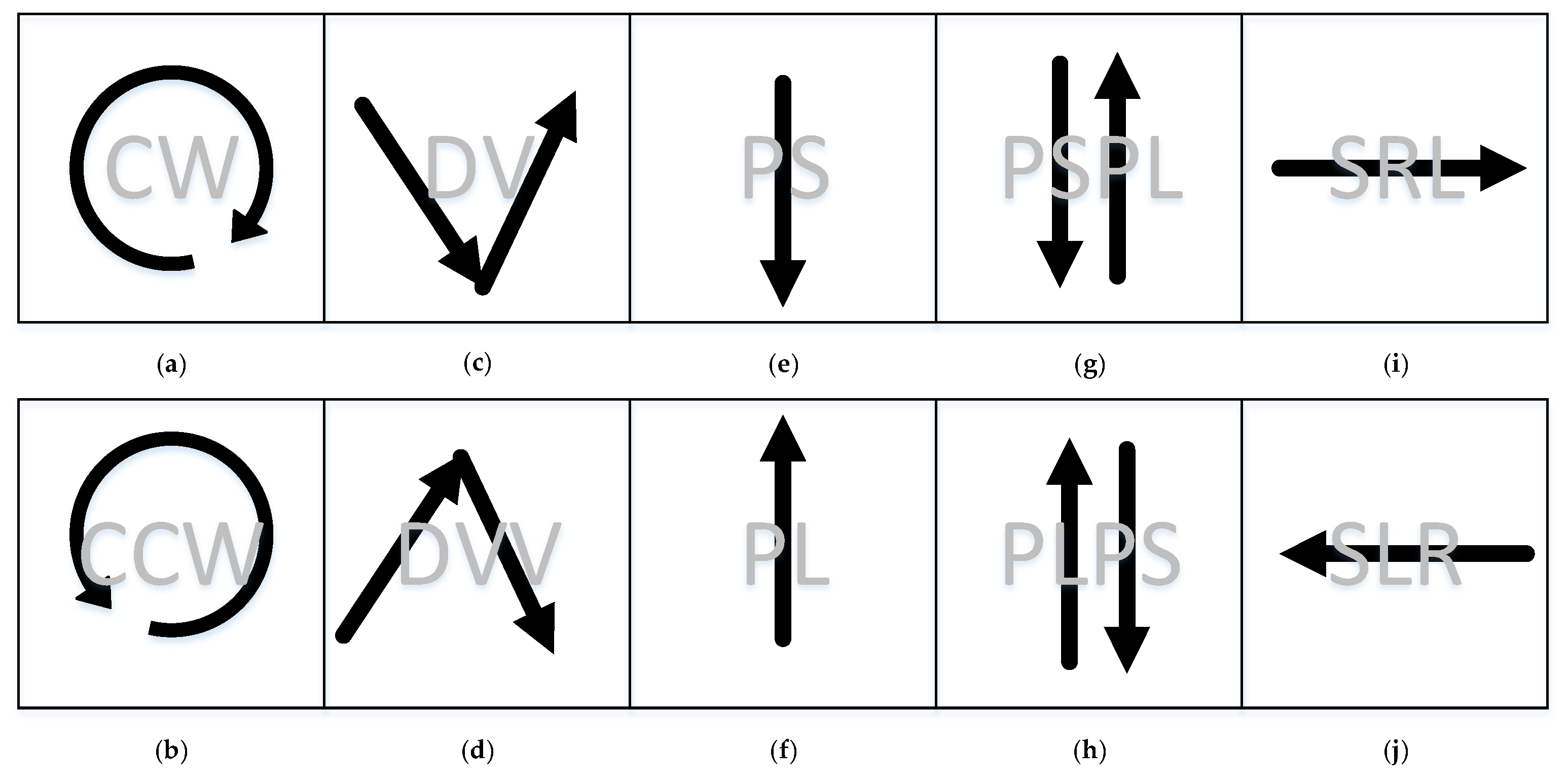

5.1. Experimental Setup and Data Collection

5.2. Signal Processing Results and Analysis

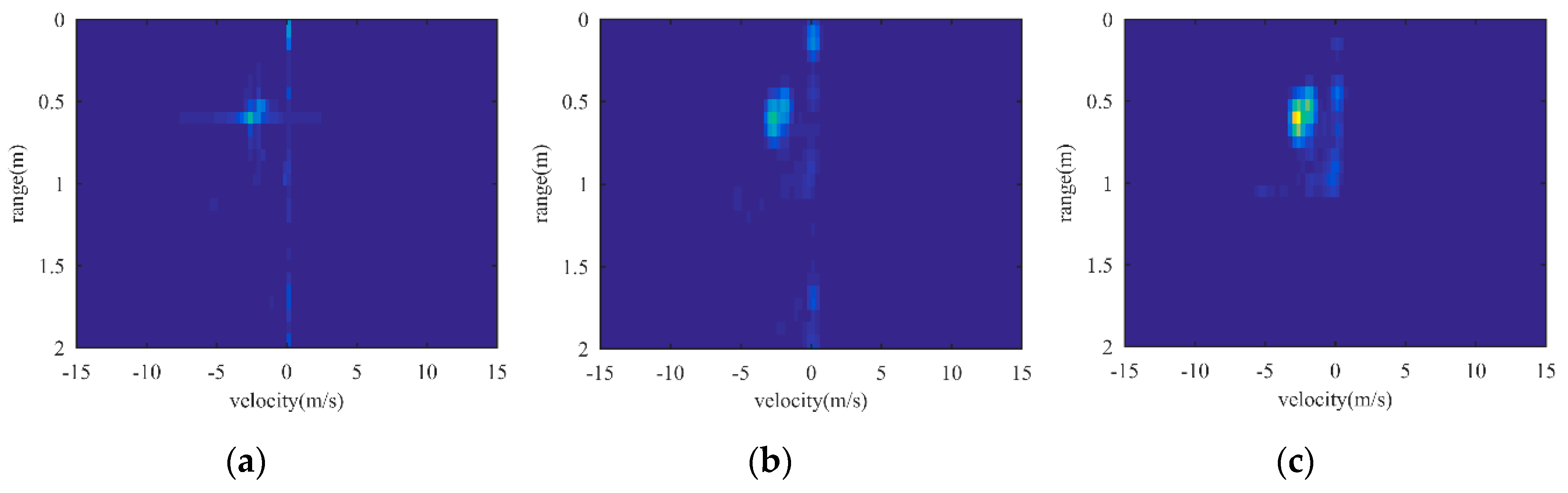

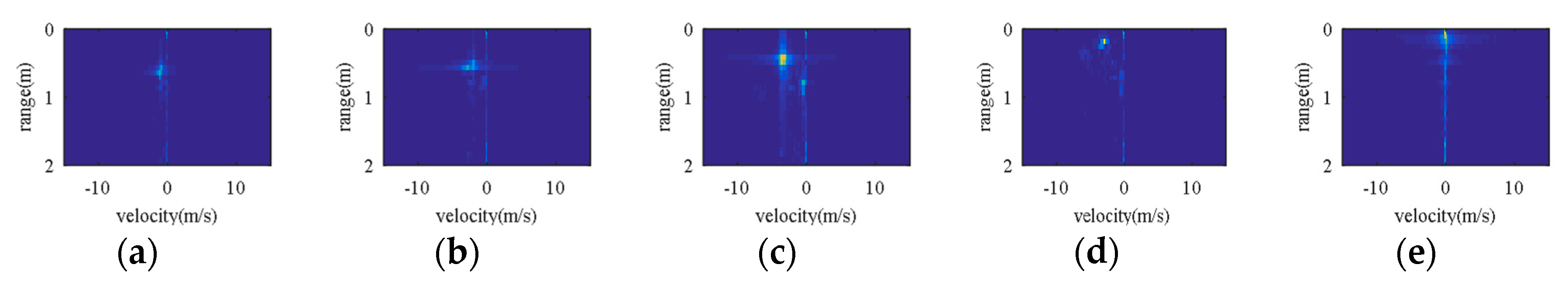

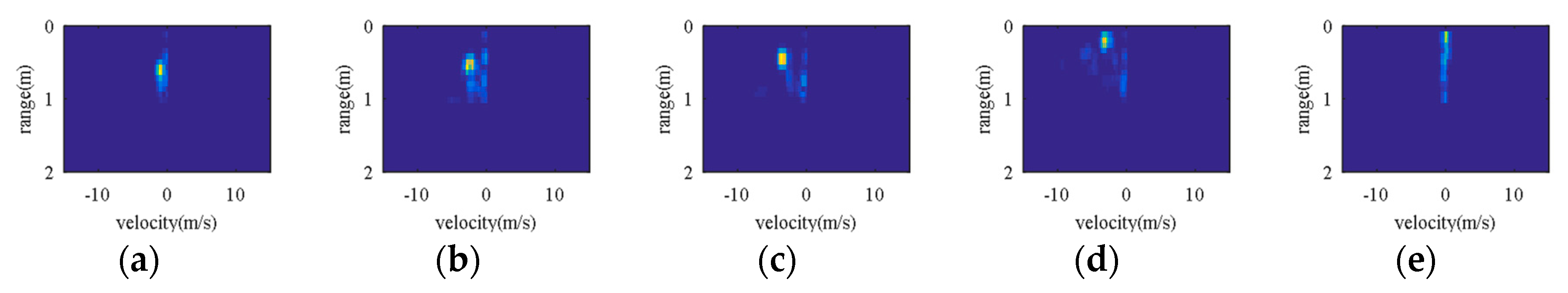

5.2.1. RDMTS with Windowing and IF-BPF

5.2.2. RDMTS with RFBM Algorithm

5.3. Classification Accuracy

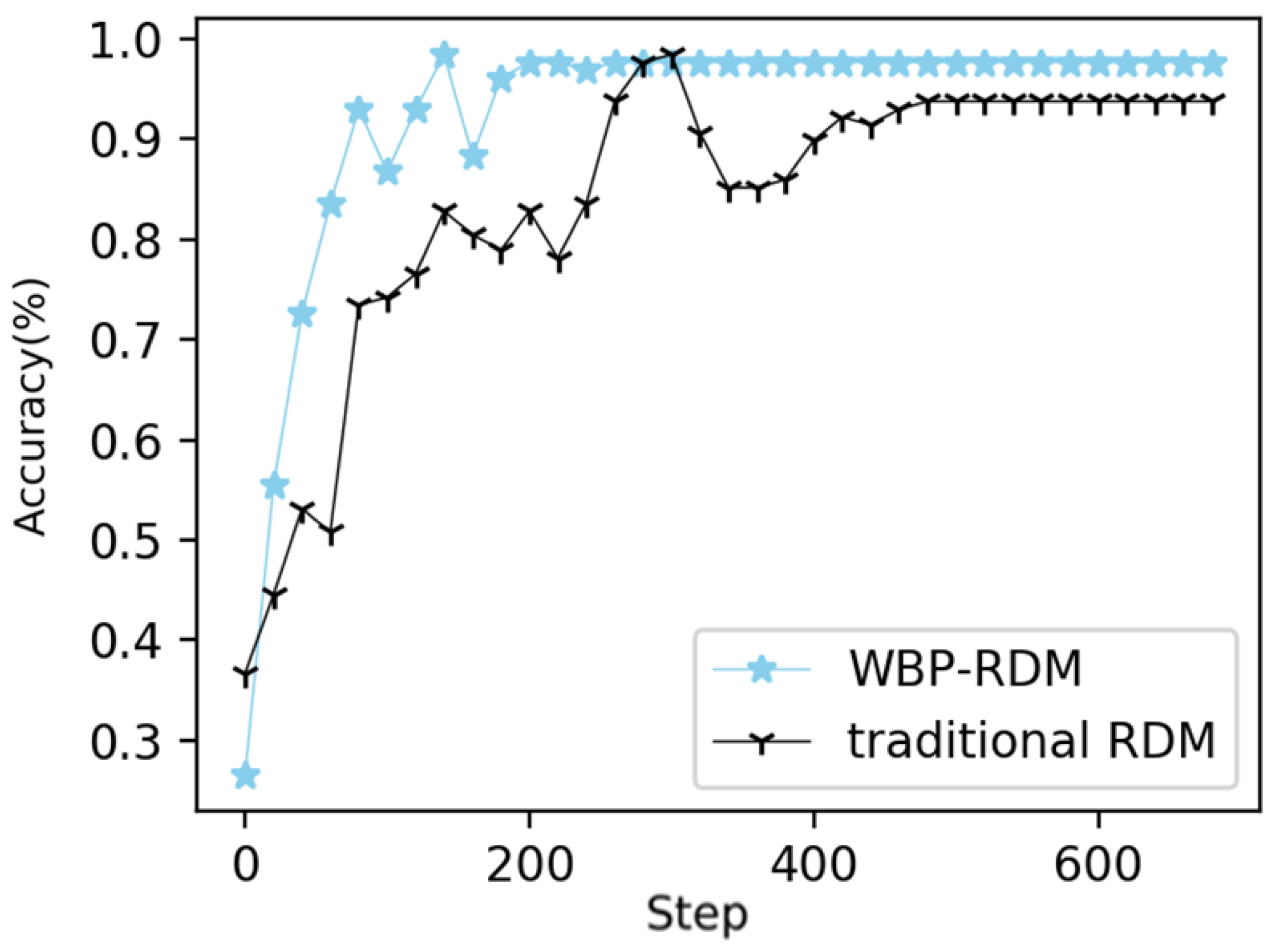

5.4. Impact of Signal Processing Method on Accuracy

5.4.1. Impact of RFBM Algorithm

5.4.2. Impact of Window Function and IF-BPF

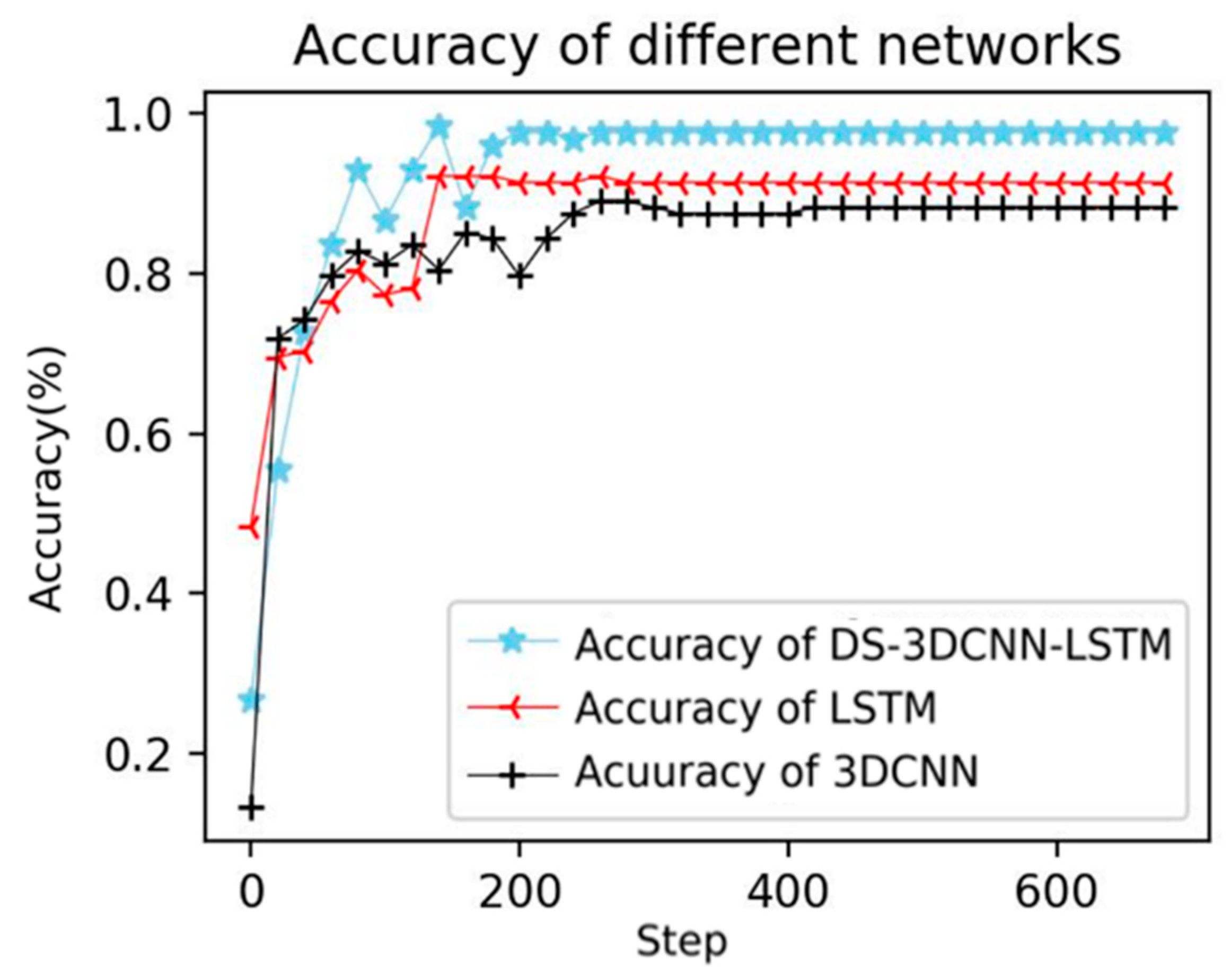

5.5. Impact of Different Networks on Accuracy

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Value |
|---|---|
| Carrier frequency | 77 GHz |
| Bandwidth | 4 GHz |
| Time window | 100 μs |
| Idle time between chirps | 100 μs |
| Wavelength | 3.90 mm |
| Transmitting antenna distance | 7.8 mm |
| Receiving antenna distance | 1.95 mm |
| Number of frames | 30 |
| Number of chirps in a frame | 128 |
| Samples in one chirp | 512 |
| Frame period | 80 ms |
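
As a rough cross-check of the parameters above, the usual textbook FMCW relations can be evaluated directly. The formulas below are standard approximations and are not quoted from the paper; they assume that the full 4 GHz is swept within the 100 μs time window and that Doppler is sampled once per chirp period (with time-division transmit switching the effective period would be longer). The variable names are illustrative.

```python
C = 3.0e8                    # speed of light in m/s (rounded)

# Values taken from the radar parameter table.
carrier_hz = 77e9
bandwidth_hz = 4e9
chirp_time_s = 100e-6        # "time window"
idle_time_s = 100e-6
chirps_per_frame = 128
frames = 30
frame_period_s = 80e-3

wavelength_m = C / carrier_hz
range_resolution_m = C / (2 * bandwidth_hz)
chirp_period_s = chirp_time_s + idle_time_s
# Unambiguous velocity and velocity resolution for one chirp per period.
max_velocity_mps = wavelength_m / (4 * chirp_period_s)
velocity_resolution_mps = wavelength_m / (2 * chirps_per_frame * chirp_period_s)
# Time spent transmitting within a frame, and length of one gesture sequence.
active_time_s = chirps_per_frame * chirp_period_s
sequence_duration_s = frames * frame_period_s

print(f"wavelength          {wavelength_m * 1e3:.2f} mm")
print(f"range resolution    {range_resolution_m * 1e2:.2f} cm")
print(f"max velocity        {max_velocity_mps:.2f} m/s")
print(f"velocity resolution {velocity_resolution_mps * 1e2:.2f} cm/s")
print(f"active time/frame   {active_time_s * 1e3:.1f} ms")
print(f"sequence duration   {sequence_duration_s:.1f} s")
```

Under these assumptions the wavelength agrees with the tabulated 3.90 mm, the range resolution is 3.75 cm, and the 128 chirps occupy 25.6 ms of each 80 ms frame period.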

| Gestures | CW | CCW | DV | DVV | PL | PLPS | PS | PSPL | SLR | SRL |
|---|---|---|---|---|---|---|---|---|---|---|
| CW | 0.944 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.056 |
| CCW | 0.00 | 1.000 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| DV | 0.00 | 0.00 | 0.857 | 0.143 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| DVV | 0.00 | 0.00 | 0.00 | 1.000 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| PL | 0.00 | 0.00 | 0.00 | 0.00 | 1.000 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| PLPS | 0.00 | 0.00 | 0.00 | 0.00 | 0.111 | 0.889 | 0.00 | 0.00 | 0.00 | 0.00 |
| PS | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.000 | 0.00 | 0.00 | 0.00 |
| PSPL | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.000 | 0.00 | 0.00 |
| SLR | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.000 | 0.00 |
| SRL | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.000 |
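
The confusion matrix can be summarized with a few lines of plain Python. The sketch assumes that rows are true classes and columns are predicted classes, which is consistent with each row summing to one but is not stated in the table itself. The variable names are illustrative, and only the non-zero entries of the table are typed in.

```python
labels = ["CW", "CCW", "DV", "DVV", "PL", "PLPS", "PS", "PSPL", "SLR", "SRL"]

# Diagonal entries (per-class recall) in label order, copied from the table.
diagonal = [0.944, 1.000, 0.857, 1.000, 1.000, 0.889, 1.000, 1.000, 1.000, 1.000]
# Non-zero off-diagonal entries as (row label, column label): value.
off_diagonal = {("CW", "SRL"): 0.056, ("DV", "DVV"): 0.143, ("PLPS", "PL"): 0.111}

# Rebuild the full 10 x 10 matrix.
matrix = [[0.0] * len(labels) for _ in labels]
for i, value in enumerate(diagonal):
    matrix[i][i] = value
for (row, col), value in off_diagonal.items():
    matrix[labels.index(row)][labels.index(col)] = value

# Each row should sum to one if the matrix is row-normalized.
row_sums = [sum(row) for row in matrix]

# Unweighted mean of the per-class recalls.
mean_recall = sum(matrix[i][i] for i in range(len(labels))) / len(labels)

# List every confusion as (row class, column class, rate).
confusions = [
    (labels[i], labels[j], matrix[i][j])
    for i in range(len(labels))
    for j in range(len(labels))
    if i != j and matrix[i][j] > 0
]

print(f"mean per-class recall: {mean_recall:.3f}")
for true_label, predicted_label, rate in confusions:
    print(f"{true_label} -> {predicted_label}: {rate:.3f}")
```

The unweighted mean of the diagonal is 0.969. It need not equal an overall accuracy computed over all test samples, because that figure also depends on how many samples each class contributes.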

| Training Strategy | Modality | Accuracy |
|---|---|---|
| Strategy 1 | RDMTS only | 82.03% |
| Strategy 2 | RAMTS only | 93.97% |
| Strategy 3 | RAMTS + RDMTS | 97.66% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lei, W.; Jiang, X.; Xu, L.; Luo, J.; Xu, M.; Hou, F. Continuous Gesture Recognition Based on Time Sequence Fusion Using MIMO Radar Sensor and Deep Learning. Electronics 2020, 9, 869. https://doi.org/10.3390/electronics9050869
