Test Case Generation Method for Increasing Software Reliability in Safety-Critical Embedded Systems

Abstract

1. Introduction

2. Background

2.1. UML State Machine

- S: Non-empty finite set of states

- s0: Initial state, an element of the set “S”

- SΨ: Final state, an element of the set “S”

- I: The set of input events

- O: The set of output events

- σ: S × I → O × S′; transition function between two states
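The tuple above can be written down as a small data structure. The following is a minimal sketch, not the authors' implementation: the class name `StateMachine`, its field names, and the two-state `toy` machine are hypothetical and serve only to show how the six elements fit together.

```python
from dataclasses import dataclass


@dataclass
class StateMachine:
    """FSM as the tuple (S, s0, S_psi, I, O, sigma) listed above."""
    states: set      # S: non-empty set of states
    initial: str     # s0, must be an element of S
    final: str       # S_psi, must be an element of S
    inputs: set      # I: input events
    outputs: set     # O: output events
    sigma: dict      # (state, input) -> (output, next_state)

    def __post_init__(self):
        # Check the membership constraints stated in the definition.
        if not self.states:
            raise ValueError("S must be non-empty")
        if self.initial not in self.states or self.final not in self.states:
            raise ValueError("s0 and the final state must belong to S")
        for (src, event), (out, dst) in self.sigma.items():
            if src not in self.states or dst not in self.states:
                raise ValueError(f"unknown state in transition {src} -> {dst}")
            if event not in self.inputs or out not in self.outputs:
                raise ValueError(f"unknown event in transition from {src}")

    def run(self, events):
        """Apply input events starting at s0; return (outputs, last state)."""
        state, produced = self.initial, []
        for event in events:
            out, state = self.sigma[(state, event)]
            produced.append(out)
        return produced, state


# Hypothetical two-state machine, for illustration only.
toy = StateMachine(
    states={"OFF", "ON"},
    initial="OFF",
    final="ON",
    inputs={"press"},
    outputs={"lamp_on", "lamp_off"},
    sigma={("OFF", "press"): ("lamp_on", "ON"),
           ("ON", "press"): ("lamp_off", "OFF")},
)
outputs, last_state = toy.run(["press", "press", "press"])
```

Storing σ as a dictionary keyed by (state, input) keeps the machine deterministic by construction, since each pair can map to only one (output, next state) result.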

2.2. FSM and W Method

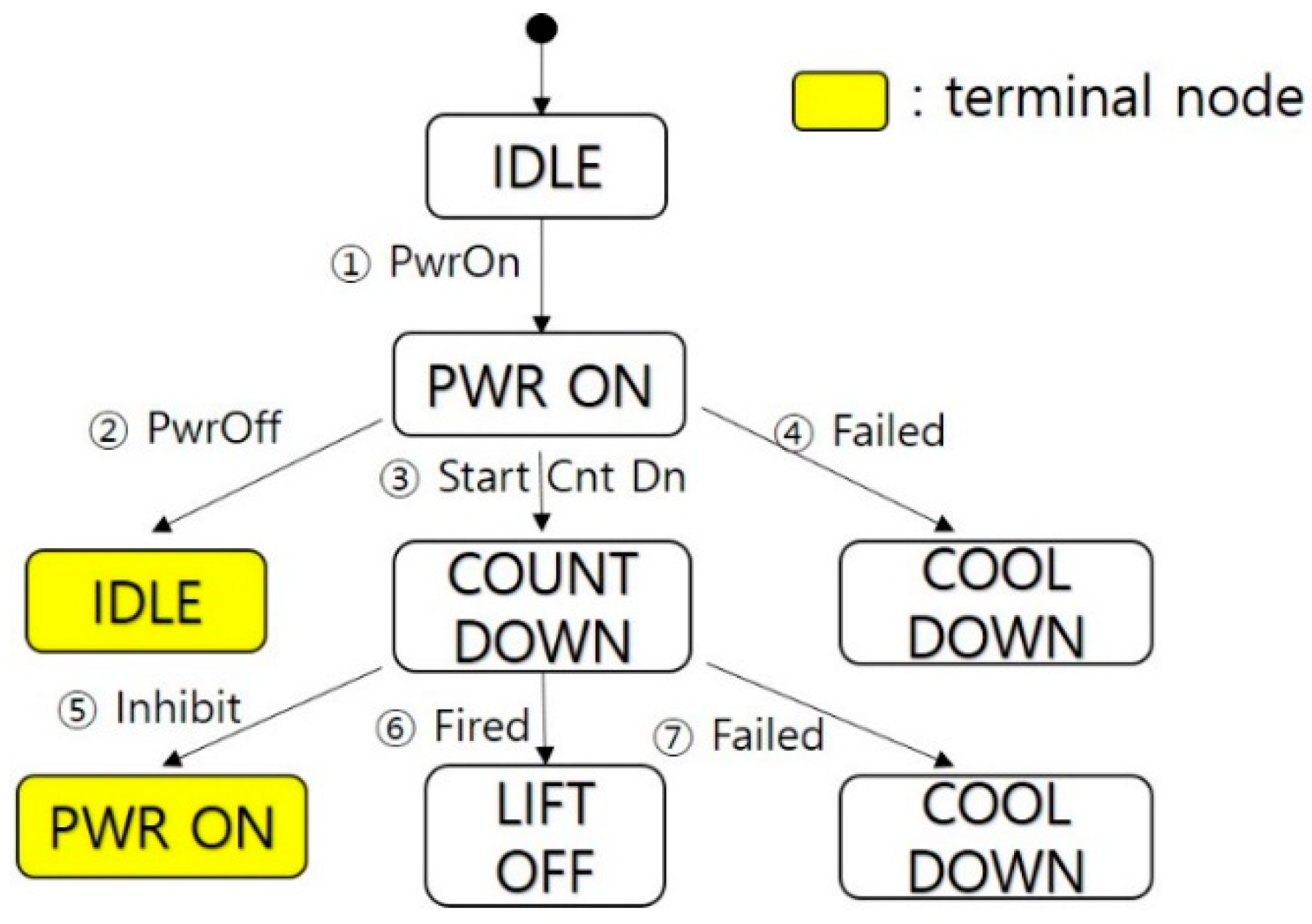

- Req 1: Power Control, power-on and power-off control

- Req 2: Countdown Control, countdown start and lift-off detection

- Req 3: Cool Down, emergency cool-down sequence

- Req 4: Ready Monitoring, take-off ready status monitoring

- Req 5: Countdown Monitoring, countdown status

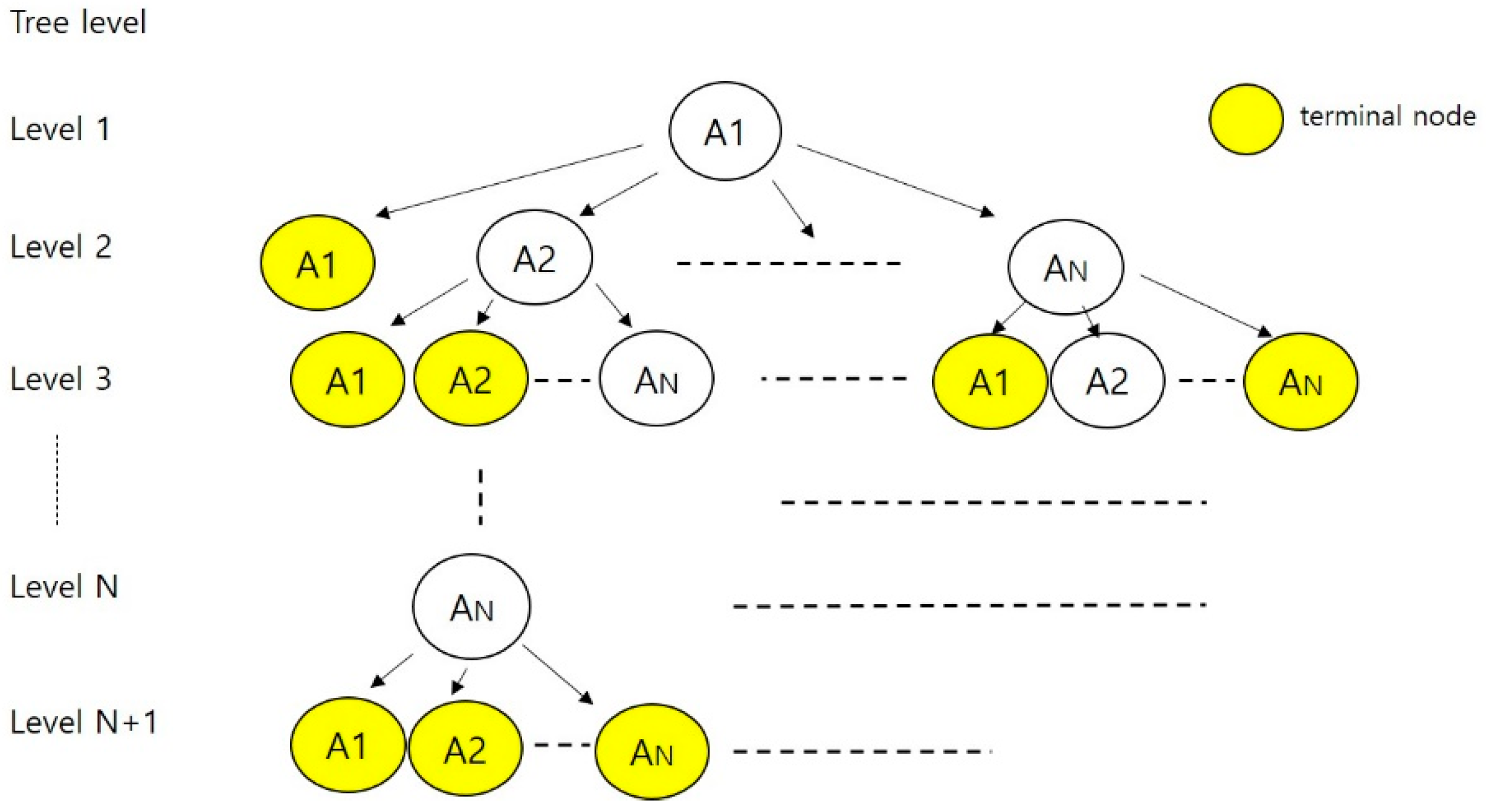

- Set the root of “T” to the initial state of the FSM, “IDLE”. This is level 1 of “T”.

- Suppose we have already built “T” up to level “k”. The “(k + 1)”th level is built by examining the nodes reachable from the “k”th-level nodes. A node at the “k”th level is terminated if its state is the same as that of a non-terminal node at some level “j”, j ≤ k. Otherwise, we attach its successor nodes to the “k”th-level node.
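The two construction rules above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: the transition map `TRANSITIONS` is inferred from the W method test sequences tabulated in this article (the state diagram itself is only available as a figure), input and output events are omitted, and the function name `build_test_sequences` is hypothetical.

```python
# Assumed state-to-state transitions, inferred from the W method test
# sequence table of the example system; events are left out.
TRANSITIONS = {
    "Idle": ["Pwr on"],
    "Pwr on": ["Idle", "Count down", "Cool down"],
    "Count down": ["Pwr on", "Lift off", "Cool down"],
    "Lift off": [],   # no outgoing transition
    "Cool down": [],  # no outgoing transition
}


def build_test_sequences(transitions, initial):
    """Build the testing tree level by level and return root-to-leaf paths."""
    expanded = set()         # states already present as non-terminal nodes
    sequences = []           # completed root-to-terminal paths
    level = [(initial,)]     # level 1 holds only the root
    while level:
        next_level = []
        for path in level:
            state = path[-1]
            successors = transitions.get(state, [])
            if state in expanded or not successors:
                # Terminated: the state repeats an earlier non-terminal
                # node, or it has nowhere to go.
                sequences.append(path)
            else:
                expanded.add(state)
                next_level.extend(path + (nxt,) for nxt in successors)
        level = next_level
    return sequences


sequences = build_test_sequences(TRANSITIONS, "Idle")
for seq in sequences:
    print(" → ".join(seq))
```

Under these assumptions the sketch yields the same five paths as the W method table for the example system, although not necessarily in the same order.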

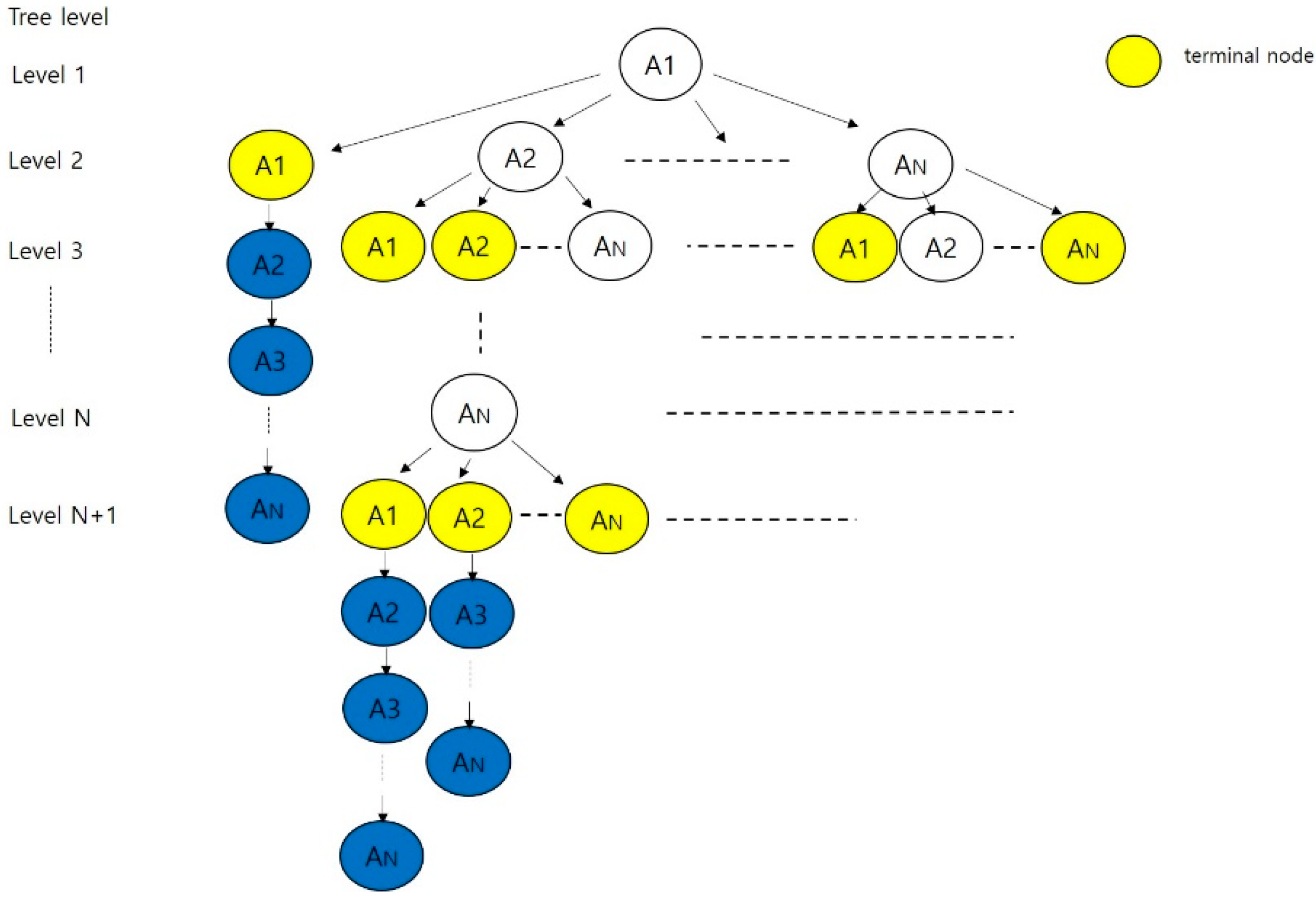

3. Proposed Method

- Node: The tree counterpart of a “state”, i.e., a “node” plays the same role in the tree as a “state” does in the FSM.

- Terminal node: This concept comes from the W method. If a state has already been visited, the node that reaches it again becomes a “terminal node”. A node for the final state is also a “terminal node”.

- Initial entry status: This is the status of the state when it has been entered for the first time.

- Re-entry status: This is the status of the state when it is re-entered after entering another state.

- Post-processing: This is the processing that makes the re-entry status equal to the initial entry status.

- Post-processing error(s): These are errors that occur because post-processing is performed incorrectly.

- Test case: This has the same meaning as “test sequence”.

- Test set: This is the collection of test cases (sequences). It has the same meaning as “test suite”.

3.1. Algorithm

| /// Algorithm of the proposed method /// |
|---|
| 1: input: |
| 2: SM: state machine, s’: a specified final state |
| 3: output: |
| 4: TS: Test set |
| 5: begin |
| 6: TS = {}, Tree Tr = {init node n0, node set N = {n0}, edge set E = {}} |
| 7: n0 is a node corresponding to the initial state s0 ∈ SM |
| 8: for each non-terminal leaf node n ∈ Tr |
| 9: begin |
| 10: for each outbound transition t of the state corresponding to n |
| 11: begin |
| 12: st = a target state of t |
| 13: for each input i ∈ I ∈ t |
| 14: begin |
| 15: n’ = a node corresponding to st, N = N ∪ {n’} |
| 16: e = an edge {n ⨯ { i } → O ∈ t ⨯ n’}, E = E ∪ {e} |
| 17: end for |
| 18: if st is already represented by another node then |
| 19: Tr = Tr ∪ shortest path from the node n’ corresponding to st to a node corresponding to s’, st = s’ |
| 20: end if |
| 21: if st is a final state then |
| 22: set n’ is terminal node |
| 23: end if |
| 24: end for |
| 25: end for |
| 26: for each event sequence TC from n0 ∈ Tr to a terminal node n ∈ Tr |
| 27: begin |
| 28: TS = TS ∪ TC |
| 29: end for |
| /// end /// |
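The core idea of the algorithm, extending a path that ends at an already-visited state with the shortest path to the specified final state s’, can be sketched as follows. This is a simplified illustration and not the authors' code: it works on state-to-state transitions only (inputs and outputs are omitted), treats a state without outgoing transitions as a final state, and uses hypothetical names (`shortest_path`, `proposed_test_set`, `TRANSITIONS`). The transition map is inferred from the test sequence tables of the example system.

```python
from collections import deque

# Assumed transitions of the example system, inferred from its test tables.
TRANSITIONS = {
    "Idle": ["Pwr on"],
    "Pwr on": ["Idle", "Count down", "Cool down"],
    "Count down": ["Pwr on", "Lift off", "Cool down"],
    "Lift off": [],
    "Cool down": [],
}


def shortest_path(transitions, start, goal):
    """Breadth-first search; returns the states from start to goal, or None."""
    queue, seen = deque([(start,)]), {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in transitions.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + (nxt,))
    return None


def proposed_test_set(transitions, initial, final):
    """Grow the tree as in the W method, but continue revisited states to `final`."""
    expanded, test_set = set(), []
    level = [(initial,)]
    while level:
        next_level = []
        for path in level:
            state = path[-1]
            successors = transitions.get(state, [])
            if not successors:
                # Final state: the node is terminal as it stands.
                test_set.append(path)
            elif state in expanded:
                # Re-entered state: append the shortest path to s'.
                tail = shortest_path(transitions, state, final)
                test_set.append(path + tail[1:] if tail else path)
            else:
                expanded.add(state)
                next_level.extend(path + (nxt,) for nxt in successors)
        level = next_level
    return test_set


test_set = proposed_test_set(TRANSITIONS, "Idle", "Lift off")
```

With “Lift off” as the specified final state, the two sequences that the W method stops at a re-entered state (“Idle” and “Pwr on”) are continued to lift-off, so the behavior after re-entry is exercised as well.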

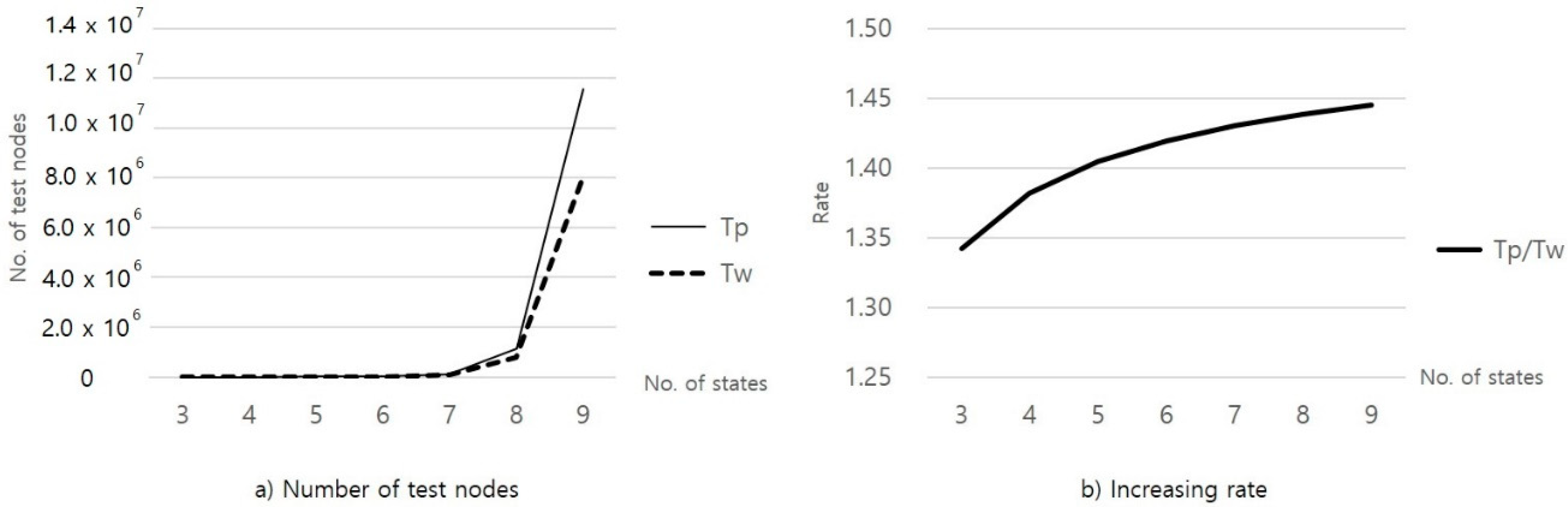

3.2. Testing Time Analysis

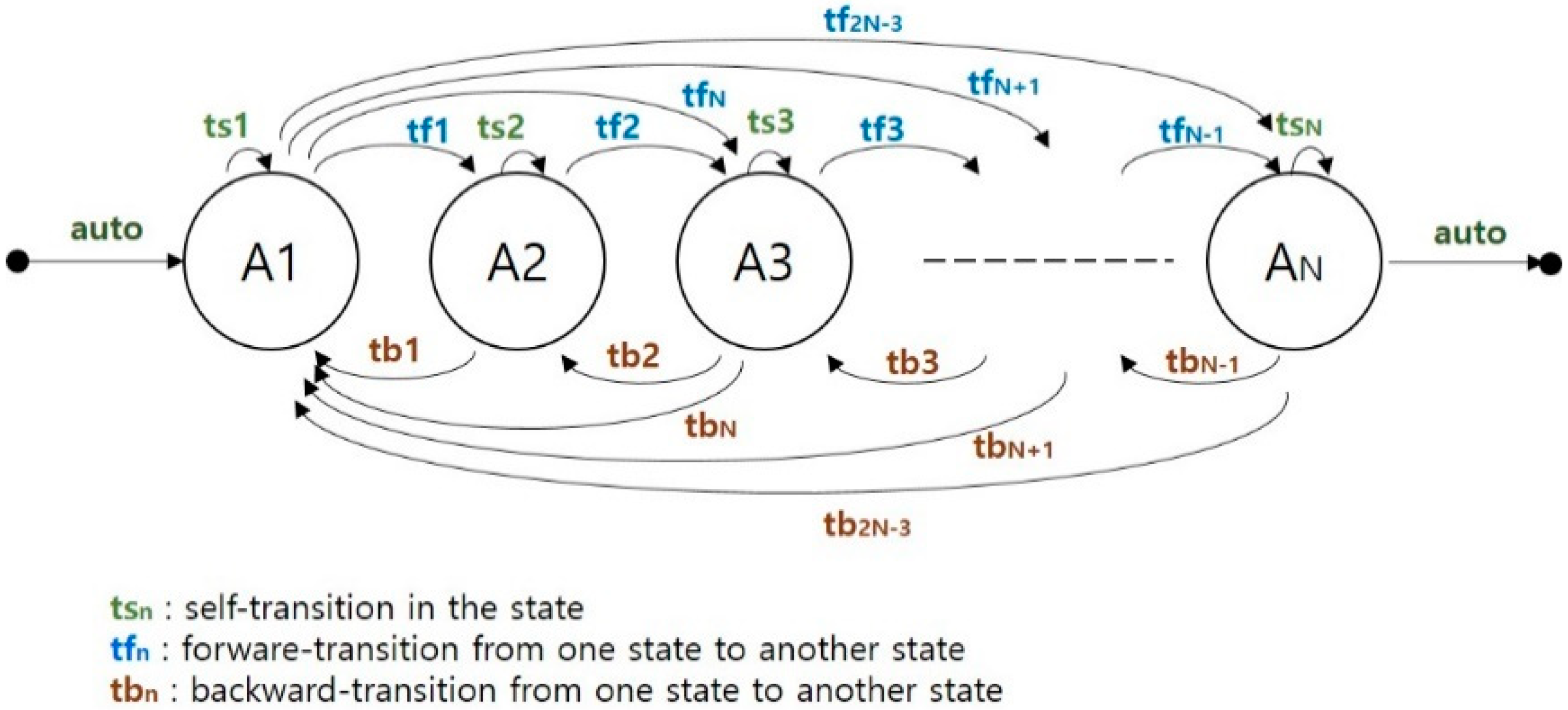

- The number of test nodes for one “An” at level l, Tpn(An, l), is the sum of the test nodes in the W method and the nodes added by the proposed method.

- If the number of “An”s at level l is Nt(An, l), the number of test nodes for all “An”s at that level is Tp(An, l) = Tpn(An, l) × Nt(An, l).

- The number of test nodes for “An”s in the whole tree, Tp(An), is the sum of Tp(An, l), where l runs from 1 to N + 1.

- Level 1: Tpn(A1, 1) = 1, Nt(A1, 1) = 0, Tp(A1,1) = 0

- Level 2: Tpn(A1, 2) = N + 1, Nt(A1, 2) = 1, Tp(A1,2) = N + 1

- Level 3: Tpn(A1, 3) = N + 2, Nt(A1, 3) = N − 1, Tp(A1,3) = (N + 2) × (N − 1)

- Level 4: Tpn(A1, 4) = N + 3, Nt(A1, 4) = (N − 1)(N − 2), Tp(A1,4) = (N + 3) × (N − 1)(N − 2)

- …

- Level N + 1: Tpn(A1, N + 1) = 2N, Nt(A1, N + 1) = (N − 1)!, Tp(A1,N + 1) = 2N × (N − 1)!
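The per-level values listed above can be summed mechanically. The sketch below assumes the general pattern that the listed levels suggest, namely Tpn(A1, l) = N + l − 1 and Nt(A1, l) = (N − 1)!/(N − l + 1)! for 2 ≤ l ≤ N + 1, with level 1 handled as the special case given in the list. The closed forms for the elided intermediate levels are an inference from that pattern, and the function names are hypothetical.

```python
from math import factorial


def tpn_a1(n_states, level):
    """Test nodes for one A1 node at the given level (assumed pattern)."""
    if level == 1:
        return 1
    return n_states + level - 1


def nt_a1(n_states, level):
    """Number of A1 nodes at the given level (assumed pattern)."""
    if level == 1:
        return 0
    return factorial(n_states - 1) // factorial(n_states - level + 1)


def tp_a1(n_states):
    """Tp(A1): sum of Tpn(A1, l) * Nt(A1, l) for l = 1 .. N + 1."""
    return sum(
        tpn_a1(n_states, level) * nt_a1(n_states, level)
        for level in range(1, n_states + 2)
    )


for n in (3, 4, 5):
    print(n, tp_a1(n))
```

Note that this covers only the contribution of “A1”. The totals over all states are not derived here, so the result is not expected to equal the Tp column of the comparison table.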

4. Case Study

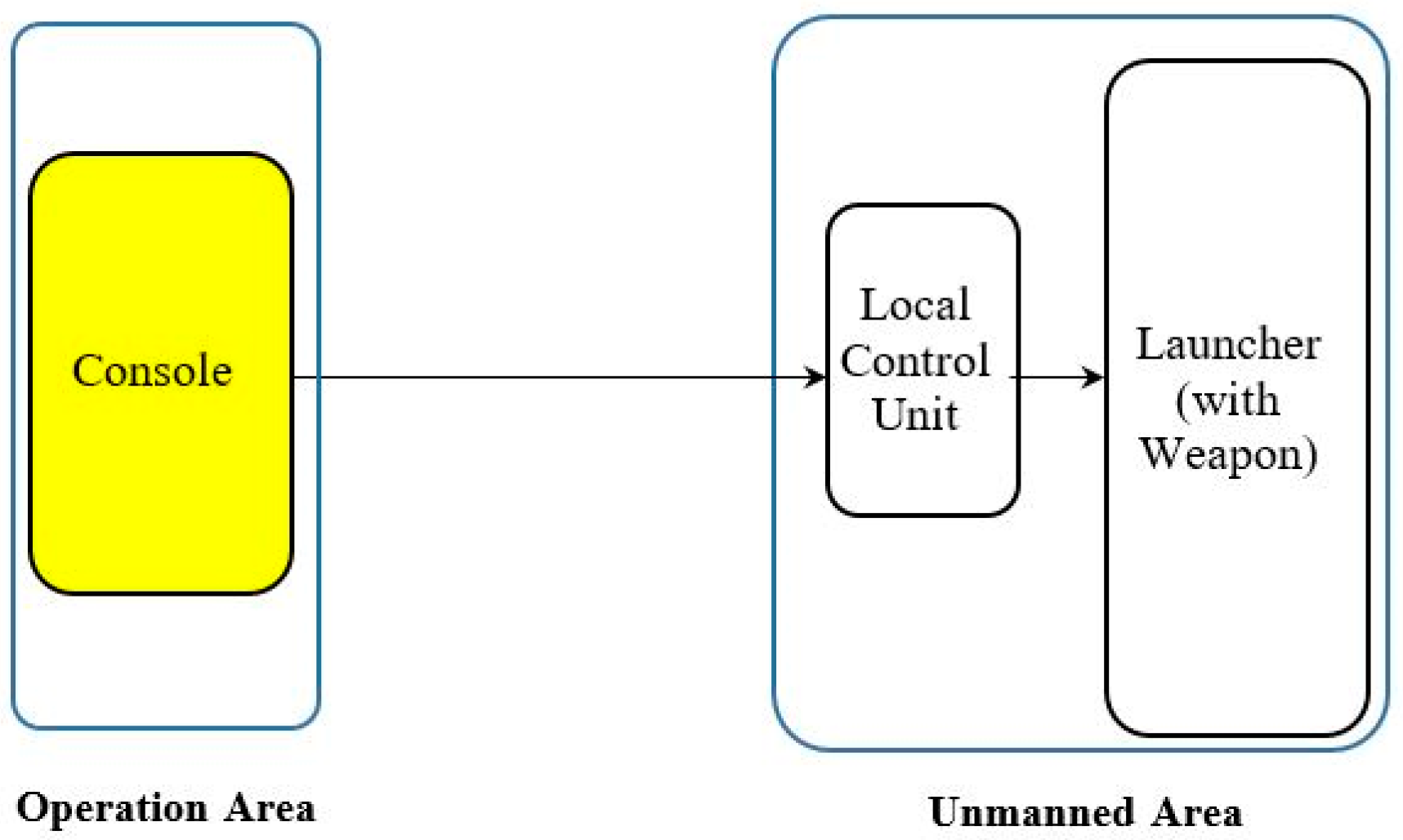

4.1. Methodology

- Construct an FSM specification from the requirement specification.

- Derive a tree and generate test suites using the W method and the proposed method.

- Test the implementation with the two test suites and compare the results. This step is repeated to check the reproducibility of the test.

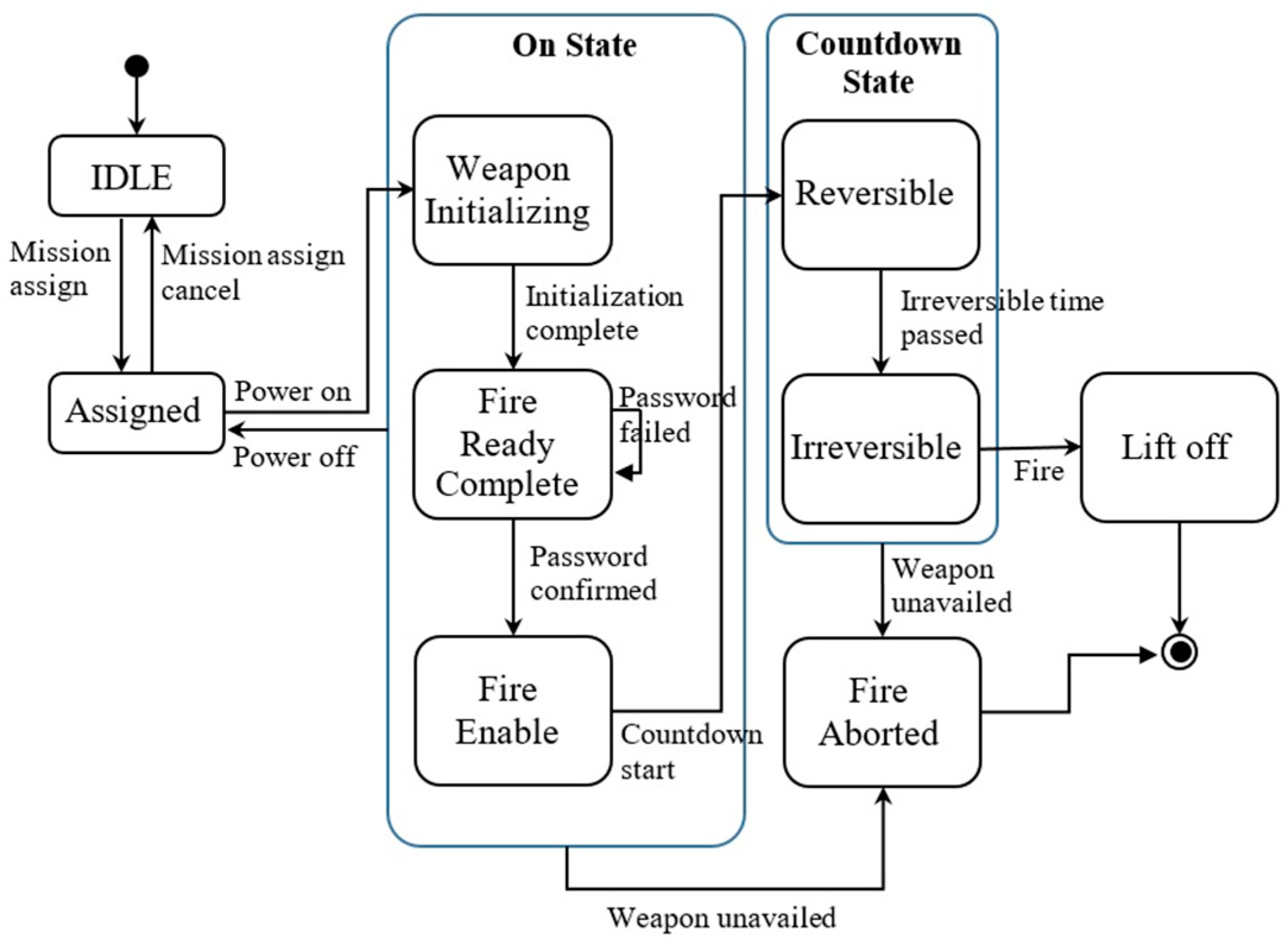

4.2. Constructing FSM

- Req 1: Mission Assign, mission assignment or cancellation

- Req 2: Password Control, password input and confirmation

- Req 3: Power Control, power-on and power-off control

- Req 4: Mission Process Control, mission processing after power-on

- Req 5: Countdown Control, countdown start and lift-off detection

- Req 6: Fire Aborted (Cool down), emergency cool-down sequence execution

- Req 7: Ready Monitoring, firing-ready status monitoring

- Req 8: Countdown Monitoring, countdown status monitoring

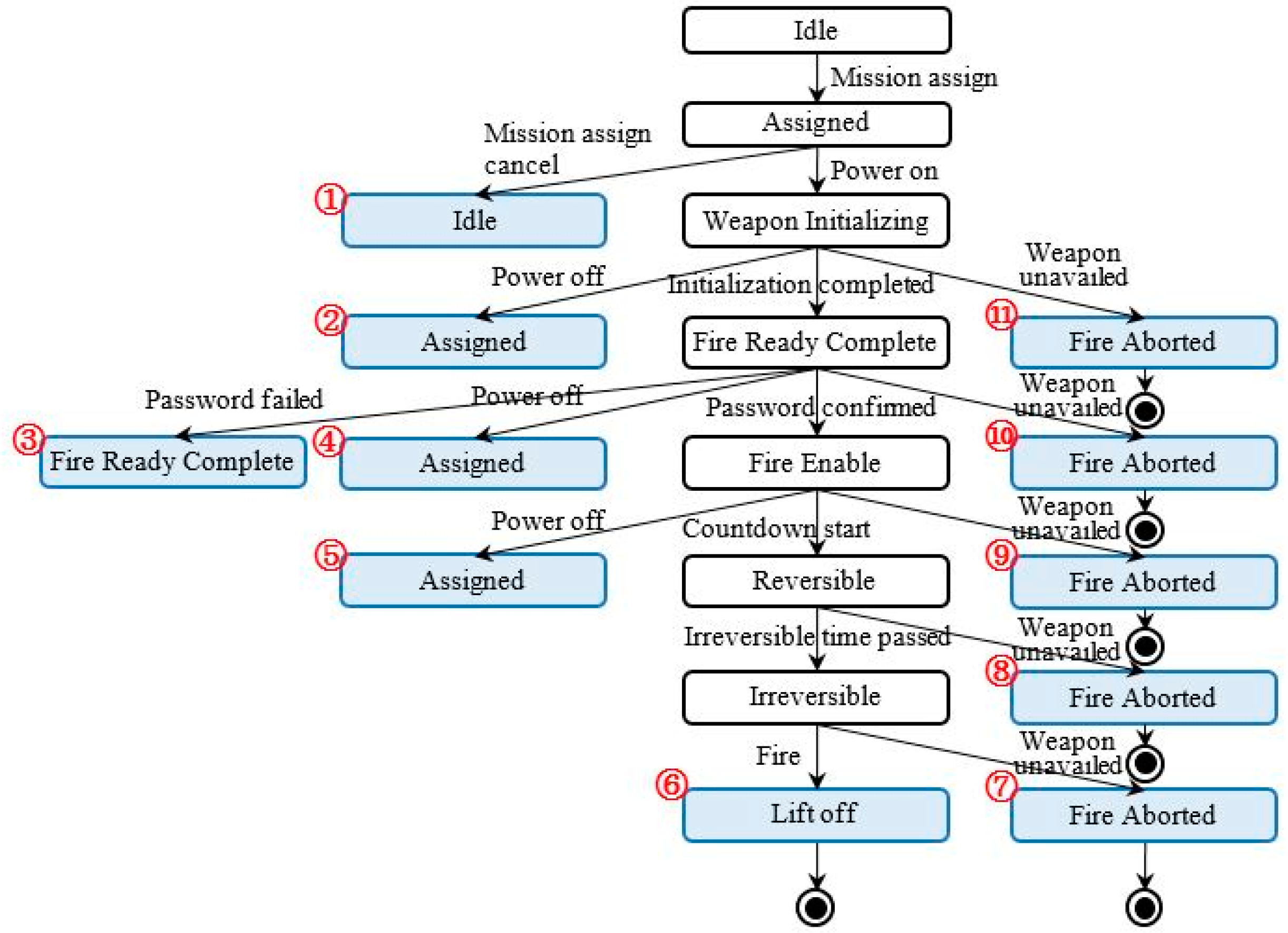

4.3. Deriving Tree and Generating Test Suite

4.4. Test and Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Test Sequence No. | Test Sequence | Covered Requirements |
|---|---|---|
| 1 | Idle → Pwr on → Idle | Req. 1, 4 |
| 2 | Idle → Pwr on → Count down → Pwr on | Req. 2, 5 |
| 3 | Idle → Pwr on → Count down → Lift off | Req. 2, 3, 5 |
| 4 | Idle → Pwr on → Cool down | Req. 1, 4 |
| 5 | Idle → Pwr on → Count down → Cool down | Req. 2, 3, 5 |

| Test Sequence No. | Test Sequence | Covered Requirements |
|---|---|---|
| 1 | Idle → Pwr on → Idle → Pwr on → Count down → Lift off | Req. 1, 2, 3, 4, 5 |
| 2 | Idle → Pwr on → Count down → Pwr on → Count down → Lift off | Req. 1, 2, 3, 4, 5 |
| 3 | Idle → Pwr on → Count down → Lift off | Req. 2, 3, 5 |
| 4 | Idle → Pwr on → Cool down | Req. 1, 4 |
| 5 | Idle → Pwr on → Count down → Cool down | Req. 2, 3, 5 |

| No. of States (N) | Tw | Tp | Tp/Tw |
|---|---|---|---|
| 3 | 38 | 51 | 1.34 |
| 4 | 212 | 293 | 1.38 |
| 5 | 1370 | 1924 | 1.40 |
| 6 | 10,112 | 14,352 | 1.42 |
| 7 | 84,158 | 120,365 | 1.43 |
| 8 | 780,908 | 1,123,411 | 1.44 |
| 9 | 8,000,882 | 11,562,918 | 1.44 |

| | Army Missile | Navy Missile | Satellite | Radar |
|---|---|---|---|---|
| No. of Modules | 997 | 2684 | 92 | 774 |
| Lines of Code | 74,122 | 220,153 | 5207 | 60,389 |
| McCabe’s Cyclomatic Complexity | 7881 | 25,701 | 512 | 6358 |

| Test Sequence No. | Test Sequence |
|---|---|
| 1 | Idle → Assigned → Idle |
| 2 | Idle → Assigned → Weapon Initializing → Assigned |
| 3 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Ready Complete |
| 4 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Assigned |
| 5 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Assigned |
| 6 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 7 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Fire Aborted |
| 8 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Fire Aborted |
| 9 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Fire Aborted |
| 10 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Aborted |
| 11 | Idle → Assigned → Weapon Initializing → Fire Aborted |

| Test Sequence No. | Test Sequence |
|---|---|
| 1 | Idle → Assigned → Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 2 | Idle → Assigned → Weapon Initializing → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 3 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 4 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 5 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 6 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Lift off |
| 7 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Irreversible → Fire Aborted |
| 8 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Reversible → Fire Aborted |
| 9 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Enable → Fire Aborted |
| 10 | Idle → Assigned → Weapon Initializing → Fire Ready Complete → Fire Aborted |
| 11 | Idle → Assigned → Weapon Initializing → Fire Aborted |

| Test Sequence No. | W Method | Proposed Method |
|---|---|---|
| 1 | Req. 1 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 2 | Req. 1, 3, 4 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 3 | Req. 1, 2, 3, 4, 7 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 4 | Req. 1, 3, 4, 7 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 5 | Req. 1, 2, 3, 4, 7 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 6 | Req. 1, 2, 3, 4, 5, 7, 8 | Req. 1, 2, 3, 4, 5, 7, 8 |
| 7 | Req. 1, 2, 3, 4, 5, 6, 7, 8 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 8 | Req. 1, 2, 3, 4, 5, 6, 7, 8 | Req. 1, 2, 3, 4, 5, 6, 7, 8 |
| 9 | Req. 1, 2, 3, 4, 6, 7 | Req. 1, 2, 3, 4, 6, 7 |
| 10 | Req. 1, 3, 4, 6, 7 | Req. 1, 3, 4, 6, 7 |
| 11 | Req. 1, 3, 4, 6 | Req. 1, 3, 4, 6 |

| Test Suite | Total Length (Test Nodes) | No. of Errors Found |
|---|---|---|
| W method | 61 | 5 |
| Proposed method | 90 | 7 |

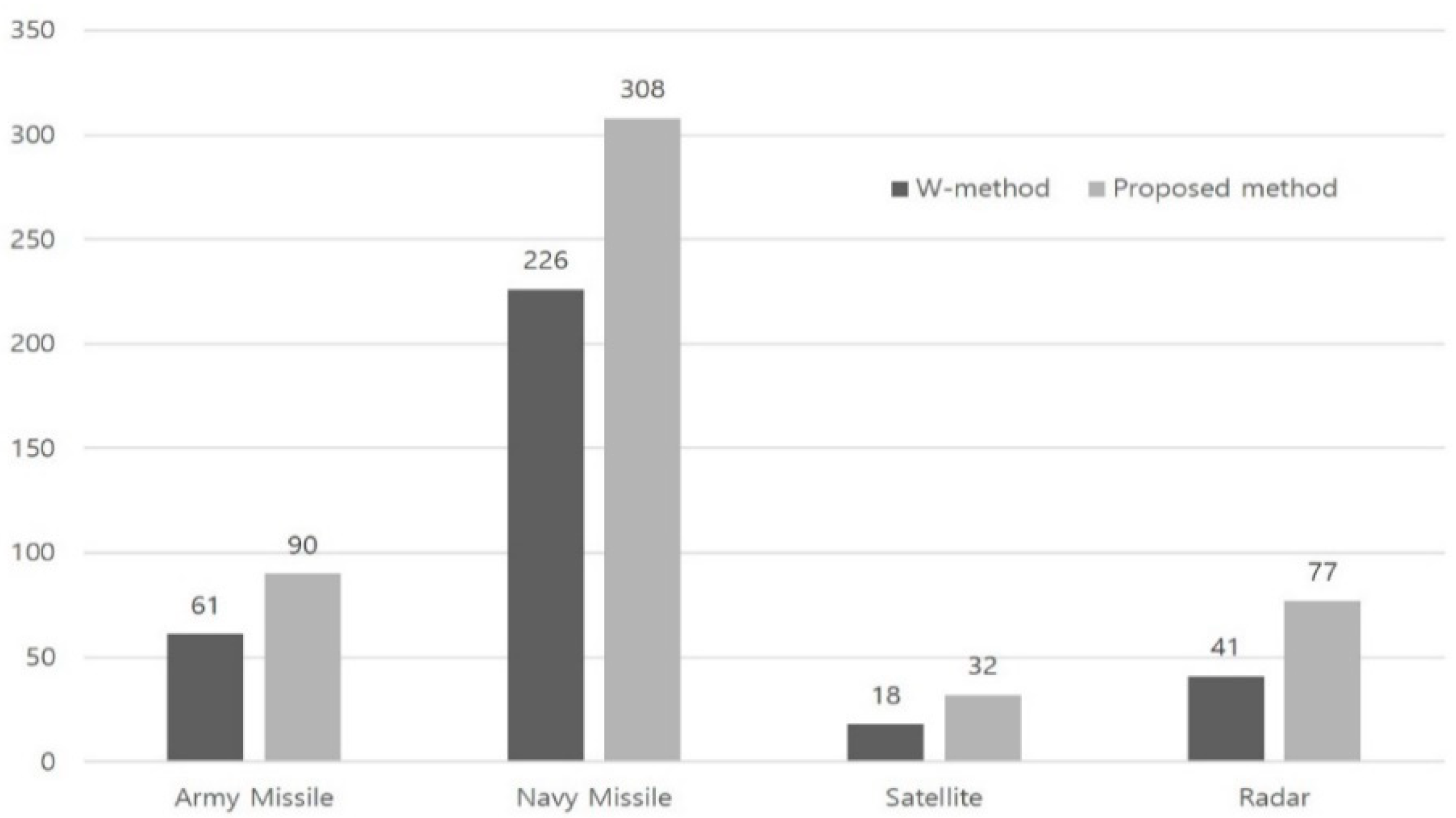

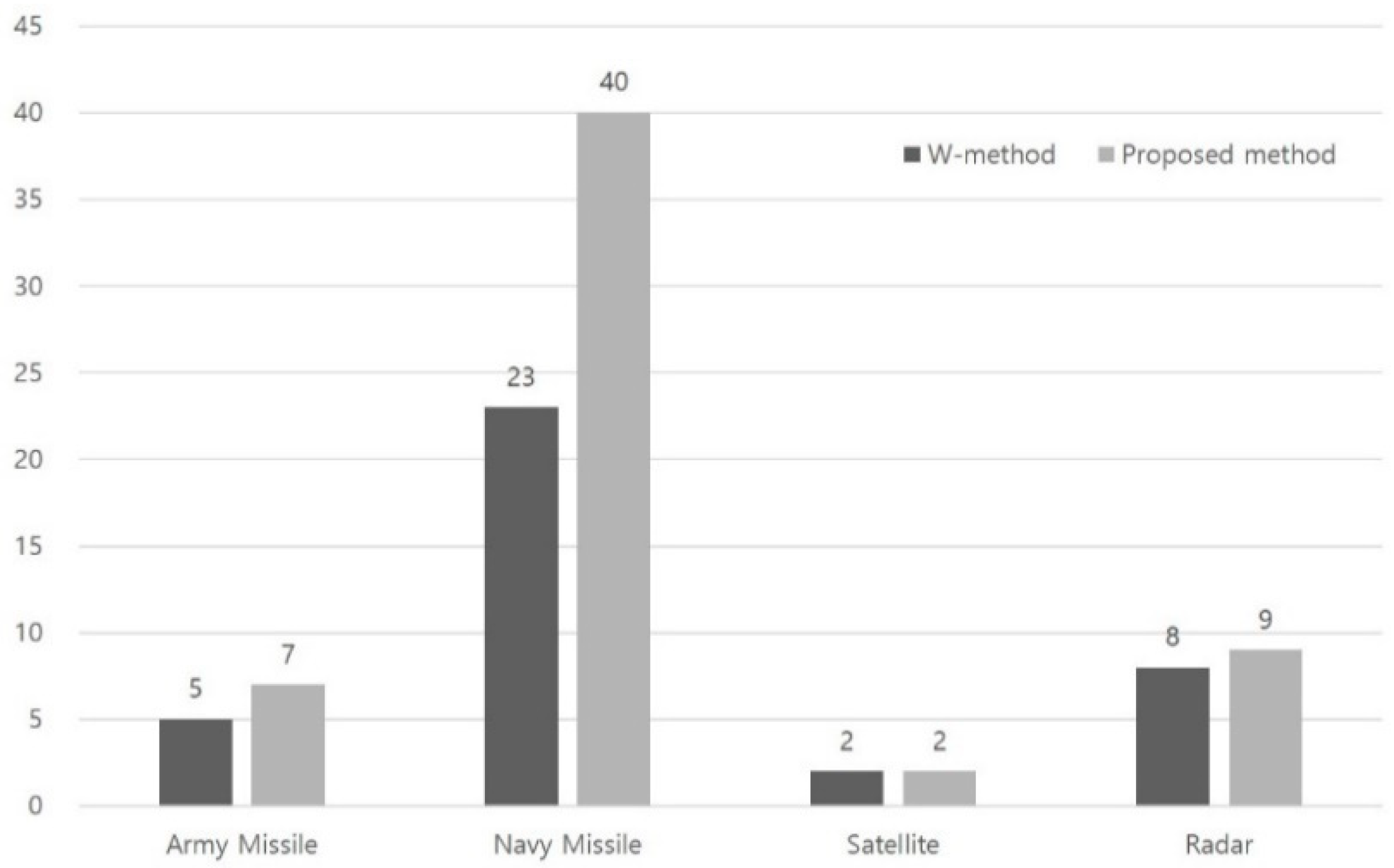

| Case Study No. | System | Total Testing Length (W/Proposed) | No. of Errors (W/Proposed) |
|---|---|---|---|
| 1 | Army Missile | 61/90 | 5/7 |
| 2 | Navy Missile | 226/308 | 23/40 |
| 3 | Satellite | 18/32 | 2/2 |
| 4 | Radar | 41/77 | 8/9 |
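The trade-off summarized in the table above (longer test suites in exchange for more detected errors) can be made explicit with a short calculation. The sketch below only restates the table's numbers; the dictionary layout and the function name `compare` are illustrative choices, not part of the study.

```python
# (W method, proposed method) values copied from the summary table.
RESULTS = {
    "Army Missile": {"length": (61, 90), "errors": (5, 7)},
    "Navy Missile": {"length": (226, 308), "errors": (23, 40)},
    "Satellite": {"length": (18, 32), "errors": (2, 2)},
    "Radar": {"length": (41, 77), "errors": (8, 9)},
}


def compare(results):
    """Return the length ratio and additional errors found for each system."""
    summary = {}
    for system, row in results.items():
        w_len, p_len = row["length"]
        w_err, p_err = row["errors"]
        summary[system] = {
            "length_ratio": p_len / w_len,    # proposed / W method
            "extra_errors": p_err - w_err,    # errors found only by proposed
        }
    return summary


summary = compare(RESULTS)
for system, row in summary.items():
    print(f"{system}: x{row['length_ratio']:.2f} length, "
          f"+{row['extra_errors']} errors")
```

Computed this way, the proposed method's suites are roughly 1.4 to 1.9 times as long as the W method's, and they find additional errors in three of the four systems (none in the Satellite case).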

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Koo, B.; Bae, J.; Kim, S.; Park, K.; Kim, H. Test Case Generation Method for Increasing Software Reliability in Safety-Critical Embedded Systems. Electronics 2020, 9, 797. https://doi.org/10.3390/electronics9050797
