Attention-LSTM-Attention Model for Speech Emotion Recognition and Analysis of IEMOCAP Database

Abstract

1. Introduction

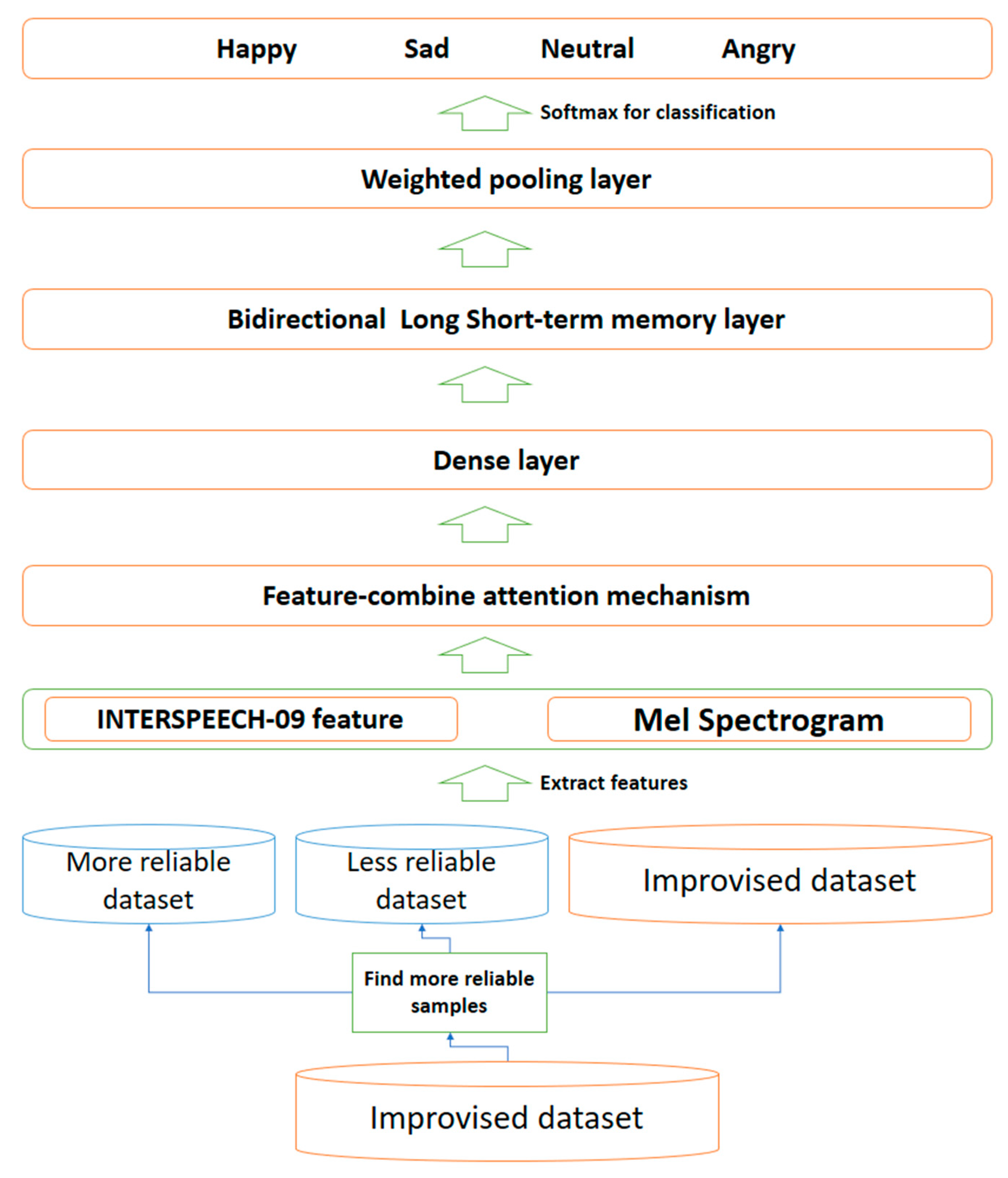

- the addition of the deep-learning-based LLD feature extraction capability for enhanced and expanded IS09 features,

- an IS09-mel-spectrogram attention mechanism that focuses on the important parts, and

- an accurate experimentation method based on a highly reliable dataset.

2. Related Works

3. Model

3.1. Problem Definition

3.2. Feature-Combined Attention Mechanism

4. IEMOCAP Database

5. Experiment

6. Analysis of IEMOCAP Database

7. Discussion

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Mirsamadi, S.; Barsoum, E.; Zhang, C. Automatic speech emotion recognition using recurrent neural networks with local attention. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2227–2231.

- Tahon, M.; Devillers, L. Towards a Small Set of Robust Acoustic Features for Emotion Recognition: Challenges. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 24, 16–28.

- Schuller, B.; Batliner, A.; Seppi, D.; Steidl, S.; Vogt, T.; Wagner, J.; Devillers, L.; Vidrascu, L.; Amir, N.; Kessous, L.; Aharonson, V. The relevance of feature type for the automatic classification of emotional user states: Low level descriptors and functionals. In Proceedings of the 8th Annual Conference of the International Speech Communication Association, Antwerp, Belgium, 27–31 August 2007; pp. 2253–2256.

- Koolagudi, S.G.; Rao, K.S. Emotion recognition from speech: A review. Int. J. Speech Technol. 2012, 15, 99–117.

- Schuller, B.; Arsic, D.; Wallhoff, F.; Rigoll, G. Emotion recognition in the noise applying large acoustic feature sets. Proc. Speech Prosody 2006, 2006, 276–289.

- Álvarez, A.; Cearreta, I.; López-Gil, J.-M.; Arruti, A.; Lazkano, E.; Sierra, B.; Garay-Vitoria, N. Feature Subset Selection Based on Evolutionary Algorithms for Automatic Emotion Recognition in Spoken Spanish and Standard Basque Language. In Proceedings of the International Conference on Text, Speech and Dialogue, Brno, Czech Republic, 11–15 September 2006; Volume 4188, pp. 565–572.

- Busso, C.; Bulut, M.; Narayanan, S.S. Toward Effective Automatic Recognition Systems of Emotion in Speech. In Social Emotions in Nature and Artifact: Emotions in Human and Human-Computer Interaction; Gratch, J., Marsella, S., Eds.; Oxford University Press: New York, NY, USA, 2013; pp. 110–127.

- Eyben, F.; Weninger, F.; Gross, F.; Schuller, B. Recent developments in openSMILE, the Munich open-source multimedia feature extractor. In Proceedings of the 21st ACM International Conference on Multimedia (MM ’13), Barcelona, Spain, 21–25 October 2013; pp. 835–838.

- Ramet, G.; Garner, P.N.; Baeriswyl, M.; Lazaridis, A. Context-Aware Attention Mechanism for Speech Emotion Recognition. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 126–131.

- Lee, J.; Tashev, I. High-level feature representation using recurrent neural network for speech emotion recognition. In Proceedings of the 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 1537–1540.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

- Qin, Y.; Song, D.; Chen, H.; Cheng, W.; Jiang, G.; Cottrell, G.W. A Dual-Stage Attention-Based Recurrent Neural Network for Time Series Prediction. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2627–2633.

- Verleysen, M.; Francois, D. The Curse of Dimensionality in Data Mining and Time Series Prediction. In Proceedings of the International Work-Conference on Artificial Neural Networks, Barcelona, Spain, 8–10 June 2005; Volume 3512, pp. 758–770.

- Busso, C.; Bulut, M.; Lee, C.-C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.

- Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control. 2019, 47, 312–323.

- Vidrascu, L.; Devillers, L. Five emotion classes detection in real-world call centre data: The use of various types of paralinguistic features. In Proceedings of the International Workshop on Paralinguistic Speech Between Models and Data, Saarbrücken, Germany, 2–3 August 2007; DFKI Publications: Kaiserslautern, Germany, 2007.

- Kandali, C.A.B.; Routray, A.; Basu, T.K. Emotion recognition from Assamese speeches using MFCC features and GMM classifier. In Proceedings of the 2008 IEEE Region 10 Conference (TENCON 2008), Hyderabad, India, 19–21 November 2008; pp. 1–5.

- Chenchah, F.; Lachiri, Z. Acoustic Emotion Recognition Using Linear and Nonlinear Cepstral Coefficients. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 135–138.

- Nalini, N.J.; Palanivel, S. Music emotion recognition: The combined evidence of MFCC and residual phase. Egypt. Inform. J. 2016, 17, 1–10.

- Stuhlsatz, A.; Meyer, C.; Eyben, F.; Zielke, T.; Meier, G.; Schuller, B. Deep neural networks for acoustic emotion recognition: Raising the benchmarks. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 5688–5691.

- Albanie, S.; Nagrani, A.; Vedaldi, A.; Zisserman, A. Emotion Recognition in Speech using Cross-Modal Transfer in the Wild. In Proceedings of the 2018 ACM Multimedia Conference (MM ’18), Seoul, Korea, 22–26 October 2018; pp. 292–301.

- Yoon, S.; Byun, S.; Jung, K. Multimodal Speech Emotion Recognition Using Audio and Text. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 112–118.

- Trigeorgis, G.; Ringeval, F.; Brueckner, R.; Marchi, E.; Nicolaou, M.A.; Schuller, B.; Zafeiriou, S. Adieu features? End-To-End speech emotion recognition using a deep convolutional recurrent network. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5200–5204.

- Chen, M.; He, X.; Yang, J.; Zhang, H. 3-D Convolutional Recurrent Neural Networks with Attention Model for Speech Emotion Recognition. IEEE Signal Process. Lett. 2018, 25, 1440–1444.

- Hsiao, P.-W.; Chen, C.-P. Effective Attention Mechanism in Dynamic Models for Speech Emotion Recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2526–2530.

- Etienne, C.; Fidanza, G.; Petrovskii, A.; Devillers, L.; Schmauch, B. Speech emotion recognition with data augmentation and layer-wise learning rate adjustment. arXiv 2018, arXiv:1802.05630.

- Tzinis, E.; Potamianos, A. Segment-based speech emotion recognition using recurrent neural networks. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 190–195.

- Neumann, M.; Vu, N.T. Attentive Convolutional Neural Network Based Speech Emotion Recognition: A Study on the Impact of Input Features, Signal Length, and Acted Speech. In Proceedings of Interspeech 2017, 18th Annual Conference of the International Speech Communication Association, Stockholm, Sweden, 20–24 August 2017; pp. 1263–1267.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. OSDI 2016, 16, 265–283.

| Dataset | Number of Data Points | Happy | Angry | Neutral | Sad | Decision |
|---|---|---|---|---|---|---|
| I (improvised) | 2280 | 284 | 289 | 1099 | 608 | - |
| R (more reliable) | 1202 | 99 | 132 | 582 | 389 | D > 2 |
| U (less reliable) | 1078 | 185 | 157 | 517 | 219 | D <= 2 |

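The counts in the dataset table can be checked for internal consistency: R and U should together account for every utterance in I, class by class. The sketch below does that check in Python. The table does not define the decision value D, so `split_by_decision` is a hypothetical helper that simply assumes each utterance carries an integer D and applies the table's threshold (D > 2 versus D <= 2).

```python
# Counts copied from the dataset table: (total, happy, angry, neutral, sad).
COUNTS = {
    "I": (2280, 284, 289, 1099, 608),
    "R": (1202, 99, 132, 582, 389),
    "U": (1078, 185, 157, 517, 219),
}


def is_partition(whole, parts):
    """Return True if every column of `parts` sums to the matching entry of `whole`."""
    return all(sum(column) == w for w, column in zip(whole, zip(*parts)))


def split_by_decision(utterances, threshold=2):
    """Hypothetical helper: split (utterance_id, d) pairs on the table's rule.

    Assumes `d` is an integer decision value per utterance; what D measures
    is not stated in the table, so this is an illustration only.
    """
    reliable = [u for u in utterances if u[1] > threshold]
    unreliable = [u for u in utterances if u[1] <= threshold]
    return reliable, unreliable


if __name__ == "__main__":
    print(is_partition(COUNTS["I"], [COUNTS["R"], COUNTS["U"]]))
    print(split_by_decision([("utt_a", 3), ("utt_b", 2), ("utt_c", 1)]))
```

The utterance identifiers in the usage lines are placeholders, not IEMOCAP file names.
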
| Experiment Name | Used Feature | Model |
|---|---|---|
| IS_LA | IS09 | LA |
| MS_LA | MelSpectrogram | LA |
| ISMS_LA | IS09+MelSpectrogram | LA |
| IS_ALA | IS09 | ALA |
| MS_ALA | MelSpectrogram | ALA |
| ISMS_ALA | IS09+MelSpectrogram | ALA |

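The experiment names in the table follow a `<feature>_<model>` pattern, so the six configurations are the cross product of three feature sets and two models. A minimal Python sketch of that grid follows; `FEATURES`, `MODELS`, and `experiment_grid` are illustrative names, and the table itself does not expand the abbreviations LA and ALA (ALA presumably refers to the Attention-LSTM-Attention model of the title).

```python
from itertools import product

# Feature-set abbreviations and labels as they appear in the experiment table.
FEATURES = {
    "IS": "IS09",
    "MS": "MelSpectrogram",
    "ISMS": "IS09+MelSpectrogram",
}
MODELS = ("LA", "ALA")


def experiment_grid():
    """Map each experiment name to its (feature, model) pair, in table order."""
    return {
        f"{feature_key}_{model}": (FEATURES[feature_key], model)
        for model, feature_key in product(MODELS, FEATURES)
    }


if __name__ == "__main__":
    for name, (feature, model) in experiment_grid().items():
        print(name, feature, model)
```
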
| Experiment | Weighted Accuracy | Unweighted Accuracy |
|---|---|---|
| IS_LA | 60.99 ± 2.8 | 60.41 ± 2.3 |
| MS_LA | 65.21 ± 3.1 | 63.27 ± 2.1 |
| ISMS_LA | 64.27 ± 4.7 | 61.82 ± 5.7 |
| IS_ALA | 55.74 ± 3.1 | 53.86 ± 2.2 |
| MS_ALA | 66.07 ± 2.6 | 63.20 ± 1.5 |
| ISMS_ALA | 67.66 ± 3.4 | 65.08 ± 4.5 |

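The two accuracy columns are not defined in the table. The sketch below assumes the convention commonly used in speech emotion recognition: weighted accuracy (WA) is the fraction of all utterances classified correctly, and unweighted accuracy (UA) is the mean of the per-class recalls, so that frequent classes such as Neutral do not dominate the score. The function names and the toy labels are illustrative only.

```python
from collections import Counter


def weighted_accuracy(y_true, y_pred):
    """Overall accuracy: correct predictions divided by all predictions."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def unweighted_accuracy(y_true, y_pred):
    """Mean per-class recall: every class contributes equally."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / n for c, n in totals.items()) / len(totals)


if __name__ == "__main__":
    # Toy, imbalanced example: 6 neutral, 2 sad, 2 happy utterances.
    y_true = ["neutral"] * 6 + ["sad"] * 2 + ["happy"] * 2
    y_pred = ["neutral"] * 6 + ["sad", "neutral"] + ["neutral", "neutral"]
    print(weighted_accuracy(y_true, y_pred))    # 7 of 10 correct
    print(unweighted_accuracy(y_true, y_pred))  # mean of recalls 1.0, 0.5, 0.0
```

On imbalanced data the two measures diverge, which is why both are usually reported side by side.
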
| Experiment | Weighted Accuracy | Unweighted Accuracy |
|---|---|---|
| Etienne et al. [28] | 64.5 | 61.7 |
| Tzinis et al. [29] | 64.2 | 60.0 |
| Lee et al. [10] | 62.9 | 63.9 |
| Neumann et al. [30] | 62.1 | - |
| Ramet et al. [9] | 68.8 | 63.7 |
| ISMS_ALA (proposed model) | 67.66 ± 3.4 | 65.08 ± 4.5 |

| Training Dataset | Evaluation Dataset | WA | UA |
|---|---|---|---|
| TI | EI | 67.66 ± 3.4 | 65.08 ± 4.5 |
| TI | ER | 73.20 ± 7.4 | 68.37 ± 7.2 |
| TI | EU | 62.60 ± 4.4 | 61.96 ± 6.4 |
| TR | EI | 65.68 ± 3.5 | 60.31 ± 5.4 |
| TR | ER | 73.18 ± 7.7 | 69.43 ± 7.0 |
| TR | EU | 59.58 ± 5.4 | 57.17 ± 4.5 |
| TU | EI | 62.63 | 60.69 ± 5.8 |
| TU | ER | 69.22 | 66.22 |
| TU | EU | 60.70 ± 4.5 | 61.57 ± 7.0 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yu, Y.; Kim, Y.-J. Attention-LSTM-Attention Model for Speech Emotion Recognition and Analysis of IEMOCAP Database. Electronics 2020, 9, 713. https://doi.org/10.3390/electronics9050713
