People Walking Classification Using Automotive Radar

Abstract

1. Introduction

Related Work

2. Radar System Description

2.1. Used Devices

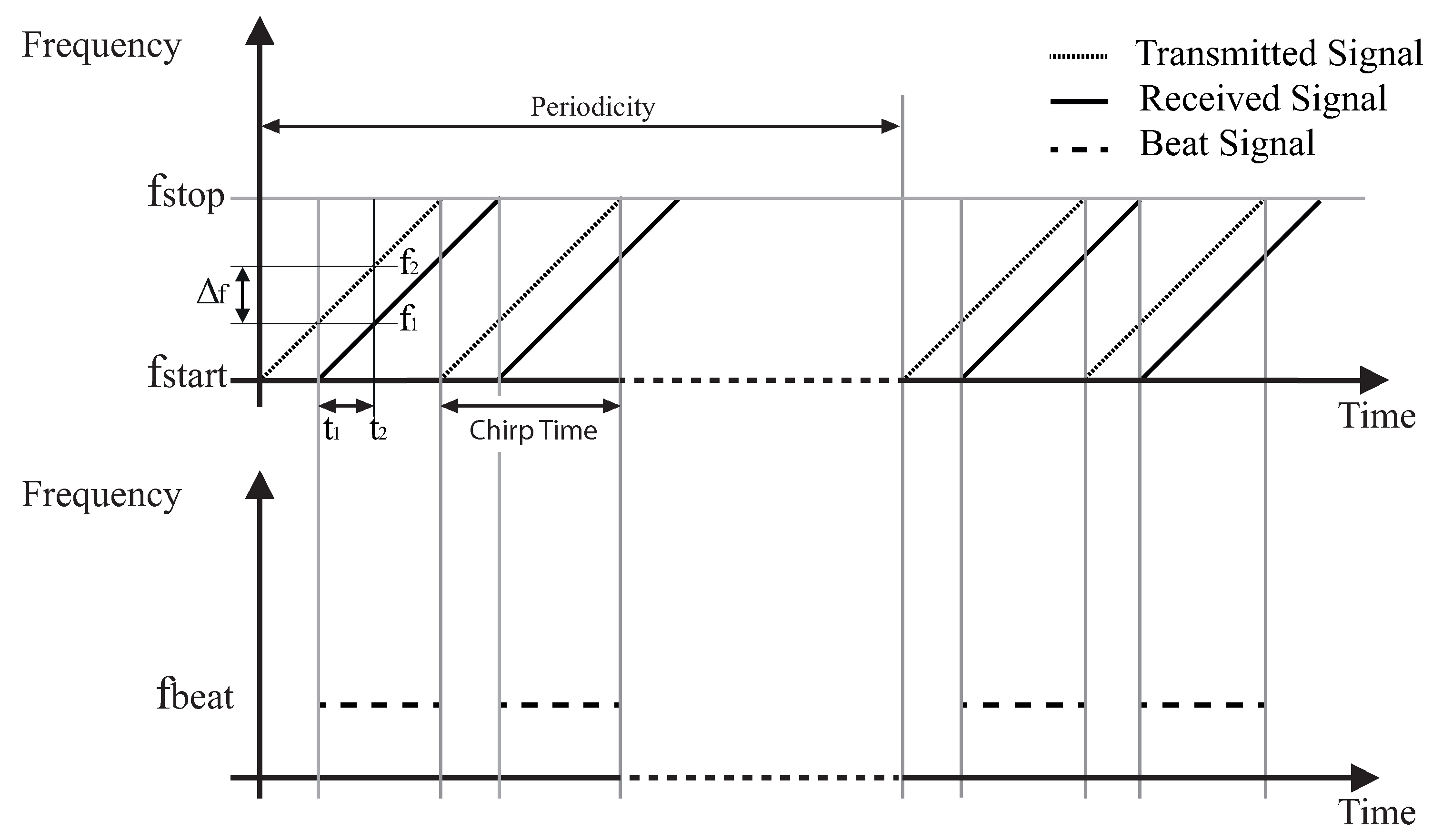

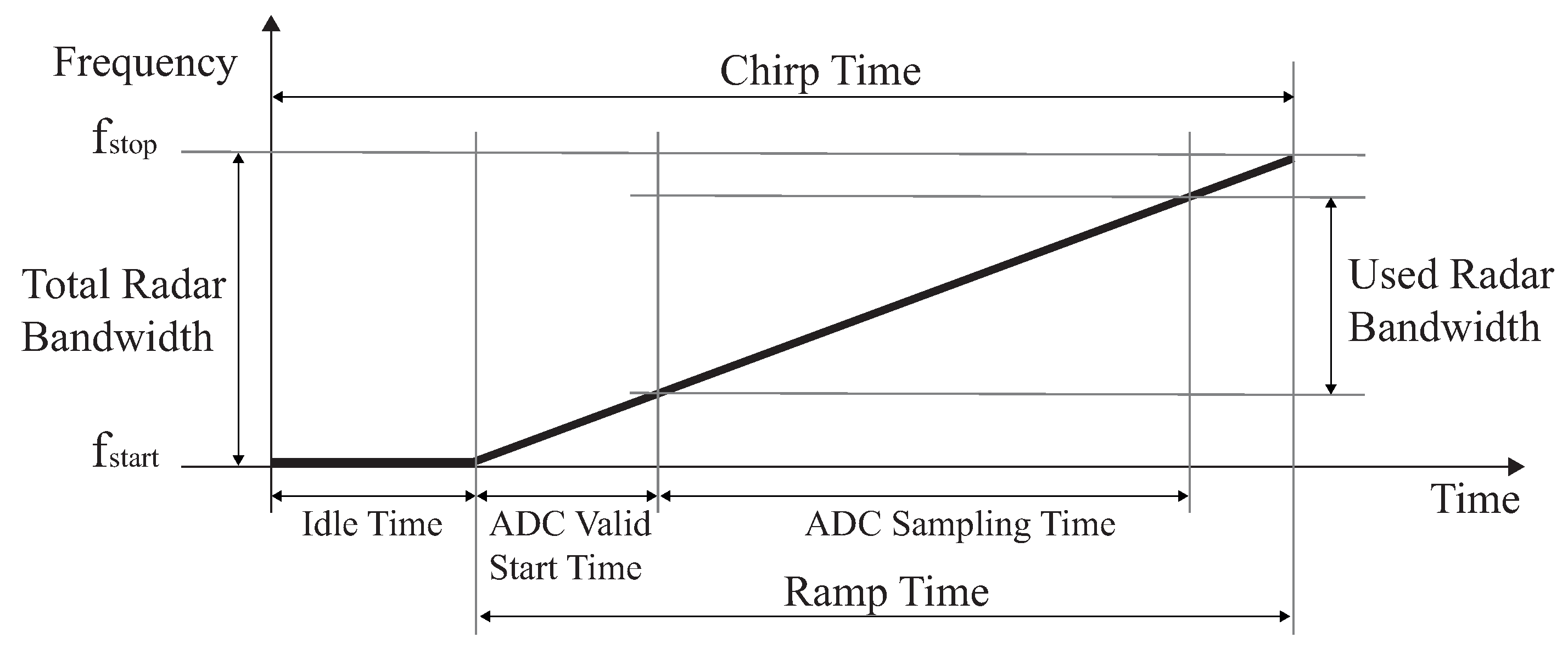

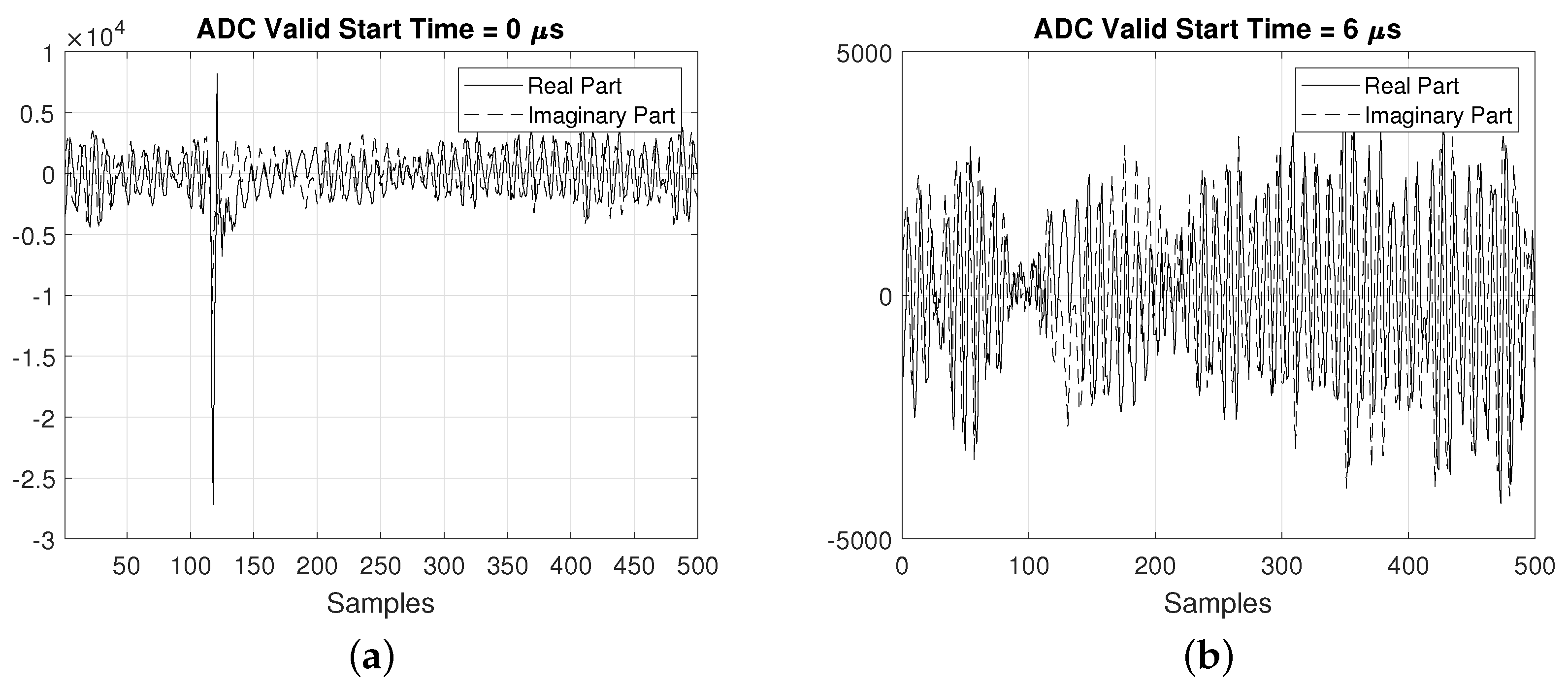

2.2. Transmitted Signals

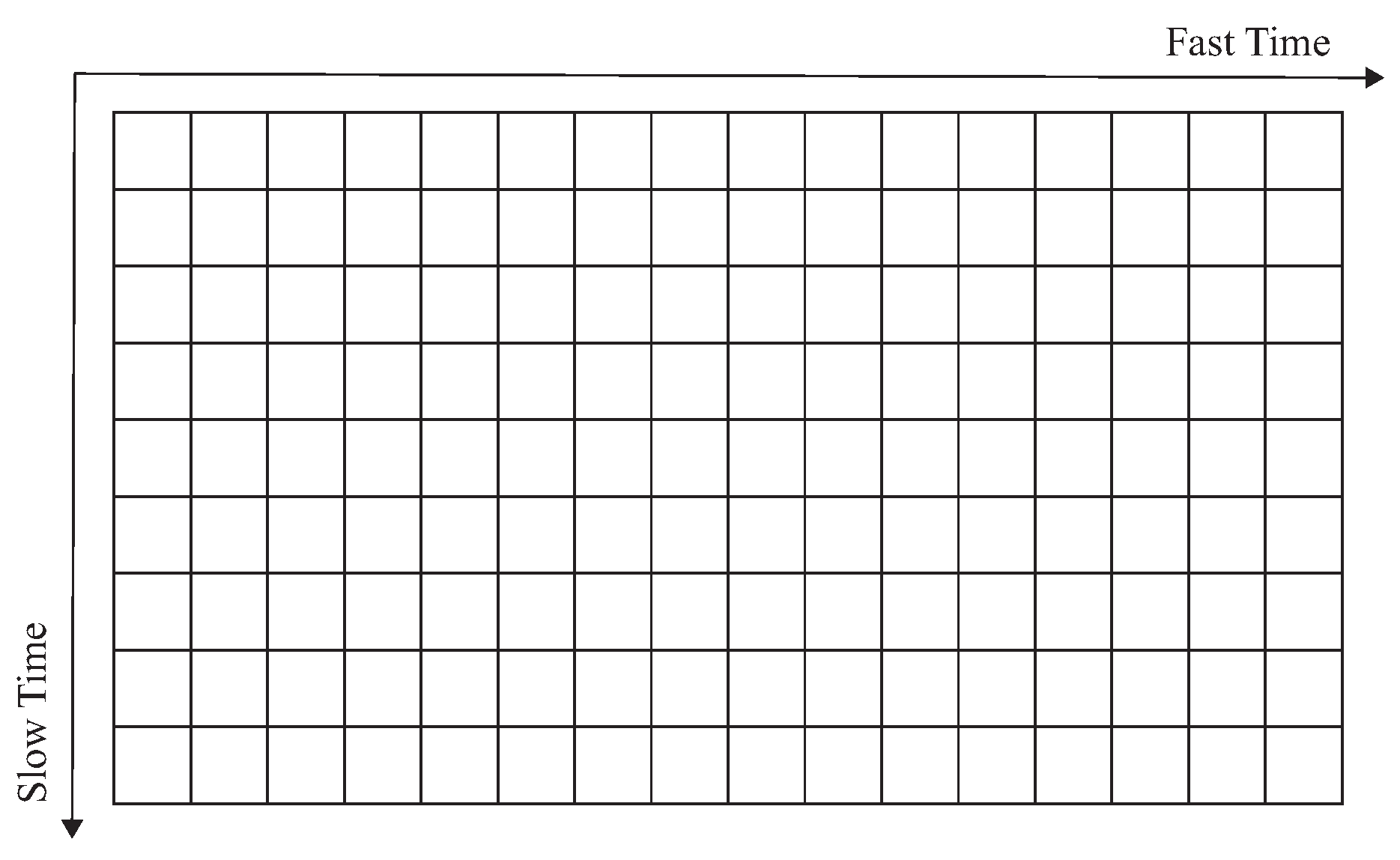

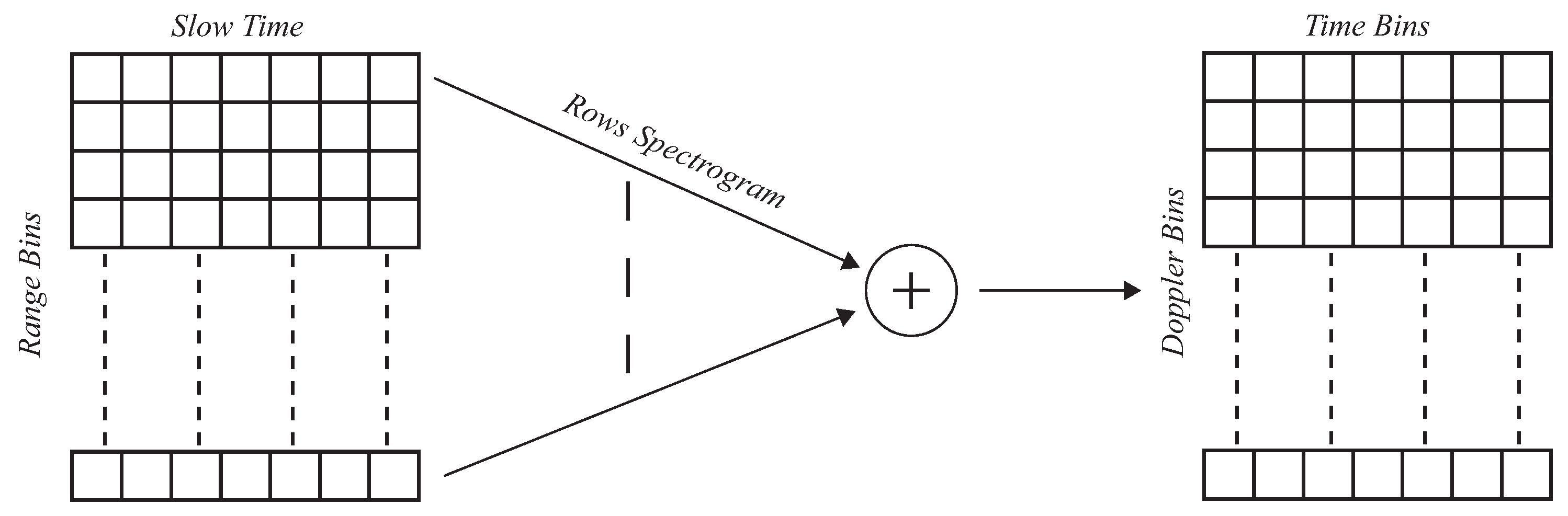

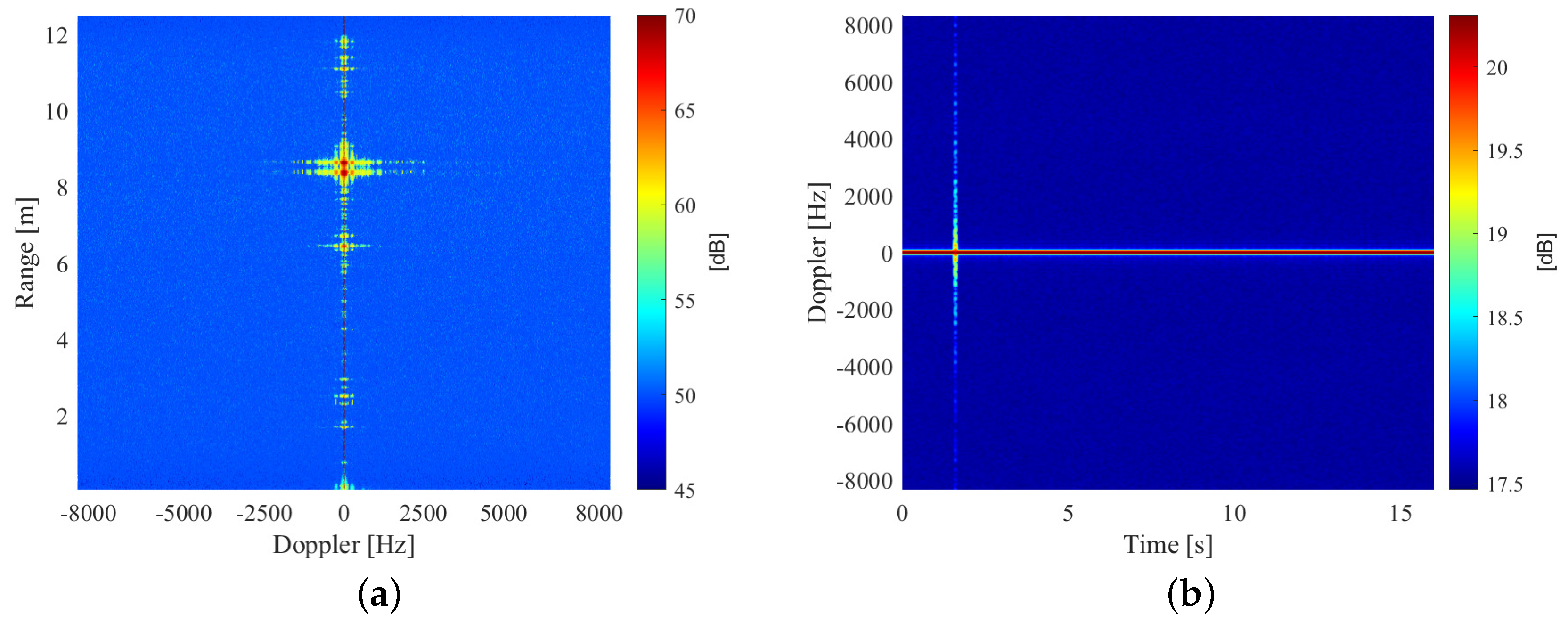

3. Radar Signal Processing

4. Movements Classification

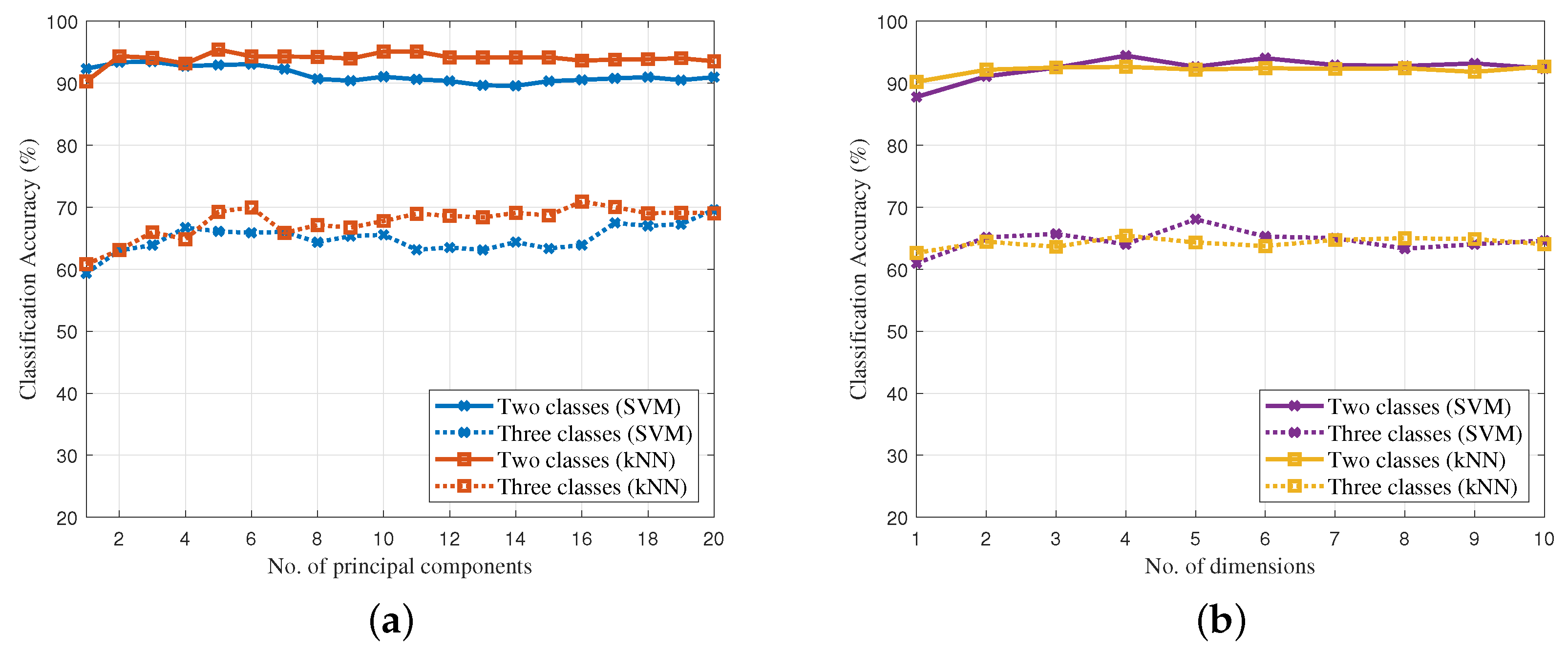

4.1. Principal Component Analysis

4.2. t-distributed Stochastic Neighbor Embedding

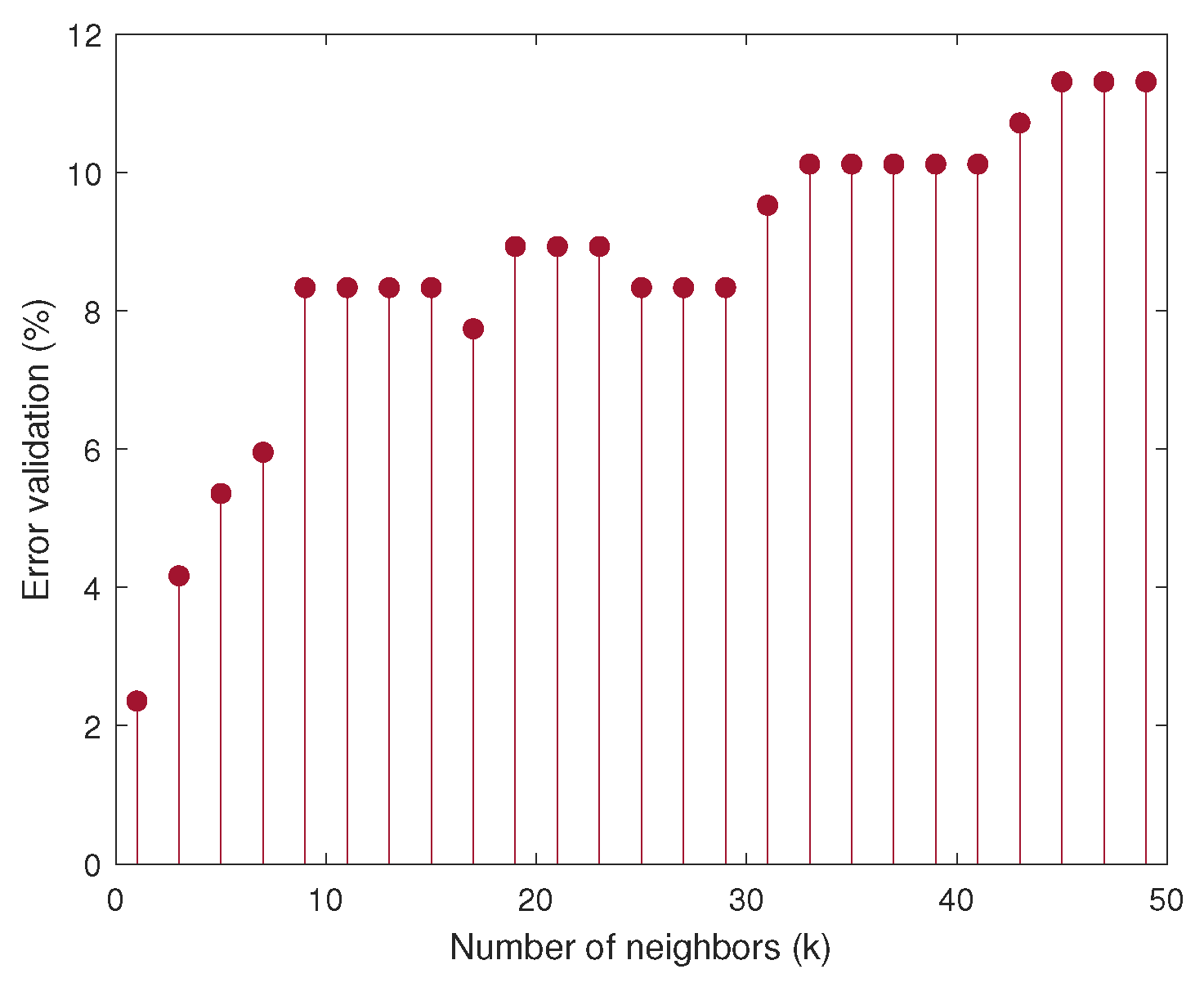

4.3. Classification Algorithms

5. Experimental Results

5.1. Experimental Setup

- Slow walk;

- Fast walk;

- Slow walk with hands in pockets.

5.2. Classification

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Analog-to-Digital Converter |
| FMCW | Frequency Modulated Continuous Wave |
| MIMO | Multiple-Input Multiple-Output |
| NN | Nearest Neighbor |
| PCA | Principal Component Analysis |
| SDR | Software Defined Radio |
| STFT | Short Time Fourier Transform |
| SVM | Support Vector Machine |
| t-SNE | t-distributed Stochastic Neighbor Embedding |

References

- Frazier, L.M. MDR for law enforcement [motion detector radar]. IEEE Potentials 1998, 16, 23–26.

- Mazel, D.S.; Barry, A. Mobile Ravin: Intrusion Detection and Tracking with Organic Airport Radar and Video Systems. In Proceedings of the 40th Annual 2006 International Carnahan Conference on Security Technology, Lexington, KY, USA, 16–19 October 2006; pp. 30–33.

- Zyczkowski, M.; Palka, N.; Trzcinski, T.; Dulski, R.; Kastek, M.; Trzaskawka, P. Integrated radar-camera security system: Experimental results. In Proceedings of the Radar Sensor Technology XV, Orlando, FL, USA, 25–29 April 2011; Volume 8021, p. 80211U.

- Pisa, S.; Pittella, E.; Piuzzi, E. A survey of radar systems for medical applications. IEEE Aerosp. Electron. Syst. Mag. 2016, 31, 64–81.

- Roy, A.; Gale, N.; Hong, L. Automated traffic surveillance using fusion of Doppler radar and video information. Math. Comput. Model. 2011, 54, 531–543.

- Seifert, A.K.; Schäfer, L.; Amin, M.G.; Zoubir, A.M. Subspace Classification of Human Gait Using Radar Micro-Doppler Signatures. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 311–315.

- Chen, V.C. Doppler signatures of radar backscattering from objects with micro-motions. IET Signal Process. 2008, 2, 291–300.

- Tahmoush, D. Review of micro-Doppler signatures. IEE Proc.-Radar Sonar Navig. 2015, 9, 1140–1146.

- Held, P.; Steinhauser, D.; Kamann, A.; Holdgrün, T.; Doric, I.; Koch, A.; Brandmeier, T. Radar-Based Analysis of Pedestrian Micro-Doppler Signatures Using Motion Capture Sensors. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 787–793.

- Prophet, R.; Hoffmann, M.; Vossiek, M.; Sturm, C.; Ossowska, A.; Malik, W.; Lübbert, U. Pedestrian Classification with a 79 GHz Automotive Radar Sensor. In Proceedings of the 2018 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–6.

- Cippitelli, E.; Fioranelli, F.; Gambi, E.; Spinsante, S. Radar and RGB-Depth Sensors for Fall Detection: A Review. IEEE Sens. J. 2017, 17, 3585–3604.

- Zeng, Z.; Amin, M.G.; Shan, T. Arm Motion Classification Using Time-Series Analysis of the Spectrogram Frequency Envelopes. Remote Sens. 2020, 12, 454.

- Ma, X.; Zhao, R.; Liu, X.; Kuang, H.; Al-qaness, M.A. Classification of Human Motions Using Micro-Doppler Radar in the Environments with Micro-Motion Interference. Sensors 2019, 19, 2598.

- Kwon, J.; Kwak, N. Radar Application: Stacking Multiple Classifiers for Human Walking Detection Using Micro-Doppler Signals. Appl. Sci. 2019, 9, 3534.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Analysis of polarimetric multistatic human micro-Doppler classification of armed/unarmed personnel. In Proceedings of the 2015 IEEE Radar Conference (RadarCon), Arlington, VA, USA, 10–15 May 2015; pp. 0432–0437.

- Ricci, R.; Balleri, A. Recognition of humans based on radar micro-Doppler shape spectrum features. IEE Proc.-Radar Sonar Navig. 2015, 9, 1216–1223.

- Fioranelli, F.; Ritchie, M.; Griffiths, H. Performance Analysis of Centroid and SVD Features for Personnel Recognition Using Multistatic Micro-Doppler. IEEE Geosci. Remote Sens. Lett. 2016, 13, 725–729.

- Vandersmissen, B.; Knudde, N.; Jalalvand, A.; Couckuyt, I.; Bourdoux, A.; De Neve, W.; Dhaene, T. Indoor Person Identification Using a Low-Power FMCW Radar. IEEE Geosci. Remote Sens. Lett. 2018, 56, 3941–3952.

- Gambi, E.; Ciattaglia, G.; De Santis, A. People Movement Analysis with Automotive Radar. In Proceedings of the 2019 IEEE 23rd International Symposium on Consumer Technologies (ISCT), Ancona, Italy, 19–21 June 2019; pp. 233–237.

- Dekker, B.; Jacobs, S.; Kossen, A.S.; Kruithof, M.C.; Huizing, A.G.; Geurts, M. Gesture recognition with a low power FMCW radar and a deep convolutional neural network. In Proceedings of the 2017 European Radar Conference (EURAD), Nuremberg, Germany, 11–13 October 2017; pp. 163–166.

- Anishchenko, L.; Alekhin, M.; Tataraidze, A.; Ivashov, S.; Bugaev, A.S.; Soldovieri, F. Application of step-frequency radars in medicine. In Proceedings of the Radar Sensor Technology XVIII, Baltimore, MD, USA, 5–9 May 2014; Volume 9077, pp. 524–530.

- Rissacher, D.; Galy, D. Cardiac radar for biometric identification using nearest neighbour of continuous wavelet transform peaks. In Proceedings of the IEEE International Conference on Identity, Security and Behavior Analysis (ISBA 2015), Hong Kong, China, 23–25 March 2015; pp. 1–6.

- Ciattaglia, G.; Senigagliesi, L.; De Santis, A.; Ricciuti, M. Contactless measurement of physiological parameters. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; pp. 22–26.

- Zabalza, J.; Clemente, C.; Di Caterina, G.; Ren, J.; Soraghan, J.J.; Marshall, S. Robust PCA micro-doppler classification using SVM on embedded systems. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 2304–2310.

- Jokanović, B.; Amin, M.; Ahmad, F.; Boashash, B. Radar fall detection using principal component analysis. In Proceedings of the Radar Sensor Technology XX, Baltimore, MD, USA, 17–21 April 2016; Volume 9829, p. 982916.

- Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

- Li, X.; He, Y.; Jing, X. A Survey of Deep Learning-Based Human Activity Recognition in Radar. Remote Sens. 2019, 11, 1068.

- Lee, S.S.; Choi, S.T.; Choi, S.I. Classification of gait type based on deep learning using various sensors with smart insole. Sensors 2019, 19, 1757.

- Seyfioğlu, M.S.; Gürbüz, S.Z.; Özbayoğlu, A.M.; Yüksel, M. Deep learning of micro-Doppler features for aided and unaided gait recognition. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017; pp. 1125–1130.

- Ramasubramanian, K.; Ramaiah, K.; Aginskiy, A. Moving from Legacy 24 GHz to State-of-the-Art 77 GHz Radar. Available online: http://www.ti.com/lit/wp/spry312/spry312.pdf (accessed on 30 March 2020).

- Verlekar, T.T.; Soares, L.D.; Correia, P.L. Automatic Classification of Gait Impairments Using a Markerless 2D Video-Based System. Sensors 2018, 18, 2743.

- Lee, L.; Grimson, W.E.L. Gait analysis for recognition and classification. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21–21 May 2002; pp. 155–162.

- Elkurdi, A.; Soufian, M.; Nefti-Meziani, S. Gait speeds classifications by supervised modulation-based machine-learning using kinect camera. J. Med. Res. Innov. 2018, 2, 1–6.

- Latorre, J.; Colomer, C.; Alcañiz, M.; Llorens, R. Gait analysis with the Kinect v2: Normative study with healthy individuals and comprehensive study of its sensitivity, validity, and reliability in individuals with stroke. J. Neuroeng. Rehabil. 2019, 16, 97.

- Texas Instruments. AWR1642 Single-Chip 77- and 79-GHz FMCW Radar Sensor. Available online: http://www.ti.com/lit/ds/symlink/awr1642.pdf (accessed on 30 March 2020).

- Patole, S.M.; Torlak, M.; Wang, D.; Ali, M. Automotive radars: A review of signal processing techniques. IEEE Signal Process. Mag. 2017, 34, 22–35.

- Ancortek. SDR 2400AD. Available online: http://ancortek.com/wp-content/uploads/2019/04/SDR-2400AD-Datasheet.pdf (accessed on 30 March 2020).

- Li, J.; Stoica, P. MIMO Radar with Colocated Antennas. IEEE Signal Process. Mag. 2007, 24, 106–114.

- Brennan, P.; Huang, Y.; Ash, M.; Chetty, K. Determination of sweep linearity requirements in FMCW radar systems based on simple voltage-controlled oscillator sources. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 1594–1604.

- Alizadeh, M.; Shaker, G.; De Almeida, J.C.M.; Morita, P.P.; Safavi-Naeini, S. Remote monitoring of human vital signs using mm-wave FMCW radar. IEEE Access 2019, 7, 54958–54968.

- Mitra, S.K.; Kuo, Y. Digital Signal Processing: A Computer-Based Approach; McGraw-Hill: New York, NY, USA, 2006; Volume 2.

- Cohen, L. Time-Frequency Analysis; Prentice Hall: Upper Saddle River, NJ, USA, 1995; Volume 778.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. 1987, 2, 37–52.

- Maaten, L.V.D.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Bryan, J.D.; Kwon, J.; Lee, N.; Kim, Y. Application of ultra-wide band radar for classification of human activities. IEE Proc.-Radar Sonar Navig. 2012, 6, 172–179.

- Björklund, S.; Petersson, H.; Hendeby, G. Features for micro-Doppler based activity classification. IEE Proc.-Radar Sonar Navig. 2015, 9, 1181–1187.

| Parameter | Value |
|---|---|
| | 77 GHz |
| S | 60.012 MHz/ |
| | 100 |
| ADC Valid Start Time | 6 |
| | 10 Msps |
| | 60 |
| | 512 |
| | 400 |
| No. of chirps per frame | 128 |
| Periodicity | 40 ms |
| Used Radar Bandwidth | 3.6 GHz |
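
As a consistency check on the parameters above, the bandwidth swept by a linear FMCW chirp is the product of the chirp slope and the ramp duration, and the theoretical range resolution follows as c/(2B). The sketch below assumes that the slope S is expressed in MHz/µs and that the unlabeled value 60 is the ramp duration in µs; neither unit is stated in the table as it appears here, so both are assumptions made only for illustration.

```python
# Consistency check on the chirp parameters.
# ASSUMPTIONS: slope in MHz/us, ramp duration of 60 us (units not given in the table).
C = 299_792_458.0  # speed of light in m/s


def swept_bandwidth_hz(slope_mhz_per_us: float, ramp_us: float) -> float:
    """Bandwidth swept by a linear chirp: slope times ramp duration."""
    return slope_mhz_per_us * ramp_us * 1e6  # MHz -> Hz


def range_resolution_m(bandwidth_hz: float) -> float:
    """Theoretical FMCW range resolution, c / (2 B)."""
    return C / (2.0 * bandwidth_hz)


bandwidth = swept_bandwidth_hz(60.012, 60.0)
resolution = range_resolution_m(bandwidth)

print(f"swept bandwidth: {bandwidth / 1e9:.2f} GHz")
print(f"range resolution: {resolution * 100:.2f} cm")
```

Under these assumptions the product comes out at roughly 3.6 GHz, which agrees with the "Used Radar Bandwidth" row, and the corresponding range resolution is about 4.2 cm.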

| Kernel | Linear | Gaussian | Polynomial |
|---|---|---|---|
| Validation error (%) | 4.46 | 17.26 | 33.33 |
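
For readers unfamiliar with the three kernel families compared above, the following minimal sketch writes them out in their common textbook form. The parameter names and default values (`gamma`, `degree`, `coef0`) are hypothetical; the settings actually used for the reported validation errors are not given in this table.

```python
import math
from typing import Sequence


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Inner product of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x: Sequence[float], y: Sequence[float]) -> float:
    """k(x, y) = <x, y>"""
    return dot(x, y)


def gaussian_kernel(x: Sequence[float], y: Sequence[float], gamma: float = 1.0) -> float:
    """k(x, y) = exp(-gamma * ||x - y||^2); gamma is a hypothetical setting."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


def polynomial_kernel(x: Sequence[float], y: Sequence[float],
                      degree: int = 3, coef0: float = 1.0) -> float:
    """k(x, y) = (<x, y> + coef0)^degree; degree and coef0 are hypothetical."""
    return (dot(x, y) + coef0) ** degree


u, v = [1.0, 2.0], [3.0, 4.0]
print(linear_kernel(u, v), gaussian_kernel(u, v, gamma=0.1), polynomial_kernel(u, v, degree=2))
```

A linear kernel corresponds to a separating hyperplane in the original feature space, while the Gaussian and polynomial kernels allow curved decision boundaries at the cost of extra parameters to tune.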

| True/Predicted | S | F |
|---|---|---|
| Slow Walk (S) | 110 (109) | 2 (3) |
| Fast Walk (F) | 9 (8) | 47 (48) |

| True/Predicted | S | F | SH |
|---|---|---|---|
| Slow Walk (S) | 33 (32) | 2 (1) | 21 (23) |
| Fast Walk (F) | 4 (5) | 49 (48) | 3 (3) |
| Slow Walk with Hands in Pockets (SH) | 16 (22) | 1 (2) | 39 (32) |
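
The overall accuracy implied by the two confusion matrices above can be recomputed directly from the counts. The sketch below uses only the values outside the parentheses; what the parenthesized values refer to is not stated alongside the tables, so they are left out. The function names are illustrative.

```python
from typing import List

Matrix = List[List[int]]


def accuracy(matrix: Matrix) -> float:
    """Fraction of samples on the diagonal (true class == predicted class)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_recall(matrix: Matrix) -> List[float]:
    """For each true class (row), the share of its samples predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


# Rows are true classes, columns are predicted classes (values outside parentheses).
two_class = [[110, 2],
             [9, 47]]
three_class = [[33, 2, 21],
               [4, 49, 3],
               [16, 1, 39]]

print(f"two-class accuracy:   {accuracy(two_class):.1%}")
print(f"three-class accuracy: {accuracy(three_class):.1%}")
print("three-class recall:  ", [f"{r:.1%}" for r in per_class_recall(three_class)])
```

Both matrices sum to 168 samples, and the resulting accuracies of about 93.5% and 72% match the best-accuracy figures reported for the two-activity and three-activity cases. The per-class recall makes the main source of error visible: slow walk and slow walk with hands in pockets are frequently confused with each other, while fast walk is rarely misclassified.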

| Reference | Radar Type | No. of Activities | Dataset Size | Algorithm | Best Accuracy |
|---|---|---|---|---|---|
| [*] | FMCW mmWave | 2 | 19 subjects, 168 acquisitions | PCA/t-SNE + k-NN/SVM | 93.5% |
| [*] | FMCW mmWave | 3 | 19 subjects, 168 acquisitions | PCA/t-SNE + k-NN/SVM | 72% |
| [45] | Ultra Wide Band | 7 | 8 subjects, 280 acquisitions | PCA + SVM | 89.1% |
| [46] | FMCW mmWave | 5 | 3 subjects, 95 acquisitions | CV/TV + SVM | 91% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Senigagliesi, L.; Ciattaglia, G.; De Santis, A.; Gambi, E. People Walking Classification Using Automotive Radar. Electronics 2020, 9, 588. https://doi.org/10.3390/electronics9040588