1. Introduction

Conventional artificial intelligence (AI) and machine learning systems are implemented on remote “cloud” servers comprising graphics processing units (GPUs). However, with the rapid growth of edge devices, there is a need to condense AI algorithms and implement them in energy-constrained edge devices. While AI algorithms are conventionally realized in hardware using digital circuits, analog implementation of AI algorithms can potentially reduce energy consumption by several orders of magnitude [1] by eliminating data movement from the central processing unit (CPU) to the memory. However, existing analog AI implementations [1,2,3,4,5] have only demonstrated fundamental multiply-accumulate capabilities [2,3,4] or implemented sub-circuits for linear classification [5,6].

In this work, we propose a fully-integrated artificial neural network (ANN) implemented entirely using analog circuits, comprising a custom non-linear activation function and current-domain multiply-and-accumulate (MAC). The proposed CMOS implementation of the ANN can be considered a multi-layer fully connected neural network that consists of an input layer, a non-linear hidden layer and an output layer. The hidden layer of the ANN applies a non-linear transformation of the input from one vector space to another. Such hierarchical non-linear transformation provides a way to increase the separation between the decision boundaries and ultimately improve the efficiency of the machine learner over standard linear algorithms (e.g., logistic regression [7], SVM [8]).

The performance of our analog machine learning (ML) classifier is validated with the widely used Wisconsin Breast Cancer Dataset (WBCD) [9]. The WBCD dataset is obtained through digital pathology, which utilizes virtual microscopy to digitize biological specimen slides with computer-based technologies. The digitized slides can then be used to perform an accurate analysis of the specimen under observation. The WBCD dataset comprises biopsy information from 699 patients with nine attributes each and is grouped into two classes: benign (458 patients) and malignant (241 patients). The WBCD dataset has been chosen for this work since classification accuracy metrics on it are widely reported. Since the classification complexity, and hence accuracy, varies significantly with the dataset, using a popular dataset is useful for benchmarking. Typically, cancer classification using the WBCD dataset is performed using digital AI algorithms implemented on power-hungry GPUs [10,11,12,13]. Jouni [14] presented an optimal activation function for a CMOS-based breast cancer tumor classifier implementation which minimizes classification error as well as the area consumed by the classifier. Zhao [15] presented CMOS-based X-ray imagers with low electronic noise for digital breast tomosynthesis (DBT).

The motivation of our work is to demonstrate the feasibility of implementing a fully integrated AI chip that is capable of making an accurate classification while consuming low area and power. The proposed classifier comprises one hidden layer with a common-source (CS) amplifier based non-linear activation function [16]. The entire ANN is fully integrated on-chip and implemented in a 65 nm CMOS process. The prototype consumes 50 µW power from a 1.1 V supply, leading to a 160 fJ/classification energy efficiency, which is several orders of magnitude better than existing CMOS classifiers [1,5,17]. While post-layout simulation results on the proposed cancer classifier architecture were presented in [18], chip measurement results from a 65 nm CMOS prototype are presented in this paper. The rest of this paper is organized as follows: the proposed architecture is discussed in Section 2, measurement results are presented in Section 3, future research directions are discussed in Section 4, and the paper is concluded in Section 5.

2. Proposed Architecture

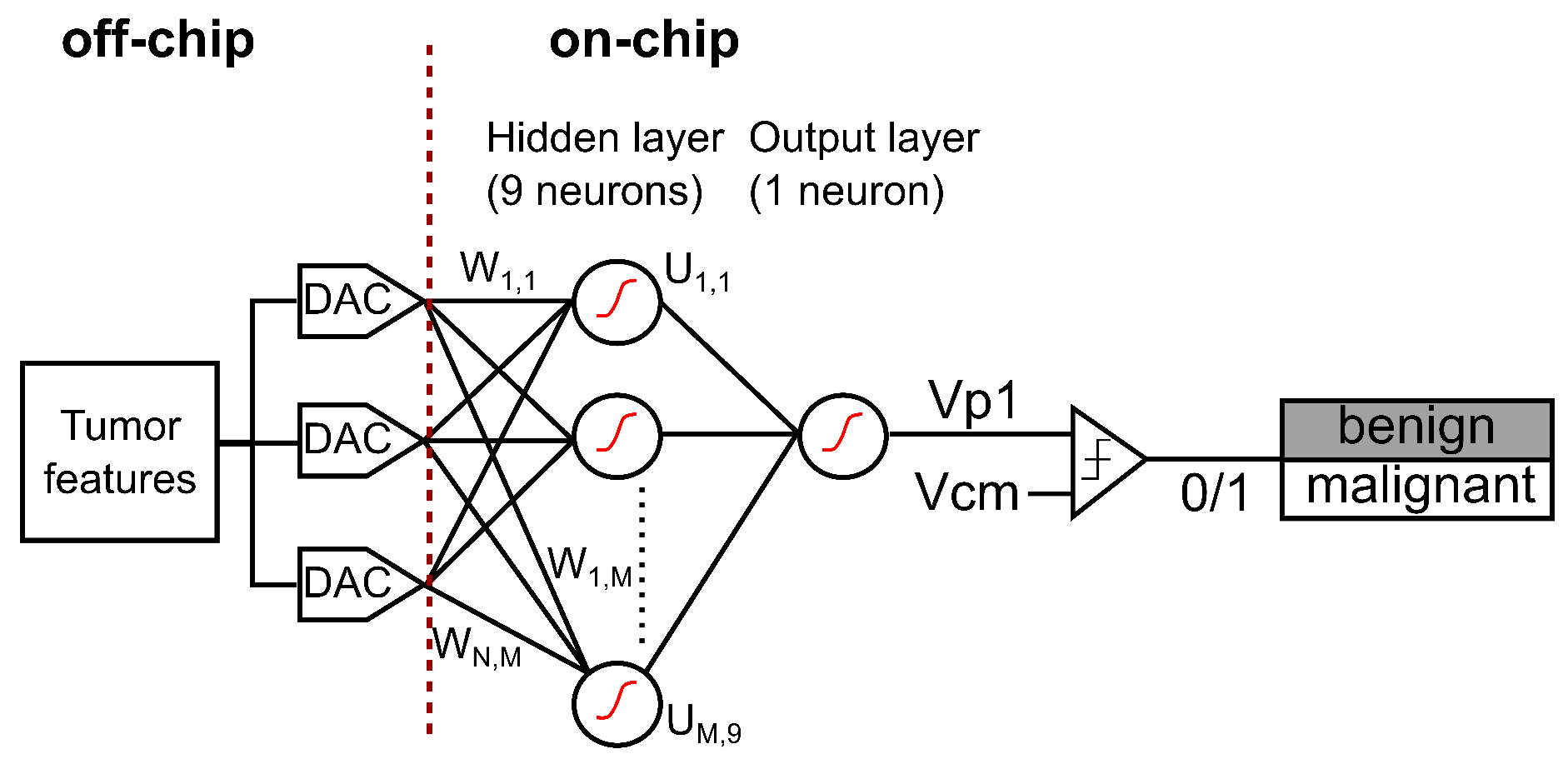

Figure 1 depicts the architecture of the proposed classifier. The classifier comprises one hidden layer with nine neurons and an output layer with one neuron. The nine attributes of the tumor are extracted from biopsy data and fed into the classifier. The nine attributes recorded from the tumor are as follows: (i) clump thickness, (ii) uniformity of cell size, (iii) uniformity of cell shape, (iv) marginal adhesion, (v) single epithelial cell size, (vi) bare nuclei, (vii) bland chromatin, (viii) normal nucleoli and (ix) mitoses. The output from the last layer is then sent to an argmax layer for deciding the class of tumor, i.e., benign or malignant. The argmax layer is realized using a comparator whose output is “0” or “1” depending on whether the tumor is benign or malignant.

Fundamentally, an ML classifier performs an arithmetic operation which can be defined as

$$y = f\left(\sum_{i} w_i x_i\right)$$

where $f$ is a non-linear or linear activation function, $w_i$ denotes the i-th element of the weight vector and $x_i$ is the i-th element of the input vector to the activation function. The proposed classifier architecture implements the activation function in the analog domain, thus leveraging the full physical properties of the MOS device. This yields higher area and energy efficiency than a digital implementation of the activation function, which operates the MOS device as a switch.
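As a minimal illustration of the weighted-sum-then-activation operation described above, the following Python sketch evaluates one neuron. The function name `neuron_output` and the use of `math.tanh` as a stand-in activation are assumptions made for illustration only; the proposed classifier uses the CS amplifier transfer function instead.

```python
import math
from typing import Callable, Sequence


def neuron_output(
    weights: Sequence[float],
    inputs: Sequence[float],
    activation: Callable[[float], float],
) -> float:
    """Return activation(sum_i w_i * x_i) for a single neuron."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    # Multiply-accumulate step.
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    # Activation step (tanh below is only a placeholder non-linearity).
    return activation(weighted_sum)


# Hypothetical example with binary weights and three inputs.
y = neuron_output([1, 0, 1], [0.3, 0.5, 0.4], math.tanh)
```

Passing the activation as a parameter keeps the sketch independent of any particular non-linearity, so a measured or simulated transfer curve could be substituted for `math.tanh`.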

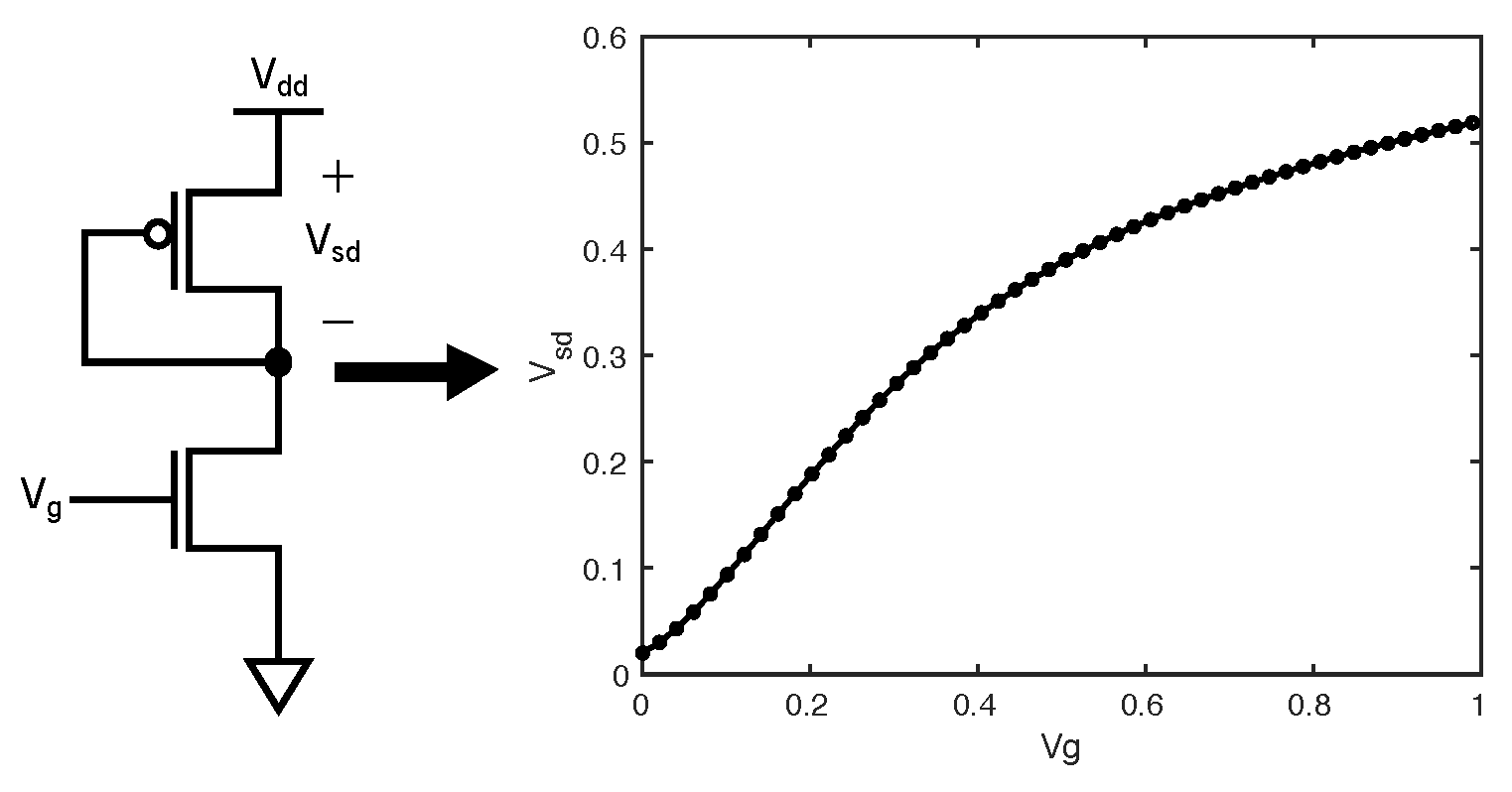

Figure 2 depicts the neuron employed in the proposed cancer classifier, which is a single-stage CS amplifier. The CS amplifier comprises an NMOS input transistor with a diode-connected PMOS load. The inputs are fed to the gate of the NMOS and the AI model weights are encoded as widths of the NMOS transistors. The drain current ($I_D$) of the NMOS is a function of the width ($W$) and input voltage ($V_{in}$). Hence, the $I_D$ of the NMOS is modulated depending on $V_{in}$ and $W$, giving rise to a transfer function as illustrated in Figure 2. We leverage the natural non-linearity of the CS amplifier to implement the activation function for the hidden and output layers. Our approach simplifies the hardware complexity by using the approximate transfer function depicted in Figure 2 instead of designing circuits that match commonly used activation functions like tanh [19].

To get a good classification accuracy with the proposed approximate activation function, we use a hardware-software co-design methodology.

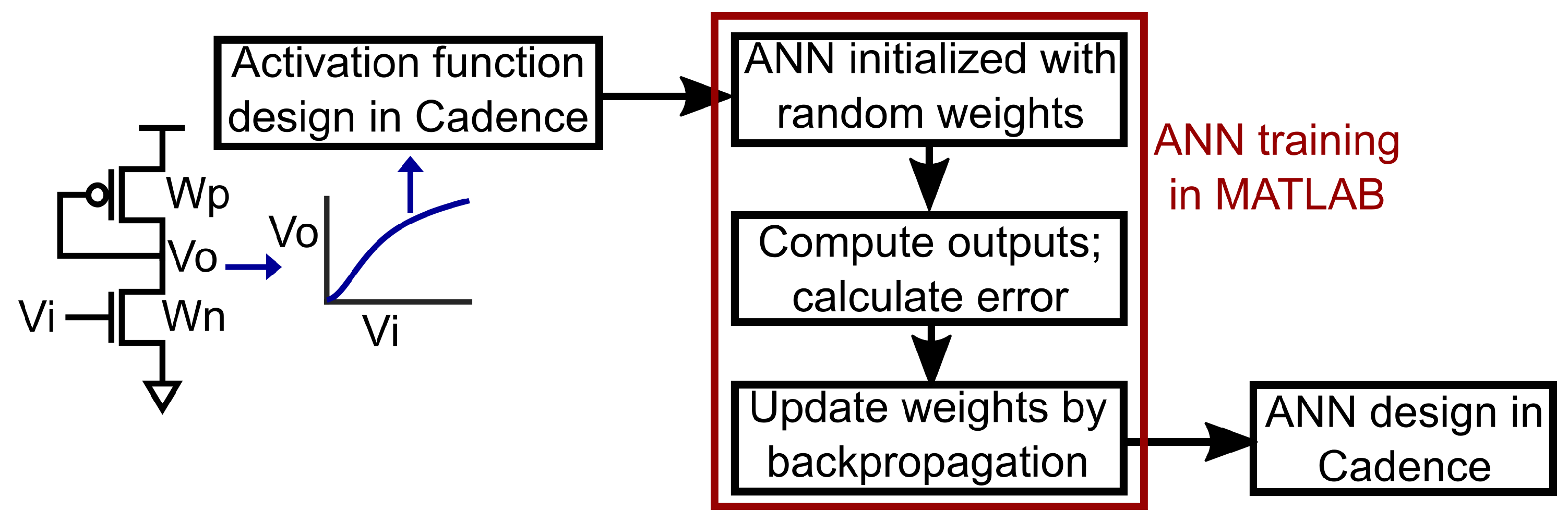

Figure 3 illustrates the proposed design paradigm. First, a CS amplifier is designed and characterized in SPICE. The V-I characteristics of the CS amplifier are then incorporated into the ANN training model using a lookup table. The ANN is then trained using Matlab. The trained weights are then acquired from Matlab and incorporated into the ANN SPICE model as widths of NMOS transistors in the activation function circuit. Finally, the ANN SPICE model is simulated with the test dataset to validate the classifier.
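The lookup-table step of this flow can be sketched in Python as a piecewise-linear interpolation over sampled points of the amplifier transfer curve. This is only an illustration of the idea, not the Matlab code used in this work: the function name `lut_activation` and the table values `LUT_VIN` and `LUT_OUT` are hypothetical placeholders, not SPICE data.

```python
from bisect import bisect_right
from typing import Sequence

# Hypothetical sample points of a transfer curve (NOT measured or simulated data).
LUT_VIN = [0.0, 0.3, 0.6]   # input voltages in volts, strictly increasing
LUT_OUT = [1.0, 0.8, 0.2]   # corresponding outputs, arbitrary units


def lut_activation(
    v_in: float,
    lut_vin: Sequence[float] = LUT_VIN,
    lut_out: Sequence[float] = LUT_OUT,
) -> float:
    """Evaluate a tabulated activation by linear interpolation.

    Inputs outside the table range are clamped to the end values.
    """
    if v_in <= lut_vin[0]:
        return lut_out[0]
    if v_in >= lut_vin[-1]:
        return lut_out[-1]
    hi = bisect_right(lut_vin, v_in)  # first grid point strictly above v_in
    lo = hi - 1
    frac = (v_in - lut_vin[lo]) / (lut_vin[hi] - lut_vin[lo])
    return lut_out[lo] + frac * (lut_out[hi] - lut_out[lo])
```

A denser table reduces the interpolation error; the clamping at the ends is a design choice of this sketch and may differ from how the training model treats out-of-range inputs.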

Algorithm 1 describes the pseudo-code used for training the ANN in Matlab from the CS amplifier parameters extracted from SPICE simulations. The ANN weights are initialized with random values and the back-propagation algorithm updates the weights as the AI model iterates through each input from the training set. Stochastic gradient descent [20] computes the derivative of the mean squared error with respect to the current weights and computes the new weights as a function of the learning rate, error derivative, and current weights. The WBCD dataset is split randomly into a training set with 419 samples and a test set with 280 samples. Each sample has nine attributes with integer values in the range 0–10. The attributes are scaled to fit into a 0.27 V–0.6 V range before being fed into the ANN circuit. The weights are constrained to {0,1}, which significantly reduces the area cost of implementing the classifier on-chip.
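The attribute scaling can be illustrated with a short Python sketch. It assumes a linear mapping from the stated 0–10 attribute range onto the 0.27 V–0.6 V input range; the text does not specify the exact mapping, and the function name `scale_attribute` is hypothetical.

```python
def scale_attribute(
    value: float,
    raw_min: float = 0.0,
    raw_max: float = 10.0,
    v_min: float = 0.27,
    v_max: float = 0.6,
) -> float:
    """Linearly map a raw attribute value onto the classifier input voltage range."""
    if not raw_min <= value <= raw_max:
        raise ValueError("attribute value outside the expected range")
    span = (v_max - v_min) / (raw_max - raw_min)
    return v_min + (value - raw_min) * span


# One hypothetical sample with nine attributes, converted to input voltages.
sample = [5, 1, 1, 1, 2, 1, 3, 1, 1]
voltages = [scale_attribute(a) for a in sample]
```

Keeping the range limits as parameters makes it easy to adapt the sketch if a different attribute range or input voltage window is used.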

| Algorithm 1 ANN Training pseudo-code. |

- […]
- for i < Number of Training Iterations do
  - for j < Size(Train set) do
    - […]
    - if […] then
      - […]
    - end if
    - […]
  - end for
  - […]
- end for
- if W1 > 0 then
  - W1 […]
- else
  - W1 […]
- end if
- if W2 > 0 then
  - W2 […]
- else
  - W2 […]
- end if
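The training procedure described in the text (random initialization, per-sample back-propagation with stochastic gradient descent on the squared error, then thresholding the weights at zero) can be sketched in Python as follows. This is a hedged illustration rather than the Matlab code used in this work: `math.tanh` replaces the lookup-table activation, the mapping of positive weights to 1 and all others to 0 is inferred from the stated {0,1} constraint, and all names and default values (`train`, `binarize`, `n_hidden`, `learning_rate`, `seed`) are assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def forward(w1: Matrix, w2: Sequence[float], x: Sequence[float]) -> Tuple[List[float], float]:
    """Return hidden activations and the scalar output for one sample."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    output = math.tanh(sum(w * h for w, h in zip(w2, hidden)))
    return hidden, output


def train(
    samples: Sequence[Sequence[float]],
    labels: Sequence[float],
    n_hidden: int = 5,
    n_iterations: int = 40,
    learning_rate: float = 0.1,
    seed: int = 0,
) -> Tuple[Matrix, List[float]]:
    """Train a one-hidden-layer network with per-sample gradient descent."""
    rng = random.Random(seed)
    n_inputs = len(samples[0])
    # Random initialization of hidden-layer (w1) and output-layer (w2) weights.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    for _ in range(n_iterations):
        for x, target in zip(samples, labels):
            hidden, output = forward(w1, w2, x)
            # Gradient of 0.5 * (output - target)^2 through the output tanh.
            delta_out = (output - target) * (1.0 - output ** 2)
            # Hidden-layer deltas, computed before the output weights change.
            delta_hidden = [delta_out * w2[k] * (1.0 - hidden[k] ** 2) for k in range(n_hidden)]
            for k in range(n_hidden):
                w2[k] -= learning_rate * delta_out * hidden[k]
                for i in range(n_inputs):
                    w1[k][i] -= learning_rate * delta_hidden[k] * x[i]
    return w1, w2


def binarize(weights: Sequence[float]) -> List[int]:
    """Threshold weights at zero (assumed mapping: positive -> 1, otherwise 0)."""
    return [1 if w > 0 else 0 for w in weights]
```

A possible usage is `w1, w2 = train(samples, labels)` followed by `[binarize(row) for row in w1]` and `binarize(w2)`, which yields weights that could be mapped to the presence or absence of a unit-width transistor.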

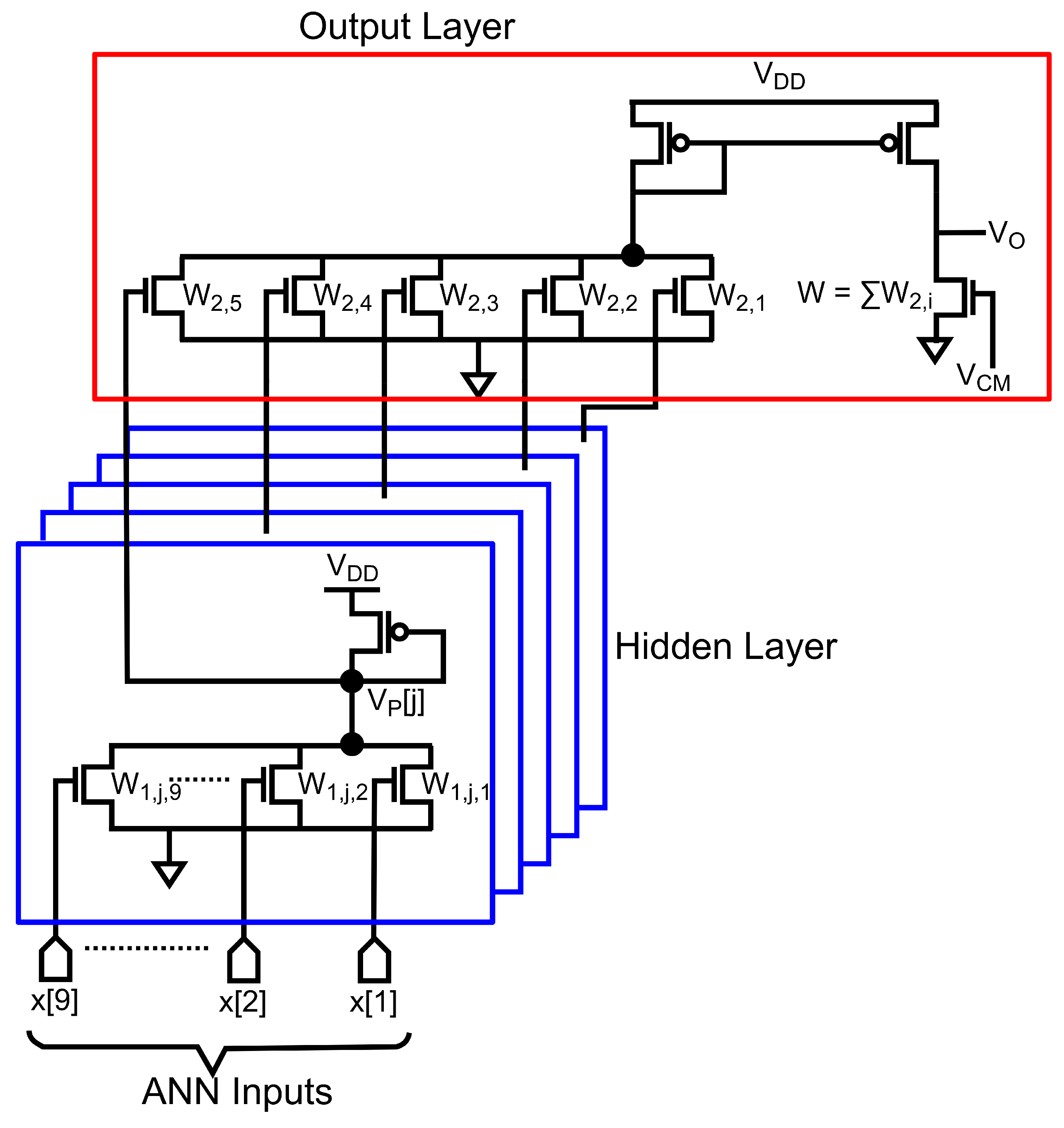

Figure 4 depicts the transistor-level schematic of the proposed classifier. The classifier comprises one input layer with nine inputs, one hidden layer with five neurons and one output layer with one neuron. As mentioned earlier, weights of the hidden and output layers are encoded into the width of each NMOS transistor as illustrated in Figure 4. MAC functionality is implemented in the current domain and mathematically represented as

$$\sum_{i} I_{D,i} = \sum_{i} g_{m,i} V_{in,i}$$

where $I_{D,i}$ denotes the drain current, $g_{m,i}$ denotes the transconductance, and $V_{in,i}$ denotes the input of each NMOS transistor. Encoding the ANN weights into the width of each NMOS transistor effectively changes the $g_m$ of each transistor, thus scaling the drain current. The drain currents of the NMOS transistors are summed using the diode-connected PMOS load, thus realizing the MAC operation. The large output swing of the pseudo-differential amplifier is fed to the comparator, allowing direct classification of the output as “0/1”, indicating benign or malignant tumors. Implementing the activation function of the classifier in the current domain ensures immunity against charge injection errors, unlike switched-capacitor implementations. The proposed classifier circuit allows for a high-speed implementation while maintaining low power consumption. The proposed classifier is also resilient to random mismatches and supply voltage fluctuations, which is further discussed in Section 3.
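The current-domain MAC can be illustrated numerically with a short Python sketch. It assumes a linear small-signal model in which each transistor contributes a current equal to its transconductance times its input, and that transconductance scales proportionally with the width-encoded weight; both are simplifications, and the names `scaled_gm`, `mac_current` and the unit transconductance value are hypothetical.

```python
from typing import List, Sequence


def scaled_gm(unit_gm: float, weights: Sequence[float]) -> List[float]:
    """Transconductance per transistor, assuming g_m scales linearly with the weight."""
    return [unit_gm * w for w in weights]


def mac_current(gm_values: Sequence[float], v_inputs: Sequence[float]) -> float:
    """Sum the per-transistor currents g_m,i * V_in,i at the shared load node."""
    if len(gm_values) != len(v_inputs):
        raise ValueError("gm_values and v_inputs must have the same length")
    return sum(gm * v for gm, v in zip(gm_values, v_inputs))


# Hypothetical example: unit transconductance of 100 uS and binary weights.
gm = scaled_gm(100e-6, [1, 0, 1])
total_current = mac_current(gm, [0.3, 0.5, 0.4])  # amperes
```

With binary weights, a weight of 0 simply removes that transistor's contribution, which is consistent with the area saving the text attributes to the {0,1} constraint.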

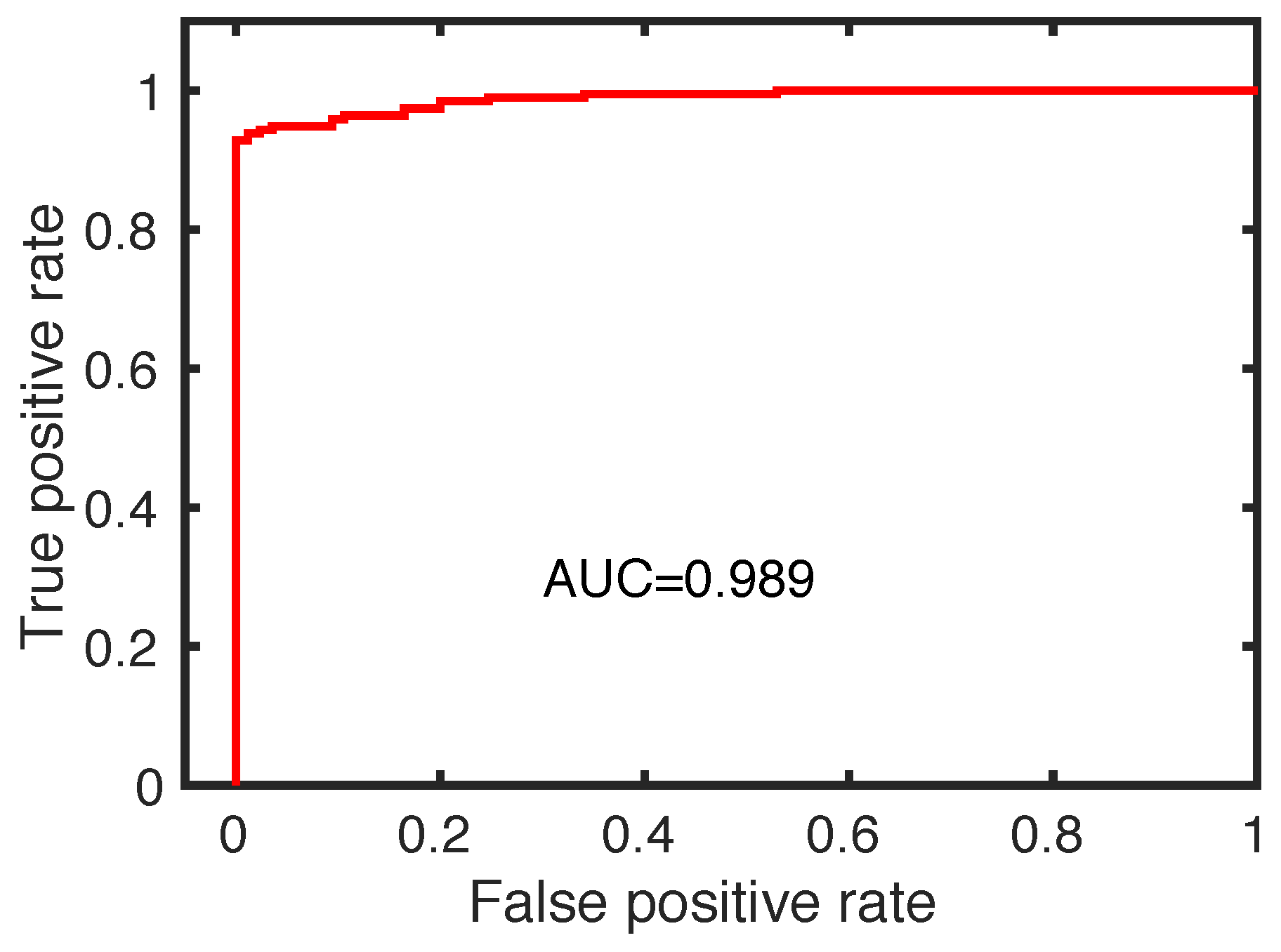

Figure 5 illustrates the receiver operating characteristic (ROC) of the proposed classifier from the post-layout simulation. The ROC plot shows the true positive rate (TPR) versus the false positive rate (FPR) for different classification thresholds, which are defined as:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

where TN is the true negative and FN is the false negative. In the WBCD dataset, the number of samples the classifier correctly identifies as benign is TN and the number of samples correctly classified as malignant is TP. On the other hand, the number of benign samples incorrectly classified as malignant is FP and the number of malignant samples incorrectly classified as benign is FN. The measure of separability of the two classes, i.e., benign and malignant, can be computed from the area under the ROC curve (AUC). A higher AUC implies that the classifier is accurate at correctly distinguishing between the two aforementioned classes. Figure 5 yields an AUC of 0.989, thus validating that the proposed classifier can accurately distinguish between benign and malignant samples irrespective of sample distribution in the test data.
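To make the ROC construction concrete, the following Python sketch sweeps a threshold over classifier scores, computes TPR = TP/(TP + FN) and FPR = FP/(FP + TN) at each threshold, and integrates the curve with the trapezoidal rule. The function names and the toy scores are illustrative assumptions and are not data from this work; labels use 1 for malignant (positive) and 0 for benign.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) pairs obtained by sweeping the decision threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both classes must be present")
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Toy example: one benign sample scores higher than one malignant sample.
toy_points = roc_points([0.9, 0.8, 0.7, 0.1], [1, 0, 1, 0])
toy_auc = auc(toy_points)  # 0.75
```

An AUC of 1.0 corresponds to scores that separate the two classes perfectly, while 0.5 corresponds to chance-level ranking.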

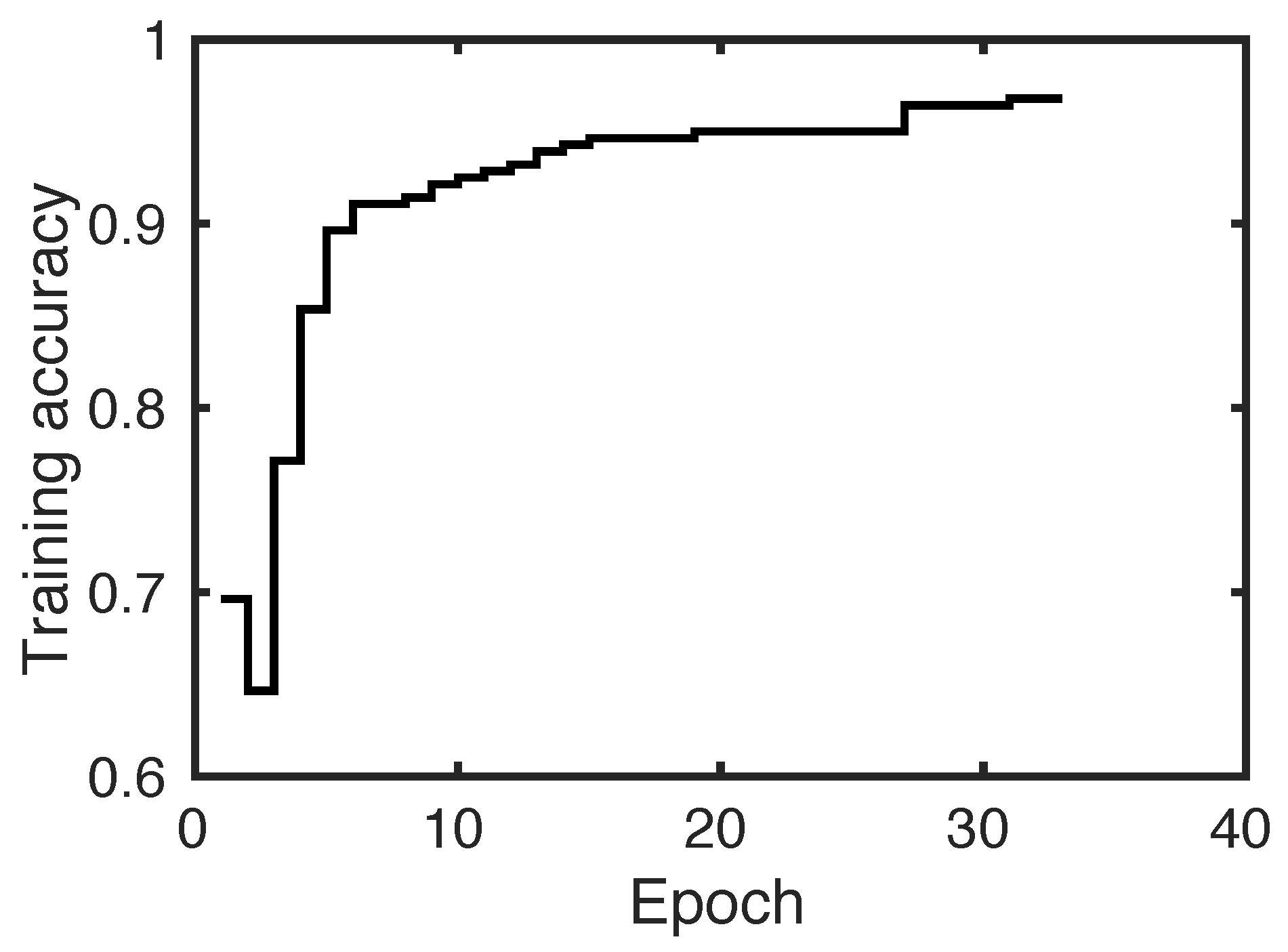

Figure 6 illustrates the training accuracy versus the number of iterations. The ANN achieves a high accuracy within 40 iterations.

3. Measurement Results

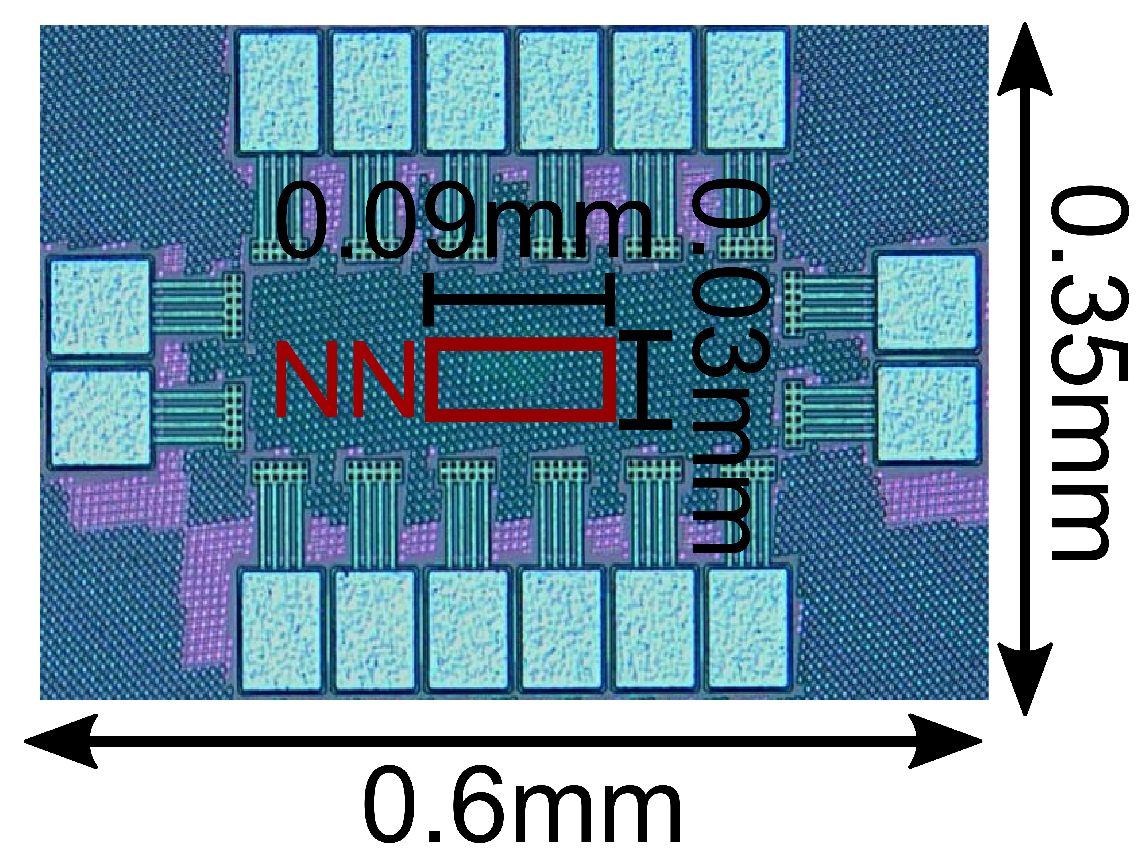

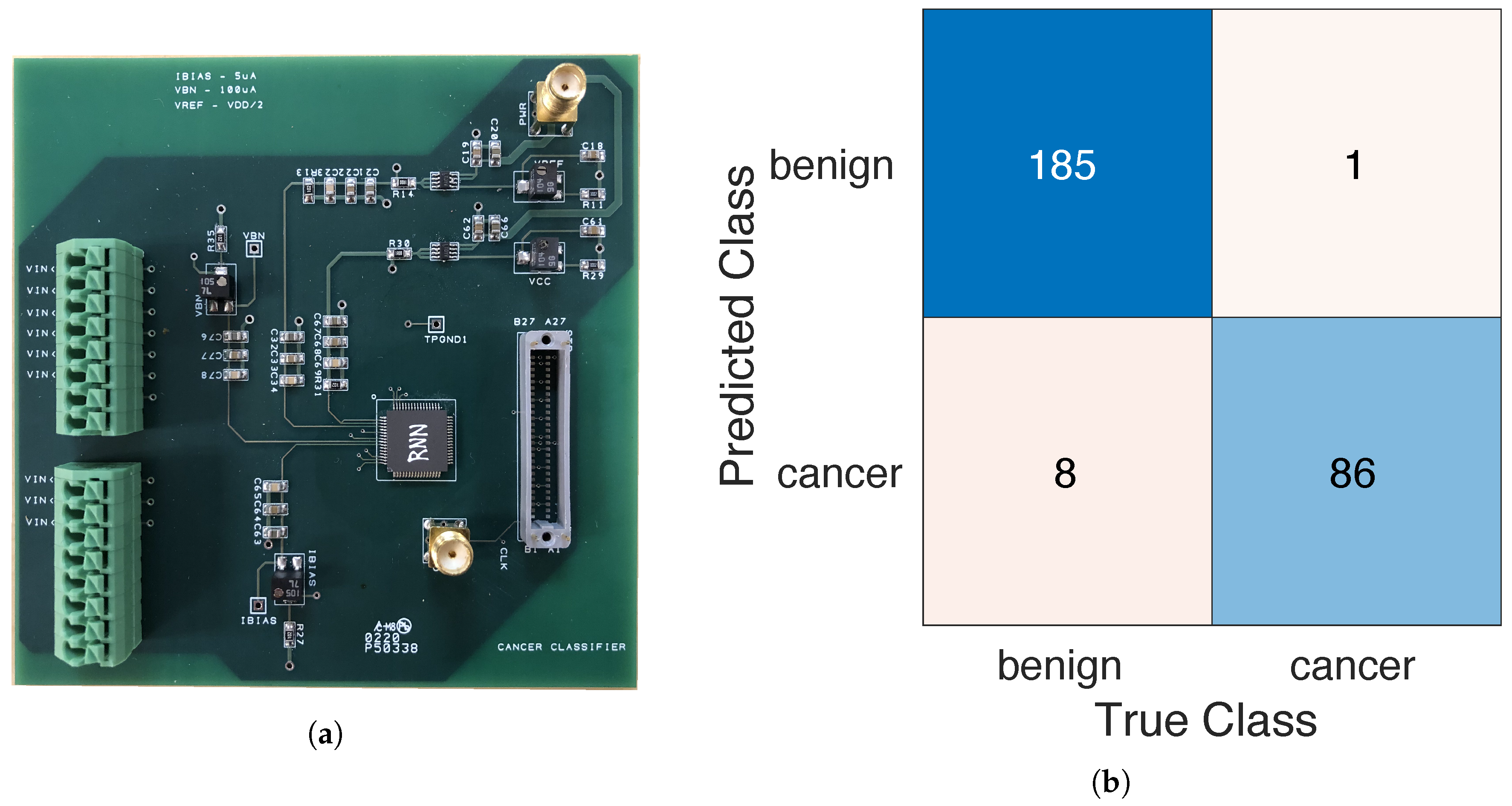

A prototype of the proposed cancer classification ANN was fabricated in a 1P9M TSMC 65 nm process. Figure 7 depicts the chip micro-photograph of the proposed cancer classifier. The classifier chip occupies a core area of 0.003 mm².

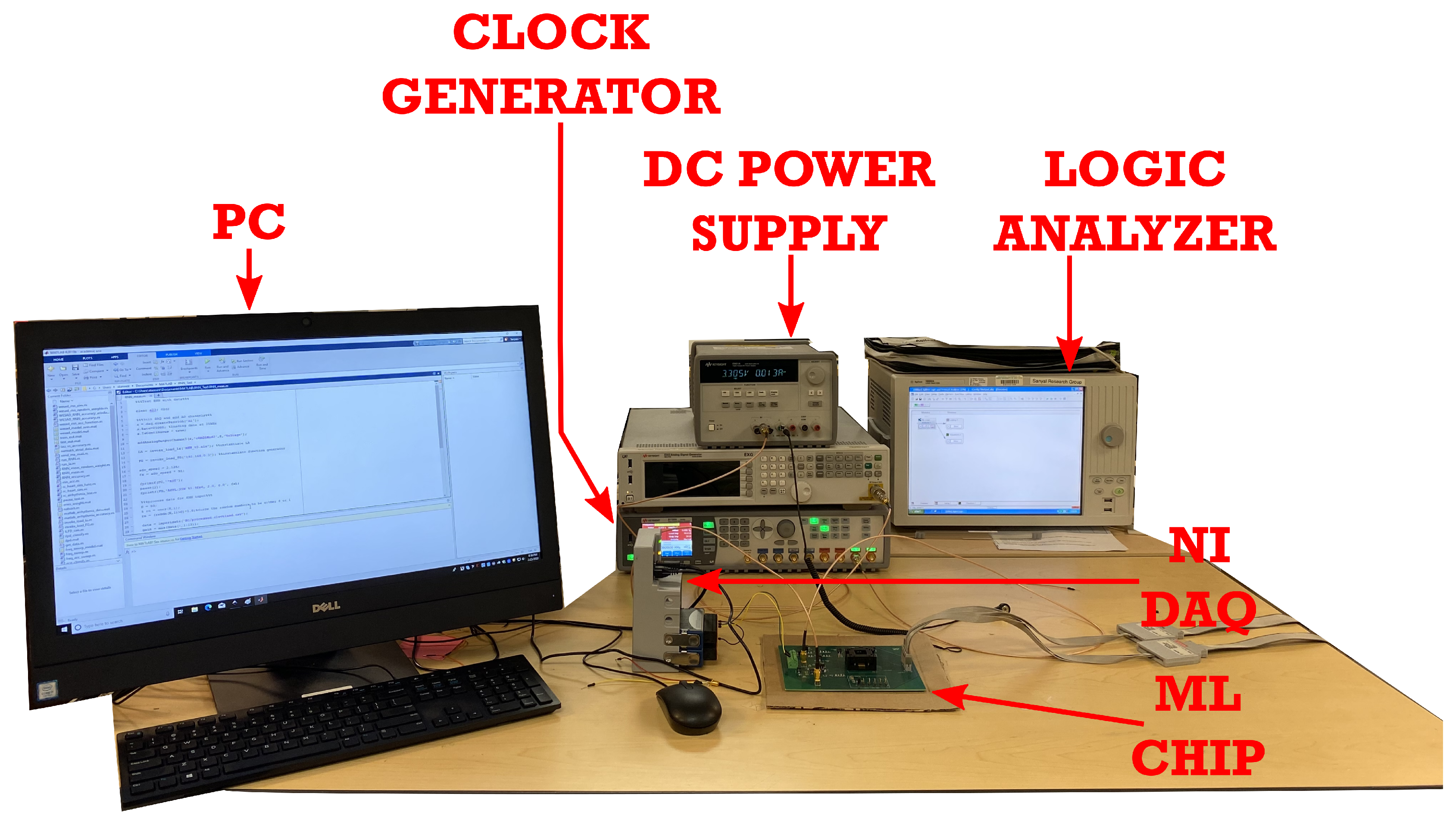

Figure 8 depicts the test setup used for validating the proposed classifier and Figure 9a depicts the printed circuit board (PCB) for testing the classifier. A National Instruments (NI) off-chip digital-to-analog converter (DAC) is used to convert the nine digital attributes of each sample to analog voltages, which are then fed to the classifier chip. The NI DAC is programmed from a PC using Matlab. The NI DAC comprises a 16-channel, 16-bit DAC which generates the analog inputs to the classifier while functioning as a zero-order hold. A logic analyzer is used to record the digital outputs of the classifier chip. The logic analyzer receives the clock signal from the function generator and captures the data at the rising edges of the clock. A DC power supply provides a 1.1 V supply to the chip. An oscilloscope is used for debugging when an analog or digital signal going to or coming off the chip needs to be probed. Similarly, a digital multimeter is used to probe the DC voltages on the PCB.

Figure 9b depicts the measured confusion matrix of the proposed classifier. The confusion matrix illustrates the performance of the classifier when tested with biopsy data from 280 patients. The rows and columns of the confusion matrix indicate the predicted and actual classes of the test data. The classifier achieves a 97% accuracy with only one false positive classification which is very important for clinical decisions.

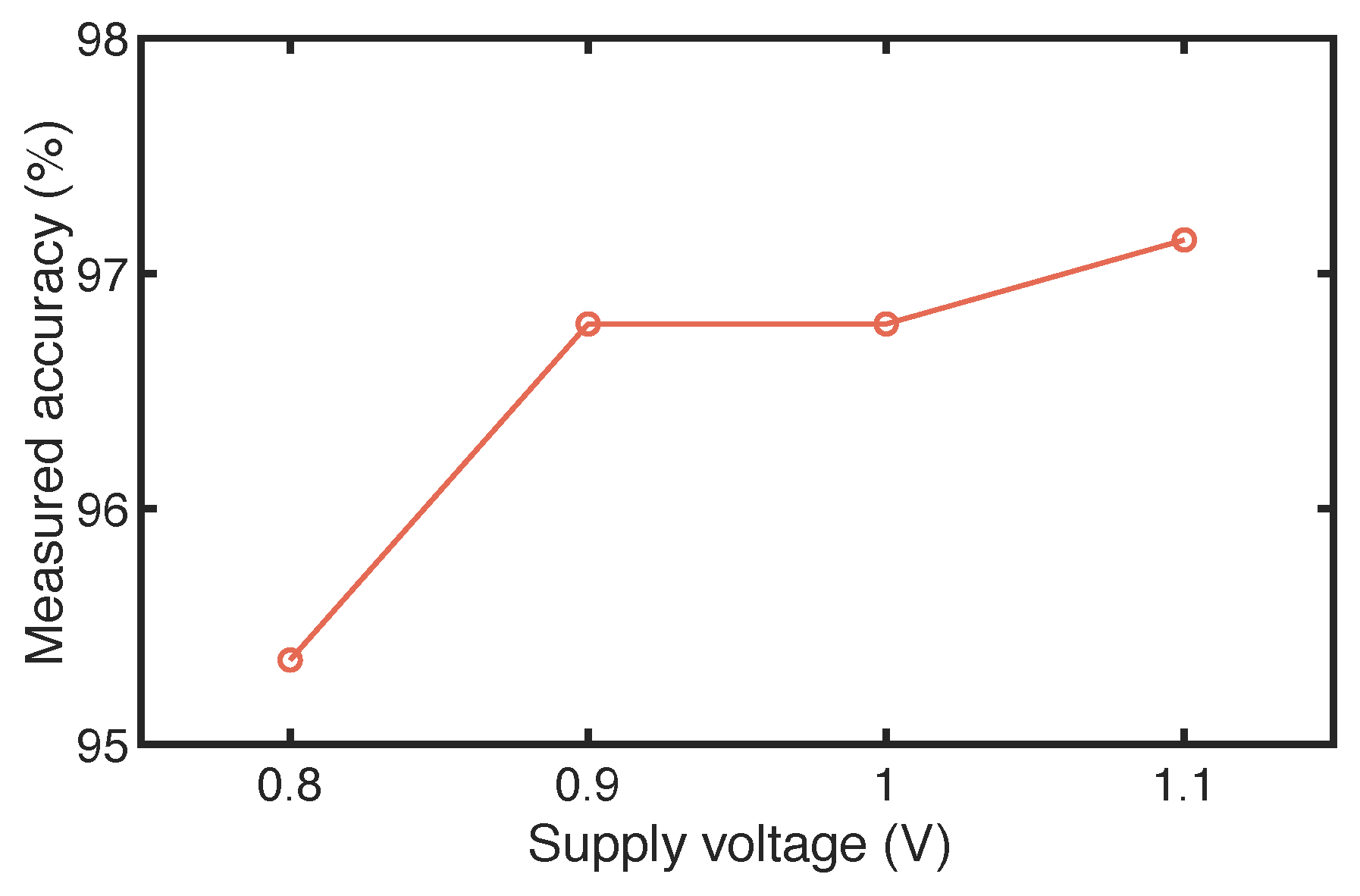

Figure 10 depicts the classifier accuracy versus supply voltage (VDD) variation. The classifier achieves an accuracy greater than 96.8% when VDD ≥ 0.9 V, and the accuracy falls to 95.4% for VDD < 0.9 V. The classifier achieves an average accuracy of 96.9% while consuming 50 µW power from a 1.1 V supply, thus achieving an energy efficiency of 160 fJ/classification.
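As a quick consistency check on these figures, energy per classification equals power multiplied by the time spent per classification. The Python sketch below computes the classification time implied by the reported numbers; it assumes the power figure is 50 µW and that the classifier draws this power continuously during a classification, and the function name is hypothetical.

```python
def time_per_classification(energy_joules: float, power_watts: float) -> float:
    """Return the classification time implied by E = P * t."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return energy_joules / power_watts


# 160 fJ per classification at an assumed 50 uW gives about 3.2 ns.
implied_time_s = time_per_classification(160e-15, 50e-6)
implied_rate_hz = 1.0 / implied_time_s  # about 312.5 million classifications per second
```

This value is derived purely from the two reported quantities and is not a separately measured conversion time.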

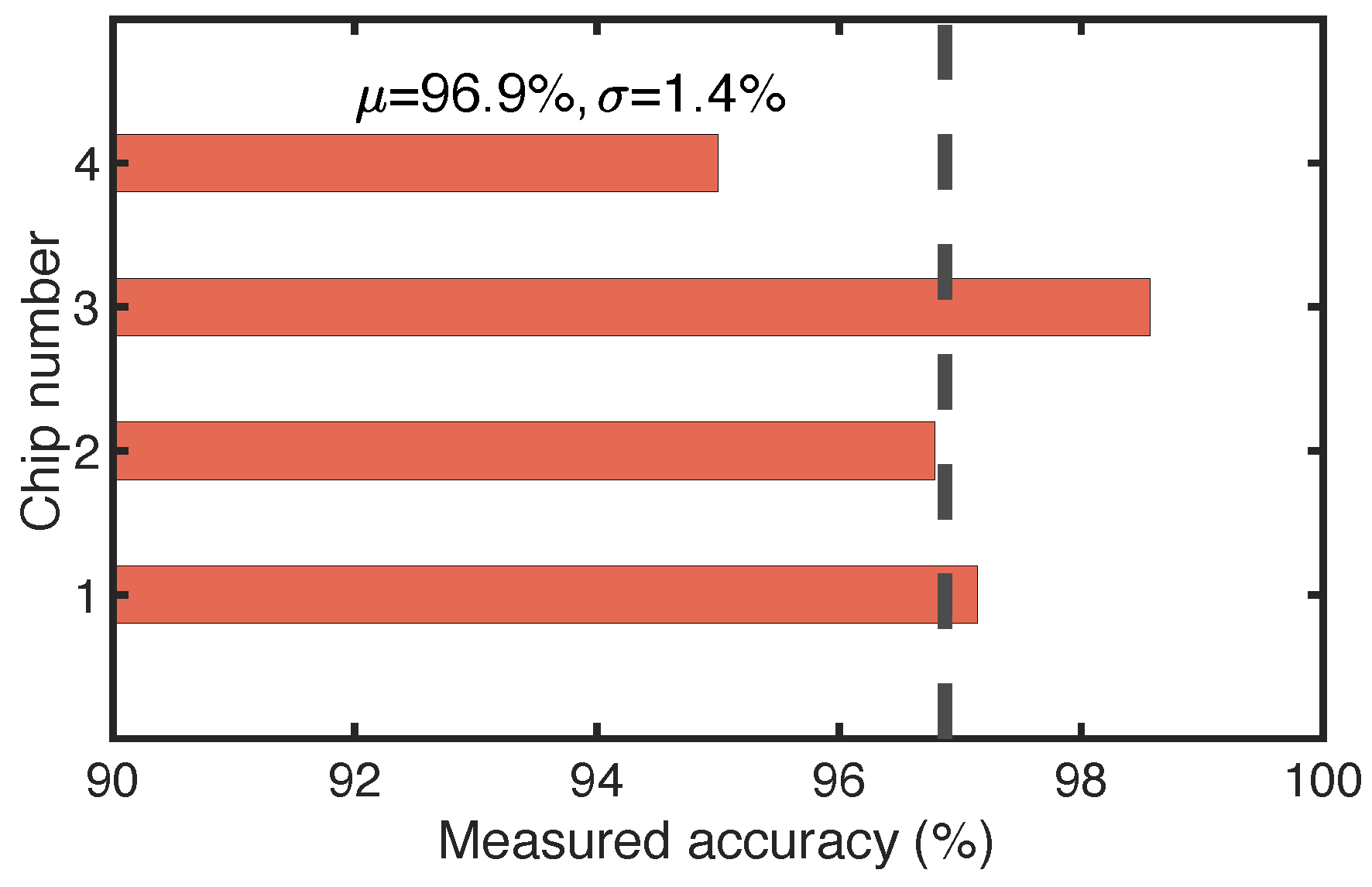

Figure 11 depicts a histogram of the measured classification accuracy from four chips. It can be observed that the classifier has an average accuracy of 96.9% with a standard deviation of 1.4%. Hence, the proposed classifier architecture is robust to random mismatches. In addition to the noise contribution of the classifier circuit itself, the off-chip DAC used to convert the digital samples to analog voltages contributes a 500 µV input-referred noise. Even in the presence of both noise sources, the chip achieves an average classification accuracy of 97%, which shows robustness to noise.

Table 1 provides a comparison of the performance of the proposed classifier with existing WBCD classifiers. Typically, WBCD classifiers are implemented on GPUs with neural network implementations in Python or Matlab with energy consumption in the range of mJ/classification. In contrast, the proposed classifier achieves similar accuracy while consuming energy of only 160 fJ/classification.

4. Discussion & Future Work

This work has presented a fully integrated machine learning classifier using a custom analog activation function and a hardware-software co-design methodology that incorporates device knowledge into the ANN training phase to ensure that the fabricated prototype closely matches simulation results with the ANN model. The current version of the classifier is based on class-A circuits which are always turned on. To improve energy efficiency, we will use switched-capacitor circuits to implement the MAC operations and also use a switched-capacitor amplifier to realize the custom activation functions. Another direction of future research would be to integrate sensing capability into the same die as the ANN. We will start with an image sensor since imaging is a popular modality and there are many popular image datasets, such as MNIST [22] and CIFAR [23], which can be used for benchmarking our ANN. Integration of an image sensor with the ANN also necessitates some form of image cleaning/pre-processing, and we will employ techniques similar to those described in [24]. Further down the road, we also intend to integrate wireless communication capability [25] on-chip.

Another research aspect we will pursue is adding explainability to our ANNs. Typically, a complex network such as an ANN with many trainable parameters requires a large volume of labeled data for proper training, which in real life is difficult to obtain for every targeted task. In addition, given the multiple non-linear transformations of the input, the ANN is limited by its black-box architecture, which offers little mechanism for explaining its decisions. However, there has recently been a surge of work in explainable artificial intelligence (XAI) for digital ANNs to increase transparency (e.g., linear proxy models, saliency mapping, role representation). In future work, we will explore CMOS XAI for adding transparency to our architecture.