1. Introduction

Images have become a main carrier of information as multimedia technology develops rapidly. However, image content usually suffers from varying degrees of distortion during acquisition, transmission, and storage, and from other factors such as camera motion, which makes subsequent processing extremely difficult. To maintain, control, and improve image quality, it is important to evaluate and quantify it. In the big data era, image quality has a considerable impact on daily life, for example, in high-definition cameras on mobile phones, aerial imaging equipment on drones, and surveillance equipment in public transportation. If the acquired images are blurry or noisy, the user experience inevitably suffers. In scientific research, image quality is also closely tied to many kinds of work [1,2]. For example, astronomical observations require high-quality images, and in medical imaging, quality can even determine the final diagnosis. Therefore, the need for an efficient and reliable image quality assessment method is growing.

Image quality assessment is the process of analyzing the features of an image and then evaluating its degree of distortion. Image quality assessment (IQA) methods fall into two categories: subjective and objective. Subjective IQA methods rely on the opinions of a large number of viewers, which makes them costly, time consuming, and inefficient in practical applications. Objective methods overcome these deficiencies by using a mathematical model to map image features to quality scores in a way that strives to reflect human visual perception faithfully. Such models, built and run on computers, imitate the human visual system, and by exploiting the computing power of computers they greatly improve evaluation efficiency.

Depending on how much information about the reference (undistorted) image is available, objective IQA methods are usually divided into three categories: full-reference IQA (FR-IQA), reduced-reference IQA (RR-IQA), and no-reference IQA (NR-IQA).

Among these three types, NR-IQA has the widest range of applications and the greatest practical value; it has therefore received increasing attention and become a research hotspot in recent years. In early NR-IQA studies, researchers focused on designing hand-crafted features that could discriminate distorted images and then training a regression model to predict image quality. Some NR-IQA methods target a specific distortion [3,4] and rely on prior knowledge of the distortion type. To assess image quality when no such information is available, natural scene statistics (NSS)-based methods have been widely used to extract reliable features; they assume that natural images share certain statistical regularities and that distortions alter these statistics [5,6,7,8,9]. Nevertheless, hand-crafted features are typically designed for specific types of distortion and capture only low-level information, which leads to insufficient feature extraction and analysis.

The development of convolutional neural networks (CNN) has greatly accelerated computer vision research and applications. In 2012, Krizhevsky et al. used a CNN to make significant progress in image recognition [10], which opened up broad new prospects for computer vision. As CNNs have become popular, network architectures have grown deeper and deeper. A deeper structure gives the network a stronger ability to extract features and yields more accurate results, but at the cost of requiring more training data.

The success of CNNs in image recognition has led researchers to apply them to IQA. Compared with traditional methods, neural networks have brought great progress to NR-IQA, and researchers have addressed the NR-IQA task by training deep neural networks (DNN) [11,12,13]. However, a problem remains unsolved in this research: there is not enough sample data for the task. When the network is deep and wide, the number of parameters grows rapidly, and the amount of training data should grow accordingly. Labeling an IQA dataset requires many human reviewers to assess each image, which is extremely labor intensive and costly. As a result, most IQA datasets are too small to train neural networks effectively, as shown in Table 1. Researchers have therefore focused on how to increase the amount of data.

Methods for expanding the amount of data can be roughly divided into two types: segmenting images within the database and augmenting the data beyond it. Segmentation within the database expands the data volume by dividing each image into patches [12,17,18,19,20,21,22]. However, assessing small image patches can ignore the integrity of the whole image, and it is inefficient. Augmentation beyond the database processes the available images, for example by flipping them, changing their contrast, or applying varying degrees of Gaussian blur, to obtain sufficient samples [23,24,25].
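To make the patch-based strategy concrete, the following is a minimal Python/NumPy sketch, not taken from any of the cited works, of two kinds of expansion described above: splitting an image into non-overlapping tiles that each inherit the parent image's score, and flipping an image horizontally. The function names and the 224-pixel patch size are illustrative assumptions.

```python
import numpy as np


def tile_image(image: np.ndarray, patch: int, score: float):
    """Split an H x W x C image into non-overlapping patch x patch tiles.

    Each tile inherits the quality score of its parent image; this is the
    implicit assumption of patch-based expansion, and also its weakness,
    because a local tile may not reflect the quality of the whole image.
    Border pixels that do not fill a complete tile are discarded.
    """
    h, w = image.shape[:2]
    tiles, labels = [], []
    for top in range(0, h - patch + 1, patch):
        for left in range(0, w - patch + 1, patch):
            tiles.append(image[top:top + patch, left:left + patch])
            labels.append(score)
    return np.stack(tiles), np.array(labels)


def flip_augment(image: np.ndarray, score: float):
    """Return the image and its horizontal mirror, both with the same score."""
    return [image, np.fliplr(image)], [score, score]


img = np.zeros((500, 500, 3), dtype=np.uint8)
tiles, labels = tile_image(img, patch=224, score=62.5)
print(tiles.shape)   # (4, 224, 224, 3)
```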

Image quality is mainly affected by distortion, which is divided into two types according to how it is generated: synthetic distortion and authentic distortion. Synthetic distortion is introduced during processing and transmission, such as the artifacts caused by image compression. Authentic distortion arises in everyday image capture, for example, from camera jitter, overexposure, and underexposure. Current NR-IQA research mainly focuses on synthetic distortion, and algorithms designed for synthetic distortion perform poorly when applied to authentic distortion. The few algorithms designed for authentic distortion have not performed well either, because the amount of data is limited and authentically distorted images cannot be augmented with image processing operations such as Gaussian blur.

In real life, however, authentic distortion is the main factor affecting image quality. Meanwhile, with the rapid development of mobile devices and camera equipment, and people's eagerness to know whether their devices work well, the demand for evaluating the quality of authentically distorted images has grown rapidly. Unfortunately, existing IQA algorithms do not perform well on everyday real-life scenes. The difficulty is that authentic distortions are far more varied in type and far more complex in degree, so methods designed for synthetic distortion underperform when applied to authentic scenes. Developing NR-IQA methods for authentic distortion is therefore of great practical significance. However, such methods face the difficulty that researchers cannot expand the data by processing the available images, for example with Gaussian blur: unlike synthetic distortions, authentic distortions arise in everyday life and are characterized by strong authenticity and complexity. Consequently, available images are scarce, and DNN models for authentic distortion are usually trained directly on a small IQA database. In Reference [24], researchers designed an architecture called S-CNN for synthetically distorted images. Considering that this model was not beneficial for authentic IQA databases, they added, as a second branch, a VGG-16 network pretrained for classification on ImageNet to extract features relevant to authentically distorted images. Finally, in the fine-tuning step, they combined the pretrained S-CNN and VGG-16 [26] and introduced a bilinear pooling module to optimize the entire model. Unfortunately, the limited size of the database still restricted its performance on authentic distortion.

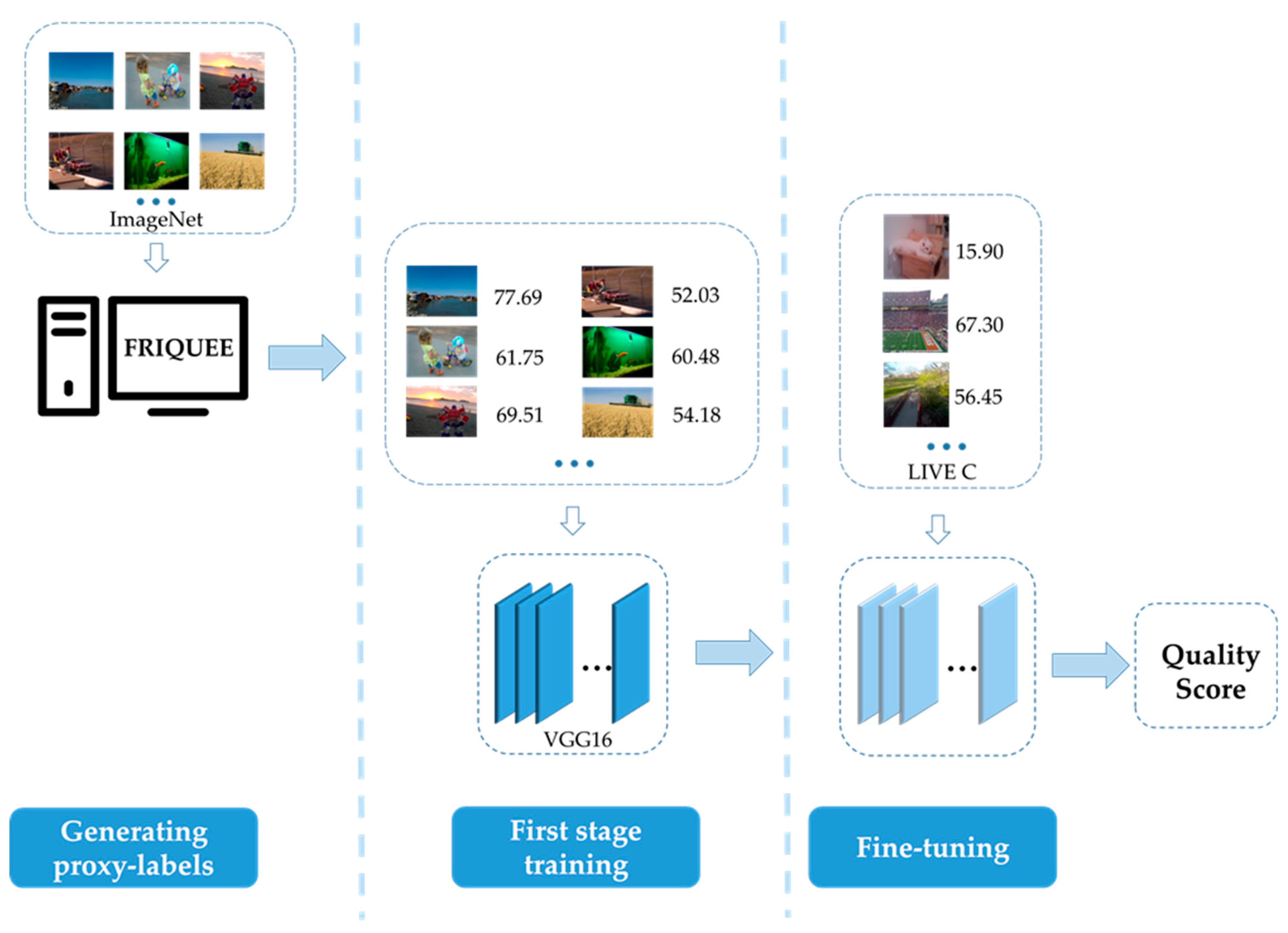

In this paper, an NR-IQA algorithm based on image proxy labels is proposed, focusing mainly on the assessment of authentically distorted images. To distinguish them from human labels, we define the quality scores generated by a traditional NR-IQA algorithm as “proxy labels”. “Proxy” means that the scores are obtained by a computer through the extraction and assessment of image features rather than by human judgement. First, a traditional IQA algorithm for authentic distortion is applied to obtain a large number of proxy labels. Generating proxy labels expands the data size without processing the natural images, which preserves the authenticity of their distortions. Then, we use the proxy labels to train the model, followed by fine-tuning.

The innovation of this work lies in applying cascading transfer learning to IQA. Since VGG16 [26] is a classic model for image classification, the features it extracts are related to classifying images of natural scenes. In our work, the classification task is regarded as the source task, and the database used to train the original VGG16, i.e., ImageNet, is regarded as the source domain. The target task is IQA, and the target domain is the set of images in an IQA database of authentic distortion. The source and target domains are similar to some extent, since ImageNet contains images whose scenes resemble those of authentically distorted images. Therefore, we use images from the source domain to generate proxy labels, then train the VGG16 model on them and transfer it into a preliminary IQA model. After this first transfer learning, the model can assess image quality. To fully adapt the model to the IQA task, a second transfer learning is necessary. For consistency with the image type used in the first transfer, we again use authentically distorted images in the second training. After the second training, the model is completely transferred to the target task.

We thus apply cascading transfer learning in our algorithm. The first stage expands the data size of authentic images and performs preliminary learning; the second stage builds on the first and fine-tunes the model. As a result, the model is fully transferred to the target task and can predict the quality of authentically distorted images more accurately. Compared with algorithms that only fine-tune, our algorithm benefits from the expanded data size and more adequate training. The experimental results demonstrate that the predictions of our algorithm are highly correlated with human perception and achieve state-of-the-art performance on the LIVE In the Wild Image Quality Challenge database [15].
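This section does not say how “highly correlated” is measured. As an assumption, the sketch below computes the two correlation coefficients most commonly reported in IQA, Spearman's rank-order correlation (SROCC) and Pearson's linear correlation (PLCC), between predicted scores and human mean opinion scores (MOS). Many IQA papers compute PLCC after a nonlinear logistic mapping of the predictions; that step is omitted here for brevity.

```python
import numpy as np


def average_ranks(x) -> np.ndarray:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        # extend j over a run of tied values
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def plcc(pred, mos) -> float:
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(pred, mos)[0, 1])


def srocc(pred, mos) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(pred), average_ranks(mos))


mos = [1.0, 2.0, 3.0, 4.0]
pred = [1.0, 4.0, 9.0, 16.0]          # monotonic but nonlinear in mos
print(round(srocc(pred, mos), 6))     # 1.0 (ranks agree perfectly)
print(plcc(pred, mos) < 1.0)          # True
```

SROCC rewards any monotonic agreement with human ranking, while PLCC also penalizes nonlinearity, which is why the two are usually reported together.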

The remainder of this paper is organized as follows: related work is introduced in Section 2, details of the proposed methodology are given in Section 3, experimental results and analysis are presented in Section 4, and conclusions are given in Section 5.

3. Proxy-Label-Based No-Reference Image Quality Assessment

In this section, an NR-IQA algorithm based on proxy labels for authentically distorted images is detailed. First, the general framework and workflow of the algorithm are described; then, its key points and innovations are presented.

3.1. Overview of Our Approach

Most IQA research focuses on synthetic distortion. Because real scenes are complex and varied, real-life image distortion is not a simple analogue of synthetic distortion, so methods designed for synthetic distortion cannot meet practical needs in real-life scenes. At the same time, the application of deep learning to blind IQA has become the focus of much research. Deep-learning-based IQA methods use a deep neural network to establish a mapping from low-level image data to high-level perception, so that the predicted quality agrees more closely with human perception. However, deep networks have an obvious shortcoming that cannot be ignored: they need a large number of training samples. Manual labeling of image quality is time-consuming and labor intensive, and it is extremely unrealistic to build a dataset with a wide variety of distortions on the scale of ImageNet; researchers are therefore also looking for ways to expand the amount of available training data.

Notably, authentically distorted images are abundant in everyday life, but they are not manually labeled. If this large number of unlabeled distorted images could be used effectively, the amount of data would expand significantly, making network training more accurate. Labeling them manually, however, is time-consuming and labor intensive. If the images are instead labeled with a traditional IQA algorithm, the computing power of the computer is fully utilized, and a large number of images can be assessed objectively in a short time, which is far more efficient than manual labeling. Although such machine-based objective assessment does not closely match human visual perception, it produces predictions by extracting and analyzing the features of many images; during training, the network can learn IQA-related features from these predictions and thereby acquire the ability to assess image quality.

The ultimate goal of IQA is to simulate how people perceive and evaluate images. If the model is trained only on a large number of machine-generated labels, the problem of insufficient data is solved and the model acquires the ability to evaluate image quality, but the mapping it establishes stops at objective assessment and must be further adjusted to imitate human perception. To address this, we continue training with manually labeled images. By fine-tuning the model on an existing IQA dataset, the network builds on its ability to assess image quality, adjusts its mapping toward human perception, and completes the IQA task more accurately.

Our approach is based on the observation that a huge number of authentically distorted images is available. The first step is therefore to acquire a large number of such images. We select ImageNet [14] to augment the data size. It contains a large number of real-scene images, which satisfies both requirements of this work: authentic distortion and a large amount of data. Moreover, because the images are mostly taken from real life, a model trained on them should be more robust in practical applications. After obtaining these images, the low-level features of each image are extracted with FRIQUEE [34], a traditional NR-IQA method, to compute an objective quality score, and the scores are saved as the images' proxy labels. This step performs labeling without human involvement, which greatly expands the amount of training data compared with other methods.

In the second step, the authentically distorted images from ImageNet and their corresponding proxy labels are fed into the VGG16 network for training; this is the first transfer learning. Through training on a large amount of data, the task of VGG16 is gradually transformed from classification into IQA: deep features related to image quality are learned while irrelevant features are suppressed. As a result, the model acquires the ability to assess image quality.

Although the network is preliminarily competent for the IQA task after adequate training, its predictions remain at the level of objective evaluation and still differ from human perception. Therefore, in the third step, an IQA database and its human labels are used for fine-tuning, which constitutes the second transfer learning. The LIVE In the Wild Image Quality Challenge database, which contains various types of authentically distorted images, is selected to adjust the network, so that the model can capture the relationship between human perception and image distortion.
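The three steps can be illustrated with a deliberately simplified stand-in. The sketch below replaces VGG16 with a linear regressor on fixed feature vectors and replaces FRIQUEE with a synthetic proxy scorer whose weights are correlated with, but not identical to, a hypothetical human mapping. Stage 1 trains on many proxy-labeled samples (playing the role of ImageNet with proxy labels); stage 2 starts from the stage-1 weights and fine-tunes on a small human-labeled set (playing the role of the LIVE Challenge database) with a smaller learning rate. All data, dimensions, and learning rates are illustrative assumptions, not the paper's settings.

```python
import numpy as np


def train(w, X, y, lr, steps):
    """Gradient descent on the mean squared error of the linear model X @ w."""
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w


rng = np.random.default_rng(0)
d = 8                                          # feature dimension (illustrative)
w_human = rng.normal(size=d)                   # hypothetical human-perception mapping
w_proxy = w_human + 0.3 * rng.normal(size=d)   # proxy scorer: correlated but biased

# Stage 1 data: many images, labeled by the proxy scorer only
X_big = rng.normal(size=(20000, d))
y_proxy = X_big @ w_proxy

# Stage 2 data: few images with (noisy) human labels
X_small = rng.normal(size=(40, d))
y_human = X_small @ w_human + 0.1 * rng.normal(size=40)

w_stage1 = train(np.zeros(d), X_big, y_proxy, lr=0.1, steps=200)   # first transfer
w_stage2 = train(w_stage1, X_small, y_human, lr=0.05, steps=100)   # second transfer (fine-tuning)

print("distance to human mapping after stage 1:", np.linalg.norm(w_stage1 - w_human))
print("distance to human mapping after stage 2:", np.linalg.norm(w_stage2 - w_human))
```

After stage 1 the model reproduces the proxy scorer almost exactly; stage 2 then moves it toward the human mapping using only a few labeled samples, mirroring the argument that proxy-label training lays the foundation and fine-tuning aligns the model with human perception.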

3.2. Generating Proxy Labels

Researchers have established several standard IQA databases in which image quality scores are given by many observers; this kind of labeling is regarded as human labeling. However, these databases are too small for training a deep neural network and often lead to unsatisfactory performance. To expand the amount of available training data, this work labels images with an objective, computer-based method instead of manual labeling. To distinguish them from human labels, we define the scores that a computer gives using a traditional NR-IQA algorithm as “proxy labels”. “Proxy” means that the scores are obtained by a machine through the extraction and assessment of image features rather than by human judgement. Expanding the labels effectively increases the amount of data, so that the network can fully learn IQA-related features during training and avoid the over-fitting caused by insufficient data.

Since this work performs quality assessment on authentically distorted images, a conventional IQA method specifically designed for authentic distortion is considered when selecting the algorithm for generating proxy labels. Because a large number of original images are scored automatically, the algorithm with the best performance should be selected to maximize training accuracy and success rate. Among current traditional algorithms, FRIQUEE [34] is one of the best for authentically distorted images, as shown in Table 2. Therefore, FRIQUEE is chosen in this work as the tool for generating proxy labels.
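FRIQUEE's implementation is not described in this section, so the sketch below does not call it. It shows only the shape of the labeling step: a loop that applies some no-reference scoring function to every image and stores the result as that image's proxy label. The function `toy_sharpness_score` is a hypothetical placeholder (mean absolute intensity difference between neighbouring pixels), not FRIQUEE and not a validated quality measure; `generate_proxy_labels` is likewise an illustrative name.

```python
from typing import Callable, Dict, Iterable, Tuple

import numpy as np


def toy_sharpness_score(img: np.ndarray) -> float:
    """Hypothetical stand-in for a real NR-IQA model such as FRIQUEE.

    Averages the absolute differences between horizontally and vertically
    neighbouring grey levels; flat (e.g. heavily blurred) images score low.
    """
    gray = img.astype(float).mean(axis=2)
    gx = np.abs(np.diff(gray, axis=1)).mean()
    gy = np.abs(np.diff(gray, axis=0)).mean()
    return float(gx + gy)


def generate_proxy_labels(
    images: Iterable[Tuple[str, np.ndarray]],
    scorer: Callable[[np.ndarray], float],
) -> Dict[str, float]:
    """Score every (name, image) pair without human input; return name -> proxy label."""
    return {name: float(scorer(img)) for name, img in images}


rng = np.random.default_rng(0)
dataset = [
    ("flat.png", np.full((256, 256, 3), 128, dtype=np.uint8)),
    ("textured.png", rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)),
]
proxy_labels = generate_proxy_labels(dataset, toy_sharpness_score)
print(proxy_labels)
```

In the actual pipeline the scorer would be FRIQUEE, and because scoring a large image collection is expensive, the resulting labels would typically be saved to disk once and reused for training.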

Although the proxy labels do not completely match human perception, they capture low-level features relevant to image quality. After training on a large number of proxy-labeled images, the model does not yet imitate human perception directly, but it does learn the characteristics of distorted images, which lays a good foundation for subsequent adjustment.

3.3. Training Network Using Proxy Labels

In this work, the VGG16 [26] network is selected for training and testing. VGG is a model proposed by researchers at the University of Oxford in 2014. Because its structure is intuitive and easy to understand, it has been applied in various fields and has become a widely used convolutional neural network. VGG16 was designed for image classification and performs excellently in classification and other image processing tasks. Meanwhile, many IQA studies based on VGG16 perform quite well, which indicates that VGG16 is also suitable for IQA. Compared with other DNN models such as ResNet [48] and AlexNet [10], VGG16 has a moderate depth, fairly fast computation, and good performance, so it offers competitive accuracy while keeping training and computation efficient.

In the original architecture, the last layer of the network has 1000 output channels, corresponding to the 1000 categories in ImageNet image recognition. Since IQA outputs a single score, the number of channels in the last layer is changed to one.
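The head replacement can be pictured as swapping the final fully connected layer, which maps VGG16's 4096-dimensional penultimate features to 1000 class logits, for one that maps the same features to a single score. The NumPy sketch below shows only that last layer with randomly initialised weights; the mean squared error loss is an assumption, since the section does not state which loss is used. In torchvision's VGG16 implementation, the equivalent change is usually written as `model.classifier[6] = torch.nn.Linear(4096, 1)`.

```python
import numpy as np

FEATURE_DIM = 4096   # width of VGG16's penultimate fully connected layer
NUM_CLASSES = 1000   # ImageNet categories in the original classifier

rng = np.random.default_rng(0)

# Original head: 4096 features -> 1000 class logits
W_cls = rng.normal(scale=0.01, size=(FEATURE_DIM, NUM_CLASSES))
b_cls = np.zeros(NUM_CLASSES)

# Replacement head for IQA: 4096 features -> 1 quality score
W_iqa = rng.normal(scale=0.01, size=(FEATURE_DIM, 1))
b_iqa = np.zeros(1)


def classify(features: np.ndarray) -> np.ndarray:
    """Original behaviour: one logit per ImageNet class."""
    return features @ W_cls + b_cls


def predict_quality(features: np.ndarray) -> np.ndarray:
    """Modified behaviour: one scalar quality score per image."""
    return (features @ W_iqa + b_iqa).ravel()


def mse_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Assumed regression loss between predicted scores and (proxy or human) labels."""
    return float(np.mean((pred - target) ** 2))


batch = rng.normal(size=(5, FEATURE_DIM))   # stand-in for penultimate-layer features
print(classify(batch).shape)          # (5, 1000)
print(predict_quality(batch).shape)   # (5,)
```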

During the initial training, the model learns IQA-related features from a large amount of data. Since VGG16 requires 224 × 224 input images, the original images are first filtered by size, and only those larger than 224 × 224 are retained. Random cropping is then applied at the input of VGG16, so that any image larger than 224 × 224 is randomly cropped to 224 × 224. Therefore, despite differences in size and aspect ratio, all images fed into VGG16 are 224 × 224. The images and their proxy labels are fed into the VGG16 network, and the first stage of training is performed. During this process, because of the many IQA-related proxy labels, the original classification features of the network are suppressed, while features related to IQA are learned and strengthened. After this training, the network can already assess quality. Nevertheless, because the training data are objective scores obtained by computer labeling, they differ from actual human perception, so further training is necessary. On this basis, an IQA database is used to fine-tune the network.
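The size filtering and random cropping described above can be sketched as follows. The code keeps images whose height and width are both at least 224 pixels (treating the threshold as inclusive is an assumption about the boundary case, since a 224-pixel side can still be cropped, in exactly one way) and then draws a uniformly random 224 × 224 window from each one. The function names are hypothetical; in practice, a data-loading library's random-crop transform would normally be used.

```python
from typing import List, Optional

import numpy as np

CROP = 224  # VGG16 input height and width


def keep_large_enough(images: List[np.ndarray], size: int = CROP) -> List[np.ndarray]:
    """Size filtering: discard images that cannot contain a size x size crop."""
    return [img for img in images if img.shape[0] >= size and img.shape[1] >= size]


def random_crop(img: np.ndarray, size: int = CROP,
                rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Cut a size x size window at a uniformly random position."""
    if rng is None:
        rng = np.random.default_rng()
    h, w = img.shape[:2]
    top = int(rng.integers(0, h - size + 1))    # upper bound is exclusive
    left = int(rng.integers(0, w - size + 1))
    return img[top:top + size, left:left + size]


rng = np.random.default_rng(0)
raw = [np.zeros((300, 400, 3)), np.zeros((200, 500, 3)), np.zeros((224, 224, 3))]
kept = keep_large_enough(raw)
crops = [random_crop(img, rng=rng) for img in kept]
print(len(kept), [c.shape for c in crops])   # 2 [(224, 224, 3), (224, 224, 3)]
```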

3.4. Fine-Tuning the Network

After the preliminary training, the network can evaluate image quality, but this ability is based on objective methods and differs somewhat from human perception. To narrow this gap, a second phase of training is carried out, in which the network is fine-tuned on an IQA database.

Most existing IQA databases are oriented toward synthetic distortion. The LIVE In the Wild Image Quality Challenge database [15] is an IQA database of authentically distorted images and therefore fits this work. It contains 1162 distorted images with various types of distortion, captured with a variety of representative modern mobile devices.

In the second phase of training, the model is fine-tuned using the authentically distorted images and their corresponding human labels. The IQA-related features in the network are further strengthened, making the model more accurate. More importantly, after fine-tuning, the mapping between features and scores is closer to human perception of distortion, making the model more practical.