1. Introduction

Over the past few years, automated translation performance has improved dramatically with the development of neural machine translation (NMT). In previous decades, rule-based machine translation (RBMT) was regarded as having high meaning-transmission accuracy, and statistical machine translation (SMT) as having excellent fluency. NMT, however, achieves excellent quality in both aspects when a large-scale bilingual parallel corpus is available.

In practice, it is often difficult to acquire such large-scale parallel corpora, except for some special language pairs or for specific companies. Additionally, most special-domain corpora are limited in size. Considering the weakness of NMT on low-resource and out-of-vocabulary issues, some researchers proposed a hybrid approach that aids NMT with SMT [1]. For teams that have already developed an RBMT system with stable translation quality, combining their existing RBMT with NMT when translating a specific domain can be an attractive solution. To test this assumption, we performed a preliminary evaluation of the Korean-to-Chinese translation performance of RBMT, SMT, and NMT in several different domains, including news, Twitter, and spoken language. The spoken dataset with 1012 bilingual sentence pairs consists of daily conversations, travel sentences, and clean sentences selected from the log of the mobile translation service. The other spoken dataset and the single-word dataset are composed of complete sentences and single words, respectively, randomly selected from the same translation service log. The news and Twitter datasets were randomly collected from the day’s news and from hot-issue trending topics on Twitter. Except for the first spoken dataset with bilingual sentence pairs, the datasets do not have translation references; we did not translate the data manually to perform BLEU evaluation [2]. Instead, our translators evaluated the MT results directly, because this is more intuitive and faster for small datasets. The average length in Table 1 represents the average number of word segments (spacing units in Korean) per sentence in the different datasets. GenieTalk (HancomInterfree, Seoul, Korea), Moses [3], and OpenNMT [4] are adopted for RBMT, SMT, and NMT, respectively, and 2.87 million bilingual sentence pairs in the spoken-language domain are used to train the SMT and NMT systems. BLEU and translation accuracy [5,6] are adopted for large- and small-scale assessments, respectively.

Table 1 shows that both NMT and SMT outperform RBMT for in-domain translation (“Spoken”). However, the translation quality drops significantly for out-of-domain sentences. This tendency is more severe in the case of NMT, showing that NMT is more vulnerable in low-resource domains (“News” and “Twitter”) and is more sensitive to noisy data (“Twitter”). NMT and SMT are also weaker when performing translation in the absence of context information (“Single word”), while RBMT shows better quality in these domains.

Starting from the above observations, this paper proposes combining RBMT with NMT using a feature-based classifier to select the best translation from the two models; this is a novel approach for hybrid machine translation.

The contribution of this paper resides in several aspects:

To the best of our knowledge, this paper is the first to combine NMT with RBMT results in domains where language resources are scarce, and we have achieved good experimental results.

In this paper, we propose a set of features, including pattern matching features and rule-based features, to reflect the reliability of the knowledge used in the RBMT transfer process. This results in better performance in quality prediction than surface information and statistical information features, which have been widely used in previous research.

Since neural networks perform text classification well, we compare feature-based classification with text-based classification, and our results show that feature-based classification has better performance, which means explicit knowledge that expresses in-depth information is still helpful, even for neural networks.

To construct a training corpus for the classification of hybrid translation, which is very costly, our study built a small hybrid training corpus manually and then automatically expanded the training corpus with the proposed classifier. As a result, classification performance was greatly improved.

The rest of this paper is organized as follows: Section 2 reviews related work on hybrid machine translation (MT) systems. Section 3 presents our hybrid system’s architecture and the specific MT systems adopted in this paper. Section 4 presents our proposed features for hybrid machine translation. Section 5 describes the experimental settings for the tests and discusses the results from different experiments. We present our conclusions and discuss future research in Section 6.

2. Related Works

The first effort toward a hybrid machine translation system adopted SMT as a post-editor of RBMT. In this model, a rule-based system first translated the inputs, and then an SMT system corrected the rule-based translations and gave the final output [7,8,9,10,11]. The training corpus for the post-editing system consisted of a “bilingual” corpus, where the source side was the rule-based translation, and the target side was the human translation in the target language. This architecture aimed to improve lexical fluency without losing RBMT’s structural accuracy. The experiments showed that the purpose was partially achieved: automatic post-editing (APE) outperformed both RBMT and SMT [9,10]. On the other hand, APE for RBMT degraded the performance on some grammatical issues, including tense, gender, and number [9]. It was particularly limited in long-distance reordering for language pairs that have significant grammatical differences; analysis errors could further exacerbate this because of structural errors introduced during rule-based translation [11].

Other researchers proposed an “NMT→SMT” framework, which combined NMT with SMT in a cascading architecture [1]. NMT was adopted as a pre-translator, and SMT, which was tuned by a pre-translated corpus, was adopted as a target–target translator. As a result, the performance was significantly improved in Japanese-to-English and Chinese-to-English translations.

APE has developed to a professional level where human post-edits of the automatic machine translation are required instead of independent reference translations [12,13,14], because learning from human post-edits is more effective for post-editing [15,16]. However, developing a human-edit corpus is time-consuming and costly, so it cannot be performed for all language pairs. There is not a clear performance improvement when NMT results are post-edited using neural networks, unlike when SMT results are post-edited using neural networks [17].

Reference [18] reported that under the same conditions, selecting the best result from several independent automatic translations was better than APE. This hybrid approach adopted several independent translation systems and picked the best result using either quality estimation or a classification approach. Quality estimation predicts the translation qualities using the language model, word alignment information, and other linguistic information; then, it ranks the quality scores of multiple translations and outputs the top-ranked result as the final translation [19,20]. In addition to hybrid MT, quality estimation also has other applications, such as providing information about whether the translation is reliable, highlighting the segments that need to be revised, and estimating the required post-editing effort [21,22,23].

Feature extraction is normally one of the main issues of quality estimation and classification-based hybrid translation methods. Features can be separated into black-box and glass-box types. Black-box features can be extracted without knowing the translation process; they include the length of source and target sentences, the n-gram frequency, and the language model (LM) probability of the source or target segments. Glass-box features, meanwhile, depend on some aspect of the translation process, such as the global scores of the SMT system and the number of distinct hypotheses in the n-best list of SMT [22,23]. Most existing research has focused either on black-box features or on glass-box features from SMT, including language model perplexity, translation ambiguity, phrase-table probabilities, and translation token length [5,18,24,25,26]. However, a classification approach is considered more suitable for a hybrid RBMT/SMT system, because most quality estimation research tends to evaluate translation quality using language models and word alignment information and thus tends to overvalue the results of SMT [5,18].

Another issue with the classification approach involves the training corpus for hybrid classification [5,24,25,27,28]. The training corpus is composed of extracted features and labels indicating the “better” and “best” translations. Human translators should be involved in building the labeled training corpus; therefore, building such a corpus is a time-consuming and costly process. In previous research, given the bilingual corpus and the outputs of the two MT systems, the labels were determined based on quality evaluation metrics such as BLEU. However, this approach is limited when hybridizing SMT with RBMT, because such measures may cause a biased preference for language model-based systems [5]. Reference [5] proposed an auto-labeling method for RBMT and SMT hybridization that only evaluated SMT translations: the labels would indicate that the SMT results were better if their BLEU metrics were high enough, and the labels would indicate that the RBMT results were better if the SMT results’ BLEU metrics were low enough. This approach avoided the difficulty of securing a large-scale training corpus, but it assumed that an SMT translation was better than an RBMT translation based on the SMT translation’s BLEU score; the quality of the RBMT translation was not considered. In practice, sentences that are translated well by SMT are relatively short and contain high-frequency expressions; these are also translated well by RBMT. Sentences that receive low scores with SMT also show relatively inferior performance with RBMT.
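The auto-labeling rule attributed to Reference [5] above can be sketched in a few lines. This is only an illustration of the description in the text: the function name `auto_label` and the two threshold values are hypothetical, since the actual thresholds are not given here.

```python
def auto_label(smt_bleu, high=0.6, low=0.2):
    """Label a sentence from the SMT sentence-level BLEU alone.

    `high` and `low` are hypothetical thresholds chosen for illustration.
    Returns "SMT", "RBMT", or None when the score falls between the thresholds.
    """
    if smt_bleu >= high:
        return "SMT"   # SMT output assumed to be the better translation
    if smt_bleu <= low:
        return "RBMT"  # SMT output assumed to be worse than RBMT
    return None        # ambiguous region: sentence is left unlabeled


labels = [auto_label(score) for score in (0.75, 0.10, 0.40)]
```

Note that the RBMT output never enters the decision, which is exactly the limitation discussed above.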

Other researchers adopted only one of these systems, either rule-based or statistical, as the basis, letting other systems produce sub-phrases to enrich the translation knowledge. Reference [29] adopted an SMT decoder with a combined phrase table (produced by SMT and several rule-based systems) to perform the final translation. Others [28,30,31,32] used a rule-based system to produce a skeleton of the translation and then decided whether the sub-phrases produced by SMT could be substituted for portions of the original output. This architecture required the two systems to be closely integrated, making implementation more difficult.

3. System Architecture

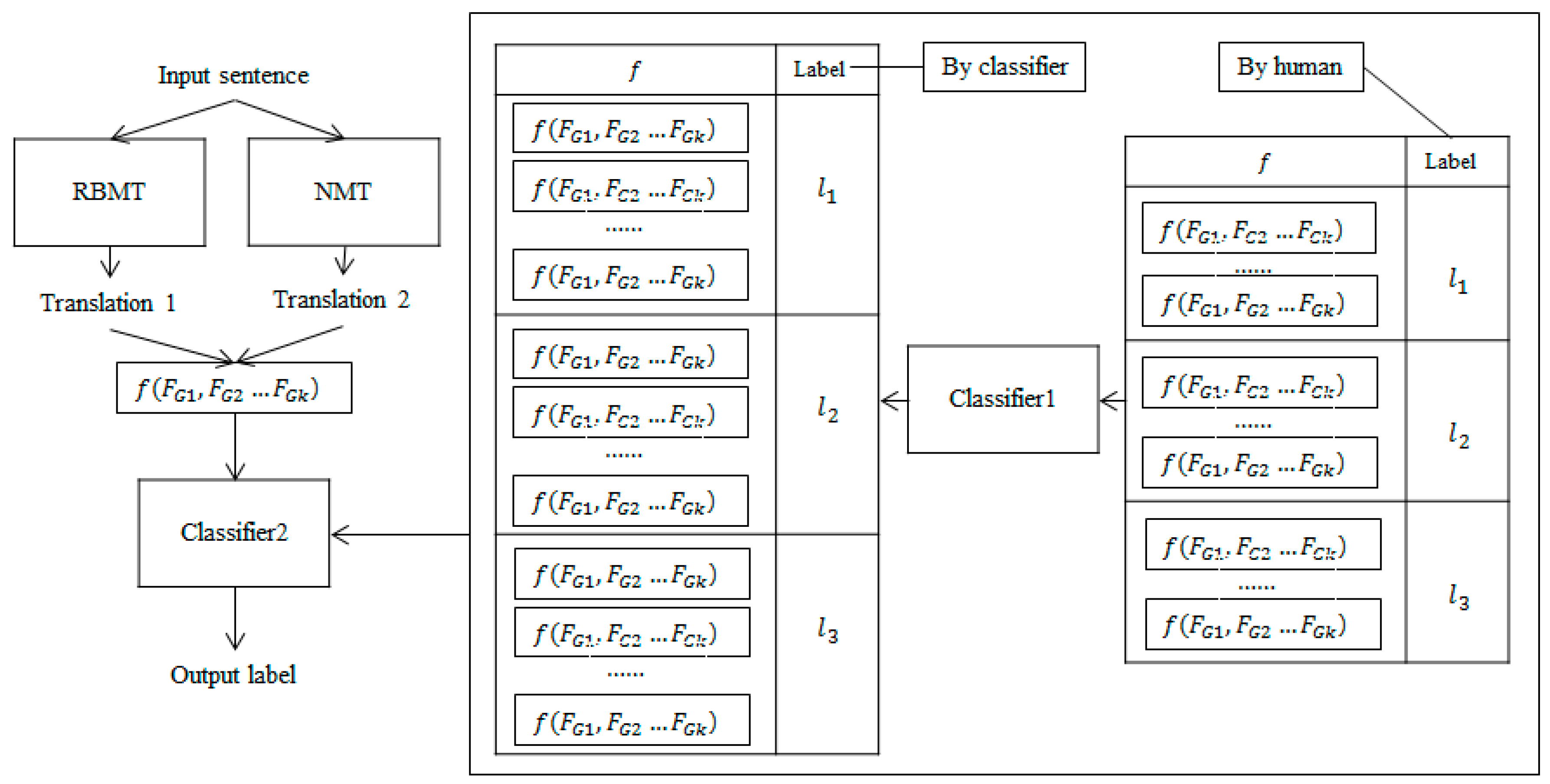

This paper aims to offset the disadvantages of NMT, which shows low performance in low-resource domains, by using RBMT. As shown in Figure 1, a classification approach is adopted to select the best of the RBMT and NMT translations.

Since the performance of RBMT is much lower than that of NMT in general cases, classification accuracy becomes more important to prevent the hybridized results from being lower than those of NMT, which is the baseline. To ensure accurate classification, we thoroughly explored glass-box features that reflect the confidence of RBMT, and we expanded the training corpus automatically using a small-capacity, labeled corpus constructed by human developers, which is a decidedly simple self-training approach.

3.1. Rich Knowledge-Based RBMT

The RBMT system adopted in this study is a machine translation system based on rich knowledge [33]. The main knowledge sources used for the transfer procedure include a bilingual dictionary (backed by statistical and contextual information) and large-scale transfer patterns (at the phrase and sentence level). The phrase-level patterns, which play the most important role in the transfer procedure, describe dependencies and syntactic relations with word orders in the source and target languages. The arguments of the patterns can be at the part-of-speech (POS) level, semantic level, or lexicon level. The following are examples of Korean-to-English transfer patterns; for readability, Korean characters have been replaced with phonetic spellings:

(N1:dobj) V2 → V2 (N1:dobj)

(N1[sem=location]:modi+ro/P2) V3 → V3 (N1:dobj)

(N1[sem=tool]:dobj) gochi/V2 → fix/V2 (N1:dobj)

(N1[sem=thought]:dobj) gochi/V2 → change/V2 (N1:dobj)

(hwajang/N1:dobj) gochi/V2 → refresh/V2 (makeup/N1:dobj)

The source-language arguments are used as constraints in pattern matching. A pattern with more arguments, or with a higher proportion of lexical- and semantic-level arguments, tends to be more informative. We use weights to express the amount of information in the source-language portion of the patterns. The weight of a lexical-level argument is higher than that of a semantic-level argument, and the weight of a semantic-level argument is higher than that of a POS-level argument. In terms of POS, verbs and auxiliary words weigh more than adjectives, and adjectives weigh more than nouns. Adjectives outweigh nouns because Korean adjectives have various forms of use, which cause ambiguity in both analysis and transfer. For example, the Korean phrase “yeppeuge ha (keep sth pretty)” can be analyzed as “yeppeu/J+geha/X” or as “yeppeuge/A ha/V”. In the former analysis, the combination of an adjective and an auxiliary word increases the difficulty of translation. Patterns that obtain higher matching weights gain higher priority in pattern matching. The quality of the translation strongly depends on the patterns used in the transfer process.
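To make the weighting scheme concrete, the following minimal sketch scores the source side of three of the patterns listed above and picks the highest-weighted one. The text specifies only the ordering of the weights; the numeric values, the multiplicative combination of level and POS weights, and the names `Argument`, `pattern_weight`, and `best_pattern` are assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical values: only the ordering (lexical > semantic > POS and
# verb/auxiliary > adjective > noun) comes from the text.
LEVEL_WEIGHT = {"lexical": 3.0, "semantic": 2.0, "pos": 1.0}
POS_WEIGHT = {"V": 3.0, "X": 3.0, "J": 2.0, "N": 1.0}


@dataclass(frozen=True)
class Argument:
    pos: str    # POS tag of the argument, e.g. "N" or "V"
    level: str  # "lexical", "semantic", or "pos"


def pattern_weight(arguments):
    """Sum the per-argument weights on the source side of a pattern."""
    return sum(LEVEL_WEIGHT[a.level] * POS_WEIGHT.get(a.pos, 1.0) for a in arguments)


def best_pattern(candidates):
    """Return the (name, arguments) candidate with the highest source-side weight."""
    return max(candidates, key=lambda item: pattern_weight(item[1]))


generic = ("(N1:dobj) V2", [Argument("N", "pos"), Argument("V", "pos")])
tool = ("(N1[sem=tool]:dobj) gochi/V2", [Argument("N", "semantic"), Argument("V", "lexical")])
makeup = ("(hwajang/N1:dobj) gochi/V2", [Argument("N", "lexical"), Argument("V", "lexical")])

chosen = best_pattern([generic, tool, makeup])
```

Under these assumed weights, the fully lexicalized pattern outranks the semantic one, which in turn outranks the POS-only pattern, mirroring the priority order described above.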

The system supports multilingual translations from and to Korean, so the patterns share the same form in different language pairs. The following Korean-to-Chinese transfer patterns share the same Korean parts with the above Korean-to-English patterns, while some of them even share the same target-language parts (if there is no lexicon argument involved):

(N1:dobj) V2 → V2 (N1:dobj)

(N1[sem=location]:modi+ro/P2) V3 → V3 (N1:dobj)

(N1[sem=tool]:dobj) gochi/V2 → xiu1li3/V2 (N1:dobj)

(N1[sem=thought]:dobj) gochi/V2 → gai3/V2 (N1:dobj)

(hwajang/N1:dobj) gochi/V2 → bu3zhuang1/V2_1

3.2. Neural Machine Translation

The NMT system OpenNMT [4], which uses an attention-based, bi-directional recurrent neural network, is adopted in this paper. The corpus used for training covers travel, shopping, and diary domains as Korean–Chinese language pairs. As described in Section 1, there are 2.87 million pairs. The sentences in both languages are segmented with byte-pair encoding [34] to minimize the rare-word problem. The dictionaries include 10,000 tokens in Korean and 17,400 tokens in Chinese. The sizes of the dictionaries are so small that most tokens are character-level, and the sizes are determined by a series of experiments using Korean–Chinese NMT. The trained model includes four hidden layers with 1000 nodes in each layer. Other hyperparameters followed the default OpenNMT settings (embedding dimensionality 512, beam size 5, dropout 0.7, batch size 32, and sgd optimizer with learning rate 1.0 for training). The validation perplexity converged to 5.54 at the end of 20 training epochs.

3.3. Feature-Based Classifier with an Automatically Expanded Dataset

Since creating human-labeled training sets for hybrid translation is time-consuming, expensive, and generally not available at large scales, we use support vector machines (SVMs) as the basic classifier, because SVMs are efficient, especially with a large number of feature dimensions and smaller datasets, while a small training set makes deep learning prohibitive. Since neural networks have shown good performance in classification using only word vectors, we also compare the feature-based classifier with several text classifiers to see whether the proposed features are still effective in such a comparison.

We use a self-training approach to construct the training corpus automatically. First, we train feature-based classifier 1 on the human-labeled dataset, and then we automatically construct a larger dataset with the trained classifier. The automatically classified dataset is used together with the human-labeled data to train classifier 2 for hybrid translation, as shown in Figure 1 above.
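The two-stage procedure can be sketched as follows. To keep the example dependency-free, a nearest-centroid classifier stands in for the SVM used in this paper; the function names, the toy two-dimensional feature vectors, and the absence of any confidence filtering on the automatically labeled data are all assumptions of this sketch.

```python
def train_centroids(features, labels):
    """Stand-in classifier: one mean feature vector per label (the paper uses an SVM)."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, vec):
    """Return the label whose centroid is closest to vec (squared Euclidean distance)."""
    def dist(center):
        return sum((a - b) ** 2 for a, b in zip(vec, center))
    return min(centroids, key=lambda label: dist(centroids[label]))


def self_train(labeled_x, labeled_y, unlabeled_x):
    """Classifier 1 labels the unlabeled pool; classifier 2 is trained on the union."""
    classifier_1 = train_centroids(labeled_x, labeled_y)
    pseudo_y = [predict(classifier_1, vec) for vec in unlabeled_x]
    classifier_2 = train_centroids(labeled_x + unlabeled_x, labeled_y + pseudo_y)
    return classifier_2, pseudo_y


# Toy data: each vector is a hypothetical pair of feature values.
human_x = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.7]]
human_y = ["RBMT", "RBMT", "NMT", "NMT"]
pool_x = [[0.7, 0.3], [0.3, 0.8], [0.95, 0.05]]

classifier_2, pseudo_y = self_train(human_x, human_y, pool_x)
```

Swapping the stand-in for an SVM only requires replacing `train_centroids` and `predict`; the self-training loop itself stays the same.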

4. Features for Hybrid Machine Translation

The classifier must determine whether the NMT or RBMT translation is more reliable. Therefore, the features fed into the classifier are designed to reflect the translation qualities of both the RBMT system and the NMT model.

The RBMT translation procedure includes analysis, transfer, and generation; thus, analysis and transfer errors have a direct impact on translation quality.

4.1. High-Frequency Error Features

Ambiguity leads to high-frequency errors in analysis and transfer procedures. Analysis ambiguity includes morpheme ambiguity (segmentation ambiguity in Chinese), POS ambiguity, and syntactic ambiguity. Transfer ambiguity includes semantic ambiguity and word-order ambiguity. These features are language dependent, and they have often been described as grammar features in previous research. These features are black box features because they are extracted from the source sentences without morpheme or POS analysis. This paper proposes 24 features to reflect high-frequency errors related to ambiguities in Korean and Chinese. Some of these are as follows:

4.1.1. Morpheme and POS Ambiguities

In Korean, the same surface form may correspond to different root forms:

Sal: sa (buy), or sal (live)

Na-neun: nal-neun (flying), or na-neun (“I” as pronoun, or “sprout” as verb)

4.1.2. Syntactic Ambiguities

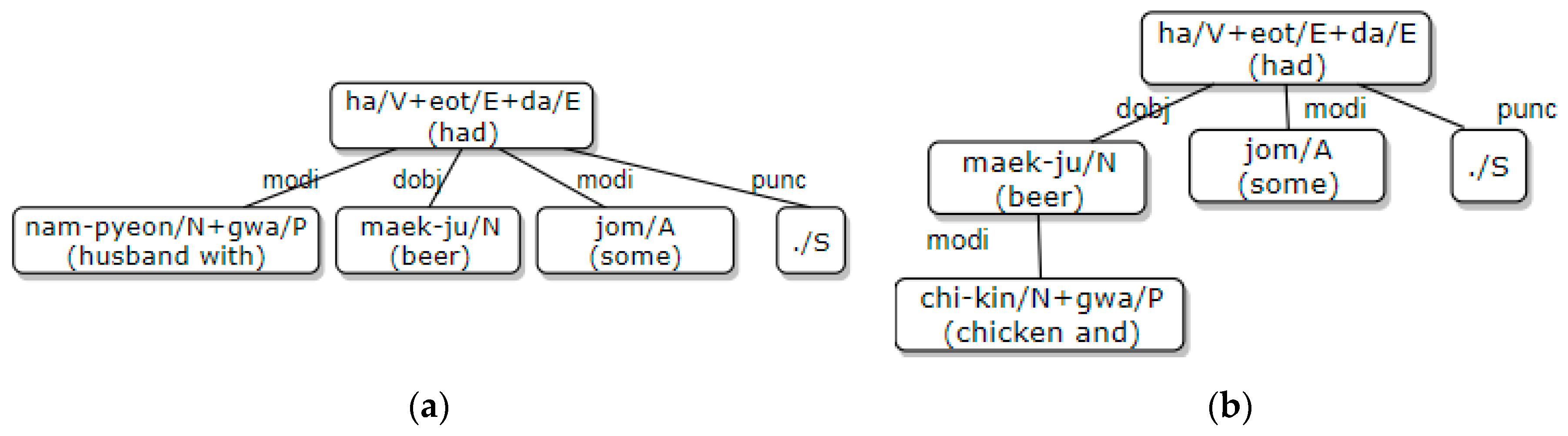

Case particles in Korean are often omitted, which causes case ambiguities among the subjective, vocative, and objective cases. The particles “-wa/-gwa”, which mean ‘with’ or ‘and’ in English, cause syntactic ambiguities when they are used to connect two nouns. Below are two examples where the syntactic structures are determined by the word semantics (see (a) and (b) in Figure 2). In this case, there are analysis ambiguities, because word semantics are not considered during analysis.

4.1.3. Ambiguity of Adverbial Postpositional Particles

Many adverbial postpositional particles in Korean not only have word-sense ambiguity, but they also cause word-order ambiguity in Korean-to-Chinese translation. This is seen with the high-frequency particles “-ro (with/by/as/to/on/onto)”, “-e (at/on/to/in/for/by)”, and “-kka-ji (even/until/by/to/up to).” The following are examples of “-ro” translation ambiguity:

4.1.4. Word-Order Ambiguity Related to Long-Distance Reordering

There are some terms that are particularly relevant when discussing long-distance reordering in translation. In Korean, these include auxiliary predicates, such as “-ryeo-go_ha (want to)” and “-r-su_it (can/may)”, and some verbs, such as “ki-dae_ha (expect)” and “won_ha (wish)”. These words can cause word-order ambiguity during translation, which results in translation errors.

4.2. Basic Linguistic Features

Basic linguistic features have been widely used in previous research. These include POS, syntactic features, and surface features related to source sentences and target translations. These features are normally considered black-box features [22,23]; however, except for the string-length feature, they can also be considered glass-box features, because they are produced by the POS tagger and parser included in the RBMT system [5].

4.2.1. Source- and Target-Sentence Surface Features

There are 19 features in this set. These include:

- Length features, such as the number of characters, morphemes, and syntax nodes. While the number of characters corresponds to the length of the string, the number of morphemes is equal to the tagged POS count. In some languages, the number of syntax nodes is the same as the number of morphemes, but they differ in Korean, because postpositional particles and ending words are not considered to be independent syntax nodes.
- Length comparison features of source and target sentences, such as the lengths of the NMT and RBMT outputs, the length ratio of each translation with the source sentence, and the length-difference ratio of each translation with the source sentence.
- The number of unknown words in each translation and their proportion in the translation.
- The number of negative words, such as “no/not”, in the source sentence, and the difference in negative word counts between the source and each translation.
- The number of numerals in the source sentence, and the difference in numeral counts between the source and each translation.
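A few of the surface features listed above can be computed directly from the two strings. The sketch below is illustrative only: it measures length in characters, uses a hypothetical English-only negation list (the actual system works on Korean and Chinese), and the feature names are not taken from the paper.

```python
import re

NEGATION_WORDS = {"no", "not", "never"}  # hypothetical English-only list for illustration


def count_numerals(text):
    """Count runs of digits, allowing one decimal point."""
    return len(re.findall(r"\d+(?:\.\d+)?", text))


def count_negations(text):
    """Count tokens that appear in the negation word list."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(1 for tok in tokens if tok in NEGATION_WORDS)


def surface_features(source, translation):
    """Length, numeral, and negation features for one source/translation pair."""
    src_len, tgt_len = len(source), len(translation)
    return {
        "src_len": src_len,
        "tgt_len": tgt_len,
        "len_ratio": tgt_len / src_len if src_len else 0.0,
        "len_diff_ratio": abs(tgt_len - src_len) / src_len if src_len else 0.0,
        "numeral_diff": count_numerals(source) - count_numerals(translation),
        "negation_diff": count_negations(source) - count_negations(translation),
    }


features = surface_features("I do not have 3 tickets", "I have tickets")
```

In the hybrid setting, the same function would be called once for the RBMT output and once for the NMT output, giving one feature group per system.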

4.2.2. Source-Sentence Linguistic Features

The number of different POSs (such as the number of verbs) and their proportion in the sentence. There are 46 total POS features.

The number of different syntax nodes (such as the number of objects or subjects) and their proportions in the syntactic nodes. There are 36 total syntactic features.

4.3. Pattern-Matching Features Reflecting Transfer Confidence

As previously introduced, the RBMT system uses transfer patterns to convert the source sentence into a translation, and the translation quality is strongly influenced by exact matches of the patterns. For example, if the original is translated with a long or informative pattern, it is more likely that the translation quality is higher than that of several short patterns or a less informative pattern. There are 19 features proposed to reflect pattern-matching confidence, and all of them are glass-box features.

4.3.1. Pattern-Number Features

In the input sentence, adopted features include the number of words that need pattern matching, the number of the words matched with patterns, and the ratio of both. The lower the pattern-matching ratio, the lower the translation reliability. All words in Korean (except final endings and modal, tense-related words) must be matched with patterns for translation.

In the matched patterns, features include the number of arguments on the source side of the patterns and the proportion of matched arguments. When more arguments match with the input sentence, the match is more reliable.
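As a rough illustration of the pattern-number features, the sketch below computes the sentence-level matching ratio and the argument-level matching ratio. The input representation (per-word boolean flags and per-pattern argument counts) and the function names are hypothetical; the real RBMT engine exposes this information in its own internal form.

```python
def pattern_number_features(needs_pattern, matched):
    """Sentence-level features.

    needs_pattern[i] is True when word i requires pattern matching;
    matched[i] is True when word i was covered by some pattern.
    """
    required = sum(needs_pattern)
    covered = sum(1 for need, hit in zip(needs_pattern, matched) if need and hit)
    ratio = covered / required if required else 1.0
    return {"required": required, "covered": covered, "match_ratio": ratio}


def argument_match_ratio(patterns):
    """Pattern-level feature.

    patterns is a list of (n_source_arguments, n_matched_arguments) pairs,
    one pair per pattern used in the transfer.
    """
    total = sum(n_args for n_args, _ in patterns)
    hit = sum(n_hit for _, n_hit in patterns)
    return hit / total if total else 0.0


sentence = pattern_number_features([True, True, False, True], [True, False, False, True])
arg_ratio = argument_match_ratio([(2, 2), (3, 1)])
```

A low `match_ratio` corresponds to the low-reliability case described above.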

4.3.2. Pattern-Weight Features

In the input sentence, adopted features include the total weight of the words that need pattern matching, the total weight of the words matched with patterns, and the ratio of these with the sentence.

In the matched patterns, adopted features include the weights of the patterns on the source side, and the ratio of the matched arguments’ weights to the patterns’ weights.

4.3.3. Pattern-Overlap and Pattern-Shortage Features

Pattern-overlap features include the number of verbs, auxiliary verbs, conjunctional connective words, and particles that match more than two patterns. If a word matches more than two patterns, the word position in those patterns may differ, and this can create word-order ambiguity related to word reordering, particularly for the above POSs. If these words do not match any patterns, this can also lead to translation errors.

Pattern-shortage features include the number of verbs, auxiliary verbs, connective words, and particles that fail to match any pattern.
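The overlap and shortage counts can be gathered in one pass over the analyzed sentence. In this sketch the POS tags in `TRACKED_POS`, the input format, and the function name are assumptions; "more than two patterns" is read literally as three or more.

```python
from collections import Counter

# Hypothetical tags: verb, auxiliary verb, connective word, particle.
TRACKED_POS = {"V", "X", "C", "P"}


def overlap_and_shortage(words, min_overlap=3):
    """Count tracked words that match many patterns or none.

    words is a list of (pos_tag, number_of_patterns_matched) pairs.
    Returns two Counters keyed by POS tag: (overlap, shortage).
    """
    overlap, shortage = Counter(), Counter()
    for pos, n_patterns in words:
        if pos not in TRACKED_POS:
            continue  # only the POS classes named in the text are counted
        if n_patterns >= min_overlap:
            overlap[pos] += 1
        elif n_patterns == 0:
            shortage[pos] += 1
    return overlap, shortage


overlap, shortage = overlap_and_shortage(
    [("V", 3), ("N", 5), ("P", 0), ("X", 1), ("V", 0)]
)
```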

4.3.4. High-Confidence Pattern Features

These features indicate whether an input sentence has been translated with a high-confidence pattern. There are patterns that are described at the sentence level, and most of their arguments are described with lexicon or sense tags. These patterns are considered high-confidence patterns. If an input sentence is translated using one of these patterns, the translation is usually trustworthy.

4.4. NMT Features

Most of the features in previous research that represent the translation quality of SMT, including the translation probability of target phrases and n-gram LM probability, were extracted from phrase tables and language models. Apart from the perplexity score of the final translation, NMT is more of a black box that barely produces sufficient information for feature extraction.

However, there are still factors that affect the quality of NMT translations, such as the number of numerals and rare words. We propose 20 NMT features as follows:

4.4.1. Translation-Perplexity Features

The perplexity produced by the NMT decoder for each translation can be adopted as a glass-box feature. The lower the perplexity, the more confident the model is regarding the translation.

The perplexity score normalized by the length of the translation is also adopted as a feature, because perplexity tends to be higher when the target output is long.
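One plausible way to obtain both a raw and a length-normalized confidence score from the decoder is shown below. The exact definitions used in this paper are not specified, so this sketch assumes the decoder exposes per-token log-probabilities and takes the summed negative log-likelihood as the raw score and the standard per-token perplexity as the normalized one.

```python
import math


def perplexity_features(token_logprobs):
    """Return a raw sentence score and a length-normalized perplexity.

    token_logprobs holds the natural-log probability of each target token.
    """
    n_tokens = len(token_logprobs)
    total_nll = -sum(token_logprobs)  # grows with translation length
    per_token_ppl = math.exp(total_nll / n_tokens) if n_tokens else float("inf")
    return {"total_nll": total_nll, "per_token_ppl": per_token_ppl}


short = perplexity_features([math.log(0.5)] * 4)
long = perplexity_features([math.log(0.5)] * 12)
```

With identical per-token confidence, the longer output receives a higher raw score but the same normalized value, which is the motivation for keeping both features.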

4.4.2. Token-Frequency Features

Token-frequency classes are used for the source and target side. The average token frequency of each side is normalized to create 10 classes (1 to 10) that are adopted as black-box features. Tokens with higher frequencies belong to higher classes.

The relationship between the average frequencies of the target tokens and the source tokens is captured by two features: the ratio of their average frequencies and the ratio of their frequency classes.
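The token-frequency features might be computed along the following lines. The normalization into 10 classes is not specified in the text, so the log-scale binning used here, the frequency tables, and all function names are assumptions for illustration.

```python
import math


def frequency_class(avg_freq, max_freq, n_classes=10):
    """Map an average token frequency to a class in 1..n_classes on a log scale."""
    if avg_freq <= 1 or max_freq <= 1:
        return 1
    scaled = math.log(avg_freq) / math.log(max_freq)
    return min(n_classes, max(1, math.ceil(scaled * n_classes)))


def average_frequency(tokens, table):
    """Mean training-corpus frequency of the tokens (0 for unseen tokens)."""
    return sum(table.get(tok, 0) for tok in tokens) / len(tokens) if tokens else 0.0


def token_frequency_features(src_tokens, tgt_tokens, src_freq, tgt_freq):
    """Frequency classes for both sides plus the two ratio features."""
    src_avg = average_frequency(src_tokens, src_freq)
    tgt_avg = average_frequency(tgt_tokens, tgt_freq)
    src_cls = frequency_class(src_avg, max(src_freq.values()))
    tgt_cls = frequency_class(tgt_avg, max(tgt_freq.values()))
    return {
        "src_class": src_cls,
        "tgt_class": tgt_cls,
        "avg_ratio": tgt_avg / src_avg if src_avg else 0.0,
        "class_ratio": tgt_cls / src_cls,
    }


# Toy frequency tables with hypothetical counts.
src_freq = {"a": 1000, "b": 10}
tgt_freq = {"x": 1000, "y": 100}
freq_features = token_frequency_features(["b", "b"], ["x"], src_freq, tgt_freq)
```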

4.4.3. Token Features

To avoid the rare-word problem, NMT normally uses sub-words as tokens for both source and target sentences.

The numbers of numerals, foreign tokens, unknown tokens, and low-frequency tokens are captured with eight total black-box features for the source-sentence and its translation. Foreign tokens can be on either the source or target side (for example, English words in both a Korean source-sentence and a Chinese translation). Unknown tokens are those that are outside the token dictionary’s scope or untranslated tokens on the target side (for example, untranslated Korean tokens remaining in the Chinese translation). Low-frequency tokens are tokens included in the token dictionary whose occurrence frequency in the training corpus is under a specified threshold.

The counts of mismatched numerals and foreign tokens in the source sentence and in the translation are captured with one feature. If both the source and target sentences have the same number of numerals or foreign tokens, but one of these numerals or foreign tokens differs in the translation (from that in the source sentence), we consider this a mismatch.

4.5. Rule-Based Features

By considering the proposed features above, several new rule-based features are obtained to detect whether the RBMT or NMT result is trustworthy. This produces four glass-box features in total.

4.5.1. RBMT Pattern Feature

Whether the RBMT result is unreliable is captured by considering pattern-matching features and calculating penalties. For example, if the ratio of matching pattern weights is below a given threshold, or if pattern matching is severely lacking, the RBMT result is not reliable.

4.5.2. Basic-Linguistic Features

Whether the RBMT result is unreliable is captured by considering the mismatching numerals and negative words along with other potential grammar errors.

Which translation is less reliable is captured by considering the number of unknown words in RBMT and NMT and whether the length difference ratio of RBMT or NMT exceeds a threshold. For example, if RBMT translates all words, but two words of NMT are not translated, the results of RBMT will be considered more trustworthy. Alternatively, if the original sentence and the RBMT translation are very long, but the NMT translation is very short, the NMT may not be trusted.
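The comparison rule in the last paragraph can be written as a small decision function. The thresholds `unknown_gap` and `max_len_diff_ratio` and the dictionary keys are hypothetical values chosen to reproduce the two examples in the text; they are not the settings used in the experiments.

```python
def less_reliable(rbmt, nmt, unknown_gap=2, max_len_diff_ratio=0.5):
    """Decide which translation looks less reliable.

    rbmt and nmt are dicts with keys "unknown_words" and "len_diff_ratio".
    Returns "RBMT", "NMT", or None when neither rule fires.
    """
    # Rule 1: one system leaves clearly more words untranslated.
    if nmt["unknown_words"] - rbmt["unknown_words"] >= unknown_gap:
        return "NMT"
    if rbmt["unknown_words"] - nmt["unknown_words"] >= unknown_gap:
        return "RBMT"
    # Rule 2: only one system's output length deviates strongly from the source.
    rbmt_off = rbmt["len_diff_ratio"] > max_len_diff_ratio
    nmt_off = nmt["len_diff_ratio"] > max_len_diff_ratio
    if nmt_off and not rbmt_off:
        return "NMT"
    if rbmt_off and not nmt_off:
        return "RBMT"
    return None


untranslated = less_reliable(
    {"unknown_words": 0, "len_diff_ratio": 0.1},
    {"unknown_words": 2, "len_diff_ratio": 0.1},
)
too_short = less_reliable(
    {"unknown_words": 0, "len_diff_ratio": 0.1},
    {"unknown_words": 0, "len_diff_ratio": 0.8},
)
```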

4.5.3. Classification Feature

After considering the rule-based features above, we add a feature to indicate whether we prefer the RBMT or NMT translation.

6. Conclusions

This paper deals with a classification-based hybridization that selects the better of the NMT and RBMT translations. Considering that previously used measures and features tend to evaluate frequency and fluency, which creates a preference bias for NMT, we investigated RBMT-related features, including pattern-matching features and rule-based features that measure RBMT’s quality, and we proposed NMT-related features that reflect NMT’s quality. We also expanded the training data automatically using a classifier trained on a small, human-constructed dataset. The proposed classification-based hybrid translation system achieved a translation accuracy of 86.63%, which outperformed both RBMT and NMT. In practice, it is difficult to improve the accuracy of a translation system beyond a certain level (such as 80%); thus, this improvement is notable. The contribution to performance is more evident in out-of-domain translations, where RBMT and NMT showed translation accuracies of 69.60% and 67.44%, respectively, while the proposed hybrid system scored 71.75%.

Since deep-learning text classification and sentence classification have shown good performance, we also compared feature-based classification with text classification. Our experiments show that feature-based classification still has better performance than text classification.

Future studies will focus on low-resource NMT by exploring ways to utilize the in-depth and explicit linguistic knowledge that has been used in RBMT systems until now. We expect to use user dictionaries and syntactic information to improve NMT’s ability to control low-frequency-word translation and sentence structure in long-sentence translation.