Speaker Verification Employing Combinations of Self-Attention Mechanisms

Abstract

1. Introduction

2. Related Works

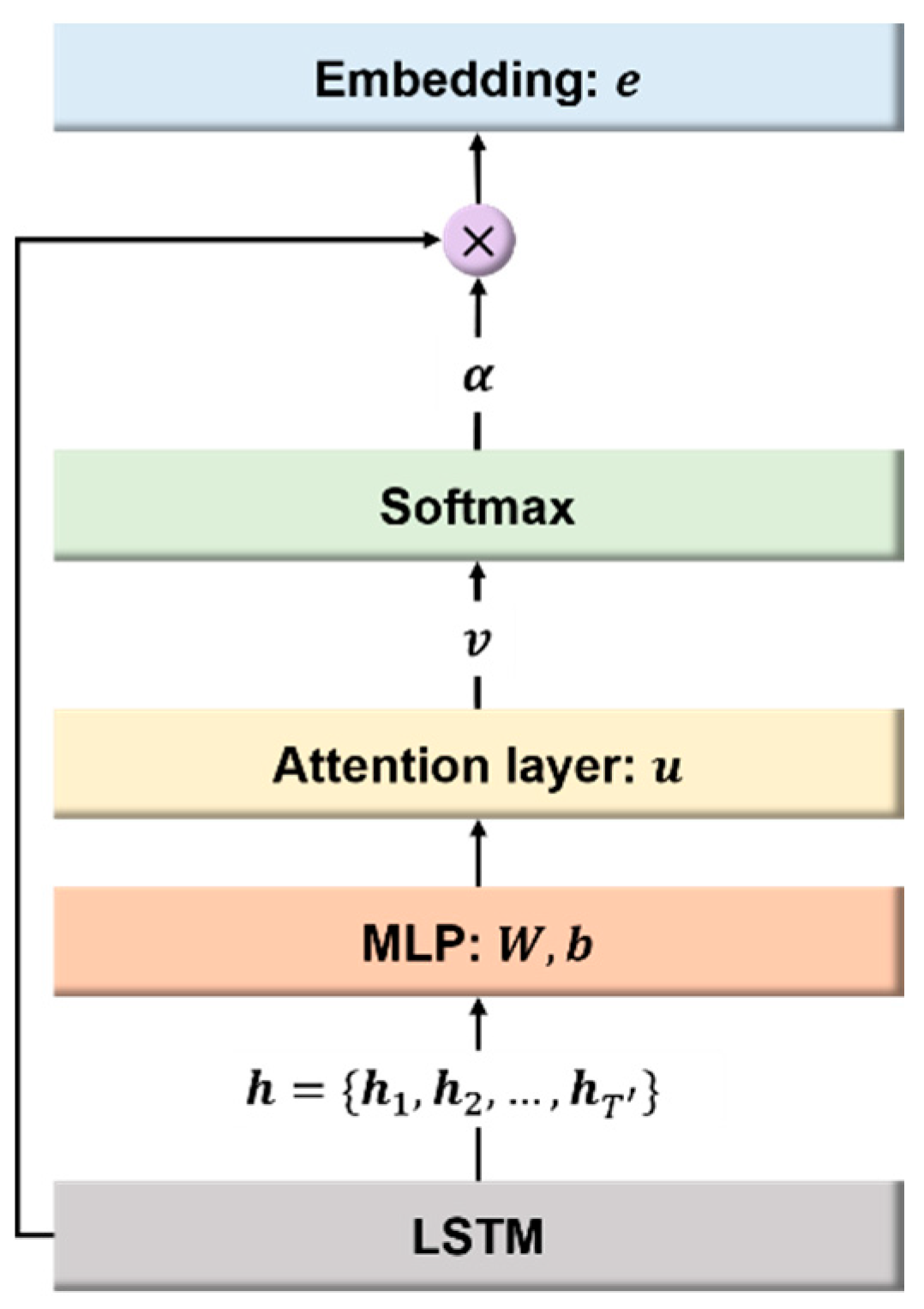

2.1. LSTM-Based Embedding Extractor

2.2. Single-Head Attention
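Single-head attention pooling, as in the attention-based model of Chowdhury et al. [11], replaces a single-frame summary with a weighted average of all frame-level features, where the weights come from a softmax over per-frame scores. The sketch below assumes the scoring function e_t = v^T tanh(W h_t + b). The parameter names `W`, `b`, `v` and the helper functions are hypothetical illustrations, not the exact formulation used in this paper.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scalar scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def single_head_attention_pool(frames: Matrix, W: Matrix, b: Vector, v: Vector) -> Vector:
    """Pool T frame-level vectors h_t (each of size d) into one utterance-level vector.

    Assumed score: e_t = v^T tanh(W h_t + b), with W of shape d_a x d.
    """
    scores = []
    for h in frames:
        z = [math.tanh(sum(w * x for w, x in zip(row, h)) + b_i) for row, b_i in zip(W, b)]
        scores.append(sum(v_i * z_i for v_i, z_i in zip(v, z)))
    alphas = softmax(scores)  # one weight per frame; the weights sum to 1
    d = len(frames[0])
    # Weighted average of the frames: sum_t alpha_t * h_t
    return [sum(a * h[k] for a, h in zip(alphas, frames)) for k in range(d)]
```

With an all-zero `W`, every frame receives the same score, so the output reduces to the plain mean of the frames.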

2.3. Multi-Head Attention

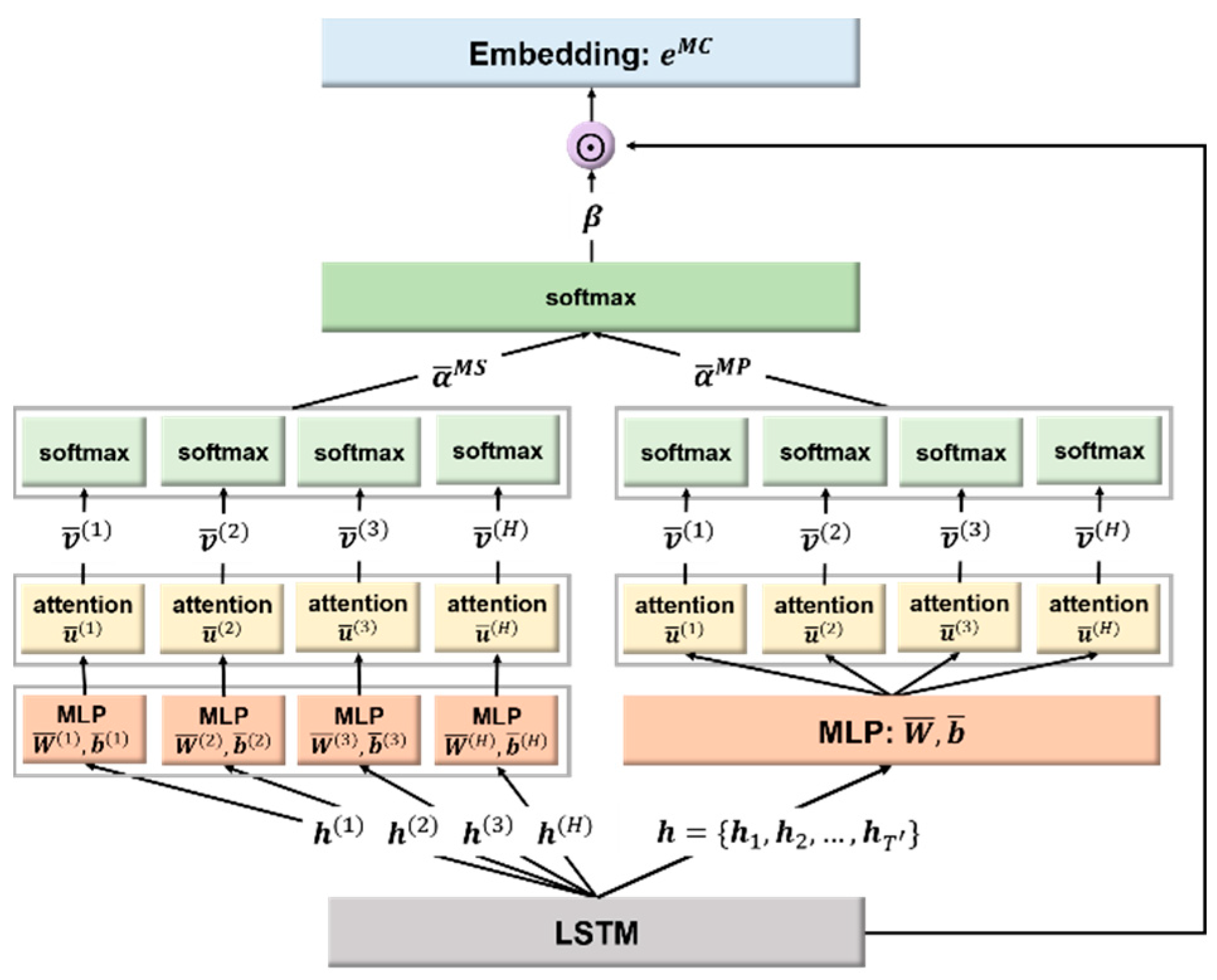

3. Proposed Methods

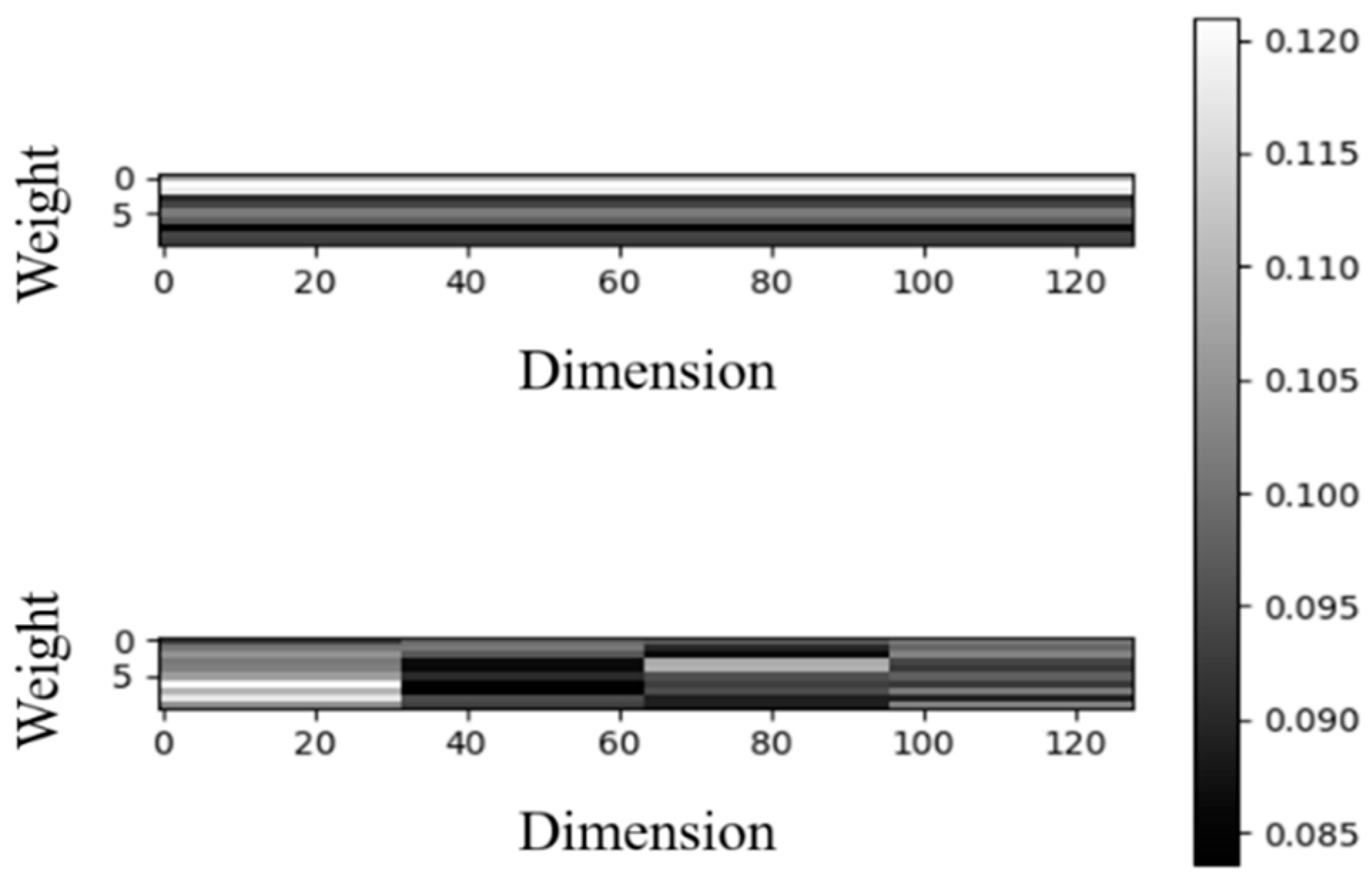

3.1. Multi-Head Projection and Split Combination

3.1.1. Multi-Head Attention with Projection
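In the projection style of multi-head attention, exemplified by the self-attentive embeddings of Zhu et al. [15], every head scores the full frame-level vector through a shared projection followed by a head-specific weight vector, and the heads' weighted averages are concatenated. A minimal sketch follows. It assumes a tanh nonlinearity on the projection and no bias terms. The names `W1`, `W2` and the helper functions are hypothetical, and the sketch is not the exact layer used in this paper.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scalar scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Matrix-vector product M x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def multi_head_projection_pool(frames: Matrix, W1: Matrix, W2: Matrix) -> Vector:
    """Multi-head attentive pooling in which every head sees the full frame vector.

    frames: T x d frame-level features h_t
    W1:     d_a x d projection shared by all heads
    W2:     R x d_a, one scoring row per head
    Returns the concatenation of R weighted averages (length R * d).
    """
    projected = [[math.tanh(z) for z in matvec(W1, h)] for h in frames]  # T x d_a
    d = len(frames[0])
    pooled: Vector = []
    for w_r in W2:
        # Head r: one score per frame, normalized over time.
        alphas = softmax([sum(w * p for w, p in zip(w_r, p_t)) for p_t in projected])
        pooled.extend(sum(a * h[k] for a, h in zip(alphas, frames)) for k in range(d))
    return pooled
```

Because each head returns a full d-dimensional average, the output grows to R * d as heads are added.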

3.1.2. Multi-Head Attention with Split
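The split style, as in the self multi-head attention of India et al. [16], divides each frame-level vector into K equal sub-vectors and lets each head attend over time using only its own slice. A minimal sketch follows. It assumes one learnable query vector per head and a scaled dot-product score. `queries` and the function names are hypothetical, and this is not necessarily the exact formulation used in this paper.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scalar scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_split_pool(frames: Matrix, queries: Matrix) -> Vector:
    """Multi-head attentive pooling in which each head sees only its own slice of h_t.

    frames:  T x d frame-level features h_t
    queries: K query vectors u_j, each of length d / K (hypothetical parameter)
    Returns the concatenation of K pooled slices (length d).
    """
    K, d = len(queries), len(frames[0])
    if d % K != 0:
        raise ValueError("feature size d must be divisible by the number of heads K")
    size = d // K
    pooled: Vector = []
    for j, u in enumerate(queries):
        chunks = [h[j * size:(j + 1) * size] for h in frames]  # slice j of every frame
        # Scaled dot-product score between each slice and the head's query.
        scores = [sum(c_i * u_i for c_i, u_i in zip(c, u)) / math.sqrt(size) for c in chunks]
        alphas = softmax(scores)
        pooled.extend(sum(a * c[k] for a, c in zip(alphas, chunks)) for k in range(size))
    return pooled
```

Unlike the projection style, the output length stays d regardless of K. Each head's attention weights depend only on its slice of the features.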

3.2. Single-Head and Multi-Head Combination

4. Experiments

4.1. Dataset

4.2. Model Architecture

- (1) The LSTM-based model receives acoustic features as input and converts them into frame-level features.
- (2) The attention layer converts the frame-level features into utterance-level features.
- (3) The GE2E loss is computed during parameter optimization.
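Step (1) can be illustrated with a toy single-layer LSTM written in plain Python. This is an assumption-laden sketch, not the authors' model. The weights are random and untrained, and the class `TinyLSTM` and the helper functions are hypothetical. The utterance-level vector is taken from the last frame, which is assumed here to match how LSTM baselines without attention, such as [9], are commonly built. In the attention models, step (2) would replace `last_frame_embedding`.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """Single-layer LSTM with small random weights (illustration only, untrained)."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def mat(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # One (W, U, b) triple per gate: input (i), forget (f), candidate (g), output (o).
        self.params = {
            gate: (mat(hidden_dim, input_dim), mat(hidden_dim, hidden_dim), [0.0] * hidden_dim)
            for gate in "ifgo"
        }
        self.hidden_dim = hidden_dim

    def _gate(self, name: str, x: Vector, h: Vector) -> Vector:
        """Pre-activation W x + U h + b for one gate."""
        W, U, b = self.params[name]
        return [
            sum(w * xi for w, xi in zip(W[k], x)) + sum(u * hi for u, hi in zip(U[k], h)) + b[k]
            for k in range(self.hidden_dim)
        ]

    def forward(self, frames: Matrix) -> Matrix:
        """Step (1): map T acoustic feature vectors to T frame-level hidden states."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        outputs: Matrix = []
        for x in frames:
            i = [sigmoid(z) for z in self._gate("i", x, h)]
            f = [sigmoid(z) for z in self._gate("f", x, h)]
            g = [math.tanh(z) for z in self._gate("g", x, h)]
            o = [sigmoid(z) for z in self._gate("o", x, h)]
            c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c, i, g)]
            h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
            outputs.append(h)
        return outputs


def last_frame_embedding(frame_features: Matrix) -> Vector:
    """No-attention baseline: L2-normalized output of the last frame."""
    last = frame_features[-1]
    norm = math.sqrt(sum(v * v for v in last)) or 1.0
    return [v / norm for v in last]
```

For example, `TinyLSTM(input_dim=40, hidden_dim=8).forward(features)` on T frames of 40-dimensional features (an arbitrary size chosen for illustration) returns T frame-level vectors of length 8.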

4.2.1. LSTM-Based Speaker Verification Model

4.2.2. Generalized End-to-End (GE2E) Loss
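The GE2E loss of Wan et al. [21] scores each utterance embedding e_ji against every speaker centroid c_k with a scaled cosine similarity, S_ji,k = w cos(e_ji, c_k) + b. The centroid of the utterance's own speaker is recomputed without that utterance. The sketch below implements the softmax variant, L(e_ji) = -S_ji,j + log sum_k exp(S_ji,k), summed over the batch. This section does not state whether the softmax or the contrast variant is used. The fixed values of `w` and `b` and all function names are illustrative assumptions; in GE2E, w (constrained to be positive) and b are learned.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def ge2e_softmax_loss(emb: List[List[Vector]], w: float = 10.0, b: float = -5.0) -> float:
    """Softmax-variant GE2E loss summed over a batch.

    emb[j][i] is the embedding of utterance i of speaker j (N speakers x M utterances).
    """
    if any(len(spk) < 2 for spk in emb):
        raise ValueError("each speaker needs at least 2 utterances")
    centroids = [mean(spk) for spk in emb]
    total = 0.0
    for j, spk in enumerate(emb):
        for i, e in enumerate(spk):
            sims = []
            for k, c_k in enumerate(centroids):
                # Own-speaker centroid excludes the current utterance.
                c = mean([u for m, u in enumerate(spk) if m != i]) if k == j else c_k
                sims.append(w * cosine(e, c) + b)
            # -S_ji,j + log sum_k exp(S_ji,k), using a stable log-sum-exp.
            top = max(sims)
            log_sum = top + math.log(sum(math.exp(s - top) for s in sims))
            total += log_sum - sims[j]
    return total
```

The loss is small when each embedding lies close to its own speaker's centroid and far from the others. It grows when utterances of different speakers are mixed.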

4.2.3. Attention Layer

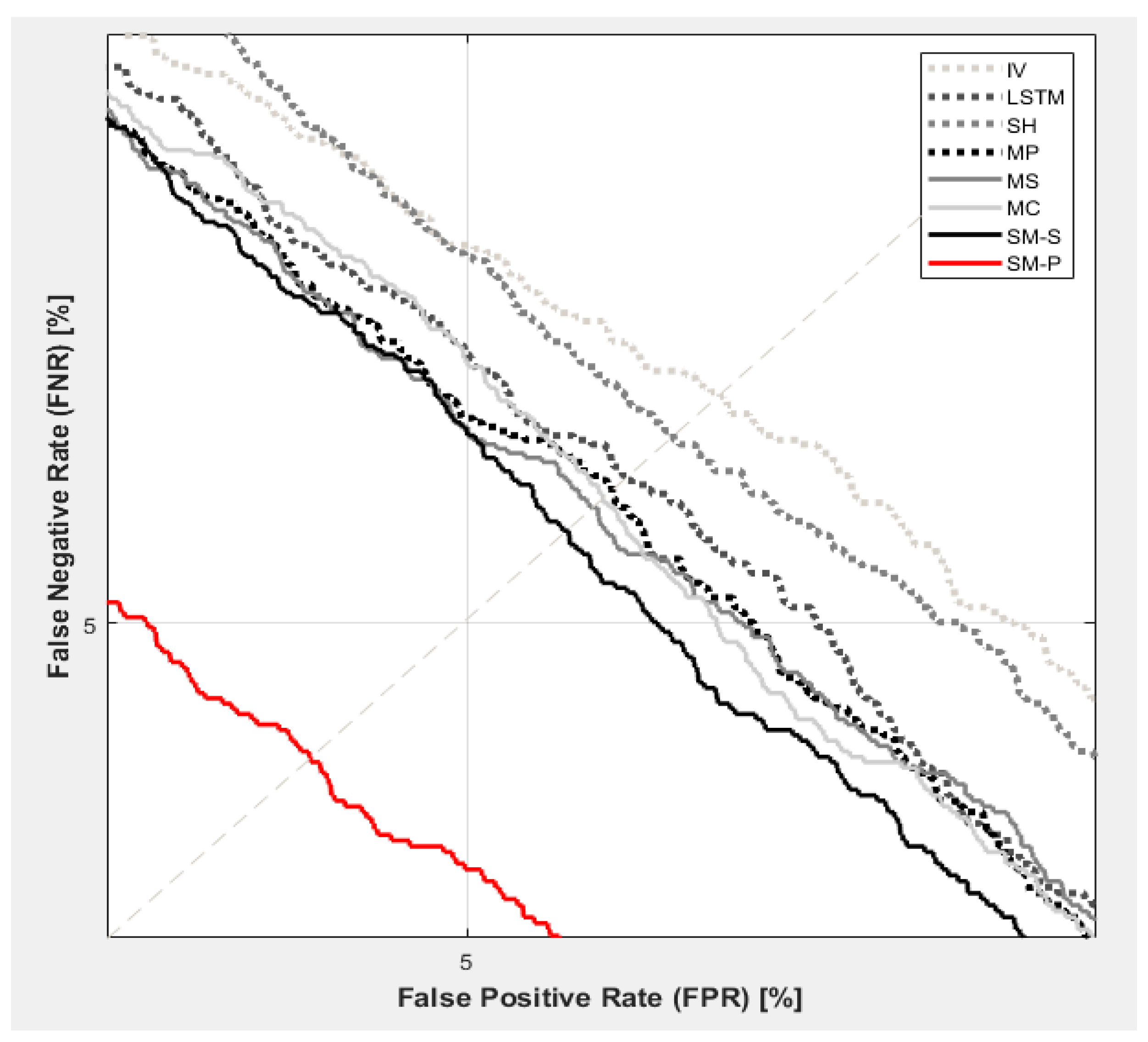

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Reynolds, D.A.; Rose, R.C. Robust text-independent speaker identification using Gaussian mixture speaker models. IEEE Trans. Speech Audio Process. 1995, 3, 72–83.

2. Reynolds, D.A.; Quatieri, T.F.; Dunn, R.B. Speaker Verification Using Adapted Gaussian Mixture Models. Digit. Signal Process. 2000, 10, 19–41.

3. Ayhan, B.; Kwan, C. Robust Speaker Identification Algorithms and Results in Noisy Environments. In Advances in Neural Networks – ISNN 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 10878.

4. Dehak, N.; Kenny, P.; Dehak, R.; Dumouchel, P.; Ouellet, P. Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 788–798.

5. Variani, E.; Lei, X.; McDermott, E.; Lopez-Moreno, I.; Dominguez, J.G. Deep neural networks for small footprint text-dependent speaker verification. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 4080–4084.

6. Peddinti, V.; Povey, D.; Khudanpur, S. A time delay neural network architecture for efficient modeling of long temporal contexts. In Proceedings of the 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 3214–3218.

7. Liu, Y.; Qian, Y.; Chen, N.; Fu, T.; Zhang, Y.; Yu, K. Deep feature for text-dependent speaker verification. Speech Commun. 2015, 73, 1–13.

8. Snyder, D.; Garcia-Romero, D.; Povey, D.; Khudanpur, S. Deep neural network embeddings for text-independent speaker verification. In Proceedings of Interspeech 2017, the 18th Annual Conference of the International Speech Communication Association, Stockholm, Sweden, 20–24 August 2017; pp. 999–1003.

9. Heigold, G.; Moreno, I.; Bengio, S.; Shazeer, N. End-to-end text-dependent speaker verification. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5115–5119.

10. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

11. Chowdhury, F.; Wang, Q.; Moreno, I.L.; Wan, L. Attention-based models for text-dependent speaker verification. arXiv 2017, arXiv:1710.10470.

12. Wang, Q.; Downey, C.; Wan, L.; Mansfield, P.A.; Moreno, I.L. Speaker diarization with LSTM. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5239–5243.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

14. Okabe, K.; Koshinaka, T.; Shinoda, K. Attentive Statistics Pooling for Deep Speaker Embedding. arXiv 2018, arXiv:1803.10963.

15. Zhu, Y.; Ko, T.; Snyder, D.; Mak, B.; Povey, D. Self-Attentive Speaker Embeddings for Text-Independent Speaker Verification. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH 2018), Hyderabad, India, 2–6 September 2018.

16. India, M.; Safari, P.; Hernando, J. Self Multi-Head Attention for Speaker Recognition. arXiv 2019, arXiv:1906.09890.

17. Sankala, S.; Rafi, B.S.M.; Kodukula, S.R.M. Self Attentive Context dependent Speaker Embedding for Speaker Verification. In Proceedings of the 2020 National Conference on Communications (NCC), Kharagpur, India, 21–23 February 2020.

18. You, L.; Guo, W.; Dai, L.-R.; Du, J. Deep Neural Network Embeddings with Gating Mechanisms for Text-Independent Speaker Verification. arXiv 2019, arXiv:1903.12092.

19. Fujita, Y.; Kanda, N.; Horiguchi, S.; Xue, Y.; Nagamatsu, K.; Watanabe, S. End-to-End Neural Speaker Diarization with Self-Attention. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019.

20. Wang, Z.; Yao, K.; Li, X.; Fang, S. Multi-Resolution Multi-Head Attention in Deep Speaker Embedding. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2020), Barcelona, Spain, 4–8 May 2020; pp. 6464–6468.

21. Wan, L.; Wang, Q.; Papir, A.; Moreno, I.L. Generalized End-to-End Loss for Speaker Verification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018.

22. Nagrani, A.; Chung, J.S.; Zisserman, A. VoxCeleb: A Large-Scale Speaker Identification Dataset. arXiv 2017, arXiv:1706.08612.

23. PyTorch. Available online: https://pytorch.org (accessed on 20 August 2020).

| Models | Labels | EER [%] |
|---|---|---|
| i-vector/PLDA [4] | IV | 6.02 |
| LSTM (no attention) [9] | LSTM | 5.63 |
| Single-head [11] | SH | 5.82 |
| Multi-head with Projection [15] | MP | 5.57 |
| Multi-head with Split [16] | MS | 5.50 |
| Projection & Split Multi-head Combination (proposed) | MC | 5.53 |
| Single & Multi-head with Split Combination (proposed) | SM-S | 5.39 |
| Single & Multi-head with Projection Combination (proposed) | SM-P | 4.45 |
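The table reports the equal error rate (EER), the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). A simple threshold-sweep approximation is sketched below. The function name and the choice to sweep only over observed scores are assumptions, and this is not necessarily the evaluation procedure used to produce the table.

```python
from typing import Sequence


def equal_error_rate(target_scores: Sequence[float], nontarget_scores: Sequence[float]) -> float:
    """Approximate EER in percent by sweeping the threshold over all observed scores.

    A trial is accepted when its score >= threshold.
    FAR = accepted non-target trials / all non-target trials
    FRR = rejected target trials / all target trials
    """
    best_gap, eer = float("inf"), 0.0
    for thr in sorted(set(target_scores) | set(nontarget_scores)):
        far = sum(s >= thr for s in nontarget_scores) / len(nontarget_scores)
        frr = sum(s < thr for s in target_scores) / len(target_scores)
        if abs(far - frr) < best_gap:
            # Keep the threshold where FAR and FRR are closest; report their average.
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return 100.0 * eer
```

For instance, target scores [0.9, 0.6, 0.4, 0.8] and non-target scores [0.5, 0.3, 0.7, 0.1] balance at a threshold of 0.6. At that threshold, one of four trials is wrong on each side, which gives an EER of 25.0%.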

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bae, A.; Kim, W. Speaker Verification Employing Combinations of Self-Attention Mechanisms. Electronics 2020, 9, 2201. https://doi.org/10.3390/electronics9122201
