Approximate LSTM Computing for Energy-Efficient Speech Recognition

Abstract

1. Introduction

2. Background

2.1. DNN-Based End-to-End Speech Recognition

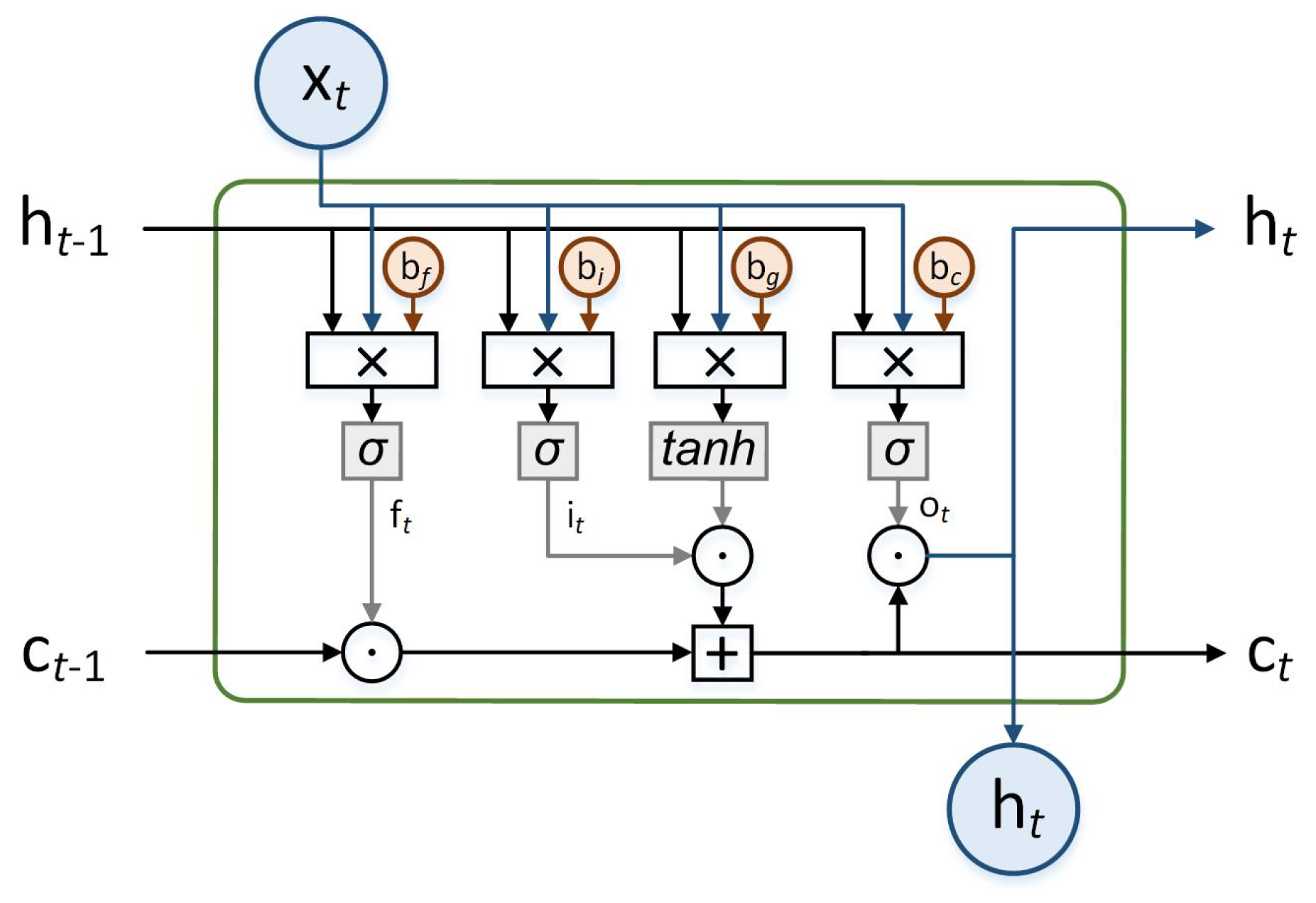

2.2. DeepSpeech Network and LSTM Operations
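For reference, a standard LSTM cell without peephole connections updates its state from the current input x_t and the previous hidden state h_{t-1}. The input, forget and output gates (i, f, o) use a sigmoid, and the candidate g uses tanh. The new state is c_t = f ⊙ c_{t-1} + i ⊙ g and h_t = o ⊙ tanh(c_t). The minimal pure-Python sketch below follows that textbook formulation. The weight layout (one matrix per gate over the concatenated vector [x_t, h_{t-1}]) and the names `lstm_cell` and `params` are illustrative assumptions. This section's exact layer configuration is not reproduced here.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(W, v):
    # Dense matrix-vector product; W is a list of rows.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_cell(x, h_prev, c_prev, params):
    """One step of a standard (non-peephole) LSTM cell.

    params maps each gate name ('i', 'f', 'o', 'g') to a (W, b) pair, where
    W multiplies the concatenated vector [x, h_prev]. This layout is an
    illustrative assumption, not taken from the paper.
    """
    z = x + h_prev  # list concatenation -> [x_t, h_{t-1}]
    pre = {k: [a + b for a, b in zip(matvec(W, z), bias)]
           for k, (W, bias) in params.items()}
    i = [sigmoid(v) for v in pre['i']]    # input gate
    f = [sigmoid(v) for v in pre['f']]    # forget gate
    o = [sigmoid(v) for v in pre['o']]    # output gate
    g = [math.tanh(v) for v in pre['g']]  # candidate cell state
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c
```

As a quick sanity check, all-zero weights and biases make every gate sigmoid(0) = 0.5 and the candidate tanh(0) = 0, so the cell state simply halves.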

3. Similarity-Based LSTM Operation

3.1. Cell-Skipping Method Using Similarity Score

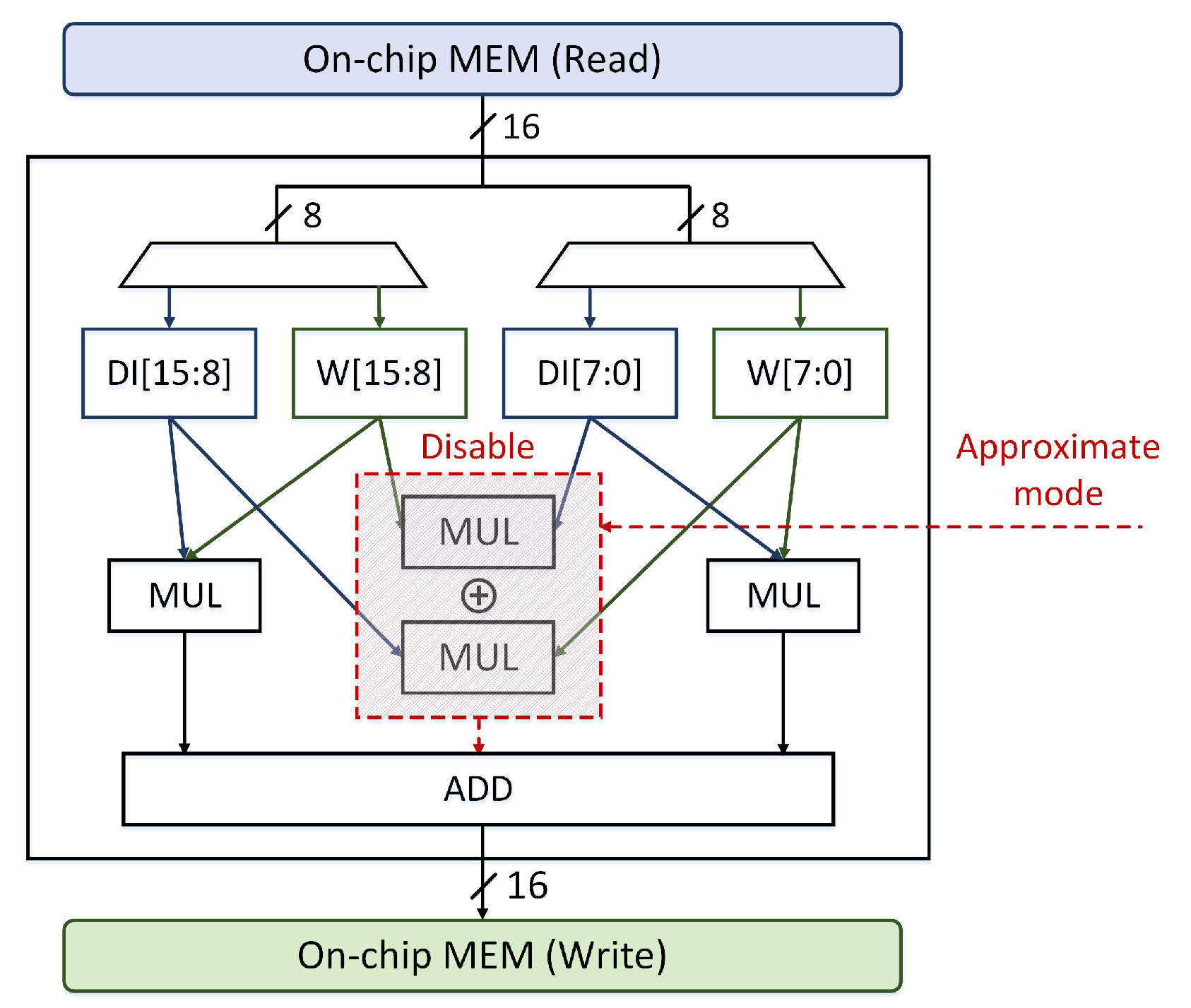
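The section title indicates that a similarity score decides whether an LSTM cell update can be skipped and the previous result reused. The sketch below is an illustration only. It assumes cosine similarity between input frames and a hypothetical threshold `SIM_THRESHOLD`. It compares each frame with the input of the most recently *computed* step, which is also an assumption. The paper's actual score, threshold, and comparison target are not given here. The LSTM step is passed in as a callable, so any cell implementation can be plugged in.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Cell = Callable[[Vector, Vector, Vector], Tuple[Vector, Vector]]

SIM_THRESHOLD = 0.95  # hypothetical value, not taken from the paper


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0  # treat a zero vector as dissimilar so the cell is computed
    return dot / (na * nb)


def run_with_skipping(frames: Sequence[Vector], h0: Vector, c0: Vector,
                      cell: Cell, threshold: float = SIM_THRESHOLD):
    """Run an LSTM over frames, reusing (h, c) when an input is similar enough.

    Returns the final hidden state, the final cell state, and the number of
    skipped steps.
    """
    h, c = h0, c0
    ref = None    # input of the most recently computed step
    skipped = 0
    for x in frames:
        if ref is not None and cosine_similarity(x, ref) >= threshold:
            skipped += 1  # skip the cell: keep h and c unchanged
            continue
        h, c = cell(x, h, c)
        ref = x
    return h, c, skipped
```

Comparing against the last computed input, rather than the immediately preceding frame, keeps a slow drift across many skipped frames from going unnoticed. Whether the paper makes the same choice is not stated in this section.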

3.2. Pseudo-Skipping Method for Approximate LSTM Operations

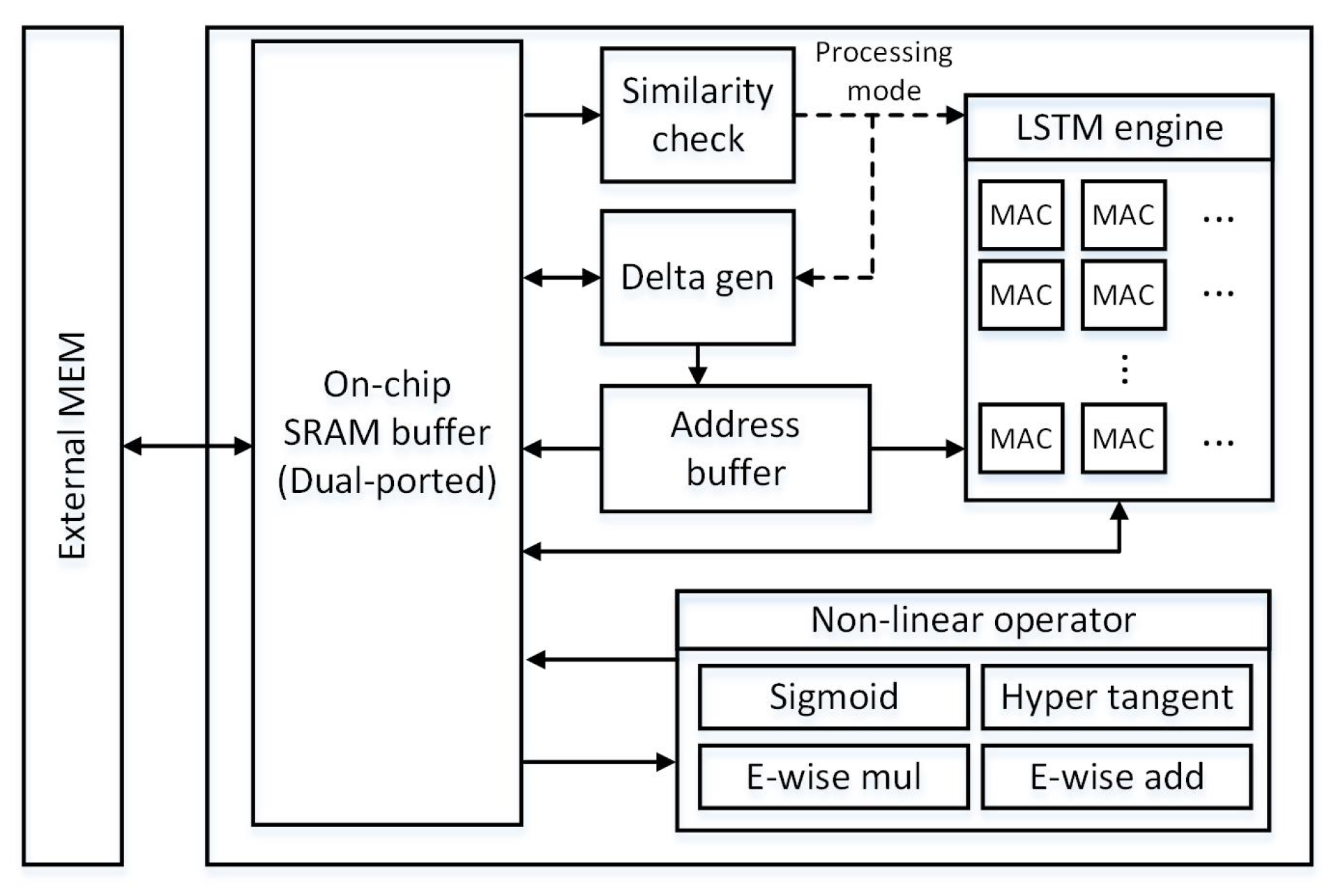

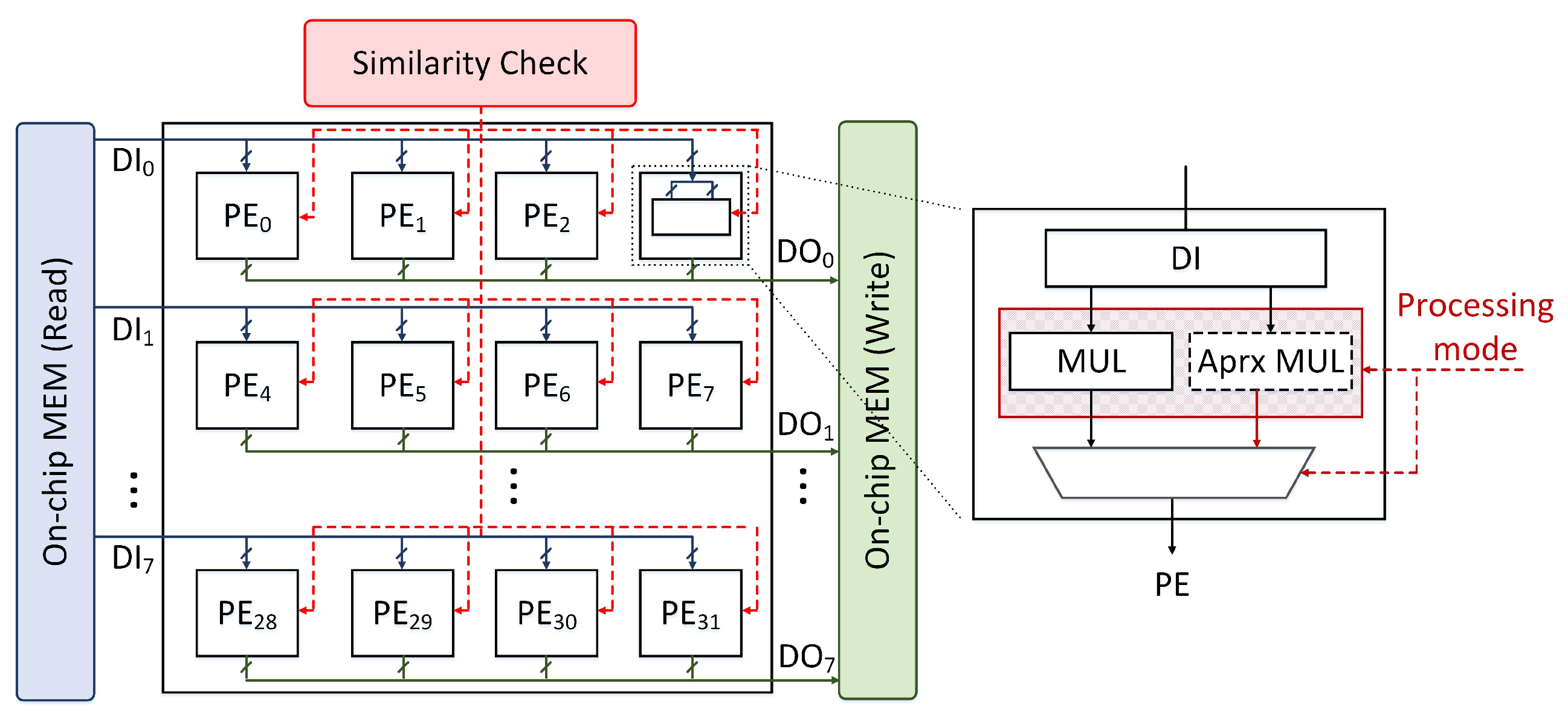
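The heading pairs skipping with *approximate* LSTM operations. One way such a scheme could be organized is a three-way decision: skip the cell when inputs are highly similar, run a cheaper approximate update when they are moderately similar, and run the full update otherwise. The sketch below shows only that hypothetical policy. Both thresholds are made-up values. The `quantize` helper is a generic stand-in for "cheaper" that lowers numeric resolution. Neither is presented as the paper's pseudo-skipping method.

```python
SKIP_THRESHOLD = 0.95    # hypothetical
APPROX_THRESHOLD = 0.80  # hypothetical


def select_mode(similarity, skip_th=SKIP_THRESHOLD, approx_th=APPROX_THRESHOLD):
    """Map a similarity score to 'skip', 'approx' or 'full' (illustrative policy)."""
    if approx_th > skip_th:
        raise ValueError("approx_th must not exceed skip_th")
    if similarity >= skip_th:
        return "skip"
    if similarity >= approx_th:
        return "approx"
    return "full"


def quantize(v, bits):
    """Uniform symmetric quantization of values in [-1, 1] to `bits` bits.

    Values outside [-1, 1] are clipped. This is a generic example of reduced
    processing resolution, not the paper's approximate datapath.
    """
    if bits < 2:
        raise ValueError("bits must be at least 2")
    levels = 2 ** (bits - 1) - 1
    return [max(-levels, min(levels, round(x * levels))) / levels for x in v]
```

In a full pipeline, the "approx" branch would feed quantized operands into a lower-precision cell, while "skip" reuses the previous state exactly as in the cell-skipping case.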

4. Accelerator Design for Approximate LSTM Processing

5. Simulation and Implementation Results

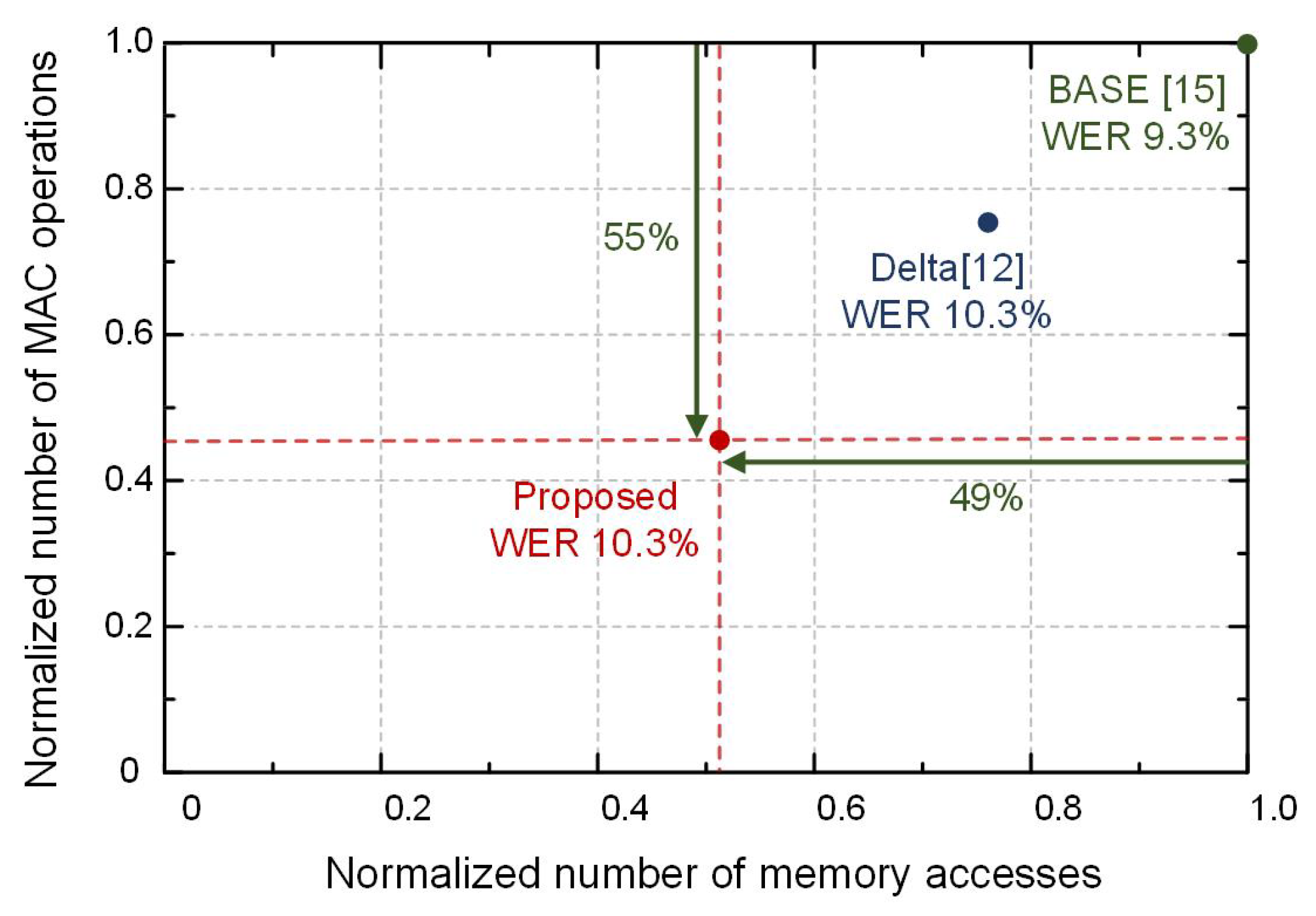

5.1. Algorithm-Level Performance

5.2. Prototype Implementation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Total Area (μm²) | Similarity Check (μm²) | Delta Gen (μm²) | Non-Linear Operations (μm²) | LSTM Engine (μm²) | SRAM (μm²) | Others (μm²) |
|---|---|---|---|---|---|---|
| 3,367,650 | 13,830 | 37,703 | 522,732 | 140,629 | 2,639,526 | 13,230 |
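A few lines of arithmetic can cross-check the area figures in the table. The sketch below confirms that the six component areas (in μm²) add up to the reported total and converts each one into a percentage share. The names `AREA_UM2`, `TOTAL_UM2` and `area_shares` are illustrative.

```python
# Component areas copied from the table above, in square micrometres.
AREA_UM2 = {
    "Similarity Check": 13_830,
    "Delta Gen": 37_703,
    "Non-Linear Operations": 522_732,
    "LSTM Engine": 140_629,
    "SRAM": 2_639_526,
    "Others": 13_230,
}
TOTAL_UM2 = 3_367_650


def area_shares(parts, total):
    """Return each component's share of the total area, in percent."""
    if sum(parts.values()) != total:
        raise ValueError("component areas do not sum to the reported total")
    return {name: 100.0 * area / total for name, area in parts.items()}


shares = area_shares(AREA_UM2, TOTAL_UM2)
for name, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:<22}{pct:6.2f} %")
```

With these numbers, SRAM accounts for roughly 78.4% of the total area and the non-linear operation unit for about 15.5%. The similarity-check and delta-generation units together occupy only about 1.5%.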

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jo, J.; Kung, J.; Lee, Y. Approximate LSTM Computing for Energy-Efficient Speech Recognition. Electronics 2020, 9, 2004. https://doi.org/10.3390/electronics9122004