Resource-Aware Device Allocation of Data-Parallel Applications on Heterogeneous Systems

Abstract

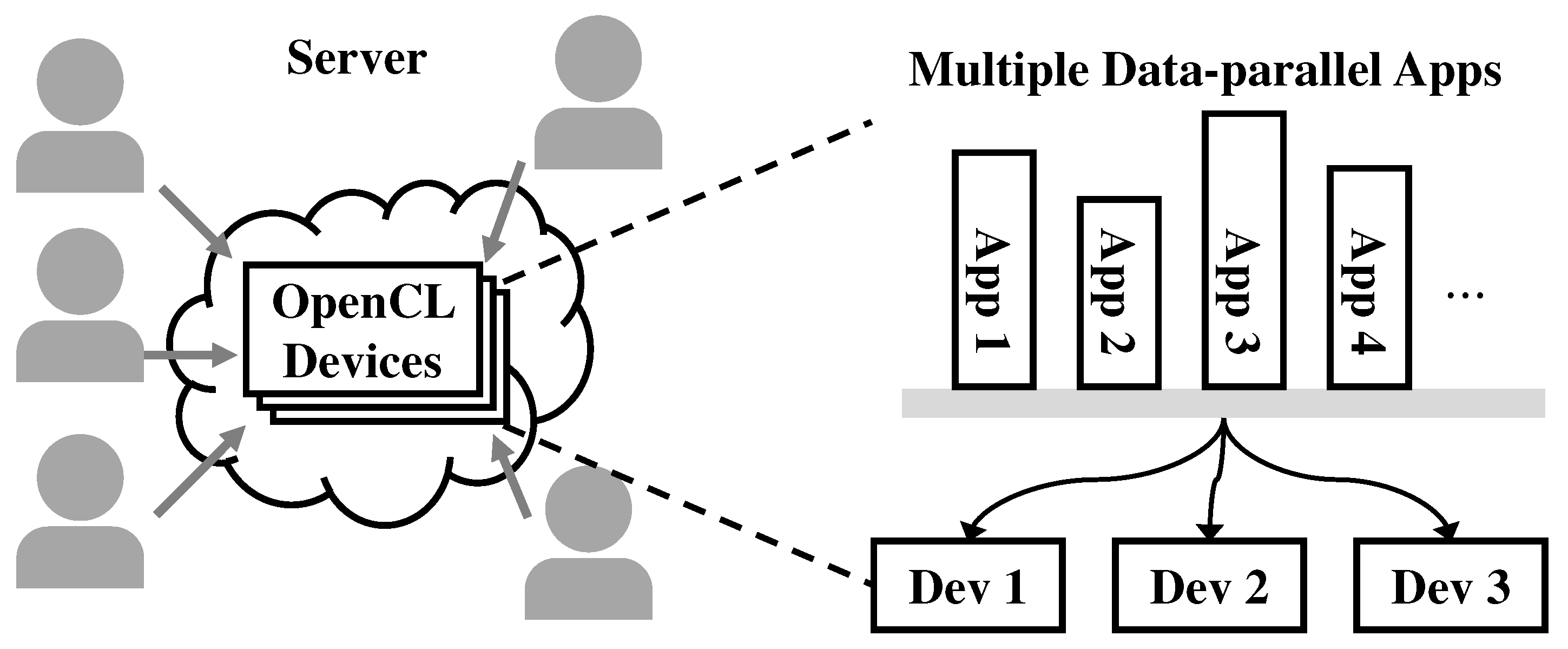

1. Introduction

2. Background and Motivation

2.1. OpenCL Programming Model

2.2. Target Device-Selection Challenge

2.3. Execution Time Prediction of Data-Parallel Applications

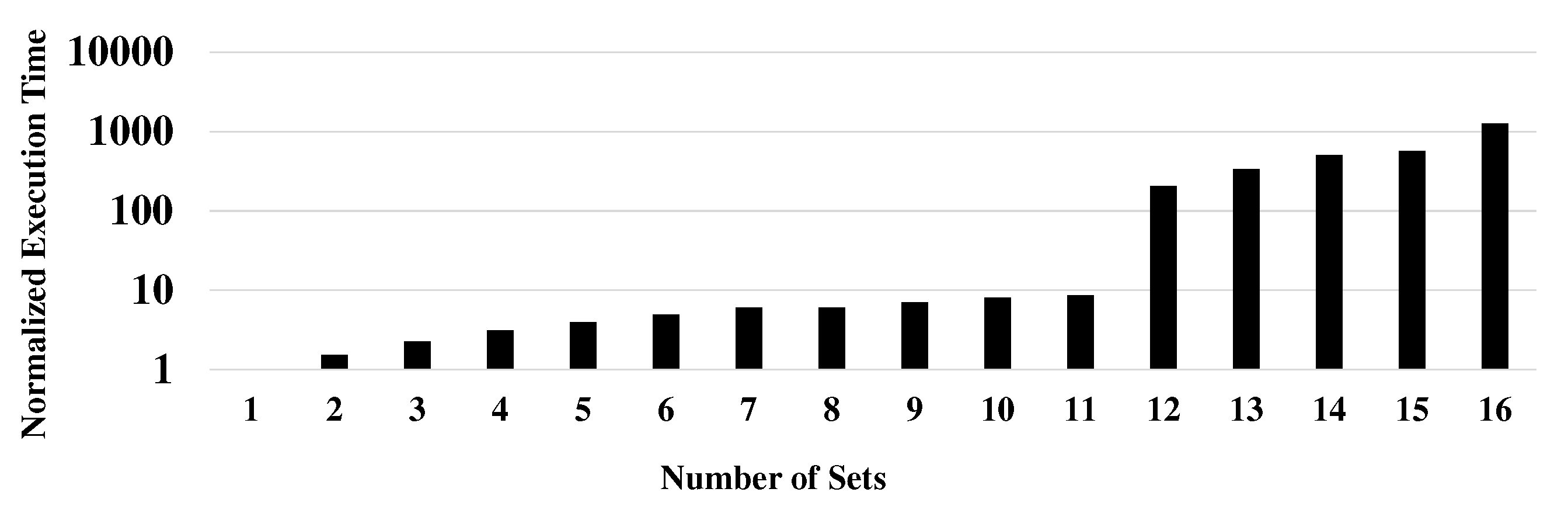

2.4. Memory Limitations

2.4.1. Limitations in Non-Shared-Memory Systems

2.4.2. Limitations in Shared-Memory Systems

3. ADAMS Framework

3.1. Overview

3.2. ADAMS Execution Model

3.2.1. Information Record

3.2.2. Allocation Manager

Algorithm 1. Process Allocation Algorithm.

Require: DeviceList dList, ProcessList ProcList, Process P

Ensure: process allocation

{Predict execution time and memory usage of P for all devices}
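The header of Algorithm 1 only states its inputs and its first step (predicting execution time and memory usage of P on every device). As a minimal sketch of how such an allocation step could look, the Python below filters out devices whose free global memory is too small and then picks the earliest estimated finish time. The `Device` and `Prediction` types, the `allocate` function, the rule "finish time = remaining time + predicted time", and the numbers in the usage lines are all assumptions made for illustration, not the authors' algorithm.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Device:
    name: str
    free_memory_mb: float    # global memory not yet claimed by allocated processes
    remaining_time_s: float  # estimated time until already-allocated work finishes


@dataclass
class Prediction:
    exec_time_s: float       # predicted execution time of P on one device
    memory_mb: float         # predicted global-memory usage of P on that device


def allocate(devices: List[Device],
             predictions: Dict[str, Prediction]) -> Optional[Device]:
    """Hypothetical policy: earliest finish time among devices with enough memory."""
    best, best_finish = None, float("inf")
    for dev in devices:
        pred = predictions[dev.name]
        if pred.memory_mb > dev.free_memory_mb:
            continue  # P would not fit in this device's free global memory
        # Assumption: work on one device is serialized, so P starts after
        # the work that is already allocated there.
        finish = dev.remaining_time_s + pred.exec_time_s
        if finish < best_finish:
            best, best_finish = dev, finish
    if best is not None:
        # Record the allocation so that later requests see the new device state.
        best.free_memory_mb -= predictions[best.name].memory_mb
        best.remaining_time_s = best_finish
    return best


# Illustrative values only: a busy large device and an idle small one.
devices = [Device("Dev1", 11178.0, 4.0), Device("Dev2", 2000.0, 0.0)]
predictions = {"Dev1": Prediction(1.0, 1500.0), "Dev2": Prediction(3.0, 1500.0)}
chosen = allocate(devices, predictions)
print(chosen.name if chosen else "no device fits")
```

With these made-up numbers the idle device is selected even though the kernel itself is predicted to run more slowly there, because the busy device would finish later overall. A second request with the same predictions would no longer fit in the smaller device's memory and would fall back to the larger one.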

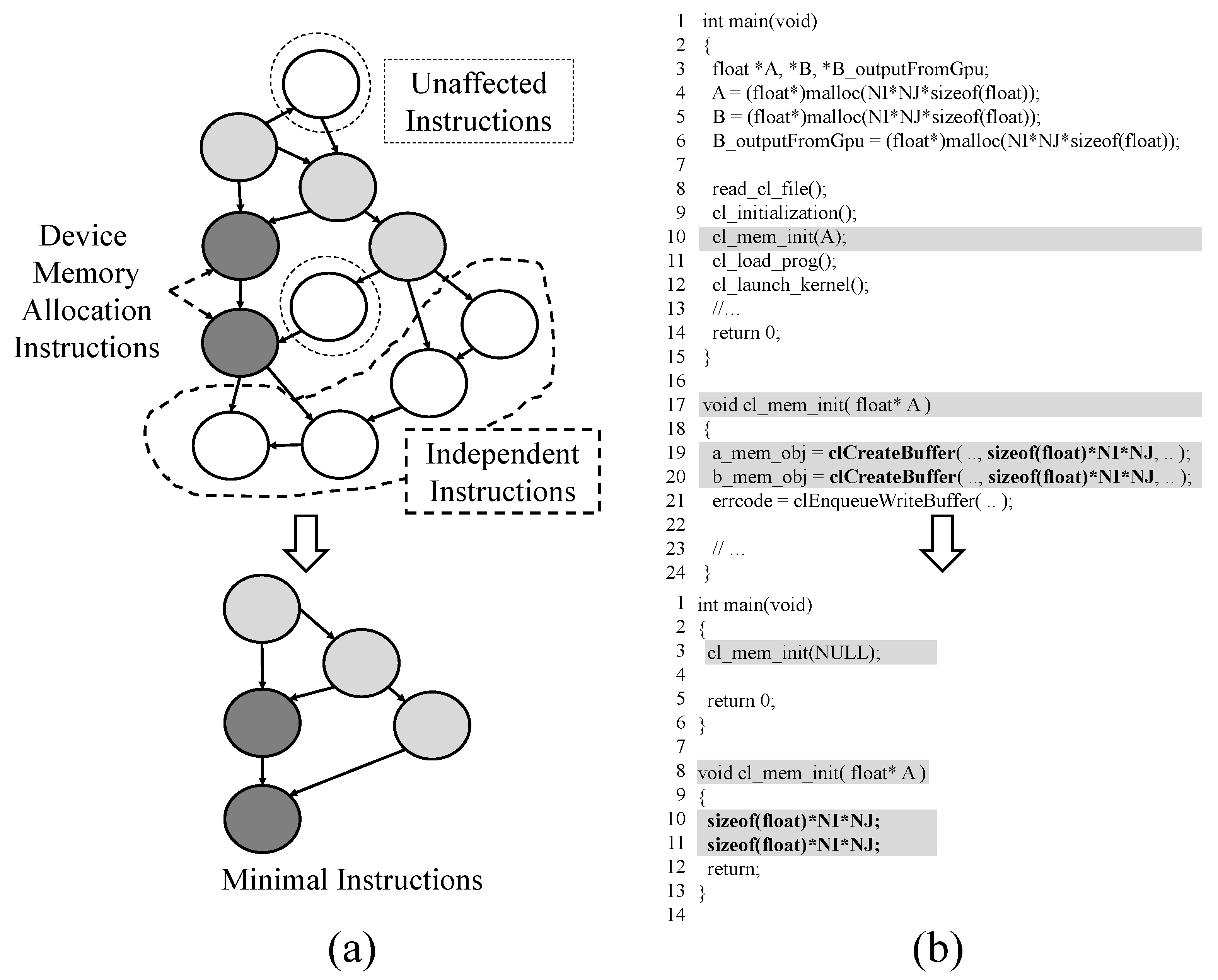

3.2.3. Global Memory Analyzer

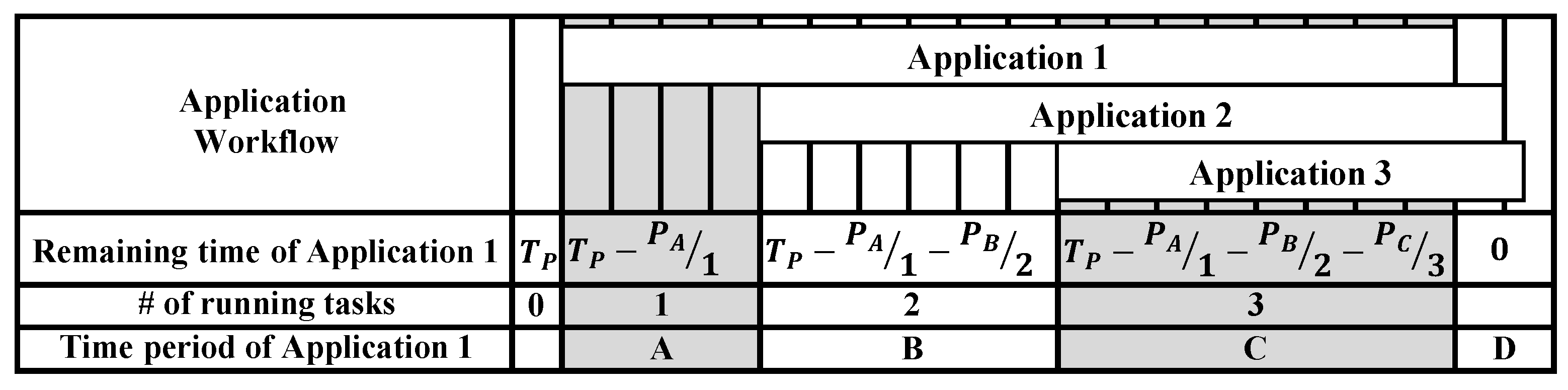

3.2.4. Concurrent Time Estimator

Algorithm 2. Remaining Time Update Algorithm.

Require: PIR of the process, DIR of the device to which the process is allocated

Ensure: updated remaining time in PIR

{If an event occurs, calculate the base time and update the value in DIR}

3.2.5. Time Prediction

4. Evaluation and Discussion

4.1. Allocation Policy on Non-Shared-Memory Systems

4.2. Memory Consideration on Non-Shared-Memory Systems

4.3. Memory Consideration on Shared-Memory Systems

4.4. Case Study: Multiple IRIS Recognition Applications

4.5. Overhead

5. Related Work

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

2. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

3. Chen, T.; Moreau, T.; Jiang, Z.; Zheng, L.; Yan, E.; Shen, H.; Cowan, M.; Wang, L.; Hu, Y.; Ceze, L.; et al. TVM: An automated end-to-end optimizing compiler for deep learning. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–10 October 2018; pp. 578–594.

4. Kim, J.; Kwon Lee, J.; Mu Lee, K. Deeply-recursive convolutional network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1637–1645.

5. Garcia, J.; Dumont, R.; Joly, J.; Morales, J.; Garzotti, L.; Bache, T.; Baranov, Y.; Casson, F.; Challis, C.; Kirov, K.; et al. First principles and integrated modelling achievements towards trustful fusion power predictions for JET and ITER. Nucl. Fusion 2019, 59, 086047.

6. Kirk, D. NVIDIA CUDA software and GPU parallel computing architecture. In Proceedings of the ISMM, Montreal, QC, Canada, 7–14 May 2007; Volume 7, pp. 103–104.

7. Stone, J.E.; Gohara, D.; Shi, G. OpenCL: A parallel programming standard for heterogeneous computing systems. Comput. Sci. Eng. 2010, 12, 66–73.

8. Kim, J.; Seo, S.; Lee, J.; Nah, J.; Jo, G.; Lee, J. SnuCL: An OpenCL framework for heterogeneous CPU/GPU clusters. In Proceedings of the 26th ACM International Conference on Supercomputing, San Servolo Island, Venice, Italy, 1–26 June 2012; ACM: New York, NY, USA, 2012; pp. 341–352.

9. You, Y.P.; Wu, H.J.; Tsai, Y.N.; Chao, Y.T. VirtCL: A framework for OpenCL device abstraction and management. In Proceedings of the 20th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, San Francisco, CA, USA, 7–11 February 2015; ACM: New York, NY, USA, 2015; pp. 161–172.

10. Lee, J.; Samadi, M.; Mahlke, S. Orchestrating multiple data-parallel kernels on multiple devices. In Proceedings of the 2015 International Conference on Parallel Architecture and Compilation (PACT), San Francisco, CA, USA, 10–21 October 2015; pp. 355–366.

11. Luk, C.K.; Hong, S.; Kim, H. Qilin: Exploiting parallelism on heterogeneous multiprocessors with adaptive mapping. In Proceedings of the 42nd Annual International Symposium on Microarchitecture, New York, NY, USA, 12–16 December 2009; pp. 45–55.

12. Intel Corporation. 8th and 9th Generation Intel Core Processor Families. Available online: https://www.intel.com/content/dam/www/public/us/en/documents/datasheets/8th-gen-core-family-datasheet-vol-1.pdf (accessed on 1 November 2020).

13. Intel. Intel Core i9-9900K Processor (16M Cache, up to 5.00 GHz). Available online: https://ark.intel.com/content/www/us/en/ark/products/186605//intel-core-i9-9900k-processor-16m-cache-up-to-5-00-ghz.html (accessed on 1 November 2020).

14. Intel Corporation. The Compute Architecture of Intel Processor Graphics Gen9. Available online: https://software.intel.com/sites/default/files/managed/c5/9a/The-Compute-Architecture-of-Intel-Processor-Graphics-Gen9-v1d0.pdf (accessed on 1 November 2020).

15. Branover, A.; Foley, D.; Steinman, M. AMD Fusion APU: Llano. IEEE Micro 2012, 32, 28–37.

16. NVIDIA. NVIDIA Tesla P100. Available online: http://images.nvidia.com/content/pdf/tesla/whitepaper/pascal-architecture-whitepaper.pdf (accessed on 1 November 2020).

17. NVIDIA TESLA. V100 GPU Architecture: The World’s Most Advanced Datacenter GPU. Technical Report. Available online: https://images.nvidia.com/content/volta-architecture/pdf/volta-architecture-whitepaper.pdf (accessed on 1 November 2020).

18. Park, J.J.K.; Park, Y.; Mahlke, S. Chimera: Collaborative Preemption for Multitasking on a Shared GPU. In Proceedings of the Twentieth International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS ’15); ACM: New York, NY, USA, 2015; pp. 593–606.

19. Adriaens, J.T.; Compton, K.; Kim, N.S.; Schulte, M.J. The case for GPGPU spatial multitasking. In Proceedings of the IEEE International Symposium on High-Performance Computer Architecture, New Orleans, LA, USA, 25–29 February 2012.

20. Grauer-Gray, S.; Xu, L.; Searles, R.; Ayalasomayajula, S.; Cavazos, J. Auto-tuning a high-level language targeted to GPU codes. In Proceedings of the 2012 Innovative Parallel Computing (InPar), San Jose, CA, USA, 13–14 May 2012; pp. 1–10.

21. Clang: A C Language Family Frontend for LLVM. 2007. Available online: http://clang.llvm.org (accessed on 1 November 2020).

22. Lattner, C.; Adve, V. LLVM: A Compilation Framework for Lifelong Program Analysis & Transformation. In Proceedings of the 2004 International Symposium on Code Generation and Optimization, San Jose, CA, USA, 20–24 March 2004; pp. 75–86.

23. Hoffmann, H.; Eastep, J.; Santambrogio, M.D.; Miller, J.E.; Agarwal, A. Application heartbeats: A generic interface for specifying program performance and goals in autonomous computing environments. In Proceedings of the 7th International Conference on Autonomic Computing, Washington, DC, USA, 7–11 June 2010; pp. 79–88.

24. Alaslani, M.G.; Elrefaei, L.A. Convolutional neural network-based feature extraction for iris recognition. Int. J. Comp. Sci. Inf. Tech. 2018, 10, 65–78.

25. CASIA Iris-V4.0 Database. Available online: http://biometrics.idealtest.org/ (accessed on 1 November 2020).

26. Pandit, P.; Govindarajan, R. Fluidic kernels: Cooperative execution of OpenCL programs on multiple heterogeneous devices. In Proceedings of the Annual IEEE/ACM International Symposium on Code Generation and Optimization, Orlando, FL, USA, 15–19 February 2014; p. 273.

27. Spafford, K.; Meredith, J.; Vetter, J. Maestro: Data orchestration and tuning for OpenCL devices. In European Conference on Parallel Processing; Springer: Berlin, Germany, 2010; pp. 275–286.

28. Carlos, S.; Toharia, P.; Bosque, J.L.; Robles, O.D. Static multi-device load balancing for OpenCL. In Proceedings of the 2012 IEEE 10th International Symposium on Parallel and Distributed Processing with Applications, Leganes, Spain, 10–13 July 2012; pp. 675–682.

29. Grewe, D.; O’Boyle, M.F. A static task partitioning approach for heterogeneous systems using OpenCL. In International Conference on Compiler Construction; Springer: Berlin, Germany, 2011; pp. 286–305.

30. Kaleem, R.; Barik, R.; Shpeisman, T.; Hu, C.; Lewis, B.T.; Pingali, K. Adaptive heterogeneous scheduling for integrated GPUs. In Proceedings of the 2014 23rd International Conference on Parallel Architecture and Compilation Techniques (PACT), Edmonton, AB, Canada, 23–27 August 2014; pp. 151–162.

31. Majeti, D.; Meel, K.S.; Barik, R.; Sarkar, V. Automatic data layout generation and kernel mapping for CPU+GPU architectures. In Proceedings of the 25th International Conference on Compiler Construction, Barcelona, Spain, 17–18 March 2016; pp. 240–250.

32. Taylor, B.; Marco, V.S.; Wang, Z. Adaptive optimization for OpenCL programs on embedded heterogeneous systems. ACM Sigplan Not. 2017, 52, 11–20.

33. Pfander, D.; Daiß, G.; Pflüger, D. Heterogeneous Distributed Big Data Clustering on Sparse Grids. Algorithms 2019, 12, 60.

34. Tupinambá, A.L.R.; Sztajnberg, A. Transparent and optimized distributed processing on GPUs. IEEE Trans. Parallel Distrib. Syst. 2016, 27, 3673–3686.

35. Chen, C.; Li, K.; Ouyang, A.; Li, K. FlinkCL: An OpenCL-based in-memory computing architecture on heterogeneous CPU-GPU clusters for big data. IEEE Trans. Comput. 2018, 67, 1765–1779.

36. Singh, A.K.; Basireddy, K.R.; Prakash, A.; Merrett, G.V.; Al-Hashimi, B.M. Collaborative adaptation for energy-efficient heterogeneous mobile SoCs. IEEE Trans. Comput. 2019, 69, 185–197.

| Index | ADAMS API | Description | Input Argument | Return Value |
|---|---|---|---|---|
| (a) | ADAMS_Alloc | Select the appropriate device to run the OpenCL task considering global memory usage. | platform_id, device_id, problem_size, app_id | error_code |
| (b) | ADAMS_Start | Start of device time estimation. | timer_object | NULL |
| (c) | ISR_Call | Time estimation using OS timer. Check time-estimation error. | NULL | NULL |
| (d) | ADAMS_End | End of device time estimation. Release block status. | timer_object | NULL |
| (e) | ADAMS_Dealloc | Release all corresponding information in DIR and PIR. | NULL | NULL |
| (f) | ADAMS_Suspend | Suspend device time estimation without any release of information. | timer_object | NULL |
| (g) | ADAMS_Restart | Restart device time estimation. | timer_object | NULL |
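The table lists the ADAMS calls but not the order in which an application issues them. Judging from the descriptions alone, a task is presumably bracketed by ADAMS_Alloc and ADAMS_Dealloc, with ADAMS_Start and ADAMS_End around the timed region. The Python below is a toy model of that assumed ordering: the function names and argument lists follow the table, but the bodies are stand-in stubs, the success code `0`, the `run_task` wrapper, and the `call_log` list are hypothetical, and the real API is not a Python library.

```python
call_log = []  # order of calls, kept only so the sketch can be inspected


def ADAMS_Alloc(platform_id, device_id, problem_size, app_id):
    """Stub for (a): the real call selects a device and returns an error_code."""
    call_log.append("ADAMS_Alloc")
    return 0  # assumed convention: 0 means success


def ADAMS_Start(timer_object):
    """Stub for (b): start of device time estimation."""
    call_log.append("ADAMS_Start")


def ADAMS_End(timer_object):
    """Stub for (d): end of device time estimation."""
    call_log.append("ADAMS_End")


def ADAMS_Dealloc():
    """Stub for (e): release the information held for this task."""
    call_log.append("ADAMS_Dealloc")


def run_task(kernel, problem_size, app_id, platform_id=0, device_id=0):
    """Hypothetical wrapper: bracket one task with the ADAMS calls."""
    error_code = ADAMS_Alloc(platform_id, device_id, problem_size, app_id)
    if error_code != 0:
        return error_code  # nothing was allocated, so nothing to release
    timer = object()  # placeholder for the timer_object argument
    ADAMS_Start(timer)
    try:
        kernel(problem_size)  # stands in for enqueueing the OpenCL kernel
    finally:
        # Always close the timed region and release the records,
        # even if the kernel raises.
        ADAMS_End(timer)
        ADAMS_Dealloc()
    return 0


result = run_task(lambda n: sum(range(n)), problem_size=1600, app_id=1)
print(result, call_log)
```

ADAMS_Suspend and ADAMS_Restart would, under the same reading, be issued between ADAMS_Start and ADAMS_End when the timed region is paused. ISR_Call is described as driven by the OS timer, so it is left out of the application-side sequence here.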

Non-Shared-Memory System

| Index | Device | Number of Compute Units | Global Memory Amount | Global Memory Bandwidth | PCIe (Lane) |
|---|---|---|---|---|---|
| Dev1 | GTX 1080 Ti | 28 | 11,178 MB | 11 Gbps | PCIe 3.0 (x4) |
| Dev2 | GTX 1050 | 5 | 2000 MB | 7 Gbps | PCIe 3.0 (x8) |
| Dev3 | GTX 1050 | 5 | 2000 MB | 7 Gbps | PCIe 3.0 (x4) |

Shared-Memory System

| Index | Device | Number of Compute Units | Global Memory Amount | Global Memory Bandwidth |
|---|---|---|---|---|
| Dev1 | Intel i7-7700K | 4 | 4096 MB | 35.76 Gbps |
| Dev2 | Intel Gen 9 HD Graphics 630 [14] | 24 | 4096 MB | 35.76 Gbps |

| System Type | Device List | Process List | PMT | Total |
|---|---|---|---|---|
| Non-shared | 0.84 KB | 175.2 KB | 48.8 KB | 224.84 KB |
| Shared | 0.56 KB | 89.6 KB | 36 KB | 126.16 KB |

| Application | Non-Shared-Memory System (Small Set) | Non-Shared-Memory System (Large Set) | Shared-Memory System |
|---|---|---|---|
| 2dconv | 6400 | 10,400 | 22,000 |
| 2mm | 1600 | 2400 | 1061 |
| 3mm | 1600 | 2400 | 1061 |
| atax | 11,200 | 16,000 | 30,000 |
| bicg | 11,200 | 16,000 | 30,000 |
| gemm | 2400 | 3200 | 1061 |
| gesummv | 5600 | 8000 | 20,000 |
| gramschmidt | 640 | 1280 | 1441 |
| mvt | 7200 | 11,200 | 20,000 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, D.; Kang, S.; Lim, J.; Jung, S.; Kim, W.; Park, Y. Resource-Aware Device Allocation of Data-Parallel Applications on Heterogeneous Systems. Electronics 2020, 9, 1825. https://doi.org/10.3390/electronics9111825
