1. Introduction

Speaker recognition aims to analyze the speaker representation from input audio. A subfield of speaker recognition is speaker verification, which determines whether the utterance of the claimed speaker should be accepted or rejected by comparing it to the utterance of the registered speaker. Speaker verification is divided into text dependent and text independent. Text-dependent speaker verification aims to recognize only the specified utterances when verifying the speaker. Examples include Google’s “OK Google” and Samsung’s “Hi Bixby.” Meanwhile, text-independent speaker verification is not limited to the type of utterances to be recognized. Therefore, the problems to be solved using text-independent speaker verification are more difficult. If the performance is guaranteed, text-independent speaker verification can be utilized in various biometric systems and e-learning platforms, such as biometric authentication for chatbots, voice ID, and virtual assistants.

Owing to advances in computational power and deep learning techniques, the performance of text-independent speaker verification has been improved. Text-independent speaker verification using deep neural networks (DNN) is divided into two streams. The first one is an end-to-end system [

1]. The input of the DNN is a speech signal, and the output is the verification result. This is a single-pass operation in which all processes can be operated at once. However, the input speech of a variable length is difficult to handle. To address this problem, several studies have applied a pooling layer or temporal average layer to an end-to-end system [

2,

3]. The second is a speaker embedding-based system [

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14], which generates an input of variable length into a vector of fixed length using a DNN. The generated vector is used as an embedding to represent the speaker. The speaker embedding-based system can handle input speech of variable length and can generate speaker representations from various environments.

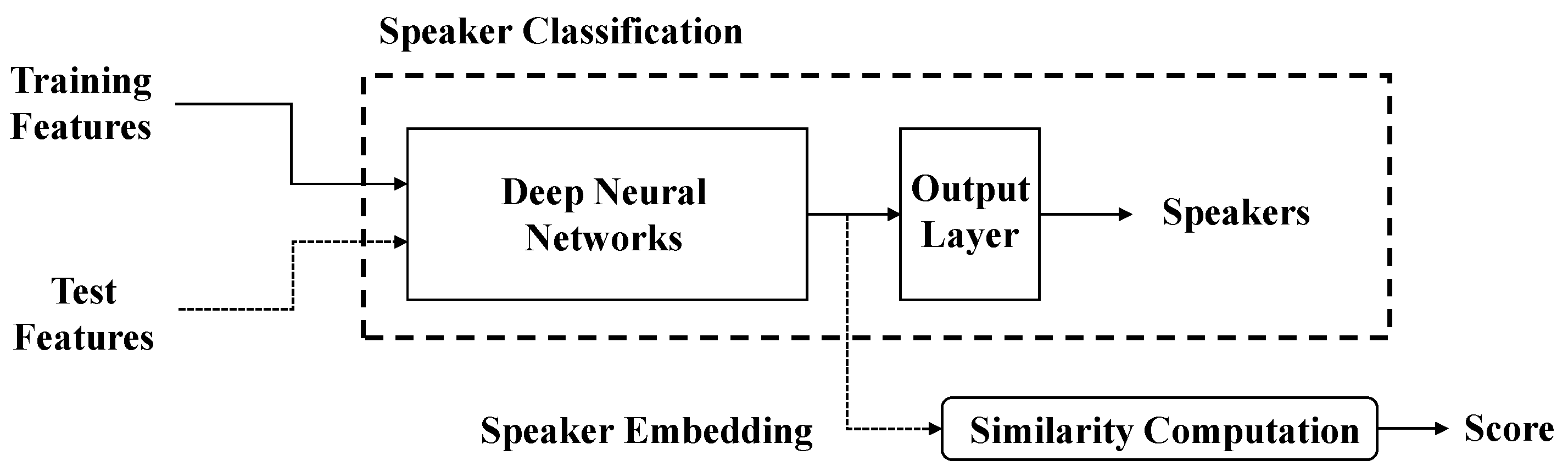

As shown in

Figure 1, a DNN has been used as a speaker embedding extractor in a speaker embedding-based system. In general, a speaker embedding-based system executes the following processes [

4,

5,

6,

7]:

The speaker classification model is trained.

The speaker embedding is extracted by using the output value of the inner layer of the speaker classification model.

The similarity between the embedding of the registered speaker and the claimed speaker is computed.

The acceptance or rejection is determined by a previously decided threshold value.

In addition, back-end methods, for example, probabilistic linear discriminant analysis, can be used [

8,

9,

10].

The most important part in the above system is the speaker embedding generation [

13]. Speaker embedding is a high-dimensional feature vector that contains speaker information. An ideal speaker-embedding maximizes inter-class variations and minimizes intra-class variations [

10,

14,

15]. The component that directly affects the speaker embedding generation is the encoding layer. The encoding layer takes a frame-level feature and converts it into a compact utterance-level feature. It also converts variable-length features to fixed-length features.

Most encoding layers are based on various pooling methods, for example, temporal average pooling (TAP) [

10,

14,

16], global average pooling (GAP) [

13,

15], and statistical pooling (SP) [

6,

14,

17,

18]. In particular, self-attentive pooling (SAP) has improved performance by focusing on the frames for a more discriminative utterance-level feature [

10,

19,

20], and pooling layers provide compressed speaker information by rescaling the input size. These are mainly used with convolutional neural networks (CNN) [

10,

13,

14,

15,

16,

17,

20]. The speaker embedding is extracted using the output value of the last pooling layer in a CNN-based speaker model.

To improve the representational power of the speaker embedding, residual learning derived from ResNet [

21] and squeeze-and-excitation (SE) blocks [

22] were adapted for the speaker models [

10,

13,

14,

15,

16,

20,

23]. Residual learning maintains input information through mappings between layers called “shortcut connections.” A large-scale CNN using shortcut connections can avoid gradient degradation. The SE block consists of a squeeze operation (which condenses all of the information on the features) and an excitation operation (which scales the importance of each feature). Therefore, a channel-wise feature response can be adjusted without significantly increasing the model complexity in the training.

The main limitation of the previous encoding layers is that the model uses only the output feature of the last pooling layer as input. In other words, the model uses only one frame-level feature when performing speaker embedding. Therefore, similar to [

14,

24], a previous study presented a shortcut connection-based multi-layer aggregation to improve the speaker representations when calculating the weight at the encoding layer [

13]. Specifically, the frame-level features are extracted from between each residual layer in ResNet. Then, these frame-level features are fed into the input of the encoding layer using shortcut connections. Consequently, a high-dimensional speaker embedding is generated.

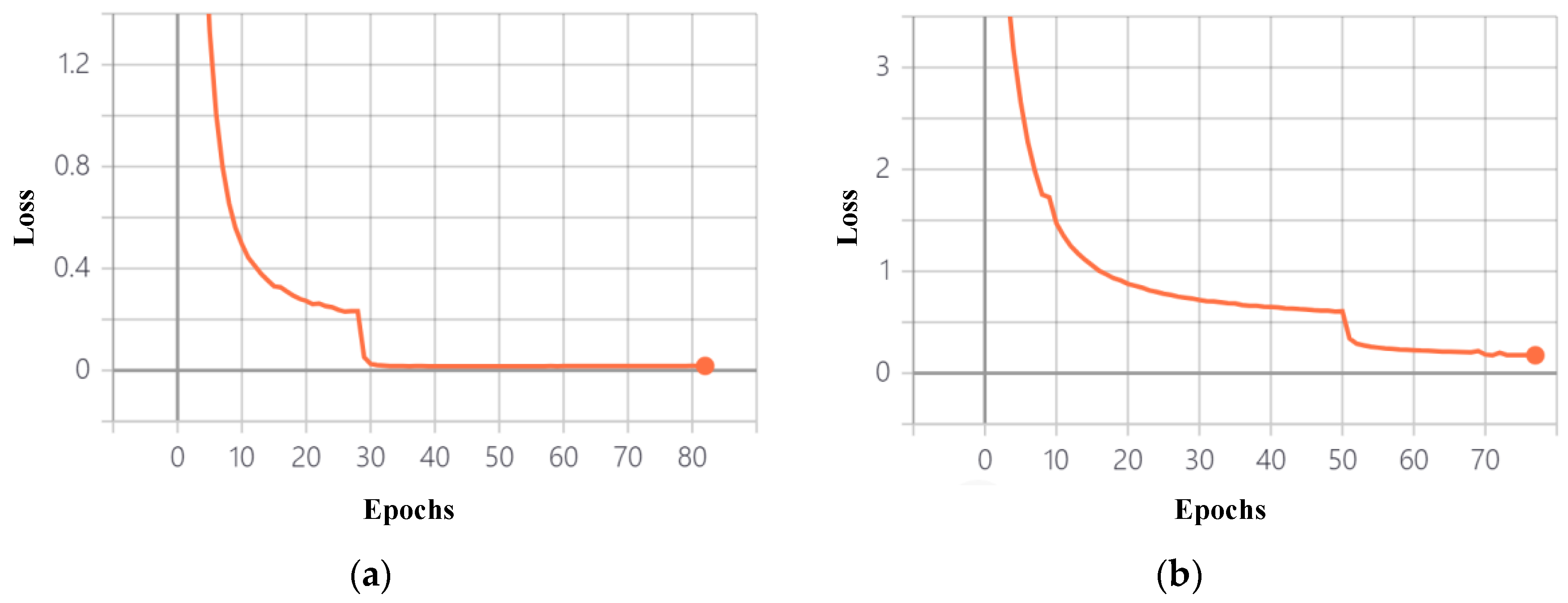

However, the previous study [

13] has limitations. First, the model parameter size is relatively large, and the model generates high-dimensional speaker embeddings (1024 dimensions, about 15 million model parameters). This leads to inefficient training and thus requires a sufficiently large amount of data for training. Second, the multi-layer aggregation approach increases not only the speaker’s information but also the intrinsic and extrinsic variation factors, for example, emotion, noise, and reverberation. Some of these unspecified factors increase variability while generating speaker embedding.

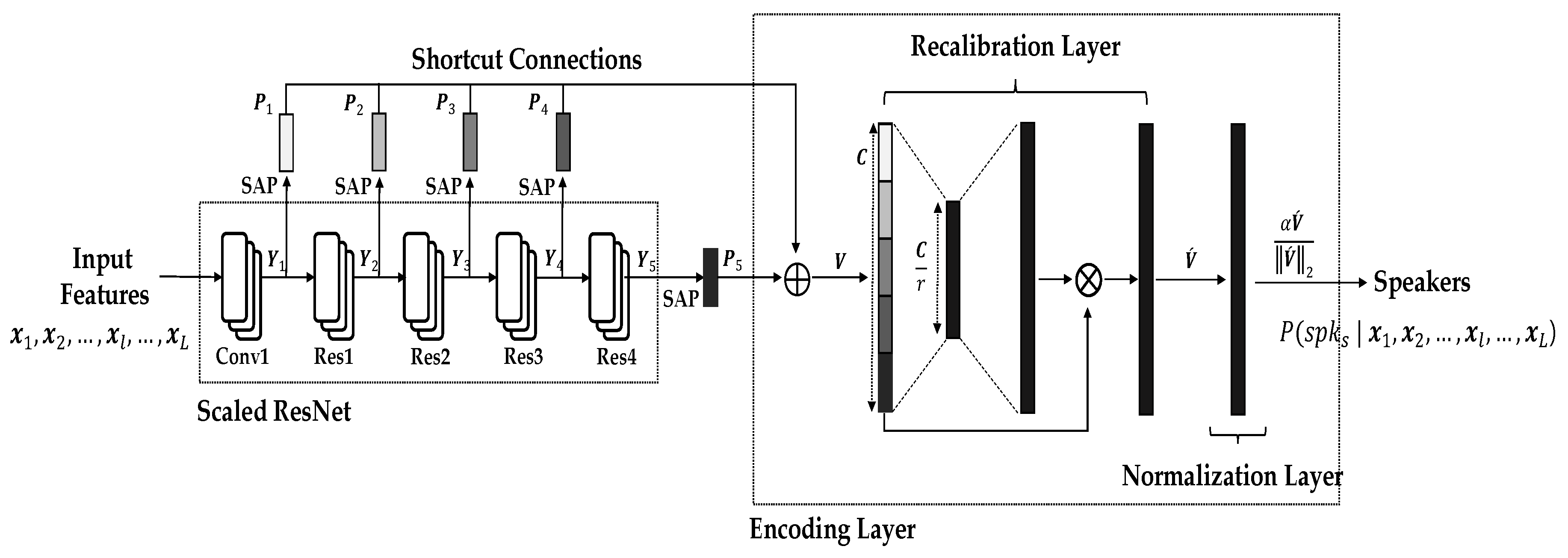

Hence, we propose a self-attentive multi-layer aggregation with feature recalibration and deep length normalization for a text-independent speaker verification system, as shown in

Figure 2. We present an improved version of the previous study, as described in the following steps:

The remainder of this paper is organized as follows.

Section 2 describes a baseline system using shortcut connections-based multi-layer aggregation.

Section 3 introduces the proposed self-attentive multi-layer aggregation method with feature recalibration and normalization.

Section 4 discusses our experiments, and conclusions are drawn in

Section 5.

3. Self-Attentive Multi-Layer Aggregation with Feature Recalibration and Normalization

As discussed in

Section 1, the previous study has two problems. The model parameter problem is addressed by building a scaled ResNet-34. However, the problem of multi-layer aggregation remains. Multi-layer aggregation uses output features of multiple layers to develop the speaker embedding system. It is assumed that not only speaker information but also other unspecified factors exist in the output feature of the layer. The unspecified factor lowers the speaker verification performance. Therefore, we proposed three methods: self-attentive multi-layer aggregation, feature recalibration, and deep length normalization.

3.1. Model Architecture

As presented in

Figure 2 and

Table 4, the proposed network mainly consists of a scaled ResNet and an encoding layer. Frame-level features are trained in the scaled ResNet, and utterance-level features are trained in the encoding layer.

In the scaled ResNet, given an input feature

of length

(

), output features

(

) from each residual layer of the scaled ResNet are generated using SAP. Here, the length

is determined by the number of channels in the

residual layer. Then, the generated output features are concatenated into one feature

as in Equation (1) (where

indicates concatenation).

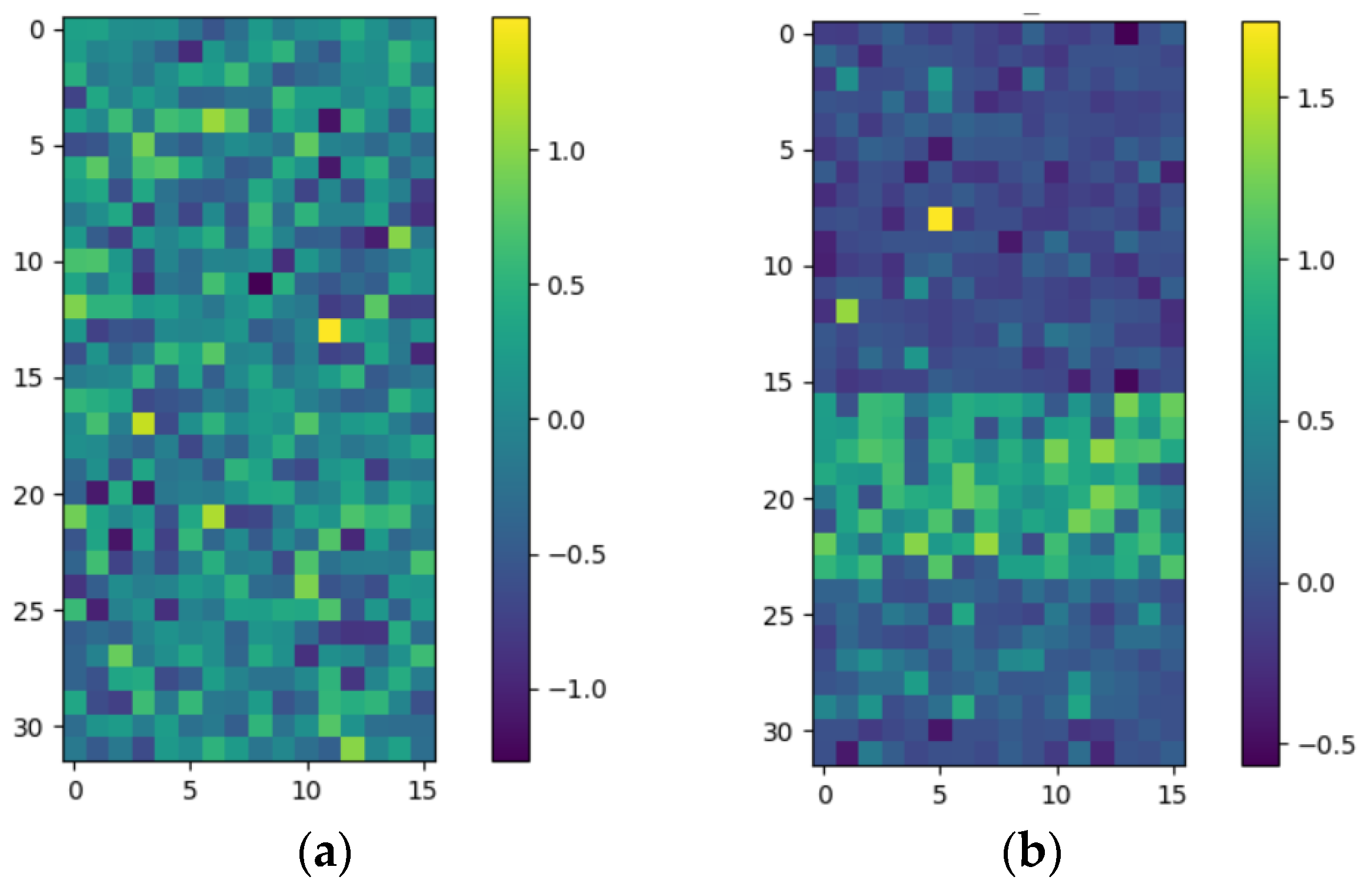

The concatenated feature (length , ) is a set of frame-level features and is used as the input of the encoding layer.

The encoding layer comprises a feature recalibration layer and a deep length normalization layer. In the feature recalibration layer, the concatenated feature is recalibrated by fully-connected layers and nonlinear activations. Consequently, a recalibrated feature () is generated. Then, the recalibrated feature is normalized according to the length of input in the deep-length normalization layer. The normalized feature is used as a speaker embedding and is fed into the output layer. Further, a log probability for speaker classes , ), is generated in the output layer.

3.2. Self-Attentive Multi-Layer Aggregation

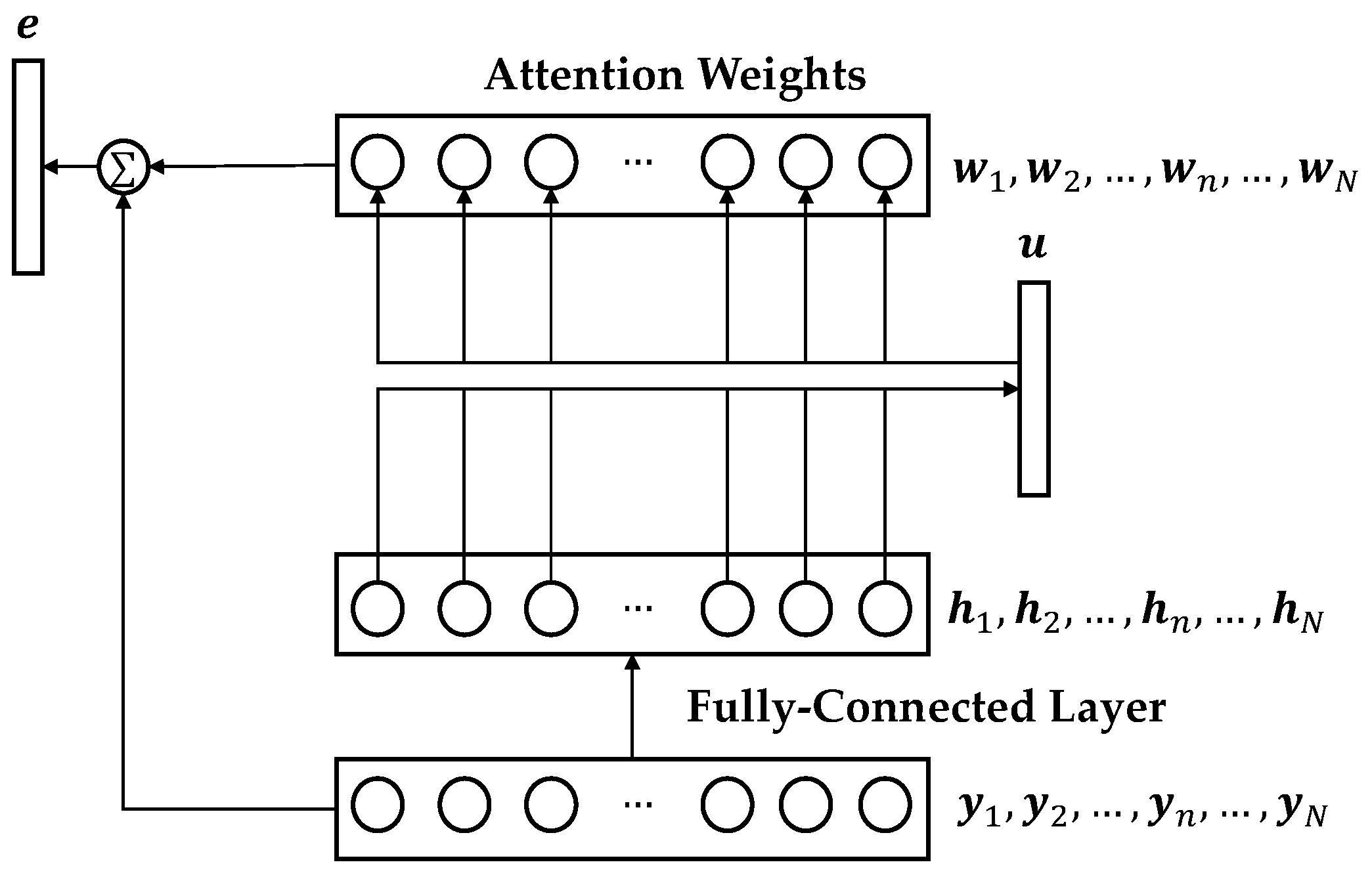

As shown in

Figure 2 and

Figure 3, SAP is applied to each residual layer using shortcut connections. For every input feature, given an output feature of the first convolution layer or the

residual layers after conducting an average pooling,

of length

(

) is obtained. The number of dimensions

is determined by the number of channels.

Then, the average feature is fed into a fully-connected hidden layer to obtain

using a hyperbolic tangent activation function. Given

and a learnable context vector

, the attention weight

is measured by training the similarity between

and

with a softmax normalization as in Equation (2).

Then, the embedding

is generated using the weighted sum of the normalized attention weights

and

as in Equation (3).

The embedding vector can be rewritten as () in the order of the dimensions. Consequently, the SAP output feature is generated. This process helps generate a more discriminative feature while focusing on the frame-level features of each layer. Moreover, dropout regularization and batch normalization are used in . Then, the generated features are concatenated into one feature, , as in Equation (1).

3.3. Feature Recalibration

After the self-attentive multi-layer aggregation, the concatenated feature

is fed into the feature recalibration layer. The feature recalibration layer aims to train the correlations between each channel of the concatenated feature; this is inspired by [

22].

Given an input feature

(

, where

is the sum of all channels), the feature channels are recalibrated using two fully-connected layers and nonlinear activations, as in Equation (4).

Here, refers to the leaky rectified linear unit activation; refers to the sigmoid activation; is the front fully-connected layer, , and is the back fully-connected layer, . According to the reduction ratio , a dimensional transformation is performed between the two fully-connected layers, such as a bottleneck structure, while channel-wise multiplication is performed. The rescaled channels are then multiplied by the input feature . Consequently, an output feature () is generated. This generated feature is the result of recalibration according to the importance of the channels.

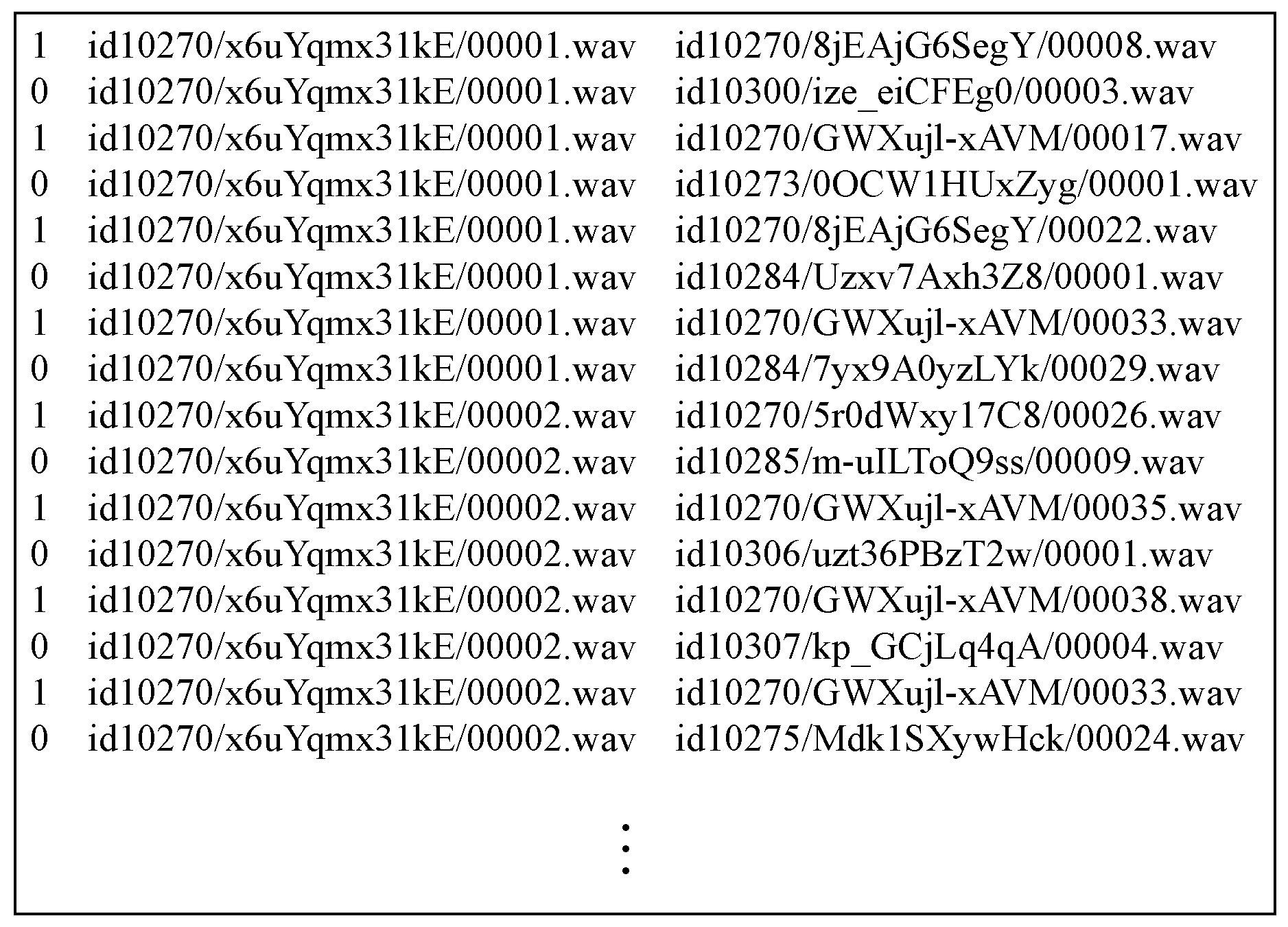

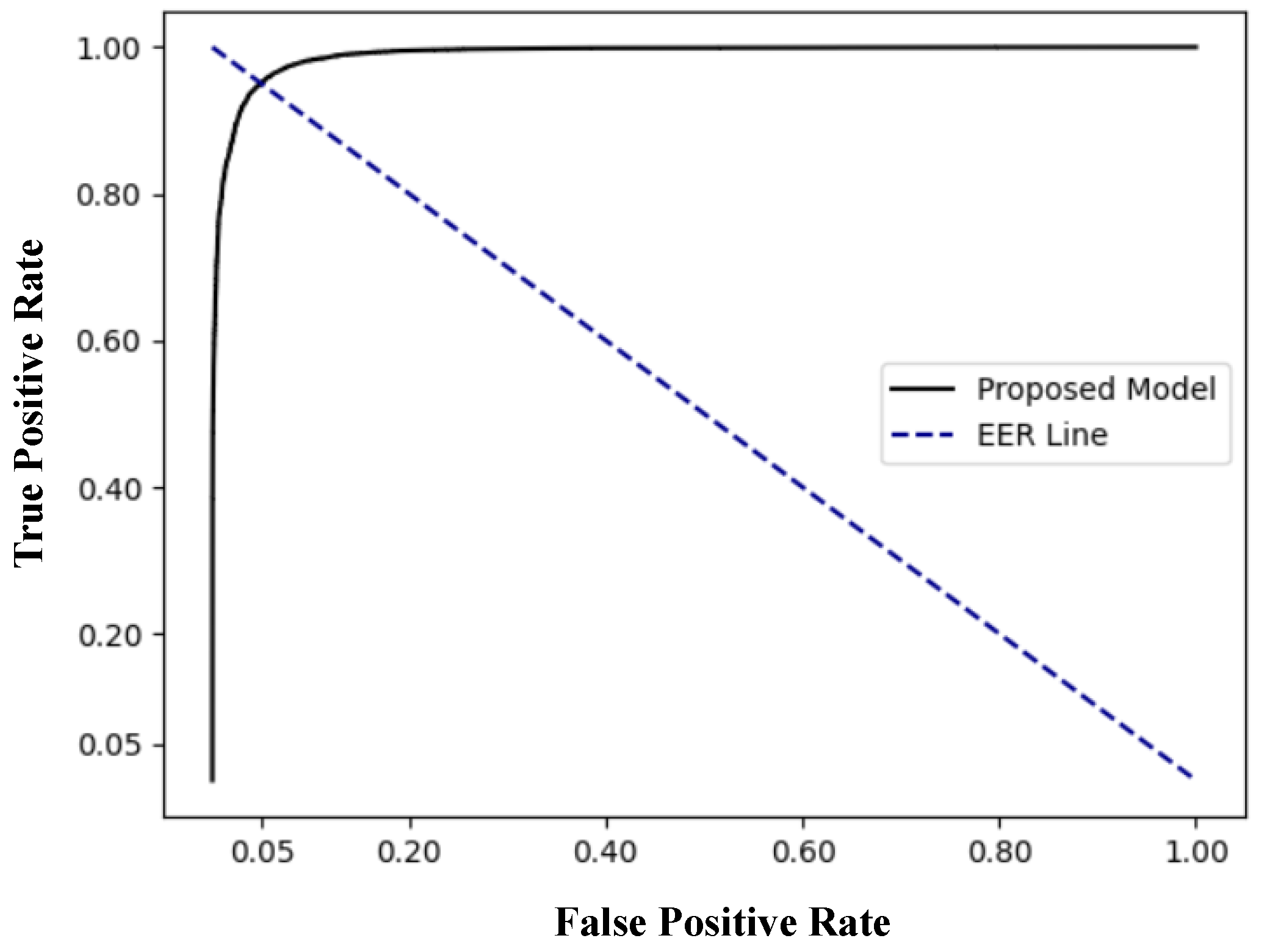

3.4. Deep Length Normalization

As in [

11], deep length normalization was applied to the proposed model. The L2 constraint is applied to the length axis of the recalibrated feature

with a scale constant,

, as in Equation (5).

Then, the normalized

is fed into the output layer for speaker classification. This feature is used as a speaker embedding, as shown in

Figure 4.