Karpinski Score under Digital Investigation: A Fully Automated Segmentation Algorithm to Identify Vascular and Stromal Injury of Donors’ Kidneys

Abstract

1. Introduction

2. Materials and Methods

2.1. Database Description

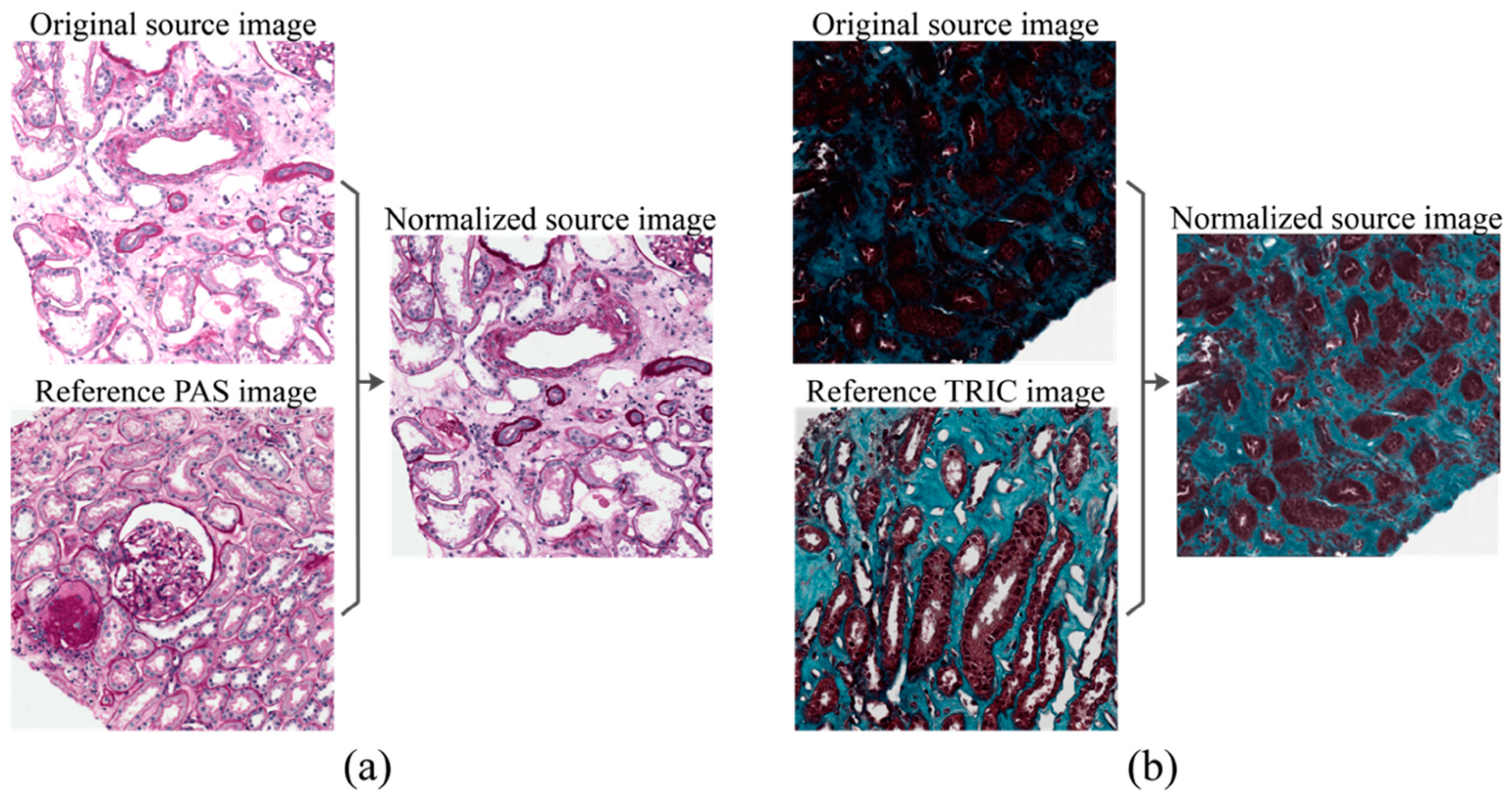

2.2. Stain Normalization

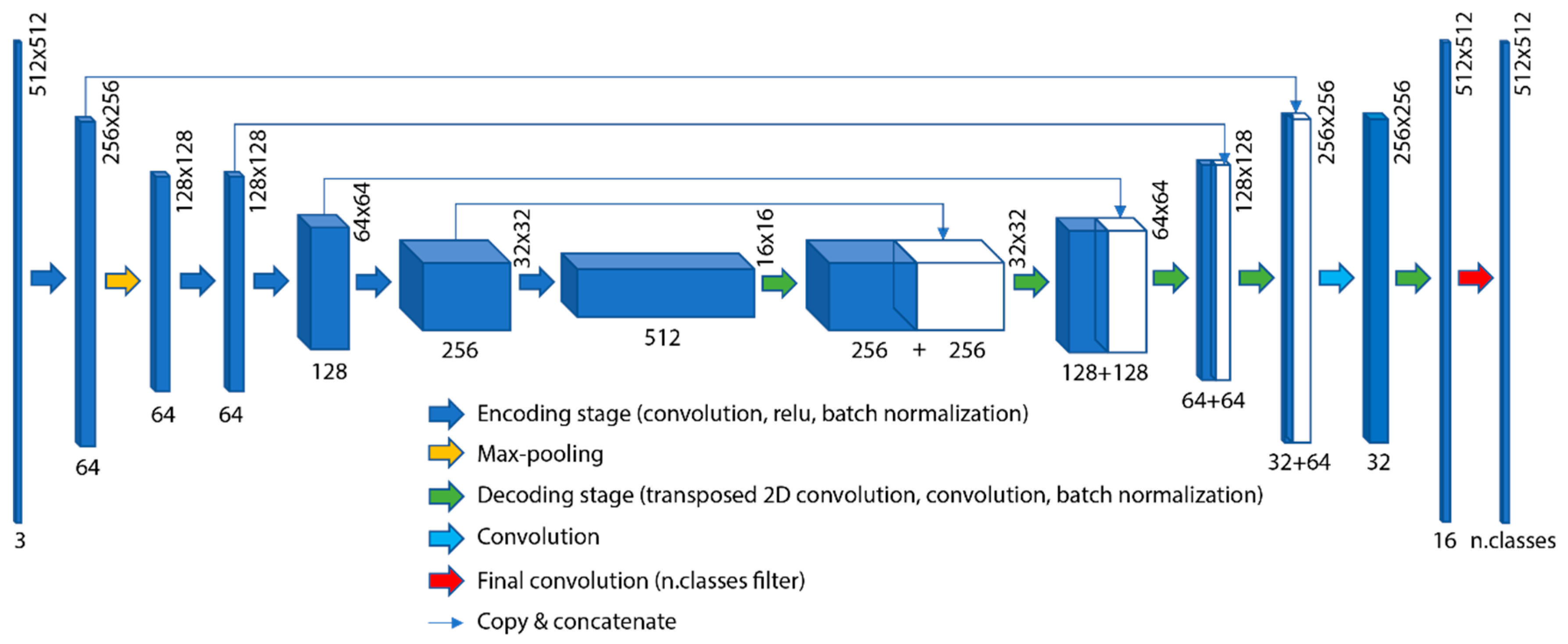

2.3. Deep Network Architecture

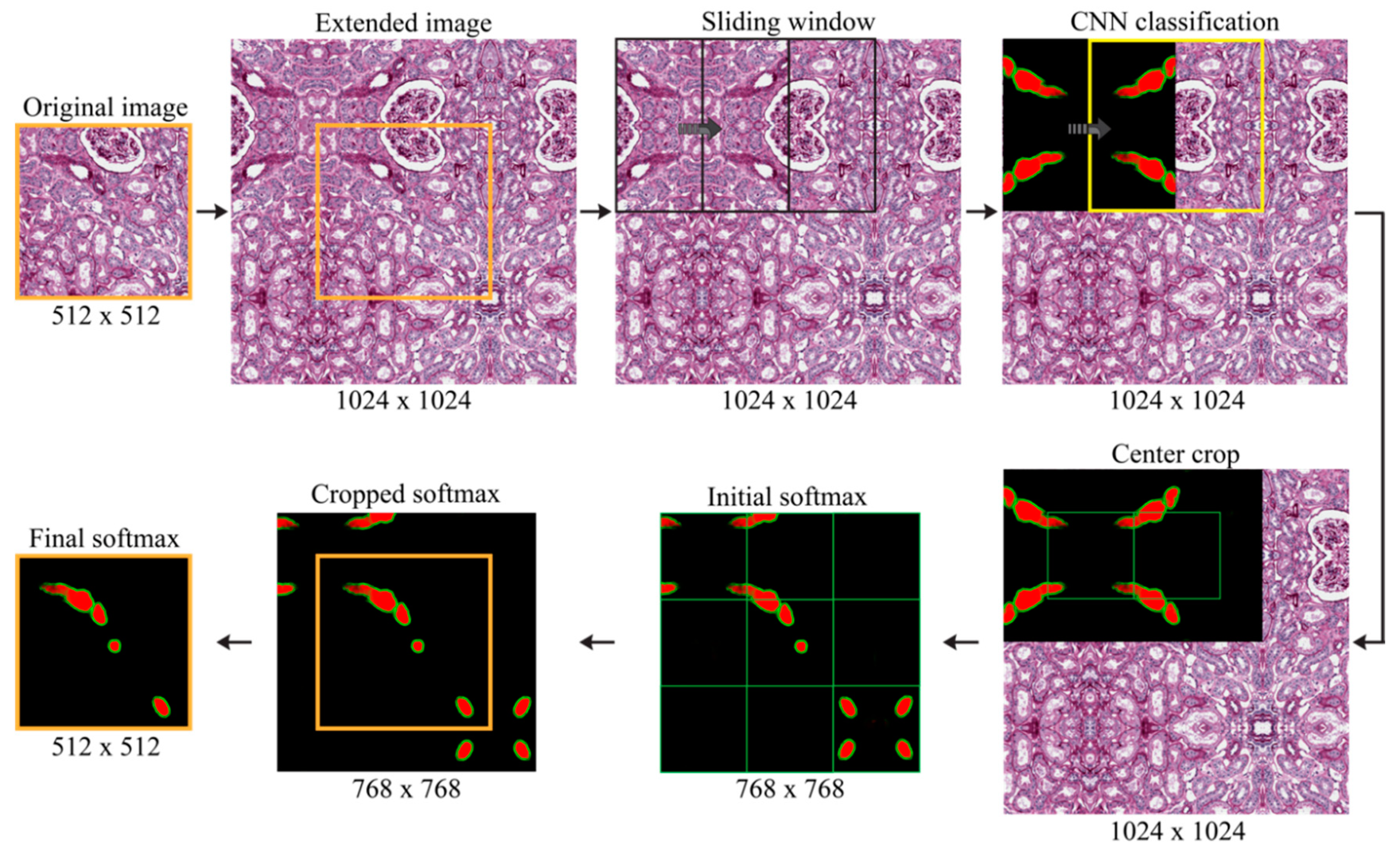

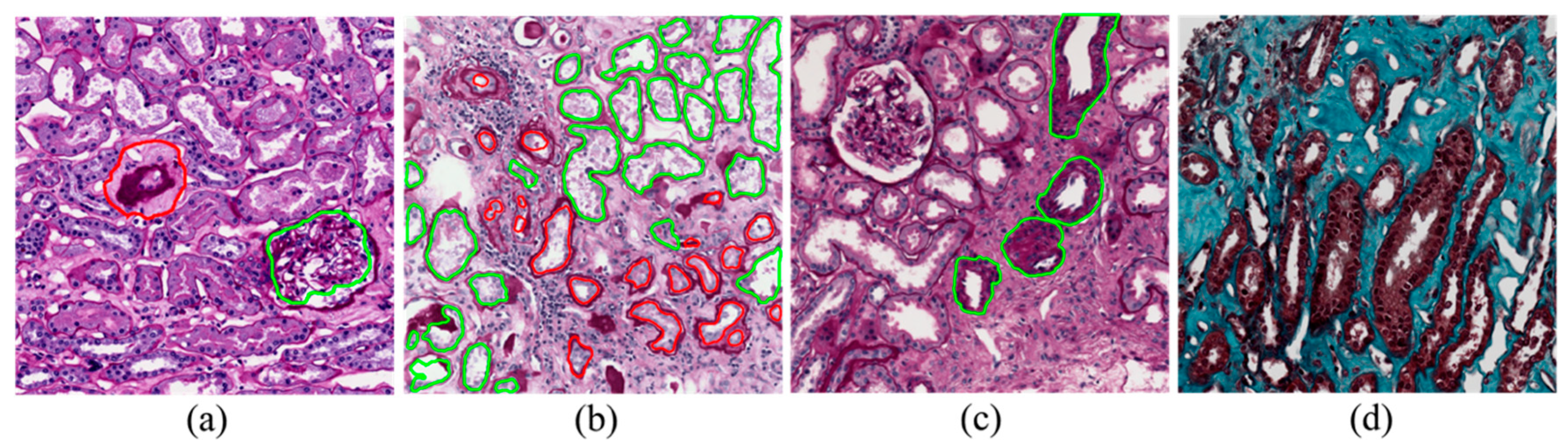

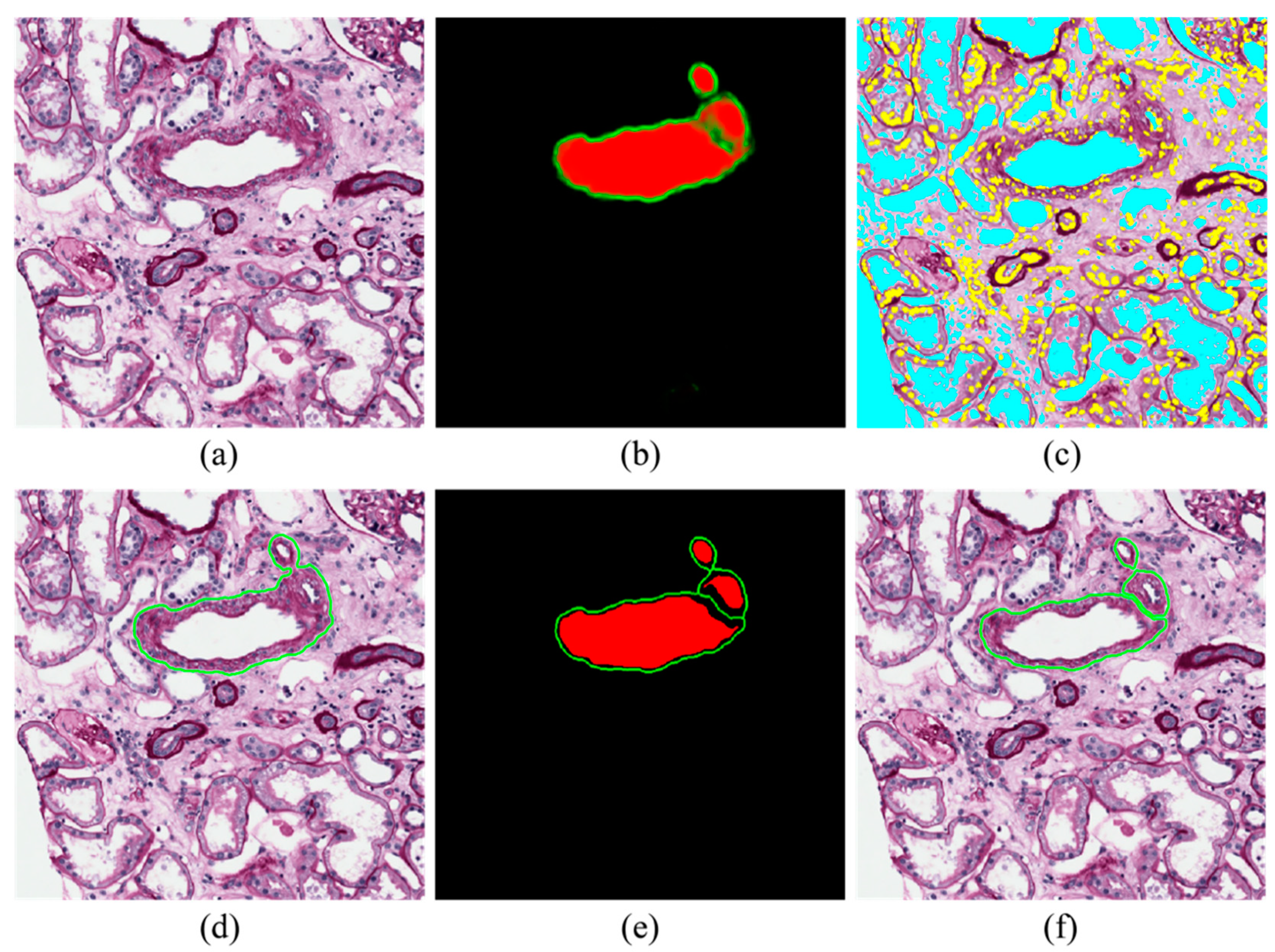

2.4. Blood Vessel Detection

- Inner region mask: thresholding at 0.35 combined with level-set segmentation on the probability map of inner regions (red layer);

- Boundary mask: thresholding at 0.35 combined with level-set segmentation on the probability map of boundary regions (green layer);

- New red layer of the softmax: subtraction of the boundary mask from the inner region mask;

- New green layer of the softmax: skeleton of the boundary mask.
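The four post-processing operations listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the probability maps are taken to be nested lists of floats in [0, 1], pixels with p ≥ 0.35 are treated as foreground, the level-set step is omitted entirely, and the skeleton is computed with Zhang-Suen thinning, which the article does not name. All function names (`threshold`, `subtract`, `zhang_suen`, `rebuild_softmax_layers`) are hypothetical.

```python
# Sketch of the vessel post-processing step. Assumptions (not from the article):
# maps are lists of rows of floats in [0, 1], p >= t is foreground, the
# level-set refinement is omitted, and skeletonization uses Zhang-Suen thinning.

def threshold(prob, t=0.35):
    """Binarize a probability map: 1 where p >= t, else 0."""
    return [[1 if p >= t else 0 for p in row] for row in prob]


def subtract(a, b):
    """Pixel-wise 'a AND NOT b' of two binary masks."""
    return [[1 if x and not y else 0 for x, y in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def zhang_suen(mask):
    """Thin a binary mask to a one-pixel-wide skeleton (Zhang-Suen)."""
    img = [row[:] for row in mask]  # work on a copy, leave the input intact
    h = len(img)
    w = len(img[0]) if h else 0

    def px(r, c):
        # Pixels outside the image are treated as background.
        return img[r][c] if 0 <= r < h and 0 <= c < w else 0

    changed = True
    while changed:
        changed = False
        for sub_iter in (0, 1):
            to_clear = []
            for r in range(h):
                for c in range(w):
                    if not img[r][c]:
                        continue
                    # Neighbours P2..P9, clockwise starting from north.
                    n = [px(r - 1, c), px(r - 1, c + 1), px(r, c + 1),
                         px(r + 1, c + 1), px(r + 1, c), px(r + 1, c - 1),
                         px(r, c - 1), px(r - 1, c - 1)]
                    b = sum(n)  # number of foreground neighbours
                    a = sum(1 for i in range(8)
                            if n[i] == 0 and n[(i + 1) % 8] == 1)  # 0->1 transitions
                    p2, p4, p6, p8 = n[0], n[2], n[4], n[6]
                    if sub_iter == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_clear.append((r, c))
            # Delete all marked pixels together, after the full scan.
            for r, c in to_clear:
                img[r][c] = 0
            changed = changed or bool(to_clear)
    return img


def rebuild_softmax_layers(inner_prob, boundary_prob, t=0.35):
    """Return the new (red, green) softmax layers described in the list above."""
    inner_mask = threshold(inner_prob, t)
    boundary_mask = threshold(boundary_prob, t)
    new_red = subtract(inner_mask, boundary_mask)   # inner minus boundary
    new_green = zhang_suen(boundary_mask)           # skeleton of boundary
    return new_red, new_green
```

For example, `rebuild_softmax_layers([[0.9, 0.9, 0.1]], [[0.0, 0.5, 0.0]])` returns `([[1, 0, 0]], [[0, 1, 0]])`: the boundary pixel is removed from the inner mask and survives unchanged as a one-pixel skeleton.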

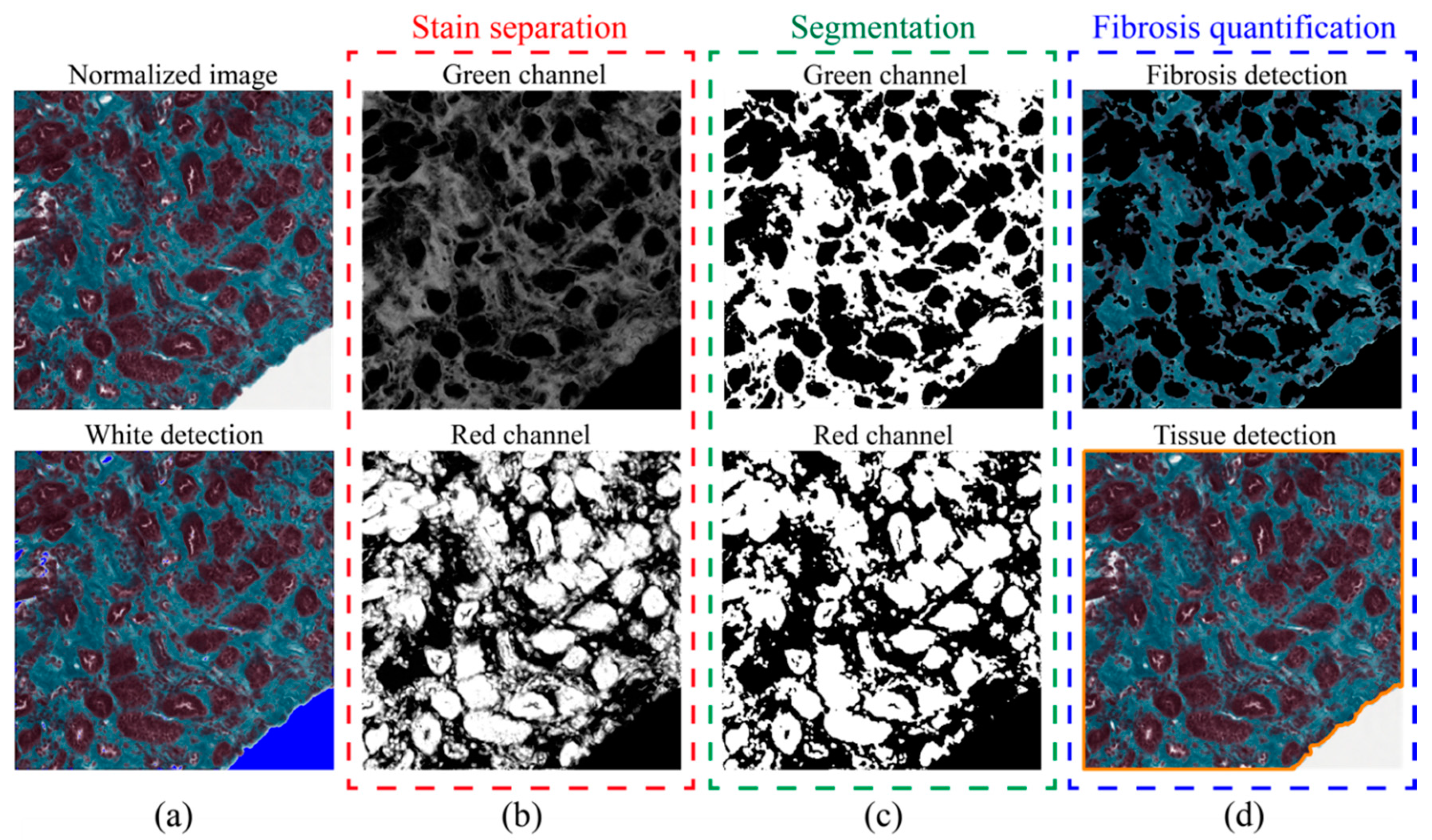

2.5. Fibrosis Segmentation

2.6. Performance Metrics

3. Results

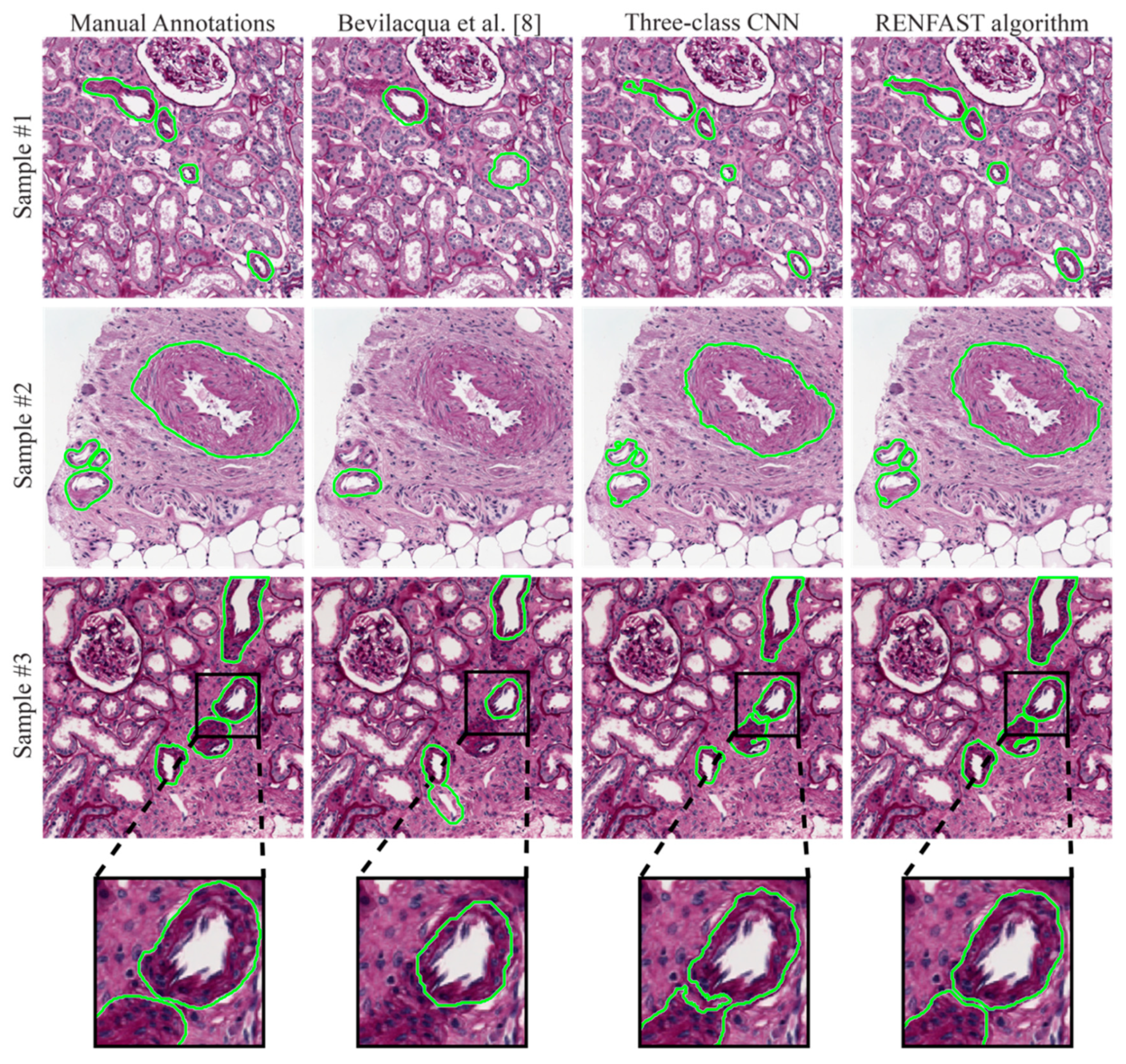

3.1. Blood Vessel Detection

3.2. Fibrosis Segmentation

3.3. Whole Slide Analysis

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

References

1. Salmon, G.; Salmon, E. Recent Innovations in Kidney Transplants. Nurs. Clin. N. Am. 2018, 53, 521–529.

2. Metzger, R.A.; Delmonico, F.L.; Feng, S.; Port, F.K.; Wynn, J.J.; Merion, R.M. Expanded criteria donors for kidney transplantation. Am. J. Transplant. 2003, 3, 114–125.

3. Heilman, R.L.; Mathur, A.; Smith, M.L.; Kaplan, B.; Reddy, K.S. Increasing the use of kidneys from unconventional and high-risk deceased donors. Am. J. Transplant. 2016, 16, 3086–3092.

4. Altini, N.; Cascarano, G.D.; Brunetti, A.; Marino, F.; Rocchetti, M.T.; Matino, S.; Venere, U.; Rossini, M.; Pesce, F.; Gesualdo, L. Semantic Segmentation Framework for Glomeruli Detection and Classification in Kidney Histological Sections. Electronics 2020, 9, 503.

5. Karpinski, J.; Lajoie, G.; Cattran, D.; Fenton, S.; Zaltzman, J.; Cardella, C.; Cole, E. Outcome of kidney transplantation from high-risk donors is determined by both structure and function. Transplantation 1999, 67, 1162–1167.

6. Carta, P.; Zanazzi, M.; Caroti, L.; Buti, E.; Mjeshtri, A.; Di Maria, L.; Raspollini, M.R.; Minetti, E.E. Impact of the pre-transplant histological score on 3-year graft outcomes of kidneys from marginal donors: A single-centre study. Nephrol. Dial. Transplant. 2013, 28, 2637–2644.

7. Furness, P.N.; Taub, N.; Convergence of European Renal Transplant Pathology Assessment Procedures (CERTPAP) Project. International variation in the interpretation of renal transplant biopsies: Report of the CERTPAP Project. Kidney Int. 2001, 60, 1998–2012.

8. Bevilacqua, V.; Pietroleonardo, N.; Triggiani, V.; Brunetti, A.; Di Palma, A.M.; Rossini, M.; Gesualdo, L. An innovative neural network framework to classify blood vessels and tubules based on Haralick features evaluated in histological images of kidney biopsy. Neurocomputing 2017, 228, 143–153.

9. He, D.-C.; Wang, L. Texture features based on texture spectrum. Pattern Recognit. 1991, 24, 391–399.

10. Tey, W.K.; Kuang, Y.C.; Ooi, M.P.-L.; Khoo, J.J. Automated quantification of renal interstitial fibrosis for computer-aided diagnosis: A comprehensive tissue structure segmentation method. Comput. Methods Programs Biomed. 2018, 155, 109–120.

11. Fu, X.; Liu, T.; Xiong, Z.; Smaill, B.H.; Stiles, M.K.; Zhao, J. Segmentation of histological images and fibrosis identification with a convolutional neural network. Comput. Biol. Med. 2018, 98, 147–158.

12. Monaco, J.; Hipp, J.; Lucas, D.; Smith, S.; Balis, U.; Madabhushi, A. Image segmentation with implicit color standardization using spatially constrained expectation maximization: Detection of nuclei. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Nice, France, 1–5 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 365–372.

13. Peter, L.; Mateus, D.; Chatelain, P.; Schworm, N.; Stangl, S.; Multhoff, G.; Navab, N. Leveraging random forests for interactive exploration of large histological images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Boston, MA, USA, 14–18 September 2014; Springer: Cham, Switzerland, 2014; pp. 1–8.

14. Ciompi, F.; Geessink, O.; Bejnordi, B.E.; De Souza, G.S.; Baidoshvili, A.; Litjens, G.; Van Ginneken, B.; Nagtegaal, I.; Van Der Laak, J. The importance of stain normalization in colorectal tissue classification with convolutional networks. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 160–163.

15. Salvi, M.; Michielli, N.; Molinari, F. Stain Color Adaptive Normalization (SCAN) algorithm: Separation and standardization of histological stains in digital pathology. Comput. Methods Programs Biomed. 2020, 193, 105506.

16. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

17. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

18. Salvi, M.; Molinari, F. Multi-tissue and multi-scale approach for nuclei segmentation in H&E stained images. Biomed. Eng. Online 2018, 17.

19. Salvi, M.; Molinaro, L.; Metovic, J.; Patrono, D.; Romagnoli, R.; Papotti, M.; Molinari, F. Fully automated quantitative assessment of hepatic steatosis in liver transplants. Comput. Biol. Med. 2020, 123, 103836.

20. Schindelin, J.; Arganda-Carreras, I.; Frise, E.; Kaynig, V.; Longair, M.; Pietzsch, T.; Preibisch, S.; Rueden, C.; Saalfeld, S.; Schmid, B. Fiji: An open-source platform for biological-image analysis. Nat. Methods 2012, 9, 676–682.

| Dataset | Subset | Stain | # Patients | # Images |
|---|---|---|---|---|
| Vessels | TRAIN | PAS | 30 | 300 |
| | TEST | PAS | 5 | 50 |
| Fibrosis | TRAIN | TRIC | 25 | 250 |
| | TEST | TRIC | 5 | 50 |

| Method | Subset | Computation Time (s) | Balanced Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|
| Bevilacqua et al. [8] | TRAIN | 2.58 ± 1.24 | 0.6845 ± 0.1467 | 0.8618 ± 0.1955 | 0.5115 ± 0.2196 | 0.5996 ± 0.1931 |
| | TEST | 2.64 ± 1.18 | 0.6487 ± 0.1494 | 0.7677 ± 0.2647 | 0.4944 ± 0.2241 | 0.5684 ± 0.2281 |
| Two-class CNN ¹ | TRAIN | 0.57 ± 0.11 | 0.8821 ± 0.1116 | 0.9203 ± 0.0945 | 0.8026 ± 0.1630 | 0.8430 ± 0.1242 |
| | TEST | 0.56 ± 0.09 | 0.8116 ± 0.1305 | 0.9308 ± 0.1004 | 0.6923 ± 0.1743 | 0.7741 ± 0.1419 |
| Three-class CNN ² | TRAIN | 0.74 ± 0.16 | 0.8744 ± 0.0861 | 0.9888 ± 0.0337 | 0.7706 ± 0.1199 | 0.8601 ± 0.0919 |
| | TEST | 0.71 ± 0.18 | 0.8220 ± 0.1075 | 0.9800 ± 0.0800 | 0.6666 ± 0.1837 | 0.7740 ± 0.1597 |
| RENFAST algorithm | TRAIN | 2.67 ± 0.41 | 0.9443 ± 0.0821 | 0.9185 ± 0.0634 | 0.9151 ± 0.0950 | 0.9126 ± 0.0611 |
| | TEST | 2.59 ± 0.53 | 0.8936 ± 0.0969 | 0.9269 ± 0.0845 | 0.8185 ± 0.1344 | 0.8593 ± 0.0858 |
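The detection metrics above appear to be reported as mean ± standard deviation across images. The standard definitions can be sketched as follows, assuming a pixel-wise confusion matrix built from flat 0/1 masks; this excerpt does not state whether vessels were counted at pixel or object level, and the use of the sample standard deviation for the ± term is also an assumption. The function names (`confusion_counts`, `detection_metrics`, `mean_sd`) are hypothetical.

```python
from statistics import mean, stdev


def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, FN, TN for two equally long flat 0/1 sequences."""
    pairs = list(zip(pred, truth))
    tp = sum(1 for p, t in pairs if p and t)
    fp = sum(1 for p, t in pairs if p and not t)
    fn = sum(1 for p, t in pairs if not p and t)
    tn = sum(1 for p, t in pairs if not p and not t)
    return tp, fp, fn, tn


def detection_metrics(tp, fp, fn, tn):
    """Balanced accuracy, precision, recall and F1 score from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0          # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "balanced_accuracy": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def mean_sd(values):
    """Format per-image values as 'mean ± SD' using the sample SD."""
    return f"{mean(values):.4f} ± {stdev(values):.4f}"
```

Per-image dictionaries from `detection_metrics` can then be collected metric by metric and passed to `mean_sd` to produce entries in the same format as the table.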

| Method | Subset | DSC | HD95 (μm) |
|---|---|---|---|
| Bevilacqua et al. [8] | TRAIN | 0.7476 ± 0.1517 | 20.33 ± 21.67 |
| | TEST | 0.7668 ± 0.1381 | 22.31 ± 34.62 |
| Two-class CNN ¹ | TRAIN | 0.7447 ± 0.2312 | 21.13 ± 30.59 |
| | TEST | 0.6879 ± 0.2417 | 26.68 ± 36.50 |
| Three-class CNN ² | TRAIN | 0.7802 ± 0.1777 | 12.02 ± 22.45 |
| | TEST | 0.7483 ± 0.1790 | 9.35 ± 8.84 |
| RENFAST algorithm | TRAIN | 0.8441 ± 0.1762 | 9.78 ± 10.51 |
| | TEST | 0.8358 ± 0.1391 | 6.41 ± 6.25 |
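The Dice similarity coefficient (DSC) and the 95th-percentile Hausdorff distance (HD95) in the table above can be illustrated with the following sketch. Several choices here are assumptions rather than details taken from the article: masks are sets of (row, column) pixel coordinates, boundary pixels are those with at least one 4-neighbour outside the mask, HD95 is the nearest-rank 95th percentile of the pooled boundary-to-boundary distances in both directions, and `um_per_px` is a hypothetical pixel size used to convert pixels to μm. HD95 conventions differ between toolkits (some take the larger of the two directed percentiles, others interpolate between ranks), so values may not match the article's exactly. Both masks are assumed non-empty.

```python
import math


def dice(a, b):
    """Dice similarity coefficient between two sets of pixel coordinates."""
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))


def boundary(mask):
    """Pixels of `mask` with at least one 4-neighbour outside the mask."""
    return {(r, c) for (r, c) in mask
            if any(n not in mask
                   for n in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)))}


def nearest_rank_percentile(values, q):
    """q-th percentile by the nearest-rank method (no interpolation)."""
    ordered = sorted(values)
    k = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[k - 1]


def hd95(a, b, um_per_px=1.0):
    """95th-percentile symmetric boundary distance, scaled by pixel size."""
    ba, bb = boundary(a), boundary(b)
    d_ab = [min(math.dist(p, q) for q in bb) for p in ba]  # a -> b
    d_ba = [min(math.dist(q, p) for p in ba) for q in bb]  # b -> a
    return nearest_rank_percentile(d_ab + d_ba, 95) * um_per_px
```

For two 3 × 3 squares offset by one column, `dice` gives 2/3 and `hd95` gives one pixel, which shows how a small shift affects the overlap measure much more than the distance measure.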

| Method | Subset | Computation Time (s) | Balanced Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|
| Tey et al. [10] | TRAIN | 0.24 ± 0.04 | 0.8575 ± 0.0374 | 0.7538 ± 0.0780 | 0.8905 ± 0.0744 | 0.8147 ± 0.0515 |
| | TEST | 0.25 ± 0.07 | 0.8604 ± 0.0428 | 0.7512 ± 0.0736 | 0.9055 ± 0.0734 | 0.8166 ± 0.0492 |
| Fu et al. [11] | TRAIN | 0.16 ± 0.06 | 0.8988 ± 0.0660 | 0.8832 ± 0.1072 | 0.8940 ± 0.1469 | 0.8727 ± 0.0896 |
| | TEST | 0.18 ± 0.09 | 0.9159 ± 0.0491 | 0.8783 ± 0.1019 | 0.9239 ± 0.1026 | 0.8911 ± 0.0644 |
| No norm. ¹ | TRAIN | 0.17 ± 0.07 | 0.9128 ± 0.0221 | 0.9025 ± 0.0482 | 0.8765 ± 0.0434 | 0.8900 ± 0.0240 |
| | TEST | 0.18 ± 0.11 | 0.9164 ± 0.0247 | 0.9157 ± 0.0304 | 0.8738 ± 0.0499 | 0.8944 ± 0.0277 |
| RENFAST algorithm | TRAIN | 0.27 ± 0.13 | 0.9212 ± 0.0199 | 0.9064 ± 0.0355 | 0.8958 ± 0.0480 | 0.8973 ± 0.0275 |
| | TEST | 0.29 ± 0.14 | 0.9227 ± 0.0222 | 0.9184 ± 0.0313 | 0.8891 ± 0.0482 | 0.9010 ± 0.0246 |

| Method | Subset | Min. AE (%) | Mean AE (%) | Max. AE (%) |
|---|---|---|---|---|
| Tey et al. [10] | TRAIN | 0.03 | 8.79 | 42.46 |
| | TEST | 0.59 | 8.73 | 38.41 |
| Fu et al. [11] | TRAIN | 0.01 | 7.81 | 38.62 |
| | TEST | 0.04 | 5.93 | 28.73 |
| No norm. ¹ | TRAIN | 0.01 | 2.52 | 11.21 |
| | TEST | 0.05 | 2.50 | 8.29 |
| RENFAST algorithm | TRAIN | 0.01 | 2.42 | 11.17 |
| | TEST | 0.01 | 2.32 | 7.81 |
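The absolute error (AE) reported in the last table is not defined in this excerpt. A plausible reading, used here only as an assumption, is the per-image absolute difference between the automatically and manually estimated fibrosis percentages, summarized by its minimum, mean and maximum. The sketch below also assumes, hypothetically, that the fibrosis percentage is the share of tissue pixels labelled as fibrosis, with both masks given as flat 0/1 sequences; `fibrosis_percentage` and `absolute_error_summary` are illustrative names.

```python
from statistics import mean


def fibrosis_percentage(fibrosis_mask, tissue_mask):
    """Share of tissue pixels labelled as fibrosis, in percent (assumed definition)."""
    tissue = sum(1 for t in tissue_mask if t)
    fibrotic = sum(1 for f, t in zip(fibrosis_mask, tissue_mask) if f and t)
    return 100.0 * fibrotic / tissue


def absolute_error_summary(auto_pct, manual_pct):
    """Min, mean and max absolute error between paired per-image percentages."""
    errors = [abs(a - m) for a, m in zip(auto_pct, manual_pct)]
    return min(errors), mean(errors), max(errors)
```

With automatic estimates of 10.0, 20.0 and 5.0% against manual values of 12.0, 19.0 and 5.5%, the summary is a minimum AE of 0.5, a mean of about 1.17 and a maximum of 2.0 percentage points.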

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Salvi, M.; Mogetta, A.; Meiburger, K.M.; Gambella, A.; Molinaro, L.; Barreca, A.; Papotti, M.; Molinari, F. Karpinski Score under Digital Investigation: A Fully Automated Segmentation Algorithm to Identify Vascular and Stromal Injury of Donors’ Kidneys. Electronics 2020, 9, 1644. https://doi.org/10.3390/electronics9101644
