1. Introduction

Multi-sensor data fusion is a major area of research in distributed multi-agent systems, sensor networks, wearable computing, and fault detection [1, 2, 3], since fusion unifies multiple sources of data into a single, accurate picture. Data fusion enables intelligent agents in uncertain real-time domains to stay aware of their environment and to act upon an improved snapshot of it. Multi-sensor fusion is mostly applied to fully monitoring an environment in which agents map percept sequences to actions.

Information fusion from different sensors has become a crucial component in distributed military surveillance environments [4, 5, 6]. Robot agents need to extrapolate and identify the types of moving threats to ensure their surveillance capabilities. If robots recognize a target while in search mode, they should track the target and determine its type and position to successfully complete their surveillance mission. This paper focuses on information fusion processing that refines the estimated type of a specific target, improves the reliability of target identification, and continues to track the target's position. We propose a set of fusion operators that form a combined prediction from multi-source data, which are represented as degrees of reliability expressed as probabilities.

Battlefield surveillance robots equipped with infrared sensors closely monitor moving targets. These military robots are semi-autonomously operated; that is, they mostly decide their own actions but are sometimes controlled by their commanders. The robots periodically scan regions and, when they spot any possible threat, inform the control center of their estimations. The control center then fuses the pieces of evidence from the sensors of the different military robots. Given the multiple targets identified, each agent needs to decide which target it will primarily monitor while coordinating effectively with the other agents. A decision-theoretic framework is proposed here as a method for establishing the overall correspondence between robots and targets. This approach endows surveillance robots with flexible and dynamic target selection for their real-time coordination in multi-agent environments. The commander at the control center provides feedback on the multiple robots' type estimations based upon the results of fusion processing and the best target allocation coordinated with other robots.

We examined the components of a simulation system that iteratively recognizes the types of targets proceeding on a pre-planned path in many different settings and tests the effective coordination of multiple agents given the current situation. We designed and implemented the simulator and evaluated the agents’ surveillance capabilities in various battlefield settings. The simulator consists of an information panel for the military agents and moving targets and a scenario display showing their interactions. The scenario panel presents multiple robots equipped with infrared sensors, a group of targets of different types and their route, and obstacles. The information panel displays information about the military agents and their monitored targets, including the resulting fusion accuracy for the identified type of a specific target and the ordering of targets by priority that results from coordination.

The following section describes our work in comparison with related research. Section 3 explains how to combine multi-sensor data from military robots for surveillance and how to dynamically determine their coordinated target selection. Section 4 describes the event flow and architecture of the simulator for information fusion and intelligent target allocation in given scenarios. In Section 5, we validate our framework empirically using the battlefield simulator and present the experimental results. The final section summarizes our results and discusses further research issues.

2. Related Work

In the field of data fusion technologies, considerable efforts have focused on producing substantial advances in various applications. Khaleghi et al. [2] comprehensively reviewed fusion methodologies in multi-sensor data fusion domains and several future directions of research in this community. The bulk of the algorithms for fusing imperfect data were based on probabilistic fusion, evidential belief reasoning, fuzzy reasoning, and so on. They summarized the use of Dempster–Shafer (DS) theory [7] for the data fusion problem from an evidential belief reasoning perspective, and discussed the differences between the accuracy of the Bayesian method and the flexibility of DS inference. Wu et al. [8] also discussed the relationship between the DS approach and the Bayesian method, and their work using DS theory described the user’s attention through a mapping from sensor output to the context representation. Alyannezhadi et al. [9] considered multi-sensory data fusion for unknown systems. Their approach was based on a clustering scheme with a multi-layer perceptron. King et al. [3] addressed data fusion techniques given the input of wearable sensor data and applied the data to health monitoring applications. To exploit the input data available from wearable sensors and improve the accuracy of the output, they employed a set of data fusion techniques including support vector machines, artificial neural networks, and deep learning as feature-level non-parametric algorithms, and Bayesian inference and fuzzy logic as decision-level algorithms. Among the different types of data fusion algorithms, our research uses DS theory; we mainly consider the fusion of sensor data perceived by multiple robots, and focus on improving our agents’ situation awareness capabilities.

Our military surveillance robot system can be classified as a weakly coordinated system [10], a class of systems that usually do not rely on a predefined coordination protocol. Our robots are considered weakly coordinated because they follow decision theory [11] and dynamically perform their coordinated behaviors depending only upon their local perception of the situation at hand. Once cooperative and coordinated multi-robot systems were developed, the multi-robot task allocation problem [12, 13] became a main concern of research. In general, the goal of multi-robot task allocation is to map the multiple robots to the tasks they are to accomplish. Different attempts have been made to solve the multi-robot task allocation problem in various fields of application. In Khamis et al. [14], the approaches are largely classified into two types: market-based [12, 13] and optimization-based approaches [15]. The task of the multiple agents in our study is to monitor a set of moving vehicles in battlefield environments. This problem is modeled as an optimization-based multi-robot task allocation problem. In this paper, for optimal target allocation, the agents are equipped with dynamic coordination capabilities achieved by the computation of expected utilities, though they pay the computational cost of evaluating all possible combinations of robot and target pairs.

Building on approaches to the modeling and simulation of military-relevant robots in simulated battlefield environments [4, 16], several simulation systems emphasizing situation awareness have been constructed. Parasuraman et al. [5] designed a robotic simulation to complete several tasks, including a target identification task within a situation map. The unmanned ground vehicle, as a target, moves through the area following a pre-planned path. Their simulation system only supports a single unmanned ground vehicle and its identification, even though the simulation displays route planning, communication, and change detection on the situation map. Sharma et al. [6] exploited agent-based modeling for a convoy of unmanned vehicles. Their simulation showed a battlefield environment from a single starting point to a single goal, and the environment modeled and plotted various objects, e.g., an enemy outpost, minefields, the number of soldiers in platoons, and barriers. Dutt et al. [17] used instance-based learning to model and simulate whether a potential threat location in a tactical battlefield needs further investigation. They assessed their model’s accuracy of threat and non-threat identification in their experiment. In this study, we follow the research on modeling and simulation in military environments with multiple robots and a group of targets moving on their pre-planned routes, with the goals of identifying the specific type of each target and effectively coordinating multiple surveillance agents. Our efforts cover the full span of autonomous agent behavior, from situation awareness to successful mission coordination, in various battlefield situations.

3. Multi-Sensor Data Fusion and Rational Coordination

This section presents a set of fusion rules to merge multi-sensed data into integrated information. Multiple robots then perform coordinated target allocation using decision theory.

3.1. Combining Multi-Sensor Data Using Fusion Operators

To maintain awareness of a situation perceived through multiple sensors, distributed surveillance robots require a method to combine the series of data that they have sensed. It is critical that the battlefield robots first estimate the possible types of targets based upon the merged data. After obtaining data from the sensors, the robots inform the control center of their estimations. The control center then fuses the evidence sensed by the different robots. The combined prediction given a specific target for the commander is defined as:

where

and

represent the confidence of the possible type of a specific target

from robots

i and

j, respectively;

and

; and

and

.

A set of fusion rules is used to form the combined prediction from multi-source data, expressed as degrees of reliability on the type of a target that have the mathematical properties of probabilities. The fusion rules must be simple to apply because the robots, under time pressure, need to obtain the fusion results as quickly as possible. Considering the complexity of the fusion process, given confidence values of and for , the aggregation operators are as follows:

The aggregation operators are applied to multi-source data to obtain the combined prediction representing the overall degrees of belief on the type of a specific target. The goal of fusion processing is to combine the estimations from distributed military robots when each of them estimates the probability of reliability on the type of a target. Another goal is to produce a single probability distribution that summarizes their probabilities. Without loss of generality, the aggregation operators can be used to merge data sensed by more than two robots.

Among the aggregation operators, the mean operator extends a statistic summary and provides an average of

from the different robots. The product rule summarizes the probabilities that coincide with

and

. In this case, neither

nor

should be zero, since the product operator suffers from the limitation that if one operand is zero, the entire product is zero. To avoid zero-valued combined predictions with the product operator, it is generally assumed that these zeros can be replaced with very small positive numbers close to zero, for example,

. Dempster’s rule for combining degrees of belief produces a new belief distribution that represents the consensus of the original opinions [8, 18, 19]. Using Dempster’s rule, the resulting values of

’s indicate the degrees of agreement among the different robots’ probabilities of reliability on the type of a target; however, they completely exclude the degrees of disagreement or conflict. The advantage of using Dempster’s rule in our fusion processing is that no priors or conditionals are needed.
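The three aggregation operators can be sketched in a few lines of Python. The operator formulas themselves are not reproduced in the text here, so everything below is an assumption: the mean and product are taken in their elementary pairwise form, Dempster's rule is read on a two-element frame (the target is of type k, or it is not), and `EPSILON`, the function names, and the example confidence values are hypothetical.

```python
from typing import Callable, Dict

# Assumed stand-in for the "very small positive number" that replaces a zero operand.
EPSILON = 1e-6


def fuse_mean(p_i: float, p_j: float) -> float:
    """Average of the two robots' confidence values for one target type."""
    return (p_i + p_j) / 2.0


def fuse_product(p_i: float, p_j: float, eps: float = EPSILON) -> float:
    """Product of the two confidence values; zero operands are replaced by eps."""
    return max(p_i, eps) * max(p_j, eps)


def fuse_dempster(p_i: float, p_j: float) -> float:
    """Dempster's rule on the assumed frame {type k, not type k}.

    The agreeing mass p_i * p_j is divided by 1 - K, where
    K = p_i * (1 - p_j) + (1 - p_i) * p_j is the conflicting mass.
    """
    non_conflict = p_i * p_j + (1.0 - p_i) * (1.0 - p_j)  # equals 1 - K
    if non_conflict == 0.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return (p_i * p_j) / non_conflict


def fuse_all(
    conf_i: Dict[str, float],
    conf_j: Dict[str, float],
    operator: Callable[[float, float], float],
) -> Dict[str, float]:
    """Apply one operator to every target type shared by the two robots."""
    return {k: operator(conf_i[k], conf_j[k]) for k in conf_i}


if __name__ == "__main__":
    # Hypothetical confidence values, for illustration only.
    robot_i = {"SUV": 0.6, "Truck": 0.2, "APC": 0.1, "Tank": 0.1}
    robot_j = {"SUV": 0.7, "Truck": 0.1, "APC": 0.1, "Tank": 0.1}
    for op in (fuse_mean, fuse_product, fuse_dempster):
        print(op.__name__, fuse_all(robot_i, robot_j, op))
```

Under this reading, the product and Dempster outputs reward agreement more strongly than the mean does, which is consistent with the qualitative behavior described above. Merging more than two robots can be done by folding the operator over the robots one at a time.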

The normalization of combined prediction is given as:

considering all the estimations about the types of a target. The normalized prediction thus represents the overall confidence on a set of uncertain estimations, and it translates the combined prediction into a specific value ranging from 0 to 1, where

.
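A minimal sketch of the normalization step follows, assuming that it divides each combined value by the sum of the combined values over all candidate target types. This form is an assumption chosen to match the description (values between 0 and 1 that can be compared across types); the function name and the sample numbers are hypothetical.

```python
from typing import Dict


def normalize(combined: Dict[str, float]) -> Dict[str, float]:
    """Scale combined predictions so that they sum to 1 across target types."""
    total = sum(combined.values())
    if total <= 0.0:
        raise ValueError("combined predictions must have a positive sum")
    return {target_type: value / total for target_type, value in combined.items()}


if __name__ == "__main__":
    # Hypothetical product-rule outputs for the four target types.
    combined = {"SUV": 0.42, "Truck": 0.04, "APC": 0.01, "Tank": 0.01}
    print(normalize(combined))
```

Because the values are divided by their sum, a type on which the robots agree strongly takes up most of the normalized mass even when its raw combined value is well below 1.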

3.2. Intelligent Decision-Making for Target Allocation

For real-time target allocation, the surveillance robot agents should monitor their own targets, which are constantly moving and are sometimes hidden behind obstacles. It is impossible for each agent to watch only its specific target throughout the whole scenario at hand. Thus, the agents should make new decisions, coordinated with other agents, in every snapshot of an ever-changing situation rather than relying upon a predetermined protocol. For this dynamic coordination of the agents, we endow them with decision theory [11].

To be rational in the decision-theoretic sense, the agents follow the principle of maximum expected utility (PMEU) [11]. This section shows how PMEU can be implemented in the decision-making process of target allocation under uncertainty for military robots. The agents equipped with PMEU select the most appropriate behavior to effectively monitor moving targets.

We use the following notation:

A set of agents: ;

A set of actions of agent , : ;

A set of world states: .

The expected utility of the best action

of agent

, arrived at using the body of information

E and executed at time

t, is given by:

where

is the probability that will obtain the state

after action

being executed at time

t, given the body of information

E; and

is the utility of the state

.

To formalize the decision-making problem of target allocation with multiple military robots, the decision-theoretic model must specify probabilities and utilities, as defined in (3). In our model, the probability that a robot keeps track of a target is defined by the difference between the actual target position and the predicted target position, as estimated by a Kalman filter [20]. The utility, which denotes the desirability of the state that results when a robot keeps tracking a moving target, is assumed to depend upon attributes such as the velocity of the target approaching the robot, the distance between the robot and the target, and the intrinsic threat value associated with the type of the target. From the robot’s perspective, the utility function assigns to a target a real number representing the overall preference for it, given the topology of surveillance robots, obstacles, and moving targets.
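The principle of maximum expected utility can be illustrated with a short sketch in its standard textbook form: the expected utility of an action is the probability-weighted sum of the utilities of its possible resulting states, and the agent selects the action with the largest such sum. The state names, action names, probabilities, and utilities below are hypothetical; in particular, the sketch does not reproduce how the probabilities are derived from the Kalman filter or how the utility attributes are weighted.

```python
from typing import Dict, Mapping, Tuple


def expected_utility(
    state_probs: Mapping[str, float], utilities: Mapping[str, float]
) -> float:
    """Sum over resulting states of P(state | action, evidence) * U(state)."""
    return sum(prob * utilities[state] for state, prob in state_probs.items())


def best_action(
    outcomes: Mapping[str, Mapping[str, float]], utilities: Mapping[str, float]
) -> Tuple[str, float]:
    """Return the action with the maximum expected utility and that utility."""
    scored: Dict[str, float] = {
        action: expected_utility(probs, utilities)
        for action, probs in outcomes.items()
    }
    chosen = max(scored, key=lambda action: scored[action])
    return chosen, scored[chosen]


if __name__ == "__main__":
    # Hypothetical utilities of world states for one robot.
    utilities = {"tracking_A": 10.0, "tracking_B": 6.0, "lost": 0.0}
    # Hypothetical outcome distributions for two candidate actions.
    outcomes = {
        "track_A": {"tracking_A": 0.5, "lost": 0.5},
        "track_B": {"tracking_B": 0.9, "lost": 0.1},
    }
    print(best_action(outcomes, utilities))
```

In this example the less valuable target B is preferred because the robot is much more likely to keep tracking it, which is the kind of trade-off between probability and utility that the framework is meant to capture.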

Given the mapping from a decision-theoretic robot to a specific target, our framework for the coordination of multiple robots optimizes their overall target allocation in battlefield environments. The best target allocation of robots R and targets T with the number of robots m and the number of targets n is as follows:

where

is one of all possible combinations of robots and targets for

and

, given the body of information

E and at time

t; and

l indicates one of the expected utilities of

. Our coordinated agents following decision theory should select the allocation that maximizes their total expected utility, as described in (4). Thus, multi-robot coordination through the computation of expected utility enables our agents to perform dynamic and flexible target allocation in real time.
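The exhaustive search over robot and target combinations can be sketched as follows. The sketch assumes that each robot is assigned one distinct target and that there are at least as many targets as robots; the text does not state these constraints, so they are assumptions, as are the function name and the utility matrix in the example.

```python
from itertools import permutations
from typing import Optional, Sequence, Tuple


def best_allocation(
    eu: Sequence[Sequence[float]],
) -> Tuple[Tuple[int, ...], float]:
    """Find the robot-to-target assignment with the largest total expected utility.

    eu[r][t] is the expected utility of robot r tracking target t.
    The result maps robot index r to target index assignment[r].
    """
    m = len(eu)      # number of robots
    n = len(eu[0])   # number of targets
    if m > n:
        raise ValueError("this sketch assumes at least as many targets as robots")

    best_assignment: Optional[Tuple[int, ...]] = None
    best_total = float("-inf")
    # Enumerate every ordered choice of m distinct targets out of n.
    for assignment in permutations(range(n), m):
        total = sum(eu[robot][target] for robot, target in enumerate(assignment))
        if total > best_total:
            best_assignment, best_total = assignment, total
    assert best_assignment is not None
    return best_assignment, best_total


if __name__ == "__main__":
    # Hypothetical expected utilities for three robots and three targets.
    eu = [
        [9.0, 2.0, 1.0],
        [8.0, 7.0, 3.0],
        [4.0, 6.0, 5.0],
    ]
    print(best_allocation(eu))  # ((0, 1, 2), 21.0)
```

The enumeration evaluates n!/(n-m)! assignments, which is the computational cost of considering all combinations; it is acceptable for the handful of robots and targets used in the scenarios but grows quickly with team size.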

4. Implementation of Intelligent Simulator for Data Fusion and Target Allocation

We designed and implemented a unit simulator for an individual fusion process, and developed an integrated simulator to fuse data perceived by distributed robots to keep track of targets through the coordination of the multiple robots in simulated battlefield settings. The simulators were programmed using version , Visual Studio 2017 in the .NET environment.

4.1. Unit Simulator for Individual Fusion Process

An individual fusion process was implemented using the aggregation operators of mean, product, and DS theory, as depicted in Figure 1. As shown in the upper left part of Figure 1, up to six military robots can be selected, i.e., from Robot1 to Robot6, and the possible types of a specific target that they monitor include a sport utility vehicle (SUV), a truck, an armored personnel carrier (APC), or a tank. Given input values of confidence for each type of target, the combined prediction button calculates the fusion of confidence values according to (1) using the three fusion operators. The normalization button returns a normalized output value, which is computed by (2), as shown in the lower left part of Figure 1. The plot button displays a bar graph in which each bar represents the accumulated confidence values for one type of target, as shown on the right side of Figure 1. The reset button initializes the fusion processing. These buttons are displayed in the middle left part of Figure 1.

The unit simulator, as shown in Figure 1, presents the result of an individual fusion process for the current situation. Let

and

from robots

i and

j for

, which represent SUV, Truck, APC, and Tank, respectively. Thus, two surveillance robots, i and j, monitor a specific target whose type is uncertain among the four types. Given the confidence values, the aggregation rules can be applied to obtain a combined prediction, as defined in (1). The outputs of combined prediction are summarized in Table 1.

When a mean aggregator is used, among the fusion operators, the resulting distribution of combined prediction similarly reflects the distribution of confidence values from each robot’s perspective, since it denotes the average of two input values. With product and DS theory, however, the

’s by

and

(

and

) of the combined prediction are much bigger than the other combined values, i.e.,

’s by

and

(

and

),

’s by

and

(

and

), and

’s by

and

(

and

), compared with the original distributions of their estimations. Normalizing the combined prediction

, as defined in (2), compares the confidence values for the target types with one another on a scale from 0 to 1. Thus, the normalized prediction using product and DS theory as fusion rules (

and

) indicates that the type of target monitored is definitely an SUV for

.

4.2. Intelligent Simulator for Data Fusion and Target Allocation

The events of our simulator within a cycle occur in order, as depicted in Figure 2. In preparation mode, the types of targets and their routes are preset, obstacles that block the robots’ observation of targets are placed, and the multiple robots are positioned. When the simulation starts, the targets proceed along the predefined route, and the robots begin to search for possible targets within their range. The robot agents perceive targets and obstacles through infrared sensors, and then extrapolate the type of targets given a set of percepts. The control center fuses the multiple robots’ estimations and sends a single, integrated identification back to them. After identifying the type of targets, the group of surveillance robots coordinate with each other to monitor targets even if some of them are hidden by obstacles.

For the target identification and coordination of surveillance robots, we designed and developed an integrated simulator. A unit simulator for data fusion, as depicted in

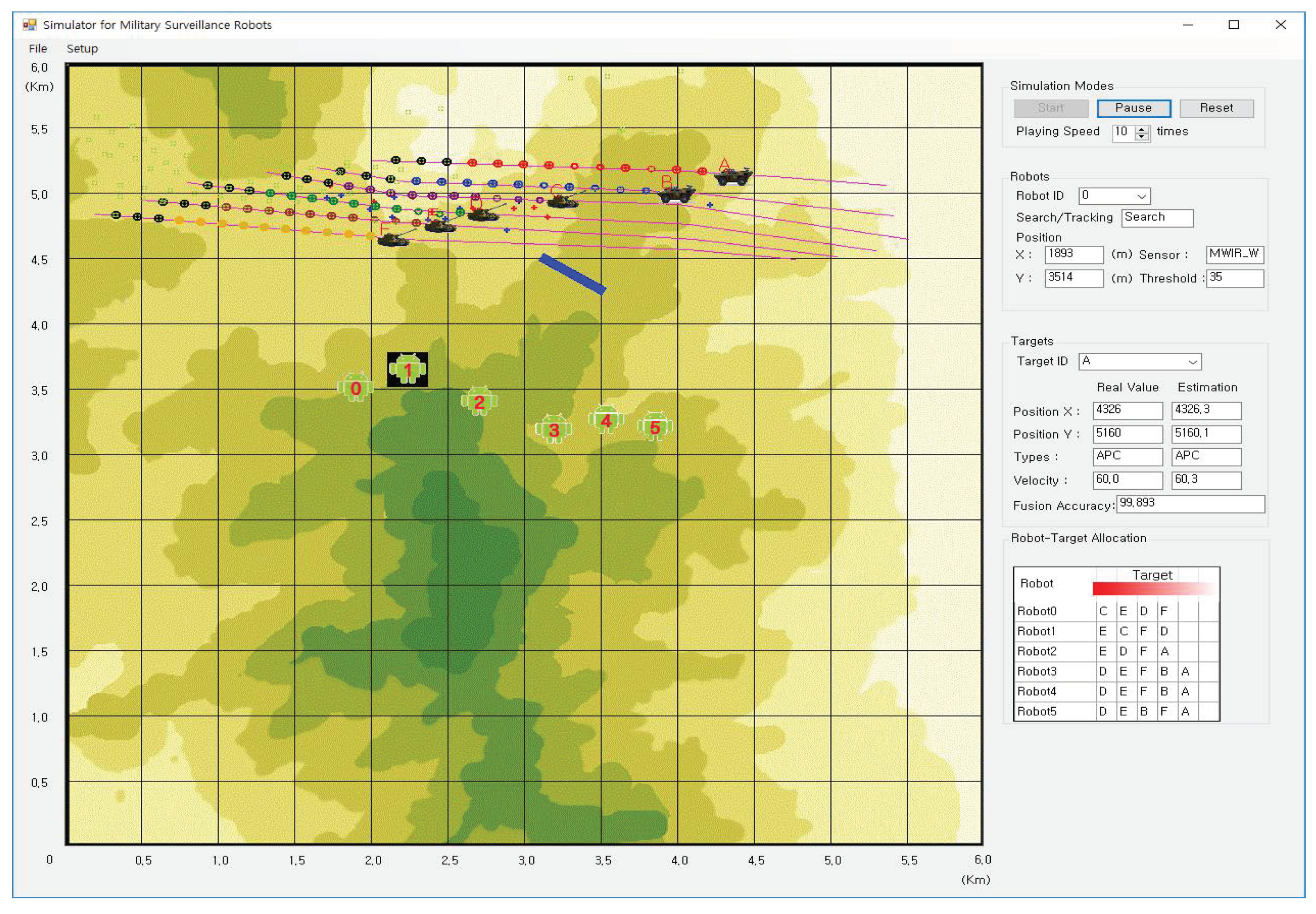

Figure 1, was plugged into the integrated simulator, which included the task of rational coordination for target allocation. The intelligent simulator for data fusion and target allocation in

Figure 3 consists of the information panel of the surveillance robot agents and moving targets, and the scenario display panel. The scenario panel presenting the interaction of the agents and targets displays a group of targets with four different types and their route, the multiple robots equipped with infrared sensors, and obstacles denoted by bars. The right panel of the simulator displays the information about the robot agents and targets. In its top of the panel, there are three simulation modes: Start, Pause, and Reset, and the playing speed can be up to 10 times faster than the original speed. Below the simulation modes, robot information is listed. The parameters are robot ID from

0 to

5, search or tracking mode, position, sensors, and a threshold value switching search mode into tracing mode. Regarding target information, the parameters are target ID from

A to

F, the real values and estimated values of position, types, velocity, and fusion accuracy in two columns. The bottom of the right panel describes robot–target allocation determined by the robots’ coordination. In this scenario, there are six surveillance robots, six identified moving targets, and a specific obstacle. The surveillance robots perceive information through sensors and generate probabilities and utilities, which are then used to optimize target selection that maximizes their total expected utilities, as described in (

4). From each robot’s perspective, the leftmost column in the table displays the best appropriate target being tracked in each given state. As states evolve, each column is shifted to the right direction.

Our simulator provides various scenarios with different formations of targets, robots, and obstacles, and iteratively tests the capabilities of surveillance robot agents in simulated battlefield settings. The intelligent simulator can ultimately provide a test bed for our agents to experimentally verify their autonomy for situation awareness, target detection, and continuous tracking.

5. Experiments and Data Analysis

To test surveillance robots’ capabilities in the fusion process, we performed two experiments and measured the performance of the fusion results (1) on the unique estimation of types of a target using three aggregation operators, and (2) in the case where the robots are equipped with different sensors using the DS theory aggregation operator. Another experiment focused on (3) the performance of coordinated target selection to verify the dynamic target allocation of the robots. For the above three experiments, the performance of our agents was tested in various realistic battlefield situations.

5.1. Individual Fusion Process Using Three Aggregation Operators

To obtain experimental results for our fusion process in various military environments, we developed a program that lists input values and aggregation results in text and graph, as depicted in Figure 4. The goal of our experiment was to investigate the distribution of confidence values, i.e., the results of three fusion operators applied to surveillance data perceived by the infrared sensors of robots. In the experiment, we assumed that two military robots simultaneously track a specific target at a randomly generated distance. The distance between a battlefield robot and a target is categorized into three ranges: short range (0–3 km), middle range (3–5 km), and long range (5–10 km). Short- and long-range targets each compose

of the total, and the remaining

by middle-range targets.
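The three distance categories can be expressed as a small helper. The text gives the ranges as 0–3 km, 3–5 km, and 5–10 km without saying to which category the boundary values belong, so assigning 3 km to the middle range and 5 km to the long range is an assumption, as is the function name.

```python
def distance_range(distance_km: float) -> str:
    """Classify a robot-to-target distance as short, middle, or long range."""
    if not 0.0 <= distance_km <= 10.0:
        raise ValueError("distance must lie between 0 and 10 km")
    if distance_km < 3.0:
        return "short"
    if distance_km < 5.0:
        return "middle"
    return "long"


if __name__ == "__main__":
    for d in (1.5, 3.0, 4.5, 7.2):
        print(d, distance_range(d))
```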

Figure 4 is divided into two parts, the situation panel on the left and the graph panel on the right. The situation panel shows the distance from robot1, the distance from robot2, robot1’s confidence value on a specific target given its distance, robot2’s confidence value on the same target given its own distance, and the results of fusion processing according to the three aggregation operators (mean, product, and DS theory). When the combined prediction button is pressed, the information above and the results of fusion processing are automatically generated for 100 situations. On the graph panel, when targets are generated at short or middle ranges from the robots, the confidence values produced by the product operator and the DS theory operator are larger overall than those produced by the mean operator. This indicates that, compared with the mean operator, the product and DS theory operators strengthen the belief in the type of the specific target when the two robots agree on it. When targets are generated at long ranges, the bottom of the graph panel shows that the robots’ estimations of the types of targets do not agree and the resulting values of fusion are relatively low, ranging from

to

.

As such, we only considered the performance of the operators when targets were generated for short or middle ranges. In summary, among the three aggregation operators tested, the fusion process using DS theory showed the most accurate performance, with accuracy averaging at approximately .

5.2. Identifying Types of Targets Depending on Different Sensors

We created two kinds of military scenarios to test the DS theory operator using the intelligent and integrated simulator, as shown in Figure 3. In each scenario, robot agents tried to predict the type of target in 100 tests for each of the four types of targets: SUV, Truck, APC, and Tank. The surveillance robots were equipped with two different sensors: mid-wavelength infrared (MWIR) and long-wavelength infrared (LWIR). The distance between a target and the robots was kept unchanged across tests. To predict the type of a target and measure the performance of fusion results, the DS theory aggregation operator was applied to the multi-sensed data from the surveillance robots, since the fusion results using DS theory showed the best performance in the experiment in Section 5.1.

With two surveillance robots and one target scenario, the distance between Robot0 and the target was set to 3 km, and the distance between Robot1 and the target was set to 4.5 km. At first, both the robots were in search mode, searching for targets while rotating 360 degrees. When the probability that a target was considered to be a real threat passed a certain threshold value, each of the robots switched into tracking mode, selectively concentrating on tracking the target through their infrared sensors. The threshold value for switching mode was set to

, since this was the lowest value at which the robots could identify the types of targets in the previous experiment, as depicted in Figure 4.
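The switch from search mode to tracking mode can be sketched as a simple threshold test. The names `Mode` and `next_mode` are hypothetical, the threshold is passed in as a parameter rather than fixed to a particular value, and two details are assumptions not stated in the text: a probability equal to the threshold is not treated as having passed it, and a robot already in tracking mode stays in tracking mode.

```python
from enum import Enum


class Mode(Enum):
    SEARCH = "search"
    TRACKING = "tracking"


def next_mode(current: Mode, threat_probability: float, threshold: float) -> Mode:
    """Switch from search to tracking once the threat probability passes the threshold."""
    if current is Mode.SEARCH and threat_probability > threshold:
        return Mode.TRACKING
    # Assumption: no rule for dropping back to search mode is modeled here.
    return current


if __name__ == "__main__":
    mode = Mode.SEARCH
    # Hypothetical sequence of fused threat probabilities and a hypothetical threshold.
    for probability in (0.2, 0.4, 0.7, 0.3):
        mode = next_mode(mode, probability, threshold=0.5)
        print(probability, mode.value)
```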

Table 2 summarizes the fusion results with two surveillance robots using MWIR and LWIR sensors and different types of targets. The numeric value in each row of Table 2 represents the average accuracy, over 10 runs, of the prediction for the specific target type. For all kinds of targets in these experimental settings, the performance of the robots equipped with LWIR sensors was superior to that of the robots equipped with MWIR sensors. In this experimental setting with two robots and one target, the LWIR sensors were better suited than the MWIR sensors to perceiving a possible threat at the predefined distances, i.e., 3 and 4.5 km. The robots showed the most accurate fusion performance when they were predicting tanks. This was because the probability distribution of the distance between each robot and the tank was clearly higher than that of the distance between each robot and the three other types of targets.

In another experiment, with three surveillance robots and one target, the distance between each robot and the target was set to 4 km. Two robots were in tracking mode while one was in search mode, and the target was proceeding on the planned path. Table 3 summarizes the fusion results of the three surveillance robots using MWIR and LWIR sensors for a specific target of different types after 10 runs for each target type.

We confirmed that the experimental results with three robots and one target, as described in Table 3, were similar to those with two robots and one target, as described in Table 2. The only difference was that the resulting predictions of the types of targets in the scenario with three robots and one target were more accurate than those with two robots and one target. This difference arose because the normalization of the combined prediction using the DS theory operator considerably increased the degree of consensus among the robots. Thus, compared with the case of two robots agreeing with each other, the accuracy increased when three robots closely agreed with each other regarding their predictions of the types of targets.

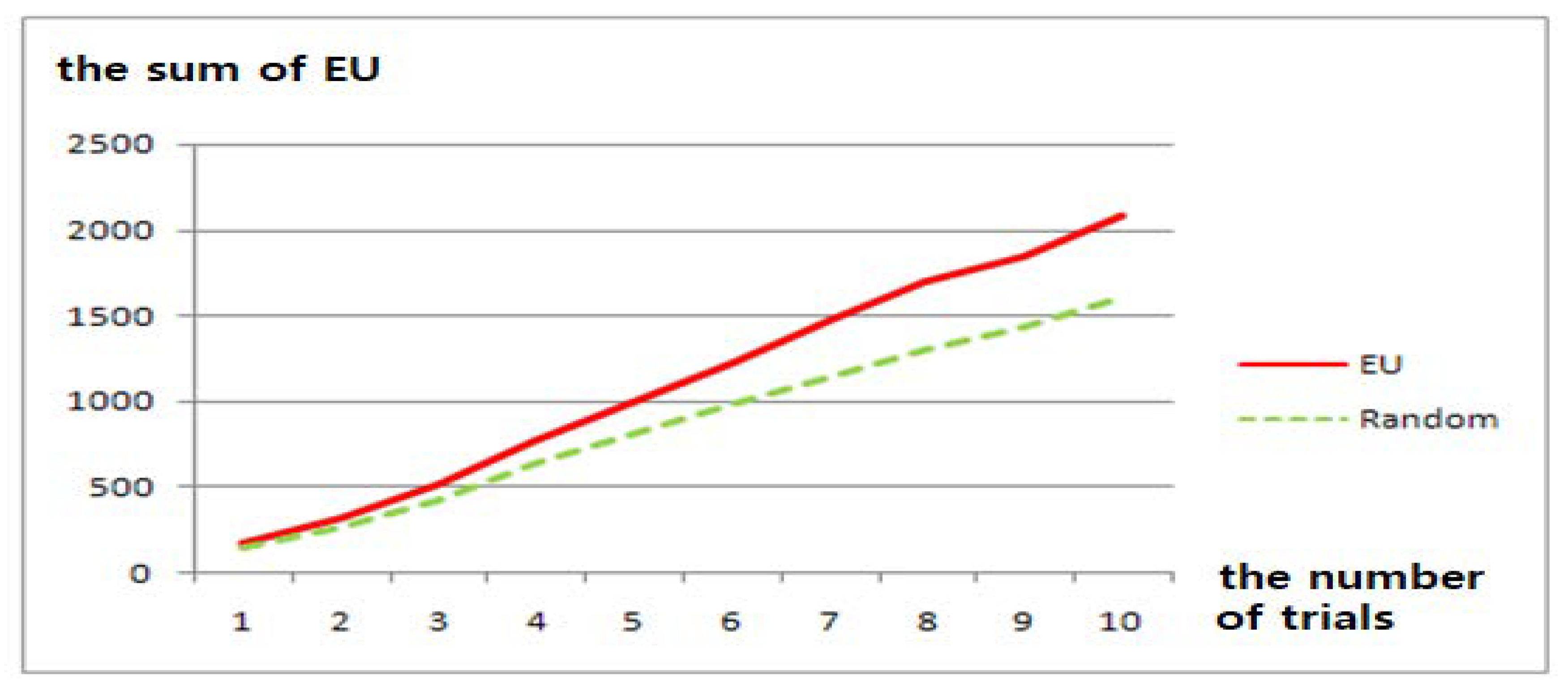

5.3. Rational Decision-Making for Target Allocation

The goal of this experiment was to test the target allocation algorithm for multiple coordinated robots, as described in (4). For target allocation, the performance of our decision-theoretic agents was compared with that of agents that randomly chose targets in scenarios with four surveillance robots and four moving targets. We set up 10 different scenarios using the simulator, as depicted in Figure 3, and measured the agents’ performance under the two strategies for 10 repetitions of each scenario. The agents’ performance is represented by the sum of expected utilities when they made their decisions regarding target selection. The experimental result is summarized in Figure 5.

After the 100 total trials for the 10 scenarios, the cumulative sum of expected utilities computed by our agents’ target selection was

higher than that of the agents using the random strategy. The more accurate target allocation of robots R and targets T with the number of robots m and the number of targets n, as described in (4), allowed our decision-theoretic agents to dynamically choose their appropriate targets in real time. We found that the difference in performance between the agents with the two target allocation strategies became clearer when the number of surveillance robots and targets increased from two robots and two targets to four robots and four targets. This experimental result showed that the performance of the robots using a random strategy fell further behind that of our agents as the complexity of situations increased.

6. Conclusions

Here, we proposed a set of fusion operators to combine multi-sensor data from military robots and a framework of coordinated target allocation for surveillance robot agents, and implemented a scenario-based simulator to repeatedly assess the intelligent fusion process and the coordinated target selection in battlefield environments. After confirming the estimation capabilities of robot agents using the unit simulator, we plugged it into an integrated simulator that displays interactions between the surveillance robot agents and a group of targets. The integrated battlefield simulator had multiple targets moving on pre-planned paths, which enabled our agents to verify their target type estimation performance and to test their coordinated capabilities regarding target selection in various battlefield situations.

Military surveillance robots search for possible threats among a set of targets. In addition to the paths followed by the targets, the position and number of obstacles can also be programmed in advance to test whether the robots can track threats and communicate the results of fusion processing even when they momentarily have no line of sight to these targets. We developed our simulator so that it could create simulated uncertain battlefield environments in which the autonomy of military robots could be repeatedly tested with respect to their rational decision-making for target identification and their intelligent coordination in continuously tracking the subsequent movements of targets. Using our unmanned surveillance simulator as a test bed, we evaluated our agents’ performance in data fusion and coordination. We found that our agents could flexibly apply aggregation operators for multi-sensed data fusion, and that our decision-theoretic agents outperformed agents applying a random strategy for the task of target coordination.

The development of this intelligent fusion and coordination simulator contributes to setting up specific scenarios including realistic moving vehicles, obstacles, and distributed surveillance robots with sensors, highlighting the iterative evaluation and verification of our friendly agents’ data fusion and coordination capabilities based upon the identification of hostile moving vehicles. For future research, the proposed framework in our simulator could be applied to real battlefield situations in which the commander of the control center communicates with multiple surveillance robots, fuses their sensed data, and assigns the best target to monitor. We will extend the intelligent fusion and coordination simulator to more complicated environments, in which our agents move on land, sea, or air space and perceive data using multiple sensors while continuously moving. Our agents should be fully autonomous for situation awareness and should adaptively respond to the ever-changing real situation.