Balancing Distributed Key-Value Stores with Efficient In-Network Redirecting

Abstract

1. Introduction

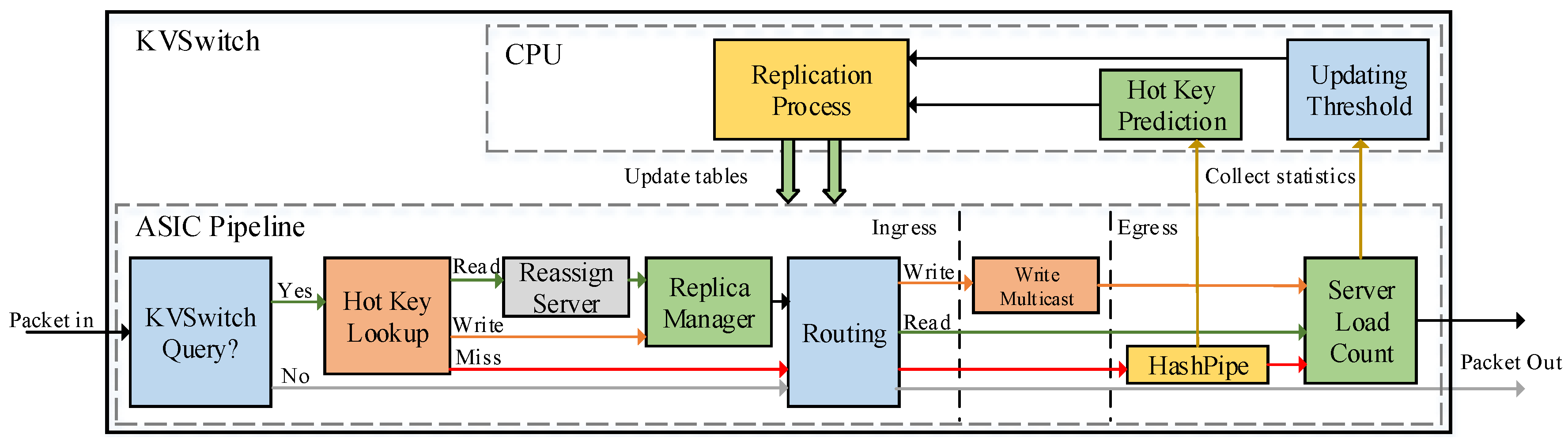

- We design the KVSwitch, an in-network balancer that uses programmable switches to provide dynamic load balancing for KVS without the need for additional hardware (Section 3).

- We devise a replication strategy that dynamically identifies hot items from the changing workload, which can significantly reduce unnecessary replicas (Section 4.1).

- We design a packet processing pipeline that efficiently detects and redirects queries for hot items and accurately collects the workload statistics (Section 4.2).

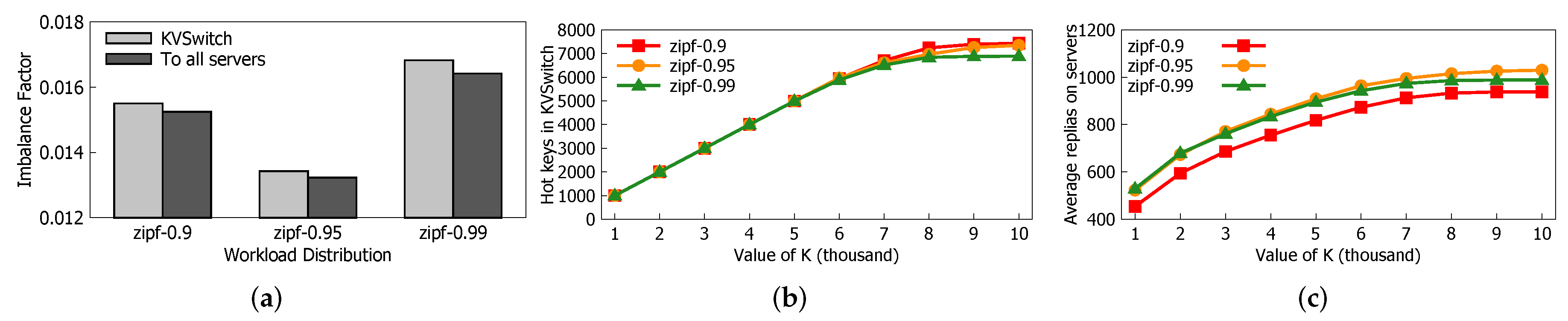

- We prototype KVSwitch in a Tofino switch and perform evaluations on it. The results demonstrate that KVSwitch is able to achieve satisfactory load balance with only resource consumption on servers compared to simply copying hot items to all servers (Section 5).

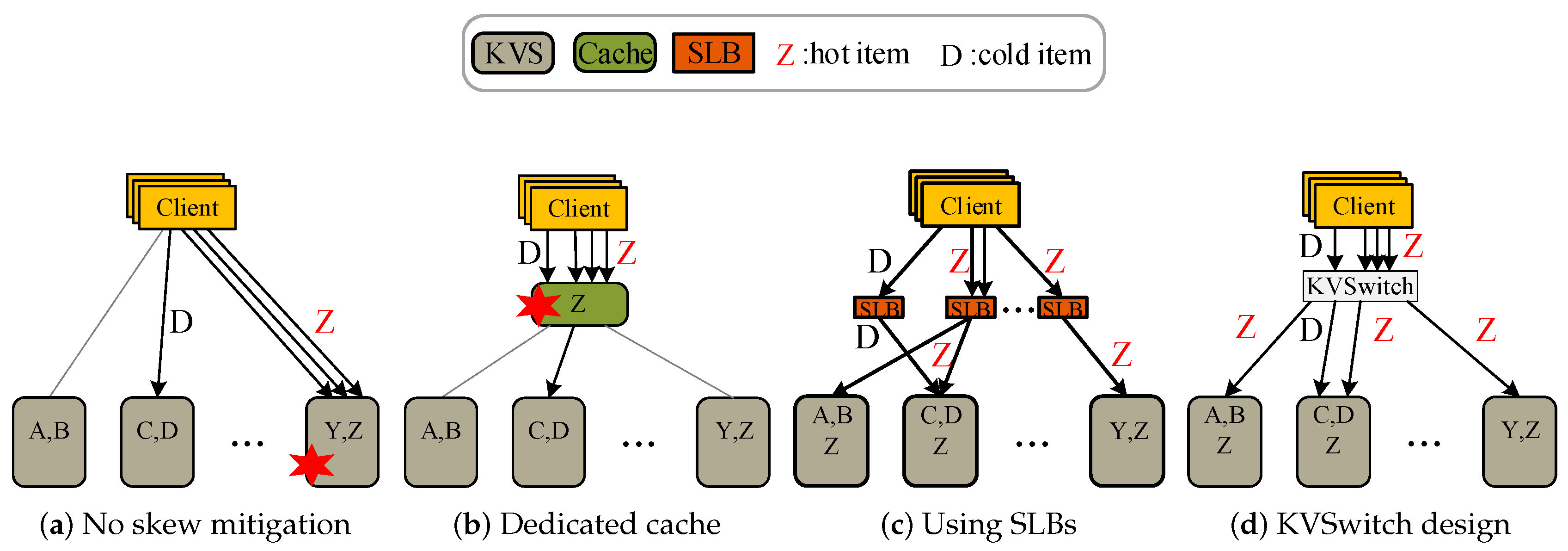

2. Motivation

2.1. Skew and Load Imbalance of KVS

2.2. Existing Solutions for Skew Mitigation

2.3. Programmable Switch

3. KVSwitch Overview

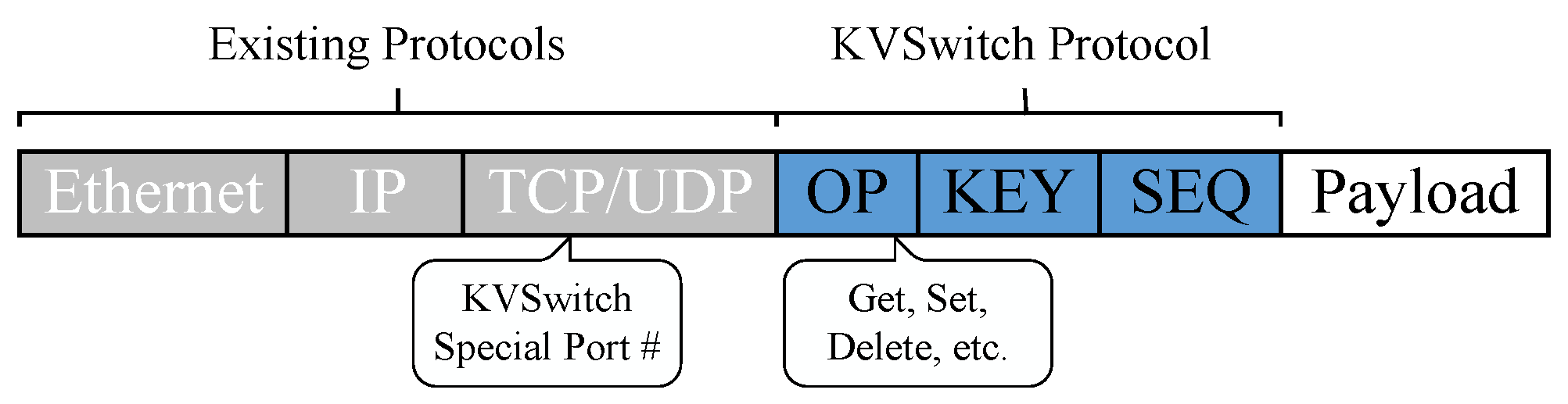

3.1. Network Protocol

3.2. Processing Query

| Algorithm 1: ProcessQuery(pkt) |

|

4. KVSwitch Design

4.1. Functions in CPU

4.1.1. Load Estimation for Next Period

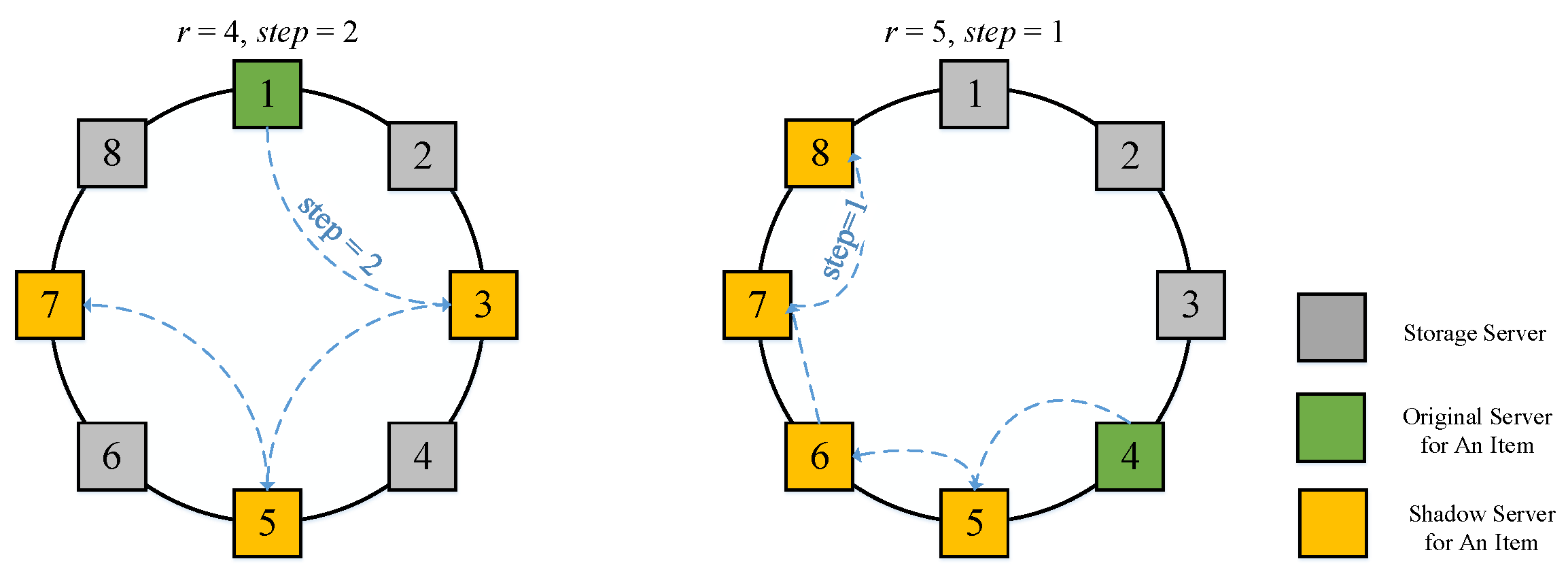

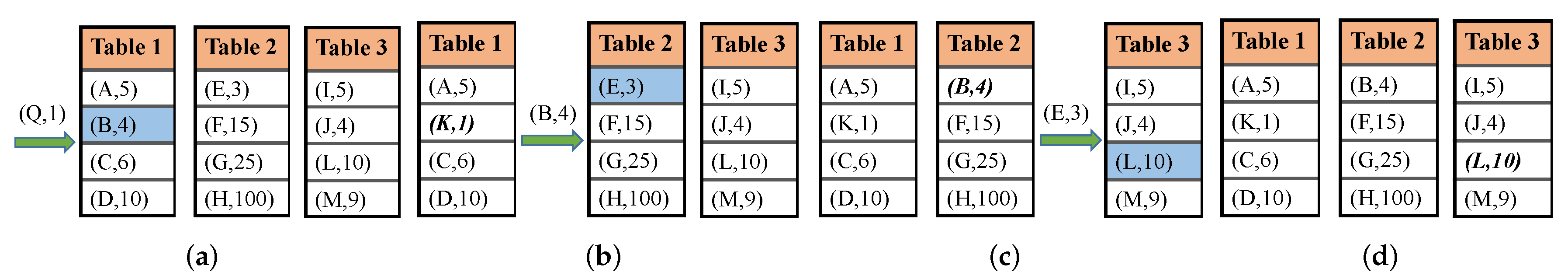

4.1.2. Item Replication

4.1.3. Adaptive Threshold

4.2. Functions in ASIC pipeline

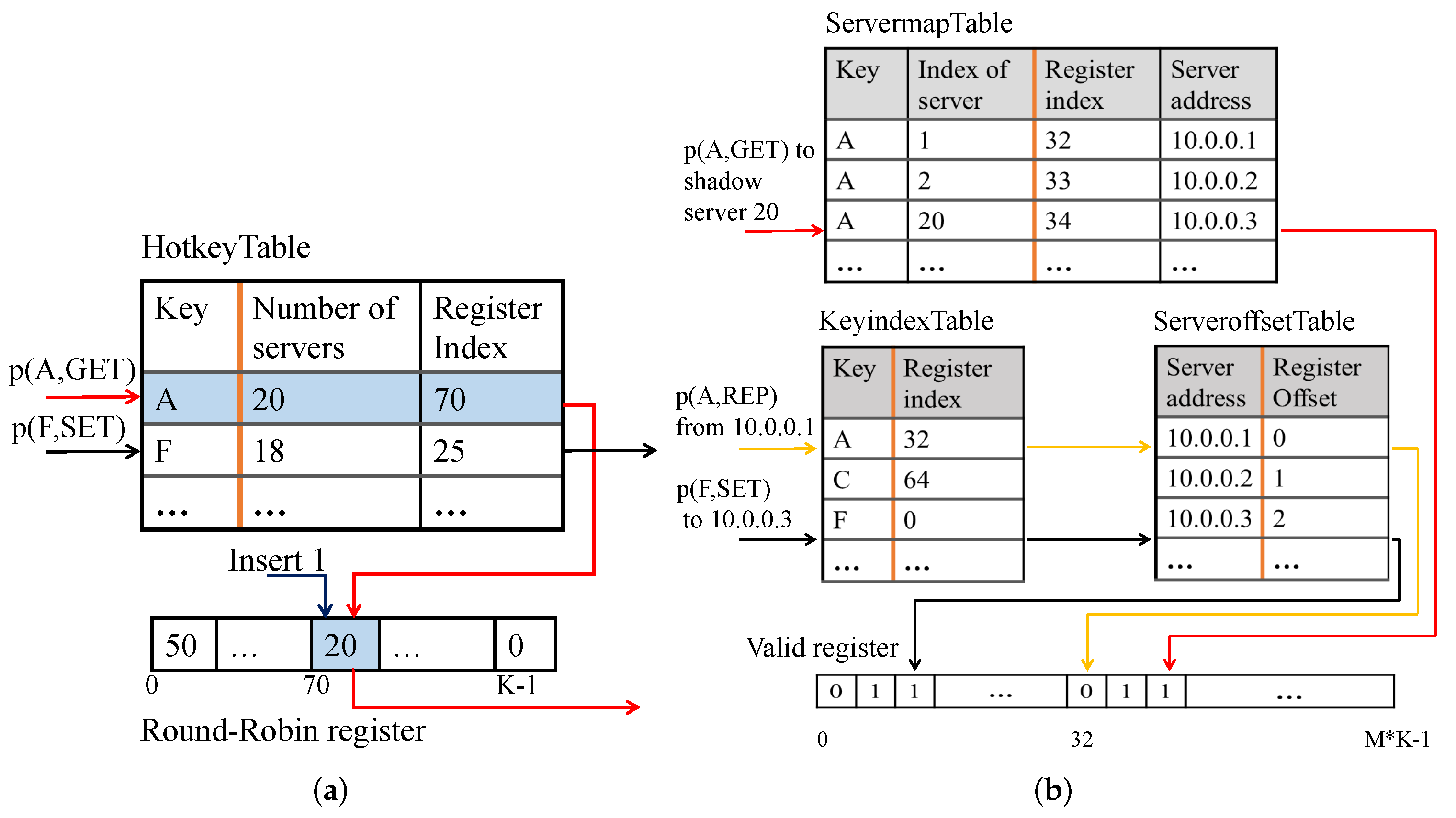

4.2.1. Programming with ASIC Pipeline

4.2.2. Round-Robin Load Balancer

4.2.3. Valid Check and Replica Consistency

4.2.4. Multicast Query

4.2.5. Time-Segmented Top-K Key Tracker

4.2.6. Server Load Statistics

5. Evaluation

5.1. Experiment Setups

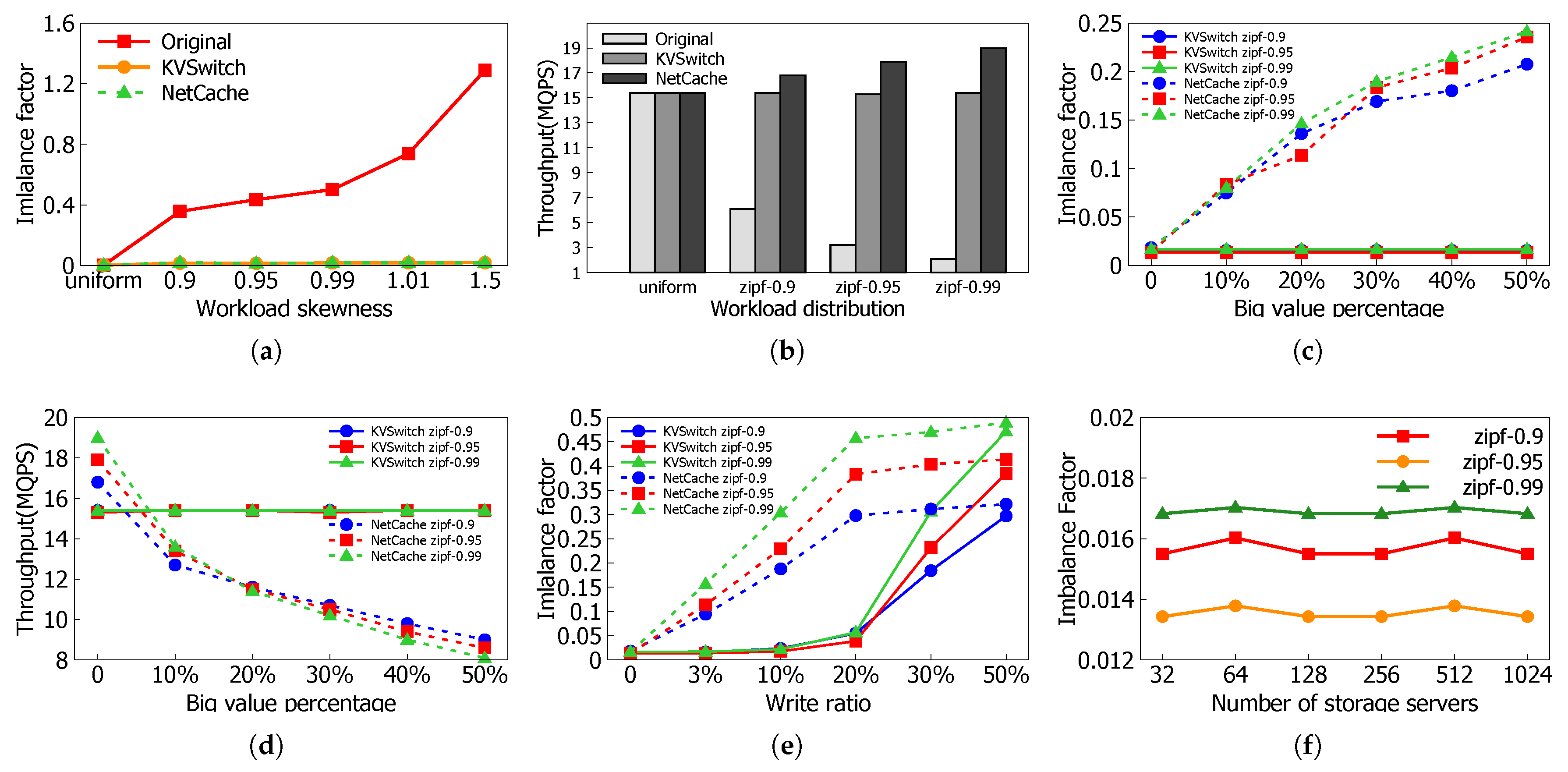

5.2. Performance of Load Balance

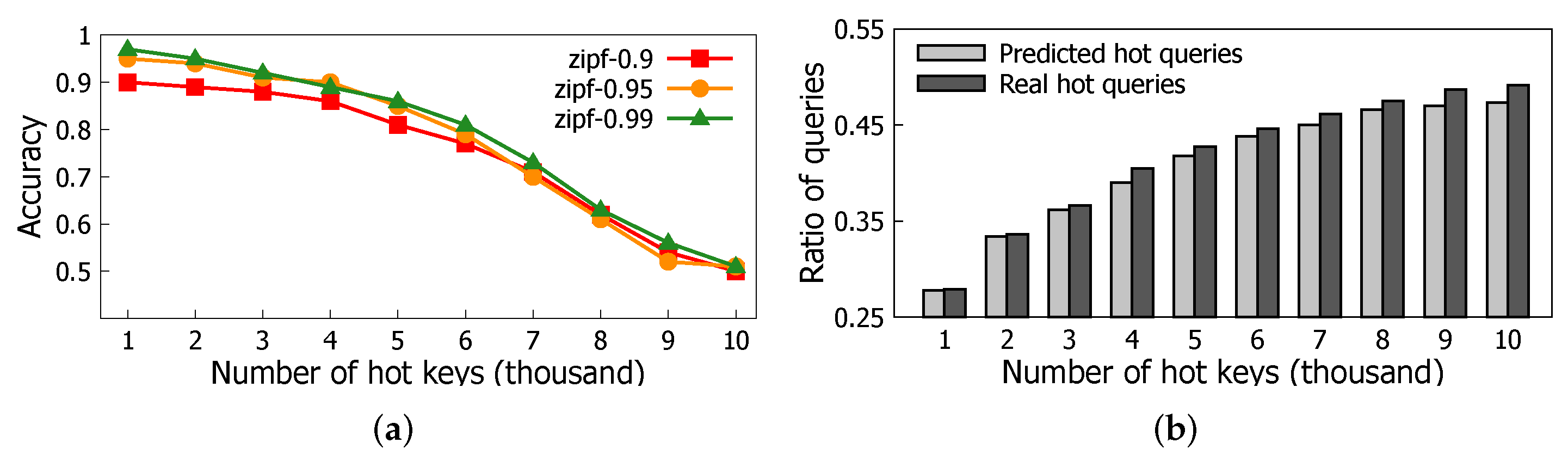

5.3. Accuracy of Hot Key Prediction

5.4. Dynamic Change of the Threshold

5.5. Feasibility Verification

6. Related Work

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| KVS | Key-value Stores |

| SLB | Software Load Balancer |

| ASIC | Application-Specific Integrated Circuit |

| CPU | Central Processing Unit |

| ToR | Top-of-Rack |

| RMT | Reconfigurable Match-action Tables |

| API | Application Programming Interface |

| UDP | User Datagram Protocol |

| TCP | Transmission Control Protocol |

| LRU | Least Recently Used |

| LFU | Least Frequently Used |

| DSL | Domain-Specific Language |

| P4 | Programming Protocol-Independent Packet Processors |

| NIC | Network Interface Card |

| QPS | Queries per Second |

| ALU | Arithmetic Logic Unit |

| SRAM | Static Random Access Memory |

| TCAM | Ternary Content-Addressable Memory |

| VLIW | Very Long Instruction Word |

| QoS | Quality of Service |

| GPU | Graphics Processing Unit |

References

- Nishtala, R.; Fugal, H.; Grimm, S.; Kwiatkowski, M.; Lee, H.; Li, H.C.; McElroy, R.; Paleczny, M.; Peek, D.; Saab, P.; et al. Scaling Memcache at Facebook. In Proceedings of the NSDI, Lombard, IL, USA, 2–5 April 2013; Volume 13, pp. 385–398. [Google Scholar]

- Denning, P.J. The Locality Principle. In Communication Networks And Computer Systems: A Tribute to Professor Erol Gelenbe; World Scientific: Singapore, 2006; pp. 43–67. [Google Scholar] [CrossRef]

- Alibaba. Tair Key-Value System in Alibaba. 2018. Available online: https://m.aliyun.com/yunqi/articles/316466? (accessed on 7 November 2018).

- Karger, D.; Lehman, E.; Leighton, T.; Panigrahy, R.; Levine, M.; Lewin, D. Consistent hashing and random trees: Distributed caching protocols for relieving hot spots on the World Wide Web. In Proceedings of the Twenty-Ninth Annual ACM Symposium on Theory of Computing, El Paso, TX, USA, 4–6 May 1997; ACM: New York, NY, USA, 1997; pp. 654–663. [Google Scholar] [CrossRef]

- Cooper, B.F.; Silberstein, A.; Tam, E.; Ramakrishnan, R.; Sears, R. Benchmarking cloud serving systems with YCSB. In Proceedings of the 1st ACM Symposium on Cloud Computing, Indianapolis, IN, USA, 10–11 June 2010; ACM: New York, NY, USA, 2010; pp. 143–154. [Google Scholar] [CrossRef]

- Huang, Q.; Gudmundsdottir, H.; Vigfusson, Y.; Freedman, D.A.; Birman, K.; van Renesse, R. Characterizing load imbalance in real-world networked caches. In Proceedings of the 13th ACM Workshop on Hot Topics in Networks, Angeles, CA, USA, 27–28 October 2014; ACM: New York, NY, USA, 2014; p. 8. [Google Scholar] [CrossRef]

- Novakovic, S.; Daglis, A.; Bugnion, E.; Falsafi, B.; Grot, B. An Analysis of Load Imbalance in Scale-out Data Serving. In ACM SIGMETRICS Performance Evaluation Review; ACM: New York, NY, USA, 2016; Volume 44, pp. 367–368. [Google Scholar] [CrossRef]

- Jin, X.; Li, X.; Zhang, H.; Soulé, R.; Lee, J.; Foster, N.; Kim, C.; Stoica, I. NetCache: Balancing Key-Value Stores with Fast In-Network Caching. In Proceedings of the 26th Symposium on Operating Systems Principles, Shanghai, China, 28–31 October 2017; ACM: New York, NY, USA, 2017; pp. 121–136. [Google Scholar] [CrossRef]

- Li, X.; Sethi, R.; Kaminsky, M.; Andersen, D.G.; Freedman, M.J. Be Fast, Cheap and in Control with SwitchKV. In Proceedings of the NSDI, Santa Clara, CA, USA, 16–18 March 2016; pp. 31–44. [Google Scholar]

- Fan, B.; Lim, H.; Andersen, D.G.; Kaminsky, M. Small cache, big effect: Provable load balancing for randomly partitioned cluster services. In Proceedings of the 2nd ACM Symposium on Cloud Computing, Cascais, Portugal, 26–28 October 2011; ACM: New York, NY, USA, 2011; p. 23. [Google Scholar] [CrossRef]

- Gavrielatos, V.; Katsarakis, A.; Joshi, A.; Oswald, N.; Grot, B.; Nagarajan, V. Scale-out ccNUMA: Exploiting skew with strongly consistent caching. In Proceedings of the Thirteenth EuroSys Conference, Porto, Portugal, 23–26 April 2018; ACM: New York, NY, USA, 2018; p. 21. [Google Scholar] [CrossRef]

- Zhang, W.; Wood, T.; Hwang, J. Netkv: Scalable, self-managing, load balancing as a network function. In Proceedings of the 2016 IEEE International Conference on Autonomic Computing (ICAC), Wurzburg, Germany, 17–22 July 2016; pp. 5–14. [Google Scholar] [CrossRef]

- Barefoot. Tofino: Programmable Switch up to 6.5 Tbps. 2017. Available online: https://barefootnetworks.com/products/brief-tofino/ (accessed on 7 November 2018).

- Yang, S.; Jiawei, F.; Mei, W.; Chunyuan, Z. KVSwitch: An In-network Load Balancer for Key-Value Stores. In Proceedings of the 2019 IEEE Symposium on Computers and Communications (ISCC), Barcelona, Spain, 30 June–3 July 2019. [Google Scholar]

- Yang, Y.; Zhu, J. Write Skew and Zipf Distribution: Evidence and Implications; ACM: New York, NY, USA, 2016; Volume 12, p. 21. [Google Scholar] [CrossRef]

- Hong, Y.J.; Thottethodi, M. Understanding and mitigating the impact of load imbalance in the memory caching tier. In Proceedings of the 4th annual Symposium on Cloud Computing, Santa Clara, CA, USA, 1–3 October 2013; ACM: New York, NY, USA, 2013; p. 13. [Google Scholar] [CrossRef]

- Bosshart, P.; Daly, D.; Gibb, G.; Izzard, M.; McKeown, N.; Rexford, J.; Schlesinger, C.; Talayco, D.; Vahdat, A.; Varghese, G.; et al. P4: Programming Protocol-Independent Packet Processors; ACM: New York, NY, USA, 2014; Volume 44, pp. 87–95. [Google Scholar] [CrossRef]

- Berenbrink, P.; Friedetzky, T.; Hu, Z.; Martin, R. On Weighted Balls-Into-Bins Games; Elsevier: Amsterdam, The Netherlands, 2008; Volume 409, pp. 511–520. [Google Scholar]

- Group, P. Behavioral Model. 2017. Available online: https://github.com/p4lang/behavioral-model (accessed on 7 November 2018).

- Group, P. Multicast in P4 Behavioral Model. 2018. Available online: https://github.com/p4lang/tutorials/issues/22 (accessed on 7 November 2018).

- Sivaraman, V.; Narayana, S.; Rottenstreich, O.; Muthukrishnan, S.; Rexford, J. Heavy-hitter detection entirely in the data plane. In Proceedings of the Symposium on SDN Research; ACM: New York, NY, USA, 2017; pp. 164–176. [Google Scholar] [CrossRef]

- Emmerich, P.; Gallenmüller, S.; Raumer, D.; Wohlfart, F.; Carle, G. MoonGen: A Scriptable High-Speed Packet Generator. In Proceedings of the Internet Measurement Conference 2015 (IMC’15), Tokyo, Japan, 28–30 October 2015. [Google Scholar] [CrossRef]

- Pearce, O.; Gamblin, T.; De Supinski, B.R.; Schulz, M.; Amato, N.M. Quantifying the effectiveness of load balance algorithms. In Proceedings of the 26th ACM International Conference on Supercomputing, Venice, Italy, 25–29 June 2012; ACM: New York, NY, USA, 2012; pp. 185–194. [Google Scholar] [CrossRef]

- Lai, C.; Jiang, S.; Yang, L.; Lin, S.; Sun, G.; Hou, Z.; Cui, C.; Cong, J. Atlas: Baidu’s key-value storage system for cloud data. In Proceedings of the 2015 31st Symposium on Mass Storage Systems and Technologies (MSST), Santa Clara, CA, USA, 30 May–5 June 2015; pp. 1–14. [Google Scholar] [CrossRef]

- Group, P. A P4 Data Plane of an L2/L3 switch. 2018. Available online: https://github.com/p4lang/switch/tree/master/p4src (accessed on 7 November 2018).

- Dragojević, A.; Narayanan, D.; Hodson, O.; Castro, M. FaRM: Fast remote memory. In Proceedings of the 11th USENIX Conference on Networked Systems Design and Implementation, Seattle, WA, USA, 2–4 April 2014; pp. 401–414. [Google Scholar]

- Fan, B.; Andersen, D.G.; Kaminsky, M. MemC3: Compact and Concurrent MemCache with Dumber Caching and Smarter Hashing. In Proceedings of the NSDI, Lombard, IL, USA, 2–5 April 2013; Volume 13, pp. 371–384. [Google Scholar]

- Kalia, A.; Kaminsky, M.; Andersen, D.G. Using RDMA Efficiently for Key-Value Services. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2014; Volume 44, pp. 295–306. [Google Scholar] [CrossRef]

- Kaminsky, A.K.M.; Andersen, D.G. Design guidelines for high performance RDMA systems. In Proceedings of the 2016 USENIX Annual Technical Conference, Denver, CO, USA, 22–24 June 2016; p. 437. [Google Scholar]

- Li, S.; Lim, H.; Lee, V.W.; Ahn, J.H.; Kalia, A.; Kaminsky, M.; Andersen, D.G.; Seongil, O.; Lee, S.; Dubey, P. Architecting to Achieve a Billion Requests per Second Throughput on a Single Key-Value Store Server Platform. In ACM SIGARCH Computer Architecture News; ACM: New York, NY, USA, 2015; Volume 43, pp. 476–488. [Google Scholar]

- Lim, H.; Han, D.; Andersen, D.G.; Kaminsky, M. MICA: A holistic approach to fast in-memory key-value storage. In Proceedings of the USENIX, Philadelphia, PA, USA, 19–20 June 2014. [Google Scholar]

- Lim, K.; Meisner, D.; Saidi, A.G.; Ranganathan, P.; Wenisch, T.F. Thin servers with smart pipes: Designing SoC accelerators for memcached. In ACM SIGARCH Computer Architecture News; ACM: New York, NY, USA, 2013; Volume 41, pp. 36–47. [Google Scholar] [CrossRef]

- Andersen, D.G.; Franklin, J.; Kaminsky, M.; Phanishayee, A.; Tan, L.; Vasudevan, V. FAWN: A fast array of wimpy nodes. In Proceedings of the ACM SIGOPS 22nd Symposium on Operating Systems Principles, Big Sky, MT, USA, 11–14 October 2009; ACM: New York, NY, USA, 2009; pp. 1–14. [Google Scholar] [CrossRef]

- Dabek, F.; Kaashoek, M.F.; Karger, D.; Morris, R.; Stoica, I. Wide-Area Cooperative Storage with CFS. In ACM SIGOPS Operating Systems Review; ACM: New York, NY, USA, 2001; Volume 35, pp. 202–215. [Google Scholar] [CrossRef]

- DeCandia, G.; Hastorun, D.; Jampani, M.; Kakulapati, G.; Lakshman, A.; Pilchin, A.; Sivasubramanian, S.; Vosshall, P.; Vogels, W. Dynamo: Amazon’s Highly Available Key-Value Store. In ACM SIGOPS oPerating Systems Review; ACM: New York, NY, USA, 2007; Volume 41, pp. 205–220. [Google Scholar]

- Singh, A.; Ong, J.; Agarwal, A.; Anderson, G.; Armistead, A.; Bannon, R.; Boving, S.; Desai, G.; Felderman, B.; Germano, P.; et al. Jupiter Rising: A Decade of Clos Topologies and Centralized Control in Google’S Datacenter Network. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2015; Volume 45, pp. 183–197. [Google Scholar] [CrossRef]

- CAVIUM. XPliant Ethernet Switch Product Family. 2017. Available online: https://www.cavium.com/xpliant-ethernet-switch-product-family.html (accessed on 7 November 2018).

- Ozdag, R. Intel® Ethernet Switch FM6000 Series-Software Defined Networking. See goo.gl/AnvOvX, 2012; 5. [Google Scholar]

- Sivaraman, A.; Cheung, A.; Budiu, M.; Kim, C.; Alizadeh, M.; Balakrishnan, H.; Varghese, G.; McKeown, N.; Licking, S. Packet transactions: High-level programming for line-rate switches. In Proceedings of the 2016 ACM SIGCOMM Conference, Florianopolis, Brazil, 22–26 August 2016; ACM: New York, NY, USA, 2016; pp. 15–28. [Google Scholar] [CrossRef]

- Ghasemi, M.; Benson, T.; Rexford, J. Dapper: Data plane performance diagnosis of tcp. In Proceedings of the Symposium on SDN Research, Santa Clara, CA, USA, 3–4 April 2017; ACM: New York, NY, USA, 2017; pp. 61–74. [Google Scholar] [CrossRef]

- Narayana, S.; Sivaraman, A.; Nathan, V.; Goyal, P.; Arun, V.; Alizadeh, M.; Jeyakumar, V.; Kim, C. Language-directed hardware design for network performance monitoring. In Proceedings of the Conference of the ACM Special Interest Group on Data Communication, Los Angeles, CA, USA, 21–25 August 2017; ACM: New York, NY, USA, 2017; pp. 85–98. [Google Scholar] [CrossRef]

- Dang, H.T.; Canini, M.; Pedone, F.; Soulé, R. Paxos Made Switch-y; ACM: New York, NY, USA, 2016; Volume 46, pp. 18–24. [Google Scholar] [CrossRef]

- Sapio, A.; Abdelaziz, I.; Aldilaijan, A.; Canini, M.; Kalnis, P. In-Network Computation is a Dumb Idea Whose Time Has Come. In Proceedings of the 16th ACM Workshop on Hot Topics in Networks, Palo Alto, CA, USA, 30 November–1 December 2017; ACM: New York, NY, USA, 2017; pp. 150–156. [Google Scholar] [CrossRef]

| Resource | Switch.p4 | KVSwitch | KVSwitch + Switch.p4 | NetCache |

|---|---|---|---|---|

| Match Crossbar | 50.13% | 7.72% | 57.85% | 45.34% |

| Hash Bits | 32.35% | 17.58% | 49.93% | 30.54% |

| SRAM | 29.79% | 38.43% | 68.22% | 48.98% |

| TCAM | 28.47% | 0.00% | 28.47% | 34.21% |

| VLIW Actions | 34.64% | 8.26% | 42.9% | 37.85% |

| Stateful ALUs | 15.63% | 24.61% | 40.24% | 16.74% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, Y.; Fei, J.; Wen, M.; Zhang, C. Balancing Distributed Key-Value Stores with Efficient In-Network Redirecting. Electronics 2019, 8, 1008. https://doi.org/10.3390/electronics8091008

Shi Y, Fei J, Wen M, Zhang C. Balancing Distributed Key-Value Stores with Efficient In-Network Redirecting. Electronics. 2019; 8(9):1008. https://doi.org/10.3390/electronics8091008

Chicago/Turabian StyleShi, Yang, Jiawei Fei, Mei Wen, and Chunyuan Zhang. 2019. "Balancing Distributed Key-Value Stores with Efficient In-Network Redirecting" Electronics 8, no. 9: 1008. https://doi.org/10.3390/electronics8091008

APA StyleShi, Y., Fei, J., Wen, M., & Zhang, C. (2019). Balancing Distributed Key-Value Stores with Efficient In-Network Redirecting. Electronics, 8(9), 1008. https://doi.org/10.3390/electronics8091008