SCRAS Server-Based Crosslayer Rate-Adaptive Video Streaming over 4G-LTE for UAV-Based Surveillance Applications

Abstract

1. Introduction

- We consider a scenario in which the servers are mobile, i.e., UAVs. They support a variety of codec standards, such as H.264 and H.265, for streaming video to a remote client. Flying along preprogrammed coordinates, they adapt among the available video resolutions, selecting the one that best suits the underlying surveillance application under the current 4G-LTE conditions, so that video viewing QoE is maintained.

- The mobile servers perform server-side, push-based rate adaptation after evaluating the received signal strength and the rate of handovers.

- The proposed rate-adaptive scheme takes a proactive, server-based approach that is entirely independent of client assistance. This keeps streaming fast, so the client does not experience degraded video because of poor signal or handovers.

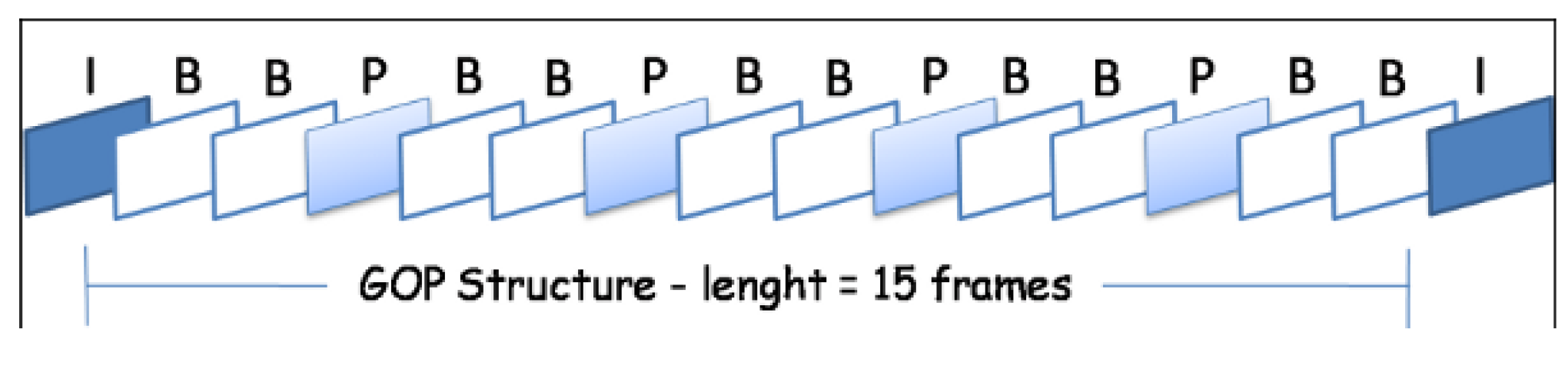

- The proposed scheme ensures that the video does not flicker because of frames damaged during the adaptation process. To this end, it takes groups of pictures (GoPs) into account and defers each adaptation to the end of the current GoP.
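The GoP-aligned deferral described in the last contribution can be illustrated with a minimal Python sketch. It is not the authors' implementation: the class name `GopAlignedSwitcher`, the 30-frame GoP length, and the representation labels passed in are assumptions made only for illustration. The idea it shows is that a switch request arriving mid-GoP is held back and applied at the first frame of the next GoP, so no GoP mixes frames from two encodings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GopAlignedSwitcher:
    """Applies a requested quality change only at a GoP boundary (illustrative)."""

    gop_size: int                  # frames per GoP (hypothetical value set by the caller)
    current: str                   # representation currently being streamed
    pending: Optional[str] = None  # requested representation, not yet applied

    def request(self, target: str) -> None:
        """Record a switch request; it takes effect at the next GoP start."""
        self.pending = target if target != self.current else None

    def on_frame(self, frame_index: int) -> str:
        """Return the representation to use for the frame at frame_index."""
        at_gop_start = frame_index % self.gop_size == 0
        if at_gop_start and self.pending is not None:
            self.current, self.pending = self.pending, None
        return self.current


# Usage: a request made at frame 10 is deferred until frame 30.
switcher = GopAlignedSwitcher(gop_size=30, current="HRV")
used = []
for i in range(90):
    if i == 10:
        switcher.request("LRV")
    used.append(switcher.on_frame(i))
```

In this sketch frames 0 to 29 are all sent from the original representation, and the switch becomes visible only from frame 30 onward.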

2. Related Work

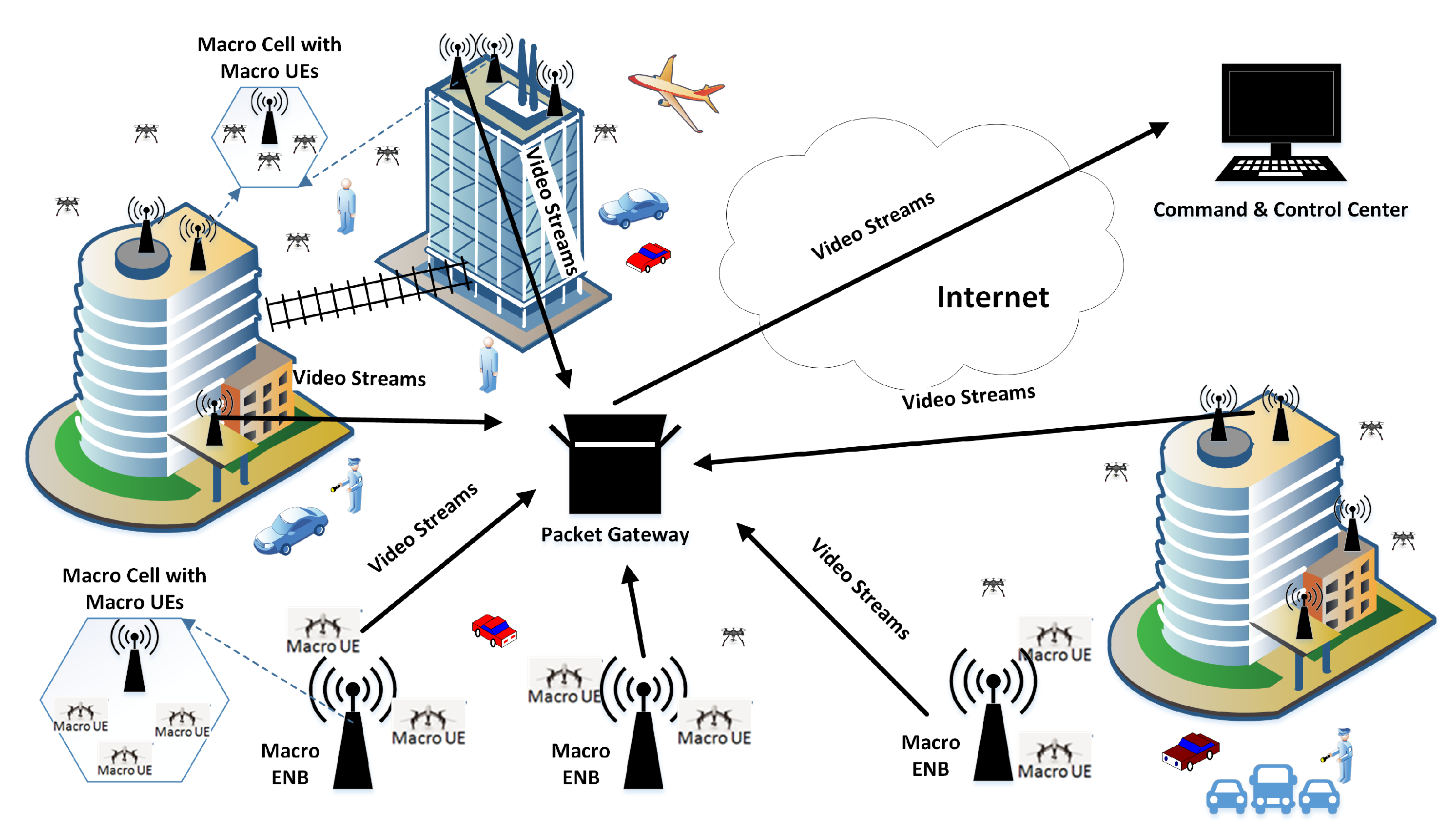

3. Proposed Architecture

3.1. Wireless Signal Propagation Model Used for UAVs

3.2. Mobility Models Used for UAVs

- (i) Random Flight Pattern

- (ii) Fixed Flight Pattern

4. Measuring QoE over Proposed Architecture

5. SCRAS: Server-Based Crosslayer Rate-Adaptive Scheme

Algorithm 1: SCRAS

6. Experimental Environment and Simulation Settings

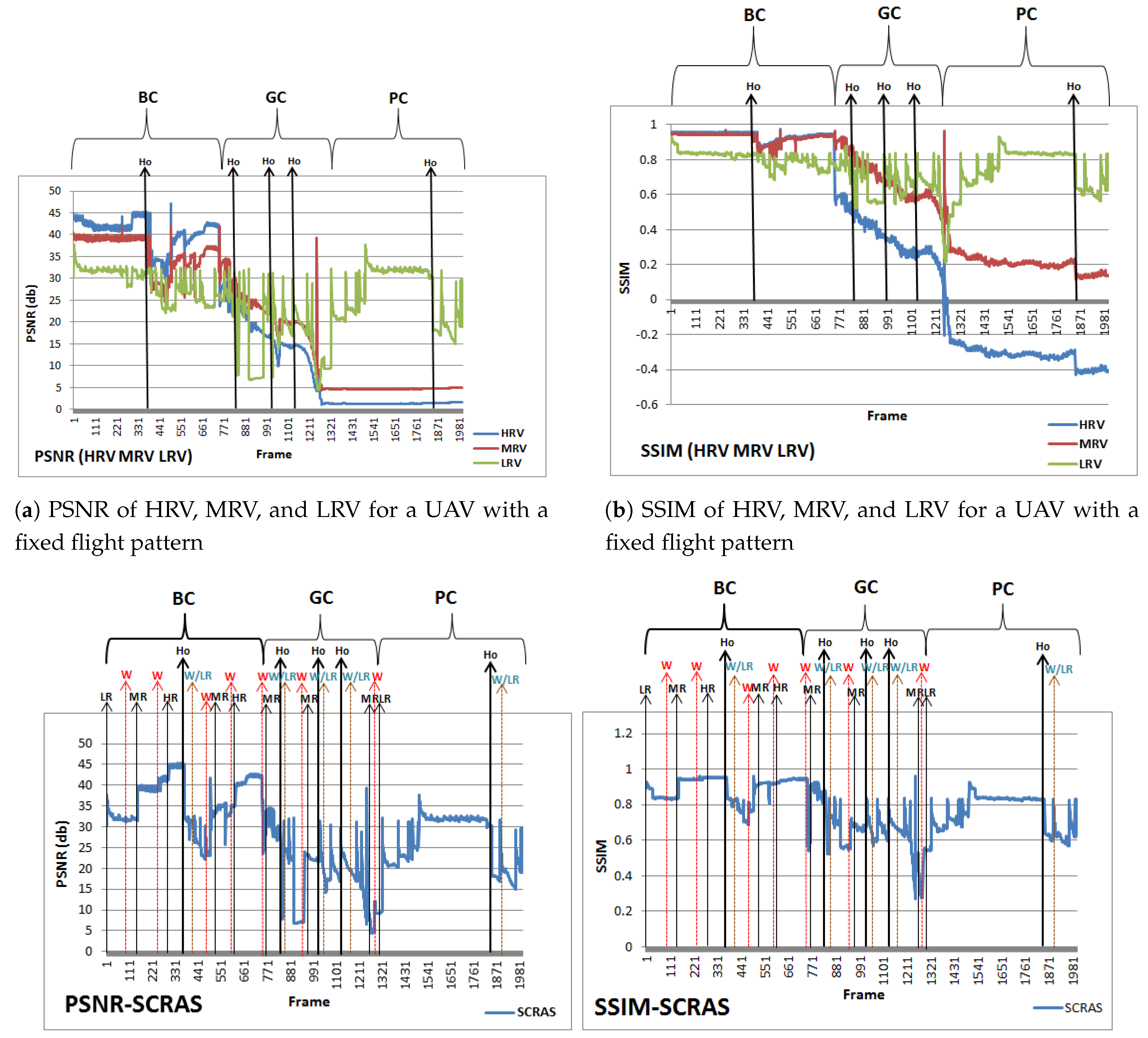

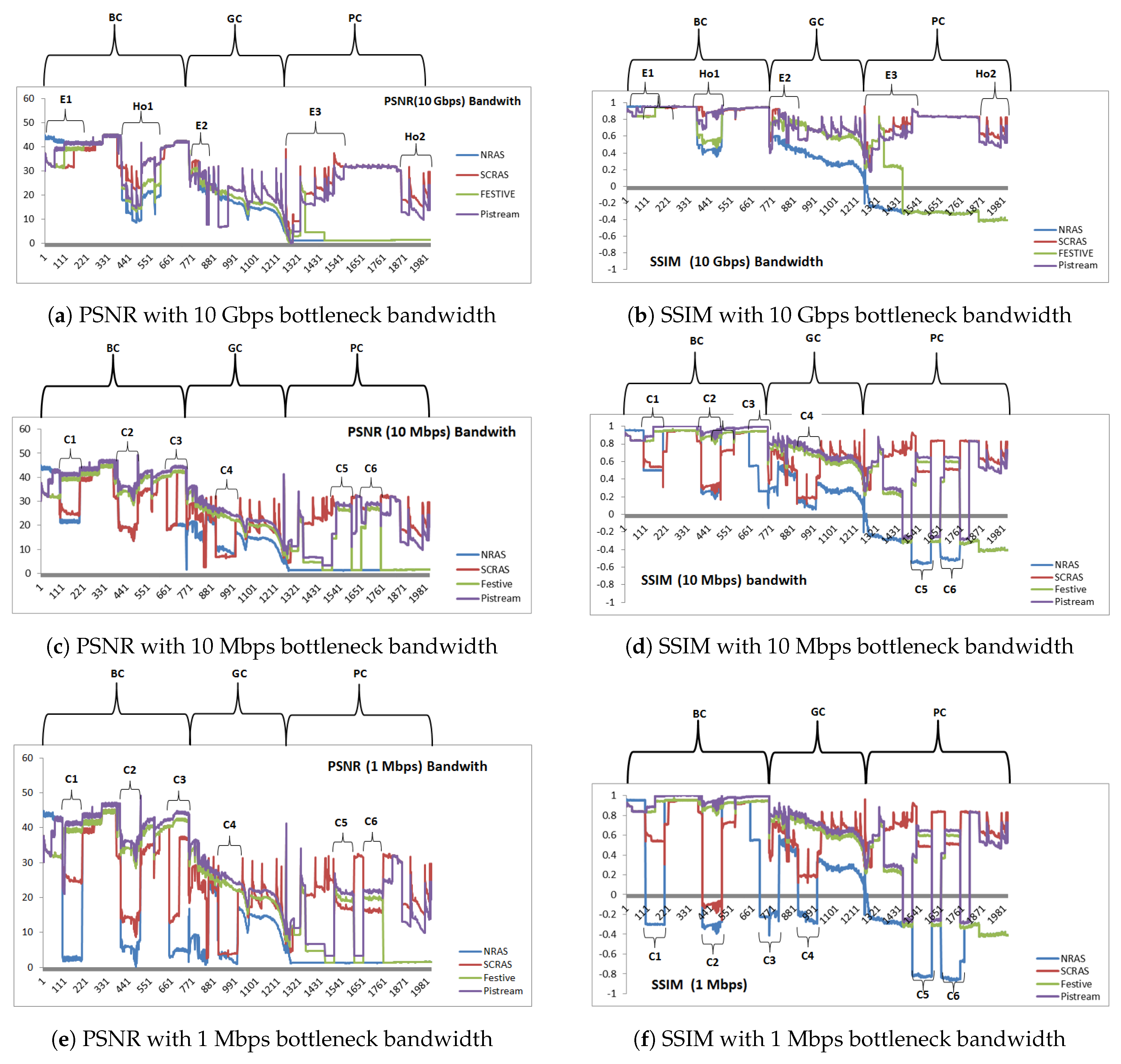

7. Comparative Analysis of SCRAS with Other Schemes

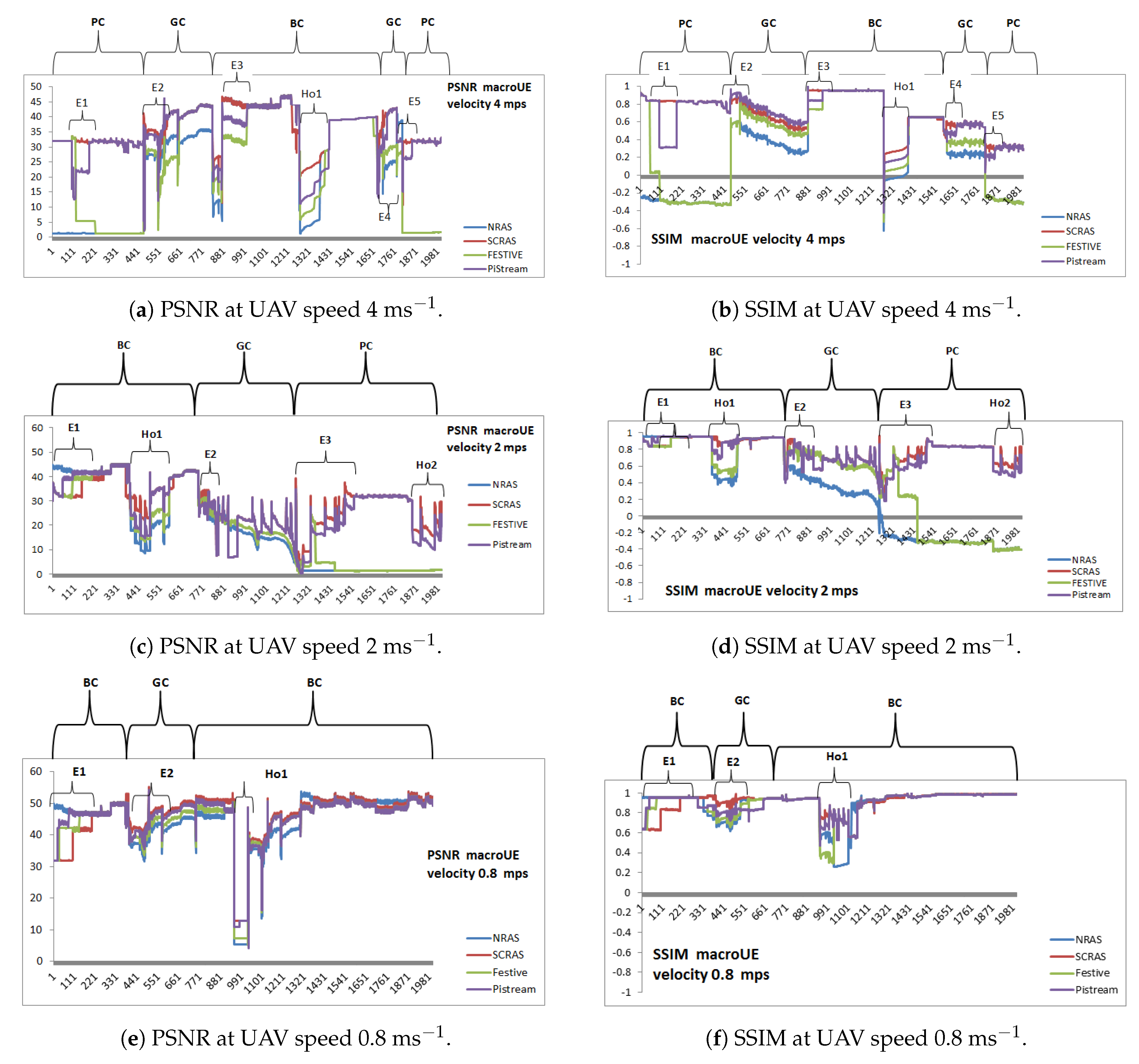

7.1. Impact of UAV Speed

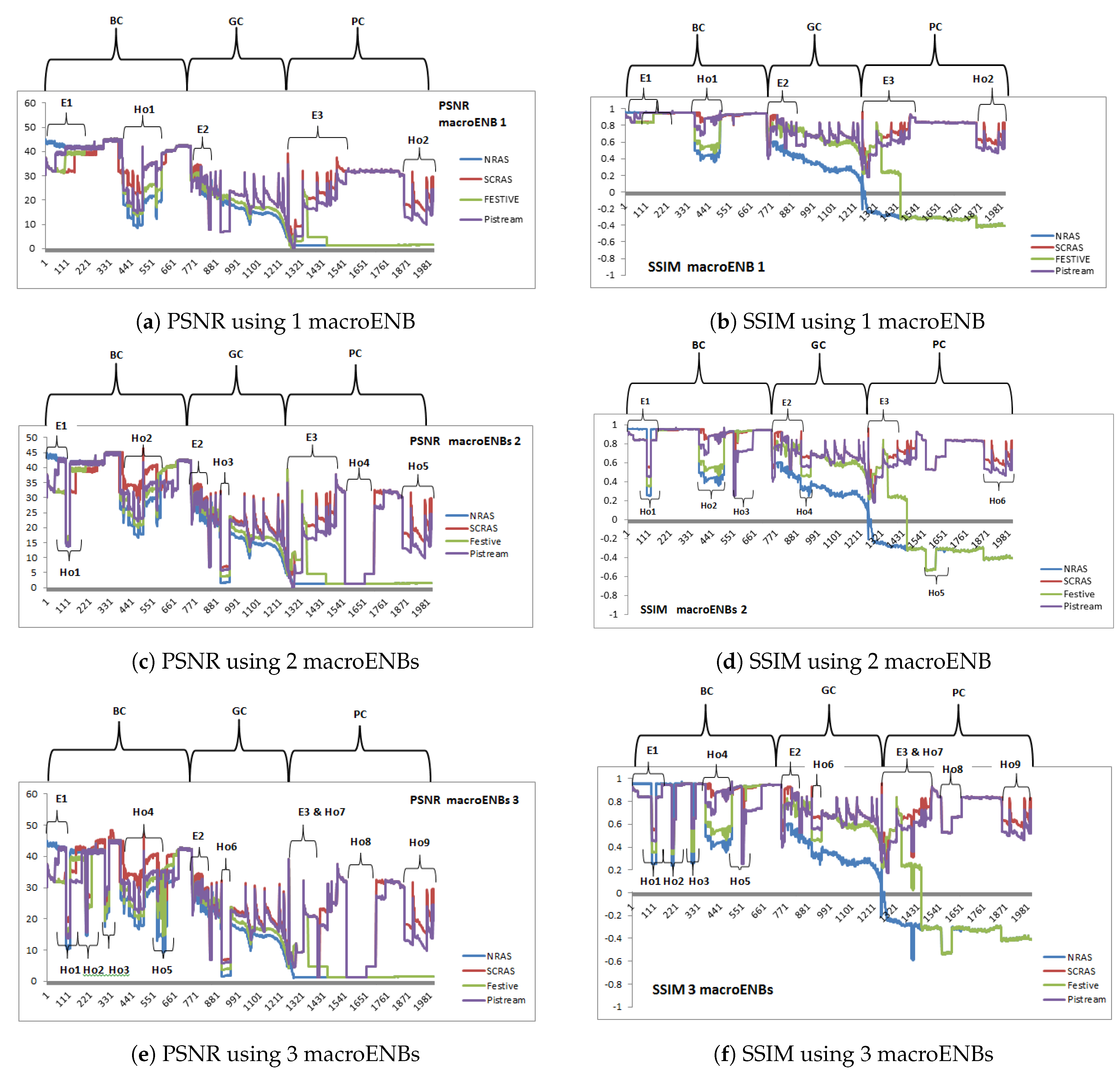

7.2. Impact of Increasing Handovers

7.3. Impact of Congestion in Core Network

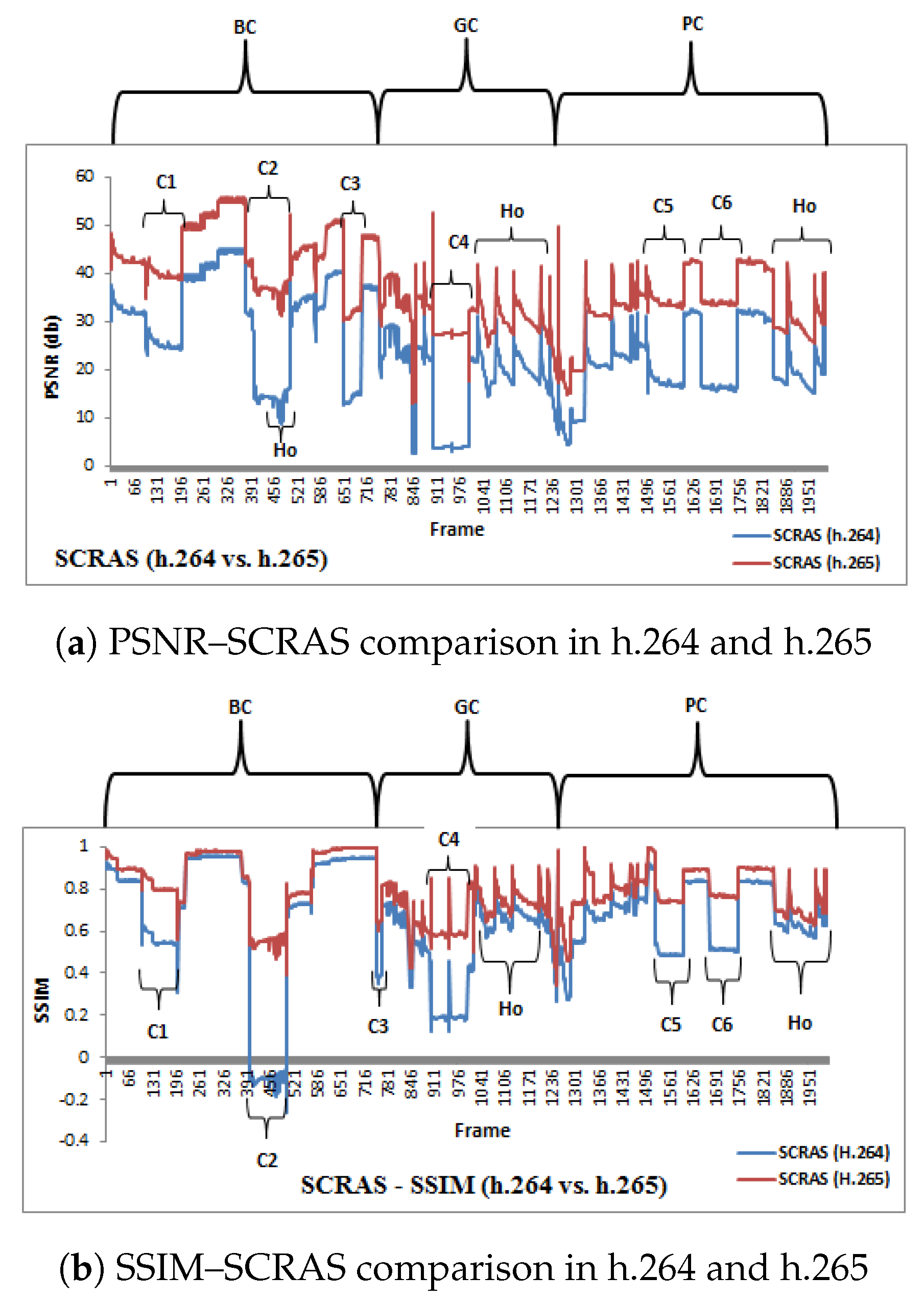

7.4. Impact of Video Encoding Schemes

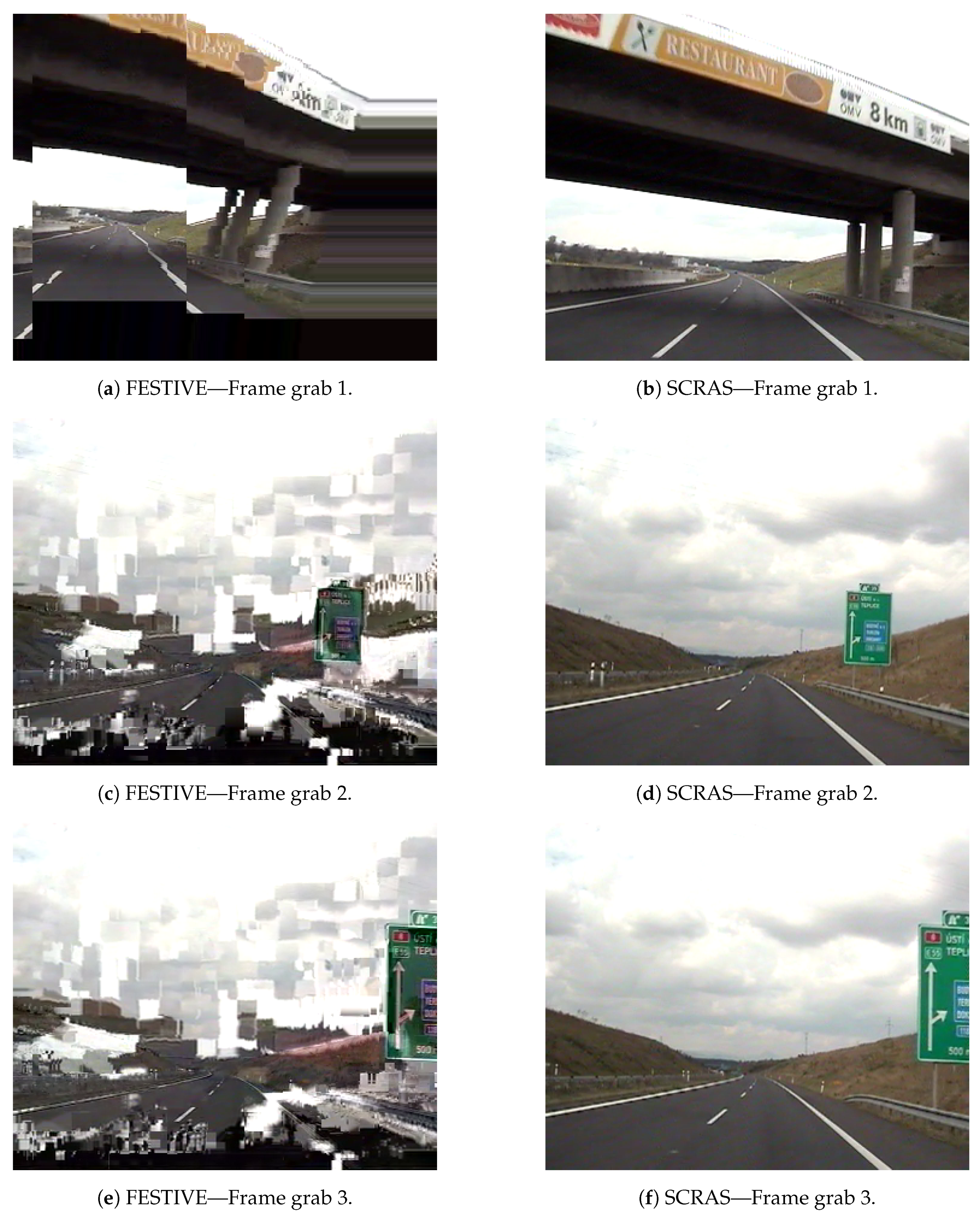

8. Analysis of Frame-Grabs of Streamed Video

9. Outstanding Characteristics of SCRAS

9.1. SCRAS Takes Care of GoP in Rate Adaptation

9.2. SCRAS Outperforms Other Schemes during Handovers in 4G-LTE

9.3. SCRAS Performs Better in Surveillance over 4G-LTE

9.4. SCRAS Impact on Battery Life

10. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References and Notes

- Wang, H.; Huo, D.; Alidaee, B. Position Unmanned Aerial Vehicles in the Mobile Ad Hoc Network. J. Intell. Robot. Syst. 2013, 74, 455–464.

- Merwaday, A.; Guvenc, I. UAV assisted heterogeneous networks for public safety communications. In Proceedings of the 2015 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), New Orleans, LA, USA, 9–12 March 2015; pp. 329–334.

- Cho, J.; Lim, G.; Biobaku, T.; Kim, S.; Parsaei, H. Safety and Security Management with Unmanned Aerial Vehicle (UAV) in Oil and Gas Industry. Procedia Manuf. 2015, 3, 1343–1349.

- Kumar, S.; Hamed, E.; Katabi, D.; Erran Li, L. LTE radio analytics made easy and accessible. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2014; Volume 44, pp. 211–222.

- Becker, N.; Rizk, A.; Fidler, M. A measurement study on the application-level performance of LTE. In Proceedings of the 2014 IFIP Networking Conference, Trondheim, Norway, 2–4 June 2014.

- Nguyen, B.; Banerjee, A.; Gopalakrishnan, V.; Kasera, S.; Lee, S.; Shaikh, A.; der Merwe, J.V. Towards understanding TCP performance on LTE/EPC mobile networks. In Proceedings of the 4th Workshop on All Things Cellular: Operations, Applications, & Challenges, Chicago, IL, USA, 22 August 2014.

- Jin, R. Enhancing Upper-Level Performance from Below: Performance Measurement and Optimization in LTE Networks. Ph.D. Thesis, University of Connecticut, Mansfield, CT, USA, 2015.

- Aljehani, M.; Inoue, M. Performance evaluation of multi-UAV system in post-disaster application: Validated by HITL simulator. IEEE Access 2019, 7, 64386–64400.

- Huo, Y.; Dong, X.; Lu, T.; Xu, W.; Yuen, M. Distributed and Multi-layer UAV Networks for Next-generation Wireless Communication and Power Transfer: A Feasibility Study. IEEE Internet Things J. 2019, 6, 7103–7115.

- Huo, Y.; Dong, X. Millimeter-wave for unmanned aerial vehicles networks: Enabling multi-beam multi-stream communications. arXiv 2018, arXiv:1810.06923.

- Lai, C.C.; Chen, C.T.; Wang, L.C. On-demand density-aware UAV base station 3D placement for arbitrarily distributed users with guaranteed data rates. IEEE Wirel. Commun. Lett. 2019, 8, 913–916.

- Jiang, J.; Sekar, V.; Zhang, H. Improving fairness, efficiency, and stability in HTTP-based adaptive video streaming with FESTIVE. IEEE/ACM Trans. Netw. (ToN) 2014, 22, 326–340.

- Xie, X.; Zhang, X.; Kumar, S.; Li, L.E. piStream: Physical layer informed adaptive video streaming over LTE. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 413–425.

- Najiya, K.; Archana, M. UAV Video Processing for Traffic Surveillence with Enhanced Vehicle Detection. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 662–668.

- Angelov, P.; Sadeghi-Tehran, P.; Clarke, C. AURORA: Autonomous real-time on-board video analytics. Neural Comput. Appl. 2017, 28, 855–865.

- Alsmirat, M.A.; Jararweh, Y.; Obaidat, I.; Gupta, B.B. Automated wireless video surveillance: An evaluation framework. J. Real-Time Image Process. 2017, 13, 527–546.

- Jung, J.; Yoo, S.; La, W.; Lee, D.; Bae, M.; Kim, H. AVSS: Airborne Video Surveillance System. Sensors 2018, 18, 1939.

- Karaki, H.S.A.; Alomari, S.A.; Refai, M.H. A Comprehensive Survey of the Vehicle Motion Detection and Tracking Methods for Aerial Surveillance Videos. IJCSNS 2019, 19, 93.

- Shin, S.Y. UAV Based Search and Rescue with Honeybee Flight Behavior in Forest. In Proceedings of the 5th International Conference on Mechatronics and Robotics Engineering, Rome, Italy, 16–19 February 2019; ACM: New York, NY, USA, 2019; pp. 182–187.

- Mukherjee, A.; Keshary, V.; Pandya, K.; Dey, N.; Satapathy, S.C. Flying Ad hoc Networks: A Comprehensive Survey. In Information and Decision Sciences; Springer: Singapore, 2018; pp. 569–580.

- Bekmezci, I.; Sahingoz, O.K.; Temel, Ş. Flying ad-hoc networks (FANETs): A survey. Ad Hoc Netw. 2013, 11, 1254–1270.

- Mustaqim, M.; Khawaja, B.A.; Razzaqi, A.A.; Zaidi, S.S.H.; Jawed, S.A.; Qazi, S.H. Wideband and high gain antenna arrays for UAV-to-UAV and UAV-to-ground communication in flying ad-hoc networks (FANETs). Microw. Opt. Technol. Lett. 2018, 60, 1164–1170.

- Qazi, S.; Alvi, A.; Qureshi, A.M.; Khawaja, B.A.; Mustaqim, M. An Architecture for Real Time Monitoring Aerial Adhoc Network. In Proceedings of the 2015 13th International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 14–16 December 2015; pp. 154–159.

- Qazi, S.; Siddiqui, A.S.; Wagan, A.I. UAV based real time video surveillance over 4G LTE. In Proceedings of the 2015 International Conference on Open Source Systems & Technologies (ICOSST), Lahore, Pakistan, 17–19 December 2015; pp. 141–145.

- Wang, X. Optimizing Networked Drones and Video Delivery in Wireless Network. Ph.D. Thesis, Princeton University, Princeton, NJ, USA, 2017.

- Halperin, D.; Hu, W.; Sheth, A.; Wetherall, D. Predictable 802.11 packet delivery from wireless channel measurements. ACM SIGCOMM Comput. Commun. Rev. 2010, 40, 159–170.

- Afroz, F.; Subramanian, R.; Heidary, R.; Sandrasegaran, K.; Ahmed, S. SINR, RSRP, RSSI and RSRQ Measurements in Long Term Evolution Networks. Int. J. Wirel. Mob. Netw. 2015, 7, 113–123.

- Nasrabadi, A.T.; Prakash, R. Layer-Assisted Adaptive Video Streaming. In Proceedings of the 28th ACM SIGMM Workshop on Network and Operating Systems Support for Digital Audio and Video (NOSSDAV), Amsterdam, The Netherlands, 12–15 June 2018; pp. 31–36.

- Ramamurthi, V.; Oyman, O. Link aware HTTP Adaptive Streaming for enhanced quality of experience. In Proceedings of the 2013 IEEE Global Communications Conference (GLOBECOM), Atlanta, GA, USA, 9–13 December 2013.

- Marwat, S.N.K.; Meyer, S.; Weerawardane, T.; Goerg, C. Congestion-Aware Handover in LTE Systems for Load Balancing in Transport Network. ETRI J. 2014, 36, 761–771.

- Kua, J.; Armitage, G.; Branch, P. A Survey of Rate Adaptation Techniques for Dynamic Adaptive Streaming Over HTTP. IEEE Commun. Surv. Tutor. 2017, 19, 1842–1866.

- Atawia, R.; Hassanein, H.S.; Noureldin, A. Robust Long-Term Predictive Adaptive Video Streaming Under Wireless Network Uncertainties. IEEE Trans. Wirel. Commun. 2018, 17, 1374–1388.

- Marai, O.E.; Taleb, T.; Menacer, M.; Koudil, M. On Improving Video Streaming Efficiency, Fairness, Stability, and Convergence Time Through Client–Server Cooperation. IEEE Trans. Broadcast. 2018, 64, 11–25.

- Poojary, S.; El-Azouzi, R.; Altman, E.; Sunny, A.; Triki, I.; Haddad, M.; Jimenez, T.; Valentin, S.; Tsilimantos, D. Analysis of QoE for adaptive video streaming over wireless networks. In Proceedings of the 2018 16th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOpt), Shanghai, China, 7–11 May 2018; pp. 1–8.

- Kumar, S.; Sarkar, A.; Sur, A. A resource allocation framework for adaptive video streaming over LTE. J. Netw. Comput. Appl. 2017, 97, 126–139.

- Su, G.M.; Su, X.; Bai, Y.; Wang, M.; Vasilakos, A.V.; Wang, H. QoE in video streaming over wireless networks: Perspectives and research challenges. Wirel. Netw. 2015, 22, 1571–1593.

- Fan, Q.; Yin, H.; Min, G.; Yang, P.; Luo, Y.; Lyu, Y.; Huang, H.; Jiao, L. Video delivery networks: Challenges, solutions and future directions. Comput. Electr. Eng. 2018, 66, 332–341.

- Ong, D.; Moors, T. Deferred discard for improving the quality of video sent across congested networks. In Proceedings of the 38th Annual IEEE Conference on Local Computer Networks, Sydney, NSW, Australia, 21–24 October 2013.

- Narváez, E.A.T.; Bonilla, C.M.H. Handover algorithms in LTE networks for massive means of transport. Sist. Telemát. 2018, 16, 21–36.

- Abdelmohsen, A.; Abdelwahab, M.; Adel, M.; Darweesh, M.S.; Mostafa, H. LTE Handover Parameters Optimization Using Q-Learning Technique. In Proceedings of the 2018 IEEE 61st International Midwest Symposium on Circuits and Systems (MWSCAS), Windsor, ON, Canada, 5–8 August 2018; pp. 194–197.

- Ahmad, R.; Sundararajan, E.A.; Othman, N.E.; Ismail, M. Efficient Handover in LTE-A by Using Mobility Pattern History and User Trajectory Prediction. Arab. J. Sci. Eng. 2018, 43, 2995–3009.

- Broyles, D.; Jabbar, A.; Sterbenz, J.P. Design and analysis of a 3-D Gauss-Markov mobility model for highly-dynamic airborne networks. In Proceedings of the International Telemetering Conference (ITC), San Diego, CA, USA, 25–28 October 2010; pp. 25–28.

- Vijaykumar, M.; Rao, S. A cross-layer frame work for adaptive video streaming over wireless networks. In Proceedings of the 2010 International Conference on Computer and Communication Technology (ICCCT), Allahabad, Uttar Pradesh, India, 17–19 September 2010.

- Korhonen, J.; You, J. Improving objective video quality assessment with content analysis. In Proceedings of the International Workshop on Video Processing and Quality Metrics for Consumer Electronics, Scottsdale, AZ, USA, 13–15 January 2010; pp. 1–6.

- Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

- Li, S.; Ngan, K.N. Influence of the Smooth Region on the Structural Similarity Index. In Pacific-Rim Conference on Multimedia; Springer: Berlin/Heidelberg, Germany, 2009; pp. 836–846.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Channappayya, S.S.; Bovik, A.C.; Heath, R.W. A Linear Estimator Optimized for the Structural Similarity Index and its Application to Image Denoising. In Proceedings of the 2006 International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006.

- Judd, G.; Wang, X.; Steenkiste, P. Efficient channel-aware rate adaptation in dynamic environments. In Proceedings of the 6th International Conference on Mobile Systems, Applications, and Services, Breckenridge, CO, USA, 17–20 June 2008.

- Zeng, Y.; Zhang, R.; Lim, T.J. Throughput maximization for UAV-enabled mobile relaying systems. IEEE Trans. Commun. 2016, 64, 4983–4996.

- Lyu, J.; Zeng, Y.; Zhang, R. Cyclical multiple access in UAV-aided communications: A throughput-delay tradeoff. IEEE Wirel. Commun. Lett. 2016, 5, 600–603.

- Zhang, C.; Zhang, W.; Wang, W.; Yang, L.; Zhang, W. Research Challenges and Opportunities of UAV Millimeter-Wave Communications. IEEE Wirel. Commun. 2019, 26, 58–62.

- Khawaja, W.; Guvenc, I.; Matolak, D.W.; Fiebig, U.C.; Schneckenberger, N. A survey of air-to-ground propagation channel modeling for unmanned aerial vehicles. IEEE Commun. Surv. Tutor. 2019.

- Zeng, Y.; Lyu, J.; Zhang, R. Cellular-Connected UAV: Potential, Challenges, and Promising Technologies. IEEE Wirel. Commun. 2019, 26, 120–127.

- Mozaffari, M.; Saad, W.; Bennis, M.; Nam, Y.H.; Debbah, M. A tutorial on UAVs for wireless networks: Applications, challenges, and open problems. IEEE Commun. Surv. Tutor. 2019.

- Zhang, G.; Wu, Q.; Cui, M.; Zhang, R. Securing UAV communications via joint trajectory and power control. IEEE Trans. Wirel. Commun. 2019, 18, 1376–1389.

- Eclipse. Available online: https://www.eclipse.org/downloads/packages/release/neon/3 (accessed on 15 July 2019).

- FFmpeg Website. Available online: https://ffmpeg.org/ (accessed on 15 July 2019).

- GERCOM Group. Available online: http://www.gercom.ufpa.br (accessed on 15 July 2019).

- PSNR and SSIM Computation. Available online: http://totalgeekout.blogspot.com/2013/04/evalvid-on-ns-3-on-ubuntu-1204.html (accessed on 15 July 2019).

- Evalvid Binaries. Available online: http://www2.tkn.tu-berlin.de/research/evalvid/fw.html (accessed on 15 July 2019).

- YUV CIF Reference Videos (Lossless H.264 Encoded). Available online: http://www2.tkn.tu-berlin.de/research/evalvid/cif.html (accessed on 15 July 2019).

- YUV Video Sequences.

- Raffelsberger, C.; Muzaffar, R.; Bettstetter, C. A Performance Evaluation Tool for Drone Communications in 4G Cellular Networks. arXiv 2019, arXiv:1905.00115.

- MP4Box Online Resource. Available online: https://gpac.wp.imt.fr/mp4box/ (accessed on 15 July 2019).

- Pongsapan, F.P.; Hendrawan. Evaluation of HEVC vs H.264/AVC video compression transmission on LTE network. In Proceedings of the 2017 11th International Conference on Telecommunication Systems Services and Applications (TSSA), Lombok, Indonesia, 26–27 October 2017.

- Uhrina, M.; Frnda, J.; Ševčík, L.; Vaculik, M. Impact of H.264/AVC and H.265/HEVC compression standards on the video quality for 4K resolution. Open J. Syst. 2014.

- Lin, Y.C.; Wu, S.C. An Accelerated H.264/AVC Encoder on Graphic Processing Unit for UAV Videos. In International Symposium Computational Modeling of Objects Represented in Images; Springer: Cham, Switzerland, 2016; pp. 251–258.

- De Rango, F.; Tropea, M.; Fazio, P. Multimedia Traffic over Wireless and Satellite Networks. In Digital Video; IntechOpen: London, UK, 2010.

- Sun, K.; Yan, Y.; Zhang, W.; Wei, Y. An Interference-Aware Uplink Power Control in LTE Heterogeneous Networks. In Proceedings of the TENCON 2018-2018 IEEE Region 10 Conference, Jeju, Korea, 28–31 October 2018; pp. 0937–0941.

- Abreu, R.; Jacobsen, T.; Berardinelli, G.; Pedersen, K.; Kovács, I.Z.; Mogensen, P. Power control optimization for uplink grant-free URLLC. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; pp. 1–6.

- Gora, J.; Pedersen, K.I.; Szufarska, A.; Strzyz, S. Cell-specific uplink power control for heterogeneous networks in LTE. In Proceedings of the 2010 IEEE 72nd Vehicular Technology Conference-Fall, Ottawa, ON, Canada, 6–9 September 2010; pp. 1–5.

- Deb, S.; Monogioudis, P. Learning-based uplink interference management in 4G LTE cellular systems. IEEE/ACM Trans. Netw. (TON) 2015, 23, 398–411.

- Recommended Drones for 4G-LTE. Available online: http://g-uav.com/en/index.html (accessed on 15 July 2019).

- Ohm, J.R.; Sullivan, G.J.; Schwarz, H.; Tan, T.K.; Wiegand, T. Comparison of the coding efficiency of video coding standards, including high efficiency video coding (HEVC). IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1669–1684.

| Feature | SCRAS | FESTIVE [12] | piStream [13] | AVSS [17] | [16] | [38] | [35] | [24] | [29] | [34] | [33] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Year | 2019 | 2012 | 2015 | 2018 | 2017 | 2013 | 2017 | 2015 | 2013 | 2018 | 2018 |
| Real-time surveillance | Yes | No | No | Yes | Yes | No | No | Yes | No | No | No |
| UAV-based communication | Yes | No | No | Yes | No | No | No | Yes | No | No | No |
| Rate adaptation (client/server based) | Server | Client | Client | Client | Client | Client | Client | Client | Client | Client | Client/Server |
| Transport layer | UDP | TCP | TCP | UDP | UDP | TCP | TCP | UDP | TCP | TCP | TCP |
| Crosslayer adaptation | Yes | No | Yes | No | No | No | No | No | Yes | No | No |
| Considering GoP for QoE of video | Yes | No | No | No | No | Yes | No | No | No | No | No |
| 4G-LTE support | Yes | No | Yes | No | No | No | Yes | Yes | Yes | No | No |
| Link awareness | Yes | No | Yes | No | Yes | No | Yes | No | Yes | Yes | No |
| Wireless link for communication | Yes | No | Yes | Yes | Yes | No | Yes | Yes | Yes | Yes | No |
| Congestion awareness | No | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes | Yes |
| Buffer-based approach | No | Yes | Yes | No | No | No | Yes | No | Yes | Yes | Yes |
| Considering effects of handovers | Yes | No | No | No | No | No | No | No | No | No | No |

| Channel | Unit | Range |
|---|---|---|
| BC | dBm | −75 to −90 |
| GC | dBm | −90 to −100 |
| PC | dBm | −100 to −120 |
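The ranges in the table above can be turned into a simple channel classifier. The following Python sketch is only an illustration: the function name `classify_channel` is hypothetical, and two choices are assumptions that the table does not settle, namely that a value exactly on a shared boundary (−90 or −100 dBm) is assigned to the better class, and that readings stronger than −75 dBm or weaker than −120 dBm fall into BC and PC respectively.

```python
def classify_channel(rss_dbm: float) -> str:
    """Map a received signal strength in dBm to BC, GC, or PC.

    Thresholds come from the channel-range table; boundary handling and
    the treatment of out-of-range readings are assumptions of this sketch.
    """
    if rss_dbm >= -90.0:
        return "BC"   # best channel: -75 to -90 dBm (and anything stronger)
    if rss_dbm >= -100.0:
        return "GC"   # good channel: -90 to -100 dBm
    return "PC"       # poor channel: -100 to -120 dBm (and anything weaker)


# Usage: classify a short trace of signal-strength samples.
trace_dbm = [-78.0, -92.5, -100.0, -113.0]
labels = [classify_channel(v) for v in trace_dbm]
```

A real deployment would likely smooth the measurements before classifying them to avoid oscillating between classes near a threshold; that step is omitted here.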

| Abbreviation | Description |
|---|---|
| Ho | Inter- or Intracellular Handover |
| LRV | Low Resolution Video |
| HRV | High Resolution Video |
| MRV | Medium Resolution Video |
| PC | Poor Channel |
| GC | Good Channel |
| BC | Best Channel |
| EoV | End of Video |

| Parameter | HRV | MRV | LRV |
|---|---|---|---|
| Video format | H.264 | H.264 | H.264 |
| Resolution | 1920 × 1080 | 352 × 288 | 176 × 144 |
| Frame rate (fps) | 30 | 30 | 30 |
| Frame rate type | CFR | CFR | CFR |
| Average bit rate | 5 Mbps | 1 Mbps | 500 kbps |
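To give a feel for what these representations cost on the uplink, the short Python sketch below encodes the table as a lookup and derives the average payload per frame and per GoP. It is a back-of-the-envelope aid, not part of the paper: the dictionary layout and function names are hypothetical, 1 kbps is read as 1000 bit/s, and the 30-frame GoP used in the usage lines is an assumed value.

```python
# Values copied from the table above; "kbps" is read as 1000 bit/s (assumption).
REPRESENTATIONS = {
    "HRV": {"width": 1920, "height": 1080, "fps": 30, "bitrate_bps": 5_000_000},
    "MRV": {"width": 352, "height": 288, "fps": 30, "bitrate_bps": 1_000_000},
    "LRV": {"width": 176, "height": 144, "fps": 30, "bitrate_bps": 500_000},
}


def avg_bytes_per_frame(name: str) -> float:
    """Average encoded size of one frame, from average bit rate and frame rate."""
    rep = REPRESENTATIONS[name]
    return rep["bitrate_bps"] / 8 / rep["fps"]


def avg_bytes_per_gop(name: str, gop_frames: int) -> float:
    """Average encoded size of one GoP of gop_frames frames."""
    rep = REPRESENTATIONS[name]
    return rep["bitrate_bps"] / 8 * gop_frames / rep["fps"]


# Usage: with a hypothetical 30-frame GoP (one second of video at 30 fps).
for label in ("HRV", "MRV", "LRV"):
    per_gop_kb = avg_bytes_per_gop(label, gop_frames=30) / 1000
    print(f"{label}: {avg_bytes_per_frame(label):.0f} B/frame, {per_gop_kb:.1f} kB/GoP")
```

Because these are averages over a constant-frame-rate stream, individual frames (I-frames in particular) will be considerably larger than the per-frame figure.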

| Parameter | Unit | Value |
|---|---|---|
| macroEnbSites | number | 1–4 |
| Area Margin Factor | number | 0.5 |
| macroUE Density | number/m² | 0.00002 |
| macroUEs | number | 20 |
| macroEnb Tx Power | dBm | 46 |
| macroEnb DLEARFCN | number | 100 |
| macroEnb ULEARFCN | number | 18,100 |
| macroEnb Bandwidth | Resource Blocks | 100 |
| Bearers per UE | number | 1 |
| SRS Periodicity | ms | 80 |
| Scheduler | - | Proportional Fair |

| Parameter | Unit / Type | Value |
|---|---|---|
| Time Step | s | 0.5 |
| Alpha | number | 0.85 |
| Mean Velocity | m/s | Variable 1–10 |
| Mean Direction | URV | Min = 0, Max = 6.28 |
| Mean Pitch | URV | Min = 0.05, Max = 0.05 |
| Normal Velocity | GRV | Mean = 0, Var = 0, Bound = 0 |
| Normal Direction | GRV | Mean = 0, Var = 0.2, Bound = 0.4 |
| Normal Pitch | GRV | Mean = 0, Var = 0.02, Bound = 0.04 |
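The parameters in the table above (Alpha, mean and normal velocity, direction, and pitch) have the shape of a 3-D Gauss-Markov mobility configuration, a model the reference list cites through Broyles et al. As a hedged illustration, the Python sketch below applies the standard Gauss-Markov update, new = α·old + (1 − α)·mean + √(1 − α²)·noise, to speed, direction, and pitch, and then integrates the position over one time step. The function names, the resampling used to enforce the noise bound, the 5 m/s mean speed, and the starting position are assumptions of this sketch, not details taken from the paper.

```python
import math
import random

ALPHA = 0.85        # memory parameter from the table
TIME_STEP_S = 0.5   # seconds per update, from the table


def gauss_markov_update(previous, mean, noise, alpha=ALPHA):
    """One Gauss-Markov update of a scalar quantity."""
    return alpha * previous + (1.0 - alpha) * mean + math.sqrt(1.0 - alpha ** 2) * noise


def bounded_gauss(rng, variance, bound):
    """Zero-mean Gaussian sample with |x| <= bound (redraws until within bound)."""
    if variance == 0.0 or bound == 0.0:
        return 0.0
    sigma = math.sqrt(variance)
    while True:
        x = rng.gauss(0.0, sigma)
        if abs(x) <= bound:
            return x


def step(state, means, rng):
    """Advance speed, direction, pitch, and position by one time step."""
    speed = gauss_markov_update(state["speed"], means["speed"], bounded_gauss(rng, 0.0, 0.0))
    direction = gauss_markov_update(state["direction"], means["direction"], bounded_gauss(rng, 0.2, 0.4))
    pitch = gauss_markov_update(state["pitch"], means["pitch"], bounded_gauss(rng, 0.02, 0.04))
    # Convert speed, heading, and pitch into a Cartesian velocity vector.
    vx = speed * math.cos(direction) * math.cos(pitch)
    vy = speed * math.sin(direction) * math.cos(pitch)
    vz = speed * math.sin(pitch)
    x, y, z = state["pos"]
    pos = (x + vx * TIME_STEP_S, y + vy * TIME_STEP_S, z + vz * TIME_STEP_S)
    return {"speed": speed, "direction": direction, "pitch": pitch, "pos": pos}


# Usage: 20 steps (10 s of flight) with a hypothetical 5 m/s mean speed.
rng = random.Random(1)
means = {"speed": 5.0, "direction": rng.uniform(0.0, 6.28), "pitch": 0.05}
state = {"speed": 5.0, "direction": means["direction"], "pitch": 0.05, "pos": (0.0, 0.0, 100.0)}
for _ in range(20):
    state = step(state, means, rng)
```

With the velocity noise variance set to zero, as in the table, the speed stays at its mean and only heading and pitch wander, which yields a smooth trajectory without abrupt turns.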

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Naveed, M.; Qazi, S.; Atif, S.M.; Khawaja, B.A.; Mustaqim, M. SCRAS Server-Based Crosslayer Rate-Adaptive Video Streaming over 4G-LTE for UAV-Based Surveillance Applications. Electronics 2019, 8, 910. https://doi.org/10.3390/electronics8080910
