Abstract

In this paper, an altered adaptive block-compressive sensing (BCS) algorithm is developed using saliency and error analysis. We observe that the performance of BCS can be improved by rational partitioning and uneven sampling ratios, together with error analysis in the reconstruction process. The weighted mean information entropy is adopted as the basis for partitioning in BCS, which results in a flexible block group. Furthermore, a synthetic feature (SF) based on local saliency and variance is introduced into step-less adaptive sampling, which distinguishes well between smooth blocks and detail blocks and samples them accordingly. An error analysis method is used to estimate the optimal number of iterations in sparse reconstruction. Based on these points, an altered adaptive block-compressive sensing algorithm with flexible partitioning and error analysis is proposed. On the one hand, it provides a feasible solution for the partitioning and sampling of an image; on the other hand, it changes the iteration stop condition of reconstruction and thereby improves the quality of the reconstructed image. The experimental results verify the effectiveness of the proposed algorithm and show clear improvements in the Peak Signal to Noise Ratio (PSNR), Structural Similarity (SSIM), Gradient Magnitude Similarity Deviation (GMSD), and Block Effect Index (BEI).

1. Introduction

The traditional Nyquist sampling theorem states that the sampling frequency of a signal must be more than twice its highest frequency to ensure that the original signal can be completely reconstructed from the sampled values. Compressive sensing (CS) theory breaks through this limitation in signal acquisition and can reconstruct a high-dimensional sparse or compressible signal from a lower-dimensional measurement [1]. As an alternative to Nyquist sampling, CS theory is being widely studied, especially in image processing. Research on CS mainly focuses on sparse representation, measurement matrix construction, and reconstruction algorithms [2,3]. The main research hotspot of sparse representation is how to construct a sparse dictionary of an orthogonal system and an over-complete dictionary for suboptimal approximation [4,5]. Measurement matrices mainly include universal random measurement matrices and improved deterministic measurement matrices [6]. Research on reconstruction algorithms mainly focuses on the suboptimal solution problem and on training algorithms based on self-learning [7,8]. As research on and applications of CS advance, especially in 2D and 3D image processing, CS faces several challenges, including the curse of dimensionality in computation and a storage problem that grows with the geometric scale of images. To address them, researchers have proposed many fast compressive sensing algorithms to reduce the computational cost, and the block-compressive sensing (BCS) algorithm to solve the storage problem [9,10,11,12]. This article builds on both lines of work.

CS recovery algorithms for images can be divided into convex optimization recovery algorithms, non-convex recovery algorithms, and hybrid algorithms. The convex optimization algorithms include basis pursuit (BP), greedy basis pursuit (GBP), iterative hard thresholding (IHT), etc. Non-convex algorithms include orthogonal matching pursuit (OMP), subspace pursuit (SP), iteratively reweighted least squares (IRLS), etc. The hybrid algorithms include sparse Fourier description (SF), chain pursuit (CP), heavy hitters on steroids (HHS) pursuit, and other mixed algorithms [13,14,15]. Convex optimization algorithms based on ℓ1 minimization achieve good reconstruction quality but have high computational and time complexity. Non-convex algorithms such as greedy pursuit, which are based on ℓ0 minimization, run faster with slightly lower accuracy and can still meet the general requirements of practical applications. In addition, the iterative threshold method is widely used in both categories with excellent performance. However, it is sensitive to the choice of the threshold and of the initial value of the iteration, which affects the efficiency and accuracy of the algorithm [16,17]. In this process, the stopping criterion is often a simple error threshold (absolute or relative) or a fixed number of iterations, which does not guarantee an optimal result [18,19].

This paper focuses on three aspects: block partitioning based on weighted information entropy, adaptive sampling based on a synthetic feature, and iterative reconstruction guided by error analysis. The mean information entropy (MIE) and texture structure (TS) are introduced into block partitioning to provide a basis for improving the algorithm. The adaptive sampling part improves the overall image quality by designing the block sampling rate from the variance and the local saliency (LS). The iterative reconstruction part uses the relationship among three errors to determine the number of iterations that yields the best reconstructed image under different noise backgrounds. Based on these points, this paper proposes an altered adaptive block-compressive sensing algorithm with flexible partitioning and error analysis, called FE-ABCS.

The remainder of this paper is organized as follows. In Section 2, we focus on the preliminaries of BCS. Section 3 includes the problem formulation and important factors. Then, the structure of the proposed FE-ABCS algorithm is presented in Section 4. In Section 5, the experiments and results analysis are listed to show the benefit of the FE-ABCS. The paper concludes with Section 6.

2. Preliminaries

2.1. Compressive Sensing

The theory of compressive sensing builds on the fact that natural signals can be sparsely represented in a suitable sparse transform basis, which enables direct sampling of sparse signals (sampling and compressing simultaneously). Let an original digital signal $x \in \mathbb{R}^{N}$ have the sparse representation $x = \Psi s$ under the sparse basis $\Psi$, where $s$ has K non-zero coefficients, and let the signal be observed by a measurement matrix $\Phi \in \mathbb{R}^{M \times N}$. The observation signal can then be expressed as:

$$ y = \Phi x = \Phi \Psi s = \Theta s \qquad (1) $$

where $y \in \mathbb{R}^{M}$ and $\Theta = \Phi\Psi \in \mathbb{R}^{M \times N}$, the product of $\Phi$ and $\Psi$, is named the sensing matrix. In compressive sensing, M is much smaller than N.
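A minimal Python sketch of Equation (1), for illustration only: it draws a Gaussian measurement matrix Φ with i.i.d. N(0, 1/M) entries, uses the identity matrix as a stand-in for the sparse basis Ψ (an assumption made to keep the example short; the experiments use a DCT basis), and measures a K-sparse vector s. All function names are hypothetical.

```python
import random

def gaussian_matrix(m, n, seed=0):
    """m x n matrix (list of rows) with i.i.d. N(0, 1/m) entries."""
    rng = random.Random(seed)
    scale = (1.0 / m) ** 0.5
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(n)] for _ in range(m)]

def matvec(a, v):
    """Dense matrix-vector product."""
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in a]

def matmul(a, b):
    """Dense matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]

def l0_norm(v):
    """Number of non-zero entries (the l0 'norm' used in Equation (2))."""
    return sum(1 for vi in v if vi != 0.0)

N, M, K = 64, 24, 3
psi = [[1.0 if i == j else 0.0 for j in range(N)] for i in range(N)]  # stand-in sparse basis
phi = gaussian_matrix(M, N)              # measurement matrix, M << N
theta = matmul(phi, psi)                 # sensing matrix Theta = Phi Psi

s = [0.0] * N
for idx, val in [(5, 1.5), (20, -2.0), (41, 0.7)]:
    s[idx] = val                         # K non-zero coefficients
x = matvec(psi, s)                       # x = Psi s
y = matvec(phi, x)                       # y = Phi x = Theta s
```

The measurement vector y has only M = 24 entries although the signal has N = 64, which is the dimensional reduction that Equation (1) expresses.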

The reconstruction process, which restores the N-dimensional original signal from the M-dimensional measurement through a nonlinear projection, is an NP-hard problem and cannot be solved directly. Candès et al. pointed out that the number of measurements must satisfy $M \ge cK\log(N/K)$ for some constant c in order to reconstruct the N-dimensional signal accurately, and that the sensing matrix must satisfy the Restricted Isometry Property (RIP) [20]. Under these conditions, the original signal can be accurately reconstructed from the measurements by solving the ℓ0 norm optimization problem:

$$ \hat{s} = \arg\min_{s} \|s\|_0 \quad \text{s.t.} \quad y = \Theta s \qquad (2) $$

In the above formula, $\|s\|_0$ is the ℓ0 norm of a vector, i.e., the number of non-zero elements in the vector.

With the wide application of CS, especially to 2D/3D image signal processing, the computational cost grows rapidly with dimension (the amount of calculation increases with the square or cube of the image dimensions), a problem that CS itself cannot directly overcome. Block partitioning and parallel processing are therefore introduced, leading to the BCS algorithm, which extends the applicability of CS.

2.2. Block-Compressive Sensing (BCS)

The traditional BCS method for image signal processing segments the image and processes the sub-images in parallel to reduce storage and computation costs. Suppose the original image has N pixels in total, the observation is M-dimensional, and the total sampling rate is defined as TSR = M/N. In standard BCS, the image is divided into small blocks of size B × B, each of which is sampled with the same operator. Let $x_i$ represent the vectorized signal of the i-th block obtained by raster scanning; the output vector of the BCS measurement can then be written as:

$$ y_i = \Phi_B x_i \qquad (3) $$

where $\Phi_B$ is an $n_B \times n$ matrix with $n = B \times B$ and $n_B = \lfloor \mathrm{TSR} \cdot n \rfloor$. The matrix $\Phi_B$ is usually taken as an orthonormalized i.i.d. Gaussian matrix. For the whole image, the equivalent sampling operator $\Phi$ in (1) is thus a block diagonal matrix of the following form:

$$ \Phi = \begin{bmatrix} \Phi_B & 0 & \cdots & 0 \\ 0 & \Phi_B & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \Phi_B \end{bmatrix} \qquad (4) $$
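The equivalence between per-block sampling and a single block diagonal operator can be checked with a short sketch. It assumes an image whose height and width are multiples of B, uses an unnormalized Gaussian Φ_B (orthonormalization is omitted for brevity), and visits blocks in raster order; the helper names are hypothetical.

```python
import random

def blocks_raster(img, B):
    """Split an H x W image into B x B blocks (raster order), each vectorized by raster scan."""
    H, W = len(img), len(img[0])
    assert H % B == 0 and W % B == 0, "image size must be a multiple of B"
    return [[img[r][c] for r in range(r0, r0 + B) for c in range(c0, c0 + B)]
            for r0 in range(0, H, B) for c0 in range(0, W, B)]

def matvec(a, v):
    return [sum(p * q for p, q in zip(row, v)) for row in a]

def block_diag(mat, count):
    """diag(mat, ..., mat) with `count` copies of `mat` on the diagonal."""
    rows, cols = len(mat), len(mat[0])
    big = [[0.0] * (cols * count) for _ in range(rows * count)]
    for k in range(count):
        for i in range(rows):
            for j in range(cols):
                big[k * rows + i][k * cols + j] = mat[i][j]
    return big

B, TSR = 4, 0.5
n = B * B                       # pixels per block
n_B = int(TSR * n)              # measurements per block, floor(TSR * n) = 8
rng = random.Random(1)
phi_B = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n_B)]
img = [[float((3 * r + 5 * c) % 17) for c in range(8)] for r in range(8)]

xs = blocks_raster(img, B)                            # four 16-pixel block vectors
y_blocks = [matvec(phi_B, xi) for xi in xs]           # y_i = Phi_B x_i, block by block
x_all = [v for xi in xs for v in xi]                  # stacked block vectors
y_global = matvec(block_diag(phi_B, len(xs)), x_all)  # one block diagonal operator
```

Only the small n_B × n matrix has to be stored, which is the storage saving that motivates BCS; the full block diagonal matrix is built here only to show that both views give the same measurements.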

2.3. Problems of BCS

By dividing the image into multiple sub-images, the BCS algorithm reduces the scale of the measurement matrix, which addresses the storage problem, and also facilitates parallel processing of the sub-images. However, BCS still has the following problems that need to be investigated and solved:

- Most existing BCS studies neither analyze the image before partitioning it nor segment it according to such an analysis [21,22]. The common partitioning method of BCS (n = B × B) only considers reducing computational complexity and storage space, without considering the integrity of the algorithm or other potential benefits, such as providing a better foundation for subsequent sampling and reconstruction by exploiting the structural features and information entropy of the image.

- The basic sampling method of BCS samples each sub-block uniformly at the total sampling rate (TSR), whereas adaptive sampling selects a different sampling rate for each sub-block according to its sampling feature [23]. Specifically, detail blocks are allocated larger sampling rates and smooth blocks smaller ones, which improves the overall quality of the reconstructed image at the same TSR. The crux, however, is the criterion (feature) used to assign adaptive sampling rates, which has rarely been studied in recent articles.

- Although there are many studies on improving the iterative reconstruction algorithm of BCS [24], few articles optimize its performance through the iteration stop criterion of the image reconstruction process, especially in a noisy background.

In addition, improvements to BCS also include blockiness elimination and engineering implementation of the algorithm. Although some aspects of BCS remain to be solved, its advantages have led to wide application in optical/remote sensing imaging, medical imaging, wireless sensor networks, and so on [25].

3. Problem Formulation and Important Factors

3.1. Flexible Partitioning by Mean Information Entropy (MIE) and Texture Structure (TS)

Reasonable block partitioning reduces the information entropy (IE) of each sub-block, which improves the performance of the BCS algorithm at the same total sampling rate (TSR) and ultimately improves the quality of the entire reconstructed image. In this paper, we adopt flexible partitioning, in which the sub-block shape is chosen with the help of texture structure (TS) and mean information entropy (MIE) instead of being fixed to the primary square shape, to remove the blindness of image partitioning. The expression of TS is based on gray-tone spatial-dependence matrices and the angular second moment (ASM) [26,27]. The value of TS is defined as follows using ASM:

where $P(i,j)$ is the (i,j)-th entry of a gray-tone spatial-dependence matrix, i.e., the number of neighboring pixel pairs at distance d and orientation θ whose gray values are i and j, $p(i,j) = P(i,j)/R$ is its normalized form, and R denotes the number of neighboring resolution cell pairs. The MIE of the whole image is defined as follows:

where $p_i(g)$ is the proportion of pixels with gray value g in the i-th sub-image, and T is the number of sub-images.
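The two partitioning statistics can be sketched as follows, assuming 8-bit gray images stored as lists of rows. `asm` computes the angular second moment of a symmetric gray-tone co-occurrence matrix for one pixel offset (the quantity on which TS is based), and `mie` averages the Shannon entropy (in bits, an assumed base) over all sub-images of a given shape. This is an illustration, not the paper's exact TS or WM formula.

```python
import math
from collections import Counter

def asm(img, dr, dc):
    """Angular second moment of the symmetric gray-tone co-occurrence matrix for offset (dr, dc)."""
    H, W = len(img), len(img[0])
    counts = Counter()
    for r in range(H):
        for c in range(W):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < H and 0 <= c2 < W:
                a, b = img[r][c], img[r2][c2]
                counts[(a, b)] += 1      # count each neighbouring pair in both directions
                counts[(b, a)] += 1
    R = sum(counts.values())             # number of neighbouring resolution cell pairs
    return sum((v / R) ** 2 for v in counts.values())

def entropy(pixels):
    """Shannon entropy (bits) of the gray-level histogram of a list of pixels."""
    n = len(pixels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(pixels).values())

def mie(img, bh, bw):
    """Mean information entropy over all bh x bw sub-images (bh, bw must tile the image)."""
    H, W = len(img), len(img[0])
    ents = [entropy([img[r][c] for r in range(r0, r0 + bh) for c in range(c0, c0 + bw)])
            for r0 in range(0, H, bh) for c0 in range(0, W, bw)]
    return sum(ents) / len(ents)
```

With offsets (0, 1) and (1, 0), `asm` gives horizontal and vertical values; a perfectly uniform image yields an ASM of 1, and the value drops as the texture becomes busier.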

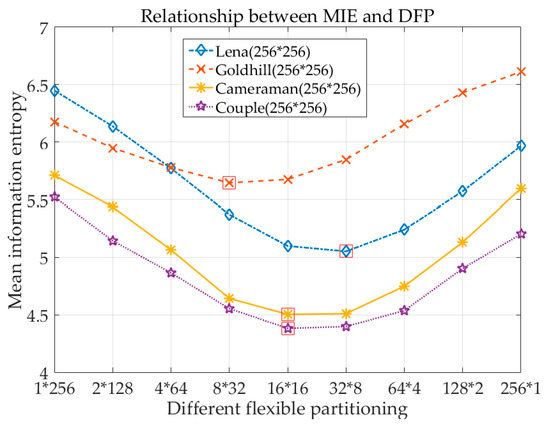

If the flexible partitioning of BCS is reasonable, it increases the similarity between pixels within each sub-block and reduces the MIE over the sub-blocks of the whole image, which in turn lowers the difficulty of image sampling and recovery; in this sense, flexible partitioning is itself a process of order and rank reduction. Figure 1 shows how different partitioning methods affect the MIE of four 256 × 256 gray test images with 256 gray levels when each sub-image is limited to 256 pixels. The abscissa represents the different power-of-two partitioning modes, and the ordinate represents the MIE of the whole image under each mode. Figure 1 indicates that images with different structures reach their minimum MIE at different partitioning points, which flexible partitioning uses as a basis for block segmentation.
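The search over power-of-two partitioning modes behind Figure 1 can be sketched as an exhaustive scan that keeps the shape with the lowest MIE. The sketch scores candidates with plain MIE only; in FE-ABCS the weighted MIE would take its place, but its weighting function is not reproduced here, and the function names are hypothetical.

```python
import math
from collections import Counter

def mie(img, bh, bw):
    """Mean Shannon entropy (bits) over all bh x bw sub-images."""
    H, W = len(img), len(img[0])
    ents = []
    for r0 in range(0, H, bh):
        for c0 in range(0, W, bw):
            block = [img[r][c] for r in range(r0, r0 + bh) for c in range(c0, c0 + bw)]
            n = len(block)
            ents.append(-sum((k / n) * math.log2(k / n) for k in Counter(block).values()))
    return sum(ents) / len(ents)

def candidate_shapes(n_pixels, H, W):
    """Power-of-two shapes (rows, cols) with rows * cols == n_pixels that tile an H x W image."""
    shapes, rows = [], 1
    while rows <= n_pixels:
        cols = n_pixels // rows
        if rows * cols == n_pixels and H % rows == 0 and W % cols == 0:
            shapes.append((rows, cols))
        rows *= 2
    return shapes

def best_partition(img, n_pixels):
    """Return the shape with minimum MIE and the MIE of every candidate shape."""
    H, W = len(img), len(img[0])
    scores = {shape: mie(img, *shape) for shape in candidate_shapes(n_pixels, H, W)}
    return min(scores, key=scores.get), scores
```

For a 256 × 256 image and 256 pixels per sub-image, the scan covers the nine shapes from 1 × 256 to 256 × 1; an image with vertical stripes, for instance, favours tall column blocks.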

Figure 1.

Effect of different partitioning methods on the mean information entropy (MIE) of images (the abscissa represents the sub-block shape used in flexible partitioning).

Furthermore, using MIE alone to guide partitioning only considers the pixel level of the image, i.e., the gray-scale distribution, and ignores the spatial level, i.e., the texture structure. TS information is, in fact, also very important for image restoration algorithms. Therefore, this paper combines TS with MIE to provide the basis for flexible partitioning, giving the weighted MIE (WM), which is defined as follows:

where the weighting coefficient is given by a weighting coefficient function whose value depends on the TS information.

3.2. Adaptive Sampling with Variance and Local Salient Factor

Choosing the feature that distinguishes detail blocks from smooth blocks is very important in adaptive sampling. Information entropy, variance, and local standard deviation are often used individually as the criterion, and each has shortcomings when used alone: information entropy reflects only the probability distribution of gray levels, variance reflects only the dispersion of the pixels, and local standard deviation focuses only on the spatial distribution of the pixels. In addition, previous studies mostly set the adaptive sampling rate by segmented adaptive sampling rather than step-less adaptive sampling [28], which makes the sampling rate discontinuous and underuses the distinguishing feature.

In order to overcome the shortcomings of individual features, this paper uses the synthetic feature to distinguish between smooth blocks and detail blocks. The synthetic feature for adaptive sampling is defined as:

where the variance and the local salient factor of the i-th sub-image are combined through their corresponding weighting coefficients. The variance and local salient factor of a sub-block are expressed as follows:

where the quantities involved are the gray value of the j-th pixel in the i-th sub-image, the gray mean of the i-th sub-image, the gray value of the k-th pixel in the salient operator domain around the center pixel, and the number of pixels in the salient operator domain. The synthetic feature reflects not only the dispersion and relative difference of sub-image pixels but also the relationship between sensory amount and physical quantity described by Weber's law [29].
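A rough sketch of the two block features follows. The variance is the usual population variance. The local salient factor is approximated, as an assumption, by a Weber-type relative difference |x_k − x_c| / (x_c + eps) between each interior pixel and its 8 neighbours, averaged over the block (eps avoids division by zero on black pixels). The linear combination in `synthetic_feature` and its weights are placeholders, not the paper's tuned values.

```python
def variance(block):
    """Population variance of the gray values of a sub-image (list of rows)."""
    vals = [v for row in block for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def local_saliency(block, eps=1.0):
    """Assumed Weber-type local salient factor: mean of |x_k - x_c| / (x_c + eps) over the
    8 neighbours x_k of each interior pixel x_c, averaged over all interior pixels."""
    H, W = len(block), len(block[0])
    total, count = 0.0, 0
    for r in range(1, H - 1):
        for c in range(1, W - 1):
            xc = block[r][c]
            neigh = [block[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                     if (dr, dc) != (0, 0)]
            total += sum(abs(xk - xc) for xk in neigh) / (len(neigh) * (xc + eps))
            count += 1
    return total / count if count else 0.0

def synthetic_feature(block, w_var=1.0, w_ls=100.0):
    """Placeholder linear combination of variance and local saliency (weights are hypothetical)."""
    return w_var * variance(block) + w_ls * local_saliency(block)
```

Because the relative difference is divided by the center intensity, the same absolute contrast counts more in dark regions than in bright ones, which is the Weber-law behaviour the text refers to.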

To avoid the disadvantages of segmented adaptive sampling, step-less adaptive sampling is adopted in this paper [30,31,32]. The key point of step-less adaptive sampling is how to select a continuous sampling rate accurately from the synthetic feature. This is achieved by setting a sampling rate factor based on the relationship between the sensory amount and the physical quantity in Weber's law. The sampling rate factor and the step-less adaptive sampling rate are defined as follows:

where, TSR is the total sampling rate of the whole image.
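Since Equations (10) and (11) are not reproduced here, the following sketch only illustrates the idea of a continuous (step-less) mapping. It assumes a Weber-Fechner style logarithmic response ρ_i = log(1 + J_i) and scales the factors so that the mean rate over equal-size sub-images equals TSR; rates above 1 and minimum-rate constraints are not handled. The function name is hypothetical.

```python
import math

def stepless_rates(features, tsr):
    """Assumed step-less mapping: rho_i = log(1 + J_i) (Weber-Fechner style response),
    rescaled so that the mean rate over equal-size sub-images equals TSR."""
    rho = [math.log1p(max(j, 0.0)) for j in features]
    mean_rho = sum(rho) / len(rho)
    if mean_rho == 0.0:                  # every block is flat: fall back to uniform sampling
        return [tsr] * len(features)
    return [tsr * r / mean_rho for r in rho]
```

For example, `stepless_rates([0.0, 10.0, 100.0, 1000.0], 0.3)` assigns increasing rates to increasingly detailed blocks with a mean of 0.3, but gives the perfectly flat block a rate of zero, which is why a minimum sampling rate is needed.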

3.3. Error Estimation and Iterative Stop Criterion in Reconstruction Process

The goal of the reconstruction process is to provide a good estimate of the original signal:

Given the noisy observed output and the sparsity K, the performance of reconstruction is usually measured by the similarity or the error between the original signal and its reconstruction. In addition, every reconstruction method, whether based on convex or non-convex optimization, has to approximate the solution of the NP-hard problem (for example, by linear programming (LP) in the convex case), and the number of correlation vectors it uses is crucial. Error estimation and the selection of the number of correlation vectors are therefore two important factors of reconstruction. In particular, in non-convex restoration algorithms such as OMP, the number of correlation vectors equals the number of iterations, since each iteration adds one vector. These two points (error estimation and the optimal number of iterations) are discussed below.
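To make the link between iterations and correlation vectors explicit, here is a minimal pure-Python OMP sketch: each iteration selects the column most correlated with the residual and refits all selected columns by least squares, so after n_iter iterations exactly n_iter columns are used. It assumes unit-norm columns and solves the normal equations directly, which is adequate only for small, well-conditioned examples.

```python
def solve(a, b):
    """Solve the small square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]     # augmented matrix
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def omp(theta, y, n_iter):
    """Orthogonal Matching Pursuit with a fixed number of iterations (n_iter < M).
    Returns the length-N coefficient estimate and the final residual."""
    M, N = len(theta), len(theta[0])
    cols = [[theta[i][j] for i in range(M)] for j in range(N)]
    support, coef, residual = [], [], list(y)
    for _ in range(n_iter):
        # 1) select the column most correlated with the current residual
        corr = [abs(sum(a * r for a, r in zip(cols[j], residual))) if j not in support else -1.0
                for j in range(N)]
        support.append(max(range(N), key=corr.__getitem__))
        # 2) least-squares refit on all selected columns (normal equations)
        A = [cols[j] for j in support]
        gram = [[sum(p * q for p, q in zip(u, v)) for v in A] for u in A]
        rhs = [sum(p * q for p, q in zip(u, y)) for u in A]
        coef = solve(gram, rhs)
        # 3) residual = y minus the fitted combination of the selected columns
        fit = [sum(c * u[i] for c, u in zip(coef, A)) for i in range(M)]
        residual = [yi - fi for yi, fi in zip(y, fit)]
    s_hat = [0.0] * N
    for j, c in zip(support, coef):
        s_hat[j] = c
    return s_hat, residual
```

Running too few iterations leaves signal in the residual, while running too many fits noise with extra columns; choosing n_iter is exactly the question addressed in the rest of this section.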

3.3.1. Reconstruction Error Estimation in Noisy Background

Equation (1) in Section 2 describes the relationship between the original signal and the noiseless observed signal. Actual observations, however, are always made in a noisy background, so the observed signal in this environment is:

$$ y_w = \Phi x + w \qquad (13) $$

where $y_w$ is the observed output in the noisy environment and $w$ is additive white Gaussian noise (AWGN) with zero mean and standard deviation $\sigma_w$. The M-dimensional AWGN is independent of the signal x. We discuss the reconstruction error in two steps: the first step establishes the complete reconstruction model, and the second derives the relevant reconstruction errors.

Since the original signal x is not itself sparse but is K-sparse under the sparse basis Ψ, we have:

$$ x = \Psi s \qquad (14) $$

where s is a vector of length N with only K significant elements; the remaining N − K micro elements are zero or much smaller than any of the K significant elements. Assuming without loss of generality that the first K elements of the sparse representation s are the significant ones, we have:

$$ s = \begin{bmatrix} s_K \\ s_{N-K} \end{bmatrix} \qquad (15) $$

where $s_K$ is a K-dimensional vector and $s_{N-K}$ is a vector of length N − K. Combining Equations (13) and (15), the actual observed signal can be described as follows:

$$ y_w = \Theta s + w = \Theta_K s_K + \Theta_{N-K} s_{N-K} + w \qquad (16) $$

where $\Theta = \Phi\Psi = [\Theta_K, \Theta_{N-K}]$ is an M × N matrix composed of N column vectors of dimension M.

To estimate the error of the recovery algorithm accurately, we define three error functions using the ℓ2 norm:

where the hatted quantities represent the reconstructed values of the corresponding original quantities. The three reconstructed values are obtained by maximum likelihood (ML) estimation using ℓ2 minimization. The restoration algorithm runs for a given number of iterations, which equals the number of correlation vectors. Because the solution is computed with the pseudo-inverse, i.e., by least squares, this number must be smaller than M. Using Equations (13) and (15), the reconstructed values are expressed as follows:

where $(\cdot)^{\dagger}$ denotes the pseudo-inverse; for a matrix A with full column rank, $A^{\dagger} = (A^{T}A)^{-1}A^{T}$.

Using Equations (20–22), the three error functions are rewritten as follows:

According to the RIP, we know:

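where the restricted isometry constant is a coefficient associated with the sensing matrix and K. According to the Gershgorin circle theorem [33], the following bound holds for all K below a coherence-dependent limit, where μ denotes the coherence of the sensing matrix: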

Using Equations (26) and (27), the original data error is bounded as follows:

The above analysis shows that the three errors are consistent, so minimizing any one of them is equivalent to minimizing the others. Considering the complexity and reliability of the calculation (of the other two errors, one is too complicated to compute and the other has insufficient dimensions), the observed data error is used as the target of the optimization function in the recovery algorithm.
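The coherence μ that enters the Gershgorin argument is straightforward to compute. The sketch below, assuming a dense sensing matrix without zero columns, returns the mutual coherence of the normalized columns and the standard Gershgorin interval [1 − (K − 1)μ, 1 + (K − 1)μ], which contains every eigenvalue of the Gram matrix of any K unit-norm columns. It does not reproduce Equations (26) to (28).

```python
import math

def coherence(theta):
    """Mutual coherence: largest |<t_i, t_j>| over distinct pairs of normalized columns."""
    M, N = len(theta), len(theta[0])
    cols = []
    for j in range(N):
        c = [theta[i][j] for i in range(M)]
        norm = math.sqrt(sum(v * v for v in c))
        cols.append([v / norm for v in c])
    return max(abs(sum(a * b for a, b in zip(cols[i], cols[j])))
               for i in range(N) for j in range(i + 1, N))

def gershgorin_interval(mu, K):
    """Each Gram-matrix row has diagonal 1 and K - 1 off-diagonal entries bounded by mu."""
    return 1.0 - (K - 1) * mu, 1.0 + (K - 1) * mu
```

The interval only stays away from zero while (K − 1)μ < 1, which is why coherence-based guarantees apply only to sufficiently small K.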

3.3.2. Optimal Iterative Recovery of Image in Noisy Background

The optimal iterative recovery of an image discussed in this paper refers to the case in which the error function of the image is smallest, i.e., the minimization of the observed data error in ℓ2-norm form:

where one projection matrix has rank m, the number of iterations, and the other has rank M − m. Since the two projection matrices in Equation (30) are orthogonal, the inner product of the two projected vectors is zero, and therefore:

According to [34], the observed data error is a Chi-square random variable, and its expected value and variance are as follows:

The expected value of the observed data error has two parts. The first part is related to the noise and increases with the number of iterations. The second part is a function of the unstable micro elements and decreases as the number of iterations increases. The observed data error therefore exhibits a bias-variance tradeoff.

Because of the uncertain part, the optimal number of iterations cannot be found by directly minimizing the observed data error. Therefore, another bias-variance tradeoff, the second observed data error, is introduced to provide probabilistic bounds on the observed data error by using the noisy output instead of the noiseless output:

According to [35], the second observed data error is also a Chi-square random variable, and its expected value and variance are as follows:

Using the probability distributions of the two Chi-square random variables, we can derive probabilistic bounds for the observed data error:

where the confidence probability refers to the random variable of the observed data error, and the validation probability refers to that of the second observed data error. Since both errors follow Chi-square distributions, they can be approximated by Gaussian distributions (an approximation that improves as the degrees of freedom grow). The confidence and validation probabilities can therefore be calculated as:

where two tuning parameters control the confidence and validation probabilities, respectively. Furthermore, the worst case is considered when minimizing the observed data error, that is, the minimum of its upper bound is calculated:

Typically, following the Akaike information criterion (AIC) or the Bayesian information criterion (BIC), the optimal number of iterations can be chosen as follows:

AIC: Set

BIC: Set and .

where, can be calculated based on the noisy observation data and the reconstruction algorithm.
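The selection rule can be illustrated with a Mallows-Cp style sketch; this is one plausible instance of the worst-case criterion, not the paper's exact formula. It assumes the noise variance σ² is known, treats the residual r_m = ‖y_w − ŷ_m‖² as σ²χ²(M − m) plus an unknown bias, and treats the noise captured by the fit as σ²χ²(m). Both chi-square terms are approximated by Gaussians with mean k and variance 2k (in units of σ²), and the hypothetical parameters alpha and beta play the role of the confidence and validation tuning parameters.

```python
import math

def estimate_error_bound(r_m, m, M, sigma2, alpha=0.0, beta=0.0):
    """Hypothetical upper bound on the observed data error after m iterations.
    r_m: residual energy ||y_w - y_hat_m||^2; sigma2: noise variance."""
    # validation side: bias <= r_m - E[noise in residual] + beta * std(noise in residual)
    bias_upper = max(0.0, r_m - (M - m) * sigma2 + beta * math.sqrt(2.0 * (M - m)) * sigma2)
    # confidence side: noise fitted by m columns, mean m*sigma2, std sqrt(2m)*sigma2
    noise_upper = m * sigma2 + alpha * math.sqrt(2.0 * m) * sigma2
    return noise_upper + bias_upper

def optimal_iterations(residuals, M, sigma2, alpha=0.0, beta=0.0):
    """residuals[m] is the residual energy after m iterations (m = 0, 1, ...)."""
    bounds = [estimate_error_bound(r, m, M, sigma2, alpha, beta)
              for m, r in enumerate(residuals)]
    return min(range(len(bounds)), key=bounds.__getitem__)
```

With alpha = beta = 0 the bound reduces to mσ² + max(0, r_m − (M − m)σ²): iterations are added while each one removes more residual energy than the noise it lets in. In a block setting such as FE-ABCS, the same rule would be applied per sub-image with that sub-image's own measurement count.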

3.3.3. Application of Error Estimation on BCS

Because FE-ABCS is based on block-compressive sensing, the optimal number of iterations derived in the previous section must be adapted to the block setting:

where the index runs over all sub-images. Similarly, the two tuning parameters can be set according to the AIC or BIC criterion.

4. The Proposed Algorithm (FE-ABCS)

With the knowledge presented in the previous section, the altered ABCS algorithm (FE-ABCS) is proposed for the recovery of block sparse signals in noiseless or noisy backgrounds. The workflow of the proposed algorithm is presented in Section 4.1, and its specific parameter settings are introduced in Section 4.2.

4.1. The Workflow and Pseudocode of FE-ABCS

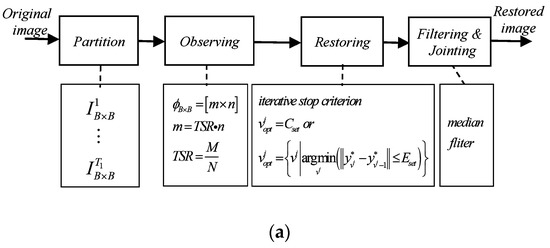

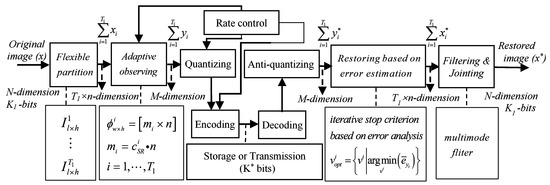

To illustrate the idea of the proposed algorithm, Figure 2 presents the workflows of the typical BCS algorithm and the FE-ABCS algorithm.

Figure 2.

The workflow of two block-compressive sensing (BCS) algorithms. (a) Typical BCS algorithm, (b) FE-ABCS algorithm.

As Figure 2 shows, compared with the traditional BCS algorithm, the main innovations of this paper are as follows:

- Flexible partitioning: using the weighted MIE as the partitioning basis to reduce the average complexity of the sub-images in both the pixel domain and the spatial domain;

- Adaptive sampling: adopting the synthetic feature and step-less sampling to ensure a reasonable sampling rate for each sub-image;

- Optimal number of iterations: using the error estimation method to ensure the minimum error of the reconstructed image in a noisy background.

Furthermore, since FE-ABCS builds on iterative recovery algorithms, especially non-convex optimization iterative recovery algorithms, this paper uses OMP as the basic reconstruction algorithm without loss of generality. The full pseudocode of the proposed algorithm is presented as follows.

| FE-ABCS Algorithm based on OMP (Orthogonal Matching Pursuit) |
| --- |
| 1: Input: original image I, total sampling rate TSR, sub-image dimension n (…), sparse matrix …, initialized measurement matrix … |
| 2: Initialization: … |
| Step 1: flexible partitioning (FP) |
| 3: for j = 1,…,T2 do |
| 4: … |
| 5: … |
| 6: … // weighted MIE: basis of FP |
| 7: end for |
| 8: … |
| 9: … |
| Step 2: adaptive sampling (AS) |
| 10: for i = 1,…,T1 do |
| 11: … // synthetic feature (J): basis of AS |
| 12: end for |
| 13: … |
| 14: … // AS ratio of sub-images |
| 15: … |
| 16: … |
| 17: … |
| Step 3: restoring based on error estimation |
| 18: … |
| 19: … |
| 20: for i = 1,…,T1 do |
| 21: … // column vector of … |
| 22: … // calculate the optimal number of iterations of the sub-images |
| 23: for … do |
| 24: … |
| 25: … |
| 26: … |
| 27: end for |
| 28: … // reconstructed sparse representation |
| 29: … // reconstructed original signal |
| 30: end for |
| 31: … // reconstructed image without filter |
| Step 4: multimode filtering (MF) |
| 32: … |
| 33: … |
| 34: … |
| 35: … |
| 36: … |
| 37: … |
| 38: … |
| 39: … // reconstructed image with M… |

4.2. Specific Parameter Setting of FE-ABCS

4.2.1. Setting of the Weighting Coefficient

The most important step in achieving flexible partitioning is to find the minimum of the weighted MIE, and the design of the weighting coefficient function is the most critical point. This section therefore focuses on the specific design of this function, which ensures optimal partitioning of the image:

where the horizontal and vertical TS values are computed with ASM, and the threshold is the value at which the TS feature becomes significant.

4.2.2. Setting of the Adaptive Sampling Rate

In adaptive observation, the most important task is to design a reasonable random observation matrix whose dimension is constrained by the adaptive sampling rate, so that sub-images of different complexity receive different numbers of samples. The setting of the adaptive sampling rate is therefore crucial; its basic form is determined mainly by the synthetic feature (J) and the sampling rate factor.

The synthetic feature J is defined by setting its corresponding weighting coefficients, whose optimized values this article obtains through analysis and partial verification experiments.

The sampling rate factor is set to establish the mapping between the synthetic feature and the adaptive sampling rate through Equations (10) and (11). However, the mapping established by Equation (10) does not take a minimum sampling rate into account. The proposed algorithm therefore introduces a minimum sampling rate factor (MSRF) to improve performance, and the mapping is modified as follows.

- Initial value calculation: obtain the initial value of the sampling rate factor of each sub-image by Equation (10).

- Judgment through the MSRF: if the sampling rate factors of all image sub-blocks meet the minimum threshold requirement, no modification is needed; otherwise, the factors are modified.

- Modification: if …, then …; if …, then the value is modified with the following equation, where … is the number of sub-images that meet the minimum threshold requirement.
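As an illustration of the MSRF step, the hypothetical function below raises every sub-image rate that falls below a minimum to that minimum and rescales the remaining rates so that the mean rate over equal-size sub-images stays at TSR, repeating until no rescaled rate drops below the minimum. The paper's own modification equation is not reproduced; this proportional redistribution is an assumption.

```python
def apply_minimum_rate(rates, tsr, min_rate):
    """Hypothetical MSRF step: clamp low rates to min_rate and rescale the others
    proportionally so that the mean rate over equal-size sub-images stays at TSR."""
    if min_rate > tsr:
        raise ValueError("the minimum rate cannot exceed the total sampling rate")
    n = len(rates)
    fixed = [r < min_rate for r in rates]
    if not any(fixed):
        return list(rates)               # every sub-image already meets the minimum
    while not all(fixed):
        free_sum = sum(r for r, f in zip(rates, fixed) if not f)
        budget = tsr * n - min_rate * sum(fixed)     # budget left for unclamped sub-images
        scale = budget / free_sum
        new_fixed = [f or r * scale < min_rate for r, f in zip(rates, fixed)]
        if new_fixed == fixed:           # no further sub-image falls below the minimum
            return [min_rate if f else r * scale for r, f in zip(rates, fixed)]
        fixed = new_fixed
    return [tsr] * n                     # degenerate case: uniform sampling
```

Clamping one block can push a previously acceptable block below the minimum after rescaling, which is why the loop re-checks until the set of clamped blocks stops growing.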

4.2.3. Setting of the Iteration Stop Condition

The iterative reconstruction part of the proposed algorithm aims to obtain the best reconstructed image by choosing the number of iterations in an actual noisy background. Combining BIC with BCS, this paper proposes the following formula for the optimal number of iterations of the proposed algorithm:

5. Experiments and Results Analysis

To evaluate the FE-ABCS algorithm, experiments are performed in three scenarios. This paper first discusses the improvement brought by flexible partitioning and adaptive sampling in the absence of noise, then discusses how the optimal number of iterations eliminates the noise effect and achieves the best quality (comprehensive indicator) under noisy conditions, and finally analyzes the differences between the proposed algorithm and other non-CS image compression algorithms. The experiments were carried out in MATLAB R2016b, and 20 typical grayscale images with 256 × 256 resolution were used for testing, selected from the LIVE Image Quality Assessment Database, the SIPI Image Database, the BSDS500 Database, and other standard digital image processing test databases. The performance indicators are Peak Signal to Noise Ratio (PSNR), Structural Similarity (SSIM), Gradient Magnitude Similarity Deviation (GMSD), Block Effect Index (BEI), and Computational Cost (CC), defined as follows:

PSNR measures the amplitude error between the reconstructed image and the original image and is the most common objective measure of image quality:

$$ \mathrm{PSNR} = 10\log_{10}\frac{255^2 \, N}{\sum_{i}\left\|x(i) - x^*(i)\right\|_2^2} $$

where x(i) and x*(i) are the gray values of the i-th sub-image of the reconstructed image and the original image, respectively, and N is the total number of pixels.
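For reference, a minimal PSNR sketch over flat pixel lists, assuming 8-bit images (peak value 255); it returns infinity for identical images. Summing squared errors sub-image by sub-image and dividing by N gives the same mean squared error as this flat version.

```python
import math

def psnr(x_rec, x_ref, peak=255.0):
    """PSNR in dB between a reconstructed and a reference image given as flat pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(x_rec, x_ref)) / len(x_ref)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)
```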

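SSIM indicates the similarity between the reconstructed image and the original image:

$$ \mathrm{SSIM}(x, x^*) = \frac{(2\mu_x\mu_{x^*} + C_1)(2\sigma_{xx^*} + C_2)}{(\mu_x^2 + \mu_{x^*}^2 + C_1)(\sigma_x^2 + \sigma_{x^*}^2 + C_2)} $$

where $\mu_x$ and $\mu_{x^*}$ are the means of x and x*, $\sigma_x$ and $\sigma_{x^*}$ are their standard deviations, $\sigma_{xx^*}$ is the covariance of x and x*, $C_1 = (k_1 L)^2$ and $C_2 = (k_2 L)^2$ are constants with small positive $k_1$ and $k_2$, and L is the range of pixel values.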
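The following sketch evaluates the SSIM formula once over the whole image (a single global window) with the commonly used constants k1 = 0.01 and k2 = 0.03. Standard SSIM implementations instead average the index over local windows, so values from this simplified version will differ; treat it as an illustration of the formula only.

```python
def ssim_global(x, y, L=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two flat pixel lists of equal length (n >= 2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)                  # sigma_x^2
    vy = sum((b - my) ** 2 for b in y) / (n - 1)                  # sigma_y^2
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

Identical inputs give exactly 1, and a contrast-reversed copy gives a negative value because the covariance term changes sign.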

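GMSD characterizes the degree of distortion of the reconstructed image; the larger the value, the worse the quality of the reconstructed image:

$$ m_x(i) = \sqrt{(x \otimes h_x)^2(i) + (x \otimes h_y)^2(i)}, \qquad m_{x^*}(i) = \sqrt{(x^* \otimes h_x)^2(i) + (x^* \otimes h_y)^2(i)} $$

$$ \mathrm{GMS}(i) = \frac{2\,m_x(i)\,m_{x^*}(i) + c}{m_x^2(i) + m_{x^*}^2(i) + c}, \qquad \mathrm{GMSD} = \mathrm{std}(\mathrm{GMS}) $$

where std(·) is the standard deviation operator, GMS is the gradient magnitude similarity between x and x*, $m_x(i)$ and $m_{x^*}(i)$ are the gradient magnitudes of x(i) and x*(i), $h_x$ and $h_y$ represent the Prewitt operators in the horizontal and vertical directions, ⊗ denotes convolution, and c is an adjustment constant.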
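A minimal GMSD sketch, assuming 2D lists of gray values: Prewitt gradients (scaled by 1/3) are computed on the interior pixels only, the GMS map is formed pixel by pixel, and its population standard deviation is returned. The default c = 170 is an assumed value for 8-bit images, and no downsampling or border padding is applied.

```python
import math

PREWITT_X = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
PREWITT_Y = [[1, 1, 1], [0, 0, 0], [-1, -1, -1]]

def gradient_magnitude(img):
    """Prewitt gradient magnitude on interior pixels (the filter sign does not affect it)."""
    H, W = len(img), len(img[0])
    out = []
    for r in range(1, H - 1):
        for c in range(1, W - 1):
            win = [[img[r - 1 + i][c - 1 + j] for j in range(3)] for i in range(3)]
            gx = sum(PREWITT_X[i][j] * win[i][j] for i in range(3) for j in range(3)) / 3.0
            gy = sum(PREWITT_Y[i][j] * win[i][j] for i in range(3) for j in range(3)) / 3.0
            out.append(math.hypot(gx, gy))
    return out

def gmsd(img_rec, img_ref, c=170.0):
    """Standard deviation of the gradient magnitude similarity (GMS) map."""
    m1, m2 = gradient_magnitude(img_rec), gradient_magnitude(img_ref)
    gms = [(2 * a * b + c) / (a * a + b * b + c) for a, b in zip(m1, m2)]
    mean = sum(gms) / len(gms)
    return math.sqrt(sum((g - mean) ** 2 for g in gms) / len(gms))
```

Using the standard deviation rather than the mean of the GMS map makes the index sensitive to distortions that are unevenly spread across the image, such as blockiness concentrated in some regions.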

BEI is introduced to evaluate the blockiness of the algorithm under noisy conditions; the larger the value, the more obvious the block effect:

where the edge acquisition function extracts the edges of an image, the counting function returns the number of all edge points of the image, and |·| is the absolute value operator.

Computational Cost is introduced to measure the efficiency of the algorithm and is usually represented by the Computation Time (CT); the smaller the CT, the more efficient the algorithm:

$$ \mathrm{CT} = t_{\mathrm{end}} - t_{\mathrm{start}} $$

where $t_{\mathrm{start}}$ and $t_{\mathrm{end}}$ indicate the start time and end time, respectively.

In addition, the orthogonal discrete cosine transform (DCT) basis is used as the sparse basis, and orthogonal symmetric Toeplitz matrices are used as the random measurement matrices [36,37].
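The sketch below builds the two matrix families mentioned above under stated assumptions: an orthonormal DCT-II matrix (whose transpose can serve as the sparse basis Ψ) and a symmetric Toeplitz matrix generated from a random ±1 sequence, from which the first m rows are kept as a measurement matrix. The ±1 generator and row selection are hypothetical choices, and no orthogonalization is performed, so this is not the exact construction of [36,37].

```python
import math
import random

def dct_basis(n):
    """Orthonormal DCT-II matrix C (rows are basis vectors); Psi = C^T maps coefficients to pixels."""
    rows = []
    for k in range(n):
        a = math.sqrt((1.0 if k == 0 else 2.0) / n)
        rows.append([a * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)])
    return rows

def symmetric_toeplitz(n, seed=0):
    """Hypothetical generator: T[i][j] = t[|i - j|] with a random +/-1 sequence t."""
    rng = random.Random(seed)
    t = [rng.choice((-1.0, 1.0)) for _ in range(n)]
    return [[t[abs(i - j)] for j in range(n)] for i in range(n)]

def measurement_rows(T, m):
    """Keep the first m rows of T as an m x n (partial Toeplitz) measurement matrix."""
    return [list(row) for row in T[:m]]
```

A Toeplitz matrix is fully described by one generating sequence of length n, so it needs far less storage than an i.i.d. Gaussian matrix of the same size.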

5.1. Experiment and Analysis without Noise

5.1.1. Performance Comparison of Various Algorithms

To verify the superiority of the proposed algorithm, OMP is used as the basic reconstruction algorithm. Based on OMP, eight BCS algorithms (including the proposed algorithm with flexible partitioning and adaptive sampling) are compared under different total sampling rates, as shown in Table 1. In this experiment, four standard grayscale images are used for performance testing, and the sub-image dimension and the number of reconstruction iterations are limited to 256 and one quarter of the measurement dimension, respectively.

Table 1.

The Peak Signal to Noise Ratio (PSNR) and Structural Similarity (SSIM) of reconstructed images with eight BCS algorithms based on OMP. (TSR = total sampling rate).

These eight BCS algorithms are named M-B_C, M-B_S, M-FB_MIE, M-FB_WM, M-B_C-A_S, M-FB_WM-A_I, M-FB_WM-A_V, and M-FB_WM-A_S, and they represent, in turn, BCS with a fixed column block, BCS with a fixed square block, BCS with flexible partitioning by MIE, BCS with flexible partitioning by WM, BCS with a fixed column block and IE-adaptive sampling, BCS with WM-flexible partitioning and IE-adaptive sampling, BCS with WM-flexible partitioning and variance-adaptive sampling, and BCS with WM-flexible partitioning and SF-adaptive sampling (a form of the FE-ABCS algorithm in the absence of noise). Comparing the data in Table 1 leads to the following conclusions:

- The first four algorithms show that BCS with a fixed column block is inferior to BCS with a fixed square block, because square partitioning better exploits the correlation within each block. MIE-based partitioning minimizes the average information entropy of the sub-images. However, when the image has pronounced texture, MIE alone is not necessarily a good partitioning basis. Weighted MIE (WM), which also accounts for the global texture feature, achieves better performance indicators.

- Among the adaptive BCS algorithms in Table 1 that use a single feature, variance is clearly superior to IE. Variance reflects not only the dispersion of the gray-level distribution but also the relative differences in gray level between sub-images. Moreover, the synthetic feature (which incorporates local saliency) outperforms any single feature. The main reason is that it accounts for both the global differences between sub-images and the local differences within each sub-image.

- Taken together, the results of the eight algorithms in Table 1 show that adaptive sampling or flexible partitioning alone does not give the best results. The proposed algorithm, which combines both, significantly improves both PSNR and SSIM.
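The two single features compared above (IE and variance) can be computed per block as in the minimal sketch below, which assumes 8-bit grayscale blocks. The paper's weighted-MIE (WM) partitioning rule and synthetic feature are not reproduced, and the helper names are hypothetical.

The usage example illustrates two points from the list above:

- Block shape changes the mean entropy. On an image whose gray level changes only from row to row, full-column blocks have a higher mean entropy than square blocks of the same size (256 pixels).
- Two blocks can share the same IE while having very different variances.

```python
import numpy as np


def block_entropy(block: np.ndarray, levels: int = 256) -> float:
    """Shannon information entropy (bits) of a block's gray-level histogram."""
    hist = np.bincount(block.ravel().astype(np.int64), minlength=levels)
    p = hist[hist > 0] / block.size
    return float(-(p * np.log2(p)).sum())


def split_blocks(img: np.ndarray, bh: int, bw: int) -> list:
    """Non-overlapping bh x bw blocks; image sides must be multiples of bh and bw."""
    h, w = img.shape
    return [img[r:r + bh, c:c + bw] for r in range(0, h, bh) for c in range(0, w, bw)]


def mean_entropy(img: np.ndarray, bh: int, bw: int) -> float:
    """Average information entropy of the sub-images for a given block shape."""
    return float(np.mean([block_entropy(b) for b in split_blocks(img, bh, bw)]))


if __name__ == "__main__":
    # Gray level depends only on the row index: img[r, c] = r.
    img = np.repeat(np.arange(256, dtype=np.uint8)[:, None], 256, axis=1)
    print(mean_entropy(img, 256, 1))   # 256 x 1 column blocks -> 8.0
    print(mean_entropy(img, 16, 16))   # 16 x 16 square blocks -> 4.0
    # Same entropy (1 bit), very different variance.
    a = np.array([0, 1] * 128, dtype=np.uint8)
    b = np.array([0, 255] * 128, dtype=np.uint8)
    print(block_entropy(a), block_entropy(b), a.var(), b.var())  # 1.0 1.0 0.25 16256.25
```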

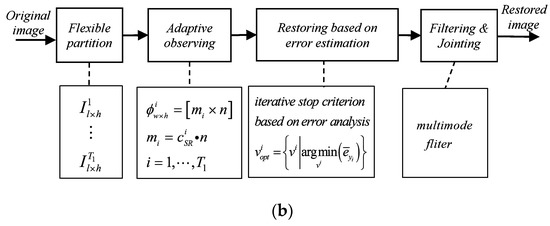

Figure 3 shows the reconstructed images of Cameraman obtained with the above eight BCS algorithms followed by multimode filtering, at a total sampling rate of 0.5. Compared with the other algorithms, the image reconstructed by the proposed algorithm (graph (i)) has good quality in both performance indicators and subjective visual quality. Comparing the corresponding data (TSR = 0.5) in Figure 3 and Table 1 shows that multimode filtering improves PSNR for all eight BCS algorithms. It also improves SSIM for the first six algorithms, but not for the last two.

The reason is that the adaptive sampling rates of the last two algorithms both depend on variance (the larger the variance, the higher the sampling rate), and SSIM depends on both variance and covariance. Moreover, while filtering out high-frequency noise, the filter also removes some high-frequency components of the signal itself, and these components contribute to SSIM. Therefore, for images rich in high-frequency components, filtering lowers the SSIM of the last two algorithms: the SSIM values of graphs (h) and (i) in Figure 3 are slightly smaller than the corresponding values in Table 1. For most images without much high-frequency content, SSIM is improved.

Figure 3.

Reconstructed images of Cameraman and performance indicators with different BCS algorithms (TSR = 0.5).
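The argument above relies on SSIM depending on the means, variances and covariance of the two images. The sketch below makes that dependence explicit with a single-window (global) SSIM, using the constants K1 = 0.01 and K2 = 0.03 from the standard SSIM definition. Standard SSIM instead averages this expression over local Gaussian windows, so the values are illustrative only.

The usage example smooths a high-frequency checkerboard with a 3 × 3 box filter and shows the resulting drop in variance and in SSIM. The box filter is an assumed, simple stand-in for the paper's multimode filter.

```python
import numpy as np


def psnr(ref: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((ref.astype(float) - test.astype(float)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def global_ssim(x: np.ndarray, y: np.ndarray, peak: float = 255.0) -> float:
    """SSIM evaluated once over the whole image (no sliding window)."""
    x = x.astype(float)
    y = y.astype(float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()                  # variances
    cxy = ((x - mx) * (y - my)).mean()         # covariance
    num = (2 * mx * my + c1) * (2 * cxy + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


def box3(img: np.ndarray) -> np.ndarray:
    """3 x 3 mean filter with wrap-around borders (stand-in low-pass filter)."""
    img = img.astype(float)
    return sum(np.roll(np.roll(img, dr, 0), dc, 1)
               for dr in (-1, 0, 1) for dc in (-1, 0, 1)) / 9.0


if __name__ == "__main__":
    r, c = np.indices((16, 16))
    checker = 255.0 * ((r + c) % 2)            # maximal high-frequency content
    smooth = box3(checker)
    print(checker.var(), smooth.var())         # variance collapses after filtering
    print(global_ssim(checker, checker), global_ssim(checker, smooth), psnr(checker, smooth))
```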

Secondly, the proposed method was tested beyond OMP to evaluate its effectiveness and generality. The IRLS and BP reconstruction algorithms were each combined with the eight BCS schemes. Table 2 records the results of these two groups of algorithms on the standard image Lena. The data show that the proposed method also improves IRLS- and BP-based BCS. The cost is a slightly longer computation time, owing to the higher complexity of the proposed algorithm itself.

Table 2.

The PSNR and SSIM of reconstructed images with eight BCS algorithms based on iteratively reweighted least squares (IRLS) and basis pursuit (BP).
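For readers unfamiliar with IRLS, the following minimal sketch shows one common IRLS variant for sparse recovery: a weighted minimum-norm update with a decreasing smoothing parameter $\varepsilon$, in the style of Chartrand and Yin. It is not the IRLS implementation used in the experiments, and the parameters `p`, `eps_schedule` and `inner` are illustrative assumptions.

Each update computes $x = QA^{\mathsf T}(AQA^{\mathsf T})^{-1}y$ with $Q = \operatorname{diag}\big((x_i^2+\varepsilon)^{1-p/2}\big)$. Every iterate therefore satisfies the measurements exactly, while small coefficients are progressively suppressed.

```python
import numpy as np


def irls(a: np.ndarray, y: np.ndarray, p: float = 1.0,
         eps_schedule=(1.0, 1e-1, 1e-2, 1e-3, 1e-4, 1e-5, 1e-6, 1e-7, 1e-8),
         inner: int = 20) -> np.ndarray:
    """IRLS approximation of min ||x||_p subject to a @ x = y (0 < p <= 1)."""
    x = a.T @ np.linalg.solve(a @ a.T, y)          # minimum l2-norm starting point
    for eps in eps_schedule:                       # gradually sharpen the smoothing
        for _ in range(inner):
            q = (x ** 2 + eps) ** (1.0 - p / 2.0)  # diagonal of Q (inverse weights)
            aq = a * q                             # a @ diag(q) via broadcasting
            x = q * (a.T @ np.linalg.solve(aq @ a.T, y))
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, k = 128, 256, 10
    a = rng.standard_normal((m, n)) / np.sqrt(m)
    x_true = np.zeros(n)
    x_true[rng.choice(n, k, replace=False)] = rng.standard_normal(k)
    x_hat = irls(a, a @ x_true)
    print("relative error:", np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true))
```

Every iteration solves an M × M linear system, which is one reason IRLS-based variants tend to be slower than greedy methods such as OMP.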

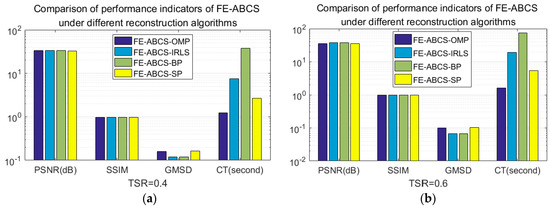

Furthermore, the proposed algorithm was combined with four different reconstruction algorithms (OMP, IRLS, BP, and SP) for comparison. Figure 4 records the results on the standard image Lena at TSR = 0.4 and TSR = 0.6. The four variants differ little in PSNR and SSIM. In GMSD, however, IRLS and BP are clearly better than OMP and SP. In computation time, BP is based on ℓ1-norm minimization and is significantly slower than the other three, which is consistent with Section 1.

Figure 4.

The comparison of the proposed algorithm with 4 reconstruction algorithms (OMP, IRLS, BP, SP): (a) TSR = 0.4, (b) TSR = 0.6.
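GMSD is used above without a formula. The sketch below follows the published definition of Xue et al. (2014) as we understand it:

- gradient magnitudes are computed with 3 × 3 Prewitt filters;
- a pixel-wise gradient magnitude similarity (GMS) map is formed;
- the score is the standard deviation of that map (lower is better).

The sketch makes the following simplifications and assumptions:

- the 2 × 2 averaging and down-sampling step of the reference implementation is omitted;
- borders are handled by edge replication;
- the constant c = 170 for 8-bit images is taken from the authors' reference code, to our understanding.

Values will therefore not match published GMSD figures exactly.

```python
import numpy as np

PREWITT_X = np.array([[1, 0, -1], [1, 0, -1], [1, 0, -1]], dtype=float) / 3.0
PREWITT_Y = PREWITT_X.T


def filter3(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """3 x 3 correlation with edge-replicated borders (output has the input's shape)."""
    h, w = img.shape
    p = np.pad(img.astype(float), 1, mode="edge")
    out = np.zeros((h, w))
    for dr in range(3):
        for dc in range(3):
            out += k[dr, dc] * p[dr:dr + h, dc:dc + w]
    return out


def gradient_magnitude(img: np.ndarray) -> np.ndarray:
    """Prewitt gradient magnitude at every pixel."""
    return np.hypot(filter3(img, PREWITT_X), filter3(img, PREWITT_Y))


def gmsd(ref: np.ndarray, dist: np.ndarray, c: float = 170.0) -> float:
    """Simplified GMSD: standard deviation of the gradient magnitude similarity map."""
    mr, md = gradient_magnitude(ref), gradient_magnitude(dist)
    gms = (2 * mr * md + c) / (mr ** 2 + md ** 2 + c)
    return float(gms.std())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.integers(0, 256, size=(64, 64)).astype(float)
    noisy = np.clip(ref + rng.normal(0, 20, ref.shape), 0, 255)
    print(gmsd(ref, ref), gmsd(ref, noisy))
```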

5.1.2. Parametric Analysis of the Proposed Algorithm

The key design points of the proposed algorithm are the three weighting coefficients and the minimum sampling rate factor (MSRF). The design of the three weighting coefficients was introduced in Section 4.2. Their effect on performance was reflected in the comparison of the eight algorithms in Section 5.1.1. Therefore, only the selection and effect of the MSRF remain to be studied here, namely its influence on PSNR under different TSRs.

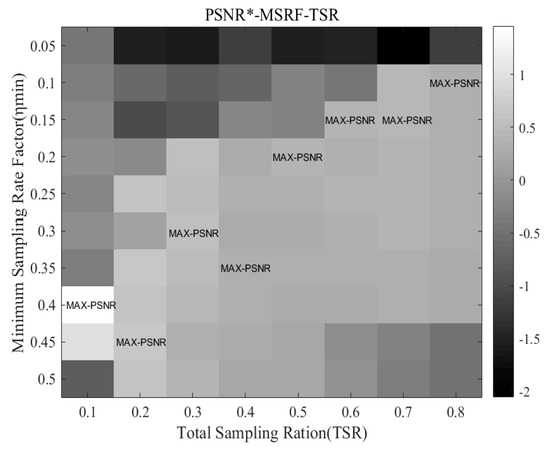

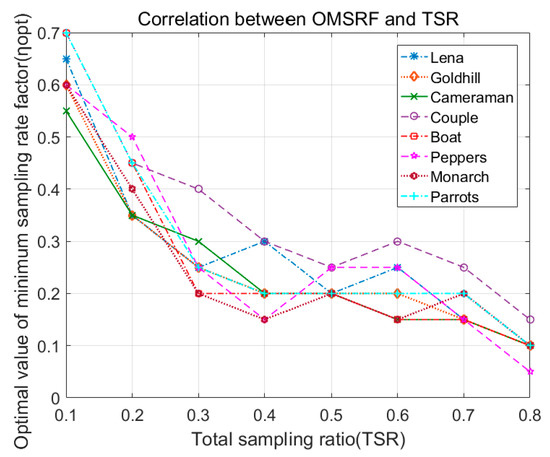

Figure 5 shows, for the test image Lena, the relationship between PSNR, TSR, and MSRF. The optimal minimum sampling rate factor (OMSRF) is the value that maximizes the PSNR of the recovered Lena image, and it decreases as TSR increases. The gray level in Figure 5 represents the revised PSNR (PSNR*) of the recovered image.

Figure 5.

Correlation between PSNR*, MSRF, and TSR of test image Lena.

Many other test images were then analyzed to verify the relationship between TSR and OMSRF; the results for eight typical test images are shown in Figure 6. From these data, a reasonable setting of the MSRF can be obtained by curve fitting. The proposed algorithm uses the baseline fitting method (a simple curve-fitting method) to set the MSRF as a function of TSR.

Figure 6.

Correlation between OMSRF and TSR.
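The MSRF formula and the fitted baseline curve are defined by the paper's own equations, which are not reproduced in this section. The sketch below is a purely hypothetical illustration of how a minimum sampling rate factor λ can bound adaptive sampling. It assumes that every block first receives λ·TSR and that the remaining (1 − λ)·TSR budget is shared in proportion to a block feature such as SF. The allocation rule, the name `allocate_measurements`, and λ as used here are assumptions, not the FE-ABCS definitions.

```python
import numpy as np


def allocate_measurements(features, n: int, tsr: float, lam: float) -> np.ndarray:
    """HYPOTHETICAL rule: number of measurements per block of n pixels.

    Every block is guaranteed a rate of lam * tsr; the remaining (1 - lam) * tsr
    is distributed in proportion to the block features, so the mean rate is tsr.
    """
    f = np.asarray(features, dtype=float)
    rates = lam * tsr + (1.0 - lam) * tsr * f.size * f / f.sum()
    rates = np.minimum(rates, 1.0)  # clipping at rate 1 can leave part of the budget unused
    return np.round(rates * n).astype(int)


if __name__ == "__main__":
    # Two blocks of 256 pixels; the second is three times as "detailed" as the first.
    print(allocate_measurements([1.0, 3.0], n=256, tsr=0.5, lam=0.5))  # [ 96 160]
```

Under this assumed rule, λ = 1 reduces to uniform sampling and λ = 0 to purely feature-proportional sampling. An intermediate value guarantees that smooth blocks are never starved of measurements.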

5.2. Experiment and Analysis Under Noisy Conditions

5.2.1. Effect Analysis of Different Iteration Stop Conditions on Performance

In the noiseless case, the more iterations the reconstruction algorithm performs, the better the reconstructed image. Under noisy conditions, however, reconstruction quality does not increase monotonically with the number of iterations, as analyzed in Section 3.3. The usual iteration stop conditions are:

(1) the signal sparsity, i.e., a fixed number of iterations; and

(2) a differential threshold (γ) on the recovered value, i.e., stopping when the difference between two successive iterative outputs is less than γ.

Neither condition guarantees optimal recovery of the original signal in a noisy background. The FE-ABCS algorithm addresses this deficiency by introducing a constraint based on error analysis to determine the best iteration for reconstruction. This section verifies the rationality of the scheme experimentally. Without loss of generality, OMP is again used as the basic reconstruction algorithm, as in Section 5.1.
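The stop conditions can be contrasted on a single OMP run by recording the estimate after every iteration and then choosing when to stop. In the sketch below, conditions (1) and (2) follow the text. The error-analysis constraint of FE-ABCS (Equation (44) of the paper) is not reproduced. Instead, a generic BIC-style model-order criterion on the residual, $m\ln(\lVert r_k\rVert^2/m) + k\ln m$, is used as a plainly labeled stand-in. All function names are hypothetical.

```python
import numpy as np


def omp_path(a: np.ndarray, y: np.ndarray, max_iter: int) -> list:
    """Run OMP for max_iter iterations and keep the estimate after each one."""
    n = a.shape[1]
    residual, support, path = y.copy(), [], []
    for _ in range(max_iter):
        j = int(np.argmax(np.abs(a.T @ residual)))
        if j not in support:
            support.append(j)
        coef, *_ = np.linalg.lstsq(a[:, support], y, rcond=None)
        s = np.zeros(n)
        s[support] = coef
        path.append(s)
        residual = y - a @ s
    return path


def stop_fixed(path: list, k: int) -> np.ndarray:
    """(1) Fixed number of iterations, e.g. the assumed sparsity k."""
    return path[min(k, len(path)) - 1]


def stop_threshold(path: list, gamma: float) -> np.ndarray:
    """(2) Stop once two successive outputs differ by less than gamma."""
    for prev, cur in zip(path, path[1:]):
        if np.linalg.norm(cur - prev) < gamma:
            return cur
    return path[-1]


def stop_bic(path: list, a: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Stand-in for error analysis: pick the iteration minimising a BIC-style score."""
    m = y.size
    scores = [m * np.log(np.sum((y - a @ s) ** 2) / m + 1e-300) + (i + 1) * np.log(m)
              for i, s in enumerate(path)]
    return path[int(np.argmin(scores))]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, k = 64, 128, 5
    a = rng.standard_normal((m, n)) / np.sqrt(m)
    x = np.zeros(n)
    x[rng.choice(n, k, replace=False)] = rng.uniform(1.0, 2.0, k) * rng.choice([-1.0, 1.0], k)
    y = a @ x + 0.05 * rng.standard_normal(m)   # noisy measurements
    path = omp_path(a, y, max_iter=m // 2)
    for name, est in [("fixed (32 it.)", stop_fixed(path, m // 2)),
                      ("threshold", stop_threshold(path, 1e-3)),
                      ("BIC stand-in", stop_bic(path, a, y))]:
        print(name, np.linalg.norm(est - x) / np.linalg.norm(x))
```

With noisy measurements, running many iterations keeps adding atoms that fit the noise. A penalised residual criterion trades fit against model order instead of relying on a fixed count or on small successive changes.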

Table 3 records the experimental results for the test image Lena with different iteration stop conditions under different noise backgrounds. Noise-std denotes the standard deviation of the additive Gaussian noise. The overall trend in Table 3 shows that the error-analysis-based constraint performs better as a stop condition for iterative reconstruction than the sparsity-based fixed number of iterations. This advantage becomes more pronounced as Noise-std increases.

Table 3.

The experimental results of Lena at different stop conditions and noise background (TSR = 0.4).

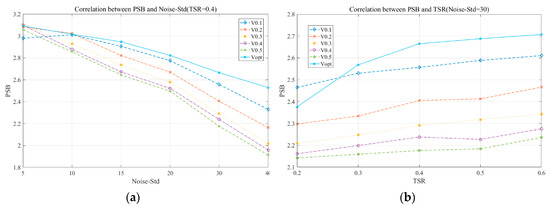

In addition, the three indicators above are combined into a composite index PSB (PSB = PSNR × SSIM/BEI) to evaluate comprehensively how the iteration stop condition affects the reconstructed images. The relationships of PSB with Noise-std and with TSR were studied for the reconstructed images, and Figure 7 shows them for Lena under the six iteration stop conditions. Figure 7a shows that the error-analysis-based reconstruction algorithm generally performs relatively well under different noise backgrounds, compared with the other five sparsity-based reconstruction algorithms. Similarly, Figure 7b shows that it has advantages over the other algorithms at different total sampling rates.

Figure 7.

The correlation between the PSB, Noise-std, and TSR under the six different iteration stop conditions of Lena: (a) PSB changes with Noise-std, (b) PSB changes with TSR.

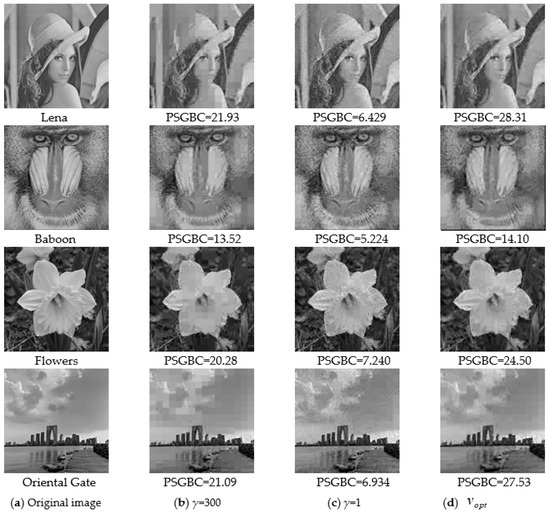

Furthermore, the differential-threshold (γ)-based reconstruction algorithm and the error-analysis-based reconstruction algorithm were compared. Two standard test images and two real-world images were used in this comparative experiment at Noise-std = 20 and TSR = 0.5. Table 4 shows that the error-analysis-based algorithm has a significant advantage over the γ-based algorithm in both PSNR and PSGBC, although it is slightly worse in BEI. PSGBC is another composite index: PSGBC = PSNR × SSIM/GMSD/BEI/CT. Figure 8 shows the reconstructions of these four images with the differential threshold (γ) and with error analysis as the iteration stop condition.

Table 4.

The performance indexes of test images under different iteration stop conditions.

Figure 8.

Images reconstructed with the differential threshold (γ) and with error analysis as the iteration stop condition, at Noise-std = 20 and TSR = 0.5.
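Both composite indices place larger-is-better metrics (PSNR, SSIM) in the numerator and smaller-is-better metrics in the denominator: BEI for PSB, plus GMSD and CT for PSGBC. A larger value therefore indicates a better overall result. The sketch below transcribes the two definitions given in the text directly. The input values in the example are purely hypothetical.

```python
def psb(psnr: float, ssim: float, bei: float) -> float:
    """PSB = PSNR x SSIM / BEI."""
    return psnr * ssim / bei


def psgbc(psnr: float, ssim: float, gmsd: float, bei: float, ct: float) -> float:
    """PSGBC = PSNR x SSIM / GMSD / BEI / CT."""
    return psnr * ssim / gmsd / bei / ct


if __name__ == "__main__":
    # Hypothetical metric values, not taken from Table 3 or Table 4.
    print(psb(30.0, 0.9, 1.5))              # approximately 18
    print(psgbc(30.0, 0.9, 0.2, 1.5, 2.0))  # approximately 45
```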

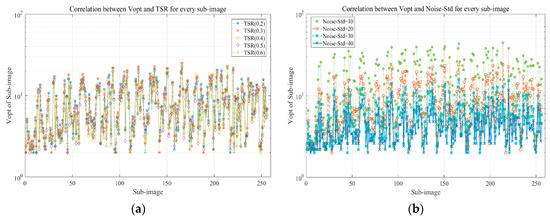

5.2.2. Impact of Noise-Std and TSR on the Optimal Iteration Stop Condition

Since the optimal iteration stop condition is central to the proposed algorithm, its influencing factors need to be analyzed. According to Equation (44), it is mainly determined by the measurement dimension of the signal and the added noise intensity under the BIC condition. In this section, the test image Lena is divided into 256 sub-images. The relationship between each sub-image's optimal iterative recovery stop condition, TSR, and Noise-std is then analyzed, with the results shown in Figure 9. Figure 9a shows that the optimal stop condition is only weakly correlated with TSR. Figure 9b shows that it is strongly correlated with Noise-std: the larger the Noise-std, the smaller its value.

Figure 9.

Correlation between the optimal iteration stop condition of sub-images, TSR, and Noise-std: (a) Noise-std = 20, (b) TSR = 0.4.

5.3. Application and Comparison Experiment of FE-ABCS Algorithm in Image Compression

5.3.1. Application of FE-ABCS Algorithm in Image Compression

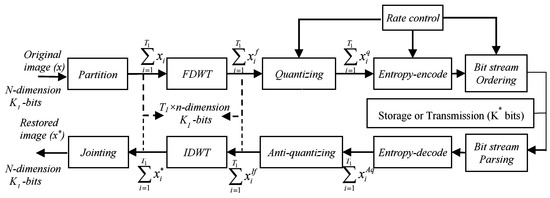

The FE-ABCS algorithm belongs to CS theory, which is mainly used to reconstruct sparse images at low sampling rates. After modification, however, it can also be used for image compression. Conventional image compression algorithms (such as JPEG, JPEG2000, TIFF, and PNG) aim to reduce the amount of data while maintaining acceptable image quality through quantization and encoding. Therefore, quantization and encoding modules are added to the FE-ABCS algorithm of Figure 2b to form a new image compression algorithm, named FE-ABCS-QC. Its workflow is shown in Figure 10.

Figure 10.

The workflow of the FE-ABCS-QC algorithm.
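The quantization and encoding modules of FE-ABCS-QC are not specified in this section. As a hypothetical example of the quantization step, the sketch below applies a uniform scalar quantizer with step size `delta` to the CS measurements and dequantizes them before restoration. The step size, the function names and the choice of uniform quantization are assumptions. Only the integer indices `q` would be passed to the entropy coder, and the reconstruction error per measurement is at most delta/2.

```python
import numpy as np


def quantize(y: np.ndarray, delta: float) -> np.ndarray:
    """Uniform scalar quantizer: map each measurement to an integer index."""
    return np.round(y / delta).astype(np.int64)


def dequantize(q: np.ndarray, delta: float) -> np.ndarray:
    """Map indices back to reconstruction levels (the centre of each cell)."""
    return q.astype(float) * delta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = rng.normal(0.0, 50.0, size=1000)  # stand-in for the CS measurements of one block
    delta = 4.0
    q = quantize(y, delta)
    err = np.abs(y - dequantize(q, delta))
    print("max error:", err.max(), "<= delta / 2 =", delta / 2)
    print("distinct indices to encode:", np.unique(q).size)
```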

JPEG2000 is selected, without loss of generality, as the comparison algorithm to highlight how the proposed algorithm differs from traditional image compression; its workflow is shown in Figure 11. Comparing Figures 10 and 11, the forward and inverse discrete wavelet transform modules (FDWT and IDWT) of JPEG2000 are replaced by the observing and restoring modules of the proposed algorithm, respectively. In the observing and restoring modules, the input and output signals have different dimensions (M < T1 × n = N). In the FDWT and IDWT modules, they have the same dimension (both T1 × n = N). Under the same quantization and encoding conditions, these differences give the proposed algorithm a larger compression ratio (CR) and fewer bits per pixel (bpp) than JPEG2000.

Figure 11.

The workflow of the JPEG2000 algorithm.

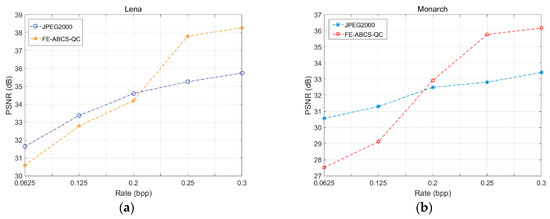

5.3.2. Comparison Experiment between the Proposed Algorithm and the JPEG2000 Algorithm

Image compression algorithms are generally evaluated by their rate-distortion performance. To compare the FE-ABCS-QC and JPEG2000 algorithms, this section adopts PSNR, SSIM, and GMSD as indicators. In addition, the rate (in bpp) of both algorithms is defined as

$$\mathrm{Rate} = \frac{K^*}{N}$$

where $K^*$ is the number of bits in the encoded code stream, and $N$ is the number of pixels in the original image.
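Rate follows directly from this definition. For comparison, a compression ratio can be computed as the number of bits in the original image divided by K*. This CR definition and the 8-bit depth are assumptions, since CR is not defined in this section. For an 8-bit image, CR is then simply 8/Rate; for example, 0.25 bpp corresponds to CR = 32.

```python
def rate_bpp(k_bits: int, n_pixels: int) -> float:
    """Rate = K* / N, in bits per pixel."""
    return k_bits / n_pixels


def compression_ratio(k_bits: int, n_pixels: int, bit_depth: int = 8) -> float:
    """Assumed CR definition: original bits / encoded bits."""
    return n_pixels * bit_depth / k_bits


if __name__ == "__main__":
    n = 512 * 512  # pixels in a 512 x 512 image
    k = 65_536     # bits in the encoded stream
    print(rate_bpp(k, n), compression_ratio(k, n))  # 0.25 32.0
```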

To compare the performance of the two algorithms, multiple standard images were tested. Table 5 records the complete experimental data for both algorithms at various rates for the test images Lena and Monarch. Figure 12 plots PSNR (the main distortion index) against rate for these two images. Table 5 and Figure 12 show that the advantage of FE-ABCS-QC over JPEG2000 grows as the rate increases. At small rates JPEG2000 is superior, whereas at medium and slightly larger rates FE-ABCS-QC performs better.

Table 5.

Comparison results of different test images at various rates (bits per pixel, bpp) for the JPEG2000 and FE-ABCS-QC algorithms.

Figure 12.

Rate-distortion performance for JPEG2000 and FE-ABCS-QC: (a) Lena, (b) Monarch.

In addition to the objective data, the results are also compared visually. Figure 13 compares the restored images of the two algorithms for the test image Bikes at bpp = 0.25. Comparing Figures 13b and 13c, the image produced by FE-ABCS-QC is slightly better than that of JPEG2000, both in objective metrics and in subjective perception.

Figure 13.

The two algorithms’ comparison of test image Bikes at the condition of bpp = 0.25: (a) original image, (b) JPEG2000 image (PSNR = 29.80, SSIM = 0.9069, GMSD = 0.1964), (c) image by the FE-ABCS-QC algorithm (PSNR = 30.50, SSIM = 0.9366, GMSD = 0.1574).

Finally, the experimental data and theoretical analysis lead to the following conclusions.

- Small Rate (bpp): FE-ABCS-QC performs worse than JPEG2000 in this case. The measurement dimension M changes with the rate, and here it is too small for the observing process to capture the overall information of the image.

- Medium or slightly larger Rate (bpp): FE-ABCS-QC performs better than JPEG2000 in this case. An appropriate M ensures complete acquisition of the image information while still providing a certain compression ratio, which gives a better basis for quantization and encoding.

- Large Rate (bpp): this case is not considered for FE-ABCS-QC, because as a CS algorithm it inherently requires M << N.

6. Conclusions

Based on the traditional block compressive sensing model, this paper proposed an improved algorithm (FE-ABCS) and specified its overall workflow and key points. Compared with the traditional BCS algorithm, the proposed algorithm makes three changes:

- it adopts flexible partitioning to make the partitioning more rational;
- it uses the synthetic feature to provide a more reasonable basis for adaptive sampling of each sub-image block;
- it adds error analysis to the iterative reconstruction process to minimize the error between the reconstructed and original signals in a noisy background.

The experimental results show that the proposed algorithm improves image quality in both noiseless and noisy backgrounds. The gain is largest in the composite index of the reconstructed image under noise. The algorithm should therefore benefit the practical application of BCS algorithms, including the application of FE-ABCS to image compression.

Author Contributions

Conceptualization, methodology, software, validation and writing, Y.Z.; data curation and visualization, Q.S. and Y.Z.; formal analysis, W.L. and Y.Z.; supervision, project administration and funding acquisition, W.L.

Funding

This work was supported by the National Natural Science Foundation of China (61471191).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candès, E.J.; Wakin, M.B. An introduction to compressive sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

- Shi, G.; Liu, D.; Gao, D. Advances in theory and application of compressed sensing. Acta Electron. Sin. 2009, 37, 1070–1081.

- Sun, Y.; Xiao, L.; Wei, Z. Representations of images by a multi-component Gabor perception dictionary. Acta Electron. Sin. 2008, 34, 1379–1387.

- Xu, J.; Zhang, Z. Self-adaptive image sparse representation algorithm based on clustering and its application. Acta Photonica Sin. 2011, 40, 316–320.

- Wang, G.; Niu, M.; Gao, J.; Fu, F. Deterministic constructions of compressed sensing matrices based on affine singular linear space over finite fields. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2018, 101, 1957–1963.

- Li, S.; Wei, D. A survey on compressive sensing. Acta Autom. Sin. 2009, 35, 1369–1377.

- Palangi, H.; Ward, R.; Deng, L. Distributed compressive sensing: A deep learning approach. IEEE Trans. Signal Process. 2016, 64, 4504–4518.

- Chen, C.; He, L.; Li, H.; Huang, J. Fast iteratively reweighted least squares algorithms for analysis-based sparse reconstruction. Med. Image Anal. 2018, 49, 141–152.

- Gan, L. Block compressed sensing of natural images. In Proceedings of the 15th International Conference on Digital Signal Processing, Cardiff, UK, 1–4 July 2007; pp. 403–406.

- Unde, A.S.; Deepthi, P.P. Fast BCS-FOCUSS and DBCS-FOCUSS with augmented Lagrangian and minimum residual methods. J. Vis. Commun. Image Represent. 2018, 52, 92–100.

- Kim, S.; Yun, U.; Jang, J.; Seo, G.; Kang, J.; Lee, H.N.; Lee, M. Reduced computational complexity orthogonal matching pursuit using a novel partitioned inversion technique for compressive sensing. Electronics 2018, 7, 206.

- Qi, R.; Yang, D.; Zhang, Y.; Li, H. On recovery of block sparse signals via block generalized orthogonal matching pursuit. Signal Process. 2018, 153, 34–46.

- Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

- Lotfi, M.; Vidyasagar, M. A fast noniterative algorithm for compressive sensing using binary measurement matrices. IEEE Trans. Signal Process. 2018, 66, 4079–4089.

- Yang, J.; Zhang, Y. Alternating direction algorithms for l1 problems in compressive sensing. SIAM J. Sci. Comput. 2011, 33, 250–278.

- Yin, H.; Liu, Z.; Chai, Y.; Jiao, X. Survey of compressed sensing. Control Decis. 2013, 28, 1441–1445.

- Dinh, K.Q.; Jeon, B. Iterative weighted recovery for block-based compressive sensing of image/video at a low subrate. IEEE Trans. Circ. Syst. Video Technol. 2017, 27, 2294–2308.

- Liu, L.; Xie, Z.; Yang, C. A novel iterative thresholding algorithm based on plug-and-play priors for compressive sampling. Future Internet 2017, 9, 24.

- Wang, Y.; Wang, J.; Xu, Z. Restricted p-isometry properties of nonconvex block-sparse compressed sensing. Signal Process. 2014, 104, 1188–1196.

- Mahdi, S.; Tohid, Y.R.; Mohammad, A.T.; Amir, R.; Azam, K. Block sparse signal recovery in compressed sensing: Optimum active block selection and within-block sparsity order estimation. Circuits Syst. Signal Process. 2018, 37, 1649–1668.

- Wang, R.; Jiao, L.; Liu, F.; Yang, S. Block-based adaptive compressed sensing of image using texture information. Acta Electron. Sin. 2013, 41, 1506–1514.

- Amit, S.U.; Deepthi, P.P. Block compressive sensing: Individual and joint reconstruction of correlated images. J. Vis. Commun. Image Represent. 2017, 44, 187–197.

- Liu, Q.; Wei, Q.; Miao, X.J. Blocked image compression and reconstruction algorithm based on compressed sensing. Sci. Sin. 2014, 44, 1036–1047.

- Wang, H.L.; Wang, S.; Liu, W.Y. An overview of compressed sensing implementation and application. J. Detect. Control 2014, 36, 53–61.

- Xiao, D.; Xin, C.; Zhang, T.; Zhu, H.; Li, X. Saliency texture structure descriptor and its application in pedestrian detection. J. Softw. 2014, 25, 675–689.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Texture features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Cao, Y.; Bai, S.; Cao, M. Image compression sampling based on adaptive block compressed sensing. J. Image Graph. 2016, 21, 416–424.

- Shen, J. Weber’s law and weberized TV restoration. Phys. D Nonlinear Phenom. 2003, 175, 241–251.

- Li, R.; Cheng, Y.; Li, L.; Chang, L. An adaptive blocking compression sensing for image compression. J. Zhejiang Univ. Technol. 2018, 46, 392–395.

- Liu, H.; Wang, C.; Chen, Y. FBG spectral compression and reconstruction method based on segmented adaptive sampling compressed sensing. Chin. J. Lasers 2018, 45, 279–286.

- Li, R.; Gan, Z.; Zhu, X. Smoothed projected Landweber image compressed sensing reconstruction using hard thresholding based on principal components analysis. J. Image Graph. 2013, 18, 504–514.

- Gershgorin, S. Ueber die Abgrenzung der Eigenwerte einer Matrix. Izv. Akad. Nauk. SSSR Ser. Math. 1931, 1, 749–754.

- Beheshti, S.; Dahleh, M.A. Noisy data and impulse response estimation. IEEE Trans. Signal Process. 2010, 58, 510–521.

- Beheshti, S.; Dahleh, M.A. A new information-theoretic approach to signal denoising and best basis selection. IEEE Trans. Signal Process. 2005, 53, 3613–3624.

- Bottcher, A. Orthogonal symmetric Toeplitz matrices. Complex Anal. Oper. Theory 2008, 2, 285–298.

- Duan, G.; Hu, W.; Wang, J. Research on the natural image super-resolution reconstruction algorithm based on compressive perception theory and deep learning model. Neurocomputing 2016, 208, 117–126.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).