Machine Learning Prediction Approach to Enhance Congestion Control in 5G IoT Environment

Abstract

1. Introduction

2. Related Work

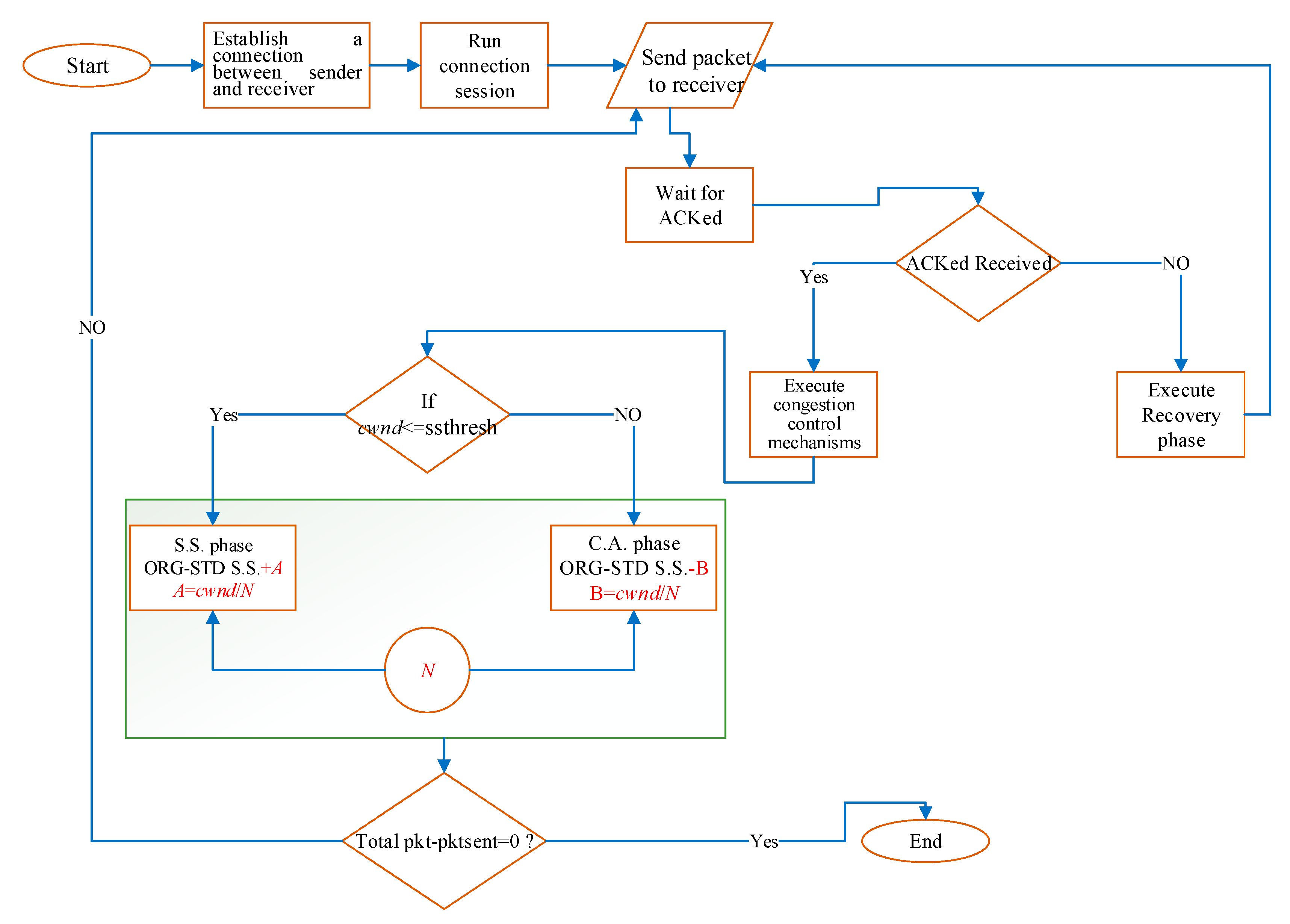

3. Proposed Congestion Control Prediction Approach

3.1. Modeling and Mathematical Formulation

3.1.1. Enhanced Slow-Start

3.1.2. Enhanced Congestion Avoidance

3.2. Development Phase

3.3. Preparing the Training Dataset

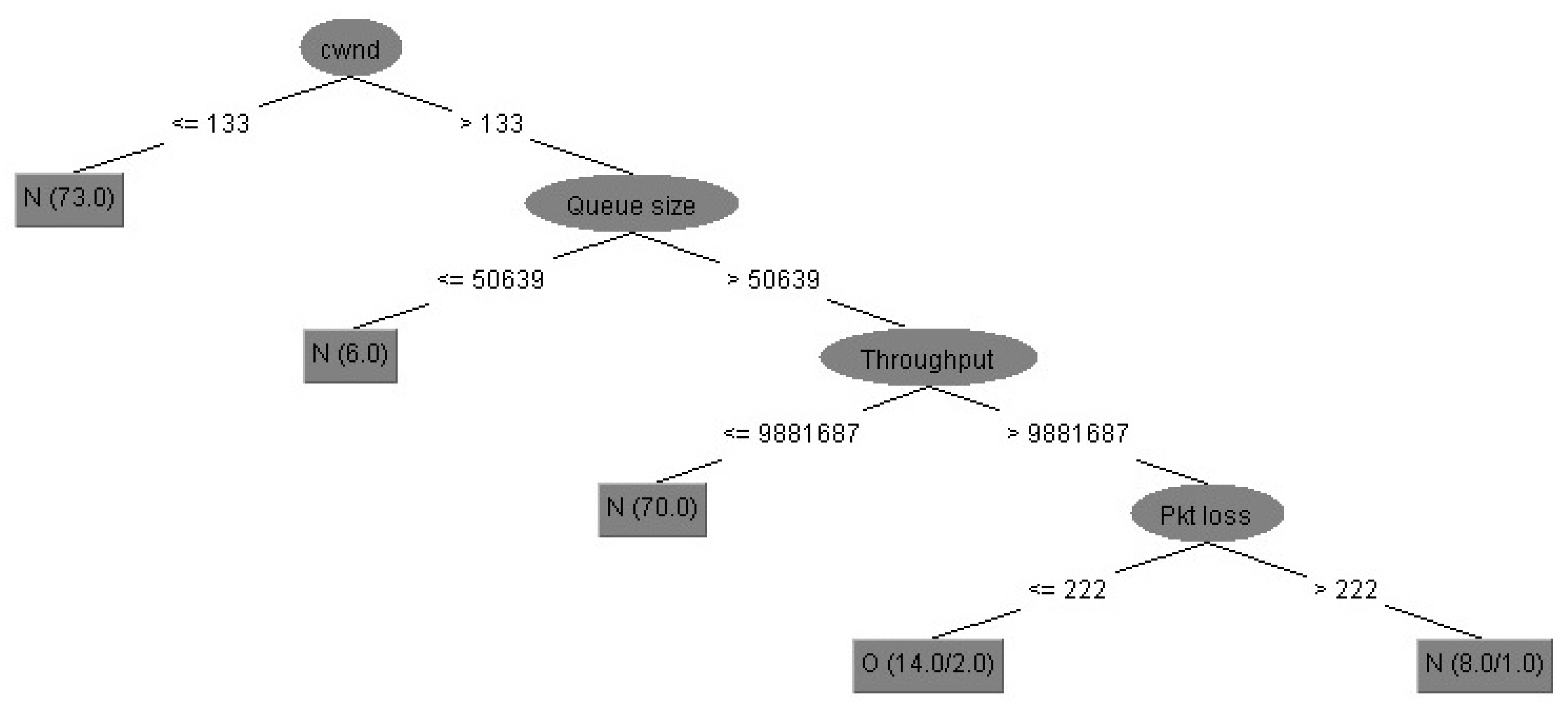

3.3.1. Applying the Machine Learning Approach

- Check whether all instances belong to the same class. If they do, the tree is a leaf labeled with that class.

- For each attribute, compute its information and its information gain.

- Based on the current selection criterion, select the best splitting attribute.
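The three steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it scores categorical attributes with plain information gain, whereas C4.5 proper normalizes gain into a gain ratio and also handles numeric attributes. The attribute names `loss` and `queue` and the records are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(rows, labels, attr):
    """Entropy reduction obtained by splitting the node on a categorical attribute."""
    total = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attr], []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def choose_split(rows, labels, attrs):
    """Return ("leaf", class) for a pure node, else ("split", best attribute)."""
    if len(set(labels)) == 1:  # step 1: every instance has the same class
        return ("leaf", labels[0])
    gains = {a: information_gain(rows, labels, a) for a in attrs}  # step 2
    return ("split", max(gains, key=gains.get))  # step 3: highest gain wins


# Hypothetical, already-discretized records labeled O / N.
rows = [
    {"loss": "low", "queue": "short"},
    {"loss": "low", "queue": "long"},
    {"loss": "high", "queue": "short"},
    {"loss": "high", "queue": "long"},
]
labels = ["O", "O", "N", "N"]
print(choose_split(rows, labels, ["loss", "queue"]))  # ('split', 'loss')
```

Here `loss` separates the two classes perfectly (gain of 1 bit), while `queue` leaves each branch evenly mixed (gain of 0), so `loss` is chosen for the split.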

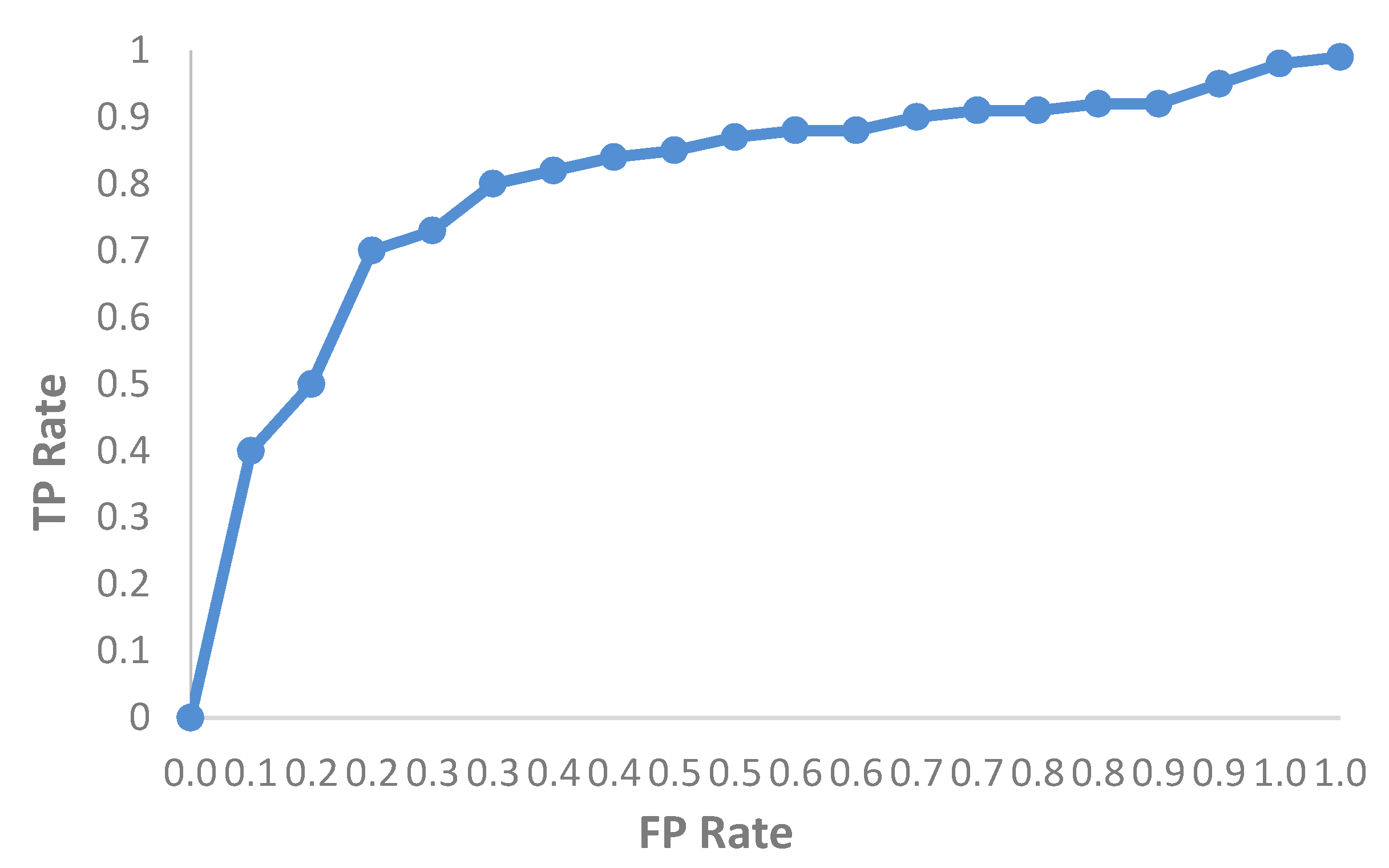

3.3.2. Model Evaluation

4. Performance Evaluation

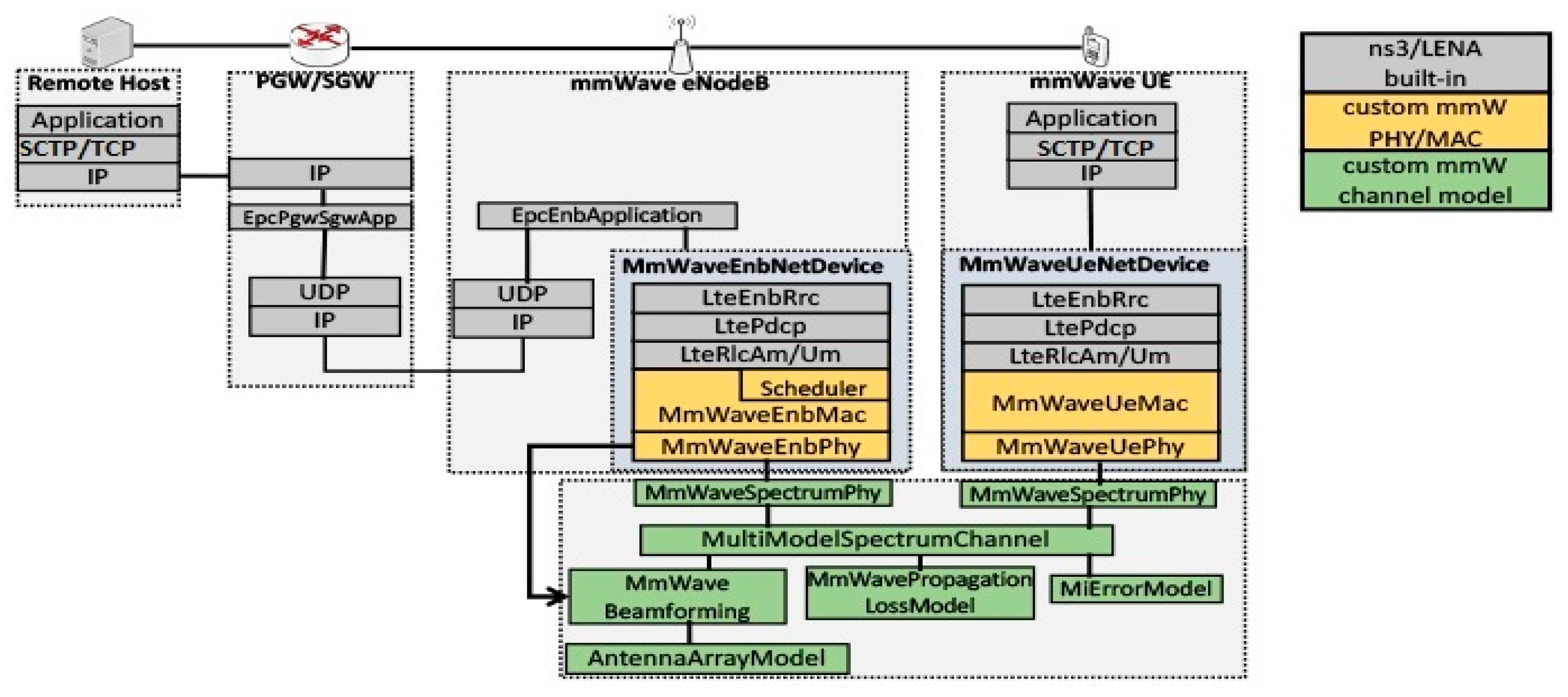

4.1. Simulation Setup

4.2. Machine Learning Implementation

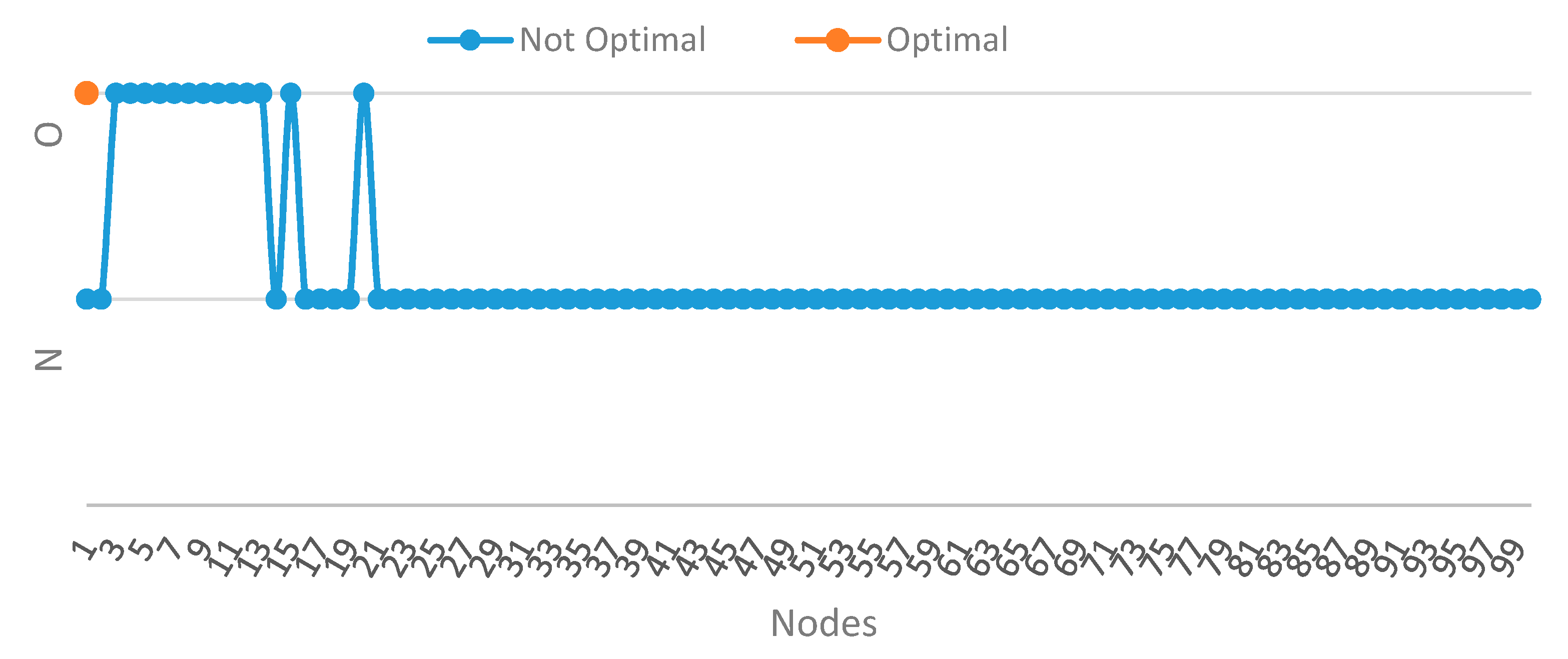

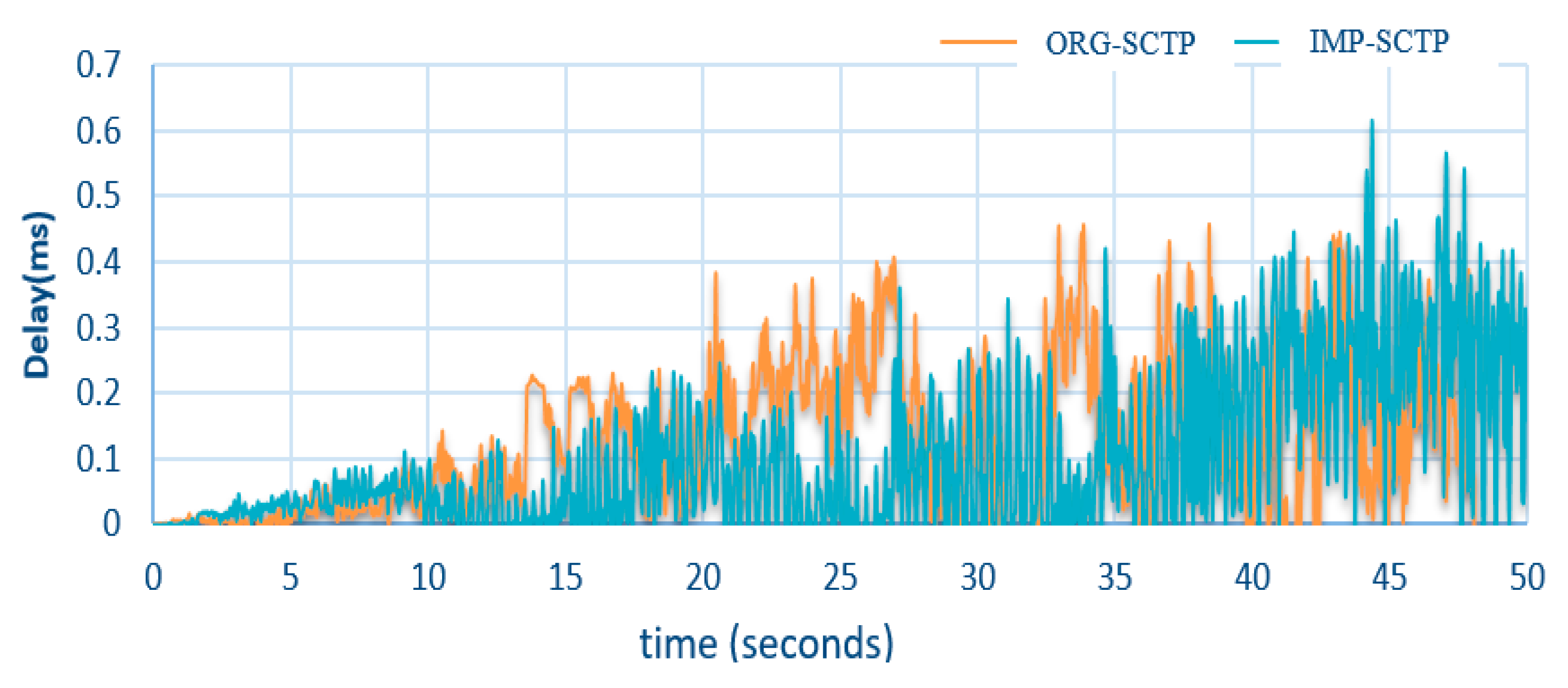

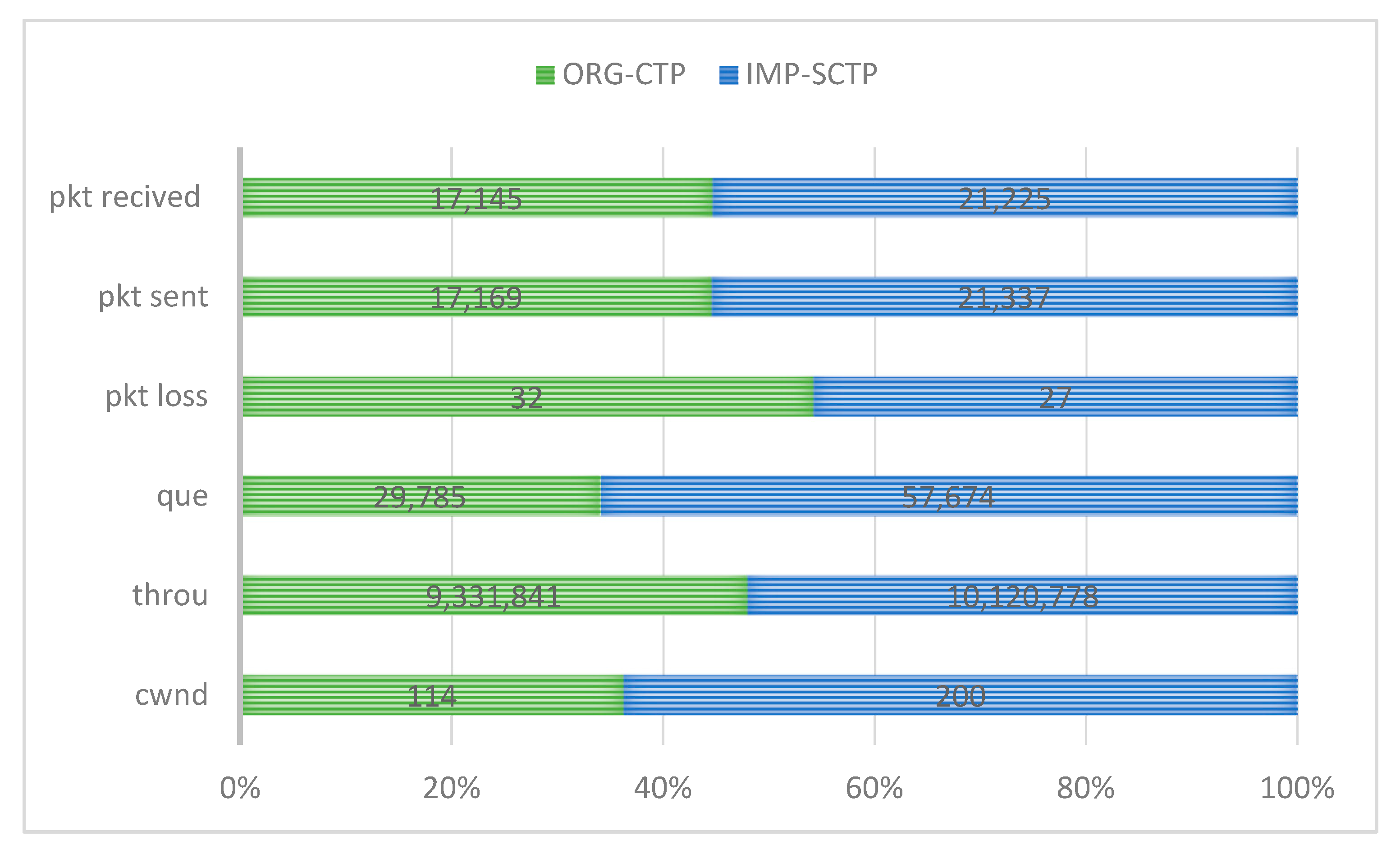

4.3. Effect of DT Prediction

4.4. Effect of Delay

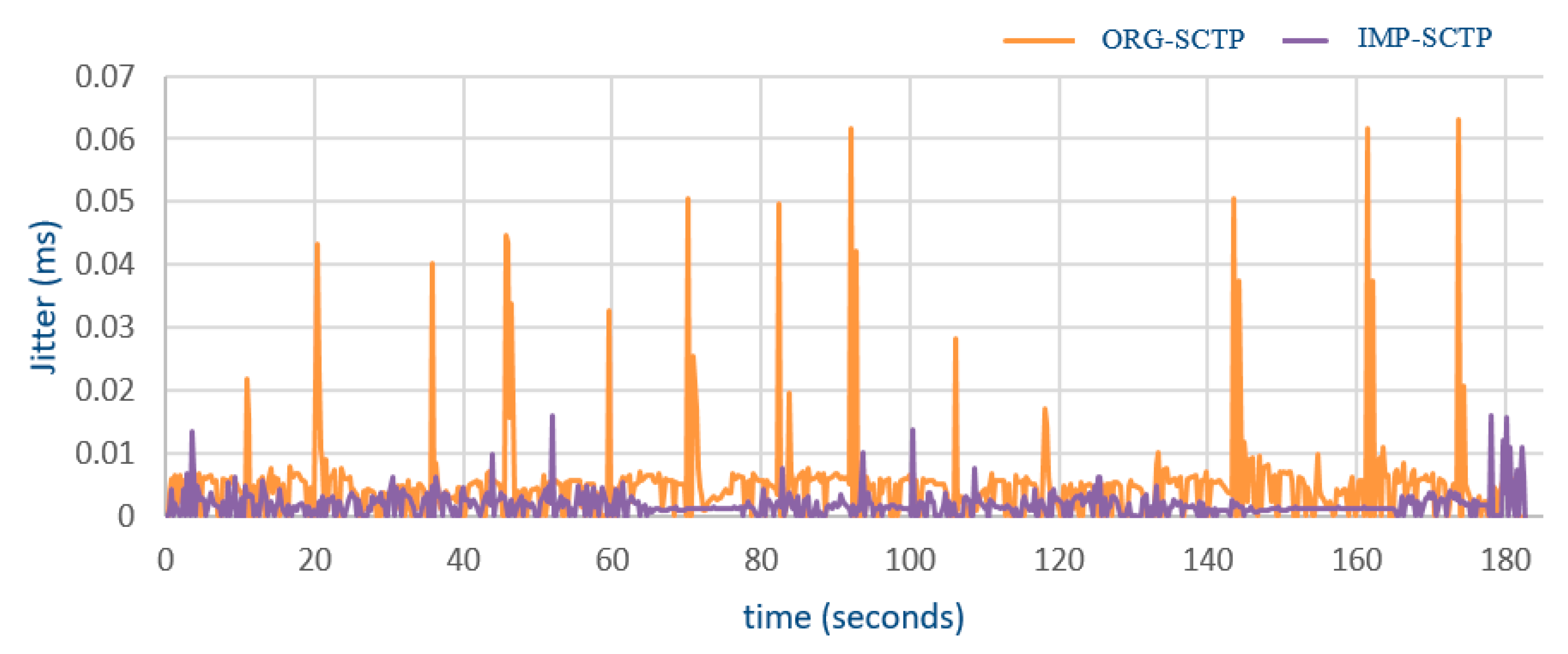

4.5. Effect of Jitter

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| N | CWND | Throughput | Queue Size | Packet Loss | Optimal |
|---|---|---|---|---|---|
| 1 | 120 | 9,881,687 | 48,424 | 209 | N |
| . | . | . | . | . | . |
| . | . | . | . | . | O |
| . | . | . | . | . | . |
| 100 | cwnd | throu | que | pkt loss | N |
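The attributes in the dataset table above (CWND, throughput, queue size, packet loss) are numeric, so a tree learner has to split them on a threshold rather than on discrete values. The sketch below, under that assumption, sorts one attribute, tries the midpoint between each pair of adjacent distinct values, and keeps the threshold with the highest information gain; C4.5's exact choice of threshold value may differ in detail. The CWND values are hypothetical, and `O`/`N` reuse the labels from the Optimal column.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def best_threshold(values, labels):
    """Find the binary split (value <= t vs. value > t) with the highest information gain.

    Candidate thresholds are midpoints between adjacent distinct sorted values.
    Returns (threshold, gain); threshold is None if all values are equal.
    """
    pairs = sorted(zip(values, labels))
    base = entropy(labels)
    n = len(pairs)
    best_t, best_gain = None, -1.0
    for i in range(1, n):
        lo, hi = pairs[i - 1][0], pairs[i][0]
        if lo == hi:  # no threshold can separate identical values
            continue
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        remainder = len(left) / n * entropy(left) + len(right) / n * entropy(right)
        gain = base - remainder
        if gain > best_gain:
            best_t, best_gain = (lo + hi) / 2, gain
    return best_t, best_gain


# Hypothetical CWND values and Optimal labels.
print(best_threshold([20, 40, 60, 80], ["N", "N", "O", "O"]))  # (50.0, 1.0)
```

Only n - 1 candidate thresholds need checking per attribute, because the class partition can change only between adjacent sorted values.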

| Sequence | Step |
|---|---|
| 1 | K := empty set of rules |
| 2 | while P is not empty |
| 3 | k := best single rule for P |
| 4 | K := add k to K |
| 5 | remove from P the instances covered by k |
| 6 | return K |
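The rule-learning procedure in the table above is a separate-and-conquer (covering) loop. A minimal Python sketch follows. The scoring in the hypothetical helper `best_single_rule` (one-condition rules ranked by accuracy, then by coverage) is an assumption, since the pseudocode does not say how the best rule is chosen. Note that step 4 adds the new rule to the rule set K.

```python
from collections import Counter


def best_single_rule(P):
    """Hypothetical scorer: try every rule 'if attr == value then class' seen in P
    and keep the one with the highest accuracy on P, breaking ties by coverage."""
    best, best_score = None, None
    for row, _ in P:
        for attr, value in row.items():
            covered = [label for r, label in P if r[attr] == value]
            cls, hits = Counter(covered).most_common(1)[0]
            score = (hits / len(covered), len(covered))
            if best_score is None or score > best_score:
                best, best_score = (attr, value, cls), score
    return best


def covers(rule, row):
    attr, value, _ = rule
    return row[attr] == value


def learn_rules(P):
    K = []                       # 1: K := empty set of rules
    P = list(P)
    while P:                     # 2: while P is not empty
        k = best_single_rule(P)  # 3: k := best single rule for P
        K.append(k)              # 4: add k to K
        P = [(r, y) for r, y in P if not covers(k, r)]  # 5: drop covered instances
    return K                     # 6: return K


# Hypothetical instances: (attributes, class label).
P = [
    ({"loss": "low", "queue": "short"}, "O"),
    ({"loss": "low", "queue": "long"}, "O"),
    ({"loss": "high", "queue": "short"}, "N"),
    ({"loss": "high", "queue": "long"}, "N"),
]
print(learn_rules(P))  # [('loss', 'low', 'O'), ('loss', 'high', 'N')]
```

Because step 5 removes every covered instance, the loop always terminates: each chosen rule covers at least the instance it was built from. A fuller learner would refine a rule with extra conditions until it is accurate enough before removing what it covers.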

| Machine Learning Approach | Algorithm | TP Rate | FP Rate | Precision | Recall | ROC Area | PRC Area |
|---|---|---|---|---|---|---|---|
| DT | C4.5 | 0.924 | 0.205 | 0.927 | 0.924 | 0.889 | 0.915 |
| DT | RepTree | 0.913 | 0.207 | 0.919 | 0.913 | 0.891 | 0.916 |
| DT | Random Tree | 0.913 | 0.207 | 0.919 | 0.913 | 0.891 | 0.916 |
| Clustering | Simple K Means | 0.891 | 0.018 | 0.939 | 0.891 | 0.937 | 0.923 |
| Clustering | Hierarchical Clustering | 0.857 | 0.870 | 0.752 | 0.857 | 0.527 | 0.771 |
| Stacking | Zero + Decision Table | 0.859 | 0.859 | 0.737 | 0.859 | 0.413 | 0.737 |
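In every row of the results table above, Recall equals TP Rate. That is expected: both are TP / (TP + FN). The sketch below shows how these per-class rates come from confusion-matrix counts, assuming (as in Weka's "Detailed Accuracy By Class" summary, whose columns this table resembles) that the reported figures are averages weighted by the number of instances in each class. The confusion counts are hypothetical.

```python
def class_metrics(tp, fp, fn, tn):
    """One-vs-rest rates for a single class from its confusion-matrix counts."""
    tp_rate = tp / (tp + fn)  # also called recall or sensitivity
    return {
        "tp_rate": tp_rate,
        "fp_rate": fp / (fp + tn),
        "precision": tp / (tp + fp),
        "recall": tp_rate,
    }


def weighted_average(per_class, supports):
    """Average each metric, weighting every class by its number of actual instances."""
    total = sum(supports)
    return {
        key: sum(m[key] * s for m, s in zip(per_class, supports)) / total
        for key in per_class[0]
    }


# Hypothetical 2x2 confusion matrix: 10 actual O (8 predicted O), 10 actual N (9 predicted N).
metrics_o = class_metrics(tp=8, fp=1, fn=2, tn=9)
metrics_n = class_metrics(tp=9, fp=2, fn=1, tn=8)
avg = weighted_average([metrics_o, metrics_n], supports=[10, 10])
print(round(avg["tp_rate"], 3), round(avg["fp_rate"], 3))  # 0.85 0.15
```

The FP rate, by contrast, is computed over the instances that do not belong to the class, which is why a model can show a high TP rate and still a high FP rate when it predicts one class for almost everything.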

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Najm, I.A.; Hamoud, A.K.; Lloret, J.; Bosch, I. Machine Learning Prediction Approach to Enhance Congestion Control in 5G IoT Environment. Electronics 2019, 8, 607. https://doi.org/10.3390/electronics8060607
