Enforcing Optimal ACL Policies Using K-Partite Graph in Hybrid SDN

Abstract

1. Introduction

- As already discussed, establishing an SDN is costly: a large budget is needed to buy new SDN devices. Even after a pure SDN is established, network operators, administrators, and staff need training to develop, configure, and operate it, which also requires a large budget [23]. Hybrid SDN can considerably relax these budget requirements.

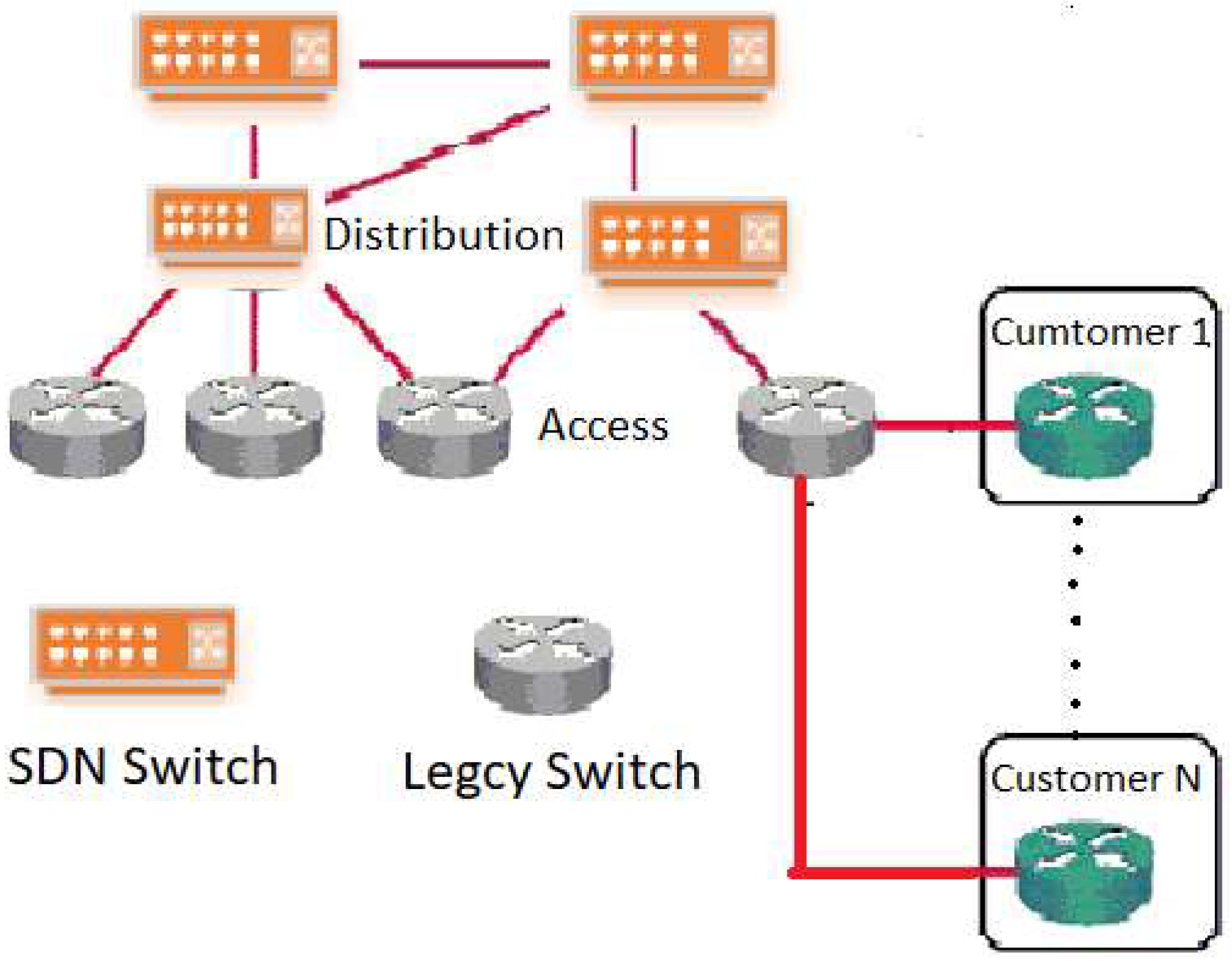

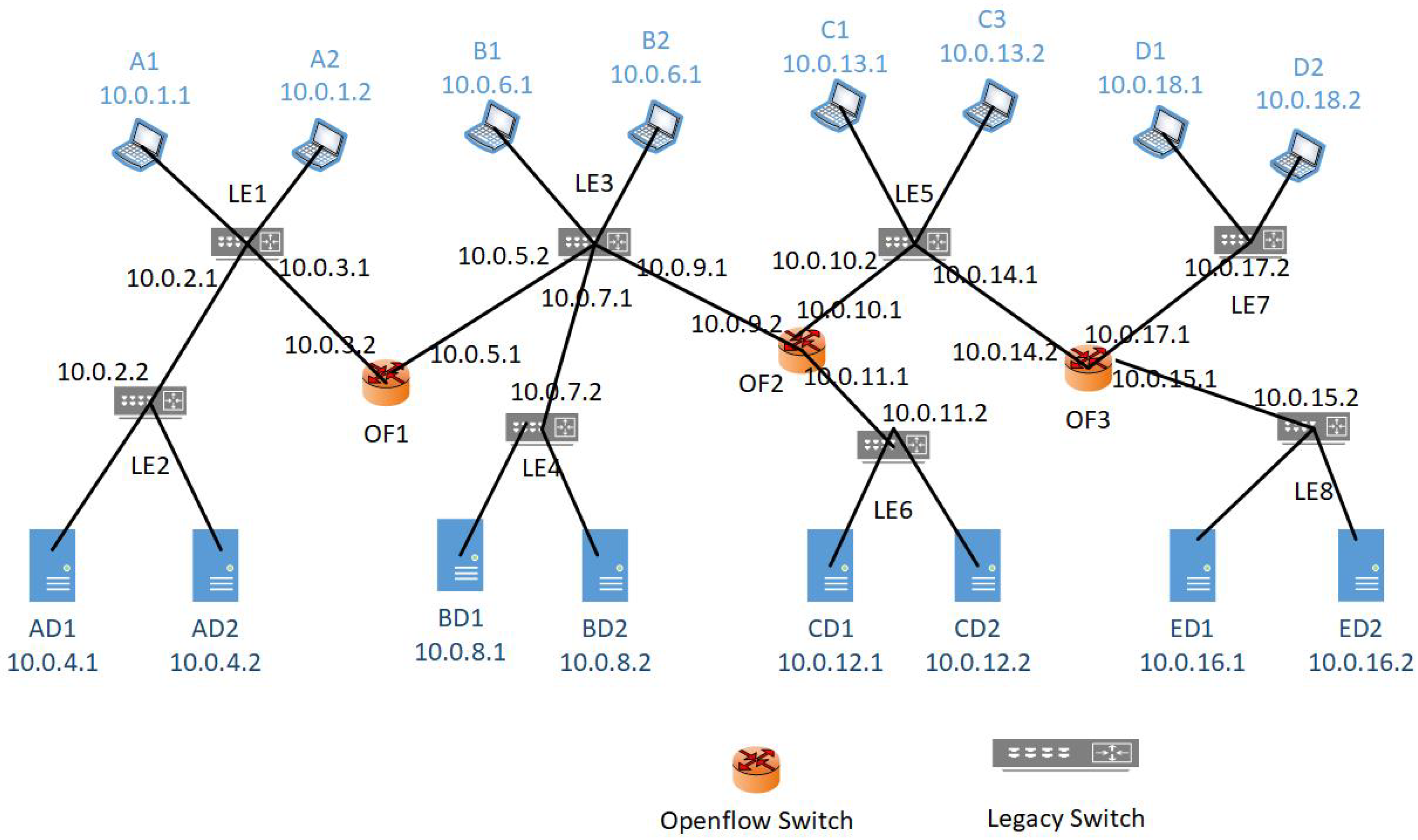

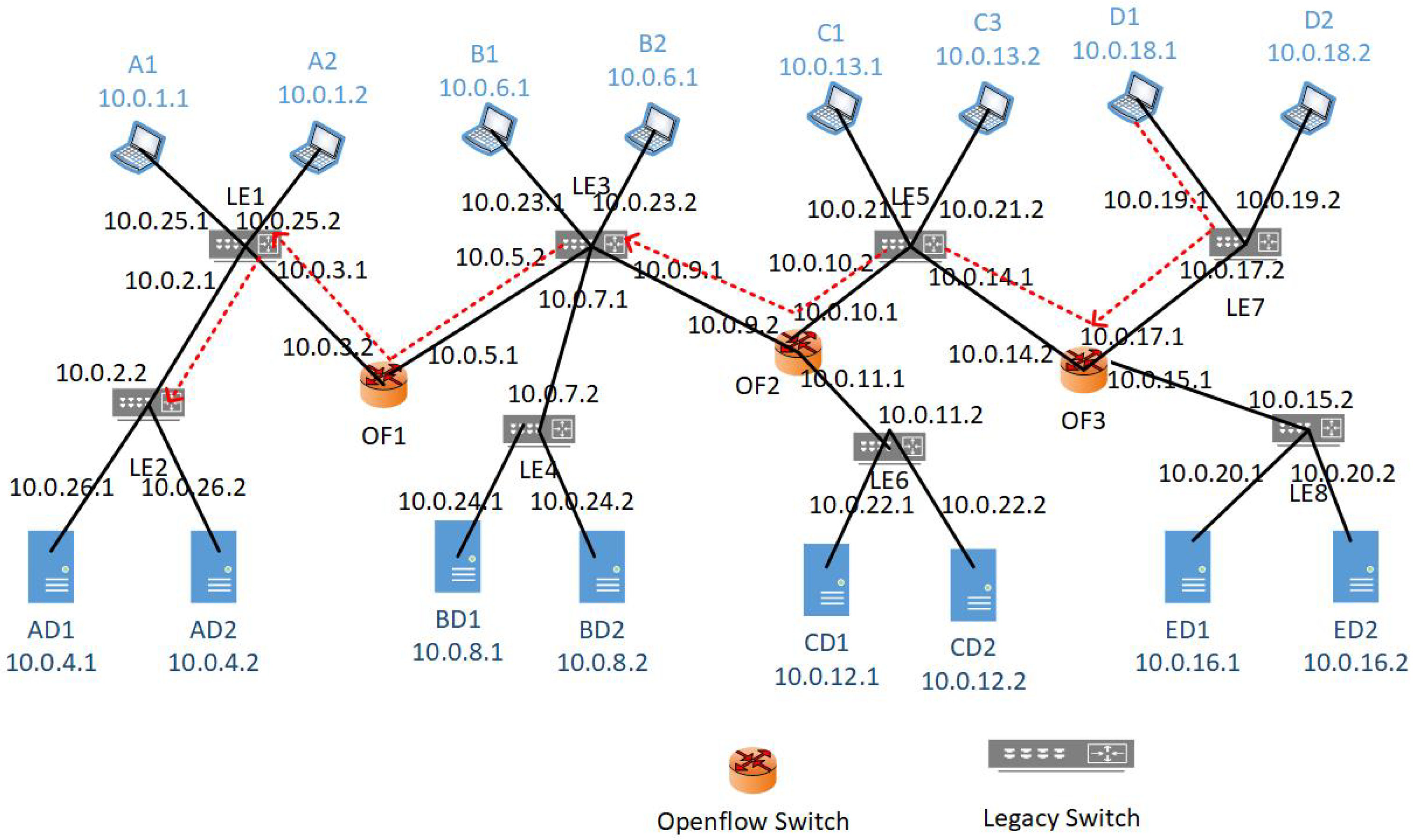

- By using a hybrid SDN, some benefits of the SDN model can still be obtained [24]. For example, an Internet Service Provider (ISP) network holds millions of forwarding entries, while OpenFlow switches support only tens of thousands of forwarding entries [24]. OpenFlow devices can therefore be used in the ISP distribution network while the ISP access network keeps legacy network devices, as shown in Figure 1. In this way, the ISP operates a hybrid SDN in which OpenFlow switches are deployed in the distribution network to obtain the benefits of pure SDN, while the millions of forwarding entries are handled by the legacy devices in the access network.

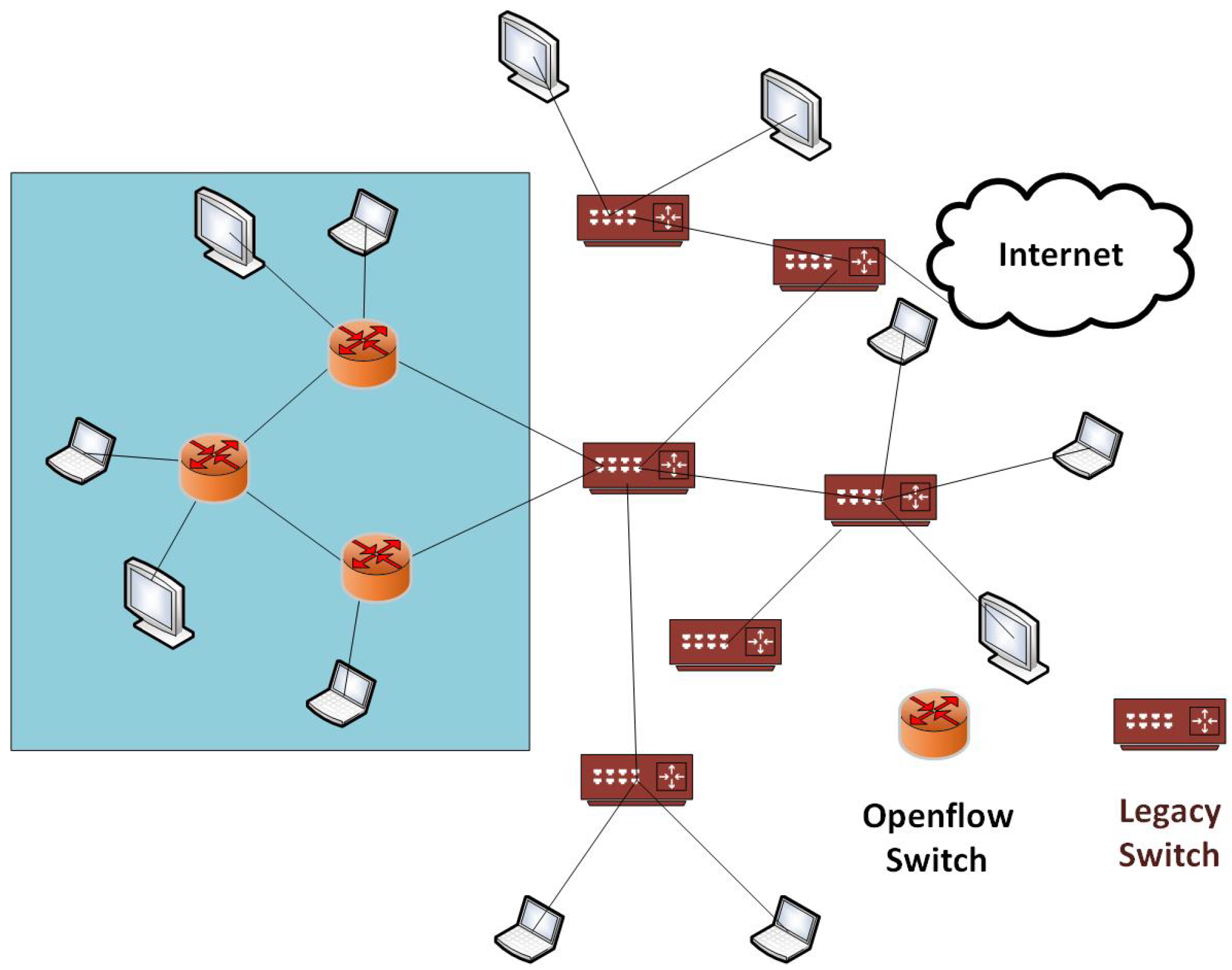

- SDN provides fine-grained control over data traffic flows. If fine-grained control is required for only a small portion of the network, a hybrid SDN can be deployed by using SDN devices in that portion alone [24]. For example, if a company with the network infrastructure shown in Figure 2 needs fine-grained control only for the small part of the network indicated by the blue box, then only that portion is upgraded to SDN.

- Traditional routing protocols are very useful for the in-band connection between SDN switches and the SDN controller and for connectivity among SDN controllers. Thus, in a hybrid SDN, the SDN controller can be relieved of these tasks, which traditional protocols handle effectively.

- SDN infrastructure has appeared only recently, and OpenFlow switches are not as mature as traditional switches. Network administrators therefore hesitate to replace traditional network devices with SDN devices all at once. Hybrid SDN can ease the transition from traditional network devices to SDN devices. For instance, Google [16] deployed SDN in several steps over several years to manage and control its data centers.

- In some scenarios, two SDNs are interconnected by traditional network switches. In these scenarios, a hybrid SDN is required to allocate resources suitably for the SDN interconnection.

2. Related Work

2.1. ACL Implementation in Traditional Network

2.2. ACL Implementation in SDN

2.3. ACL Implementation in Hybrid SDN

- In a hybrid SDN, legacy network devices need to be configured for ACL implementation. A mechanism is needed that can automatically issue commands to legacy devices to configure policies.

- If policies must be implemented on a large number of nodes, what are the optimal ways to implement them?

- Multiple policies cannot be implemented in one go. A mechanism is needed to simplify these policies so that they can be implemented in a single iteration.

- Network loops may occur when policies are implemented; how should these loops be handled?

- Due to complex policy implementation, some network nodes may become unreachable.

- During policy implementation, load balancing should be considered so that no network device is overwhelmed.

3. Problem Formulation

3.1. Different Ways of ACL Policy Implementation

- i

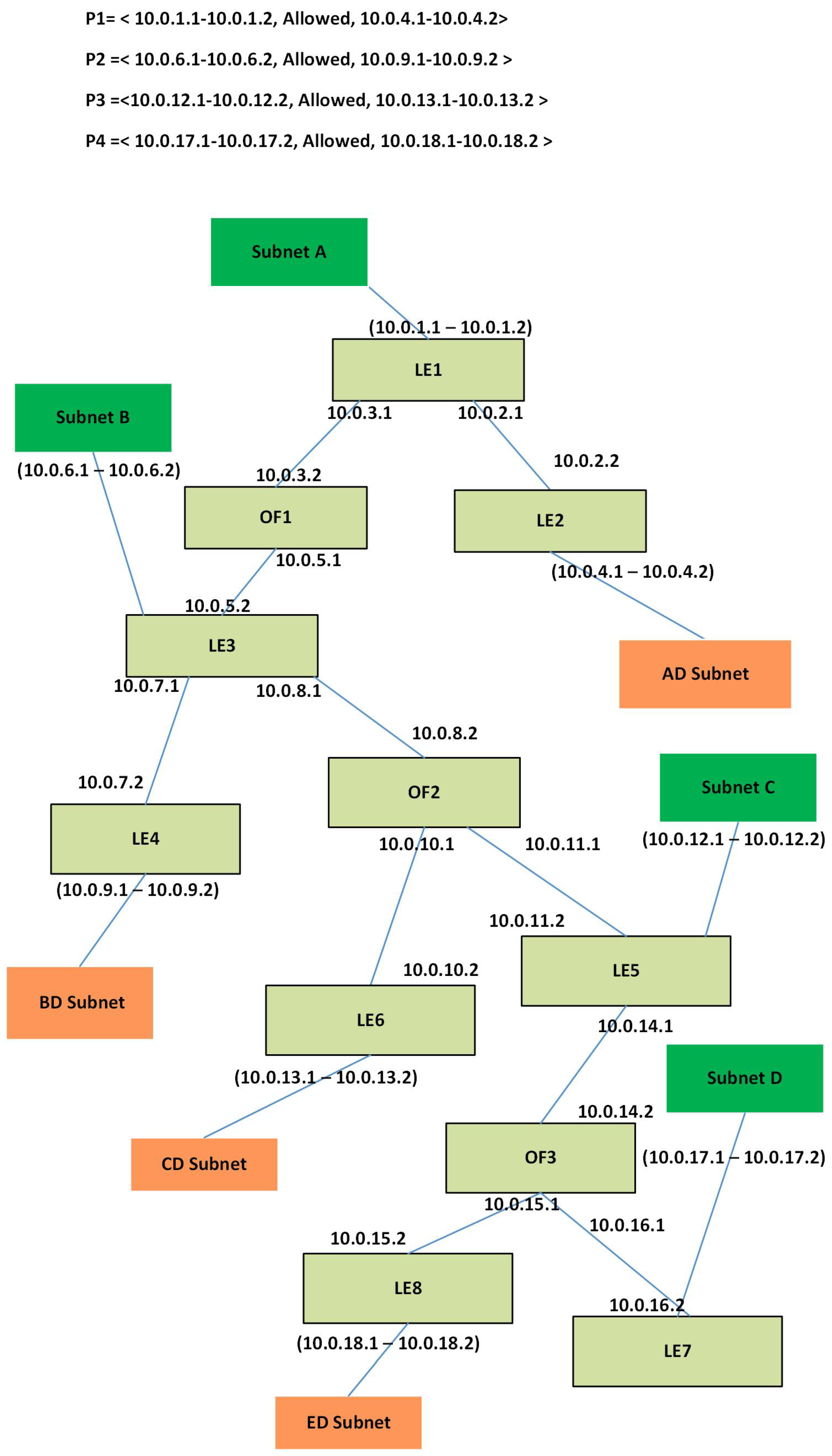

- Near the source policy implementation: Near the source policy implementation is more efficient by blocking the unwanted traffic as generated by the source node; if the source nodes are distributed then this way of policy implementation requires a large number of ACL policies and, in turn, causes a longer packet process at switches.Suppose that a company has an enterprise network shown in Figure 5. This network contains eight legacy switches LE1-LE-8, and three OpenFlow switches OF1-OF3. Users are connected to switches, i.e., LE1, LE3, LE5 and LE7, and data centers are connected to switches, i.e., LE2, LE4, LE6, and LE8 as shown in Figure 5. The company has the network policy, say P1, that the traffic generated by only subnet A1–A2 is allowed to reach the data center AD1–AD2. Near the source, policy implementation requires to implement Eight number of policies at the switches LE1, LE3, LE5 and LE7 that the traffic destined for AD1–AD2 should be blocked as shown in Figure 5. Now every packet generated by the nodes attached to the switches (LE1, LE3, LE5, LE7) will have to pass through these policies. Consequently, it causes a longer delay for the packets.

- (ii) Near-destination policy implementation: Continuing the previous example, P1 can also be implemented near the destination, as follows. We implement P1 at the egress interface of switch LE2 so that packets generated by A1–A2 are allowed and all other packets are dropped, as shown in Figure 5. This requires only two policies on a single switch. Thus, packets generated by the nodes attached to switches LE3 to LE7 do not pass through any ACL policies, which reduces packet delay. However, unwanted traffic now traverses the entire network. For example, traffic generated by D1 travels all the way to LE2 before it is dropped. This consumes more network resources, such as bandwidth, energy, and processing power, on the path from D1 to LE2, shown in Figure 6 as a red dotted line.

- (iii) Egress port policy implementation: Similarly, policies can be implemented at the egress ports of switch LE2 to which data centers AD1 and AD2 are attached. If there are few destination nodes, e.g., 5–10, configuring all the ports may be feasible, but if there are many, e.g., more than 100, configuring all the ports for the desired policy is challenging and sometimes impossible. Any update to the policy is likewise cumbersome to configure.

- (iv) Ingress port policy implementation: Policies can be implemented at the ingress port of switch LE2 where it connects to LE1. In this case, only two policies are needed to block traffic from all other subnets, i.e., B, C, and D, but this scheme may become infeasible when the topology changes. An optimal policy implementation is therefore required: one that installs fewer rules on fewer interfaces, stops unwanted traffic as early as possible, and balances the load across all routers.
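To make the trade-off between schemes (i) and (ii) concrete, the sketch below counts the ACL rules each scheme needs and the number of links that unwanted traffic crosses before it is dropped. Figure 5 is not reproduced here, so the link list is a hypothetical topology that only reuses the switch names from the text; the helpers `near_source_rules`, `near_destination_rules`, and `hops` are illustrative names, not part of the paper.

```python
from collections import deque

# Hypothetical topology (Figure 5 is not reproduced): users hang off
# LE1/LE3/LE5/LE7, and data centers AD1-AD2 hang off LE2, reached via LE1.
LINKS = [
    ("LE1", "LE2"), ("LE1", "OF1"), ("OF1", "LE3"), ("LE3", "LE4"),
    ("OF1", "OF2"), ("OF2", "LE5"), ("LE5", "LE6"),
    ("OF2", "OF3"), ("OF3", "LE7"), ("LE7", "LE8"),
]

def adjacency(links):
    """Build an undirected adjacency map from a list of links."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def hops(adj, src, dst):
    """Breadth-first search: number of links on a shortest src-dst path."""
    seen = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return seen[node]
        for nxt in adj[node]:
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    raise ValueError(f"{dst} is unreachable from {src}")

def near_source_rules(source_switches, dest_hosts):
    # One deny rule per (source switch, protected destination) pair.
    return len(source_switches) * len(dest_hosts)

def near_destination_rules():
    # One permit for the allowed range plus one deny-all, on a single switch.
    return 2

adj = adjacency(LINKS)
print(near_source_rules(["LE1", "LE3", "LE5", "LE7"], ["AD1", "AD2"]))  # 8
print(near_destination_rules())  # 2
# Links crossed by unwanted traffic from a host on LE7 before LE2 drops it.
print(hops(adj, "LE7", "LE2"))  # 5
```

Under near-source placement the wasted link count is zero because traffic is dropped at its first switch, so the two schemes trade rule count against wasted bandwidth.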

3.2. The Formal Technique to Deploy ACL Policies

4. Proposed Solution

4.1. Decision Tree Construction

| Algorithm 1 Decision Tree Construction |

| 1: Input: L is a set of links, V is a set of vertices |

| 2: Output: Graph H |

| 3: H = ∅ // H is empty |

| 4: while the instance is not solved do |

| 5: Select the next link from L and its end vertices from V |

| 6: if the edge connects two vertices in disjoint subsets then |

| 7: merge the subsets; |

| 8: add the edge to H; |

| 9: end if |

| 10: if All the subsets are merged then |

| 11: the instance is solved |

| 12: end if |

| 13: end while |
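Algorithm 1 adds a link only when it joins two disjoint subsets and stops once all subsets are merged, which is the structure of Kruskal's spanning-tree algorithm. The sketch below implements that loop with a union-find structure. It assumes, since the pseudocode does not say, that links carry weights and are processed in ascending weight order; `build_tree`, the vertex names, and the weights in the usage example are hypothetical.

```python
def build_tree(vertices, links):
    """Kruskal-style construction of H from weighted links (u, v, weight)."""
    parent = {v: v for v in vertices}

    def find(v):
        # Follow parent pointers to the subset representative (path halving).
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    h = []                      # H starts empty
    subsets = len(vertices)     # every vertex starts in its own subset
    for u, v, w in sorted(links, key=lambda link: link[2]):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:    # the link connects two disjoint subsets
            parent[root_u] = root_v  # merge the subsets
            h.append((u, v, w))      # add the link to H
            subsets -= 1
        if subsets == 1:        # all subsets merged: the instance is solved
            break
    return h

# Hypothetical weighted links between switches.
links = [("LE1", "LE2", 1), ("LE1", "OF1", 1), ("OF1", "LE3", 2), ("LE2", "OF1", 3)]
tree = build_tree(["LE1", "LE2", "OF1", "LE3"], links)
print(tree)  # [('LE1', 'LE2', 1), ('LE1', 'OF1', 1), ('OF1', 'LE3', 2)]
```

If the input graph is disconnected, the loop ends without merging every subset and returns a spanning forest instead of a single tree.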

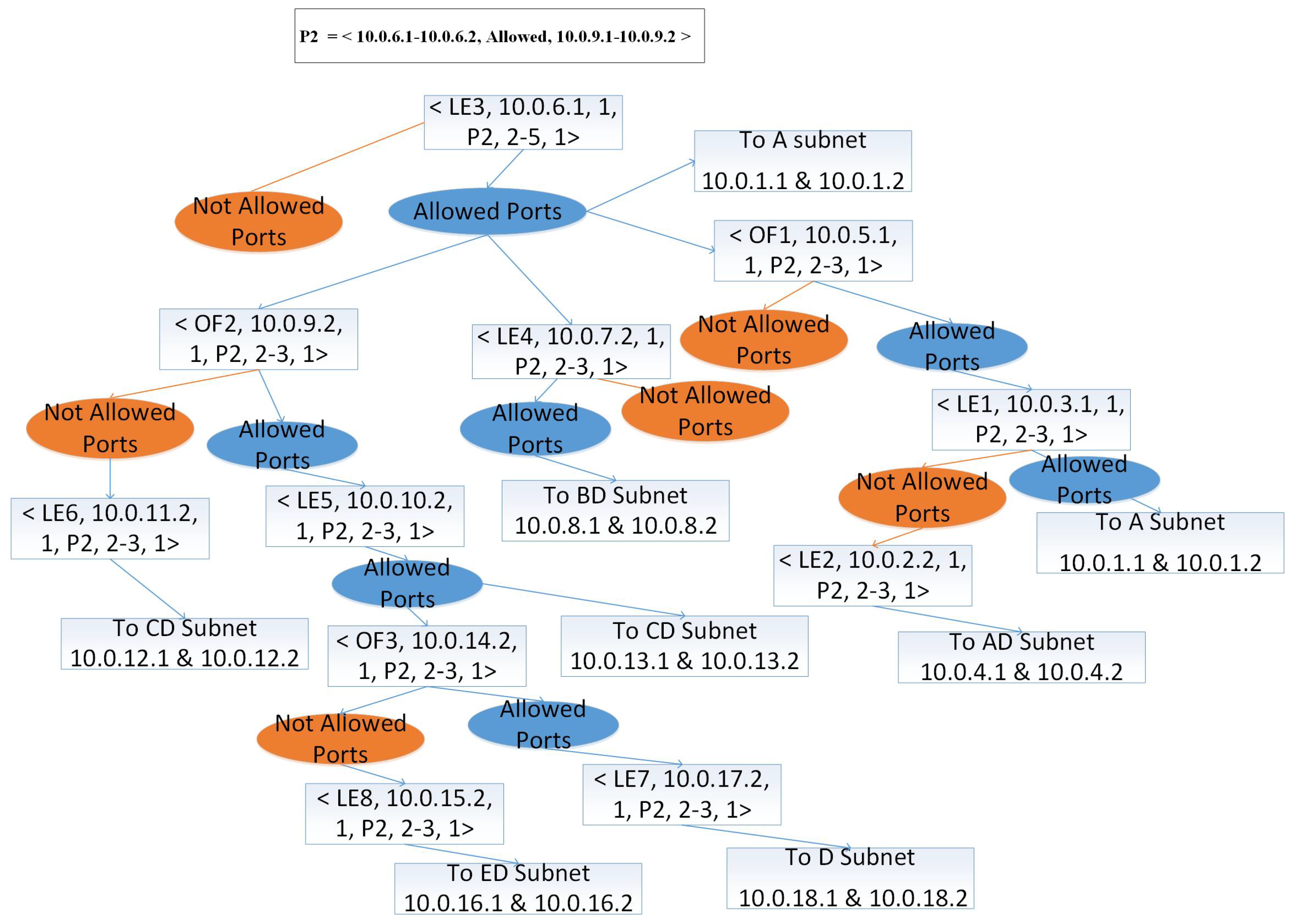

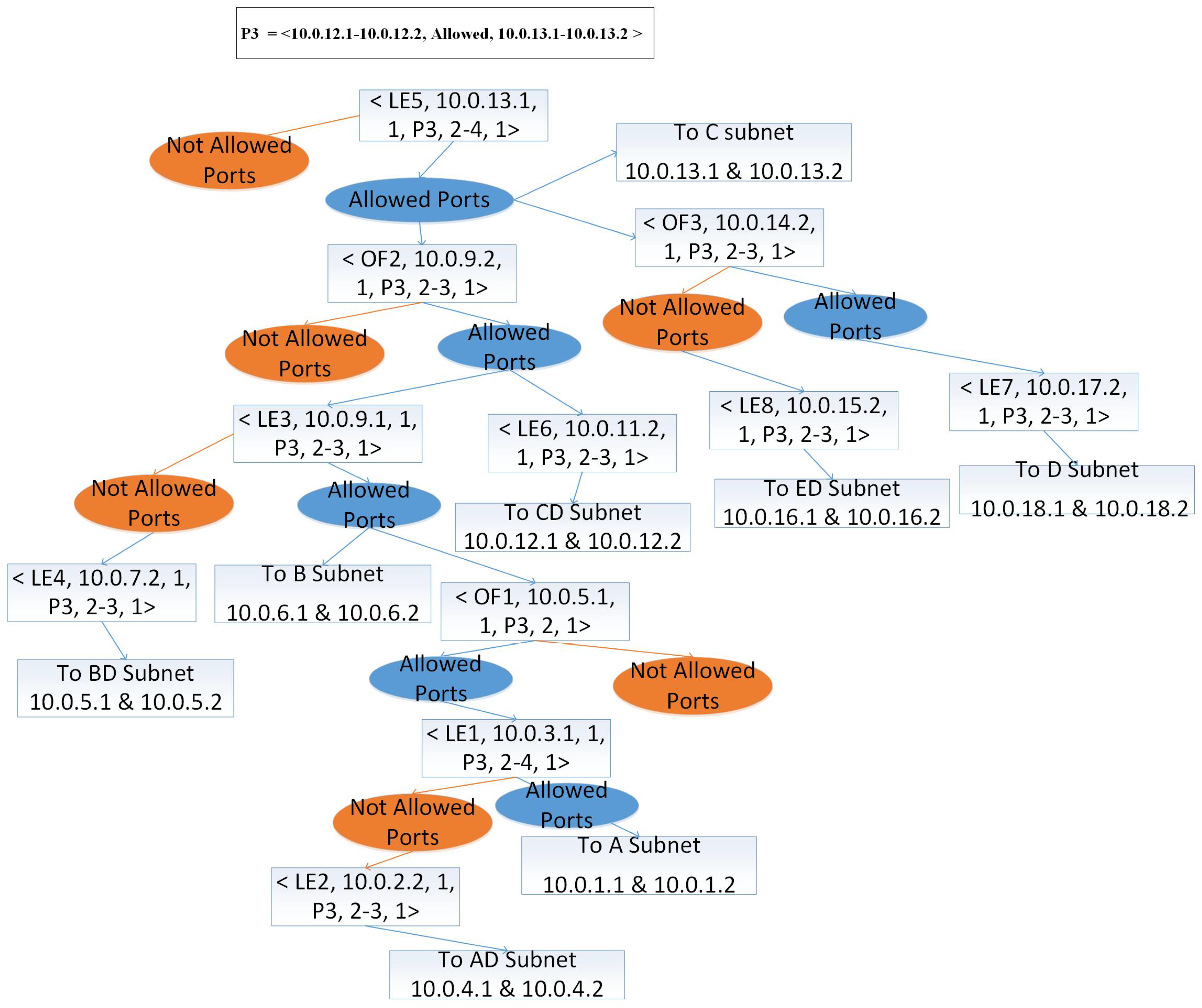

4.2. Network Policy Representation

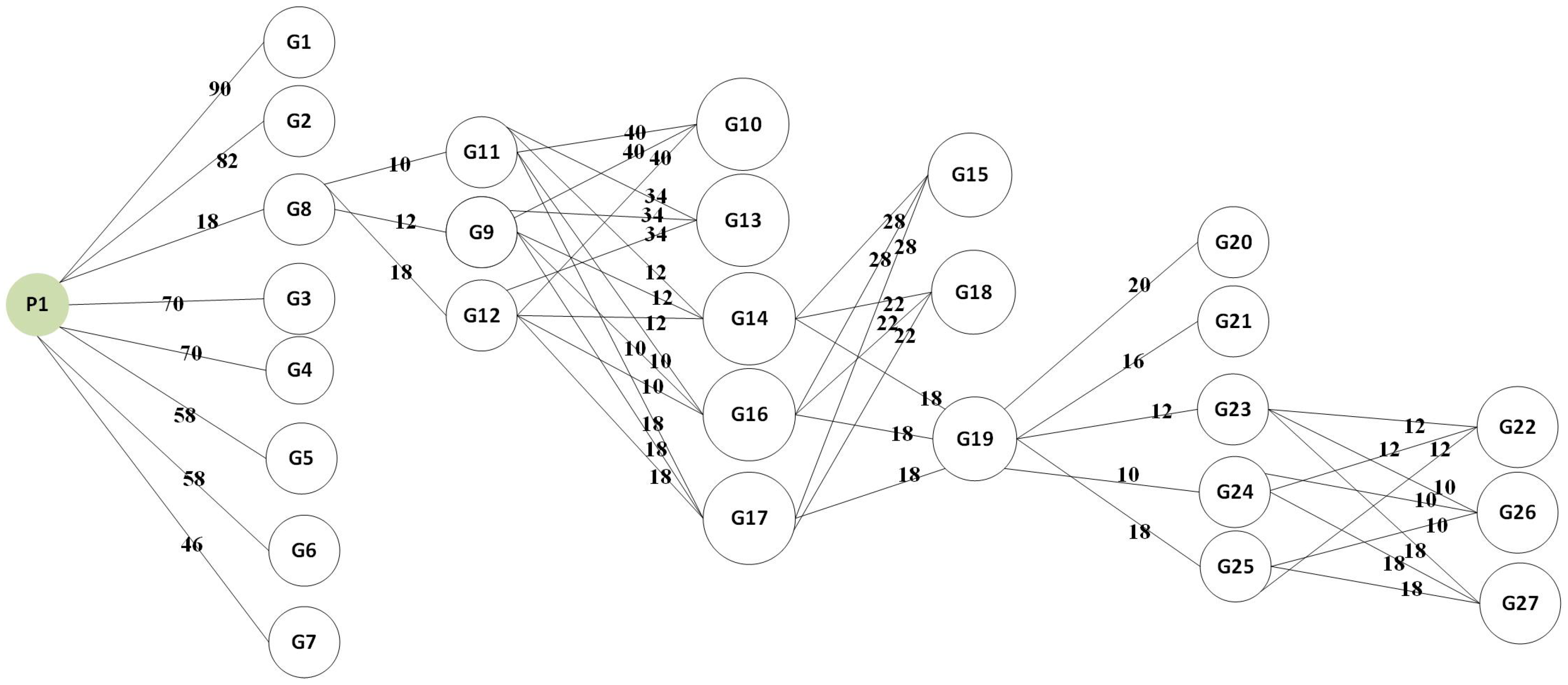

4.3. Decision Tree Traversing for Multiple ACL Policies

| Algorithm 2 Tree Traversing |

| 1: Input: |

| 2: P is the set of network policies |

| 3: DT is the decision tree constructed in Section 4.1 |

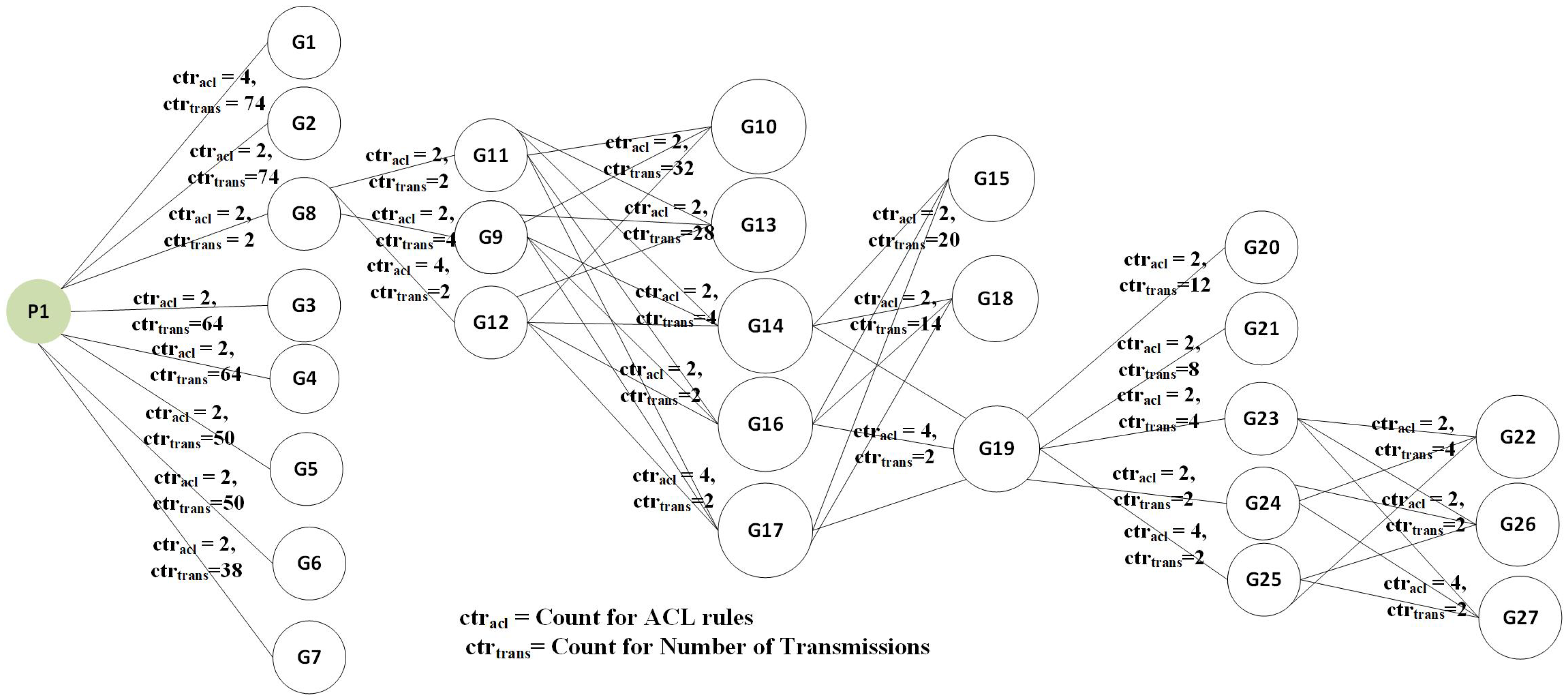

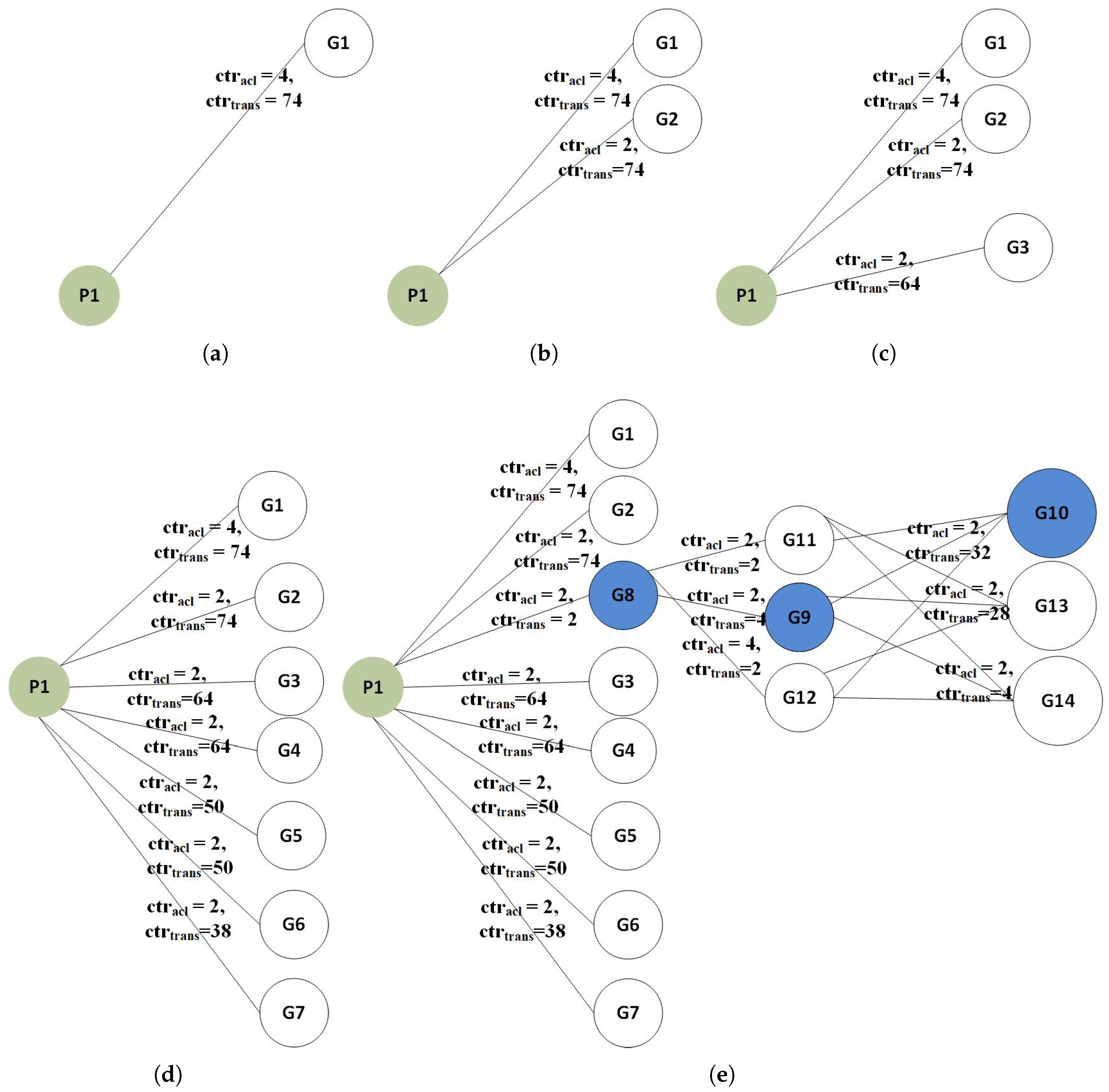

| 4: G is an adjacency list that maps each group number i = 0, 1, 2, …, k to two values indicating the transmission count and the rule count of that group. |

| 5: We take a network policy, say Pi = <Source IP, Access Policy, Destination IP>. For example, P1 = <10.0.1.1-10.0.1.2, Allowed, 10.0.4.1-10.0.4.2>. |

| 6: We take the Destination IP of Pi. In our example, the Destination IP of P1 is 10.0.4.1-10.0.4.2. |

| 7: while Node in DT do |

| 8: if node in destination list then |

| 9: Find all similar nodes and create a group |

| 10: Count the number of transmissions and rules for this group |

| 11: is connected to forming a K-partite graph |

| 12: if Other Interfaces exist then |

| 13: if Interface in Source IP list then |

| 14: No rule installed |

| 15: else |

| 16: if Single Interface then |

| 17: Group is formed for this interface |

| 18: Count the number of transmissions and rules for this group |

| 19: The group is added to the K-partite graph |

| 20: end if |

| 21: if Multiple Interfaces then |

| 22: Take all interfaces jointly and count two rules for each interface |

| 23: A separate group is formed for each interface |

| 24: Count the number of transmissions and rules for each group |

| 25: All groups are added to the K-partite graph, connected to each other in series |

| 26: end if |

| 27: end if |

| 28: end if |

| 29: else |

| 30: if Node is leaf then |

| 31: Explore other similar nodes (leaves) connected to the switch |

| 32: if the nodes' interfaces are in the Source IP list then |

| 33: No rule installed |

| 34: else |

| 35: Create a group of all similar leaves connected to the switch |

| 36: Count the number of transmissions and rules for this group |

| 37: The group is added to the K-partite graph |

| 38: end if |

| 39: end if |

| 40: if Other Interfaces exist then |

| 41: if Single Interface then |

| 42: Group is formed for this interface |

| 43: Count the number of transmissions and rules for this group |

| 44: The group is added to the K-partite graph |

| 45: end if |

| 46: if Multiple Interfaces then |

| 47: Take all interfaces jointly and count two rules for each interface |

| 48: A separate group is formed for each interface |

| 49: Count the number of transmissions and rules for each group |

| 50: All groups are added to the K-partite graph, connected to each other in series |

| 51: end if |

| 52: end if |

| 53: end if |

| 54: end while |
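Steps 5 and 6 of Algorithm 2 treat a policy as the tuple <Source IP, Access Policy, Destination IP> and work from its destination range. The sketch below shows one way to hold such a tuple and test whether a packet's addresses fall inside it, using Python's standard `ipaddress` module. The `low-high` range syntax follows the P1 example; the names `parse_range`, `Policy`, and `matches` are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

def parse_range(text):
    """Parse 'a.b.c.d-w.x.y.z' (or a single address) into an inclusive pair."""
    parts = [ipaddress.IPv4Address(p.strip()) for p in text.split("-")]
    low, high = parts[0], parts[-1]
    if len(parts) > 2 or low > high:
        raise ValueError(f"invalid range: {text}")
    return low, high

@dataclass(frozen=True)
class Policy:
    source: tuple       # inclusive (low, high) source addresses
    action: str         # access policy, e.g. "Allowed"
    destination: tuple  # inclusive (low, high) destination addresses

    @classmethod
    def from_text(cls, source, action, destination):
        return cls(parse_range(source), action, parse_range(destination))

    def matches(self, src, dst):
        """True if both packet addresses fall inside the policy's ranges."""
        s, d = ipaddress.IPv4Address(src), ipaddress.IPv4Address(dst)
        return (self.source[0] <= s <= self.source[1]
                and self.destination[0] <= d <= self.destination[1])

p1 = Policy.from_text("10.0.1.1-10.0.1.2", "Allowed", "10.0.4.1-10.0.4.2")
print(p1.destination)                      # the range Algorithm 2 starts from
print(p1.matches("10.0.1.2", "10.0.4.1"))  # True
print(p1.matches("10.0.2.1", "10.0.4.1"))  # False
```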

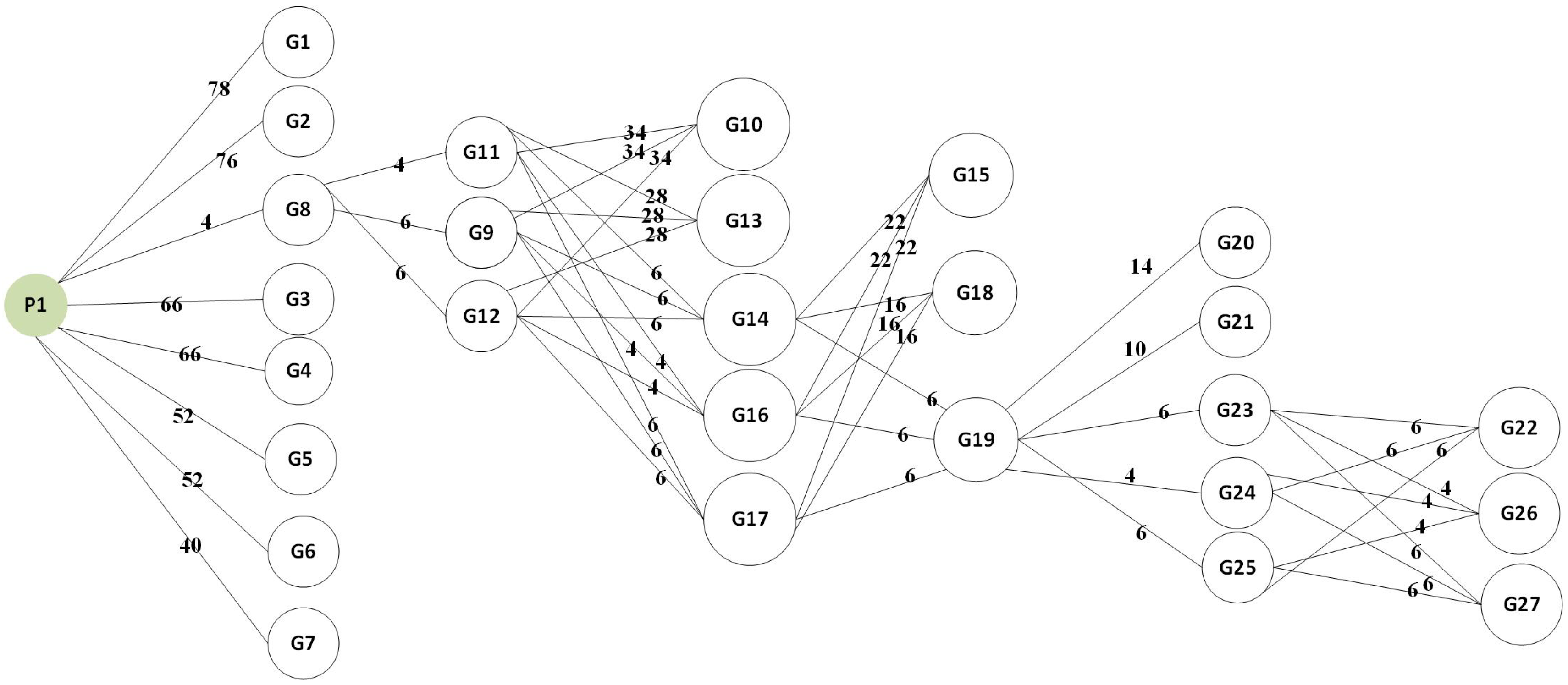

| Algorithm 3 Shortest Path for K-Partite graph |

| 1: Set M = {m}, d(m) = 0 |

| 2: for all neighbor nodes u of m, set d(u) = w(m,u) |

| 3: for all other non-neighbor nodes u, set d(u) = ∞ |

| 4: while M ≠ V do |

| 5: Choose vertex v ∉ M with minimum d(v) |

| 6: Set M = M ∪ {v} |

| 7: for all neighbor nodes q of v such that q ∉ M do |

| 8: if d(q) > d(v) + w(v,q) then |

| 9: Set d(q) = d(v) + w(v,q) |

| 10: end if |

| 11: end for |

| 12: end while |
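The relaxation steps of Algorithm 3 are those of Dijkstra's single-source shortest-path algorithm. The sketch below implements it with a binary heap from Python's `heapq` module in place of the linear scan in step 5, and records predecessors so the chosen path can be read back. Seeding the heap with d(m) = 0 and relaxing m first is equivalent to steps 2 and 3. The layered graph, its node names (`m`, `A1`, `A2`, `B1`, `B2`, `t`), and its edge weights are hypothetical stand-ins for the groups and their transmission and rule counts; weights must be non-negative.

```python
import heapq

def shortest_path(graph, source, target):
    """Dijkstra: graph maps node -> {neighbor: non-negative weight}."""
    dist = {node: float("inf") for node in graph}
    prev = {}
    dist[source] = 0
    finalized = set()               # the set M in Algorithm 3
    heap = [(0, source)]
    while heap:
        d, v = heapq.heappop(heap)  # vertex with minimum d(v)
        if v in finalized:
            continue                # stale entry left by an earlier relaxation
        finalized.add(v)
        for q, w in graph[v].items():
            if q not in finalized and dist[q] > d + w:
                dist[q] = d + w     # relax edge (v, q)
                prev[q] = v
                heapq.heappush(heap, (dist[q], q))
    if dist[target] == float("inf"):
        raise ValueError(f"{target} is unreachable from {source}")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]

# Hypothetical K-partite graph: edges only join consecutive partitions
# {m} -> {A1, A2} -> {B1, B2} -> {t}; weights stand in for per-group costs.
K_GRAPH = {
    "m": {"A1": 4, "A2": 2},
    "A1": {"B1": 1, "B2": 5},
    "A2": {"B1": 4, "B2": 1},
    "B1": {"t": 3},
    "B2": {"t": 1},
    "t": {},
}
print(shortest_path(K_GRAPH, "m", "t"))  # (4, ['m', 'A2', 'B2', 't'])
```

Because edges in a K-partite graph only connect different partitions, each shortest path picks at most one group per partition, which is what makes it usable as a placement choice.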

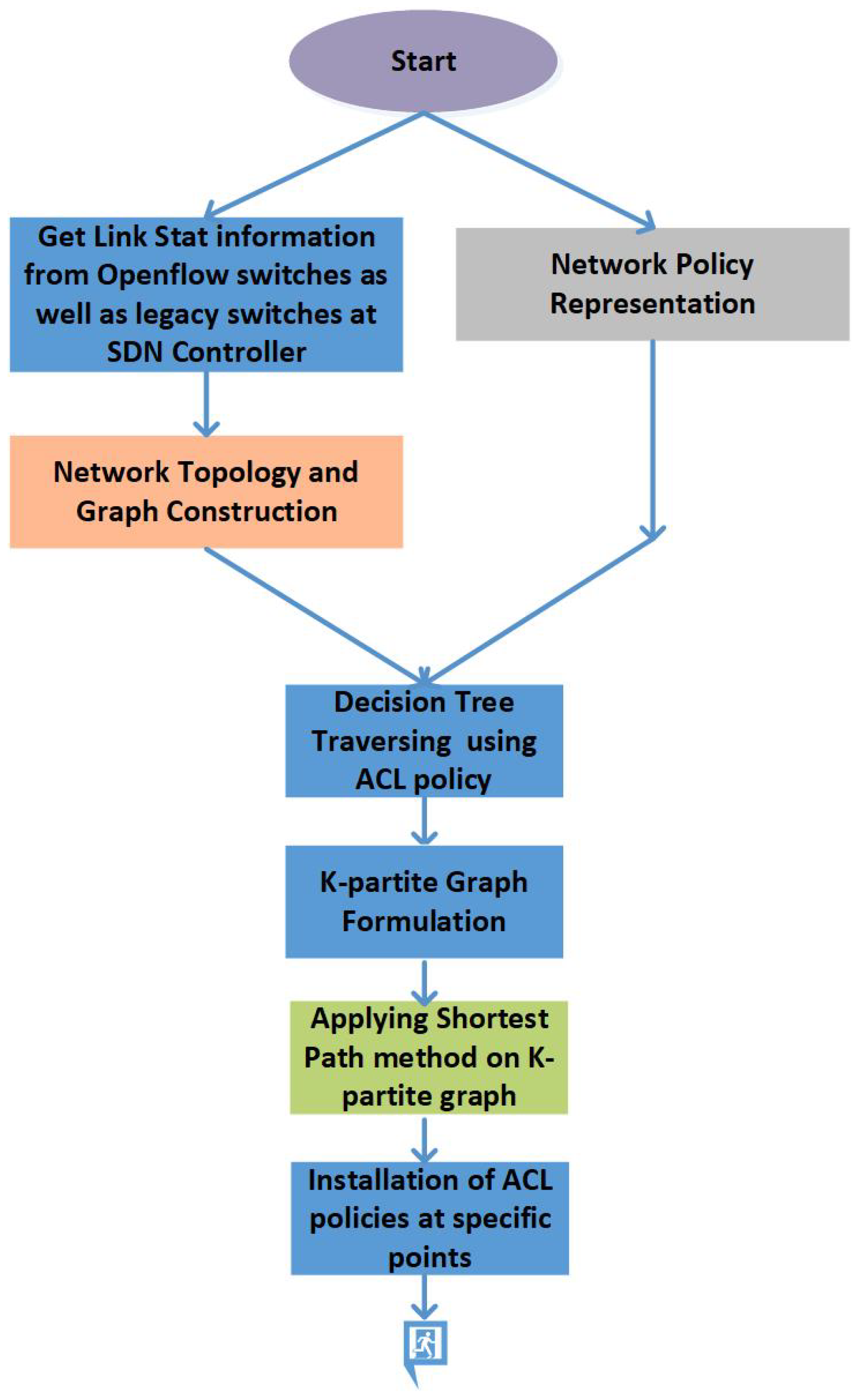

5. Simulation Results

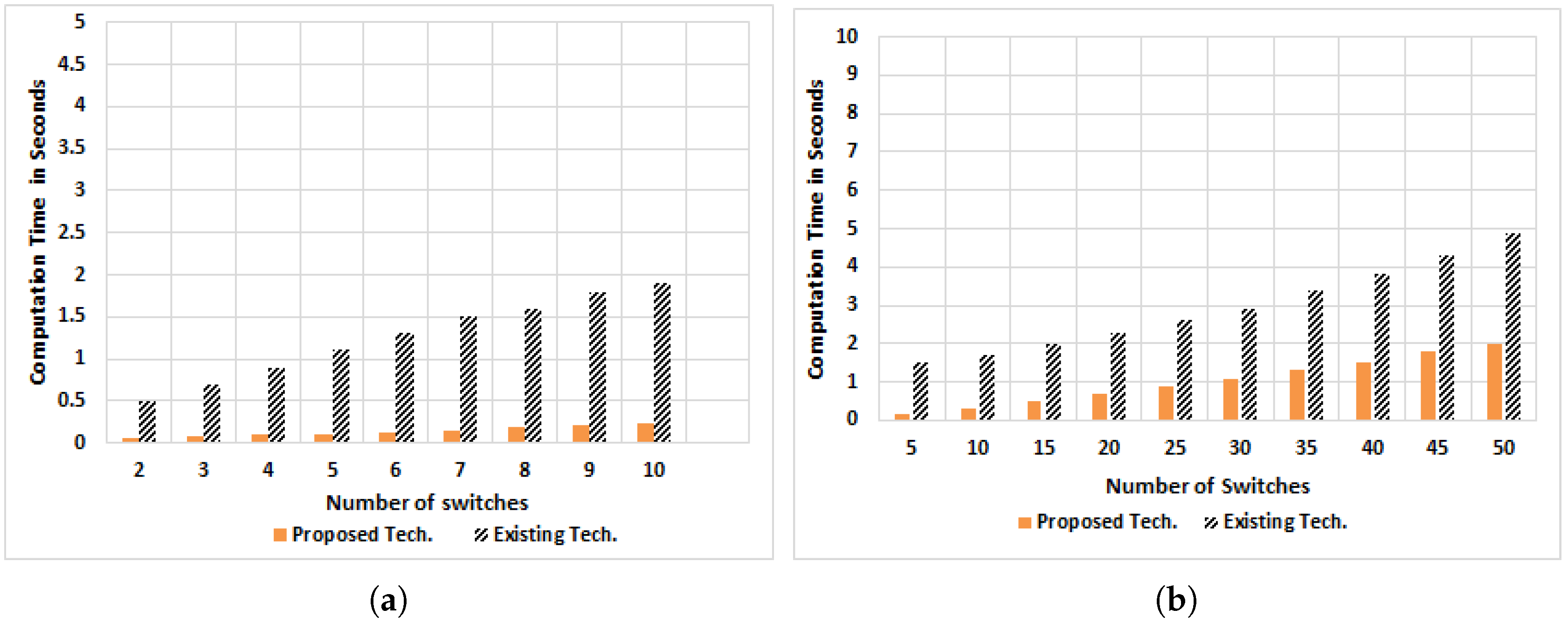

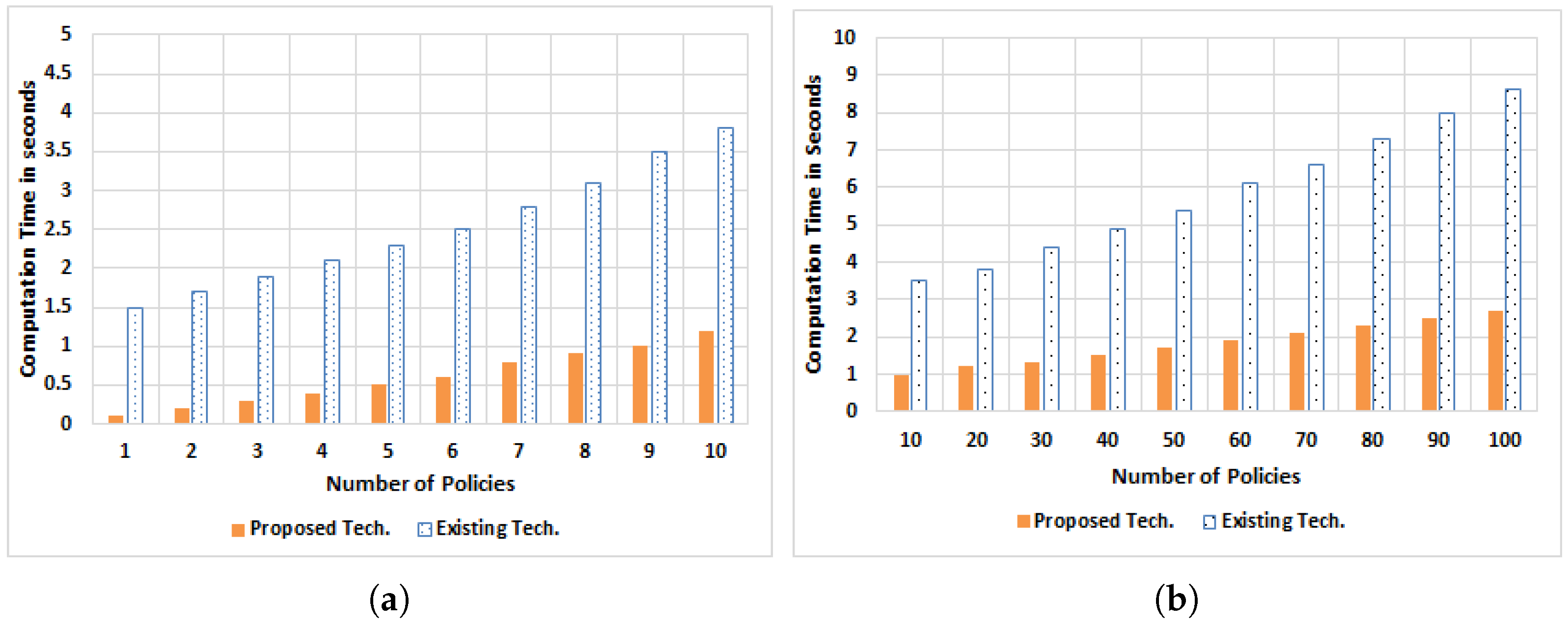

- Graph Computation Time: For a specific policy, graphs are traversed to find appropriate places (interfaces) for rule installation. First, the network policy is traversed on the decision tree, and the K-partite graph is created. Second, the shortest path is computed on the K-partite graph, which contains the subset of interfaces where policies can be installed.

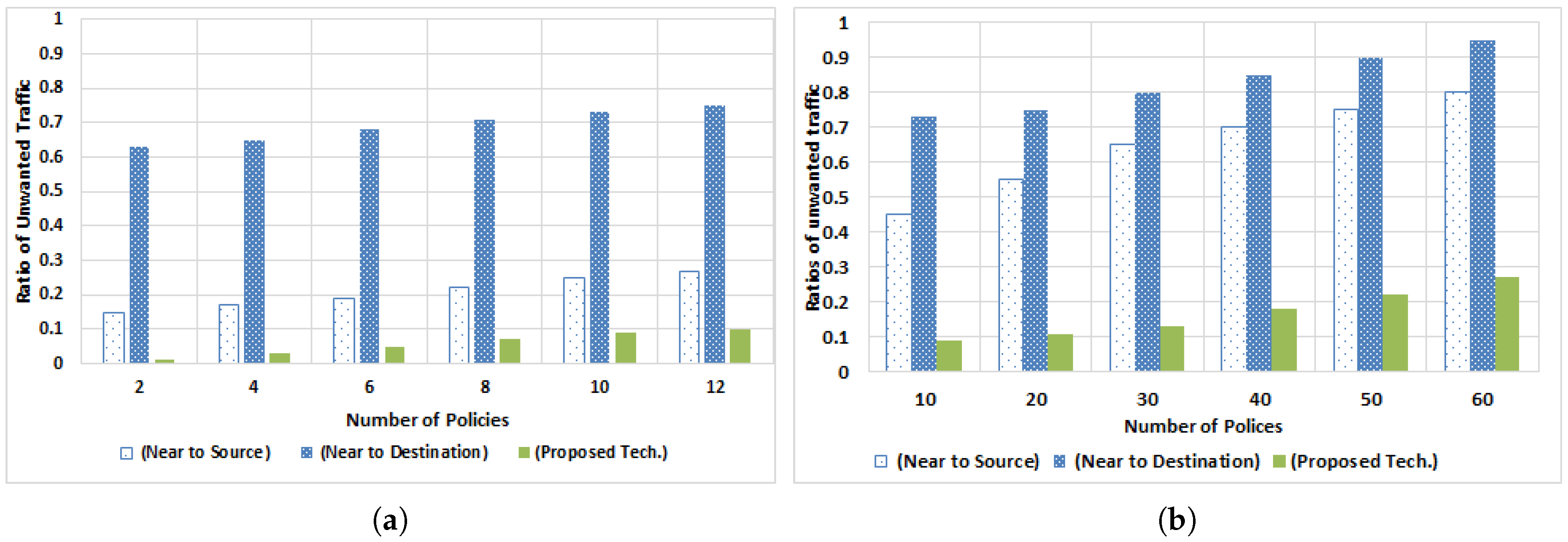

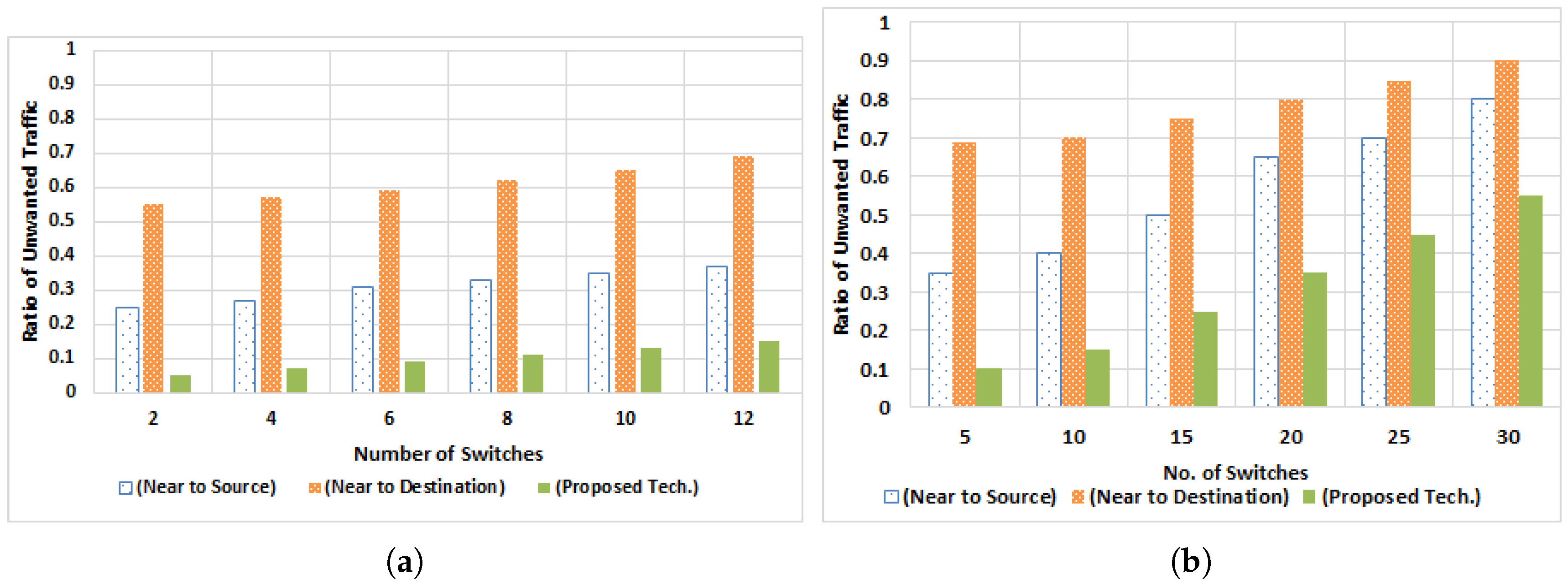

- Traffic Optimization: Policy implementation plays an important role in traffic optimization. If policies are deployed at the proper places, network congestion can be avoided and traffic losses eliminated. By using a K-partite graph, we regulate the overall network traffic so that the minimum number of rules is installed along the best route for network traffic.

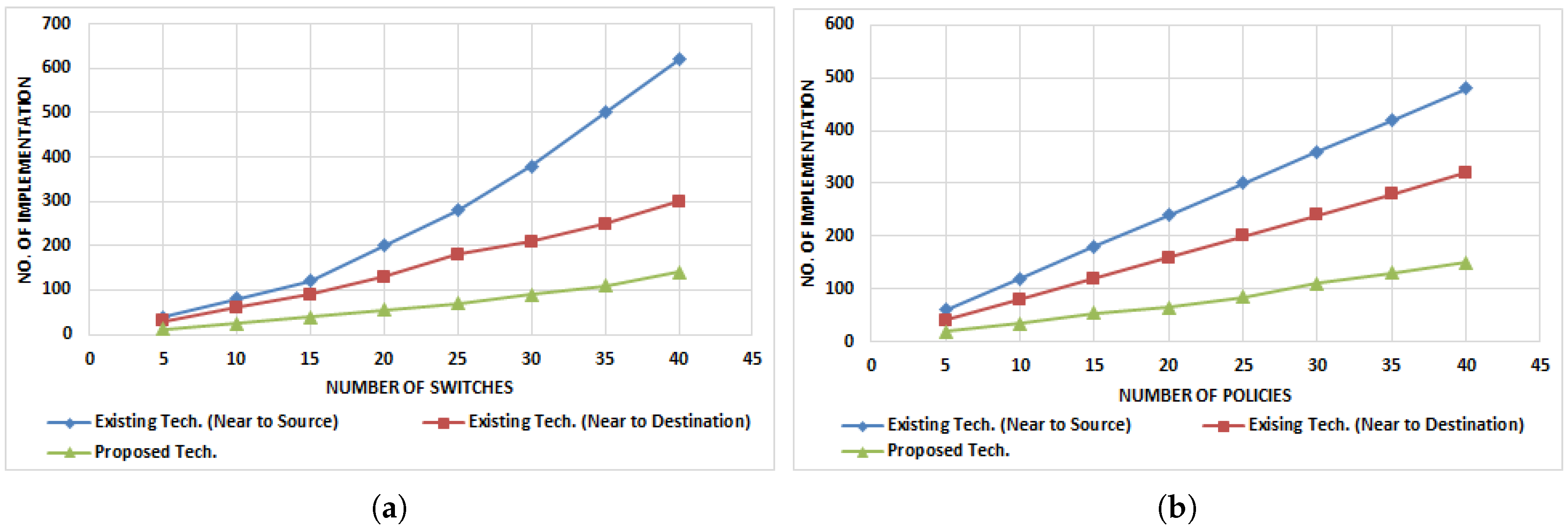

- Number of ACL Rules versus Number of Implementations: To secure the entire network, ACL rules are implemented on the network devices. If ACL rules are installed at the egress ports of the switch where end nodes are connected, the number of rules is directly proportional to the number of nodes. If ACL rules are installed at the ingress port of the switch, fewer rules are needed. In general, when policies are implemented at the proper places, fewer rules are required.

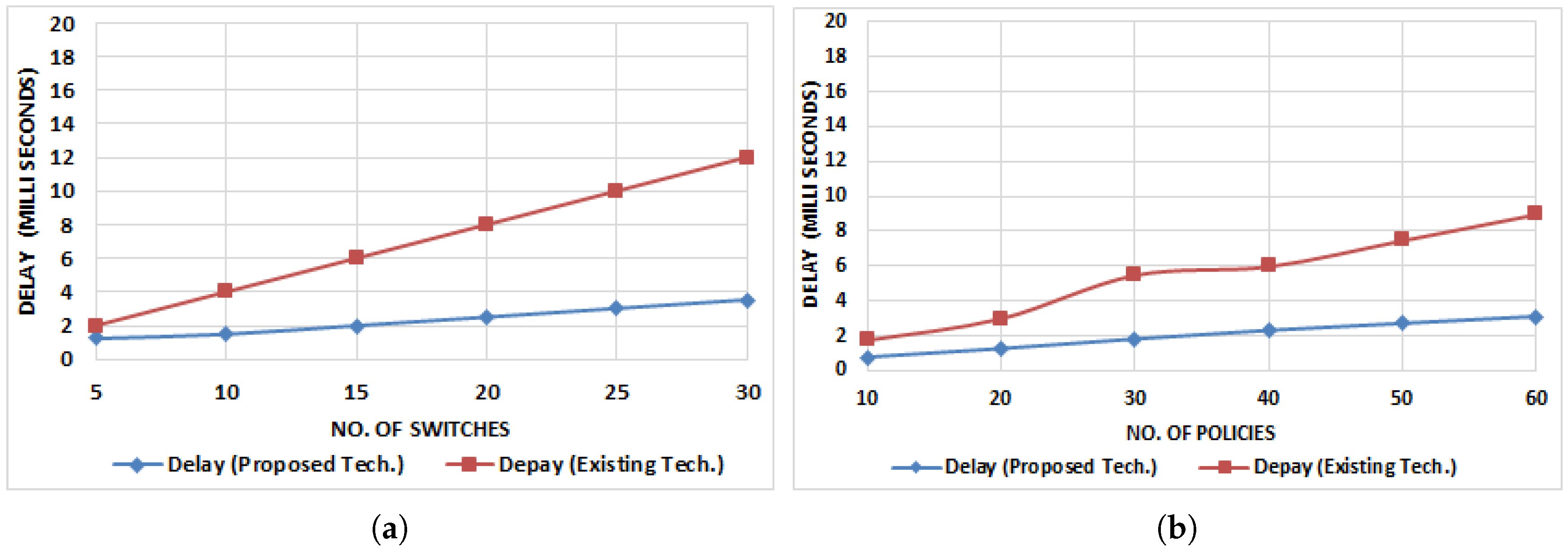

- End-to-End Delay Computation: End-to-end delay is the total time a data packet takes to travel across the network from the source node to the destination node. End-to-End Delay = Number of switches + Transmission of N number of packets − (Transmission delay + Processing + Queuing).
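For comparison, a common textbook way to compute end-to-end delay sums the transmission, propagation, processing, and queuing delays over every hop on the path. The sketch below uses that per-hop formulation, which may differ from the exact expression used in this paper; the `Hop` fields, the packet size, the link rate, and the per-hop values are all hypothetical. ACL lookups at a switch would show up in its processing term.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Hop:
    link_rate_bps: float  # transmission rate of the outgoing link
    propagation_s: float  # signal propagation time on the link
    processing_s: float   # header/ACL processing time at the switch
    queuing_s: float      # time spent waiting in the output queue

def end_to_end_delay(packet_bits, hops):
    """Sum of transmission, propagation, processing and queuing delay per hop."""
    total = 0.0
    for hop in hops:
        transmission = packet_bits / hop.link_rate_bps  # time to push bits onto the link
        total += transmission + hop.propagation_s + hop.processing_s + hop.queuing_s
    return total

# Hypothetical path of three identical hops: a 1500-byte packet over 100 Mbit/s links.
path = [Hop(100e6, 5e-6, 20e-6, 0.0)] * 3
print(end_to_end_delay(1500 * 8, path))  # about 4.35e-4 seconds
```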

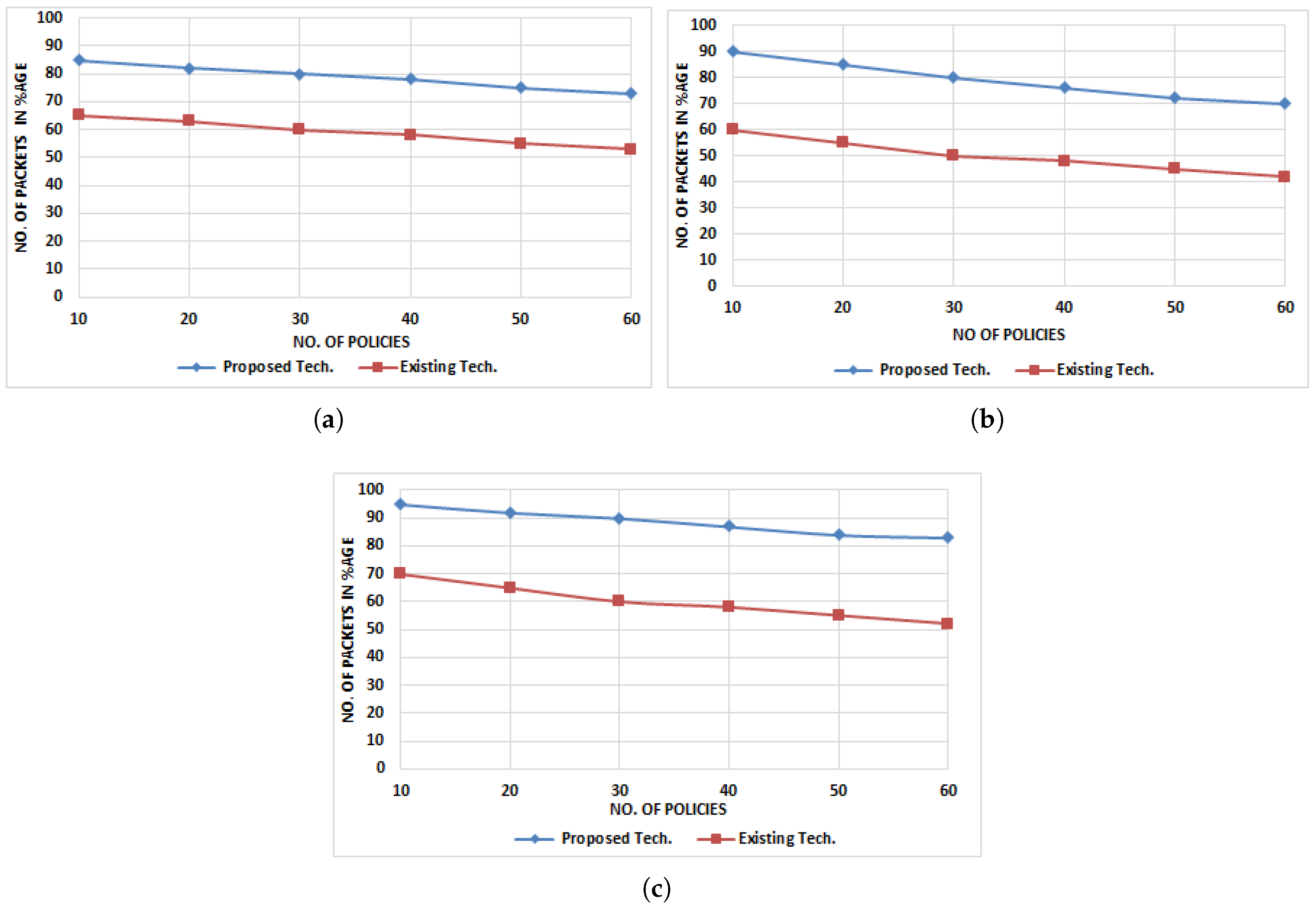

- Successful Packet Delivery (SPD) ratio: The SPD ratio is the number of packets received at the destination nodes, according to the specific policy, divided by the total number of packets generated by the source nodes. It is compared across the different policy implementation schemes, i.e., near the source, near the destination, etc.
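The SPD ratio defined above is a simple quotient; the sketch below computes it with basic sanity checks. The function name `spd_ratio` and the packet counts in the example are made up for illustration and are not results from the paper.

```python
def spd_ratio(received, generated):
    """Successful Packet Delivery ratio: packets received / packets generated."""
    if generated <= 0:
        raise ValueError("no packets were generated")
    if not 0 <= received <= generated:
        raise ValueError("received must lie between 0 and generated")
    return received / generated

# Made-up counts for illustration only.
print(spd_ratio(950, 1000))  # 0.95
```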

5.1. Graph Computation Time

5.1.1. Graph Computation Time by Varying Number of Switches

5.1.2. Graph Computation Time by Varying Number of Policies

5.2. Traffic Optimization

5.2.1. Traffic Optimization by Varying Number of Policies

5.2.2. Traffic Optimization by Varying Number of Switches

5.3. Number of ACL Implementations against ACL Policies

5.4. End-to-End Delay Computation

5.4.1. When Number of Switches Are Varying

5.4.2. When Number of Policies Are Varying

5.5. Successful Packet Delivery

5.5.1. When Policies Are Placed near the Source

5.5.2. When Policies Are Placed near the Destination

5.5.3. When Policies Are Placed Optimally

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Nguyen, X.N.; Saucez, D.; Barakat, C.; Turletti, T. Rules placement problem in openflow networks: A survey. IEEE Commun. Surv. Tutor. 2016, 18, 1273–1286.

- Masood, R.; Shibli, M.A.; Ghazi, Y.; Kanwal, A.; Ali, A. Cloud authorization: Exploring techniques and approach towards effective access control framework. Front. Comput. Sci. 2015, 9, 297–321.

- Wang, K.; Qi, Y.; Yang, B.; Xue, Y.; Li, J. LiveSec: Towards effective security management in large-scale production networks. In Proceedings of the 2012 32nd International Conference on IEEE Distributed Computing Systems Workshops (ICDCSW), Macau, China, 18–21 June 2012; pp. 451–460.

- Ali, M.; Khan, S.U.; Vasilakos, A.V. Security in cloud computing: Opportunities and challenges. Inf. Sci. 2015, 305, 357–383.

- Wei, L.; Zhu, H.; Cao, Z.; Dong, X.; Jia, W.; Chen, Y.; Vasilakos, A.V. Security and privacy for storage and computation in cloud computing. Inf. Sci. 2014, 258, 371–386.

- Amin, R.; Shah, N.; Shah, B.; Alfandi, O. Auto-Configuration of ACL Policy in Case of Topology Change in Hybrid SDN. IEEE Access 2016, 4, 9437–9450.

- Sherry, J.; Hasan, S.; Scott, C.; Krishnamurthy, A.; Ratnasamy, S.; Sekar, V. Making middleboxes someone else’s problem: Network processing as a cloud service. ACM SIGCOMM Comput. Commun. Rev. 2012, 42, 13–24.

- Sezer, S.; Scott-Hayward, S.; Chouhan, P.K.; Fraser, B.; Lake, D.; Finnegan, J.; Viljoen, N.; Miller, M.; Rao, N. Are we ready for SDN? Implementation challenges for software-defined networks. IEEE Commun. Mag. 2013, 51, 36–43.

- Yoon, C.; Park, T.; Lee, S.; Kang, H.; Shin, S.; Zhang, Z. Enabling security functions with SDN: A feasibility study. Comput. Netw. 2015, 85, 19–35.

- Ding, A.Y.; Crowcroft, J.; Tarkoma, S.; Flinck, H. Software defined networking for security enhancement in wireless mobile networks. Comput. Netw. 2014, 66, 94–101.

- Kim, H.; Feamster, N. Improving network management with software defined networking. IEEE Commun. Mag. 2013, 51, 114–119.

- Fayazbakhsh, S.K.; Chiang, L.; Sekar, V.; Yu, M.; Mogul, J.C. Enforcing Network-Wide Policies in the Presence of Dynamic Middlebox Actions using FlowTags. In Proceedings of the 11th USENIX Conference on Networked Systems Design and Implementation, Seattle, WA, USA, 2–4 April 2014; Volume 14, pp. 533–546.

- Haque, M.R.; Tan, S.C.; Yusoff, Z.; Lee, C.K.; Kaspin, R. DDoS Attack Monitoring using Smart Controller Placement in Software Defined Networking Architecture. In Computational Science and Technology; Springer: Singapore, 2019; pp. 195–203.

- Specification, O.S. OpenFlow Switch Specification: Version 1.5.1 [Online]. Available online: https://www.opennetworking.org/wp-content/uploads/2014/10/openflow-switch-v1.5.1.pdf (accessed on 30 December 2018).

- Hoelzle, U. Openflow@ google. Open Netw. Summit 2012, 17, 136.

- Jain, S.; Kumar, A.; Mandal, S.; Ong, J.; Poutievski, L.; Singh, A.; Venkata, S.; Wanderer, J.; Zhou, J.; Zhu, M.; et al. B4: Experience with a globally-deployed software defined WAN. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2013; Volume 43, pp. 3–14.

- Hong, C.Y.; Kandula, S.; Mahajan, R.; Zhang, M.; Gill, V.; Nanduri, M.; Wattenhofer, R. Achieving high utilization with software-driven WAN. In ACM SIGCOMM Computer Communication Review; ACM: New York, NY, USA, 2013; Volume 43, pp. 15–26.

- Maltz, D.A.; Greenberg, A.G.; Patel, P.K.; Sengupta, S.; Lahiri, P. Data Center Interconnect and Traffic Engineering. U.S. Patent 8,160,063, 17 April 2012.

- Levin, D.; Canini, M.; Schmid, S.; Schaffert, F.; Feldmann, A. Panopticon: Reaping the Benefits of Incremental SDN Deployment in Enterprise Networks. In Proceedings of the USENIX Annual Technical Conference, Philadelphia, PA, USA, 19–20 June 2014; pp. 333–345.

- Amin, R.; Reisslein, M.; Shah, N. Hybrid SDN Networks: A Survey of Existing Approaches. IEEE Commun. Surv. Tutor. 2018, 20, 3259–3306.

- Markovitch, M.; Schmid, S. Shear: A highly available and flexible network architecture marrying distributed and logically centralized control planes. In Proceedings of the 2015 IEEE 23rd International Conference on Network Protocols (ICNP), San Francisco, CA, USA, 10–13 November 2015; pp. 78–89.

- Canini, M.; Feldmann, A.; Levin, D.; Schaffert, F.; Schmid, S. Panopticon: Incremental Deployment of Software-Defined Networking. In Proceedings of the ACM Symposium on SDN Research, Santa Clara, CA, USA, 14–15 March 2016.

- Vissicchio, S.; Vanbever, L.; Bonaventure, O. Opportunities and research challenges of hybrid software defined networks. ACM SIGCOMM Comput. Commun. Rev. 2014, 44, 70–75.

- Vissicchio, S.; Vanbever, L.; Cittadini, L.; Xie, G.G.; Bonaventure, O. Safe Update of Hybrid SDN Networks. IEEE/ACM Trans. Netw. 2017, 25, 1649–1662.

- Ahmad, I.; Kumar, T.; Liyanage, M.; Okwuibe, J.; Ylianttila, M.; Gurtov, A. Overview of 5G security challenges and solutions. IEEE Commun. Stand. Mag. 2018, 2, 36–43.

- Kellerer, W.; Kalmbach, P.; Blenk, A.; Basta, A.; Reisslein, M.; Schmid, S. Adaptable and Data-Driven Softwarized Networks: Review, Opportunities, and Challenges. Proc. IEEE 2019, 107, 711–731.

- Lain, A.; Goldsack, P. Distributed Network Connection Policy Management. U.S. Patent 9,178,850, 3 November 2015.

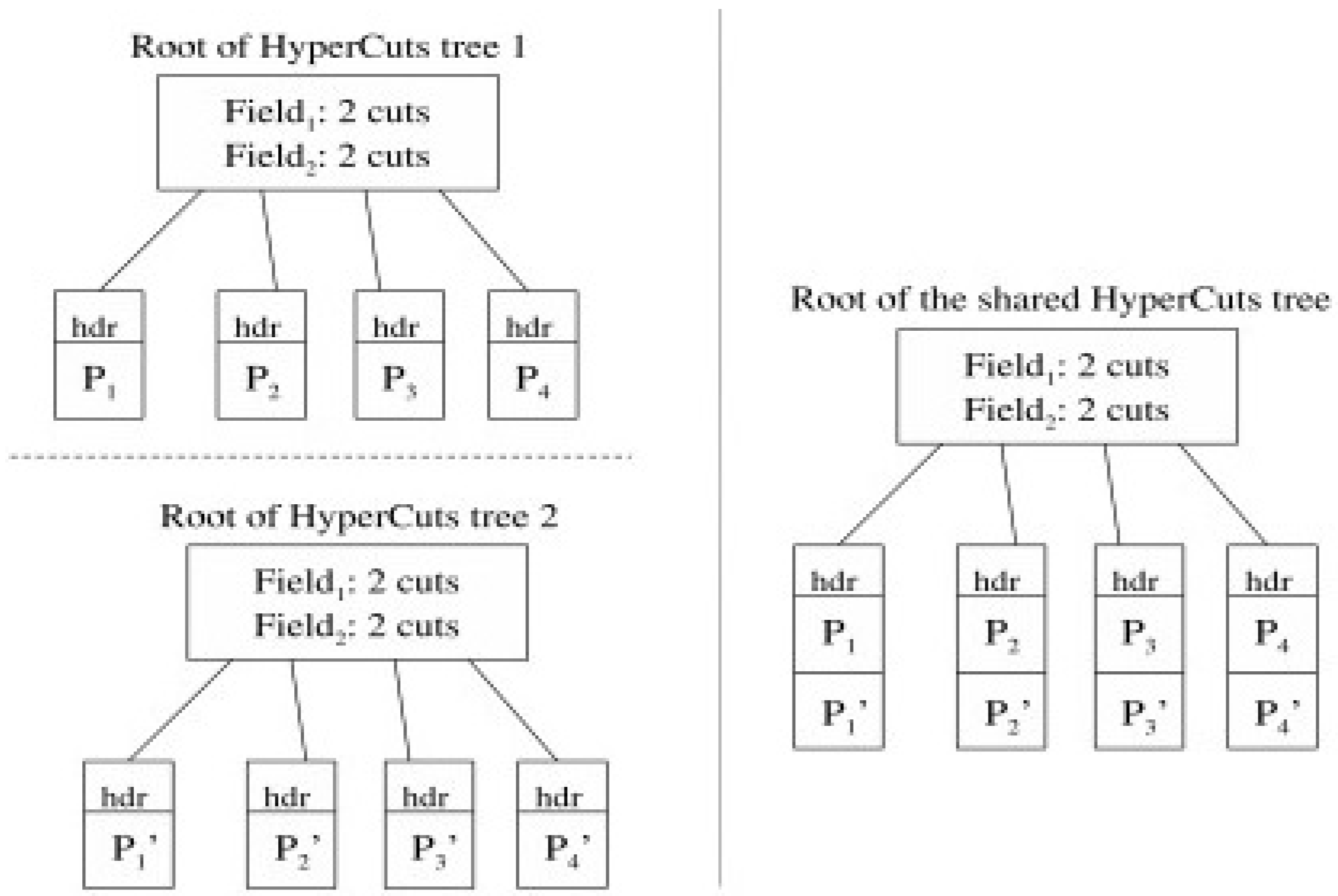

- Zhang, B.; Ng, T.S.E. On Constructing Efficient Shared Decision Trees for Multiple Packet Filters. In Proceedings of the 2010 IEEE INFOCOM, San Diego, CA, USA, 14–19 March 2010.

- Singh, S.; Baboescu, F.; Varghese, G.; Wang, J. Packet classification using multidimensional cutting. In Proceedings of the 2003 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communications, Karlsruhe, Germany, 25–29 August 2003; pp. 213–224.

- Daly, J.; Liu, A.X.; Torng, E. A difference resolution approach to compressing access control lists. IEEE/ACM Trans. Netw. 2016, 24, 610–623.

- Liu, J.; Li, Y.; Wang, H.; Jin, D.; Su, L.; Zeng, L.; Vasilakos, T. Leveraging software-defined networking for security policy enforcement. Inf. Sci. 2016, 327, 288–299.

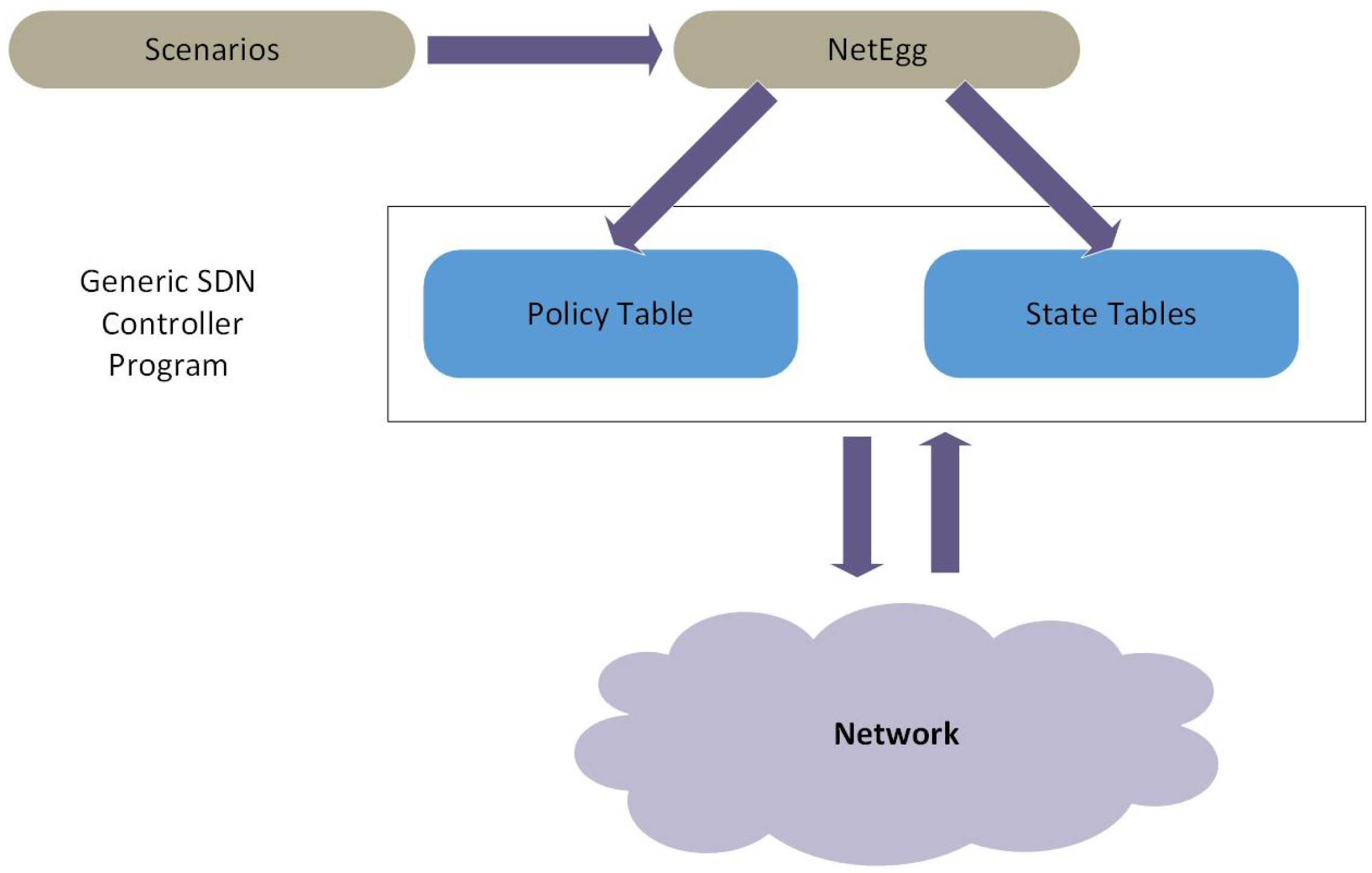

- Yuan, Y.; Alur, R.; Loo, B.T. NetEgg: Programming Network Policies by Examples. In Proceedings of the 13th ACM Workshop on Hot Topics in Networks—HotNets-XIII, Los Angeles, CA, USA, 27–28 October 2014; pp. 1–7.

- Vissicchio, S.; Vanbever, L.; Rexford, J. Sweet little lies: Fake topologies for flexible routing. In Proceedings of the 13th ACM Workshop on Hot Topics in Networks—HotNets-XIII, Los Angeles, CA, USA, 27–28 October 2014; pp. 1–7.

- Kim, D. A framework for hierarchical clustering of a link-state internet routing domain. In International Conference on Information Networking; Springer: Berlin/Heidelberg, Germany, 2003; pp. 839–848.

- Smit, H.; Li, T. Intermediate System to Intermediate System (IS-IS) Extensions for Traffic Engineering (TE). 2004. Available online: https://tools.ietf.org/html/rfc3784 (accessed on 28 May 2019). RFC 3784, RFC Editor.

- Vissicchio, S.; Tilmans, O.; Vanbever, L.; Rexford, J. Central control over distributed routing. ACM SIGCOMM Comput. Commun. Rev. 2015, 45, 43–56.

- Tilmans, O.; Vissicchio, S.; Vanbever, L.; Rexford, J. Fibbing in action: On-demand load-balancing for better video delivery. In Proceedings of the 2016 ACM SIGCOMM Conference, Florianopolis, Brazil, 22–26 August 2016; pp. 619–620.

- Fayazbakhsh, S.K.; Sekar, V.; Yu, M.; Mogul, J.C. Flowtags: Enforcing network-wide policies in the presence of dynamic middlebox actions. In Proceedings of the Second ACM SIGCOMM Workshop on Hot Topics in Software Defined Networking, Hong Kong, China, 16 August 2013; pp. 19–24.

- Sharma, P.K.; Rathore, S.; Jeong, Y.S.; Park, J.H. SoftEdgeNet: SDN Based Energy-Efficient Distributed Network Architecture for Edge Computing. IEEE Commun. Mag. 2018, 56, 104–111.

- Team, M. An Instant Virtual Network on Your Laptop (or Other PC). [Online]. Available online: http://mininet.org/ (accessed on 28 May 2019).

- Kaur, S.; Singh, J.; Ghumman, N.S. Network programmability using POX controller. In Proceedings of the ICCCS International Conference on Communication, Computing & Systems, Gurgaon, India, 9–11 September 2014; Volume 138.

- Jin, C.; Chen, Q.; Jamin, S. Inet: Internet Topology Generator. Available online: http://topology.eecs.umich.edu/inet/ (accessed on 28 May 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Amin, R.; Shah, N.; Mehmood, W. Enforcing Optimal ACL Policies Using K-Partite Graph in Hybrid SDN. Electronics 2019, 8, 604. https://doi.org/10.3390/electronics8060604
