1. Introduction

Over the last two decades, haptic devices have played an important role in human-computer interaction (HCI) by making touch part of the information flow between the user and the computer.

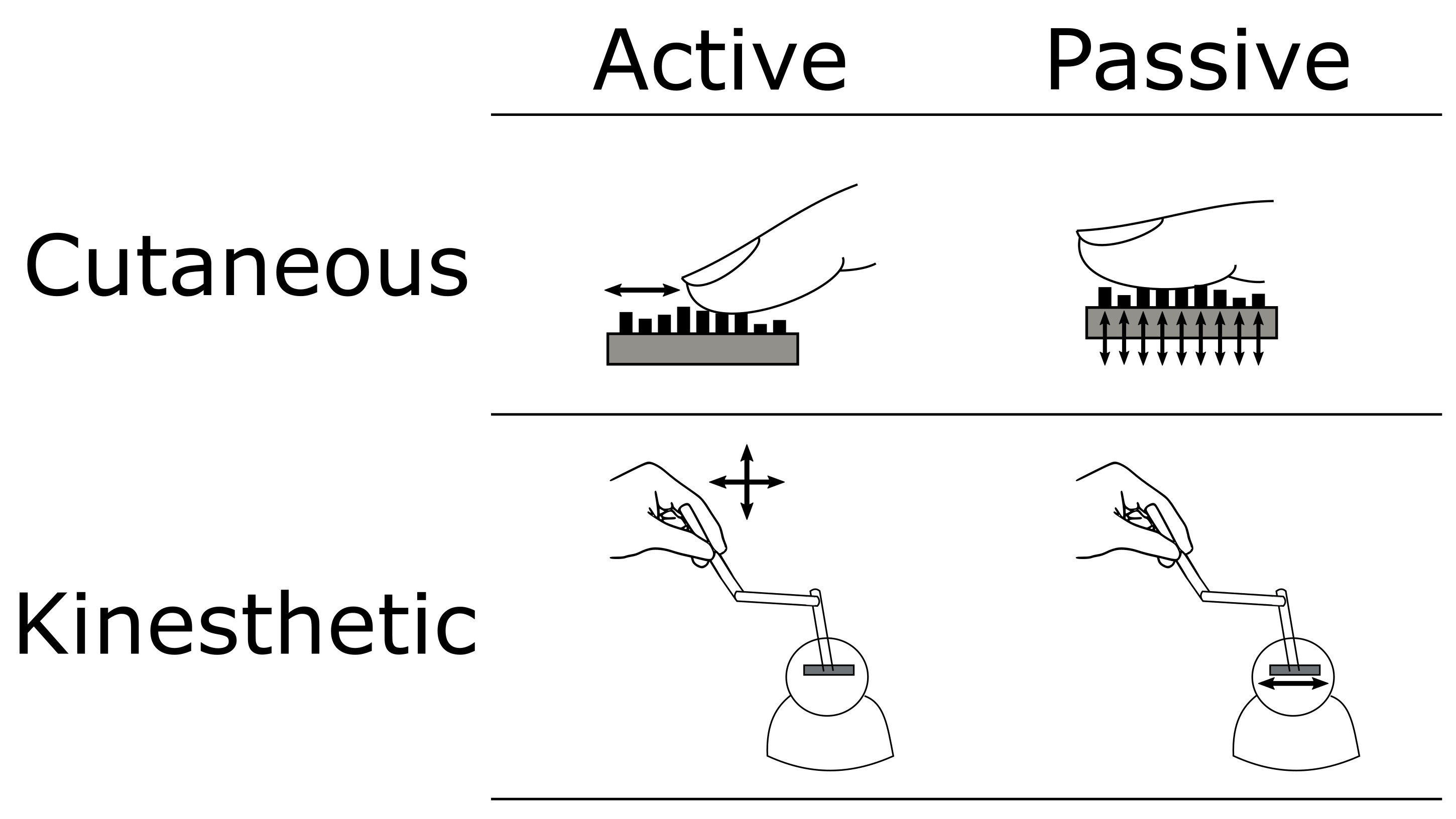

Haptic interaction implies the bidirectional transmission of cutaneous and kinesthetic sensations. Cutaneous sensations refer to pressure, shear, and vibrations applied to the skin while the kinesthetic ones involve forces and motion sensed by the muscles, tendons, and joints. It has been widely demonstrated that haptic interaction reinforces the feedback provided to the user, enhancing the HCI experience [1,2].

In general, two types of haptic exploration modes can be distinguished: active and passive. In active exploration, the user is in control of his/her own actions. In contrast, in passive mode, the haptic device is in motor control.

Figure 1 summarizes the haptic interaction types and exploration modes.

In cutaneous active haptics, the user deliberately explores the touchable surface (typically with the fingertips or palms). His/her purposive actions lead to a fully free surface exploration. Skin is deformed or stimulated as the result of the exploration process. In cutaneous passive haptics, the user does not perform any motion while in contact with the touch stimulation device. The device changes its tactile properties to deform or stimulate the skin. In kinesthetic active haptics, the user consciously applies forces and motion to the haptic device. The user gathers information from the reaction forces and motion. In kinesthetic passive haptics, the device imposes the information and guides the user’s actions. Cognitively, the user monitors the inflow of haptic data to build a mental representation of the information displayed.

Pin-based tactile displays, such as Braille cells and matrix displays, are the most representative examples of cutaneous active haptics [3,4,5]. These devices raise and lower pins to recreate touchable surfaces. Users actively explore the tactile surface to gather information.

Wearable tactile devices are the typical examples of cutaneous passive haptics. These devices are mounted on different body parts and normally, while in use, they are in permanent contact with the skin [6,7,8]. They stimulate the target skin area with vibrations or skin deformation.

Kinesthetic devices are n-DoF (degrees of freedom) manipulator-like devices that allow users to push on them and be pushed back. These exchanges of forces and motion provide feedback to the user. Representative examples of both haptic exploration modes can be found in the medical field. For active exploration, one example is a training simulator that allows organ palpation to detect anomalies in shape and consistency [9]. In this scenario, trainees explore the surface of the virtual organs to gather information. For passive exploration, one example is a surgical simulator that shows how to correctly stitch a wound [10]. Here, the haptic device guides the trainee’s hand, presenting the correct forces and motion to apply during the procedure.

In particular, kinesthetic haptic devices have generated considerable interest in fields such as robotics, rehabilitation, tele-operation/manipulation, virtual reality, gaming, education and training, among many others.

A literature review of such devices reveals that active exploration is mostly exploited in applications dealing with object manipulation and grasping (shape, hardness, and texture exploration) [11,12,13], while passive exploration is mostly preferred in applications devoted to training new skills [14,15,16].

In this paper, we use a low-cost kinesthetic haptic device to compare both haptic exploration modes in a shape recognition experiment and then explore the potential of passive exploration in three additional experiments. The purpose of the first experiment is to evaluate whether active exploration is clearly superior to the passive mode in shape recognition tasks. The aim of the passive haptics experiments is to further investigate shape recognition and assess whether shapes can be understood as pathways that can be used for navigation in space.

The rest of the paper is organized as follows: Section 2 gives a brief technical overview of the haptic device used. Section 3 presents the set of four experiments and discusses the results obtained. Finally, Section 4 concludes by summarizing the main concepts and future work perspectives.

2. System Overview

Research on kinesthetic haptics has been done either with self-developed prototypes or commercial platforms (a comprehensive survey on haptic devices can be found in [17]).

The Phantom (Personal HAptic iNTerface Mechanism), produced by SensAble Technologies (now 3D Systems [18]), is considered a milestone in the field since it formally began the research in computer haptics. It is a 6-DoF manipulator-like desktop device that connects to the computer and allows users to interact with programmed virtual objects. It is commercially available for some tens of thousands of dollars, which puts it out of reach for most haptic programmers and enthusiasts.

In 2007, Novint [

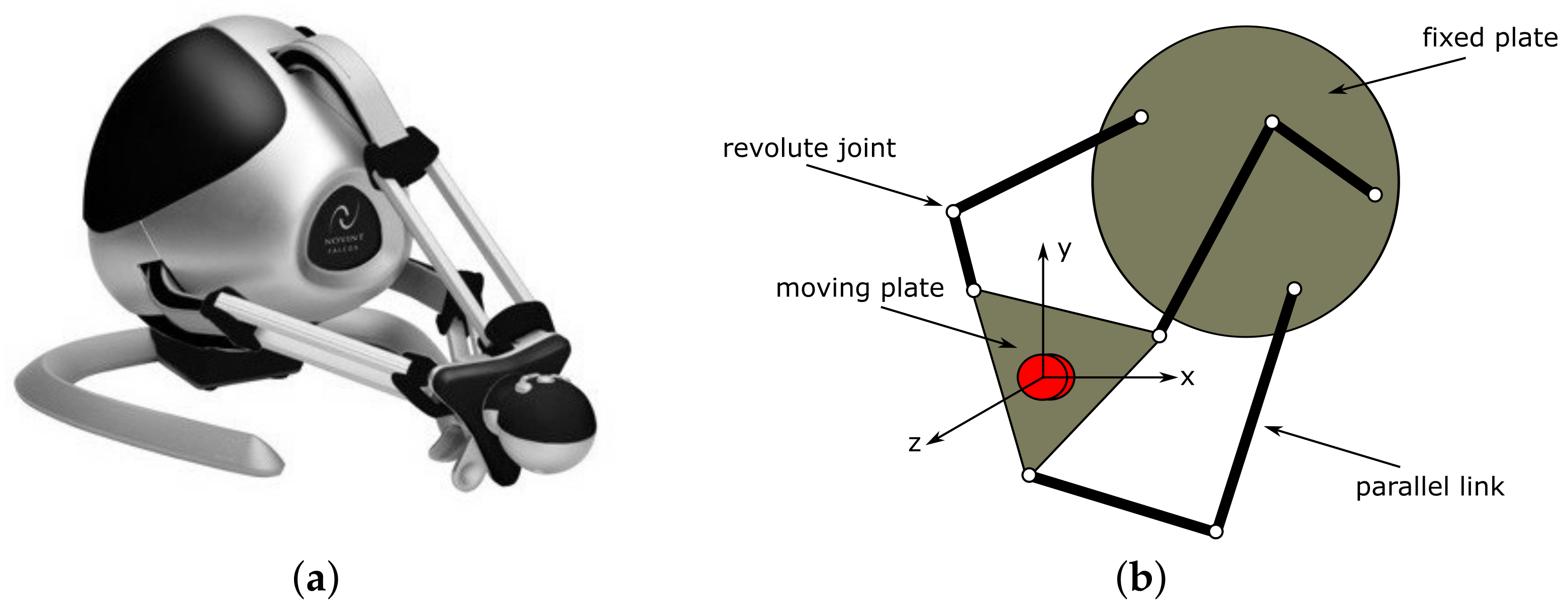

19] released the Falcon, the first consumer haptic device with 3D force feedback, destined mainly for video games and entertainment (

Figure 2a). The Falcon costs under 200 USD, which makes it an inexpensive and interesting option for computer haptics applications.

The Novint Falcon is a 3-DoF haptic device that uses a delta-robot configuration with three servo-actuated parallel links connected to a moving plate (Figure 2b). All joints in the Falcon are one-DoF revolute joints. Its parallel structure is governed by the equations of motion of a general manipulator robot (Equation (1)):

$$H(q)\,\ddot{q} + C(q,\dot{q})\,\dot{q} + G(q) = B(q)\,u \qquad (1)$$

where $q$ is the state vector of generalized coordinates, $H$ is the inertial forces matrix, $C$ is the Coriolis tensor matrix, $G$ is the vector of potentials (such as gravity), and matrix $B$ maps the control input state vector $u$ into generalized forces.

A drawback of the delta-robot configuration is the limited workspace. As the three links move together to actuate the end effector, each link is limited by the reach of the other two. According to Novint, the total workspace can be roughly considered a cube with 10 cm side length. However, Martin and Hiller [20] performed a full characterization of the Falcon and reported a spherical-like workspace in which the end effector can reach, at some points, 11 cm in the X-Y direction and 15 cm in the Z direction.

All information between the device and the controlling computer is handled via the USB port. The Falcon transmits position to the computer, which returns a force vector. Position is measured using encoders and the force vector is generated by updating the supplied currents to the servomotors in each parallel link. Position achieves approximately a 400 dpi resolution, while force reaches 9 N. The position-force loop occurs at an update rate of 1 kHz.
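To put the quoted figures in more familiar units, the short sketch below converts the 400 dpi position resolution into millimetres and the 1 kHz update rate into a loop period. The function names are illustrative and are not part of the Novint SDK or libnifalcon.

```python
MM_PER_INCH = 25.4


def dpi_to_mm(dpi: float) -> float:
    """Smallest resolvable displacement in millimetres for a dots-per-inch figure."""
    return MM_PER_INCH / dpi


def loop_period_ms(rate_hz: float) -> float:
    """Time budget of one position-force cycle in milliseconds."""
    return 1000.0 / rate_hz


if __name__ == "__main__":
    print(f"position step: {dpi_to_mm(400):.4f} mm")
    print(f"loop period:   {loop_period_ms(1000):.1f} ms")
```

Under these figures, one encoder step corresponds to roughly 0.06 mm, and each force update has to be computed within about one millisecond.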

As a programming interface, Novint provides a software development kit (SDK) based on C++ for Windows. Programmers use functions defined in the SDK to calculate and send force vectors to the device. Alternatively, libnifalcon is an open source C++ cross-platform driver [21]. With the intent of further cross-platform research, our development employs the open source solution.

3. Haptic Experiments with the Novint Falcon

The aim of our research is to determine the usability of the Falcon for displaying haptic shapes. In four experiments, subjects explored haptic shapes in both active and passive modes. Their ability to recognize them (Section 3.2, Section 3.3 and Section 3.4) and act upon the gathered information (Section 3.5) was measured. Each of the four experiments is described below.

3.1. Participants

Ten subjects (eight men and two women) participated voluntarily in the experiments. All subjects were healthy (i.e., no known impairments in tactile sensory, cognitive, or motor functions) undergraduate and graduate students at Panamericana University. Availability was the only selection criterion. Their ages ranged from 19 to 25 years old, with an average age of 22. None of them reported previous experience using kinesthetic haptic devices. All were right-handed.

Before each experiment, subjects were totally unaware of all aspects and details of the test and were given general instructions concerning the task. A short familiarization time with the Falcon was granted prior to the first experiment.

The first three experiments were conducted consecutively for all subjects. Together, they took 25 min on average. For the fourth experiment, the subject exhibiting the median performance on the three experiments was selected for the proof-of-concept.

3.2. Experiment I: Shape Recognition—Comparison between Haptic Exploration Modes

3.2.1. Method

Three haptic shapes were modeled for the test: square, triangle, and circle. Shapes were designed to fit the Falcon’s 10 × 10 cm workspace. To create the illusion of a solid object, shapes were designed as a mechanical spring governed by Hooke’s law with an appropriate stiffness constant so that when the user pushes towards the shape, a restoring force pushes his/her hand back towards equilibrium.
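The spring model described above can be sketched for the circle as follows. This is a minimal illustration, not the code used in the experiments: the stiffness `k`, the radius, and the function name are assumptions, and only the 9 N ceiling comes from Section 2. The hand explores the outer contour, so a force appears only when the end effector penetrates the shape, and it points outward along the surface normal.

```python
import math

MAX_FORCE_N = 9.0  # peak force quoted for the Falcon in Section 2


def circle_contact_force(x, y, cx=0.0, cy=0.0, radius=0.04, k=1500.0):
    """Hooke's-law restoring force (N) for a solid circle explored from outside.

    Positions and radius are in metres; k is a hypothetical stiffness in N/m.
    Returns (fx, fy), zero when the end effector is outside the circle.
    """
    dx, dy = x - cx, y - cy
    dist = math.hypot(dx, dy)
    penetration = radius - dist
    # No contact, or exactly at the centre where the normal is undefined.
    if penetration <= 0.0 or dist == 0.0:
        return (0.0, 0.0)
    magnitude = min(k * penetration, MAX_FORCE_N)  # F = k * x, clamped
    return (magnitude * dx / dist, magnitude * dy / dist)
```

A stiffer spring makes the contour feel harder, but too high a value can make a real device unstable, so the constant would need tuning on the hardware.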

Subjects explored their outer contour and were asked to match their haptic perception with one of these three shapes. Each shape was presented once during the test.

In active exploration mode, subjects were restricted to three free shape contour exploration circuits before providing their definitive answers. Exploration time and movements were recorded for each shape and for each subject.

In passive mode, subjects were asked to avoid or minimize any resistive force to the device’s motion. The Falcon was configured to exert a 0.5 N guiding force with the possibility of gradually increasing it up to 1.5 N depending on the user’s sensed resistive force. Three full contour circuits were provided by the haptic device for each shape. The guiding time was recorded for each shape and for each participant.
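The text does not specify how the guiding force grows with the sensed resistance, so the sketch below assumes a simple linear ramp clamped to the 0.5 N to 1.5 N range stated above. The `gain` parameter and the function name are hypothetical.

```python
def guiding_force(resistive_n, base_n=0.5, max_n=1.5, gain=1.0):
    """Guiding force magnitude (N) as a function of the sensed resistive force (N).

    Assumed law: start at base_n and add gain * resistance, never exceeding max_n.
    Negative readings are treated as no resistance.
    """
    resistive_n = max(resistive_n, 0.0)
    return min(base_n + gain * resistive_n, max_n)
```

With the default gain, a subject who does not resist is guided with 0.5 N, and the force saturates at 1.5 N once the resistance reaches 1 N.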

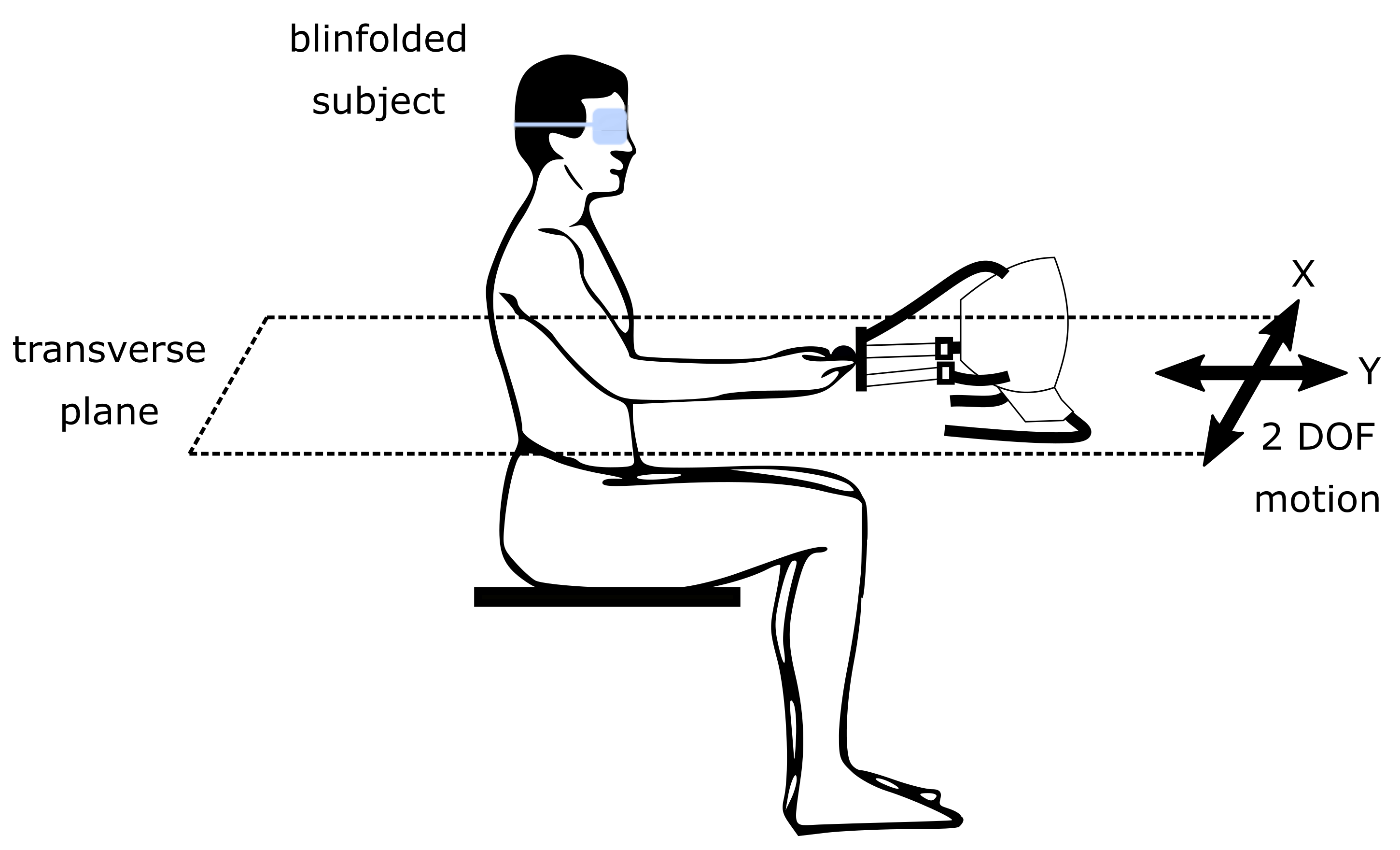

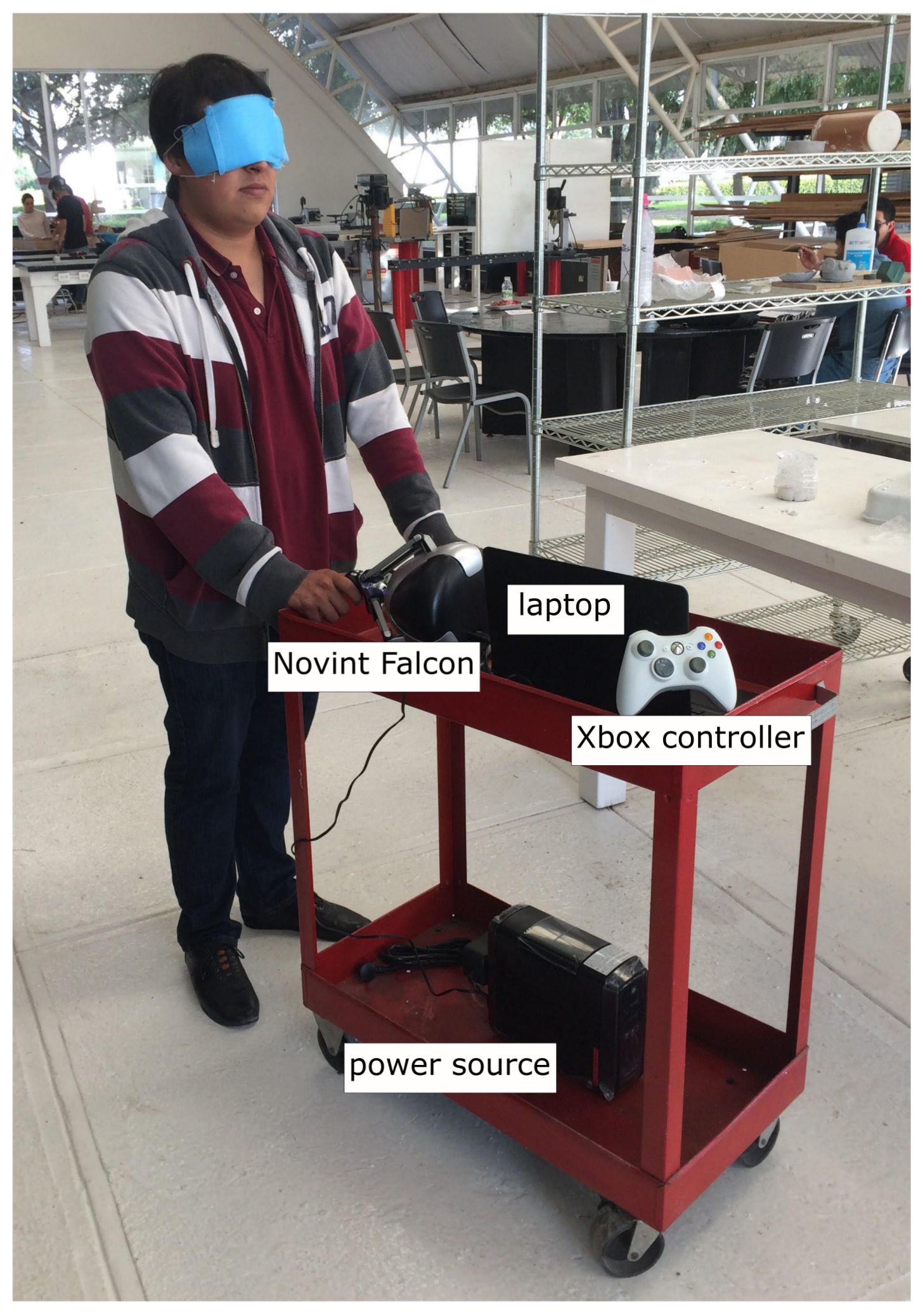

During the experiment, the subjects were seated on a chair in front of the Falcon. Subjects were blindfolded so no information from sight could be obtained. Motion in both active and passive haptic modes was limited to the transverse plane (X-Y motion). All subjects used their dominant hand to grasp the end effector and interact with the haptic device (Figure 3).

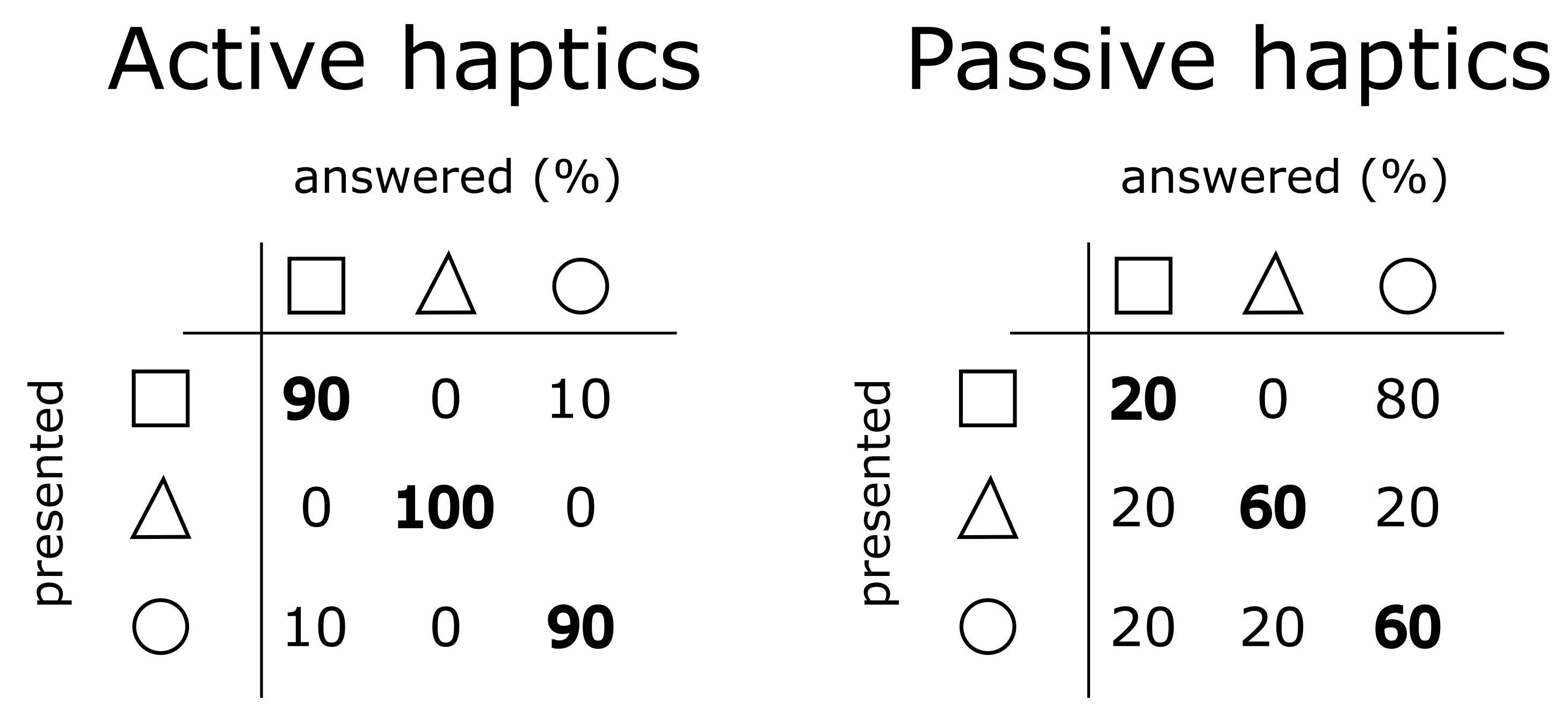

3.2.2. Results

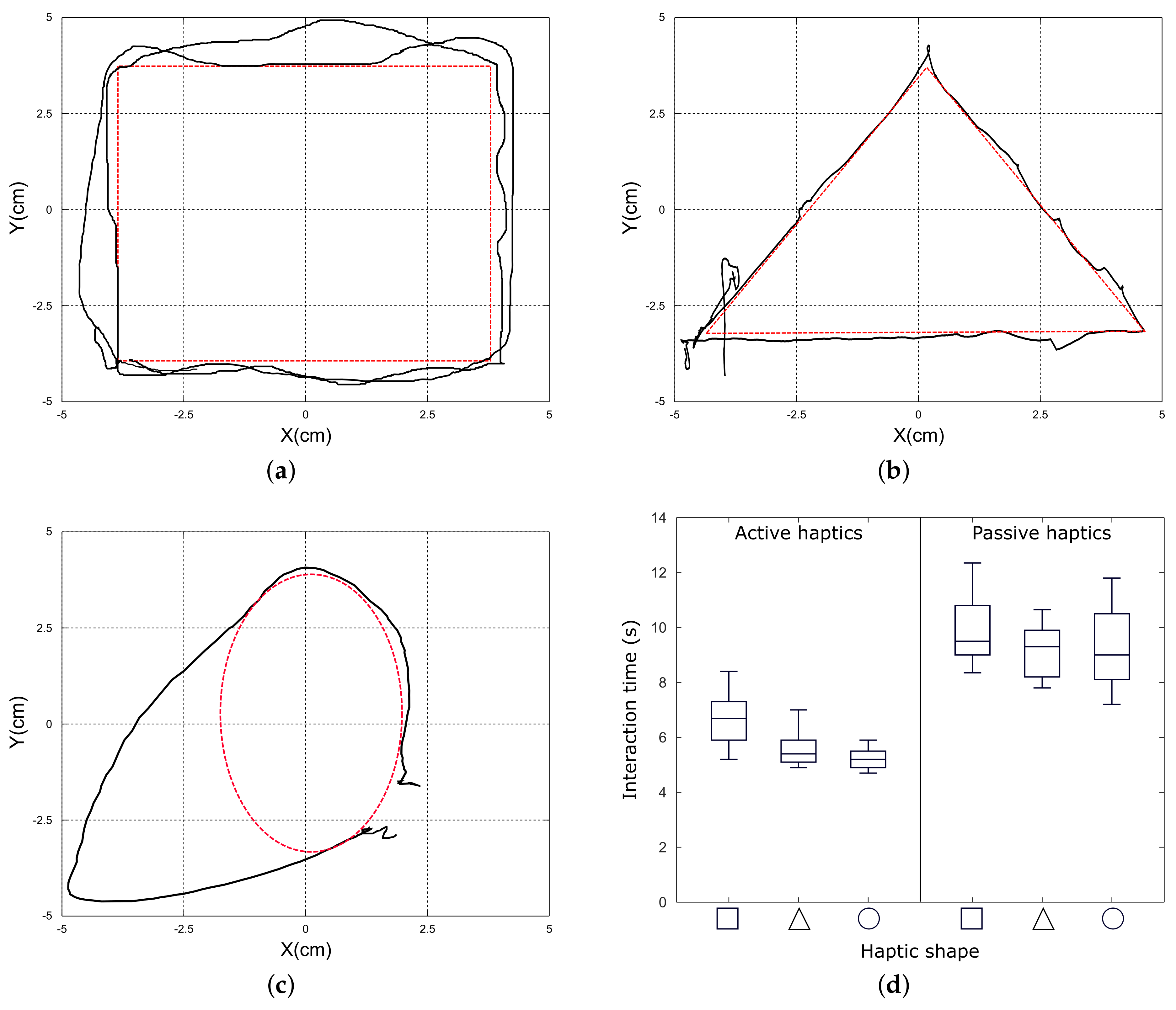

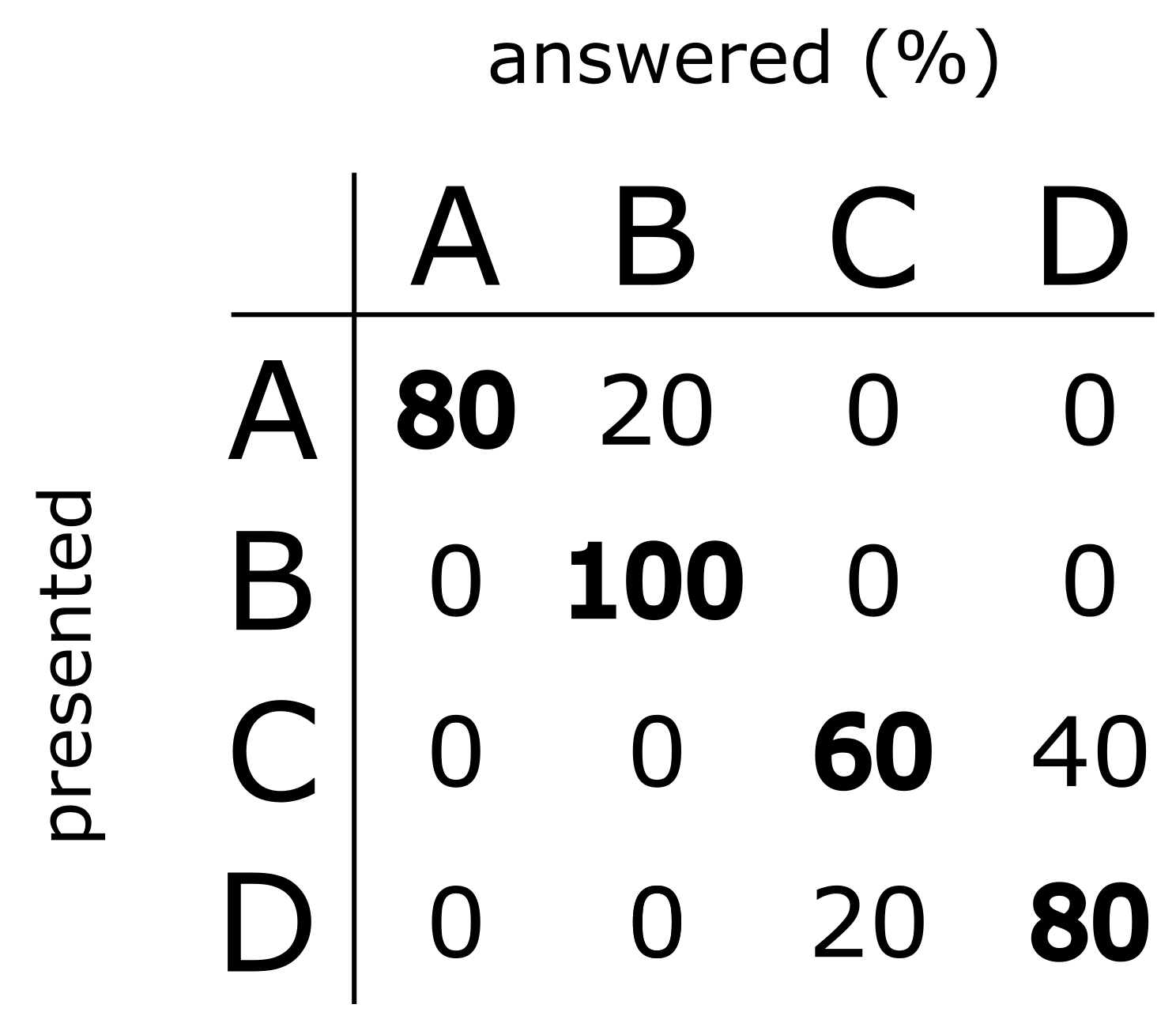

Figure 4 summarizes the results obtained for the ten subjects. The active exploration condition offers a clear superiority over the passive one. In the latter, subjects were more likely to confuse the haptic shapes. Note that the square was often taken for the circle. This is due to the morphological similarity between these shapes and to the fact that subjects misperceived the round contours that define the circle when being guided by the haptic device.

Active haptics also allows faster recognition. Figure 5a–c show the movement recordings obtained from one subject while actively exploring the shapes. From the set of recordings, it was observed that subjects did not require all three free contour exploration circuits to recognize the shapes. In most cases, subjects recognized them after performing one or two circuits.

Figure 5d compares the interaction times with the haptic device by shape and exploration condition. Being basic shapes, exploration times are similar for all three in the active condition (median values: 6.7, 5.4, and 5.2 s for the square, triangle, and circle, respectively). The same trend is observed for the guiding times in the passive condition (median values: 9.5, 9.3, and 9 s for the square, triangle, and circle, respectively). A two-way analysis of variance (ANOVA) confirmed that there is a significant difference in the interaction times between the active and passive conditions (F = 99.32, p = 0.009) and that there is no significant difference in the interaction times across the same haptic condition (F = 2.93, p = 0.255). Passive haptics naturally took longer as three shape contour circuits were imposed during interaction. Note that the passive haptic times are not represented by a single value. This is due to the different resistive forces applied by the subjects during guidance.

Active mode has often been described as yielding “better-quality” information [22,23]. Self-generated movements involve the intent to, the planning of, the preparation for, and execution of, movement [24]. None of these purposive actions are involved in passive mode. Nevertheless, passive exploration also exhibits interesting recognition rates and a fundamental difference with active exploration: there is a reduced sense of surface perception. Volitional movements convey the perception of object solidity. For the same haptic shape, subjects perceive a surface in active exploration mode but a pathway in passive mode.

The following experiments will further investigate this observation and its potential application.

3.3. Experiment II: Simple Pathway Recognition

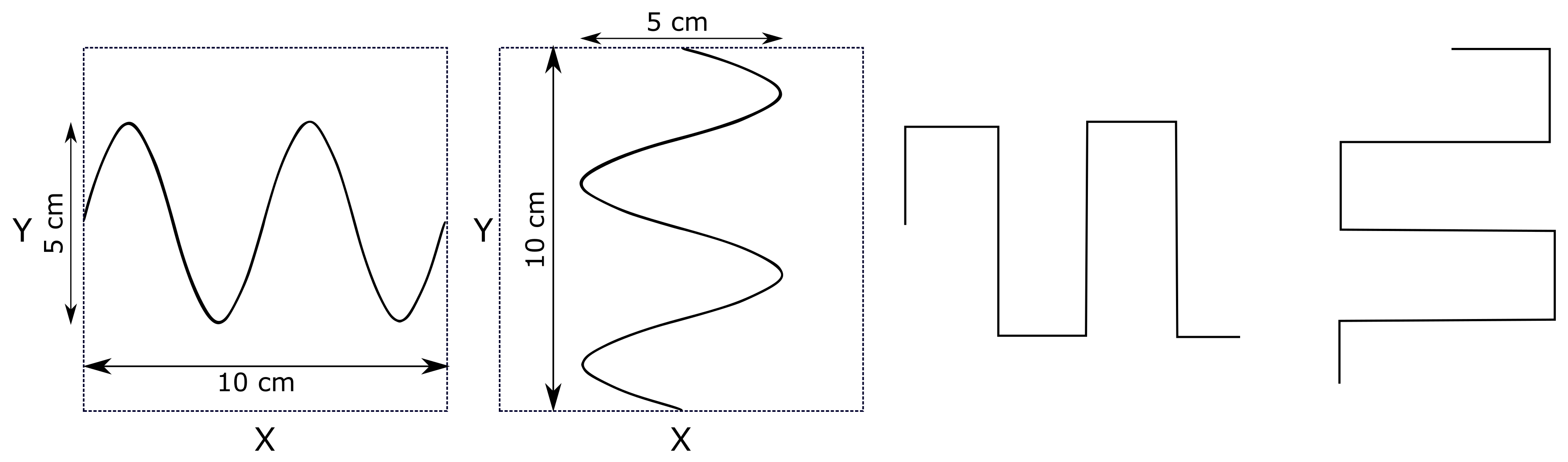

3.3.1. Method

Four haptic pathways were designed for the test: a sine wave, a square wave, a sine wave rotated 90° CCW, and a square wave rotated 90° CCW (Figure 6). The horizontal pathways (sine and square wave) were displayed in an area of 10 × 5 cm of the X-Y space (see coordinate reference in Figure 3), while the vertical ones (rotated waves) were displayed in an area of 5 × 10 cm. Subjects were guided along the pathways by the haptic device and were asked to identify the trajectory explored.
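As an illustration of how such pathways could be generated, the sketch below builds waypoint lists for the two horizontal waves inside a 10 × 5 cm area and obtains the vertical ones by a 90° counter-clockwise rotation, (x, y) → (−y, x). The number of periods, the sampling density, and the starting phase are assumptions, since the text does not state them.

```python
import math


def sine_wave(n=101, width=10.0, height=5.0, periods=2):
    """Waypoints (cm) of a sine wave centred on the origin."""
    points = []
    for i in range(n):
        t = i / (n - 1)
        x = width * t - width / 2
        y = (height / 2) * math.sin(2 * math.pi * periods * t)
        points.append((x, y))
    return points


def square_wave(width=10.0, height=5.0, periods=2):
    """Corner waypoints (cm) of a square wave centred on the origin."""
    half = width / (2 * periods)  # length of each flat section
    points = []
    for k in range(2 * periods):
        level = height / 2 if k % 2 == 0 else -height / 2
        x0 = -width / 2 + k * half
        points.append((x0, level))
        points.append((x0 + half, level))
    return points


def rotate_ccw_90(points):
    """Rotate waypoints 90 degrees counter-clockwise about the origin."""
    return [(-y, x) for x, y in points]


def bounding_box(points):
    """Return (width, height) of the axis-aligned box enclosing the waypoints."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs), max(ys) - min(ys))
```

Calling `bounding_box(rotate_ccw_90(square_wave()))` confirms that the rotated pathway occupies a 5 × 10 cm area, matching the layout described above.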

Each pathway was presented once during the test. Again, participants were asked to avoid or minimize any resistive force to the device’s motion. The haptic device provided three full circuits for each pathway. Only the passive exploration mode was assessed. The experimental conditions depicted in Figure 3 were observed.

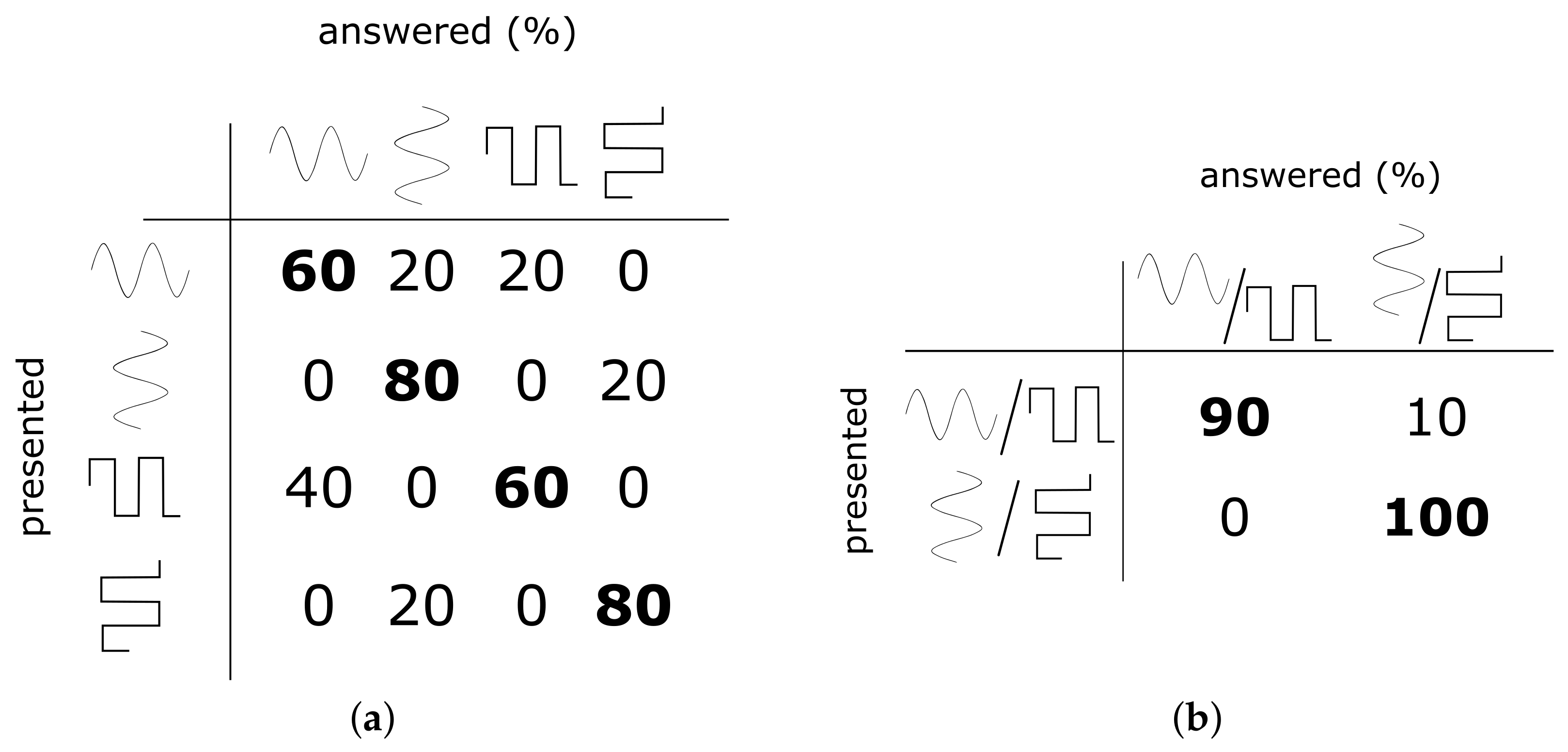

3.3.2. Results

Figure 7a summarizes the results obtained. In addition to the satisfactory recognition rates ranging between 60% and 80%, an interesting observation can be made: the haptic guiding direction can be easily recognized.

Note that, when a pathway was misidentified, it was taken for the other pathway exhibiting the same direction. For example, when confused, the square wave was taken for the sine wave or the rotated sine wave for the rotated square one.

Figure 7b analyses the results obtained by grouping same direction pathways. Note the high recognition rates for the haptic guidance direction.
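The grouping by direction can be expressed as a small scoring function: an answer counts as a direction hit whenever the presented and the reported pathway share the same orientation, even if the waveform itself was confused. The labels and the function name below are illustrative, and no data from the study are included.

```python
# Orientation of each pathway in Experiment II (labels are illustrative).
DIRECTION = {
    "sine": "horizontal",
    "square": "horizontal",
    "sine_rot": "vertical",
    "square_rot": "vertical",
}


def direction_recognition_rate(responses):
    """Fraction of trials in which the guiding direction was recognized.

    responses: list of (presented, answered) pathway labels.
    """
    if not responses:
        raise ValueError("no responses to score")
    hits = sum(1 for shown, said in responses if DIRECTION[shown] == DIRECTION[said])
    return hits / len(responses)
```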

The following experiment will further investigate haptic guiding direction with more complex pathways.

3.4. Experiment III: Complex Pathway Recognition

3.4.1. Method

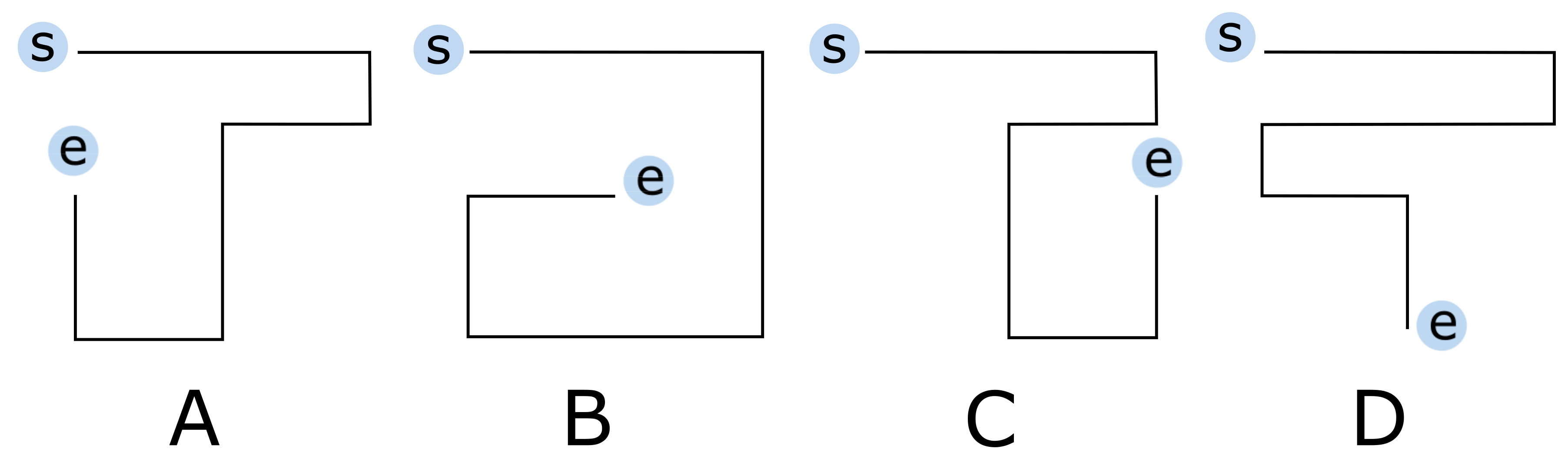

Figure 8 shows four haptic pathways exhibiting a higher level of complexity than those in the previous experiment. These pathways fully fitted the 10 × 10 cm workspace and included four types of guiding distances (2.5, 5, 7.5, and 10 cm) and changes of direction, which had to be understood by the subjects. Participants were guided along the pathways by the haptic device and were asked to identify the trajectory explored.
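One way to describe such pathways is as a sequence of straight sections, each with a direction and one of the four guiding distances. The sketch below builds the vertices from that description and checks that the result fits the 10 × 10 cm workspace. The example sequence in the usage note is hypothetical and does not reproduce pathways A to D of Figure 8.

```python
STEP = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}
ALLOWED_CM = (2.5, 5.0, 7.5, 10.0)  # guiding distances used in Experiment III


def build_pathway(segments, start=(0.0, 0.0)):
    """Turn (direction, length_cm) sections into a list of vertices in cm."""
    x, y = start
    vertices = [(x, y)]
    for direction, length in segments:
        if length not in ALLOWED_CM:
            raise ValueError(f"unsupported guiding distance: {length} cm")
        dx, dy = STEP[direction]
        x, y = x + dx * length, y + dy * length
        vertices.append((x, y))
    return vertices


def fits_workspace(vertices, side_cm=10.0):
    """True if the pathway's bounding box fits a square workspace."""
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    return max(xs) - min(xs) <= side_cm and max(ys) - min(ys) <= side_cm
```

For instance, `build_pathway([("right", 10.0), ("up", 5.0), ("left", 7.5), ("up", 2.5)])` yields five vertices spanning 10 × 7.5 cm, which `fits_workspace` accepts.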

As in the previous experiment, only the passive exploration condition was evaluated. Each pathway was presented once during the test. The haptic device provided three full circuits for each pathway. The experimental conditions depicted in Figure 3 were observed.

3.4.2. Results

Figure 9 shows the results obtained. Note an overall good performance.

Changes of direction were easy to recognize. Note, for example, pathways A and C. The only difference between them is a change of direction in the fifth path section. Note that there was no confusion between them.

Short guiding distances increase the complexity of the pathways. They are more difficult to discriminate, especially within such a small workspace. Note that pathways C and D are quite similar. While pathway C exhibits a short guiding distance in the third path section and a long one in the fourth, pathway D exhibits the opposite. Even though the change of direction in the last path section was expected to ease recognition, pathway C was taken for D by 40% of the subjects and D for C by 20% of them.

Results confirm that subjects were capable of recognizing complex pathways satisfactorily. We wondered whether this capability could be used for more challenging tasks such as spatial navigation and mobility assistance. Think of a visually impaired pedestrian with a guide dog. Through its leash, the dog provides kinesthetic passive haptic feedback to guide its user along an environment. Navigational instructions (such as go forward, make a turn, and stop) can be inferred from haptic data.

The next experiment will implement this concept using the Novint Falcon device.

3.5. Experiment IV: Navigation in Space With Passive Haptics

3.5.1. Method

To validate the potential of passive haptics for assisting human navigation, the subject exhibiting the median performance on the three previous experiments was invited to participate in this last study. The median was chosen because it reflects a standard performance in a meaningful way (neither the best nor the worst). The chosen subject was a 23-year-old male.

The experimental setup in Figure 10 was deployed. It comprises the haptic device, a laptop computer, an Xbox 360 wireless controller, and a portable power source. All but the Xbox controller were mounted on a tool cart.

A research assistant located outside of the navigation environment operated the Xbox wireless controller and provided the directional instructions: go forward, turn right/left, and stop. These instructions were sent to the computer, where they were coded as passive haptic feedback to be displayed by the Novint Falcon. As in the guide dog example, an intuitive haptic code was used: the handle moving forward (toward the device) meant “go forward”, the handle moving to the right/left meant “turn right/left”, and the handle moving suddenly backward (toward the subject) meant “stop”.
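The haptic code described above amounts to a lookup from each instruction to a handle displacement. The sketch below shows one possible encoding; the displacement amplitude, the axis convention, and all names are assumptions made for illustration and are not taken from the experimental software.

```python
from enum import Enum


class Command(Enum):
    FORWARD = "go forward"
    LEFT = "turn left"
    RIGHT = "turn right"
    STOP = "stop"


def handle_target(command, amplitude_cm=3.0):
    """Target handle displacement (lateral, forward) in cm for an instruction.

    Assumed convention: +forward points toward the device (away from the
    subject) and +lateral points to the subject's right.
    """
    targets = {
        Command.FORWARD: (0.0, amplitude_cm),
        Command.RIGHT: (amplitude_cm, 0.0),
        Command.LEFT: (-amplitude_cm, 0.0),
        Command.STOP: (0.0, -amplitude_cm),  # pulled back toward the subject
    }
    return targets[command]
```

The suddenness of the stop cue described in the text would be a matter of how quickly the handle is driven to the target, which this position-only sketch does not model.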

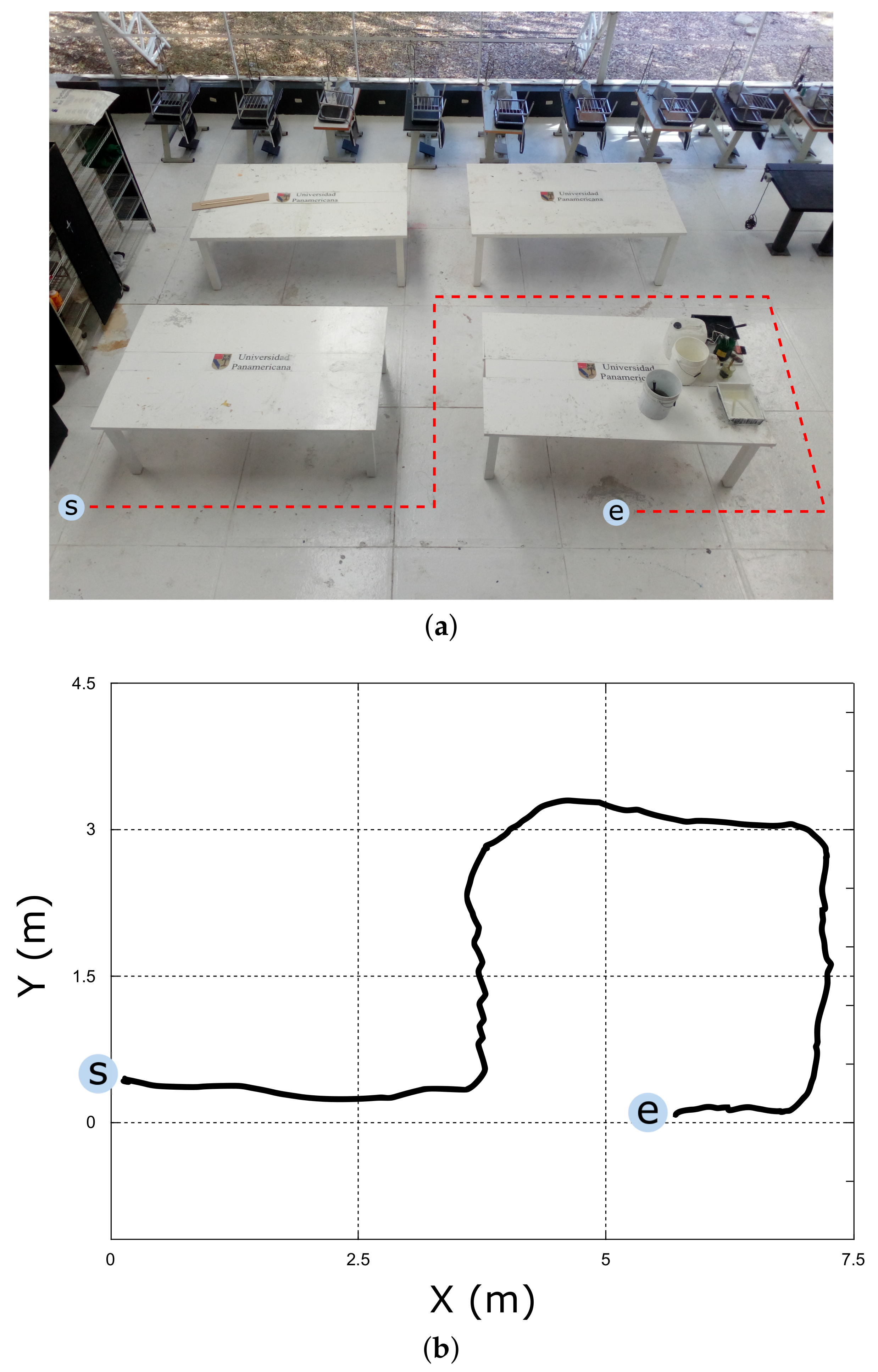

An indoor laboratory was used as the navigation environment and a desired trajectory was established (Figure 11a). The subject was asked to wear a blindfold and to walk and move the tool cart according to the haptic instructions provided. He was instructed to walk at a comfortable pace, rather than attempting to finish the test as quickly as possible. A camera located 4 m above the ground recorded RGB video. The acquired video was later processed offline in Matlab for subject tracking.

3.5.2. Results

Figure 11b shows the trajectory followed by the test subject. All navigational instructions were correctly perceived and timely executed with no mistakes.

After completing the task, the subject stated that the haptic guidance provided by the device was natural as it conveyed a transparent feeling to the user. Cognitive workload was indeed perceived as low. Neither significant mental effort nor frustration in understanding the instructions was observed in the subject. This is most likely due to the user’s passivity during the task (i.e., the haptic device is in charge and guides the user at every moment).

Results confirm that kinesthetic passive haptic feedback is intuitive and can be exploited for assisting human navigation.

4. Conclusions

Kinesthetic active and passive haptic exploration are inherently different. In the first, the individual has complete control over the haptic device. In the latter, the individual surrenders that control to the haptic device.

In this paper, we have presented a set of experiments that highlights the main perceptual features of both exploration modes when interacting with haptic shapes. Four experiments were conducted with the Novint Falcon, a low-cost 3D force feedback commercial haptic device. The main findings can be summarized as follows: (1) Active haptics offers a clear superiority over passive haptics for shape recognition tasks. This is mainly due to the nature of purposive actions, which ease the perception of object solidity. Passive haptics conveys the shape exploration process as the sensation of being guided along pathways. (2) In passive haptic exploration, simple and complex pathways can be recognized at rates well above chance. Haptic guiding direction is easily discriminable. (3) Haptic guiding direction can be effectively exploited in tasks such as navigation/mobility assistance.

Future work aims to convert the experimental setup of Figure 10 into a compact handheld kinesthetic haptic device that assists the navigation of blind and visually impaired people. The prototype is expected to offer the intuitive passive haptic feedback of a guide dog with a low-cost, efficient, and ergonomic design. As in previous projects [25], a GPS-based technology will complement the haptic device by enabling wayfinding in urban environments.