Abstract

Imperceptibility and robustness are the two complementary, but fundamental requirements of any digital image watermarking method. To improve the invisibility and robustness of multiplicative image watermarking, a complex wavelet based watermarking algorithm is proposed by using the human visual texture masking and visual saliency model. First, image blocks with high entropy are selected as the watermark embedding space to achieve imperceptibility. Then, an adaptive multiplicative watermark embedding strength factor is designed by utilizing texture masking and visual saliency to enhance robustness. Furthermore, the complex wavelet coefficients of the low frequency sub-band are modeled by a Gaussian distribution, and a watermark decoding method is proposed based on the maximum likelihood criterion. Finally, the effectiveness of the watermarking is validated by using the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) through experiments. Simulation results demonstrate the invisibility of the proposed method and its strong robustness against various attacks, including additive noise, image filtering, JPEG compression, amplitude scaling, rotation attack, and combinational attack.

1. Introduction

With the growing popularity of big data and multimedia applications, a large amount of digital multimedia data is generated, transmitted, and distributed over the Internet every day. Securing these data is a pressing problem. An efficient solution is watermarking technology, which is mainly used for copyright protection, authentication, fingerprinting, etc. [1,2,3]. In general, the main idea of digital watermarking is to embed useful information in a host signal without affecting the perceptual quality of the host signal. For a watermarking method, the three indispensable, but conflicting requirements are robustness, invisibility, and capacity [1]. These requirements constrain one another and have to be addressed together. For instance, when the imperceptibility of a watermark is improved, its robustness is typically reduced. Therefore, an ideal digital watermarking method should achieve a good balance among these three requirements.

To achieve the above goal, numerous watermarking methods have been proposed in recent years. These methods can be classified in different ways, e.g., into spatial domain methods [4] and frequency domain methods [5,6,7,8,9], based on the watermark embedding space. Depending on the manner of embedding, the methods can be further categorized into additive [10], multiplicative [11,12], and quantization based methods [13,14]. In addition, watermarking methods can be categorized as blind [11] or non-blind [15] based on watermark decoding.

In terms of embedding region, most current watermarking methods focus on the frequency domain [6,7,16], because frequency domain watermarking algorithms are relatively more robust, invisible, and stable, especially the wavelet based watermarking [16]. The reason is that wavelet based watermarking has two obvious advantages. One of the advantages is that the wavelet transform fits well with the human visual system, which can be exploited in the design of an invisible watermarking [17,18,19,20,21]. The other advantage is that the wavelet transform has good multi-scale analytic characteristics, which can be used to develop a robust watermarking method [21,22,23,24]. Subsequently, many watermarking methods that use wavelets have been proposed in the past two decades. In terms of embedding method, the multiplicative watermarking methods are reportedly more robust, and they provide higher imperceptibility than the additive ones [25,26]. The multiplicative watermarking approaches are dependent on image content [25], and more importantly, they have strong robustness. Therefore, multiplicative watermarking methods are preferred for copyright protection [26]. For this reason, the multiplicative embedding approach is adopted in our study.

The wavelet transform offers time-frequency localization and multi-scale analysis, and it is suitable for describing the characteristics of 1D signals. However, when the signal dimension increases, the wavelet transform cannot sufficiently describe the singularity of the signal [27]. Therefore, to capture the directional information of 2D signals, multi-scale geometric analysis techniques for obtaining the intrinsic geometric structure of images, such as contours and smooth curves, have emerged in recent years. These techniques include ridgelets [15,28], wave atoms [29], contourlets [27,30], framelets [31], and the dual tree-complex wavelet transform (DT-CWT) [32,33].

Current watermarking algorithms, such as the methods in [16,28,30], have achieved satisfying results; however, some problems remain to be solved. Akhaee et al. [16] proposed a robust scaling based watermarking method with a multi-objective optimization approach. Although the method in [16] carefully addresses the balance between invisibility and robustness, the cost of multi-objective optimization is high, which hinders its extension to real applications. Despite the success of multi-scale geometric analysis in various image watermarking methods [9,30], the time cost of these methods is also high. As a result, a simple and effective digital watermarking method that balances robustness and imperceptibility is needed.

To address the above issues, a quantization watermarking approach with the L1 norm function was proposed in our previous work [33]. That work achieved good imperceptibility, but the robustness of the watermark against some attacks remained insufficient. In the present study, a watermarking method that can be easily implemented is developed, and its robustness is boosted on the basis of a visual perception model. In this manner, the fidelity of the image can be improved. The developed method can achieve a good balance between invisibility and robustness, which is beneficial to the practical use of watermarking technology.

DT-CWT is an overcomplete transform, which creates redundant complex wavelet coefficients that can be utilized to embed watermarks. Approximate shift invariance is the main feature of DT-CWT. We can use this property to produce a watermark that can be decoded even after the host signal has undergone geometric attacks, such as amplitude scaling and rotation. DT-CWT also has good directional selectivity. Therefore, we propose an image watermarking method based on DT-CWT in this paper. First, we segment the original image and choose the image blocks with high entropy. Second, we embed watermark data into the low frequency complex wavelet coefficients by using a visual perceptual model, and we extract the watermark data by using the maximum likelihood estimator (MLE). Finally, we validate the effectiveness of the watermarking algorithm through experimental simulation.

The contributions of the proposed method are twofold. On the one hand, an adaptive watermark embedding method in terms of texture masking and the visual saliency model is developed, which embeds each watermark bit into a set of dual tree complex wavelet coefficients. Using this strategy, the robustness and imperceptibility of the watermark can be well balanced. On the other hand, the low frequency of complex coefficients with high entropy is selected as the watermark embedding space, which can improve the robustness of watermarking against some geometric attacks, such as rotation, scaling, and combinational attack.

The rest of the paper is structured as follows. Section 2 provides the basic concept of DT-CWT. Section 3 introduces the proposed watermarking method, including watermark embedding and watermark decoding. Section 4 tests the performance of the proposed watermarking method through experiments and discusses the corresponding findings. The conclusion is presented in Section 5.

2. Dual Tree-Complex Wavelet Transform

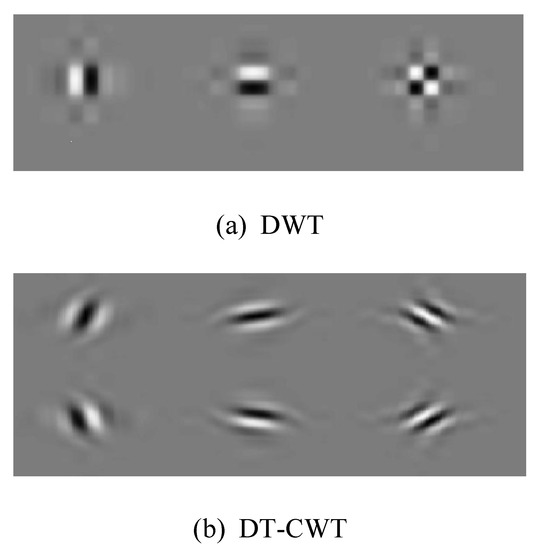

DT-CWT, which was initially proposed by Selesnick, Baraniuk, and Kingsbury [32], inherits the characteristics of wavelets; it is approximately shift invariant and has good directional selectivity [32]. A DT-CWT can produce six directional sub-bands oriented at 75°, 15°, −45°, −75°, −15°, and 45° on each decomposition scale. By contrast, a wavelet transform has only three directional sub-bands oriented at 90°, 0°, and 45° on each scale. A comparison of the impulse responses of these two transforms is shown in Figure 1. The approximate shift invariance of DT-CWT can be used to design watermarking that counters geometric attacks. For instance, if the image block is re-sampled after scaling, then DT-CWT can generate a set of coefficients that are roughly the same as those of the original block. This property enables the watermarking to counter scaling attacks. Other transforms, such as the discrete wavelet transform (DWT), discrete cosine transform (DCT), and Fourier transform, do not have this property.

Figure 1.

Impulse responses of the reconstruction filters in two transforms. (a) DWT. (b) DT-complex wavelet transform (CWT).

For a 1D signal, the two filter trees yield twice as many wavelet coefficients as the original wavelet transform. Furthermore, the 1D signal can be decomposed by the 1D DT-CWT with a shifted and dilated mother wavelet function and scaling function [34], i.e.,

where Z denotes the set of natural numbers; J and l refer to the indices of shifts and dilations, respectively; denotes the scaling coefficient; and is the complex wavelet transform coefficient with and , where r and i represent the real and imaginary parts, respectively.
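For reference, the 1D DT-CWT expansion is commonly written in the following form in the literature. The symbol names used here ($s_{J,l}$ for the scaling coefficients, $c_{j,l}$ for the complex wavelet coefficients, $\phi$ and $\psi$ for the scaling and wavelet functions) are assumptions chosen to match the description above and may differ from the notation of [34].

```latex
f(x) = \sum_{l \in \mathbb{Z}} s_{J,l}\,\phi_{J,l}(x)
     + \sum_{j \ge J} \sum_{l \in \mathbb{Z}} c_{j,l}\,\psi_{j,l}(x),
\qquad
\phi_{J,l} = \phi^{r}_{J,l} + \sqrt{-1}\,\phi^{i}_{J,l},
\quad
\psi_{j,l} = \psi^{r}_{j,l} + \sqrt{-1}\,\psi^{i}_{j,l}
```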

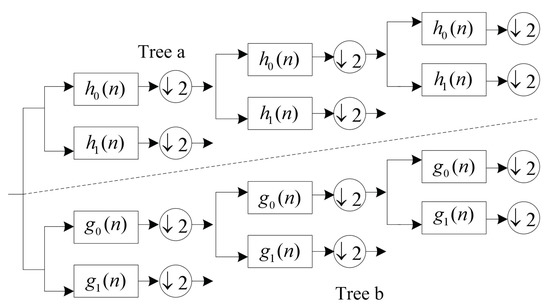

Figure 2 shows the calculation process of the real part and the imaginary part of DT-CWT. For tree a, filters and are used to compute the real part. For tree b, filters and are utilized to calculate the imaginary part. As shown in Figure 2, the output of the two trees can be interpreted as the real part and the imaginary part of the complex wavelet coefficients. For a 2D signal, a 2D image can be decomposed by 2D DT-CWT [34], i.e.,

where denotes the directionality of DT-CWT. At each scale of decomposition, the DT-CWT decomposition of results in six complex valued high pass sub-bands in which each high pass sub-band corresponds to one unique direction .

Figure 2.

Two-level 1D dual tree complex wavelet transform.

3. Watermark Embedding and Detection

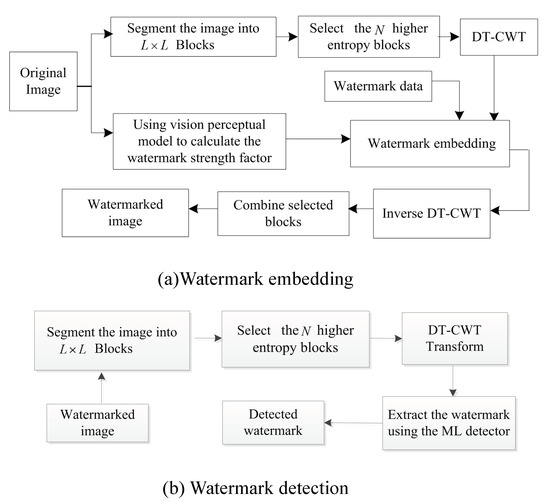

According to the human visual perception model, human eyes are generally less sensitive to distortions in high entropy image blocks than in smooth ones. The reason is that relatively strong edges usually appear in high entropy image blocks [35]. Inspired by [35], we propose an image watermarking method that uses high entropy blocks. The block diagram of the proposed method is illustrated in Figure 3, which consists of watermark encoding and watermark decoding. The main advantage of the proposed method is its simple implementation; moreover, the tradeoff between invisibility and robustness can be resolved by a visual perceptual model. DT-CWT is also adopted in this work to embed watermark information, which can improve the robustness of the watermarking against geometric attacks.

Figure 3.

Block diagram of the proposed watermarking. (a) Embedding. (b) Detection.

3.1. Watermark Embedding

As shown in Figure 3a, the watermark embedding procedure involves the following steps:

Step 1: The original image is segmented into blocks, and the first blocks in the descending order of estimated entropy are selected for watermarking purposes.

Step 2: DT-CWT is applied to each selected image block, and a single bit of “0” or “1” is embedded in each block by manipulating the complex wavelet coefficients of the low frequency sub-band as follows:

where x denotes the host coefficients of the low frequency sub-band; denotes the modified coefficients; and is called the watermark strength factor, its value being determined by texture masking and visual saliency in Section 3.2.

Step 3: Repeat Step 2 for each image block.

Step 4: The inverse DT-CWT is applied to the watermarked blocks, and the watermarked blocks are combined with the non-watermarked blocks to obtain the whole watermarked image.
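The block selection in Step 1 and the multiplicative embedding in Step 2 can be sketched as follows. This is a minimal illustration, not the authors' implementation: the entropy is assumed to be the Shannon entropy of the gray-level histogram, and the embedding rule (multiply by the strength factor for bit “1”, divide by it for bit “0”) is an assumption modeled on scaling based watermarking as in [16]. The function names and the parameter `alpha` are hypothetical, and the coefficients are plain numbers standing in for the low frequency DT-CWT coefficients.

```python
import math
from collections import Counter


def block_entropy(block):
    """Shannon entropy (in bits) of the gray-level histogram of a 2D block."""
    pixels = [p for row in block for p in row]
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def split_into_blocks(image, size):
    """Split a 2D list into non-overlapping size x size blocks, row-major."""
    blocks = []
    for r in range(0, len(image) - size + 1, size):
        for c in range(0, len(image[0]) - size + 1, size):
            blocks.append([row[c:c + size] for row in image[r:r + size]])
    return blocks


def select_high_entropy(blocks, count):
    """Indices of the `count` blocks with the largest entropy (descending)."""
    order = sorted(range(len(blocks)),
                   key=lambda i: block_entropy(blocks[i]),
                   reverse=True)
    return order[:count]


def embed_bit(coeffs, bit, alpha):
    """Assumed scaling rule: scale by alpha for bit 1, by 1/alpha for bit 0."""
    scale = alpha if bit == 1 else 1.0 / alpha
    return [scale * x for x in coeffs]


# Toy 4x4 image: one flat block and three blocks with increasing variety.
image = [
    [5, 5, 1, 2],
    [5, 5, 3, 4],
    [0, 0, 7, 8],
    [0, 1, 9, 7],
]
blocks = split_into_blocks(image, 2)
print(select_high_entropy(blocks, 2))  # [1, 3]
```

In a full pipeline, `embed_bit` would be applied to the low frequency sub-band of each selected block before the inverse transform of Step 4.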

3.2. Visual Saliency Based Watermark Strength Factor

The watermark strength factor affects imperceptibility. To achieve the transparency of the watermark, two important concepts, texture masking and visual saliency, are used to design the watermark strength factor. The just noticeable difference (JND) threshold is often high in the texture region of an image [36]. Therefore, a high watermark strength factor can be selected to embed more information in the texture region. In addition, the work in [37] studied the spread transform dither modulation (STDM) watermarking algorithm based on the visual saliency model and achieved good results. Furthermore, Wang et al. studied the JND estimation algorithm based on visual saliency in the wavelet domain [38]. The work in [39] utilized the JND scheme in designing a watermarking method. Besides this, an adaptive quantization watermarking algorithm was proposed in [40]. The term “adaptive” in [40] was mainly used to describe a process, behavior, and/or system that is able to interact with its environment. In our work, however, “adaptive” describes the embedding strength of the watermark. Accordingly, the concept of visual saliency [38,41,42] is used to develop an adaptive watermark strength factor in this work. The human eye is inclined to focus on prominent areas, so distortions are more likely to remain hidden in areas far from the salient part of the image. Therefore, the watermark embedding strength can be increased in those areas. The watermark strength factor can be calculated as follows.

First, on the basis of the characteristic of the texture masking, the high frequency energy of the image block is calculated by Equation (5), i.e., the value is the average of the sum of the energies of the six high frequency sub-bands.

where the six sub-bands of , , ⋯, are produced, which correspond to the outputs of the six directional sub-bands oriented at 15°, 45°, 75°, −15°, −45°, and −75°. Subsequently, the high frequency sub-band image energy of the N image sub-block is computed as follows:

where is the average energy of all image blocks. The watermark strength factor can increase with increasing . Hence, the high frequency portion of the watermark strength factor can be computed by employing the relationship proposed in [36] as follows:

where the values of , c, and are set to , , and , respectively.
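The texture masking energy described above can be illustrated with a short sketch. It assumes that the energy of a sub-band is the mean squared magnitude of its complex coefficients and that the per-block energy is normalized by dividing by the mean energy over all blocks; both choices, as well as the function names, are assumptions made for illustration and are not taken from Equations (5) and (6).

```python
def subband_energy(subband):
    """Mean squared magnitude of one complex sub-band (2D list of complex)."""
    values = [abs(z) ** 2 for row in subband for z in row]
    return sum(values) / len(values)


def block_high_freq_energy(subbands):
    """Average energy over the six directional sub-bands of one block."""
    if len(subbands) != 6:
        raise ValueError("expected six directional sub-bands")
    return sum(subband_energy(s) for s in subbands) / 6.0


def normalized_energies(per_block_subbands):
    """Each block's energy divided by the mean energy of all blocks
    (assumed normalization)."""
    energies = [block_high_freq_energy(s) for s in per_block_subbands]
    mean_energy = sum(energies) / len(energies)
    if mean_energy == 0:
        raise ValueError("all blocks have zero high frequency energy")
    return [e / mean_energy for e in energies]


# Two toy blocks: a nearly flat one and a strongly textured one.
flat = [[[1 + 0j, 1 + 0j]] for _ in range(6)]
textured = [[[3 + 4j, 3 + 4j]] for _ in range(6)]
print(normalized_energies([flat, textured]))
```

A block with a normalized energy above one is more textured than average, which is where a larger strength factor would be tolerated.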

According to [42], the final strength factor can be computed by exploiting visual saliency. First, saliency distance is calculated for each image block, as denoted by D, and the maximum saliency distance is determined from the image blocks, as denoted by . In this manner, the visual saliency based strength factor can be represented as , where .

In summary, the final watermark strength factor can be calculated as:

where denotes a positive constant, and is subtracted in this work to control the degree of image distortion after the watermark embedding. In this manner, the value of can be set to in this work.

On the basis of the above analysis, texture masking and visual saliency can be utilized to calculate the strength factor. This strength factor can adaptively change with the change of image texture and the degree of saliency. In this manner, the strength of the embedding can be controlled more appropriately, thus further improving watermarking performance.

3.3. Watermark Detection

The effect of attacks at the receiver can simply be modeled as an additive white Gaussian noise (AWGN) [16]. Furthermore, the complex wavelet coefficients of the low frequency sub-band can be modeled by a Gaussian distribution. The distribution of watermark information “1” or “0” can be represented as follows:

where , , is the variance of the noise in the related sub-band coefficients.

Complex wavelet coefficients are assumed to be independent and identically distributed in this work. Therefore, the distribution of these coefficients in a specific block with N coefficients for embedding “1” is:

Similarly, to embed “0”, we have:

According to the ML decision criterion, the watermark extraction process can be written as follows:

Thus, by substituting (11) and (12) in (13) and (14), we have:

Taking the logarithm of both sides gives:

where , .

Therefore, the watermark detection threshold can be expressed as:
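The maximum likelihood decision of this subsection can be illustrated by comparing the two Gaussian log-likelihoods directly instead of using a closed-form threshold. The sketch below rests on several assumptions that are not taken from the equations of this paper: host coefficients are i.i.d. Gaussian with mean `mu` and variance `sigma2`, both known to the decoder; bit “1” scales the coefficients by `alpha` and bit “0” by `1/alpha`; and the attack adds independent Gaussian noise of variance `noise_var`. All names are hypothetical.

```python
import math
import random


def gaussian_log_likelihood(samples, mean, var):
    """Log-likelihood of i.i.d. samples under a Gaussian N(mean, var)."""
    n = len(samples)
    sq = sum((y - mean) ** 2 for y in samples)
    return -0.5 * n * math.log(2.0 * math.pi * var) - sq / (2.0 * var)


def ml_decode(samples, mu, sigma2, alpha, noise_var):
    """Return the bit whose hypothesis gives the larger log-likelihood."""
    # Bit 1: y = alpha * x + n  ->  N(alpha*mu, alpha^2*sigma2 + noise_var)
    ll1 = gaussian_log_likelihood(
        samples, alpha * mu, alpha ** 2 * sigma2 + noise_var)
    # Bit 0: y = x / alpha + n  ->  N(mu/alpha, sigma2/alpha^2 + noise_var)
    ll0 = gaussian_log_likelihood(
        samples, mu / alpha, sigma2 / alpha ** 2 + noise_var)
    return 1 if ll1 > ll0 else 0


def simulate_block(bit, mu, sigma2, alpha, noise_var, n, rng):
    """Draw n host coefficients, embed one bit, then add AWGN."""
    scale = alpha if bit == 1 else 1.0 / alpha
    return [scale * rng.gauss(mu, math.sqrt(sigma2))
            + rng.gauss(0.0, math.sqrt(noise_var))
            for _ in range(n)]


rng = random.Random(0)
for bit in (0, 1):
    received = simulate_block(bit, 100.0, 25.0, 1.2, 4.0, 64, rng)
    print(bit, ml_decode(received, 100.0, 25.0, 1.2, 4.0))
```

Comparing log-likelihoods is equivalent to thresholding their ratio, so the sketch makes the same decision as a threshold test under the stated model.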

4. Experimental Results

4.1. Imperceptibility of Watermarking

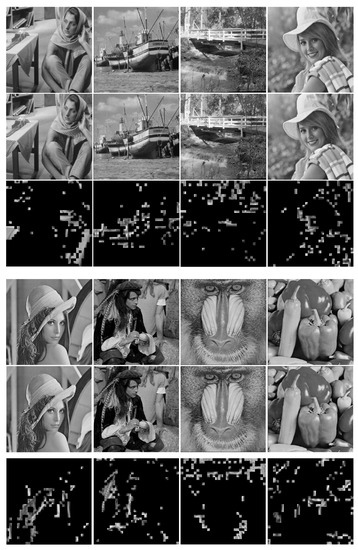

To assess the performance of the proposed watermarking method, experiments are conducted by using real images. In this study, we used eight natural images (Barbara, Boat, Bridge, Elaine, Lena, Man, Mandrill, and Peppers), each with a size of . The host images and their watermarked version with blocks and 128-bit message are shown in Figure 4. Throughout the experiments, three level DT-CWT was used to decompose each selected block, and the filters used were the near-symmetric 13, 19 tap filters and Q-shift 14, 14 tap filters. The watermark strength factor was set to according to Equation (8) in Section 3.2. For each image in Figure 4, the top image is the host image, the middle image the watermarked image, and the bottom image the difference image between the host image and the watermarked version.

Figure 4.

Host, watermarked, and difference images: Barbara, Boat, Bridge, Elaine, Lena, Man, Mandrill, and Peppers.

From Figure 4, the watermark imperceptibility requirement is satisfied. The proposed watermarking method provided an image dependent watermark with strong components in the complex part of the image, which is barely noticeable to the human eye. This scheme allowed a high watermark strength factor to be set while the visual quality of the watermarked image was kept at an acceptable level. Moreover, the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) [43] were used to evaluate the performance of the proposed watermarking method in an objective manner. The results are shown in Table 1, in which the watermarking evaluation results are satisfactory. Therefore, the embedded watermarks were perceptually invisible.

Table 1.

Evaluation results with different watermark lengths.
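For reference, the PSNR between a host image and its watermarked version follows the standard definition based on the mean squared error. The sketch below assumes 8-bit grayscale images stored as 2D lists, so the peak value is 255; the function names are illustrative. SSIM is omitted because it requires local window statistics beyond the scope of a short example.

```python
import math


def mean_squared_error(host, marked):
    """Mean squared pixel difference between two equally sized 2D images."""
    diffs = [(a - b) ** 2
             for row_a, row_b in zip(host, marked)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def psnr(host, marked, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mean_squared_error(host, marked)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


host = [[100, 100], [100, 100]]
marked = [[101, 99], [100, 100]]
print(round(psnr(host, marked), 2))
```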

4.2. Error Probability Analysis

The error probability in the presence of AWGN was derived as follows. Error occurred whenever watermark information “1” was embedded into the host image, while watermark information “0” was extracted at the decoder end, and vice versa. The error probability of the watermarking included these two errors.

According to Equation (17), the error probability of embedding watermark information “1” was:

where , , and . According to previous results [16], can be written as:

where , , , and denotes a Gamma function.

Meanwhile, the error probability of embedding watermark information “0” is:

According to [16], can be further written as:

where , , , $= \tau + \omega_1 N (1 - \alpha)^2 \mu^2$, $\xi(x) =$ .

The data bits “0” and “1” were assumed to be inserted in the original image with equal probabilities. On the basis of Equations (21) and (23), the total error probability can be written as:
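With equal priors for the two bit values, this total is the average of the two conditional error probabilities. The symbols $P_e$, $P_{e|1}$, and $P_{e|0}$ below are assumed names and are not necessarily the authors' notation:

```latex
P_e = \tfrac{1}{2}\, P_{e|1} + \tfrac{1}{2}\, P_{e|0}
```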

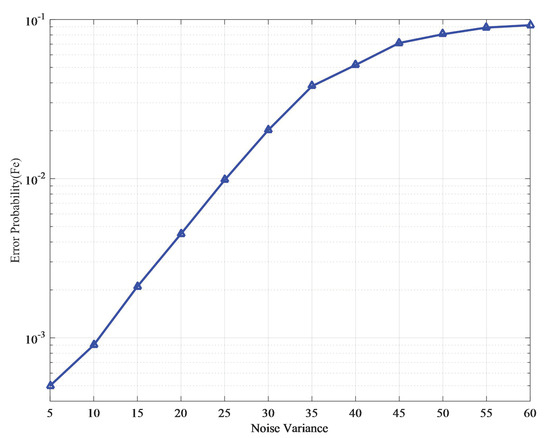

Figure 5 shows the error probability versus noise variance for the Lena image under AWGN attack. From Figure 5, although the error probability increased with the noise variance, the increase remained small as the noise attack strength grew.

Figure 5.

Error probability versus noise variance for AWGN attack.

4.3. Performance under Attacks

For testing robustness, several common attacks, as described in [16,28], were applied to the images watermarked by the proposed method. These attacks included common image processing attacks and geometric distortion attacks. In this study, the bit-error rate (BER) was used to evaluate the robustness of the watermarking under several intentional or unintentional attacks. To save space, the robustness of the proposed method was investigated only under AWGN, median filtering, Gaussian filtering, JPEG compression, scaling, rotation, and combinational attacks on the eight well known images (i.e., Barbara, Boat, Bridge, Elaine, Lena, Man, Mandrill, and Peppers).
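The BER used throughout this section is the fraction of watermark bits that are decoded incorrectly. A minimal sketch, with an illustrative function name:

```python
def bit_error_rate(embedded, extracted):
    """Fraction of positions where the extracted bit differs from the embedded one."""
    if len(embedded) != len(extracted):
        raise ValueError("bit sequences must have the same length")
    if not embedded:
        raise ValueError("bit sequences must not be empty")
    errors = sum(1 for a, b in zip(embedded, extracted) if a != b)
    return errors / len(embedded)


print(bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0]))  # 0.5
```

The tables that report BER (%) correspond to this value multiplied by 100.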

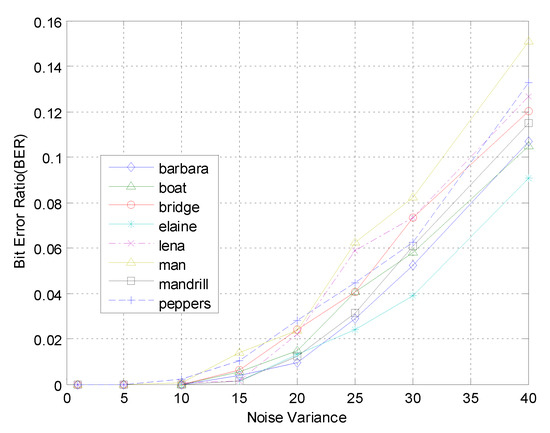

(1) AWGN attack:

Figure 6 shows the results of BER of various test images against AWGN attacks. When the noise variance was less than 10, BER was near zero. When noise variance was less than 33, the corresponding BER was near 0.1. Therefore, the proposed watermarking had good robustness against AWGN attacks.

Figure 6.

AWGN attack with different noise variances.
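An AWGN attack of a given noise variance can be simulated as in the sketch below. Rounding the noisy values and clipping them to the 8-bit range are assumptions about how the attacked image is stored; the function name and the `seed` parameter are illustrative.

```python
import random


def awgn_attack(image, variance, seed=None):
    """Add zero-mean Gaussian noise of the given variance to each pixel,
    then round and clip to the 8-bit range [0, 255]."""
    rng = random.Random(seed)
    std = variance ** 0.5
    return [[min(255, max(0, round(p + rng.gauss(0.0, std)))) for p in row]
            for row in image]


watermarked = [[10, 200], [255, 0]]
print(awgn_attack(watermarked, 10.0, seed=1))
```

Decoding the attacked image and computing the BER for a range of variances would yield a curve of the kind shown in Figure 6.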

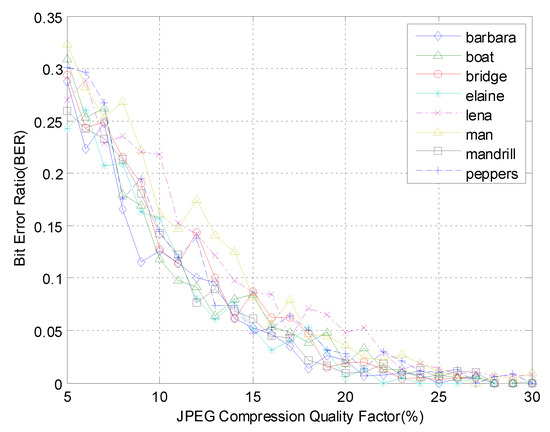

(2) JPEG compression attack:

Figure 7 shows the results of BER against JPEG compression attacks. The proposed watermarking remained robust even when the quality factor was very low: for a quality factor of five, the BER was less than 0.3. When the JPEG compression quality factor was greater than 25, the BER tended to zero. Therefore, the proposed watermarking algorithm was robust against JPEG compression attacks.

Figure 7.

JPEG attack for various test images with different quality factors.

(3) Scaling attack:

Table 2 shows the BER results under amplitude scaling attacks. The watermarking algorithm was robust to most scaling attacks. However, Table 2 also shows that when the scaling factor was equal to 0.7 or 1.1, BER had a comparatively large value. The reason is not yet clear, and this issue will be explored in our future work.

Table 2.

BER results of the extracted watermark under scaling attack.

(4) Rotation attack:

Table 3 shows the results of the BER of various test images against rotation attacks. The range of the rotation angle was . When the range of the rotation angle was , the range of the BER was . Among the values listed in Table 3, the maximum BER was , whereas most of the other BER values were small. The BER did not change markedly as the angle increased. Thus, the proposed embedding approach was robust against rotation attacks.

Table 3.

BER results of the extracted watermark under rotation attack.

(5) Gaussian filtering and median filtering attacks:

Table 4 shows the BER results against Gaussian filtering and median filtering attacks. The window sizes of Gaussian filtering and median filtering were , , and 7. The proposed method was highly robust against Gaussian filtering attacks. When the window size of the median filtering was , the proposed scheme remained robust. However, as the window size of the median filtering increased, the robustness of the watermarking decreased.

Table 4.

BER results of the extracted watermark under Gaussian filtering and median filtering attacks.

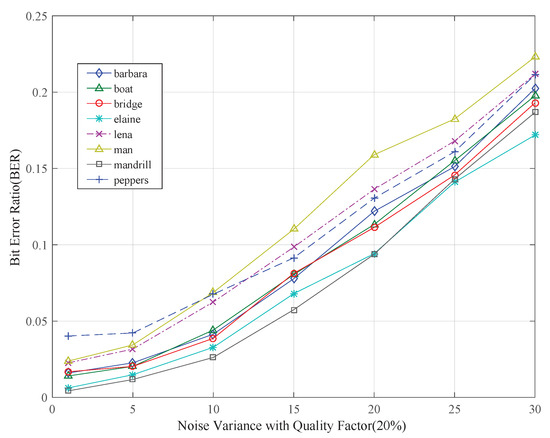

(6) Combinational attack:

Table 5 shows the BER results under the combinational attack for various distortions associated with JPEG compression with a quality factor of . The robustness of the proposed method against this kind of combinational attack was satisfactory. The result of BER against Gaussian noise attack combined with JPEG compression attack for different images is shown in Figure 8, which further confirmed that the proposed scheme had good robustness for this kind of combinational attack.

Table 5.

BER results under JPEG compression (quality factor = ) combined with other attacks.

Figure 8.

Results for JPEG compression (quality factor = ) attack combined with AWGN attack for various test images with different noise variances.

4.4. Comparison with Other Methods

In this part of the study, our method is compared with its most closely related competitors, particularly the methods reported in [16,28,44,45]. The methods in [16,44] were chosen on the basis of their similarity with the proposed watermarking. For example, these methods all use high entropy image blocks of an original image for watermark embedding. To ensure fairness in comparison, the message lengths and PSNR values used in our experiments were the same as those in the other works. The results in Table 6 correspond to a watermark length of 256 bits embedded into the Barbara, Boat, and Peppers images, with the PSNR of the watermarked image at 45 dB. The results of our method were better than those in the other works for most attacks. For instance, for geometric attacks, such as scaling and rotation attacks, the proposed method outperformed the above three watermarking methods. The main reason is that DT-CWT is approximately shift invariant under geometric transformations. However, the proposed method was slightly less effective than the methods in [28,44] under AWGN attacks. This problem will be investigated thoroughly by studying the statistical properties of DT-CWT coefficients and noise in our future work.

Table 6.

BER (%) results of the extracted watermark under some common attacks.

Table 7 and Table 8 show the BER results against the median filtering attack and the JPEG compression attack, respectively, for the proposed method and the methods in [28,44]; in both cases, the watermark length was 256 bits and the PSNR of the watermarked image was 45 dB. As shown in both tables, the proposed method had better results than the methods in [28,44].

Table 7.

BER (%) comparison of the recovered watermark under median filtering attack.

Table 8.

BER (%) comparison of the recovered watermark under JPEG compression attack.

Table 9 shows the BER results under scaling attack with a watermark length of 100 bits and a PSNR of the watermarked image of 45 dB. The proposed method outperformed the methods in [44,45] for most scaling attacks. As described in the third part of Section 4.3 (i.e., “scaling attack”), when the scaling factor was equal to 0.7 or 1.1, the robustness of the watermarking decreased dramatically. We will investigate this issue in our future work.

Table 9.

BER (%) comparison of the recovered watermark under scaling attack.

5. Conclusions

An image watermarking method was proposed in this study by using DT-CWT and the multiplicative strategy. In this approach, after partitioning a host image into non-overlapping blocks, the high entropy image blocks were selected for watermark embedding. Watermark data extraction was performed by using the MLE decision criterion, and the embedding factor was computed to improve the robustness of the watermarking by using the texture masking and visual saliency scheme. The performance of the proposed scheme was evaluated in terms of image quality and robustness. The experimental results demonstrated the effectiveness of the proposed method.

Author Contributions

J.L. conceived of the idea, designed the experiments, and wrote the manuscript; Y.R. helped to revise this manuscript; Y.H. helped to test and analyze the experimental data.

Funding

This work was supported by the Science and Technology Foundation of Jiangxi Provincial Education Department (Grant No. GJJ170922) and the Natural Science Foundation of Jiangxi (No. 20192BAB207013).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Asikuzzaman, M.; Pickering, M.R. An overview of digital video watermarking. IEEE Trans. Circuit. Syst. Video Technol. 2018, 28, 2131–2153. [Google Scholar] [CrossRef]

- Wang, Y.G.; Zhou, G.P.; Shi, Y.Q. Transportation spherical watermarking. IEEE Trans. Image Process. 2018, 27, 2063–2077. [Google Scholar] [CrossRef] [PubMed]

- Jang, B.-G.; Lee, S.-H.; Lee, Y.-S.; Kwon, K.-R. Biological viral infection watermarking architecture of MPEG/H.264/AVC/HEVC. Electronics 2019, 8, 889. [Google Scholar] [CrossRef]

- Guo, Y.F.; Au, O.C.; Wang, R.; Fang, L.; Cao, X.C. Halftone image watermarking by content aware double-sided embedding error diffusion. IEEE Trans. Image Process. 2018, 27, 3387–3402. [Google Scholar] [CrossRef]

- Ou, B.; Li, L.; Zhao, Y.; Ni, R. Efficient color image reversible data hiding based on channel–dependent payload partition and adaptive embedding. Signal Process. 2015, 108, 642–657. [Google Scholar] [CrossRef]

- Cox, I.J.; Kilian, J.; Leighton, T. Secure spread spectrum watermarking for multimedia. IEEE Trans. Image Process. 1997, 6, 1673–1687. [Google Scholar] [CrossRef]

- Liu, X.; Han, G.; Wu, J.; Shao, Z.; Coatrieux, G.; Shu, H. Fractional krawtchouk transform with an application to image watermarking. IEEE Trans. Signal Process. 2017, 65, 1894–1908. [Google Scholar] [CrossRef]

- Ahmaderaghi, B.; Kurugollu, F.; Rincon, J.M.D.; Bouridane, A. Blind image watermark detection algorithm based on discrete shearlet transform using statistical decision theory. IEEE Trans. Comput. Imaging 2018, 4, 46–59. [Google Scholar] [CrossRef]

- Singh, D.; Singh, S.K. DCT based efficient fragile watermarking scheme for image authentication and restoration. Multimed. Tools Appl. 2017, 76, 953–977. [Google Scholar] [CrossRef]

- Rahman, M.M.; Ahmad, M.O.; Swamy, M.N.S. A new statistical detector for DWT–based additive image watermarking using the Gaussian–Hermite expansion. IEEE Trans. Image Process. 2009, 18, 1782–1796. [Google Scholar] [CrossRef]

- Sadreazami, H.; Ahmad, M.O.; Swamy, M.N.S. A study of multiplicative watermark detection in the contourlet domain using alphastable distributions. IEEE Trans. Image Process. 2014, 23, 4348–4360. [Google Scholar] [CrossRef] [PubMed]

- Amini, M.; Ahmad, M.O.; Swamy, M.N.S. A robust multibit multiplicative watermark decoder using a vector based hidden markov model in wavelet domain. IEEE Trans. Circuit. Syst. Video Technol. 2018, 28, 402–413. [Google Scholar] [CrossRef]

- Hwang, M.J.; Lee, J.S.; Lee, M.S.; Kang, H.G. SVD based adaptive QIM watermarking on stereo audio signals. IEEE Trans. Multimed. 2018, 20, 45–54. [Google Scholar] [CrossRef]

- Zareian, M.; Tohidypour, H.R. A novel gain invariant quantization–based watermarking approach. IEEE Trans. Inf. Forensics Sec. 2014, 9, 1804–1813. [Google Scholar] [CrossRef]

- Sadreazami, H.; Amini, A. A robust spread spectrum based image watermarking in ridgelet domain. Int. J. Electron. Commun. 2012, 66, 364–371. [Google Scholar] [CrossRef]

- Akhaee, M.A.; Sahraeian, S.M.E.; Sankur, B.; Marvasti, F. Robust Scaling–based image watermarking using maximum–likelihood decoder with optimum strength factor. IEEE Trans. Multimed. 2009, 11, 822–833. [Google Scholar] [CrossRef]

- Liu, J.H.; She, K. A hybrid approach of DWT and DCT for rational dither modulation watermarking. Circuits Syst. Signal Process. 2012, 31, 797–811. [Google Scholar] [CrossRef]

- You, X.; Du, L.; Cheung, Y.M.; Chen, Q.H. A blind watermarking scheme using new nontensor product wavelet filter banks. IEEE Trans. Image Process. 2010, 19, 3271–3284. [Google Scholar] [CrossRef]

- Ramanjaneyulu, K.; Rajarajeswari, K. Wavelet based oblivious image watermarking scheme using genetic algorithm. IET Image Process. 2012, 6, 364–373. [Google Scholar] [CrossRef]

- Barni, M.; Bartolini, F.; Piva, A. Improved wavelet–based watermarking through pixel–wise masking. IEEE Trans. Image Process. 2001, 10, 783–791. [Google Scholar] [CrossRef]

- Bhowmik, D.; Abhayaratne, C. Quality scalability aware watermarking for visual content. IEEE Trans. Image Process. 2016, 25, 5158–5172. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Lian, S.; Shi, Y.Q. Hybrid multiplicative multiwatermarking in DWT domain. Multidimens. Syst. Signal Process. 2017, 28, 617–636. [Google Scholar] [CrossRef]

- Xiong, L.; Xu, Z.; Shi, Y.Q. An integer wavelet transform based scheme for reversible data hiding in encrypted images. Multidimens. Syst. Signal Process. 2018, 29, 1191–1202. [Google Scholar] [CrossRef]

- Yu, X.Y.; Wang, C.Y.; Zhou, X. A hybrid transforms based robust video zero-watermarking algorithm for resisting high efficiency video coding compression. IEEE Access 2019, 7, 115708–115724.

- Langelaar, G.C.; Setyawan, I.; Lagendijk, R.L. Watermarking digital image and video data: A state-of-the-art overview. IEEE Signal Process. Mag. 2000, 17, 20–26.

- Cheng, Q.; Huang, T.S. Robust optimum detection of transform domain multiplicative watermarks. IEEE Trans. Signal Process. 2003, 51, 906–924.

- Do, M.N.; Vetterli, M. The contourlet transform: an efficient directional multi-resolution image processing. IEEE Trans. Image Process. 2005, 14, 2091–2106.

- Kalantari, N.K.; Ahadi, S.M.; Vafadust, M. A robust image watermarking in the ridgelet domain using universally optimum decoder. IEEE Trans. Circuits Syst. Video Technol. 2010, 20, 396–406.

- Demanet, L.; Ying, L.X. Wave atoms and time upscaling of wave equations. Numer. Math. 2009, 13, 1–71.

- Sadreazami, H.; Ahmad, O.; Swamy, M.N.S. Multiplicative watermark decoder in contourlet domain using the normal inverse Gaussian distribution. IEEE Trans. Multimed. 2016, 18, 196–207.

- Wang, F.; Zhao, X.L.; Ng, M. Multiplicative noise and blur removal by framelet decomposition and L1 based L-curve method. IEEE Trans. Image Process. 2016, 25, 4222–4232.

- Selesnick, I.W.; Baraniuk, R.G.; Kingsbury, N.G. The dual-tree complex wavelet transform: A coherent framework for multi-scale signal and image processing. IEEE Signal Process. Mag. 2005, 22, 123–151.

- Liu, J.H.; Xu, Y.Y.; Wang, S.; Zhu, C. Complex wavelet domain image watermarking algorithm using L1 norm function-based quantization. Circuits Syst. Signal Process. 2018, 37, 1268–1286.

- Celik, T.; Ma, K.K. Unsupervised change detection for satellite images using dual-tree complex wavelet transform. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1199–1210.

- Watson, A.B.; Yang, G.Y.; Solomon, J.A.; Villasenor, J. Visibility of wavelet quantization noise. IEEE Trans. Image Process. 1997, 6, 1164–1175.

- Yang, X.; Lin, W.; Liu, Z.; Ongg, Z.; Yao, S. Motion-compensated residue preprocessing in video coding based on just-noticeable-distortion profile. IEEE Trans. Circuits Syst. Video Technol. 2005, 15, 745–752.

- Wang, C.; Zhang, T.; Wan, W.; Han, X.; Xu, M. A novel STDM watermarking using visual saliency based JND model. Information 2017, 8, 103.

- Wang, C.; Han, X.; Wan, W.; Li, J.; Sun, J.; Xu, M. Visual saliency based just noticeable difference estimation in DWT domain. Information 2018, 9, 178.

- Akhaee, M.A.; Sahraeian, S.M.; Marvasti, F. Contourlet based image watermarking using optimum detector in a noisy environment. IEEE Trans. Image Process. 2010, 19, 967–980.

- Papakostas, G.A.; Tsougenis, E.D.; Koulouriotis, D.E. Fuzzy knowledge based adaptive image watermarking by the method of moments. Complex Intell. Syst. 2016, 2, 205–220.

- Borji, A.; Itti, L. State-of-the-art in visual attention modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 185–207.

- Borji, A.; Sihite, D.N.; Itti, L. Quantitative analysis of human model agreement in visual saliency modeling: A comparative study. IEEE Trans. Image Process. 2013, 22, 55–69.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R. Image quality assessment from error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yadav, N.; Singh, K. Robust image-adaptive watermarking using an adjustable dynamic strength factor. Signal Image Video Process. 2015, 9, 1531–1542.

- Tsougenis, E.D.; Papakostas, G.A.; Koulouriotis, D.E.; Tourassis, V.D. Towards adaptivity of image watermarking in polar harmonic transforms domain. Opt. Laser Technol. 2013, 54, 84–97.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).