3.1. Look-Ahead EDF

The laEDF algorithm is shown in Algorithm 1. Its major step is the defer() function, which looks at the interval until the next task deadline and tries to push as much work as it can beyond that deadline. It then computes the minimum amount of work that must be done during this interval to meet all future deadlines. To calculate this amount, defer() processes the periodic tasks in reverse EDF order and, for each task $T_i$, checks whether its remaining work $c^{\mathrm{left}}_i$ can be executed between the earliest deadline ($D_n$) and the deadline of the task ($D_i$). If $x$, defined below, is 0 (i.e., the second argument of the max function is less than or equal to 0), the remaining work of the task can be performed entirely within this interval. In Algorithm 1, the utilization excluding that of the periodic task $T_i$, whose WCET and period are $C_i$ and $P_i$, is calculated by

$$U = U - \frac{C_i}{P_i}.$$

The amount of work of task $T_i$ that must be done until the earliest deadline is defined as

$$x = \max\left(0,\; c^{\mathrm{left}}_i - (1 - U)(D_i - D_n)\right),$$

where $D_n$ is the earliest deadline and $(1 - U)$ is the utilization that can be allocated to task $T_i$. Then, $U$ is updated to reflect the actual utilization of the task for the time after $D_n$:

$$U = U + \frac{c^{\mathrm{left}}_i - x}{D_i - D_n}.$$

This calculation is repeated for all tasks.

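Algorithm 1. Look-ahead EDF (laEDF) algorithm.

- 1: function select_frequency($x$)
- 2: use the lowest frequency $f_i \in \{f_1, \dots, f_m \mid f_1 < \dots < f_m\}$ such that $x \le f_i / f_m$
- 3: end function
- 4:
- 5: upon task_release($T_i$)
- 6: set $c^{\mathrm{left}}_i = C_i$
- 7: defer()
- 8:
- 9: upon task_complete($T_i$)
- 10: set $c^{\mathrm{left}}_i = 0$
- 11: defer()
- 12:
- 13: during task_execution($T_i$)
- 14: decrement $c^{\mathrm{left}}_i$
- 15:
- 16: function defer()
- 17: set $U = C_1/P_1 + \dots + C_n/P_n$
- 18: set $s = 0$
- 19: for $i = 1$ to $n$ do, $T_i \in \{T_1, \dots, T_n \mid D_1 \ge \dots \ge D_n\}$
- 20: set $U = U - C_i/P_i$
- 21: set $x = \max(0, c^{\mathrm{left}}_i - (1 - U)(D_i - D_n))$
- 22: set $U = U + (c^{\mathrm{left}}_i - x)/(D_i - D_n)$
- 23: set $s = s + x$
- 24: end for
- 25: select_frequency($s/(D_n - t)$)
- 26: end function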

In Equation (4), the processor utilization between $D_n$ and $D_i$ is updated with the amount of work that is not performed before $D_n$ (i.e., $c^{\mathrm{left}}_i - x$). The normalized operating frequency at the current time $t$ is computed as

$$f = \frac{s}{D_n - t},$$

where $s$ is the sum of the work that must be completed by the earliest deadline $D_n$.
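The defer() loop and the frequency formula can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the original implementation: the `PeriodicTask` record and its field names are hypothetical, the task parameters in the usage lines are illustrative only (they are not the values of Figure 1), and the branch for $D_i = D_n$ is our own guard, since line 22 of Algorithm 1 would otherwise divide by zero for the earliest-deadline task.

```python
from dataclasses import dataclass


@dataclass
class PeriodicTask:
    wcet: float      # C_i: worst-case execution time
    period: float    # P_i
    deadline: float  # D_i: absolute deadline of the current (or last) job
    c_left: float    # remaining worst-case work of the current job


def la_edf_defer(tasks: list[PeriodicTask], t: float) -> tuple[float, float]:
    """Return (s, s / (D_n - t)) as computed by defer() in Algorithm 1."""
    u = sum(task.wcet / task.period for task in tasks)  # line 17
    s = 0.0                                             # line 18
    d_n = min(task.deadline for task in tasks)          # earliest deadline D_n
    # Lines 19-24: visit tasks in reverse EDF order (latest deadline first).
    for task in sorted(tasks, key=lambda k: k.deadline, reverse=True):
        u -= task.wcet / task.period                    # line 20
        if task.deadline > d_n:
            # Line 21: work that cannot fit between D_n and D_i at rate (1 - U).
            x = max(0.0, task.c_left - (1.0 - u) * (task.deadline - d_n))
            # Line 22: charge the deferred part (c_left - x) to (D_n, D_i].
            u += (task.c_left - x) / (task.deadline - d_n)
        else:
            # Hypothetical guard: with D_i == D_n nothing can be deferred.
            x = task.c_left
        s += x                                          # line 23
    return s, s / (d_n - t)                             # argument of line 25


# Illustrative (hypothetical) task set, evaluated at t = 1.
tasks = [
    PeriodicTask(wcet=1, period=2, deadline=2, c_left=0),
    PeriodicTask(wcet=1, period=5, deadline=5, c_left=1),
    PeriodicTask(wcet=2, period=10, deadline=10, c_left=2),
]
s, speed = la_edf_defer(tasks, t=1)
print(round(s, 3), round(speed, 3))  # 0.25 0.25
```

Visiting the latest-deadline task first lets each later task see how much of the interval after $D_n$ is already committed, which is why the loop order matters.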

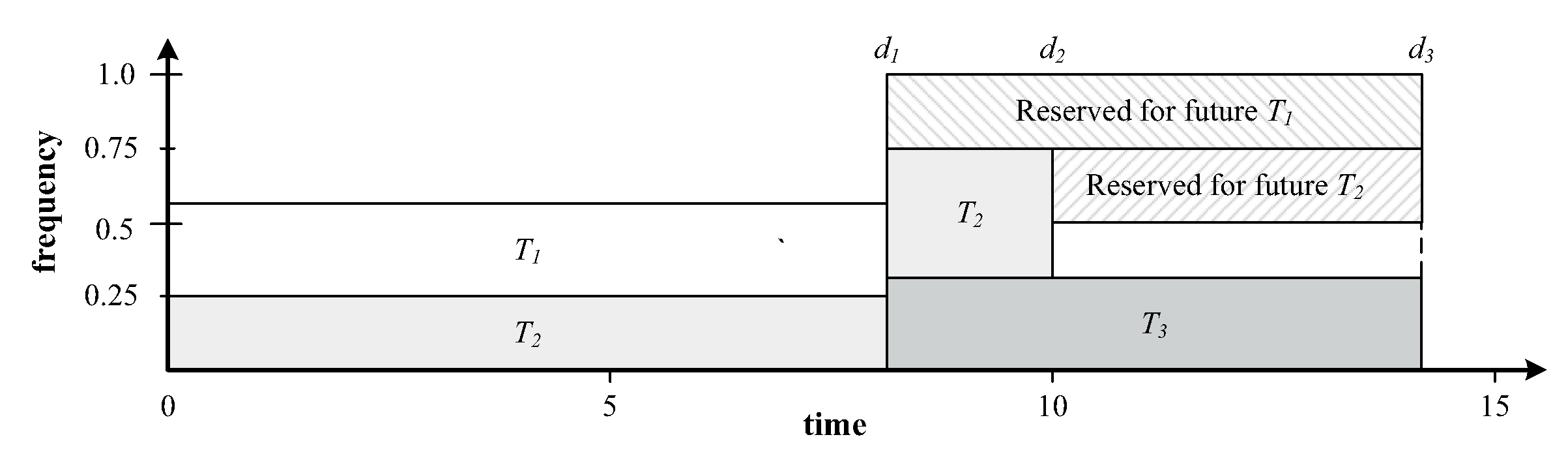

Figure 1 shows an example of the laEDF algorithm with three periodic tasks, $T_1$, $T_2$, and $T_3$, whose deadlines are $D_1$, $D_2$, and $D_3$, respectively, with $D_1 < D_2 < D_3$. The processor is assumed to support three normalized, discrete frequencies (0.5, 0.75, and 1.0). The goal of the algorithm is to defer work beyond the earliest deadline in the system ($D_1$) so that the processor can operate at a low frequency. The algorithm reserves time in the schedule for the worst-case execution of each task, starting with the task with the latest deadline, $T_3$ (i.e., in reverse EDF order). It spreads $T_3$'s work between the earliest deadline ($D_1$) and its own deadline $D_3$ ($x$ of $T_3$ is 0), while reserving a constant capacity for future invocations of the other tasks. The algorithm repeats this step for $T_2$, which cannot fit entirely between $D_1$ and $D_2$ ($x$ of $T_2$ > 0) after $T_3$'s work has been allocated and capacity has been reserved for future invocations of $T_1$. The remaining work of $T_2$ and all of $T_1$'s work are allotted before $D_1$. Therefore, the minimum normalized frequency required to meet all periodic deadlines is 0.55, and the lowest available frequency that satisfies it, 0.75, is applied at the current time.
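Line 2 of Algorithm 1 (select_frequency) can be sketched as below, assuming the normalized levels of the Figure 1 example. Returning $f_m$ when even the highest level is insufficient ($x > 1$) is our own choice; the pseudocode does not specify that case.

```python
def select_frequency(x: float, freqs: list[float]) -> float:
    """Lowest frequency f_i with x <= f_i / f_m (line 2 of Algorithm 1)."""
    f_m = max(freqs)
    for f in sorted(freqs):
        if x <= f / f_m:
            return f
    # Assumption: if x > 1 no level suffices, so run at the highest one.
    return f_m


levels = [0.5, 0.75, 1.0]  # normalized frequencies of the Figure 1 example
print(select_frequency(0.55, levels))  # 0.75
```

Because the levels are discrete, the required 0.55 is rounded up to the next available level rather than to the nearest one.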

3.2. The SSML Algorithm

The SSML algorithm exploits the fact that the laEDF algorithm defers as much periodic work as possible beyond the earliest deadline, lowering the processor frequency while still meeting the periodic tasks' deadlines. In particular, the proposed algorithm uses the amount of work that laEDF calculates must be done up to the earliest deadline to satisfy all deadlines. From the current time, the deadline of the earliest periodic task, and the amount of periodic work to be done until that deadline, SSML calculates the time that can be allotted to an aperiodic task. The SSML algorithm is shown in Algorithm 2.

Algorithm 2. SSML algorithm.

- 1: upon task_release($T_i$)
- 2: if $T_i$ is a periodic task
- 3: set $c^{\mathrm{left}}_i = C_i$
- 4: if there is an aperiodic task in the ready queue
- 5: defer();
- 6:
- 7: upon task_complete($T_i$)
- 8: if $T_i$ is a periodic task
- 9: set $c^{\mathrm{left}}_i = 0$
- 10: if there is an aperiodic task in the ready queue
- 11: defer();
- 12:
- 13: during task_execution($T_i$)
- 14: if $T_i$ is a periodic task
- 15: decrement $c^{\mathrm{left}}_i$
- 16: if $T_i$ is an aperiodic task
- 17: decrement $slack$
- 18: if $slack \le 0$
- 19: defer();
- 20:
- 21: function defer()
- 22: set $U = C_1/P_1 + \dots + C_n/P_n$ /* n is the number of periodic tasks */
- 23: set $s = 0$
- 24: set …
- 25: for $i = 1$ to $n$ do, $T_i \in \{T_1, \dots, T_n \mid D_1 \ge \dots \ge D_n\}$
- 26: set $U = U - C_i/P_i$
- 27: set $x = \max(0, \dots)$
- 28: set $U = U + (c^{\mathrm{left}}_i - x)/(D_i - D_n)$
- 29: set $s = s + x$
- 30: end for
- 31: $slack = D_n - t - s$; /* t is the current time */
- 32: set_deadline();
- 33: end function
- 34:
- 35: function set_deadline()
- 36: if $slack > 0$
- 37: set the aperiodic task as the highest priority
- 38: else
- 39: set the aperiodic task as low priority
- 40: end function

In lines 1–5, task_release() is called when a new task is released. For a periodic task, $c^{\mathrm{left}}_i$ is set to the WCET of the task, because the actual execution time of the task is unknown. Then, if there is an aperiodic task in the ready queue, defer() is called to calculate the slack. In lines 7–11, task_complete() is called when a task completes: $c^{\mathrm{left}}_i$ of a periodic task becomes 0, and, if there is an aperiodic task in the ready queue, defer() is called to check the time available to the aperiodic task. In line 15, $c^{\mathrm{left}}_i$ is decremented as a periodic task executes; if the executing task is an aperiodic task, the slack is decremented (line 17). If the slack is less than or equal to 0 (lines 18–19), there is no time left to allocate to the aperiodic task, so defer() is called to lower the aperiodic task's priority.

The defer() function in lines 21–33 computes the amount of periodic work that must be done by the earliest deadline. In line 22, it calculates the total utilization of the periodic tasks. Lines 25–30 then process the tasks in reverse EDF order. In line 26, the utilization of the $i$-th periodic task is subtracted. Line 27 computes the amount of the periodic task's work that must be done by the earliest deadline. Compared with Algorithm 1, the second argument of the max function uses a different term, so that as much processor time as possible is allocated to the aperiodic task while the deadlines of the periodic tasks are still met. In line 28, the utilization between $D_n$ and $D_i$ is updated with the work of the $i$-th task that is not performed before $D_n$ (i.e., $c^{\mathrm{left}}_i - x$), and in line 29, $s$, the amount of periodic work to be performed up to $D_n$, is accumulated by adding $x$. Finally, line 31 calculates the slack available to the aperiodic task as

$$slack = D_n - t - s,$$

where $t$ is the current time.

Based on the slack, set_deadline() is called to adjust the deadline of the aperiodic task. In lines 35–40, the algorithm sets the aperiodic task's deadline according to the slack. If $slack > 0$, the aperiodic task is assigned the highest priority to reduce its response time. Otherwise, if $slack \le 0$, there is no spare time to allocate to the aperiodic task, so its priority is set lower than that of the periodic tasks to meet their deadlines.
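A minimal Python sketch of the SSML defer() and set_deadline() functions follows. It rests on assumptions that we state plainly: the contents of lines 24 and 27 of Algorithm 2 are not legible here, so we assume that line 24 saves the total periodic utilization as $U_p$ and that line 27 uses $(U_p - U)$ where Algorithm 1 uses $(1 - U)$. We chose this form only because, together with an assumed task set (periods 2, 5, and 10; WCETs 1, 1, and 2, which give the stated utilization of 0.9) and assumed job states, it reproduces the defer() results reported for Figure 3 in Section 3.3 (0.8, 1.8, 1.8, and 0.9). The record type, its field names, and the `state` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PeriodicTask:
    wcet: float      # C_i
    period: float    # P_i
    deadline: float  # D_i: absolute deadline of the current (or last) job
    c_left: float    # remaining worst-case work of the current job


def ssml_defer(tasks: list[PeriodicTask], t: float) -> tuple[float, float]:
    """Return (s, slack) at the current time t."""
    u = sum(task.wcet / task.period for task in tasks)  # line 22
    s = 0.0
    u_p = u  # ASSUMPTION: line 24 keeps the total periodic utilization
    d_n = min(task.deadline for task in tasks)          # earliest deadline D_n
    for task in sorted(tasks, key=lambda k: k.deadline, reverse=True):
        u -= task.wcet / task.period                    # line 26
        if task.deadline > d_n:
            # ASSUMPTION: (u_p - u) replaces laEDF's (1 - u) in line 27.
            x = max(0.0, task.c_left - (u_p - u) * (task.deadline - d_n))
            u += (task.c_left - x) / (task.deadline - d_n)  # line 28
        else:
            x = task.c_left  # guard for D_i == D_n (avoids division by zero)
        s += x                                          # line 29
    return s, d_n - t - s                               # line 31: slack


def set_deadline(slack: float) -> str:
    """Lines 35-40: priority given to the waiting aperiodic job."""
    return "highest" if slack > 0 else "low"


def state(d1, c1, d2, c2, d3, c3):
    """ASSUMED task set; arguments are (D_i, c_left_i) for the three tasks."""
    return [PeriodicTask(1, 2, d1, c1), PeriodicTask(1, 5, d2, c2),
            PeriodicTask(2, 10, d3, c3)]


# ASSUMED job states at the four defer() calls of Figures 3a-3d.
for t, tasks in [(1, state(2, 0, 5, 1, 10, 2)),
                 (10, state(12, 1, 15, 1, 20, 2)),
                 (12, state(14, 1, 15, 0.2, 20, 2)),
                 (14, state(16, 1, 15, 0, 20, 1.4))]:
    s, slack = ssml_defer(tasks, t)
    print(t, round(s, 3), round(slack, 3), set_deadline(slack))
# 1 0.8 0.2 highest
# 10 1.8 0.2 highest
# 12 1.8 0.2 highest
# 14 0.9 0.1 highest
```

Matching the reported numbers supports, but does not prove, this reading of lines 24 and 27; the states at $t = 12$ and $t = 14$ are themselves derived from an assumed schedule in which each job runs for its WCET.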

3.3. Illustrative Example

In this section, an example of TBS and SSML is described. Figure 2a,b show the scheduling results of TBS and SSML, respectively. We assume three periodic tasks ($T_1$, $T_2$, and $T_3$) and an aperiodic task. The periodic tasks $T_1$, $T_2$, and $T_3$ have periods $P_1$, $P_2$, and $P_3$ and execution times $C_1$, $C_2$, and $C_3$, respectively, so that the total CPU utilization of the three periodic tasks, $\sum_{i=1}^{3} C_i/P_i$, is 0.9.

The CPU utilization available to the aperiodic server is therefore $U_s = 1 - 0.9 = 0.1$. In TBS, when the $k$-th aperiodic request arrives at time $r_k$, its deadline is defined as

$$d_k = \max(r_k, d_{k-1}) + \frac{C_k}{U_s},$$

where $C_k$ is the WCET of the request, $U_s$ is the server utilization, and $d_0 = 0$. In the example, two aperiodic jobs arrive at times 1 and 10, and their actual execution times are 0.2 and 0.5, respectively. Each request is inserted into the ready queue of the system and scheduled by EDF together with the periodic instances. Taking the maximum of $r_k$ and $d_{k-1}$ keeps track of the bandwidth already assigned to previous requests.
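The TBS deadline rule above can be sketched as follows, assuming $d_0 = 0$ and $U_s = 0.1$. The WCETs passed in the usage lines (0.5 for each request) are hypothetical placeholders, since the text gives only the actual execution times; the function name is likewise ours.

```python
def tbs_deadlines(requests: list[tuple[float, float]], u_s: float) -> list[float]:
    """Total Bandwidth Server: d_k = max(r_k, d_{k-1}) + C_k / U_s.

    `requests` holds (r_k, C_k) pairs in arrival order; d_0 is taken as 0.
    """
    deadlines = []
    d_prev = 0.0
    for r_k, c_k in requests:
        # Starting from d_{k-1} preserves the bandwidth already granted.
        d_k = max(r_k, d_prev) + c_k / u_s
        deadlines.append(d_k)
        d_prev = d_k
    return deadlines


# Arrivals at t = 1 and t = 10 as in the example; WCETs of 0.5 are hypothetical.
print([round(d, 3) for d in tbs_deadlines([(1, 0.5), (10, 0.5)], u_s=0.1)])  # [6.0, 15.0]
# Back-to-back arrivals show the max() term: the second deadline starts from d_1.
print([round(d, 3) for d in tbs_deadlines([(1, 0.5), (2, 0.5)], u_s=0.1)])  # [6.0, 11.0]
```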

Figure 2a shows an example of the TBS scheduling result. The first aperiodic request arrives at $t = 1$ and is assigned the deadline

$$d_1 = \max(r_1, d_0) + \frac{C_1}{U_s}.$$

Since $d_1$ is not the earliest deadline until $t = \dots$, the aperiodic job cannot obtain the processor. At $t = \dots$, however, there are no periodic tasks in the ready queue of the system, so the aperiodic job starts executing and finishes at $t = \dots$. The second aperiodic request arrives at $t = 10$. By the definition of TBS, $d_2$ is calculated as

$$d_2 = \max(r_2, d_1) + \frac{C_2}{U_s}.$$

Again, since $d_2$ is not the earliest deadline until $t = \dots$, the aperiodic job cannot obtain the processor. At $t = \dots$, there are no periodic tasks in the ready queue of the system, so the job starts executing and finishes at $t = \dots$, because its actual execution time is 0.5. Consequently, the response times of the two aperiodic jobs under TBS are … and …, respectively.

In contrast, Figure 2b shows that SSML drastically reduces the response times of the aperiodic jobs. When the first aperiodic job arrives at $t = 1$, the SSML algorithm calculates the slack.

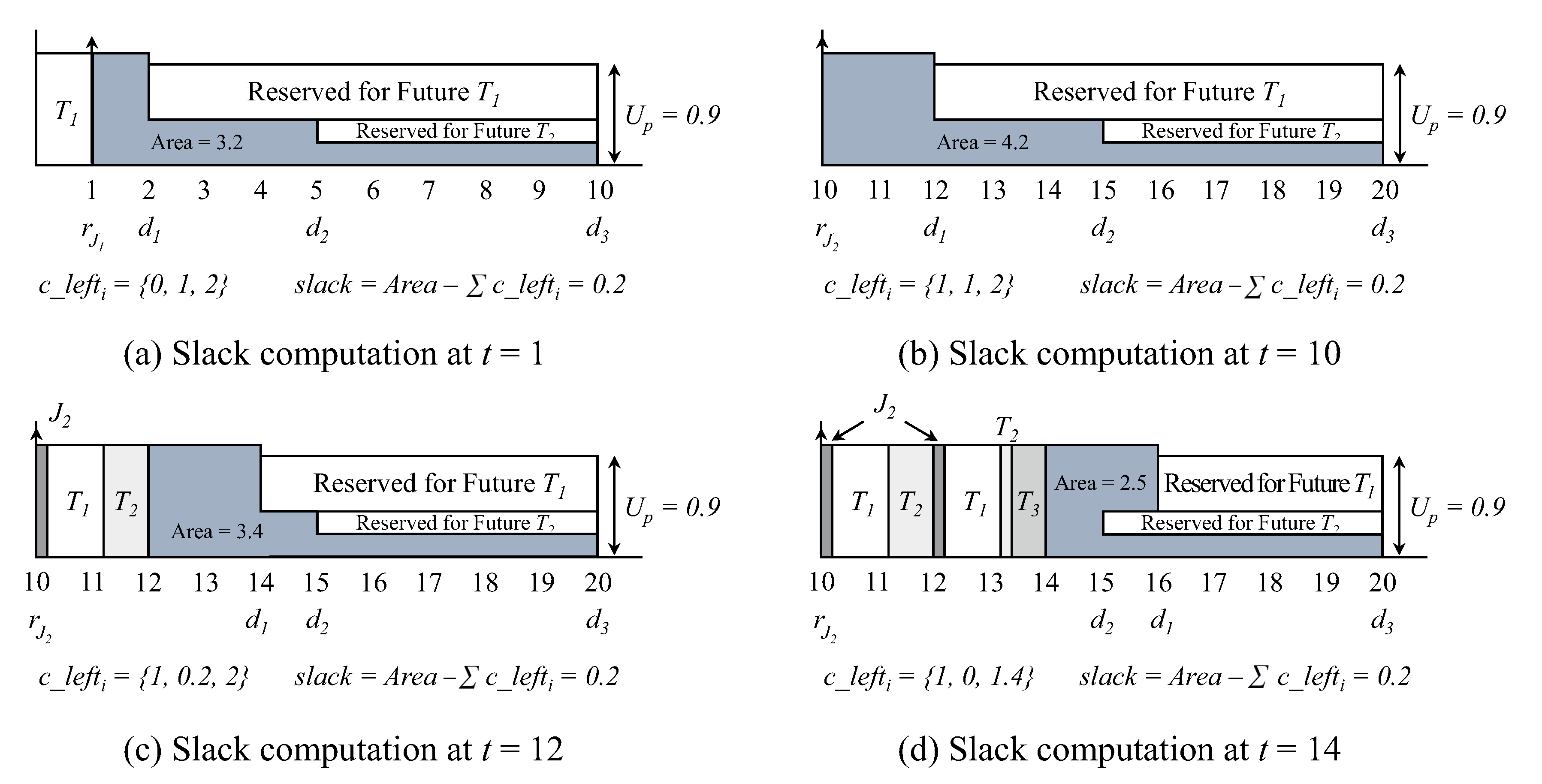

Figure 3a depicts the result of the defer() function. At $t = 1$, $c^{\mathrm{left}}_1$, $c^{\mathrm{left}}_2$, and $c^{\mathrm{left}}_3$ are 0, 1, and 2, respectively. According to defer(), the periodic work that must be done by the earliest periodic deadline ($D_n$) is 0.8. By line 31 of Algorithm 2, the slack is therefore 0.2.

Since the slack is greater than zero, the aperiodic job is assigned the highest priority and is therefore executed first. Because the actual execution time of the first aperiodic job is 0.2, it completes at $t = 1.2$, so its response time is $1.2 - 1 = 0.2$. The subsequent periodic tasks are scheduled by EDF. The second aperiodic job arrives at $t = 10$, and Figure 3b shows the result of defer() at that time. The values of $c^{\mathrm{left}}_i$ for the three periodic tasks are 1, 1, and 2, respectively. defer() shows that the periodic workload to be performed until $D_n$ (i.e., the earliest deadline) is 1.8, so the slack is 0.2.

Therefore, the aperiodic job executes for 0.2. Since the slack is exhausted at $t = 10.2$, the periodic task with the earliest deadline is executed, and periodic tasks continue to execute until $t = 12$. Figure 3c shows the result of defer() at $t = 12$: the earliest periodic deadline is 14, and the amount of periodic work to be performed by then is 1.8. Therefore, the aperiodic job executes for 0.2 (i.e., from $t = 12$ to $t = 12.2$), after which the periodic task with the earliest deadline is executed again. Figure 3d shows the result of defer() at time 14. In this case, the earliest periodic deadline is 15 and the work to be performed by then is 0.9, so the aperiodic job uses the slack of 0.1. Because the job has already executed for $0.2 + 0.2 = 0.4$ of its actual execution time of 0.5, this last 0.1 completes it at $t = 14.1$. Therefore, the response time of the second aperiodic job is $14.1 - 10 = 4.1$. Consequently, the response times under SSML are 0.2 and 4.1, respectively.
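The response-time arithmetic of this example can be checked with the short sketch below, which serves an aperiodic job only inside a list of slack windows. The windows (start time, length) are read off the walkthrough above; the start time 12 of the second window for the second job is derived from $slack = D_n - t - s$ ($14 - 1.8 - 0.2 = 12$). The function name is hypothetical.

```python
def serve_in_slack(arrival: float, exec_time: float,
                   windows: list[tuple[float, float]]) -> tuple[float, float]:
    """Run a job only inside (start, length) slack windows taken in order.

    Returns (completion time, response time).
    """
    remaining = exec_time
    for start, length in windows:
        used = min(remaining, length)
        remaining -= used
        if remaining <= 1e-12:  # tolerate floating-point residue
            finish = start + used
            return finish, finish - arrival
    raise ValueError("the slack windows are too short to complete the job")


first = serve_in_slack(1, 0.2, [(1, 0.2)])
second = serve_in_slack(10, 0.5, [(10, 0.2), (12, 0.2), (14, 0.1)])
print([round(v, 3) for v in first], [round(v, 3) for v in second])
# [1.2, 0.2] [14.1, 4.1]
```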