Applying the Haar-cascade Algorithm for Detecting Safety Equipment in Safety Management Systems for Multiple Working Environments

Abstract

1. Introduction

2. Materials and Methods

2.1. Related Work on Machine Learning Algorithms

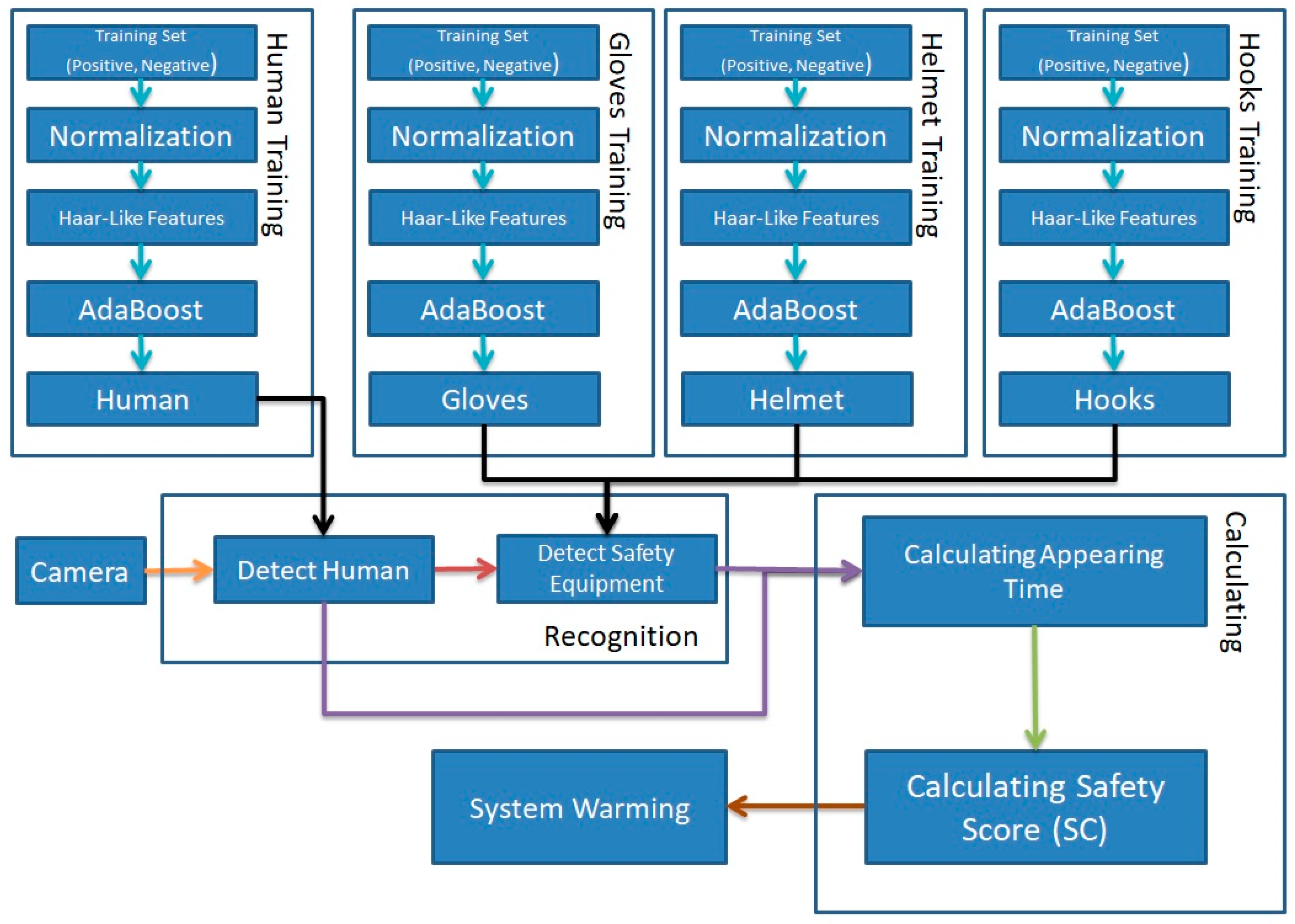

2.2. Applying Haar-Cascade in Chemical Plant Safety System

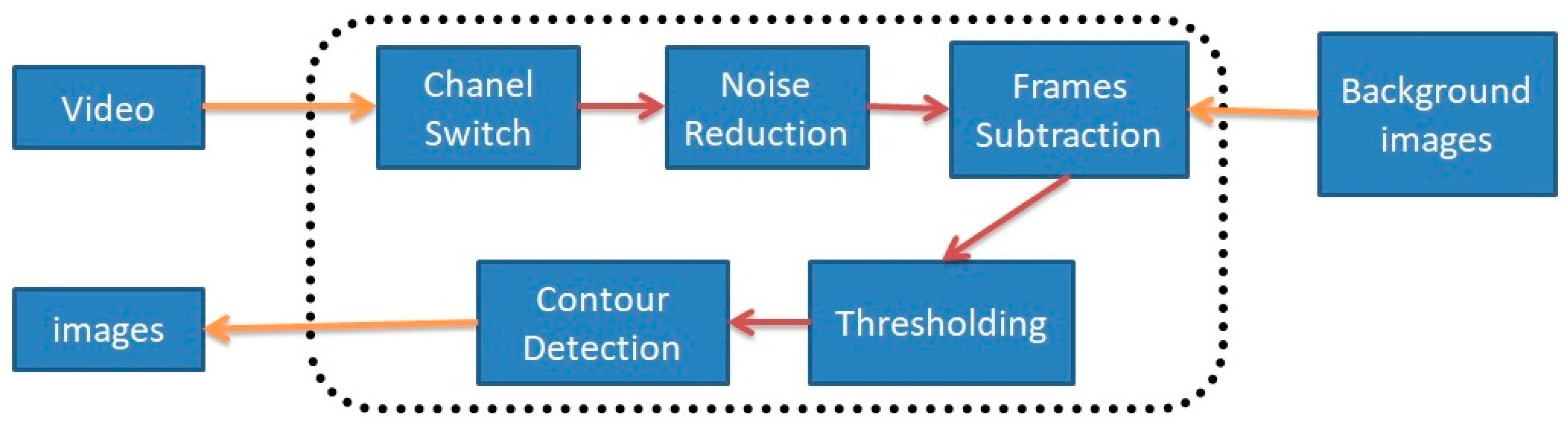

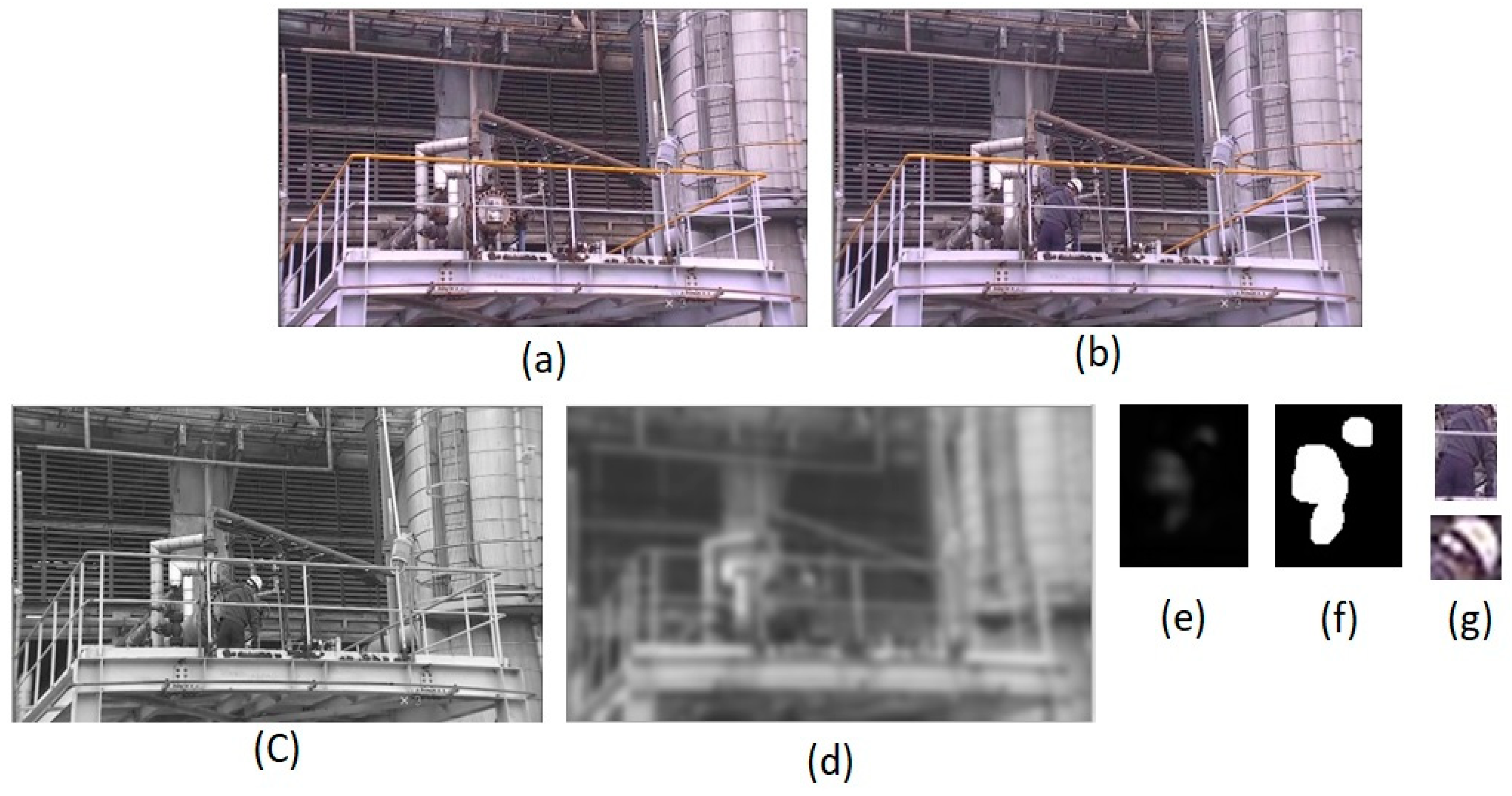

2.2.1. Obtaining Images from Raw Video, Preprocessing, and Categorizing Them

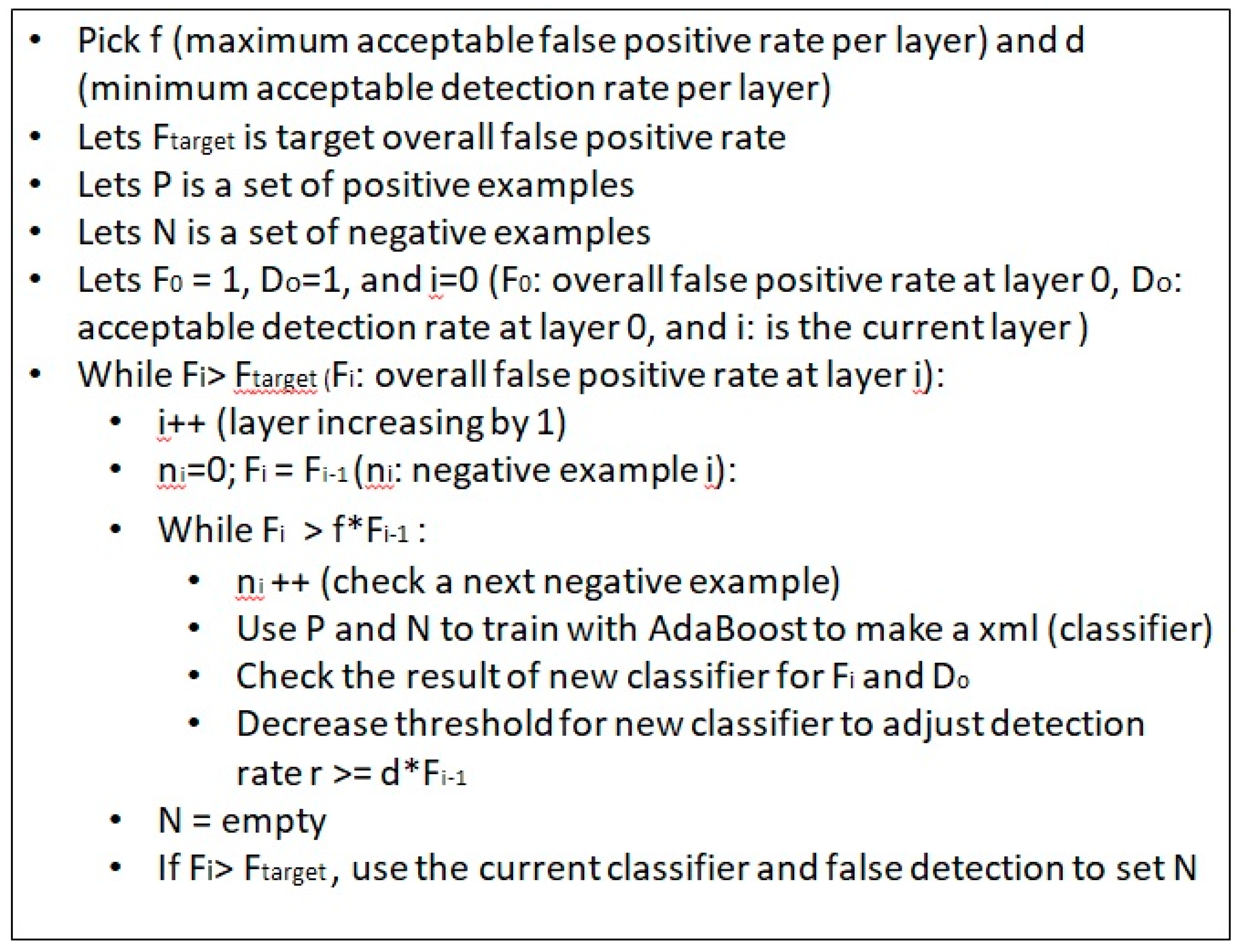

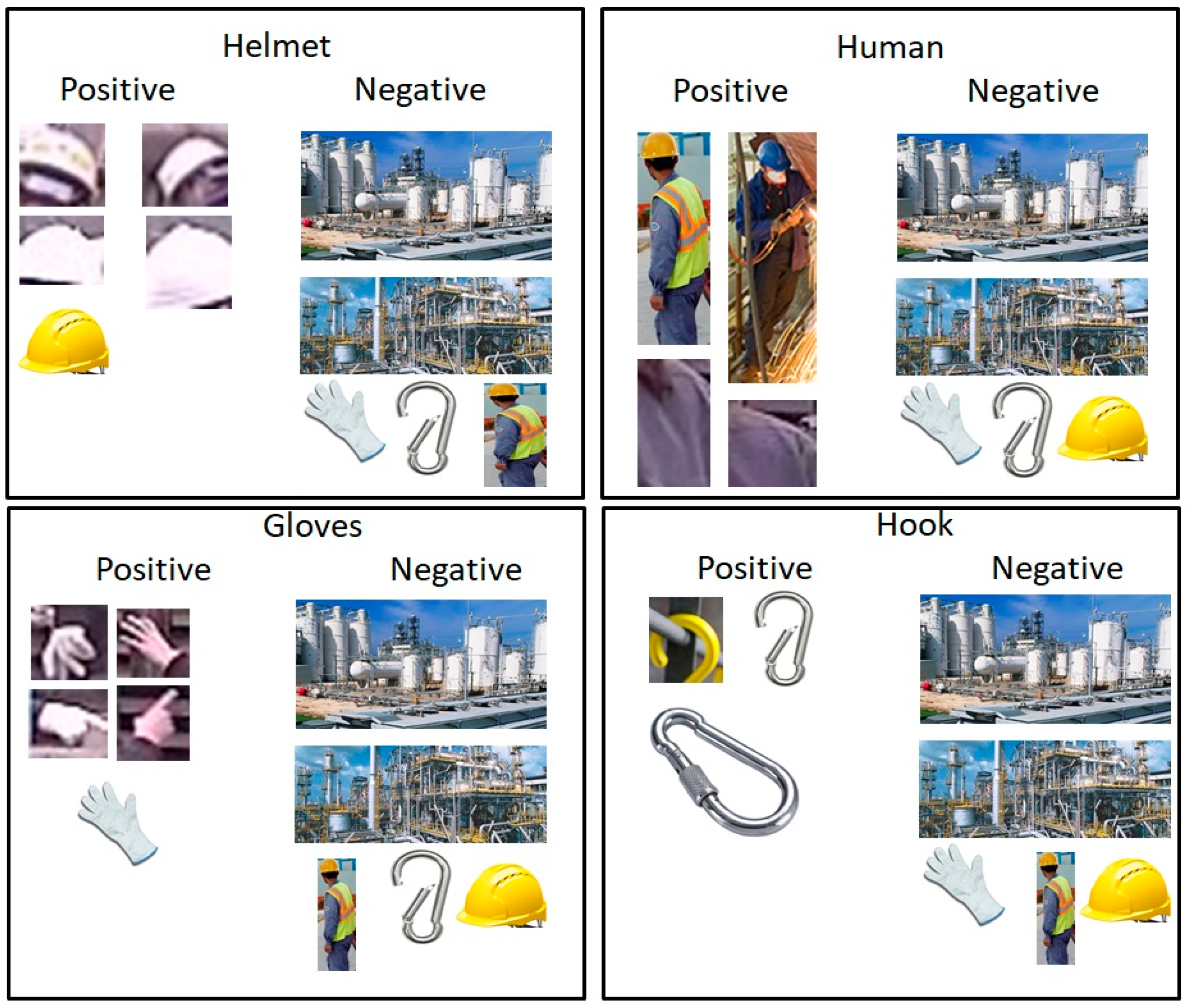

2.2.2. Creating the Haar-Cascade Classifier to Detect Objects
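
As general background for this step, Haar-like features in the Viola and Jones framework (listed in the References) are usually evaluated in constant time over an integral image. The following is a minimal sketch of that idea in plain Python, not the authors' implementation; the function names and the toy 4x4 patch are hypothetical and chosen only for illustration.

```python
def integral_image(img):
    """Return a summed-area table with an extra leading row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of the current row up to column x
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the rectangle with top-left corner (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_horizontal(ii, x, y, w, h):
    """Two-rectangle (edge) Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Toy patch (hypothetical data): bright left half, dark right half.
patch = [[9, 9, 1, 1] for _ in range(4)]
ii = integral_image(patch)
feature = two_rect_horizontal(ii, 0, 0, 4, 4)
print(feature)
```

Each rectangle sum needs only four table lookups regardless of rectangle size, which is what makes evaluating many features per detection window affordable.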

2.2.3. Creating a Safety System for a Chemical Plant Environment

3. Experimental Results

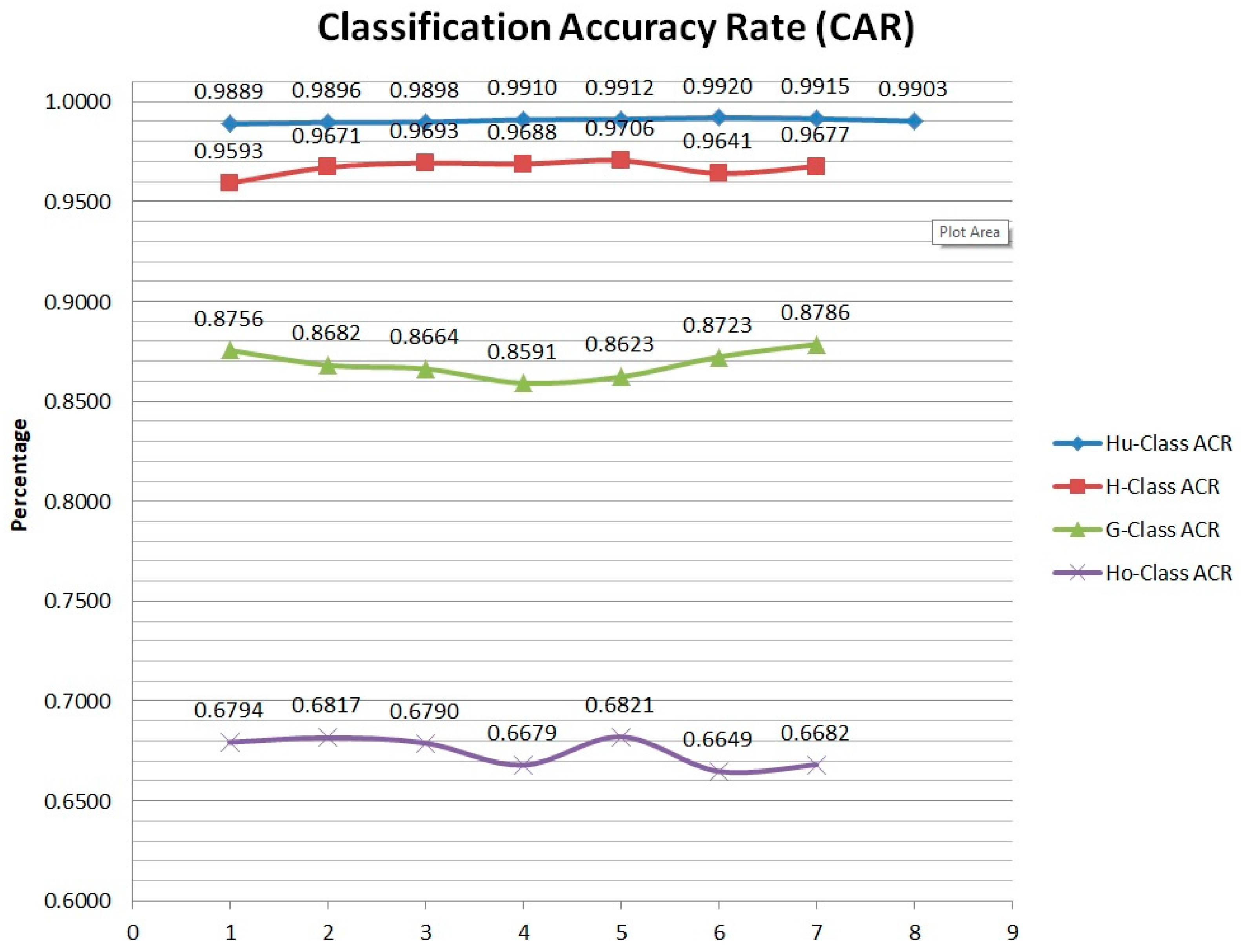

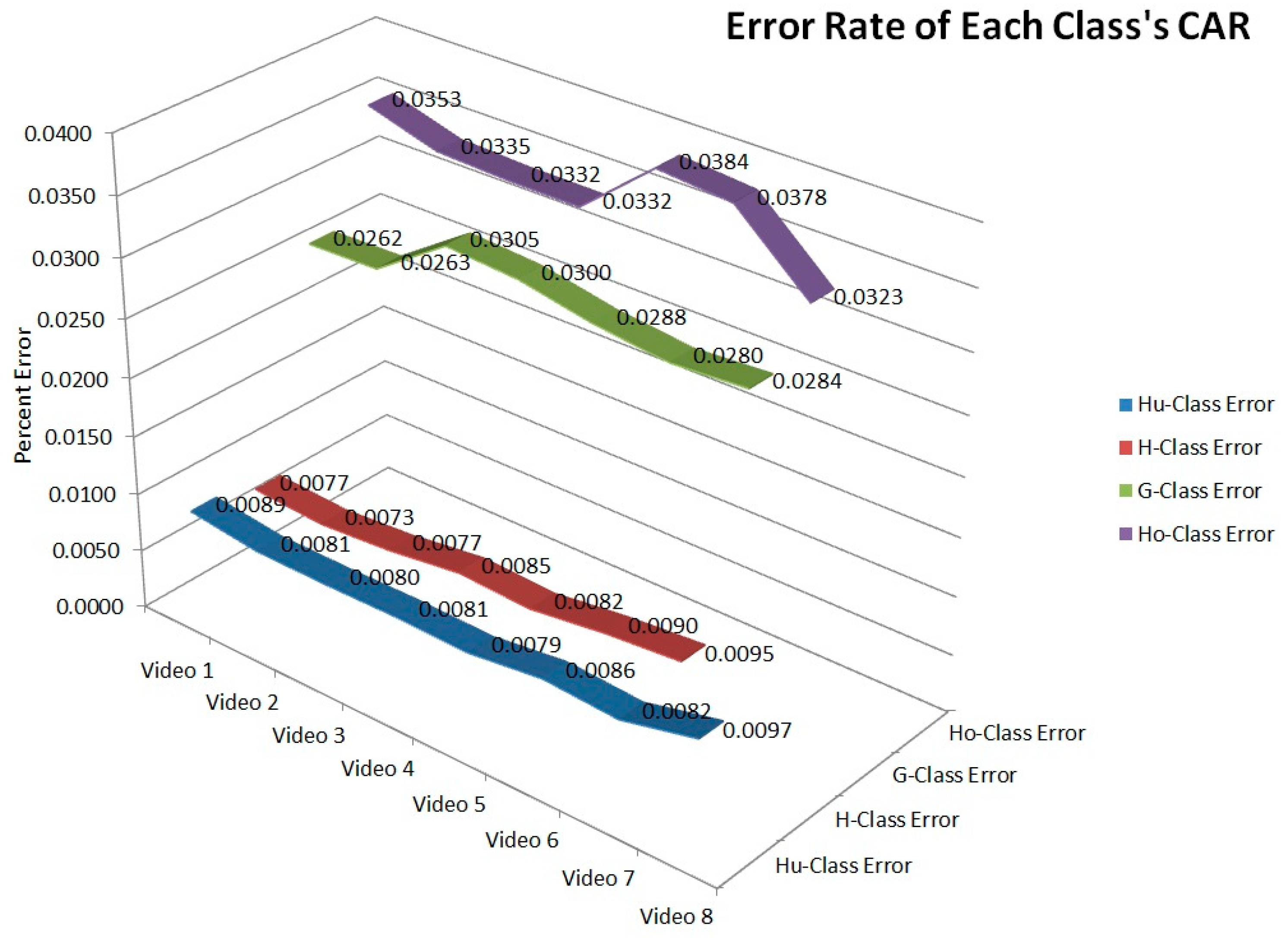

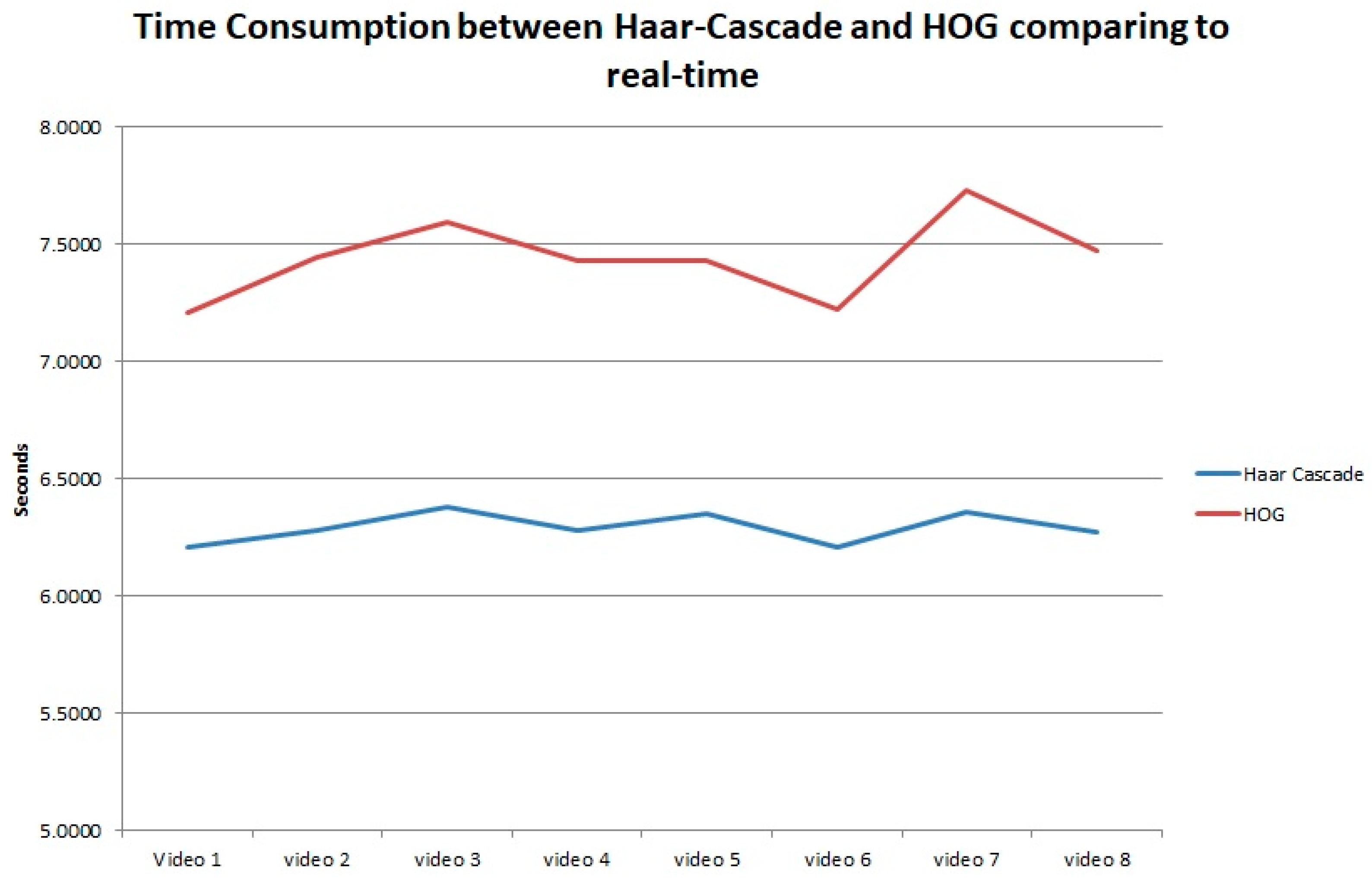

3.1. Performance of Four Class Classifiers

3.2. Performance of the Safety System

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Slusarczyk, B. Industry 4.0—Are we ready. Pol. J. Manag. Stud. 2018, 17, 232–248.

- Viola, P.; Jones, M. Robust Real-Time Face Detection, 2001. Int. J. Comput. Vis. 2004, 57, 137–154.

- Karami, E.; Prasad, S.; Shehata, M. Image Matching Using SIFT, SURF, BRIEF and ORB: Performance Comparison for Distorted Image. In Proceedings of the 2015 Newfoundland Electrical and Computer Engineering Conference, St. John’s, NL, Canada, 5 November 2015.

- Freund, Y.; Schapire, R.E. A Short Introduction to Boosting. J. Jpn. Soc. Artif. Intell. 1999, 14, 771–780.

- Devi, N.S.; Hemachandran, K. Face Recognition Using Principal Component Analysis. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 6491–6496.

- Navaz, A.S.S.; Sri, T.D.; Mazumder, P. Face Recognition Using Principal Component Analysis and Neural Networks. Int. J. Comput. Netw. 2013, 3, 245–256.

- Thakur, S.; Sing, J.K.; Basu, D.K.; Nasipuri, M.; Kundu, M. Face Recognition Using Principal Component Analysis and RBF Neural Networks. In Proceedings of the IEEE First International Conference on Emerging Trends in Engineering and Technology, Nagpur, India, 16–18 July 2008.

- Wanjale, K.H.; Bhoomkar, A.; Kulkami, A.; Gosavi, S. Use of Haar Cascade Classifier for Face Tracking System in Real Time Video. Int. J. Eng. Res. Technol. 2013, 2, 2348–2353.

- Arreola, L.; Gudino, G.; Flores, G. Object Recognition and Tracking Using Haar-like Features Cascade Classifiers: Application to a Quad-Rotor UAV. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems, Atlanta, GA, USA, 11–14 June 2019.

- Cuimei, L.; Zhiliang, Q.; Nan, J.; Jianhua, W. Human Face Detection Algorithm via Haar Cascade Classifier Combined with Three Additional Classifiers. In Proceedings of the IEEE 13th International Conference on Electronic Measurement and Instruments, Yangzhou, China, 20–22 October 2017; pp. 483–487.

- Ulfa, D.K.; Widyantoro, D.H. Implementation of Haar Cascade Classifier for Motorcycle Detection. In Proceedings of the IEEE International Conference on Cybernetics and Computational Intelligence (CyberneticsCom), Phuket, Thailand, 20–22 November 2017.

- Cruz, J.E.C.; Shiguemori, E.H.; Guimaraes, L.N.F. A Comparison of Haar-like, LBP and HOG Approaches to Concrete and Asphalt Runway Detection in High Resolution Imagery. J. Comput. Int. Sci. 2015, 6, 121–136.

- Arunmozhi, A.; Park, J. Comparison of HOG, LBP and Haar-Like Features for On-Road Vehicle Detection. In Proceedings of the IEEE International Conference on Electro/Information Technology (EIT), Rochester, MI, USA, 3–5 May 2018.

- Guennouni, S.; Ahaitouf, A.; Mansouri, A. A Comparative Study of Multiple Object Using Haar-like Feature Selection and Local Binary Patterns in Several Platforms. Model. Simul. Eng. 2015, 2015, 17.

- Qi, F.; Li, H.; Luo, X.; Ding, L.; Rose, T.; An, W. Detecting Non-hardhat-use by a Deep Learning Method from Far-field Surveillance Videos. Autom. Constr. 2018, 85, 1–9.

- Ge, Y.; Zhang, R.; Wu, L.; Wang, X.; Tang, X.; Luo, P. DeepFashion2: A Versatile Benchmark for Detection, Pose Estimation, Segmentation and Re-Identification of Clothing Images. arXiv 2019, arXiv:1901.07973.

- Yang, M.; Yu, K. Real-Time Clothing Recognition in Surveillance Videos. In Proceedings of the 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2937–2940.

- Senthilkumaran, N.; Vaithegi, S. Image Segmentation by Using Thresholding Techniques for Medical Images. Comput. Sci. Eng. Int. J. 2016, 6, 1–13.

- Bulent, S.; Sezgin, M. Image Thresholding Techniques: A Survey over Categories. Pattern Recognit. 2001, 34, 1573–1583.

- Sezgin, M.; Sankur, B.; Bebek, I. Survey over Image Thresholding Techniques. J. Electron. Imaging 2004, 13, 146–168.

| Items | Data/Type |
|---|---|
| Number of Stages | 20 |
| Number of Threads | 7 |
| Acceptance Ratio Break Value | −1 |
| Feature Type | Haar |
| Haar Feature Type | Basic |
| Boost Type | GAB |
| Minimal Hit Rate | 0.995 |
| Maximal False Alarm Rate | 0.5 |
| Weight Trim Rate | 0.95 |
| Maximal Depth Weak Tree | 1 |
| Maximal Weak Tree | 100 |
| Number of Positives | 1150 |
| Number of Negatives | 8850 |
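
The entries in the table above closely resemble the options of OpenCV's `opencv_traincascade` tool. As a hedged sketch, assuming that tool was used, the settings could be assembled into a command line as shown below. The flag-to-row mapping is an assumption (in particular, the value 20 is mapped to `-numStages`), and the three paths are hypothetical placeholders that do not appear in the paper.

```python
import shlex

# Values copied from the training-parameter table above.
# The mapping of each row to an opencv_traincascade flag is an assumption.
table_params = {
    "-numStages": 20,                  # first table row (value 20)
    "-numThreads": 7,
    "-acceptanceRatioBreakValue": -1,
    "-featureType": "HAAR",
    "-mode": "BASIC",                  # "Haar Feature Type: Basic"
    "-bt": "GAB",                      # Gentle AdaBoost
    "-minHitRate": 0.995,
    "-maxFalseAlarmRate": 0.5,
    "-weightTrimRate": 0.95,
    "-maxDepth": 1,
    "-maxWeakCount": 100,
    "-numPos": 1150,
    "-numNeg": 8850,
}

# Hypothetical placeholder paths; none of these come from the paper.
paths = {
    "-data": "cascade_out",      # output directory for the trained cascade
    "-vec": "positives.vec",     # packed positive samples
    "-bg": "negatives.txt",      # list of negative (background) images
}


def build_command(paths, params):
    """Return the argument list for an opencv_traincascade invocation."""
    args = ["opencv_traincascade"]
    for flag, value in {**paths, **params}.items():
        args += [flag, str(value)]
    return args


cmd = build_command(paths, table_params)
print(shlex.join(cmd))
```

The sample window size (`-w`, `-h`) is not given in the table, so the sketch leaves it at the tool's defaults; in practice it has to match the size used when the positive samples were packed. The script only prints the command and does not run the training.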

| Class | Positive | Negative |
|---|---|---|
| Helmet (H-Class) | 3000 | 8000 |
| Human (Hu-Class) | 2500 | 8500 |
| Gloves (G-Class) | 2000 | 7000 |
| Hook (Ho-Class) | 2000 | 7000 |

| No. | No. of Frames | Hu-Class True Positive | Hu-Class False Positive | H-Class True Positive | H-Class False Positive | G-Class True Positive | G-Class False Positive | Ho-Class True Positive | Ho-Class False Positive |
|---|---|---|---|---|---|---|---|---|---|
| Video 1 (positive) | 28,000 | 10,325 | 116 | 8611 | 365 | 19,632 | 2790 | 462 | 218 |
| Video 2 (positive) | 54,000 | 12,233 | 128 | 11,069 | 376 | 20,051 | 3045 | 529 | 247 |
| Video 3 (positive) | 84,000 | 14,587 | 151 | 11,370 | 360 | 23,437 | 3615 | 531 | 251 |
| Video 4 (positive) | 95,000 | 14,390 | 130 | 12,010 | 387 | 22,014 | 3610 | 523 | 260 |
| Video 5 (positive) | 108,000 | 14,104 | 125 | 10,590 | 321 | 20,110 | 3211 | 515 | 240 |
| Video 6 (negative) | 60,000 | 3100 | 25 | 430 | 16 | 560 | 82 | 123 | 62 |
| Video 7 (negative) | 92,000 | 3500 | 30 | 510 | 17 | 680 | 94 | 145 | 72 |
| Video 8 (Background) | 45,000 | 204 | 2 | 0 | 2 | 0 | 4 | 0 | 1 |
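
One way to read the counts in the table above is to convert them into per-class precision, TP / (TP + FP). The sketch below does this for the Video 1 row, under the assumption that the true positive and false positive columns are detection counts; recall cannot be derived because the table reports no false negatives. The helper name `precision` is introduced here for illustration only.

```python
# (true positives, false positives) per class for Video 1, copied from the table above.
video1 = {
    "Hu-Class": (10325, 116),
    "H-Class": (8611, 365),
    "G-Class": (19632, 2790),
    "Ho-Class": (462, 218),
}


def precision(tp, fp):
    """Fraction of detections that were correct; 0.0 when there are no detections."""
    total = tp + fp
    return tp / total if total else 0.0


precisions = {name: precision(tp, fp) for name, (tp, fp) in video1.items()}
for name, p in precisions.items():
    print(f"{name}: {p:.3f}")
```

The zero-detection guard matters for rows such as Video 8, where some classes have no true positives at all.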

| Video | 10’ | 20’ | 30’ | 40’ | 50’ | 60’ | 70’ | 80’ |
|---|---|---|---|---|---|---|---|---|
| Video 1 (School Zone) | 0.683905501 | 0.837146454 | 0 | 0 | 0 | 0 | 0 | 0 |
| Video 2 (Construction Site) | 0.017571454 | 0.270406121 | 0.393198 | 0 | 0 | 0 | 0 | 0 |
| Video 3 (Construction Site) | 0.914408648 | 0.667811467 | 0.908317 | 0.160757 | 0 | 0 | 0 | 0 |
| Video 4 (Construction Site) | 0.149662675 | 0.10061122 | 0.649835 | 0.920592 | 0.305502 | 0 | 0 | 0 |
| Video 5 (Chemical Plant Site) | 0.570131747 | 0.499383685 | 0.554334 | 0.560503 | 0.460353 | 0.867991 | 0 | 0 |
| Video 6 (Chemical Plant Site) | 0.480631829 | 0.665851305 | 0.668991 | 0.423596 | 0.077771 | 0.852459 | 0.089224 | 0 |
| Video 7 (Chemical Plant Site) | 0.656125594 | 0.212195501 | 0.203729 | 0.442033 | 0.270916 | 0.342695 | 0.115809 | 0 |
| Video 8 (School Zone) | 0.632075504 | 0.754045491 | 0.277832 | 0.37724 | 0.511964 | 0.845904 | 0.896601 | 0.705319 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Phuc, L.T.H.; Jeon, H.; Truong, N.T.N.; Hak, J.J. Applying the Haar-cascade Algorithm for Detecting Safety Equipment in Safety Management Systems for Multiple Working Environments. Electronics 2019, 8, 1079. https://doi.org/10.3390/electronics8101079
