1. Introduction

Pedestrian detection is an active research topic in computer vision, with widespread applications in autonomous driving, security, intelligent transportation, robotics, and other fields. With the continuous emergence of large shopping malls and urban complexes, person detection and counting have become key technologies for public safety and emergency management. Real-time person detection allows public spaces to be monitored more effectively, reducing risks such as crowd crushes and improving emergency response. For instance, surveillance cameras were present during the “12.31” crowd crush incident at The Bund in Shanghai, but insufficient video analysis capabilities meant that the number of people and the associated safety risks could not be accurately assessed, which ultimately led to the incident. Research on computer vision-based pedestrian detection therefore has significant practical value.

To date, research on person detection has yielded substantial results, which can be broadly categorized into three approaches: (1) detection based on hand-crafted features [1]; (2) deep learning-based object detection [2]; and (3) transformer-based detection methods. Representative hand-crafted-feature models include Viola–Jones [3], the histogram of oriented gradients (HOG) [4], and the deformable part model (DPM) [5]. Lei H et al. used HOG and local binary pattern (LBP) features for rapid pedestrian detection in still images [6]. Hongzhi Zhou and colleagues extracted HOG features and classified them with a support vector machine (SVM) for pedestrian detection [7]. Although these traditional methods have lower computational costs than deep learning-based object-detection algorithms, their accuracy is relatively low and leaves limited room for further improvement.

With the continuous development and maturation of deep learning, deep learning-based object detection has gradually replaced traditional methods. Current mainstream deep learning object-detection algorithms fall into three categories: two-stage algorithms (such as RCNN [8], Faster-RCNN [9], and Mask-RCNN [10]), single-stage algorithms (such as the SSD series [11] and the YOLO series [12]), and end-to-end detection approaches [13]. Two-stage algorithms generally achieve higher detection accuracy but run more slowly. Single-stage algorithms, in contrast, have simpler architectures and higher efficiency. Given the high mobility of people and the complexity of scenes in public spaces, single-stage algorithms are better suited to person detection. To improve the accuracy of single-stage detectors, researchers have extensively modified the YOLO series by adopting deeper network architectures, automatic anchor box learning, improved loss functions, multi-scale training, and data augmentation [14]. For example, Jiwoong built a bounding-box model with Gaussian parameters on top of YOLOv3 and proposed a new localization prediction algorithm that significantly improved detection accuracy [15]. Sha Cheng optimized both the model and the loss function of YOLOv3 for pedestrian detection in campus surveillance videos, achieving indoor pedestrian detection [16], although the results were heavily affected by lighting conditions. Fanxin Yu integrated the CBAM attention module into the backbone and neck of YOLOv5s to enhance pedestrian features and improve detection accuracy [17]. Lihu Pan and colleagues addressed roadway pedestrian detection with HF-YOLO, a model designed to cope with complex traffic scenes [18]. Although these approaches achieved promising results, few studies have focused on densely populated environments with severe occlusion.

Transformer-based person detection uses the transformer architecture to localize and recognize people and represents a cutting-edge computer vision paradigm. To tackle the accuracy bottlenecks caused by diverse pedestrian poses and complex backgrounds, Maxime Oquab et al. developed DINOv2 [19], a transformer-based model whose self-supervised visual features can be applied to person detection. Building on transformer networks, Wenyu Lv and co-workers proposed the real-time detector RT-DETRv2 [20]. These methods use global self-attention and an end-to-end design to achieve high-precision detection in complex scenes without hand-designed anchor boxes or post-processing. However, they have high computational complexity and hardware demands and limited performance on small objects, so they struggle in highly crowded settings.

The methods above primarily address low detection accuracy. In densely populated public spaces, however, occlusion between pedestrians is a critical factor limiting detection accuracy, and some researchers have therefore turned to detecting distinctive parts of the human body as a proxy for whole-body detection. Because surveillance cameras in public areas are usually mounted at elevated positions, the human head has naturally become a focus of research. Head detection in dense public spaces nevertheless faces several severe challenges. First, heads in crowded scenes often span only a few pixels, which makes detailed feature extraction difficult. Second, frequent occlusion and overlap blur the boundaries between heads or hide them completely, which complicates localization. Third, differences in camera mounting height and viewing angle cause drastic changes in head scale, and a single receptive field cannot accommodate all targets. Fourth, complex and varied backgrounds in settings such as shopping malls and subway stations are easily confused with head textures, which produces false positives. Finally, lighting conditions and compression noise further weaken small-object signals and undermine model stability. Luo Jie proposed an improved YOLOv5s-based algorithm for head detection and counting on the SCUT_HEAD dataset [21]. Chen Yong introduced a multi-feature-fusion pedestrian detection method that combines head information with the overall appearance of the pedestrian [22].

Existing studies have largely focused on optimizing individual modules, and few have explored multi-module collaborative enhancement of YOLOv7. Notably, multi-task collaborative training mechanisms, such as the BWIC-TIMC [23] system and the SWIC-TMC [24] approach, have been shown to resolve subtask conflicts in end-to-end person search by dynamically balancing the learning weights of different tasks, which significantly improves model performance. This paradigm offers valuable inspiration for multi-module collaborative optimization in dense scenes. This paper optimizes the YOLOv7 model in the following ways:

Adding a CBAM attention module to the neck so that the network focuses on target features while suppressing irrelevant regions;

Introducing a Gaussian receptive field-based label-assignment strategy at the junction between the feature-fusion module and the detection head to enhance the network’s receptive field for small targets;

Replacing the spatial pyramid pooling module (SPPCSPC) with an SPPFCSPC module to improve inference efficiency while maintaining the same receptive field.

In designing our method, we chose YOLOv7 as the base model because it maintains high accuracy while achieving state-of-the-art real-time detection performance through a series of bag-of-freebies and bag-of-specials techniques, which makes it highly valuable for engineering applications. We integrated the CBAM attention module primarily because of its lightweight structure: it dynamically enhances critical information along both the channel and spatial dimensions, which significantly improves the feature representation of tiny head regions. We adopted a Gaussian receptive field-based label-assignment strategy (RFLAGauss) because it adaptively assigns positive and negative sample weights according to the distance from an object’s center, which suits extremely small, pixel-sparse head targets. Finally, we introduced the SPPFCSPC multi-scale fusion module because it substantially reduces computational cost while preserving the same receptive field as traditional SPP, which further boosts inference speed while effectively integrating multi-scale information. The main contributions of this paper are as follows:

Construction of a head-detection database: A head-detection dataset was built, comprising images from various environments such as classrooms, shopping malls, internet cafes, offices, airport security checkpoints, and staircases.

Optimized YOLOv7 network design for head detection: An improved YOLOv7 network was proposed for head detection. Specifically, the CBAM attention module was introduced in the neck; a Gaussian receptive field-based label-assignment strategy was implemented between the feature-fusion module and the detection head; and finally, the SPPFCSPC module was employed to replace the spatial pyramid pooling module (SPPCSPC).

2. YOLOv7 Model Optimization

2.1. Overall Model Framework

The YOLOv7 model [25] was proposed in 2022 and consists of four main modules: the input, backbone, neck, and head. The input module resizes input images to a uniform size to meet the network’s training requirements. The backbone extracts features from the target images, the neck fuses features extracted at different scales, and the head adjusts the channel dimensions of these multi-scale features and converts them into bounding boxes, class labels, and confidence scores, enabling detection at several scales.
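To make the head’s output format concrete, the sketch below computes the shape of each detection-head output for a single-class (head) detector. It assumes a 640 × 640 input, output strides of 8, 16, and 32, and three anchors per scale, as in the standard YOLOv7 configuration; the helper names are hypothetical, and the input size used in this study may differ.

```python
def head_output_shapes(img_size=640, strides=(8, 16, 32), num_anchors=3, num_classes=1):
    """Return (grid_h, grid_w, channels) for each detection scale."""
    # Each anchor predicts 4 box offsets, 1 objectness score and num_classes class scores.
    channels = num_anchors * (5 + num_classes)
    return [(img_size // s, img_size // s, channels) for s in strides]


def total_predictions(shapes, num_anchors=3):
    """Number of candidate boxes produced before non-maximum suppression."""
    return sum(h * w * num_anchors for h, w, _ in shapes)


shapes = head_output_shapes()  # single class: "head"
for stride, shape in zip((8, 16, 32), shapes):
    print(f"stride {stride:2d}: grid {shape[0]}x{shape[1]}, {shape[2]} channels")
print("candidate boxes:", total_predictions(shapes))
```

Under these assumptions every scale outputs 3 × (5 + 1) = 18 channels, and the 80 × 80, 40 × 40, and 20 × 20 grids together yield 25,200 candidate boxes before non-maximum suppression.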

Although YOLOv7 shows significant improvements in overall prediction accuracy and operational efficiency compared to its predecessors, there remain some noteworthy issues in small-object detection. First, small objects generally occupy few pixels in an image, resulting in relatively vague feature representations; this makes it difficult for the model to capture sufficient detailed information during feature extraction, leading to missed or false detections. Second, since small objects are easily confused with the background or noise, the model might struggle to adequately differentiate between target and background during training, thereby affecting detection accuracy. Moreover, the existing network architecture may not fully address the feature representation requirements of both large and small objects during multi-scale feature fusion, which can lead to the attenuation of small object features and reduced detection performance. Finally, in real-world scenarios, small objects often exhibit diverse shapes and severe occlusion, further increasing detection challenges. Therefore, despite YOLOv7’s excellent overall performance, structural and strategic optimizations are urgently needed to enhance the accuracy and robustness of small-object detection.
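A short calculation shows how few feature-map cells a small head occupies. The 12 × 12-pixel head and 640 × 640 image below are hypothetical values chosen for illustration, not statistics from our dataset.

```python
def cells_per_side(obj_px, stride):
    """Object side length measured in feature-map cells at a given stride."""
    return obj_px / stride


def area_fraction(obj_px, img_px):
    """Fraction of the image area covered by a square object."""
    return (obj_px * obj_px) / (img_px * img_px)


HEAD_PX = 12  # hypothetical head size in pixels
IMG_PX = 640  # hypothetical input resolution

coverage = {s: cells_per_side(HEAD_PX, s) for s in (8, 16, 32)}
for stride, side in coverage.items():
    print(f"stride {stride:2d}: {side:.3f} cells per side")
print(f"image area covered: {area_fraction(HEAD_PX, IMG_PX):.4%}")
```

At the coarsest stride such a head spans well under one cell per side, so its features are easily diluted by the surrounding context, which is the effect described above.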

In this study, we enhance the original YOLOv7 architecture to better address small-object detection, as illustrated in Figure 1. First, we embed the CBAM attention mechanism into the neck module (yellow region) to capture the fine-grained features of small objects more effectively. Next, we insert a Gaussian receptive field-based label-assignment strategy, RFLAGauss, between the standard feature-fusion module and the detection head (orange region); it takes the CBAM-refined features as input and performs precise boundary- and shape-aware label allocation. Finally, we replace the conventional spatial pyramid pooling module with the SPPFCSPC module (brown region), which aggregates multi-scale contextual information without enlarging the receptive field, thereby reducing information loss during feature fusion.

2.2. CBAM

YOLOv7 employs convolutional neural networks (CNNs) for feature extraction. However, traditional convolution operations are fixed in nature and cannot dynamically adjust their focus based on varying input content. Designed with an emphasis on computational efficiency and fast inference, YOLOv7 may overlook subtle yet critical information within feature maps, especially when dealing with complex backgrounds or small-scale targets. In dense scenarios, small objects like heads often appear with blurred features due to sparse pixels and background interference. Conventional convolutional layers lack adaptive focusing ability, which increases the likelihood of missed detections.

Since the vanilla YOLOv7 network does not incorporate any attention mechanism, it struggles to capture the faint features of small targets. To address this, we embed a convolutional block attention module (CBAM) into the neck module. By applying both channel-wise and spatial attention, CBAM more precisely focuses on small-object regions, thereby significantly improving recall for these targets.

CBAM is a lightweight yet effective attention module designed for feedforward convolutional neural networks [

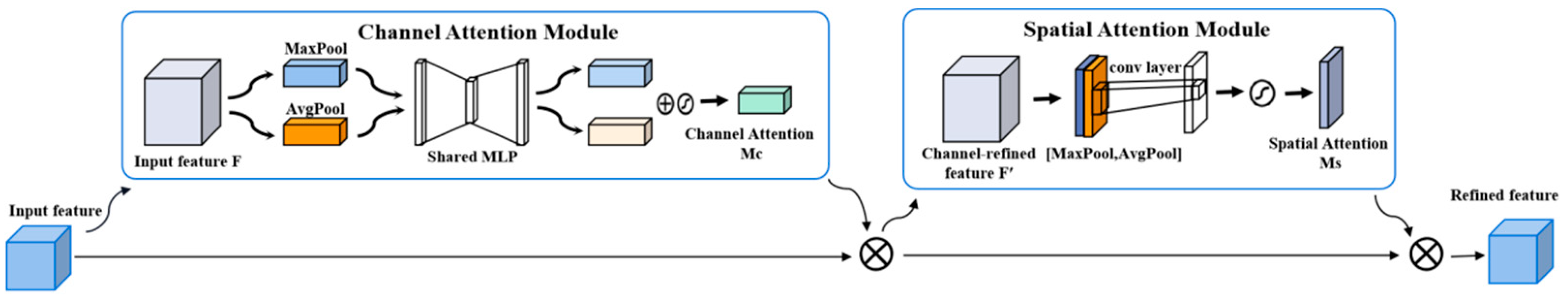

26]. It consists of two sequential sub-modules: the channel attention module (CAM) and the spatial attention module (SAM). CAM helps the network concentrate more on foreground and semantically meaningful regions, while SAM allows the model to emphasize spatially informative areas rich in contextual information across the entire image. The structure of the CBAM module is illustrated in

Figure 2.

The core concept of the channel attention mechanism is consistent with that of squeeze-and-excitation networks (SENet) [27]. As shown in Figure 2, the input feature map $F \in \mathbb{R}^{H \times W \times C}$ is first subjected to both global max pooling and global average pooling along the spatial dimensions (height $H$ and width $W$), producing two feature descriptors of size $1 \times 1 \times C$. These two descriptors are then passed through a shared two-layer multilayer perceptron (MLP) to learn inter-channel dependencies, with a reduction ratio $r$ between the two layers to reduce dimensionality. Finally, the two MLP outputs are combined by element-wise addition and passed through a sigmoid activation to generate the channel attention weights $M_c$. The formulation is as follows:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) \tag{1}$$

where $F$ is the input feature map; $\mathrm{AvgPool}(F)$ and $\mathrm{MaxPool}(F)$ are the global average pooling and global max pooling outputs; $\mathrm{MLP}$ is a two-layer fully connected network with a bottleneck structure using reduction ratio $r$; $\sigma$ denotes the sigmoid activation function; and $M_c$ is the channel attention weight vector.
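The channel attention computation described above can be traced with a dependency-free sketch. It assumes a feature map stored as nested lists (C channels of H × W values), a bias-free shared MLP with a ReLU after its first layer, and toy weights chosen by hand; a real network would use tensor operations, and the function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    """Multiply a weight matrix w (rows x cols) by a vector v (cols)."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def channel_attention(feat, w0, w1):
    """Channel attention weights M_c for a C x H x W feature map.

    feat: C channels, each an H x W list of lists.
    w0:   (C // r) x C weights of the shared MLP's first layer (no bias).
    w1:   C x (C // r) weights of its second layer (no bias).
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]  # global average pooling
    mx = [max(map(max, ch)) for ch in feat]                              # global max pooling

    def shared_mlp(v):
        hidden = [max(0.0, h) for h in matvec(w0, v)]  # ReLU after the first layer
        return matvec(w1, hidden)

    # Element-wise sum of the two MLP outputs, then sigmoid.
    return [sigmoid(a + m) for a, m in zip(shared_mlp(avg), shared_mlp(mx))]


def reweight_channels(feat, mc):
    """Scale every channel of feat by its attention weight."""
    return [[[mc[c] * x for x in row] for row in ch] for c, ch in enumerate(feat)]


# Toy example: C = 2, r = 2, so the hidden layer has one unit.
feat = [[[1.0, 2.0], [3.0, 4.0]],   # informative channel
        [[0.0, 0.0], [0.0, 0.0]]]   # empty channel
w0 = [[1.0, -1.0]]
w1 = [[1.0], [-1.0]]
mc = channel_attention(feat, w0, w1)
refined = reweight_channels(feat, mc)
print(mc)
```

With these hand-picked weights the informative channel receives a weight close to 1 and the empty channel a weight close to 0, which is the channel-selection behavior the text describes.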

The spatial attention mechanism takes the feature map output by the channel attention module as its input [28]. It first applies max pooling and average pooling along the channel dimension to generate two feature maps of size $H \times W \times 1$, which are then concatenated along the channel axis. A convolution with a 7 × 7 kernel reduces this two-channel map to a single-channel map and captures spatial dependencies among neighboring positions, and a sigmoid activation then maps the result to the spatial attention weights $M_s$ [20]. The formulation is as follows:

$$M_s(F) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)])\big) \tag{2}$$

where $F$ is the input feature map (here, the output of the channel attention module); $[\cdot;\cdot]$ denotes concatenation along the channel axis; $f^{7 \times 7}$ represents a convolution with a 7 × 7 kernel; $\sigma$ denotes the sigmoid activation function; and $M_s$ is the learned spatial attention map.
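A matching sketch of the spatial attention step, under the same nested-list assumption: it averages and maximizes across channels, stacks the two maps, applies a single-output k × k convolution (cross-correlation, as in common deep learning frameworks) with zero padding, and finishes with a sigmoid. The kernel below is a hypothetical toy that passes only the center pixel, so the result is easy to check by hand.

```python
import math


def spatial_attention(feat, kernel, bias=0.0):
    """Spatial attention map M_s for a C x H x W feature map.

    kernel: 2 x k x k weights (k odd; CBAM uses k = 7) applied to the stacked
            [channel-average; channel-max] maps with zero padding k // 2.
    Returns an H x W map of weights in (0, 1).
    """
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(feat[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    stacked = (avg, mx)  # concatenation along the channel axis
    k = len(kernel[0])
    pad = k // 2
    out = []
    for i in range(H):
        row = []
        for j in range(W):
            acc = bias
            for c in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:  # positions outside count as zero
                            acc += kernel[c][di][dj] * stacked[c][y][x]
            row.append(1.0 / (1.0 + math.exp(-acc)))  # sigmoid
        out.append(row)
    return out


# Toy 7 x 7 kernel that only passes the center pixel of each stacked map,
# so the pre-sigmoid value at each position is channel-average + channel-max.
K = 7
kernel = [[[1.0 if (a, b) == (K // 2, K // 2) else 0.0 for b in range(K)] for a in range(K)]
          for _ in range(2)]
feat = [[[0.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]],
        [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
ms = spatial_attention(feat, kernel)
for row in ms:
    print([round(v, 3) for v in row])
```

The only active position (average 2, maximum 4) receives a weight near 1, while empty positions stay at the neutral value 0.5; a trained kernel would instead learn which spatial patterns to emphasize.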

The channel attention mechanism dynamically adjusts the weight of each channel based on the global information of each channel in the image, allowing the model to focus more on feature channels with higher information content. Spatial attention, on the other hand, helps the model focus on the location of the target object by emphasizing significant spatial features, rather than being distracted by the background or noise.

First, the channel attention module processes each feature channel by applying global average pooling and max pooling to extract global information. These are then passed through a shared multilayer perceptron (MLP) to generate channel-wise attention weights. This step enables the network to automatically identify the feature channels that are more important for the detection task while suppressing those with irrelevant or noisy information, thus enhancing the quality of feature representation.

Next, the spatial attention module processes the channel-weighted feature maps by aggregating channel information (e.g., via average pooling and max pooling) to generate a spatial descriptor, followed by a convolution layer to generate the spatial attention map. This mechanism clearly identifies the regions in the image that require more attention, thereby strengthening the prominence of target regions during the feature-fusion phase.
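Following the original CBAM formulation [26], the two steps above can be written as a sequential refinement of the input $F$ using the weights $M_c$ and $M_s$, where $\otimes$ denotes element-wise multiplication with broadcasting: channel weights are copied across all spatial positions, and spatial weights are copied across all channels.

$$
\begin{aligned}
F'  &= M_c(F) \otimes F, \\
F'' &= M_s(F') \otimes F'.
\end{aligned}
$$

Here $F''$ is the output of the CBAM block, which has the same shape as $F$ and can therefore be inserted into the neck without changing the surrounding layers.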

For small-object detection, this detailed attention mechanism is particularly critical because small objects often occupy a small area in the image and have weak features, making them prone to being ignored or confused with the background during traditional convolution operations. By incorporating the CBAM module, YOLOv7 is able to more effectively focus on these subtle regions, enhancing the feature expression of small objects and consequently improving detection accuracy and robustness.

2.3. Receptive Field Enhancement

YOLOv7 employs a label-assignment strategy based on fixed intersection over union (IoU) thresholds. However, this fixed approach often struggles to correctly classify borderline samples as positive or negative, and it proves limited when dealing with targets of varying scales or irregular shapes. In this study, we introduce a label-assignment strategy based on Gaussian receptive field (RFLAGauss), which is applied between the feature-fusion module and the detection head. This strategy dynamically adjusts the assignment weights such that anchor points closer to the target center receive higher importance, thereby enabling a more reasonable allocation of positive and negative samples.
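The sensitivity of a fixed IoU threshold to small objects can be seen with a toy calculation. The sketch below uses a generic rule (IoU ≥ 0.5 means positive) and a prior box of exactly the same size as the ground truth, shifted by half a stride-8 cell; it is a hypothetical illustration, not YOLOv7’s actual assignment code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def square(cx, cy, size):
    """Axis-aligned square box centered at (cx, cy)."""
    half = size / 2
    return (cx - half, cy - half, cx + half, cy + half)


IOU_THRESHOLD = 0.5  # hypothetical fixed positive threshold
OFFSET = 4           # half of a stride-8 cell, in pixels

results = {}
for size in (10, 100):  # a tiny head versus a large object
    gt = square(100, 100, size)
    prior = square(100 + OFFSET, 100, size)  # same size, shifted by OFFSET
    results[size] = iou(gt, prior)
    label = "positive" if results[size] >= IOU_THRESHOLD else "negative"
    print(f"{size:3d} px box: IoU = {results[size]:.3f} -> {label}")
```

For two equal squares of side $a$ offset by $d$, IoU $= (a - d)/(a + d)$, so the same 4-pixel offset gives 6/14 ≈ 0.43 for a 10-pixel head (rejected) but 96/104 ≈ 0.92 for a 100-pixel object (accepted).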

The Gaussian receptive field adapts well to different object sizes. It concentrates more around the center for small objects, while expanding suitably for larger ones to capture broader features. This dynamic mechanism also helps mitigate the imbalance between positive and negative samples, preventing excessive negative samples from suppressing the learning process. Moreover, it enhances the quality of positive samples, enabling the detection head to learn object features more accurately.

The underlying principle of the Gaussian receptive field-based label-assignment strategy, RFLAGauss, is illustrated in Figure 3. The process begins with feature extraction, followed by convolution with a Gaussian kernel. Finally, the features are aggregated into a single feature point, resulting in an effective receptive field. Label assignment is then performed based on this adaptive receptive field to better accommodate objects of various sizes.
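The receptive field that the Gaussian model describes grows layer by layer. The sketch below uses the standard recursion for the theoretical receptive field of a stack of convolution or pooling layers, r_out = r_in + (k - 1) · j_in and j_out = j_in · s, where k is the kernel size, s the stride, and j the cumulative stride. The layer stack is hypothetical; the effective receptive field is typically smaller than this theoretical bound and concentrated toward its center, which is what a Gaussian model captures.

```python
def receptive_field(layers):
    """Theoretical receptive field and cumulative stride of a layer stack.

    layers: sequence of (kernel_size, stride) pairs, applied in order.
    """
    rf, jump = 1, 1  # start from a single input pixel, one pixel per step
    for k, s in layers:
        rf += (k - 1) * jump  # the window widens by (k - 1) steps of the current jump
        jump *= s             # input-pixel distance between adjacent outputs
    return rf, jump


toy_stack = [(3, 1), (3, 2), (3, 1), (3, 2)]  # hypothetical conv layers
rf, jump = receptive_field(toy_stack)
print(rf, jump)                                # 13 4
print(receptive_field(toy_stack + [(13, 1)]))  # (61, 4): a stride-1 13 x 13 pooling on top
```

The second call shows why large stride-1 pooling kernels, such as those in spatial pyramid pooling, enlarge the receptive field without reducing resolution.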

Traditional label-assignment methods often rely on fixed or simplistic rules to determine which regions should be regarded as positive samples. However, such methods tend to struggle with small-object detection due to their limited pixel representation and indistinct features, resulting in inaccurate label allocation and degraded detection performance. The RFLAGauss strategy addresses this issue by introducing a Gaussian-based weighting mechanism within the receptive field, enabling more fine-grained and adaptive label assignment.

Specifically, this strategy is applied between the feature-fusion module and the detection head, where a Gaussian weighting function is computed for each candidate region or feature point. Regions closer to the object center are assigned higher weights, while those farther away receive lower ones. This Gaussian receptive field-based allocation mechanism captures core object features more effectively and helps suppress background noise during training. For small objects, where only a limited number of pixels are available, such precise label distribution is particularly critical: it ensures that even minimal object regions are given sufficient positive sample weight, thereby enhancing the model’s sensitivity and accuracy in detecting small targets.
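The center-distance weighting described above can be sketched as follows. This is a simplified illustration, not the full RFLA algorithm: the Gaussian standard deviation is assumed to be `alpha` times the box size, `pos_thresh` is a hypothetical cut-off, and the fallback that always keeps the best cell reflects the requirement that even tiny objects receive a positive sample.

```python
import math


def gaussian_weight(px, py, cx, cy, w, h, alpha=0.25):
    """Weight in (0, 1] of a feature point at (px, py) for a box centered at (cx, cy).

    Hypothetical parameterization: standard deviations alpha * w and alpha * h,
    so the Gaussian is tight for small boxes and wide for large ones.
    """
    sx, sy = alpha * w, alpha * h
    return math.exp(-((px - cx) ** 2 / (2 * sx ** 2) + (py - cy) ** 2 / (2 * sy ** 2)))


def assign_positives(box, stride, grid_size, pos_thresh=0.5):
    """Map (row, col) -> weight for grid cells treated as positives.

    Cells whose center weight reaches pos_thresh are positives. If none does
    (common for tiny boxes), the single best cell is kept so that every
    object receives at least one positive sample.
    """
    cx, cy, w, h = box
    weights = {}
    for i in range(grid_size):
        for j in range(grid_size):
            px, py = (j + 0.5) * stride, (i + 0.5) * stride  # cell center in input pixels
            weights[(i, j)] = gaussian_weight(px, py, cx, cy, w, h)
    positives = {ij: wt for ij, wt in weights.items() if wt >= pos_thresh}
    if not positives:
        best = max(weights, key=weights.get)
        positives = {best: weights[best]}
    return positives


# Boxes are (cx, cy, w, h) in pixels on a stride-8, 80 x 80 grid (640 x 640 input).
small = assign_positives((103.0, 100.0, 10.0, 10.0), stride=8, grid_size=80)
large = assign_positives((320.0, 320.0, 100.0, 100.0), stride=8, grid_size=80)
print(len(small), len(large))
```

No cell reaches the threshold for the 10-pixel head, so only its nearest cell is kept as a positive, whereas the 100-pixel box obtains a whole neighborhood of positives weighted by distance from its center.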

Furthermore, the Gaussian receptive field approach alleviates issues in multi-scale feature alignment. Conventional methods often lose critical details of small targets during scale transformations. In contrast, RFLAGauss dynamically adjusts the weights, guiding the detection head to focus more on the central and adjacent regions of the target across different scales. This improves the localization accuracy and confidence scores for small objects. As a result, this refined label-assignment strategy not only boosts the recall rate for small-object detection but also reduces false positives, ultimately enhancing the model’s overall robustness and detection performance.

2.4. SPPFCSPC

In the previous section, the label-assignment strategy between the feature-fusion module and the detection head was optimized, improving the efficiency of feature utilization and the rationality of sample distribution. However, in object-detection tasks, the size of the receptive field is equally critical for capturing multi-scale targets and global contextual information.

To address this, the YOLOv7 network employs the SPPCSPC module, which combines spatial pyramid pooling (SPP) with a cross stage partial (CSP) structure, to expand the receptive field and enhance the model’s adaptability to input images of varying resolutions. The underlying mechanism is illustrated in Figure 4a. The pooling operation within the SPPCSPC module is computed as follows:

$$R = F \oplus \mathrm{MaxPool}_{k=5,\,p=2}(F) \oplus \mathrm{MaxPool}_{k=9,\,p=4}(F) \oplus \mathrm{MaxPool}_{k=13,\,p=6}(F) \tag{3}$$

where $R$ denotes the output; $\oplus$ denotes tensor concatenation; $F$ represents the input feature map; $k$ represents the kernel size used in the pooling; and $p$ denotes the padding applied to the input feature map. All pooling layers use a stride of 1, so each pooled map keeps the spatial size of $F$.
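The parallel pooling branch of SPPCSPC can be sketched for a single channel without any dependencies. The sketch assumes stride-1 max pooling with padding k // 2 and kernels 5, 9, and 13; because padded positions never win the maximum, clipping the window at the border is equivalent. With stride 1 and p = (k - 1)/2, the output size (H + 2p - k)/1 + 1 equals H, so all maps can be concatenated.

```python
def max_pool2d(x, k):
    """Stride-1 max pooling with padding k // 2 on an H x W list of lists.

    Padding never wins the maximum, so the window is simply clipped at the border.
    """
    H, W, p = len(x), len(x[0]), k // 2
    return [[max(x[y][c]
                 for y in range(max(0, i - p), min(H, i + p + 1))
                 for c in range(max(0, j - p), min(W, j + p + 1)))
             for j in range(W)]
            for i in range(H)]


def spp_branch(x, kernels=(5, 9, 13)):
    """Parallel pooling: the input plus one pooled map per kernel, ready to concatenate."""
    return [x] + [max_pool2d(x, k) for k in kernels]


F = [[i * 16 + j for j in range(16)] for i in range(16)]  # 16 x 16 ramp
R = spp_branch(F)
print(len(R), [(len(m), len(m[0])) for m in R])  # 4 maps, each still 16 x 16
```

Each kernel scans its own full window independently, which is the cost that the SPPF-style redesign below targets.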

The SPPCSPC structure, however, increases the computational complexity of the network [29] and suffers from severe information loss when detecting small objects. In this work, we draw on the fast spatial pyramid pooling of SPPF and the feature split–merge–fusion concept of CSP to design the SPPFCSPC module, an optimization of the SPPCSPC structure that incorporates the design principles of the SPPF module. The structure of the SPPFCSPC module is shown in Figure 4b.

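The pooling part of the SPPFCSPC module is calculated as shown in Equations (4)–(7). Instead of three parallel pooling layers with kernels of different sizes, three max-pooling layers with the same small kernel are chained, each taking the previous layer’s output as input. Because two cascaded 5 × 5 poolings cover the same window as one 9 × 9 pooling, and three cover the same window as one 13 × 13 pooling, the module reproduces the larger-kernel results with less computation while keeping the receptive field constant [30].

$$R_1 = \mathrm{MaxPool}_{k=5,\,p=2}(F) \tag{4}$$

$$R_2 = \mathrm{MaxPool}_{k=5,\,p=2}(R_1) \tag{5}$$

$$R_3 = \mathrm{MaxPool}_{k=5,\,p=2}(R_2) \tag{6}$$

$$R_4 = F \oplus R_1 \oplus R_2 \oplus R_3 \tag{7}$$

where $R_1$ denotes the pooling result corresponding to the smallest kernel (5 × 5); $R_2$ denotes the result corresponding to the medium kernel (9 × 9); $R_3$ denotes the result corresponding to the largest kernel (13 × 13); $R_4$ represents the final output; and $\oplus$ denotes tensor concatenation.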
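The equivalence behind the serial design can be checked numerically. This sketch, again single-channel and dependency-free, assumes a 5 × 5 kernel with stride 1 and padding 2 for every SPPF-style layer and compares the chained outputs with direct 9 × 9 and 13 × 13 pooling on random data. The comparison count is a naive brute-force estimate used only to indicate the relative saving; optimized pooling implementations do not scan windows this way.

```python
import random


def max_pool2d(x, k):
    """Stride-1 max pooling with padding k // 2 (window clipped at the border)."""
    H, W, p = len(x), len(x[0]), k // 2
    return [[max(x[y][c]
                 for y in range(max(0, i - p), min(H, i + p + 1))
                 for c in range(max(0, j - p), min(W, j + p + 1)))
             for j in range(W)]
            for i in range(H)]


def sppf_branch(x, k=5):
    """Serial pooling: each layer pools the previous layer's output."""
    r1 = max_pool2d(x, k)
    r2 = max_pool2d(r1, k)
    r3 = max_pool2d(r2, k)
    return [x, r1, r2, r3]  # concatenated along channels in the real module


def naive_comparisons(kernels):
    """Comparisons per output pixel for brute-force k x k windows (k*k - 1 each)."""
    return sum(k * k - 1 for k in kernels)


rng = random.Random(0)
F = [[rng.random() for _ in range(20)] for _ in range(20)]
_, R1, R2, R3 = sppf_branch(F)
print(R1 == max_pool2d(F, 5), R2 == max_pool2d(F, 9), R3 == max_pool2d(F, 13))
print(naive_comparisons((5, 9, 13)), naive_comparisons((5, 5, 5)))  # 272 72
```

The equality holds at the borders as well: a maximum over a clipped window of clipped windows is the maximum over the larger clipped window.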

The SPPFCSPC module, which replaces the traditional multi-scale pyramid pooling, combines the advantages of fast spatial pyramid pooling (SPPF) and the cross stage partial (CSP) structure. It expands the receptive field through multi-scale pooling to capture information at different scales in the image, while the CSP structure splits and fuses feature maps efficiently. This reduces redundant computation and improves gradient flow.

In traditional multi-scale pyramid pooling, although multi-scale features can be obtained, there may be limitations in information integration and detail preservation, especially in small-object-detection scenarios, where key information is often lost. The SPPFCSPC module, however, effectively aggregates global features while better preserving local and fine-grained information, enabling the model to more sensitively capture small object features.

As a result, when the detection head of YOLOv7 receives feature maps processed by the SPPFCSPC module, the information passed through is richer and more accurate. This not only improves the recall rate for small-object detection but also effectively reduces false-positive rates, thereby enhancing overall detection performance and robustness.

4. Conclusions

This study focuses on person detection in dense environments and proposes detecting human heads to address problems such as occlusion. YOLOv7 was selected as the base network for head detection, and several improvements were made to it based on the characteristics of the dataset used. First, the CBAM attention module was added. Second, a Gaussian receptive field-based label-assignment strategy was applied at the connection between the feature-fusion module and the detection head. Finally, the SPPFCSPC module replaced the spatial pyramid pooling module (SPPCSPC). The comparative experiments led to the following conclusions.

On this dataset, the YOLOv7 model with the CBAM attention mechanism achieved a precision of 93.7%, a recall of 93.0%, and an mAP@0.5 of 0.906. Its precision exceeded that obtained with the SE, ECA, and GAM attention mechanisms, its recall exceeded that obtained with SE, CA, and ECA, and its mAP exceeded that obtained with SE, CA, ECA, and GAM.

The three improvements to YOLOv7 increased both detection accuracy and inference speed: precision rose from 92.4% to 94.4%, recall from 90.5% to 93.9%, and inference speed from 87.2 FPS to 94.2 FPS. Compared with single-stage detectors such as YOLOv8, the improved YOLOv7 model achieved better accuracy and inference speed. Its inference speed is also significantly higher than that of two-stage detectors such as Faster R-CNN and Mask R-CNN and of transformer-based models such as DINOv2 and RT-DETRv2.
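For reference, the reported figures can be converted into per-frame latency and an F1 score (the harmonic mean of precision and recall). These derived values are simple arithmetic on the numbers above, not additional measurements, and the helper names are hypothetical.

```python
def latency_ms(fps):
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


baseline = {"precision": 0.924, "recall": 0.905, "fps": 87.2}
improved = {"precision": 0.944, "recall": 0.939, "fps": 94.2}

for name, m in (("YOLOv7", baseline), ("improved YOLOv7", improved)):
    print(f"{name}: {latency_ms(m['fps']):.2f} ms/frame, "
          f"F1 = {f1(m['precision'], m['recall']):.3f}")
```

By this arithmetic, per-frame latency falls from about 11.47 ms to about 10.62 ms, and the F1 score rises from about 0.914 to about 0.941.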

Future research will focus on improving the efficiency and generalization ability of the model. On the one hand, further expanding the dataset to cover more diverse scenes and lighting conditions should enhance the model’s adaptability and robustness. On the other hand, more efficient network architectures and optimization algorithms will be explored to further improve detection performance. It may also be beneficial to develop adaptive confidence-threshold algorithms that adjust detection sensitivity in real time according to the scene’s risk level, balancing efficiency and accuracy. Finally, deploying and testing the model on different devices would help establish its practical value.