Abstract

The advancement of Artificial Intelligence, particularly the application of deep learning methodologies, has enabled modern smart transportation systems that are making the driving experience increasingly reliable and safe. Unfortunately, a literature review revealed that no survey paper provides a collective overview of all the machine learning applications involved in smart transportation systems. To fill this gap, this paper discusses the role and advancement of deep learning methodologies in all aspects of smart mobility, highlighting their mutual dependencies. To this end, three key pillar areas are considered: smart vehicles, smart planning, and vehicle network and security. In each area, the subtasks commonly addressed by machine learning are identified and state-of-the-art techniques are reviewed, concluding with a discussion of expected advancements in light of recent findings in machine learning.

1. Introduction

In the last two decades, the number and types of vehicles on the roads have increased significantly, exacerbating related issues such as road safety, traffic congestion, and environmental pollution. In 2022, there were 42,500 road fatalities in the United States [1] and 20,600 in the European Union [2]. Research indicates that 90% of car accidents are due to human error, whereas only 10% can be attributed to vehicle malfunction or other factors [3]. Consequently, the number of accidents could be significantly reduced by equipping vehicles with advanced assistive technologies based on emerging innovations.

In this context, advances in Artificial Intelligence, particularly the application of deep learning (DL) methods, have been game-changers: they have greatly improved detection and prediction capabilities, increasing the reliability and safety of driver assistance systems.

These capabilities have enabled the development of vehicles with varying levels of automation. Smart vehicles with Advanced Driver Assistance Systems (ADASs) offer features such as lane keeping, adaptive cruise control, and collision avoidance. As automation increases, we are moving towards the idea of Connected and Autonomous Vehicles (CAVs), which use data collected by sensors, cameras, and connectivity features as input for Artificial Intelligence to make decisions about how to operate at different levels [4].

1.1. Related Works

Some works have already attempted to summarise deep learning applications for smart transportation systems. In [5], the authors present a comprehensive survey on smart vehicles. They provide a generic overview covering hardware, software, and network aspects, focusing on technological trends; applications of Artificial Intelligence (AI) are presented only generically, while the work mainly focuses on networking infrastructure, standards, challenges, and security issues. A vehicle-centred survey is presented in [6], where the problem is mainly treated from the point of view of the levels of autonomy. A similar approach is used in [7], where works are primarily clustered according to the major embodiments (measurement, analysis, and execution) of autonomous driving systems. Publicly available datasets oriented towards autonomous driving are surveyed in [8], whereas smart mobility in traffic management is addressed in [9], where a generic traffic management architecture is presented and a set of recently proposed research prototypes are compared in depth. State-of-the-art technologies for infrastructure monitoring are presented in [10], where the authors discuss contact and non-contact measurement and evaluation techniques for pavement distress evaluation systems. A comprehensive overview of the architecture of intelligent vehicles, network functionalities, security issues, vulnerabilities, and possible countermeasures is provided in [11,12]. Further, from a network perspective, a detailed discussion on the need for dedicated sub-networks devoted to the specific needs of different agents is provided in [13]. In that work, the authors present a comprehensive investigation and analysis of network slicing management, both in general use cases and in its specific application to smart transportation.

In smart transportation systems, many tasks are closely related but often treated separately. Indeed, most of the proposed models can only handle a single task, leading to redundant computations, which may cause efficiency problems given the limited computational power available. In [14], a wide discussion on the application of Multi-Task Learning, i.e., a technique to train a single model that can work for multiple tasks, in the smart transportation field is presented. A comprehensive overview of available datasets for smart navigation is provided in [15]. Datasets are classified depending on the specific task (e.g., Perception Datasets, Mapping Datasets, and Planning Datasets), and their impact is analysed to select the most important ones. Finally, foundation models, i.e., models trained on extremely large datasets and computationally very demanding, are discussed from a transport system perspective in [16].
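As a rough illustration of the Multi-Task Learning idea mentioned above, the following NumPy sketch assumes hard parameter sharing: one shared encoder whose features are computed once and reused by two task-specific heads, with training driven by a fixed weighted sum of task losses. The layer sizes, the two example tasks (a binary presence classification and a scalar distance regression), the loss weights `alpha` and `beta`, and all function names are hypothetical choices for illustration, not details taken from [14].

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 16 input features, 8 shared hidden units.
W_shared = rng.normal(scale=0.1, size=(16, 8))
w_cls = rng.normal(scale=0.1, size=8)   # head 1: binary classification
w_reg = rng.normal(scale=0.1, size=8)   # head 2: scalar regression


def forward(x):
    """Run the shared encoder once and feed its features to both heads."""
    h = np.maximum(0.0, x @ W_shared)          # shared ReLU features
    p = 1.0 / (1.0 + np.exp(-(h @ w_cls)))     # task 1: probability
    y_hat = h @ w_reg                          # task 2: regression output
    return p, y_hat


def multitask_loss(x, y_cls, y_reg, alpha=1.0, beta=0.5):
    """Weighted sum of binary cross-entropy (task 1) and MSE (task 2)."""
    p, y_hat = forward(x)
    eps = 1e-12
    bce = -np.mean(y_cls * np.log(p + eps) + (1 - y_cls) * np.log(1 - p + eps))
    mse = np.mean((y_hat - y_reg) ** 2)
    return alpha * bce + beta * mse, bce, mse


x = rng.normal(size=(4, 16))
total, bce, mse = multitask_loss(
    x, np.array([1, 0, 1, 0]), np.array([5.0, 12.0, 3.5, 20.0])
)
print(round(total, 3), round(bce, 3), round(mse, 3))
```

Only the forward pass and the combined loss are shown; in practice, the shared and task-specific weights would be learned jointly by back-propagating `total`, and the loss weights are often tuned or learned rather than fixed.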

1.2. Motivation and Contribution

The discussion so far highlights how smart mobility is a complex ecosystem made up of multiple research topics that, even though each focuses on specific aspects, are closely related to each other. Unfortunately, the literature review revealed that no survey covers all the DL applications involved in smart transport systems. Existing surveys either cover a specific aspect (hardware, networking, applications, or datasets) or focus on a single application (autonomous driving, traffic management, or infrastructure monitoring). Additionally, they do not discuss how recent DL technologies have impacted the cross-application, lower-level tasks of smart mobility, an analysis that is fundamental for keeping abreast of open challenges and potential future directions.

To fill this gap, this paper provides an overview of the role and evolution of DL methods in smart mobility, considering three key pillar areas: smart vehicles, smart planning, and vehicle network and security. Mutual interactions and interdependencies are emphasised to make the reader aware of how each specific research work must be guided by the advancements and critical issues of the related fields. In each area, the subtasks commonly addressed by machine learning (ML) are discussed, and the state of the art is reviewed, with a concluding discussion on the expected advances according to the latest findings in ML.

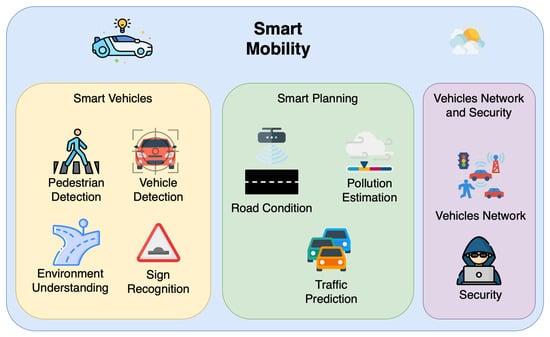

Figure 1 schematically shows the taxonomy proposed above, and the rest of the paper is structured according to it.

Figure 1.

Smart mobility represented as the contribution of three main perspectives: the vehicle, the environment, and security.

1.3. Methodology

The preliminary selection of papers followed a systematic, multi-stage approach to ensure comprehensive and relevant coverage of the literature. To capture a wide range of scholarly work, searches were conducted across three major academic databases: Elsevier Scopus, chosen for its broad interdisciplinary coverage; Web of Science, selected for its focus on high-impact journals and robust citation tracking; and Google Scholar, included to account for influential preprints and grey literature. The search queries were designed by combining, through Boolean operators, task-specific keywords derived from Figure 1 with the terms “machine learning” OR “deep learning”. For instance, the query for the pedestrian detection task took the following form: (“pedestrian detection” AND (“machine learning” OR “deep learning”)). This initial search yielded thousands of papers, necessitating a structured filtering process. First, duplicates were removed using reference management tools such as Zotero or EndNote. Next, non-English publications, technical reports, and studies lacking empirical validation were excluded to maintain academic rigour. The remaining papers were then screened based on their publication venue rankings, prioritising peer-reviewed articles from top-tier journals and conferences, including those indexed in CORE and SCI/SCIE or ranked within JCR Q1-Q2. Additionally, citation counts were normalised by publication year to account for differences in exposure time; for example, a paper published in 2020 with 100 citations was weighted more heavily than a 2022 paper with 50 citations. To focus on recent advancements, the final selection was restricted to papers published after 2015. Finally, backward and forward snowballing techniques were applied to seminal works, examining their references and citations to ensure that no key contributions were overlooked. This multi-criterion approach ensured a balanced yet selective representation of the most relevant and high-quality literature in the field.
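Some of the filtering stages described above (duplicate removal, language and year filters, and ranking by year-normalised citations) can be sketched as a small pipeline. This is a minimal illustration under loudly stated assumptions: the `Paper` record is hypothetical, duplicates are detected by a whitespace- and case-normalised title, the reference year is assumed to be 2025, and citations are divided by `years_since_publication + 1` (the exact normalisation used in the survey is not stated). Venue ranking, empirical-validation checks, and snowballing are not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:  # hypothetical record; fields chosen for illustration only
    title: str
    year: int
    language: str
    citations: int


REFERENCE_YEAR = 2025  # assumption: the year in which the screening is run


def citations_per_year(p: Paper) -> float:
    # Normalise by exposure time; the +1 avoids dividing by zero for papers
    # published in the reference year (an assumption, not the survey's formula).
    return p.citations / (REFERENCE_YEAR - p.year + 1)


def screen(papers):
    seen, kept = set(), []
    for p in papers:
        key = " ".join(p.title.lower().split())  # crude duplicate key
        if key in seen:
            continue
        seen.add(key)
        if p.language == "en" and p.year > 2015:
            kept.append(p)
    # Rank the surviving papers by normalised citation count, highest first.
    return sorted(kept, key=citations_per_year, reverse=True)


papers = [
    Paper("Pedestrian detection with CNNs", 2022, "en", 50),
    Paper("Pedestrian Detection  with CNNs", 2022, "en", 50),  # duplicate
    Paper("Traffic sign recognition", 2020, "en", 100),
    Paper("Lane keeping survey", 2014, "en", 900),             # too old
    Paper("Détection de piétons", 2021, "fr", 30),             # non-English
]
ranked = screen(papers)
print([(p.year, round(citations_per_year(p), 2)) for p in ranked])
# [(2020, 16.67), (2022, 12.5)]
```

Under these assumptions, the 2020 paper with 100 citations still ranks above the 2022 paper with 50 citations, consistent with the example given in the text.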

1.4. Structure

The paper is structured as follows: Section 2 is dedicated to smart vehicles and analyses both their hardware and software components, while Section 3 discusses how to monitor the environment, traffic, and road conditions. The role of networks in linking vehicles and infrastructure is the subject of Section 4, together with security issues. All these aspects are then brought together in the analysis of available datasets, which forms the core of Section 5. Section 6 reports the most relevant implementations of AI in cities, and Section 7 discusses open challenges and new research horizons. Finally, Section 8 concludes the paper.

2. Smart Vehicles

The integration of sensors and microprocessors into vehicles to enhance braking, stability, and overall comfort began in the late 1960s. Today, modern vehicles can feature up to a hundred microprocessors, along with numerous sensors and actuators, and are commonly referred to as smart vehicles.

The automation capabilities introduced by these technologies are ranked from Level 0 to Level 5 by the Society of Automotive Engineers (SAE) [7]. Level 0 refers to fully manual vehicles, whereas Levels 1 and 2 correspond to the automation provided by Advanced Driver Assistance Systems (ADASs), which exploit sensors to provide features such as adaptive cruise control, lane keeping, and collision avoidance. From Level 3 onwards, we can speak of Connected and Autonomous Vehicles (CAVs), which use sensor data and connectivity features to make higher-level decisions, such as how to steer, accelerate, and brake autonomously, up to Level 5, at which no human control is required.
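The grouping of SAE levels used in this paper can be captured in a small lookup structure. The sketch below is a hypothetical illustration: the enum member names are shorthand for the SAE J3016 level titles, and the three-way split (manual, ADAS, CAV) follows the text above rather than any official SAE category.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def category(level: SAELevel) -> str:
    """Map an SAE level to the grouping used in this paper."""
    if level == SAELevel.NO_AUTOMATION:
        return "manual"
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "ADAS"   # Levels 1 and 2
    return "CAV"        # Levels 3 to 5


print({lvl.value: category(lvl) for lvl in SAELevel})
# {0: 'manual', 1: 'ADAS', 2: 'ADAS', 3: 'CAV', 4: 'CAV', 5: 'CAV'}
```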

It is worth noting, however, that automation capabilities arise not just from the availability of various data sources (local or remote) and of computing resources to process the generated data but, primarily, from the set of algorithms employed to perform intelligent tasks effectively. Accordingly, the following subsections distinguish between the hardware and software levels to provide a schematic overview of the technologies used.

2.1. Hardware Layer

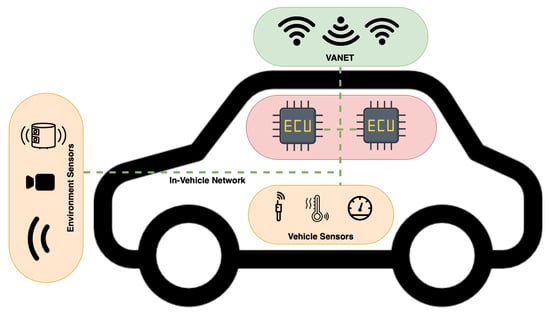

Smart vehicle hardware can be roughly divided into sensors, which are responsible for sensing the environment and in-vehicle state; Electronic Control Units (ECUs), which are responsible for processing the data; and vehicle networks, which are responsible for connecting the sensors, ECUs, and vehicle controllers but also for data exchange with other entities (i.e., other vehicles and remote data sources). A schematic reference of such a division is reported in Figure 2.

Figure 2.

A representation of the main hardware pillars in smart vehicles: environmental and in-vehicle sensors (orange boxes), such as LiDAR, camera, radar, tyre pressure, temperature, and speed sensors, retrieve data that are processed by ECUs (red box). The network (green box and dotted line) guarantees the correct in-vehicle and out-of-vehicle exchange of information.

In-vehicle sensors are primarily dedicated to monitoring and control and include oxygen sensors, accelerometers, gyroscopes, tyre pressure sensors, fuel level sensors, and all the sensors dedicated to engine monitoring [12]. Beyond the vehicle itself, passive and active sensors such as cameras, LiDAR sensors, and radars provide information about the outside world. Cameras are passive sensors that capture images of the environment and can be used for object detection, lane following, and traffic sign recognition. This category includes monocular cameras, stereo cameras, and infrared (IR) or thermal cameras. Monocular cameras provide rich information about the scene, showing shapes and appearance properties that can be used to determine road geometry, object class, and road signs, but they do not provide depth information. This limitation can be compensated for by stereo cameras, which, however, require careful calibration and additional processing. Both suffer from poor image quality in low-light conditions, which can be overcome by using IR and thermal cameras; these, however, are much more expensive. In contrast, LiDAR is an active sensor that emits laser beams to create a 3D point cloud, providing a reliable measure of the distance of objects. Unfortunately, LiDAR sensors are expensive, suffer under certain weather conditions such as fog or heavy rain, and are unsuitable in some situations, such as crowded environments, where visual information is required for a correct understanding of the scene. Finally, radars use radio waves to detect objects and measure their distance, speed, and angle of arrival and are mostly dedicated to interaction with other vehicles at high speed [17]. Radar measurements remain reliable even under adverse conditions such as low light, fog, rain, or night-time, but they suffer from signal clutter and low resolution, which prevents the precise shape of objects from being retrieved [18].
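The depth and motion measurements mentioned above rest on simple physical relations. The following sketch collects three standard ones under textbook assumptions: depth from a rectified stereo pair (Z = f·B/d, with focal length f in pixels, baseline B in metres, and disparity d in pixels), LiDAR time-of-flight ranging, and radial speed from the Doppler shift of a monostatic radar. All numerical values, including the 77 GHz carrier, are hypothetical examples rather than specifications of any particular sensor.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def lidar_range(round_trip_s: float) -> float:
    """Time-of-flight ranging: the pulse travels to the target and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def radar_radial_speed(doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed from the Doppler shift of a monostatic radar: v = f_d * lambda / 2."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_hz * wavelength / 2.0


print(stereo_depth(700.0, 0.5, 20.0))               # 17.5 (m)
print(round(lidar_range(200e-9), 2))                # 29.98 (m)
print(round(radar_radial_speed(5133.0, 77e9), 2))   # 9.99 (m/s)
```

Because Z is inversely proportional to d, a one-pixel disparity error corresponds to a much larger depth error for distant objects than for nearby ones, which is one reason stereo depth estimates degrade with range.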

The data produced by all the above-mentioned sensors are then processed by ECUs, which make decisions to regulate the response of the vehicle’s systems. Depending on the type of processing required, ECUs range from low-power microprocessors, responsible for specific ad hoc processing, to high-performance Graphics Processing Units (GPUs), usually dedicated to running Artificial Intelligence features.

Finally, vehicle networks are responsible for in-vehicle and out-of-vehicle communication. More precisely, in-vehicle networks (IVNs) allow for communication between ECUs and sensors within the vehicle. There are several types of IVNs, each with its own characteristics; the most common include Controller Area Network (CAN), Local Interconnect Network (LIN), FlexRay, and Ethernet [19]. On the other hand, Vehicular Ad hoc Networks (VANETs) handle external communication, enabling vehicles to exchange information with other vehicles and with roadside infrastructure and supporting services such as remote vehicle monitoring.
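To make the in-vehicle network discussion more concrete, the sketch below models the payload-level view of a classical CAN data frame, which carries an 11-bit standard identifier and at most 8 data bytes, together with CAN's priority rule that the frame with the lowest identifier wins bus arbitration. The identifiers (0x1A0, 0x0A0), the speed signal encoding, and the helper names are hypothetical; real signal layouts are defined by each manufacturer, and extended 29-bit identifiers, CAN FD, and bit-level framing are not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClassicalCanFrame:
    """Payload-level view of a classical CAN data frame (standard 11-bit ID)."""
    can_id: int
    data: bytes

    def __post_init__(self):
        if not 0 <= self.can_id <= 0x7FF:
            raise ValueError("standard CAN identifiers are 11 bits (0x000-0x7FF)")
        if len(self.data) > 8:
            raise ValueError("classical CAN carries at most 8 data bytes")


def encode_speed(can_id: int, speed_kmh: float) -> ClassicalCanFrame:
    # Hypothetical signal layout: speed in 0.01 km/h units, unsigned 16-bit, big-endian.
    raw = round(speed_kmh * 100)
    return ClassicalCanFrame(can_id, raw.to_bytes(2, "big"))


def arbitration_winner(frames):
    # On a CAN bus, the frame with the numerically lowest identifier wins arbitration.
    return min(frames, key=lambda f: f.can_id)


speed = encode_speed(0x1A0, 87.5)
brake = ClassicalCanFrame(0x0A0, bytes([1]))
print(speed.data.hex(), hex(arbitration_winner([speed, brake]).can_id))
# 222e 0xa0
```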

2.2. Perception and Control Layer

The data coming from vehicle sensors need to be processed to obtain higher-level information useful to perceive and understand the road environment dynamically and implement necessary countermeasures for autonomous behaviours.

The road environment can be divided into two main categories: moving and fixed entities. The former are represented by pedestrians and other vehicles; their detection is crucial to avoiding collisions. Fixed entities refer to all road infrastructure, such as lanes, traffic lights, and traffic signs, which need to be properly detected so that a vehicle can navigate according to the road rules while avoiding violations or risky manoeuvres caused by the driver’s carelessness.

2.2.1. Pedestrians and Vehicles

Pedestrians and other vehicles are active agents, with complex and interactive movements, sharing both on- and off-the-road spaces (e.g., parking areas). It is, therefore, mandatory for smart vehicles to be equipped with AI features capable of providing alerts for the driver or implementing autonomous countermeasures (steering and braking) to avoid injuring people or causing accidents. This can be realised by the cooperation of multiple AI models, each addressing specific tasks, from low-level machine vision detection to high-level behaviour prediction [20].

Detecting vehicles and people, tracking their positions, and predicting their movements over time to avoid colliding with them are all tasks that fall within broader fields such as object detection and classification and multi-object tracking (MOT). Table 1 summarises some of the relevant works cited in the following.

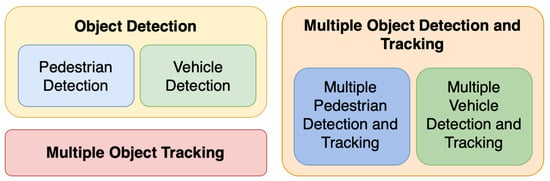

The road context poses specific challenges that require dedicated investigations. In particular, pedestrians and other vehicles differ in size and typical dynamics, which in some cases calls for further, more specific algorithm tuning. Additionally, depending on the specific object of interest and driving conditions, each of the previously discussed sensors shows specific advantages and disadvantages, motivating the use of sensor fusion strategies [21]. A further level of complexity in structuring the literature review is the strong interdependence of the involved tasks, which has led many works to treat detection and tracking as a single objective. Figure 3 gives a schematic representation of the main computational tasks involved in this type of research, each of which is analysed in the following.

Figure 3.

A compact representation of the computational tasks involved in detection and tracking in intelligent vehicles.

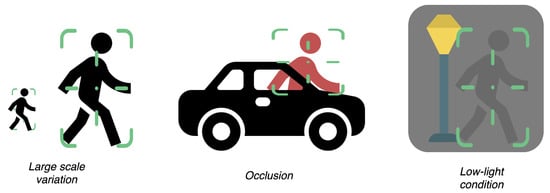

Pedestrian monitoring in the road environment goes beyond detection: it also involves tracking pedestrians' paths and predicting their dynamics and behaviours. This goal is pursued in three main steps: detection, tracking (possibly of multiple entities over time [22]), and, finally, understanding and predicting the behaviour of entities [23]. However, standard object detection and tracking algorithms may fail in this setting and, on their own, do not provide enough information for behaviour understanding. Figure 4 reports some of the challenges that mainly limit reliable detection (e.g., large-scale variations, frequent partial or total occlusions, and low-light conditions).

Figure 4.

An intuitive representation of the main challenges involving pedestrian detection. From left to right: large-scale variation, occlusion, and low-light conditions.

In recent years, many surveys have been devoted to pedestrian detection, tracking, and behaviour prediction. The whole pipeline is covered by the two-part review in [20], where Part I investigates detection and tracking, while Part II is dedicated to methods for understanding human behaviour. In [24], a review of the research progress in pedestrian detection is presented, focusing on the occlusion problem, while [25] provides a broad discussion of the types of features used in the detection process.

Recent detection methods are largely based on DL approaches and can be divided into two groups: two-stage detectors, based on region proposals and sparse prediction, and single-stage detectors. Two-stage detectors generate candidate regions of interest (ROIs), which are subsequently classified as pedestrian or non-pedestrian; they include approaches such as the region-based fully convolutional network (R-FCN) [26] and the region-based CNN (R-CNN) [27]. A notable solution is provided in [28], where the authors propose a two-stage detection architecture that eliminates the redundancy of current two-stage detectors by replacing the region proposal network and bounding box head with a novel focal detection network and a fast suppression head. On the other hand, single-stage approaches, such as YOLO [29] and the Single-Shot Detector (SSD) [30], perform detection in a single pass, enabling fast, accurate, and scalable detection that classical methods struggle to achieve due to their complex, multi-stage pipelines and limited feature representations. Additionally, SSD improves multi-scale detection efficiency. In this category, the authors of [31] proposed Localised Semantic Feature Mixers (LSFM), a novel anchor-free pedestrian detection architecture capable of outperforming the state of the art on several datasets.
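Both detector families typically produce several overlapping candidate boxes per object, which must be pruned before the output is used. The following sketch shows standard greedy non-maximum suppression (NMS) based on intersection over union (IoU), a post-processing step used, for example, by YOLO and SSD. It is a generic illustration with hypothetical box coordinates and a 0.5 threshold, not the fast suppression head proposed in [28].

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Two near-duplicate detections of one pedestrian plus a separate one.
boxes = [(10, 10, 50, 90), (12, 12, 52, 92), (100, 20, 130, 80)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # [0, 2]
```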

Part of the literature has focused on the problem of occlusion. In [32], the authors use R-CNN to deal with occluded pedestrians in crowds. A notable contribution is provided in [33], where the authors propose DeepParts, a powerful detector that can detect pedestrians by observing only part of a proposal.

Dealing with different lighting conditions is another key problem, which is usually addressed with multi-spectral images. Most works tackle this issue using a multi-spectral pedestrian dataset published in 2015 [34]. The authors of [35] process far-infrared and RGB images simultaneously using a CNN. In [36], an SSD is used for multi-spectral pedestrian tracking.

In [37], the authors leveraged GANs and proposed a new architecture using a cascaded SSD to improve pedestrian detection at a distance. To avoid the problems caused by illumination changes, [38] employs a LiDAR sensor for pedestrian identification, while the combination of LiDAR and camera sensors is proposed in [39]. In the latter work, 3D LiDAR data are used to generate object region proposals that are mapped onto the image space, from which regions of interest (ROIs) are fed into a CNN for final object recognition. A strategy based on camera and radar data fusion is presented in [40] to address scenarios where pedestrians are crossing behind parked vehicles.
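The step in [39] where LiDAR-based proposals are mapped onto the image space can be illustrated with the pinhole camera model. The sketch below assumes a known LiDAR-to-camera rotation `R` and translation `t`, an intrinsic matrix `K`, a LiDAR frame with x forward, y left, and z up, and a camera frame with x right, y down, and z forward. The calibration values and the pedestrian-sized box are hypothetical, and the code shows only this geometric step, not the full pipeline of [39].

```python
from itertools import product

import numpy as np


def project_points(points_lidar, R, t, K):
    """Project N x 3 LiDAR-frame points to pixel coordinates (pinhole model)."""
    cam = points_lidar @ R.T + t            # LiDAR frame -> camera frame
    cam = cam[cam[:, 2] > 0]                # keep only points in front of the camera
    uvw = cam @ K.T                         # apply the intrinsic matrix
    return uvw[:, :2] / uvw[:, 2:3]         # perspective division


def proposal_to_roi(corners_lidar, R, t, K):
    """Axis-aligned 2D ROI (u_min, v_min, u_max, v_max) enclosing a projected 3D box."""
    uv = project_points(corners_lidar, R, t, K)
    if uv.size == 0:
        raise ValueError("proposal lies entirely behind the camera")
    u_min, v_min = uv.min(axis=0)
    u_max, v_max = uv.max(axis=0)
    return float(u_min), float(v_min), float(u_max), float(v_max)


# Hypothetical calibration: camera co-located with the LiDAR, axes remapped
# from (x forward, y left, z up) to (x right, y down, z forward).
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.zeros(3)
K = np.array([[700.0, 0.0, 640.0],
              [0.0, 700.0, 360.0],
              [0.0, 0.0, 1.0]])

# Pedestrian-sized 3D proposal about 10 m ahead (8 corners in the LiDAR frame).
corners = np.array(list(product([9.8, 10.2], [-0.3, 0.3], [-1.5, 0.3])))
roi = proposal_to_roi(corners, R, t, K)
print(tuple(round(v, 1) for v in roi))  # (618.6, 338.6, 661.4, 467.1)
```

The resulting rectangle is the kind of image region that would then be cropped and passed to a CNN classifier.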

Detected elements can be further processed by a recognition step that outputs attributes such as body pose and facial features. These features can help to determine whether the pedestrian’s head is turned towards the vehicle or away from it, while a particular emotion may be less central to jaywalking behaviour [41]. Body language also plays a key role: deliberate gestures, body posture, stance, and walking style can help predict pedestrian behaviour [42]. A more general understanding of pedestrian behaviour can be obtained through activity recognition [43], for example, by combining different types of information, such as poses and trajectories [44]. In [45], the authors propose a benchmark for pedestrian behaviour understanding based on two public datasets [46,47] and rank several models according to their performance on specific properties of the data. Recently proposed works leverage multi-modal methods that jointly exploit inputs from multiple sources (e.g., onboard cameras and vehicle ego-motion) [45,46,48,49].

Table 1.

Summary of some relevant works in the literature. From the leftmost column, the table reports the reference number and year of publication (in parentheses), the target investigated (P: pedestrians; V: vehicles), the application goal (D: detection; T: tracking; A: action/activity), and the key aspect that makes the work noteworthy.


| Work (Year) | Target | Goal | Key Aspect |
|---|---|---|---|
| [33] (2015) | P | D | Detection by parts |
| [50] (2017) | V | D | Convolutional 3D detection |
| [51] (2017) | P | T | Recurrent neural networks |
| [52] (2018) | V | D | Multi-modality (LiDAR + RGB) |
| [39] (2020) | P | D | LiDAR–RGB fusion |
| [26] (2021) | P | D | Region proposal |
| [49] (2021) | P | A | Multi-modality (LiDAR + RGB) |
| [53] (2021) | V | DT | YOLO and DeepSORT |
| [54] (2022) | V | D | Convolutional block attention |
| [31] (2023) | P | D | Anchor-free detection |
| [55] (2024) | PD | T | Unifying Foundation Trackers |

Distinguishing between different types of road users is essential because they differ in both appearance and dynamic characteristics. Pedestrians typically move at low speeds, often with irregular or unpredictable trajectories, and have small, upright silhouettes. In contrast, vehicles tend to be larger, move faster, and follow more constrained paths governed by road infrastructure. Moreover, substantial variability exists even within the broader category of vehicles. For instance, bicycles and motorcycles are smaller, more agile, and capable of navigating through tighter spaces, while trucks and vans are bulkier, with limited manoeuvrability and larger turning radii. Cars, the most common vehicles, fall somewhere in between, and their behaviour differs based on context, driving style, and vehicle capabilities. These intra-class variations in physical dimensions, acceleration profiles, and interaction patterns with other road users introduce an additional layer of complexity for detection and subsequent tasks. Effectively modelling this heterogeneity is therefore crucial for accurate detection, classification, and behaviour forecasting.

The solution proposed in [56] consists of projecting radar signals onto the camera plane to obtain radar range-velocity images. The authors of [53] provide a vehicle detector based on YOLO and simultaneously address the tracking problem with DeepSORT. In [54], a convolutional block attention module (CBAM) is introduced into the YOLOv5 backbone network to highlight information that is critical for vehicle detection and suppress irrelevant information. YOLO is also the core of [57], which highlights that modern DL-based techniques are more optimised and accurate than traditional ones. In [50], a fully convolutional network-based 3D detection technique is applied to point cloud data, improving performance over previous point cloud-based detection approaches on the KITTI dataset. Data fusion of LiDAR and camera sources is exploited on the YOLO backbone for improved multi-modal detection [52]. A scale-insensitive Convolutional Neural Network (SINet) is developed in [58] for the fast detection of vehicles with large-scale variance.
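The convolutional block attention module mentioned for [54] applies channel attention followed by spatial attention to a feature map. The following NumPy sketch follows the CBAM formulation (a shared MLP over average- and max-pooled channel descriptors, then a 7×7 convolution over channel-wise average and max maps, each followed by a sigmoid), but it uses random weights in place of learned ones, a single (C, H, W) feature map without a batch dimension, and a hypothetical reduction ratio of 4. How [54] places the module inside the YOLOv5 backbone is not reproduced.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(F, W1, W2):
    """Channel weights of shape (C, 1, 1) from a shared MLP over pooled descriptors."""
    def mlp(v):
        return W2 @ np.maximum(0.0, W1 @ v)   # C -> C/r -> C with ReLU
    avg = F.mean(axis=(1, 2))                 # average-pooled descriptor, (C,)
    mx = F.max(axis=(1, 2))                   # max-pooled descriptor, (C,)
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(F, kernel):
    """Spatial weights of shape (1, H, W) from a k x k conv over [mean; max] maps."""
    maps = np.stack([F.mean(axis=0), F.max(axis=0)])          # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(maps, ((0, 0), (pad, pad), (pad, pad)))   # zero padding keeps H x W
    H, W = maps.shape[1:]
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]


def cbam(F, W1, W2, kernel):
    F = F * channel_attention(F, W1, W2)      # first reweight channels
    return F * spatial_attention(F, kernel)   # then reweight spatial locations


rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4                      # r: hypothetical reduction ratio
F = rng.normal(size=(C, H, W))
W1 = rng.normal(scale=0.1, size=(C // r, C))
W2 = rng.normal(scale=0.1, size=(C, C // r))
kernel = rng.normal(scale=0.1, size=(2, 7, 7))
out = cbam(F, W1, W2, kernel)
print(out.shape)  # (16, 8, 8)
```

Because both attention maps lie in (0, 1), the module can only rescale features, emphasising informative channels and locations relative to the others.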

In smart transportation systems, object detection and tracking are inherently interconnected tasks that, working in tandem, provide complete situational awareness, allowing the movement of vehicles, pedestrians, and other road users to be monitored and predicted in real time. Detection identifies and localises objects of interest, while tracking associates these detections over time to estimate the objects’ trajectories. Reliable tracking depends heavily on the accuracy and consistency of the initial detection step, as missed or false detections can lead to tracking errors or failures. More specifically, once the element of interest has been detected, its localised window (visual or other sensor data) is passed to the tracking stage, which generally consists of two main steps: (1) a prediction phase, which estimates the likely future state of the object, and (2) an update phase, which uses new detections to refine the estimate. Furthermore, tracking often involves more than one object. In this case, one speaks of multi-object tracking (MOT), whose additional task is to distinguish objects and correctly associate their identities with the corresponding tracks. This task is particularly challenging when tracks overlap or when pedestrians or vehicles are occluded by obstacles, a situation that often occurs in crowded scenarios.
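In the classical setting, the prediction and update phases described above are the two steps of a Kalman filter; DeepSORT, used in [53], relies on a constant-velocity Kalman filter of this kind. The NumPy sketch below tracks a single object centre assuming a constant-velocity motion model, position-only measurements, and hand-picked, simplified noise covariances (`q`, `r`). It is a generic illustration, and the data association needed for MOT is not shown.

```python
import numpy as np


class ConstantVelocityKF:
    """Kalman filter for a 2D point with state [x, y, vx, vy]."""

    def __init__(self, xy, dt=0.1, q=0.01, r=0.05):
        self.x = np.array([xy[0], xy[1], 0.0, 0.0])   # start at rest
        self.P = np.eye(4) * 10.0                      # large initial uncertainty
        self.F = np.eye(4)
        self.F[0, 2] = self.F[1, 3] = dt               # position += velocity * dt
        self.H = np.eye(2, 4)                          # only position is observed
        self.Q = np.eye(4) * q                         # simplified process noise
        self.R = np.eye(2) * r                         # measurement noise

    def predict(self):
        """Prediction phase: propagate state and uncertainty one step ahead."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        """Update phase: correct the prediction with a new detection z = (x, y)."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)      # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]


kf = ConstantVelocityKF((0.0, 0.0))
for k in range(1, 31):
    kf.predict()
    kf.update((0.1 * k, 0.05 * k))   # object moving at (1.0, 0.5) m/s, dt = 0.1 s
print(np.round(kf.x, 2))
```

With these noiseless synthetic detections, the velocity components of the state, which are never measured directly, converge towards (1.0, 0.5) after a few dozen updates.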

The works in [59,60] provide comprehensive reviews of the recent literature, whereas an interesting perspective on multiple-pedestrian tracking is offered in [22]. Multiclass multi-object tracking is the focus of [61], while in [51], the authors present an end-to-end human tracking approach based on recurrent neural networks. Night-time pedestrian tracking on thermal images is addressed in [62] using an encoder–decoder CNN. In [63], the authors propose a novel online process for assessing track validity that temporally distinguishes between legitimate and ghost tracks, along with a multi-stage observational gating mechanism for incoming observations. Scene understanding can also provide contextual information that improves tracking, especially in crowded scenes. In [64], a model called ‘interaction feature strings’, which decomposes an image and extracts features from the observed scene, has been developed. A separate discussion can be reserved for works dealing with both detection and tracking and/or involving specific data fusion to balance the pros and cons of different sensors [21,65]. Another recent solution is the Stepwise Goal-Driven Network proposed in [66], which estimates and uses goals at multiple temporal scales. More precisely, it uses an encoder that captures historical information, a stepwise goal estimator that predicts successive future goals, and a decoder devoted to predicting the future trajectory. A query-centric paradigm for scene encoding is the key point of [67]; it enables the reuse of past computations by learning representations independent of the global spacetime coordinate system. Additionally, the authors propose an anchor-free query strategy to generate trajectory proposals recurrently, allowing for the use of different scene contexts when decoding waypoints at different horizons.

Lastly, it is worth noting that the need for ever greater generalisation capabilities is shifting research towards foundation models, i.e., large pre-trained models that can be adapted to a wide range of tasks with minimal additional training. In [68], the authors integrate the Segment Anything Model (SAM) [69] into a multiple-pedestrian tracking method. The authors of [55] present a general framework for unifying different tracking tasks based on a foundation tracker [70].

2.2.2. Road Infrastructure

Automated driving and vehicle safety systems also rely on the detection of road infrastructure elements. Such detection must be accurate, robust to weather and environmental conditions, and able to run in real time.

Images of traffic signs taken in real-world situations exhibit various distortions caused by varying light direction, fluctuating light intensity, and different weather conditions. Images captured under such conditions introduce noise, partial or complete underexposure or overexposure, and significant variations in the colour saturation of the signs (caused by light intensity). Furthermore, traffic sign recognition is difficult for a computer vision system because of the wide range of viewing angles and viewing distances and the shape/colour deformations of traffic signs. Traditional approaches describe the shapes of traffic signs by extracting hand-crafted local features from the input images and then using a classifier to predict the class label. Convolutional Neural Network (CNN)-based solutions have recently gained popularity in the computer vision community because they learn discriminative features automatically from data [71]. Numerous CNN-based strategies, including energy-efficient solutions [72], have been presented for this task [73]. A pivotal issue, which is the core of [74], is generalisation under extreme weather conditions. In particular, the authors propose an efficient feature extraction module based on a Contextual Transformer and a CNN that exploits both the static and dynamic features of images, providing stronger feature enhancement and faster inference. YOLO (You Only Look Once) is a real-time object detection algorithm based on Deep Neural Networks. Owing to its speed and precision, it is considered the state of the art in road sign detection and recognition [75], and it has been further enhanced by targeted fine-tuning [76], slightly modified architectures [77,78], and pooling strategies [79]. Despite this progress, all existing technologies still struggle under challenging conditions such as adverse weather and complex roadway environments. They also fail to detect small targets because of the down-sampling operations they use to obtain high-level feature maps. To overcome these obstacles, combined models, e.g., YOLO and Mamba, have recently been introduced to enhance the accuracy and robustness of traffic sign detection [80], whereas [81] uses a Space-to-Depth module that compresses spatial information into depth channels, expanding the receptive field and improving the detection of objects of varying sizes.
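
The Space-to-Depth rearrangement mentioned above can be illustrated with a short NumPy sketch. It assumes a single feature map stored as a (channels, height, width) array and a block size of 2; the function name `space_to_depth` and the channel ordering are illustrative assumptions, and the module used in [81] may order or post-process channels differently.

```python
import numpy as np

def space_to_depth(x: np.ndarray, block: int = 2) -> np.ndarray:
    """Move each non-overlapping block x block spatial patch into the channel axis.

    x has shape (C, H, W); H and W must be divisible by `block`.
    Returns shape (C * block**2, H // block, W // block); no values are discarded.
    Output channel index = (dh * block + dw) * C + c, where (dh, dw) is the
    pixel offset inside the block (an ordering chosen for this sketch).
    """
    c, h, w = x.shape
    if h % block or w % block:
        raise ValueError("H and W must be divisible by the block size")
    # Split each spatial axis into (coarse position, offset within the block).
    x = x.reshape(c, h // block, block, w // block, block)
    # Reorder to (dh, dw, C, coarse_h, coarse_w) so offsets join the channel axis.
    x = x.transpose(2, 4, 0, 1, 3)
    return x.reshape(c * block * block, h // block, w // block)
```

For a 1 x 4 x 4 input, the result has shape 4 x 2 x 2, and channel 0 holds the pixels at even rows and even columns. Unlike strided convolution or pooling, the operation halves the spatial resolution without dropping any pixel, which is why it helps preserve the fine detail of small targets.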

The semantic road detection task aims to distinguish road objects and open spaces of various sizes and shapes from the scene’s background. Semantic segmentation and stereo-matching are two essential components of 3D environmental perception systems for autonomous driving. Semantic segmentation provides a monocular, pixel-level understanding of the environment, while stereo-matching simulates human binocular vision to acquire accurate and dense depth information. Data-driven, geometry-based, and, more recently, DL strategies usually carry out these two tasks separately. An interesting methodology is proposed in [82], where the authors decompose a road scene into different regions and represent each region as a node of a graph whose edges encode pairwise relationships based on the similarity of the nodes’ feature distributions. One of the most recent and relevant works is [83], in which transformer attention [84] and CNN modules are exploited to merge features across different scales and receptive fields; this design effectively fuses heterogeneous features and improves accuracy compared with previous semantic segmentation approaches. A joint learning framework that performs semantic segmentation and stereo-matching simultaneously is presented in [85]; this end-to-end framework outperforms models trained separately for each task. A solution for fish-eye image segmentation is proposed in [86], where a framework featuring three semi-supervised components, namely, pseudo-label filtering, dynamic confidence thresholding, and robust strong augmentation, is introduced. The problem of inaccurate depth estimation in regions where the depth changes abruptly (depth jump) in camera-only and multi-modal 3D object detection models is addressed in [87]. The authors propose an edge-aware depth fusion module to alleviate the “depth jump” problem and a fine-grained depth module to enforce refined supervision on depth. Most recently, foundation models have proven transformative for understanding the surrounding scene: [88] defines a multi-modal and multi-task foundation model for road scene understanding as a framework that takes multi-modal data as input and produces multi-task results.

Lane markers are pivotal components of autonomous driving and driver assistance systems, and they must be detected among many other objects. Lane detection paradigms can be grouped into segmentation-based (bottom-up, pixel-based estimation) [89], keypoint-based, parametric (each lane instance is represented by a set of curve parameters), row-based, and anchor-based (each lane instance is represented by a set of x-coordinates at fixed rows) [90] methods. More effective methods leverage multiple features and mathematical models [91]. Although early neural network models based on anchor-based object detection and instance segmentation have shown progress, they still struggle to detect lanes in complicated configurations and under low visibility. In [92], the authors develop a multi-stage framework that exploits PETRv2 to detect the centreline and the popular YOLOv8 to detect traffic elements. Alternatively, [93] combines precise global semantics and local characteristics with sophisticated loss functions to achieve accurate lane prediction in complicated settings.
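
To make the difference between the parametric and anchor-based representations concrete, the following Python sketch converts a parametric lane, assumed here to be a polynomial x = f(y) in image coordinates, into an anchor-based representation by sampling x at fixed rows. The function name, the polynomial lane model, and the use of `None` for rows where the lane is absent are illustrative assumptions, not the encoding of any specific method cited above.

```python
from typing import List, Optional, Sequence

def curve_to_row_anchors(coeffs: Sequence[float], rows: Sequence[float],
                         width: float, y_min: float, y_max: float) -> List[Optional[float]]:
    """Sample a parametric lane x = f(y) at fixed image rows (row anchors).

    coeffs: polynomial coefficients of f, highest degree first (assumed lane model).
    rows: fixed y-coordinates at which the lane's x-coordinate is sampled.
    width: image width; samples falling outside [0, width) are marked missing.
    y_min, y_max: vertical extent of the lane; rows outside it are marked missing.
    """
    xs: List[Optional[float]] = []
    for y in rows:
        if not (y_min <= y <= y_max):
            xs.append(None)  # the lane does not reach this row
            continue
        x = 0.0
        for c in coeffs:  # evaluate the polynomial with Horner's rule
            x = x * y + c
        xs.append(x if 0 <= x < width else None)
    return xs
```

For example, the straight lane x = 0.5y + 100, visible between rows 100 and 300 of a 400-pixel-wide image, becomes `[None, 150.0, 200.0, 250.0]` at the anchor rows 0, 100, 200, and 300. The parametric form stores two numbers per lane, whereas the anchor form stores one value per row, which is what anchor-based detectors regress directly.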

Real-time and accurate traffic light status recognition can provide reliable data support for the decision-making and control systems of autonomous vehicles. Current status recognition methods for traffic lights include machine learning- and deep learning-based methods. The more effective approaches are enhanced versions of state-of-the-art end-to-end object detectors that directly generate object bounding boxes and category labels. For example, the traffic light status recognition algorithm proposed in [94] is based on the DINO [95] object detector.

Many road accidents and fatalities are caused by speeding vehicles and violations of traffic laws. As a safety precaution, governments install speed breakers to mitigate this problem. However, many speed breakers are not properly signposted, and drivers frequently fail to notice them, which results in accidents. Numerous DL techniques, such as R-CNN, YOLO, and Mask R-CNN, can be used to detect speed breakers in real time. In particular, the use of YOLOv7 with anchor boxes is one of the most recent fundamental advancements in this research field [96].

For postprocessing, detectors usually require Non-Maximum Suppression (NMS), which slows down inference and introduces hyperparameters that make both speed and accuracy unstable. The goal is therefore to increase speed without sacrificing accuracy. The academic community has recently paid close attention to end-to-end transformer-based detectors (DETRs) [97] because of their simplified architecture and the removal of hand-crafted components such as NMS. Their potential for detecting road infrastructure has not yet been investigated.
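
To illustrate why NMS adds both latency and hyperparameters, the following is a minimal pure-Python sketch of classic greedy NMS over axis-aligned boxes. The `(x1, y1, x2, y2)` box format and the default IoU threshold of 0.5 are assumptions for illustration; production detectors use vectorised or GPU implementations and often apply NMS per class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best remaining box, suppress its strong overlaps, repeat.

    Returns the indices of the kept boxes in descending score order.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining candidates whose overlap with the kept box exceeds the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

With boxes (0, 0, 10, 10), (1, 1, 11, 11), and (20, 20, 30, 30) scored 0.9, 0.8, and 0.7, the first two overlap with an IoU of about 0.68, so `nms` keeps indices 0 and 2 at a threshold of 0.5 but keeps all three at 0.7. The sequential loop and the sensitivity to `iou_threshold` are the components that end-to-end detectors such as DETRs avoid.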

In addition to the objects that usually populate road infrastructure, many other elements appear less frequently and represent a risk factor. The authors of [98] proposed a dataset for addressing this problem, together with an approach that exploits the difference between the original image and a resynthesised image to highlight spurious objects. They started their analysis with the Lost and Found dataset [99] and then produced new annotated data to enrich the provided results. Recently, the solution proposed in [100] outperformed the most recent proposals by introducing a novel outlier scoring function called RbA, which defines being an unexpected object as being rejected by all known classes. Another solution of interest, based on mask classification, has been proposed in [101]. A richer but still unexplored dataset with anomalous object detection as its main task is proposed in [102]. A collection of the most relevant works in this context is reported in Table 2.
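
The “rejected by all known classes” idea can be illustrated with a simplified per-pixel score. In this Python sketch, each known class is assumed to act as an independent one-vs-all classifier that accepts the pixel with probability sigmoid(logit), and the outlier score is minus the sum of these acceptance probabilities. This is a hypothetical illustration of the principle, not the exact scoring function defined in [100].

```python
import math
from typing import Sequence

def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def rejected_by_all_score(class_logits: Sequence[float]) -> float:
    """Illustrative outlier score for one pixel, given one logit per known class.

    Each known class is treated as an independent one-vs-all decision that
    accepts the pixel with probability sigmoid(logit) (an assumption of this
    sketch). The score approaches 0 when every known class rejects the pixel
    and decreases as more classes accept it, so higher means more anomalous.
    """
    return -sum(_sigmoid(z) for z in class_logits)
```

A pixel whose logits are all strongly negative (every class rejects it) scores close to 0, whereas a pixel confidently accepted by one class scores close to -1. Unlike a softmax-based score, this formulation does not force the probabilities of the known classes to sum to one, so an object can be rejected by every class at once.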

Table 2.

A summary of some relevant works in the literature. From the leftmost column, the table presents the corresponding reference number and year of publication (in brackets), the target to be investigated (TSD: traffic sign detection; LD: lane detection; TLD: traffic light detection; UO: unknown object), and the key aspect that made the work noteworthy.

3. Smart Planning

Smart planning represents a paradigm shift in urban and transportation management, integrating advanced technologies to enhance efficiency, sustainability, and infrastructure resilience. The application of cloud computing, Artificial Intelligence (AI), and real-time data analytics has revolutionised how cities address challenges such as traffic congestion, pollution control, and road infrastructure maintenance. By leveraging data-driven insights, smart planning enables proactive decision making, minimises resource wastage, and improves overall urban mobility.

This section explores three key aspects of smart planning: traffic prediction, pollution estimation, and road condition assessment. These interconnected domains illustrate how AI and computational models contribute to the development of intelligent transportation systems, sustainable environmental policies, and effective road maintenance strategies.

3.1. Traffic Prediction

Accurate traffic prediction is a cornerstone of modern intelligent transportation systems (ITSs), aiming to alleviate congestion, enhance navigation, and improve urban planning. By leveraging AI and advanced data collection methodologies, traffic prediction provides actionable insights for both short-term and long-term transportation planning. A summary of some of the most relevant works on traffic prediction is given in Table 3.

Different authors have defined various components of traffic prediction. Yuan and Li [103] described traffic prediction as a combination of traffic status prediction, traffic flow prediction, and travel demand prediction. Similarly, the authors of [104] highlighted key tasks, such as predicting traffic flow, speed, demand (future request predictions), travel time, and occupancy (extent of road space utilisation), while the authors of [105] proposed a Gaussian process path planning (GP3) algorithm to calculate the a priori optimal path as the reliable shortest-path solution. The role of public vehicle systems, focusing on online ride sharing, is considered in [106], where an efficient path-planning strategy based on a greedy algorithm is proposed, leveraging a limited potential search area for each vehicle and excluding the requests that violate the passenger service quality level.

Recent research has introduced sophisticated AI-based approaches to improve traffic forecasting accuracy. The authors of [107] propose the Traffic State Anticipation Network (TSANet), a model based on graph and transformer architectures [84] that predicts congestion in laneless traffic scenarios. The study introduces three traffic states, namely clumping, unclumping, and neutral, enabling detailed congestion tracking and improving forecasting precision.

The Graph Spatiotemporal Transformer Network (GSTTN) presented in [108] addresses the challenges posed by complex nonlinearity and dynamic spatiotemporal dependencies in traffic data. This framework integrates a multi-view Graph Convolutional Network (GCN) to capture spatial patterns and a transformer network with multi-head attention to model time-series disturbances. Similarly, ref. [109] introduces the Spatiotemporal Dual-Adaptive Graph Convolutional Network (ST-DAGCN), which dynamically learns both global and local traffic state features by using a dual-adaptive adjacency matrix while capturing temporal dependencies through Gated Recurrent Units (GRUs).
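
The spatial component shared by several of these models can be illustrated with a single graph convolution layer. The NumPy sketch below uses the widely adopted formulation with self-loops and symmetric degree normalisation, ReLU(D^-1/2 (A + I) D^-1/2 H W), where nodes are assumed to be road sensors or segments. It is a generic GCN layer for illustration only; the multi-view GCN of [108] and the dual-adaptive adjacency matrix of [109] build on this basic form but differ from it.

```python
import numpy as np

def gcn_layer(adj: np.ndarray, h: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adj: (N, N) symmetric adjacency matrix of the road graph.
    h:   (N, F_in) node features, e.g. recent traffic readings per sensor.
    w:   (F_in, F_out) weight matrix (learned in practice, given here).
    """
    a_hat = adj + np.eye(adj.shape[0])        # add self-loops so each node keeps its own signal
    deg = a_hat.sum(axis=1)                   # degrees are >= 1 thanks to the self-loops
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    a_norm = d_inv_sqrt @ a_hat @ d_inv_sqrt  # symmetric normalisation
    # Aggregate neighbour features, project them, and apply a ReLU nonlinearity.
    return np.maximum(a_norm @ h @ w, 0.0)
```

For two connected sensors with readings 2 and 4 and an identity weight, each output becomes 3: every node averages its own reading with its neighbour's. Temporal models such as GRUs, LSTMs, or transformers are then applied to the sequence of these spatially aggregated features.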

The authors of [110] investigated Traffic Speed Forecasting (TSF) by using GPS probe data from transport vehicles in Vietnam, focusing on challenges such as abnormal or missing GPS values. Their approach optimises traffic speed predictions on parallel multilane roads in Hai Phong City by integrating advanced optimisation algorithms, specifically Particle Swarm Optimisation (PSO) and the Genetic Algorithm (GA), with Long Short-Term Memory (LSTM) networks. S. Wu [111] explored a dynamic traffic flow prediction model for urban road networks (URNs) by combining Spatiotemporal Graph Convolution Networks (STGCNs) with Bi-directional Long Short-Term Memory (BiLSTM) networks [112]. In [113], the authors introduced Propagation Delay-aware Dynamic Long-Range Transformer (PDFormer), which considers long-range dependencies in traffic forecasting, whereas a Graph Multi-Attention Network (GMAN) designed to predict traffic volume and speed for multiple future time steps at different locations was proposed in [114].

Table 3.

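This is a summary of some relevant works on traffic prediction. Work (Y): reference number and year of publication; TP: traffic prediction; ST: spatiotemporal; LR: literature review; TCP: traffic congestion prediction; TFP: traffic flow prediction; TSP: traffic speed prediction; TVP: traffic volume prediction; MAE: mean absolute error; RMSE: root mean square error; MAPE: mean absolute percentage error.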

| Work (Y) | Goal/Target | Method | Metrics | Data |
|---|---|---|---|---|
| [103] (2021) | Survey of ST data in TP | LR | N/A | Various public datasets |
| [104] (2021) | Survey of DL in TP | LR | N/A | METR-LA, PEMS, etc. |
| [105] (2021) | Shortest-path planning | GP3 | Path reliability and runtime | Transportation network data |
| [106] (2018) | Ride-sharing path planning | Online path planning | Travel time and efficiency | Simulated and GPS data |
| [107] (2024) | TCP | TSANet | Accuracy and F1-Score | Aerial video datasets |
| [108] (2024) | TFP using ST data | GSTTN | MAE, RMSE, and MAPE | METR-LA and PEMS-BAY |
| [109] (2024) | TP | ST-DAGCN | MAE, RMSE, and MAPE | PEMS-BAY and Los-loop |
| [110] (2024) | TSP | PSO+GA+LSTM | RMSE, MAE, and MDAE | Registered vehicle probe data |
| [111] (2021) | TFP | STGCN+BiLSTM | MAE, RMSE, and MAPE | Urban sensor data |
| [113] (2023) | Delay-aware long-range TFP | PDFormer | MAE, RMSE, and MAPE | Public traffic data |
| [114] (2020) | TVP and TSP | GMAN | MAE, RMSE, and MAPE | Xiamen and PEMS |
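
Most works in Table 3 report MAE, RMSE, and MAPE. For reference, the three metrics can be sketched in pure Python as follows. Skipping zero ground-truth values in MAPE (which would otherwise cause division by zero) is an assumption reflecting a common convention for traffic sensor data; individual works may mask or average differently.

```python
import math
from typing import Sequence

def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the units of the target (e.g. km/h)."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error; penalises large errors more heavily than MAE."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in %, ignoring zero ground-truth values."""
    _check(y_true, y_pred)
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when all ground-truth values are zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)
```

For observed speeds of 50, 60, and 40 km/h predicted as 45, 66, and 40 km/h, MAE is 11/3 (about 3.67 km/h), RMSE is the square root of 61/3 (about 4.51 km/h), and MAPE is about 6.67%. Because MAPE is scale-free, it allows comparison across roads with very different typical speeds, while MAE and RMSE remain in physical units.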

3.2. Pollution Estimation

Accurate air quality prediction plays a fundamental role in pollution management and public health protection. The ability to anticipate pollutant concentrations enables policymakers and environmental agencies to implement proactive measures, reducing human exposure to harmful air contaminants. The increasing complexity of pollution patterns, influenced by meteorological conditions, industrial emissions, vehicular activity, and other anthropogenic factors, has driven the adoption of advanced computational models. Traditional statistical techniques have been largely replaced by AI-driven approaches due to their ability to model complex, nonlinear relationships in air quality data [115]. Table 4 reports a summary of the key literature on Air Pollution Estimation.

Table 4.

This is a summary of the key literature on air pollution estimation. APP: air pollution prediction; AQ: air quality; AQP: air quality prediction.

Machine learning and deep learning methods have become essential to air pollution estimation, allowing for improved accuracy and robustness in predictive models. Some of the most widely applied techniques include Artificial Neural Networks (ANNs), Deep Neural Networks (DNNs) [116], Fuzzy Logic (FL), and Support Vector Machines (SVMs). These models facilitate the identification of intricate patterns in environmental data, enabling reliable short-term and long-term air quality forecasts.

Recent research has focused on developing models that integrate various data sources, such as meteorological observations, emission inventories, and remote sensing data, to enhance air quality predictions. The authors of [117] propose models to estimate in-vehicle pollution exposure by considering driving patterns and ventilation settings. Their study compares a mass-balance model with an ML model, with the latter demonstrating superior predictive performance, particularly for NO2 concentrations, and achieving greater accuracy when applied to unseen data.

A hybrid model that combines LSTM networks with the Multi-Verse Optimisation (MVO) algorithm to predict NO2 and SO2 emissions from combined cycle power plants is introduced in [118]. The LSTM model forecasts pollutant levels, while MVO optimises its hyperparameters, improving predictive accuracy. Similarly, [119] combines SVM and LSTM networks to predict the Air Quality Index (AQI). Before the predictive analysis, the study applies extensive preprocessing, such as handling missing values, removing redundancies, and extracting features using the Grey-Level Co-occurrence Matrix (GLCM) method. To further enhance predictive accuracy, a Modified Fruit Fly Optimisation Algorithm (MFOA) is used for feature selection and parameter tuning.

Graph-based models have gained prominence in pollution forecasting. The authors of [120] proposed an Attention Temporal Graph Convolutional Network (A3T-GCN) for NO2 prediction in Madrid. The model integrates GCN, GRU, and an attention mechanism to better capture spatial and temporal dependencies in pollution data. By leveraging graph structures, the approach improves air quality predictions over traditional DL models.

To improve interpretability and robustness in PM2.5 predictions, the solution proposed in [121] exploits a hybrid interpretable predictive ML model that focuses on peak value prediction accuracy while ensuring model explainability. Similarly, the authors of [122] developed a novel DL framework for PM2.5 estimation, leveraging a spatiotemporal Graph Neural Network (BGGRU) with self-optimisation. This approach integrates a spatial module (GraphSAGE) and a temporal module (BGraphGRU) while utilising Bayesian optimisation for hyperparameter selection, thereby improving forecasting accuracy and generalisation performance.

Cloud-based AI and Internet of Things (IoT) integration have also contributed significantly to advancements in air pollution forecasting. In [123], a cloud-implemented AI model that employs IoT devices to monitor real-time AQI levels is proposed. The approach, known as BO-HyTS, combines Seasonal Autoregressive Integrated Moving Average (SARIMA) with LSTM networks, using Bayesian optimisation to refine predictive accuracy. This methodology effectively captures both linear and nonlinear temporal dependencies in air quality data, enhancing forecast precision.

Transformer-based architectures [84] have gained traction in air pollution modelling due to their ability to capture long-range dependencies in time-series data. The authors of [124] introduce AirFormer, a transformer-based model for air quality prediction across China, which uses self-attention mechanisms to capture spatiotemporal dependencies and a stochastic component to model uncertainty. Another transformer-based approach was presented by Zhang and Zhang [125], who used Sparse Attention-based Transformer Networks (STNs) to predict PM2.5 levels in Beijing and Taizhou, achieving improved performance when dealing with missing or sparse environmental data.
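
Since several of the air quality models above rely on the transformer attention of [84], its core operation can be sketched in NumPy as single-head scaled dot-product attention over a sequence of time steps. The optional boolean mask (for example, a causal mask that prevents a time step from attending to future readings) is included for illustration; the sparse attention of [125] and the stochastic components of AirFormer [124] are not modelled here.

```python
import numpy as np

def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray,
                                 mask: np.ndarray = None):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    q: (T_q, d_k) queries, k: (T_k, d_k) keys, v: (T_k, d_v) values.
    mask: optional boolean (T_q, T_k) array; False entries are excluded.
          Every query must be allowed to attend to at least one key.
    Returns the (T_q, d_v) outputs and the (T_q, T_k) attention weights.
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # subtract row max for stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)        # each row sums to 1
    return weights @ v, weights
```

With a causal mask built as `np.tril(np.ones((T, T), dtype=bool))`, the output for the first time step depends only on its own reading, while later steps mix all past readings. Every pair of time steps is compared directly, which is what lets transformers capture long-range dependencies that recurrent models must propagate step by step.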

3.3. Road Conditions

The condition of road pavements is crucial to economic development and transportation efficiency. According to the World Bank, road density varies significantly by economic level, ranging from 40 km per million inhabitants in low-income economies to 8550 km in high-income economies [126]. As urbanisation accelerates and climate change impacts intensify, the need for frequent and precise pavement monitoring has grown. Ensuring that roads are well maintained reduces accident risks, improves driving comfort, and optimises maintenance budgets. A summary of some relevant studies on road conditions is reported in Table 5.

Table 5.

This is a summary of some relevant studies on road conditions. PCA: pavement condition assessment; P: prediction; D: detection; C: classification; S: segmentation; Q: quantification; MMTransformer: multi-modal transformer model; RSCD: road surface condition dataset; M: macro; MIoU: mean intersection over union; KolektorSDD: Kolektor Surface-Defect Dataset; P: average precision; FN: False Negatives; FP: False Positives; Acc: accuracy; Rec: recall; Prc: precision; F1s: F1-Score.

Traditional pavement inspection methods rely heavily on manual assessments, which are time-consuming, costly, and prone to subjectivity. The integration of AI, computer vision (CV), and ML has revolutionised road condition assessment, enhancing accuracy, efficiency, and scalability. These technologies enable both dynamic monitoring, where data are actively collected with vehicle-mounted cameras and drones, and static monitoring, where fixed sensors continuously track road surface conditions. The adoption of smart monitoring solutions supports transportation agencies in making data-driven maintenance decisions, ensuring road longevity and public safety.

Pavement assessment tasks include distress detection, classification, segmentation, and condition quantification. While traditional methods require extensive data collection and manual evaluation, DL-based approaches offer more robust and automated solutions. In [127], a hybrid system combining a YOLO-based crack classification model with a U-Net segmentation model to quantify crack density is proposed. The method introduces a pavement condition index derived from both models’ outputs, improving crack detection even in challenging environments. Similarly, the authors of [128] present EdgeFusionViT, a transformer-based architecture designed for real-time pavement classification. The system, deployed on an edge device (Nvidia Jetson Orin Nano), processes road images at 12 frames per second, efficiently categorising surface conditions such as “dry gravel” and “dry asphalt-smooth”.
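
As a simple illustration of how a segmentation output can feed a quantitative indicator, the Python sketch below computes crack density as the fraction of pixels labelled as crack in a binary mask such as one produced by a U-Net. Defining density as a pixel-area ratio is an assumption of this sketch; the definition and the condition index used in [127] may differ (for example, crack length per unit area).

```python
from typing import Sequence

def crack_density(mask: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels labelled as crack (non-zero) in a binary segmentation mask.

    mask: 2D grid (list of rows) with 1 for crack pixels and 0 for intact pavement.
    """
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty mask")
    cracked = sum(1 for row in mask for value in row if value)
    return cracked / total
```

A 2 x 3 mask with two crack pixels yields a density of 1/3. In practice, such a per-image value would be aggregated over road segments before being mapped to a condition index.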

Beyond classification, DL models have improved pavement segmentation and distress quantification. A multi-modal transformer model for detecting and classifying winter road surface conditions is introduced in [129]. The approach integrates multiple sensor inputs through a cross-attention mechanism, improving classification accuracy by capturing variations in road textures and weather-induced changes. The authors of [130] introduce ISTD-DisNet, a transformer-based model for multi-type pavement distress segmentation. By leveraging a Mix Transformer (MiT) and a Mixed Attention Module (MAM), the model extracts multi-scale pavement features, while a Learnable Transposed Convolution Up-sampling Module (TCUM) refines distress details.

Crack detection is a particularly critical aspect of pavement evaluation, requiring high accuracy to prevent infrastructure deterioration. The authors of [131] present a DeepLabv3+ CNN model combined with a crack quantification algorithm that analyses crack length, average width, maximum width, and affected pavement area. The authors of [132] proposed a two-stage defect detection approach, in which a CNN-based segmentation network predicts pixel-wise crack locations and a decision network then confirms the presence of defects in the entire pavement image. A DL model for crack detection across diverse pavement types and environmental conditions is introduced in [133]. The model employs context-aware analysis to adapt its predictions to variations in lighting, road texture, and surface materials. Additionally, an iterative feedback mechanism enables the model to learn from misclassifications, significantly improving accuracy over time.

Beyond crack detection, efforts have been made to quantify overall road conditions by using sensor-based measurements. The application of Convolutional Neural Networks (CNNs) to detect pavement distress from orthoframes collected via mobile mapping systems is considered in [134]. The study finds that fine-tuning pre-trained CNNs and applying extensive preprocessing significantly enhances detection accuracy. However, challenges remain in handling visual artefacts such as shadows from tree branches, which can cause misclassification.

A semi-supervised learning framework is proposed in [135] to estimate the International Roughness Index (IRI) from in-car vibrations. By using Power Spectral Density (PSD) analysis and a Linear Time-Invariant (LTI) system, the study formulates the connection between pavement roughness and vehicle vibrations. By leveraging a self-training approach, their model effectively estimates IRI values across road networks while addressing issues related to incomplete datasets and sensor inconsistencies.
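
The connection exploited in [135] rests on a standard property of linear time-invariant systems. If the vehicle is modelled as an LTI system with frequency response $H(f)$, driven by the road profile, the power spectral densities of the input and output satisfy

$$
S_{yy}(f) = \lvert H(f) \rvert^{2} \, S_{xx}(f),
$$

where $S_{xx}(f)$ is the PSD of the road elevation profile (the input) and $S_{yy}(f)$ is the PSD of the measured in-car vibration (the output). Wherever $H(f) \neq 0$, the profile PSD, and hence a roughness measure such as the IRI, can in principle be inferred from vibration data as $S_{xx}(f) = S_{yy}(f) / \lvert H(f) \rvert^{2}$. This is a general illustration under the stated modelling assumption; the specific vehicle model and frequency response used in [135] may differ.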

While DL has significantly advanced road condition assessment, several challenges persist. The reliance on high-quality labelled datasets remains a major limitation, as annotating pavement distress images is resource-intensive and time-consuming. Research efforts are exploring semi-supervised and self-supervised learning techniques to reduce dependency on large labelled datasets. Additionally, AI models often struggle to generalise across different geographical regions due to variations in road materials, climatic conditions, and construction practices. To mitigate this issue, Transfer Learning and domain adaptation strategies are being explored to improve model adaptability.

Real-time road monitoring presents another challenge, as most DL models are computationally demanding. The integration of edge computing [136], IoT sensors [137], and cloud-based AI platforms offers potential solutions by enabling real-time processing and automated data collection. Future advancements are expected to focus on optimising model efficiency, reducing false detections, and improving interpretability. By refining AI-driven pavement monitoring systems, transportation agencies can implement more effective and cost-efficient road maintenance strategies, ultimately enhancing infrastructure sustainability and public safety.

4. Vehicle Networks and Security

The term Internet of Vehicles (IoV) refers to integrating vehicles with the Internet, enabling them to exchange information online and supporting new applications and services, including remote vehicle monitoring and autonomous driving. Smart vehicles create ad hoc networks, known as Vehicular Ad hoc Networks (VANETs), using short-range wireless communication technologies like Wi-Fi. Among the specific types of communication, we can cite Vehicle-to-Vehicle (V2V), Vehicle-to-Device (V2D), Vehicle-to-Building (V2B), Vehicle-to-Grid (V2G), and Vehicle-to-Infrastructure (V2I) communication, which can be grouped in the macro-category of Vehicle-to-Everything (V2X) communication (i.e., the communication between a vehicle and any entity in the surrounding environment). Additionally, beyond these communication channels, the sensing layer also serves as a means for vehicles to interact with the external environment.

Hence, while communication capabilities greatly enhance the vehicle’s contextual awareness, they also expose these systems to malicious attacks, making protection against unauthorised access a critical priority [138]. Indeed, smart vehicles provide a wide attack surface, i.e., the collection of vulnerabilities, entry points, and techniques that an attacker can exploit [139].

Physical access refers to physical interfaces, such as the OBD-II port, traditionally used by service professionals, whereas wireless access covers wireless or remote communication capabilities such as Wi-Fi and Bluetooth, as well as broadcast channels, including GNSS, Traffic Message Channel, Digital Radio, and Satellite Radio. Attackers can exploit these channels to access Electronic Control Units (ECUs) and In-Vehicle Networks (IVNs) [140,141], allowing them, for example, to display false information on the instrument panel by altering frame data. Another attack vector is the sensing layer, usually targeted with the aim of altering the vehicle’s perception of the surrounding environment. Off-the-shelf hardware can be used to perform jamming and spoofing attacks, which can blind the vehicle under attack or cause it to malfunction. Finally, a transversal attack surface is represented by ML capabilities, which can be corrupted in several ways, causing erroneous inferences and, consequently, unexpected vehicle behaviour.

4.1. Type of Attack and ML-Based Security Solution

Such a complex ecosystem is susceptible to various forms of attack, and numerous countermeasures have been proposed in the literature to address these threats. While traditional hard-coded algorithms are effective against deterministic attack scenarios, deep learning architectures can identify a broader range of attacks by leveraging experience and shared data. As a result, DL models are increasingly becoming the de facto standard for dealing with these kinds of attacks. The most relevant works are summarised in Table 6.

Table 6.

This is a summary of some relevant works in the literature. From the leftmost column, the table presents the corresponding reference number and year of publication (in brackets), the target to be investigated (AD: attack detection; ID: intrusion detection; PP: privacy protection; AN: adversarial network attack), the application goal, and the key aspect that made the work noteworthy.

4.1.1. Attack Detection

A non-trivial challenge is detecting ongoing attacks aimed at violating the integrity of both vehicles and vehicle networks. The solutions proposed in the literature vary depending on the specific type of attack.

Platoon attack: A platoon is a group of vehicles travelling in the same lane at short distances and at similar speeds to improve energy efficiency and contribute to more effective traffic management. An attack on a platoon could cause car accidents and serious injuries. An approach for detecting this kind of attack and providing an appropriate countermeasure is proposed in [142], where the authors detect and locate the attacker by exploiting velocity, range, and distance data provided by LiDAR and radar sensors. These data are fed to a network made up of convolutional (CNN) and fully connected (FCNN) layers, which returns a high output value for the attacker node.

Distributed Denial of Service (DDoS): A DDoS attack, for example one exploiting a Software-Defined Network (SDN), aims to make network services unavailable. In [143], the proposed algorithm performs attack detection on live traffic data: captured packets are processed with Fuzzy Logic, and a Q-learning approach is subsequently applied. In [155], the authors present a multi-agent system that exploits the collaborative nature of intelligent agents for anomaly detection, with each agent implementing a Graph Neural Network. In a recent work [150], the authors proposed a novel Deep Multi-modal Learning (DML) approach for detecting DDoS attacks in the IoV by integrating LSTM and Gated Recurrent Unit (GRU) networks.

Black/grey hole: In this type of attack, a malicious node tries to prevent messages/packets from being forwarded to a receiver. More precisely, a black hole drops all packets, whereas a grey hole drops or corrupts only part of them. In [144], the authors propose an Artificial Neural Network (ANN) that analyses three types of trace files to extract features characterising data as normal or abnormal.

Sybil attack: A sybil attack creates virtual nodes in the vehicle network to launch an attack. Unfortunately, the use of pseudonyms in vehicular networks to protect user identity makes it difficult for the system to detect such virtual nodes and, consequently, to detect sybil attacks. In this context, [145] introduces an interesting generic RNN-based solution for the global detection of sybil attacks. It performs several plausibility checks (e.g., range plausibility, position plausibility, and speed plausibility) and uses them as input to an LSTM-based RNN, which identifies the specific type of sybil attack.
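
The kind of plausibility checks used as RNN inputs in [145] can be illustrated with a simplified Python sketch that derives two binary flags from consecutive beacons of the same sender: whether the claimed speed is below a maximum, and whether the displacement implied by the reported positions agrees with the claimed speeds. The `Beacon` fields, the planar coordinates, and the thresholds (70 m/s and 5 m/s) are hypothetical choices for illustration; the exact checks and their encoding in [145] may differ.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Beacon:
    t: float  # timestamp in seconds
    x: float  # position in metres in a local planar frame (assumption)
    y: float
    v: float  # claimed speed in m/s

def plausibility_features(prev: Beacon, cur: Beacon, max_speed: float = 70.0,
                          speed_tol: float = 5.0) -> List[int]:
    """Binary flags [speed_ok, movement_ok] for two consecutive beacons of one sender.

    speed_ok: the claimed speed lies in [0, max_speed].
    movement_ok: the speed implied by the position change matches the mean
                 claimed speed within speed_tol (hypothetical thresholds).
    """
    dt = cur.t - prev.t
    if dt <= 0:
        return [0, 0]  # non-increasing timestamps are themselves implausible
    speed_ok = int(0.0 <= cur.v <= max_speed)
    implied = math.hypot(cur.x - prev.x, cur.y - prev.y) / dt
    claimed = (prev.v + cur.v) / 2.0
    movement_ok = int(abs(implied - claimed) <= speed_tol)
    return [speed_ok, movement_ok]
```

A vehicle claiming 20 m/s that reports a position 20 m further along after one second yields `[1, 1]`, whereas a report 200 m away yields `[1, 0]`. In a learning-based detector, the sequence of such flags per sender, rather than a single check, would be passed to the recurrent model.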

Jamming attack: A jammer aims to compromise the transmission of data from a sensor by sending false alerts or creating a spoofed environment around the sensor. In [147], an algorithm for jamming attack prevention in mobile ad hoc networks is proposed. The algorithm uses a Q-learning approach to learn from the history of past actions and feeds it into a deep Q-network (DQN) that predicts the Q-values of the current states.
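
Because both [143] and [147] build on Q-learning, the underlying update rule can be sketched as a tabular Q-learning step in Python. The anti-jamming framing (a state describing whether the current channel is jammed, actions `stay` and `hop`, and a reward of 1 for a successful transmission), as well as the learning rate and discount values, are hypothetical; a DQN such as the one in [147] replaces the table with a neural network that approximates Q(s, a).

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Sequence, Tuple

# Q-table mapping (state, action) pairs to value estimates; unseen pairs default to 0.0.
QTable = DefaultDict[Tuple[Hashable, Hashable], float]

def q_update(q: QTable, state: Hashable, action: Hashable, reward: float,
             next_state: Hashable, actions: Sequence[Hashable],
             alpha: float = 0.1, gamma: float = 0.9) -> float:
    """Apply one tabular Q-learning update and return the new Q(state, action).

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]

# Hypothetical anti-jamming example: hopping away from a jammed channel succeeds.
q: QTable = defaultdict(float)
ACTIONS = ["stay", "hop"]
q_update(q, "jammed", "hop", reward=1.0, next_state="clear", actions=ACTIONS)
```

After this single update, Q("jammed", "hop") rises from 0 to 0.1; repeating the same successful experience moves it towards the discounted return, so the agent gradually learns to prefer hopping when it detects jamming.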

Spoofing attack: In a spoofing attack, an attacker pretends to be a legitimate network user to gain access to personal information. Among these attacks, GNSS spoofing sends fake GPS signals to the target receiver, making the user believe that he/she is in a false location. Time-series analysis can be used to detect these attacks, as proposed in [146], where the authors use an LSTM-RNN model to predict the distance travelled between two consecutive locations of a vehicle, working with the publicly available comma2k19 dataset [156].

Vehicle position data are also personal data: an attacker can exploit existing V2V communication to track a vehicle's trace of Cooperative Awareness Messages (CAMs). In [157], the authors use a VANET topology learning methodology that can be built on any existing Graph Learning framework.

4.1.2. Intrusion Detection and Misbehaviour

Intrusion Detection Systems (IDSs) include all the approaches devoted to identifying unknown types of attacks. Numerous studies in the literature have explored the challenges associated with detecting intrusions or misbehaviour caused by malicious nodes.

In [158,159], the authors exploit anomaly detection strategies to find malicious messages transmitted to ECUs, whereas approaches based on the analysis of sensor data are proposed in [160,161]. More recently, [162] proposed a technique that identifies the driver from real-world driving data using LSTM-based time-series analysis, whereas [163] used a CNN-based system operating on CAN messages to detect attacks on the IoV.
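As a concrete point of reference for anomaly detection on in-vehicle traffic, the sketch below implements a common, simple baseline: profiling the inter-arrival time of each CAN identifier on attack-free traffic and flagging frames that deviate strongly or use unknown identifiers. It is not the method of [158,159] or [163]; the `(timestamp, can_id)` frame format, the `k = 3` threshold, and the `min_std` floor are assumptions. The rationale is that injection attacks typically insert extra frames, shortening the observed gaps for a periodic ID.

```python
import statistics
from collections import defaultdict


def fit_timing_profile(frames):
    """frames: list of (timestamp_s, can_id) from attack-free traffic.
    Returns can_id -> (mean, population stdev) of inter-arrival times."""
    last_seen, gaps = {}, defaultdict(list)
    for t, cid in frames:
        if cid in last_seen:
            gaps[cid].append(t - last_seen[cid])
        last_seen[cid] = t
    return {cid: (statistics.mean(g), statistics.pstdev(g)) for cid, g in gaps.items()}


def detect(frames, profile, k=3.0, min_std=1e-3):
    """Return indices of frames whose inter-arrival time deviates by more than
    k standard deviations from the profile, or whose ID was never profiled."""
    last_seen, alerts = {}, []
    for i, (t, cid) in enumerate(frames):
        if cid not in profile:
            alerts.append(i)  # unknown identifier
        elif cid in last_seen:
            mean, std = profile[cid]
            # min_std avoids a zero threshold for perfectly periodic IDs
            if abs((t - last_seen[cid]) - mean) > k * max(std, min_std):
                alerts.append(i)
        last_seen[cid] = t
    return alerts
```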

Some recent works exploit the Transfer Learning (TL) paradigm. In [148], the authors train a convolutional LSTM-based model on a previously known intrusion dataset; a one-shot TL approach is then used to re-train the model so that it can detect new intrusions from only one sample. The authors of [149] propose two TL-based model update schemes to detect new types of attacks; a key advantage of this work is that it achieves high detection accuracy with a small amount of data.
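The appeal of learning from a single sample can be illustrated with a prototype-based classifier on top of a frozen feature extractor: a new attack class is added by storing the embedding of one example. This is a swapped-in technique for illustration, not the re-training procedure of [148] or the update schemes of [149]; the `embed` function is a hypothetical placeholder for a pre-trained encoder, and the feature vectors in the usage example are invented toy values.

```python
import math


def embed(x):
    """Hypothetical stand-in for a frozen, pre-trained feature extractor
    (e.g. the encoder of a model trained on a known intrusion dataset)."""
    return [v / (1.0 + abs(v)) for v in x]  # placeholder squashing, not learned


class PrototypeClassifier:
    """Nearest-centroid classifier in the embedding space."""

    def __init__(self):
        self.prototypes = {}  # label -> list of embeddings

    def add_examples(self, label, samples):
        # Adding a class needs no gradient updates: one sample is enough.
        self.prototypes.setdefault(label, []).extend(embed(s) for s in samples)

    def predict(self, x):
        z = embed(x)

        def centroid(embs):
            return [sum(c) / len(embs) for c in zip(*embs)]

        return min(self.prototypes,
                   key=lambda lbl: math.dist(z, centroid(self.prototypes[lbl])))
```

For example, after registering a few "normal" and "dos" samples, calling `add_examples("new_attack", [[0, 0, 8]])` with a single sample is enough for inputs close to it to be classified as `"new_attack"`.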

Finally, it is worth noting that a perspective on the explainability aspect is provided by the authors of [151], who propose a novel feature ensemble framework that integrates multiple explainable AI (XAI) methods with various AI models to improve both anomaly detection and interpretability.
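One simple way to combine several XAI methods is to normalise each method's feature-importance scores and average them into a consensus ranking. The sketch below does this; it is an assumption for illustration and not the ensemble framework of [151], and the method names mentioned in the docstring and the per-feature score dictionaries are hypothetical inputs.

```python
def normalise(scores):
    """Scale one method's importance scores to [0, 1] using absolute values."""
    mags = {f: abs(s) for f, s in scores.items()}
    top = max(mags.values()) or 1.0  # guard against all-zero scores
    return {f: m / top for f, m in mags.items()}


def ensemble_importance(per_method_scores):
    """Average normalised importances across XAI methods (e.g. SHAP, LIME,
    permutation importance) and return (feature, score) pairs sorted by
    consensus score, highest first. Missing features count as 0."""
    methods = [normalise(s) for s in per_method_scores]
    features = set().union(*methods)
    avg = {f: sum(m.get(f, 0.0) for m in methods) / len(methods) for f in features}
    return sorted(avg.items(), key=lambda kv: kv[1], reverse=True)
```

Normalising before averaging keeps a method with large raw magnitudes from dominating the consensus.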

4.1.3. Adversarial ML Attacks

Reliance on ML and DL capabilities can itself represent a vulnerability. In this case, Adversarial ML attacks are among the most exploited scenarios [164]. Such attacks create or modify input signals by adding imperceptible changes that compromise the reliability of the ML/DL model. They can occur during either the training or the inference phase. The first case is known as a poisoning attack and is performed by manipulating the training data to compromise the ML/DL model [165]. By contrast, an evasion attack targets the inference phase and aims to make the deployed model produce a false result. In recent years, researchers have shown high interest in this topic: security attacks against sign recognition exploiting Adversarial ML approaches are explored in [166], cyber-attacks targeting the ML policy of an autonomous vehicle in a dynamic environment are performed in [164], and a more general analysis of adversarial attacks on CAVs is the topic of [12]. More recent works, such as [167], propose an adversarial attack framework that allows the attacker to easily fool LiDAR semantic segmentation by placing simple objects (e.g., cardboard and road signs) at specific locations in physical space. In [153], instead, the authors attack adaptive traffic control systems (ATCSs) based on deep reinforcement learning (DRL) by using a group of vehicles that cooperatively send falsified information to “cheat” the DRL-based ATCSs.
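An evasion attack can be demonstrated on a toy logistic-regression classifier with the Fast Gradient Sign Method (FGSM), which shifts each input feature by eps times the sign of the loss gradient with respect to that feature. The sketch below is a generic illustration, not one of the attacks in [164,166,167,153]; the weights, input, and eps in the usage note are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model (w, b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM evasion attack on logistic regression.

    For binary cross-entropy, the gradient of the loss w.r.t. the input is
    (p - y) * w, so each feature moves by eps in the sign of that gradient.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]
```

For example, with `w = [2.0, -1.0]`, `b = 0.0`, an input `x = [0.3, 0.2]` of class 1, and `eps = 0.2`, FGSM produces approximately `[0.1, 0.4]`, and the predicted probability of class 1 drops from about 0.60 to about 0.45, flipping the decision.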

Consequently, another part of the literature focuses on defence against adversarial attacks, which follows two strategies: detecting adversarial inputs after the ML models have been trained, or proactively increasing the robustness of the ML model against such attacks [12,168,169]. Some works focus on handling unknown scenarios, usually by enhancing model robustness through training on adversarially perturbed data. For example, in [170], the authors developed a framework named Closed-loop Adversarial Training, which generates adversarial scenarios for training in order to improve driving safety, especially in safety-critical traffic scenarios. In [154], instead, objectness information is integrated into the adversarial training process to enhance the robustness of YOLO detectors on datasets such as KITTI [171]. On the other hand, known hazardous situations are pivotal to advancing safety standards: deep learning architectures (e.g., autoencoders and GANs) have been used to enhance recognition model performance in attack scenarios, as proposed in [172].
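The proactive strategy, training on adversarially perturbed data, can be sketched by applying FGSM inside the training loop of a toy logistic-regression model, so that each gradient step is taken on the worst-case (first-order) perturbation of the sample under the current model. This is FGSM-based adversarial training in its simplest form, not the closed-loop framework of [170] or the objectness-aware training of [154]; the learning rate, epoch count, and `eps` are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def fgsm(w, b, x, y, eps):
    """Shift x by eps in the sign of the input gradient (p - y) * w."""
    p = sigmoid(dot(w, x) + b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def train(data, eps=0.0, epochs=200, lr=0.1, seed=0):
    """Logistic regression trained by SGD. With eps > 0, each step uses an
    FGSM-perturbed copy of the sample (adversarial training)."""
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in rng.sample(data, len(data)):  # shuffled pass over data
            if eps > 0:
                x = fgsm(w, b, x, y, eps)
            p = sigmoid(dot(w, x) + b)
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
            b -= lr * (p - y)
    return w, b


def robust_accuracy(w, b, data, eps):
    """Accuracy on FGSM inputs crafted against the model (w, b)."""
    hits = 0
    for x, y in data:
        x_adv = fgsm(w, b, x, y, eps)
        hits += int((sigmoid(dot(w, x_adv) + b) > 0.5) == bool(y))
    return hits / len(data)
```

On a small, well-separated two-class dataset, a model trained with `eps=0.5` would be expected to keep its predictions under FGSM perturbations of the same size, which is what `robust_accuracy` measures.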

4.2. Privacy Protection

Privacy protection deals with the management of sensitive information. In the context of vehicular networks, it is further classified into location privacy and user privacy. Several privacy schemes have been proposed in the literature to obtain services from the network without exposing users' real identities. Protecting user privacy from attackers while ensuring secure availability to the service-providing entity is challenging, and in recent years ML-based approaches have gained attention as a way to overcome the drawbacks of current techniques. The scheme proposed in [173] is a federated learning-based data privacy protection scheme for vehicular cyber–physical systems, consisting of data transformation and collaborative data leakage detection. A federated learning approach to misbehaviour detection that preserves user data privacy is proposed in [174]: each vehicle's personal information is kept on the vehicle, and training is performed without sending data to the central node. In [152], an innovative approach leveraging federated learning and edge computing is proposed to predict vehicles' subsequent locations from large-scale data while prioritising user privacy.
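The federated learning principle shared by these works, where data stay on the vehicle and only model parameters are exchanged, can be sketched with Federated Averaging (FedAvg), a standard aggregation rule in which the server averages client models weighted by their local sample counts. This is an illustration under assumptions, not the scheme of [173], [174], or [152]: each vehicle trains a hypothetical one-dimensional linear model `y = w*x + b` with plain SGD, and the learning rate, epoch, and round counts are arbitrary.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """On-vehicle SGD for a 1-D linear model y = w*x + b.
    The raw (x, y) samples never leave this function; only (w, b) does."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # squared-error gradient factor
            w -= lr * err * x
            b -= lr * err
    return (w, b), len(data)


def fed_avg(client_results):
    """Server step: average client models weighted by local sample counts."""
    total = sum(n for _, n in client_results)
    w = sum(wts[0] * n for wts, n in client_results) / total
    b = sum(wts[1] * n for wts, n in client_results) / total
    return (w, b)


def federated_training(client_datasets, rounds=30):
    """Alternate local training on each client with server-side averaging."""
    global_model = (0.0, 0.0)
    for _ in range(rounds):
        results = [local_update(global_model, d) for d in client_datasets]
        global_model = fed_avg(results)
    return global_model
```

For instance, two clients holding samples of y = 2x + 1 at x in {-1, 0, 1} and x in {0.5, 2} jointly recover w close to 2 and b close to 1 without sharing their samples.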

5. Datasets

Datasets are crucial in the smart mobility field, as they provide the real-world data needed to properly train and validate algorithms that deliver intelligent features. High-quality datasets enable vehicles to understand and respond to various driving scenarios, improving safety and decision making. Moreover, the availability of diverse datasets supports adaptability and reliability in different environments. In this section, some of the most used and complete datasets are briefly described; they are listed in Table 7.

The detection and tracking of pedestrians and vehicles have attracted much attention in various contexts (e.g., security surveillance and road monitoring), resulting in an abundance of datasets with well-annotated images and video sequences. However, growing demand in the automotive industry has shifted attention to driver assistance and autonomous navigation. Among these datasets, the Caltech pedestrian dataset [175], dedicated exclusively to pedestrian detection and tracking, is one of the largest for this task and provides a valuable benchmark for occlusion handling. The KITTI dataset [171] covers multiple tasks: it provides multiple sources for 3D object detection and tracking in video sequences, road/lane detection, and semantic and instance segmentation. Up to 15 cars and 30 pedestrians are visible per frame, and detailed benchmarks and metrics are provided; the number of available images/sequences varies according to the specific task. The CityPersons [176] dataset consists of a large and diverse set of stereo-video sequences recorded in the streets of different cities in Germany and neighbouring countries, providing high diversity and supporting good generalisation. For tracking, the MOT Challenge [187] provides a framework for the fair evaluation of multiple people-tracking algorithms, consisting of a large collection of datasets (including some new challenging sequences), detections for all sequences, and a common evaluation tool providing several measures, from recall and precision to running time. In this case as well, the dataset size varies according to the specific subset/task.

For action recognition, two datasets are worth mentioning: PIE [46], a large dataset (1842 pedestrian samples) designed for estimating pedestrian intentions in traffic scenes, and JAAD [47], which covers pedestrians with behavioural annotations, distant pedestrians not interacting with the driver, and groups of pedestrians.

Given the priority of making smart vehicles capable of perceiving other road users, it is clear that safety, as well as the ability to perform autonomous driving tasks, depends on understanding the surrounding environment. To this end, several datasets exploiting different sensors have been released. Two notable examples are ApolloScape [177] and WoodScape [178]: the former uses a front view and the latter a 360° view, and both include additional GPS and CAN-bus data. Mapillary [179] provides pixel-accurate and instance-specific human annotations for understanding street scenes, with 124 semantic object categories and worldwide coverage. The strength of nuScenes [190] is its rich sensor portfolio, providing 360° camera, LiDAR, GPS, CAN, IMU, radar, and HD-Map data. HD-Map data are also included in the Argoverse dataset [191,192], which comes with more than 300k images. OpenLaneV2 focuses on recognising the dynamic drivable states of lanes in the surrounding environment, exploiting multi-view images and LiDAR data.

Among the datasets for traffic sign detection is the recently released Mapillary Traffic Sign Dataset [179], which contains 100k images (52,000 fully annotated and 48,000 partially annotated). It is presented as the largest and most diverse collection of traffic sign images globally, with detailed annotations for various traffic sign categories. A valid alternative is CCTSDB2021 [180], which contains more than 16,000 annotated images acquired under several weather conditions.

A less explored field is the detection of unexpected objects in the road context. Among the datasets dealing with uncommon road elements, Road Anomaly [98] has attracted the attention of many researchers. It contains images, with associated per-pixel labels, of unusual dangers that can be encountered in a road environment (e.g., traffic cones, animals, and rocks). An older but still widely used dataset is Lost and Found [99], which comprises 112 stereo-video sequences with 2104 annotated frames.

Regarding unpredictable situations, RDD-2020 [181] is worth citing: a large-scale heterogeneous dataset of 26,620 road-damage images collected from multiple countries.

Traffic prediction and navigation planning are another hot topic that has led to the release of datasets such as NYC Taxi Data [182], a public dataset of detailed trip records of New York City taxi rides in 2014, including pickup and drop-off dates and times, locations, and trip distances. The Transportation Network [183] dataset instead comes from ride-hailing services such as Uber or Lyft. A comprehensive dataset for developing and validating GNSS and mapping algorithms designed to work with commodity sensors is comma2k19 [156], a collection of 33 h of commute driving on California’s 280 highway, containing features such as speed, acceleration, steering wheel angle, and distance travelled between two consecutive points, extracted from CAN, GNSS, and additional smart vehicle sensors.

Air quality monitoring and forecasting are also pivotal applications that require continuous measurements at multiple points. Within this scope, Beijing Multi-Site AQ [184] provides 4 years of PM2.5 data from 36 monitoring sites in Beijing, whereas Madrid AQ [185] reports data (e.g., PM2.5, PM10, and SO2) from 24 stations in the city of Madrid collected over 18 years.

In the context of security, a valuable dataset is VeReMi [186], a simulated dataset consisting of message logs for vehicles, containing both GPS data about the local vehicle and Basic Safety Messages (BSMs) received from other vehicles. Its primary purpose is to assess how misbehaviour detection mechanisms perform at city scale.

A comprehensive overview of available datasets for smart navigation is provided in [15]. Datasets are classified depending on the specific task (e.g., Perception Datasets, Mapping Datasets, and Planning Datasets), and an analysis of their impact is provided to select the most important ones.

Table 7.

Collection of the most relevant datasets serving the discussed topic. DT: detection and tracking; PD: pedestrian detection; VDT: vehicle detection and tracking; PDT: pedestrian detection and tracking; SU: scene understanding; AR: action recognition; SD: traffic signal detection; RA: road anomaly; N: navigation; MD: misbehaviour detection; FVC: front-view camera; MVI: multi-view images; HM: HD-MAP; E: environment; AQS: Air Quality Sensor; LID: LiDAR; IMU: Inertial Measurement Unit.

| Dataset | Year | Sensor | Task | Size | Ref. |
|---|---|---|---|---|---|
| Caltech [175] | 2009 | FVC, LID, GPS, and IMU | VDT and PDT | ~100 k | [28] |
| KITTI [171] | 2012 | FVC | 3D DT and SU | ~500 | [63] |
| CityPersons [176] | 2017 | FVC | PD | ~5000 | [66] |
| MOT Challenge [187] | 2017 | FVC | DT | ~60 k | [31] |
| PIE [46] | 2019 | FVC | AR | ~2 k | [66] |
| JAAD [47] | 2017 | FVC | AR | ~350 | [66] |
| ApolloScape [177] | 2018 | FVC, GPS, and IMU | SU | ~2.5 h | [82] |
| WoodScape [178] | 2019 | 360° view, GPS, CAN, and IMU | SU | ~100 k | [86] |
| Mapillary [188] | 2020 | FVC | SU | 25,000 | [189] |
| nuScenes [190] | 2019 | 360° view, LID, GPS, CAN, IMU, radar, and HM | SU | 1000 | [87] |
| Argoverse [191,192] | 2019 | 360° view, HM | SU | 324 k | [67] |