1. Introduction

Advancements in artificial intelligence, cloud computing, mobile technologies, and blockchain have transformed the financial services industry [1]. These innovations, part of the FinTech movement, have gained considerable traction in recent years and now feature prominently in regulatory and policy-making processes. In today’s business environment, financial institutions cannot afford to ignore digital capabilities focused on data analytics, automation, and customer experience if they wish to improve their competitive positioning and foster innovation [2].

Indeed, in contemporary financial contexts, institutions are faced with ever-expanding data volumes and data types (logs, documents, multimedia for fraud forensics, etc.) and increasingly stringent regulatory demands, rendering traditional relational databases insufficient for today’s heterogeneous data environments [3]. Although such databases offer robust schema enforcement and efficient handling of structured records, they often falter when required to process high-velocity or unstructured content. In contrast, big data frameworks and cloud-based services have emerged as formidable solutions to accommodate scale and complexity [4]. However, integrating these newer tools into established relational infrastructures is far from straightforward, particularly in large organizations that focus on compliance [5].

In response to these challenges, a class of modern financial institutions has emerged: organizations that operate in the financial sector with a technology-driven approach that promotes innovation. They aim to balance performance, scalability, and compliance requirements, something traditional financial systems struggle to achieve in isolation. These players offer a wide range of financial services that have been transformed through technology and data-driven innovations: digital banking services that process transactions in real time; automated loans with instant approvals; data-driven investment services, including robo-advisory and algorithmic trading; personalized insurance with automated claims processing; sophisticated capital market services; smart risk management solutions; digital personal finance tools; a wide range of corporate and consumer-oriented financial services; and services aimed at customers not previously catered to by financial institutions [6]. Such firms combine advanced databases with big data technologies and operate over a hybrid on-premise and cloud infrastructure, with real-time capabilities and a superior customer experience. They integrate regulatory compliance into their structure, maintain strong defenses against cyber attacks, and use agile operating practices to respond quickly.

Despite these advancements, and although there is evidence for the standalone value of relational systems, big data technologies, and cloud computing, comprehensive studies of their combined deployment in finance remain scarce [7]. This shortfall becomes especially problematic under stringent data governance regimes, such as GDPR and evolving cross-border transfer protocols, which impose additional complexities on performance, scalability, and data sovereignty. Indeed, reconciling real-time analytics with strict regulatory mandates is an ongoing challenge for cross-border financial operations.

Although there is substantial literature on each of the three pillars (relational databases, big data frameworks, and cloud platforms), as well as research that addresses combinations of these pillars [8], recent literature has highlighted the fragmentation of data architecture in financial institutions and the lack of a unified approach. Several banks run separate systems. For example, an institution can have a traditional data warehouse for reporting and business intelligence and a separate data lake for raw and minimally processed data. Some may even have a new cloud stack for digital channels or innovation projects. It is common to find “fragmented data warehouses and data lakes” in banking, where old and new platforms are managed simultaneously without full integration. In such situations, companies may face duplicate costs for storage, processing resources, software licensing, and maintenance across multiple environments, and separate environments can lead to duplicate data. Even more concerning is the likelihood of security and compliance problems, since governance becomes harder when data sit in silos. For example, a European bank may hold customer transactions in an on-premise Oracle database, export subsets to a Hadoop-based risk analytics system, and then copy the data a third time to a cloud data lake for AI-related projects. Tracking lineage and ensuring that all copies comply with GDPR erasure or audit-trail requirements is then quite difficult.
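The lineage problem in the example above can be made concrete with a small sketch. It is only an illustration under stated assumptions: the `LineageRegistry` class and the store names (`oracle_onprem`, `hadoop_risk`, `cloud_lake`) are hypothetical, and the sketch assumes the cloud copy is taken from the Hadoop system, which the example does not specify. The point is that an erasure request can only be honored everywhere if each copy operation has been recorded.

```python
from collections import defaultdict


class LineageRegistry:
    """Hypothetical registry that records which store was copied into which."""

    def __init__(self):
        self._children = defaultdict(set)

    def record_copy(self, source, target):
        # Called whenever data are exported from one store to another.
        self._children[source].add(target)

    def downstream(self, origin):
        """Return every store that may hold data derived from `origin`."""
        seen, stack = set(), [origin]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(self._children.get(node, ()))
        return seen


registry = LineageRegistry()
registry.record_copy("oracle_onprem", "hadoop_risk")  # export of subsets
registry.record_copy("hadoop_risk", "cloud_lake")     # assumed source of the third copy

# Stores that an erasure request for a customer would have to reach.
erasure_scope = registry.downstream("oracle_onprem")
```

If any export is not registered, the corresponding copy silently falls outside `erasure_scope`, which is the governance risk that siloed environments create.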

Researchers have advocated a unified, integrated framework while noting significant gaps, particularly in performance benchmarking and scalability testing under mixed workloads. Traditionally, studies have examined one environment at a time (for instance, benchmarking the database or benchmarking the Hadoop cluster). Compliance discussions likewise tend to treat cloud outsourcing and big data governance as separate topics. Few studies have examined architectures that combine all three, that is, an end-to-end financial data pipeline that entails transaction capture in an RDBMS and big data processing for analytics, deployed both on-premises and in the cloud.

Studies have shown that current database benchmarks do not accurately reflect the reality of financial workloads, which span different types of data and carry strict security requirements. This limitation highlights the need for more comprehensive research methodologies in the field. These studies recommend new benchmarking methodologies that take into account complex business logic, diverse data, and strong security; in other words, they note that financial use cases merge the characteristics of transactional and analytic systems. A survey of bank data management likewise stated that balancing and integrating traditional and new tools is imperative to meet dynamic business needs.

To address this research gap, this research considers how relational databases, big data architectures, and cloud infrastructures can be orchestrated to meet both operational demands and legal requirements. In doing so, it compares performance metrics, such as processing speed, scalability, and resource utilization, and explores the practical feasibility of an integrated multilayer solution. Although relational systems have historically served structured reporting needs, they offer limited scope for growing volumes of semistructured and unstructured content [5]. While big data and machine learning approaches provide deeper insight, they can escalate processing costs as datasets expand, prompting greater reliance on the scalability and flexibility of cloud platforms [7]. By proposing a multi-layer architecture and benchmarking potential integration strategies, this study offers new directions for modernizing legacy systems without compromising governance standards or incurring unsustainable costs.

Building on the results presented in [9], this extended research significantly expands the scope and depth of the analyses on the technical and regulatory requirements of financial institutions, showing how technologies, such as real-time streaming (Kafka) and distributed processing (Spark and Hadoop), can be orchestrated with standard relational systems in a multilayer hybrid architecture. Specifically, this research was guided by the following research questions:

RQ1: What are the main trends, challenges, and strategies in integrating relational databases, big data, and cloud computing in financial institutions based on a systematic review of the recent literature?

RQ2: How can financial institutions implement a hybrid cloud architecture that optimizes operational efficiency while ensuring compliance with EU data protection requirements in the context of EU-US data transfers?

RQ3: How do these technologies impact financial data management and analysis in terms of scalability, processing speed, and cost-effectiveness?

The following sections analyze the current literature on system integration, investigate practical implementation problems (especially with security and regulatory compliance), and offer an empirical evaluation of various platforms across diverse workloads.

2. Literature Review

2.1. Relational and NoSQL Databases in Financial Services

For many years, relational database management systems (RDBMS) have underpinned most financial IT systems, because they feature strong consistency (with ACID properties), reliability, and well-defined schemas. Studies show that RDBMS are still the predominant choice for operational finance data storage: around 80% of enterprise operational databases are still relational. Even for new financial applications, and as new solutions emerge, RDBMS remain in use in some form about 70% of the time [10].

The widespread use of RDBMS is largely due to the characteristics of financial data. Transactional financial data, such as payments, trades, and account balances, fit well in structured tables. Furthermore, regulations demand accurate and auditable records, a requirement that RDBMS readily satisfy. For example, core banking systems and payment processing platforms traditionally run on a commercial RDBMS (Oracle, SQL Server, DB2, etc.) or a modern open-source SQL database to ensure that each transfer or trade is processed transactionally.

Relational databases have long been used for managing financial data, as they can handle both Online Transaction Processing (OLTP) systems, which specialize in the efficient, real-time execution of transactional tasks such as order entries, payments, and account updates, and Online Analytical Processing (OLAP) systems, which are designed to aggregate and analyze larger volumes of historical data to generate added value for business intelligence. In particular, OLAP systems can be used during risk assessment and strategic decision making [11,12,13]. Furthermore, state-of-the-art cloud-native OLTP and OLAP databases offer storage layer consistency, compute layer consistency, multilayer recovery, and hybrid transactional/analytical processing (HTAP) optimization [14,15].
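The distinction between the two workload types can be sketched with Python’s built-in `sqlite3` module. This is a minimal illustration, not a production design: the `accounts` and `transfers` tables, the `transfer` helper, and the use of integer amounts (assumed to be minor currency units) are all hypothetical. The transfer is an OLTP-style unit of work that either commits in full or rolls back, while the final query is an OLAP-style aggregate over the accumulated history.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.execute(
    "CREATE TABLE transfers ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "src INTEGER NOT NULL, dst INTEGER NOT NULL, amount INTEGER NOT NULL)"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 500), (2, 100)])
conn.commit()


def transfer(conn, src, dst, amount):
    """OLTP-style unit of work: credit, debit, and log entry commit together."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
            conn.execute(
                "INSERT INTO transfers (src, dst, amount) VALUES (?, ?, ?)",
                (src, dst, amount),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; the credit was undone too.
        return False


ok = transfer(conn, 1, 2, 200)         # succeeds
rejected = transfer(conn, 1, 2, 1000)  # would overdraw account 1

balances = conn.execute(
    "SELECT id, balance FROM accounts ORDER BY id"
).fetchall()

# OLAP-style query: aggregate the transfer history per source account.
volume_by_source = conn.execute(
    "SELECT src, COUNT(*), SUM(amount) FROM transfers GROUP BY src ORDER BY src"
).fetchall()
```

The credit is applied before the debit on purpose, so that the rejected transfer shows atomicity: the already-applied credit disappears when the debit violates the balance constraint.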

However, despite these strengths, as data volumes and velocity increase, performance and scalability problems arise. Except for some products (such as Oracle RAC, which uses clustering technology to distribute workloads across nodes while maintaining data consistency and integrity [16]), traditional RDBMS are not built for the “dynamic and distributed” environment of modern IT architecture and for horizontal scaling.

In particular, high-frequency trading (HFT) systems create huge streams of tick data and demand microsecond latency. In these cases, systems such as in-memory databases or specialized hardware solutions (e.g., FPGA implementations or kdb+) are preferred, since conventional relational engines often introduce excessive latency.

Additionally, banks are dealing with ever more diverse data (logs, documents, multimedia for fraud forensics, etc.) that do not fit in RDBMS tables. Consequently, recent literature argues that RDBMS need not be replaced but must be complemented.

Moreover, relational databases and data warehouses rely on predefined schemas. This makes it challenging to adapt to unstructured or semi-structured data formats, which limits their scalability and flexibility. Traditional relational databases follow a schema-on-write approach, which, while efficient for structured data, proves rigid and less adaptable to evolving data requirements in big data environments. This rigidity makes them less suitable for current financial contexts, where real-time analytics and hybrid data environments are critical [17,18,19].

In response to these limitations, vendors of RDBMS systems have advanced their technologies to address the performance requirements of current workloads. Nevertheless, RDBMS, big data, and their integration appear ever more crucial to handle complex workloads.

Furthermore, despite these advancements, HTAP technology is still emerging and can be complicated to implement (ensuring isolation, synchronization across row/column stores, etc.).

The analytics companion of RDBMS, namely data warehouses, needs to be upgraded and integrated with newer technologies like cloud and NoSQL data models for better flexibility. Many banks have started to extend their relational databases by horizontal partitioning, in-memory acceleration, or sharding. However, these options have limits without a complete re-architecture.

As an alternative approach, NoSQL databases based on document, key-value, columnar, or graph models can ingest data (JSON documents, logs, graphs, etc.) at speed without a fixed schema [20]. This is valuable in finance, where data come from diverse sources (mobile apps, social feeds, IoT, etc.) and change frequently. For example, a document database can store a customer profile with varying attributes and update it on the fly, unlike a rigid relational schema. Because NoSQL does not require a predefined schema, financial teams can adopt agile development for new data-centric applications. Data models can evolve as quickly as business needs change (e.g., due to new regulatory fields and new product data) without lengthy migrations. Furthermore, most NoSQL databases are natively designed to scale out across commodity servers or cloud instances, handling massive data volumes and throughput. Financial institutions often need to retain years of historical data (for risk modeling or compliance) and handle spikes (e.g., Black Friday transactions or market volatility). Some key use cases of NoSQL in the financial sector are shown in Table 1.
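The schema flexibility described above can be sketched without any database at all. In the following illustration, a plain Python dictionary stands in for a document collection; the `upsert` and `field_values` helpers and all field names (such as `kyc_refresh_due`) are hypothetical and do not correspond to the API of any particular NoSQL product.

```python
import json

# In-memory stand-in for a document collection keyed by customer id.
profiles = {}


def upsert(customer_id, **fields):
    """Merge fields into a profile; new keys need no schema migration."""
    doc = profiles.setdefault(customer_id, {"_id": customer_id})
    doc.update(fields)
    return doc


def field_values(field, default=None):
    """Schema-on-read: structure is interpreted at query time."""
    return {cid: doc.get(field, default) for cid, doc in profiles.items()}


upsert("c1", name="Ana", segment="retail")
upsert("c2", name="Ben", devices=["ios"], risk_score=0.12)

# A new regulatory field is added to one profile on the fly.
upsert("c1", kyc_refresh_due="2026-01-31")

kyc_due = field_values("kyc_refresh_due")
serialized = json.dumps(profiles["c1"], sort_keys=True)
```

The two profiles carry different attributes, and the reader decides how to treat a missing field. In a relational schema, the same change would typically require an `ALTER TABLE` and a default value for every existing row.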

In the cloud context, a large number of banks and other financial players are starting to address these limitations by moving relational databases to the cloud (for elasticity) and consolidating them into data lakes fed by streaming pipelines. Cloud-managed database services (like Amazon RDS and Azure SQL) are gaining market share, as they improve scalability and lower operational costs compared to an on-premises setup. Cloud-managed database solutions have their own issues, such as vendor lock-in (i.e., the difficulty of switching from one cloud provider to another). However, research has shown that lock-in dissolution practices can be easily implemented [27].

2.2. Big Data Frameworks and Analytics in Finance

In recent years, the financial sector has taken major steps toward using big data analytics frameworks to extract insight from large datasets. Unlike RDBMS, which store data in structured tables, big data technologies can ingest and process large volumes of heterogeneous data: trading feeds, customer clickstreams, social media sentiment, transaction graphs, etc. Hadoop and Spark are two frequently cited frameworks. They allow for distributed storage and parallel computing in clusters, which is essential when data sizes run into petabytes.

Big data technologies have evolved significantly. Tools like Hadoop can play a vital role in managing large, diverse datasets and providing scalable storage solutions [28]. Offloading heavy read-only analyses to Hadoop relieves the OLTP database and speeds up insight generation. Apache Spark has been the next step forward: it surpasses traditional big data frameworks by processing data faster and efficiently handling streaming data and machine learning tasks [29,30,31,32].

Big data in financial services can bring many benefits, such as better customer insight and engagement, improved fraud detection, and improved market analytics [33,34]. Large datasets can be mined to identify subtle patterns in customer behavior, leading to better services and inclusion. Similarly, fraud schemes concealed within millions of transactions can be exposed more readily, as larger datasets reveal trends that help with loss prevention. In trading operations, big data analysis can inform trading strategy, for example, by backtesting thousands of scenarios and monitoring market sentiment in real time from news and social media.

Research from both academia and industry consistently shows that big data has a positive impact on risk management and operations. A recent review found evidence that big data enhances risk management and boosts operational efficiency in FinTech applications [34]. Big data enables the financial sector to scale up its ability to predict and mitigate risk (e.g., credit, market, etc.) by processing diverse data (e.g., economic indicators, customer portfolios, etc.). Operationally, big data and AI-powered models speed up intensive tasks, like customer service or credit underwriting, because they can process data much faster than manual processes. This has direct implications for financial inclusion, because speedier analytics can extend services (like microloans or real-time payments) to a wider audience while controlling risks.

For real-time applications, streaming frameworks are used to process events as they arrive, for example, screening each card transaction for fraud in a matter of seconds (or less). Data streaming has found various applications in capital markets and payments, as it effectively manages real-time data within trading and fraud detection [35]. Demand for real-time analytics has led to the adoption of the Lambda architecture, which combines batch and stream layers, and the Kappa architecture, which relies on a single stream-processing layer.
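To illustrate per-event processing of card transactions, the following sketch implements a simple sliding-window velocity rule in plain Python. It is not tied to any streaming framework: the `VelocityCheck` class, the 60-second window, and the threshold of three transactions are illustrative assumptions, and a real deployment would read events from a stream and combine many more signals.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag a card when it exceeds `max_txns` events within `window_s` seconds."""

    def __init__(self, window_s=60.0, max_txns=3):
        self.window_s = window_s
        self.max_txns = max_txns
        self._recent = defaultdict(deque)  # card id -> timestamps in the window

    def process(self, card_id, ts):
        """Handle one event; timestamps are assumed to arrive in order."""
        window = self._recent[card_id]
        # Evict timestamps that have fallen out of the sliding window.
        while window and ts - window[0] > self.window_s:
            window.popleft()
        window.append(ts)
        return len(window) > self.max_txns


check = VelocityCheck(window_s=60.0, max_txns=3)
events = [("A", 0), ("B", 5), ("A", 10), ("A", 20), ("A", 30), ("A", 100)]
flags = [check.process(card, ts) for card, ts in events]
```

Each event is evaluated as it arrives and only a bounded amount of state is kept per card, which is what allows decisions within the latency budget mentioned above. Here, only the fourth transaction on card A within the window is flagged.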

Despite these benefits, big data has its challenges. Although these frameworks can be scaled horizontally, achieving low latency comparable to a relational system is hard. The operational latency of traditional Hadoop batch processing is on the order of minutes, which does not suit time-sensitive financial operations.

Big data technologies also lack robust transactional capabilities, which hinders real-time decision-making processes in financial institutions. In addition, ensuring data quality and consistency during integration with other technologies, such as relational databases, remains a complex task. The schema-on-read approach, while flexible, often complicates data governance and standardization efforts [36,37]. Moreover, while big data tools provide scalability, the computational resources required for their operation can become costly and difficult to manage, especially for smaller financial institutions. These challenges underscore the need for better integration frameworks to bridge the gap between big data technologies and traditional database systems.

Furthermore, it is not trivial to ensure the accuracy and consistency of these fast-moving big data pipelines with the authoritative data in relational stores.

Another main concern in the big data literature for finance is data governance, privacy, and quality. Financial data often contain sensitive personal information and are subject to strict regulations, such as GDPR in the EU. A continuing concern is to ensure that there are controls in place to enforce compliance (who can access what data, how long data will be retained, and ensuring the anonymity of data for analytics) [38].

Data lakes, large central repositories hosted on HDFS, cloud storage, or similar platforms, are widely used in finance. However, early implementations without proper governance resulted in “data swamps” [39]. Research on financial data lakes makes clear the importance of data quality controls and metadata modeling [40]. The remaining challenges include the need for near-real-time processing and better data quality techniques, among others. These gaps suggest that, while big data technology is very powerful, it is not yet fully mature in addressing all of finance’s needs (e.g., ultra-low latency trading or rigid compliance reporting).

Notably, a growing number of these frameworks now run in the cloud, which blurs the boundary between this section and the next. Several financial institutions are using cloud-based data lakes or analytics services (like AWS EMR, Google BigQuery, or Databricks Spark on Azure) for on-demand scalability. This has brought about new hybrid architectures like the “data lakehouse”, which aims to combine the schema and reliability of data warehouses with the scalability of data lakes. The lakehouse unifies analytics on all data sources in one platform, the idea being that working across structured and unstructured data becomes one seamless task. This is particularly relevant to finance, where the same dataset might need to serve both traditional SQL queries and AI model training in high-dimensional similarity spaces. For instance, Databricks presented a Lakehouse for Financial Services solution in 2022 to help organizations manage workloads ranging from compliance to customer analytics on a single platform.

2.3. Cloud Computing in Financial Services

In the last five years, cloud computing has become a widely used computing model in financial services. An increasing number of banks and insurers are using public cloud providers (Amazon Web Services, Microsoft Azure, Google Cloud, etc.) and private clouds. According to a 2023 US report, financial institutions across the spectrum view cloud services as an important part of their technology program, and most big banks are opting for a hybrid cloud strategy [41]. According to Technavio [42], the private and public cloud market in financial services will grow by USD 106.43 billion during 2024–2028, driven by demand for big data storage and AI. An increasing number of companies are opting for hybrid cloud solutions that offer the best of both worlds, albeit on a limited basis. However, data safety and privacy remain serious concerns.

Indeed, cloud computing may represent the next significant technological transformation. It provides computing services over the Internet and is characterized by scalable and flexible platforms that process and store massive datasets [36,43,44]. As a result, the need for extensive on-premises infrastructure has greatly diminished, which represents a paradigm shift in data management and analysis. Although security concerns persist, financial institutions have recognized the significant advantages of cloud computing. Its wide variety of benefits can outweigh the challenges: cloud services now play a significant role in data management, and most current financial strategies are based on cloud computing [45,46].

From a regulatory perspective, financial supervisors around the world have scrutinized cloud outsourcing: the European Banking Authority issued detailed guidelines on cloud outsourcing, and the EU adopted the Digital Operational Resilience Act (DORA) to ensure operational resilience [47]. The main concerns are the privacy of customer data and ensuring that data stored in the cloud are not unlawfully transferred to third parties or across borders. There is the risk that cloud providers will experience operational outages or incidents that could affect banks. There is also a concentration risk when too much of the industry relies on one or a few big providers. Lastly, there is a security risk, as banks need to be sure that the cloud platforms they use have robust security and that proper access and encryption controls are in place.

One report [48] highlighted myths about cloud supervision, asserting that there is a similarity of risk types between on-premise and cloud models; instead, it is the governance of risk that is different. Using a cloud does not create entirely new forms of risk; banks just need to adapt their vendor risk and cybersecurity models. The CEPS analysis also points out two top fears (concentration risk and vendor lock-in) and argues they can be mitigated (e.g., through multi-cloud strategies and contractual safeguards).

More specifically, concerns about data security, privacy, and regulatory compliance are significant barriers to the widespread adoption of cloud computing in the financial sector. Migration of sensitive financial data to the cloud requires reliable encryption, strict access controls, and adherence to data protection regulations [49,50].

Another challenge is integration with legacy systems. Many banks’ core banking systems were created decades ago and are not cloud-native. Moving them to the cloud as they are may bring little benefit and could create more problems. Reports in the literature suggest refactoring applications or adopting cloud-native principles (e.g., microservices). The US Treasury report identified gaps in the human capital and tools needed to deploy clouds securely, meaning that many financial firms lack the expertise to migrate to and operate in the cloud. To close these gaps, some banks are forming partnerships with FinTech companies or consulting firms to build cloud skills and DevOps practices.

From a performance perspective, cloud technology can provide cost efficiency, enhanced cybersecurity, and operational resilience by allowing the automatic scaling of computing resources to meet surges in demand. For example, cloud platforms can dynamically add processing capacity during peak trading periods or fraud spikes, avoiding the performance bottlenecks of legacy on-premises systems.

Furthermore, financial organizations seek architectures that can scale to large volumes of data, provide fast processing for real-time insights, and still comply with stringent regulations. There is evidence that the COVID-19 pandemic further accelerated cloud adoption in finance as institutions have sought more agile and scalable IT solutions [51].

In addition, the integration of artificial intelligence (AI) and machine learning (ML) represents another milestone in the digital revolution, designed to speed up financial data analysis. These technologies aim to empower organizations that face constant and increasingly complex challenges. The financial sector has been greatly influenced, especially in fraud detection, risk management, and high-frequency trading, where AI and ML have become real assets [52,53].

2.4. EU General Data Protection Regulation vs. US Data Privacy Frameworks

The EU’s General Data Protection Regulation (GDPR), which has applied since May 2018, has changed data governance in financial services. The GDPR imposes strict requirements related to lawful processing, explicit consent, and the rights of data subjects (access, erasure, and portability), along with penalties of up to 4% of global turnover. The Data Protection Authority (DPA) of each EU member state monitors compliance, enforcing a new regulatory paradigm that accentuates the importance of privacy by design and prompt breach notification (72 h). Financial institutions have been at the forefront of this shift due to the enormous amount of personal and transactional data they handle. In the US, no single law has the scope of GDPR. The law that concerns financial institutions the most is the Gramm-Leach-Bliley Act (GLBA) for financial privacy and security, together with state data breach notification statutes and new state privacy laws like the California Consumer Privacy Act (CCPA [54], amended by CPRA in 2020 [55]). A proposed federal law, the American Data Privacy and Protection Act (ADPPA) [56], suggests that the US is moving closer to, but will not reach, GDPR-like standards. The key features of the EU and US data protection standards are shown in Table 2.

Since 2018, GDPR has helped improve data protection in financial institutions, not just in Europe but also, indirectly, around the world. Most EU banks have moved from an uncertain position, in which they wondered whether they would ever be fully GDPR-compliant, to a steadier state of affairs. Today, privacy governance is accepted as business as usual and is an important exercise in building customer trust. Enforcement cases have sent a strong message: every organization must follow data protection rules. No one is above the rules, not even the biggest banks, which will be penalized if their practices are not continuously improved. American banks and FinTech companies were somewhat shielded by US rules but have nevertheless felt the global repercussions. They reinforced security (already a focus due to financial regulation) and started to embrace more consumer-friendly data practices as state laws emerged. The anticipated introduction of broad federal privacy legislation (such as ADPPA) is encouraging US entities to catch up with GDPR.

An encouraging trend is the increasing collaboration among the legal, IT, cybersecurity, and business units of financial institutions, particularly on AI-related privacy concerns [59,60]. GDPR and other privacy laws have forced silos to break down. The chief compliance officer now often works with the chief information security officer (CISO) and chief technology officer (CTO) on projects to ensure that compliance is built in. Cybersecurity conferences now include privacy tracks and vice versa [61] to discuss the range of issues in the field. Data governance, for instance, ranges from purely legal issues (what does the law require?) to technical execution (can we easily retrieve all of the data of one person?). This has led to the emergence of new roles, such as privacy engineers, technologists who can implement privacy features in software. Banks such as ING and Wells Fargo are assembling teams of privacy engineers and working with anonymization and consent technology. Academically, this is where law, computer science, and policy come together, and thus where many future gains (or failures) in privacy protection will occur.

2.5. Sustainable Practices

Financial institutions increasingly want sustainable computing solutions as they grow their cloud infrastructure and increase their big data usage.

There is evidence that the cloud is more energy-efficient than on-premise data centers [62], especially if certain improvements are implemented [63,64]. Financial institutions may be able to reduce energy-related costs by moving IT resources to cloud data centers, such as those operated by Amazon Web Services (AWS), Google Cloud, or Microsoft Azure. This trend, along with virtualization and AI-driven efficiencies, could substantially curb IT-related greenhouse gas emissions. Current estimates [65,66] place data center electricity usage at approximately 1–2% of global electricity consumption, with carbon emissions ranging from 0.6% to over 3% of global greenhouse gas emissions. According to some higher-end projections, cloud data centers are expected to use 20% of global electricity and generate up to 5.5% of the world’s carbon emissions [67]. As such, energy modeling and prediction for data centers are essential [68].

The global volume of data has grown exponentially and is expected to reach nearly 181 zettabytes by 2025, about three times the 2020 level. The rising use of AI and other new digital technologies will enlarge the footprint of these technologies [69]. Under high-growth scenarios, global data center electricity demand will reach 800–1200 TWh by 2026 [70], almost double the 2019 level. Thanks to efficiency improvements and a cleaner energy mix, growth in ICT emissions has thus far been constrained. For example, in Europe, data center GHG emissions fell by 8% over the 2015–2020 period despite a 24% increase in energy use [71].

Sector benchmarks show continued improvements in IT sustainability, although this remains a work in progress. The average power usage effectiveness (PUE) of data centers is plateauing at about 1.56 globally, and many older facilities have not yet optimized their PUE [

72]. Meanwhile, the best-in-class hyperscale centers operate at a PUE of around 1.1 once optimization strategies have been applied.
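As a brief worked example of what these PUE figures imply, the sketch below uses the standard definition of PUE (total facility energy divided by IT equipment energy). The 1000 kWh IT load is an arbitrary illustrative value and is not taken from the cited benchmarks.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by a PUE value (PUE = total / IT energy)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


IT_LOAD_KWH = 1000.0  # hypothetical IT load, for illustration only

average = facility_energy_kwh(IT_LOAD_KWH, 1.56)    # global average cited above
hyperscale = facility_energy_kwh(IT_LOAD_KWH, 1.1)  # best-in-class hyperscale
saving_share = (average - hyperscale) / average

print(f"average facility:    {average:.0f} kWh")
print(f"hyperscale facility: {hyperscale:.0f} kWh")
print(f"relative saving:     {saving_share:.1%}")
```

Under these assumptions, the same IT load draws roughly 29% less total energy in the hyperscale facility, before any difference in the energy mix is considered.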

To illustrate these trends, consider two sector implementations. Flowe Bank (Italy) is a cloud-native green neobank that deployed its IT operations on a cloud banking platform on Microsoft Azure, with ∼95–98% lower emissions than an on-premises deployment. EQ Bank (Canada) also has about 94–97% lower emissions due to utilizing a cloud infrastructure [

73]. Big banks are increasingly downsizing their own data centers and partnering with cloud providers.

Although infrastructure is important, financial institutions are also looking at the software layer and data management to drive sustainability. Financial firms run complex models (e.g., risk simulations, option pricing, and credit scoring). Traditionally, the focus was on accuracy and speed, not energy. Now, there is a nascent movement to write more efficient code and use more efficient algorithms to achieve the same business outcome with less computation. For example, instead of running an extremely high-resolution Monte Carlo simulation for a risk that takes 1000 CPU-h, a bank might find a smarter statistical technique or use a surrogate model that takes 100 CPU-h with a negligible loss of precision—thus saving energy proportionally. Similarly, in AI, techniques like model pruning, quantization (using lower precision math), or using smaller pre-trained models can cut down the computation needed for tasks like fraud detection. The carbon footprint has emerged as a performance metric, so development teams are beginning to consider the carbon cost of a given computation, especially for large-scale or repetitive tasks. There are even tools emerging that integrate with code repositories to estimate the energy consumption of code changes. While still an emerging practice, this mindset is growing especially in Europe, influenced by the concept of “Green Software Engineering” [

74].
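To make the idea of "the same outcome with less computation" concrete, the following minimal sketch compares plain Monte Carlo with antithetic variates for pricing a European call option. Antithetic variates are our choice of illustration; the text above does not name a specific technique, and the option parameters are hypothetical.

```python
import math
import random
import statistics


def discounted_payoff(z: float, s0: float, k: float, r: float, sigma: float, t: float) -> float:
    """Discounted European call payoff for one standard normal draw z."""
    st = s0 * math.exp((r - 0.5 * sigma**2) * t + sigma * math.sqrt(t) * z)
    return math.exp(-r * t) * max(st - k, 0.0)


def price_plain(n_draws: int, rng: random.Random, **p) -> tuple[float, float]:
    """Plain Monte Carlo: one payoff evaluation per draw."""
    samples = [discounted_payoff(rng.gauss(0, 1), **p) for _ in range(n_draws)]
    return statistics.fmean(samples), statistics.stdev(samples) / math.sqrt(n_draws)


def price_antithetic(n_pairs: int, rng: random.Random, **p) -> tuple[float, float]:
    """Antithetic variates: average the payoffs of z and -z for each draw."""
    samples = []
    for _ in range(n_pairs):
        z = rng.gauss(0, 1)
        samples.append(0.5 * (discounted_payoff(z, **p) + discounted_payoff(-z, **p)))
    return statistics.fmean(samples), statistics.stdev(samples) / math.sqrt(n_pairs)


# Hypothetical at-the-money option; both runs use 40,000 payoff evaluations.
params = dict(s0=100.0, k=100.0, r=0.05, sigma=0.2, t=1.0)
plain_price, plain_se = price_plain(40_000, random.Random(1), **params)
anti_price, anti_se = price_antithetic(20_000, random.Random(1), **params)

print(f"plain:      {plain_price:.3f} (standard error {plain_se:.3f})")
print(f"antithetic: {anti_price:.3f} (standard error {anti_se:.3f})")
```

For the same number of payoff evaluations, the antithetic estimator typically shows a smaller standard error, so a target precision can be reached with fewer evaluations and, proportionally, less energy.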

In implementing these technology strategies, a cross-disciplinary approach is often required. IT departments work with corporate sustainability teams, facilities managers, and, sometimes, external energy consultants. For instance, configuring a data center’s electrical design might involve an outside engineering firm, while setting cloud migration targets involves CIOs and CFOs making cost–benefit evaluations, including carbon accounting. Techno-ethical governance is also relevant here: as banks adopt advanced technologies, such as AI, to optimize their operations, they must ensure that algorithms do not inadvertently compromise reliability or fairness while optimizing for energy. For example, an AI that aggressively powers down servers to save energy must not risk the availability of critical systems. Governance frameworks are put in place to review such trade-offs. In essence, sustainable computing must still uphold the primary directives of banking IT: security, availability, and integrity. The good news is that many sustainable practices align well with these (e.g., removing old inefficient servers reduces security vulnerabilities and failure rates). When conflicts arise (like needing redundancy vs. saving energy), governance bodies weigh priorities and sometimes choose a middle ground (like keeping redundant systems in a low-power standby mode rather than fully active).

2.6. Integration Approaches and Findings

The integration of relational databases, big data technologies, and cloud computing brings significant opportunities, but also distinct challenges. The rigidity of schema-on-write in relational databases contrasts with the flexibility of schema-on-read approaches in big data systems, highlighting a divide in managing complex data structures [

75,

76]. Advanced integration strategies, including hybrid cloud models and ETL workflows, are needed to harmonize these technologies and unlock their full potential for the financial sector [

77,

78].

Using a mix of database technologies, each for what it does best, can overcome the one-size-fits-all limitation. In practice, this means maintaining relational databases for core transactional data while employing NoSQL or Hadoop-based stores for high-volume analytics data [

79]. A polyglot or multi-model strategy allows institutions to combine SQL and NoSQL systems, although it requires careful design to ensure consistency and efficient data exchange. In some cases, organizations convert relational schema into a NoSQL format or migrate subsets of data to NoSQL systems to improve scalability [

80]. This transformation can improve performance for certain read-heavy or analytical workloads, but it requires robust synchronization mechanisms between the two worlds.
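As an illustration of such a conversion, the sketch below embeds normalized rows from two relational tables into one nested document per account, which is the shape a document store would typically hold. The table and field names are hypothetical.

```python
from collections import defaultdict

# Hypothetical normalized rows, as they might come from two relational tables.
accounts = [
    {"account_id": 1, "owner": "Alice", "currency": "EUR"},
    {"account_id": 2, "owner": "Bob", "currency": "EUR"},
]
transactions = [
    {"tx_id": 10, "account_id": 1, "amount": 250.0},
    {"tx_id": 11, "account_id": 1, "amount": -40.0},
    {"tx_id": 12, "account_id": 2, "amount": 99.5},
]


def to_documents(accounts: list, transactions: list) -> list:
    """Embed each account's transactions to form one document per account."""
    by_account = defaultdict(list)
    for tx in transactions:
        # Drop the foreign key: the nesting itself now encodes the relationship.
        by_account[tx["account_id"]].append(
            {key: value for key, value in tx.items() if key != "account_id"}
        )
    return [
        {**account, "transactions": by_account.get(account["account_id"], [])}
        for account in accounts
    ]


documents = to_documents(accounts, transactions)
```

In this sketch, the relational tables remain the system of record, so any later change to them has to be propagated to the derived documents by the synchronization mechanism mentioned above.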

Many financial institutions rely on data lakes [

81], i.e., centralized repositories on scalable storage (often cloud-based) that ingest data from relational databases, transaction feeds, and external sources. Cloud-based data lakes enable the aggregation of diverse datasets (structured and unstructured) in one place, supporting advanced analytics and AI on a unified platform. For example, the adoption of cloud data lakes has reshaped operations by providing robust storage, advanced analytics, and on-demand scalability. Unlike traditional data warehouses, data lakes can store raw data in its native form, which is then processed by big data tools as needed. Another option is the “lakehouse” architecture [

82], which combines the schema and performance benefits of data warehouses with the flexibility of data lakes, allowing SQL queries with ACID guarantees and big data processing on the same repository. This approach simplifies integration by reducing data movement between separate systems.

However, relying on public cloud platforms has its legal, operational, and reputational risks. For example, since the EU’s first major data protection law in 1995 (the Data Protection Directive, which was replaced by the GDPR in 2018), exporting personal data outside the EU is only allowed under strict conditions. In contrast, the United States has strong intelligence-gathering powers [

83]. However, many European financial institutions use cloud services based in the US (such as Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle Cloud) for critical operations, such as online banking platforms, data analytics, or customer relationship management, and they currently rely on the EU–US Data Privacy Framework (DPF) for transatlantic data transfers (which replaced the Transatlantic Data Privacy Framework (TADPF) [

84]). If the DPF is weakened or even annulled, financial institutions may have to quickly switch to Standard Contractual Clauses (SCCs) with additional technical or contractual controls [

85], or they may need to seek data localization solutions within the EU, including on-premise solutions. Any of these solutions would likely lead to an increase in operational costs. Financial institutions also face compliance challenges in ensuring consistent data quality and lineage across integrated systems. When the data flow from a core banking SQL database into a Hadoop-based lake and then to a cloud analytics service, maintaining an audit trail is critical. Regulators (and internal risk officers) demand transparency on how data are transformed and used, especially for automated decision making (credit scoring, algorithmic trading, etc.). This can be implemented using automated tagging strategies that label records with attributes like the origin system (SQL vs. Hadoop), processing stage, or user role. This ensures a robust audit trail of "who did what, when, and why." While such rule-based systems excel at applying granular policies and generating detailed logs, permissioned blockchain solutions can add immutable, decentralized storage for critical audit events. A smart contract can be used for compliance enforcement. For example, when data cross borders (e.g., EU → US), a compliance contract can automatically validate whether appropriate legal mechanisms (SCCs, DPF, etc.) are in place. If not, the transaction is blocked or flagged.
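The tagging and cross-border checks described above can be sketched as follows. This is a plain, off-chain Python illustration of the rule that a compliance contract might encode, not an actual smart contract; the policy table, field names, and tag attributes are assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy table: legal mechanisms accepted per (origin, destination) route.
ACCEPTED_MECHANISMS = {("EU", "US"): {"DPF", "SCC"}}


@dataclass
class LineageTag:
    origin_system: str      # e.g., "core-sql" or "hadoop-lake"
    processing_stage: str   # e.g., "raw" or "anonymized"
    user_role: str          # who acted on the record
    reason: str             # why the record was processed
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def tag_record(record: dict, tag: LineageTag) -> dict:
    """Return a copy of the record with the new tag appended to its lineage."""
    lineage = list(record.get("_lineage", [])) + [tag]
    return {**record, "_lineage": lineage}


def transfer_allowed(origin: str, destination: str, mechanisms: set) -> bool:
    """Allow transfers within a region, or across regions with an accepted mechanism."""
    if origin == destination:
        return True
    accepted = ACCEPTED_MECHANISMS.get((origin, destination), set())
    return bool(accepted & mechanisms)


record = tag_record(
    {"customer_id": "c-001", "score": 0.82},
    LineageTag("core-sql", "raw", "risk-analyst", "credit scoring"),
)
print(transfer_allowed("EU", "US", {"SCC"}))  # True: an accepted mechanism is present
print(transfer_allowed("EU", "US", set()))    # False: the transfer would be blocked or flagged
```

If the DPF were annulled, only the policy table in this sketch would need to change, which is one argument for keeping such rules in a single, auditable place.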

To summarize, we synthesize the findings of the literature review and offer guidance on integration strategies for the different financial domains, together with expected performance outcomes and compliance-related challenges, in

Table 3.

Cloud solutions make it easy to scale for unpredictable workloads and for use cases that include regulatory reporting and AI analytics. However, data locality rules and security issues can make this ad hoc elasticity difficult to implement. Many organizations combine on-premise systems with cloud ecosystems to adopt hybrid designs with container orchestration and fine-tuned security for their needs.

4. System Architecture and Integration

4.1. Technology Comparison

As shown in the literature review, relational databases, big data systems, and cloud computing platforms are the main technologies that influence financial data management. Each of them offers its individual strengths. When harmonized, they can significantly influence financial modeling and data analysis [

93].

Relational databases (

Figure 2) are traditionally considered the gold standard of structured data management. They are purpose-built for efficient query processing, rigorous schema enforcement, and robust transactional support. Their strengths lie in established query optimizations and concurrency controls, with some DBs offering schema distribution [

94]. In financial contexts, these systems ensure consistency and integrity when recording high-frequency transactions, managing account balances, or generating regulatory reports. Well-known examples include Enterprise DBs (Oracle Database, Microsoft SQL Server, and IBM DB2) and Open-Source DBs (PostgreSQL, MariaDB, and MySQL Community Edition). Of the Open-Source DBs, PostgreSQL offers the most permissive BSD-style license, which comes with minimal restrictions [

95]. Both MySQL and MariaDB use the GPLv2 license [

96,

97]. They are free to use within the company, but distributing them with proprietary software creates copyleft obligations. As such, PostgreSQL is the most flexible solution for enterprises that want to maximize license flexibility while reducing legal expense. MySQL provides a well-established dual-licensing option for those who are satisfied with GPL compliance or are prepared to pay for commercial licenses. MariaDB strikes a balance between strong open-source commitments and commercial flexibility.

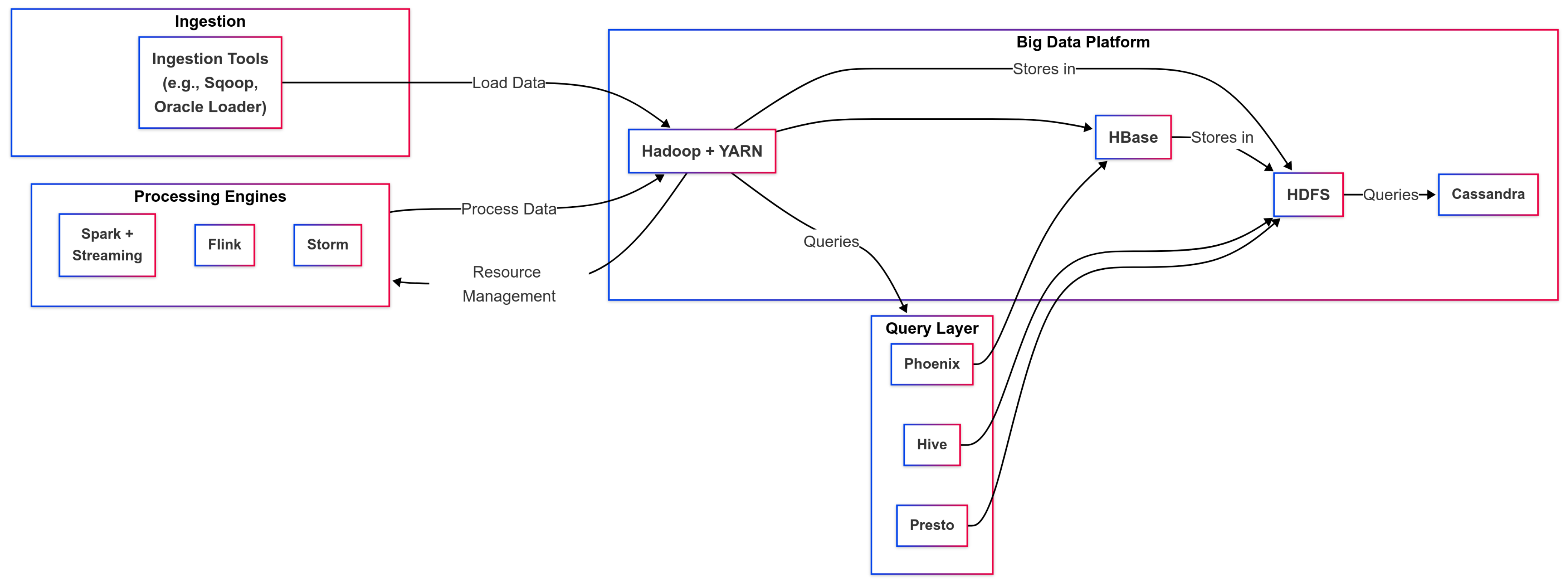

As financial institutions have to deal with ever-growing and increasingly heterogeneous datasets, big data frameworks, such as Hadoop and Apache Spark, are key platforms for distributed processing and storage (

Figure 3) beyond relational databases. Hadoop accommodates large-scale data through its distributed file system, making it suitable for high-volume, batch-oriented analytics, while Apache Spark delivers rapid in-memory data processing and is especially valuable for time-sensitive operations, such as real-time market data analysis and iterative machine learning workflows [

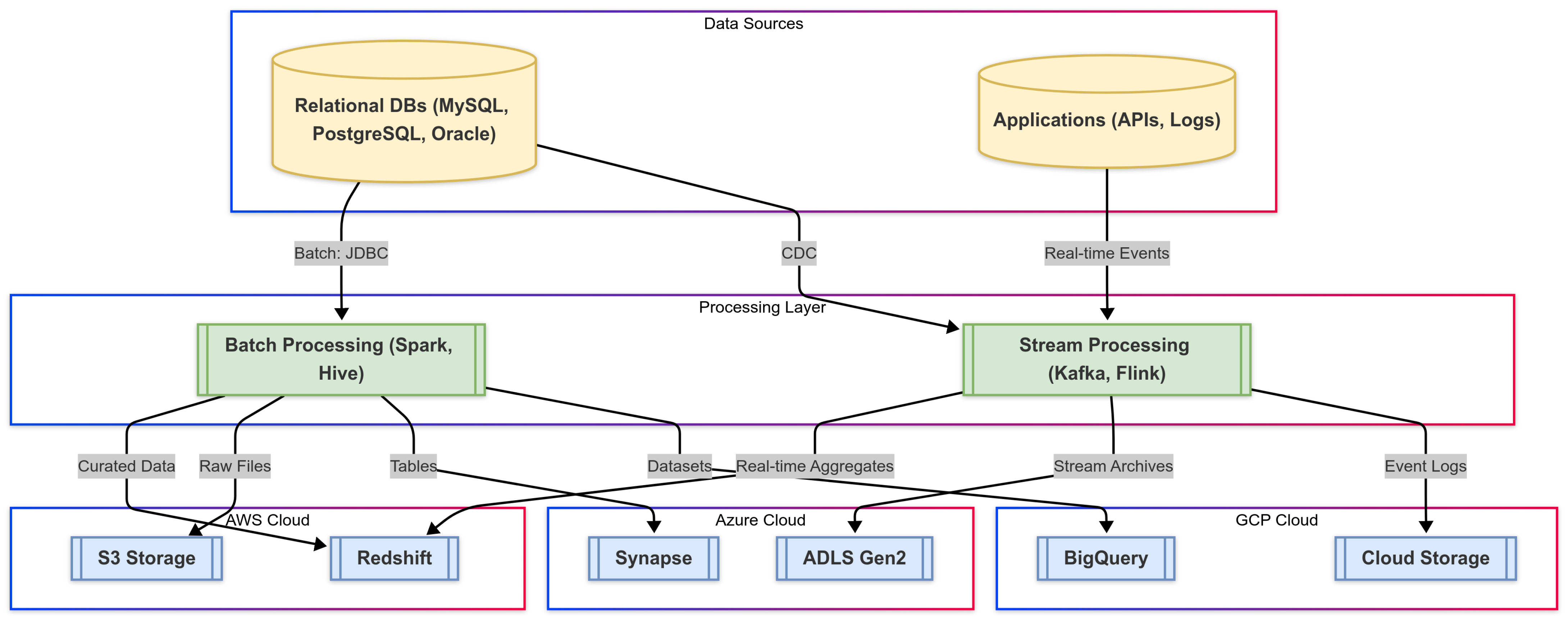

98]. These frameworks expand the analytical capabilities of organizations that must handle data that exceed the limits of traditional databases.

Cloud-based offerings (

Figure 4) represent an alternative to on-site solutions in data management and computational resource allocation. By providing on-demand scalability, cloud providers, such as Amazon Web Services, Microsoft Azure, Google Cloud Platform, and IBM Cloud, effectively integrate relational and big data infrastructures with minimal on-premises overhead. This elastic model allows financial institutions to align infrastructure costs with workload intensity, while also leveraging advanced AI and analytics services for diverse business applications.

Figure 4 maps the capabilities of cloud computing to the requirements of the finance industry in a bipartite mapping diagram. The upper part of the diagram highlights three core cloud attributes: elastic scalability, fault tolerance with cost optimization, and analytics with AI/ML support. The lower part of the diagram captures the four major cloud service providers (AWS, Microsoft Azure, Google Cloud Platform, and IBM Cloud) with their technical differentiators. All providers promise elasticity, availability, and analytics, but their implementations differ in architecture, tooling, and SLAs. The arrows connecting the two parts indicate which provider offering supports which capability. For example, AWS’s cross-region replication architecture claims to provide 99.95% SLA compliance for payment systems. Azure’s paired region model enables automated failover for core banking with low RPO and RTO during regional incidents. GCP’s multiregion databases enable real-time fraud detection with confidential computing. IBM’s validated financial services stack claims to provide hybrid deployment with FIPS 140-2 encryption. None of these connections rests on vague claims about the cloud. Instead, each is tied to a specific, measurable service-level agreement, such as the 99.99% uptime for Azure virtual machines that are regulatory-compliant.

The comparison in

Table 4 outlines the features, advantages, and drawbacks of the technologies.

Relational databases are essential for processing standard financial transactions because of their powerful query processing and strong data integrity functionality. Hadoop, as well as Apache Spark, allow for distributed storage and high-throughput processing, thus tackling the challenges posed by large volumes of unstructured data [

99]. Cloud computing platforms, like AWS and Azure, provide dynamic capabilities on a cost-effective basis to financial institutions in order to manage volatile spikes in data. Together, these technologies are strengthening strategic decision-making processes within the sector [

100].

4.2. Integration Strategies

The integration of relational databases, big data, and cloud computing is considered a milestone in addressing modern data management challenges. This section investigates methodologies and technologies for the effective transfer and transformation of data across these platforms. Our objective is to achieve interoperability that utilizes the unique advantages of each technology while mitigating their shortcomings. The fusion of relational databases, big data frameworks, and cloud services represents a reliable method for managing large, complex volumes of structured and unstructured data. This framework (

Figure 5) establishes the basis for a dynamic ecosystem comprising data transformation and integration, primarily through ETL (Extract, Transform, Load) processes and analytical operations, supporting accurate decision making in real time. Employing Apache Sqoop for smooth data migration between Hadoop systems and relational databases, alongside Apache Kafka for real-time data streaming, shows that interoperability can be achieved [

101]. Such an approach enables the analysis of a large spectrum of data, which is necessary for the deep analytics and informed strategic direction needed to surpass conventional data management limitations.

For an efficient migration process between big data and relational databases, data transformation is necessary. This requires advanced ETL workflows to provide accurate data translation and transportation between systems. Apache Sqoop is utilized for the bulk transfer of data and effectively manages both import and export operations, while Apache Kafka facilitates dynamic data streaming between relational and big data systems, enabling prompt analysis and fast decision-making processes [

102]. In addition to these tools, the integration of relational databases with cloud computing offers even more advantages. Cloud data warehousing platforms, such as Amazon Redshift and Google BigQuery, offer strong capabilities concerning the analysis of information from relational databases within a cloud framework. Migration tools and hybrid cloud models not only facilitate the transition of databases to the cloud, but also enhance flexibility and scalability by integrating on-premises resources with cloud-based services [

103,

104].

Cloud storage and computational services can offer scalable solutions for big data projects: platforms like Amazon S3 and EC2, or Google Cloud Storage and Compute Engine, provide extensive data storage and processing capabilities. At the same time, services such as AWS EMR and Google Dataproc simplify the management and scaling of these initiatives within the cloud [

105].

In addition, applications that span relational databases, big data, and cloud systems benefit from APIs that allow easy data transfer across systems and applications. Keeping the data in a standard format across systems (e.g., JSON or Avro) also eases integration. Moreover, it is important to monitor data security and regulatory compliance across integration points. Key issues include encryption, access controls, and adherence to data protection laws [

106].
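As a minimal sketch of exchanging records in a standard format, the example below round-trips a transaction through JSON using only the Python standard library. The `Transaction` fields are hypothetical; the amount is carried as a string so that monetary precision is not lost to binary floating-point rounding.

```python
import json
from dataclasses import asdict, dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    account_id: str
    amount: Decimal   # Decimal avoids binary floating-point rounding of money
    currency: str
    booked_at: str    # ISO 8601 timestamp


def to_json(tx: Transaction) -> str:
    """Serialize with the amount as a string so that no precision is lost."""
    payload = asdict(tx)
    payload["amount"] = str(tx.amount)
    return json.dumps(payload, sort_keys=True)


def from_json(text: str) -> Transaction:
    """Rebuild the transaction, restoring the Decimal amount."""
    payload = json.loads(text)
    payload["amount"] = Decimal(payload["amount"])
    return Transaction(**payload)


tx = Transaction("tx-1", "acc-9", Decimal("1250.10"), "EUR", "2024-01-15T09:30:00+00:00")
wire = to_json(tx)
print(wire)
print(from_json(wire) == tx)
```

A schema-based format such as Avro would additionally enforce field types at the integration boundary; JSON is used here only because it needs no third-party library.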

The integration of different technologies and approaches can be quite complex because they come with their own set of advantages and disadvantages (

Table 5). Thus, any integration initiative should tackle the issues of data compatibility and system interoperability. Moreover, the unified environment should work smoothly and efficiently. Optimizing cloud and big data resources requires resource management, proper resource allocation, and security. Banks and other financial institutions should address these points to achieve reliable performance and regulatory compliance.

4.3. Hybrid Architecture and Practical Implementation

Integrating these technologies within the financial sector is a critical challenge that also demands data security and privacy. Any integrated system should be designed for compliance with financial regulations and should employ advanced security measures and encryption techniques to protect sensitive financial data and guarantee their integrity and confidentiality [

107].

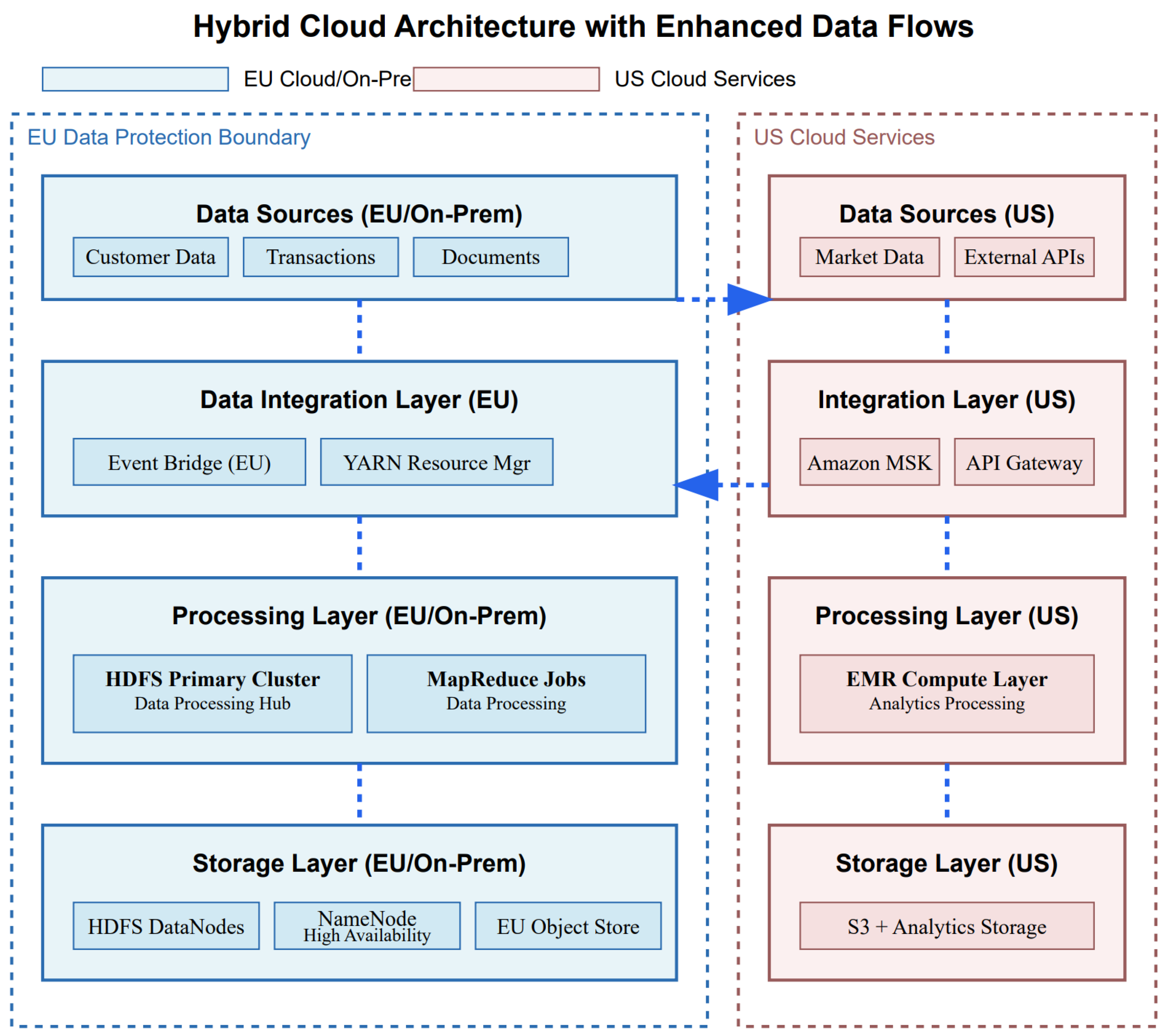

The proposed architectural design and integration tactics for relational databases, big data solutions, and cloud platforms aim at improving financial performance analysis, particularly through machine learning. They address the specific needs of financial entities through efficient data handling, scalable processing, and the incorporation of analytical innovations. Ensuring system compatibility, optimal resource usage in cloud and big data settings, and robust security compliance not only supports financial modeling and analysis, but also allows for flexible adjustments to future technological advances. A hybrid cloud model allows financial or other highly regulated institutions (e.g., EU institutions) to keep sensitive or regulated data within their own private data centers or in EU-based clouds. Non-sensitive data or workloads that require high elasticity can be placed in a public cloud, potentially outside the EU, so that public cloud usage (including US-based services) is limited to less privacy-critical applications or pseudonymized datasets. This approach helps ensure adherence to data protection rules for critical datasets while optimizing infrastructure costs. However, implementing such a model requires careful planning to avoid common pitfalls, including hidden data exposures, vendor lock-in, and encryption vulnerabilities.

Table 6 summarizes these key advantages, potential challenges, and implementation steps for EU financial institutions when adopting a hybrid cloud strategy.

In addition, a hybrid setup can also provide redundancy and valuable business continuity protection. If regulatory or political changes invalidate certain transfers to the public cloud, the institution can switch critical operations to the private cloud to reduce downtime and compliance risks. However, splitting data and workloads between private/on-premises and public clouds can introduce governance and security challenges that might not be worth the effort if the data are not particularly sensitive or heavily regulated. Furthermore, some applications, especially modern cloud native services, may be difficult to split across regions while maintaining full functionality [

108]. If the data are sensitive and heavily regulated, and if storing them on-premises or in EU clouds is not an option (for example, due to a lack of needed features), a Transfer Impact Assessment (TIA) can be run to assess whether a US transfer can still be legal if Standard Contractual Clauses (SCCs) are signed and enforced with technical safeguards (e.g., encryption, split processing, etc.). Other solutions, such as Binding Corporate Rules (BCRs) or derogations under GDPR Article 49, can be hard to secure; consent, for instance, can be rendered invalid if it is bundled or not freely given (e.g., in an employment context).

As shown in

Figure 6, we propose a hybrid cloud approach. The architecture implements two primary data flow patterns based on data classification. Personal data, including customer information and transactions, flows through EU-compliant systems, while non-personal data, like market analytics, can remain on US cloud services. Of course, the final designs must be tailored to the compliance obligations of each firm, especially if the local/EU data residency laws are stricter than the DPF, or if the DPF is challenged. The integration layer handles three important patterns.

The first pattern is event-driven integration. Amazon Managed Streaming for Apache Kafka (Amazon MSK) in the US zone and Amazon EventBridge in the EU zone work together through an event routing mechanism. Such managed systems, where the major cloud providers excel, are difficult to replicate on premises or with other European cloud services. When market data arrive through external APIs, MSK processes these events and can trigger analytical workflows. However, the interaction becomes more complex when dealing with business events that might contain both personal and non-personal data. In these cases, EventBridge in the EU implements an event-filtering pattern:

Events are first processed through a payload analyzer that classifies data elements;

Personal data remain within the EU boundary, triggering local workflows;

Non-personal elements are extracted and routed to MSK for analytics;

A correlation ID system maintains the relationship context across boundaries.

For example, when processing a trading event, customer details stay in the EU, while anonymized trading patterns can flow to US analytics.
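The filtering steps above can be sketched in a few lines of Python. This is a simplified illustration rather than an EventBridge or MSK configuration: the payload analyzer is reduced to a hypothetical set of field names, whereas a real classifier would also inspect values.

```python
import uuid

# Hypothetical classification: field names treated as personal data.
PERSONAL_FIELDS = {"customer_id", "name", "iban", "email"}


def split_event(event: dict) -> tuple[dict, dict]:
    """Split one business event into an EU-only part and an exportable part."""
    # A random correlation ID links both parts without revealing the customer.
    correlation_id = str(uuid.uuid4())
    personal = {k: v for k, v in event.items() if k in PERSONAL_FIELDS}
    non_personal = {k: v for k, v in event.items() if k not in PERSONAL_FIELDS}
    eu_event = {"correlation_id": correlation_id, **personal}      # stays in the EU
    us_event = {"correlation_id": correlation_id, **non_personal}  # routed to analytics
    return eu_event, us_event


trade = {
    "customer_id": "c-001",
    "name": "A. Rossi",
    "instrument": "XYZ",
    "quantity": 100,
    "side": "buy",
}
eu_event, us_event = split_event(trade)
```

Removing direct identifiers is not by itself a guarantee of anonymity; whether the exported part can be re-identified from the remaining attributes still has to be assessed case by case.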

The API Gateway in the US zone implements the second pattern, i.e., a facade pattern that presents a unified interface for external services while maintaining data sovereignty. This works through the following:

Request classification at the gateway level;

Dynamic routing based on data content;

Transformation rules that strip personal data before US processing;

Response aggregation that combines results from both zones.
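A minimal sketch of this facade, assuming two stand-in backend functions in place of real EU and US services, could look as follows. The field classification and function names are hypothetical.

```python
PERSONAL_FIELDS = {"customer_id", "name", "iban"}  # hypothetical classification


def eu_backend(request: dict) -> dict:
    """Stand-in for an EU-zone service that may see personal data."""
    return {"customer_found": "customer_id" in request}


def us_backend(request: dict) -> dict:
    """Stand-in for a US-zone analytics service that must never see personal data."""
    if not PERSONAL_FIELDS.isdisjoint(request):
        raise ValueError("personal data crossed the zone boundary")
    return {"fields_analyzed": sorted(request)}


def handle(request: dict) -> dict:
    """Facade: classify, route, strip personal data, and aggregate the responses."""
    # Transformation rule: remove personal fields before any US processing.
    stripped = {k: v for k, v in request.items() if k not in PERSONAL_FIELDS}
    response = {"us": us_backend(stripped)}
    # Dynamic routing: only requests touching personal data reach the EU service.
    if request.keys() & PERSONAL_FIELDS:
        response["eu"] = eu_backend(request)
    return response


result = handle({"customer_id": "c-001", "instrument": "XYZ", "quantity": 100})
```

The guard inside `us_backend` is deliberately redundant with the stripping step, so that a routing mistake fails loudly instead of silently exporting personal data.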

The third pattern refers to ETL pipelines in the EU that handle sensitive data transformations but coordinate with US analytics systems. This is managed through the following:

A staged ETL approach where initial processing occurs in the EU;

Aggregation and anonymization steps that prepare data for cross-border movement;

Batch windows that optimize data transfer timing;

Checkpointing mechanisms that ensure consistency across zones.
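The staged approach listed above can be illustrated with a small batch job that pseudonymizes, aggregates, and checkpoints its progress. The salt, batch size, and row layout are assumptions for the example, and an in-memory dictionary stands in for a durable checkpoint store.

```python
import hashlib
from collections import defaultdict

SALT = "rotate-me"  # hypothetical secret that would be kept inside the EU zone


def pseudonymize(customer_id: str) -> str:
    """Replace the customer ID with a truncated salted hash."""
    return hashlib.sha256((SALT + customer_id).encode()).hexdigest()[:12]


def run_batch(rows: list, checkpoint: dict, batch_size: int = 2) -> dict:
    """Process the next batch after the checkpoint and aggregate per pseudonym."""
    start = checkpoint.get("offset", 0)
    batch = rows[start:start + batch_size]
    totals = defaultdict(float)
    for row in batch:
        totals[pseudonymize(row["customer_id"])] += row["amount"]
    checkpoint["offset"] = start + len(batch)  # advance only after the batch succeeds
    return dict(totals)


rows = [
    {"customer_id": "c-001", "amount": 10.0},
    {"customer_id": "c-001", "amount": 5.0},
    {"customer_id": "c-002", "amount": 7.5},
]
checkpoint = {}
first = run_batch(rows, checkpoint)   # processes the first two rows
second = run_batch(rows, checkpoint)  # resumes at the third row
```

Note that salted hashing is generally regarded as pseudonymization rather than anonymization, so the aggregation step, not the hash alone, is what would have to carry the anonymization argument before data leave the EU zone.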

Such a hybrid approach reduces, rather than eliminates, risk through compartmentalization. To guarantee compliance, companies must still routinely review their architecture, maintain appropriate documentation, and closely examine data flows. There should be clear data maps illustrating precisely which kinds of data live in which contexts and how they interact.

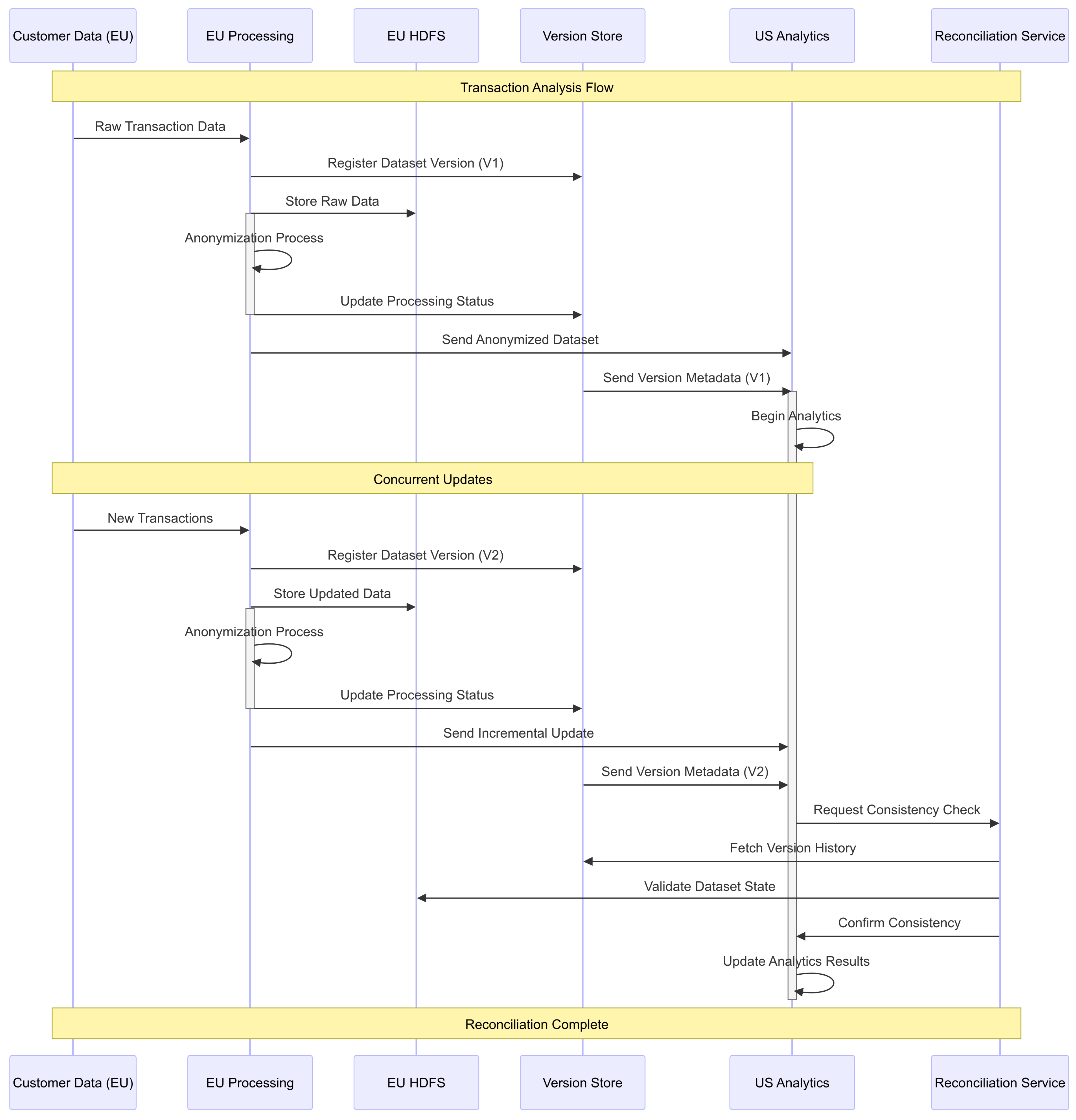

Furthermore, the hybrid architecture introduces eventual consistency challenges. In the proposed hybrid cloud architecture, the eventual consistency manifests itself primarily through the data processing pipeline between the EU and US zones. This differs from traditional BASE (Basically Available, Soft state, Eventual consistency) model scenarios because we are not just dealing with eventually consistent data stores but with an entire processing pipeline that must maintain consistency while respecting data protection boundaries. The challenge is not just about data convergence, but about maintaining analytical integrity across segregated processing environments. When data need to be analyzed across both regions, there are specific consistency considerations.

First, there is the temporal aspect of data synchronization. When the EU HDFS cluster processes personal data and generates anonymized datasets for US analytics, there is a delay before these datasets become available in the US zone. This delay creates a time window in which US analytics systems might be working with slightly outdated information.

Second, there are state management complexities when dealing with long-running analytics processes. For example, if a financial analysis starts in the US zone while data are still being processed in the EU zone, there is a need for rather sophisticated mechanisms to ensure that the analysis incorporates all relevant data points. This often requires implementing versioning and checkpoint systems that can track the state of data across both zones.

A practical example would be analyzing customer transaction patterns. The EU zone processes the raw transaction data, anonymizing them before sending them to the US analytics cluster. During this process, we need to ensure that the anonymization maintains consistent customer cohorts across different time periods, such that any aggregated metrics retain their statistical validity and the analysis results can be correctly mapped back to the original data contexts. To address these challenges, the architecture implements several key mechanisms: version tracking for all datasets moving between zones, explicit timestamp management for data synchronization, reconciliation processes that validate data consistency across regions, and compensation workflows that can adjust for any inconsistencies detected during processing.

Figure 7 shows a scenario in which the data originate in the EU. They are anonymized and versioned before being transferred for US analytics. Concurrent updates are handled through incremental versions, and consistency is ensured through a reconciliation process that validates the state of data across zones by checking the version metadata from the Version Store, validating the dataset state in EU HDFS, and confirming the consistency before analytics results are updated. By following this strategy, while we may have temporary inconsistencies as data flow through the system, we can maintain data integrity and provide accurate analytics results while respecting data protection boundaries.

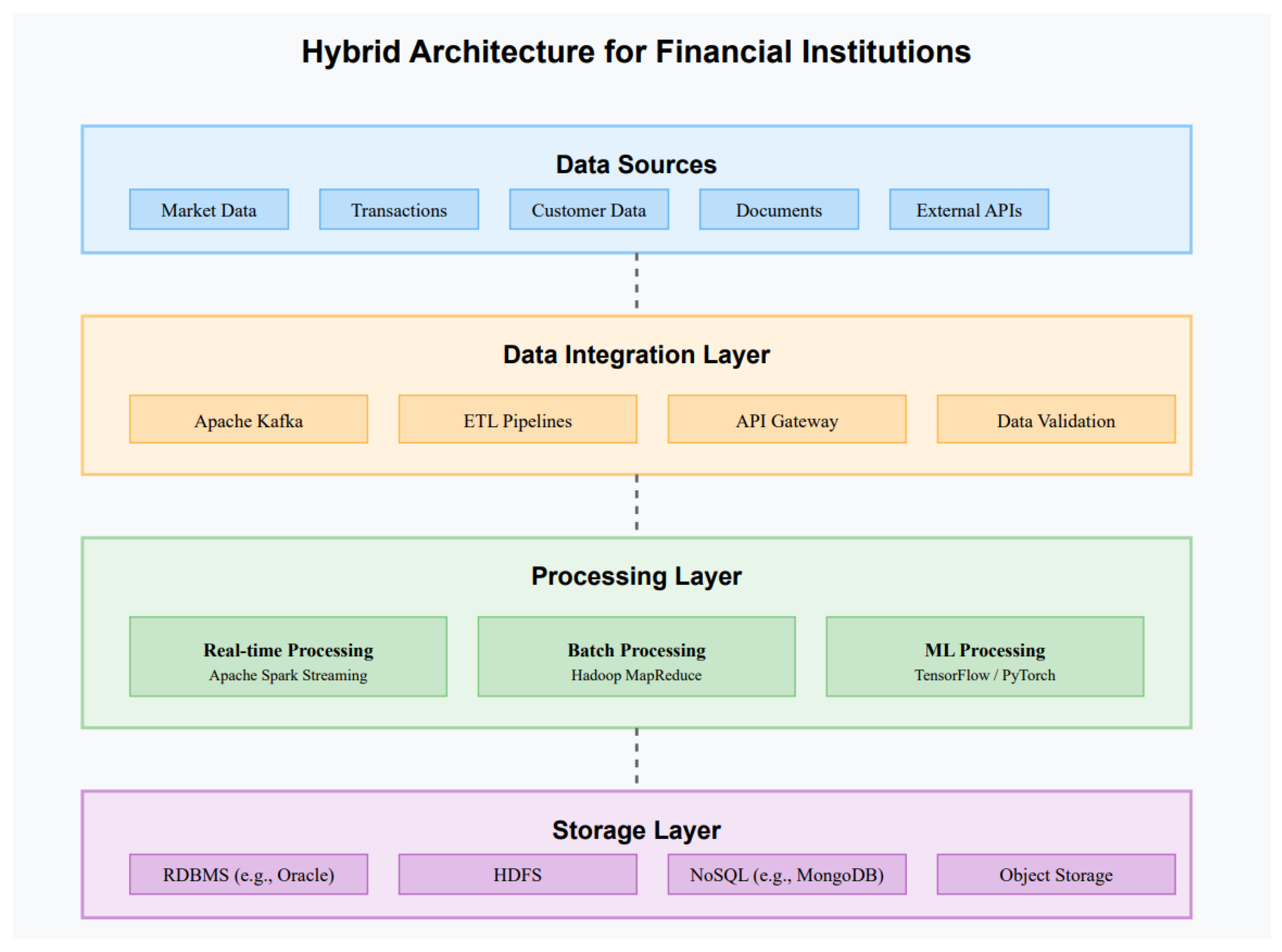
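The versioning and reconciliation steps in this scenario can be sketched as follows. The `VersionStore` class is an in-memory stand-in for the Version Store in the figure, and the dataset name and contents are hypothetical.

```python
import hashlib
import json


def fingerprint(rows: list) -> str:
    """Deterministic content hash of a dataset."""
    canonical = json.dumps(rows, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()


class VersionStore:
    """In-memory stand-in for the Version Store."""

    def __init__(self) -> None:
        self._versions = {}  # dataset name -> list of fingerprints, oldest first

    def publish(self, name: str, rows: list) -> int:
        """Record a new incremental version and return its number (1-based)."""
        history = self._versions.setdefault(name, [])
        history.append(fingerprint(rows))
        return len(history)

    def latest(self, name: str) -> tuple[int, str]:
        """Return the latest version number and its fingerprint."""
        history = self._versions[name]
        return len(history), history[-1]


def reconcile(store: VersionStore, name: str, us_version: int, us_rows: list) -> bool:
    """True only if the US copy matches the latest EU version and its content."""
    latest_version, latest_hash = store.latest(name)
    return us_version == latest_version and fingerprint(us_rows) == latest_hash


store = VersionStore()
v1_rows = [{"cohort": "A", "total": 15.0}]
v1 = store.publish("daily_totals", v1_rows)
v2_rows = v1_rows + [{"cohort": "B", "total": 7.5}]
v2 = store.publish("daily_totals", v2_rows)  # concurrent update in the EU zone

print(reconcile(store, "daily_totals", v1, v1_rows))  # False: US copy is stale
print(reconcile(store, "daily_totals", v2, v2_rows))  # True: safe to update results
```

A failed reconciliation would be the trigger for the compensation workflow, for example by re-transferring the latest version before analytics results are refreshed.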

Building on these compliance and consistency foundations, we propose a comprehensive four-layer architecture (

Figure 8) that integrates various technological components to address the complex requirements of modern financial data processing and analysis.

The data sources layer consists of several inputs that are characteristic of financial institutions. This layer manages market data feeds, transaction records, client information, supporting documents, and external API connections. Ranging from structured transaction logs to unstructured documents, these sources reflect the heterogeneous character of financial data and therefore require a flexible and robust architectural approach.

The data integration layer manages the flow between data sources and processing systems. The real-time data streaming features of Apache Kafka are well suited to time-sensitive financial activities, such as fraud detection and trading. While the API gateway handles external connectors, ETL pipelines manage data transitions across several formats and platforms. For financial organizations under strict regulatory control, data validation systems that guarantee the accuracy and quality of the incoming data are vital.

The processing layer meets varying computing needs. Apache Spark Streaming drives real-time processing for rapid data analysis demands, including trading algorithms and fraud detection. Hadoop MapReduce handles batch processing tasks, such as risk analysis and regulatory reporting, among other large-scale analytical tasks. Powered by frameworks such as TensorFlow and PyTorch, the specialized ML processing component supports analytical jobs such as credit scoring and market prediction.

The architecture’s foundation is the storage layer, which optimizes data management using a variety of storage options. Typical RDBMS systems, such as Oracle, manage structured transactional data that require ACID compliance. While NoSQL databases give extra freedom for semistructured data, HDFS offers distributed storage for massive datasets. Cloud platforms typically provide object storage in a multi-tiered scheme, with hot storage for frequently accessed data, warm storage for occasionally accessed data, and cold storage for rarely accessed archive data. Object storage is the core of a company’s data lake and can effectively manage unstructured data, such as documents and images, with automatic policies that move the data between tiers based on access patterns and age, optimizing both cost and performance. Each tier has different price points and access latencies: hot storage provides subsecond access but costs more per gigabyte, whereas cold storage is significantly cheaper but may have retrieval delays of several hours. This strategy enables organizations to balance performance requirements and storage costs throughout the data lifecycle.
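A tiering policy of this kind can be sketched as a simple rule on the time since the last access. The 30-day and 365-day thresholds and the object names are hypothetical; actual lifecycle policies are configured per provider.

```python
from datetime import date, timedelta

# Hypothetical thresholds; real lifecycle policies are configured per provider.
WARM_AFTER = timedelta(days=30)
COLD_AFTER = timedelta(days=365)


def choose_tier(last_accessed: date, today: date) -> str:
    """Pick a storage tier from the time elapsed since the last access."""
    idle = today - last_accessed
    if idle >= COLD_AFTER:
        return "cold"
    if idle >= WARM_AFTER:
        return "warm"
    return "hot"


today = date(2025, 1, 1)
tiers = {
    "intraday_trades.parquet": choose_tier(date(2024, 12, 30), today),
    "q3_report.pdf": choose_tier(date(2024, 10, 1), today),
    "kyc_scan_2019.tiff": choose_tier(date(2019, 5, 4), today),
}
print(tiers)
```

In a regulated setting, such a rule would usually be combined with retention requirements, so that archive data move to cold storage but are not deleted before the mandated retention period ends.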

LLM-enhanced models, like the NL2SQL models, can help financial firms democratize access to data and make better decisions. The NL2SQL model converts natural language queries to SQL [

109]. Finance firms can leverage NL2SQL models to empower non-technical users to extract complex financial information (performance metrics, risk assessments, compliance reports, etc.) without learning SQL. This reduces the burden on IT experts and enables real-time access to data. The ability to run their own analyses reduces business users’ dependency on IT, so firms can obtain reports faster, reduce turnaround time, and meet regulatory reporting requirements. Firms can also deploy other applications, such as chatbots or automated risk management applications.

The dashed lines in the architectural diagram show the connections between layers, i.e., the bidirectional flow of data through the system. This architecture supports the analytical needs of financial organizations by allowing both the real-time processing of incoming data and the retrospective examination of historical information. Its modular character allows components to be scaled and replaced without affecting the whole system, thereby offering the flexibility needed to adapt to changing financial technology needs. It offers a template for companies seeking to upgrade their data processing capacity while preserving the dependability and resilience needed in financial operations.

This architectural design combines various storage types and improves the management of different data types without compromising performance or compliance requirements. The variety of processing layers can handle workloads ranging from long-term risk assessment to real-time trading decisions. Furthermore, the robust integration layer ensures data consistency and reliability throughout the system, which is essential for maintaining operational integrity and meeting regulatory requirements.

In practice, organizations benefit from (1) advanced data classification and dynamic event filtering, which are often integrated into managed streaming tools (like Apache Kafka and Amazon MSK) that route data according to pre-established definitions; (2) versioning and reconciliation across EU/non-EU spaces to minimize latency and temporary divergences; (3) robust encryption and zero-trust principles, which ensure decryption keys and cryptographic controls stay compliant and within the EU; and (4) flexible scaling, with mission-critical/regulated operations remaining on-premises and computationally intensive processes being offloaded to the public cloud.
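The classification-based routing in point (1) and the placement rule in point (4) can be sketched as follows. This is a simplified illustration that does not use any streaming API; the classification labels, destination names, and the fail-closed default are assumptions made for the example.

```python
from typing import Dict, List

# Hypothetical pre-established routing definitions (assumption).
ROUTES = {
    "regulated": "on_prem_eu",
    "pii": "on_prem_eu",
    "analytics": "public_cloud",
}
# Assumed design choice: unknown classifications stay on-premises (fail closed).
DEFAULT_ROUTE = "on_prem_eu"


def route(event: dict) -> str:
    """Pick a destination from the event's classification label."""
    return ROUTES.get(event.get("classification"), DEFAULT_ROUTE)


events = [
    {"id": 1, "classification": "regulated"},
    {"id": 2, "classification": "analytics"},
    {"id": 3},  # missing label
]

batches: Dict[str, List[dict]] = {}
for event in events:
    batches.setdefault(route(event), []).append(event)
```

Sending unlabeled events to the on-premises destination is a conservative choice: a classification error then costs performance rather than compliance.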

5. Performance Analysis

5.1. Problem Definition

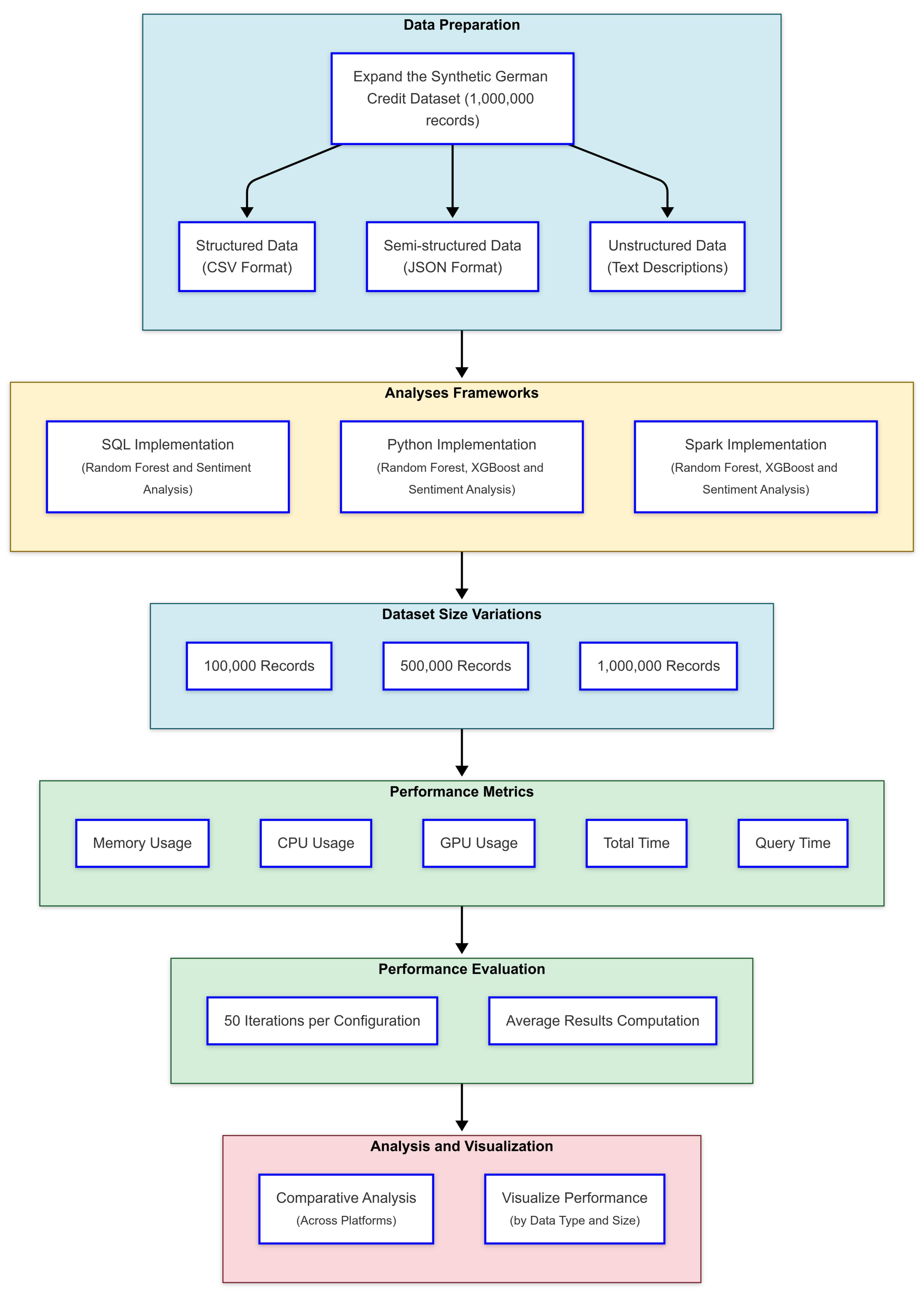

In this section, we evaluate the performance of various technologies in handling structured, semi-structured, and unstructured data within the financial sector. Financial institutions frequently rely on data-driven decisions, but the diversity and volume of their datasets often expose significant limitations in current technologies. Relational databases like Oracle SQL excel at processing structured data, but they face inefficiencies when handling semi-structured or unstructured datasets. Big data technologies, such as Hadoop and Spark, address scalability and distributed processing needs but struggle with real-time analytics and transactional integrity. Similarly, cloud platforms provide scalable infrastructure, but they often introduce challenges in compliance, data integration, and security.

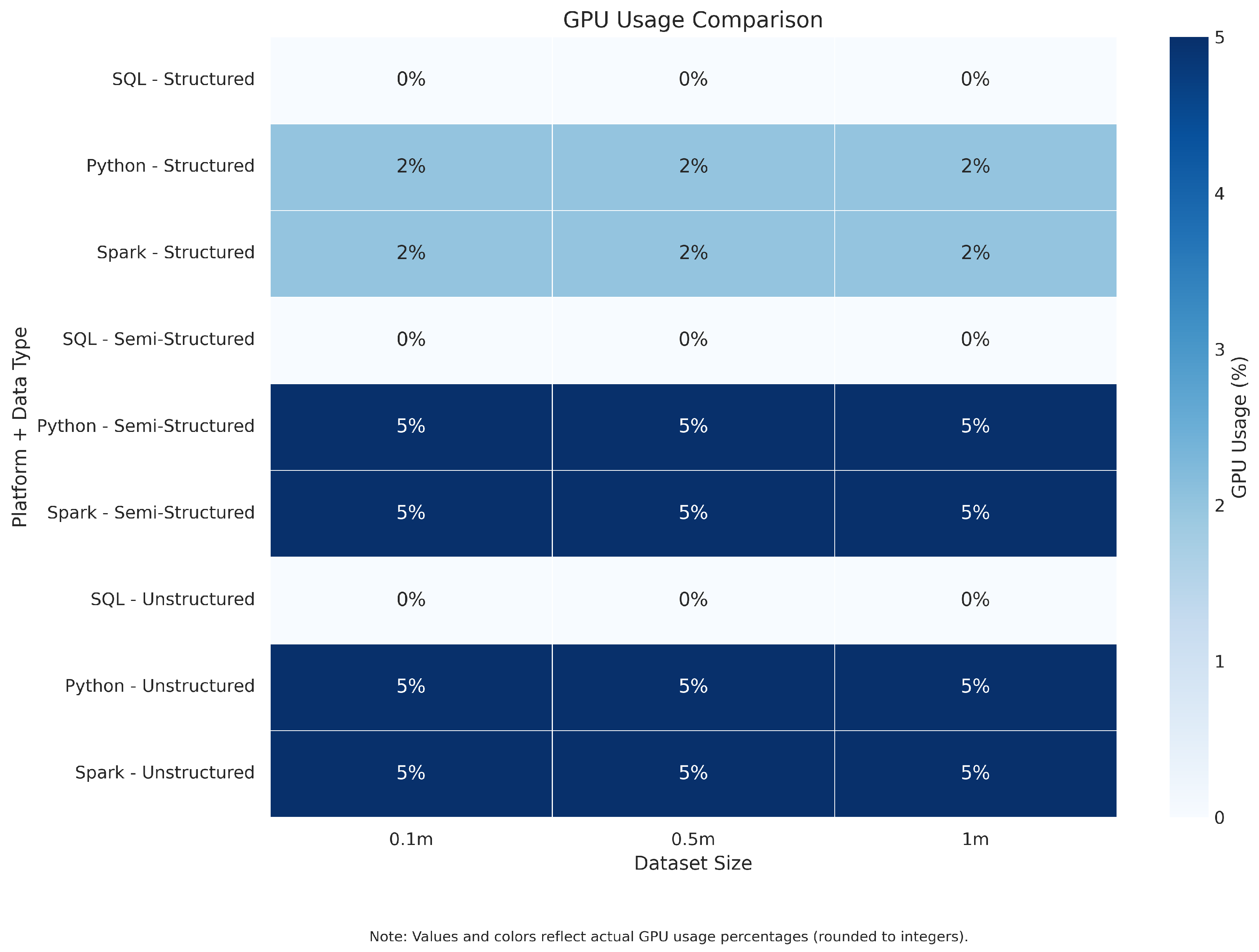

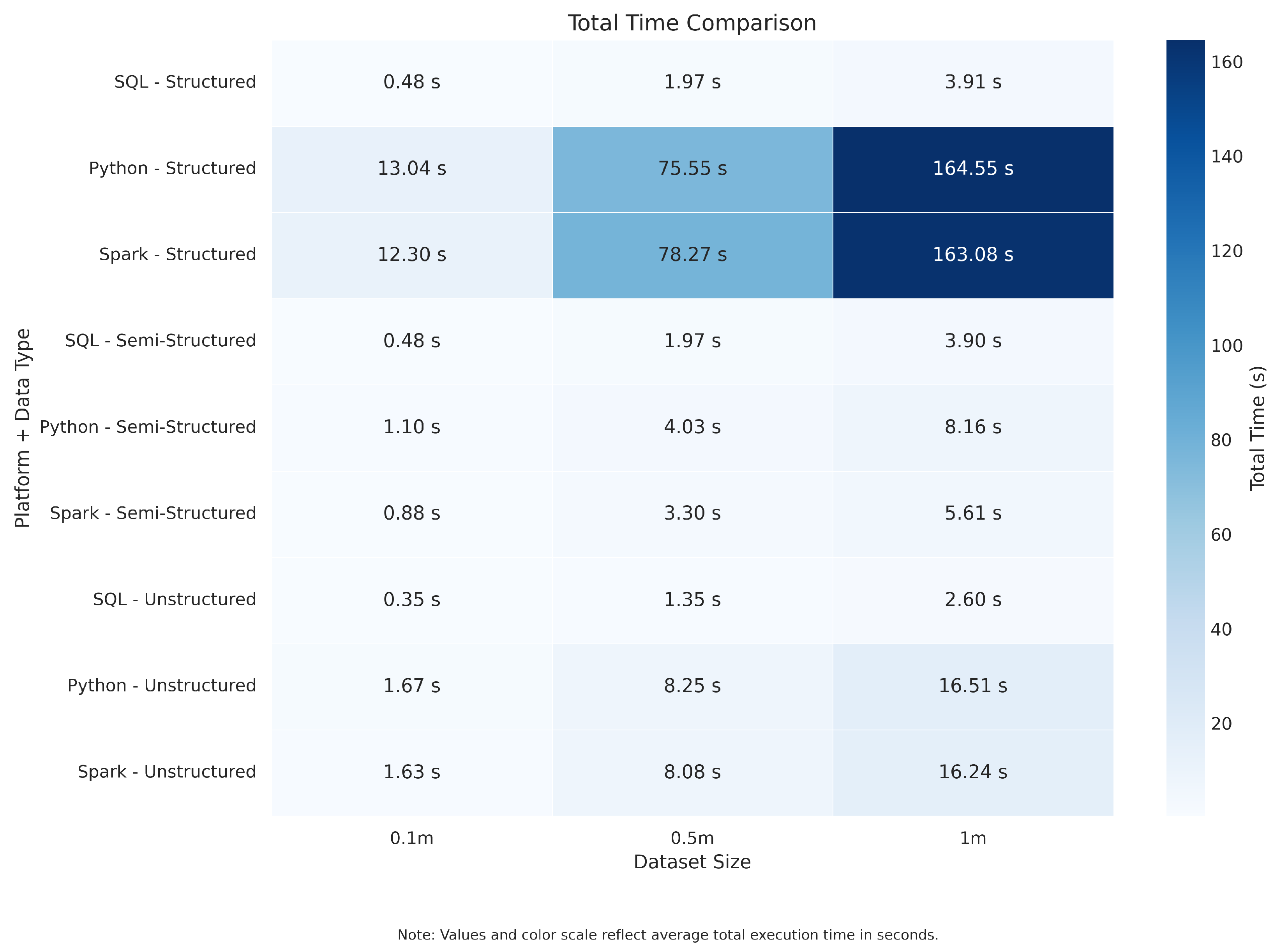

This study tested the ability of these technologies to overcome these challenges by implementing tailored machine learning models for each data type. Structured data were analyzed using the Random Forest algorithm, semi-structured data were processed through Gradient Boosted Trees (GBT), and unstructured data were examined via Sentiment Analysis. Performance was measured using key metrics, i.e., memory usage, CPU usage, GPU usage, total time, and query time, to provide a comprehensive understanding of the strengths and weaknesses of SQL, Python, and Spark in these contexts.

By exploring these technologies, our aim was to enrich the data analytics capabilities of the financial sector, proposing integrated solutions that optimize performance and address technological limitations in different types of data.

Although the SQL testing approach offers preliminary data insights and hypothetical decision-making criteria without actual predictive modeling, Python and PySpark allow for the direct implementation of a Random Forest classifier for creditworthiness prediction. The two implementations follow similar training, prediction, and evaluation steps, but the accuracy they report can vary. The choice between Python and PySpark depends on specific factors, such as dataset size, computational resources, and the intended scope of analysis. Python’s scikit-learn is more user-friendly for smaller datasets, whereas PySpark is the preferable choice for processing large data volumes [110,111].
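As an illustration of what rule-based decision criteria in SQL can look like, in contrast to a trained classifier, consider the following sketch. It is not the query used in this study: the `applicants` table, its columns, and the thresholds are hypothetical.

```python
import sqlite3

# Hypothetical applicant data (assumption, for illustration only).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE applicants ("
    "id INTEGER PRIMARY KEY, income REAL, debt REAL, late_payments INTEGER)"
)
conn.executemany(
    "INSERT INTO applicants VALUES (?, ?, ?, ?)",
    [
        (1, 60000.0, 12000.0, 0),
        (2, 30000.0, 24000.0, 3),
        (3, 45000.0, 9000.0, 1),
    ],
)

# Fixed thresholds stand in for the decision criteria; nothing is learned from data.
rule_sql = """
SELECT id,
       CASE WHEN debt / income < 0.4 AND late_payments <= 1
            THEN 'creditworthy'
            ELSE 'review'
       END AS decision
FROM applicants
ORDER BY id
"""

decisions = conn.execute(rule_sql).fetchall()
```

A Random Forest would instead learn such thresholds, and their interactions, from labeled historical records.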

5.2. Performance Analysis and Results

This section discusses the results from performance tests carried out using SQL, Python, and Apache Spark across structured, semi-structured, and unstructured datasets. The findings directly address Research Question 3 (RQ3), which explores the influence of relational databases, big data platforms, and cloud technologies on financial data management and analysis. Analyzing execution time, memory usage, and CPU utilization allows us to build a practical evaluation framework, which is relevant for financial institutions aiming to improve the efficiency of their data storage and analytics systems.

The evaluation metrics were chosen for their importance in financial scenarios. Execution time directly reflects responsiveness and transaction processing speed, which is critical for financial institutions dealing with real-time transactions and analytics. Memory usage metrics help evaluate how efficiently resources are being used, which has a direct impact on both cost-effectiveness and the scalability of financial systems. CPU utilization offers insight into computational efficiency and can highlight processing bottlenecks that might compromise system performance or stability, especially during peak financial workloads.

To improve the reliability of the results, each performance test was run 50 times. Tests were conducted on datasets ranging from 100,000 to 1,000,000 records, using a consistent hardware setup on Google Colab with an NVIDIA A100 GPU. This environment was selected for its accessibility, reliability, and stable performance, which are important for ensuring the reproducibility and comparability of the results. The NVIDIA A100 GPU played a key role due to its strong parallel processing capabilities, making it well suited for handling the large datasets, complex computations, and high processing demands that are typical of financial data workflows. In addition, GPU acceleration led to noticeable reductions in execution time and enhanced the performance of the distributed tasks, aligning with the computational requirements of modern financial systems.
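A repeated-measurement harness of this kind can be sketched with the Python standard library. This is a minimal illustration under stated assumptions, not the instrumentation used in this study: `benchmark` and `sample_task` are hypothetical names, and `tracemalloc` reports only memory allocated by Python itself, not total process memory.

```python
import statistics
import time
import tracemalloc


def benchmark(task, runs=50):
    """Run `task` repeatedly and summarize wall-clock time and peak Python memory."""
    durations, peaks = [], []
    for _ in range(runs):
        tracemalloc.start()
        start = time.perf_counter()
        task()
        durations.append(time.perf_counter() - start)
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        peaks.append(peak)
    return {
        "runs": runs,
        "mean_seconds": statistics.mean(durations),
        "stdev_seconds": statistics.stdev(durations),
        "max_peak_bytes": max(peaks),
    }


def sample_task():
    # Placeholder workload; a real test would run a query or a model fit.
    return sum(x * x for x in range(10_000))


summary = benchmark(sample_task)
```

Because memory tracing adds overhead to each run, timing and memory would ideally be measured in separate passes when precise durations matter.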

Future evaluations could include additional factors to improve the depth and relevance of the testing framework. For example, monitoring GPU utilization may provide more detailed insights into how effectively hardware acceleration is used in data processing. Measuring network latency and disk I/O throughput would also be useful, particularly in the distributed or cloud-based environments common in financial institutions, offering a more complete picture of system performance. Finally, including metrics related to reliability and fault tolerance would help evaluate how well the platforms can handle real-world operational demands.

Table 7 summarizes the results observed during testing, highlighting SQL’s superior performance in handling structured datasets (primarily due to its low memory consumption and quick execution times), Python’s adaptability for semi-structured data, and Spark’s excellent capability with unstructured and large-scale semi-structured data, leveraging its distributed computing capabilities. These are, of course, synthetic tests that attempt to mimic real-world scenarios. Actual performance can be highly dependent on the nature of the queries, indexes, cluster size, network, etc. In our tests, we used Python 3.11 and Spark 3.5.4.

Cross-validation was performed using a k-fold methodology for machine learning components, helping to verify the consistency of our findings across different data partitions. The error analysis revealed several important considerations.

Structured datasets (e.g., Table 8) are characterized by a consistent schema, making them ideal for systems like SQL. In this study, these datasets included information such as customer account details, transaction logs, and financial histories. SQL efficiently processed these datasets due to its ability to handle predefined schemas and execute complex queries rapidly.

Semi-structured datasets (e.g., Table 9), such as JSON files, combine structured elements with hierarchical data formats. This flexibility allows for varied data organization, making Python a suitable choice due to its extensive libraries, like Pandas for data manipulation and Scikit-learn for machine learning tasks.
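The mix of structured and hierarchical elements in a JSON record, and the flattening step typically needed before tabular analysis, can be shown with a short sketch. The record and the `flatten` helper are hypothetical; Pandas offers comparable functionality through `pandas.json_normalize`.

```python
import json


def flatten(record, parent_key="", sep="."):
    """Flatten nested dictionaries into a single level with dotted keys."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        else:
            # Lists and scalars are kept as-is in this sketch.
            flat[full_key] = value
    return flat


# Hypothetical transaction record (assumption, for illustration only).
raw = (
    '{"customer": {"id": 7, "address": {"country": "PT"}}, '
    '"amount": 120.5, "tags": ["card", "online"]}'
)
row = flatten(json.loads(raw))
```

Each flattened record can then be treated as one row of a table, with dotted keys as column names.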

Unstructured datasets, as illustrated in Table 10, include free-form text and customer feedback that pose unique challenges due to their lack of a predefined format. Spark excelled in processing these datasets, leveraging its distributed architecture to manage the computational load effectively. Textual data, such as customer reviews or social media sentiments, were analyzed using Spark’s ability to scale and perform real-time processing, enabling actionable insights for financial institutions. These datasets often require advanced natural language processing to extract meaningful information, such as identifying sentiment trends or detecting fraudulent patterns. Spark’s ability to transform disorganized, unstructured data into meaningful analytics demonstrates its critical role in modern financial data management.
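To illustrate what sentiment labeling of free-form text involves, the sketch below uses a toy word-list approach in plain Python. It is a deliberately simple stand-in, not the Spark-based analysis used in this study; the word lists and example reviews are hypothetical.

```python
import re

# Hypothetical toy lexicon (assumption); real systems use trained models
# or much larger curated lexicons.
POSITIVE = {"good", "great", "fast", "helpful", "easy"}
NEGATIVE = {"bad", "slow", "fraud", "unhelpful", "confusing"}


def sentiment(text: str) -> str:
    """Label text by counting positive and negative lexicon words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great app, transfers are fast and easy.",
    "Support was slow and unhelpful.",
    "I opened an account last week.",
]
labels = [sentiment(r) for r in reviews]
```

The same per-record function could be applied in parallel across partitions of a large corpus, which is where a distributed engine becomes useful.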

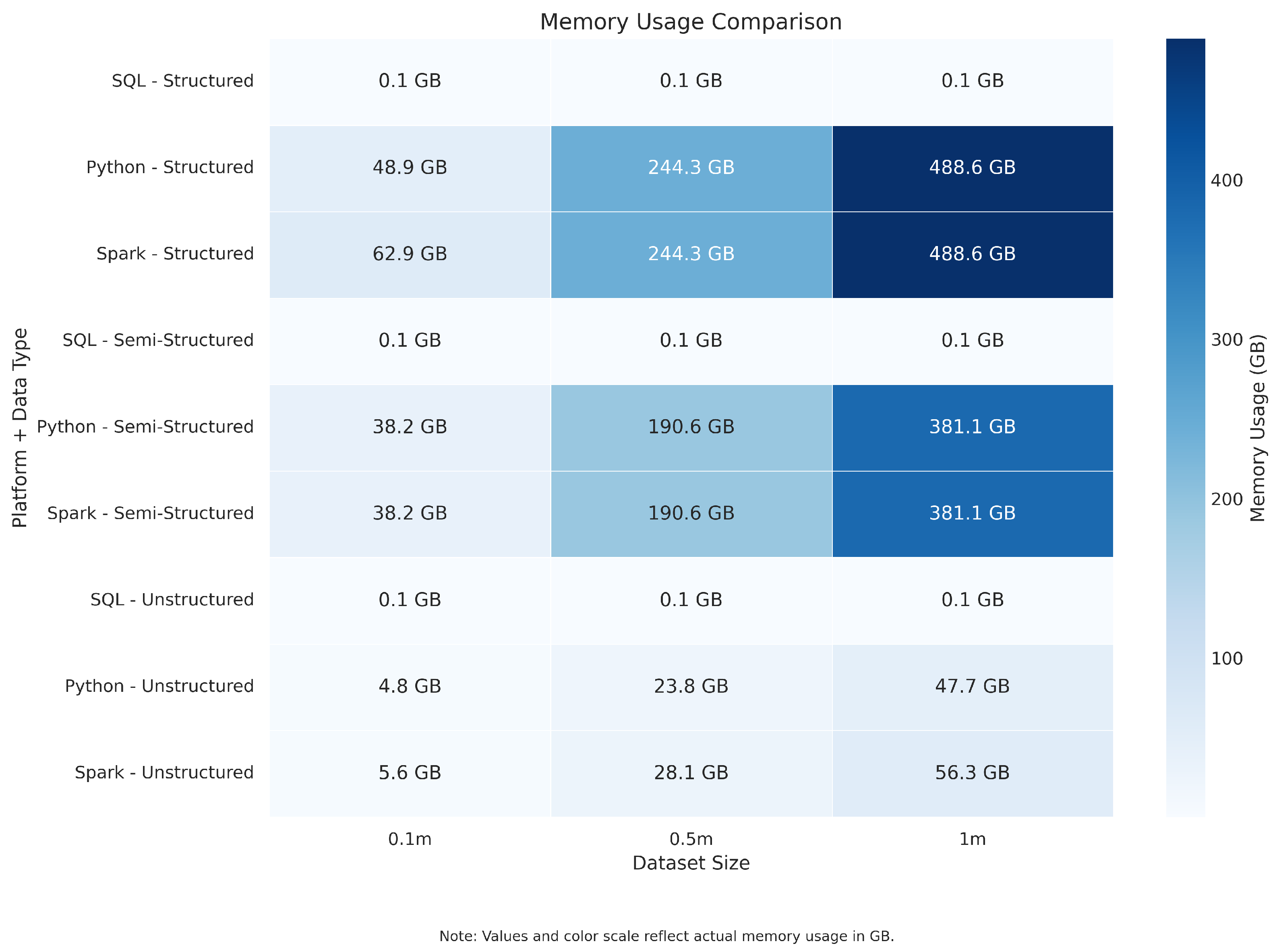

As shown in Figure 9, SQL consistently demonstrated its efficiency in keeping memory usage remarkably low, even as the dataset size expanded from 100,000 records to 500,000 records and finally to 1,000,000 records. This trend held not only for structured datasets but also for semi-structured and unstructured data, which is a testament to the resource efficiency of its design. Python, on the other hand, showed a notable increase in memory usage as the datasets grew, particularly for structured data, where it reached 488 MB at the largest size. This heavy reliance on memory shows Python’s limitations in handling scalability efficiently. Spark was able to strike a balance, maintaining stable memory usage across all dataset types and sizes. This makes it particularly effective when dealing with large unstructured datasets, where managing resources is key.

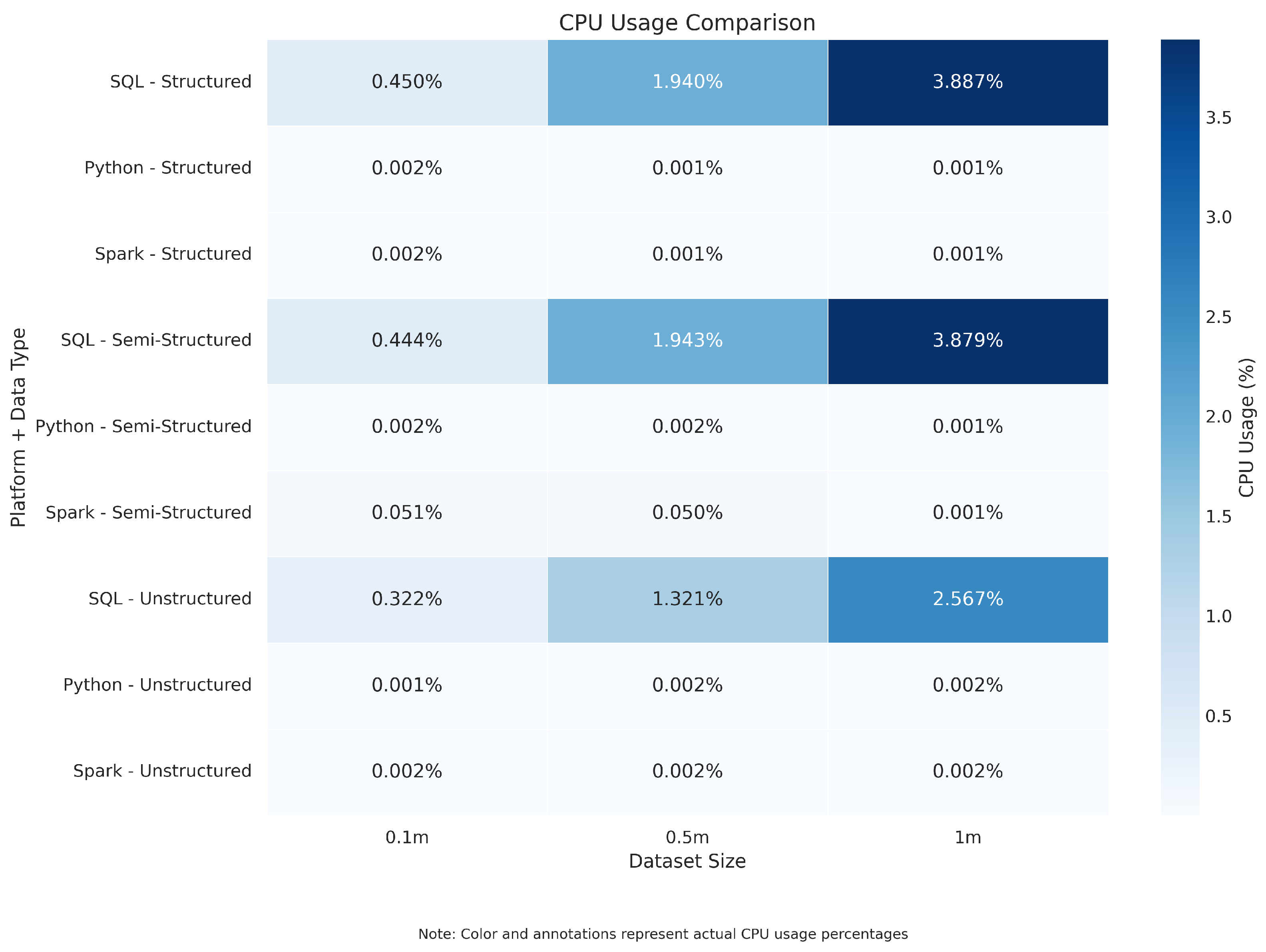

As depicted in Figure 10, SQL efficiently managed CPU usage, delivering consistent performance across all dataset sizes, particularly for structured data. This stability reflects its ability to optimize processing power effectively. Spark also showed impressive performance, distributing tasks efficiently across its architecture, which was especially evident in its handling of large unstructured datasets. Python exhibited minimal CPU involvement, relying heavily on memory resources.

Both Python and Spark effectively utilize GPUs for processing semi-structured and unstructured data, but they do so in very different ways (Figure 11). Spark, in particular, stands out for how well it leverages GPUs to significantly reduce processing times for large datasets. This makes it a powerful choice for high-performance analytics. Python also uses GPUs, though its overall scalability limitations diminish its effectiveness. SQL, by design, does not rely on GPU resources, instead leveraging CPU power to achieve its results.

The performance of SQL continued to stand out for structured data, where it managed to process even the largest datasets with impressive speed (Figure 12). There was a slight increase in processing time for the unstructured data, but SQL still delivered reliable and consistent results. Python struggled more noticeably as the dataset sizes increased, particularly with structured data, which points to scalability challenges when handling larger workloads. In contrast, Spark proved its robustness and adaptability. It reliably handled large datasets of all types, with its scalability becoming particularly evident when datasets exceeded 500,000 records, especially in the case of unstructured data.

SQL is clearly the leader in query performance, maintaining low query times across structured, semi-structured, and unstructured datasets, even at the largest scales (Figure 13). This reliability makes it ideal for scenarios where speed and precision are critical. Spark performs well too, especially when dealing with large unstructured datasets, where it often outpaces Python. However, Python continues to lag behind in query performance, with slower times across all data types.

5.3. Implications for Financial Data Management and Applications

By connecting these visualizations to the respective data types and dataset sizes, this study provides a comprehensive road map for financial institutions. This guidance helps institutions align technology choices with their specific needs, ensuring optimal performance and scalability across diverse data workloads.

SQL stands out as the most reliable choice for structured data, which plays a critical role in financial operations, like transaction processing and regulatory reporting. Python’s versatility makes it ideal for experimental and semi-structured data tasks, including predictive modeling. Spark’s ability to scale efficiently makes it the top choice for unstructured data, addressing the growing demand for advanced analytics in areas like fraud detection and customer insights. These findings highlight the need for a tailored approach in leveraging these technologies to address diverse data challenges.

Financial institutions can benefit from integrating these technologies. SQL can efficiently handle operational workloads, Python can drive machine learning and transformation tasks, and Spark can process large-scale data in real time. Advanced ETL workflows and hybrid cloud deployments further amplify the synergy between these tools, creating a seamless, scalable, and interconnected set of systems.

From the above results and other previous studies [8,93,112,113], we observe an alignment in findings despite different contexts (finance vs. other domains): relational databases continue to be central for high-performance management of structured data and transactional integrity; big data frameworks like Spark provide the necessary scalability and capability to handle the growing variety and volume of data; and Python (and associated data science tools) adds flexibility for complex analytics and AI tasks. Rather than one replacing the others, the trend is clearly toward architectural convergence, that is, using each technology where it is most appropriate and integrating them. Financial institutions, in particular, have moved in this direction to modernize their data infrastructure. The concept of a unified data platform (where data can be stored once and accessed through SQL, Python, or Spark as needed) is becoming a reality, as seen with lakehouse implementations and cloud offerings.

One key trend is the improvement of performance in each area so that the gaps narrow. Spark’s continuous improvement of the DataFrame API and SQL support [