Abstract

Deploying thousands of sensors across remote and challenging environments, such as the Amazon rainforest, Californian wilderness, or Australian bushlands, is a critical yet complex task for forest fire monitoring. While our backyard emulation confirmed the feasibility of small-scale deployment as a proof of concept, large-scale scenarios demand a scalable, efficient, and fault-tolerant network design. This paper proposes a Hierarchical Wireless Sensor Network (HWSN) deployment strategy with adaptive head node selection to maximize area coverage and energy efficiency. The network architecture follows a three-level hierarchy. The first level consists of cells of individual sensor nodes that connect to dynamically assigned cell heads. The second level groups these cell heads into clusters, each with an assigned cluster head. At the third level, the cluster heads are divided into regions, each with a region head that reports the information collected from the forest floor directly to a central control sink room for decision-making analysis. Unlike traditional centralized or uniformly distributed models, our adaptive approach uses a greedy coverage maximization algorithm to dynamically select the head nodes that contribute the most sensing coverage at each level. In extensive simulations, the adaptive model achieved 96.26% coverage using significantly fewer nodes, while reducing node transmission distances and energy consumption. These results support the real-world deployment of our HWSN model in large-scale, remote forest regions.

1. Introduction

The increase in forest fires across the United States and other regions has raised significant concerns due to the immense destruction they cause to human lives, property, wildlife, and the environment [1]. In addition to contributing to air pollution, wildfires are becoming more frequent and intense, exacerbated by climate change and human activities [2]. The early detection and accurate prediction of forest fires are crucial for minimizing their impact. However, achieving reliable early warning systems remains a challenge due to the difficulty of predicting fire outbreaks in large and remote areas [3]. Wildfires are driven by natural causes like hot lightning and dry vegetation, intensified by climate change. However, human activities such as arson, debris burning, and negligence are responsible for most wildfire incidents globally [4].

Traditional forest fire prediction and detection methods rely heavily on satellite imagery, watchtowers, and physical sensor networks. While these systems provide valuable information, they often suffer from limited scalability, high operational costs, battery life constraints, and coverage limitations in remote regions [5,6,7,8,9]. Moreover, existing methods primarily focus on post-ignition detection rather than predictive modeling, limiting their effectiveness in preventing large-scale wildfire outbreaks. Additionally, current detection approaches struggle to adapt to complex environmental changes and to ensure the security and integrity of fire-related data [8,9]. To address these limitations, Wireless Sensor Networks (WSNs) have emerged as a promising solution for real-time data collection and fire prediction in large-scale forests [10,11]. WSN nodes are equipped with diverse sensing capabilities, including motion, temperature, humidity, smoke, sound, and air pressure detection. These sensors can either process data locally or transmit it wirelessly for remote analysis [12,13].

Wireless Sensor Networks (WSNs) offer a scalable, low-cost, and energy-efficient solution for environmental monitoring. They consist of spatially distributed autonomous sensors that cooperatively monitor physical or environmental conditions like temperature, humidity, smoke, and sound. These sensors communicate wirelessly, forming a network capable of transmitting collected data to centralized processing units. WSNs excel in the coverage of inaccessible terrains, allow real-time data collection, and can operate in harsh conditions with minimal human intervention. By enabling multi-hop routing and data aggregation, WSNs reduce communication overhead and energy consumption—making them ideal for large-scale forest fire detection and prediction systems [14].

Our previous research introduced a Smart and Secure Wireless Sensor Network (SSWSN) [15], designed to predict forest fire scenarios by leveraging data collected from a network of physical sensors. The SSWSN demonstrated high accuracy in classifying different fire-related scenarios, highlighting its potential as an advanced solution for forest fire management. This approach effectively tackled the limitations of traditional fire prediction methods by incorporating security mechanisms to safeguard data integrity and enhance system reliability.

Building on our previous research [16], we explored the integration of virtual sensors to enhance the predictive capabilities of our Smart and Secure Wireless Sensor Network (SSWSN) model. Virtual sensing transforms wireless sensor networks (WSNs) by estimating unmeasured variables through computational models and data-driven techniques such as machine learning. By leveraging deterministic system dynamics and adaptive learning algorithms, virtual sensors significantly improve efficiency, adaptability, and scalability across various applications, including environmental monitoring, healthcare, and automation. This approach addresses the limitations of traditional sensing methods, providing a cost-effective, accurate, and intelligent solution for complex systems while reducing dependence on extensive physical sensor deployments.

However, scaling such systems to larger and more complex environments remains a challenge due to the logistical and financial constraints associated with deploying a greater number of physical sensors [17]. Building on our previous research, this study presents an adaptive and hierarchical deployment strategy for Wireless Sensor Networks (WSNs), targeting large-scale environments such as the Amazon rainforest, Californian forests, and Australian bushlands. Instead of static placement, our approach dynamically selects cell heads, cluster heads, and region heads based on greedy coverage optimization to minimize redundancy and reduce the number of required head nodes. This significantly lowers deployment costs while maximizing area coverage using fewer physical sensors. The adaptive clustering technique ensures that sensor resources are intelligently allocated in response to spatial distribution and environmental demands. Overall, the proposed strategy enhances scalability, energy efficiency, and the practical feasibility of deploying robust forest fire prediction systems in expansive, remote, and complex terrains.

While coverage optimization in WSNs has been studied for years, existing methods often assume small-scale or idealized environments. In contrast, this work addresses the unique challenges of achieving scalable and energy-efficient coverage in real-world, large-scale deployments by introducing a hierarchical framework with integrated virtual sensing and adaptive clustering strategies.

Key Contributions

The key contributions of this work are as follows:

- We propose a novel Hierarchical Wireless Sensor Network (HWSN) framework, tailored for large-scale forest fire prediction, enabling scalable and energy-efficient deployment across vast geographical regions.

- We introduce a greedy-based adaptive head node selection strategy within each cluster to dynamically optimize data aggregation and reduce energy consumption.

- We integrate virtual sensors alongside physical sensor nodes to expand coverage without additional hardware, improving cost-effectiveness and spatial observability.

- We design a multi-level tree-structured deployment architecture that enhances fault tolerance, reduces communication overhead, and supports modular expansion.

- We evaluate the proposed system through simulation experiments and demonstrate its effectiveness in improving network lifetime, coverage, and energy efficiency compared to conventional flat and distributed strategies.

In summary, virtual sensors provide an effective means of reducing physical deployment costs and improving the scalability of large-scale monitoring systems. However, deploying them in complex environments requires intelligent and adaptive strategies to maintain network efficiency, ensure resilience to node failures, and achieve comprehensive coverage. To address these challenges, this work introduces a hierarchical deployment framework that employs a greedy optimization strategy to dynamically assign sensor roles and maximize network performance, enhancing spatial coverage and optimizing resource utilization.

2. Related Works

The authors in [17] conducted a comprehensive survey on coverage problems in wireless sensor networks (WSNs). They highlighted coverage as a fundamental performance metric for assessing how effectively a sensor network monitors a given field. Coverage problems are classified into three primary categories as follows: point (target), area, and barrier coverage. Each category addresses unique scenarios and objectives, emphasizing various solutions, including mathematical programming, heuristic, and approximation algorithms. They discussed different sensor coverage models, such as the Boolean sector, Boolean disk, attenuated disk, truncated attenuated disk, detection, and estimation coverage models. Furthermore, the authors elaborated on design issues like deployment strategies, coverage degree, coverage ratio, activity scheduling, and network connectivity, providing insights into algorithmic approaches for optimization and operational efficiency.

The authors in [18] addressed the fundamental issue of ensuring complete coverage within wireless sensor networks (WSNs). They formulated the coverage problem as determining whether every point in a given service area is covered by at least k sensors, introducing the concept of k-coverage. They presented polynomial-time algorithms suitable for both unit disk and non-unit disk sensing ranges, significantly simplifying the computational complexity compared to existing approaches. The algorithms proposed facilitate the easy translation into distributed protocols, enhancing their practical applicability. Moreover, their approach focused on perimeter coverage, enabling sensors to verify coverage collectively and efficiently, ensuring high reliability in WSN deployment.

The authors in [19] explored the application of mobile sensing technology for effective node placement planning and on-site calibration in wireless sensor networks (WSNs), specifically for monitoring indoor air quality (IAQ). They combined mobile sensing with genetic algorithm-optimized back propagation (GA-BP) neural networks to predict indoor CO concentrations and guide optimal sensor deployment. Experimental studies were conducted under various ventilation systems and environmental conditions, demonstrating that mobile sensing predicted CO levels with acceptable accuracy. Additionally, they employed wavelet decomposition techniques to reduce noise in sensor data. Their clustering analysis, performed using K-means, identified the optimal sensor locations, significantly enhancing the efficiency and accuracy of sensor networks deployed for environmental monitoring in smart buildings.

The authors in [20] conducted a comprehensive review addressing coverage, deployment, and localization challenges in Wireless Sensor Networks (WSNs) using artificial intelligence (AI) techniques. They explored and compared various AI methodologies, including fuzzy logic, neural networks, evolutionary computation, and nature-inspired algorithms, highlighting their effectiveness in solving complex WSN problems. The authors identified key research trends, evaluated recent studies from 2010 to 2021, and provided a detailed comparison of AI methods applied to enhance coverage, optimize deployment strategies, and improve localization accuracy. Furthermore, they outlined promising research directions, emphasizing AI’s role in enhancing performance metrics such as energy efficiency, reliability, and network lifetime for future WSN developments.

The authors in [21] proposed a novel optimization algorithm called the Desert Golden Mole Optimization Algorithm (DGMOA) for wireless sensor network (WSN) deployment in challenging and extreme environments. Inspired by the behaviors of desert golden moles, the DGMOA integrates unique strategies like sand swimming for extensive global search and hiding for effective local optimization. Through these strategies, the DGMOA achieves faster convergence, higher coverage uniformity, and improved energy efficiency compared to traditional optimization methods. The simulation results showed significant performance enhancements, particularly in complex deployment scenarios, confirming the algorithm’s capability to efficiently manage sensor placement, maximize coverage, and prolong network lifetime.

The authors in [22] proposed an Arithmetic Optimization Algorithm (AOA)-based localization and deployment model for Wireless Sensor Networks (WSNs). The authors emphasized the importance of accurate localization, particularly in randomly deployed sensor networks where node locations are initially unknown. They addressed the localization issue by formulating it as an optimization problem solved using the Arithmetic Optimization Algorithm (AOA). This novel approach showed significant improvements in localization accuracy, reducing the average localization error (ALE) to less than 0.27%. Additionally, their proposed method efficiently identified coverage holes and disconnected networks by leveraging estimated locations and neighborhood information, demonstrating robustness across varying node densities and heterogeneity levels.

The authors in [23] proposed two improved versions of the Particle Swarm Optimization (PSO) algorithm, specifically Cooperative PSO (CPSO) and Cooperative PSO enhanced with fuzzy logic, to address the sensor deployment problem in Wireless Sensor Networks (WSNs). They focused on the target coverage problem, aiming to maximize network lifetime by optimizing sensor node placements. Their algorithms addressed three variations of coverage as follows: simple coverage, K-coverage, and Q-coverage, ensuring the effective monitoring of predetermined targets. The simulation results demonstrated that these improved PSO methods significantly prolonged network lifetime compared to traditional optimization algorithms due to their dynamic adaptation capability through fuzzy logic, effectively balancing exploration and exploitation.

Synthesis and Research Gap

While the existing approaches in the literature have shown promise in addressing the coverage, deployment, and localization challenges in Wireless Sensor Networks (WSNs), limitations remain—particularly in achieving scalability and practical applicability across vast regions such as the Amazon Rainforest, the Californian forests, and the Australian bushlands. Our novel HWSN approach is essential for managing large-scale deployments spanning thousands of square kilometers. In this work, we propose an optimized hierarchical structure that significantly enhances key performance metrics, including energy efficiency, reliability, and network longevity. Our research advances this field by combining hierarchical deployment strategies with adaptive, greedy-based clustering techniques and the hybrid integration of physical and virtual sensors. This enables the development of scalable, resilient WSNs tailored for real-world, large-scale environmental monitoring and forest fire prediction.

While previous studies have contributed significantly to addressing coverage, deployment, and localization challenges in Wireless Sensor Networks (WSNs), they fall short in enabling scalable, energy-efficient solutions for real-world, large-scale forest monitoring. Many approaches assume ideal conditions, lack support for dynamic environments, or are not evaluated for deployment over thousands of square kilometers—typical of regions like the Amazon, California, or Australian forests.

Moreover, existing strategies rarely integrate physical and virtual sensors in a unified, hierarchical structure. This limits their flexibility, responsiveness, and long-term sustainability in practical deployments.

Our work addresses these gaps by proposing a Hierarchical Wireless Sensor Network (HWSN) architecture that achieves the following:

- Supports large-scale, modular deployment via a multilevel cluster hierarchy;

- Enables adaptive and lightweight head node selection through a greedy algorithm;

- Incorporates virtual sensing to expand coverage without additional hardware.

This design improves scalability, energy efficiency, and robustness, thereby advancing the current state of forest fire prediction and environmental monitoring systems.

3. Our Proposed Methodology

This section outlines the methodology used to design a hierarchical Wireless Sensor Network (WSN) deployment strategy for large-scale environments, ensuring efficient coverage, communication, and anomaly detection. The proposed hierarchical deployment strategy is designed to enhance sensing coverage, energy efficiency, and responsiveness—core requirements for reliable early forest fire prediction over large and remote areas.

3.1. Sensor Deployment and Initial Setup

In our simulation environment, a large number of low-cost, commercially available sensor nodes—such as IRIS and MicaZ—are randomly deployed within a backyard emulation setup. These nodes are commonly used in real-world applications due to their affordability, reliability, and suitability for environmental monitoring. Each node is equipped with sensors to measure parameters such as temperature, humidity, smoke, sound, and light intensity. Operated by embedded microcontrollers and wireless transceivers, the nodes periodically sense their surroundings and either process the data locally or transmit it through a hierarchical multi-hop network. Given their limited power supply and constrained communication range, efficient clustering and routing strategies are required to enable scalable and energy-aware data aggregation [15].

3.2. Hierarchical Network Structure

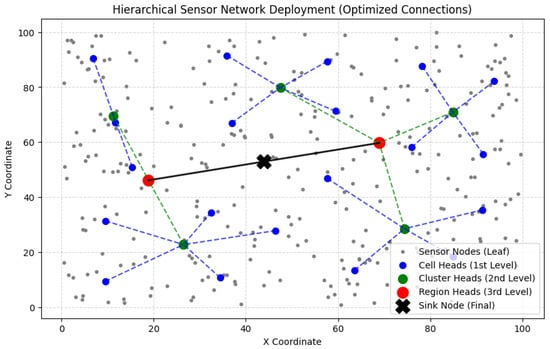

To optimize data collection, a multi-tier hierarchical network was implemented, comprising the following four levels: cell heads, cluster heads, region heads, and a sink node. The selection of each level was based on connectivity, energy efficiency, and geographical proximity. Figure 1 illustrates the high-level architecture of the hierarchical deployment strategy, depicting the organization of sensor nodes into a multi-tiered structure comprising cell heads, cluster heads, region heads, and a centralized sink node for efficient data aggregation and communication.

Figure 1. Our Proposed HWSN Model Topology.

In our HWSN simulation model, cell heads are assumed to be slightly more powerful than regular leaf nodes, and they are equipped with extended communication and processing capabilities. Cluster heads are simulated as devices comparable to Raspberry Pi-class microcomputers, capable of local aggregation and coordination. Region heads represent even more capable embedded systems, akin to NVIDIA Jetson or edge AI devices, with sufficient resources for regional analytics and decision making. The sink node, acting as the central base station, is modeled as a high-performance computer equipped with GPUs, typically located in an air-conditioned control room, ensuring the uninterrupted operation and centralized monitoring of the entire proposed HWSN.

The selection of head nodes at each level is based on residual energy, connectivity, and spatial proximity. This ensures balanced energy consumption and prevents early node death, which is critical in maintaining network longevity.

3.2.1. Selection of Cell Heads (1st-Level Hierarchy)

- The vast deployment area was divided into small cells, each containing a small fixed number of leaf sensor nodes (we simulated 15 nodes).

- Within each cell, the sensor node with the highest residual energy and best connectivity was dynamically selected as the cell head.

- Cell heads aggregate data from their respective sensor nodes and transmit it to the next level of cluster heads.
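The cell head rule above can be illustrated with a minimal sketch. The text names the two criteria (residual energy and connectivity) but not how they are combined, so the equal weights, the `Node` class, the `radio_range` parameter, and the neighbor-count definition of connectivity are all assumptions made for illustration, not the simulator's actual implementation.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Node:
    node_id: int
    x: float
    y: float
    energy: float  # residual energy (hypothetical unit, e.g. joules)


def neighbor_count(node, cell, radio_range):
    """Connectivity proxy: number of other cell members within radio range."""
    return sum(
        1
        for other in cell
        if other.node_id != node.node_id
        and hypot(node.x - other.x, node.y - other.y) <= radio_range
    )


def select_cell_head(cell, radio_range, w_energy=0.5, w_conn=0.5):
    """Return the member with the best weighted energy/connectivity score.

    The weights are assumed; the source does not specify a weighting.
    """
    max_energy = max(n.energy for n in cell) or 1.0
    max_links = max(len(cell) - 1, 1)

    def score(n):
        return (
            w_energy * n.energy / max_energy
            + w_conn * neighbor_count(n, cell, radio_range) / max_links
        )

    return max(cell, key=score)


cell = [Node(0, 0.0, 0.0, 1.0), Node(1, 1.0, 0.0, 0.9), Node(2, 2.0, 0.0, 0.2)]
head = select_cell_head(cell, radio_range=1.5)
```

In this toy cell the middle node is chosen: it has slightly less energy than node 0 but reaches both neighbors, which outweighs the energy difference under equal weights.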

3.2.2. Selection of Cluster Heads (2nd-Level Hierarchy)

- All cell heads in the topology are then divided into clusters based on geographical proximity.

- Among the cell heads in a cluster, the node with the highest energy and the shortest average distance to the other cell heads is selected as the cluster head.

- A rotation mechanism periodically reassigns cluster heads using the same selection criteria to balance the load.

- Cluster heads aggregate data from cell heads, thereby reducing the traffic passed to the next level of nodes.
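As a rough illustration of the cluster head criteria, the sketch below scores each cell head by normalized residual energy minus normalized mean distance to its peers. The trade-off parameter `alpha` and the tuple layout `(x, y, residual_energy)` are assumptions; the source states the criteria but not a scoring formula.

```python
from math import hypot


def mean_distance(i, heads):
    """Average Euclidean distance from head i to every other head."""
    xi, yi, _ = heads[i]
    others = [h for j, h in enumerate(heads) if j != i]
    if not others:
        return 0.0
    return sum(hypot(xi - x, yi - y) for x, y, _ in others) / len(others)


def select_cluster_head(heads, alpha=0.5):
    """heads: list of (x, y, residual_energy). Returns the index of the pick.

    alpha is an assumed trade-off between energy (high is good)
    and mean distance to peers (low is good).
    """
    dists = [mean_distance(i, heads) for i in range(len(heads))]
    max_e = max(e for _, _, e in heads) or 1.0
    max_d = max(dists) or 1.0

    def score(i):
        return alpha * heads[i][2] / max_e - (1 - alpha) * dists[i] / max_d

    return max(range(len(heads)), key=score)


cell_heads = [(0.0, 0.0, 1.0), (5.0, 0.0, 0.9), (10.0, 0.0, 1.0)]
chosen = select_cluster_head(cell_heads)
```

Periodic rotation could be approximated by calling `select_cluster_head` again after residual energies have been updated, so that a depleted head loses its role to a better-placed peer.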

3.2.3. Selection of Region Heads (3rd-Level Hierarchy)

- All cluster heads are then divided into regions to improve scalability and efficiency.

- Region heads are rotated periodically using the same selection criteria to sustain performance.

- Region heads reduce the traffic reaching the final processing node.

3.2.4. Selection of the Sink Node (Final Level)

- The sink node was placed at the geometric center of all region heads to optimize data transmission efficiency.

- This ensured load balancing, reduced communication overhead, and extended network lifetime.

- The sink node processes and transmits critical information to an external monitoring system.

The sink node is strategically placed at the geometric center of all region heads to minimize average transmission distances. It functions as the network’s central aggregator, responsible for compiling high-level summaries and forwarding them to external monitoring or decision-making systems. The sink node is assumed to be a high-capacity system, such as an edge server or datacenter node, capable of executing intensive computations, real-time alerts, and data visualization.
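A minimal sketch of the sink placement follows, assuming region heads are given as 2D coordinates (the coordinate values are hypothetical). One point worth keeping in mind: the centroid minimizes the mean squared distance to the region heads, not the mean distance itself, which is minimized by the geometric median. The centroid is still a reasonable and cheap proxy, particularly if transmission energy is assumed to grow roughly with the square of distance.

```python
def centroid(points):
    """Arithmetic mean of 2D points, used here as the sink location."""
    if not points:
        raise ValueError("at least one region head is required")
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


# Hypothetical region head coordinates in metres.
region_heads = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
sink = centroid(region_heads)
```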

3.3. Multi-Hop Communication Strategy

In our HWSN model simulation, we used a multi-hop routing strategy to make data transmission more efficient. Instead of sending data directly over long distances, each sensor node forwards its data through a chain of nearby nodes. This approach helps conserve energy by breaking the journey into shorter, less demanding hops. In our hierarchical setup, the data travels from individual sensor nodes to cell heads, then to cluster heads, followed by region heads, and finally reaches the central sink node. This layered routing structure not only improves energy efficiency but also helps distribute the communication load and ensures that data can still be reliably delivered even in large, remote, or complex environments.

- Each node forwarded data to its nearest higher-tier node, minimizing energy consumption.

- If a node failed, an automatic role reassignment mechanism dynamically selected the next best candidate.
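The two forwarding rules above can be sketched as a single lookup: pick the nearest operational node in the next tier, and fall back to the next nearest when the preferred one has failed. The dictionary layout and the `alive` set are assumptions for illustration. The source describes failure handling as role reassignment, which this sketch approximates only from the sender's point of view.

```python
from math import hypot


def nearest_alive_parent(node_pos, parents, alive):
    """Return the id of the closest operational higher-tier node, or None.

    parents: dict mapping parent id -> (x, y)
    alive:   set of parent ids that are currently operational
    """
    candidates = [
        (hypot(node_pos[0] - x, node_pos[1] - y), pid)
        for pid, (x, y) in parents.items()
        if pid in alive
    ]
    if not candidates:
        return None
    return min(candidates)[1]


parents = {"A": (0.0, 0.0), "B": (10.0, 0.0)}
primary = nearest_alive_parent((2.0, 0.0), parents, alive={"A", "B"})
fallback = nearest_alive_parent((2.0, 0.0), parents, alive={"B"})
```

With both parents alive the node forwards to "A"; once "A" is removed from `alive`, the same call returns "B".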

3.4. Coverage Evaluation and Optimization

- The coverage area of each hierarchical level was monitored to prevent connectivity gaps.

- A fault tolerance mechanism reassigned roles when energy depletion was detected.

- If coverage gaps were identified, additional sensor nodes were deployed in under-served areas.

- This hierarchical approach organizes the sensor nodes to provide the following:

  - Efficient data aggregation at different network levels.

  - Energy-efficient routing to prolong sensor lifespan.

  - Scalability for large-scale deployments.

  - Fault tolerance mechanisms to maintain network connectivity.

Unlike conventional clustering algorithms, our methodology relies on geographical partitioning, energy-based selection, and dynamic connectivity analysis, providing a more practical and adaptive WSN deployment strategy. Scalability is built into our proposed approach, as the hierarchical deployment strategy allows for seamless expansion regardless of the forest size, whether it is a Californian forest or the Amazon rainforest. For extremely vast forests, the HWSN topology described above can be repeated recursively, with more than four levels.

3.5. Strategies for Maximizing Coverage in Large-Scale WSNs

This section outlines our step-by-step approach for deploying and evaluating hierarchical Wireless Sensor Network (HWSN) coverage. We conducted a series of experiments to analyze different deployment strategies and their impact on coverage. Initially, we examined centralized cluster head deployment, followed by a distributed approach, and finally, we implemented an adaptive hierarchical controlling method based on the Maximum Coverage Problem (MCP) [24].

Greedy coverage optimization refers to the iterative selection of sensor head nodes that contribute the most to overall area coverage with the least redundancy. Inspired by the Maximum Coverage Problem (MCP), our method begins by selecting the sensor node that covers the highest number of previously uncovered areas. Subsequent selections prioritize nodes that offer the greatest marginal gain in coverage. This process continues until the desired or total coverage is achieved, optimizing network efficiency by minimizing the number of active nodes while maintaining robust spatial monitoring.
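The greedy procedure described above can be sketched as follows. This is an illustrative version under stated assumptions, not the authors' simulator: every node is treated as a candidate head, coverage is modeled as a fixed-radius disk, and `radius` and `target` are hypothetical parameters. The precomputed cover sets take memory quadratic in the number of nodes, which is acceptable for a few thousand nodes.

```python
from math import hypot


def greedy_heads(nodes, radius, target=1.0):
    """Greedy head selection by marginal coverage gain.

    nodes:  list of (x, y) positions; every node is a candidate head
    radius: assumed disk coverage radius of a head
    target: stop once this fraction of nodes is covered
    Returns (list of head indices, achieved coverage fraction).
    """
    n = len(nodes)
    if n == 0:
        return [], 0.0
    # covers[i] = indices of nodes within `radius` of candidate i (incl. i)
    covers = [
        {j for j, (xj, yj) in enumerate(nodes) if hypot(xi - xj, yi - yj) <= radius}
        for (xi, yi) in nodes
    ]
    uncovered = set(range(n))
    heads = []
    while uncovered and (n - len(uncovered)) / n < target:
        # Candidate that covers the most still-uncovered nodes.
        best = max(range(n), key=lambda i: len(covers[i] & uncovered))
        gain = covers[best] & uncovered
        if not gain:  # defensive: no candidate adds coverage
            break
        heads.append(best)
        uncovered -= gain
    return heads, (n - len(uncovered)) / n


nodes = [(0, 0), (1, 0), (2, 0), (10, 0), (11, 0)]
heads, cov = greedy_heads(nodes, radius=1.0)
heads_partial, cov_partial = greedy_heads(nodes, radius=1.0, target=0.6)
```

On this five-node example the first pick is the middle node of the left group, which covers three nodes at once, and one further pick covers the remaining pair. Lowering `target` stops the loop early, which mirrors stopping at a chosen coverage level rather than at full coverage.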

Our simulator explored different arrangements of the four-level HWSN architecture, with dynamic reassignment of the controlling head at each level. We then report our observations for each simulated arrangement.

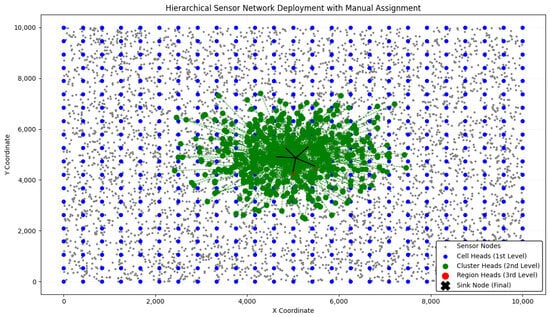

3.5.1. Experiment 1: Centralized Cluster Head Deployment

Objective: In the first experiment, all cluster heads were assigned near the center of the deployment area. Figure 2 illustrates the hierarchical structure and placement of nodes in this centralized approach as implemented in the simulation environment.

Figure 2. Centralized hierarchical WSN deployment with cluster and region heads concentrated near the center to evaluate coverage efficiency.

- Simulation

- Sensor deployment: A total of 5000 sensor nodes were randomly placed within a deployment area.

- Cell head selection (Level 1): A total of 500 cell heads were assigned using a grid-based approach.

- Cluster head selection (Level 2): A total of 500 cluster heads were placed in the center of the deployment area by averaging randomly selected nearby cell heads.

- Region head selection (Level 3): Five region heads were assigned near the center by averaging the positions of the cluster heads.

- Sink node placement: The final sink node (base station) was placed at the centroid of all region heads.

- Observation and HWSN Performance

- Dense communication near the center.

- Poor coverage at the outer regions, leaving a significant number of sensor nodes unconnected.

- High energy consumption due to long-distance communication for outer nodes.

- Non-uniform load distribution, leading to congestion near the center.

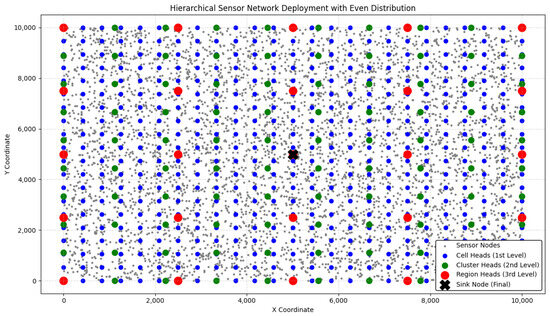

3.5.2. Experiment 2: Distributed Hierarchical Deployment

Objective: The second experiment focused on spreading cluster heads across the deployment area instead of concentrating them in the center. Figure 3 illustrates the hierarchical structure and placement of nodes in this distributed approach as implemented in the simulation environment.

Figure 3. Distributed hierarchical WSN deployment with uniformly placed cluster and region heads.

- Simulation

- Sensor deployment: The same 5000 sensor nodes were deployed as in Experiment 1.

- Cell head selection (Level 1): A uniform grid-based strategy was used to assign 500 cell heads evenly across the entire area.

- Cluster head selection (Level 2): Instead of placing them in the center, 49 cluster heads were evenly distributed throughout the area using a structured grid layout.

- Region head selection (Level 3): Five region heads were distributed across the network using a grid layout to cover different areas.

- Sink node placement: The base station was positioned at the centroid of the region heads.

- Observation and HWSN Performance

- Better overall sensor coverage compared to centralized deployment.

- Reduced energy consumption as sensor-to-head distances were smaller.

- Coverage dropped when reducing the number of heads.

- Some areas had overlapping coverage while others were underutilized.
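A structured grid layout of the kind used in this experiment can be sketched in a few lines. The 7 x 7 arrangement for the 49 cluster heads and the 700 m x 700 m area are assumptions for illustration; the source gives the head count and the grid principle but not the grid dimensions or the area size.

```python
def grid_positions(width, height, rows, cols):
    """Centers of a rows x cols grid laid over a width x height area."""
    dx, dy = width / cols, height / rows
    return [
        ((c + 0.5) * dx, (r + 0.5) * dy)
        for r in range(rows)
        for c in range(cols)
    ]


# Hypothetical 700 m x 700 m area with an assumed 7 x 7 layout (49 heads).
cluster_heads = grid_positions(700.0, 700.0, rows=7, cols=7)
```

Because the positions depend only on the area bounds and not on where sensors actually fall, a layout like this can leave some heads with few nodes and others with overlapping coverage, which is consistent with the last two observations above.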

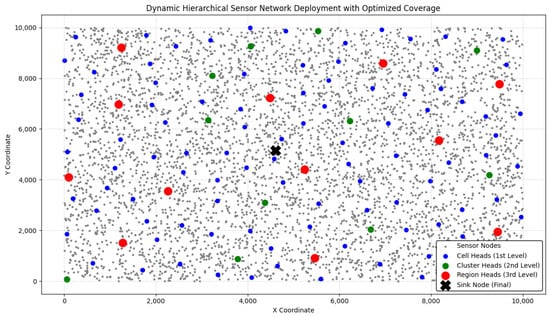

3.5.3. Experiment 3: Adaptive Hierarchical Deployment (Greedy Maximum Coverage)

The selection strategy in our adaptive deployment is directly inspired by the Maximum Coverage Problem (MCP), a classical optimization problem that aims to select a subset of sets (in this case, sensor head nodes) such that their union covers the maximum possible area. We translated this concept to WSNs by iteratively choosing nodes that provided the greatest incremental coverage, thereby achieving near-complete sensing with fewer resources [24]. Figure 4 illustrates the hierarchical structure and placement of nodes in this adaptive approach as implemented in the simulation environment.

Figure 4. Adaptive hierarchical WSN deployment using Greedy Maximum Coverage strategy.

Objective: To further optimize coverage and minimize redundant cluster heads, we implemented a greedy heuristic-based adaptive clustering approach, inspired by the Maximum Coverage Problem (MCP).
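For reference, the standard formulation of the Maximum Coverage Problem and its greedy step can be written as below. The notation is ours and is not taken from the paper: $U$ is the set of sensor nodes, $S_i$ is the set of nodes within range of candidate head $i$, $k$ is the head budget, and $C_t$ is the set covered after $t$ picks. The last line is the classical approximation guarantee for the greedy rule under a fixed budget.

```latex
\begin{aligned}
&\text{Given } U,\; S_1,\dots,S_m \subseteq U,\; k \in \mathbb{N}: \quad
\max_{I \subseteq \{1,\dots,m\},\; |I| \le k} \; \Bigl|\bigcup_{i \in I} S_i\Bigr| \\
&\text{Greedy step: } i_t = \arg\max_{i} \,\bigl|S_i \setminus C_{t-1}\bigr|, \qquad
C_t = C_{t-1} \cup S_{i_t}, \quad C_0 = \emptyset \\
&\text{Guarantee: } |C_k| \;\ge\; \Bigl(1 - \bigl(1 - \tfrac{1}{k}\bigr)^{k}\Bigr)\,\mathrm{OPT}_k
\;\ge\; \bigl(1 - \tfrac{1}{e}\bigr)\,\mathrm{OPT}_k
\end{aligned}
```

The guarantee applies to the fixed-budget variant. When the loop instead runs until a coverage threshold is met, as in the simulation steps that follow, the number of heads is an outcome of the procedure rather than an input.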

- Simulation

1. Sensor deployment: 5000 sensor nodes were placed randomly in the environment.

2. Cell head selection (Level 1):

   - Instead of pre-selecting a fixed number of 119 cell heads, a greedy approach was used.

   - The first cell head was chosen as the sensor covering the most uncovered nodes.

   - Subsequent cell heads were selected iteratively, ensuring that each new head maximally increased coverage.

   - This process was repeated until 100% coverage was reached.

3. Cluster head selection (Level 2):

   - Only the selected cell heads were considered as candidates for cluster heads.

   - The selection was made by iteratively picking 24 cluster heads that maximized the additional coverage of uncovered nodes.

   - This process was repeated until 92.54% coverage was reached.

4. Region head selection (Level 3):

   - Only 12 region heads were selected based on the same greedy maximum coverage.

   - The region heads were chosen such that they maintained connectivity and ensured coverage across the entire sensor deployment area.

   - This process was repeated until 96.26% coverage was reached.

5. Sink node placement: The final base station was placed at the centroid of the region heads to optimize long-range data transmission.

Table 1 presents the key differences from previous experiments, highlighting variations in deployment strategy and performance within the simulation environment:

Table 1. Comparison of Centralized, Distributed, and Adaptive WSN Deployment Strategies.

- Observation and HWSN Performance

- Achieved near-100% coverage while reducing unnecessary nodes.

- Efficiently selected only the necessary number of cell, cluster, and region heads.

- Better load balancing, preventing central congestion.

- Scalable for large forest areas, dynamically adapting to different environments (dense forests, rivers, and mountains).

Across the three hierarchical deployment strategies, we found that a fully adaptive selection approach, inspired by the Maximum Coverage Problem, provides the best balance between coverage, energy efficiency, and scalability. Unlike traditional fixed-head assignment, our greedy adaptive selection minimizes redundancy, achieves near-complete sensor coverage, and places communication nodes effectively for large-scale forest monitoring.

4. Results

This section presents the performance evaluation of the following three different WSN deployment strategies: (i) centralized placement, where cluster heads are concentrated in a specific region; (ii) distributed placement, where heads are spread across the network in a structured grid; and (iii) adaptive deployment, which dynamically selects heads based on the maximum additional coverage. The evaluation focuses on coverage, energy efficiency, scalability, and network performance.

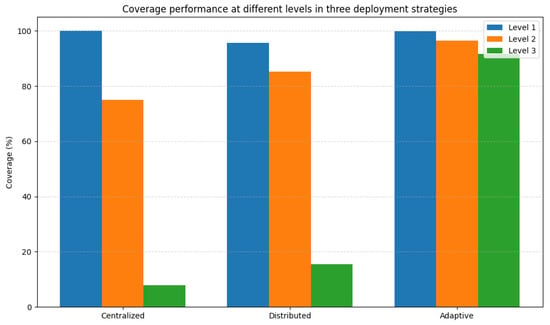

Coverage Performance Analysis

The effectiveness of each deployment strategy was assessed by measuring the percentage of sensor nodes covered at different hierarchical levels (cell heads, cluster heads, and region heads). Table 2 provides a comparative analysis of the coverage performance.

Table 2.

Coverage performance comparison across Centralized, Distributed, and Adaptive WSN strategies.

Figure 5 presents a visual representation of the coverage performance.

Figure 5.

Coverage performance at different levels in three deployment strategies.
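The coverage percentage used in this section can be computed as in the sketch below. It assumes that a node counts as covered when it lies within a fixed communication radius of at least one head; the function name `coverage_percent` and the disc model are illustrative assumptions, not details taken from the simulation.

```python
import math


def coverage_percent(heads, nodes, radius):
    """Percentage of nodes lying within `radius` of at least one head."""
    if not nodes:
        return 0.0
    covered = sum(
        1 for p in nodes if any(math.dist(p, h) <= radius for h in heads)
    )
    return 100.0 * covered / len(nodes)
```

Evaluating this function once per hierarchical level, with that level's heads and radius, yields one coverage figure per level for each strategy.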

5. Discussion

5.1. Key Insights and Comparative Analysis

The simulation results presented in the previous section demonstrate the distinct advantages of the adaptive deployment strategy in Wireless Sensor Networks (WSNs), especially in scenarios requiring reliable coverage across large-scale, heterogeneous environments such as forests. Table 3 offers a consolidated view of the most critical parameters across the three strategies evaluated: centralized, distributed, and adaptive.

Table 3.

Comparison of different deployment strategies.

5.2. Limitations of the Centralized and Distributed Approaches

The centralized model, while conceptually simple, suffers from serious drawbacks in practical deployment. Placing cluster and region heads near the center of the deployment area leads to poor coverage at the boundaries and long-distance transmissions, which increases energy consumption and leads to faster battery drain in critical nodes. Moreover, centralized clustering becomes unreliable in obstructed terrains, such as dense forests or mountainous areas, where communication links may be disrupted.
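The link between transmission distance and energy drain can be illustrated with the first-order radio model that is widely used in the WSN literature. This paper does not state which energy model its simulation uses, so the model and the constants `E_ELEC` and `EPS_AMP` below are assumptions chosen only to show the trend.

```python
# Assumed constants, typical of the first-order radio model in WSN literature.
E_ELEC = 50e-9     # J/bit spent in transmitter electronics
EPS_AMP = 100e-12  # J/bit/m^2 spent in the amplifier (free-space loss)


def tx_energy(bits, distance_m):
    """Energy in joules to transmit `bits` over `distance_m` metres."""
    return E_ELEC * bits + EPS_AMP * bits * distance_m ** 2


# Doubling the link distance roughly quadruples the amplifier term.
short_link = tx_energy(1000, 100)
long_link = tx_energy(1000, 200)
```

Under this model the amplifier cost grows with the square of the distance, which is why boundary nodes reporting to centrally placed heads would be expected to deplete their batteries fastest.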

On the other hand, the distributed model shows improvement by employing grid-based placement, which achieves more uniform coverage and a balanced load distribution across the network. However, this approach demands significantly more resources, as it requires a large number of sensor nodes, cell heads, cluster heads, and region heads, making it less practical for real-world deployment in large-scale environments. Furthermore, the model lacks adaptive intelligence, treating all areas uniformly, without accounting for variations in sensor density or communication needs. This often leads to the redundant placement of cluster heads in already well-covered zones and insufficient coverage in sparse or critical areas, reducing the overall efficiency of the deployment.

5.3. Strengths of Adaptive Deployment

In contrast, the adaptive deployment model introduces a novel, greedy-based approach to cluster and region head selection. The algorithm dynamically chooses head nodes based on the maximum incremental coverage, ensuring that every new head node adds meaningful utility to the network.

- At Level 1 (cell heads), the model selects 119 nodes covering nearly 100% of all sensor nodes.

- At Level 2 (cluster heads), only 24 nodes are selected while maintaining 92.54% coverage.

- At Level 3 (region heads), the adaptive placement of 12 region heads achieves an impressive 96.26% high-level coverage, far outperforming the other two models.

This approach not only improves network efficiency but also reduces redundancy, saving valuable resources such as memory, power, and communication bandwidth.

5.4. Implications for Real-World Forest Monitoring

The benefits of adaptive deployment become especially clear in critical applications like forest fire monitoring, where maintaining strong sensor coverage and enabling rapid communication can make all the difference. By adjusting to the environment in real time and maximizing spatial coverage, our method is well suited for deployment across vast, diverse regions such as the Amazon rainforest, the Californian wilderness, or the Australian bushlands.

One of the key strengths of this approach lies in its resilience. In the event of a head node failure, the system automatically re-evaluates and selects a new head based on updated sensor information. This ensures the network remains connected and operational without human intervention, making it not only efficient but also robust and adaptable under changing conditions.

This resilience is achieved through built-in fault tolerance—the network’s ability to maintain performance even when individual components fail. In wireless sensor networks, where nodes operate in challenging outdoor environments, this is essential. Our model includes automatic role reassignment as follows: when a head node depletes its energy or becomes unreachable, another node with sufficient energy and better connectivity is promoted to take its place. This process keeps the network running smoothly, extends its lifespan, and supports uninterrupted coverage, which is vital for early fire detection and prevention.
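The role reassignment described above could look like the following sketch. The `Node` fields, the energy threshold, and the rule of ranking replacements by neighbour count and then by remaining energy are assumptions made for illustration; the paper specifies only that the promoted node has sufficient energy and better connectivity.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    node_id: int
    pos: tuple             # (x, y) position in metres
    energy: float          # remaining energy, arbitrary units
    reachable: bool = True


def needs_replacement(head, min_energy):
    """A head is replaced when it is unreachable or below the energy floor."""
    return (not head.reachable) or head.energy < min_energy


def promote_replacement(failed_head, members, radius, min_energy):
    """Return the member best suited to take over as head, or None."""
    eligible = [
        n for n in members
        if n.reachable
        and n.energy >= min_energy
        and n.node_id != failed_head.node_id
    ]
    if not eligible:
        return None

    def connectivity(n):
        # Count reachable neighbours within range, ignoring the failed head.
        return sum(
            1 for m in members
            if m is not n
            and m.reachable
            and m.node_id != failed_head.node_id
            and math.dist(n.pos, m.pos) <= radius
        )

    # Prefer the best-connected node; break ties by remaining energy.
    return max(eligible, key=lambda n: (connectivity(n), n.energy))
```

A monitoring loop would call `needs_replacement` on each head periodically and, when it returns true, hand the head role to the node returned by `promote_replacement`.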

Taken together, the results from our study demonstrate that adaptive deployment offers a significant advantage over static centralized or distributed models. By leveraging greedy coverage optimization, the system achieves broader coverage, lower energy consumption, and greater scalability. These qualities make it a strong candidate for next-generation smart wireless sensor network deployments, particularly in areas where the real-time monitoring of critical infrastructure or environmental conditions is required.

Compared to previous approaches using centralized or distributed models [17,18], the proposed adaptive hierarchical deployment strategy achieves over 96% coverage while significantly reducing the number of required head nodes. Prior studies, such as [17], have addressed fundamental coverage models—ranging from point and area to barrier coverage—and proposed heuristic or approximation algorithms to improve sensor placement. However, these models often assume static configurations and do not account for real-time adaptability in dynamic environments. Similarly, works such as [18] introduced k-coverage formulations with polynomial-time algorithms for perimeter coverage, yet they lack scalability for large deployment areas. More recent optimization techniques like DGMOA [21] and AOA [22] demonstrate enhanced coverage uniformity and localization accuracy using nature-inspired or arithmetic-based strategies, respectively. Nonetheless, these methods often involve high algorithmic complexity or rely on centralized control mechanisms that limit their real-world feasibility in energy-constrained networks. In contrast, our greedy, MCP-inspired approach offers a practical balance between performance and computational simplicity, enabling dynamic head node selection and fault tolerance with minimal overhead. Furthermore, by incorporating virtual sensors alongside physical ones, our method reduces deployment cost while ensuring high spatial coverage—making it well suited for large-scale, mission-critical applications such as forest fire prediction in regions like the Amazon, California, and Australian bushlands.

6. Conclusions

This paper presents a comparative analysis of three deployment strategies for Hierarchical Wireless Sensor Networks (HWSNs) in large-scale forest environments: centralized, distributed, and adaptive. Through extensive simulations, we demonstrate that the adaptive deployment strategy, based on greedy coverage maximization, significantly outperforms traditional static approaches in coverage, energy efficiency, and scalability. The simulation results showed that the centralized approach suffers from poor edge coverage and high energy consumption due to long transmission distances. The distributed model improved upon this with grid-based placement, but it requires numerous sensor nodes and head nodes, making it resource-intensive and less practical for large-scale real-world deployments. In contrast, the adaptive strategy dynamically selects head nodes (cell, cluster, and region heads) to maximize incremental coverage while minimizing redundancy and resource usage. The adaptive model achieved 96.26% high-level coverage using only 119 cell heads, 24 cluster heads, and 12 region heads. This resulted in substantial energy savings and robust scalability, making it suitable for deployment in vast, complex terrains, e.g., the Amazon, California, or Australian forests. Such adaptivity provides resilience to node failure and adaptability to dynamic network conditions, making the approach a viable solution for real-world forest fire monitoring systems. Future work will enhance this framework by incorporating real-time sensor failure detection, mobility-aware clustering, and machine learning-based head selection for continuous self-optimization in dynamic environments. Moreover, we will investigate the expansion of our HWSN model to cover significantly larger and more diverse terrain by recursively adding more levels to the proposed four levels. In addition, to accommodate such vast forest areas and the immense number of nodes they require, we will explore the incorporation of zero-cost virtual sensors.

Author Contributions

Conceptualization, H.S. and A.H.; methodology, A.H. and H.S.; software, A.H.; validation, A.H. and H.S.; formal analysis, H.S. and A.H.; investigation, A.H.; resources, A.H.; data curation, A.H.; writing—original draft preparation, A.H.; writing—review and editing, H.S.; visualization, A.H. and H.S.; supervision, H.S.; project administration, H.S.; funding acquisition, H.S. and A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Stephens, S.L. Forest fire causes and extent on United States Forest Service lands. Int. J. Wildland Fire 2005, 14, 213–222.

- Alibašić, H.; Morgan, J.D. Coastal Climate Readiness and Preparedness: Comparative Review of the State of Florida and Cuba. In Perception, Design and Ecology of the Built Environment; Ghosh, M., Ed.; Springer Geography; Springer: Cham, Switzerland, 2020; pp. 121–133.

- Sharma, S.; Khanal, P. Forest Fire Prediction: A Spatial Machine Learning and Neural Network Approach. Fire 2024, 7, 205.

- Igini, M. “What Causes Wildfires?”. Earth.Org, 13 April 2023. Available online: http://earth.org/what-causes-wildfires/ (accessed on 13 March 2025).

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

- Sherstjuk, V.; Zharikova, M.; Sokol, I. Forest Fire Monitoring System Based on UAV Team, Remote Sensing, and Image Processing. In Proceedings of the 2018 IEEE Second International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 21–25 August 2018; pp. 590–594.

- Sakr, G.E.; Elhajj, I.H.; Mitri, G.; Wejinya, U.C. Artificial intelligence for forest fire prediction. In Proceedings of the 2010 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Montreal, QC, Canada, 6–9 July 2010; pp. 1311–1316.

- Pang, Y.; Li, Y.; Feng, Z.; Feng, Z.; Zhao, Z.; Chen, S.; Zhang, H. Forest Fire Occurrence Prediction in China Based on Machine Learning Methods. Remote Sens. 2022, 14, 5546.

- Gaikwad, A.; Bhuta, N.; Jadhav, T.; Jangale, P.; Shinde, S. A Review On Forest Fire Prediction Techniques. In Proceedings of the 2022 6th International Conference On Computing, Communication, Control and Automation (ICCUBEA), Pune, India, 26–27 August 2022; pp. 1–5.

- Dampage, U.; Bandaranayake, L.; Wanasinghe, R.; Kottahachchi, K.; Jayasanka, B. Forest fire detection system using wireless sensor networks and machine learning. Sci. Rep. 2022, 12, 46.

- Dogra, R.; Rani, S.; Sharma, B. A Review to Forest Fires and Its Detection Techniques Using Wireless Sensor Network. In Advances in Communication and Computational Technology. ICACCT 2019; Lecture Notes in Electrical Engineering; Hura, G.S., Singh, A.K., Siong Hoe, L., Eds.; Springer: Singapore, 2021; Volume 668.

- Kizilkaya, B.; Ever, E.; Yatbaz, H.Y.; Yazici, A. An Effective Forest Fire Detection Framework Using Heterogeneous Wireless Multimedia Sensor Networks. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 47.

- S, T.; Samhitha, J.S.S.; Sagar, K.A.; Yaswanth, J.S.; Haritha, K. Early Forest Fire Prediction System Using Wireless Sensor Network. In Proceedings of the 2024 2nd International Conference on Device Intelligence, Computing and Communication Technologies (DICCT), Dehradun, India, 15–16 March 2024; pp. 232–237.

- Ponniran, A.B.; Vajravelu, A.; Zaki, W.S.B.W.; Yamunarani, T.; Ahammed, S.R.; Sivaranjani, S. IoT/WSN-based Security/privacy Methods for Forest Monitoring. In Proceedings of the 2024 International Conference on Future Technologies for Smart Society (ICFTSS), Kuala Lumpur, Malaysia, 7–8 August 2024; pp. 146–151.

- Soliman, H.; Haque, A. A Smart and Secure Wireless Sensor Network for Early Forest Fire Prediction: An Emulated Scenario Approach. In Advances in Information and Communication. FICC 2025; Lecture Notes in Networks and Systems; Arai, K., Ed.; Springer: Cham, Switzerland, 2025; Volume 1284.

- Haque, A.; Soliman, H. Smart Wireless Sensor Networks with Virtual Sensors for Forest Fire Evolution Prediction Using Machine Learning. Electronics 2025, 14, 223.

- Wang, B. Coverage problems in sensor networks: A survey. ACM Comput. Surv. 2011, 43, 32.

- Huang, C.F.; Tseng, Y.C. The Coverage Problem in a Wireless Sensor Network. Mob. Netw. Appl. 2005, 10, 519–528.

- Ren, J.; Li, Z.; Cao, X.; Kong, X. Experimental study on the application of mobile sensing in wireless sensor networks development: Node placement planning and on-site calibration. Sens. Actuators B Chem. 2025, 429, 137338.

- Osamy, W.; Khedr, A.M.; Salim, A.; Ali, A.I.A.; El-Sawy, A.A. Coverage, Deployment and Localization Challenges in Wireless Sensor Networks Based on Artificial Intelligence Techniques: A Review. IEEE Access 2022, 10, 30232–30257.

- Wang, Z.; Guo, C.; Sui, J.; Cui, C. Optimization of wireless sensor network deployment based on desert golden mole optimization algorithm. Intell. Robot. 2025, 5, 1–18.

- Bhat, S.J.; K V, S. A localization and deployment model for wireless sensor networks using arithmetic optimization algorithm. Peer-to-Peer Netw. Appl. 2022, 15, 1473–1485.

- Yarinezhad, R.; Hashemi, S.N. A sensor deployment approach for target coverage problem in wireless sensor networks. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 5941–5956.

- Cohen, R.; Katzir, L. The Generalized Maximum Coverage Problem. Inf. Process. Lett. 2008, 108, 15–22.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).