Trust and Trustworthiness from Human-Centered Perspective in Human–Robot Interaction (HRI)—A Systematic Literature Review

Abstract

1. Introduction

1.1. Research Scope

1.1.1. Industry 5.0 Vision

1.1.2. Human-Centric Vision

1.1.3. Human-Centric Trust Vision

2. Research Methodology

- RQ1: What are the most common methodologies to study users’ trust in HRI?

- RQ2: What has been the focus of HRI researchers when investigating trust?

- RQ3: What are the barriers and facilitators for fostering trustworthy HRI?

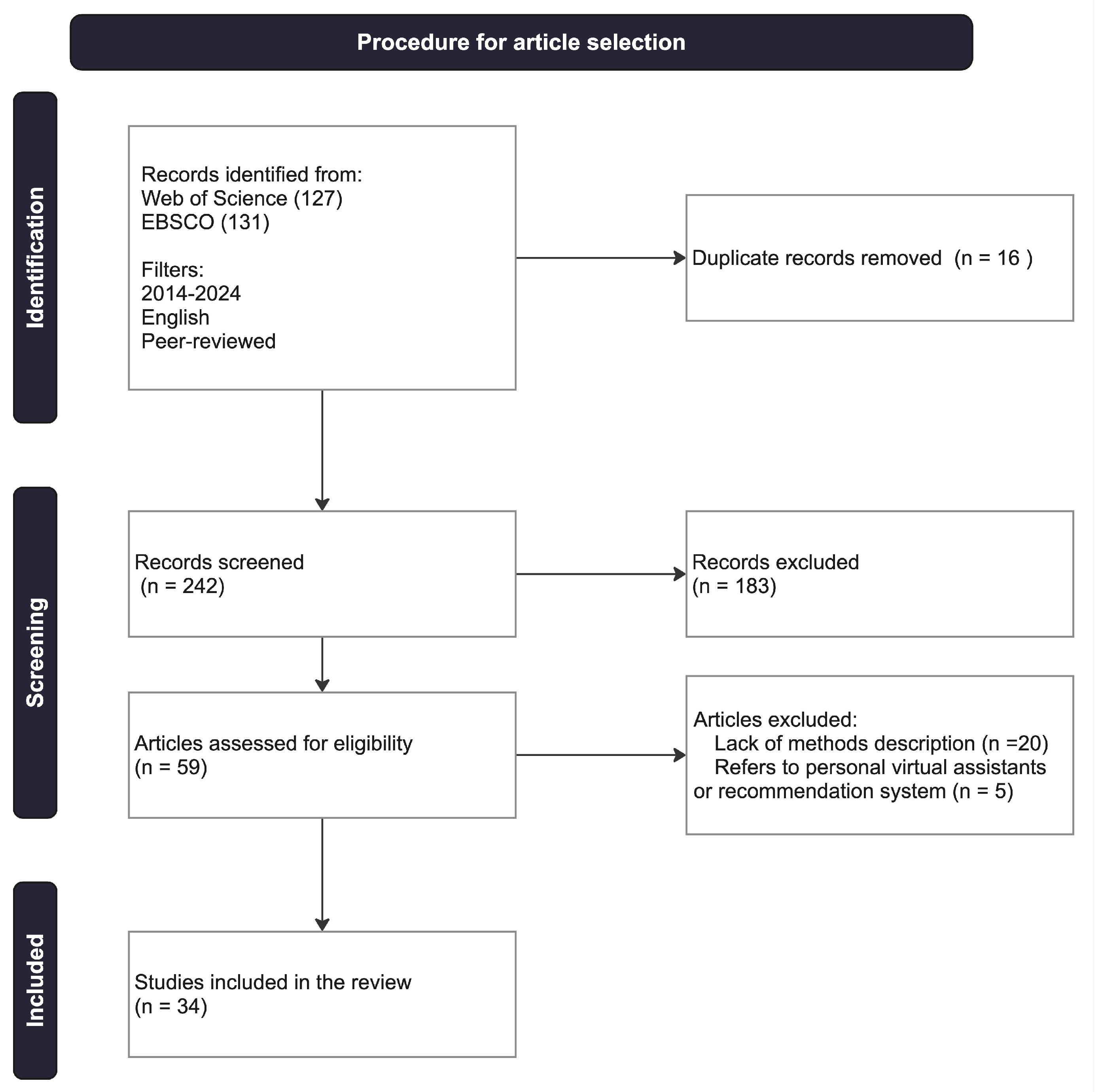

2.1. Literature Search and Strategy

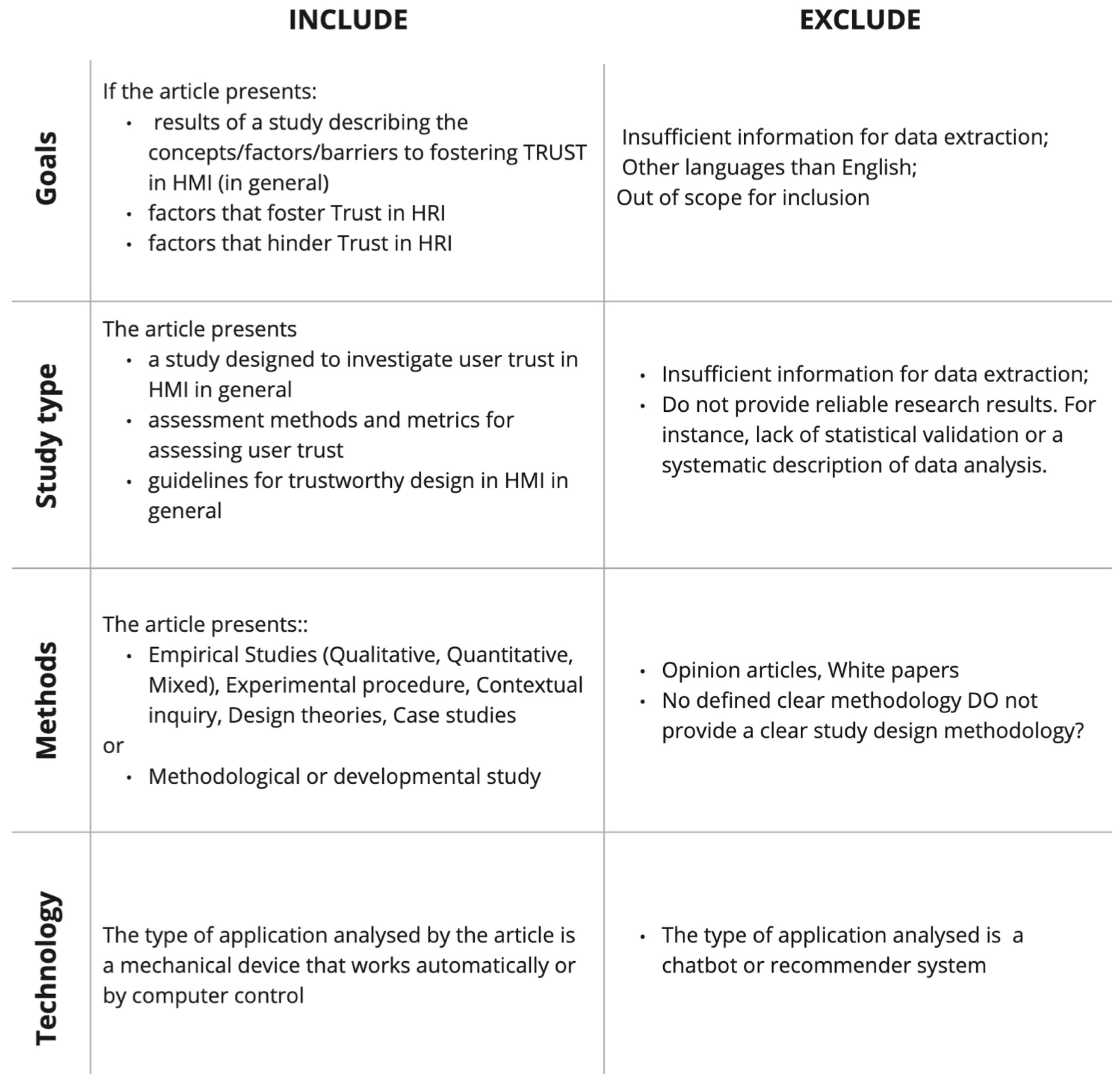

2.1.1. Screening

2.1.2. Data Extraction

2.1.3. Analysis and Synthesis

3. Analysis

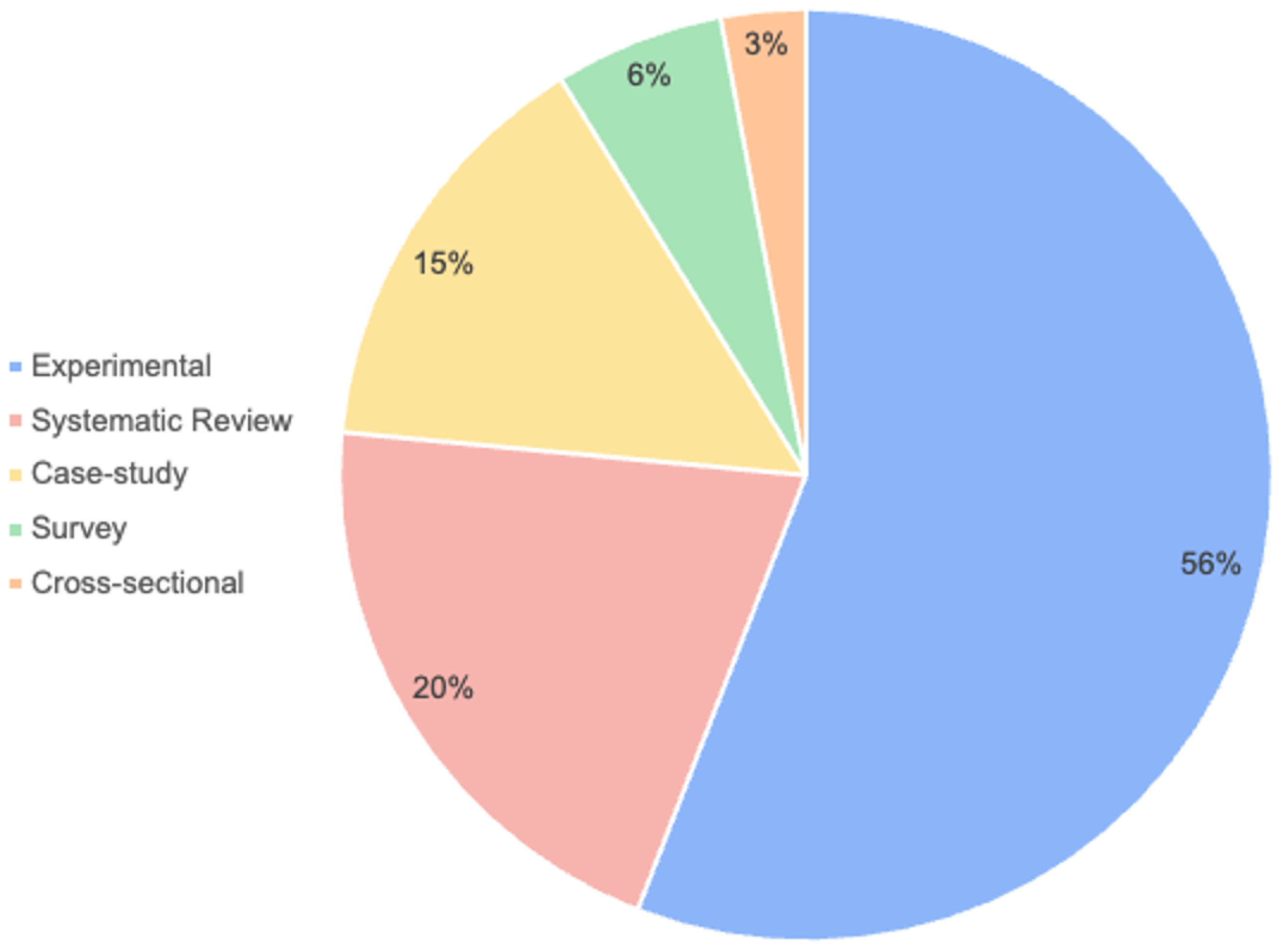

3.1. What Are the Most Common Methodologies to Study Users’ Trust in HRI?

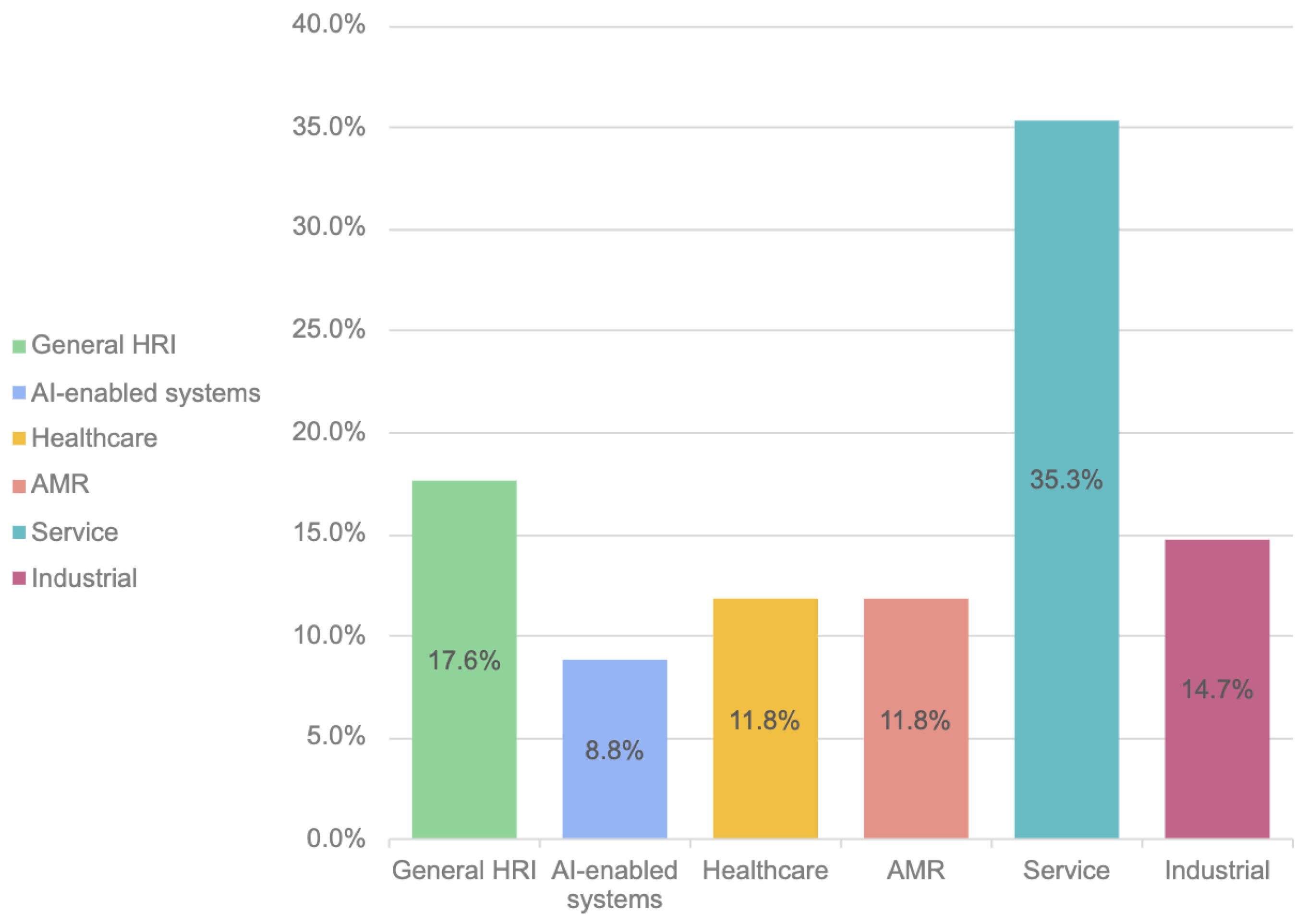

3.1.1. Robot Deployment Domains

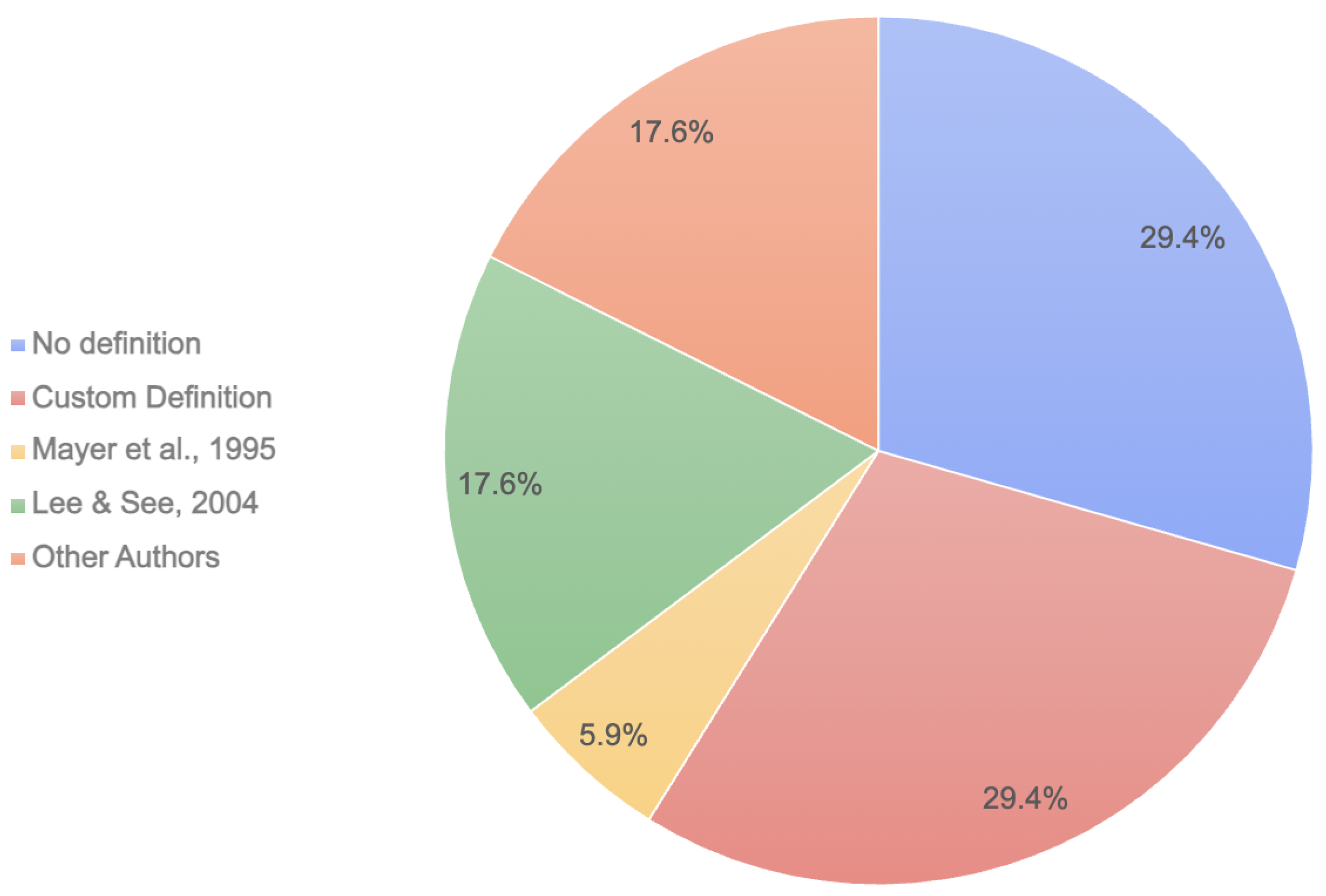

3.1.2. Conceptualization of User Trust in HRI

3.1.3. How Do the Studies Assess Trust?

3.2. What Has Been the Focus of HRI Researchers When Investigating Trust?

3.3. What Are the Barriers and Facilitators for Fostering Trustworthy HRI?

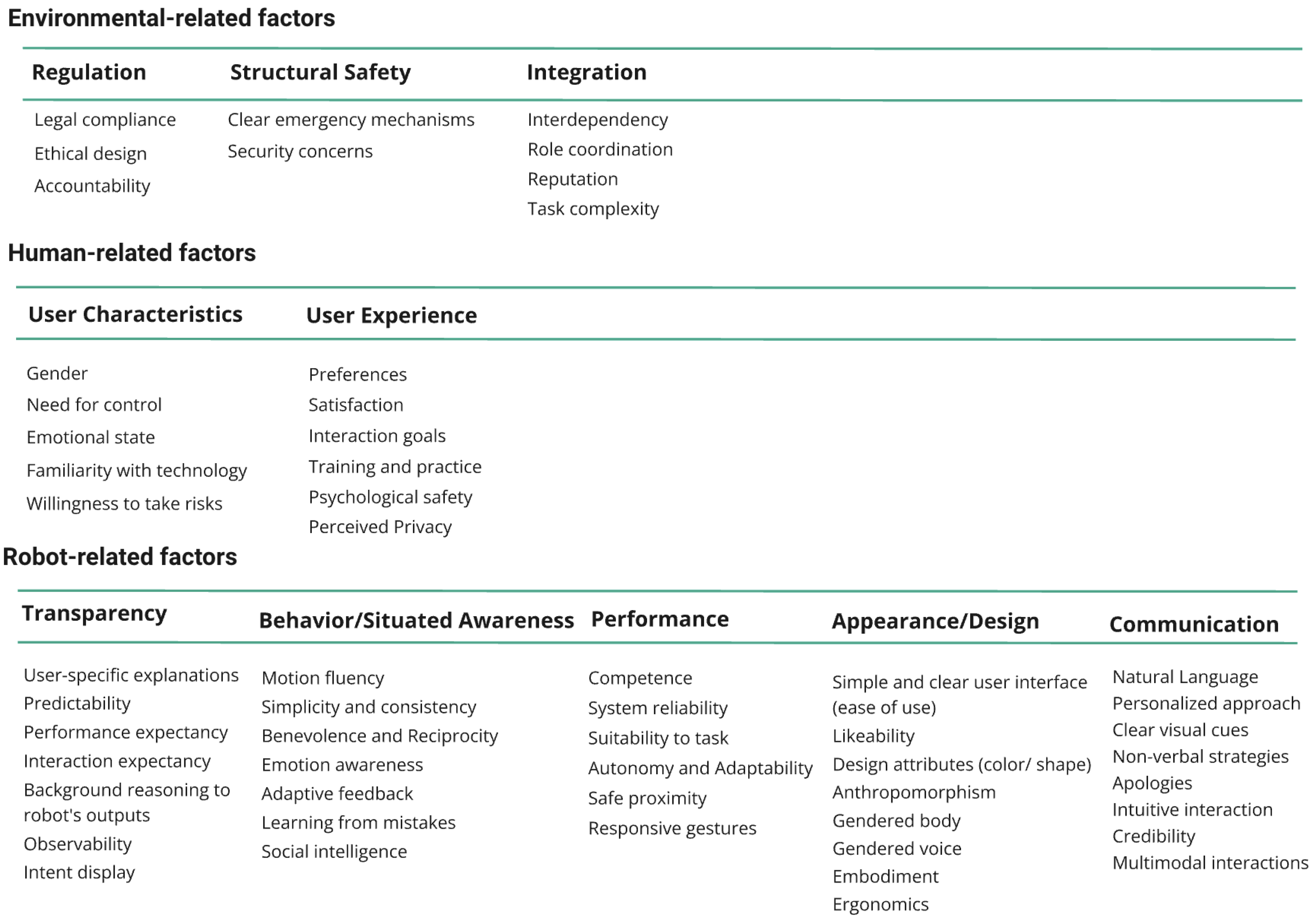

Facilitators and Barriers

- Environment-related factors, which represent contextual considerations including regulation, safety, and integration;

- Human-related factors, which refer to user characteristics and their experiences; and

- Robot-related factors, which are attributes of robotic systems, divided into transparency, communication, performance, behavior and situated awareness, and appearance and design.

4. Discussion

4.1. Lack of Conceptual Clarity

4.2. Diverse Focus

4.3. Context-Bound Factors

5. Conclusions

5.1. Future Considerations

5.2. Limitations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Nahavandi, S. Industry 5.0—A human-centric solution. Sustainability 2019, 11, 4371.

- Pinto, A.; Sousa, S.; Simões, A.; Santos, J. A Trust Scale for Human-Robot Interaction: Translation, Adaptation, and Validation of a Human Computer Trust Scale. Hum. Behav. Emerg. Technol. 2022, 2022, 6437441.

- European Commission; Directorate-General for Research and Innovation; Renda, A.; Schwaag Serger, S.; Tataj, D.; Morlet, A.; Isaksson, D.; Martins, F.; Mir Roca, M.; et al. Industry 5.0, a Transformative Vision for Europe–Governing Systemic Transformations Towards a Sustainable Industry; Publications Office of the European Union: Luxembourg, 2021.

- Abeywickrama, D.B.; Bennaceur, A.; Chance, G.; Demiris, Y.; Kordoni, A.; Levine, M.; Moffat, L.; Moreau, L.; Mousavi, M.R.; Nuseibeh, B.; et al. On specifying for trustworthiness. Commun. ACM 2023, 67, 98–109.

- Gebru, B.; Zeleke, L.; Blankson, D.; Nabil, M.; Nateghi, S.; Homaifar, A.; Tunstel, E. A Review on Human–Machine Trust Evaluation: Human-Centric and Machine-Centric Perspectives. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 952–962.

- European Commission. Artificial Intelligence Act. Available online: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai (accessed on 20 March 2025).

- Sousa, S.; Lamas, D.; Cravino, J.; Martins, P. Human-Centered Trustworthy Framework: A Human–Computer Interaction Perspective. Computer 2024, 57, 46–58.

- Naiseh, M.; Bentley, C.; Ramchurn, S.D. Trustworthy autonomous systems (TAS): Engaging TAS experts in curriculum design. In Proceedings of the 2022 IEEE Global Engineering Education Conference (EDUCON), Tunis, Tunisia, 28–31 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 901–905.

- Daronnat, S.; Azzopardi, L.; Halvey, M.; Dubiel, M. Impact of Agent Reliability and Predictability on Trust in Real Time Human-Agent Collaboration. In Proceedings of the 8th International Conference on Human-Agent Interaction, Virtual, 10–13 November 2020; pp. 131–139.

- Eban, E. Jidoka: Automation with a human touch. Softw. Syst. Model 2024.

- Krijnen, A. The Toyota Way: 14 Management Principles from the World’s Greatest Manufacturer; Taylor & Francis: Abingdon, UK, 2007.

- Koichi, S.; Fujimoto, T.; Miller, W.; Shook, J. The Birth of Lean; Lean Enterprise Institute, Incorporated: Boston, MA, USA, 2012.

- ISO 9241-210:2019. Ergonomics of Human-System Interaction: Human-Centred Design for Interactive Systems. 2019. Available online: https://www.iso.org/standard/77520.html (accessed on 30 March 2025).

- Rogers, E.M.; Singhal, A.; Quinlan, M.M. Diffusion of Innovations; Routledge: Oxfordshire, UK, 2014.

- Brynjolfsson, E.; Mcafee, A. Artificial intelligence, for real. Harv. Bus. Rev. 2017, 1, 1–31.

- European Commission: Directorate-General for Communications Networks, Content and Technology. Ethics Guidelines for Trustworthy AI. 2019. Available online: https://data.europa.eu/doi/10.2759/346720 (accessed on 30 March 2025).

- Gulati, S.; Sousa, S.; Lamas, D. Design, development and evaluation of a human–computer trust scale. Behav. Inf. Technol. 2019, 38, 1004–1015.

- Gulati, S.; McDonagh, J.; Sousa, S.; Lamas, D. Trust models and theories in human–computer interaction: A systematic literature review. Comput. Hum. Behav. Rep. 2024, 16, 100495.

- Sousa, S.; Cravino, J.; Martins, P. Challenges and Trends in User Trust Discourse in AI Popularity. Multimodal Technol. Interact. 2023, 7, 13.

- De Visser, E.J.; Peeters, M.M.; Jung, M.F.; Kohn, S.; Shaw, T.H.; Pak, R.; Neerincx, M.A. Towards a theory of longitudinal trust calibration in human–robot teams. Int. J. Soc. Robot. 2020, 12, 459–478.

- Pilacinski, A.; Pinto, A.; Oliveira, S.; Araújo, E.; Carvalho, C.; Silva, P.A.; Matias, R.; Menezes, P.; Sousa, S. The robot eyes don’t have it. The presence of eyes on collaborative robots yields marginally higher user trust but lower performance. Heliyon 2023, 9, e18164.

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An integrative model of organizational trust. Acad. Manag. Rev. 1995, 20, 709–734.

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

- Saßmannshausen, T.; Burggräf, P.; Hassenzahl, M.; Wagner, J. Human trust in otherware—A systematic literature review bringing all antecedents together. Ergonomics 2023, 66, 976–998.

- Soh, H.; Xie, Y.; Chen, M.; Hsu, D. Multi-task trust transfer for human–robot interaction. Int. J. Robot. Res. 2020, 39, 233–249.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; Prisma Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

- Bach, T.A.; Khan, A.; Hallock, H.; Beltrão, G.; Sousa, S. A Systematic Literature Review of User Trust in AI-Enabled Systems: An HCI Perspective. Int. J. Hum.–Comput. Interact. 2024, 40, 1251–1266.

- Iba, T.; Yoshikawa, A.; Munakata, K. Philosophy and methodology of clustering in pattern mining: Japanese anthropologist Jiro Kawakita’s KJ method. In Proceedings of the 24th Conference on Pattern Languages of Programs, Vancouver, BC, Canada, 22–25 October 2017; pp. 1–11.

- Daniel, B.; Thomessen, T.; Korondi, P. Simplified Human-Robot Interaction: Modeling and Evaluation. Model. Identif. Control A Nor. Res. Bull. 2013, 34, 199–211.

- Jung, Y.; Cho, E.; Kim, S. Users’ Affective and Cognitive Responses to Humanoid Robots in Different Expertise Service Contexts. Cyberpsychol. Behav. Soc. Netw. 2021, 24, 300–306.

- Zhu, Y.; Wang, T.; Wang, C.; Quan, W.; Tang, M. Complexity-Driven Trust Dynamics in Human–Robot Interactions: Insights from AI-Enhanced Collaborative Engagements. Appl. Sci. 2023, 13, 12989.

- Esterwood, C.; Robert, L.P. The theory of mind and human–robot trust repair. Sci. Rep. 2023, 13, 9877.

- Alam, S.; Johnston, B.; Vitale, J.; Williams, M.A. Would you trust a robot with your mental health? The interaction of emotion and logic in persuasive backfiring. In Proceedings of the 2021 30th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 384–391.

- Brule, R.v.d.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.J.; Haselager, P. Do Robot Performance and Behavioral Style affect Human Trust? Int. J. Soc. Robot. 2014, 6, 519–531.

- Kraus, J.M.; Merger, J.; Gröner, F.; Pätz, J. “Sorry” Says the Robot. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; pp. 436–441.

- Gaudiello, I.; Zibetti, E.; Lefort, S.; Chetouani, M.; Ivaldi, S. Trust as indicator of robot functional and social acceptance. An experimental study on user conformation to iCub answers. Comput. Hum. Behav. 2016, 61, 633–655.

- Lim, M.Y.; Robb, D.A.; Wilson, B.W.; Hastie, H. Feeding the Coffee Habit: A Longitudinal Study of a Robo-Barista. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; pp. 1983–1990.

- Yun, H.; Yang, J.H. Configuring user information by considering trust threatening factors associated with automated vehicles. Eur. Transp. Res. Rev. 2022, 14, 9.

- Babel, F.; Kraus, J.; Baumann, M. Findings From A Qualitative Field Study with An Autonomous Robot in Public: Exploration of User Reactions and Conflicts. Int. J. Soc. Robot. 2022, 14, 1625–1655.

- Wang, Y.; Wang, G.; Ge, W.; Duan, J.; Chen, Z.; Wen, L. Perceived Safety Assessment of Interactive Motions in Human–Soft Robot Interaction. Biomimetics 2024, 9, 58.

- Chen, N.; Zhai, Y.; Liu, X. The Effects of Robots’ Altruistic Behaviours and Reciprocity on Human-robot Trust. Int. J. Soc. Robot. 2022, 14, 1913–1931.

- Clement, P.; Veledar, O.; Könczöl, C.; Danzinger, H.; Posch, M.; Eichberger, A.; Macher, G. Enhancing Acceptance and Trust in Automated Driving through Virtual Experience on a Driving Simulator. Energies 2022, 15, 781.

- Sanders, T.; Kaplan, A.; Koch, R.; Schwartz, M.; Hancock, P.A. The Relationship Between Trust and Use Choice in Human-Robot Interaction. Hum. Factors J. Hum. Factors Ergon. Soc. 2018, 61, 614–626.

- Miller, L.; Kraus, J.; Babel, F.; Baumann, M. More Than a Feeling—Interrelation of Trust Layers in Human-Robot Interaction and the Role of User Dispositions and State Anxiety. Front. Psychol. 2021, 12, 592711.

- Huang, H.; Rau, P.L.P.; Ma, L. Will you listen to a robot? Effects of robot ability, task complexity, and risk on human decision-making. Adv. Robot. 2021, 35, 1156–1166.

- Adami, P.; Rodrigues, P.B.; Woods, P.J.; Becerik-Gerber, B.; Soibelman, L.; Copur-Gencturk, Y.; Lucas, G. Impact of VR-Based Training on Human–Robot Interaction for Remote Operating Construction Robots. J. Comput. Civ. Eng. 2022, 36, 04022006.

- Ambsdorf, J.; Munir, A.; Wei, Y.; Degkwitz, K.; Harms, H.M.; Stannek, S.; Ahrens, K.; Becker, D.; Strahl, E.; Weber, T.; et al. Explain yourself! Effects of Explanations in Human-Robot Interaction. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 29 August–2 September 2022; pp. 393–400.

- Kraus, M.; Wagner, N.; Untereiner, N.; Minker, W. Including Social Expectations for Trustworthy Proactive Human-Robot Dialogue. In Proceedings of the 30th ACM Conference on User Modeling, Adaptation and Personalization, Barcelona, Spain, 4–7 July 2022; pp. 23–33.

- Pompe, B.L.; Velner, E.; Truong, K.P. The Robot That Showed Remorse: Repairing Trust with a Genuine Apology. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022; pp. 260–265.

- Cameron, D.; Saille, S.d.; Collins, E.C.; Aitken, J.M.; Cheung, H.; Chua, A.; Loh, E.J.; Law, J. The effect of social-cognitive recovery strategies on likability, capability and trust in social robots. Comput. Hum. Behav. 2021, 114, 106561.

- Schaefer, K.E.; Straub, E.R.; Chen, J.Y.; Putney, J.; Evans, A. Communicating intent to develop shared situation awareness and engender trust in human-agent teams. Cogn. Syst. Res. 2017, 46, 26–39.

- Koren, Y.; Polak, R.F.; Levy-Tzedek, S. Extended Interviews with Stroke Patients Over a Long-Term Rehabilitation Using Human–Robot or Human–Computer Interactions. Int. J. Soc. Robot. 2022, 14, 1893–1911.

- Alonso, V.; Puente, P.d.l. System Transparency in Shared Autonomy: A Mini Review. Front. Neurorobot. 2018, 12, 83.

- Yuan, F.; Klavon, E.; Liu, Z.; Lopez, R.P.; Zhao, X. A Systematic Review of Robotic Rehabilitation for Cognitive Training. Front. Robot. AI 2021, 8, 605715.

- Xu, K.; Chen, M.; You, L. The Hitchhiker’s Guide to a Credible and Socially Present Robot: Two Meta-Analyses of the Power of Social Cues in Human–Robot Interaction. Int. J. Soc. Robot. 2023, 15, 269–295.

- Tian, L.; Oviatt, S. A Taxonomy of Social Errors in Human-Robot Interaction. ACM Trans. Hum.-Robot. Interact. (THRI) 2021, 10, 1–32.

- Akalin, N.; Kiselev, A.; Kristoffersson, A.; Loutfi, A. A Taxonomy of Factors Influencing Perceived Safety in Human–Robot Interaction. Int. J. Soc. Robot. 2023, 15, 1993–2004.

- Schoeller, F.; Miller, M.; Salomon, R.; Friston, K.J. Trust as Extended Control: Human-Machine Interactions as Active Inference. Front. Syst. Neurosci. 2021, 15, 669810.

- Forbes, A.; Roger, D. Stress, social support and fear of disclosure. Br. J. Health Psychol. 1999, 4, 165–179.

- Schaefer, K.E. The Perception and Measurement of Human-Robot Trust. Ph.D. Thesis, College of Sciences, Atlanta, GA, USA, 2013.

- Sousa, S.; Kalju, T. Modeling trust in COVID-19 contact-tracing apps using the human-computer Trust Scale: Online survey study. JMIR Hum. Factors 2022, 9, e33951.

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E. Can you trust your robot? Ergon. Des. 2011, 19, 24–29.

- McKnight, D.H.; Chervany, N.L. What trust means in e-commerce customer relationships: An interdisciplinary conceptual typology. Int. J. Electron. Commer. 2001, 6, 35–59.

- Beltrão, G.; Sousa, S.; Lamas, D. Unmasking Trust: Examining Users’ Perspectives of Facial Recognition Systems in Mozambique. In Proceedings of the 4th African Human Computer Interaction Conference, New York, NY, USA, 21–23 June 2024; AfriCHI’23. pp. 38–43.

- Beltrão, G.; Sousa, S. Factors Influencing Trust in WhatsApp: A Cross-Cultural Study. In Proceedings of the International Conference on Human-Computer Interaction, Bari, Italy, 30 August–3 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 495–508.

- Nomura, T.; Suzuki, T.; Kanda, T.; Kato, K. Measurement of negative attitudes toward robots. Interact. Stud. Soc. Behav. Commun. Biol. Artif. Syst. 2006, 7, 437–454.

- Jian, J.Y.; Bisantz, A.M.; Drury, C.G. Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 2000, 4, 53–71.

- Bartneck, C. Godspeed questionnaire series: Translations and usage. In International Handbook of Behavioral Health Assessment; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–35.

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.; De Visser, E.J.; Parasuraman, R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 2011, 53, 517–527.

- Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.

- Kohn, S.C.; Visser, E.J.d.; Wiese, E.; Lee, Y.C.; Shaw, T.H. Measurement of Trust in Automation: A Narrative Review and Reference Guide. Front. Psychol. 2021, 12, 604977.

- Kok, B.C.; Soh, H. Trust in Robots: Challenges and Opportunities. Curr. Robot. Rep. 2020, 1, 297–309.

- Gihleb, R.; Giuntella, O.; Stella, L.; Wang, T. Industrial robots, workers’ safety, and health. Labour Econ. 2022, 78, 102205.

- Interact Analysis. The Collaborative Robot Market. 2019. Available online: https://www.interactanalysis.com/wp-content/uploads/2019/12/Cobot-Market-to-account-for-30-of-Total-Robot-Market-by-2027-–-Interact-Analysis-PR-Dec-19.pdf (accessed on 20 March 2025).

- Laux, J.; Wachter, S.; Mittelstadt, B. Trustworthy artificial intelligence and the European Union AI act: On the conflation of trustworthiness and acceptability of risk. Regul. Gov. 2024, 18, 3–32.

- Towers-Clark, C. Keep The Robot In The Cage—How Effective (And Safe) Are Co-Bots, 2019. Forbes. Available online: https://www.forbes.com/sites/charlestowersclark/2019/09/11/keep-the-robot-in-the-cagehow-effective--safe-are-co-bots/ (accessed on 20 March 2025).

- Watson. EU’s Expert Group Releases Policy and Investment Recommendations for Trustworthy AI. 2021. Available online: https://www.ibm.com/policy/eu-ai-trust/ (accessed on 20 March 2025).

- Shneiderman, B. Human-centered artificial intelligence: Reliable, safe & trustworthy. Int. J. Hum.–Comput. Interact. 2020, 36, 495–504.

- Paramonova, I.; Sousa, S.; Lamas, D. Exploring Factors Affecting User Perception of Trustworthiness in Advanced Technology: Preliminary Results; Springer: Denmark, 2023; pp. 366–383.

| How the Study Measures Trust | Study Type | ||||||

|---|---|---|---|---|---|---|---|

| Experimental | Systematic Review | Case-Study | Survey | Cross-Sectional | Total | % | |

| Custom Question | |||||||

| Daniel et al. (2013) [29] | x | ||||||

| Jung et al. (2021) [30] | x | ||||||

| Zhu et al. (2023) [31] | x | ||||||

| Esterwood et al. (2023) [32] | x | ||||||

| Alam et al. (2021) [33] | x | ||||||

| Brule et al. (2014) [34] | x | ||||||

| Kraus et al. (2023) [35] | x | ||||||

| Gaudiello et al. (2016) [36] | x | ||||||

| Soh et al. (2020) [25] | x | ||||||

| Total | 9 | 26.5 | |||||

| Combined measures | |||||||

| Lim et al. (2023) [37] | x | ||||||

| Yun et al. (2022) [38] | x | ||||||

| Babel et al. (2022) [39] | x | ||||||

| Wang et al. (2024) [40] | x | ||||||

| Chen et al. (2022) [41] | x | ||||||

| Clement et al. (2022) [42] | x | ||||||

| Sanders et al. (2018) [43] | x | ||||||

| Miller et al. (2021) [44] | x | ||||||

| Total | 8 | 23.5 | |||||

| Validated Scale | |||||||

| Pinto et al. (2022) [2] | x | ||||||

| Huang et al. (2021) [45] | x | ||||||

| Gulati et al. (2019) [17] | x | ||||||

| Adami et al. (2022) [46] | x | ||||||

| Ambsdorf et al. (2022) [47] | x | ||||||

| Kraus et al. (2022) [48] | x | ||||||

| Pompe et al. (2022) [49] | x | ||||||

| Total | 7 | 20.6 | |||||

| Self-report | |||||||

| Cameron et al. (2021) [50] | x | ||||||

| Schaefer et al. (2017) [51] | x | ||||||

| Koren et al. (2022) [52] | x | ||||||

| Total | 3 | 8.8 | |||||

| N/A | |||||||

| Alonso et al. (2018) [53] | x | ||||||

| Yuan et al. (2021) [54] | x | ||||||

| Bach et al. (2024) [27] | x | ||||||

| Xu et al. (2023) [55] | x | ||||||

| Tian et al. (2021) [56] | x | ||||||

| Akalin et al. (2023) [57] | x | ||||||

| Schoeller et al. (2021) [58] | x | ||||||

| Total | 7 | 20.6 | |||||

| Grand Total | 34 | 100 | |||||
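
The percentage column in the table above follows directly from the per-category counts. As a minimal sketch (the names `counts` and `percentage_shares` are illustrative and not taken from the review), the shares can be recomputed from the category totals and checked against the grand total of 34 studies:

```python
# Number of reviewed studies per trust-measurement category,
# copied from the "Total" rows of the table above.
counts = {
    "Custom question": 9,
    "Combined measures": 8,
    "Validated scale": 7,
    "Self-report": 3,
    "N/A": 7,
}


def percentage_shares(category_counts):
    """Return each category's share of all studies, in percent, to one decimal."""
    total = sum(category_counts.values())
    return {
        name: round(100 * n / total, 1)
        for name, n in category_counts.items()
    }


shares = percentage_shares(counts)
for name, share in shares.items():
    print(f"{name}: {counts[name]} studies, {share}%")
print("Grand total:", sum(counts.values()))
```

Because each share is rounded independently, a column of rounded percentages need not sum to exactly 100 in general, which is worth keeping in mind when comparing such tables.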

| Application Area | ||||||||

|---|---|---|---|---|---|---|---|---|

| General | Industrial | Service | Healthcare | AMR | AI-Enabled Systems | Total | % | |

| Study Focus | ||||||||

| Human–robot communication | ||||||||

| Alonso et al. (2018) [53] | x | |||||||

| Lim et al. (2023) [37] | x | |||||||

| Huang et al. (2021) [45] | x | |||||||

| Tian et al. (2021) [56] | x | |||||||

| Ambsdorf et al. (2022) [47] | x | |||||||

| Kraus et al. (2022) [48] | x | |||||||

| Pompe et al. (2022) [49] | x | 7 | 20.6% | |||||

| Robot behaviors | ||||||||

| Brule et al. (2014) [34] | x | 1 | 2.9% | |||||

| Human–robot teaming | ||||||||

| Schaefer et al. (2017) [51] | x | |||||||

| Zhu et al. (2023) [31] | x | 2 | 5.9% | |||||

| User studies | ||||||||

| Daniel et al. (2013) [29] | x | |||||||

| Yuan et al. (2021) [54] | x | |||||||

| Adami et al. (2022) [46] | x | 3 | 8.8% | |||||

| Trust indicators & measurements | ||||||||

| Pinto et al. (2022) [2] | x | |||||||

| Jung et al. (2021) [30] | x | |||||||

| Yun et al. (2022) [38] | x | |||||||

| Bach et al. (2024) [27] | x | |||||||

| Gulati et al. (2019) [17] | x | |||||||

| Koren et al. (2022) [52] | x | |||||||

| Schoeller et al. (2021) [58] | x | |||||||

| Soh et al. (2020) [25] | x | 8 | 23.5% | |||||

| User studies & trust measurements | ||||||||

| Clement et al. (2022) [42] | x | |||||||

| Cameron et al. (2021) [50] | x | |||||||

| Miller et al. (2021) [44] | x | 3 | 8.8% | |||||

| User studies & human–robot teaming | ||||||||

| Sanders et al. (2018) [43] | x | 1 | 2.9% | |||||

| User studies & Human–robot communication | ||||||||

| Babel et al. (2022) [39] | x | |||||||

| Alam et al. (2021) [33] | x | |||||||

| Gaudiello et al. (2016) [36] | x | 3 | 8.8% | |||||

| Trust measurements & robot behaviors | ||||||||

| Chen et al. (2022) [41] | x | 1 | 2.9% | |||||

| Trust measurements & human–robot communication | ||||||||

| Xu et al. (2023) [55] | x | 1 | 2.9% | |||||

| Robot behaviors & human–robot communication | ||||||||

| Esterwood et al. (2023) [32] | x | |||||||

| Kraus et al. (2023) [35] | x | 2 | 5.9% | |||||

| Robot behaviors & perceived safety | ||||||||

| Wang et al. (2024) [40] | x | 1 | 2.9% | |||||

| Human–robot teaming & perceived safety | ||||||||

| Akalin et al. (2023) [57] | x | 1 | 2.9% | |||||

| Grand Total | 34 | 100% | ||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Firmino de Souza, D.; Sousa, S.; Kristjuhan-Ling, K.; Dunajeva, O.; Roosileht, M.; Pentel, A.; Mõttus, M.; Can Özdemir, M.; Gratšjova, Ž. Trust and Trustworthiness from Human-Centered Perspective in Human–Robot Interaction (HRI)—A Systematic Literature Review. Electronics 2025, 14, 1557. https://doi.org/10.3390/electronics14081557
